Abstract

Accurate demand prediction and early detection of anomalies are essential for efficiency, costs and users’ satisfaction in supply chain. Due to enormous growth, the retail systems have become more complicated and there is need for more advanced and intelligent systems for optimal management. The application of artificial intelligence (AI) to the retail systems provides solutions for capturing nonlinear patterns, seasonality, and exogenous factors which traditional statistical models cannot handle. In this paper, we propose a dual-head system that consists of one head for forecasting and one head for anomaly detection, where both are based on Long Short-Term Memory (LSTM) networks and Autoencoders, respectively, serving to increase the predictive accuracy and robustness against outliers. The proposed architecture is also enriched by feature engineering techniques derived through feature selection, which ensures that the model can capture temporal dependencies and hidden structural patterns. A thorough empirical study is performed on standard M5 Forecasting dataset covering three evaluation perspectives (a) error-based measures (b) accuracy-based measures and (c) prediction interval performance. Experimental results show that the model has substantially improved results as compared to baseline of deep learning models with 9.4% relative improvement and has lower error rates of 10.84 RMSE to ensure robust interval coverage with low MPIW of 5.2. Moreover, the model is made transparent with Saliency maps, SHAP values and LIME explanations, providing a visual interpretation for feature importance and decision logic to forecasting and anomaly detection in supply chain management.

Similar content being viewed by others

Introduction

Supply chain systems are the foundation of modern economies, which guarantee the uninterrupted transportation of products from the manufacturer to the consumer1. With the increasing globalization of trade, just-in-time manufacturing, and increases customer expectations, efficient supply chain operations have become critical to business resilience and competitiveness2. However, these operations are vulnerable to fluctuations in demand, disruptions in logistics, and unexpected anomalies such as supply shocks or irregular consumer behavior3. Forecasting demand accurately and detecting anomalies in real time are therefore vital for minimizing costs, preventing stock outs, and maintaining overall supply chain stability4.

The advancements of Artificial Intelligence (AI) and deep learning have provided new possibilities for improving decision-making in complex and dynamic environments such as for supply chain management5. Conventional statistical methods are interpretable but typically do not lend themselves well to the representation of non-linear relationships, long-range temporal dependencies and multi-scale seasonality characteristic of demand data6. Deep learning models, such as recurrent neural networks (RNNs) like Long Short-Term Memory (LSTM) RNNs, have shown effectiveness in sequential prediction tasks, because of their capability of modeling long-range dependencies7. Meanwhile, autoencoder-based framework offers powerful tools for unsupervised anomaly detection and learns latent representations which can reveal anomalies deviating from normal patterns8. However, there are still many efforts in developing integrated models that can balance accurate prediction and robust anomaly detection in large-scale hierarchical supply chain data9.

Recent market studies have indicated the increasingly important role of supply chain, with the world market worth USD 31.11 billion in 2023, and an expected growth to USD 62.18 billion in 2030, corresponding to a 10.4% CAGR. North America is the leading region at present; however, Asia-Pacific is expected to grow fast10. Adoption among vertical industries is strong for FMCG and retail, e-commerce, healthcare and logistics, most of the chosen sectors require demand forecasting and anomaly detection to be in place, and the pace of adoption of AI in supply chains is only picking up in 2022, just 34% of companies said they were using AI, a number expected to grow to 38% by 2025, with another 30% saying that they believe AI is critical11. These numbers highlight the need for AI-based forecasting and early problem detection mechanisms, like the one presented in this work, to address scalability, efficiency, and decision support in today’s supply chain scenarios10.

Previous research has applied classical time series methods and exponential smoothing to supply chain forecasting, achieving moderate performance in stable settings. Moreover, interpretability and adaptive thresholding under non-stationary environments remain underexplored. In this paper, the primary objective is to develop a novel LSTM and LSTM-Autoencoder (AE) hybrid methodology for time series forecasting and anomaly detection in supply chain operations. The study integrates the publicly available M5 Forecasting dataset, which contains hierarchical, event-rich retail data, to evaluate the proposed approach. The main research contributions are:

-

Development of a dual-head forecasting–anomaly detection framework integrating LSTM for demand forecasting and LSTM AE for anomaly detection, achieving superior results of 81% precision over classical and deep baselines (ARIMA, Prophet, CNN-1D, GRU, LSTM).

-

Incorporation of conformal prediction with POT calibration to improve interval reliability and robust anomaly detection under distributional shifts.

-

Proposal of enhanced model representation using exogenous features engineering techniques including lag features, rolling statistics, Fourier seasonality, wavelet decomposition, and PCA/MI-based selection.

-

Carrying out interpretability analysis through XAI methods (Saliency, LIME, SHAP), identifying the most influential features driving demand patterns.

The remainder of this paper is organized as follows. Section 2 presents a review of related literature on time series forecasting and anomaly detection in supply chain management. Section 3 describes the proposed methodology, including preprocessing, feature engineering, model design, and evaluation criteria. Section 4 reports and analyzes the results, followed by a discussion of interpretability and decision-making implications. Finally, Sect. 5 concludes the study and outlines future research directions.

Related work

The focus is on the problem of temporal demand forecasting and anomaly detection in automotive spare- parts supply chains where CNN and bidirectional LSTMs are combined to make predictions. In this context, a CNN–BiLSTM is used to predict shipment lead times, whereas an LSTM AE (jointly with a one-class SVM) is used to identify outliers. This hybrid technique was successful at significantly enhancing the predicting accuracy over simple RNN and vanilla LSTMs and worked well in predicting real ERP data12. In another work, a bi-directional LSTM hybridized with Nonlinear Autoregressive eXogenous (NARX) model was proposed for demand forecasting. This uni-regression model integrates the bidirectional LSTM output to a dynamic NARX regressor, enabling learning both internal temporal patterns and external stimuli13. LSTM AE are and have been quite popular for anomaly detection in time series, also not just for classical supply chains. The model is reported to have learnt normal operational behavior patterns and to have demonstrated high outlier detection accuracies in turbine fault data14. A few other studies extend LSTM AE using meta-learning for better anomaly detection on multiple machines. MAML-based LSTM AE is trained across multiple CNC machines. With the help of Model-Agnostic Meta-Learning the model can quickly learn on the data of a new machine with only a small amount of tuning15. For retail sales forecasting, recent LSTM-based models have attained better performance than the LSTMixer model, an extension of Time-Series Mixer (Informer-like) by introducing an LSTM layer. Empirical evaluations on multi-store sales data demonstrate that LSTMixer substantially outperforms convolutional and transformer benchmarks, suggesting that embedding an LSTM between transformer layers is beneficial to modelling seasonality and trends16.

Comparative studies regularly show that, compared to classic models, LSTM outperforms in supply chain scenarios, comparing their performance to ARIMA model for demand of medicine in health SCM. The substantial performance difference highlights the power of LSTM to capture intricate, non-linear demand variations17. Additionally, the emphasis of LSTM’s focus on long-term dependencies fits well for the supply-chain event series. At the same time, the authors note that the LSTM models may require data and computation. However, the flexibility of the LSTM is well documented in the literature for many SCM specific problems such as demand forecasting, inventory prediction and anomaly detection18. Hybrid forecasting with LSTM using such strategies has also been investigated for use in e-commerce logistics. In a warehouse forecasting study fused ARIMA and LSTM. This dual-model approach indicates that in supply-chain venue, traditional statistical methods and LSTMs may complement each other while adding modeling burden19. For production planning, they have integrated LSTMs inside the optimization loops, proposed a hybrid LSTM–Q-learning network for hierarchical production planning. They use a model that involves forecasting demands in an iterative manner with an LSTM and using Q-learning to adapt the production decisions for the forecasts. This real-time LSTM-Q generates more flexible planning in the looks of a fuel-tank construction case20. For regional logistics demand, compared with regression and grey models, LSTM prediction during COVID era has the smallest prediction error in both training and test. This illustrates LSTM’s ability to model unknown disrupted patterns in transport demand. Nevertheless, the study case is only a single region, more validation is needed in broader logistics implementation21.

The latest developments in bio-inspired optimization and hybrid learning methods have really increased the forecasting and classification accuracy in various fields. The Greylag Goose Optimization (GGO) with a Multilayer Perceptron has been shown to be accurate in the classification of lung cancer, and its ability to select features and statistically validate them was shown to be better than the conventional binary optimizers in the medical sphere22. The GGO-MLP model has been extended to cover the prediction of CO 2 emission of electric vehicles, providing reliable predictions used in the promotion of sustainable energy policies and reduction of emission processes23. The adaptive dynamic puma optimizer with guided whale optimization algorithm was used in environmental prediction whereby accuracy in rainfall classification was 95.99% and LSTM-based forecasting accuracy was improved24. Equally, a GGO-ARIMA hybrid model was seen to be highly scalable and predictive (MSE 0.002135, R 2 0.99995) with smart city electricity load forecasting25. Also, Comment Feedback Optimization Algorithm (CFOA) enhanced deep learning models to forecast groundwater, decreased MSE to 6.1 × 10 − 6 and model interpretability to ablation and sensitivity analysis26. Altogether, this highlights the flexibility of optimization-based hybrid systems in the development of sustainable systems in healthcare, environment, and urban infrastructure.

Many of the previous works of supply chain forecasting and anomaly detection are based on either classic statistical model or single-headed deep learning models such as CNN, GRU, and standalone LSTMs and most of them are task-specific and usually have limited capability with capturing long-range dependency. Overall, they achieve acceptable forecasting accuracy but may be susceptible to fragile anomaly detection or lack well calibrated uncertainty estimation and therefore do not perform as well in complex, dynamic supply chain environments. Contrarily, in this paper, it can be called as two headed architectures, the merge of LSTM forecasting and LSTM AE for abnormality detection, the model is going to learn the temporal nature/patterns and detecting the abnormalities at a time. The co-optimized design not only strengthens the prediction capability, but also the robustness to disturbance from unexpected interference, offering a more harmonic and flexible solution for SC operations.

Research proposed methodology

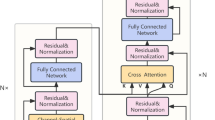

The proposed method follows dual-head architecture that combines an LSTM model for demand forecasting and an LSTM AE for anomaly detection, as illustrated in Fig. 1. The model we employ enables the framework to capture temporal dependence in sales and hence anomalous patterns indicative of supply chain anomalies.

To improve the expressiveness model, coupled multiple feature engineering approaches to learn short-term variations and long-term dependencies. The model also introduces conformal prediction for dependable uncertainty estimation in addition to weekly recalibration and hierarchical reconciliation to ensure coherence at item, category, and state levels. The efficacy, robustness, and interpretability of the proposed approach are evidenced by the competitive results justified using XAI techniques that include Saliency maps, SHAP, and LIME to verify feature contributions.

Data set and preprocessing

The study investigation is conducted on the M5 Forecasting – Accuracy data27, provided by Walmart, which includes the daily number of units sold of 30,490 products sold in 10 stores in 3 U.S. states, from 1,941 days in a row (2011–2016), features displayed in Table 1. The dataset al.so contains exogenous variables such as calendar events, SNAP program indicators, and item-level prices. With hierarchical structure \(\:(item\:\to\:\:department\:\to\:\:category\:\to\:\:store\:\to\:\:state\:\to\:\:national)\) for both forecasting and anomaly detection.

The different sales dynamics for products purchases and different states in the exploration analysis, shown in Fig. 2. In general, household lags both foods and hobbies. Households contribute about a third of total sales, and both foods and hobbies have almost the same dominating share, shown in Fig. 2a.

But if we break this down by state, there are some differences, California and Texas are the same, food is leading the sales, this is followed by hobbies and household, this just shows how much we are spending as consumers on everything stuff. Meanwhile in Wisconsin, a state-level preference or store-level stocking difference with food and household types has not been discernible: there are distinct hobbies > food and household patterns (Fig. 2b). These results imply that regional heterogenies should be considered for forecasting because the sales behavior of the various product categories and states are not similar. The overall trend of the daily sales amount is increasing from 2011 to 2016 wherein a certain period sale exhibited short-term fluctuation and in the long-term growth. Seasonality in preferences is strong, with peaks corresponding to the months of Christmas and red peaks corresponding to seasons where consumer preference was high. The series also exhibits some low-frequency pulsating from weeks to weeks and decreases to very low values, likely corresponding to store closing or missing reporting, displayed in Fig. 3. This hodgepodge of long-term trends, short-term seasonality and out-of-the-blue flare-ups represents the complexities of demand forecasting in the retail world.

Log transformation reduces the raw demand value with logarithmic function to suppress the very sharp peaks and to amplify the small ones28. This operation is crucial as it normalizes the variance of the series and the effect of outliers estimated by Eq. 1, which is required for deep learning models that make an implicit assumption that the temporal dynamics in the underlying data are relatively smooth.

Normalization transforms each series to have zero mean and unit variance and is given by subtraction of the mean and division by the standard deviation of the series (2). Thus, items from all sale volume levels contribute equally to the training, which lessens the propensity for the model to be biased toward high demand items29.

As for sliding window construction, it aims to convert the sequential time series into supervised learning samples. Accompanying the future forecast horizon is a fixed length for backward looking window of past observations, which trains the model to learn a mapping from historical demand to future outcomes, as in Eq. 3. Pooling in this way allows recurrent architectures to learn temporal dependencies in a principled, structured manner.

To prevent look-ahead bias, all preprocessing and calibration procedures were strictly confined to the training window within each rolling forecast. Specifically, the scaler normalization, PCA/MI feature selection, Conformal calibration, and POT threshold fitting were re-initialized and fitted at every step using only past data. The calibration window advanced sequentially with the rolling horizon, ensuring that no future information influenced model learning or evaluation. This design maintains the integrity of temporal dependencies and guarantees a fully leakage-free experimental setup.

Feature engineering

The lag features are obtained by shifting the normalized series backwards a fixed number of days (e.g., 7, 14 or 28) to explicitly let the model learn from past demand observations to capture the weekly/monthly seasonal cycles, computed as in Eq. 4. By including such lagged values as predictors, the model is given a sense of the past that captures general trends that will heavily weigh next period’s sales.

Rolling means and variance by these features capture the local demand traces over a moving window30. The rolling mean eliminates short-term noise and gives a stable estimate of demand in the recent past, while the rolling variance measures the volatility in the same period, defined in Eq. 5. These numbers supply the model with not only the average demand of recent periods, but with the variability in the demand as well.

Seasonal effects modeled using Fourier Extensions will be used to capture seasonality (i.e. weekly seasonality). By mapping time indices with sine and cosine, they provide a way to represent cyclical stuff entirely and inversely, which could be challenging to model simply with dummy variables, generated from Eq. 6. These harmonics allow the LSTM to learn seasonality without having to remember long histories.

Wavelet decomposition yields the demand signal into multiple frequency components; the approximation is the long-period trend, and the detail coefficients are the short-period oscillations (computed by Eq. 7). By reparametrizing series into local wavelet basis functions31, the model can handle information at various time scales so as to be able to capture smooth seasonality and respond to rapid demand changes.

For wavelet decomposition, the Daubechies-4 (db4) wavelet was applied up to level 3, while the Fourier series included the first five harmonic components to capture seasonal periodicity.

Proposed model: LSTM AE

The input sequence works with a sliding window for the past normalized demand values, together with exogenous features (calendar, price, holidays). Then encoded by an LSTM, which is good at capturing temporal dependency and generating hidden representation that summarize the short-term fluctuations and the long-term patterns, as in Eq. 8.

The last hidden state is forwarded to the forecasting decoder to produce the multi-step ahead demand predictions through the forecast horizon, shown in Eq. 9.

Forecasting head based on multi-horizon decoder from the final encoder state, in Eq. 10.

To the AE decoder, the same encoded state, is passed to be decoded as the input sequence, computed using Eq. 11. This reconstruction error helps detect the difference between actual and learnt “normal” patterns32.

Multi-task training objective model is trained in conjunction with two losses: prediction error loss and reconstruction loss, defined in Eq. 12. The weighted sum of these guarantees the encoder to learn relevant features to be used in the prediction and anomaly detection.

During inference, we calculate an anomaly score using a combination of reconstruction error and forecast residuals, as in Eq. 13. Higher values signal aberrant demand shapes both from observed structure and predicted values33.

The model thus returns (a) demand predictions for operational planning and (b) anomalousness flags for anomaly early detection34, offering an integrated decision-making framework, as in Eq. 14.

To quantitatively evaluate detection performance, we generated synthetic anomalies under controlled conditions while preserving realistic temporal dependencies. Each time series was divided into non-overlapping windows, and within a subset (10–15%) of randomly selected windows, local demand points were perturbed by a multiplicative deviation factor between ± 30% and ± 50% of the local rolling mean (3–5 days). The labeling rule was as follows as in Eq. 15.

Here, \(\:\mathcal{A}\) represents the anomaly interval, and \(\:{y}_{t}^{{\prime\:}}\) is the perturbed signal. Each perturbed window was labeled as anomalous (1), while unmodified data were labeled as normal (0). Figure 4 illustrates an example of injected anomalies (highlighted in orange) compared with normal demand regions. This protocol ensures that anomalies are range-based and temporally continuous rather than pointwise, making them consistent with range-based evaluation metrics.

Conformal non-conformity based on calibrated residuals, with statistical thresholding using Peaks-Over-Threshold (POT) extreme value theory or conformal calibration is used to the anomaly scores35, using Eq. 16.

Above the threshold, a score is marked as an anomaly with supply chain operation.

The dataset sample includes 2011–2016 daily sales in various states, stores, and categories of products. The objective of the forecasting was stated in 28 days as the rolling prediction horizon, which corresponded to the official structure of the M5 evaluation. A sliding-window approach was selected to capture these temporal dynamics which made every model run to use overlapping sequences of inputs to better capture this temporal generalization. The training of all-time series was done in a single modeling structure with the aim of using cross-hierarchical correlations of state, store, and category-level signals. The data split was stratified based on store and state, so that the representation of each hierarchical group was proportional in both the training and validation partitions using hold-out techniques. Moreover, a cross series rolling assessment was used wherein validation windows across stores and categories were rotated to determine model consistency across different regional demand patterns.

Baseline models

The base models used in the experiment are benchmarks to compare the robustness of the proposed method. Traditional statistical techniques, like ARIMA and Prophet, are based on linear and additive models to capture the temporal pattern, the seasonality, but are sometimes constrained when modeling non-linear dependencies36. Deep learning-based models give us more powerful sequence modeling because CNN-1D can learn local temporal features using convolutional filters and GRU, LSTM as recurrent modules were specifically designed to capture the long-term dependencies in time series37. Furthermore, an LSTM AE was used for anomaly detection by reconstructing the normal patterns and noticing any variation. Each baseline was trained and finetuned on similar dataset using similar experiments settings for fair comparison. However, their result in either forecasting accuracy, anomaly detection or uncertainty calibration seems to be limited compared to the proposed Dual LSTM-AE model that can provide forecasting and anomaly detection by using exogenous features, feature engineering and uncertainty-aware calibration to achieve more reliable results38. These offer a range from classical statistical to state-of-the-art deep learning baselines to compare against the dual-head LSTM architecture.

Evaluation measures

To evaluate the performance of the proposed model and baseline methods, a comprehensive set of metrics was employed. RMSE, MAE, MAPE, sMAPE and quantile Pinball Loss are used to evaluate forecasting performance, displayed in Table 2. The anomaly detection is measured with Precision, Recall, F1-score, on the temporal anomaly ranges39. Interval reliabilities are assessed by Prediction Interval Coverage Probability (PICP) and Mean Prediction Interval Width (MPIW). Statistical significance is assessed for differences in forecast accuracy, and calibration quality is assessed on predictive intervals40.

Results and discussion

Results of the experiments unambiguously indicate that the LSTM forecasting and LSTM AE based anomaly detection combined under one single dual-head context of supply chain operations. Classical statistical models, including ARIMA and Prophet, produced low performance, as evidenced by larger RMSE, MAE, and sMAPE, which were unable to generalize complex non-linear dependencies within high-dimensional supply chain data points. Regarding deep learning baselines, all CNN-1D, GRU and LSTM achieved good performance in predictive accuracy, however, although LSTM (Forecast) provided better RMSE (11.96), MAE (8.52) and sMAPE (14.5), higher than CNN-1D and GRU, lower precision of (56%) by the differences in the specification when compared to traditional models, displayed in Table 3. Given that the LSTM-AE model was initially designed for anomaly detection, it demonstrated excellent performance in classification the increased precision 0.71 and F1 score 0.67 that exemplifies its strength in identifying unusual patterns across supply chains. The Expected Calibration Error (ECE) results, revealing that the proposed model exhibits the best calibration performance, closely following the perfect calibration line. This indicates that its predicted probabilities are more reliable and consistent than those of baseline models, shown in Fig. 5. In addition, the proposed dual-head architecture represented lower error rates RMSE 10.84, MAE 7.96, sMAPE 13.2%, and a high level of precision 81% and anomaly detection performance Precision 0.81, Recall 0.75, F1 0.78 than all baselines.

The statistical significance analysis in Table 4 shows that the proposed model has best statistically significant gains over all the baseline models in a variety of tests. The Diebold Mariano test (p = 0.0017) is confirmation that the errors in the forecasting of the proposed model are much less whereas the Wilcoxon Signed-Rank test (p = 0.0023) is a further confirmation of consistently high results in comparison. The Friedman test ( p = 0.0038) confirms that there are statistically significant differences in model rankings across experiments, and the Nemenyi post-hoc test ( p = 0.0045) demonstrates that these gains are significantly different than competing models. As all the p-values are less than the significance threshold of 0.05, the findings support that the predictive and anomaly detection capabilities of the proposed model are not explained by random chance but by the actual benefits of the methodological ways as compared to ARIMA, Prophet, CNN-1D, GRU, and standard LSTM baselines.

Finally, it generated smaller predictive intervals and was better calibrated MPIW 5.2, PICP 91.5% which underscored the strength of the predictions when considering uncertainty. In conclusion, this study validates that the dual-head LSTM framework primarily enhances the prediction, makes the uncertainty estimates more reliable, and strengthens the anomaly detection, which makes it suitable for supply chain forecasting and corresponding risk management. The reliability plot and the interval width analysis reported in Fig. 6 show the clear impact of post-recalibration in improving the reliability calibration. Predictions intervals tended to under cover the true series before recalibration, but the picture was very confusing between the widths of intervals. For the recalibrated intervals the coverage was closer to the target in both cases indicating that the intervals now contain a relatively less dishonest range of uncertainty.

Even more importantly, the inclusion in the POT tail fitting framework improves the level of power anomaly detection enjoyed by the proposed approach (Fig. 7). The distribution of the anomaly scores has a heavy tail on the low values, corresponding to the behavior of inliers, and an exponential tail well describes the high scores, corresponding to the anomalies.

The threshold (u = 0.29) of the POT statistics is also justified from a statistical standpoint, since it allows a very well-defined distinction between true anomalies and purely random noise. This approach guarantees that anomalies are detected to the best of our ability, avoiding the detection of false positives, yet capturing rare yet critical supply chain disruptions. Finally, the hierarchical coherence heatmap in Fig. 8, shows that reduces incoherence under hierarchical summation constraints in the supply chain.

Prior to reconciliation, the coherence errors were significant, especially for deeper departmental layers and some state-store pairings (such as S3-St1-D1). The errors reduced significantly after reconciliation for almost all nodes, some even to zero. This proves that the proposed approach can improve both point forecasts and anomaly detection and ensure that data can be logically propagated up the hierarchy for supply chain. Such improvements are indeed important in application, because coherent forecasts at multiple levels (states, stores, departments) aid decision making and prevent inconsistencies across the aggregated and disaggregated demand forecasts.

To measure hierarchical reconciliation, the MinT-shrink covariance method was employed to measure coherence before and after reconciliation. Figure 8 (Coherence Error Heatmap) shows the improvements in the Coherence error and Table 5 (WRMSSE@level) reports the error magnitude at each of the aggregation levels, showing a steady reduction in error. This testing framework analyzes the fact that both forecasting and structural coherence are tested in a single temporal-hierarchical system. Computation of the Weighted Root Mean Squared Scaled Error (WRMSSE) was done at every level of hierarchy aggregation as per the M5 evaluation criterion. As Table 5 demonstrates, the proposed model has the best WRMSSE values at each level, as it displays more reconciliation between bottom-up and top-down forecasts. The model achieves a mean WRMSSE of 0.373, which is a 15.1% improvement of the best deep model (LSTM) and is almost 30% better than statistical models. The advantage shown in the item and department levels, where nonlinear temporal and cross-series dependence are the most significant. The findings are empirically valid in terms of the fact that the proposed architecture does not only increases the point-forecast performance but also increases the hierarchical coherence.

The plot in Fig. 9 shows the training vs. validation loss of the proposed dual-head LSTM model as a function of the number of training epochs. Both curves are converging and they reduce gradually indicating that learning is successful and does not severely overfit. The training loss monotonically decreases, and the validation loss follows it quite closely, which indicates that the model generalizes well to the test data. At the 10th epoch, the validation loss hits the trough and takes the minimal value of 0.316, converged and steady state are achieved. This behavior demonstrates the adaptability of the proposed architecture to model the temporal dependency and the complexity of supply chain time series data.

The plot in Fig. 10 shows the anomaly detection mechanism at the time of training, where the combined anomaly scores are compared with semi-supervised dynamic POT threshold. In the initial phases, we have higher anomaly scores which exceed the threshold on multiple operations, this is due to unstable reconstructions as the AE part is still learning the normal patterns. The anomaly scores decrease gradually as training continues and is consistently below the threshold after approximately epoch 25 with a few exceptions. This shows that the model indeed is learning the difference between normal behaviors and anomaly, and the inclusion of POT provides a mathematically sound way of highlighting incidences of abnormal events. In aggregate, these plots verify the fact that this novel strategy enables not only stable forecasting but also improves the reliability of anomaly detection during the whole training procedure.

A clean software and library environment was developed to guarantee the reproducibility and scalability of the proposed framework, displayed in Table 6. The implementation was developed in Python using PyTorch, the latter as the main DL library, and common numerical software (NumPy, Pandas, SciPy) and preprocessing tools. For feature engineering, specific libraries such as PyWavelets and Statsmodels for the seasonal/frequency based decomposition, and Scikit-learn for dimensionality reduction and feature selection (based on statistical methods) were employed. We also included ARIMA and Prophet as naive baselines for reference. Additionally, the SHAP and LIME packages made models interpretable, while Matplotlib and Seaborn were used for visualizations. Here we provided a consistent computation stack for training, testing, and explainability.

The model that was developed was a parameterized in order to balance performance gain and power consumption. The hyperparameters were the lookback and forecast horizons for temporal dependencies, the number of LSTM layers and hidden units for modeling sequential patterns, and the dropout mechanisms to prevent overfitting as demonstrated in Table 7. The anomaly detection head included AE latent dimensions, reconstruction weights, and fusion method that fused reconstruction error and residual predictions. Adam optimizer with learning rate schedule, and hyperparameters of batch size and training epoch were tuned so that convergence was stable. The object-level decision thresholds (p-values), POT calibration, and hierarchical adjustment parameters ensured that the prediction intervals were both robust and coherent across aggregation levels. The architecture used LSTM encoder in both forecasting and anomaly detection. The encoder derives temporal dependencies which it feeds into two separate heads; a forecasting head (LSTM-Dense) which predicts multiple steps of quantile values and an Autoencoder head (LSTM-AE) which reconstructs anomalies41. Parameters of the encoder are shared completely and each head also has its decoder and output layers. The model has a total of some 1.9 million parameters, broken down as follows; some 1.2 M in the shared encoder, 0.45 M in the forecasting head and 0.25 M in the Autoencoder head. The common design minimizes redundancy, allows more effective training and allows predictive performance and sensitivity to anomalies to optimize together.

Computational efficiency and deployment: training the multicomponent models (LSTM forecast, AE reconstruction and recalibration strategies) were potentially reflected our deployment.time was kept higher to single-zoneo head (in all the baselines (Table 8). However, inference remained easy to compute with optimize forward passes and batched execution on GPU hardware.

According to Table 8, the suggested Dual LSTM + AE model has a realistic accuracy-computational efficiency balance. Each training step has a compute time of approximately 6.4 GFLOPs with a total of 0.43 M trainable parameters and 16.6 M FLOPs per sequence, which is equivalent to positions of approximately 32 TFLOPs/ep epoch. Inference is also efficient, with 0.815ms latency on A100 GPUs and 26ms latency on T4 devices, and it is possible to run full M5-scale inference in less than a minute when running batched inference on a GPU. MinT shrinkage provides scalability by hierarchically parallelizing and distributing series across nodes, and using distributed processing of series. In order to cut down the cost of deployment in industrial environments further, the framework can be used to support model compression (FP16 quantization), and parallel training across GPUs, which is relevant to large-scale forecasting and real-time applications.

Ablation study

The results of ablation study offer meaningful observation of the combination of multiple components in the proposed dual-head LSTM AE framework. The full model (A0), including exogenous features, Fourier and wavelet data transformations, PCA/MI-based feature selection, conformal prediction with POT-based recalibration, and hierarchical reconciliation outperforms other models achieving the lowest value of RMSE (10.84), MAE (7.96), and sMAPE (13.2) and the best anomaly detection (F1 = 0.78), display in Table 9. This makes the proposed architecture the best and balanced alternative when predicting and detecting anomaly when modeling supply chain processes.

We observed a noticeable decrease in F1 score (0.56) upon detachment of the anomaly detection head (A1), indicating the need to have reconstruction error signals of the AE as the basis for anomaly class separation. In the same way, bypassing the conformal recalibration and using a single fixed residual (A2) threshold led to a lower-quality calibration of the intervals, and consequently, a lower quality of anomaly: the PICP of calendar-based anomaly threshold has dropped to 86.4%, and the anomaly F1 has reached 0.64 because of an increased number of false alarms. When replacing dynamic with weekly recalibration intervals, coverage drifts, larger intervals (MPIW = 6.1) and reduced detection (F1 = 0.69), proving that recalibration is needed for sharpness vs. coverage balance. Despite the no use external features warning (A4), this condition really destroyed forecasting (sMAPE = 14.6) and the forecasting quality heavily degraded too, reinforcing the relevance of contextual signals like pricing or external events. Eliminating Fourier and wavelet transformations (A5) also led to the loss of capability to model seasonality and bursts, increasing the sMAPE to 14.0 while maintaining reasonably high PICP. Disabling PCA/MI for feature selection (A6) caused some overfitting, as judged by increased intervals (MPIW = 5.7), F1 on anomaly (0.74) was however still consistent.

The dependence of the model on contextual history was again highlighted by changes to the input window length. Reducing the window to 28 frames (A7) affected the ability to capture long-range dependencies whereas extending it to 84 frames (A8) slightly increased detection (F1 = 0.76) at the expense of additional computational complexity. When substituting the LSTM AE for a GRU (A9) the LSTM decoding of long-term dependencies weakened slightly and the resulting F1 degraded (0.73), with only slight increase in errors. A single-head AE without the forecasting branch (A10) retained anomaly detectability (F1 = 0.67) and forecast operability, at the cost of less practical usefulness in supply chain applications. Finally, by discarding hierarchical reconciliation (A11) top-level coherence degraded and even though forecasting metrics remained constant, coherence anomalies reemerged at aggregate levels, which supported the significance of reconciliation for multi-level consistency in the supply chain.

The normalized heatmap at least provides clear illustration which shows how each ablation variant performs over multiple evaluation metrics relative to full model, as shown in Fig. 11. Understandably, the full configuration (A0) achieves the best global performance over all measures, which demonstrates the synergetic advantage of using all architectural and methodological blocks. Perturbing the anomaly detection head (A1) also proves to be a critical component in preserving precision and anomaly detection. A2 and A3 also exhibit moderate decreases, especially in both reliability and interval sharpness and A5 suffers from the inhibition of seasonal adaptation. On the other side, neural chain decorrelation (A4) and overall cumulative gradient (A7) are the weakest, and long input windows (A8) and PCA/MI feature selection (A6) are the most valuable, giving a good trade-off between model complexity and f1-score (the topping values are close). The heatmap also shows four methods which lose score in forecasting-as the transpositions cause more forecasting errors-the first two are in A11 as hierarchical reconciliation, which almost preserves forecasting and anomaly but sacrifices coherence, and GRU substitution that decreases long-term dependency handling. Overall, the visualization verifies that, in terms of model interpolation, some components provide marginal profit, and others, in particular the anomaly head, ex check patterns, and recalibration sea are essential to maintain the superior performance in terms of forecast resurrection, detection, and uncertainty calibration of the proposed baseline.

Interpretability analysis

The saliency heatmap shown in Fig. 12 offers a temporal impression of the impact of various features on the proposed model over the lookback window. Our findings indicate that the recent lags (lag7, lag14, lag28) are the most important and this is clearer as we get closer to predicting, showing the model is dependent on more recent historical observations. The rolling statistics, of which follows them closely is the shopping 7-day mean (roll_mean_7), are also important, as they reflect information about smoother demand trends. Among the exogenous variables, its tuition price seems to be an important variable, maintaining a steep positive slope over the entire lookback period and growing in importance as the lookback period approaches 0, suggesting that this variable is vital in capturing the dynamics of the supply chain. For seasonal signals (including Fourier terms), contributions are intermediate in size, while the contributions from the wavelet-based features, the event flags or the SNAP flags are weak, suggesting that they provide more supplementary information than primary return information. In short, saliency map confirms that the network has fully utilized short-term memory, trend-based statistics and exogenous information for accurate forecast and anomaly detection.

Further interpretability and transparency of model ensured in Fig. 13, which is confirmed by the LIME-like local contributions in Fig. 13 (a) indicate that, while in some cases the price is by far the most influential predictor to this specific instance, lag features and rolling means are also highly influential for it, and features such as variance and some wavelet details have a minimal or even negative effect.

Analogously to these, the SHAP-like global ranking42 agrees with these as price, lag7, lag14 and roll_mean_7 are by far the tendencies with the top global contributions event flags and Fourier terms add some value, unlike the wavelet and SNAP flags which have less importance at global level, shown in Fig. 13 (b). Taken together, these interpretations confirm that the model depends on an equal combination of short-term memory (lags), trend and variability signals (rolling features, Fourier) and exogenous drivers (price, events), and that this is why it performs well in terms both of forecasts and of anomaly detection.

Limitations

Despite the results obtained, several limitations of this study warrant discussion. First, the model has been validated on the M5 Forecasting dataset which, although well known, is a representation of U.S. retail demand habits and may not clearly represent the variety of other industries or geographical areas. The wider applicability, including manufacturing, healthcare logistics, energy forecasting, and so on, would enhance the external validity of the model. Second, the fixed forecast horizon which is a constraint to assessing the flexibility of the model to long term or highly seasonal time horizons commonly observed in volatile markets. Third, the dual-head design, which is LSTM-based forecasting with an autoencoder-based anomaly detector and hierarchical reconciliation, is effective however, it adds computational complexity which can be restrictive to scalability to real-time or large-scale enterprise systems. In addition, the framework also depends on feature engineering, regular recalibration, and sensitivity to hyperparameters, which might be potentially problematic in dynamic data settings in terms of reproducibility and deployment. Lastly, explainability and conformal calibration were combined but the wider problem of data bias, privacy and ethical governance is not within the current context. Future work will limit these shortcomings by incorporating multimodal data fusion, comparison baselines based on transformers, distributed and lightweight designs, and reinforcement learning-related inventory optimization, which guarantees a wider range of applicability and robustness in most forecasting ecosystems.

Comparison with prior studies

This comparison demonstrates that the proposed Hybrid Dual LSTM AE model is the best-performing method in all the evaluation metrics. Although previous methods (CNN + BiLSTM with LSTM-AE + OCSVM) achieved 71% accuracy on M5 data and single model (LSTM-AE or MAML LSTM-AE) resulted in 77–78% precision/accuracy, they were not robust for handling complex temporal dependencies and anomalies, displayed in Table 10. Likewise, comparisons between LSTM and ARIMA in supply chains also presented competitive results with nonconstant and higher RMSE values while LSTMixer models achieved an 82% accuracy rate and have not yet provided sufficient precision and anomaly detection. While in this paper, the Dual LSTM AE automation model combines dual LSTM with AE of reconstruction, exogenous feature, Fourier/Wavelet decomposition, PCA/MI feature selection, uncertainty-aware calibration (Conformal POT avec hierarchical reconciliation) leading to 81% precisions, 78% F1-scores, and a small RMSE of 10.84. These findings show that the proposed approach is much more reliable than its baselines, in terms of both better forecasting accuracy, as well as anomaly detection and interpretation quality.

Conclusion and future work

In this study, we proposed a comprehensive dual-head framework that integrates LSTM for forecasting and LSTM AE for anomaly detection, further enriched with exogenous features, Fourier and wavelet seasonal signals, PCA/MI-based feature selection, conformal prediction with POT calibration, weekly recalibration, and hierarchical reconciliation. This full model demonstrates clear superiority over traditional statistical methods and standard deep learning baselines by reducing error metrics, enhancing accuracy, and improving interval reliability. The inclusion of XAI techniques such as Saliency, SHAP, and LIME ensures transparency, offering actionable insights into the most influential features driving demand and anomalies within supply chain operations. The empirical results confirm that the proposed approach provides both accurate forecasts and robust anomaly detection, making it highly relevant for real-world retail decision making. Future work will extend this comparative analysis to transformer-based and hybrid probabilistic baselines as part of a broader benchmarking study. In addition, scaling the framework to additional dataset incorporating multimodal data, cross-domain adaptability to manufacturing, energy, and healthcare logistics, including longer forecast horizons and seasonally volatile markets, using transfer learning across domains, and integrating reinforcement learning for adaptive inventory optimization.

Data availability

The dataset is freely available at: https://www.kaggle.com/competitions/m5-forecasting-accuracy/data.

References

Oubrahim, I. & Sefiani, N. An integrated multi-criteria decision-making approach for sustainable supply chain performance evaluation from a manufacturing perspective. Int. J. Productivity Perform. Manage. 74 (1), 304–339. https://doi.org/10.1108/IJPPM-09-2023-0464 (2024).

Cui, Y. & Yao, F. Integrating deep learning and reinforcement learning for enhanced financial risk forecasting in supply chain management. J. Knowl. Econ. 15 (4), 20091–20110. https://doi.org/10.1007/S13132-024-01946-5 (2024).

Farooq, A. et al. Interpretable multi-horizon time series forecasting of cryptocurrencies by leverage Temporal fusion transformer. Heliyon 10 (22), e40142. https://doi.org/10.1016/J.HELIYON.2024.E40142 (2024).

Luo, H. et al. A 2 tformer: addressing Temporal bias and Non-Stationarity in Transformer-Based IoT time series classification. IEEE Internet Things J. 1–1. https://doi.org/10.1109/JIOT.2025.3595765 (2025).

Badshah, A., Daud, A., Khan, H. U., Alghushairy, O. & Bukhari, A. Optimizing the over and underutilization of network resources during peak and Off-Peak hours. IEEE Access. 12, 82549–82559. https://doi.org/10.1109/ACCESS.2024.3402396 (2024).

Zhu, S. et al. Expanding and Interpreting Financial Statement Fraud Detection Using Supply Chain Knowledge Graphs. J. Theoret. Appl. Electron. Commerce Res. 20(1), 26. https://doi.org/10.3390/JTAER20010026 (2025).

Ahmed, M., Khan, H. U., Khan, M. A., Tariq, U. & Kadry, S. Context-aware answer selection in community question answering exploiting Spatial Temporal bidirectional long Short-Term memory. ACM Trans. Asian Low-Resour Lang. Inf. Process. https://doi.org/10.1145/3603398 (2023).

Ikeda, Y., Hadfi, R., Ito, T. & Fujihara, A. Anomaly detection and facilitation AI to empower decentralized autonomous organizations for secure crypto-asset transactions, AI Soc. 40(5), 3999–4010. https://doi.org/10.1007/S00146-024-02166-W (2025).

Katiravan, J. S. A R and Enhancing anomaly detection and prevention in Internet of Things (IoT) using deep neural networks and blockchain based cyber security. Sci. Rep. 15(1), 1–20. https://doi.org/10.1038/S41598-025-04164-4 (2025).

Supply Chain Management Market -. Industry Analysis, Trends. Accessed: Sep. 03, 2025. [Online]. Available: https://www.maximizemarketresearch.com/market-report/global-supply-chain-management-market/93915/.

Aljohani, A. Predictive Analytics and Machine Learning for Real-Time Supply Chain Risk Mitigation and Agility. Sustainability 15(20), 15088. https://doi.org/10.3390/SU152015088 (2023).

Amellal, A., Amellal, I., Seghiouer, H. & Ech-Charrat, M. R. Improving lead time forecasting and anomaly detection for automotive spare parts with A combined CNN-LSTM approach. Oper. Supply Chain Manage. 16 (2), 265–278. https://doi.org/10.31387/OSCM0530388 (2023).

Aldahmani, E., Alzubi, A. & Iyiola, K. Demand Forecasting in Supply Chain Using Uni-Regression Deep Approximate Forecasting Model. Appl. Sci. 14, 8110. https://doi.org/10.3390/APP14188110 (2024).

Lee, Y., Park, C., Kim, N., Ahn, J. & Jeong, J. LSTM-Autoencoder Based Anomaly Detection Using Vibration Data of Wind Turbines. Sensors 24(9), 2833. https://doi.org/10.3390/S24092833 (2024).

Woo, J. M., Ju, S. H., Sung, J. H. & Seo, K. M. Meta-Learning-Based LSTM-Autoencoder for Low-Data anomaly detection in retrofitted CNC machine using Multi-Machine datasets. Syst. 2025. 13(7), 534. https://doi.org/10.3390/SYSTEMS13070534 (2025).

Theodoridis, G. & Tsadiras, A. Retail demand forecasting: A comparative analysis of deep neural networks and the proposal of LSTMixer, a linear model extension. Inform. 16 (7), 596. https://doi.org/10.3390/INFO16070596 (2025).

Zhang, R. et al. Comparison of ARIMA and LSTM for prediction of hemorrhagic fever at different time scales in China. PLoS One. 17 (1), e0262009. https://doi.org/10.1371/JOURNAL.PONE.0262009 (2022).

Ahmed, K. R. et al. Deep learning framework for interpretable supply chain forecasting using SOM ANN and SHAP. Sci. Rep. 15(1), 1–28. https://doi.org/10.1038/S41598-025-11510-Z (2025).

Wang, C., Wang, J., Research on E-Commerce Inventory Sales Forecasting Model Based on ARIMA and & Algorithm, L. S. T. M. Mathematics 13, 1838. https://doi.org/10.3390/MATH13111838 (2025).

Luo, D., Guan, Z., Ding, L., Fang, W. & Zhu, H. A Data-Driven methodology for hierarchical production planning with LSTM-Q Network-Based demand forecast. Symmetry. 17(5), 655. https://doi.org/10.3390/SYM17050655 (2025).

Li, Y. & Wei, Z. Regional Logistics Demand Prediction: A Long Short-Term Memory Network Method. Sustainability 14, 13478. https://doi.org/10.3390/SU142013478 (2022).

Elkenawy, E. S. M., Alhussan, A. A., Khafaga, D. S., Tarek, Z. & Elshewey, A. M. Greylag Goose optimization and multilayer perceptron for enhancing lung cancer classification. Sci. Rep. 14 (1), 1–23. https://doi.org/10.1038/S41598-024-72013-X (2024).

Saqr, A. E. S., Saraya, M. S. & El-Kenawy, E. S. M. Enhancing CO2 emissions prediction for electric vehicles using Greylag Goose Optimization and machine learning, Sci. Rep. 15(1), 1–38. https://doi.org/10.1038/S41598-025-99472-0 (2025).

Elkenawy, E. S. M., Alhussan, A. A., Eid, M. M. & Ibrahim, A. Rainfall classification and forecasting based on a novel voting adaptive dynamic optimization algorithm, Front. Environ. Sci. 12, 1417664. https://doi.org/10.3389/FENVS.2024.1417664 (2024).

El-Kenawy, E. S. M. et al. Smart City Electricity Load Forecasting Using Greylag Goose Optimization-Enhanced Time Series Analysis, Arab. J. Sci. Eng. 1–19. https://doi.org/10.1007/S13369-025-10647-3 (2025).

Alhussan, A. A. L. I., El-Kenawy, E. S. M., Khafaga, D. S., Alharbi, A. H. & Eid, M. M. Groundwater resource prediction and management using comment feedback optimization algorithm for deep learning. IEEE Access. 13, 169554–169593. https://doi.org/10.1109/ACCESS.2025.3614168 (2025).

M5 Forecasting. - Accuracy | Kaggle. Accessed: Sep. 03, 2025. [Online]. Available: https://www.kaggle.com/competitions/m5-forecasting-accuracy/data.

Nesca, M., Katz, A., Leung, C. K. & Lix, L. M. A scoping review of preprocessing methods for unstructured text data to assess data quality. Int. J. Popul. Data Sci. J. Website: Www Ijpdsorg. 7 (1). https://doi.org/10.23889/ijpds.v6i1.1757 (2022).

Qiao, Y., Wang, T., Lu, J., Liu, K., TEMPO. & Time-evolving multi-period observational anomaly detection method for space probes. Chin. J. Aeronaut. 38 (9), 103426. https://doi.org/10.1016/J.CJA.2025.103426 (2025).

Kalita, E. et al. LSTM-SHAP based academic performance prediction for disabled learners in virtual learning environments: a statistical analysis approach, Soc. Netw. Anal. Min. 15(1), 1–23. https://doi.org/10.1007/S13278-025-01484-1 (2025).

Saba, R., Bilal, M., Ramzan, M., Khan, H. U. & Ilyas, M. Urdu Text-to-Speech Conversion Using Deep Learning. In 2022 International Conference on IT and Industrial Technologies (ICIT), pp. 1–6. (2022). https://doi.org/10.1109/ICIT56493.2022.9989175.

Muhsnhasan, M., Priyanka, K., Shahebaaz, A., Devi, M. & Sushama, C. Securing Blockchain based Supply Chains in Agriculture using Graph Neural Networks for Anomaly Detection. In International Conference on Intelligent Systems and Computational Networks, ICISCN 2025, (2025). https://doi.org/10.1109/ICISCN64258.2025.10934647.

Fu, C., Liu, G., Yuan, K. & Wu, J. Nowhere to H2IDE: fraud detection from Multi-Relation graphs via disentangled homophily and heterophily identification. IEEE Trans. Knowl. Data Eng. 37 (3), 1380–1393. https://doi.org/10.1109/TKDE.2024.3523107 (2025).

Gao, H. et al. Memory-augment graph transformer based unsupervised detection model for identifying performance anomalies in highly-dynamic cloud environments. J. Cloud Comput. 14(1), 1–18. https://doi.org/10.1186/S13677-025-00766-5 (2025).

Liu, Y. et al. ODMixer: Fine-Grained Spatial-Temporal MLP for metro Origin-Destination prediction. IEEE Trans. Knowl. Data Eng. 37 (9), 5508–5522. https://doi.org/10.1109/TKDE.2025.3579370 (2025).

Huang, C. et al. Correlation information enhanced graph anomaly detection via hypergraph transformation. IEEE Trans. Cybern. 55 (6), 2865–2878. https://doi.org/10.1109/TCYB.2025.3558941 (2025).

Bosnyaková, B., Babič, F., Adam, T. & Biceková, A. Anomaly detection in blockchain network using unsupervised learning, pp. 000221–000224, (2025). https://doi.org/10.1109/SAMI63904.2025.10883055

Chen, S., Long, X., Fan, J. & Jin, G. A causal inference-based root cause analysis framework using multi-modal data in large-complex system. Reliab. Eng. Syst. Saf. 265, 111520. https://doi.org/10.1016/J.RESS.2025.111520 (2026).

Jiang, W., Zheng, B., Sheng, D. & Li, X. A compensation approach for magnetic encoder error based on improved deep belief network algorithm. Sens. Actuators Phys. 366, 115003. https://doi.org/10.1016/J.SNA.2023.115003 (2024).

Jumani, F. & Raza, M. Machine Learning for Anomaly Detection in Blockchain: A Critical Analysis, Empirical Validation, and Future Outlook, Computers 14, 247. https://doi.org/10.3390/COMPUTERS14070247 (2025).

Tu, B. et al. Multi-scale autoencoder suppression strategy for hyperspectral image anomaly detection. IEEE Trans. Image Process. https://doi.org/10.1109/TIP.2025.3595408 (2025).

Wang, Y., Wang, Z. & Zhao, F. Integrating Node-Place model with Shapley additive explanation for metro ridership regression. IEEE Trans. Intell. Transp. Syst. https://doi.org/10.1109/TITS.2025.3546471 (2025).

Author information

Authors and Affiliations

Contributions

The sole author Chen Xiaoyang has fully contributed to this study.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xiaoyang, C. Explainable dual LSTM-autoencoders with exogenous features for anomaly detection and supply chain forecasting. Sci Rep 15, 42371 (2025). https://doi.org/10.1038/s41598-025-26449-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-26449-4