Abstract

Today, communication using digital media has increased rapidly. In this digital communication era, providing security to sensitive images is essential at the time of transmission. The images are mostly focused nowadays because they are used directly or indirectly in every field of information sharing, for example, healthcare, military, intellectual property, and many more areas. In this paper a new approach to image scrambling is proposed to secure the sensitive image information. The proposed method is focused on the core concept of data structure. It involves the use of binary trees and the efficiency of hash tables, which enhances image security during transmission. The dynamic data structure properties enhanced the scrambling and descrambling process. In the scrambling method, the image pixels are first stored in the binary tree using the hash table. After the binary tree arrangement, pixels are collected into a one-dimensional array using the tree traversing process. Now, the one-dimensional array is converted into the two-dimensional array to match the size of the original image. The descrambling method is the inverse of the scrambling method. The proposed method maintains the quality of the image for both sender and receiver; different quality assessment parameters like PSNR, MSE, NCC, AD, SC, MD, Corr, HC, VC, DC, NPCR, UACI and Entropy are used to check the outcome. The outcome of PSNR between the original image and the scrambled image is less than 4 dB. For the descrambled image and the original image, the PSNR is infinite. According to the obtained results, there is a 100% similarity between the original image and the descrambled image. The proposed method was also compared with the existing methods, and it showed a negative or near to ‘0’ correlation between the scrambled image and the original image. In future work the proposed scrambling method can be used in image watermarking or image steganography techniques.

Similar content being viewed by others

Introduction

In the past few years, working habits have changed in terms of communication, shopping and transaction techniques. As a result, a lot of visual content is exchanged on the media and on networks. This type of transmission can lead to hacking, copyright infringement, and unauthorized access. Visual data related to the medical, military and government sectors is very secret. To ensure the safety and security of the data, different techniques are used, such as cryptography, steganography, encryption, watermarking, etc. To protect the content of the visual data, research has been carried out over the years.

Cryptography1 is a study that is used to ensure the security of images during multimedia transmission. In order to hide the original content of the visual data, plaintext has been converted to ciphertext, which is not readable and understandable by third parties. In the decryption phase, the ciphertext will be decrypted to its original content using different algorithms and keys. The working of cryptography is shown in Fig. 1.

Steganography2 is another method of image security that has been increasingly used in recent years. In this method, secret messages are embedded within the cover image and the resultant image is called the stego image. In the decoding phase, using the stego image and keys, the secret original message can be revealed. In recent years, interest in steganography has shifted from traditional and ancient practices to hiding secret data and media objects3, especially secret image files, in image files4. The mechanism of Steganography is shown through a block diagram in Fig. 2.

Watermarking5 is a technique similar to steganography. In watermarking, the visual content will be encoded with the watermark integration and the noise. Watermarks can be visible (e.g., overlaying text or logo) or invisible (embedded within the content). On the receiver end, the encoded image can be decoded by removing the watermarking. The framework of the watermarking system is represented by a block diagram in Fig. 3.

A 2023 analysis by Cybersecurity Ventures estimates that by 2025, cybercrime will cost the global economy $10.5 trillion yearly, with digital picture manipulation and data breaches accounting for a sizable amount of this total. Particularly, industries such as healthcare and defence are more vulnerable to image tampering, where unapproved changes might have catastrophic repercussions. The creation of safe and reversible picture scrambling techniques to ensure data integrity and secrecy during transmission is highly motivated by these growing concerns.

In this paper, an efficient image scrambling and descrambling method is proposed that provides high security for image transmission through multimedia. The use of dynamic data structures, i.e., binary trees and hash tables, leads to the high efficiency of the proposed method. This paper is structured in different sections. Section 1, i.e., “Introduction,” consists of a discussion about cryptography, steganography, and watermarking techniques. Some image security-related work has been discussed in Section 2, i.e., “Literature Review.” In Section 3, i.e., “Proposed Method,” the proposed scrambling and descrambling methods, followed by their algorithms, have been presented. Section 4, i.e., “Experimental Results,” consists of an experimental setup, and experimental results from the proposed method have been discussed. And the last section, Section 5, i.e., “Comparative Study,” consists of a comparison between different image security techniques and the proposed method based on different performance measure metrics.

The proposed method differentiates itself from existing image scrambling techniques by introducing a novel, structure-aware approach based on data structures such as binary trees and hash tables. Although most traditional techniques rely on fixed transformations, permutation matrices, or chaotic maps, our method takes advantage of the logical organization and dynamic properties of these data structures to enhance both security and retrieval accuracy.

Literature review

In this section, the required terminologies related to image scrambling and descrambling methods are discussed. Also, the section highlighting the previously developed scrambling methods.

History of scrambling methods

As current threads on image security are increasing, this opens up new research fields for researchers in this domain. This section discusses some of the previously completed work, where image scrambling techniques can be broadly divided into structural scrambling (6,7), chaotic scrambling (8,9), and hybrid techniques (10,11). References are discussed in detail to provide a better understanding of each approach, and several articles that compare different scrambling methods across these categories are also reviewed (12,13,14,15).

In6 a comparative study has been performed between different scrambling techniques such as 2D mapping, bit plane scrambling, matrix transformation, and key-based row and column shifting techniques. As a result, it is revealed that matrix-based image scrambling applied to the Arnold transform is performing better than other techniques. In7 (steganography and cryptography), two techniques have been executed that help secure transmission. This includes the techniques of hiding the text in the image using the LSB (Least-significant bit) algorithm, image inside the image using the least significant bit and genetic algorithm, video steganography using hamming code, steganography using cryptography, and deep neural networks.

In8 different chaotic maps depending on different scrambling methods and performance measures have been discussed. Scrambling methods include the Fibonacci-Lucas sequence, the logistic map, the Baker map, the Henon map, the Arnold map, etc. And performance measures like NPCR (Number of Pixels Change Rate), UACI (Unified Average Changing Intensity), mean value analysis, entropy, and peak signal-noise ratio are measured. In9 three different cryptographic techniques, such as Arnold Transform, Rivest Code 4 (RC4), and one-time pad (OTP), have been implemented, and performance measures like UACI, SSIM (structural similarity index measure), NPCR, entropy, computation time, and histogram analysis have also been calculated for comparison. Three techniques have their own advantages, like Arnold transform has an advantage on histogram, RC4 has an advantage on entropy value, and OTP has an advantage on SSIM and UACI.

In10 the use of DWT (Discrete Wavelet Transform) in different steganography techniques and its algorithm have been discussed. Image steganography techniques based on DWT include Haar DWT, diamond encoding in DWT, redundant DWT, QR factorization, DWT, and the Data Mining ID3 (Iterative Dichotomiser 3) algorithm. In11 two image watermarking techniques have been discussed using DCT, DWT, and SVD. The first is a hybrid watermarking technique using DCT-DWT-SVD, and the second is a conventional DCT-DWT-SVD hybrid watermarking technique with an image scrambling method (Arnold transform). For the validation of techniques, normalized correlation (NC) has been executed, and as a result, it was found that the second one is performing better.

In12 image encryption techniques based on 2D chaotic maps like Arnold 2D cat map, Baker map, Henon map, cross chaos map, and 2D logistic map have been studied. To check the efficiency of every technique, NPCR (Number of Pixels Change Rate) and UACI (Unified Average Changing Intensity) values have been calculated, and a comparison has been implemented. As a result of the comparison, NPCR is around 99%. The experimental result shows that the Arnold 2D cat map is better than other chaotic maps. In13 different digital steganography techniques have been discussed, and for validation, mean square error (MSE) and peak signal-to-noise ratio (PSNR) have been calculated. The techniques include Mod 10, DCT, PVD (pixel value differencing) 2, PVD 1, Stream of 1s and 0s, IP (Inverted Pattern Approach), and OPAP (Optimum Pixel Adjustment Procedure).

In14 a comparative analysis of different scrambling methods and their parameters has been executed. Analysis consists of two methods: one is queue transformation, and another is logistic mapping using chaotic sequence scrambling. As a result, SSIM for the logistic transform is less than the queue transform, which makes transmission safer. In15 various CA-based DIS techniques with the same parameters have been implemented. CA-based DIS techniques have been analyzed based on varying image sizes. The robustness of all the techniques has been validated using correlation coefficient analysis and the number of pixels change rate. In “A novel DNA-based key scrambling technique for image encryption,” an efficient DNA-based key scrambling method has been proposed. The proposed method has been evaluated using 15 different datasets, and performance has been measured based on entropy, key space, cipher pixel correlations, variance of the histogram, time complexity, and PSNR.

Overview of famous scrambling methods

Some Famous scrambling methods has been discussed in Table 1, which provides an edge in the era of image security. Table 1 is designed to give an overview of the performance and characteristics of each method based on these features, which are key for determining the appropriate use case and the level of security or efficiency needed.

Proposed methods

The proposed methods are divided into two folds: one is scrambling, and the other is descrambling. The scrambling part involves rearranging the pixels of the original image, which makes the original image more private from unauthorized users. The descrambling part involves reordering the pixel of the scrambled image using the proposed algorithm and keying it to its own original format. The central approach of this proposed method is the integration of dynamic data structures like binary trees and hash tables, which makes the image security technique easier and more efficient. In this section proposed scrambling method and proposed descrambling method has been elucidated through detailed implementation using Proposed Algorithm and Flow Chart. Figure 4, showing the proposed scrambling method framework.

Proposed scrambling method

The proposed scrambling method divides the whole scrambling process into different substages. Starting with the loading of the input image, which may or may not be a color image22, the image is preprocessed by converting the input image to a grayscale image if not and reshaping the grayscale 2D image array to a 1D image array. Next, finding the presence of pixel value 0 can be a limitation of the proposed image scrambling method. Continue with hash table creation from the 1D image array along with the creation of binary trees from the 1D image array indices, perform the in-order and pre-order traversals, and return the scrambled image from the pre-order traversal. The framework of the proposed scrambling method is shown in Fig. 5 and described in Algorithm ’1’.

Below is a formal mathematical representation of the hash structure and traversal logic used in proposed image scrambling method, including the hash function, collision handling, and tree traversal are discussed.

Image pixel representation

Let an input grayscale image be represented as a 2D matrix:

-

H: height (number of rows)

-

W: width (number of columns)

Each pixel I(i, j) has an intensity value in the range:

To process the image, flatten it into a 1D array:

Hash table structure

We define a hash table H to store pixel values and their positions:

To determine the index in the table, we use a hash function:

Where:

-

\(p_k = I(i_k, j_k)\) is the intensity value at pixel position \((i_k, j_k)\).

-

a, b, c are constants (preferably prime numbers) to reduce collisions.

-

M is the size of the hash table, ideally \(M \ge N\).

-

h returns the hash index for each pixel entry.

Collision handling

In case of hash collisions, we use chaining, meaning each index in H holds a linked list of pixel entries:

The expected number of collisions using chaining in a well-designed hash table is:

To minimize collisions, choose M such that \(M \approx N\) or select a small prime number greater than N.

Binary tree construction

Once hashed, pixels are inserted into a Binary Search Tree (BST) or a Balanced BST (e.g., AVL Tree), using the hash index as the key:

Each node in the binary tree stores the following information:

Where:

-

h is the hash index.

-

\((p_k, i_k, j_k)\) is the pixel value and its position in the original image.

-

The tree T maintains order based on h to enable efficient traversal and retrieval.

Tree traversal for scrambling

To scramble the pixels, perform an in-order traversal of the binary tree:

Where:

is the one-dimensional scrambled sequence of pixels obtained by visiting nodes in the order: Left Subtree \(\rightarrow\) Root Node \(\rightarrow\) Right Subtree.

After that, to scramble the pixels using a pre-order traversal, apply a recursive traversal function:

Where:

is the one-dimensional scrambled sequence obtained by visiting nodes in the order: Root Node \(\rightarrow\) Left Subtree \(\rightarrow\) Right Subtree.

Reshape this 1D array S into a 2D scrambled image:

Example: Binary Tree Insertion and Traversal on a 2D Grayscale Image

Step 1: Input 2D Image (3\(\times\)3)

We use a simple \(3 \times 3\) grayscale image matrix:

Step 2: Flatten the Image with Coordinates

Index | Value | Coordinates (i, j) |

|---|---|---|

0 | 34 | (0, 0) |

1 | 120 | (0, 1) |

2 | 45 | (0, 2) |

3 | 78 | (1, 0) |

4 | 255 | (1, 1) |

5 | 60 | (1, 2) |

6 | 90 | (2, 0) |

7 | 10 | (2, 1) |

8 | 200 | (2, 2) |

Step 3: Apply Hash Function

We define the hash function:

Value | (i,j) | Hash (mod 100) |

|---|---|---|

34 | (0,0) | 102 \(\rightarrow\) 2 |

120 | (0,1) | 367 \(\rightarrow\) 67 |

45 | (0,2) | 141 \(\rightarrow\) 41 |

78 | (1,0) | 239 \(\rightarrow\) 39 |

255 | (1,1) | 782 \(\rightarrow\) 82 |

60 | (1,2) | 209 \(\rightarrow\) 9 |

90 | (2,0) | 289 \(\rightarrow\) 89 |

10 | (2,1) | 83 \(\rightarrow\) 83 |

200 | (2,2) | 574 \(\rightarrow\) 74 |

Step 4: Insert into Binary Search Tree (BST)

Using the hash values as keys, the binary tree is built as follows:

-

Insert (2, 34) \(\rightarrow\) root

-

Insert (67, 120) \(\rightarrow\) right of 2

-

Insert (41, 45) \(\rightarrow\) left of 67

-

Insert (39, 78) \(\rightarrow\) left of 41

-

Insert (82, 255) \(\rightarrow\) right of 67

-

Insert (9, 60) \(\rightarrow\) right of 2

-

Insert (89, 90) \(\rightarrow\) right of 82

-

Insert (83, 10) \(\rightarrow\) left of 89

-

Insert (74, 200) \(\rightarrow\) left of 82

Tree structure (in key, value format):

Step 5: Tree Traversals Pre-order Traversal (Root \(\rightarrow\) Left \(\rightarrow\) Right):

Order of visit:

Pre-order Result:

In-order Traversal (Left \(\rightarrow\) Root \(\rightarrow\) Right):

Order of visit:

In-order Result:

According to the Fig. 6. , the proposed scrambling method applied on image Flower, size of 250 x 250. The image is not containing any zero-pixel value. The outcome 6(b), represent the image is properly scrambled and having PSNR 3.73 dB and MSE 27559.04, which shows that image is properly scrambled and differ from the original input image. According to23, if the PSNR is lesser and MSE is high the image is properly scrambled.

Proposed descrambling method

The proposed descrambling method divides the descrambling process into different substages. Starting with loading the in-order traversal, pre-order traversal, and hash table, use them as keys for further processing. Then, building the binary tree from preorder traversal and inorder traversal, followed by level-order traversal on the created binary tree, is performed. Continue with fetching the real values from the hash table associated with the corresponding indices and replacing them in the level-order traversal result. Then, from the resultant array, the descrambled image was reconstructed and displayed. The framework of the proposed descrambling method is shown in Fig. 7 and described in Algorithm ’2’.

According to the Fig. 8., the proposed Descrambling method applied on image Flower, size of 250 x 250.The PSNR and MSE value has been calculated between Descrambled Image and Original Image. The PSNR is Inf dB and MSE is 0, which shows that image is totally Descrambled and same as the original input image. According to23, if the PSNR is higher and MSE is lower the image is totally Descrambled.

Experimental results

This section is divided into two subsections: Subsection 4.1, i.e., “Experimental Setup,” and Subsection 4.2, i.e., “Results.” In Subsection 4.1, a tabulation of the different software and hardware requirements required for this experiment to implement the proposed method has been presented. In the next section, Subsection 4.2 consists of different figures and outputs of different performance measure parameters from the proposed method. The aim of this section is to provide evidence to support the theoretical framework discussed in the previous section and to demonstrate the effectiveness and validity of the proposed method.

Experimental setup

Table 2, outlines the software and hardware needed to run the program of the proposed method. The software side specifies a compatible operating system (Windows, Linux, or Mac), the programming language (MATLAB), and a specific version of an integrated development environment (IDE) for creating the program. On the hardware side, it details the minimum processor (Intel Core i5), hard drive space (10 GB), and random-access memory (256 MB).

Results

For experimental purpose proposed Method has been applied on different images i.e. “Dog.jpg”, “Lenna.jpg”, “Goldhill.jpeg” and “flower.jpg”. As a result, Original Image, Grayscale Image, Scrambled Image and descrambled image have been represented through tabulation in Table 3.

The IQM, which means Image Quality Measurement system, approaches the development of image processing algorithms. In prediction of the processed image performance, so performed, IQM can take place. Quality of the Imagery is defined as the attribute of an image that assesses the quality of services in a processed image by comparing it with an optimal image.

People often use imaging systems to look at pictures, so judging the quality of images by asking people is usually seen as a reliable way to do it. But in situations where things need to be done quickly, like in real-time applications, using people to judge isn’t practical because it is complicated and hard to set up. That is why, in recent years, more people have been using objective methods to assess image quality. The quality assessment parameters details are discussed in this section.

Peak signal to noise ratio (PSNR)

Peak Signal-to-Noise Ratio (PSNR)24 is a standard way to measure how good a reconstructed image or video looks compared to the original. It helps us understand how much noise is added during processes like compressing, sending, or securing the data. A higher PSNR means better quality, with less noise. PSNR shows the ratio between the strongest part of the signal and the noise that messes it up, usually measured on a scale called decibels. PSNR can be calculated like this:

Mean squared error (MSE)

Mean Squared Error (MSE)24 in image encryption is usually used to measure how much the original image and the encrypted image differ. In image encryption, MSE helps us see how much the encryption process changes the image. This ensures that the encrypted image is very different from the original one, which makes it more secure. The formula for MSE is:

Where:

-

1.

I(i,j) represents the pixel intensity of the original image at position (i,j)

-

2.

K(i,j) represents the pixel intensity of the encrypted image at position (i,j)

-

3.

m and n are the dimensions (rows and columns) of the image.

A high MSE value in image encryption means there’s a big difference between the original and encrypted images, which is good for strong encryption. On the other hand, a lower MSE suggests that the encrypted image is too similar to the original, which could weaken security.

Normalized cross correlation (NCC)

Normalized Cross-Correlation (NCC)24 is commonly used in image processing, especially for finding patterns in images, but it’s also useful for checking how well an image encryption method works. NCC helps measure how similar the original image is to the encrypted one. For a good encryption method, this similarity should be very small, meaning the encrypted image looks very different from the original.

NCC is calculated using this formula:

An NCC close to zero between the original and encrypted image, for a secure encryption scheme, indicates that the two images are uncorrelated. Hence, a high value of the NCC can indicate poor encryption or, in other words, too much information is retained by the encrypted image from the original image.

Average difference (AD)

Average Difference-AD24 is a statistical method of analysis for security in the field of image encryption techniques. It denotes how much the pixel values of an original image differ on average from that of the encrypted image. The high value of AD states that the encryption has changed the image significantly, which is quite desirable in strong encryption schemes.

The formula for the calculation of Average Difference (AD) is:

Where:

-

1.

m denotes the number of rows and n denotes columns.

-

2.

\(\hbox {P}\_{0}\) (i,j) represents the pixel value of the original image at a position (i,j)

-

3.

\(\hbox {P}\_{\textrm{e}}\) (i,j) is the pixel value at position (i,j) from the encrypted image.

-

4.

The sum of the absolute difference between every original and encrypted pixel value is averaged.

Structural content (SC)

Republished from Nat Commun 10, 2864 (2019) Full size imageStructural Content (SC)24 is standard metric for quality assessment in Image Processing. It verifies whether structural content of the original image and its corrupted version are similar. It works really well when two images have different level of detail and structure like in this case.

We define the Metric as follows: Structural Content (SC)

Understanding Structural Content (SC):

-

1.

SC = 1: Shows that the images are exactly the same.

-

2.

SC > 1: Means the changed image has less structure compared to the original.

-

3.

SC < 1: Means the changed image has more structure than the original.

SC is very helpful in tasks like compressing images, making them better, and reducing noise, where keeping the original image’s structure is important for quality. But it might not notice small changes in textures or fine details as well as other measures like SSIM (Structural Similarity Index).

Maximum difference (MD)

Maximum Difference (MD)24, in image processing, is a measure indicating the maximum difference between two images with respect to pixel intensities. It is used in comparing the similarity or difference between a reference image and some processed or otherwise altered version of it. Maximum Difference looks for corresponding pixels between two images and calculates the maximum absolute difference between their intensities.

Calculation:

Where:

\(\hbox {I}\_{1}\) (x, y) and \(\hbox {I}\_{2}\) (x, y) represent the pixel intensities at the location (x, y) in two images.

The difference is calculated for each pixel, and the maximum value is selected.

Let’s take an example:

Original Image (\(I_1\)):

Processed Image (\(I_2\)):

To find the MD, we calculate the absolute differences for each pixel and then find the maximum value:

The maximum difference here is ’2’, so (MD = 2).

Correlation coefficient

In image processing, the correlation coefficient8 is a tool used to measure how similar two images are. It’s especially helpful for tasks like finding a pattern in an image, aligning images correctly, and checking how well an image has been rebuilt. The correlation coefficient, usually represented by \(\rho\) or r, is a statistical tool that shows how strongly two variables, like two images, are connected in a straight line. In image processing, this helps us see how similar two images are.

For two images \(I_1\) and \(I_2\), the correlation coefficient can be calculated as:

where,

\(\textrm{Cov}(I_1, I_2)\) is the covariance between the two images, and

\(\sigma _{I_1}\) and \(\sigma _{I_2}\) are the standard deviations of the two images.

Number of pixels change rate (NPCR)

NPCR (Number of Pixels Change Rate) is a widely used metric to assess how well encryption or picture scrambling works25. It measures the proportion of pixels that differ between two images, typically the original and the encrypted or scrambled version.

where

Here, \(I_1\) is the original image, \(I_2\) is the scrambled/encrypted image, and \(M \times N\) is the total number of pixels.

Unified average changing intensity (UACI)

Another common way to compare the average intensity difference between two images is UACI (Unified Average Changing Intensity)26. This is usually done between an original image and a scrambled or encrypted image. It works with NPCR by measuring changes in pixel intensity, not just if a pixel changed.

Here, \(I_1\) is the original image, \(I_2\) is the scrambled/encrypted image, and \(M \times N\) is the total number of pixels. The division by 255 normalizes the pixel intensity difference for 8-bit images.

Table 3 and Fig. 9 shows how well the new scrambling method works on four different pictures: Dog.jpg, Lenna.jpg, Goldhill.jpeg, and flower.jpg. To figure out which picture is scrambled the best, we need to look at several important measurements: PSNR (Peak Signal-to-Noise Ratio), MSE (Mean Squared Error), NCC (Normalized Cross-Correlation), AD (Absolute Difference), SC (Structural Content), MD (Maximum Difference), NPCR ( Number of Pixels Change Rate), and Unified Average Changing Intensity (UACI).

A low PSNR between the original and scrambled data confirms that scrambling is effective at concealing the original content, while a high PSNR upon descrambling confirms that the original data remains intact and is accurately recoverable. PSNR and MSE, these measures are connected in a way where one increases as the other decreases. A higher PSNR means better image quality, while a higher MSE means more errors. Among the images, “Goldhill.jpeg” has the highest PSNR (4.54 dB) and the lowest MSE (22881.51), showing it keeps the most quality after being changed. On the other hand, “flower.jpg” has the lowest PSNR (3.73 dB) and the highest MSE (27559.04), meaning it is the most distorted.

NCC is a way to measure how similar the original image is to the scrambled one. A smaller NCC number means the image is more scrambled. “Lenna.jpg” has the smallest NCC value (0.26), which shows it is the most scrambled, making it very different from the original. “Dog.jpg” has the biggest NCC value (0.71), meaning it is less scrambled and still looks a lot like the original image.

AD (Average Difference): This measure calculates the average difference between the original and scrambled images. A higher AD value means a bigger difference, which suggests better scrambling. According to the table, “Dog.jpg” has the highest AD (0.37), with “Goldhill.jpeg” coming next (0.13). This shows that “Dog.jpg” is more effectively scrambled in terms of average pixel difference compared to the other images.

SC (Structural Content) measures how similar the structure of the original image is to the scrambled one. A lower SC value means the structure is less similar, which suggests better scrambling. In the table, “Dog.jpg” has an SC value of 0.99, which is slightly less than 1. This shows it is more effectively scrambled in terms of structure compared to the other images, which all have an SC value of 1.

MD reveals the greatest difference in pixel intensity between the original and scrambled images. A higher MD means a bigger maximum change in corresponding pixels, which suggests better scrambling. Goldhill.jpeg has the highest MD value (222), indicating a stronger scrambling effect in some areas, while Lenna.jpg has the lowest MD (140).

Finally, The proposed scrambling method achieves an NPCR of 100% across all tested images, indicating that every pixel changes after scrambling, demonstrating strong resistance against differential attacks. The UACI values are also very high (ranging from 17.13% to 69.07%), showing substantial intensity changes between the original and scrambled images (27,28,29), which confirms the effectiveness of the scrambling at the pixel level.

Table 4 presents the summary statistics of the tested images using the proposed scrambling method. The analysis indicates that a lower average PSNR (mean \(\approx 4.16 dB\)) and a relatively high MSE (mean \(\approx 25,032\)) confirm effective scrambling. The low average NCC (\(\approx 0.525\)) and AD (\(\approx 0.165\)) suggest minimal similarity between original and scrambled images. The SC value remains close to 1, while the MD shows a moderate variation (\(\sim\) 183). Additionally, the NPCR and UACI values demonstrate high randomness, indicating a strong decorrelation between the original and scrambled images.

Table 5 and Fig. 9 shows the outcomes of using the suggested descrambling technique on four images: “Dog.jpg”, “Lenna.jpg”, “Goldhill.jpeg”, and “Flower.jpg”. The results show that the descrambling process works very well, as all images are fully recovered to their original state. The Peak Signal-to-Noise Ratio (PSNR) for all images is infinite (Inf), which means there is no noise or loss in the recovered images. Also, the Mean Squared Error (MSE) is 0, meaning there is no mistake or difference between the original and descrambled images.

The Normalized Cross-Correlation (NCC) is 1 for all images, showing that the descrambled images are exactly the same as their original versions in terms of pixel connection. Moreover, the Absolute Difference (AD) is 0, meaning there are no pixel-level differences between the original and descrambled images. The Structural Content (SC) for all images is 1, confirming that the structure of the recovered images is identical to that of the original images.

Comparative study

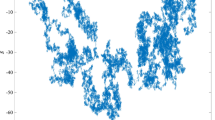

This paper proposed a new image scrambling method which uses dynamic data structures for encrypting the image. Table 6 compares different methods of scrambling image pixels to evaluate their effectiveness. The values for other scrambling methods are taken directly from Reference30 for comparative purposes. We use a common test image called Lenna.jpg. Mixing up the pixels is important for keeping images safe and private by making it hard to see the original picture. We look at several well-known methods and also our new method to see which one does the best job.

The comparison involves various scrambling methods such as Arnold’s Transformation, Fibonacci Transformation, Gray Code Bit Plane, 2D Mapping, Row and Column Shifting, and Row and Column Shifting with Bit Plane Scrambling. Each of these techniques is evaluated against a novel method aimed at improving the scrambling process for increased security and effectiveness.

We assess the performance of these methods using specific parameters, which include Horizontal Correlation (HC), Vertical Correlation (VC), Diagonal Correlation (DC), General Correlation (Corr), and Entropy. These parameters serve as common metrics employed to evaluate the efficacy of scrambling techniques. Correlation measurements (Corr, HC, VC, DC) determine the correlation among adjacent pixels, where a value nearing zero implies superior scrambling effectiveness by diminishing pixel value predictability. On the other hand, Entropy gauges the randomness present in the scrambled image, aiming for an optimal value around 8 for an 8-bit grayscale image to achieve maximum randomness.

The new scrambling method has a correlation value very close to zero (-0.0002), which means it does a good job of reducing the connection between the scrambled image and the original. On the other hand, methods like Arnold’s transformation (2.4868) and Fibonacci transformation (3.1170) have much higher correlation values, showing that the scrambled image still has some link to the original. Other methods, like Gray Code Bit Plane and 2D Mapping, also have low correlation, but the new method does better than most by getting even closer to zero, which is perfect for making the image less predictable and more random.

Figure 10, show that The Proposed Method demonstrates excellent performance in reducing correlation (Corr, HC, VC, DC), indicating effective disruption of pixel relationships — key for security. Entropy31 is slightly lower but still acceptable. Overall, the method prioritizes security (low correlation) and structure-aware scrambling, possibly at the slight expense of maximum entropy, which is a reasonable trade-off.Existing scrambling methods often lack reversibility, have high complexity, or offer limited security due to predictable patterns. The proposed approach overcomes these issues by using binary trees and hash tables, ensuring efficient, reversible scrambling with strong security and perfect image reconstruction.

Conclusion & future scope

In the last few years, the use of digital media transmission has increased. So, providing security to the images is very important so that they cannot be easily accessed by unauthorized third-party users32. This paper successfully developed a novel image scrambling and descrambling technique utilizing Dynamic Data Structures like binary trees and hash tables etc. This paper also includes a comparative study between different image encryption techniques. Proposed Scrambling method has been tested over multiple images like “Dog.jpg”, “Lenna.jpg”, “Goldhill.jpeg”, “flower.jpg” and efficiency has been evaluated based on different performance metrices like PSNR33, MSE, NCC, SC, AD and MD. From the result section it was observed that Flower.jpg shows the most distortion with the lowest PSNR (3.73 dB) and highest MSE (27559.04). Lenna.jpg has the lowest NCC score (0.26), meaning it is the most scrambled image when compared to the original. Dog.jpg has the highest AD score (0.37) and an SC value of 0.99, showing good scrambling in both average pixel difference and structure. Also, Goldhill.jpeg has the highest MD score (222), indicating the largest differences in pixel intensity, while Lenna.jpg has the lowest MD score (140). These measurements show different levels of how well the images are scrambled. From the comparative study section, it was also observed that the new scrambling method has a correlation value very close to zero (-0.0002), which means it does a good job of reducing the connection between the scrambled image and the original. On the other hand, methods like Arnold’s transformation (2.4868) and Fibonacci transformation (3.1170) have much higher correlation values, showing that the scrambled image still has some link to the original. Other methods, like Gray Code Bit Plane and 2D Mapping, also have low correlation, but the new method does better than most by getting even closer to zero, which is perfect for making the image less predictable and more random.

In future work, we plan to modify the hash function and data structure handling to fully incorporate 0-valued pixels in the scrambling process. This enhancement is expected to improve robustness and ensure better compatibility with practical image datasets.To keep improving the image scrambling algorithm for better speed and efficiency, it’s important to reduce the time it takes to process and use less memory without losing the quality of the scrambled images. Also, using advanced methods like deep learning, specifically convolutional neural networks (CNNs), can help the algorithm learn from data and create more effective scrambling techniques, making the images more secure. Making a simple and easy-to-use interface would help users easily add images, change scrambling settings, see the scrambled results, and use different parts of the algorithm. Lastly, adding strong ways to handle errors will make sure the system can deal with problems like wrong image formats, damaged data, or unexpected user actions, making it more reliable and better for users.

Data availability

The image data set used to support the findings of this study is freely available at following datasets links https://www.kaggle.com/datasets/jyotikhandelwal/dynamic-data-structure-based-image-security-method

References

Venkatesan, C. et al. A novel fuzzy system design for data-adaptive secret key generation and robust audio encryption. Nonlinear Dyn. https://doi.org/10.1007/s11071-025-11565-7 (2025).

Venkatesh, V. et al. Separable reversible data hiding by vacating room after encryption using encrypted pixel difference. Sci. Rep. 15, 11916. https://doi.org/10.1038/s41598-025-96152-x (2025).

Yahya, A. Steganography Techniques for Digital Images 1st ed. (Springer, 2018). https://doi.org/10.1007/978-3-319-78597-4.

Abdulla, A. A. Exploiting similarities between secret and cover images for improved embedding efficiency and security in digital steganography, Ph.D. dissertation, Dept. of Applied Computing, Univ. of Buckingham, United Kingdom (2015).

Patel, R. & Bhatt, P. A review paper on digital watermarking and its techniques. Int. J. Comput. Appl. 110, 10–13. https://doi.org/10.5120/19279-0692 (2015).

Mondal, B., Biswas, N. & Mandal, T. A comparative study on cryptographic image scrambling. Ann. Comput. Sci. Inf. Syst. 10, 261–268 (2017).

Agrawal, P., Bhowmick, O. & Prince, Y. Comparative analysis of various steganographic techniques. Turk. J. Comput. Math. Educ. 12(11), 5018–5025 (2021).

Agarwal, S. A review of image scrambling technique using chaotic maps. Int. J. Eng. Technol. Innov. 8(2), 77–98 (2018).

Setiadi, D. R. et al. A comparative study of image cryptographic method. In Proc. 5th Int. Conf. Inf. Technol. Comput. Electr. Eng. (ICITACEE) (2018). https://doi.org/10.1109/ICITACEE.2018.8576907.

Surse, N. M. & Vinayakray-Jani, P. A comparative study on recent image steganography techniques based on DWT. In Proc. Int. Conf. Wireless Commun., Signal Process. Netw. (WiSPNET), vol. 1 1308–1314. https://doi.org/10.1109/WiSPNET.2017.8299975 (2017).

Yadav, A. S. & Kumar, S. Comparative analysis of digital image watermarking based on DCT, DWT and SVD with image scrambling technique for information security. In Proc. Int. Conf. Comput. Characterizat. Techn. Eng. Sci. (CCTES), vol. 4 89–93. https://doi.org/10.1109/CCTES.2018.8674135 (2018).

Kabi, K. K., Pradhan, C., Saha, B. J. & Bisoi, A. K. Comparative study of image encryption using 2D chaotic map, In Proc. Int. Conf. Inf. Syst. Comput. Netw. (ISCON) 4, 105–108. https://doi.org/10.1109/ICISCON.2014.6965227 (2014).

Amirtharajan, R., Akila, R. & Deepikachowdavarapu, P. A comparative analysis of image steganography. Int. J. Comput. Appl. 2, 41–47. https://doi.org/10.5120/644-900 (2010).

Pawar, S. S. & Nandusekar, S. Improvised image scrambling technique with shuffling of pixel values and position. In Proc. Int. Conf. Commun. Electron. Syst. (ICCES) 31, 1–5. https://doi.org/10.1109/CESYS.2016.7889836 (2016).

Jeelani, Z. & Qadir, F. A comparative study of cellular automata-based digital image scrambling techniques. Evol. Syst. 12, 359–375. https://doi.org/10.1007/s12530-020-09326-5 (2020).

Turan, M., Gökçay, E. & Tora, H. An unrestricted Arnold’s Cat Map transformation. Multimed. Tools Appl. 83, 70921–70935. https://doi.org/10.1007/s11042-024-18411-9 (2024).

Mishra, M. Image encryption using Fibonacci-Lucas Transformation. Int. J. Cryptogr. Inf. Secur. 2, 131–141. https://doi.org/10.5121/ijcis.2012.2312 (2012).

Zhou, R. G., Sun, Y. J. & Fan, P. Quantum image gray-code and bit-plane scrambling. Quantum Inf. Process. 14, 1717–1734. https://doi.org/10.1007/s11128-015-0964-6 (2015).

Chen, C. F. & Lucas, A. Finite speed of quantum scrambling with long-range interactions. Phys. Rev. Lett. https://doi.org/10.1103/PhysRevLett.123.250605 (2019).

Bashir, Z., Iqbal, N. & Hanif, M. A novel gray scale image encryption scheme based on pixels’ swapping operations. Multimedia Tools Appl. 80, 1029–1054. https://doi.org/10.1007/s11042-020-09695-8 (2020).

Song, F. Y., Xu, G.-B., Wang, H.-K. & Jiang, D.-H. A quantum image encryption algorithm based on chaotic system and Rubik’s cube principle. Quantum Inf. Process. https://doi.org/10.1007/s11128-024-04492-w (2024).

Ponnambalam, M., Ponnambalam, M. & Jamal, S. S. A robust color image encryption scheme with complex whirl wind spiral chaotic system and quadrant-wise pixel permutation. Phys. Scr. 99(10), 105239. https://doi.org/10.1088/1402-4896/ad7075 (2024).

Sara, U., Akter, M. & Uddin, M. S. Image quality assessment through FSIM, SSIM, MSE and PSNR–a comparative study. J. Comput. Commun. 7, 8–18. https://doi.org/10.4236/jcc.2019.73002 (2019).

Memon, F., Unar, M. A. & Memon, S. Image quality assessment for performance evaluation of focus measure operators (2016).

Ponnambalam, M. et al. Hybrid inter woven scrambling with spiral shell 3D hyperchaotic diffusion for secure color image encryption. Nonlinear Dyn. https://doi.org/10.1007/s11071-025-11460-1 (2025).

Devi, C. S. & Amirtharajan, R. A novel 2D MTMHM based key generation for enhanced security in medical image communication. Sci. Rep. 15(1), 25411. https://doi.org/10.1038/s41598-025-10485-1 (2025).

Banu, A. & Amirtharajan, R. A robust medical image encryption in dual domain: Chaos-DNA-IWT combined approach. Med. Biol. Eng. Comput. 58, 1445–1458. https://doi.org/10.1007/s11517-020-02178-w (2020).

Ravichandran, D., Praveenkumar, P., Rayappan, J. B. B. & Amirtharajan, R. DNA chaos blend to secure medical privacy. IEEE Trans. Nanobiosci. 16(8), 850–858. https://doi.org/10.1109/TNB.2017.2780881 (2017).

Ravichandran, D., Praveenkumar, P., Rayappan, J. B. B. & Amirtharajan, R. Chaos-based crossover and mutation for securing DICOM image. Comput. Biol. Med. 72, 170–184. https://doi.org/10.1016/j.compbiomed.2016.03.020 (2016).

Mondal, B. Cryptographic image scrambling techniques. In Cryptographic and Information Security 37–65 (2018). https://doi.org/10.1201/9780429435461-2.

Nithya, C. et al. Secure gray image sharing framework with adaptive key generation using image digest. Sci. Rep. 15, 8854. https://doi.org/10.1038/s41598-025-92752-9 (2025).

Abdulla, A. A., Sellahewa, H. & Jassim, S. A. Stego quality enhancement by message size reduction and fibonacci bit-plane mapping. In Security Standardisation Research (SSR 2014). Lecture Notes in Computer Science (eds Chen, L. & Mitchell, C.) vol. 8893 149–164 (Springer, 2014). https://doi.org/10.1007/978-3-319-14054-4_10.

Ravichandran, D. et al. An efficient medical data encryption scheme using selective shuffling and inter-intra pixel diffusion IoT-enabled secure E-healthcare framework. Sci. Rep. 15, 4143. https://doi.org/10.1038/s41598-025-85539-5 (2025).

Acknowledgements

The authors sincerely thank Manipal University Jaipur for supporting this research and covering the Article Processing Charges (APC).

Funding

Open access funding provided by Manipal University Jaipur. This research received no external funding.

Author information

Authors and Affiliations

Contributions

S.K.S., J.K., and A.K.B. jointly conceived the idea and developed the methodology. A.K.B. implemented the proposed image scrambling algorithm and conducted the experiments. S.K.S. and J.K. contributed to the analysis and interpretation of results. A.K.B. wrote the main manuscript text and prepared the figures. All authors reviewed and approved the final manuscript.

Corresponding authors

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shee, S.K., Khandelwal, J. & Bairwa, A.K. Dynamic structure driven image scrambling technique for data protection. Sci Rep 16, 123 (2026). https://doi.org/10.1038/s41598-025-28925-3

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-28925-3