Abstract

Corpus linguistics provides an organized framework for examining a massive set of texts (corpora) to reveal structures, patterns, and interpretations in the language. Under the field of text-based emotion recognition (TER), corpus linguistics enables the extraction of linguistic features that inherently denote emotional expressions. With the application of annotated corpora encompassing emotionally labelled text, several studies train machine learning (ML) models for the identification and classification of emotions such as happiness, sad, anger, etc. The integration of linguistic corpus-based and data-driven approaches improves the accuracy and depth of emotion recognition, posing valuable understandings for applications in sentiment analysis (SA), social media monitoring, mental health assessment, and human-computer interaction (HCI). Therefore, this paper proposes an Emotion Detection in Text via Integrated Word Vector Representation and Multi-Model Deep Neural Networks (EDTIWVR-MDNN) approach. The EDTIWVR-MDNN approach intends to develop an effective textual emotion recognition and analysis to enhance sentiment understanding in natural language. Initially, the text pre-processing stage involves various levels for extracting the relevant data from the text. Furthermore, the word embedding process is performed by the fusion of the term frequency-inverse document frequency (TF-IDF), bi-directional encoder representations from transformers (BERT), and the GloVe technique to transform textual data into numerical vectors. For the classification process, the EDTIWVR-MDNN technique employs a hybrid of an attention mechanism-based temporal convolutional network and a bi-directional gated recurrent unit (AM-T-BiG) model. The experimental evaluation of the EDTIWVR-MDNN methodology is examined under the Emotion Detection from Text dataset. The comparison analysis of the EDTIWVR-MDNN methodology portrayed a superior accuracy value of 99.26% over existing approaches.

Similar content being viewed by others

Introduction

Corpus linguistics plays an essential role in TER by offering empirical linguistic data and logical methods for the detection of emotions in the text. With the systematic examination of an enormous, illustrative text corpus, researchers can reveal linguistic patterns, keywords, syntactic structures, etc., which are widely integrated to diverse emotional states1. Corpus linguistics aids in building an emotion-labelled dataset to identify culture- or context-specific emotions, and modelling emotion lexicons which enhance the outcome of emotion identification approaches. Moreover, corpus-driven works exhibit refined linguistic cues, such as metaphor use, intensifier, or negation pattern, which are significant to recognize emotions in text2 precisely. Emotion recognition holds a promising position in the domain of artificial intelligence (AI) and HCI. Different kinds of methods are employed to identify emotions in humans, such as textual information, facial expressions, heartbeat, body movements, and other physiological cues. In the domain of computational linguistics, detecting emotions in text is gaining importance from a practical perspective. Currently, the internet contains a vast volume of textual data. Extracting emotions for various objectives, such as business, is highly interesting3. As the most fundamental and straightforward medium, textual data produced during emotional communications is frequently employed to deduce an individual’s emotional condition. Furthermore, in recent years, with the swift progress of social platforms and intelligent devices, online social networks have emerged as an unmatched worldwide trend, including Facebook, Weibo, Line, and Twitter. TER aims at automatically detecting emotional states in textual expressions, namely Happiness, Sadness, and Anger4. TER involves a deeper examination than SA and has attracted significant attention from the academic community. For individuals, emotions are recognized swiftly through their internal feelings. At the same time, for automated TER systems, computational approaches must be regularly enhanced and refined to provide more precise emotion detection5. The increasing attention to TER is driven by the expansion of social networking platforms and online data, which enable users to interact and express views on diverse subjects. The interest in TER has also led to novel natural language processing (NLP) techniques centred on TER detection and classification.

Studies on TER have influenced numerous practical applications, such as healthcare, HCI, consumer evaluation, and education. Yet, the domain of NLP remains ambiguous due to its computational and linguistic approaches that enable machines to interpret and generate HCI through text and speech6. It focuses on building models for various functions such as emotions, interpretation, thoughts, and sentiment. SA identifies the sentiment of the given text as positive, neutral, or negative. Textual data frequently holds and conveys emotion in multiple forms, thus posing challenges in capturing and understanding it7. Emotions can be communicated clearly through emotion-related terms and strong language, making them easy to examine. However, that is not always the case. Factors like sentence formation, word choice, structure, and the use of punctuation can make emotion recognition more challenging8. In today’s world, technological progress is indispensable, impacting lives globally by improving productivity and efficiency. Artificial intelligence (AI) is increasingly valued for its ability to perform tasks similar to humans, driving significant advancements9. Deep learning (DL) is among the well-known branches of AI, where experts are exploring, implementing, and applying its strengths in different technological sectors, such as software engineering and information processing. Lately, DL methods have reduced the reliance on manual feature extraction and attained satisfactory outcomes10. However, based on the latest surveys on deep emotion recognition approaches, the majority of works emphasize visual, audio, and multimodal modalities. Business organizations and researchers significantly benefit from automatic detection of emotional cues to assist businesses and researchers in gaining deeper insights into user sentiments and behaviours. Using linguistic patterns within extensive text collections allows for more accurate and culturally aware emotion detection. Thus, a model is necessary for capturing subtle discrepancies in language, such as sarcasm or emphasis, resulting in more reliable emotion analysis in diverse contexts.

This paper proposes an Emotion Detection in Text via Integrated Word Vector Representation and Multi-Model Deep Neural Networks (EDTIWVR-MDNN) approach. The EDTIWVR-MDNN approach intends to develop an effective textual emotion recognition and analysis to enhance sentiment understanding in natural language. Initially, the text pre-processing stage involves various levels for extracting the relevant data from the text. Furthermore, the word embedding process is performed by the fusion of the term frequency-inverse document frequency (TF-IDF), bi-directional encoder representations from transformers (BERT), and the GloVe technique to transform textual data into numerical vectors. For the classification process, the EDTIWVR-MDNN technique employs a hybrid of an attention mechanism-based temporal convolutional network and a bi-directional gated recurrent unit (AM-T-BiG) model. The experimental evaluation of the EDTIWVR-MDNN methodology is examined under the Emotion Detection from Text dataset. The significant contribution of the EDTIWVR-MDNN methodology is listed below.

-

The framework employs a comprehensive multi-level text pre-processing process for clearing, normalizing, and refining raw textual data, ensuring the extraction of relevant and noise-free information. This process includes tokenization, stop-word removal, stemming or lemmatization, and case normalization. It also improves the semantic quality of features and lays a robust foundation for reliable word embedding and accurate classification.

-

The integrated TF-IDF, BERT, and GloVe techniques are utilized for the word embedding process for transforming textual data into rich and informative numerical vectors. This incorporation employs the merits of both statistical and contextual embeddings. It significantly enhances the representation of semantic relationships and improves model performance in intrinsic text classification tasks.

-

The classification process involves the hybrid TCN and BiGRU techniques for effectively capturing both long-range dependencies and local contextual features in sequential data. This hybrid architecture enhances the model’s ability to focus on relevant temporal patterns. It results in improved accuracy, robustness, and interpretability in intrinsic text classification scenarios.

-

The novelty of the EDTIWVR-MDNN technique lies in its integration of a hybrid word embedding scheme, combining TF-IDF, BERT, and GloVe, with the attention-enhanced AM-T-BiG models, which unite TCN and BiGRU. This unique incorporation enables deeper semantic understanding and temporal pattern recognition. It also improves classification performance on complex textual data while maintaining interpretability and generalization.

The rest of the paper is systematized as shown. “Survey of existing emotion detection approaches” comprehensively reviews the related literature and mechanisms that use existing models to detect emotion. The proposed design is presented in “Materials and methods”. Performance assessment and comparative study are given in “Evaluation and results”. “Conclusion” concludes by defining the research.

Survey of existing emotion detection approaches

Moorthy and Moon11 proposed a multimodal learning approach to recognize emotions, known as the hybrid multi-attention network (HMATN) model. A collaborative cross-attentional model for audio-visual amalgamation, focusing on efficiently capturing salient features through modalities, although protecting both inter- and intra-modal associations. This paradigm computes cross-attention weights through examining the association between merged distinct modes and feature illustrations. In the meantime, this paradigm leverages the hybrid attention of a single and parallel cross-modal (HASPCM) module, encompassing a single-modal attention module and a parallel cross-modal attention module, to utilize multimodal data and unknown features to enhance representation. Mengara et al.12 presented a new Mixture of Experts (MoE) architecture, solving the issues by incorporating a dynamic gating mechanism, transformer-driven sub-expert networks alongside a cross-modal attention and sparse Top-k activation mechanisms. All modalities are refined by various dedicated sub-experts created to extract a complex contextual and temporal pattern. In contrast, the dynamic gating particularly influences the aids of the most related experts. Jon et al.13 presented the SER in naturalistic conditions (SERNC) challenge, which addresses emotional state assessment and categorical emotion recognition. To manage the difficulties of natural speech, such as intra- and inter-subject inconsistency, the authors proposed multi-level acoustic-textual emotion representation (MATER). This new hierarchical approach incorporates textual and acoustic features at the text, word, and embedding levels. By combining lower-level acoustic and lexical cues alongside higher-level contextualized representations, MATER efficiently extracts semantic nuances and fine-grained prosodic variations. Al Ameen and Al Maktoum14 presented a technique named SVM-ERATI, an SVM-assisted technique for emotion recognition that involves audio and text information (ATI). The audio features that are extracted comprise prosody-like pitch and energy, and Mel-frequency cepstral coefficients (MFCCs) regarding the audio emotion properties. Tahir et al.15 introduced a new emotion recognition paradigm, which utilizes DL techniques to detect five essential human emotions and the pleasure dimensions (valence) related to these emotions, utilizing keystroke and text dynamics. DL techniques are then utilized to forecast an individual’s emotions through text data. Semantic analysis of the text data is attained through the global vector (Glove) representation of words. In16, an innovative multimodal incessant emotion recognition architecture is introduced to overcome the above difficulties. The multimodal attention fusion (MAF) technique is presented for multimodal fusion to utilize redundancy and complementarity among several modalities. To resolve temporal context relationships, the global contextual TCN (GC-TCN) and the local contextual TCN (LC-TCN) were introduced. Those models can gradually combine multiscale temporal contextual data from input streams of various modalities. Zhang et al.17 proposed a novel MER methodology that leverages both text and audio by utilizing hybrid attention networks (MER-HAN). Particularly, an audio and text encoder (ATE) block furnished with DL approaches alongside the local intra-modal attention mechanism is primarily developed for learning higher-level text and audio feature representations from the related text and audio sequences.

In Ref.18, a new hybrid multimodal emotion recognition system, InceptionV3DenseNet, is recommended for enhancing the detection precision. At first, contextual features are captured from various modalities, namely text, audio, and video. Zero-crossing rate, MFCC, pitch, and energy are captured from audio modalities, and Term Frequency–Inverse Document Frequency (TF-IDF) are captured from textual modalities. Liu et al.19 proposed SemantiCodec. This advanced audio codec leverages a dual-encoder architecture by integrating a semantic encoder based on self-supervised Audio Masked Autoencoder (AudioMAE) and an acoustic encoder. Bárcena Ruiz and Gil Herrera20 proposed a model by using an ensemble of partially trained Bidirectional Encoder Representations from Transformers (BERT) models within Condorcet’s Jury Theorem (CJT) framework. The approach utilizes the Jury Dynamic (JD) algorithm with reinforcement learning (RL) to adaptively improve performance despite limited training data. Noor et al.21 proposed a Stacking Ensemble-based Deep learning (SENSDeep) technique by integrating multiple pre-trained transformer models, including BERT, Robustly Optimized BERT Pre-training Approach (RoBERTa), A Lite BERT (ALBERT), Distilled BERT (DistilBERT), XLNet, and Bidirectional and Auto-Regressive Transformers (BART) technique to improve detection accuracy and robustness. Luo et al.22 explored the correlation between single-hand gestural features and emotional expressions using statistical correlation analysis and participant interviews. Hu et al.23 improved speech emotion recognition (SER) by using Label Semantic-driven Contrastive Learning (LaSCL) and integrating emotion Label Embeddings (LE) with Contrastive Learning (CL) and Label Divergence Loss (LDL) to strengthen the relationship between semantic labels and speech representations. Tang et al.24 introduced a methodology by integrating convolutional neural networks (CNN) and state space sequence models (SSM) for capturing both local features and long-range dependencies. The FaceMamba and attention feature injection (AFI) approaches are also utilized to improve hierarchical feature integration. Zhang et al.25 proposed the Implicit Expression Recognition model of Emojis in Social Media Comments (IER-SMCEM) technique for enabling more accurate sentiment recognition in financial texts. Shawon et al.26 developed RoboInsight. This museum guide robot utilizes Robotics for precise line-following navigation, Image Processing (IP) for accurate exhibit recognition, and NLP for seamless multilingual chatbot interactions. Kodati and Tene27 developed an advanced multi-task learning (MTL) model by employing soft-parameter sharing (SPS) integrated with attention mechanisms (AMs) like long short-term memory with AM (LSTM-AM), bidirectional gated recurrent units with self-head AM (BiGRU-SAM), and bidirectional neural networks with multi-head AM (BNN-MHAM) for analysis. Xia et al.28 introduced a model by utilizing systematic design space exploration and critical evaluation methods. Comparison analysis of existing textual emotion recognition in Table 1.

Materials and methods

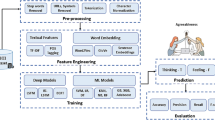

In this paper, the EDTIWVR-MDNN approach is proposed. The primary intention of the EDTIWVR-MDNN methodology is to develop an effective textual emotion recognition and analysis on linguistic corpora. It encompasses various processes, including text pre-processing techniques, word embedding strategies, and hybrid DL-based emotion classification. Figure 1 represents the entire flow of the EDTIWVR-MDNN methodology.

Dataset description

The utilized Emotion Detection from Text dataset contains 39,173 samples under 12 sentiments, as depicted below in Table 229. The dataset, sourced from the data. The world platform provides a valuable resource for creating precise emotion detection models from short, informal text, despite issues like class imbalance and the complexity of distinguishing diverse emotional categories. Table 3 represents the sample texts.

Algorithm: emotion detection on textual data using MLPFE-DBMMC model

Algorithm 1 illsutrates the steps involved in the MLPFE-DBMMC methodology, capturing the text pre-processing, word embedding, classification stages.

Algorithm 1: MLPFE-DBMMC methodology.

Pre-processing techniques for text

The text pre-processing techniques are executed for extracting the relevant data in text30. The various text pre-processing steps are explained below.

Sentence tokenization: For NLP, NLTK is a general-purpose Python library that is mainly employed. The detached text files were introduced, and the raw text is known as sentence tokenized. The sentences are assembled into a CSV file and recorded with a file name.

Expanding contraction: The Python library called contraction is employed for expanding the short words in a sentence. For instance, ‘you’ re‘ is fixed as you ‘are‘. This step is completed to help the detection of grammatical classes in the POS tag.

Part-of-speech (POS) tag: The POS taggers process a series of words and assign a POS tag to each word. For instance, VD denotes a verb, JJ indicates an adjective, NN represents a noun, and IN denotes a preposition. Moreover, the meaningful words are considered to be adjectives, nouns, and verbs, which are proposed to be mined to reduce redundancy. To avoid inconsistencies, only non-meaningful words (e.g., stopwords or words with tags other than V, N, and J) are eliminated, while the meaningful words (V/N/J) are retained and transformed to lowercase for consistent processing, ensuring reproducibility.

Word tokenization: A well-defined regular expression was used to remove words consisting only of alphabetic characters.

Lower case conversion: Every removed word is converted to lowercase to reduce duplicate entries in the vocabulary. Also, when equated with a definite dictionary, this helps to eliminate the stop word.

Removal of stop words: English stop words, such as he, is, the, and has, do not contribute meaning to the text and were eliminated. Also, a few modified words were eliminated, which are the highly common verbs in the new language, like know, feel, said, come, and the character’s name.

Lemmatization: This model eliminates the affixes of words, which are in its vocabulary. It results in a list of root words for eliminating the redundant words in text. For instance, lemmatize ‘plays’ to ‘play’.

Combined semantic feature extraction

Besides, the word embedding process is performed by using the fusion of TF-IDF, BERT, and GloVe to transform textual data into numerical vectors. The TF-IDF model effectively captures the significance of words based on their frequency and distribution across documents, providing a robust statistical foundation. The GloVe also presents global co-occurrence data, capturing semantic relationships between words efficiently. BERT, a contextualized transformer-based model, outperforms in comprehending word meanings in varying contexts, which is significant for intrinsic and complex textual data. Altogether, this hybrid approach utilizes both conventional and DL embeddings for improving feature richness, semantic depth, and context awareness, outperforming models that depend on any single embedding method alone. These embeddings are concatenated to form a rich, unified feature vector, incorporating conventional and DL representations. Thus, the integration enhances the generalization and classification accuracy of the model, specifically in scenarios with diverse and complex language patterns.

TF-IDF

Every feature extracted value is verified using the TF-IDF model, where a weight \(\:w\) is assigned to each phrase \(\:t\)31.

The number of times \(\:t\) acts in text \(\:d\) is calculated using the term frequency by Eq. (1).

Here, S \(\:{f}_{d}\left(t\right)\) denotes the rate of phrase \(\:t\) in text \(\:d\).

The relevance of a term is projected utilizing IDF. A term’s rarity is defined utilizing Eq. (2).

While \(\:"N"\) represents the total number of texts, \(\:"\text{d}\text{f}\:\text{t}"\) denotes the number of texts that contain the word \(\:t\). To attain TF-IDF, it is necessary to multiply Eqs. (1) and (2).

To group and classify the data, the proposed method utilizes ML models. The growth of a feature set is a vital module to attain that. The real writing method of every author depends on the specific processes by which they develop and demonstrate their skill. This information serves as a basis for removing the style dimension feature for every author in the text database. The arithmetical measure of the TF-IDF vectorizer is employed to define the weight by computing how relevant a term is to every text. TF-IDF is a multi-purpose model that converts text into a beneficial mathematical or vector representation. TF-IDF is essentially an occurrence-based vector that regularises word frequency to dimension and number of times, utilizing a combination of dual metrics. A count vectorizer and n‐gram study of words are employed for managing the cleaned text database. By using an exact resemblance, the reciprocal is expressed below.

\(\:Freq(w,\:e)\) denotes the term frequency in text \(\:e\), \(\:tf(w,\:e)\) indicates the regularised frequency of the \(\:tth\) term, \(\:idf(w,e)\) produces an inverse document frequency of \(\:0\); or else, it creates a reverse of 1. The text for the complete database was signified as \(\:\left|E\right|\). \(\:(e:E:W)\) shows the no. of texts that contain the letter \(\:"w"\). Figure 2 illustrates the general configuration of the TF-IDF model.

BERT

The encoded layer of BERT is a vital part of the text classification method, which leads to an intricate transformation of an input text into complete data of a vector that takes good context information32. The full study of its characteristics is described below:

-

Contextual embedding: Unlike traditional word embedding, which delivers secure illustration for every word regardless of context, the BERT encoded layer forms an embedding of context. The present embedding can understand the word’s primary meaning based on a series of entire inputs that permit the system to recognize the semantic difference and explain words with dissimilar meanings.

-

Transformer structure: The layer of encoding uses the structure of the Transformer, which attributes a self-attention mechanism that permits recognizing context association and longer‐term dependencies in an input. By executing the self‐attention technique with manifold heads, BERT manages an input sequence, which captures both local and global context data.

-

Pre-trained language representation: This model is naturally pre‐trained on massive databases using unsupervised learning for purposes like masked language modelling and sentence prediction. Throughout the pre-training stage, this technique acquires the capability to encode text into a contextual and effective representation through an optimizer for diverse language understanding tasks. The earlier trained representation is a foundation for subsequent functions, allowing the system to use extensive linguistic data acquired throughout pre-training.

-

Refining for a specific task: While the pre-trained representation of BERT recognizes broad linguistic trends, the encoded layer was enhanced using data relevant through supervised learning. The network alteration representation enhances its efficiency on a specific classification task.

-

Vector representation with higher dimension: This encoded layer makes a vector representation with a higher dimension for every token in an input sequence. The current representation generally contains numerous sizes, which.

While the \(\:ith\:\)word is signified as \(\:{w}_{i}\), and the word embedding vector is denoted as \(\:e\left({w}_{i}\right)\). The vector space of \(\:dth\:\)dimension was signified as \(\:{R}^{d}.\).

The BERT pre-trained language model’s main feature is its execution of Transformer encoding in dual directions. The BERT was prepared to handle input sentences of many words with 512 tokens. Every sentence is pre-processed before processing by including a CLS token at the start and adding a SEP token to the completion for representing its closure. Afterwards, the tokenization and insertion of special tokens were employed to create three distinct types of embeddings. Figure 3 shows the architecture of the BERT method.

-

Word vector: This embedding specifies the single token in an input sentence. The tokens are mapped to a representation of the vector attained throughout the pre-training process. Its goals are to encode the semantic data about context and token meaning.

-

Segment vector: It was employed for handling an input sequence which contains numerous sentences or segments. The current vector delivers a different identifier for every token, which signifies the segment or sentence it is derived from. This enables the system to differentiate among various components and reflect their contextual significance in processing.

-

Location embedding vector: In addition to word and segment embeddings, BERT includes a location embedding vector to encode the data position within a sequence of input.

GloVe

Joined local contextual window model and global matrix factorization to learn the word’s space representations. During the Global Vectors (GloVe) method, the statistics of word existences in the corpus are the primary information source accessible to each unsupervised model to learn the word representation33. While numerous models exist, the question remains as to how meaning is made from the statistics and how the resultant word vectors may characterize the meaning.

whereas, \(\:V\) refers to vocabulary size,\(\:\:X\) signifies the word matrix of co-occurrence (\(\:{X}_{i,j}\) denote time counts that word \(\:j\) takes place in terms of word \(\:i\)), the weights \(\:f\) is provided by \(\:f\left(x\right)=(x/{x}_{\text{m}\text{a}\text{x}}{)}^{\alpha\:}\) if \(\:x<{x}_{\text{m}\text{a}\text{x}}\) and one or else, \(\:{x}_{\text{m}\text{a}\text{x}}=100\) and \(\:\alpha\:=0.75\) (empirically established), \(\:{u}_{i},{u}_{j}\) denotes word vector’s dual layers, \(\:{b}_{i},{c}_{j}\) means bias term. Easily, it denotes weight matrix factorization with the biased terms. Figure 4 demonstrates the infrastructure of Glove.

Hybrid neural network architecture-based emotion detection

At last, the hybrid of the AM-T-BiG technique is utilized for the classification operation34. The integration is highly effective for contextual understanding and sequence modelling. The AM model facilitates focusing on the most relevant parts of the input, thus enhancing the interpretability and handling long-range dependencies better than conventional methods. The TCN technique presents effectual parallel processing and stable gradient flow over long sequences, outperforming standard recurrent networks in temporal feature extraction. The BiGRU model efficiently assists in capturing data from both past and future contexts, which is significant for precise sequential data classification. This hybrid technique presents superior performance and robustness compared to standalone models, making it appropriate for intrinsic textual classification tasks where understanding context and sequence is crucial.

This method intends to precisely acquire the short-term and long-term dynamic features represented by key parameters. This model effectively utilizes the TCN module’s capability to capture long-term dependencies and periodic features during processing. The time-series data is efficiently modelled by the TCN technique due to its unique causal convolutional framework, while avoiding gradient explosion or vanishing gradients common in conventional recurrent neural network (RNN) techniques, thereby accurately capturing long-term dependencies during processing.

Then, these enhanced long-term features from the TCN module are passed to the layer of Bi-GRU to discover further the short-term volatility and bi-directional temporal correlation in data. The past and future influences, along with the current data, are efficiently captured by the forward and backwards GRU components, thereby significantly enhancing sensitivity to immediate changes and improving feature representations.

Here, \(\:\overrightarrow{{h}_{t}}\) and \(\:\overleftarrow{{h}_{t}}\) refer to the forward and backwards outputs of Bi-GRU; \(\:{y}_{t}\) indicates the complete output of Bi-GRU.

To enhance the accuracy of prediction, this method integrates the AM as an optimization tool. Attention is a key technology in the area of neural networks, which concentrates on intelligently assigning computation resources to prioritize or highlight data that is more significant for the completion of a task. The TCN-Bi-GRU framework demonstrates exceptional long-sequence modelling capability, with its AM enabling adaptive temporal feature elimination while preventing gradient vanishing. The attention computation is specified:

Now, \(\:\sqrt{{d}_{k}}\) refers to the adjustment factor, and \(\:Q\) signifies the output vector of the output unit. The softmax value is both zero and one, depicting normalization; \(\:K\) indicates spatial feature relations among data.

Table 4 indicates the hyperparameters of the AM-T-BiG technique for ensuring effective learning and stable convergence. The speed and accuracy is effectually balanced by the learning rate and batch size, while dropout assisted in preventing overfitting. The TCN layers captured long-term dependencies utilizing dilated convolutions, and Bi-GRU layers modeled short-term bi-directional relations. The AM model additionally refined significant temporal features, resulting in an enhanced generalization across emotion categories.

Evaluation and results

The simulation validation of the EDTIWVR-MDNN model is examined under the Emotion Detection from Text dataset29. The technique is simulated using Python 3.6.5 on a PC with an i5-8600k, 250GB SSD, GeForce 1050Ti 4GB, 16GB RAM, and 1 TB HDD. Parameters include a learning rate of 0.01, ReLU activation, 50 epochs, 0.5 dropout, and a batch size of 5.

Figure 5 shows a countplot graph that visualizes the distribution of several emotional sentiments in a dataset. Every bar in the chart corresponds to a specific emotion. The x-axis is labelled as “sentiment”, whereas the y-axis demonstrates the count, offering a clear and colourful distinction between the emotional categories and their respective frequencies.

Figure 6 displays the histplot graph that exemplifies the distribution of multiple emotions in a dataset. Every bar in the histogram signifies the frequency (count) of a specific emotional category. The bar height denotes how frequently each emotion occurs.

Table 5; Fig. 7 depict the textual emotion detection of the EDTIWVR-MDNN approach on 80:20. Under 80% of the training phase (TRPHE), the EDTIWVR-MDNN model obtains an average \(\:acc{u}_{y}\) of 99.18%, \(\:pre{c}_{n}\) of 91.42%, \(\:rec{a}_{l}\) of 79.73%, \(\:{F1}_{Score}\:\)of 82.16%, and \(\:{G}_{Measure}\) of 83.60%. Likewise, at 20% of the testing phase (TSPHE), the EDTIWVR-MDNN model obtains an average \(\:acc{u}_{y}\) of 99.26%, \(\:pre{c}_{n}\) of 93.13%, \(\:rec{a}_{l}\) of 80.14%, \(\:{F1}_{Score}\:\)of 82.54%, and \(\:{G}_{Measure}\)of 84.14%.

Figure 8 exhibits the training (TRAIN) \(\:acc{u}_{y}\) and validation (VALID) \(\:acc{u}_{y}\) of an EDTIWVR-MDNN method on 80:20 over 30 epochs. In the beginning, both TRAIN and VALID \(\:acc{u}_{y}\:\)rise quickly, denoting efficient pattern learning from the data. Around the epoch, the VALID \(\:acc{u}_{y}\) minimally exceeds the training accuracy, signifying good generalization without over-fitting. As training advances, it reflects maximum performance and a minimum performance gap between TRAIN and VALID. The close alignment of both curves in training suggests that the method is well-regularised and generalized. This reveals the method’s stronger capability in learning and retaining valuable features across both seen and unseen data.

Figure 9 exhibits the TRAIN and VALID losses of the EDTIWVR-MDNN method at 80:20 over 30 epochs. Initially, both TRAIN and VALID losses are higher, denoting that the process begins with a partial understanding of the data. As training evolves, both losses persistently reduce, displaying that the method is efficiently learning and updating its parameters. The close alignment between the TRAIN and VALID loss curves during training suggests that the technique hasn’t over-fitted and upholds good generalization to unseen data.

Table 6; Fig. 10 illustrate the textual emotion detection of the EDTIWVR-MDNN technique at 70:30. Under 70% TRPHE, the EDTIWVR-MDNN model attains an average \(\:acc{u}_{y}\) of 98.89%, \(\:pre{c}_{n}\) of 81.37%, \(\:rec{a}_{l}\) of 73.72%, \(\:{F1}_{Score}\:\)of 74.93%, and \(\:{G}_{Measure}\) of 75.76%. Similarly, at 30% TSPHE, the EDTIWVR-MDNN model attains an average \(\:acc{u}_{y}\) of 98.74%, \(\:pre{c}_{n}\) of 81.10%, \(\:rec{a}_{l}\) of 72.86%, \(\:{F1}_{Score}\:\)of 74.32%, and \(\:{G}_{Measure}\) of 75.23%.

Figure 11 exemplifies the TRAIN \(\:acc{u}_{y}\) and VALID \(\:acc{u}_{y}\) of an EDTIWVR-MDNN technique on 70:30 over 30 epochs. At first, both TRAIN and VALID \(\:acc{u}_{y}\:\)rise rapidly, specifying an effective pattern learning from the data. Around the epoch, the VALID \(\:acc{u}_{y}\) exceeds the training accuracy minimally, indicating good generalization without over-fitting. As training increases, it reflects maximum performance and a minimum performance gap between TRAIN and VALID. The close alignment of both curves in training suggests that the model is well-regularised and generalized. This establishes the model’s strong capability in learning and retaining informative features across both seen and unseen data.

Figure 12 exemplifies the TRAIN and VALID losses of the EDTIWVR-MDNN methodology at 70:30 over 30 epochs. Initially, both TRAIN and VALID losses are high, signifying that the model begins with a partial understanding of the data. As training progresses, both losses persistently decline, indicating that the model is effectively learning and enhancing its parameters. The close alignment among the TRAIN and VALID loss curves during training demonstrates that the model hasn’t over-fitted and retains good generalization to unseen data.

Table 7; Fig. 13 portray the comparative investigation of the EDTIWVR-MDNN methodology with existing approaches under the Emotion Detection from Text dataset20,21,35,36,37. The outcomes underscored that the presented EDTIWVR-MDNN approach achieved the highest \(\:acc{u}_{y},\) \(\:pre{c}_{n}\), \(\:rec{a}_{l},\) and \(\:{F1}_{Score}\) of 99.26%, 93.13%, 80.14%, and 82.54%, respectively. While present methodologies, such as RL, RoBERTa, XLNet, ALBERT, Bi-RNN, BiLSTM + CNN, TextCNN, Dialogue RNN, Naïve Bayes (NB), and VC(LR-SGD) have shown worse performance under various metrics.

Table 8; Fig. 14 indicate the comparison assessment of the EDTIWVR-MDNN technique with existing methods under the Emotion Analysis Based on Text (EAT) dataset38,39. The MFN model model attained an \(\:acc{u}_{y}\) of 86.50%, \(\:pre{c}_{n}\) of 79.56%, \(\:rec{a}_{l}\) of 77.88%, and \(\:{F1}_{Score}\) of 86.08%. Additionally, the MCTN achieved an \(\:acc{u}_{y}\) of 80.50%, \(\:pre{c}_{n}\) of 86.77%, \(\:rec{a}_{l}\) of 83.06%, and \(\:{F1}_{Score}\) of 91.77%. The RAVEN model depicted an \(\:acc{u}_{y}\) of 77.00%, \(\:pre{c}_{n}\) of 88.53%, \(\:rec{a}_{l}\) of 83.19%, and \(\:{F1}_{Score}\) of 90.27% and HFusion reached an \(\:acc{u}_{y}\) of 74.30%, \(\:pre{c}_{n}\) of 88.52%, \(\:rec{a}_{l}\) of 80.60%, and \(\:{F1}_{Score}\) of 90.62%. MulT obtained an \(\:acc{u}_{y}\) of 84.80%, \(\:pre{c}_{n}\) of 86.12%, \(\:rec{a}_{l}\) of 80.84%, and \(\:{F1}_{Score}\) of 91.80% while SSE-FT achieved an \(\:acc{u}_{y}\) of 86.50%, \(\:pre{c}_{n}\) of 89.30%, \(\:rec{a}_{l}\) of 84.31%, and \(\:{F1}_{Score}\) of 86.87%. The EDTIWVR-MDNN model demonstrated greater performance with an \(\:acc{u}_{y}\) of 97.45%, \(\:pre{c}_{n}\) of 97.09%, \(\:rec{a}_{l}\) of 97.06%, and \(\:{F1}_{Score}\) of 96.91%.

Table 9; Fig. 15 specify the comparison evaluation of the EDTIWVR-MDNN method with existing techniques under the Text Emotion Recognition (TER) dataset40,41. The SVM model attained an \(\:acc{u}_{y}\) of 78.97%, \(\:pre{c}_{n}\) of 81.45%, \(\:rec{a}_{l}\) of 78.36%, and \(\:{F1}_{Score}\) of 79.67%. Moreover, RF achieved an \(\:acc{u}_{y}\) of 76.25%, \(\:pre{c}_{n}\) of 79.42%, \(\:rec{a}_{l}\) of 75.66%, and \(\:{F1}_{Score}\) of 77.02%. The NB model illustrated an \(\:acc{u}_{y}\) of 68.94%, \(\:pre{c}_{n}\) of 61.75%, \(\:rec{a}_{l}\) of 51.41%, and \(\:{F1}_{Score}\) of 49.61% and DT reached an \(\:acc{u}_{y}\) of 69.42%, \(\:pre{c}_{n}\) of 72.48%, \(\:rec{a}_{l}\) of 69.70%, and \(\:{F1}_{Score}\) of 70.94%. GRU obtained an \(\:acc{u}_{y}\) of 78.02%, \(\:pre{c}_{n}\) of 71.19%, \(\:rec{a}_{l}\) of 73.91%, and \(\:{F1}_{Score}\) of 72.35% while CNN achieved an \(\:acc{u}_{y}\) of 79.32%, \(\:pre{c}_{n}\) of 77.30%, \(\:rec{a}_{l}\) of 75.21%, and \(\:{F1}_{Score}\) of 74.10%. The proposed XXX model outperformed the existing techniques with an \(\:acc{u}_{y}\) of 97.26%, \(\:pre{c}_{n}\) of 96.68%, \(\:rec{a}_{l}\) of 96.20%, and \(\:{F1}_{Score}\) of 95.94%, thus emphasizing its capability in precisely detecting emotions from textual data.

Table 10; Fig. 16 specify the computational time (CT) analysis of the EDTIWVR-MDNN technique with existing models. The EDTIWVR-MDNN technique significantly outperforms all baseline models with the lowest CT of 5.11 s. Other models, such as TextCNN and BiLSTM + CNN, required higher CTs of 28.39 and 24.84 s, respectively. Models namely XLNet and Bi-RNN recorded CT values of 21.86 and 21.43 s, while ALBERT and RoBERTa took 18.27 and 13.42 s. Moreover, the LR-SGD reported higher CT of 15.27 and 16.19 s. This indicates that the EDTIWVR-MDNN model is highly efficient, making it appropriate for real-time or resource-constrained applications.

Table 11; Fig. 17 portray the error analysis of the EDTIWVR-MDNN approach with existing models. The EDTIWVR-MDNN approach depicts the lowest error values with an \(\:acc{u}_{y}\) of 0.74%, \(\:pre{c}_{n}\) of 6.87%, \(\:rec{a}_{l}\) of 19.86%, and \(\:{F1}_{Score}\) of 17.46%. The very low \(\:acc{u}_{y}\) and \(\:pre{c}_{n}\) highlights the weak classification capability of the model. The \(\:rec{a}_{l}\) of 19.86% exhibits that the model retrieves some relevant emotional instances but misses most others, while the \(\:{F1}_{Score}\) of 17.46% demonstrates an overall imbalance between identifying and correctly labeling emotions. Thus, the EDTIWVR-MDNN model shows that it effectively captured contextual or semantic variances in text, likely due to limited representation learning and inadequate handling of emotional subtleties within intrinsic linguistic patterns.

Table 12; Fig. 18 indicate the ablation study of the EDTIWVR-MDNN methodology. The EDTIWVR-MDNN methodology attained an \(\:acc{u}_{y}\) of 99.26%, \(\:pre{c}_{n}\) of 93.13%, \(\:rec{a}_{l}\) of 80.14%, and \(\:{F1}_{Score}\) of 82.54%, which integrates the AM-T-BiG model with a fusion of word embedding processes, highlighting superior performance over other approaches. Furthermore, distinct techniques namely TCN without attention and BiGRU achieved an \(\:acc{u}_{y}\) of 95.71%, \(\:pre{c}_{n}\) of 88.93%, \(\:rec{a}_{l}\) of 75.74%, and \(\:{F1}_{Score}\) of 78.47%, while BiGRU without TCN and attention achieved an \(\:acc{u}_{y}\) of 96.35%, precision of 89.71%, \(\:rec{a}_{l}\) of 76.57%, and \(\:{F1}_{Score}\) of 79.02%. AM-T-BiG with attention improved the results to \(\:acc{u}_{y}\) of 97.02%, \(\:pre{c}_{n}\) of 90.54%, \(\:rec{a}_{l}\) of 77.46%, and \(\:{F1}_{Score}\) of 79.84%. Word embedding techniques alone such as TF-IDF, BERT, and Glove achieved varying performance with \(\:acc{u}_{y}\) ranging from 94.23% to 97.98%, \(\:pre{c}_{n}\) from 90.45% to 92.70%, \(\:rec{a}_{l}\) from 75.44% to 79.89%, and \(\:{F1}_{Score}\) from 75.92% to 79.27%. Thus, the EDTIWVR-MDNN method emphasized the effectiveness of integrating corpus linguistics with DL approaches for improved semantic-driven emotion detection on textual data.

Table 13 illustrates the computational efficiency of diverse emotion recognition models42. The SET model took 22.23 G FLOPs, 2277 M GPU memory, and 29.9470 s for inference while MET utilized 16.41 G FLOPs, 2863 M GPU memory, and 21.6060 s. The m-CNN-LSTM achieved 21.77 G FLOPs, 2307 M GPU memory, and 17.9080 s and m-MLP required 29.65 G FLOPs, 2917 M GPU memory, and 27.0900 s. The m-LSTM model recorded 27.83 G FLOPs, 2176 M GPU memory, and 29.2230 s and HADLTER-NLPT depicted higher efficiency with 10.58 G FLOPs, 536 M GPU memory, and 11.0400 s. The EDTIWVR-MDNN model portrayed the highest computational efficiency with only 7.90 G FLOPs, 205 M GPU memory, and 8.5900 s for inference, highlighting a significant mitigation in resource needs without losing performance.

Conclusion

In this paper, the EDTIWVR-MDNN model is proposed. The EDTIWVR-MDNN model intends to develop an effective textual emotion recognition and analysis on language corpora. It encompasses various processes, including text pre-processing techniques, word embedding strategies, and hybrid DL-based emotion classification. Initially, the text pre-processing stage involves various levels for extracting the relevant data from the text. Furthermore, the word embedding process is applied by the fusion of TF-IDF, BERT, and GloVe to transform textual data into numerical vectors. Finally, the hybrid of the AM-T-BiG technique is utilized for the classification process. The experimental evaluation of the EDTIWVR-MDNN methodology is examined under the Emotion Detection from Text dataset. The comparison analysis of the EDTIWVR-MDNN methodology portrayed a superior accuracy value of 99.26% over existing approaches. The limitations comprise the dependence on a relatively limited dataset, which may affect the generalizability of the findings across diverse textual domains and languages. Additionally, the performance may degrade while capturing highly complex or context-dependent emotions, particularly for mixed or subtle emotional expressions. The model also face difficulty with sarcasm and idiomatic language that often alter the intended emotional meaning. Future work may concentrate on expanding the dataset with more varied and multilingual text sources to enhance robustness. Integrating multimodal data such as audio or visual cues could additionally improve emotion recognition accuracy. Moreover, exploring adaptive learning methods to better handle evolving language use and emotional expressions in real-time applications is recommended. Finally, addressing computational efficiency to enable deployment in resource-constrained environments will be a valuable direction. Also, future studies may include integrating multimodal features such as text, audio, and visual cues, as well as incorporating multilingual datasets for addressing challenges related to sarcasm, idiomatic expressions, and linguistic diversity.

Data availability

The data that support the findings of this study are openly available in the Kaggle repository at [https://www.kaggle.com/datasets/pashupatigupta/emotion-detection-from-text], [https://www.kaggle.com/datasets/simaanjali/emotion-analysis-based-on-text], [https://www.kaggle.com/datasets/shreejitcheela/text-emotion-recognition], reference number34,35,36.

References

Deng, J. & Ren, F. A survey of textual emotion recognition and its challenges. IEEE Trans. Affect. Comput. 14 (1), 49–67 (2021).

Yuan, Y., Duo, S., Tong, X. & Wang, Y. A Multimodal affective interaction architecture integrating BERT-based semantic understanding and VITS-based emotional speech synthesis. Algorithms. 18(8), 513 (2025).

Yohanes, D., Putra, J. S., Filbert, K., Suryaningrum, K. M. & Saputri, H. A. Emotion detection in textual data using deep learning. Proc. Comput. Sci. 227, 464–473 (2023).

Guo, J. Deep learning approach to text analysis for human emotion detection from big data. J. Intell. Syst. 31 (1), 113–126 (2022).

Chatterjee, A. et al. Understanding emotions in text using deep learning and big data. Comput. Hum. Behav. 93, 309–317 (2019).

Rashid, U. et al. Emotion detection of contextual text using deep learning. In 2020 4th International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), 1–5 (IEEE, 2020).

Cho, J. et al. Deep neural networks for emotion recognition combining audio and transcripts. arXiv preprint arXiv:1911.00432. (2019).

Hossain, M. S. & Muhammad, G. Emotion recognition using deep learning approach from audio–visual emotional big data. Inform. Fusion. 49, 69–78 (2019).

Zad, S., Heidari, M., James Jr, H. & Uzuner, O. Emotion detection of textual data: an interdisciplinary survey. In 2021 IEEE World AI IoT Congress (AIIoT), 0255–0261 (IEEE, 2021).

Devasenapathy, D., Bhimaavarapu, K., Sholapurapu, P. K. & Sarupriya, S. Real-time classroom emotion analysis using machine and deep learning for enhanced student learning. J. Intell. Syst. Internet Things. 16 (2), 82–101 (2025).

Moorthy, S. & Moon, Y. K. Hybrid multi-attention network for audio–visual emotion recognition through multimodal feature fusion. Mathematics. 13(7), 1100 (2025).

Mengara Mengara, A. G. & Moon, Y. K. CAG-MoE: Multimodal emotion recognition with cross-attention gated mixture of experts. Mathematics. 13(12), 1907 (2025).

Jon, H. J. et al. MATER: Multi-level acoustic and textual emotion representation for interpretable speech emotion recognition. arXiv preprint arXiv:2506.19887 (2025).

Al Ameen, R. & Al Maktoum, L. Machine learning algorithms for emotion recognition using audio and text data. PatternIQ Min. 1 (4), 1–11 (2024).

Tahir, M., Halim, Z., Waqas, M., Sukhia, K. N. & Tu, S. Emotion detection using convolutional neural network and long short-term memory: A deep multimodal framework. Multimed. Tools Appl. 83 (18), 53497–53530 (2024).

Shi, C., Zhang, Y. & Liu, B. A multimodal fusion-based deep learning framework combined with local-global contextual TCNs for continuous emotion recognition from videos. Appl. Intell. 54 (4), 3040–3057 (2024).

Zhang, S. et al. Multimodal emotion recognition based on audio and text by using hybrid attention networks. Biomed. Signal Process. Control. 85, 105052 (2023).

Alamgir, F. M. & Alam, M. S. Hybrid multimodal emotion recognition framework based on InceptionV3DenseNet. Multimed. Tools Appl. 82 (26), 40375–40402 (2023).

Liu, H. et al. Semanticodec: an ultra low bitrate semantic audio codec for general sound. IEEE J. Sel. Top. Signal. Process. (2024).

Bárcena Ruiz, G. & Gil Herrera, R. D. J. Textual emotion detection with complementary BERT Transformers in a condorcet’s jury theorem assembly. Knowl. Based Syst. 326, 114070 (2025).

Noor, K., Rehman, M., Anjum, M., Hussain, A. & Saleem, R. Sentiment analysis for depression detection: A stacking ensemble-based deep learning approach. Int. J. Inf. Manag. Data Insights. 5(2), 100358 (2025).

Luo, Y. et al. May. Emotion embodied: Unveiling the expressive potential of single-hand gestures. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, 1–17 (2024).

Hu, J., Qu, L., Li, H. & Li, T. Label semantic-driven contrastive learning for speech emotion recognition. In Proc. Interspeech 2025, 4348–4352 (2025).

Tang, D. et al. Toward identity preserving in face sketch-photo synthesis using a hybrid CNN-Mamba framework. Sci. Rep. 14(1), 22495 (2024).

Zhang, J. et al. IER-SMCEM: an implicit expression recognition model of emojis in social media comments based on prompt learning. In Informatics, vol. 12, No. 2, 56. (MDPI, 2025).

Shawon, N. J. et al. RoboInsight: Towards deploying an affordable museum guide robot with natural language processing, image processing and interactive engagement. In International Conference on Human-Computer Interaction, 355–374 (Springer Nature Switzerland, 2024).

Kodati, D. & Tene, R. Advancing mental health detection in texts via multi-task learning with soft-parameter sharing transformers. Neural Comput. Appl. 37 (5), 3077–3110 (2025).

Xia, W. et al. Video visualization and visual analytics: A task-based and application-driven investigation. IEEE Trans. Circuits Syst. Video Technol. 34 (11), 11316–11339 (2024).

https://www.kaggle.com/datasets/pashupatigupta/emotion-detection-from-text

Low, H. Q., Keikhosrokiani, P. & Pourya Asl, M. Decoding violence against women: analyzing harassment in middle Eastern literature with machine learning and sentiment analysis. Humanit. Social Sci. Commun. 11 (1), 1–18 (2024).

Krishnamoorthy, P., Sathiyanarayanan, M. & Proença, H. P. A novel and secured email classification and emotion detection using hybrid deep neural network. Int. J. Cogn. Comput. Eng. 5, 44–57 (2024).

Chen, Y., Sun, N., Li, Y., Peng, R. & Habibi, A. Text classification using SVD, BERT, and GRU optimized by improved seagull optimization (ISO) algorithm. AIP Adv. 15(6). (2025).

Atmaja, B. T., Sasou, A. & Akagi, M. Survey on bimodal speech emotion recognition from acoustic and linguistic information fusion. Speech Commun. 140, 11–28 (2022).

Cheng, Y., Liang, C. & Yang, Q. Hybrid deep learning-based early warning for lithium battery thermal runaway under multi-charging scenarios.

Dikbiyik, E., Demir, O. & Dogan, B. BiMER: Design and implementation of a bimodal emotion recognition system enhanced by data augmentation techniques. IEEE Access. 13, 64330–64352 (2025).

Peng, S. et al. Deep broad learning for emotion classification in textual conversations. Tsinghua Sci. Technol. 29 (2), 481–491 (2023).

Yousaf, A. et al. Emotion recognition by textual tweets classification using voting classifier (LR-SGD). IEEE Access. 9, 6286–6295 (2020).

https://www.kaggle.com/datasets/simaanjali/emotion-analysis-based-on-text

Liu, X., Xu, Z. & Huang, K. Multimodal emotion recognition based on cascaded multichannel and hierarchical fusion. Comput. Intell. Neurosci. 2023 (1), 9645611 (2023).

https://www.kaggle.com/datasets/shreejitcheela/text-emotion-recognition

Bharti, S. K. et al. Text-based emotion recognition using deep learning approach. Comput. Intell. Neurosci. 2022(1), 2645381 (2022).

Al-Hagery, M. A., Alharbi, A. A., Alkharashi, A. & Yaseen, I. A novel hybrid attention based deep learning framework for textual emotion recognition using natural Language processing technologies for disabled persons. Sci. Rep. 15 (1), 1–22 (2025).

Acknowledgments

The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through Large Research Project under grant number RGP2/224/46. Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R263), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. The authors would like to thank Ongoing Research Funding Program, (ORFFT-2025-133-1), King Saud University, Riyadh, Saudi Arabia for financial support. The authors extend their appreciation to the Deanship of Scientific Research at Northern Border University, Arar, KSA for funding this research work through the project number “NBU-FFR-2025-2248-08. The authors are thankful to the Deanship of Graduate Studies and Scientific Research at University of Bisha for supporting this work through the Fast-Track Research Support Program.

Author information

Authors and Affiliations

Contributions

B.B.A.: Conceptualization, methodology, validation, investigation, writing—original draft preparation, H.A.: Conceptualization, methodology, writing—original draft preparation, writing—review and editing. H.D.: methodology, validation, writing—original draft preparation. W.A.: software, visualization, validation, data curation, writing—review and editing. O.A.: validation, original draft preparation, writing—review and editing. B.A.: methodology, validation, conceptualization, writing—review and editing. N.A.: methodology, validation, original draft preparation. A.E.Y.: validation, original draft preparation, writing—review and editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Al-onazi, B.B., Alshamrani, H., Dafaalla, H. et al. Integration of corpus linguistics and deep learning techniques for enhanced semantic-driven emotion detection on textual data. Sci Rep 15, 45455 (2025). https://doi.org/10.1038/s41598-025-28929-z

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-28929-z