Abstract

Software cost estimation (SCE) is among the most critical task in software development and project management, that can directly impact the budgeting, planning, and resource allocations. The current study proposes a hybrid model combining TabNet, a deep learning architecture designed for tabular data, with Harris Hawks Optimization (HHO) for feature engineering. HHO algorithm is being used to identify the most significant feature among all the input and construct informative feature combinations that enhance model performance. Then the TabNet is used in analyzing the dataset for predicting the cost incurred in software development process. The TabNet-HHO framework is evaluated on standard datasets, including COCOMO and NASA project data. Explainable AI (XAI) technology based on SHAP is used in the current study to analyze the feature contribution in the decision process. Furthermore, the dependencies graphs are presented to analyze the relationships among the features. The proposed model is being evaluated using the standard metrics like mean square error (MSE), root mean square error (RMSE), mean absolute error (MAE), median magnitude relative error (MdMRE), and prediction accuracy (PA). The experimental outcome has proven that the proposed TabNet-HHO model has outperformed various existing software cost estimation models. The performance of the TabNet-HHO model is further evaluated across divergent datasets like Desharnais, China, Albrecht for SCE. TabNet-HHO has performed well across all the datsets, with a better prediction accuracy of 98.82% on evaluation over the COCOMO dataset.

Similar content being viewed by others

Introduction

With the advent of the machine intelligence in the field of software project management (SPM), there are tremendous transformations that have underwent in recent times. SCE is one among the challenging fields in the SPM. The ability to reliably predict the effort, resources, and time required to develop a software system is crucial for effective budgeting, resource allocation, risk management, and ultimately, project viability1. Precise SCE is very significant in the early phases of the software development lifecycle, where decisions have long-lasting impacts. However, the inherent complexities associated with the software development, changing project needs, new or unclear technologies, and not having enough past data often make traditional cost estimation methods inaccurate. These problems can cause projects to go over budget or take longer than planned. This shows the need for better and more flexible ways to estimate software costs2.

Software cost estimation is the process of predicting the effort, duration, and resources required in developing the complete software based on identified project requirements. This estimation extends beyond user requirement specifications and incorporates technical, software, and hardware requirements during the early phases of the development lifecycle3. The recent advancement in the technology like machine learning have proven to have better SCE, that act as powerful tools to analyze complex, non-linear relationships within large historical project datasets, where most of the traditional models struggle. Techniques like neural networks, and ensemble methods learn directly from data to identify key cost drivers, and it also enables automation of parts of the estimation process and allows models to adapt over time, offering more robust, efficient, and insightful support for software project planning and management.

The are various most widely used approaches like Constructive Cost Model (COCOMO)4, Function Points (FP)5, Use Case Points (UCP)6 and Software Life Cycle Management (SLCM)7 are often relied on expert judgment and predefined formulas, which may not adapt well to modern software development practices. COCOMO and SLIM largely relaying in accurate initial size estimates, often Lines of Code (LOC)8, which are exceptionally difficult to predict early in the project lifecycle, and both require significant historical data for appropriate calibration to a desired development environment. FP and UCP involve subjective counting processes and complexity assessments that can vary between estimators and require specific training, potentially impacting the consistency and reliability. Furthermore, FP and UCP may not fully capture the effort associated with non-functional requirements and complex algorithms. UCP is largely dependent on the quality and detail level of the initial use cases. The factors influencing the software development cost and the various software cost estimation approaches are presented in Fig. 1.

This study proposes a hybrid approach that would combine both TabNet9, a deep learning (DL) model specifically designed for tabular data, with Harris Hawks Optimization10, which is a nature-inspired metaheuristic algorithm. TabNet would assist in better learning by using the attentive feature selection and decision steps, making it much suitable for structured software project datasets. On the other hand, HHO is used to optimize model parameters and enhance feature selection, which helps improve performance and reduce overfitting. The study uses transfer learning11 to improve performance on small or specialized datasets by reusing knowledge from related data. The proposed TabNet–Harris Hawks Optimization framework would enhance software cost estimation by synergistically combining data-driven feature engineering with deep attention-based learning. The HHO-enabled feature selection and transformation mechanism plays a pivotal role in systematically identifying dominant cost determinants while suppressing noisy variables. This strategic dimensionality refinement makes sure that the predictive model focusses on the most important features, even when it has to deal with real-world datasets that are not perfect or are made up of different types of data.

TabNet further reinforces model robustness through its sequential attentive feature-masking mechanism, enabling selective focus on contextually relevant features for each prediction instance, rather than treating all inputs uniformly. This adaptive feature prioritization contributes to improved predictive accuracy and enhances generalization across a spectrum of software project environments, ranging from smaller academic repositories to large-scale industrial datasets. Moreover, the integration of SHAP-based explainability augments the interpretability of the framework by elucidating the contribution and influence of each feature on cost estimations. This transparency supports informed decision-making for project managers and technical stakeholders, facilitating early identification of high-impact cost drivers and promoting more reliable planning and resource allocation in software development life cycles.The contributions of the current study are listed below.

-

The current study would introduce a novel cost estimate approach based on the combination of TabNet, HHO, and transfer learning.

-

Evaluating the proposed approach across standard metrics concerning to benchmark datasets like COCOMO and NASA.

-

Comparing the TabNet-HHO model with the State-of-the-art (SOTA) models.

The overall.

The overall organization of the manuscript is as follows; the Sect. “Literature review” outlines the various conventional approaches used in the SCE. Section “Material and approaches” outlines the necessary information like dataset description and the details of implementation environment along with the details of hyperparameter configuration. Section “TabNet with HHO model for cost estimation” provides the comprehensive outline of the proposed TabNet + HHO. Section “Results and discussions” presents the results and discussion of the experimental outcome. It also covers the comparative analysis of the models. Section “Conclusion” presents the conclusion and future research directions.

Literature review

software cost estimation has been a long-standing challenge in software development process. Traditional model like the Constructive Cost Model is introduced in 1981 rely on mathematical formulation and a predefined cost driver, offering a well-structured mechanism but often lacking the flexibility like the modern, dynamic software projects. Even the Function Points has come into existence since 1979, which attempts to quantify the software size based on functionality that depend largely over the expert judgment and static metrics. Software Lifecycle Management was introduced in 1978, which models software project effort over time, assuming the rise and fall in the staffing levels. SLCM is particularly effective for large-scale projects and aims to provide a top-down estimation approach. However, SLCM is limited to agile based model, which lacks the flexibility in changing requirements.

To overcome the challenges of the conventional SCE models, a lot of research has underwent in machine learning (ML) and soft computing techniques. The are various techniques that are extensively used in the SCE, which includes Support Vector Machines (SVM), Artificial Neural Networks (ANN), K-Nearest Neighbourhood (KNN)12, and ensembled models13. These models can learn from historical project related data to uncover complex patterns and dependencies among the given input features and development effort. Unlike traditional models that rely on fixed formulas, ML approaches adapt to the data, offering more flexibility and often achieving higher prediction accuracy. As a result, they are increasingly being adopted in real-time project estimation.

A study by Sultan Aljahdali et al.14 that has discussed the use of Linear Regression (LR), SVM, and ANN for SCE based on the parameter Lines of Code (LOC). The findings showed that SVM and ANN models have outperformed the LR model, yielding more accurate estimates with MAE values of 71.8 for LR, 61.6 for SVM, and 77.3 for ANN, and RMSE values of 81.2, 103.2, and 86.94, respectively. However, all these conventional models depend heavily on high-quality datasets and am effective feature selection mechanism to be incorporated. LR assumes linear relationships that may not capture the real-world complexity; SVM performance is sensitive to kernel selection and noise; and whereas the ANN requires significant computational resources and that may be overfit with limited or imbalanced data15. Furthermore, all three techniques may require additional pre-processing and domain knowledge to ensure meaningful and reliable predictions16.

Ensembled model are the other widely used ML techniques that are used in SCE, Qassem, and Ibrahim17 have experimented the stacking ensemble learning approach, Random Forest (RF), LR AdaBoost (AB), XGBoost (XGB), Gradient Boost (GB), and K-Nearest Neighbors (KNN), trained on the International Software Benchmarking Standards Group (ISBSG) dataset18. The method achieved 98% prediction accuracy with lower error rates compared to individual models alone, demonstrating its effectiveness in SCE, where the stacking model has attained MAE of 207.33, RMSE of 533.14, and MMRE of 0.092. Similarly, a study on a Random Forest-based stacked ensemble approach has evaluated both single models and ensemble techniques, such as averaging, weighted averaging, bagging, boosting, and stacking across divergent benchmark datasets like Albrecht, China, Desharnais, Kemerer, Kitchenham, Maxwell, and Cocomo81. Among all these, stacking models using base learners like GLM, decision trees, SVM, and RF consistently delivered better performance in SCE than the rest.

DL approaches have emerging technology in SCE due to their ability to automatically learn complex, non-linear relationships from large datasets without the need for extensive manual feature engineering. Unlike traditional models, DL architectures such as deep neural network (DNN), convolutional neural network (CNN), and recurrent neural network (RNN) can model intricate patterns and dependencies between project features. Draz et al.2 has used the CNN along with particle swarm optimization (PSO)19 for predicting the SCE, the CNN with PSO model has outperformed Mutual Information based neural network (LNI-NN), Neuro-fuzzy logic (NFL), Adaptive GA-based neural network (AGANN) approaches. Other study by Kaushik et al.20, has proposed RNN with Long Short-Term Memory (LSTM), which was tested over COCOMO81, NASA93, and MAXWELL datasets.

The proposed model offers a more robust and accurate approach to SCE, when compared to the other conventional approaches. By integrating advanced learning techniques with automated optimization and knowledge transfer from related datasets, the model effectively captures complex patterns in project data and adapts well to varying scenarios. This results in improved prediction accuracy, better generalization across datasets, and reduced estimation errors, making it a more reliable tool for software project planning and management.

Material and approaches

In this section, the details of the datasets that are used in the current analysis, the details of the implementation environment and the details of the hyperparameters that are being used in the current study.

Dataset description

The current study has used three different datasets, which includes the COCOMO, and NASA for evaluating the performance of the TabNet-HHO model. The COCOMO dataset provides a detailed framework for estimating software development effort based on factors like project size, project type, and the associated cost drivers. NASA dataset includes the details of a project-level attributes like team experience, software size, development type, and effort. The details of instances and feature correlation are being discussed. Additionally, feature correlation analysis was performed to identify the relationships between various input attributes and the target variable.

COCOMO dataset: COCOMO dataset is the most widely used dataset in the software cost estimation21. It analyze the relationships among various project attributes and the actual effort expended. The dataset consists of 63 project records across 21 attributes. The feature attributed along with their description in presented in Table 1 and feature correlation heatmap is presented in Fig. 2. The feature correlation that illustrates the strength and direction of a monotonic dependencies between pairs of features is presented using a heatmap.

NASA dataset: NASA dataset22 is a widely used benchmark in SCE studies. It is primarily used to evaluate effort estimation, and it contains project-level data collected from NASA software development projects. It is highly probable that many of these features are correlated due to their inherent relationships. The dataset consists of 93 records that are extracted from NASA’s software engineering laboratory and PROMISE repository. It consists of 14 features that are listed in Table 2 along with their description. The corresponding feature correlation heatmap is presented in Fig. 3.

Experimental environment

The proposed model is evaluated in the online platform available in the Kaggle workspace, which is accessed through the local machine. The same workspace is used throughout the experimentation process. The details of the implementation environment are presented in Table 3.

Hyperparameter configuration

The hyperparameters that are used in the current study for implementing TabNet + HHO is being presented in Table 4, the hyperparameters are being selected based on the values that are configured in the existing studies, without undertaking a dedicated hyperparameter optimization process in this study. The specifications that are considered in the current study are default values that are considered for the TabNet and HHO algorithms.

TabNet with HHO model for cost Estimation

The current study used the TabNet + HHO for the SCE, where the TabNet model is used for the cost estimation through the task modelling, where it leverages sequential attention mechanisms to select relevant features and model complex nonlinear relationships in tabular data. Its interpretability and ability to handle heterogeneous features make it well-suited for accurately predicting project costs. and the HHO approach is being used for the feature engineering in identifying the significant features in that contributes to the precise cost estimation. The overall architecture of the proposed TabNet + HHO model is presented in Fig. 4.

Harris Hawks Optimization for feature engineering

Harris Hawks Optimization is a nature-inspired, and population-based metaheuristic algorithm which is proposed by Heidari et al.23 and Alkanhel, et al.24. HHO algorithm mimics the behavior of hawks while dynamically switching between exploration and exploitation phases based on the escaping energy of the prey25. Let the notation \(\:P\) designates the size of the initial population and the notation \(\:F\) representing the dimensionality of the problem i.e., the total count of features in the problem. Where Each hawk \(\:i\) is being represented over the solution vector \(\:{X}_{i}\), as shown in Eq. (1).

The initial population in the given problem is randomly generated within the search space bounds across the lower bound \(\:{L}_{j}\) and the upper bound\(\:{\:U}_{j}\). A random number \(\:{r}_{j}\:\)uniformly sampled in the range \(\:\left[\text{0,1}\right]\) for the \(\:{j}^{th}\)feature, the corresponding Eq. (2).

The above equation would ensure that each variable for the hawk starts within the problem’s specific range. The prey energy and phase transition are associated with the escaping energy \(\:E\), that determined whether the hawks should explore or exploit, the corresponding formula is shown in Eq. (3).

From the equation, if the value of \(\:\left|E\right|\ge\:1\) the hawks explore, where it searches new location in a much wider manner. When \(\:\left|E\right|\le\:1\) the hawks exploit by searching the best possible solution locally. The notation \(\:m\) denotes the current iteration and \(\:M\) designates the maximum number of iterations, i.e., the stopping criteria. The formula causes the prey’s energy to reduce over the time, assisting the algorithm gradually shift from exploration to exploitation phase. The overall architecture of HHO is presented in Fig. 5.

In the exploration phase, When the prey has high energy, hawks explore the solution search space. The parameter \(\:g\) is the random number sampled from a uniform distribution in the range \(\:\left[\text{0,1}\right]\). The value of parameter \(\:g\) would randomly switch among two exploration strategies during the high-energy phase of the prey and it controls diversity. The corresponding formula for exploration phase would be presented in Eq. (4).

The notation \(\:{X}_{rand}^{m}\) is the randomly selected hawk, \(\:{X}_{best}^{m}\) is the current best solution i.e., prey, and \(\:{X}_{mean}^{m}\) designates the mean position of all hawks. The random numbers \(\:{r}_{1},\:{r}_{2},{r}_{3},{r}_{4}\) are drawn from uniform distribution [\(\:{r}_{i}\sim U\left(\text{0,1}\right)\)]. The exploitation phase executes when the prey has low energy usually at later phases that mimics different attacking tactics depending on the prey’s escaping strategy. There are four main strategies are used, namely soft besiege, hard besiege, soft besiege with dive, and hard besiege with dive. The mathematical formulation for soft besiege, hard besiege strategies are presented in Eqs. (5) and (6) respectively.

The notation \(\:v\) is associated with the jump strength of the prey. \(\:\varDelta\:X\) is the distance between the hawk and the prey. The progressive rapid dives for both soft and hard besieges would rely on levy flight \(\:{l}_{f}\). The mathematical formulation for soft besiege dive are presented in Eqs. (7) and (8).

The best among the \(\:\alpha\:\) and \(\:\beta\:\) is chosen based on the fitness. The notation \(\:Z\) is the random vector, and \(\:{l}_{f}\left(F\right)\) is the Levy distribution-based perturbation. The mathematical formulation for soft besiege dive are presented in Eqs. (9) and (10).

After each iteration, hawk positions are updated, and the best solution is retained, and it continues till it reaches the maximum iteration \(\:M\). In feature selection process, the position of each hawk is encoded as a binary vector using the sigmoid transfer function26. Most of the optimization algorithms would work with the same logic of finding the best optimal feature for better prediction accuracy27,28. The most significant features in both COCOMO dataset and NASA dataset are presented in Fig. 6.

Algorithm: Harris Hawks Optimization

TabNet model

TabNet is a DL model that is used in the SCE, which is specifically designed to deal with the tabular data, combining the interpretability of DT along with the power of representation learning29. TabNet uses the sequential attention, sparse feature selection, and gradient-based optimization to process features effectively30. Initially the features are being transformed, the associated formula for input data is shown in Eq. (11) and the transformed function \(\:{I}_{t}\) is shown in Eq. (12).

Feature transformer uses a combination of shared and decision step-specific layers, based on Gated Linear Units (GLU) which used element-wise multiplication \(\:\odot\) and a sigmoid activation function denoted by \(\:\sigma\:\) as shown in Eq. (13).

At each decision step \(\:s\), TabNet applies an attention mechanism over the significant feature. The corresponding sparse mask \(\:{Q}^{m}\) applied over a trainable projection matrix \(\:{T}^{m}\) in the sparse matrix \(\:{spar}_{matrix}\) normalized activation like softmax, but it can return zeros, enforcing sparsity.

TabNet model would proceeds till \(\:M\) decision steps, combining outputs at each step as shown in Eq. (15).

The final output is aggregated over all steps as shown in Eq. (16).

This formulation supports stepwise interpretability, as each \(\:{Q}^{m}\) presents the most significant features used in each phase. The loss function associated with estimated value and the actual value are estimated using the following Eq. (17).

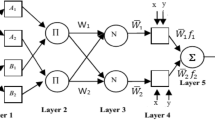

It is always desired to have a minimal loss value, as the minimal loss value indicates the model is able to precisely estimate the cost associated with software development. The overall architecture diagram for the TabNet is illustrated in Fig. 7.

TabNet architecture consists of several identical algorithmic blocks, each functioning as a decision block. These blocks are identical to the functioning of core concept of DT but are implemented using neural networks31. Similar to how DT filter input data through threshold-based rules to make decisions, each TabNet decision block processes data using two key components: a feature transformer (FT) and an attentive transducer (AT). In both the Feature Transformer (FT) and the Attentive Transducer (AT), the feature block includes a fully connected (FC) layer followed by batch normalization (BN). The key distinction lies in their output activation functions: FT uses the GLU for activation, while AT applies the sparsemax function after processing32. The primary role of the FT module is to handle feature processing. Once the input data are processed by the FT, they are split into two parts where one contributing to the final decision and the other passed on for subsequent decision steps. To improve parameter effectiveness and learning performance, the FT is further divided into a shared feature layer, which captures common features, and an independent feature layer, which focuses on unique characteristics.

Hyperparameter

The performance of the TabNet-HHO model is being evaluated concerning to the training and validation loss measures along with the training and validation accuracy measures. The corresponding graphs would assist in analyzing the under fitting and overfitting scenarios of the model33. The obtained graphs are shown in Fig. 8.

SHAP based feature analysis

The corresponding features across both the datasets COCOMO and NASA dataset that are used for SCE are selected using the HHO techniques. The contribution of the features and their impact on the decision process is being analyzed using the XAI techniques based on SHAP analysis34. The significant features in either of the datasets are analyzed and the corresponding feature significance graph is presented in Fig. 9.

Furthermore, the dependencies among the feature are being analysed. The colours associated with each data points in the dependency graphs indicates their significance in the decision process of the model. The data points that are shaded in pink indicate higher values of the interacting feature, While the blue colour in the datapoints represents the lower values of the interacting feature. This colouring highlights how the interaction between the main feature and a secondary feature contributes to the model’s predictions. These assist in comprehensive understanding of model’s response to one feature varies depending on the levels of another. The dependencies among the features in COCOMO dataset are presented in Fig. 10 and the dependencies in NASA dataset are illustrated in Fig. 11. The dependencies among only a few features are presented to confine the study.

The dependency graphs assist in visualizations that enhances the interpretability by highlighting not just individual feature impacts, but also how they behave in combination with each other’s. Together, these patterns would provide a better understanding of the model’s decision process and assists in identify key drivers behind prediction variability.

Results and discussions

The performance of the TabNet-HHO model is being evaluated concerning to various standard metrics like MAE, MSE, RMSE, MdMRE, and PRED across both the COCOMO and NASA dataset. MAE is used in the context where it needs a measure that quantifies the average magnitude of errors in a set of predictions, without considering their direction35. The mathematical representation for MAE is shown in Eq. (18). MSE36 assess the mean value of the squares of the errors among the computed and actual values, emphasizing larger errors due to squaring, the corresponding mathematical representation is shown in Eq. (19). RMSE is other standard metric that has been evaluated from MSE which is the square root of the mean value squared differences among the computed and actual values, highlighting the impact of larger errors37, the corresponding mathematical representation is shown in Eq. (20). MdMRE is also a robust metric that denotes the median value of the relative errors magnitudes, reducing the influence of outliers in predictive accuracy assessment38, the corresponding mathematical representation is shown in Eq. (21). PA is a metric that approximates the proportion of correct predictions made by a model relative to the total sum of predictions, the corresponding mathematical representation is shown in Eq. (22).

From the above equations, the notation \(\:x\) is the value and the \(\:x{\prime\:}\) is the computed value using the proposed model. The number of instances is represented using the notation \(\:n\), the notation \(\:\stackrel{\sim}{m}\) is the median value. The acronyms \(\:EE\) represents the estimated efforts and \(\:AE\) designated the actual estimated efforts. The notation \(\:e{\prime\:}\) designated the error percentage among the actual and computed values. The corresponding obtained results are shown in Table 5 for both the datasets with and without the HHO approach.

It can be observed from the above experimental analysis, the TabNet-HHO model has outperformed the TabNet model alone. Among both the datasets, the model has performed well over the COCOMO dataset than the NASA dataset. This enhanced performance on the COCOMO dataset can be attributed to its relatively structured and less noisy nature, as well as the presence of well-defined input features aligned with the underlying cost estimation model. In contrast, the NASA dataset tends to exhibit higher variability and complexity, potentially making it more challenging for both models to capture underlying patterns with the same level of accuracy. The corresponding graphs obtained from experimental outcome are presented in Fig. 12. The integration of the HHO algorithm into TabNet incurs an additional average time delay of approximately 14.56 s across both datasets, which nearly doubles the execution time in exchange for significant performance improvements in accuracy of the model.

The model is evaluated concerning to the K-fold validation approach as the datasets that are considered in the current study are smaller in size. Moreover, the k-fold validation would effectively deal with bias and variance in the dataset. In the current evaluation, Mean Absolute Percentage Error (MAPE) is being considered to calculate the average of the absolute differences among the predicted value and actual value, which is expressed as a percentage of the actual values. The corresponding formula for MAPE is shown in Eq. (23). The model is evaluated for all the values of \(\:k\) from 1 to 5. The corresponding values on evaluating the K-fold validation for both the datasets concerning to, the outcome of COCOMO dataset is presented in Table 6 and outcome of NASA dataset is presented in Table 7.

From the above equation, \(\:{a}_{i}\) is the actual value and the \(\:{p}_{i}\) is the predicted values across \(\:n\) instances in the problem.

The experimental outcome on various values of \(\:k\) demonstrate that the TabNet-HHO has consistently outperforms standard TabNet model across both COCOMO and NASA datasets, achieving lower MAE, RMSE, and MAPE values. Furthermore, the performance of the proposed model is compared with existing contemporary techniques for the SCE. Due to scarcity in the studies on COCOMO and NASA datasets, the current study has evaluated those models that are used in SCE, rather than specifically to the datasets that are being used in the current study. For those studies which is not evaluated the model concerning to a particular parameter would be mentioned as not available N/A in the table. Some of the conventional approaches includes RF, LR, Multilayer Perceptron (MP), CNN, ANN, KNN, SVM, Genetic Algorithm (GA), particle swarm optimization (PSO) and Neural Networks (NN). The performances of various models are presented in Table 8.

It can be observed from the analysis with the other contemporary techniques, that the proposed model has outperformed the other models. In comparison, traditional methods like LR and RF showed significantly higher error rates, highlighting the superior accuracy and robustness of the TabNet-HHO model.

Performance across divergent datasets

The performance of the proposed model is further evaluated across the divergent publicly accessible datasets that are used for SCE. The TabNet-HHO model has been evaluated across Desharnais Dataset, China Dataset, Albrecht Dataset44 with the same performance evaluation metrics. The experimental outcome is presented in Table 9.

The integration of HHO with TabNet consistently improves performance across all datasets. Although the execution time of the model has increases moderately, the prediction accuracy has notably improved, demonstrating the effectiveness of HHO in enhancing prediction precision.

The performance of the proposed model are being analyzed concerning to the GPU utilization and the memory utilization of the TabNet model and TabNet with HHO model. The observed utilization is represented as graphs in Fig. 13. It can be observed that TabNet with HHO has consumed more resources than TabNet model alone.

Potential limitations

The current section of the manuscript presents some of the potential limitations of the proposed TabNet-HHO in the software cost estimation. The hyperparameter specifications that are considered in the current study are selected based on the default values of the corresponding models. Those models that are can be fine-tuned using some of the techniques like random Search, grid search, and Bayesian optimization to identify optimal configurations. Optimizing the hyperparameters does have a significant impact on the prediction accuracy of the machine learning model. The dataset in the current study is not processed to make it balanced, or the reliability of effort labels in not assessed. However, the class balancing and effort labels would have a significant impact on the prediction outcome of the model. Hence, this is also considered as one of the potential limitation of the current study. The implementation of optimization algorithm would need additional computational cost and in the current study, only the execution time has been observed and no further analysis was made towards the computational cost, which is among the potential limitations of the current study.

Threats to the validity and Real-World relevance

The current research has been performed based on the observation made from previous studies that are tabulated under the constraint environment. The real-time effort assessment and the cost estimation might be different from the parameters that are considered from the datasets. The current datasets that are used in the dataset are standard and obsolete, the cost parameters would vary over the time, the complexity of the software being developed, duration of the project and the process model being used will have a significant impact on the software development cost, which are not being considered in the current study. The real-time software development cost would vary from the cost-estimation that was done based on the datasets. Moreover, the current study is implemented over the limited datasets, but evaluating over divergent datasets would assist in better comprehensive understanding over the model robustness, generalizability, and real-world applicability.

Even though the proposed TabNet–Harris Hawks Optimisation framework greatly improves the accuracy of software cost estimates, it is important to address concerns about data fairness and transparency. High-performance models frequently function as intricate black-box systems, complicating stakeholders’ comprehension of the estimation process. In the current study, we have used the XAI to make the model comprehensible. The quality, variety, and representativeness of the training data also have a big effect on how well the model works. If historical cost datasets have built-in biases, like certain types of projects, levels of team expertise, or organization-specific rules, the model might unintentionally spread those patterns, which could lead to unfair or inaccurate cost estimates for projects or resource settings that are not typical. Furthermore, total dependence on automated estimations may diminish the significance of expert judgement, which is crucial for managing dynamic and context-sensitive factors such as organisational culture, advancing technology stacks, or unexpected development risks. So, even though the proposed hybrid approach looks good for making accurate and scalable estimates, it is important to keep things clear, have human oversight, and keep improving the data inputs to make sure that software cost predictions are ethical and reliable.

Conclusion

The current study has proposed a novel TabNet with Harris Hawks Optimization model for the software cost estimation. The model is being evaluated across the bench mark datasets like COCOMO and NASA and the experimental observation has proven that the TabNet-HHO model has exceptionally performed well compared to that of the conventional cost estimation models. TabNet + HHO model, has achieved the lowest MAE of 0.0006, RMSE of 0.0055, MdMRE of 0.0059, and prediction accuracy of 99.88%. The integration of TabNet’s sequential attention mechanism with HHO’s global optimization capabilities, enabled the model to effectively capture complex underlying patterns in cost estimation datasets. The current model needs a considerable overhead on implementing both the standalone models, which is one of the challenging aspects of the proposed model and adding optimization algorithm for fine tuning the hyperparameters needs a considerable resources for evaluation. The model is being independently evaluated across both the datasets and the performances are analyzed against various other studies. Despite more complex model implementation, the proposed has yielded a higher performance.

Furthermore the model can be evaluated across divergent datasets for comprehensive analysis of the model’s performance. More real-time data can be used for training and assessing the model along with the integration of the software development model for more realistic analysis. Additionally, incorporating dynamic project parameters such as changing team sizes, process models, and evolving technological factors could improve the real-time and adaptability of the estimation process. The hyperparameter tuning has not been performed in the current analysis which is considered to be the potential limitation of the current study, optimizing the hyperparameters would have a significant impact on the models performances. Explainable Artificial Intelligence (XAI) techniques like shapely and lime can be used for better interpretability of the model.

Data availability

The dataset that is used in the current study is accessible at[https://github.com/Derek-Jones/Software-estimation-datasets] and [https://github.com/timm/ourmine/blob/master/our/arffs/effest/nasa93.arff].

References

Shamim, M. M. I., Hamid, A. B. A., Nyamasvisva, T. E. & Rafi, N. S. B. Advancement of artificial intelligence in cost Estimation for project management success: A systematic review of machine Learning, deep Learning, Regression, and hybrid models. Modelling 6, 35. https://doi.org/10.3390/modelling6020035 (2025).

Draz, M. M., Emam, O. & Azzam, S. M. Software cost Estimation predication using a convolutional neural network and particle swarm optimization algorithm. Sci. Rep. 14, 13129. https://doi.org/10.1038/s41598-024-63025-8 (2024).

Singh, S. & Kumar, K. Software Cost Estimation: A Literature Review and Current Trends, 2023 Third International Conference on Secure Cyber Computing and Communication (ICSCCC), Jalandhar, India, 2023, pp. 469–474. https://doi.org/10.1109/ICSCCC58608.2023.10176495

Murad, M. A., Abdullah, N. A. S. & Rosli, M. M. Software Cost Estimation for Mobile Application Development - A Comparative Study of COCOMO Models, IEEE 11th International Conference on System Engineering and Technology (ICSET), Shah Alam, Malaysia, 2021, pp. 106–111, (2021). https://doi.org/10.1109/ICSET53708.2021.9612528

Qin, M., Shen, L., Zhang, D. & Zhao, L. Deep Learning Model for Function Point Based Software Cost Estimation -An Industry Case Study, International Conference on Intelligent Computing, Automation and Systems (ICICAS), Chongqing, China, 2019, pp. 768–772, (2019). https://doi.org/10.1109/ICICAS48597.2019.00165

Azzeh, M., Nassif, A. B. & Attili, I. B. Predicting software effort from use case points: A systematic review. Sci. Comput. Program. 204, 102596. https://doi.org/10.1016/j.scico.2020.102596 (2021).

Huq, F. Testing in the software development Life-cycle: now or later. Int. J. Project Manage. 18 (4), 243–250. https://doi.org/10.1016/S0263-7863(99)00024-1 (2000).

Alhumam, A. and Shakeel Ahmed. Software Requirement Engineering over the Federated Environment in Distributed Software Development Process. Journal of King Saud University - Computer and Information Sciences, 36(9), 102201, (2024). https://doi.org/10.1016/j.jksuci.2024.102201

Kevin McDonnell, F., Murphy, B., Sheehan, L., Masello, G. & Castignani Deep Learning in Insurance: Accuracy and Model Interpretability Using TabNet. Expert Systems with Applications, 217, 119543, (2023). https://doi.org/10.1016/j.eswa.2023.119543

Ali, A., Aadil, F., Khan, M. F., Maqsood, M. & Lim, S. Harris Hawks Optimization-Based Clustering Algorithm for Vehicular Ad-Hoc Networks. IEEE Trans. Intell. Transp. Syst. 24(6), 5822–5841. https://doi.org/10.1109/TITS.2023.3257484 (2023).

Asmaul Hosna, E., Merry, J., Gyalmo, Z., Alom, Z. & Aung Mohammad Abdul Azim, transfer learning: a friendly introduction. J. Big Data. 9, 102. https://doi.org/10.1186/s40537-022-00652-w (2022).

Mohamed, A. & Mahmood Shahid. K-Nearest Neighbors Approach to Analyze and Predict Air Quality in Delhi. Journal of Artificial Intelligence and Metaheuristics, 34–43. (2025). https://doi.org/10.54216/JAIM.090104

Maher, M. & Alneamy, J. S. An Ensemble Model for Software Development Cost Estimation, 2022 5th International Seminar on Research of Information Technology and Intelligent Systems (ISRITI), Yogyakarta, Indonesia, 2022, pp. 346–350. https://doi.org/10.1109/ISRITI56927.2022.10052861

Aljahdali, S., Sheta, A. F. & Debnath, N. C. Estimating software effort and function point using regression, Support Vector Machine and Artificial Neural Networks models, IEEE/ACS 12th International Conference of Computer Systems and Applications (AICCSA), Marrakech, Morocco, 2015, pp. 1–8, (2015). https://doi.org/10.1109/AICCSA.2015.7507149

Yuvalı, M., Yaman, B. & Tosun, Ö. Classification comparison of machine learning algorithms using two independent CAD datasets. Mathematics 10, 311. https://doi.org/10.3390/math10030311 (2022).

Guleria, P., Ahmed, S., Alhumam, A. & Srinivasu, P. N. Empirical study on classifiers for earlier prediction of COVID-19 infection cure and death rate in the Indian States. Healthcare 10, 85. https://doi.org/10.3390/healthcare10010085 (2022).

Qassem, N. T., Ibrahim, A. & Saleh Prediction of Software Cost Estimation Using Stacking Ensemble Learning Method. Procedia Comput. Sci., 258, 727–736, https://doi.org/10.1016/j.procs.2025.04.305. (2024).

Ünlü, H. et al. Software Effort Estimation Using ISBSG Dataset: Multiple Case Studies, 2021 15th Turkish National Software Engineering Symposium (UYMS), Izmir, Turkey, 2021, pp. 1–6. https://doi.org/10.1109/UYMS54260.2021.9659655

Alhussan, A. A. et al. Pothole and Plain Road Classification Using Adaptive Mutation Dipper Throated Optimization and Transfer Learning for Self Driving Cars, in IEEE Access, 10, 84188–84211, (2022). https://doi.org/10.1109/ACCESS.2022.3196660

Kaushik, A. & Choudhary, N. Priyanka Software Cost Estimation Using LSTM-RNN. In: Bansal, P., Tushir, M., Balas, V., Srivastava, R. (eds) Proceedings of International Conference on Artificial Intelligence and Applications. Advances in Intelligent Systems and Computing, vol 1164. Springer, Singapore. (2021). https://doi.org/10.1007/978-981-15-4992-2_2

Reifer, D., Boehm, B. W. & Chulani, S. The Rosetta Stone: Making COCOMO 81 Estimates Work with COCOMO II. Crosstalk. J. Def. Softw. Eng. 11–15 (1999).

Menzies, T., Krishna, R. & Pryor, D. The Promise Repository of Empirical Software Engineering Data; (2016). https://openscience.us/repo. North Carolina State University, Department of Computer Science Source: https://openscience.us/repo/

Heidari, A. A. et al. Harris Hawks Optimization: Algorithm and Applications. Future Generation Computer Systems, 97, 849–872, (2019). https://doi.org/10.1016/j.future.2019.02.028

Reem Alkanhel, El-Sayed, M., El-kenawy, A. A., Abdelhamid, A., Ibrahim, M. A. & Alohali Mostafa Abotaleb, Doaa Sami Khafaga, network intrusion detection based on feature selection and hybrid metaheuristic optimization. Comput. Mater. Contin. 74 (2), 2677–2693. https://doi.org/10.32604/cmc.2023.033273 (2023).

Hussien, A. G. et al. Recent advances in Harris Hawks optimization: A comparative study and applications. Electronics 11, 1919. https://doi.org/10.3390/electronics11121919 (2022).

Ouyang, C. et al. Integrated improved Harris Hawks optimization for global and engineering optimization. Sci. Rep. 14, 7445. https://doi.org/10.1038/s41598-024-58029-3 (2024).

El-Sayed, M. et al. Greylag Goose Optimization: Nature-inspired Optimization Algorithm. Expert Systems with Applications, 238, 122147, (2024). https://doi.org/10.1016/j.eswa.2023.122147

Abdelaziz, A. et al. Amel Ali Alhussan, Innovative Feature Selection Method Based on Hybrid Sine Cosine and Dipper Throated Optimization Algorithms. IEEE Access 11, 79750–79776. https://doi.org/10.1109/ACCESS.2023.3298955 (2023).

Shah, C., Du, Q. & Xu, Y. Enhanced tabnet: attentive interpretable tabular learning for hyperspectral image classification. Remote Sens. 14, 716. https://doi.org/10.3390/rs14030716 (2022).

Lionel, P., Joseph, E. A. & Joseph, R. P. Explainable Diabetes Classification Using Hybrid Bayesian-optimized TabNet Architecture. Computers in Biology and Medicine, vol. 151, p. 106178, (2022). https://doi.org/10.1016/j.compbiomed.2022.106178

Yingze, S. et al. Comparative analysis of the TabNet algorithm and traditional machine learning algorithms for landslide susceptibility assessment in the Wanzhou region of China. Nat. Hazards. 120, 7627–7652. https://doi.org/10.1007/s11069-024-06521-4 (2024).

Kumar, T. & Ujjwal, R. L. TabNet unveils predictive insights: a deep learning approach for parkinson’s disease prognosis. Int. J. Syst. Assur. Eng. Manag. https://doi.org/10.1007/s13198-024-02450-4 (2024).

Sravya, V. S., Srinivasu, P. N. & Shafi, J., Waldemar Hołubowski and Adam Zielonka Advanced Diabetic Retinopathy Detection with the R–CNN: A Unified Visual Health Solution International Journal of Applied Mathematics and Computer Science, vol. 34, no. 4, Sciendo, pp. 661–678. (2024). https://doi.org/10.61822/amcs-2024-0044

Srinivasu, P. N., Lakshmi, J. & Gudipalli, G. XAI-driven catboost multi-layer perceptron neural network for analyzing breast cancer. Sci. Rep. 14, 28674. https://doi.org/10.1038/s41598-024-79620-8 (2024).

Kulkarni, A. R., Shivananda, A., Kulkarni, A. & Krishnan, V. A. Machine Learning Regression–based Forecasting. In: Time Series Algorithms Recipes. Apress, Berkeley, CA. (2023). https://doi.org/10.1007/978-1-4842-8978-5_4

Salamai, A. A., El-Sayed, M., El-kenawy, I. & Abdelhameed Dynamic voting classifier for risk identification in supply chain 4.0. Computers. Mater. Continua. 69 (3), 3749–3766. https://doi.org/10.32604/cmc.2021.018179 (2021).

Karunasingha, D. S. K. Root Mean Square Error or Mean Absolute Error? Use Their Ratio As Well. Inf. Sci. 585, 609–629. https://doi.org/10.1016/j.ins.2021.11.036 (2022).

Stensrud, E., Foss, T., Kitchenham, B. & Myrtveit, I. An empirical validation of the relationship between the magnitude of relative error and project size, Proceedings Eighth IEEE Symposium on Software Metrics, Ottawa, ON, Canada, 3–12, (2002). https://doi.org/10.1109/METRIC.2002.1011320

Al Asheeri, M. M. & Hammad, M. Machine Learning Models for Software Cost Estimation, 2019 International Conference on Innovation and Intelligence for Informatics, Computing, and Technologies (3ICT), Sakhier, Bahrain, 2019, 1–6. https://doi.org/10.1109/3ICT.2019.8910327

Rodríguez Sánchez, E., Vázquez Santacruz, E. F. & Cervantes Maceda, H. Effort and cost Estimation using decision tree techniques and story points in agile software development. Mathematics 11, 1477. https://doi.org/10.3390/math11061477 (2023).

Abdulmajeed, A. A., Al-Jawaherry, M. A. & Tawfeeq, T. M. Predict the required cost to develop software engineering projects by using machine learning. J. Phys. Conf. Ser. 1897, 012029 (2021).

Sharma, S. & Vijayvargiya, S. Modeling of software project effort estimation: A comparative performance evaluation of optimized soft computing-based methods. Int. J. Inf. Technol. 14 (5), 2487–2496 (2022).

Sofian Kassaymeh, M., Alweshah, M. A., Al-Betar, Abdelaziz, I. & Hammouri Mohammad Atwah Al-Ma’aitah, software effort Estimation modeling and fully connected artificial neural network optimization using soft computing techniques. Cluster Comput. 27 (1), 737–760 (2024).

Azzeh, M. & Nassif, A. B. Analogy-based effort estimation: a new method to discover set of analogies from dataset characteristics. IET Softw. 9, 39–50. https://doi.org/10.1049/iet-sen.2013.0165 (2015).

Funding

This work was supported by the Deanship of Scientific Research, Vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Saudi Arabia [Grant No. KFU252814].

Author information

Authors and Affiliations

Contributions

A.A. conceptualized, acquired, investigated the necessary data and analyzed the results for evaluation and prepared the initial and the final draft.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Alhumam, A. Software cost estimation using TabNet and Harris Hawks Optimization. Sci Rep 15, 45434 (2025). https://doi.org/10.1038/s41598-025-29908-0

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-29908-0