Abstract

Network security is crucial in today’s digital world, since sensitive data and vital infrastructure face multiple ongoing threats. The aim of this study is to improve network security by combining machine learning (ML) and deep learning (DL) methods for intrusion detection. Attackers try to breach security systems by accessing networks and obtaining sensitive information. Intrusion detection systems (IDSs) are a significant aspect of cybersecurity: they monitor and analyze network activity in order to identify and report dangerous activities, which helps to prevent attacks. Support Vector Machine (SVM), K-Nearest Neighbors (KNN), Random Forest (RF), Decision Tree (DT), Long Short-Term Memory (LSTM), and Artificial Neural Network (ANN) are the models evaluated in this study. These models are subjected to various tests to establish which gives the best results in identifying and preventing network violations. The results indicate that all the tested models are capable of classifying data originating from network traffic. In distinguishing between normal and intrusive behaviors, SVM, KNN, RF, and DT showed effective results. The deep learning models LSTM and ANN rapidly find long-term and complex patterns in network data. They are highly effective when dealing with complex intrusions, achieving high precision, accuracy, and recall. Based on our study, SVM and Random Forest are promising solutions for real-world IDS applications because of their versatility and explainability. For companies seeking IDS solutions that are both reliable and interpretable, these models are good candidates. Additionally, LSTM and ANN, with their ability to capture sequential dependencies, are suitable for situations involving subtle, evolving threats.


Introduction

Network security has risen to the forefront of importance in this age of rapid technological development and growing reliance on digital infrastructure. The ever-changing digital world constantly brings new possibilities and new cyber risks. As the digital sentinels that keep an eye on network traffic and sniff out malicious activity, Intrusion Detection Systems (IDS) have taken on a crucial function in this environment. This article examines how modern machine and deep learning approaches might strengthen IDS, thereby improving network security and adapting it better to the difficulties of the digital age.

This section opens with a review of the essential components of network security and emphasizes the need to bolster it using novel methods. It then traces the evolution of network security over time and discusses the flaws of modern intrusion detection approaches. These limitations make this study necessary and lead us to seek new approaches that utilize machine learning and deep neural networks. The research is motivated by the growing complexity of cybersecurity threats, the shortcomings of conventional intrusion detection methodologies and, above all, the need to adopt a preventive security approach to network vulnerability. The study is important because it has the potential to change how network security is implemented. The present work offers original methodological contributions: a new data fusion method, an adaptive cybersecurity model, and advanced intrusion detection systems. Together, these contributions enhance the security of networks by presenting practical solutions through which organizations may effectively secure their assets.

Intrusion detection systems (IDS)

An Intrusion Detection System (IDS) is a security system that monitors a network or host for behavior indicating suspicious activity, for instance attempts to gain unauthorized access1. It constantly inspects sources such as network traffic and system logs for anything suspicious. There are broadly two categories of IDS: signature-based IDS, which search for patterns of well-known attacks, and anomaly-based IDS, which search for deviations from normal behavior2.

Early approaches to intrusion detection

Intrusion detection was a new technology in the early days of computing and communication systems. The first models of intrusion detection relied on manually coded rules and heuristics3.

Alerts were raised whenever the input data resembled previous attacks, usually by matching patterns or signatures defined by well-trained security personnel and administrators. Although these early systems were reasonably effective in defending against already known risks, they were no match for more advanced threats2.

Transition to digital networks

The landscape of intrusion detection changed significantly with the arrival of digital networks4. As computer networks became a primary medium for exchanging data between businesses, both communication and traffic volumes increased immensely. With this shift, intrusion detection had to become more scalable and more automated. As the information carried by networks grew and new threats emerged, IDS continued to change and new IDS models were formed5. This change has in turn created an opportunity to build more sophisticated intrusion detection systems, including ones based on machine and deep learning algorithms, to close the current cybersecurity gap.

Anomaly detection techniques

Many fields, including cybersecurity, employ a class of methods called anomaly detection to identify unusual occurrences in data. In intrusion detection, the goal of anomaly-based strategies is to flag activity that is not typical within a given context. Such methods typically involve the following steps. Data collection: Compile data that can serve as reference information about routine activities. This information may relate to human behavior, system activities, or network traffic. Model training: Use the information gathered to fit a model that accurately represents typical behavior. Statistical models, clustering algorithms, and machine learning models are all examples of popular methods2. Anomaly detection: After the model is deployed in a real-world setting, it continuously analyzes incoming data. Any observation that deviates considerably from the established norm is flagged as suspicious. Alerting and response: Once anomalies are detected, the system can notify security personnel or trigger automated actions to mitigate potential threats6.
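The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the method used in this study: it assumes a single numeric feature (connections per minute, a hypothetical choice), models routine behavior with a mean and standard deviation, and flags observations whose z-score exceeds a threshold of 3, which is also an assumption.

```python
from statistics import mean, stdev

def fit_baseline(normal_values):
    """Summarize routine activity as a (mean, standard deviation) pair."""
    return mean(normal_values), stdev(normal_values)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Step 1 (data collection): hypothetical connections per minute in routine operation.
normal_traffic = [98, 102, 101, 97, 100, 103, 99, 100]

# Step 2 (model training): fit the statistical baseline.
baseline = fit_baseline(normal_traffic)

# Steps 3 and 4 (detection and alerting): score incoming data, collect alerts.
incoming = [101, 99, 250, 100]
alerts = [value for value in incoming if is_anomalous(value, baseline)]
print(alerts)
```

A fixed z-score threshold is the simplest possible decision rule; lowering it catches more intrusions at the cost of more false positives, which is the trade-off discussed in the next subsection.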

Challenges in anomaly-based detection

Anomaly-based detection has many benefits, such as the capacity to spot brand new and changing threats, but it also has its share of difficulties. False positives: Anomaly detection systems produce a high percentage of false positives because statistically unusual but benign events may be misidentified as anomalies. Tuning such systems to keep detection rates high while reducing false positives can be difficult. Data imbalance: In many real-world situations, normal behavior considerably outnumbers malicious activity, resulting in an imbalanced data set. This may compromise the model’s sensitivity to extremely rare events7. Changing environments and networks: Anomaly-based systems may have trouble adjusting to new circumstances. Maintaining their efficacy calls for regular updates and retraining. Interpretability: The difficulty of interpreting the results of complicated machine learning models can slow down the decision-making and response needed when anomalies occur. Scalability: As data volumes increase, real-time anomaly detection requires massive data processing and analysis capabilities. Successful implementation of anomaly-based intrusion detection systems relies on overcoming these obstacles so that cybersecurity professionals can trust the systems’ insights and put them to use.

Protecting the privacy, security, and availability of digital assets is the primary goal of these systems, which are built to constantly monitor and analyze network traffic in order to detect and counteract any suspicious or harmful activity8. The development of new ID systems shows the necessity of adjusting security measures to meet the evolving nature of cyber threats. There have been three major eras in the development of ID systems so far:

Traditional signature-based systems

Early intrusion detection systems relied heavily on signatures. These programs used previously established attack patterns, or signatures. Data observed on a network was marked as malicious when it matched a signature. These systems fared well against typical threats, but they were helpless against zero-day exploits and constantly changing dangers9.
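A minimal sketch of signature matching illustrates both the strength and the weakness described above. The signature table and payloads below are hypothetical examples chosen for illustration, not rules from any real IDS.

```python
# Hypothetical signature database: byte patterns associated with known attacks.
SIGNATURES = {
    "sql_injection": b"' OR '1'='1",
    "path_traversal": b"../../",
}

def match_signatures(payload: bytes):
    """Return the names of all known signatures found in the payload."""
    return [name for name, pattern in SIGNATURES.items() if pattern in payload]

# A payload containing a known pattern is flagged.
known = match_signatures(b"GET /index.php?id=1' OR '1'='1 HTTP/1.1")

# A payload using an unseen technique matches nothing and passes silently.
novel = match_signatures(b"GET /index.php?id=1 HTTP/1.1 X-New-Exploit: abc")

print(known, novel)
```

The second call returns an empty list, which is the zero-day gap: a signature system can only report what someone has already written a pattern for.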

Anomaly-based systems

The shortcomings of signature-based systems motivated the development of anomaly-based alternatives. These programs determined what constituted “normal” network activity and reported any changes as possible security threats. They were more flexible but needed a lot of adjusting and they sometimes gave false positives.

Machine and deep learning-based systems

A new age of intrusion detection was born with the introduction of machine learning and deep learning. These systems are very good at spotting both common and uncommon threats because they use sophisticated algorithms to learn and adapt on their own based on how a network operates. Examples include deep learning models such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), which can detect many kinds of patterns in network traffic and have raised detection accuracy10. The background study pointed out some of the challenges facing modern ID systems, such as the growing volume and complexity of network traffic data, the use of evasion techniques by adversaries, and the need for real-time intrusion detection. This study is therefore important given the emergence of these ID systems and, even more so, the challenges they encounter. Its aim is to enhance intrusion detection and to develop systems that can proactively prevent and respond to a diverse range of threats using the latest machine learning and deep learning strategies11. In an age where data accuracy and privacy are vital, this research intends to provide solutions that improve network security.

The rationale behind this research is that networks face a growing and pervasive threat, which makes it necessary to advance knowledge in this field. Increased accessibility and connectivity through the adoption of the web and digital technology pose security challenges. As the capacity for innovation and access has grown, networks have become more susceptible to increasingly persistent and complex external attacks. Conventional intrusion detection systems provide very little assistance in identifying and preventing such attacks. The motivation for this study stems from the fact that recent advances in machine learning and deep learning can be used to develop effective, proactive, and self-learning intrusion detection systems12. Given the urgency of preserving data integrity and availability, cybersecurity plays a crucial role, and these technologies can help keep data protected from threats. Another reason for this study is the hope of making lasting contributions in the area of network security, since it could enhance our capability to resist increasingly sophisticated attackers13.

This study has significant implications for network security because it was the first to use cutting-edge machine and deep learning methods to significantly improve the efficiency of intrusion detection systems14. Given the constantly evolving and diversifying nature of threats in cyberspace, the new ideas of this work form a robust line of defense. They help protect a firm’s core information and databases without the weaknesses of traditional security measures, making it easier to keep data secure, private, and accessible to those who need it. The findings and procedures discussed here not only enhance the efficiency of intrusion detection but also establish the framework for an adaptive security system15. As cybersecurity becomes paramount for individuals, businesses, and society as a whole, this strategic approach helps to maintain a dynamic view of network protection against current and new types of threats. In the fields of network security and intrusion detection, this paper makes a number of significant contributions. It offers novel solutions to existing problems caused by continually emerging and dynamic cyber threats by leveraging enhanced machine and deep learning mechanisms. Advanced intrusion detection models, new multi-source data fusion techniques, and an adaptive cybersecurity framework are the major contributions of this research. Combined, these contributions improve network efficiency and fortify defenses against an increasingly complex threat environment.

Advanced intrusion detection models

The three intrusion detection algorithms introduced here, based on machine learning and deep learning, are more effective than conventional approaches. These models enhance the overall protection of digital networks against a wide range of threats, including new and complex attacks16.

Multi-source data fusion techniques

The current study introduces innovative data fusion methodologies that, if employed in IDSs, can improve their accuracy and reliability by drawing on several data sources such as network traffic data, log files, and system call traces. These methods offer a practical and efficient approach to the problem of poor network security.

Adaptive cybersecurity framework

The study presents a dynamic cybersecurity architecture that enables IDSs to meet continually emerging threats. With this improvement, networks can remain safe and secure despite increasing threats. Taken together, our contributions improve the overall field of network security by advancing intrusion detection technologies, overcoming the limitations of traditional approaches, and encouraging a more dynamic and effective cybersecurity environment.

Digital networks are vulnerable because traditional intrusion detection systems have a hard time reliably identifying novel and sophisticated infiltration attempts. In an increasingly interconnected and vulnerable digital world, there is a pressing need to improve network security by leveraging the power of machine and deep learning techniques to develop robust and adaptable intrusion detection systems capable of proactively identifying emerging threats, minimizing false positives, and protecting the confidentiality and integrity of vital data.

Let X be a dataset representing network traffic data, where \(X=\{x_1,x_2,\ldots ,x_N\}\), and each \(x_i\) is a feature vector representing network traffic attributes. The goal is to design an intrusion detection model, M, parameterized by \(\Theta\), that can classify network traffic instances as either normal (\(y=0\)) or malicious (\(y=1\)), where y represents the ground truth labels. The model M is defined as \(M(x_i;\Theta )\) and aims to minimize the following objective function:

\[\min _{\Theta }\; \frac{1}{N}\sum _{i=1}^{N} L\big (M(x_i;\Theta ),y_i\big ) + \lambda R(\Theta )\]

where:

- L: Loss function, measuring the discrepancy between the predicted and actual labels.

- \(R(\Theta )\): Regularization term to prevent overfitting.

- \(\lambda\): Regularization parameter, controlling the trade-off between fitting the data and regularization.

- N: Total number of network traffic instances.

The problem formulation seeks to optimize the model’s parameters, \(\Theta\), to maximize detection accuracy while minimizing false positives and false negatives in identifying network intrusions.
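To make the formulation concrete, the sketch below evaluates one possible instance of such an objective. It assumes the usual regularized empirical-risk form (average loss over the N instances plus \(\lambda R(\Theta )\)), and it further assumes that M is a logistic model, L is binary cross-entropy, and \(R(\Theta )\) is the squared L2 norm. None of these specific choices is stated in the text; they are illustrative only, as are the toy data.

```python
import math

def predict(x, theta):
    """M(x; theta): logistic model returning the estimated P(y = 1 | x)."""
    z = sum(w * xi for w, xi in zip(theta, x))
    return 1.0 / (1.0 + math.exp(-z))

def objective(X, y, theta, lam):
    """Average binary cross-entropy plus lam times the squared L2 norm of theta."""
    eps = 1e-12  # guards against log(0)
    total_loss = 0.0
    for xi, yi in zip(X, y):
        p = predict(xi, theta)
        total_loss -= yi * math.log(p + eps) + (1 - yi) * math.log(1 - p + eps)
    regularizer = sum(w * w for w in theta)
    return total_loss / len(X) + lam * regularizer

# Toy data: a bias term plus one hypothetical traffic feature per instance.
X = [[1.0, 0.2], [1.0, 0.9], [1.0, 0.1], [1.0, 0.8]]
y = [0, 1, 0, 1]

theta = [-2.0, 4.0]
unregularized = objective(X, y, theta, lam=0.0)
regularized = objective(X, y, theta, lam=0.1)
print(unregularized, regularized)
```

With \(\lambda =0.1\) the penalty adds \(0.1\times (2^2+4^2)=2\) to the objective, which shows how a larger \(\lambda\) pushes the optimizer toward smaller parameters even when they fit the training data slightly worse.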

This study has the following research objectives:

1. To evaluate and compare the performance of various machine and deep learning models, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and ensemble methods, for intrusion detection, and to develop optimization strategies that enable real-time detection of novel threats.

2. To investigate the fusion of multiple data sources, including network traffic data, log files, and system call traces, to enhance the accuracy and robustness of intrusion detection systems. This objective includes exploring data preprocessing techniques and fusion algorithms.

3. To design and implement intrusion detection systems that can adapt to the dynamic nature of cyber threats in modern networks. This involves creating ways of updating countermeasures against risks in real time and sustaining the protection of data over the long term.

Literature review

In intrusion detection, machine learning methods have become a mainstay of most approaches. Methods that have been implemented include decision trees, support vector machines, and k-nearest neighbors17. A further advantage is that models can be retrained with new data to maintain their performance, which helps counter changing threats18. For instance, decision tree based models are well suited to anomaly detection because they can readily separate benign from destructive patterns of network behavior. SVMs separate the data space with a hyperplane in order to classify events and differentiate between safe and risky behaviors. The k-nearest neighbors method finds intruders by comparing each new observation with the most similar previously labeled observations. A wide range of machine learning algorithms can thus be used to strengthen the security of a network.
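The k-nearest neighbors idea can be illustrated with a short sketch. The two features (packets per second and mean payload size) and the six labeled flows are hypothetical, and k = 3 is an arbitrary choice; a real deployment would scale features and tune k.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Label a query point by majority vote among its k nearest training points."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical labeled flows: (packets per second, mean payload size in KB).
train = [
    ((10.0, 1.0), "normal"), ((12.0, 1.2), "normal"), ((11.0, 0.9), "normal"),
    ((90.0, 0.1), "attack"), ((95.0, 0.2), "attack"), ((88.0, 0.1), "attack"),
]

label_a = knn_predict(train, (92.0, 0.15))  # close to the high-rate cluster
label_b = knn_predict(train, (11.5, 1.1))   # close to the low-rate cluster
print(label_a, label_b)
```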

The capacity of deep learning approaches to automatically learn and extract complex patterns from vast datasets has propelled them to the forefront of machine learning. Popular deep learning architectures in intrusion detection include Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs)16. CNNs excel at handling image-like inputs or multidimensional arrays of network traffic data. In contrast, RNNs excel at processing sequences and are hence well suited to time-series network data. Their ability to capture temporal and spatial relationships in data leads directly to improved precision in identifying complicated intrusion patterns.

As swarm intelligence has evolved, algorithms have increasingly combined exploitation and exploration in order to achieve a better balance between global and local search. The general PSO and ABC algorithms have been made adaptive and have been applied to real-life problems including scheduling and structural design. Nevertheless, new approaches are still being investigated in an attempt to provide better algorithms, especially for constrained and multi-objective problems. The optimization algorithm proposed in19 is called Greylag Goose Optimization, or GGO for short, and is a swarm-based heuristic modeled on the motion of geese. GGO emulates the formation pattern of geese to improve both exploration and exploitation, which yields more effective searches. It has been verified on different benchmarks and is reported to outperform existing optimization algorithms, especially on engineering design problems.

Meta-heuristic algorithms have become a favorite tool for solving optimization problems because of their simplicity and their ability to avoid convergence to local optima. Such algorithms, derived from natural analogues, differ in effectiveness depending on the operators and heuristics they use. Among them, swarm intelligence and evolutionary algorithms are the most widely used in engineering, data mining, and machine learning. The Puma Optimizer, or PO for short, is a recently proposed algorithm based on the life and intelligence of pumas, with new exploration and exploitation approaches20. PO also has other distinctive characteristics, including a flexible phase-changing mechanism that adapts to the specific optimization problem and increases efficiency across different problems. The efficiency of the PO algorithm has been tested on 23 standard benchmark functions, CEC2019 functions, and several machine learning tasks, namely clustering and feature selection. The reported measurements indicate that PO is better than some current search methods: in optimization tests, PO is better in 27 out of 33 problems, and in clustering tests, PO provides better quality solutions in 7 out of 10 datasets. PO also shows promising performance on problems related to multi-layer perceptron (MLP) networks and community detection. These outcomes suggest that PO is a successful optimization tool for real-world applications that generates stable solutions, with good opportunities for future research on multi-objective optimization and civil engineering problems.

One of the most important issues in data preprocessing for building classification models is feature selection, which is aimed at dimensionality reduction. The “Apple Perfection” study used the Waterwheel Plant Algorithm (WWPA) to determine the key attributes affecting the quality of apples and achieved a considerably reduced computational load and an average error rate of 0.52153. This study showed that feature selection can improve results by considering only the relevant attributes; the logistic regression model used in the study had a classification accuracy of 88.63 %. In the same way, in intrusion detection, domain-adapted feature selection can help improve the identification and categorization of intrusions. By applying methods such as bWWPA, vital network features can be chosen, noise can be eliminated, and the performance of machine learning and deep learning models can be maximized21.

Despite being a relatively new field, intrusion detection is now regarded as an essential component of network security that utilizes both classical and contemporary mathematical approaches. Intrusion Detection Systems (IDS) have been enhanced through recent advances in machine learning and deep learning methodologies. Self-organizing, nature-inspired metaheuristic algorithms have shown some capability for optimizing IDS models. The paper22 proposed the Greylag Goose Optimization (GGO) algorithm, based on the birds’ ‘V’ formation structure, to improve exploration and exploitation. GGO was judged to be more effective than other methods in optimizing feature selection for IDS and solving complex engineering problems, which makes it a useful tool for enhancing ML models for IDS22. The synergy between dynamic strategies such as FbOA and DL architectures reflects current best practice. FbOA, which is modeled on the operations of football teams, has been applied successfully to high-dimensional and nonlinear problems, improving convergence and making the search process more robust. In IDS contexts, such algorithms could help in tuning deep learning models such as LSTM or CNN, which are applicable to analyzing sequential and complex network traffic data23.

Most of the reviewed nature-inspired optimization techniques can be incorporated into the hybrid ML and DL approach used in the current study. Such optimization techniques can further improve model effectiveness, cut down processing time, and increase the detection rate. For example, incorporating GGO or PO during the feature selection step could enhance interpretability and improve resistance to zero-day attacks.

AI and ML have great potential to transform the education sector by delivering differentiated learning products, identifying struggling learners, and designing content in response to student needs. Several prior works have investigated AI and ML approaches to education, most of them using predictive modeling and feature selection to enhance learning. In Educational Data Mining, algorithms such as Particle Swarm Optimization (PSO) and the Whale Optimization Algorithm (WOA) are used to select the most relevant features so that good predictions can be made. These techniques have shown promising results in areas such as student performance prediction and adaptive learning models. The study in24 examined the effects of AI on students’ learning at Grand Canyon University using bPSO-Guided WOA for feature selection and Linear Regression for prediction. The results show a comparatively small average error and an exceptionally small MSE, underscoring the value of these AI methods for forecasting and decision-making among educators. Such advances in AI offer a way of creating more consistent and complete education environments while improving both teaching and learning.

Deep learning models are increasingly being adopted as key tools in smart city development, where they enable traffic forecasting and the streamlining of city infrastructure. Models such as VGG16Net, VGG19Net, GoogLeNet, ResNet-50, and AlexNet have been used for traffic data analysis, with ResNet-50 and AlexNet exhibiting higher accuracy, sensitivity, and specificity in traffic prediction25. Further statistical analysis using ANOVA and the Wilcoxon Signed Rank Test shows a significant difference between the performance of the models, with AlexNet yielding an accuracy of 0.93178. These outcomes indicate that AI-powered solutions in the challenging field of traffic can improve the quality of urban mobility and support smart city projects, providing urban planners and policymakers with vital information for creating better and more efficient infrastructure.

Clustering and auto encoders are two examples of unsupervised learning techniques that can be useful for spotting anomalies. Anomalies in the level of network activity can be identified using a clustering algorithm such as K-means26. Auto encoders have grown in popularity because they learn to reconstruct “normal” network traffic, so that any discrepancy between input and reconstruction may indicate a breach. These methods are effective for autonomously identifying new attacks.

Similar to how people learn and adapt, reinforcement learning (RL) suits the constantly changing nature of network security. In RL-based intrusion detection, the agent’s job is to select security actions that maximize long-term reward. Such decisions27 include blocking or allowing network traffic, modifying security policies, and responding to specific threats. The agent acquires knowledge by trial and error, constantly modifying its strategy in light of the feedback provided by the network. This flexibility is what makes RL so useful in the field of intrusion detection. Traditional rule-based systems have difficulty keeping up with the ever-changing nature of cyber threats, whereas RL agents can learn effective security rules and adapt to new attack techniques in real time through interaction with the network environment28.

The use of ensemble learning techniques for intrusion detection in networks has shown promising results29. Random Forests and Gradient Boosting are two examples of ensemble approaches that use the combined power of numerous models to boost detection precision. In essence, they pool the results of various models in order to make more informed decisions with less chance of error. Using a random sampling of features and data from the training set, Random Forests construct numerous decision trees. The combined results from these trees form the basis for the final decision30. This method strengthens the model’s resistance to noise and outliers while enhancing its capacity for generalization. In contrast, the Gradient Boosting method builds a robust predictive model by iteratively constructing weak models that correct the shortcomings of their predecessors. This repeated technique yields an effective ensemble model that is particularly good at representing subtle interactions in data. Ensemble methods have a reputation for being able to handle datasets in which malicious events are vastly outnumbered by benign ones. In the field of network security, CNNs are used to examine heatmaps and other traffic data visualizations. Since attacks tend to look different from regular network traffic, CNNs are useful for spotting these abnormalities and identifying patterns within such heatmaps.
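The pooling step that ensemble methods share can be sketched as a simple majority vote. This is not a Random Forest or Gradient Boosting implementation: the three base detectors below are hand-written threshold rules on hypothetical flow features, standing in for trained trees, and only the vote-combining step is the point of the example.

```python
from collections import Counter

# Three hypothetical weak detectors, each looking at a different flow feature.
# Each returns 1 (malicious) or 0 (normal).
def by_rate(flow):
    return 1 if flow["pkts_per_s"] > 50 else 0

def by_ports(flow):
    return 1 if flow["distinct_ports"] > 20 else 0

def by_failures(flow):
    return 1 if flow["failed_logins"] > 3 else 0

def ensemble_predict(flow, models):
    """Combine the base detectors by majority vote."""
    votes = Counter(model(flow) for model in models)
    return votes.most_common(1)[0][0]

models = [by_rate, by_ports, by_failures]

brute_force = {"pkts_per_s": 80, "distinct_ports": 5, "failed_logins": 10}
busy_but_benign = {"pkts_per_s": 60, "distinct_ports": 3, "failed_logins": 0}

verdict_a = ensemble_predict(brute_force, models)
verdict_b = ensemble_predict(busy_but_benign, models)
print(verdict_a, verdict_b)
```

The second flow trips one detector but is outvoted by the other two, which is how pooling reduces the chance that a single noisy model raises a false alarm.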

When it comes to dealing with sequential data, RNNs provide an alternative to LSTMs. In contrast to LSTMs’ strength in processing long-range relationships, RNNs’ strength lies in their ability to process and remember short-term dependencies and recent occurrences31. In intrusion detection, RNNs are particularly useful for identifying multiple simultaneous threats. Their capacity to assess short time frames is crucial for spotting attacks that other models might overlook. RNNs improve intrusion detection systems’ real-time capabilities by taking recent network behavior into account.

Auto encoders are a type of neural network designed to learn a compact representation of their input and reconstruct the input from it. In intrusion detection, auto encoders are trained to reconstruct regular network data32. Input data is encoded into a lower-dimensional representation before being decoded back into its original form. When abnormal network traffic is presented, the reconstruction is poor and the reconstruction error is large, which signals an anomaly. Since auto encoders can spot outliers without relying on preset attack signatures, they are well suited for detecting unknown or unique attacks.
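The reconstruction-error idea can be shown without training a neural network. The sketch below is a deliberately simplified stand-in: instead of learned encoder and decoder weights it uses a single linear projection onto the mean direction of the normal data, and the data, the factor of 3 in the threshold, and all function names are assumptions made for illustration.

```python
import math

def fit_direction(normal):
    """Unit vector along the mean of the normal data (stand-in for trained weights)."""
    dims = len(normal[0])
    mean = [sum(row[d] for row in normal) / len(normal) for d in range(dims)]
    norm = math.sqrt(sum(m * m for m in mean))
    return [m / norm for m in mean]

def reconstruction_error(x, u):
    """Encode x to a single number, decode it back, and measure the distance."""
    code = sum(xi * ui for xi, ui in zip(x, u))   # encoder: 2-D -> 1-D
    recon = [code * ui for ui in u]               # decoder: 1-D -> 2-D
    return math.sqrt(sum((xi - ri) ** 2 for xi, ri in zip(x, recon)))

# Hypothetical normal traffic in which the second feature is about twice the first.
normal = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.0)]
u = fit_direction(normal)

# Threshold: three times the worst reconstruction error seen on normal data.
threshold = 3.0 * max(reconstruction_error(x, u) for x in normal)

def is_intrusion(x):
    return reconstruction_error(x, u) > threshold

print(is_intrusion((3.0, 1.0)), is_intrusion((2.5, 5.05)))
```

A point that breaks the learned relationship between the features, such as (3.0, 1.0), cannot be reconstructed from the one-dimensional code and is flagged, even though no signature for it exists.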

Protecting user anonymity and data is essential for any secure network. With federated learning, models can be trained using data from numerous distributed sources without moving the data from where it resides33. It enables companies to work together on threat detection and intrusion detection without disclosing private network information. Each region trains models on its own dataset, and only the most recent model updates are shared between regions. This privacy-preserving method is vital in settings where regulatory compliance and data protection are of the utmost importance, such as healthcare and finance, while still reaping the benefits of collective intelligence to improve network security.
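The aggregation step described above is commonly implemented as a weighted average of the participants’ model parameters (often called federated averaging). The sketch below assumes that each region reports a weight vector together with its local sample count; the two regions and their numbers are hypothetical.

```python
def federated_average(client_updates):
    """Average client weight vectors, weighting each by its local sample count.

    client_updates is a list of (weights, num_samples) pairs. Only these
    model parameters are shared; the raw traffic data never leaves a client.
    """
    total = sum(n for _, n in client_updates)
    dims = len(client_updates[0][0])
    return [
        sum(weights[d] * n for weights, n in client_updates) / total
        for d in range(dims)
    ]

# Two hypothetical regions with locally trained two-parameter models.
updates = [
    ([0.2, 1.0], 100),   # region A: 100 local samples
    ([0.4, 2.0], 300),   # region B: 300 local samples
]
global_weights = federated_average(updates)
print(global_weights)
```

Weighting by sample count lets the region with more data pull the shared model further toward its local solution, here three times as strongly as the smaller region.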

Graph Neural Networks (GNNs) have become an important resource for ensuring the safety of computer networks. Modern network topologies are notoriously complicated, with many devices and systems interconnected in complex ways that make intrusion detection particularly difficult. GNNs harness graph-based data representation to solve this problem. In a network graph, nodes represent devices and edges represent relationships between them34. GNNs can learn and propagate information across this graph structure to detect abnormalities that could be hard to identify using more traditional techniques. One of GNNs’ main advantages is that they can analyze the global and local features of the network data at the same time.

Explainable Artificial Intelligence (XAI) has become essential for intrusion detection systems because it brings much-needed additional information into the decision-making process. The high accuracy of many machine and deep learning techniques used for intrusion detection often comes with a lack of explicit understanding of how and why specific decisions were made. In real-world security systems, where security professionals must rely on a warning and understand why it was raised, this opacity poses a real challenge. To address this issue, XAI methods such as LIME and SHAP have been created35. These methods generate justifications for model predictions, providing insight into the considerations that went into a given decision. XAI techniques, for instance, can shed light on the traits and patterns that lead to the detection of an intrusion. With this information, security analysts are better equipped to make decisions, conduct investigations, and fine-tune security plans.

By assisting with the analysis of log files and textual data provided by network devices, Natural Language Processing (NLP) has expanded its reach into network security36. System logs, firewall logs, and event logs all offer useful information for intrusion detection but are typically large and poorly organized. NLP methods extract and organize this textual material, rendering it analyzable. The ability to recognize abnormalities or patterns in log data is an important NLP application for intrusion detection. NLP can analyze log entries and spot suspicious behavior such as attempted intrusions or configuration changes. This analysis is of paramount importance for early intrusion detection, where identifying security problems in a timely manner can reduce possible damage.

Bayesian Networks facilitate the detection of threats and associated hazards in a network through their ability to incorporate past information and probabilistic reasoning to evaluate the likelihood of various network events. The capacity to model uncertainty is one of Bayesian Networks’ main capabilities. Managing uncertainty is particularly important in the context of intrusion detection, where both false positives and false negatives can have severe repercussions37.

Homomorphic encryption is a method for protecting private information while still performing calculations on it. In the context of intrusion detection, it provides a novel approach to protecting the privacy of network information.

The adaptability of Markov models is one of their main selling points. Because network conditions and attack methods vary, attackers continually find new opportunities to carry on their malicious activity, and detection models must be able to adapt accordingly.

Ensemble clustering combines various clustering algorithms in an attempt to improve the analysis of network traffic and hence the recognition of intrusions. Cluster analysis algorithms attempt to classify data into groups with comparable characteristics. An ensemble of clustering algorithms such as K-means, DBSCAN, and hierarchical clustering can segment network traffic data into meaningful groups, each representing a particular network behavior. Ensemble clustering is a more general method of intrusion detection because it takes numerous different patterns in the network into consideration. Because different phases of an intrusion may display distinct behaviors, this is especially helpful in spotting multi-stage intrusions38. Security professionals can better detect breaches and identify anomalies in network behavior by aggregating the results of various clustering exercises. Ensemble clustering can also help to reduce overfitting and to mitigate the problem of class-imbalanced datasets. By drawing on the strengths of several clustering methods, it can improve the accuracy of intrusion detection systems, making it a flexible tool for network security.

When dynamic security policies are required, reinforcement learning (RL) with a focus on Q-learning provides an attractive approach to intrusion detection. Since cybercriminals are always coming up with new ways to circumvent security measures, the landscape of network protection is anything but stable. Q-learning is well suited to this setting. Using this method of reinforcement learning, security experts can train agents to take the actions that will have the greatest long-term benefit39. The optimal responses to network threats can be defined and rewarded in this way. Q-learning stands out because of its ability to adjust to new dangers, which allows businesses to update security policies dynamically in response to new threats. Q-learning agents learn to adjust defensive strategies based on their perception of the surrounding network environment in order to maximize long-term reward. This flexibility is important in dynamic network situations where static rule-based systems may not work. Reinforcement learning with Q-learning is therefore a proactive strategy for intrusion detection that can help businesses safeguard their networks against current and emerging cyber threats.
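To make the idea of rewarding good responses concrete, the following is a minimal tabular Q-learning sketch. The states (`"suspicious"`, `"normal"`), the actions (`"allow"`, `"block"`), the reward values, and the learning-rate and discount settings are all illustrative assumptions and do not come from the cited work.

```python
import random
from collections import defaultdict

ACTIONS = ["allow", "block"]  # hypothetical defensive actions


def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """One Q-learning step: move Q(s, a) toward reward + gamma * max Q(s', .)."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])


def choose_action(q, state, epsilon=0.1, rng=random):
    """Epsilon-greedy policy: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[(state, a)])


q_table = defaultdict(float)
# Toy experience: blocking suspicious traffic is rewarded, allowing it is penalized.
for _ in range(50):
    q_update(q_table, "suspicious", "block", 1.0, "normal")
    q_update(q_table, "suspicious", "allow", -1.0, "suspicious")

print(choose_action(q_table, "suspicious", epsilon=0.0))
```

In a real deployment the experience would come from observed or simulated network interactions rather than a fixed loop, and the state space would typically be far larger than a two-entry table.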

Intrusion detection often relies on Principal Component Analysis (PCA) because it can reduce the number of variables needed to describe network data. In a network setting, data can be very high dimensional, with many irrelevant or redundant features. Dimensionality reduction is especially useful when working with large datasets or in environments with limited time and computational resources. Intrusion detection models benefit from this data simplification since it allows them to run more quickly and with less computational cost.

Federated learning improves the state of collaborative intrusion detection without compromising the safety of confidential information. In this approach, no data is centralized at any point, allowing participating businesses, endpoints, or edge devices to train machine learning models on their own data. The privacy of the network is protected because only model changes are shared instead of the original data. This cooperative technique is particularly valuable for protecting sensitive network information and staying in compliance with strict data privacy requirements40. Through federated learning, businesses can increase the collective intelligence of their intrusion detection systems by combining knowledge and insights from a variety of sources. This federated method takes advantage of the unique qualities of each dataset to produce a flexible and powerful intrusion detection system. When information from several sources is combined, the ability to identify and counteract security threats is strengthened. Data remains decentralized and secure, while collective knowledge equips businesses to protect against sophisticated cyber threats.

When dealing with time-series data, Hidden Markov Models (HMMs) are very useful in the intrusion detection field. HMMs are a good fit for capturing temporal relationships in the context of network security monitoring, where activity patterns can change over time. Whether it is the gradual appearance of an anomaly or the sequential acts of a multi-stage attack, HMMs are well suited to modeling such complex behavior. They offer a framework for identifying patterns of network behavior that could otherwise go undiscovered. HMMs are also notable for their flexibility41. From conventional corporate networks to more complex industrial control systems, they are applicable across a wide range of network contexts, which makes them useful for intrusion detection in a wide variety of settings. They contribute to the protection of networks because they help security professionals spot novel and sophisticated threats. HMMs help keep security measures in step with the developing threat landscape as cyber adversaries continuously enhance their strategies.
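One way an HMM can be used for this kind of sequence scoring is the forward algorithm, which computes how likely an observed sequence is under a model of network behavior; an unusually low likelihood can then be treated as a sign of anomaly. The sketch below is illustrative only: the hidden states, the observation symbols (`"low"` and `"high"` traffic volume), and every probability are assumed values, not parameters from the cited work.

```python
def forward_likelihood(obs, states, start_p, trans_p, emit_p):
    """Forward algorithm: probability of the observation sequence under the HMM."""
    alpha = {s: start_p[s] * emit_p[s][obs[0]] for s in states}
    for symbol in obs[1:]:
        alpha = {
            s: emit_p[s][symbol] * sum(alpha[p] * trans_p[p][s] for p in states)
            for s in states
        }
    return sum(alpha.values())


# Hypothetical two-state model of network behavior (all values assumed).
STATES = ("normal", "attack")
START_P = {"normal": 0.9, "attack": 0.1}
TRANS_P = {
    "normal": {"normal": 0.95, "attack": 0.05},
    "attack": {"normal": 0.30, "attack": 0.70},
}
EMIT_P = {
    "normal": {"low": 0.8, "high": 0.2},
    "attack": {"low": 0.1, "high": 0.9},
}

quiet = forward_likelihood(["low", "low", "low"], STATES, START_P, TRANS_P, EMIT_P)
burst = forward_likelihood(["high", "high", "high"], STATES, START_P, TRANS_P, EMIT_P)
print(quiet, burst)
```

Under these assumed parameters, a sustained burst of high traffic is less likely than a quiet sequence, so a threshold on the likelihood could serve as a simple alerting rule.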

XGBoost, short for Extreme Gradient Boosting, is a flexible machine learning algorithm that applies ensemble learning to intrusion detection. By pooling together model predictions, ensemble learning improves detection precision. XGBoost performs well because it can handle large datasets and scales well. These features make it a strong tool for ensuring the safety of computer networks. XGBoost reduces the possibility of false positives and false negatives by combining predictions from many models42. It can form the basis of an effective intrusion detection system that adjusts to new types of attacks and improves network safety. Due to its high flexibility and its ability to process massive data in a short time, it offers businesses a strong line of defense against ever-emerging cybersecurity threats. XGBoost is not just an addition to the security arsenal of intrusion detection systems but also a key component in enhancing their accuracy and dependability.

For intrusion detection, the distinguishing feature of Isolation Forests is that they employ ensemble-based anomaly detection. Isolation Forests differ from standard ensemble methods in that they focus on outliers rather than typical cases. The underlying idea is that outliers are rare and therefore easy to separate from the rest of the data. The method builds individual decision trees, and the depth at which a point is isolated in each tree enables fast identification of anomalous points43. This supports efficient detection of intrusions by an IDS while reducing the false alarms commonly associated with network monitoring.

Threat intelligence for intrusion detection can greatly benefit from the organized representation of information offered by knowledge graphs. Knowledge graphs make it easier to store, link, and retrieve information about dangers in this age of interconnected threats and adversaries. They give a complete picture of the dangers that exist by linking together things like vulnerabilities, exploits, malware, and attack methods. This organized body of knowledge enables security analysts to make informed decisions, spot new risks, and respond efficiently to network incursions44. The ability of intrusion detection systems to detect and prevent advanced threats in real time is greatly improved by incorporating knowledge graphs into the fusion of threat intelligence.

Each of these methods exemplifies a different strategy for intrusion detection, revealing the many options that can be used. An adaptable and flexible security approach is necessary since the best method to use is determined by factors such as the nature of the data being protected, the nature of the threats being faced, and the requirements at hand.

Quantum machine learning (QML) is a potentially significant step forward for finding security flaws in a system. The theoretical foundations of quantum computing hold the promise of greatly increased processing power and information security. The quantum bits (qubits) that define a quantum computer could enable it to perform certain calculations far faster than a classical computer45. For intrusion detection, this could mean that threats to the network are assessed and addressed almost immediately. Another promising application of quantum technology is quantum encryption, which aims to change data encryption by building on the principles of quantum mechanics. In principle, this would make it infeasible for an attacker, however sophisticated, to intercept or decrypt data undetected. In the context of network security, quantum encryption may lead to a level of reliability and safety for sensitive data that was previously thought to be unattainable.

The dependencies between nodes and events in today’s networks are broad and evolve across both space and time. For such complex network configurations, Spatial-Temporal Graph Convolutional Networks (ST-GCNs) offer a contemporary technique for intrusion detection. Because these networks represent such complex relationships accurately, they perform well in architectures where both layout and time influence network traffic. ST-GCNs are built on top of graph convolutional networks, making them capable of handling the complexity of actual networks. Modeling the spatial and temporal elements of network data allows for the identification of anomalies that evolve over time, which makes accurate intrusion detection possible in highly dynamic situations. Traffic patterns can be analyzed in settings where spatial-temporal dynamics play a crucial role in network security, such as smart cities, industrial control systems, or cloud environments. ST-GCNs are distinguished by their flexibility46. They can be adapted to the distinctive spatial-temporal properties of different network environments. This adaptability makes them a useful resource for IT departments and other organizations concerned with network security, especially in complex and ever-changing environments.

Since Variational Autoencoders (VAEs) are adept at both anomaly detection and data generation, they provide a holistic approach to intrusion detection. Generative models, of which VAEs are a subset, are used to discover the structure of raw data. When used for intrusion detection, they serve a dual purpose: identifying out-of-the-ordinary activity and providing artificial data for use in training models. In the context of anomaly detection, VAEs are particularly effective at identifying outliers relative to the learned data distribution. They can detect abnormalities in real time by creating a model of typical network activity47. This is especially helpful in cases where new threats do not yet have well-defined signatures but can be recognized by their departure from the usual. When businesses need synthetic data to supplement their intrusion detection models, VAEs’ generative features come into play. VAEs help businesses strengthen their intrusion detection systems by creating a wide variety of datasets that simulate both benign and malicious network activity.

In the face of advanced persistent threats (APTs) and other complicated, multi-stage attacks, the application of game theory is important. Because adversaries in these situations are constantly changing their approach, it is crucial to be able to analyze and anticipate their actions. Organizations can model and simulate these interactions with the use of game theory, which sheds light on potential attack routes and enables proactive protection. Organizations can improve their intrusion detection and prevention measures by using a game-theoretic approach10. This long-term outlook means that network defenders do more than simply respond to threats; they also anticipate and neutralize them. The incorporation of game theory into intrusion detection provides a preventative technique for securing networks against increasingly sophisticated adversaries, which is especially important as the threat landscape continues to grow.

Although hyperparameter tuning is not technically a machine learning method, it is crucial to the success of intrusion detection models. The hyperparameters of a machine learning model are the variables and configurations that determine its behavior. The effectiveness of an intrusion detection system relies heavily on the careful selection of models and tuning of hyperparameters. Optimizing a model’s hyperparameters involves a methodical search for the best values for those variables48. Many intrusion detection models include a number of settings that must be calibrated before they can function at peak efficiency. Systematic tuning helps an intrusion detection system operate with a configuration suited to reliable threat detection. Hyperparameter optimization methods include Grid Search, Random Search, and Bayesian optimization, among others.
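As a small illustration of Grid Search, the sketch below exhaustively evaluates every combination in a parameter grid and keeps the best-scoring one. The scoring function `toy_score` is a hypothetical stand-in for a real validation metric (for example, cross-validated F1 of an IDS model), and the parameter names and candidate values are assumptions chosen for the example.

```python
from itertools import product


def grid_search(param_grid, score_fn):
    """Evaluate every parameter combination and return the best one with its score."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(params):
    """Hypothetical stand-in for a validation score; peaks at depth 8, 100 trees."""
    return -(params["max_depth"] - 8) ** 2 - (params["n_estimators"] - 100) ** 2 / 1000


grid = {"max_depth": [4, 8, 16], "n_estimators": [50, 100, 200]}
best_params, best_score = grid_search(grid, toy_score)
print(best_params, best_score)
```

Grid Search is simple but its cost grows multiplicatively with each added hyperparameter, which is one reason Random Search and Bayesian optimization are often preferred for larger search spaces.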

Recent advances in Explainable Artificial Intelligence (XAI) are aimed at making intrusion detection systems more transparent and interpretable. Machine learning and deep learning produce models that deliver high accuracy, but their output is challenging to comprehend and trust due to their black-box nature10. XAI methods such as LIME or SHAP can be used to interpret a model’s predictions and thereby explain its reasoning. Because they can see the reasoning behind an intrusion warning, security analysts are better able to assess incidents and adjust security plans. XAI also supports the responsible and ethical use of AI in security, without which people cannot be expected to trust automated security systems with their safety and wellbeing.

The encryption methods utilized by intrusion detection systems stand to benefit greatly from the implementation of Quantum Key Distribution (QKD). The mathematical algorithms used in conventional encryption systems are theoretically vulnerable to sufficiently powerful computers. In contrast, QKD employs quantum mechanical principles to secure the exchange of keys48. It allows two parties to generate a shared secret key such that, in principle, any interception attempt can be detected, regardless of how powerful the eavesdropper’s computers are. In the context of intrusion detection, where protecting sensitive information from malicious actors is of fundamental importance, this level of protection is valuable.

A common problem in the field of intrusion detection is using models trained on data from one domain to detect intrusions in another domain. Intrusion detection models can benefit from domain adaptation strategies such as adversarial domain adaptation and transfer learning when being deployed in novel, previously unexplored network settings. These methods help preserve the accuracy of models in new settings by bringing the source and target domains into alignment49. Because of this, intrusion detection systems can be adapted to a changing network landscape without sacrificing effectiveness, which is especially helpful when companies expand their network infrastructure or acquire new subsidiaries.

An efficient method for anomaly detection in IDSs is semi-supervised learning, especially when combined with One-Class Support Vector Machines (SVMs). One-Class SVMs are able to establish a boundary that captures normal network behavior since they are trained on a dataset consisting of only normal data. When presented with new data, they are able to spot outliers that fall outside this boundary. Because it makes use of a small amount of labeled data in conjunction with a large amount of unlabeled data, this method is well suited to situations in which labeled intrusion data is sparse50. In this way, intrusion detection systems can keep their false positive rate low while still detecting new and developing threats.

Edge AI, or the execution of machine learning models locally on edge devices, is reshaping intrusion detection by allowing for the detection of threats in real time without the need for centralized servers. Edge AI is crucial for securing the network at the device level in the age of IoT (Internet of Things) and edge computing, when billions of devices are interconnected. Deployed intrusion detection models on edge devices may rapidly assess network traffic and device behavior, enabling swift action against security threats51. When low-latency intrusion detection is crucial and centralized systems are at risk of being attacked, this method becomes indispensable.

Homomorphic encryption is valuable for intrusion detection since it allows for secure data analysis without the risk of sensitive information disclosure. With traditional approaches to data analysis, data is often exposed to breaches during the decryption phase, since it must first be available in plaintext before it can be processed. With homomorphic encryption, however, it is possible to perform computations on encrypted data without first decrypting it. This allows businesses to perform intrusion detection on encrypted data while keeping the data as secure as possible on the network. There are two main advantages to using homomorphic encryption. First, it protects individuals’ identities and personal data. Data stored in a network can be sensitive, and leakage of such information may have undesirable consequences52. The risks of data breaches or unauthorized access are reduced because the data remains encrypted throughout the entire analytical process. Second, it keeps the intrusion detection procedures themselves safe from external threats. Businesses can apply machine learning and deep learning methods to the encrypted data to spot dangers. The encrypted data serves as the basis for any detections, keeping the underlying data secure.
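To illustrate what computing on encrypted data means, the sketch below uses a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields a ciphertext of the sum of the plaintexts. The source does not name a specific scheme, so Paillier is an assumption made for illustration, and the tiny primes used here are completely insecure. The per-host counts are hypothetical.

```python
import math
import random


def keygen(p=293, q=433):
    """Toy Paillier key generation. The small default primes are NOT secure."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    g = n + 1
    mu = pow((pow(g, lam, n * n) - 1) // n, -1, n)  # modular inverse (Python 3.8+)
    return (n, g), (lam, mu)


def encrypt(pub, m, rng=random):
    """Encrypt integer m (0 <= m < n) with fresh randomness r coprime to n."""
    n, g = pub
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n * n) * pow(r, n, n * n)) % (n * n)


def decrypt(pub, priv, c):
    """Recover the plaintext from ciphertext c."""
    n, _ = pub
    lam, mu = priv
    return (((pow(c, lam, n * n) - 1) // n) * mu) % n


def add_encrypted(pub, c1, c2):
    """Homomorphic addition: the product of ciphertexts encrypts the sum."""
    n, _ = pub
    return (c1 * c2) % (n * n)


pub, priv = keygen()
# Hypothetical per-host counts of failed logins, encrypted before sharing.
c_total = add_encrypted(pub, encrypt(pub, 17), encrypt(pub, 25))
print(decrypt(pub, priv, c_total))
```

An aggregator holding only the public key could sum encrypted counters from many hosts in this way, with decryption of the total restricted to the key holder. Running full machine learning models on encrypted data requires richer schemes and is considerably more expensive.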

For intrusion detection, transfer learning is a versatile technique that builds on pretrained deep learning models. It can take a lot of time and resources to train a deep learning model from scratch53. Pretrained models, however, have already been trained on large datasets and have learned many types of patterns and characteristics. They can be used to improve aspects of intrusion detection models such as detection accuracy and the reliability of results. A convolutional neural network (CNN) that has been trained to recognize characteristics in images is an example of a pretrained model that can be used for transfer learning and thus for network threat detection. To make such a pretrained model more suitable for the current task, it is retrained using intrusion detection data54. Fine-tuning means adjusting the model’s architecture, weights, and hyperparameters to match the specifics of the given network traffic data. The first advantage of transfer learning is therefore the reduction in model development time. Firms can create a new model with less difficulty and effort by building on pretrained models.

One study55 proposed the HDLNIDS model, a deep learning model that uses local and temporal features for intrusion detection. Malicious threats are constantly emerging and evolving, so advanced security systems are needed to counter them. Because of new forms of text-based threats, intrusion detection increasingly needs Natural Language Processing (NLP) techniques. The textual components that NLP can identify correspond to a wide range of attacks involving the use of text, from phishing messages to network intrusions that exploit textual interfaces. By applying NLP, intrusion detection systems can analyze and understand the language of these texts in order to identify threats. Phishing emails, for instance, frequently use misleading wording or URLs that NLP models can identify. Linguistic patterns used by malicious code in network communication may also be identifiable by NLP algorithms.

Optimizing intrusion detection models with the help of Neural Architecture Search (NAS) is a novel approach. By automatically probing a wide range of design options and hyperparameters, it can zero in on a well-performing neural network architecture. Organizations that want to strengthen their security posture can benefit from NAS since it lets them spend less time manually designing effective intrusion detection models56. Different neural network designs, layer combinations, activation functions, and optimization methods are all part of the wide design space that must be explored during the NAS process. During this search, NAS compares the efficacy of candidate architectures using intrusion detection data, and more accurate and efficient models are given greater weight. Among the advantages of NAS is the ease with which customized intrusion detection models can be developed. The search process considers the specific characteristics and requirements of the network data, resulting in models that are finely tuned to the organization’s needs.

Intrusion detection often grapples with uncertain and imprecise data, which can be challenging to classify as purely normal or malicious. Fuzzy logic systems offer a robust solution for handling uncertainty and providing nuanced decision-making in intrusion detection57. Fuzzy logic is based on the concept of “fuzzy sets,” which allow gradual transitions between different membership grades. Unlike traditional binary classifications, where data is either entirely in one category or another, fuzzy logic accommodates the idea that data can belong to multiple categories simultaneously. This is particularly advantageous in intrusion detection, where network behavior may exhibit subtle deviations or uncertainty. Fuzzy logic systems use linguistic variables and rules, and make decisions in a way that is easier for human beings to understand58. This is most helpful where a simple binary classification would risk producing a high number of false positives.
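The notion of partial membership can be sketched with triangular membership functions, a common textbook choice. The linguistic variable (failed logins per minute), the three fuzzy sets, and their breakpoints below are hypothetical values chosen for illustration and are not taken from the cited works.

```python
def triangular(x, a, b, c):
    """Triangular membership: 0 at or outside the feet a and c, 1 at the peak b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical fuzzy sets for the linguistic variable "failed logins per minute".
FUZZY_SETS = {
    "low": (-1, 0, 10),
    "medium": (5, 15, 25),
    "high": (20, 40, 61),
}


def memberships(x):
    """Degree to which x belongs to each fuzzy set."""
    return {name: triangular(x, *abc) for name, abc in FUZZY_SETS.items()}


m = memberships(8)
print(m)
```

A rate of 8 failed logins per minute belongs partly to "low" and partly to "medium" rather than falling on one side of a hard threshold, which is the gradual transition described above. Fuzzy rules would then combine such degrees into an overall risk level.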

One major concern is the relative absence of a single, thorough, and adaptive approach for dealing with the constant evolution of threats. New and innovative attack paths are discovered continually, and in such cases older intrusion detection systems may often fail. Many of these systems rely on labeled data, which can be limiting because it presupposes knowledge of typical attacks. Reliance on labeled data may mean missing other attacks, such as zero-day attacks or those that are completely unknown. Flexibility and scalability also deserve attention. Although machine learning and deep learning have achieved a great deal, there is still a need for models and algorithms that can scale to support diverse network configurations. This is especially important in the context of the IoT and industrial networks, where limited resources are typical. To guarantee the safety of these increasingly networked systems, intrusion detection technologies that function well in such situations are essential. Furthermore, there is a need to bridge the gap between theoretical improvements in intrusion detection systems and their implementation in real-world network security.

Materials and methods

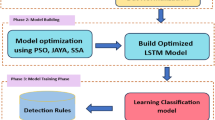

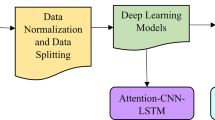

Figure 1 presents the flow of this study, which uses the UNSW-NB15 dataset, a benchmark dataset for network intrusion detection (NID). The diagram illustrates the methodology followed for enhancing intrusion detection using machine learning and deep learning models. The key steps are as follows:

Input Data: The raw data file is loaded for use in the analysis.

Data Preprocessing: Missing values are handled and the categorical features are transformed in order to prepare clean data for analysis.

Feature Selection: Particularly relevant features are selected and retained for the purpose of dimensionality reduction and consequent enhancement of model accuracy.

Data Splitting: The dataset is split into training and testing sets for model development and evaluation.

Model Development: Random Forest and Support Vector Machine models are used, while a deep learning model such as Long Short-Term Memory can also be employed.

Evaluation: Models are evaluated using the following performance indicators: accuracy, precision, recall, and F1 score.

Anomaly Prediction: The trained models then predict anomalies, separating normal and attack instances in the network traffic.

This structured flow provides a systematic method of constructing and testing an IDS.
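The evaluation metrics named in this flow can be computed directly from the counts of true and false positives and negatives. The sketch below assumes binary labels with 1 for "attack" and 0 for "normal"; the example label vectors are made up for illustration and are not results from this study.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = attack, 0 = normal)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up labels and predictions for eight connections.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Precision and recall are reported alongside accuracy because, on imbalanced traffic, a model can reach high accuracy while missing most attacks.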

The dataset was released by the University of New South Wales (UNSW), Australia, for research on IDSs. It was compiled from real network traffic data, making it suitable for real-world intrusion detection research. The UNSW-NB15 dataset is a large collection of network traffic records comprising 2,540,044 instances. Each instance is a network connection, a rather complex object with various properties and labels for classification tasks. The main characteristics of the dataset are as follows:

Feature Dimensionality: The dataset consists of features that capture various aspects of network traffic, including connection duration, protocol, service, state, packet counts, byte counts, and more. These features provide valuable information for intrusion detection.

Binary Classification: Our research focuses on binary classification tasks, where network connections are categorized as either “normal” or “attack.” The dataset includes a label column that indicates the category of each connection, making it suitable for binary classification experiments.

Attack Categories: The dataset covers a wide range of attack categories, encompassing different types of network intrusions, such as denial of service (DoS), intrusion detection evasion (IDE), and probe attacks. Knowing the variety of attacks that are present in the dataset is important to assess model performance.

In the exploratory data analysis (EDA), visualization plays a significant role in understanding the features of the UNSW-NB15 dataset and their distributions. Here we present various graphical techniques to analyze the structure of the data and to detect patterns that can inform decision making. The visualizations are grouped by feature in order to give a complete description of the attributes of the dataset.

Data pre-processing is important for ensuring the quality of the dataset before it is fed into the machine learning and deep learning models. In this section, we present the transformations applied to the UNSW-NB15 dataset prior to conducting any classification tasks on it. Before any analysis or model is constructed, some pre-processing is necessary, namely the handling of missing and erroneous data. For the UNSW-NB15 dataset used in this study, we checked each column for missing values. The dataset did not contain any missing values, so no imputation was necessary.

Figure 2 shows the traffic distribution of the UNSW-NB15 dataset based on the recorded network traffic. The pie chart compares the shares of normal and intrusive traffic instances, while the histogram gives the frequency count of each. This distribution highlights the class skew that is often observed in intrusion detection datasets, which this study addresses with oversampling and undersampling.

Figure 3 depicts the breakdown of the network protocols present in the dataset. The pie chart gives the percentage of each protocol (such as TCP and UDP), and the histogram gives the exact count of each. Protocol distribution matters for feature engineering because the protocol type usually shapes the network traffic and is a good indicator of normal versus abnormal traffic.

Figure 4 shows the distribution of attack categories in the UNSW-NB15 dataset. In the pie chart, each percentage represents a type of attack, such as DoS, Probe, and Worms, while the histogram shows the number of occurrences of each type. This visualization helps determine how often each type of attack occurs, which is crucial for understanding the organization of the dataset and improving intrusion detection algorithms.

Figure 5 illustrates the connection states in the dataset. The pie chart shows the share of each connection state, such as FIN, SYN, and RST, and the histogram shows the count of each state. Connection states are important for characterizing a flow and for spotting illegitimate activity, and they help distinguish normal from intrusive traffic during training.
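
Each pie chart and histogram pair described for Figures 2 to 5 rests on the same two numbers per category: its count and its share of the total. A minimal sketch of that tabulation is given below; the helper name `category_distribution` and the toy rows are assumptions made for illustration, and only the column names come from the dataset description.

```python
import pandas as pd


def category_distribution(series: pd.Series) -> pd.DataFrame:
    """Count each category and express it as a percentage of all rows."""
    counts = series.value_counts()
    return pd.DataFrame({
        "count": counts,                                   # histogram heights
        "percent": (100.0 * counts / counts.sum()).round(2),  # pie shares
    })


# Toy rows standing in for UNSW-NB15 records (values are invented).
toy = pd.DataFrame({
    "proto": ["tcp", "tcp", "udp", "tcp", "udp", "arp", "tcp", "tcp"],
    "state": ["FIN", "FIN", "INT", "CON", "INT", "INT", "FIN", "RST"],
    "label": [0, 1, 1, 0, 1, 1, 0, 1],
})

proto_dist = category_distribution(toy["proto"])
label_dist = category_distribution(toy["label"])
```

The `count` column is what a histogram would plot and the `percent` column is what a pie chart would plot, so the same table can feed either view.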

Figure 6 shows feature selection based on correlation scores in the UNSW-NB15 dataset, which evaluates the relationship between each feature and the intrusion/normal label. Features are scored from 0 to 1, with higher scores indicating stronger correlations. Key features such as \(ct\_state\_ttl\), sbytes, and dbytes show significant correlations, making them well suited for modeling. This approach improves both the model’s performance and its interpretability by retaining the most relevant attributes.
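
A correlation-based ranking of this kind can be sketched as below. Two assumptions are made here: the 0 to 1 score is taken to be the absolute value of the Pearson correlation between a feature and the label, and the cutoff of 0.3 is the one the paper reports in its feature selection discussion. The function name and the toy columns (`f_strong`, `f_weak`, `f_none`) are hypothetical.

```python
import pandas as pd


def rank_features_by_correlation(df, target="label", threshold=0.3):
    """Score numeric features by |Pearson r| with the target and select
    those whose score exceeds the threshold."""
    numeric = df.select_dtypes(include="number")
    scores = numeric.drop(columns=[target]).corrwith(numeric[target]).abs()
    scores = scores.dropna().sort_values(ascending=False)
    selected = scores[scores > threshold].index.tolist()
    return scores, selected


# Deterministic toy data: `base` is uncorrelated with the label by design.
label = [0, 1, 0, 1] * 25
base = [0, 0, 1, 1] * 25
toy = pd.DataFrame({
    "f_strong": [5 * y + b for y, b in zip(label, base)],  # r ~ 0.98
    "f_weak": [y + 4 * b for y, b in zip(label, base)],    # r ~ 0.24
    "f_none": base,                                        # r = 0
    "label": label,
})

scores, selected = rank_features_by_correlation(toy)
```

Sorting `scores` in descending order gives the ordering that a horizontal bar chart of selection scores would display.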

Feature selection is the process of choosing relevant features from the dataset while removing irrelevant or redundant ones. In this study, we retained all features of the UNSW-NB15 dataset listed in Table 1. Feature selection techniques can nevertheless be applied to datasets with a large number of features to reduce dimensionality and potentially improve model performance.

Using the Pearson correlation coefficient, we calculate the correlation between each attribute and the label attribute. Attributes with correlation coefficients greater than 0.3 are considered highly correlated and are selected for further analysis. The attributes that pass this threshold are considered valuable for modelling the classification task.

Figure 2 visualizes the distribution of labels, indicating network intrusion or normal traffic, and provides insight into the class balance of the dataset. Figure 3 displays the distribution of network protocols (such as TCP and UDP) used in the dataset and helps to understand the prevalence of different protocols. Figure 4 visualizes the distribution of attack categories within the dataset and helps to understand the frequency of different types of attacks. Figure 5 presents a distribution plot showing the frequency of different connection states in the dataset and helps to understand the prevalence of different states.

The bar plot in Figure 6 gives an overview of the selected attributes and their scores, which helps in deciding which features to include in the machine learning models. Each feature is represented as a horizontal bar, and the length of the bar corresponds to its selection score. A rainbow colormap is used so that each feature appears in a different color, which makes the features easier to identify. The plot shows the values of all selected attributes so that the analyst can compare them. These attributes are used to construct and train the machine learning models for network intrusion detection. Feature selection ensures that the models are fed the most informative attributes of the network traffic, which enhances the ability of the system to identify different network attacks.

Many machine learning algorithms require numerical inputs, so categorical features must be encoded. In the UNSW-NB15 dataset, the categorical columns are proto, service, state, and attack_cat. These features were encoded using one-hot encoding, in which each category of a feature is mapped to its own binary column. This transformation makes the categorical data suitable for model building.

Table 1 lists the important features of the UNSW-NB15 dataset used for intrusion detection and describes each feature in terms of its usefulness for profiling and for detecting anomalous traffic in an IP network. Examples include proto (protocol type), which is informative about the connection state of a network; \(attack\_cat\), which describes the nature of an attack; and sbytes and dbytes (byte counts), which indicate the volume of specific activities. The label column is the binary target variable, where normal instances are assigned 0 and attack instances 1, and it is used to train the machine learning and deep learning algorithms. This feature description helps in understanding the nature of the dataset and its application in building reliable intrusion detection models.

In this section we discuss how the categorical data in the dataset is encoded for the machine learning algorithms. Categorical features are those whose values are non-numerical labels drawn from a set of categories. Most machine learning models expect numerical input, so these categorical features have to be converted to numbers. One-hot encoding is a common method that encodes categorical data in a binary format: it creates a new binary column for each category of a categorical feature, and each column indicates whether a record belongs to that category. Figure 7 shows the correlation heatmap of the one-hot encoded features.

First, the columns are divided into numeric and categorical data. Numeric columns are kept as they are, while categorical columns must be encoded. We then define a new DataFrame, data_cat, that contains only the categorical attributes of the initial DataFrame, and apply pd.get_dummies() to data_cat to perform the one-hot encoding. This function converts each categorical variable into binary variables: for each category, it creates a binary variable equal to 1 if the record belongs to that category and 0 otherwise. To combine the encoded columns with the rest of the data, we concatenated data_cat with the original data along the columns (axis 1). Finally, we removed the original categorical features from the DataFrame, since they have been replaced by the binary columns derived from them. One-hot encoding increases the sparsity of the dataset because each category has its own binary column.

This transformation ensures that the categorical information is preserved in a form the machine learning algorithms can consume. It also avoids introducing ordinal relationships or other numerical connotations into the categorical data that might mislead the models.

Feature scaling is an important pre-processing step that brings the selected numerical features to a common range such as [0, 1] or [-1, 1]. Normalization prevents features with larger scales from dominating the learning process and exerting more influence on the outcome than other features. Figure 8 shows the correlation before and after normalization. Since the numerical features of the UNSW-NB15 dataset are continuous, we adopted Min-Max scaling to rescale them.
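
The encoding and scaling steps described above can be sketched as follows. The names `data_cat` and `pd.get_dummies()` come from the text; the helper functions, the `dtype=int` choice, the toy rows, and the manual Min-Max formula (rather than a library scaler) are assumptions made to keep the example small and self-contained.

```python
import pandas as pd


def one_hot_encode(df: pd.DataFrame, categorical_cols: list) -> pd.DataFrame:
    """Replace categorical columns with one binary column per category."""
    data_cat = df[categorical_cols]                 # categorical attributes only
    data_cat = pd.get_dummies(data_cat, dtype=int)  # 1 if the row is in the category
    encoded = pd.concat([df, data_cat], axis=1)     # merge along the columns
    return encoded.drop(columns=categorical_cols)   # drop the original columns


def min_max_scale(df: pd.DataFrame, numeric_cols: list) -> pd.DataFrame:
    """Rescale each listed column to the range [0, 1]."""
    scaled = df.copy()
    for col in numeric_cols:
        lo, hi = scaled[col].min(), scaled[col].max()
        span = hi - lo
        # A constant column has no spread; map it to 0 instead of dividing by 0.
        scaled[col] = 0.0 if span == 0 else (scaled[col] - lo) / span
    return scaled


# Toy rows standing in for UNSW-NB15 records (values are invented).
toy = pd.DataFrame({
    "proto": ["tcp", "udp", "tcp"],
    "state": ["FIN", "INT", "FIN"],
    "sbytes": [100, 300, 200],
    "label": [0, 1, 0],
})

encoded = one_hot_encode(toy, ["proto", "state"])
scaled = min_max_scale(encoded, ["sbytes"])
```

A common refinement, not stated in the paper, is to compute the minimum and maximum on the training split only and reuse them for the test split, so that no information from the test data influences the scaling.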

Figure 7 shows the correlation heatmap of the one-hot encoded features in the UNSW-NB15 dataset. Correlation coefficients lie between -1.00 and +1.00; values close to either +1 or -1 indicate a strong relationship, while values close to zero indicate a weak or no relationship. The diagonal shows the correlation of each feature with itself, which is always 1. This visualization helps identify duplicate or highly correlated features, which is essential for decisions in the dimensionality reduction process. One-hot encoding keeps categorical data in a form that machine learning algorithms can use while preserving the category information.
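
Reading redundant features off a heatmap can also be done programmatically. The sketch below lists feature pairs whose absolute correlation exceeds a cutoff; the cutoff of 0.9, the function name, and the toy columns are assumptions for illustration and are not taken from the paper.

```python
import numpy as np
import pandas as pd


def highly_correlated_pairs(df: pd.DataFrame, threshold: float = 0.9) -> list:
    """Return (feature_a, feature_b, |r|) for pairs above the threshold."""
    corr = df.corr().abs()
    # Keep the strict upper triangle so each pair is reported once and the
    # diagonal (every feature with itself) is ignored.
    mask = np.triu(np.ones(corr.shape, dtype=bool), k=1)
    pairs = corr.where(mask).stack()
    pairs = pairs[pairs > threshold]
    return [(a, b, float(v)) for (a, b), v in pairs.items()]


# Toy one-hot columns: with only two protocols, the two indicator columns
# are exact complements and therefore perfectly (negatively) correlated.
toy = pd.DataFrame({
    "proto_tcp": [1, 0, 1, 1, 0, 1],
    "proto_udp": [0, 1, 0, 0, 1, 0],
    "state_FIN": [1, 1, 0, 0, 1, 0],
})

redundant = highly_correlated_pairs(toy)
```

In this toy case one of the two protocol columns carries no extra information, which is the kind of duplication a correlation heatmap makes visible.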

Figure 8 compares the correlation matrices of features in the UNSW-NB15 dataset before and after normalization. In the correlations computed on the raw data, the features with larger scales have higher coefficients. The right panel shows the correlations after Min-Max normalization, in which all numerical features are scaled to the unit range, making them easier to compare. Normalization brings the values to a common scale and prevents any single feature from dominating, which improves the reliability of machine learning model training for intrusion detection systems. The heatmaps show how normalization preserves the relationships while removing scale distortions from the dataset.
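
For reference, the standard definition of Min-Max normalization is given below. The symbols are introduced here for illustration and are not taken from the paper: \(x\) is a raw feature value, \(x_{\min}\) and \(x_{\max}\) are the smallest and largest values of that feature, and \(x'\) is the rescaled value.

\[
x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}, \qquad 0 \le x' \le 1
\]

The smallest value of a feature maps to 0 and the largest to 1, so all numerical features end up on the same unit range.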

In this work, the dataset is divided into training and testing sets for the machine learning and deep learning models. The training set is used to train the models, while the testing set is used to assess their performance. We used an 80-20 split, where 80% of the data was used for training and 20% for testing. Class imbalance is a common issue in classification tasks, where some classes have significantly fewer instances than others. To address this problem, we explored oversampling and undersampling to balance the class distribution within the training set. These techniques reduce the bias of the models towards the majority class.
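
The split and the two resampling strategies can be sketched as below. The paper does not say which oversampling or undersampling method was used, so plain random duplication and random removal are assumed here; the function names, the seed, and the toy data are also assumptions. Resampling is applied to the training set only, as the text describes, so the test set keeps its original class distribution.

```python
import pandas as pd


def split_train_test(df, train_frac=0.8, seed=42):
    """Random 80-20 split; rows not drawn for training form the test set."""
    train = df.sample(frac=train_frac, random_state=seed)
    test = df.drop(train.index)
    return train, test


def random_oversample(train, target="label", seed=42):
    """Duplicate minority-class rows at random until classes are equal."""
    counts = train[target].value_counts()
    n_max = counts.max()
    parts = []
    for cls, n in counts.items():
        rows = train[train[target] == cls]
        if n < n_max:
            extra = rows.sample(n=n_max - n, replace=True, random_state=seed)
            rows = pd.concat([rows, extra])
        parts.append(rows)
    # Shuffle so that classes are not grouped together.
    return pd.concat(parts).sample(frac=1.0, random_state=seed).reset_index(drop=True)


def random_undersample(train, target="label", seed=42):
    """Drop majority-class rows at random until classes are equal."""
    n_min = train[target].value_counts().min()
    parts = [g.sample(n=n_min, random_state=seed) for _, g in train.groupby(target)]
    return pd.concat(parts).sample(frac=1.0, random_state=seed).reset_index(drop=True)


# Toy imbalanced data: 80 attack rows and 20 normal rows (values invented).
toy = pd.DataFrame({"sbytes": range(100), "label": [1] * 80 + [0] * 20})

train, test = split_train_test(toy)
over = random_oversample(train)
under = random_undersample(train)
```

Oversampling keeps every original row at the cost of repeated minority samples, while undersampling discards majority rows; which trade-off is preferable depends on how much data is available.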

Feature engineering is a critical step in the data preparation process, where we aim to create, modify, or select features (attributes) that are most relevant to the problem at hand and can enhance the performance of machine learning models. In this section, we explore feature engineering in the context of the UNSW-NB15 dataset. Feature correlation analysis involves assessing the relationships between different attributes (features) in the dataset. Figure 8 shows the correlation before and after normalization. Such relationships are useful for understanding how the features relate to each other and how they may affect the target variable (the ‘label’, which indicates whether an instance is an attack or not).

To examine the interactions among the features and their dependence on the binary labels, we compute a correlation matrix. This matrix measures the strength of the linear relationship between each feature and the target variable, or between two features.
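
Assuming the entries of this matrix are Pearson coefficients, as the feature selection discussion states, each entry for a pair of variables \(x\) and \(y\) observed over \(n\) records is the standard quantity below. The symbols are introduced here for illustration: \(\bar{x}\) and \(\bar{y}\) denote the sample means.

\[
r_{xy} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}
\]

The coefficient ranges from -1 to +1. When \(y\) is the binary label, \(r_{xy}\) indicates how strongly, and in which direction, the feature \(x\) differs on average between normal and attack records.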

Figure 9 depicts the correlation matrix of features in the UNSW-NB15 dataset, highlighting their relationships with the binary class labels (0: normal, 1: attack). The heatmap uses color coding to show the strength and direction of each correlation, ranging from strongly negative to strongly positive. The features that are most strongly correlated with the class labels (e.g., \(ct\_state\_ttl\), dbytes) are meaningful for intrusion detection tasks. This matrix is also useful for choosing the features used to train machine learning models, improving classification by concentrating on the features that have the most effect.

In this section, the specific machine learning algorithms employed for intrusion detection are discussed, together with the mathematical equations used to separate anomalous from normal instances. We cover three commonly used classification models for intrusion detection: SVM, RF, and k-NN.

Support vector machines (SVM)