Abstract

The rapid digitalization of healthcare has created a pressing need for solutions that manage clinical data securely while ensuring patient privacy. This study evaluates the capabilities of GPT-3.5 and GPT-4 models in de-identifying clinical notes and generating synthetic data, using API access and zero-shot prompt engineering to optimize computational efficiency. Results show that GPT-4 significantly outperformed GPT-3.5, achieving a precision of 0.9925, a recall of 0.8318, an F1 score of 0.8973, and an accuracy of 0.9911. These results demonstrate GPT-4’s potential as a powerful tool for safeguarding patient privacy while increasing the availability of clinical data for research. This work sets a benchmark for balancing data utility and privacy in healthcare data management.

Introduction

Healthcare digitalization has brought new horizons for handling clinical notes, and it has significantly changed how medical research is conducted. Clinical notes are comprehensive records that contain detailed patient information such as demographics, medical history, and treatment plans, which are crucial for continuity of care and large-scale health analysis1,2.

The sensitive nature of the data contained within clinical notes requires robust and strict mechanisms to ensure patient privacy and maintain data confidentiality3,4. In the United States, the Health Insurance Portability and Accountability Act (HIPAA) is the standard regulation protecting sensitive patient data. This highlights the need for effective anonymization and de-identification techniques that do not compromise data integrity3,5. HIPAA mandates that healthcare providers and other healthcare entities implement physical, network, and process security measures to safeguard protected health information (PHI)6.

Natural Language Processing (NLP) is a promising solution in the field of digitizing medical research7. It is characterized by its ability to process large amounts of text and extract information from unstructured data sources such as clinical notes8,9. NLP plays a vital role in advancing medical research, as it is used in several applications, including improving diagnostic accuracy10 and predicting patient outcomes11.

Modern applications of artificial intelligence, including the development of Generative Pre-trained Transformer (GPT) models, offer a promising opportunity to enhance the protection of patient privacy and security12. GPT-3.5 and GPT-4 have superior text generation capabilities that can mimic human texts, making them suitable for creating synthetic clinical notes13,14. Ideally, these synthetic notes should be similar to real clinical notes in terms of linguistic quality, clinical utility, and relevance but withhold protected personal information that might compromise patients’ privacy15.

While hallucination is a recognized challenge in open-ended generative tasks with GPT models, it is less relevant to structured tasks such as PHI identification and de-identification. Structured tasks focus on extracting specific information or making classifications based on well-defined inputs, significantly reducing the potential for generating unverified or extraneous information16. Research indicates that hallucinations, instances where AI models generate content not grounded in the input data, are more prevalent in generative tasks than in structured extraction and classification tasks17. This is because generative tasks require models to produce extended text, inherently increasing the likelihood of introducing fabricated details, whereas structured tasks operate within a constrained framework17.

For example, a study analyzing educational survey feedback found that GPT-4 exhibited a 0% hallucination rate in extraction tasks, underscoring the reliability of GPT models in structured, rule-based applications16. By leveraging precise prompt engineering and integrating HIPAA guidelines into the prompts, this study further minimizes the risk of hallucination, ensuring deterministic and reliable outputs.

This study evaluates GPT-3.5 and GPT-4 for the deterministic task of identifying and de-identifying Protected Health Information (PHI) from clinical notes, generating synthetic notes that maintain research utility while adhering to privacy regulations. Data for the study were sourced from the Electronic Health Records (EHR) at King Hussein Cancer Center (KHCC), ensuring a real-world and high-fidelity context. Following ethical guidelines and Institutional Review Board (IRB) approval, the clinical notes were processed using in-context learning (ICL) techniques. Precise and structured prompts were used based on HIPAA identifiers to guide the models in accurately identifying and redacting PHI. The evaluation framework utilized metrics including precision, recall, F1 score, and accuracy to assess the models’ effectiveness and reliability. By integrating manual and automated reviews, the study ensured the generated synthetic notes maintained linguistic quality and clinical utility while meeting privacy standards.

This study addresses critical gaps in current methodologies for de-identifying and generating synthetic clinical notes using NLP and GPT models. Traditional models rely on computationally intensive training methods, requiring significant resources not always available to healthcare institutions. Our approach used advanced GPT models with pre-trained capabilities, reducing the need for extensive computational resources. Unlike previous studies that used potentially biased open-source datasets, this research utilizes actual patient data from the King Hussein Cancer Center (KHCC), ensuring high-fidelity synthetic notes and effective real-world performance.

The significance of this study extends beyond the technical performance of these models: the approach may revolutionize the use of medical data by enhancing data availability without compromising patients’ privacy. This can accelerate innovations in treatment strategies and clinical practices, eventually leading to enhanced patient care and health outcomes18.

Background and related work

Traditional methods for clinical note de-identification

Several traditional anonymization techniques have been applied to de-identify clinical notes, chiefly data masking and pseudonymization19. In data masking, sensitive information is replaced with false but plausible alternatives20. Pseudonymization, on the other hand, replaces identifiers with artificial identifiers known as pseudonyms20,21. These methods are designed so that re-identifying the data is computationally difficult without additional information held in a separate database20. Traditional anonymization methods sometimes struggle to balance clinical data utility against privacy protection. Data masking can distort the clinical content so much that it becomes less useful for research, whereas pseudonymization preserves more data utility but carries a risk of re-identification if the pseudonym key gets into the wrong hands22. These limitations have prompted interest in more sophisticated anonymization methods. Recent advancements include advanced computational techniques, which play an essential role in enhancing anonymization processes23.

Use of synthetic data in healthcare

The increasing demand for large datasets in medical research, together with strict patient privacy requirements, has shifted researchers’ interest toward synthetic data as a feasible solution in healthcare24. Synthetic data, generated through complex computational models, can mimic the statistical properties of real-world data without containing any actual protected patient information. Although synthetic data was initially used for simple tasks such as testing database systems, its application has grown significantly with advances in AI and machine learning25. It now serves multiple purposes, including training machine learning models where real data may be too sensitive to use26, testing new healthcare applications to ensure software performance with realistic data inputs27, and conducting regulatory compliance testing without the need for data governance approvals28. Various methods are used to generate synthetic data, with differential privacy and generative adversarial networks (GANs) being prominent approaches that, respectively, introduce randomness and produce realistic imitations of real data29. However, the utility of synthetic data depends on its fidelity and on the prevention of biases that could alter research outcomes or lead to harmful clinical decisions30. In addition, synthetic data must adhere to complex regulatory frameworks that may not fully accommodate all its nuances31. Thus, while synthetic data holds transformative potential for healthcare, it also presents challenges that require careful consideration to ensure its effective and ethical use in medical research and practice.

Natural language processing (NLP) in medical data anonymization

Machine learning models, specifically those using NLP, have been increasingly applied to automate the detection and removal of protected health information from clinical notes with higher accuracy23. Over recent years, various NLP models have been applied to de-identify clinical notes, showing a broad range of approaches and outcomes that reflect the development of NLP23. Lange et al. developed the NLNDE system for anonymizing Spanish medical notes. This system showed its efficacy during the MEDDOCAN competition, an event dedicated to evaluating the performance of de-identification systems in processing medical notes32. The NLNDE system performed strongly in this competitive setting and highlighted the possibility of adapting NLP tools to different languages and medical documentation styles32. Lee et al. proposed a hybrid model combining rule-based systems with a machine learning system. Their hybrid model was applied to a dataset of psychiatric evaluation notes and showed a de-identification accuracy of 90.74%33.

While the NLNDE system demonstrated efficacy, it struggled to balance de-identification against the retention of clinical utility32. The system’s reliance on predefined rules can limit its adaptability to varied clinical contexts and document structures32. Similarly, the hybrid model proposed by Lee et al., combining rule-based systems with machine learning, also faces challenges. Although it showed a high de-identification accuracy, integrating rule-based and machine-learning approaches can lead to increased complexity and maintenance issues33. Additionally, the model’s performance may degrade when exposed to new types of clinical data that were not represented in the training set33.

Similarly, clinical notes from psychiatric evaluation records were de-identified using a Conditional Random Field (CRF) model and long short-term memory (LSTM) networks34. This work was part of the Clinical NLP-focused CEGS N-GRID 2016 Shared Tasks34. The CRF-based model was trained on manually extracted features. The LSTM-based model, on the other hand, used a character-level bi-directional LSTM network for token representation, combined with a decoding layer responsible for classifying tags and finally detecting the protected health information (PHI) terms34. The study reported that the F1 score of the LSTM-based model was 0.8986, which was better than that of the CRF model. This superior performance shows the capability of LSTM networks in identifying PHI in clinical text, underscoring the promising utility of advanced NLP techniques for enhancing the privacy and security of sensitive medical data34.

Meaney et al. conducted a comparative analysis of the efficacy of transformer models for de-identifying PHI within clinical notes, using the i2b2/UTHealth 2014 corpus as a baseline35. Their study considered different transformer architectures, such as BERT-base, BERT-large, RoBERTa-base, RoBERTa-large, ALBERT-base, and ALBERT-xxlarge, with different hyperparameter settings, batch sizes, training epochs, learning rates, and weight decay35. The performance of the models was compared using the standard metrics of accuracy, precision, recall, and F1 score. RoBERTa-large models outperformed the other models, achieving an accuracy of over 99% and a precision/recall rate of 96.7% on the test subset35.

However, despite their high performance, these models have several limitations. First, the large size of models such as ROBERTA-large and ALBERT-xxlarge requires significant computational resources for training and fine-tuning, which may not be feasible for all institutions35. Furthermore, while transformers generally perform well on the overall de-identification task, their accuracy can vary significantly across different types of PHI35. For instance, models tend to struggle with identifying certain entity classes such as professions, organizations, ages, and specific locations, which can lead to incomplete de-identification35. Additionally, there is a concern with overfitting, as training set performance often exceeds that of validation/test sets, suggesting that the models might not generalize well to unseen data35. Lastly, the need for extensive hyper-parameter tuning to achieve optimal performance can be time-consuming and requires expertise in machine learning, which may not always be available in clinical settings.

Generative pretrained model series (GPT)

The GPT series, developed by OpenAI, represents a significant advancement in the NLP field. The first in the series, GPT-1, was released in 2018 with 117 million parameters, setting a new standard for unsupervised learning from diverse internet text. However, its smaller parameter count limited its performance on complex language tasks36. The 2019 version, GPT-2, had 1.5 billion parameters and was better at producing coherent and contextually relevant text37. The 2020 version, GPT-3, had 175 billion parameters and showed exceptional text generation and language understanding, performing tasks such as translation38, summarization14, and question-answering without task-specific tuning39. However, these advantages come with very high computing and energy requirements, which could impede further scaling and raise environmental concerns and barriers to inclusion for small organizations40. GPT-4, which was introduced in 2023, has further advanced the capabilities in terms of text generation and language understanding with even more parameters and refined tuning techniques13.

In terms of accessibility, OpenAI enhanced the GPT series by offering APIs and developing user-friendly interfaces that allow developers and non-experts to harness the power of GPT models13. The API for GPT-3 and GPT-4, for instance, provides easy integration with existing software, enabling applications in customer service, content generation, and more without extensive machine-learning expertise41.

Applications of GPT in healthcare

Generative large language models (LLMs) like GPT-3.5 and GPT-4 are transforming medical applications by improving the abilities of healthcare professionals (HCPs). These models facilitate the creation of detailed and accurate medical notes for patients, including symptoms, medical notes, and results from laboratory and imaging tests42,43. GPTs alleviate the burden of manual documentation from HCPs, thereby enhancing healthcare quality and increasing the efficiency of healthcare services44. Moreover, generative LLMs assist in medical decision-making by providing personalized drug recommendations and aiding in the selection of the best diagnostic tests, which in turn supports informed clinical decisions45. In addition to supporting clinical practices, GPTs contribute significantly to patient-centered care by delivering reliable medical information sourced from current literature and guidelines, ensuring that patients receive care that is not only effective but also aligned with the latest medical insights46.

Given the potential of GPT in medical applications, de-identification of clinical notes has become pivotal. The GPT models, particularly GPT-4, have proven highly effective in this area47. The “DeID-GPT” framework, using GPT-4, automates the removal of PHI from open-source medical texts. GPT-4’s ability to generate synthetic clinical notes further demonstrates its extensive utility in health informatics, providing a safe source for training medical AI and conducting research without exposing real patient data47.

These developments mark a significant advance in the integration of AI technologies in healthcare, suggesting a future where GPT models not only streamline clinical workflows but also enhance the privacy and security of patient information. This integration has the potential to transform healthcare practices, improve patient outcomes, and protect sensitive information.

Research contribution

The review of current methodologies employing NLP and GPT models for de-identifying and generating synthetic clinical notes highlights significant advancements but also reveals critical gaps that this study aims to address. Firstly, most models depend on computationally intensive training methods that require extensive resources, which may not be feasible for all healthcare institutions. Our study proposes the use of advanced GPT models that offer a more efficient approach by utilizing pre-trained capabilities that significantly reduce the need for extensive computational resources.

Additionally, most of the previous research has used open-source or publicly available datasets, which, while beneficial, can introduce bias due to the non-representative nature of these datasets. This study stands out because it uses actual patient data from the King Hussein Cancer Center (KHCC), which will provide a richer, more accurate, and contextually relevant dataset for training and evaluation. This approach not only ensures the generation of high-fidelity synthetic notes but also enhances the models’ ability to perform effectively in a real-world clinical setting.

Moreover, the comparison between GPT-3.5 and GPT-4 highlights the advancements in handling complex de-identification tasks, with GPT-4 achieving superior accuracy, precision, and F1 scores, underscoring its potential for scalable and ethical applications in healthcare. By using zero-shot learning, GPT-4 eliminates the need for fine-tuning, making it accessible to healthcare institutions with limited resources.

By addressing these gaps, our research aims to contribute a practical perspective to the current use of AI in healthcare, particularly in the synthesis and protection of clinical data, ensuring that advancements in AI technology translate into tangible benefits for clinical practice and patient care. This study will not only push the boundaries of what AI can achieve in terms of data privacy and utility but also set a standard for the ethical use of real patient data in AI research.

Methodology

Data was collected from the Electronic Health Records (EHR) at the King Hussein Cancer Center following the ethical guidelines and with approval from the Institutional Review Board (IRB). Due to the retrospective nature of the study, the King Hussein Cancer Center Institutional Review Board waived the need to obtain informed consent under IRB number 24 KHCC 195. All methods were performed in accordance with the relevant guidelines and regulations, including those outlined by the Declaration of Helsinki and local regulatory standards.

Dataset description

The dataset for this study comprised 100 discharge summaries sourced from the VISTA-based Electronic Health Records (EHR) of the King Hussein Cancer Center (KHCC). VISTA, a robust and widely used EHR system, supports the management of patient records and ensures compliance with international privacy and security standards. Discharge summaries were chosen as they are critical documents summarizing a patient’s hospital stay, treatment outcomes, and follow-up instructions. These documents contain a wide range of Protected Health Information (PHI), including patient names, dates, addresses, phone numbers, and medical record numbers, as defined by HIPAA guidelines. The dataset was manually annotated by domain experts to mark all instances of PHI. The annotation process included 2 rounds of verification to ensure high accuracy and consistency. This annotated dataset served as the ground truth for evaluating the models’ performance.

Experiment setting

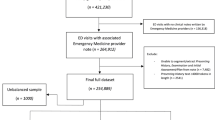

The core of our methodology is the use of OpenAI’s GPT models (GPT-3.5 and GPT-4) through API access. This method relies on In-Context Learning (ICL), in which LLMs perform novel tasks from natural language prompts, thereby optimizing computational efficiency. A common way of tailoring an LLM to a particular domain is to train an existing model on a training dataset, updating the model’s parameters through backpropagation. However, several studies have investigated the efficacy of using ICL instead, which is advantageous for several reasons. ICL allows the model to address new tasks without fine-tuning, thereby requiring less computational resources, time, and effort. This study employed a zero-shot ICL approach, where the models were tasked with identifying and redacting Protected Health Information (PHI) directly, without prior task-specific training or examples provided during runtime. The study framework is illustrated in Fig. 1.

In this study, the two latest versions of the GPT series, GPT-3.5 and GPT-4, were used through API access to de-identify the clinical notes and to generate synthetic clinical notes that mimic the real ones while preserving patients’ privacy and confidentiality. Figure 2 represents the work pipeline of this study.

Implementation of in-context learning (ICL) and prompt engineering

The introduction of ICL techniques in LLMs like GPT-3 and GPT-4 is a significant step forward in applying AI to real-world tasks, especially when there is limited labeled data. ICL methods, including zero-shot and few-shot learning, allow these models to adapt quickly to new tasks by using their extensive pretraining on diverse datasets. In zero-shot learning, the model handles tasks without prior specific examples, only based on its foundational training, while few-shot learning uses a few examples to help the model understand a new task48,49.
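The difference between zero-shot and few-shot prompting can be sketched in a few lines. The example below is illustrative only: the function name `build_messages`, the instruction text, and the sample notes are hypothetical and are not taken from the study. It assumes a chat-style message format in which worked examples are supplied as earlier user/assistant turns.

```python
from typing import Dict, List, Sequence, Tuple


def build_messages(
    instruction: str,
    note: str,
    examples: Sequence[Tuple[str, str]] = (),
) -> List[Dict[str, str]]:
    """Build a chat-style prompt; with no examples it is zero-shot."""
    messages = [{"role": "system", "content": instruction}]
    # Few-shot: each (input, expected output) pair becomes a prior turn.
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The note to process always comes last.
    messages.append({"role": "user", "content": note})
    return messages


# Hypothetical instruction and notes, for illustration only.
instruction = "Redact all protected health information from the note."
note = "Seen by Dr. Example on 01/07/2023."

zero_shot = build_messages(instruction, note)
few_shot = build_messages(
    instruction,
    note,
    examples=[("Patient Jane Roe, phone 555-0100.",
               "Patient [redacted], phone [redacted].")],
)
```

In the zero-shot case the model sees only the instruction and the note; in the few-shot case the same instruction is followed by demonstration pairs before the note.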

Prompt engineering is a key element in implementing ICL, especially in areas where high interpretability is needed, like the medical field. Manually created prompts, which are clearer and more interpretable than automatically generated ones, are crucial. Recent studies have compared prompt patterns with well-known software patterns, which provide reusable solutions for common problems in generating outputs and interacting with LLMs. These insights into effective prompt designs are vital for improving LLM outputs, with 16 distinct prompt patterns identified as particularly useful50. LLMs are often described as “black boxes”; thus, it is challenging to understand the direct effect of inputs on the outputs. Prompt optimization therefore becomes the process of designing, refining, and editing prompts to minimize the gap between human input and the model’s responses.

This is necessary in tasks like clinical note de-identification, where even one well-structured prompt can greatly affect the model’s performance in real-world applications51. Through structured prompt engineering and optimization, LLMs can be used more effectively to achieve desired outcomes with minimal data, reducing the need for extensive fine-tuning and the risk of overfitting51. Figure 2 presents a visual example of the prompt used in this study, serving as an explanation for the concept of prompt engineering. In this study, zero-shot prompts were carefully designed to provide clear and precise instructions to the language model, ensuring strict adherence to HIPAA guidelines. The prompts guided the model in identifying and redacting sensitive information while retaining the clinical utility of the data. They explicitly instructed the removal of all HIPAA-protected identifiers, such as names, dates, addresses, and phone numbers, while preserving research-relevant information. Specific rules for handling dates included redacting only the day and month while retaining the year, as in de-identifying “01/07/2023” to “[redacted]/2023,” which maintained the temporal context essential for research. Additionally, the prompts addressed the handling of patient ages, ensuring anonymization or redaction of age ranges for individuals aged 90 or older to comply with HIPAA requirements. Clear guidelines were also provided for identifying and redacting sensitive numerical data, such as Social Security numbers or long numerical sequences, to enhance the effectiveness of de-identification.
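To make the target output of these rules concrete, the sketch below reproduces the date rule and the numeric-identifier rule with regular expressions. This is not how the study performed de-identification (the study relied on prompts to the GPT models); it only illustrates what a compliant output looks like. The function name, the assumed `DD/MM/YYYY`-style date layout, and the seven-digit threshold for a "long numerical sequence" are assumptions made for this example.

```python
import re

# Day/month/year dates written with slashes; only the year is kept.
DATE_PATTERN = re.compile(r"\b\d{1,2}/\d{1,2}/(\d{4})\b")
# US Social Security number layout (assumed formatting: 123-45-6789).
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# "Long" digit runs; the threshold of 7 digits is an assumption.
LONG_NUMBER_PATTERN = re.compile(r"\b\d{7,}\b")


def apply_redaction_rules(text: str) -> str:
    """Apply the date and numeric-identifier rules to a piece of text."""
    text = DATE_PATTERN.sub(r"[redacted]/\1", text)
    text = SSN_PATTERN.sub("[redacted]", text)
    text = LONG_NUMBER_PATTERN.sub("[redacted]", text)
    return text


print(apply_redaction_rules("01/07/2023"))  # prints: [redacted]/2023
```

Rule-based output of this kind can also serve as a quick sanity check on model output, for example by flagging any full date that survives in a de-identified note.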

Integration of HIPAA identifiers guidelines in prompts

A critical aspect of our methodology is the integration of HIPAA identifiers into the model prompts to ensure compliance with privacy regulations as shown in Fig. 3. This involves the model being specifically prompted to identify and redact all HIPAA-specified identifiers from the clinical notes to protect patient privacy effectively5.

To ensure compliance with HIPAA regulations, the prompts incorporated all 18 HIPAA identifiers (as detailed in Table 1) explicitly. For example, the model was instructed with the following guidance:

“You are a professional individual in de-identifying medical records. Through the process, you make sure to retain important information that can be used for research. Therefore, you adhere to the succeeding guidelines by the HIPAA “Safe Harbor” method and nothing more:

The following identifiers of the individual, or of relatives, employers, or household members of the individual, are removed: (HIPAA identifiers)”.

This approach ensured that the model followed rigorous de-identification practices while preserving the essential clinical details necessary for secondary research.
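A minimal sketch of how such a prompt might be sent to a chat model is given below. It is not the authors’ code. The quoted guidance is used as the system message; the identifier list is an illustrative subset built only from identifiers named in this text, not the full list in Table 1. The call shape `client.chat.completions.create(...)` follows the official OpenAI Python client (version 1 or later); the model name and `temperature=0` are assumptions.

```python
SYSTEM_PROMPT_TEMPLATE = (
    "You are a professional individual in de-identifying medical records. "
    "Through the process, you make sure to retain important information that "
    "can be used for research. Therefore, you adhere to the succeeding "
    'guidelines by the HIPAA "Safe Harbor" method and nothing more:\n\n'
    "The following identifiers of the individual, or of relatives, employers, "
    "or household members of the individual, are removed: {identifiers}"
)

# Illustrative subset only; the study's prompt listed all 18 HIPAA identifiers.
EXAMPLE_IDENTIFIERS = [
    "names",
    "dates",
    "addresses",
    "phone numbers",
    "medical record numbers",
    "Social Security numbers",
]


def build_system_prompt(identifiers):
    """Fill the identifier list into the quoted guidance."""
    return SYSTEM_PROMPT_TEMPLATE.format(identifiers="; ".join(identifiers))


def deidentify(client, note, identifiers, model="gpt-4"):
    """Send one note with the zero-shot prompt and return the model's text.

    `client` is expected to behave like `openai.OpenAI()`; the model name
    and temperature=0 are assumptions, not settings reported by the study.
    """
    response = client.chat.completions.create(
        model=model,
        temperature=0,
        messages=[
            {"role": "system", "content": build_system_prompt(identifiers)},
            {"role": "user", "content": note},
        ],
    )
    return response.choices[0].message.content
```

With the OpenAI package installed and an API key configured, a call might look like `deidentify(OpenAI(), note_text, EXAMPLE_IDENTIFIERS)`. Passing the client in as an argument keeps the function easy to test with a stub.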

Evaluation metrics

The evaluation of our de-identification methods is performed using detailed metrics that measure the precision, recall, F1 score, and accuracy of the models used. These metrics collectively provide a comprehensive understanding of the model’s effectiveness in accurately and reliably processing sensitive data.

Precision measures the accuracy of the model in identifying true positives among all the instances it labels as sensitive. High precision indicates that the model has a low rate of false positives. High precision is crucial in de-identification tasks to ensure that non-sensitive data is not incorrectly masked, which could potentially lead to a loss of useful information in clinical notes.

Recall (or Sensitivity) measures the model’s ability to identify all actual sensitive instances in the dataset out of all instances that were supposed to be identified. High recall is essential to ensure that all sensitive information is identified and modified to prevent any potential data breaches that could compromise patient privacy.

The F1 Score is the harmonic mean of precision and recall. It provides a single metric that balances the two, which is especially useful when the class distribution is uneven and when both false positives and false negatives carry significant consequences.

Accuracy is the proportion of all entities that the model handles correctly, that is, sensitive entities that are identified and altered together with non-sensitive entities that are left unchanged, relative to all entities evaluated. Because accuracy directly affects the reliability, trustworthiness, and legality of the processed data, it is a key requirement in medical data de-identification. High accuracy supports the safe use of de-identified data for research or other secondary purposes without infringing on patients’ privacy. It also helps ensure that the model conforms to legal standards such as HIPAA, which is very important for medical and research ethics.
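The formulas for these four metrics do not appear in this text. For reference, their standard definitions in terms of the counts defined below are as follows; that the study computed them in exactly this form is an assumption.

```latex
\begin{align}
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall} &= \frac{TP}{TP + FN} \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \\
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}
\end{align}
```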

Where:

- True Positives (TP): These are instances where the model successfully identifies and de-identifies sensitive data entities in the text. True positives serve as a crucial indicator of a model’s effectiveness in accurately recognizing and processing data that must remain confidential under HIPAA guidelines.
- True Negatives (TN): These instances occur when the model appropriately identifies and does not alter non-sensitive data. True negatives are essential as they demonstrate the model’s capability to distinguish between sensitive and non-sensitive information, ensuring that only PHI is altered, thereby maintaining the integrity of non-sensitive data.
- False Positives (FP): These arise when the model mistakenly identifies non-sensitive data as sensitive, leading to unnecessary alterations. False positives are particularly detrimental as they can degrade the readability and utility of the de-identified notes, potentially resulting in the loss of valuable information that could have been utilized for research or other purposes.
- False Negatives (FN): These occur when the model fails to identify and de-identify data that is sensitive. False negatives are especially severe as they represent a direct failure to maintain patient confidentiality and to comply with privacy laws, posing a significant risk to privacy protection.
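The four outcome types above can be counted by comparing model output with the expert annotations token by token. The sketch below assumes a simple representation in which each token carries a boolean flag (annotated as PHI or not, redacted by the model or not). The function names and the toy data are hypothetical, and the study’s actual unit of comparison is not specified here.

```python
from typing import Dict, Sequence


def confusion_counts(gold: Sequence[bool], predicted: Sequence[bool]) -> Dict[str, int]:
    """Count TP, TN, FP, FN from per-token PHI flags."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for is_phi, was_redacted in zip(gold, predicted):
        if is_phi and was_redacted:
            counts["TP"] += 1   # PHI correctly redacted
        elif is_phi:
            counts["FN"] += 1   # PHI missed
        elif was_redacted:
            counts["FP"] += 1   # non-PHI redacted unnecessarily
        else:
            counts["TN"] += 1   # non-PHI left unchanged
    return counts


def compute_metrics(counts: Dict[str, int]) -> Dict[str, float]:
    """Standard precision, recall, F1, and accuracy from the four counts."""
    tp, tn, fp, fn = counts["TP"], counts["TN"], counts["FP"], counts["FN"]
    total = tp + tn + fp + fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    accuracy = (tp + tn) / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Toy example: 8 tokens, 3 of which are annotated as PHI.
gold = [True, True, False, False, False, True, False, False]
predicted = [True, False, False, True, False, True, False, False]
counts = confusion_counts(gold, predicted)
scores = compute_metrics(counts)
```

In this toy example the model redacts two of the three PHI tokens and one non-PHI token, giving TP = 2, FN = 1, FP = 1, and TN = 4.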

Through rigorous assessments and the refinement of de-identification processes, these errors can be minimized, thereby enhancing the security and practical usability of the synthetic clinical notes produced. This comprehensive approach to evaluating de-identification performance is instrumental not only in optimizing the model but also in boosting stakeholder confidence in the integrity and reliability of the anonymization process. This methodological rigor ensures that advancements in AI technology translate into practical tools that uphold high standards of ethical medical practice and research compliance.

Results

Model performance in de-identifying clinical notes was evaluated using the metrics described above: recall, precision, F1 score, and accuracy. Experts first manually annotated the clinical notes to identify protected health information (PHI), and these annotations were used as ground truth. The models were then evaluated against this annotated dataset. Metrics were calculated by comparing the model’s de-identified outputs to the ground truth annotations, measuring the proportion of true positives and true negatives correctly identified by the model. The results in Fig. 4 show that these models greatly outperform traditional methods in dealing with such sensitive medical data while maintaining privacy standards as required by HIPAA.

Recall performance

Recall is the ability of the model to identify and correctly de-identify all relevant instances of sensitive information in the clinical notes. The achieved recall values for GPT-3.5 and GPT-4 were 0.6690 and 0.8318, respectively, representing moderate and high capabilities of recall for sensitive entities. Notably, GPT-4 exhibited a significantly higher value of 0.8318 for recall, demonstrating its enhanced sensitivity in the accurate identification and redaction of Protected Health Information (PHI) elements.

Precision analysis

Precision is a measure of how accurately the data labeled as PHI are identified by the model, thus reflecting the likelihood that the tagged data were indeed PHI. For GPT-3.5, the precision was recorded at 0.3448, which is considerably low and indicates significant challenges in distinguishing PHI from non-PHI words. In contrast, GPT-4 achieved a precision of 0.9925, suggesting that nearly all words it identified as PHI were correctly classified, greatly reducing the risk of incorrect data modification.

F1 score

The F1 score, which is the harmonic mean of precision and recall, was calculated to provide a comprehensive view of each model’s overall performance. GPT-3.5 achieved an F1 score of 0.3905, while GPT-4 excelled with an F1 score of 0.8973. This high score for GPT-4 highlights its effectiveness in balancing recall and precision, confirming its robust capability in the de-identification task.

Accuracy assessment

Overall accuracy, defined as the proportion of total true results within the data, was utilized to assess the general effectiveness of the models across all test cases. GPT-3.5 achieved an accuracy rate of 0.7867, indicating its acceptable effectiveness in managing PHI data. In contrast, GPT-4 demonstrated exceptional performance, achieving an accuracy rate of 0.9911. This shows GPT-4’s superior ability to operate within the stringent constraints of medical data privacy and to effectively handle both sensitive and non-sensitive data.
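A model can score reasonably on accuracy while scoring poorly on precision when most tokens in a note are non-PHI, because true negatives dominate the accuracy numerator. The sketch below illustrates this with invented counts. The function name and the numbers are hypothetical, and the class imbalance is an assumption about clinical text rather than a figure reported in this study.

```python
def deid_metrics(tp, tn, fp, fn):
    """Compute the four evaluation metrics from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# Hypothetical counts: 1,000 tokens, of which 100 are PHI.
# The model over-flags: 130 non-PHI tokens are wrongly redacted.
m = deid_metrics(tp=70, tn=770, fp=130, fn=30)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

With these counts, precision is 0.35 and recall is 0.70, yet accuracy is 0.84, which is why accuracy alone is a weak indicator of de-identification quality.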

Comparative analysis and implications

The comparison of GPT-3.5 to GPT-4 reveals substantial improvements in the model across generations. GPT-4’s improved performance across all evaluated metrics emphasizes its potential as a reliable tool for the de-identification of clinical notes, confirming its appropriateness for real-world applications where accuracy and adherence to strict privacy regulations are vital.

The high precision and accuracy of GPT-4 can significantly reduce the burden on healthcare providers by automating the data de-identification process, without compromising the utility of the data. This capability is crucial for enhancing the availability of clinical data for research purposes, which can lead to better patient outcomes and more informed public health policies.

This study exemplifies the successful integration of advanced AI techniques into practical healthcare applications, demonstrating the transformative potential of GPT models to enhance data privacy and utility in medical research.

Discussion

The findings of this research demonstrate the capabilities and effectiveness of GPT-3.5 and GPT-4 in anonymizing notes and creating synthetic clinical notes. Prior studies have extensively discussed the use of machine learning models such as Conditional Random Fields (CRF) and Long Short-Term Memory (LSTM) networks in anonymizing clinical notes. For instance, Jiang et al. achieved an F1 score of 0.8986 using LSTM models, highlighting their efficiency in handling patterns in text34. Nonetheless, this research shows that GPT-4, with an F1 score of 0.89732, achieves comparable effectiveness with less data preprocessing and fewer manual feature engineering requirements. This highlights GPT-4’s ability to streamline the de-identification process while maintaining performance.

Rule-based and hybrid approaches, such as the NLNDE system and Lee et al.’s hybrid model, have demonstrated efficacy in certain structured de-identification tasks32,33. However, these models are hindered by their rigidity and complexity, as they rely on predefined rules and manual integration. In contrast, our approach leverages GPT-4’s in-context learning capabilities, which dynamically adapt to varied datasets without the need for extensive manual feature engineering or retraining. This adaptability is reflected in GPT-4’s high F1 score and precision, significantly reducing false positives compared to traditional models.

Precision is where GPT-4 performs most strongly (0.99249), surpassing earlier models and methods significantly. This indicates a decrease in false positives, which is crucial for maintaining the usefulness of anonymized notes for secondary purposes. High precision ensures that non-sensitive information remains largely unaltered, thereby preserving the richness of the data for investigations and analyses.

These findings align with the limitations of transformer-based models, such as RoBERTa-large, which, while achieving high performance, require significant computational resources and hyperparameter tuning35. GPT-4’s pre-trained capabilities eliminate these resource-intensive requirements, making it more accessible to resource-limited institutions.

The results also highlight areas for improvement, particularly in recall performance (0.8318 for GPT-4). Enhancing recall will be a focus of future work, where we plan to explore advanced prompt engineering techniques, incorporate task-specific instructions, and evaluate the impact of hybrid approaches that combine GPT models with rule-based systems. These strategies aim to better capture sensitive information while maintaining the utility of anonymized notes.
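As a rough illustration of the hybrid direction mentioned above, a rule-based pass can be layered on top of model output so that pattern-like identifiers missed by the model are still redacted. This is only a sketch under stated assumptions: the two regular expressions, the span format (character offsets), the mask string, and the example note are all hypothetical, and the model's spans are simulated rather than obtained from an API call.

```python
import re

# Hypothetical patterns for illustration; real coverage would need to be far broader.
RULES = {
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}


def rule_spans(text):
    """Return (start, end) character spans matched by any rule."""
    spans = []
    for pattern in RULES.values():
        spans.extend(match.span() for match in pattern.finditer(text))
    return spans


def merge_spans(spans):
    """Union overlapping or touching spans so nothing is redacted twice."""
    merged = []
    for start, end in sorted(spans):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def redact(text, spans, mask="[REDACTED]"):
    """Replace every merged span with the mask string."""
    parts, cursor = [], 0
    for start, end in merge_spans(spans):
        parts.append(text[cursor:start])
        parts.append(mask)
        cursor = end
    parts.append(text[cursor:])
    return "".join(parts)


note = "Seen on 03/05/2023; call 555-123-4567. Dr. Haddad reviewed."
# Stand-in for spans returned by the language model: here it found only the name.
name_start = note.index("Haddad")
model_spans = [(name_start, name_start + len("Haddad"))]

print(redact(note, model_spans + rule_spans(note)))
# Seen on [REDACTED]; call [REDACTED]. Dr. [REDACTED] reviewed.
```

Taking the union of both sources can only add redactions, so this design trades some precision for recall, which matches the stated goal of capturing more sensitive information.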

In terms of accuracy, GPT-4 (0.99113) significantly outperforms the models from earlier studies and GPT-3.5 (0.78666), indicating advancements in model architecture and training methodologies. This higher accuracy indicates that the model handles both sensitive and non-sensitive data reliably, complying with privacy regulations like HIPAA while maintaining data integrity. The use of GPT models in this research signals a shift towards incorporating cutting-edge AI technologies in healthcare. Integrating these models into healthcare processes enhances information privacy and security and improves data accessibility for healthcare professionals. In producing high-quality synthetic notes, GPT models effectively address the longstanding challenge of balancing data utility and privacy, a challenge traditionally highlighted by methods such as data masking and pseudonymization19,20. This advancement highlights the potential of generative models to maintain the confidentiality of sensitive information while ensuring that data remains functional and useful for various applications.

By utilizing real-world EHR data from KHCC, this study also addresses the limitations of open-source datasets, which often fail to capture the complexity and diversity of clinical notes in real-world contexts29. This approach ensures that our findings are robust and applicable to real-world clinical environments, providing a foundation for future studies to explore generalizability across multiple institutions.

While the results are promising, they bring to light significant ethical considerations related to using synthetic data and AI in healthcare. A significant concern is the potential for synthetic data to replicate or amplify biases present in the original dataset. For example, if real-world data contains demographic imbalances, such as underrepresentation of specific age groups, ethnicities, or socio-economic statuses, synthetic data could inadvertently reflect these biases, resulting in skewed research outcomes or inequitable clinical practices. To mitigate these risks, strategies such as bias audits, ensuring dataset diversity, and conducting fairness evaluations across demographic groups are crucial. Ethical oversight is also essential, requiring transparency in synthetic data generation processes, including clear communication of methodologies and assumptions, to build trust and ensure accountability. Stakeholders must be educated on the limitations of synthetic data, such as its inability to fully replicate real-world complexities. Rigorous validation of synthetic data is necessary to confirm its clinical utility and reliability, as demonstrated in this study, where GPT-4-generated synthetic data was evaluated using metrics such as precision, recall, and F1 score to ensure accuracy and adherence to privacy standards. Addressing these ethical considerations allows synthetic data to be responsibly integrated into healthcare, advancing medical research and data sharing without compromising patient safety or equity.
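One simple form of the fairness evaluation mentioned above is to compute de-identification recall separately for each demographic group and inspect the gap between groups. The sketch below assumes per-note true-positive and false-negative counts tagged with a group label. The group names, counts, and function name are hypothetical and do not come from the study's data.

```python
from collections import defaultdict


def recall_by_group(records):
    """records: iterable of (group, tp, fn) tuples, one per note."""
    totals = defaultdict(lambda: [0, 0])
    for group, tp, fn in records:
        totals[group][0] += tp
        totals[group][1] += fn
    # Groups with no PHI at all are reported as None rather than 0.
    return {
        group: (tp / (tp + fn) if (tp + fn) else None)
        for group, (tp, fn) in totals.items()
    }


# Hypothetical per-note counts, grouped by patient age band.
records = [("18-40", 45, 5), ("18-40", 40, 10), ("65+", 30, 20)]
recalls = recall_by_group(records)
gap = max(recalls.values()) - min(recalls.values())
print(recalls, f"recall gap: {gap:.2f}")
```

A large gap would suggest that PHI is missed more often for some groups than others, which is the kind of disparity a bias audit is meant to surface.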

Moreover, this research enhances the broader understanding of AI’s role in healthcare and sets a benchmark for future studies and implementations. By utilizing prompt engineering and in-context learning, this study demonstrates how GPT-4 can address scalability challenges, providing a universally adaptable framework for de-identification tasks. This scalability ensures compliance with de-identification standards like HIPAA across diverse healthcare settings.

While this study focuses on clinical notes from a single institution (KHCC), we believe this provides a robust testing ground due to the high quality and annotation consistency of the data. Future work will involve extending this research to datasets from other institutions to validate its generalizability and adaptability in varying clinical environments. By addressing potential contextual differences, we aim to enhance the applicability of our findings to global healthcare systems. These efforts will ensure that AI-driven de-identification solutions maintain high performance and relevance across diverse contexts. Overall, the integration of GPT models represents a significant advancement in healthcare technology, offering scalable, efficient, and privacy-compliant solutions for de-identification tasks. By addressing these challenges and opportunities, we aim to set a benchmark for ethical and practical AI implementations in medical data management.

Conclusion

This research shows that GPT-4 de-identifies clinical notes significantly better than GPT-3.5 and, as a result, produces higher-quality synthetic clinical records, as measured by precision, recall, F1 score, and accuracy. These improved capabilities provide a basis for the safe handling of sensitive medical data without loss of data utility, which is a core requirement in research and health analytics. Our approach reduces the need for extensive computational resources, making complicated de-identification techniques more accessible to healthcare institutions. The use of real patient data from the King Hussein Cancer Center ensures that our findings apply to real-world clinical settings, addressing limitations associated with non-representative datasets. Automating the de-identification process with GPT-4 can significantly reduce the administrative burden on healthcare providers, enhancing patient care and the availability of clinical data for research. However, the integration of AI in healthcare must consider ethical implications to avoid risks like inaccurate clinical decisions or biased research outcomes.

Overall, the implementation of GPT models for de-identifying clinical notes represents a significant advancement in healthcare technology. Future research should explore the long-term impacts of these technologies, their integration into clinical workflows, and their effectiveness in maintaining data security without compromising utility. This study sets a benchmark for the ethical and practical use of AI in medical data management, emphasizing the balance between patient privacy and data accessibility.

Data availability

The data utilized in this study are confidential in nature and cannot be shared due to privacy and confidentiality agreements but are available from the corresponding author upon reasonable request.

References

Rao, S. R. et al. Electronic health records in small physician practices: Availability, use, and perceived benefits. J. Am. Med. Inform. Assoc. 18(3). https://doi.org/10.1136/amiajnl-2010-000010 (2011).

Segal, M., Giuffrida, P., Possanza, L. & Bucciferro, D. The critical role of health information technology in the safe integration of behavioral health and primary care to improve patient care. J. Behav. Health Serv. Res. 49(2). https://doi.org/10.1007/s11414-021-09774-0 (2022).

Paul, M., Maglaras, L., Ferrag, M. A. & Almomani, I. Digitization of healthcare sector: A study on privacy and security concerns. https://doi.org/10.1016/j.icte.2023.02.007 (2023).

Hoffman, S. & Podgurski, A. Balancing privacy, autonomy, and scientific needs in electronic health records research. SMU Law Rev. 65, 1 (2012).

Moore, W. & Frye, S. Review of HIPAA, Part 1: History, protected health information, and privacy and security rules. J. Nucl. Med. Technol. 47(4). https://doi.org/10.2967/JNMT.119.227819 (2019).

Office for Civil Rights. Health Insurance Portability and Accountability Act (HIPAA). U.S. Department of Health & Human Services (n.d.).

Juhn, Y. & Liu, H. Artificial intelligence approaches using natural language processing to advance EHR-based clinical research. J. Allergy Clin. Immunol. 145(2). https://doi.org/10.1016/j.jaci.2019.12.897 (2020).

Liddy, E. D. Natural Language Processing. https://surface.syr.edu/istpub (2001).

Adamson, B. et al. Approach to machine learning for extraction of real-world data variables from electronic health records. Front. Pharmacol. 14 https://doi.org/10.3389/fphar.2023.1180962 (2023).

Krusche, M., Callhoff, J., Knitza, J. & Ruffer, N. Diagnostic accuracy of a large language model in rheumatology: Comparison of physician and ChatGPT-4. Rheumatol. Int. 44(2). https://doi.org/10.1007/s00296-023-05464-6 (2024).

Kehl, K. L. et al. Natural language processing to ascertain cancer outcomes from medical oncologist notes. JCO Clin. Cancer Inf. 4 https://doi.org/10.1200/cci.20.00020 (2020).

Sai, S. et al. Generative AI for transformative healthcare: A comprehensive study of emerging models, applications, case studies, and limitations. IEEE Access 12 https://doi.org/10.1109/ACCESS.2024.3367715 (2024).

OpenAI GPT-4 Technical Report.

Goyal, T., Li, J. J. & Durrett, G. News summarization and evaluation in the era of GPT-3. http://arxiv.org/abs/2209.12356 (2022).

Li, J. et al. Are synthetic clinical notes useful for real natural language processing tasks: A case study on clinical entity recognition. J. Am. Med. Inform. Assoc. 28(10). https://doi.org/10.1093/jamia/ocab112 (2021).

Parker, M. J., Anderson, C., Stone, C. & Oh, Y. R. A large language model approach to educational survey feedback analysis. Int. J. Artif. Intell. Educ. https://doi.org/10.1007/s40593-024-00414-0 (2024).

Bhavsar, P. Understanding LLM Hallucinations Across Generative Tasks. Galileo Blog.

Giuffrè, M. & Shung, D. L. Harnessing the power of synthetic data in healthcare: Innovation, application, and privacy. NPJ Digit. Med. 6(1). https://doi.org/10.1038/s41746-023-00927-3 (2023).

Olatunji, I. E., Rauch, J., Katzensteiner, M. & Khosla, M. A review of anonymization for healthcare data. Big Data https://doi.org/10.1089/big.2021.0169 (2022).

Mackey, E. A Best Practice approach to anonymization. in Handbook of Research Ethics and Scientific Integrity. https://doi.org/10.1007/978-3-319-76040-7_14-1 (2019).

Neubauer, T. & Heurix, J. A methodology for the pseudonymization of medical data. Int. J. Med. Inf. 80(3). https://doi.org/10.1016/j.ijmedinf.2010.10.016 (2011).

Gkoulalas-Divanis, A. & Loukides, G. A survey of anonymization algorithms for electronic health records. Med. Data Priv. Handb. https://doi.org/10.1007/978-3-319-23633-9_2 (2015).

Martinelli, F., Marulli, F., Mercaldo, F., Marrone, S. & Santone, A. Enhanced privacy and data protection using natural language processing and artificial intelligence. in Proceedings of the International Joint Conference on Neural Networks. https://doi.org/10.1109/IJCNN48605.2020.9206801 (2020).

Tang, R., Han, X., Jiang, X. & Hu, X. Does synthetic data generation of LLMs help clinical text mining?.

Gonzales, A., Guruswamy, G. & Smith, S. R. Synthetic data in health care: A narrative review. PLOS Digit. Health 2(1). https://doi.org/10.1371/journal.pdig.0000082 (2023).

Alkhalifah, T., Wang, H. & Ovcharenko, O. MLReal: Bridging the gap between training on synthetic data and real data applications in machine learning. Artif. Intell. Geosci. 3. https://doi.org/10.1016/j.aiig.2022.09.002 (2022).

Tucker, A., Wang, Z., Rotalinti, Y. & Myles, P. Generating high-fidelity synthetic patient data for assessing machine learning healthcare software. NPJ Digit. Med. 3 https://doi.org/10.1038/S41746-020-00353-9 (2020).

Murtaza, H. et al. Synthetic data generation: State of the art in health care domain. https://doi.org/10.1016/j.cosrev.2023.100546 (2023).

Al Aziz, M. M. et al. Differentially Private Medical texts Generation using generative neural networks. ACM Trans. Comput. Healthc. 3(1). https://doi.org/10.1145/3469035 (2022).

Alaa, A. M., van Breugel, B., Saveliev, E. & van der Schaar, M. How faithful is your synthetic data? Sample-level Metrics for Evaluating and Auditing Generative Models. in Proceedings of Machine Learning Research (2022).

Singh, J. P. The impacts and challenges of generative artificial intelligence in medical education, clinical diagnostics, administrative efficiency, and data generation. Int. J. Appl. Health Care Anal. 8 (2023).

Lange, L., Adel, H. & Strötgen, J. NLNDE: The neither-language-nor-domain-experts’ way of Spanish medical document de-identification. in CEUR Workshop Proceedings (2019).

Lee, H. J. et al. A hybrid approach to automatic de-identification of psychiatric notes. J. Biomed. Inf. 75. https://doi.org/10.1016/j.jbi.2017.06.006 (2017).

Jiang, Z., Zhao, C., He, B., Guan, Y. & Jiang, J. De-identification of medical records using conditional random fields and long short-term memory networks. J. Biomed. Inf. 75 https://doi.org/10.1016/j.jbi.2017.10.003 (2017).

Meaney, C., Hakimpour, W., Kalia, S. & Moineddin, R. A comparative evaluation of transformer models for de-identification of clinical text data. http://arxiv.org/abs/2204.07056 (2022).

Radford, A., Narasimhan, K., Salimans, T. & Sutskever, I. Improving Language Understanding by Generative Pre-Training. OpenAI (2018).

Wu, X. & Lode, M. Language models are unsupervised multitask learners (Summarization), OpenAI Blog, vol. 1, no. May, (2020).

Elsner, M. & Needle, J. Translating a low-resource language using GPT-3 and a human-readable dictionary. in Proceedings of the Annual Meeting of the Association for Computational Linguistics. https://doi.org/10.18653/v1/2023.sigmorphon-1.2 (2023).

Yang, Z. et al. An Empirical study of GPT-3 for few-shot knowledge-based VQA. in Proceedings of the 36th AAAI Conference on Artificial Intelligence, AAAI 2022. https://doi.org/10.1609/aaai.v36i3.20215 (2022).

Floridi, L. & Chiriatti, M. GPT-3: Its nature, scope, limits, and consequences. https://doi.org/10.1007/s11023-020-09548-1 (2020).

OpenAI OpenAI developer platform.

Mehandru, N. et al. Evaluating large language models as agents in the clinic. https://doi.org/10.1038/s41746-024-01083-y (2024).

Nguyen, J. & Pepping, C. A. The application of ChatGPT in healthcare progress notes: A commentary from a clinical and research perspective. Clin. Transl. Med. 13(7). https://doi.org/10.1002/ctm2.1324 (2023).

Lu, Q., Dou, D. & Nguyen, T. H. ClinicalT5: A generative language model for clinical text. in Findings of the Association for Computational Linguistics: EMNLP 2022. https://doi.org/10.18653/v1/2022.findings-emnlp.398 (2022).

Rao, A. et al. Evaluating GPT as an adjunct for radiologic decision making: GPT-4 Versus GPT-3.5 in a breast imaging pilot. J. Am. Coll. Radiol. https://doi.org/10.1016/j.jacr.2023.05.003 (2023).

Fink, M. A. Large language models such as ChatGPT and GPT-4 for patient-centered care in radiology. https://doi.org/10.1007/s00117-023-01187-8 (2023).

Liu, Z. et al. DeID-GPT: Zero-shot medical text de-identification by GPT-4. https://github.com/yhydhx/ChatGPT-API

Dai, H. et al. AugGPT: Leveraging ChatGPT for text data augmentation. http://arxiv.org/abs/2302.13007 (2023).

Zhang, K. et al. BiomedGPT: A unified and generalist biomedical generative pre-trained transformer for vision, language, and multimodal tasks. http://arxiv.org/abs/2305.17100 (2023).

White, J. et al. A prompt pattern catalog to enhance prompt engineering with ChatGPT. http://arxiv.org/abs/2302.11382 (2023).

Dai, D. et al. Why can gpt learn in-context? Language models secretly perform gradient descent as meta optimizers. http://arxiv.org/abs/2212.10559 (2022).

Author information

Contributions

Conceptualization (S.A), (B.A), (A.T), (I.S), (A.O). Methodology (L.B), (B.A), (S.A), (A.T), (I.S). Data preprocessing (L.B), (B.A), (S.A). Coding and model training (L.B), (B.A), (S.A). First draft writing (B.A), (S.A), (R.K). Manuscript editing and revision (A.T), (A.O), (A.T), (L.B). Project management and ethical approval (B.A). Result visualization (B.A), (R.K), (S.A). Result interpretation (B.A), (R.K), (S.A). All authors have read and reviewed the manuscript thoroughly, contributed intellectual input to its content, and have agreed on its final version for submission. They take full responsibility for the integrity and accuracy of the manuscript’s content.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Altalla’, B., Abdalla, S., Altamimi, A. et al. Evaluating GPT models for clinical note de-identification. Sci Rep 15, 3852 (2025). https://doi.org/10.1038/s41598-025-86890-3

DOI: https://doi.org/10.1038/s41598-025-86890-3
