Abstract

Underwater images are often of low clarity and suffer from severe color distortion due to the marine environment and illumination conditions. This directly impacts tasks that rely on image processing, such as marine ecological monitoring and underwater target detection. Enhancing underwater images to improve their quality is therefore necessary. A generative adversarial network with an encoder-decoder structure is proposed to improve the quality of underwater images. The network consists of a generative network and an adversarial network. The generative network is responsible for enhancing the images, while the adversarial network determines whether the input is an enhanced image or a real high-quality image. In the generative network, we first design a residual convolution module to extract more texture and edge information from underwater images. Next, we design a multi-scale dilated convolution module to capture underwater features at different scales. Then, we design a feature fusion adaptive attention module to reduce the interference of redundant features and enhance local perception. Finally, we construct the generative network using these modules along with conventional modules. In the adversarial network, we first design a multi-scale feature extraction module to improve the feature extraction ability. We then combine this module with conventional convolution modules to build the adversarial network. Additionally, we propose an improved loss function by introducing a color loss into the conventional loss function. The improved loss function better measures the color discrepancy between the enhanced image and the real image, which helps reduce color distortion in the enhanced images.
In experimental simulations, the images enhanced by the proposed method achieve the highest PSNR, SSIM, and UIQM values, indicating that the proposed method has superior underwater image enhancement capabilities compared with the other methods.

Introduction

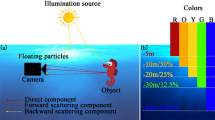

In recent years, artificial intelligence has played a crucial role in predicting and detecting marine corrosion, reducing pollution in marine ecosystems, and supporting offshore wind energy1. Underwater ocean images contain a wealth of information and are vital for researchers to explore the ocean. However, due to the complexity of the underwater environment and the absorption and scattering effects of light in water, these images often suffer from color distortion, texture loss, detail blurring, and low contrast. These typical degradations present challenges for underwater tasks and hinder the effective exploration and utilization of underwater resources2. Therefore, it is essential to enhance underwater ocean images. This will better restore color and detail information, improving image clarity. High-quality images will then be available for subsequent visual tasks based on underwater images.

With the advancement of deep learning technology, it has been widely applied in fields such as image segmentation3, fault diagnosis4, object detection5, prediction of wind power6, and image enhancement7. Deep learning-based methods for enhancing underwater ocean images involve training models on large sets of paired underwater images, allowing the models to automatically learn the characteristics of underwater images without human intervention. As a result, these methods offer superior image enhancement effects and generalization capabilities compared to traditional methods. Deep learning-based underwater image enhancement can be categorized into methods based on single deep convolutional neural networks and those based on generative adversarial networks (GANs). The former consists of a single network dedicated to enhancing underwater images. The latter comprises a generative network and an adversarial network. The generative network enhances the underwater images, while the adversarial network determines whether the input is an enhanced image or a real high-definition image. Although generative adversarial networks (GANs) have been widely applied to underwater image enhancement8, several limitations remain. For instance, many existing methods result in enhanced images with local color distortions, low contrast, and loss of fine texture details. These issues make the enhanced images fall short of meeting the visual quality requirements for practical applications, affecting subsequent tasks like underwater detection. This paper proposes an efficient generative adversarial network to address these problems and further improve the quality of the enhanced images. The main contributions of this paper are summarized as follows:

- We propose a generative network with an encoder-decoder structure. First, we design residual convolution modules to extract more texture and edge information. Second, we design multi-scale dilated convolution modules to extract features at different scales. Third, we design a feature fusion adaptive attention module to reduce the interference of redundant features and enhance local perception. Finally, we use the proposed modules to build the generative network.

- We propose a multi-scale adversarial network. First, we design a multi-scale feature extraction module to extract features at different scales. Second, we use the designed module to construct the adversarial network.

- We propose an improved loss function by introducing a color loss into the conventional loss function. The improved loss function better measures the color difference between the enhanced images and the real high-quality images, which helps reduce the color distortion of the enhanced images.

Related works

In recent years, many methods for underwater image enhancement have been proposed. These methods can be divided into three main categories: enhancement methods based on non-physical models, enhancement methods based on physical models, and enhancement methods based on deep learning. Enhancement methods based on non-physical models do not consider the underwater image formation model. They utilize techniques such as histogram stretching or linear transformations to alter the pixel values of underwater images, thereby improving contrast and correcting color distortion9. Zhuang et al.10 combined Bayesian inference with the classical Retinex theory, applying multi-step gradient priors to the reflectance and illumination components to enhance individual underwater images. Although these methods are easy to implement and cost-effective, they often produce unsatisfactory results due to the lack of consideration for the underwater imaging model and relevant parameters. They tend to introduce additional noise, artifacts, and over-enhancement during the enhancement process. Enhancement methods based on physical models fully consider the attenuation characteristics of light propagation underwater. These methods obtain enhanced images by selecting an underwater imaging model and prior knowledge11. Zhou et al.12 proposed using feature priors from the image to estimate the background light and then optimizing the transmission map by compensating for the red channel in the color-corrected image. Finally, the restored underwater image is obtained using the image formation model. However, this method relies heavily on the established model and the selection of prior knowledge, leading to high complexity, bias in parameter estimation, and weak model generalization. These methods struggle to guarantee the quality of enhanced images and are not suitable for various underwater scenarios.

In recent years, deep learning techniques have been widely applied in various fields. Due to the successes achieved by deep learning techniques in areas such as image recognition13, computer vision14, and natural language processing15, an increasing number of researchers have begun to apply this technology to underwater image enhancement tasks16. Deep learning-based methods for underwater image enhancement utilize large datasets of degraded and high-quality images to train network models without degradation models. Therefore, they are more suitable for diverse underwater environments. In recent years, generative adversarial networks (GANs) have significantly progressed in image enhancement tasks. However, training GANs presents several challenges, such as mode collapse, instability, and limitations in the quality of generated images. These issues are particularly pronounced in underwater image enhancement, where the environment introduces more complex image degradation phenomena and feature distributions. To address these problems, various regularization and normalization techniques have been proposed to stabilize GAN training and improve the quality of generated images17. These techniques effectively alleviate mode collapse in GANs and enhance the model’s robustness when handling complex tasks, such as underwater image enhancement. Karras et al.18 further optimized the structure of the generator network in their analysis and improvement of StyleGAN, achieving significant advancements in restoring image details and quality. These improvements are essential for resolving underwater image enhancement detail recovery and artifact issues. In particular, introducing more efficient feature generation methods can enhance the generative network’s generalization ability across diverse scenarios. Additionally, Li et al.19 proposed a direct optimization approach for adversarial training to address stability issues in GAN training, further improving network training stability. 
This direct adversarial training effectively enhances the model’s robustness and generation quality, demonstrating better generalization and image detail recovery, especially when dealing with datasets characterized by complex distributions. Owing to these advantages, researchers have widely explored underwater image enhancement methods based on generative adversarial networks. Wang et al.20 proposed a class-condition attention GAN for underwater image enhancement and established an underwater image dataset. They used an attention mechanism to fuse concurrent channel and spatial attention features. This network effectively corrects color casts in underwater images. The strength of this study lies in its class-conditional attention mechanism, which enables the network to produce more accurate enhancement for different categories of images. However, the approach performs poorly at eliminating unwanted artifacts. Liu et al.21 integrated a physical model with a generative adversarial network for underwater image enhancement, embedding the physical model for network learning and estimating its coefficients through the generative adversarial network. This method combines the advantages of physical models with the generative capabilities of GANs. Experimental results indicate that it successfully restores the colors of underwater images but struggles to recover texture details. Furthermore, the complexity of the physical model may increase training difficulty and computational cost. Yang et al.22 proposed a conditional generative adversarial network-based method for underwater image enhancement. They applied a multi-scale module in the generator and employed dual discriminators to capture local and global semantic information.
The study demonstrates that this method achieves good results in enhancing the quality of underwater images, particularly excelling in color cast removal and contrast enhancement. However, this method relies heavily on parameter selection and network structure design during training, which may lead to insufficient adaptability to different underwater scenes.

Wu et al.23 proposed FW-GAN, an underwater image enhancement method using a generative adversarial network with multi-scale fusion. It introduces an adaptive fusion strategy that integrates multi-source and multi-scale features in the encoder and employs a channel attention mechanism in the decoder to calculate the attention between the prior feature map and the decoded feature map. Experimental results demonstrate that FW-GAN achieves commendable performance in color correction; however, there is still room for improvement in suppressing image artifacts. Cong et al.24 proposed PUGAN, a physical model-guided GAN with dual discriminators for underwater image enhancement, which uses a physical model as guidance and employs a dedicated parameter estimation subnet to learn the parameters for inverting the physical model. The dual discriminators guide the generator to produce higher-quality images. Experimental results indicate that PUGAN excels in color restoration. However, introducing a physical model may increase training and inference time, posing challenges for real-time applications and large-scale image processing. Xu et al.25 proposed UUGAN, a GAN-based approach to underwater image enhancement using non-pairwise supervision, that is, an unsupervised method that does not require paired datasets. This network employs a multi-input constrained loss function, significantly improving color restoration and global contrast enhancement. Although UUGAN performs well on typical underwater image degradation, its enhancement may be less satisfactory in extreme situations, such as severe color distortion or insufficient illumination. Guang et al.26 proposed an adaptive underwater image enhancement method based on generative adversarial networks, designing a residual-based feature extractor and enhancing the model’s adaptability by incorporating trainable weights.
This network employs a dual-path discriminator and a multi-weighted fusion loss function. Experimental results on multiple underwater image datasets demonstrate that this method effectively mitigates issues such as color distortion and insufficient contrast in underwater images. Liu et al.27 proposed a weak-strong dual-supervised learning method for underwater image enhancement. In the weak supervision phase, the model is trained on unpaired underwater images. In the strong supervision phase, a limited number of paired images are used for training, enabling better restoration of image details. This method combines the advantages of unsupervised and supervised learning, achieving favorable enhancement results even with limited data. Lin et al.28 proposed a conditional generative adversarial network with a dual-branch progressive generator for underwater image enhancement, in which a progressive enhancement algorithm gradually improves the quality of underwater images. The branching strategy incorporates multi-scale information extraction, allowing for better detail recovery and reduced color distortion. However, the generator’s dual-branch design and multi-stage processing increase model complexity, leading to higher computational cost and longer training time. While generative adversarial network-based underwater enhancement methods have advanced image quality, there is still room for improvement in correcting color distortion, enhancing contrast, and recovering detail.

Methods

To improve the quality of underwater images, we design a generative adversarial network (GAN) for underwater image enhancement. The network consists of two parts: a generative network and an adversarial network. The generative network enhances underwater images, while the adversarial network determines whether the image is a high-quality underwater image or an enhanced one. The adversarial network feeds back its judgment to the generative network, which, under this guidance, generates higher-quality underwater images.

Generator

We first design the residual convolution module, multi-scale dilated convolution module, and feature fusion adaptive attention module. Then, we use these modules to construct a generative network with an encoder-decoder structure. The proposed generative network is shown in Fig. 1. First, a residual convolution module performs initial feature extraction on the input image, increasing the number of channels from 3 to 64 while keeping the size unchanged. Next, three stages, each consisting of a pooling layer and a residual convolution module, halve the feature map size and double the number of channels, extracting edge and texture features of underwater images. The numbers of channels in the output feature maps of these three residual convolution modules are 128, 256, and 512, respectively. Then, the multi-scale dilated convolution module enlarges the receptive field and extracts multi-scale contextual information without changing the feature map size or number of channels. Following this, three hybrid modules composed of upsampling and 3 × 3 convolutions extract detailed and local feature information of the underwater images. Additionally, we use three skip connections, each with a feature fusion adaptive attention module, to introduce the output features of three residual convolution modules into the output features of the three upsampling stages, fusing them through concatenation. This fusion of low-level and high-level convolutional features preserves richer detail information. Finally, a 3 × 3 convolution and a Tanh function recover high-quality underwater images from the extracted features, achieving underwater image enhancement. In the following, we describe the proposed residual convolution module, the multi-scale dilated convolution module, and the feature fusion adaptive attention module in detail.
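The channel and size progression described above can be traced with a few lines of Python. This is only a bookkeeping sketch, not the authors' implementation: the function name `trace_generator_shapes` is hypothetical, the 256 × 256 input matches the training resolution reported later, and the decoder channel counts (halving at each upsampling stage) and the 3-channel output are assumptions, since the text does not state them.

```python
def trace_generator_shapes(size=256, in_channels=3, base_channels=64):
    """List (stage, channels, spatial size) for the encoder-decoder generator."""
    stages = [("input", in_channels, size)]
    channels = base_channels
    # First residual convolution module: 3 -> 64 channels, size unchanged.
    stages.append(("res_conv_1", channels, size))
    # Three pooling + residual convolution stages: halve size, double channels.
    for index in range(2, 5):
        size //= 2
        channels *= 2
        stages.append((f"pool+res_conv_{index}", channels, size))
    # Multi-scale dilated convolution: size and channels unchanged.
    stages.append(("multi_scale_dilated", channels, size))
    # Assumption: each upsampling + 3x3 conv stage doubles size, halves channels.
    for index in range(1, 4):
        size *= 2
        channels //= 2
        stages.append((f"upsample+conv_{index}", channels, size))
    # Assumption: the final 3x3 conv + Tanh maps back to the input channel count.
    stages.append(("conv3x3+tanh", in_channels, size))
    return stages


for name, channels, size in trace_generator_shapes():
    print(f"{name:22s} {channels:4d} channels  {size}x{size}")
```

The trace lists only the output of each stage. The concatenation performed by the skip connections temporarily increases the channel count inside each decoder stage, which the sketch does not model.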

Proposed residual convolution module

Traditional generative networks typically use a single 3 × 3 convolution for feature extraction, which often leads to incomplete feature extraction and information loss. To better extract the textures and edges in underwater images, we design a residual convolution module and use it four times in the generative network to extract features at different levels. The proposed residual convolution module is shown in Fig. 2. The module consists of three branches: two convolutional branches and a skip connection. The first branch comprises a 1 × 1 convolution layer with a stride of 1, a LeakyReLU activation function, a 3 × 3 convolution layer with a stride of 1, and another LeakyReLU activation function. The second branch comprises a 3 × 3 convolution layer with a stride of 1, a LeakyReLU activation function, a 1 × 1 convolution layer with a stride of 1, and another LeakyReLU activation function. In the first branch, the 1 × 1 convolution with LeakyReLU does not change the number of channels, but it introduces a nonlinear transformation that enhances the expressive capability of the feature map. The subsequent 3 × 3 convolution then extracts localized spatial features, capturing subtle details within the image. This branch therefore focuses on fine structures and variations in the image, such as textures and edges. Conversely, the second branch applies the 3 × 3 convolution first, directly extracting broader spatial information from the input feature map. The following 1 × 1 convolution does not alter the number of channels either, but it reprocesses the features to distill more useful representations.
The design of this second branch leans towards the extraction of global features, enabling the effective capture of the overall structure and primary characteristics of underwater images, such as large fish or underwater terrain. While both branches ultimately have a receptive field of 3 × 3, their feature extraction methods differ, resulting in features with distinct focal points. This design allows the two branches to complement each other when processing the input feature map, ultimately forming a richer feature representation. The convolution layers in both branches do not change the size or the number of channels of the feature maps, ensuring the extraction of features and semantic information at different levels. To reduce information loss, we use the skip connection to transmit the input features and element-wise addition to fuse the input features and the output features extracted by both branches. The fused feature map is then processed by a 1 × 1 convolution layer with a stride of 1, which increases the number of channels without altering the size of the feature map.

Proposed multi-scale dilated convolution module

To extract richer feature information, we design a multi-scale dilated convolution module that extracts features at multiple scales simultaneously. The structure of the proposed module is shown in Fig. 3. It consists of four branches. The first branch is a sequence of a 1 × 1 convolution layer, a LeakyReLU activation function, a 3 × 3 dilated convolution with a dilation rate of 3, and another LeakyReLU activation function. The second branch comprises two parallel modules connected in series. The first parallel module consists of parallel 3 × 3 and 5 × 5 convolutions, whose feature maps are concatenated and used as input for the second parallel module. The second parallel module consists of two sub-branches. The first sub-branch includes a 3 × 3 convolution with a LeakyReLU activation function, followed by a 3 × 3 dilated convolution with a dilation rate of 3 and a LeakyReLU activation function. The second sub-branch includes a 5 × 5 convolution with a LeakyReLU activation function, followed by a 3 × 3 dilated convolution with a dilation rate of 5 and a LeakyReLU activation function. The outputs of these sub-branches are concatenated to obtain the output feature map of the second parallel module. The third branch comprises a 1 × 1 convolution with a LeakyReLU activation function. The fourth branch serves as a shortcut connection that retains the input features to minimize feature loss. The output feature maps of the first, second, and third branches are fused through concatenation. A 1 × 1 convolution with a LeakyReLU activation function then adjusts the channel number of the fused feature map. Finally, the result is fused with the input feature map by element-wise addition to obtain the output feature map of the multi-scale dilated convolution module.
Due to the different receptive fields of each branch, features of underwater images at various scales can be effectively extracted.
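To make the "different receptive fields" concrete, the sketch below computes the receptive field of each branch from the kernel sizes and dilation rates given above. It relies on the standard identity that a k × k convolution with dilation d covers k + (k − 1)(d − 1) pixels per side, and it assumes every layer uses a stride of 1 (implied by the unchanged feature map size, but not stated explicitly). The function names and the branch labels are illustrative.

```python
def effective_kernel(kernel, dilation=1):
    """Side length covered by a dilated convolution kernel."""
    return kernel + (kernel - 1) * (dilation - 1)


def receptive_field(layers):
    """Receptive field of stacked stride-1 convolutions given (kernel, dilation) pairs."""
    field = 1
    for kernel, dilation in layers:
        field += effective_kernel(kernel, dilation) - 1
    return field


# (kernel, dilation) sequences read off the module description; stride 1 is assumed.
branches = {
    "branch 1 (1x1 -> 3x3, d=3)": [(1, 1), (3, 3)],
    "branch 2, narrowest path": [(3, 1), (3, 1), (3, 3)],
    "branch 2, widest path": [(5, 1), (5, 1), (3, 5)],
    "branch 3 (1x1)": [(1, 1)],
}
fields = {name: receptive_field(layers) for name, layers in branches.items()}

for name, field in fields.items():
    print(f"{name:28s} {field}x{field}")
```

Under these assumptions the three convolutional branches see 7 × 7, between 11 × 11 and 19 × 19, and 1 × 1 neighborhoods respectively, which is the spread of scales the module is designed to provide.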

Proposed feature fusion adaptive attention module

To reduce the interference of redundant feature information and enhance the model’s ability to perceive local information, we design a feature fusion adaptive attention module. The network structure of this module is shown in Fig. 4. First, a 1 × 1 convolution with a stride of 1 is applied to the input feature map for preliminary processing, reducing the number of channels and the computational complexity. Second, a module composed of three parallel branches extracts further features from this preliminary output. The first branch consists of max pooling and a 3 × 3 dilated convolution with a dilation rate of 3. Max pooling highlights details and local texture features of underwater images by preserving the maximum feature values. The second branch consists of an average pooling layer and a 3 × 3 dilated convolution with a dilation rate of 3. The average pooling layer balances the weights of all channels, reducing abnormal weight values and improving the model’s stability, which helps in extracting global features of underwater images (such as overall brightness, color distribution, and structural features). The output feature maps of the first and second branches are fused using element-wise addition. The fused feature map is then processed by a 3 × 3 convolution layer with a stride of 1 and an upsampling module to achieve deep feature fusion and restore the feature map size. The third branch contains only a 1 × 1 convolution layer with a stride of 1. To prevent the loss of important information, we use element-wise addition to fuse the output feature maps of the third branch with the output feature maps of the upsampling module. The fused feature map is then processed by a 1 × 1 convolution layer with a stride of 1 and a sigmoid function to obtain the weights of the feature map. This 1 × 1 convolution layer restores the number of channels, and the sigmoid function generates attention weights ranging from 0 to 1.
Finally, this weight value is multiplied by the input feature map to obtain the output feature map.
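The final gating step can be illustrated in isolation. The sketch below is a deliberately minimal stand-in that works on flat lists of numbers rather than feature maps, and the names `sigmoid` and `apply_attention` are hypothetical. It only shows how sigmoid weights in (0, 1) rescale the input element by element; the convolution and pooling branches that produce the pre-sigmoid scores are not modeled.

```python
import math


def sigmoid(value):
    """Numerically stable logistic function mapping any real number into (0, 1)."""
    if value >= 0:
        return 1.0 / (1.0 + math.exp(-value))
    exponent = math.exp(value)
    return exponent / (1.0 + exponent)


def apply_attention(features, scores):
    """Scale each feature by the sigmoid of its pre-activation attention score."""
    if len(features) != len(scores):
        raise ValueError("features and scores must have the same length")
    return [feature * sigmoid(score) for feature, score in zip(features, scores)]


# A strongly positive score keeps the feature, a strongly negative one suppresses it.
print(apply_attention([3.0, 3.0, 3.0], [50.0, 0.0, -50.0]))
```

Because the weights never exceed 1 and never change sign, the gate can attenuate a feature but cannot amplify or invert it, which is how redundant responses are suppressed.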

Discriminator

We first design a multi-scale feature extraction module and then utilize this module along with conventional convolutional modules to design an adversarial network. The designed adversarial network is illustrated in Fig. 5. This network consists sequentially of a 4 × 4 convolution with a stride of 2, followed by a LeakyReLU activation function, three identically structured modules, a multi-scale feature extraction module, and a 1 × 1 convolution with a stride of 1. Each of the three identically structured modules comprises a 4 × 4 convolution with a stride of 2, batch normalization, and LeakyReLU activation function. The output feature map size of each 4 × 4 convolution with a stride of 2 is reduced to half of the input feature map size while the number of channels is doubled. By gradually reducing the feature map size, global structural features of underwater images (such as background and main object positions) are extracted. The multi-scale feature extraction module is primarily used to extract features of different scales in underwater images, including local detailed features (such as object shapes and edge information), texture features, and color features. This module consists of four branches: the first branch comprises a 3 × 3 convolution with a stride of 2 and a 2 × 2 deconvolution with a stride of 2. The second branch comprises a 5 × 5 convolution with a stride of 2 and a 2 × 2 deconvolution with a stride of 2. The third branch comprises a 7 × 7 convolution with a stride of 2 and a 2 × 2 deconvolution with a stride of 2. The fourth branch comprises a 9 × 9 convolution with a stride of 2 and a 2 × 2 deconvolution with a stride of 2. As the receptive fields of the four branches are different, they can effectively extract feature information of different scales in underwater images. The output features of the four branches are fused through concatenation. 
Then, a 1 × 1 convolution is utilized to adjust the channel number of the fused feature map, yielding the output feature map of multi-scale features. In the final 1 × 1 convolution of the adversarial network, the channel number is adjusted to 1, converting the extracted features into judgment scores, thereby achieving the judgment of whether the input image is a generated clear image or a real clear image.
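The feature map sizes implied by this description can be checked with the standard output-size formulas for convolution and transposed convolution. The sketch assumes a 256 × 256 input, a padding of 1 for the 4 × 4 convolutions, and a padding of (k − 1) / 2 for the k × k convolutions in the multi-scale module; none of these paddings is stated in the text, and the function names are illustrative.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel, stride=1, padding=0):
    """Output side length of a transposed convolution (no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def discriminator_sizes(size=256, downsampling_convs=4):
    """Side length after each 4x4, stride-2 convolution (padding 1 assumed)."""
    sizes = [size]
    for _ in range(downsampling_convs):
        size = conv_out(size, kernel=4, stride=2, padding=1)
        sizes.append(size)
    return sizes


def multi_scale_branch_sizes(size):
    """Side length after each k x k stride-2 conv followed by a 2x2 stride-2 deconv."""
    result = {}
    for kernel in (3, 5, 7, 9):
        reduced = conv_out(size, kernel, stride=2, padding=(kernel - 1) // 2)
        result[kernel] = deconv_out(reduced, kernel=2, stride=2)
    return result


sizes = discriminator_sizes()
print(sizes)
print(multi_scale_branch_sizes(sizes[-1]))
```

With these paddings, the four stride-2 convolutions reduce 256 to 16, and every branch of the multi-scale module returns a 16 × 16 map, so the four outputs can be concatenated directly. The final 1 × 1 convolution would then yield a 16 × 16 map of judgment scores rather than a single scalar.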

Loss function

In order to better measure the difference between the generated clear images and the real clear images, we introduce color loss into the conventional loss function and propose an improved loss function as follows:

where \({\lambda _1}\), \({\lambda _2}\), \({\lambda _3}\) and \({\lambda _4}\) are scale coefficients whose values set the relative importance of the corresponding loss terms. After a series of experiments, we found that the enhanced images are better when \({\lambda _1} = 0.2\), \({\lambda _2} = 0.49\), \({\lambda _3} = 0.21\) and \({\lambda _4} = 0.1\), so we adopt these values. \(L_{GAN}\) denotes the adversarial loss function. It is expressed as:

where \(X\) and \(Y\) represent the low-quality image and high-quality image, respectively. \(G( \cdot )\) and \(D( \cdot )\) represent the generator and discriminator, respectively.

Although the L2 loss function is widely applied in image processing tasks, it is sensitive to outlier pixel values. There are some outlier pixel values in generated underwater images. Therefore, we use the L1 function that is less sensitive to outliers as the loss function. The L1 loss function in (1) is expressed as:

The perceptual loss function used in (1) is expressed as:

where \(\phi ( \cdot )\) denotes the high-level features extracted by a pre-trained VGG-19 network.

Underwater images often suffer from uneven lighting, which leads to over-enhancement near light sources and, in turn, to color distortion. A color loss can better measure the color difference between the enhanced image and the high-quality image. We therefore introduce a color loss into the loss function to reduce the color distortion in enhanced underwater images. The color loss function is as follows:

where \({R_m}\) is the average of the pixel values in the red channel of the high-quality image and the enhanced image. The \(r\), \(g\) and \(b\) respectively represent the average of the differences in pixel values between the real high-quality image and the enhanced image in the red channel, green channel, and blue channel. They are expressed as:

where \(N\) is the number of images, and \(H\) and \(W\) are the height and width of the images, respectively. \({Y_R}\), \({Y_G}\) and \({Y_B}\) represent the red, green, and blue channels of the high-quality image, respectively. \(G{\left( X \right) _R}\), \(G{\left( X \right) _G}\) and \(G{\left( X \right) _B}\) represent the red, green, and blue channels of the enhanced image, respectively.
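The following sketch shows how the quantities \(R_m\), \(r\), \(g\) and \(b\) defined above could be computed and how the four loss terms could be combined. Two points are assumptions rather than the authors' stated formulas. First, the color distance uses the widely used "redmean" weighted RGB distance, which depends on exactly these four quantities, as a stand-in for the color loss. Second, the weights are paired with the loss terms in the order the terms are introduced in this section (adversarial, L1, perceptual, color). Pixel values are assumed to lie in [0, 255], and all names are hypothetical.

```python
import math

# Assumed pairing of the reported coefficients with the four loss terms.
LAMBDAS = {"gan": 0.2, "l1": 0.49, "perceptual": 0.21, "color": 0.1}


def channel_means(pixels):
    """Mean red, green and blue values of a list of (R, G, B) pixels."""
    count = len(pixels)
    return tuple(sum(pixel[channel] for pixel in pixels) / count for channel in range(3))


def color_loss(reference, enhanced):
    """Redmean-weighted distance between mean channel values (assumed form)."""
    ref_r, ref_g, ref_b = channel_means(reference)
    enh_r, enh_g, enh_b = channel_means(enhanced)
    red_mean = (ref_r + enh_r) / 2.0                       # R_m
    r, g, b = ref_r - enh_r, ref_g - enh_g, ref_b - enh_b  # mean channel differences
    return math.sqrt(
        (2.0 + red_mean / 256.0) * r * r
        + 4.0 * g * g
        + (2.0 + (255.0 - red_mean) / 256.0) * b * b
    )


def total_loss(terms, weights=LAMBDAS):
    """Weighted sum of the individual loss values."""
    missing = set(weights) - set(terms)
    if missing:
        raise KeyError(f"missing loss terms: {sorted(missing)}")
    return sum(weights[name] * terms[name] for name in weights)


reference = [(100, 100, 100)] * 4
enhanced = [(100, 110, 100)] * 4  # green channel is 10 levels too bright
print(color_loss(reference, enhanced))
print(total_loss({"gan": 0.7, "l1": 0.05, "perceptual": 0.3,
                  "color": color_loss(reference, enhanced)}))
```

The four reported coefficients sum to 1, so the total can be read as a weighted average of the individual terms. In an actual training loop the generator output passes through a Tanh function, so its values would need to be rescaled to the assumed [0, 255] range before such a color term is evaluated.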

Simulation and discussion

To validate the effectiveness of the proposed algorithm against existing methods, namely FUnIEGAN29, LANET30, UIESS31, UW-CycleGAN32, TACL33, MFFN34, and CRN-UIE35, we train and test all models on the UIEB36 dataset. The dataset comprises 890 pairs of images, each consisting of an original underwater image and its corresponding high-quality underwater image. Of these, 712 pairs are used for model training, while the remaining 178 pairs are used for model testing. To demonstrate the generalization ability of the proposed method, we further test our model on the test-samples subset of the EUVP dataset, which contains 515 pairs of raw underwater images and their corresponding high-quality underwater images, and on the test subset of the UFO-120 dataset, which contains 120 such pairs. To quantitatively measure the quality of the enhanced images, we employ four performance metrics: Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM)37, the no-reference Underwater Image Quality Metric (UIQM)38, and Fréchet Inception Distance (FID). PSNR measures the pixel-level error between the enhanced images and the corresponding high-quality images, and SSIM measures their structural similarity. Higher PSNR and SSIM values indicate that the enhanced underwater images are closer to their corresponding high-quality underwater images. A higher UIQM value indicates better color fidelity, saturation, and contrast in the enhanced underwater images. FID evaluates the quality and diversity of generated images by comparing the feature distributions of generated images and real images. A smaller FID indicates that the feature distribution of the generated images is closer to that of the real images, signifying higher image quality.
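As a reference for how the first metric behaves, PSNR can be computed from the mean squared error as 10·log10(MAX² / MSE). The sketch below applies this standard definition to flat lists of pixel values. The function name and the 8-bit peak value of 255 are assumptions, and this is not the evaluation code used in the experiments.

```python
import math


def psnr(reference, enhanced, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    if not reference or len(reference) != len(enhanced):
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(a - b) ** 2 for a, b in zip(reference, enhanced)]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# A uniform error of 10 gray levels gives about 28.13 dB.
print(round(psnr([100] * 4, [110] * 4), 2))
```

Because the error enters through a logarithm, halving the root-mean-square error raises PSNR by roughly 6 dB, which helps when comparing values reported for different methods.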

The code for this work was developed in PyCharm using Python 3.9, and the model was implemented and trained with the PyTorch 1.8.0 deep learning framework. All experiments were conducted on an NVIDIA GeForce GTX 1080 Ti GPU with 11 GB of memory, running Ubuntu 18.04. During training, all input images were resized to 256 × 256, and the batch size was 4. The Adam optimizer was used with an initial learning rate of 0.0001, and the network was trained for 200 epochs.
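The stated hyperparameters imply a fixed training budget, which the short sketch below works out. The class name `TrainConfig` and its field names are hypothetical, and the count assumes one optimizer step per batch over the 712 UIEB training pairs; the paper does not describe the update schedule between the generative and adversarial networks.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    image_size: int = 256        # inputs resized to 256 x 256
    batch_size: int = 4
    learning_rate: float = 1e-4  # initial Adam learning rate
    epochs: int = 200
    train_pairs: int = 712       # UIEB training split

    def steps_per_epoch(self) -> int:
        # Assumes a final, possibly smaller, batch would be kept.
        return math.ceil(self.train_pairs / self.batch_size)

    def total_steps(self) -> int:
        return self.steps_per_epoch() * self.epochs


cfg = TrainConfig()
print(cfg.steps_per_epoch(), cfg.total_steps())  # 178 35600
```

Because 712 is divisible by 4, every epoch consists of exactly 178 full batches, for 35,600 batches over the 200 epochs.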

Simulation on the UIEB dataset

We randomly selected three pairs of images from the 178 pairs of test images. The degraded images in these three pairs are used as inputs to each image enhancement method. The degraded images (raw images), high-quality images (reference images), and enhanced images are shown in Fig. 6. The first column shows the degraded images, the second column shows the high-quality images, and the third to tenth columns show the images enhanced by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method, respectively. The images in the first, third, and fifth rows are full-size images, while those in the second, fourth, and sixth rows are locally enlarged images.

For the first image, the image enhanced by FUnIEGAN exhibits green color deviation. The background of the image enhanced by LANET shows a green color deviation and appears darker and less clear. The image enhanced by UIESS displays blue color deviation in the background and severe red color deviation in the objects. The image enhanced by UW-CycleGAN exhibits varying degrees of blue, red, and green color distortion in the background from top to bottom, and pseudo-artifacts are present in the objects. In the images enhanced by TACL, the upper part of the background exhibits a bluish color deviation, while the lower part shows a greenish color deviation. The images enhanced by MFFN exhibit some deviations in detail restoration. The images enhanced by CRN-UIE show color deviations, with the lower background appearing overly white and reddish. In contrast, the enhanced image produced by our proposed method demonstrates more prominent color correction and contrast enhancement.

For the second image, the image enhanced by FUnIEGAN still exhibits blue color deviation, and the enhancement effect is not significant. The background of the image enhanced by LANET displays a blue color deviation, and the objects appear darker. The image enhanced by UIESS exhibits a blue color deviation. The image enhanced by UW-CycleGAN shows severe blue color distortion in the background, and partial red color deviation is present in the objects. In the images enhanced by TACL, the upper part of the background shows a bluish color deviation. The images enhanced by MFFN exhibit a bluish color deviation in the upper background. The images enhanced by CRN-UIE have relatively low contrast. In comparison, the enhanced image produced by our proposed method exhibits better color restoration and clearer visual features.

For the third image, the image enhanced by FUnIEGAN appears blurry, with insufficient sharpness and artifacts, negatively impacting its visual quality. The image enhanced by LANET exhibits inaccurate color restoration in some background areas and objects. The image enhanced by UIESS is relatively dark and lacks clarity. The image enhanced by UW-CycleGAN shows severe pinkish color distortion in the background and objects. The images enhanced by TACL exhibit low contrast, resulting in a less pronounced enhancement effect. The images enhanced by MFFN are blurry and have low sharpness. The images enhanced by CRN-UIE exhibit slight color restoration deviations in some objects and backgrounds. In contrast, the image enhanced by our proposed method is more similar to the real underwater image, demonstrating better color accuracy, higher clarity, and contrast.

In summary, FUnIEGAN, LANET, and MFFN methods have limitations in effectively enhancing image clarity and contrast. UIESS, TACL, UW-CycleGAN and CRN-UIE methods have limitations in addressing color distortion, removing artifacts, and fully sharpening image details. In comparison, our proposed method successfully improves image color, contrast, and details, making them more similar to the corresponding reference images and exhibiting excellent performance.

To objectively analyze the performance of underwater image enhancement methods, we utilize PSNR, SSIM, UIQM, and FID to measure the quality of the enhanced images. We apply FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method to enhance all degraded images in the test set. The average evaluation metric values for the enhanced images are shown in Table 1. The average PSNR values of the images enhanced by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method are 20.839, 22.574, 22.415, 21.217, 22.581, 22.879, 22.913, and 23.975, respectively. The average SSIM values of the images enhanced by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method are 0.758, 0.876, 0.839, 0.843, 0.875, 0.875, 0.876, and 0.878, respectively. The average UIQM values of the images enhanced by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method are 3.165, 3.143, 3.128, 2.515, 3.132, 3.152, 3.168, and 3.197, respectively. The average FID values for images generated by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and the proposed method are 41.095, 35.643, 43.953, 49.005, 37.731, 35.213, 33.745, and 31.423, respectively. Our proposed method has the highest PSNR and SSIM values, indicating that the images enhanced by our proposed method are closer to real high-quality images. Additionally, our proposed method has the highest UIQM value, indicating that it performs best in improving the visual quality of underwater images. Our proposed method achieves the lowest FID value, indicating that the images enhanced by our approach exhibit a feature distribution closer to real images, resulting in higher image quality.
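For readers unfamiliar with how FID compares feature distributions, the sketch below implements the Fréchet distance between two Gaussians fitted to feature vectors, \(\lVert \mu_a - \mu_b \rVert^2 + \mathrm{Tr}(\Sigma_a + \Sigma_b - 2(\Sigma_a \Sigma_b)^{1/2})\). In FID the features come from a pretrained Inception-v3 network; that extraction step is not shown, and the function name `frechet_distance` and the eigenvalue-based evaluation of the trace term are choices made for this sketch rather than the implementation used in the paper.

```python
import numpy as np


def frechet_distance(feats_a: np.ndarray, feats_b: np.ndarray) -> float:
    """Fréchet distance between Gaussians fitted to two feature sets.

    Each array has shape (num_images, feature_dim) with feature_dim >= 2.
    For FID the features would come from an Inception-v3 network;
    here they are assumed to be given.
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr((cov_a cov_b)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of cov_a @ cov_b, which are real and non-negative in theory.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = float(np.sum((mu_a - mu_b) ** 2))
    return mean_term + float(np.trace(cov_a) + np.trace(cov_b) - 2.0 * trace_sqrt)
```

Two feature sets with identical statistics give a distance of zero, and shifting one set by a constant vector adds the squared length of that shift, which is why a lower value indicates closer distributions.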

Simulation on the EUVP dataset

We evaluated the effectiveness of our proposed method using the test-samples subset of the EUVP dataset. This subset comprises 515 pairs of degraded underwater images and their corresponding high-definition underwater images. From these 515 pairs of test images, we randomly selected three pairs to enhance the degraded underwater images in each pair. The degraded underwater images, corresponding high-definition underwater images, and enhanced underwater images are displayed in Fig. 7. The first column contains the degraded images, the second column contains the high-definition images, and the third to tenth columns display the enhanced images produced by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method, respectively. The images in the first, third, and fifth rows are full-size images, while those in the second, fourth, and sixth rows are locally enlarged images.

For the first image, the image enhanced by FUnIEGAN appears darker, with noticeable color recovery deviations in the objects. The image enhanced by LANET exhibits low contrast. The objects in the image enhanced by UIESS show some red color bias. The image enhanced by UW-CycleGAN suffers from severe bluish color distortion in the background, with the object colors appearing black. The objects in the TACL-enhanced image also exhibit some red color bias. The images enhanced by MFFN exhibit deviations in the color restoration of the objects. The images enhanced by CRN-UIE have low contrast. In contrast, the enhanced image produced by our proposed method demonstrates better color correction and contrast enhancement.

For the second image, the one enhanced by FUnIEGAN appears blurry and has low contrast. The objects in the image enhanced by LANET exhibit some yellow color bias. The UIESS-enhanced image fails to effectively enhance the objects, which still display a greenish color bias. The image enhanced by UW-CycleGAN suffers from significant reddish color distortion in the background and contains black artifacts. The objects in the TACL-enhanced image do not show restored color details. The images enhanced by MFFN are relatively blurry and have low sharpness. The images enhanced by CRN-UIE exhibit some deviations in detail restoration. In comparison, the enhanced image produced by our method exhibits better color fidelity.

For the third image, the one enhanced by FUnIEGAN lacks sufficient contrast, resulting in a less noticeable enhancement effect. The image enhanced by LANET contains white artifact distortion in the objects. The image enhanced by UIESS shows a red color bias. The UW-CycleGAN-enhanced image suffers from severe bluish color distortion in the background, while the objects in the TACL-enhanced image display reddish color distortion. The images enhanced by MFFN exhibit a greenish color deviation in the upper background, and the objects in the image are blurry. The images enhanced by CRN-UIE show a bluish color deviation in the upper background, and the objects in the image exhibit some deviations in detail restoration. In contrast, the images enhanced by our method closely resemble the real underwater images, exhibiting superior color accuracy and contrast.

In summary, images enhanced by the FUnIEGAN method often show low contrast. The LANET, UIESS, UW-CycleGAN, TACL, MFFN, and CRN-UIE methods demonstrate shortcomings in addressing color distortion and image detail restoration. The results indicate that our enhanced images are closer to real underwater images, showcasing better generalization capability in underwater image enhancement.

To quantitatively analyze the performance of different methods, we employed PSNR, SSIM, UIQM, and FID to assess the quality of the enhanced images. We applied FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method to enhance all degraded images in the EUVP test set. The average values of the evaluation metrics for the enhanced images are presented in Table 2. The average PSNR values for images generated by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our method are 22.390, 21.245, 22.110, 20.180, 21.341, 21.712, 21.965, and 23.769, respectively. The average SSIM values for the same methods are 0.786, 0.776, 0.737, 0.697, 0.753, 0.788, 0.802, and 0.826, respectively. The average UIQM values are 3.135, 2.963, 3.048, 2.145, 3.011, 3.097, 3.154, and 3.192, respectively. The average FID values for images generated by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our method are 36.765, 33.921, 43.472, 48.830, 35.329, 33.203, 32.504, and 31.480, respectively. Our proposed method achieves the highest PSNR, SSIM, and UIQM values, indicating that the images enhanced by our approach are closer to real high-definition images. Furthermore, our method exhibits the lowest FID value, suggesting that the enhanced images are visually more similar to real images and possess higher realism and diversity.

Simulation on the UFO-120 dataset

We evaluated the effectiveness of our proposed method using the test subset of the UFO-120 dataset. This subset consists of 120 pairs of degraded underwater images and their corresponding high-quality underwater images. We randomly selected three pairs of images from these 120 test images and enhanced the degraded underwater images in each pair. The degraded underwater images, corresponding high-quality underwater images, and enhanced underwater images are shown in Fig. 8. The first column shows the degraded images, the second column shows the high-quality images and the third to tenth columns show the enhanced images using FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and the proposed method, respectively. The first, third, and fifth rows show full-size images, while the second, fourth, and sixth rows show zoomed-in regions.

For the first image, the background enhanced by FUnIEGAN exhibits a bluish color deviation, and the objects in the image are blurry. The image enhanced by LANET shows a bluish color deviation in the background, with color restoration issues in the objects. The image enhanced by UIESS exhibits a bluish color deviation in the background, and some objects show a reddish color deviation. The image enhanced by UW-CycleGAN shows exposure distortion in the background. The images enhanced by TACL and MFFN exhibit color restoration deviations in the background. The image enhanced by CRN-UIE shows slight deviations in the detail restoration of the objects. In contrast, the enhanced image produced by our proposed method exhibits better color correction.

For the second image, the image enhanced by FUnIEGAN shows color restoration issues in the objects. The images enhanced by LANET and UIESS exhibit color restoration deviations in the background. The image enhanced by UW-CycleGAN exhibits severe bluish color distortion in the background. The images enhanced by TACL and MFFN fail to recover some color details in the objects. The image enhanced by CRN-UIE shows some color restoration deviations in both the objects and the background. In contrast, the image enhanced by our proposed method exhibits better color restoration.

For the third image, the images enhanced by FUnIEGAN and LANET show bluish color distortion in the lower background, and the objects are blurry. The image enhanced by UIESS exhibits some reddish color deviation in the objects. The image enhanced by UW-CycleGAN shows severe bluish color distortion. The images enhanced by TACL, MFFN, and CRN-UIE exhibit bluish color distortion in the lower background, and the objects show some deviations in color restoration and detail. In contrast, the image enhanced by our proposed method is closer to the real underwater image and shows better color accuracy.

In summary, the images enhanced by the above comparison methods still have color and detail restoration shortcomings. The results show that our enhanced images are closer to the real underwater images, with our method performing better in color restoration.

To quantitatively analyze the performance of different methods, we used PSNR, SSIM, UIQM, and FID to measure the quality of the enhanced images. We applied FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method to enhance all degraded images in the UFO-120 test set. The average values of the evaluation metrics for the enhanced images are shown in Table 3. The average PSNR values of the images generated by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method are 22.137, 21.165, 21.098, 20.173, 21.218, 21.653, 21.904, and 23.765, respectively. The average SSIM values of the images generated by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method are 0.771, 0.763, 0.731, 0.705, 0.749, 0.782, 0.802, and 0.823, respectively. The average UIQM values of the images generated by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method are 3.102, 3.014, 3.012, 2.513, 3.006, 3.093, 3.137, and 3.164, respectively. The average FID values of the images generated by FUnIEGAN, LANET, UIESS, UW-CycleGAN, TACL, MFFN, CRN-UIE, and our proposed method are 36.907, 34.108, 43.680, 48.916, 35.733, 33.439, 32.811, and 31.517, respectively. Our proposed method achieves the highest PSNR, SSIM, and UIQM values, indicating that the images enhanced by our method are closer to the real high-quality images. Furthermore, our method achieves the lowest FID value, suggesting that the images enhanced by our method visually resemble real images more closely.
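The size of the PSNR advantage can be read off the reported averages directly. The sketch below collects the PSNR values quoted in the text for Tables 1 to 3 and computes the gap between the proposed method and the strongest competing method on each dataset; the variable and function names are illustrative only.

```python
# Average PSNR (dB) as reported in the text for Tables 1-3.
METHODS = ["FUnIEGAN", "LANET", "UIESS", "UW-CycleGAN", "TACL", "MFFN", "CRN-UIE", "Ours"]
PSNR = {
    "UIEB":    [20.839, 22.574, 22.415, 21.217, 22.581, 22.879, 22.913, 23.975],
    "EUVP":    [22.390, 21.245, 22.110, 20.180, 21.341, 21.712, 21.965, 23.769],
    "UFO-120": [22.137, 21.165, 21.098, 20.173, 21.218, 21.653, 21.904, 23.765],
}


def margin_over_best_baseline(values):
    """Return (best baseline name, proposed-method value minus that baseline)."""
    baselines = dict(zip(METHODS[:-1], values[:-1]))
    best = max(baselines, key=baselines.get)
    return best, round(values[-1] - baselines[best], 3)


for dataset, values in PSNR.items():
    print(dataset, *margin_over_best_baseline(values))
```

On these figures the proposed method leads the best competing method by roughly 1.06 dB on UIEB (over CRN-UIE), 1.38 dB on EUVP (over FUnIEGAN), and 1.63 dB on UFO-120 (over FUnIEGAN).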

Ablation experiment

To analyze the effectiveness of the proposed residual convolution module, multi-scale dilated convolution module, feature fusion adaptive attention module, multi-scale feature extraction module, and color loss function, we conducted the following ablation experiments: w/o RCM (without the residual convolution module), w/o MDCM (without the multi-scale dilated convolution module), w/o FAAM (without the feature fusion adaptive attention module), w/o MFEM (without the multi-scale feature extraction module), and w/o Color loss (without the color loss).
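One convenient way to organize such an ablation study is to describe each variant as the full configuration with exactly one component switched off. The sketch below does this with a small dataclass; `ModelConfig`, its flag names, and the `disabled` helper are hypothetical and are not taken from the authors' code.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class ModelConfig:
    use_rcm: bool = True         # residual convolution module (generative network)
    use_mdcm: bool = True        # multi-scale dilated convolution module (generative network)
    use_faam: bool = True        # feature fusion adaptive attention module (generative network)
    use_mfem: bool = True        # multi-scale feature extraction module (adversarial network)
    use_color_loss: bool = True  # color term added to the conventional loss


FULL = ModelConfig()

# Each ablation variant disables exactly one component of the full model.
ABLATIONS = {
    "w/o RCM": replace(FULL, use_rcm=False),
    "w/o MDCM": replace(FULL, use_mdcm=False),
    "w/o FAAM": replace(FULL, use_faam=False),
    "w/o MFEM": replace(FULL, use_mfem=False),
    "w/o Color loss": replace(FULL, use_color_loss=False),
}


def disabled(config: ModelConfig) -> list:
    """Names of the components that a configuration switches off."""
    return [name for name, enabled in asdict(config).items() if not enabled]
```

Training and evaluating one model per entry of `ABLATIONS`, plus `FULL`, would yield one row per variant in a table such as Table 4.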

We conducted a qualitative analysis to evaluate the visual performance of output images after removing different modules. We randomly selected five pairs of images from the UIEB test set for visual comparison. The degraded underwater images, corresponding high-definition underwater images, and enhanced images without specific modules, as well as those enhanced by our proposed method, are presented in Fig. 9. The first column displays the degraded images, the second column shows the high-definition images and the third to eighth columns represent the enhanced images produced by removing the residual convolution module (w/o RCM), removing the multi-scale dilated convolution module (w/o MDCM), removing the feature fusion adaptive attention module (w/o FAAM), removing the multi-scale feature extraction module (w/o MFEM), removing the color loss function (w/o Color loss), and the enhanced images generated by our complete method. After removing the RCM module, the overall image contrast decreased, and there were noticeable deviations in color recovery. When the MDCM module was removed, the background regions and details of the objects in the images became blurred, accompanied by color recovery deviations. The removal of the FAAM module resulted in unnatural color recovery, rendering the images somewhat dark, particularly in areas with significant color variations, which diminished the visual emphasis. When the MFEM module was excluded, the images lacked sufficient detail restoration and color recovery exhibited discrepancies. Lastly, the absence of the color loss function led to color distortion, reduced color saturation, and a grayish appearance in certain areas of the images, resulting in poor visual quality. In contrast, the output images produced by the complete model demonstrated good performance in contrast, color recovery, and detail preservation.

In summary, through qualitative analysis of the ablation study, we can intuitively observe the varying effects of different modules on image enhancement. Removing any module adversely affects the quality and visual appeal of the final output images. The model’s overall performance relies on the synergistic interaction of these modules, and the absence of any one module leads to a decline in image quality.

We used all paired images in the UIEB test set as test images and calculated the PSNR, SSIM, UIQM, and FID of the model in the absence of each proposed module. The results are shown in Table 4. The designed residual convolutional module can extract shallow features of underwater images, including texture and edge feature information. From Table 4, it can be seen that compared to the method without the residual convolutional module (w/o RCM), our complete method improves the PSNR, SSIM, and UIQM scores by nearly 3%, 3%, and 2%, respectively. Additionally, the FID value is reduced by approximately 12%. The results indicate that the residual convolutional module can effectively improve the performance of the network model. The multi-scale dilated convolution module in the generative network is aimed at increasing the receptive field, deepening the network, and extracting richer feature information. From Table 4, it can be seen that compared to the method without the multi-scale dilated convolution module (w/o MDCM), our complete method improves the PSNR, SSIM, and UIQM values by nearly 5%, 3%, and 3%, respectively. Additionally, the FID value is reduced by approximately 15%. The results indicate that the multi-scale dilated convolution module plays an important role in restoring image color and enhancing detail information. The feature fusion adaptive attention module aims to extract important features and reduce the influence of unimportant features. From Table 4, it can be seen that compared to the method without the feature fusion adaptive attention module (w/o FAAM), our complete method improves the PSNR, SSIM, and UIQM values by nearly 1%, 1%, and 1%, respectively. Additionally, the FID value is reduced by approximately 2%. The results indicate that the feature fusion adaptive attention module can effectively improve the quality of the images generated by our method.

The multi-scale feature extraction module aims to integrate features with different receptive fields, enabling the discriminator to perform multi-scale discrimination on the input feature maps. From Table 4, it can be seen that compared to the method without the multi-scale feature extraction module (w/o MFEM), our complete method improves the PSNR, SSIM, and UIQM scores by nearly 2%, 1%, and 1%, respectively. Additionally, the FID value is reduced by approximately 7%. The results indicate that the multi-scale feature extraction module can improve the performance of the adversarial network and thus enhance the overall network model performance. The color loss function is introduced to reduce the color distortion caused by enhancement. From Table 4, it can be seen that compared to the method without the color loss function (w/o Color loss), our complete method improves the PSNR, SSIM, and UIQM values by nearly 2%, 2%, and 1%, respectively. Additionally, the FID value is reduced by approximately 8%. The results indicate that combining the color loss function with traditional loss functions can reduce color bias in images and enhance their visual quality.
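The percentages quoted from Table 4 are relative changes between the complete model and each ablated variant. The text does not spell out the formula, so the following is one plausible reading, which assumes that the ablated variant serves as the baseline. The symbols \(M_{\text{full}}\) and \(M_{\text{w/o}}\) are introduced here for illustration, with \(M\) standing for PSNR, SSIM, or UIQM:

\[
\Delta_{M} = \frac{M_{\text{full}} - M_{\text{w/o}}}{M_{\text{w/o}}} \times 100\%,
\qquad
\Delta_{\text{FID}} = \frac{\text{FID}_{\text{w/o}} - \text{FID}_{\text{full}}}{\text{FID}_{\text{w/o}}} \times 100\%
\]

The sign is flipped for FID because a lower FID is better, so a positive \(\Delta_{\text{FID}}\) corresponds to the reported reduction.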

In summary, the residual convolutional module, multi-scale dilated convolution module, feature fusion adaptive attention module, multi-scale feature extraction module, and color loss function each contribute to the network's ability to enhance underwater images.

Conclusion

In this paper, we propose a generative adversarial network for underwater image enhancement. It consists of a generative network and an adversarial network. First, we design the residual convolution module, the multi-scale dilated convolution module, and the feature fusion adaptive attention module, and we construct the generative network from these modules. We then design a multi-scale feature extraction module and use it to construct the adversarial network. The generative network enhances underwater images, while the adversarial network indirectly improves the performance of the generative network. In addition, we introduce a color loss into the conventional loss function to better measure color distortion. We test the performance of our proposed method on the UIEB, EUVP, and UFO-120 datasets. Compared to other methods, our proposed method exhibits better color restoration and detail recovery on enhanced underwater images, and it achieves the highest PSNR, SSIM, and UIQM values and the lowest FID value. The results demonstrate that our proposed method outperforms the other methods compared in enhancing the quality and visual appearance of underwater images. Additionally, we conduct ablation experiments on the designed modules to further validate the effectiveness of the method.

Data availability

The datasets used in this article are publicly available and can be accessed at https://li-chongyi.github.io/proj_benchmark.html (UIEB), http://irvlab.cs.umn.edu/resources/euvp-dataset (EUVP), and https://irvlab.cs.umn.edu/resources/ufo-120-dataset (UFO-120).

References

Mitchell, D. et al. A review: Challenges and opportunities for artificial intelligence and robotics in the offshore wind sector. Energy AI 8, 100146 (2022).

Liu, C., Shu, X., Xu, D. & Shi, J. Gccf: A lightweight and scalable network for underwater image enhancement. Eng. Appl. Artif. Intell. 128, 107462 (2024).

Huang, Z., Wang, L. & Xu, L. Dra-net: Medical image segmentation based on adaptive feature extraction and region-level information fusion. Sci. Rep. 14, 9714 (2024).

Wang Zhongxing, Z. X. & Yuangui, Zhou. Review of artificial intelligence algorithms-based wind turbine condition monitoring and fault diagnosis techniques. Northeast Electr. Power Univ. 44, 42–51 (2024).

Peng, D., Ding, W. & Zhen, T. A novel low light object detection method based on the yolov5 fusion feature enhancement. Sci. Rep. 14, 4486 (2024).

Zhang Zhe, W. B. Short-term power prediction method of wind power cluster based on cbam-lstm. Northeast Electr. Power Univ. 44, 1–8 (2024).

Fan, G.-D., Fan, B., Gan, M., Chen, G.-Y. & Chen, C. P. Multiscale low-light image enhancement network with illumination constraint. IEEE Trans. Circuits Syst. Video Technol. 32, 7403–7417 (2022).

Zhang, D. et al. Hierarchical attention aggregation with multi-resolution feature learning for gan-based underwater image enhancement. Eng. Appl. Artif. Intell. 125, 106743 (2023).

Lin, S., Li, Z., Zheng, F., Zhao, Q. & Li, S. Underwater image enhancement based on adaptive color correction and improved retinex algorithm. IEEE Access 11, 27620–27630 (2023).

Zhuang, P., Li, C. & Wu, J. Bayesian retinex underwater image enhancement. Eng. Appl. Artif. Intell. 101, 104171 (2021).

Liu, Y. et al. Model-based underwater image simulation and learning-based underwater image enhancement method. Information 13, 187 (2022).

Zhou, J., Wang, Y., Zhang, W. & Li, C. Underwater image restoration via feature priors to estimate background light and optimized transmission map. Opt. Express 29, 28228–28245 (2021).

Sun, Q. & Redei, A. Knock knock, who’s there: Facial recognition using cnn-based classifiers. Int. J. Adv. Comput. Sci. Appl. 13, 9–16 (2022).

Le, N., Rathour, V. S., Yamazaki, K., Luu, K. & Savvides, M. Deep reinforcement learning in computer vision: A comprehensive survey. Artif. Intell. Rev. 55, 2733–2819 (2022).

Lauriola, I., Lavelli, A. & Aiolli, F. An introduction to deep learning in natural language processing: Models, techniques, and tools. Neurocomputing 470, 443–456 (2022).

Menon, A. & Aarthi, R. A hybrid approach for underwater image enhancement using cnn and gan. Int. J. Adv. Comput. Sci. Appl. 14, 6 (2023).

Li, Z. et al. A systematic survey of regularization and normalization in gans. ACM Comput. Surv. 55, 1–37 (2023).

Karras, T. et al. Analyzing and improving the image quality of stylegan. in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 8110–8119 (2020).

Li, Z., Xia, P., Tao, R., Niu, H. & Li, B. A new perspective on stabilizing gans training: Direct adversarial training. IEEE Trans. Emerg. Top. Comput. Intell. 7, 178–189 (2022).

Wang, J. et al. Ca-gan: Class-condition attention gan for underwater image enhancement. IEEE Access 8, 130719–130728 (2020).

Liu, X., Gao, Z. & Chen, B. M. Ipmgan: Integrating physical model and generative adversarial network for underwater image enhancement. Neurocomputing 453, 538–551 (2021).

Yang, M. et al. Underwater image enhancement based on conditional generative adversarial network. Signal Process. Image Commun. 81, 115723 (2020).

Wu, J. et al. Fw-gan: Underwater image enhancement using generative adversarial network with multi-scale fusion. Signal Process. Image Commun. 109, 116855 (2022).

Cong, R. et al. Pugan: Physical model-guided underwater image enhancement using gan with dual-discriminators. IEEE Trans. Image Process. 32, 4472–4485 (2023).

Xu, H., Long, X. & Wang, M. Uugan: A gan-based approach towards underwater image enhancement using non-pairwise supervision. Int. J. Mach. Learn. Cybern. 14, 725–738 (2023).

Guan, F., Lu, S., Lai, H. & Du, X. Auie-gan: Adaptive underwater image enhancement based on generative adversarial networks. J. Mar. Sci. Eng. 11, 1476 (2023).

Liu, Q. et al. Wsds-gan: A weak-strong dual supervised learning method for underwater image enhancement. Pattern Recogn. 143, 109774 (2023).

Lin, P. et al. Conditional generative adversarial network with dual-branch progressive generator for underwater image enhancement. Signal Process. Image Commun. 108, 116805 (2022).

Islam, M. J., Xia, Y. & Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robot. Autom. Lett. 5, 3227–3234 (2020).

Liu, S. et al. Adaptive learning attention network for underwater image enhancement. IEEE Robot. Autom. Lett. 7, 5326–5333 (2022).

Chen, Y.-W. & Pei, S.-C. Domain adaptation for underwater image enhancement via content and style separation. IEEE Access 10, 90523–90534 (2022).

Yan, H. et al. Uw-cyclegan: Model-driven cyclegan for underwater image restoration. IEEE Trans. Geosci. Remote Sens. 61, 1–17 (2023).

Liu, R., Jiang, Z., Yang, S. & Fan, X. Twin adversarial contrastive learning for underwater image enhancement and beyond. IEEE Trans. Image Process. 31, 4922–4936 (2022).

Chen, R., Cai, Z. & Cao, W. Mffn: An underwater sensing scene image enhancement method based on multiscale feature fusion network. IEEE Trans. Geosci. Remote Sens. 60, 1–12 (2021).

Panetta, K., Kezebou, L., Oludare, V. & Agaian, S. Comprehensive underwater object tracking benchmark dataset and underwater image enhancement with gan. IEEE J. Oceanic Eng. 47, 59–75 (2021).

Li, C. et al. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 29, 4376–4389 (2019).

Wang, Z., Bovik, A. C., Sheikh, H. R. & Simoncelli, E. P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612 (2004).

Panetta, K., Gao, C. & Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Oceanic Eng. 41, 541–551 (2015).

Funding

This work is partially supported by the Jilin Provincial Department of Education Project (JJKH20230125KJ, JJKH20250871KJ).

Author information

Contributions

Study conception and design: LZ, YL; data collection: LZ, YL, TZ; analysis and interpretation of results: LZ, YL; draft manuscript preparation: LZ, YL, TZ. All authors reviewed the results and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhao, L., Li, Y. & Zhong, T. A generative adversarial network with multiscale and attention mechanisms for underwater image enhancement. Sci Rep 15, 2787 (2025). https://doi.org/10.1038/s41598-025-86949-1

DOI: https://doi.org/10.1038/s41598-025-86949-1
