Abstract

This paper introduces a new probabilistic composite model for the detection of zero-day exploits targeting the capabilities of existing anomaly detection systems in terms of accuracy, computational time, and adaptability. To address the issues mentioned above, the proposed framework consisted of three novel elements. The first key innovations are the introduction of “Adaptive WavePCA-Autoencoder (AWPA)” for pre-processing stage which address the denoising and dimensionality reduction, and contributes to the general dependability and accuracy of zero-day exploit detection. Additionally, a novel “Meta-Attention Transformer Autoencoder (MATA)” for enhancing feature extraction which address the subtlety issue, and improves the model’s ability and flexibility to detect new security threats, and a novel “Genetic Mongoose-Chameleon Optimization (GMCO)” was introduced for effective feature selection in the case of addressing the efficiency challenges. Furthermore, a novel “Adaptive Hybrid Exploit Detection Network (AHEDNet)” was introduced which address the dynamic ensemble adaptation issue where the accuracy of anomaly detection is very high with low false positives. The experimental results show the proposed model outperforms the other models of dataset 1 in accuracy of 0.988086 and 0.990469, precision of 0.987976 and 0.990628, recall of 0.988298 and 0.990435, with the lowest Hamming Loss of 0.011914 and 0.009531, also, the proposed model outperforms the other models of dataset 2 in accuracy of 0.9819 and 0.9919, precision of 0.9868 and 0.9968, recall of 0.9813 and 0.9923, with the lowest Hamming Loss of 0.0209 and 0.0109, thus the proposed model outperformed the other models in detecting zero-day exploits.

Similar content being viewed by others

Introduction

Zero-day exploits are a critical form of cyber threat whereby vulnerabilities in software or hardware are utilized by an attacker before their detection by developers1. The window of opportunity that they create is very important and can be leveraged by malicious actors, since there would not be any patching or defence strategies. A zero-day vulnerability refers to the one that the vendor is unaware of and has not patched yet2. Due to these types of vulnerabilities, the attackers are able to conduct various malicious activities such as data theft, system compromise, and many others long before software developers can even possibly remediate the problem. Zero-day exploits are among the most difficult to recognize since there is no signature or pattern, as traditional security systems have3,4. As a result, traditional detection methods-for example, signature-based antivirus solutions and intrusion detection systems-can’t detect zero-day threats because they can’t recognize attacks that haven’t yet been documented. Figure 1 represents the detection of Zero-day exploit.

Application of hybrid approach-ensemble neural network techniques for feature extraction and selection may be one promising strategy in the detection of zero-day exploits5,6. The proposed model uses several ways of extracting important features from the data, such as a number of statistical measures, correlation analysis, and other domain-specific measures. Techniques like univariate feature selection and Extra Trees classifiers help identify the most significant features associated with zero-day attacks7. Feature selection after the feature extraction helps in getting more information with reduced dimensions, which most of the time would enhance the performance of the models8,9. This could be achieved using methods such as correlation matrices and importance scoring from ensemble methods. Among others, selected features train the ensemble models that may combine neural networks with other machine learning algorithms10,11. This can make use of labelled and unlabelled data while in the training phase, enhancing it to generalize for zero-day attacks seen so far12.

The difficulty with zero-day exploits is that, because they take advantage of unknown vulnerabilities and thus have no patches or available signatures, they’re hard to detect. Traditional methods of detection, such as signature-based methods or databases of known threats, won’t pick up a zero-day exploit13. Machine learning models, which are trained on data from previously detected exploits, can then be used for the identification of new attacks. However, the correct identification and prioritization of new, sophisticated threats using machine learning models often faces a problem14. This can lead to a number of different inaccuracies, such as false positives and false negatives. The premise of zero-day exploits seems to be one of those cat-and-mouse-game-like defences. While one vulnerability is being patched up, attackers are already digging for the next one to use. It requires full attention and an upgrade cycle of security measures. The consequences can be disastrous when the zero-day exploits have been successful. They allow an attacker to execute code remotely, steal sensitive data, disrupt critical systems, and much more15. Incidents such as the Stuxnet worm and Equifax breach have shown the terrible consequences. Since zero-day vulnerabilities remain unknown and unpatched, organizations can’t account for them in cybersecurity risk management or mitigation efforts. This puts the security teams at a disadvantage. The contributions of this research are summarized below:

-

Adaptive WavePCA-Autoencoder (AWPA) is proposed for enhancing pre-processing, incorporating noise reduction, PCA dimensionality reduction, and feature extraction in one go to improve general detection accuracy and reliability.

-

Meta-Attention Transformer Autoencoder-(MATA) is proposed to handle subtlety in feature extraction with the purpose of strengthening the model’s ability to detect previously unknown security threats with improved flexibility.

-

Genetic Mongoose-Chameleon Optimization (GMCO) has been proposed to solve the efficiency feature selection problems, which balance exploration and precision for maximum detection accuracy with high computational efficiency.

-

Adaptive Hybrid Exploit Detection Network (AHEDNet) has been designed to solve DynamicEnsembleAdaptation problems. The features of interest are dynamically updated so that the accuracy of the detection will always remain high while reducing false positives.

The context of the paper is organised as Literature Survey in Section "Literature survey"; Proposed Methodology in Section "Problem statement"; Results and Discussion in Section "Proposed methodology"; Conclusion in Section "Results and discussion".

Literature survey

In 2022, Barros et al.16 have proposed a new zero-shot method of learning, Malware-SMELL to classify malware using the visual representation. It did this by proposing a new representation space, i.e., S-Space, to estimate the similarity value between a pair of objects in Malware-SMELL. The enriched representation strengthened the class separation capability, which in turn made the classification procedure far less complex. Malware-SMELL attained 80% recall and outperformed other methodologies in the ratio of 9.58%, while being trained in a classification model only with good ware code, under training on real-world datasets in the paradigm of GZSL.

In 2023, Sarhan et al.17 proposed another advanced zero-shot learning technique afforded the evaluation of the abilities of ML-based NIDSs to detect zero-day attack scenarios. At the attribute learning step, the models of learning translated the features of network data into semantic attributes to distinguish between the known attacks and legitimate activities; the established models related known attacks to zero-day attacks, labelling them as malicious at the inference phase. The authors thus constructed the Zero-day Detection Rate (Z-DR) as a means to appraise the success of the learning model in identifying the unknown assault. The authors evaluated their method against two recent NIDS datasets along with two widely used MLalgorithms. Additional research by the authors revealed that low Z-DR attacks spanned higher Wasserstein Distances and differed in distribution from the other attack classes.

In 2022, Hairab et al.18 have aimed to test the robustness of the detection method in the case of an encounter with an attack on which it had not been trained. Therefore, they gauged how good the CNN classifier was at identifying malicious attack traffic particularly those hitherto unknown in the network, also referred to as Zero-Day attacks. In an effort to curb overfitting and manage the complexity of a classifier, several regularization methods such as HER, L1, and L2 were adopted. According to the evaluation results, regularization-based approaches provided superior performance in terms of all criteria compared to a canonical CNN model. Furthermore, the augmented CNN extended the capabilities by effectively detecting unseen intrusion situations.

In 2023, Tokmak and Nkongolo19 talked about the use of artificial neural networks or SAEs for feature selection, and LSTM-based zero-day threat classification. UGRansome dataset was pre-processed, and features were extracted using unsupervised SAE. The model was fine-tuned by supervised learning with the objective of increasing its discriminative ability. The important features separating the zero-day threats from normal system behaviour were inferred by studying the learned weights and the activations of this autoencoder. The classification was possible because of the reduced size of the feature set presented by these selected features. The SAE-LSTM gave a very high F1 score, precision, and recall values with a uniform distribution of values for the different attack categories. Also, the model was very predictive for the identification of different types of zero-day attacks.

In 2022, Nkongolo et al.20 have proposed a cloud-based technique to classify zero-day attacks by the UGRansome1819 dataset. In this paper, the core aim was the classification of probable unknown threats using the resources of clouds and machine learning approaches. Training and testing various machine learning algorithms through Amazon Web Services, comprising an S3 bucket and SageMaker, was done with an anomaly detection dataset which included zero-day attacks for the contribution in this study. In the proposed methodology, three machine learning algorithms Naive Bayes, Random Forest, and Support Vector Machine (SVM) are combined with Ensemble Learning techniques along with a Genetic Algorithm (GA) optimizer. Combining each classifier, these algorithms examined the dataset and ascertained the zero-day threat classification accuracy.

Habeeb and Babu21 have proposed a new approach by hybridizing two powerful optimization algorithms, namely the Whale Optimization Algorithm and Simulated Annealing, in the feature selection of NIDS. In this regard, the proposed WOA-SA methodology was designed to outperform previous ones by balancing the exploration capability of global and local improvement. Besides, Deep Learning techniques were adopted to improve feature extraction and classification accuracy for zero-day and new types of attacks with optimal DL models. The paper focused on an elaborate presentation of WOA-SA for feature selection and its practical implementation for optimizing the NIDSs. This research project aimed to reduce false alarms and computational complexity while maximizing the detection rate of zero-day attacks. For this purpose, a comprehensive investigation on original and optimal feature sets of the BOT-IOT 2020 dataset was conducted in this work using various deep learning techniques including Long Short-term Memory, Convolutional Neural Network, Recurrent Neural Network, and Deep Neural Networks.

In 2024, Cen et al.22 introduced Zero-Ran Sniff (ZRS)-an early zero-day ransomware detection method that, via a zero-shot learning model, detects zero-day attacks at an early stage. ZRS successfully utilized PE header functionality of executable files for identifying malware. It was further separated into two stages based on the auto-encoding network, which were AE-CAL and SA-CNN-IS. In the AE-CAL stage, self-encoding networks were used to extract the necessary characteristics of both known and unknown ransomware classes, and the ransomware was identified in the SA-CNN-IS phase. So far, it has not encountered any attempts to use zero-shot learning for early detection of zero-day ransomware. Experimental data shows that the proposed ZRS outperformed the conventional machine by a large margin.

In 2024, Alshehri et al.23 proposed a generic FL-based deep anomaly detection framework for energy theft detection that is useful, dependable, and private. In the proposed system, each user trained local deep autoencoder-based detectors using their private electricity usage data to iteratively create a global anomaly detector. They then shared only the parameters of their trained detectors with an EUC aggregation server. According, to experimental results from the previous section, this anomaly detector outperformed the supervised detectors. Unbeknownst to the consumer, this proposed FL-based anomaly detector identifies zero-day threats of electricity theft.

In 2024, Roopak et al.24 proposed an unsupervised approach-based algorithm for detecting zero-day DDoS attacks against IoT networks. Random projection geometrically maps high-dimensional quantities into a low number of dimensions, which were then selected as features to reduce network data dimension. The authors have utilized an ensemble model comprising K-means, GMM, and one-class SVM for unsupervised classification to identify the coming data as an attack or normal using a hard voting method. The proposed approach was subjected to rigorous testing against the CIC-DDoS2019 datasets. The proposed approach, in comparison to other cutting-edge unsupervised learning-based methods, achieved an accuracy of 94.55%.

In 2024, Nhlapo and Nkongolo25 have selected the UGRansome dataset to identify the Zero-Day and Ransomware attacks by training different subsets with various ML models. These showed that while SVM and NB modes did not reach 100% on any of the metrics, RFC, XGBoost, and the Ensemble Method achieved a perfect score on all of them: accuracy, precision, recall, and F1 score. This construed that a similar pattern was viewed in comparison with those findings regarding research on ransomware detection. For instance, in a ransomware detection study using the UGRansome dataset, Decision Trees were found to perform better than SVM and Multilayer Perceptron (MLP), with accuracies of 98.83%, precision of 99.41% [(missing value)], recall of [(missing value)], and F-measure of [(missing value)]. Another comparative study on six algorithms, including Decision Trees, RFC, and XGBoost, demonstrated that the models were quite efficient; each of them had an accuracy score around 99.4% and was suited for real-time detection scenarios.

In 2021, Zheng et al.26 presented the deep convolutional autoencoder (MR-DCAE) model for identifying unlicensed broadcasting that is based on manifold regularization. After optimizing the specially created autoencoder (AE) using entropy-stochastic gradient descent, the reconstruction errors during the testing phase were used to ascertain whether the signals received are permitted. This model create a similarity estimator for manifolds with different dimensions as the penalty term to guarantee their invariance during the back-propagation of gradients in order to increase the discriminativeness of this indicator. Theoretically, an upper bound can be guaranteed for the degree of consistency between discrete approximations in the manifold regularization (MR) and the continuous objects that motivate it.

In 2025, Zheng et al.27 presented a robust AMC technique that used the asymmetric trilinear attention net (Tri-Net) with noisy activation function. The asymmetric trilinear representation module in Tri-Net was designed to handle different radio signal channels and extract rich information to enhance generalization. The model was then assisted in adapting to variable SNRs by a hybrid coding module that uses an attention mechanism based on squeeze and excitation (SE) blocks. Lastly, the classification module generate the predicted modulation schemes.

In 2025, Daneshfar et al.28 suggested Elastic Deep AE to improve the robustness of theElastic Deep Multi-view Autoencoder with Diversity Embedding (EDMVAE-DE) technique. Additionally, to increase the diversity of particular representations across different perspectives, this approach incorporate an exclusivity constraint term, which boosts modeling consistency and diversity inside a single framework. This model included a graph constraint term to handle issues caused by neighbors that are improperly classified.

From the above study it is clear that in16 S-Space complexity limits scalability to larger datasets and diverse malware families, in17 Z-DR metric do notcapture subtle feature variations, leading to undetected zero-day attacks, in18 regularization may not fully address overfitting, especially with highly imbalanced attack datasets, in19 SAE-LSTM feature extraction might overlook rare or subtle zero-day attack patterns, in20 ensemble approach complexity was increase computational cost and delay real-time detection, in21 WOA-SA hybrid optimization introduce convergence issues, affecting NIDS performance in dynamic environments, in22 ZRS’s reliance on PE header features miss non-standard or obfuscated ransomware attacks, in23 FL-based anomaly detection struggle with data heterogeneity across distributed consumer datasets, in24 unsupervised approach misclassify benign but uncommon network behaviours as zero-day attacks, in25 RFC, XGBoost was less effective in handling evolving or highly sophisticated ransomware variants. Hence a novelty is need to over come these challenges.

Problem statement

-

Pre-processing Stage: Dealing with high-dimensional data with noise while maintaining important information for accurate detection of zero-day exploits.

-

Feature Extraction Stage: The feature extraction stage captures the subtle patterns and anomalies associated with zero-day exploits that sparse and not easily discernible from normal behaviour.

-

Feature Selection Stage: Finding the most relevant features that deliver detection accuracy while minimizing resource costs in terms of computations, especially in cases where attack vectors are evolving.

-

HybridSeqEnsembleNet-based Zero-Day Exploits Detection: Create a method for real-time detection that deliver accuracy with low false positives under a dynamic environment where characteristics of attacks are changing and require quick adaptation of the ensemble model.

For instance, previous studies have extensively investigated ensemble techniques like stacking, bagging, and boosting to improve anomaly detection by utilizing the strengths of multiple models; a clear connection between these and the Adaptive Hybrid Exploit Detection Network (AHEDNet) would demonstrate how its dynamic ensemble adaptation expands upon or outperforms these conventional methods, particularly in terms of lowering false positives and adjusting to changing threats. Similarly, hybrid optimization techniques like genetic algorithms, particle swarm optimization (PSO), and other nature-inspired strategies have been used for feature selection and parameter tuning in cybersecurity contexts; a comparison between the Genetic Mongoose-Chameleon Optimization (GMCO) algorithm and these techniques would highlight its special advantages in striking a balance between exploration and exploitation in high-dimensional data. Additionally, the theoretical foundation of the Adaptive WavePCA-Autoencoder (AWPA) and Meta-Attention Transformer Autoencoder (MATA) would be strengthened by linking them to relevant work on preprocessing and feature extraction methods, such as the application of wavelet transforms, PCA, and transformer-based architectures in cybersecurity.

Proposed methodology

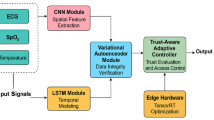

In order to mitigate issues associated with the pre-processing, feature extraction, feature selection, and detection in zero-day exploits, this manuscript proposed an innovative method that integrated advanced feature extraction techniques with strong feature selection methods. By utilizing stacked autoencoders for deep feature extraction as well as the multiple base algorithms of an ensemble neural network for improved accuracy for detection, this method is more effectively identify and utilize important features. This new hybrid framework improved detection rates and determined lower false positives, ensuring a more robust and adaptable defence against dynamic cyber adversaries. Figure 2 shows the Architecture of the proposed methodology.

Data acquisition

Data acquisition encompasses the crucial process of translating real-world signals into the digital realm, enabling visualization, storage, and analysis. Given that physical phenomena inherently manifest in the analog domain, the imperative arises for measurements in the tangible world, mandating subsequent conversion into the digital realm for comprehensive understanding and utilization.

Pre-processing stage

To overcome the denoising issues in the pre-processing stage a novel “Adaptive WavePCA-Autoencoder (AWPA)” was introduced in this approach. It tackles the challenge of high-dimensional data contaminated with noise by utilizing three distinctive methodologies. The first innovation, AWPA enhances the preprocessing stage by combining wavelet denoising, PCA-based dimensionality reduction, and autoencoding into a unified framework. This method ensures that only clean and relevant data is passed to subsequent stages, tackling the common issues of noise and redundancy in cybersecurity datasets. By isolating multi-resolution patterns using wavelet transforms, AWPA improves the signal-to-noise ratio, while PCA reduces the dimensionality of the data without compromising critical information. The autoencoder further denoises and compresses the data, ensuring that meaningful patterns are retained, which significantly improves the accuracy and efficiency of the detection system. The AWPA method is designed to enhance the preprocessing stage by addressing two key challenges—denoising and dimensionality reduction. This step is crucial in any anomaly detection system, especially when dealing with zero-day exploits, as it ensures that the model receives clean and relevant data for feature extraction and decision-making. First, it performs a Wavelet transform to decompose the data to isolate and reduce the noise through frequency components. Second, Principal Components Analysis (PCA) is used to perform dimensionality reduction on the denoised data and extracts the most critical features. Finally, an Autoencoder adapts to learn a global model effectively with higher accuracy, and ultimately performs a more compact yet efficient representation of the PCA features, while retaining the most meaningful information in the data set. This hybrid approach offers noise reduction, PCA dimensionality reduction, and feature extractions, which aids the overall accuracy and reliability of zero-day exploit detection. AWPA helps in transforming raw, noisy, and high-dimensional input data into a cleaner, more manageable format for the subsequent layers of the detection framework. This preprocessing ensures that the model can focus on identifying exploit patterns rather than being overwhelmed by irrelevant or noisy data, thereby improving the overall detection performance. The more details on this technique will be explained detailed in further section.

Wavelet transform

Wavelet transformation is effective in evaluating tiny waves. It is used for many purposes that include quick computation, pattern recognition, noise reduction, data compression, and shifting and scaling signals to change the temporal extension.

The basic working process of wavelet transform is shown in the Fig. 3. The Wavelet Transform compensates for high-dimensional data with noise by separating the data into different frequencies. The Wavelet Transform decomposes the data into different scales, which allows us to separate the high frequency noise from the low frequency signal. Then, to threshold the wavelet coefficients enables to more accurately suppress the noise while retaining critical data as it relates to detection. This ability to denoise the signals and retain important information across multiple scales aids one in the ability to better identify subtle non-patterns and anomalous behaviours associated with exploits. Additionally, the Wavelet Transform effectively suppresses non-significant statistical behaviours and work to augment the data quality, thus improving detection capabilities. To describe the wavelet transform process for handling high-dimensional data with noise in the decomposition is shown in the Eq. (1):

where \(\psi (t)\) is the wavelet function, \(a\) is the scale parameter, \(b\) is the translation parameter, and \({\psi }^{*}\) is the complex conjugate of the wavelet function. For discrete signals, the discrete wavelet transform will typically be represented as in Eq. (2):

where \({\psi }_{j,k}[n]\) is the discrete wavelet function at scale \(j\) and position \(k.\) It performs thresholding on the wavelet coefficients to suppress noise. A common thresholding function is represented in the Eq. (3):

where \(\lambda\) is the threshold value. This equation eliminates all coefficients that are lower than the threshold (they become zero), and thus it has reduced noise. To recover the signal from the thresholded coefficients, then it invokes the inverse wavelet transform given in Eq. (4):

where \({W}_{x}\left(a,b\right)\) stands for the thresholded wavelet coefficients. These equations collectively describe the action of decomposing, thresholding, and reconstructing the signal to handle noise and retain meaningful features for reliable zero-day exploit detection. The wavelet component of AWPA is used to capture multi-resolution details in the data. In the context of cybersecurity, network traffic or system behavior data can have multi-scale patterns, making wavelet transforms particularly useful for detecting irregularities. They help isolate useful features from noise, ensuring that the model is trained on more informative inputs.

Principal components analysis (PCA)

A method of statistics referred to as principal component analysis (PCA) separates a collection of variables into a smaller collection of principal components (PCs), therefore the dimensionality of the data set is reduced.

The Fig. 4 shows the basic structure of PCA. As discussed earlier, PCA is a great tool to lower dimensional feature space of high-dimensional data wherein the data is transformed into a different set of co-ordinates known as principal components. Every component is an aggregation of the initial characteristics, which is arranged according to the degree of variance from the data. Mathematically, for PCA the eigenvectors and the eigenvalues of the covariance matrix are then calculated. \(\Sigma\) of the data is expressed in Eq. (5):

where, \({x}_{i}\) are the information, hence put together a series of contexts below that contain the data points and information that wanted to pass across. \(\underline{x}\) is the mean vector. The principal components are the first eigenvectors gotten from the first largest eigenvalues. Thus, by concentrating on principal components, the PCA technique excludes noise and preserves the maximum variance that will in turn help detect meaningful correlation with the zero-day exploits and improve their detection rates. PCA is employed to reduce the dimensionality of the feature space. This is important for speeding up model training and inference, as well as improving detection accuracy. By reducing the number of features, PCA helps the system focus on the most influential characteristics of the data, improving the signal-to-noise ratio.

Autoencoders

A particular kind of neural network called an autoencoder is able to decompress and then reassemble text, pictures, and other data. Three layers comprise an autoencoder: encoder. Code. Breaker.

The basic structure of autoencoders is shown in the Fig. 5. Autoencoders are complex neural networks that specifically have been developed for the purpose of encoding and decoding data sets. They consist of two main parts: the encoder and the decoder. The encoder function maps the input data to a lower-dimensional manifold space, thus removing run-time noise and concentrating only on the important aspects. This compression brings out the relevant patterns and structures that are in the data. The decoder then converts this compressed representation back into data, trying its best to replicate the input data. This reconstruction process allows the important information to be retained as much as possible while reducing the effects of noise. Autoencoders improve how zero-day exploits are detected since by maintaining key features and filtering out other noises, it becomes easier to identify complex patterns of attacks. To explain how autoencoders work, operating in learning effective representation of data as well as dealing with noise, the encoder transforms the input \(x\) into the latent and the output space \(x^{\prime }\) in the latent space. \(z\) using a function \(f\) as represented in Eq. (6):

where, \({\theta }_{e}\) represents variables of the encoder network. The decoder reconstructs the input \(\widehat{x}\) from the latent representation \(z\) using a function \(g\) which shown in Eq. (7):

where, \({\theta }_{d}\) indicates the hyper-parameters of the decoding neural network. The reconstruction loss estimates the model by comparing it with an original input. \(x\) and the reconstructed output, where the latter is the converted document \(\widehat{x}\). Commonly used loss functions include Mean Squared Error (MSE) which shown inthe Eq. (8):

where \(n\) is the number of samples, and \({||\bullet ||}^{2}\) denotes the squared Euclidean distance. The overall objective of the training an autoencoder is to minimize the reconstruction loss as described in the Eq. (9):

This optimization process makes sure that the networks learn to encode and decode the information in an optimal manner and selectively ignore the noise. To further improve noise handling, regularization techniques such as dropout or L1/L2 regularization may be applied to the encoder or decoder layers as shown in the Eq. (10):

where, \(\lambda\) is the regularization strength and \({\theta }_{k}\) represents the weights of the network. These equations collectively describe the process of encoding, reconstructing, and optimizing data in autoencoders to achieve efficient feature extraction and noise reduction. The autoencoder is used in the AWPA to further enhance feature extraction by learning a compact, low-dimensional representation of the input data. This component helps in denoising and reducing the input size without losing critical information.

Feature extraction

After pre-processing stage, the output is taken as an input for the feature extraction in which a novel “Meta-Attention Transformer Autoencoder (MATA)” was introduced in this approach in order to solve the subtlety issue in the feature extraction stage. It solves the problem of recognizing complex patterns and outliers inherent in zero-day attacks with integrated approaches. The second innovation, MATA, advances feature extraction by addressing the subtlety issue in anomaly detection. Zero-day exploits often exhibit subtle, complex, and variable patterns that evade traditional methods. MATA leverages a meta-attention mechanism to dynamically focus on the most relevant features in the data, adapting its focus to changing contexts. By integrating a transformer architecture, MATA captures long-range dependencies and intricate relationships, making it particularly effective for identifying multi-step attack sequences. The autoencoder component further abstracts and compresses the features, helping isolate exploit-relevant signals even when masked by normal system behavior. This innovation enhances the model’s ability to detect both known and novel threats, significantly improving its adaptability and flexibility. The MATA method aims to address the subtlety issue in feature extraction, enhancing the model’s ability to detect new, previously unseen zero-day exploits. Detecting such exploits requires the model to not only recognize known attack patterns but also to generalize well to novel threats. First, the Meta-Attention Autoencoder is designed to reconstruct data while utilizing attention mechanisms to emphasize features and alleviating the noise which helps capture enhanced details of anomalies. Next, the Transformer with Self-Attention processes these encoded features for the detection of relevant features’ weights and to identify relations that could indicate potential zero-day exploitations. Finally, Few-Shot Meta-Learning allows the framework to quickly recognize new, previously unseen patterns with a few labelled samples, enhancing its capacity for recognizing rare and sparse anomalies efficiently. This combined approach improves the model’s ability and flexibility to detect new security threats that are hitherto unknown to the system. The more details on these techniques will be explained in further section. MATA enhances the model’s ability to extract meaningful features from complex and subtle data. By focusing on the most relevant information using attention mechanisms, the model is better equipped to detect both known and novel security threats, improving the adaptability of the overall detection system.

Deep autoencoders with attention mechanisms

Six distinct activation functions are used to build a deep multiple auto-encoder with attention mechanism network. These functions extract various defect features and adaptively assess each DAE’s contribution.

Figure 6 displays the basic structure of Deep Autoencoders with Attention Mechanisms. This is very important for detecting zero-day exploits because deep autoencoders incorporated with attention mechanisms improve the model’s ability to learn. In simple terms, the objective of constructing an autoencoder is to minimize the dimensionality of the data and then learn how it is reconstructed. Introducing the attention mechanism to the model lets it pay more attention to something in the input data, leaving less attention to other parts or increasing attention to features of the input data that contribute least to the reconstruction error. This has the advantage in pointing out areas that have small variations, which do not be detected by autoencoders using the ordinary approach. By focusing on these critical features, the model identifies strange and rare outliers which, in this case, represent zero-day exploits, thus enhancing the detection capability in general. Attention mechanism computes a set of attention weights over input elements, when it is combined with stacked denoising autoencoders, it enables the network to pay attention to subtle patterns and anomalies in the input data. \(\alpha\) for each feature \({x}_{i}\) in the input data \(X.\) These weights are used to weigh the important features when encoding as described in Eq. (11):

where, \(score({x}_{i})\) is an importance of feature function. \({x}_{i}\) based on its contribution to the reconstruction error. The attention weights \({\alpha }_{i}\) bring out the characteristics that have the most variance, and thus making it easier to spot the subtle abnormalities. The use of an autoencoder in MATA continues the feature extraction process but with a focus on learning a compressed and more abstract representation of the input data. This is useful for detecting hidden patterns in data and distinguishing between normal system behavior and potential attacks. The use of an autoencoder in MATA continues the feature extraction process but with a focus on learning a compressed and more abstract representation of the input data. This is useful for detecting hidden patterns in data and distinguishing between normal system behavior and potential attacks.

Transformers with self-attention

Self-attention turns the input sequence into three vectors: query, key, and value. To create these vectors, the input goes through a linear transformation. The attention process then figures out a weighted sum of the values. It does this by looking at how similar the query and key vectors are to each other. Transformers are effective in capturing long-range dependencies and subtle relationships between features. In the context of zero-day exploit detection, the transformer helps in modeling complexinteractions and dependencies in the input data, which might be crucial for identifying subtle anomalies that would go undetected by traditional methods.

The basic structure of transformers with self-attention is shown in the Fig. 7. Transformers use self-attention to figure out what matters most in data. This self-attention looks at each part of the data in relation to all the other parts. It helps the model zero in on what’s important and ignore what’s not. This skill is key to spotting faint scattered signs, like those of new unknown threats. The self-attention process creates scores that show how different parts of the data connect. This lets the model catch odd things that do not be obvious at first glance. This method works well with big complex data sets where important clues are spread out. It studies how all the pieces fit together to find strange rare changes that signals a new kind of attack. To explain how transformers use self-attention mechanisms to assess feature importance and spot subtle patterns, the self-attention mechanism calculates the output for each feature \({x}_{i}\) as a weighted sum of all features. The weights in this calculation are the attention scores \({a}_{ij}\), which Eq. (12) details:

\({V}_{j}\) represents the value vector linked to feature \({x}_{j}\). This equation combines information from all features \({x}_{j}\), weighted by their attention scores \({a}_{ij}\), enabling the model to concentrate on significant features and identify subtle patterns associated with zero-day exploits.ransformers are effective in capturing long-range dependencies and subtle relationships between features. In the context of zero-day exploit detection, the transformer helps in modeling complex interactions and dependencies in the input data, which might be crucial for identifying subtle anomalies that would go undetected by traditional methods.

Few-Shot learning with meta-learning

Few-shot learning combined with meta-learning allows models to rapidly learn from a small number of examples by utilizing prior knowledge gained from various tasks. While meta-learning prepares models to adjust effectively to new tasks, few-shot learning emphasizes the ability to generalize from minimal data. This method is particularly beneficial in situations where labelled data is limited.

Figure 8 shows the working process of Few shot learning with Meta learning. Few-shot learning with the help of meta-learning methods is meant for addressing situations, where there are very few labelled examples available, a common problem in zero-day exploits detection. Meta-learning models such as Model Agnostic Meta Learning (MAML) are designed to quickly adjust themselves to unseen tasks using small amounts of data. These models utilize knowledge acquired from a broad range of in related tasks primarily to generalize better on subtle and sparse patterns that characterize zero-day exploits. This approach enhances the system’s ability to detect rare anomalies by rapidly adapting to new patterns after only some few examples thereby improving its proficiency in spotting previously undetected threats much significantly leading to an increase in detection capabilities. Few-Shot Learning with Meta-Learning in the aspects of Few-Shot Learning which particularly focused on Model-Agnostic Meta-Learning (MAML) which tries to learn an initial set of model weights \(\theta\) such that by taking a few gradient steps, this is get good generalization for a new task. For brevity, this is typically abbreviated to an Eq. (13):

where, \({T}_{i}\) for indicating a single task sampled from the distribution \(p(T).\) \({L}_{{T}_{i}}\) is the loss for task \({T}_{i}\). \(\theta\) are the model parameters to be enforced. Where \(\alpha\) is a learning rate for adaptation to task \(i\). Once the optimized meta-learned parameters \({\theta }^{*}\), belonging to a task \({T}_{new}\) after seeing it for \(K\) training steps, using Eq. (14) the model generalizes on new tasks as:

where, \({\theta }_{new}\) refers to the adapted parameters for a new task \({T}_{new}\). \({L}_{{T}_{new}}\left({\theta }^{*}\right)\) is the new loss in terms of a function. These equations describe how the meta-learning model is optimised so it quickly generalises to new few-shot tasks useful for zero-day exploitation detection. The meta-attention component improves the focus of the model on the most salient features during the feature extraction process. By applying attention mechanisms in a meta-learning context, the system can dynamically adjust which features are given more weight based on their relevance to the task at hand. This is particularly useful in cybersecurity, where new, subtle attack patterns might emerge that are distinct from historical threats.

Feature selection

After the feature extraction stage, the output will be considered as an input for the feature selection stage, in which to overcome the efficiency challenges in the feature selection stage a novel “Genetic Mongoose-Chameleon Optimization (GMCO)” was introduced in this approach. It solves the problem of selecting highly discriminative features with respect to an attack while minimizing costs in terms of computational resources, especially during periods when new types or versions of attacks become common. The third innovation, GMCO introduces a novel biologically-inspired optimization algorithm to improve feature selection. GMCO balances exploration and exploitation in the feature space by combining mongoose-inspired aggressive searching with chameleon-inspired adaptive strategies. This dual mechanism ensures that the system identifies the most informative features, reducing computational overhead while maximizing detection accuracy. The chameleon-like adaptability of GMCO is particularly valuable in cybersecurity, where data distributions and exploit characteristics can change rapidly. By selecting only themost relevant features, GMCO improves computational efficiency and ensures the system’s scalability, making it suitable for real-time applications. GMCO is introduced as a novel optimization algorithm aimed at improving feature selection, which is critical for handling large, complex datasets efficiently. By selecting the most relevant features, GMCO balances the trade-off between computational efficiency and detection accuracy. GMCO expects the use of Genetic Algorithms (GA) to help explore a large search space, experimenting with many different subset features. The Dwarf Mongoose Optimization Algorithm (DMOA) subsequently increases the search in a bid of better precision by improving GA discovered quite certain subsets. Relying on the Chameleon Swarm Algorithm (ChSA) as a means of dynamically adjusting to shifts in the data landscape, this approach maintains feature selection validity and efficacy with respect to increasingly sophisticated attack vectors. By combining these distinct tools, this hybrid methodology balances the need for exploration and precision while maximizing detection accuracy and computational efficiency. GMCO optimizes the feature selection process within the detection framework, ensuring that the model is not overwhelmed by irrelevant or excessive features. By improving the efficiency of feature selection, GMCO enhances the computational speed of the detection system and ensures that the model remains adaptable to varying data inputs. The more details on these techniques will be explained in further section.

Genetic algorithms (GA)

The Genetic Algorithm (GA) for short (specifically a kind of evolutionary algorithm), mimics the selection operator from natural evolution. Genetic algorithms are often used to find high quality solutions for optimisation and search problems which use biologically inspired operators such as mutation, crossover and selection.

The basic structure of Genetic Algorithm is represented in the Fig. 9. GA optimizes the search of features too, but with lower computational cost. The GA works by emulating natural selection, iteratively evaluating candidate feature subsets according to their impact on detection accuracy by concentrating on the high-quality features, GA reduces the complexity of detection model which results in reduced computation. Not only does this streamline the process, but it also provides an additional layer of flexibility as these relevant new features is selected in response to trending changes in attack vectors. This way, GA guarantees that the model is accurate and robust with time as well a fair trade-off to get an optimal solution for both detection accuracy & resource utilization. To introduce the main idea of feature selection with GA, let fitness function to be as follows, to evaluate how well each candidate feature subset \({S}_{i}\). The aim is to find the optimal subset of actionable features that minimizes computational operational time and ensures a high rate of detection. The fitness function is an expression as Eq. (15):

where, \({S}_{i}\) is a subset of features. \(Accuracy\left({S}_{i}\right)\) conceive to be represent detection accuracy which subset \(|{S}_{i}|\) is the number of characteristics in subset \({S}_{i}.\) This will be equal to the number of features available, denoted by \(\mid F\mid .\) \(\alpha\) and \(\beta\) is the trade-off is balanced by the weighting factors \(\beta\). A function that the algorithm uses to pick parent feature subsets \({S}_{i}\) and \({S}_{j}\) according to their fitness scores. The offspring \({S}_{new}\) generated through crossover are new subsets of the population as shown in the Eq. (16):

There, \(Crossover\) refers to a method for merging attributes belonging to \({S}_{i}\) and \({S}_{j}\) such that they yield a range that is different from their parents. In order to keep variety among members of the community as well as producing alternative possibilities, mutation activities have been included in the descendants according to Eq. (17):

where there is a will there is away, \(Mutate\) is used to generate \({S}_{mut}\), developed by \({S}_{new}\) with some randomly altered parameters and will then include more applicable combinations of features within it. These equations explain how one selects features using GAs and optimizes detection accuracy while minimizing computing costs at the same time. The genetic algorithm is used for its ability to explore large search spaces and converge toward optimal solutions. In feature selection, it helps identify the most relevant features for detection by evaluating multiple combinations and selecting the best-performing subsets.

Dwarf Mongoose optimization algorithm

The dwarf mongoose optimization algorithm is a metaheuristic algorithm designed to address optimization issues by imitating the foraging habits of dwarf mongooses. The social structure and foraging behaviours of dwarf mongooses in their native habitat served as the basis for the development of the algorithm.

The working process of Dwarf Mongoose Optimization Algorithm is described in the Fig. 10. The Dwarf Mongoose Optimization Algorithm seeks to improve relevant variable search efforts by paying special attention to promising regions in the feature space. First, the algorithm identifies candidate feature subsets for evaluation, with regards to their initial performance. Then, Dwarf Mongoose Optimization Algorithm carries out a selective search based on these regions of interest, consequently modifying the set of features selected for enhancement of identification accuracy. This process minimizes the inclusion of irrelevant or redundant features, thereby reducing computational costs. Further still, its versatility enables rapid adjustments during datasets’ alterations making it particularly useful when the attack vectors are shifting dynamically. Therefore, through refinement and optimization of the selection process Dwarf Mongoose Optimization Algorithm achieves both precision and optimization of zero-day exploits detection. For the purpose of elucidating how Dwarf Mongoose Optimization Algorithm tackles feature selection for identifying zero-day attacks, The Dwarf Mongoose Optimization Algorithm evaluates every possible set of features by means of a fitness function \(F(S),\) which is generally defined according to the Eq. (18):

Here, \(S\) depicts a feature subcollection. \(Accuracy(S)\) signifies the precision of detection when applying the fraction \(S.\) \(Cost(S)\) signifies the computational resource cost associated with \(S.\) \(\alpha\) and \(\beta\) are weighting factors that balance the importance of accuracy and cost. Dwarf Mongoose Optimization Algorithm tightens prospective feature subsets by concentrating the search around them. This is expressed mathematically with the help of Eq. (19):

The feature subset at cycle t is represented by \({S}_{t}\). The gradient of the fitness function \(\nabla F\left({S}_{t}\right)\) tells us how \(F\left({S}_{t}\right)\) is changing. The size of the step deciding how much the update changes is denoted by \(\lambda .\) Dwarf Mongoose Optimization Algorithm automatically updates a selection of features based on some evolving dataset, so it remains meaningful ‘forever’. In Eq. (20) this adjustment is written out as:

Here, the current and past appraisals’ impact is moderated through the application of \(\gamma\) that helps this algorithm move along with evolving kinds of assaults. In summary, these mathematical expressions showcase Dwarf Mongoose Optimization Algorithm’s ability to keep an excellent balance between the precision of recognition and computation expenses thus making it possible for effective selection of features in changing conditions.

Chameleon swarm algorithm

Chameleon Swarm Algorithm (ChSA) is optimization methodology using Chameleons adaptive and dynamic behaviour in a natural environment as the main source of its inspiration. The ability of Chameleons to change colour in conjunction with their surroundings makes them adaptable mechanisms that are precise and is respond dynamically to stimuli from the environment.

Figure 11 shows the basic structure of ChSA. The ChSA presents a solution to the difficulty in locating relevant features to ensure detection accuracy in the face of evolving attack vectors by exploiting the adaptive properties of chameleons. ChSA adjusts its exploration and exploitation phases in a versatile manner so that it searches for optimal features in high-dimensional searches. This adaptability allows the algorithm to respond to changes in attack vectors, while still maintaining high detection accuracy. Furthermore, the suitable search mechanism of ChSA minimizes resource costs by only probing into the most promising features of the feature space; reducing the need for unrestricted searching. The combination of adaptability and efficiency is well suited to the challenges of evolving cybersecurity. To discuss how the ChSA addresses the challenge of establishing relevant features while minimizing the costs of computational resources, the algorithm adjusts each chameleon’s (representing a potential solution) position in the search space, based on its current position, velocity, and adaptively controlled exploration–exploitation trade-off in Eq. (21):

where, \({X}_{i}\left(t\right)\) represents the position of chameleon \(i\) at time \(t\). \({V}_{i}(t)\) represents the velocity of chameleon \(i\) at time \(t\). Each chameleon’s velocity was adjusted to provide a balance between exploration and exploitation, focusing the search thoroughly and intentionally as expressed in Eq. (22):

where, \(\omega\) is inertia weight, which balance between exploitation and exploration is regulated. \({c}_{1}\) and \({c}_{2}\) are acceleration coefficients. \({r}_{1}\) and \({r}_{2}\) are values which are random and uniformly distributed in the interval [0, 1]. \({P}_{best,i}\left(t\right)\) will be in the form of \(i\) up to time \(t\). \({G}_{best}\left(t\right)\) is the optimum position for the swarm that has been found out during the execution of the program. The fitness function compares the efficiency of selected features for relevance in detection accuracy and computation time. A typical fitness function is expressed in the Eq. (23):

where, \(A\left({X}_{i}\right)\) is the detection accuracy for the feature set \({X}_{i}\). \(C\left({X}_{i}\right)\) is one of the issues with this approach is its computational complexity associated with \({X}_{i}\). \(\alpha\) and \(\beta\) are factors for accuracy-cost trade-off. ChSA includes an adaptive mechanism where the exploration–exploitation balance is adjusted dynamically based on the diversity of solutions in the swarm as described in the Eq. (24):

where, \(\omega \left(t\right)\) is, thus,the inertia weight adopted at time \(t\) to be adjusted. \(D(t)\) conceives the about diversity of the swarm at time \(t\). \({D}_{max}\) is the general degree of polymorphism which characterizes the population is identified as being the measure of diversity at its maximum. These equations describe how ChSA modifies and makes the search efficient with an accurate detection rate depending upon the system. This hybrid mechanism adapts the genetic algorithm by introducing elements inspired by mongoose and chameleon behaviors. The mongoose represents a proactive search for optimal solutions, while the chameleon adapts its strategies based on environmental factors, allowing GMCO to adapt to varying conditions in the data. This flexibility is crucial when dealing with diverse and dynamic security data.

HybridSeqEnsembleNet-based zero-day exploits detection

After the completion of feature selection stage, the output was taken for the detection purpose as an input, in which to overcome the DynamicEnsembleAdaptation challenges in the detection stage related to zero-day exploits a novel “Adaptive Hybrid Exploit Detection Network (AHEDNet)” was introduced in this approach. AHEDNet addresses the dynamic ensemble adaptation challenge, allowing the system to adapt to changing conditions in the data and continuously update the features of interest to improve detection accuracy. The fourth and final innovation, AHEDNet addresses the critical challenge of dynamic ensemble adaptation. AHEDNet combines the strengths of multiple models, such as CNN, GRU, RNN, and LSTM, to create a hybrid system that dynamically adapts to evolving threats. By continuously updating its ensemble configuration and feature selection, AHEDNet ensures high detection accuracy while reducing false positives. This adaptability is crucial for maintaining the system’s relevance and effectiveness in the face of emerging zero-day exploits. Additionally, its ability to balance high detection rates with low false positive rates minimizes alarm fatigue, a common issue in cybersecurity environments. It tackles the real-time detection in dynamic surroundings using GRU-CNN for pattern-recognition in sequences, RCNN-BiLSTM for context-analysis and an Adaptive Ensemble Neural Network, for dynamic model switching. AHEDNet is flexible to the changing characteristics of an attack by adjusting their sub-network’s contributions according to the real-time data feeds. The hybrid nature of AHEDNet enables it to combine the strengths of multiple models or classifiers, creating an ensemble that adapts to new threats. This approach helps ensure that the model remains accurate over time as new zero-day exploits emerge. This ensures high detection accuracy while at the same time reducing the possibilities of false positives since the network keeps on updating the chosen features of interest. AHEDNet continually updates its feature selection process, ensuring that the model is always focused on the most relevant and current features. This adaptability is essential in cybersecurity, where the landscape of threats evolves rapidly. Thus, adaptability of the ensemble enables AHEDNet to remain effective in spite of a dynamic environment and changing attack vectors. AHEDNet ensures that the system maintains a high detection rate while minimizing false positives. This is critical in practical scenarios where minimizing alarm fatigue and ensuring accurate threat identification are key priorities. The more details on this technique will be explained in further section.

Adaptive ensemble neural network

Neural networks of the adaptive ensembles class are used in the event of dynamic environments. That is what they offer as a unique learning experience online learning. Neurons the internal parameters of which are defined by several factors, consists of the following: network structure modification, weight modification and neuronal properties’ manipulation.

The basic structure of adaptive ensemble neural network is displayed in the Fig. 12. The Adaptive Ensemble Neural Network improves real time detection because of its adaptive weighting of its model’s part that constantly change. In case of introduction of a new type of attack, the gating network adjusts to the most accurate base models so as to keep the ensemble relevant. This framework mitigates the false positive probability since gating network eliminates unrelated patterns and noise, giving only the most significant models their weights on the final decision of the Adaptive Ensemble Neural Network model. This employ ability allows Adaptive Ensemble Neural Network radar to adapt to changing attack patterns in real-time without necessary retraining, thereby achieving high detection rates in ever changing settings. It denotes the outputs of the base models to be \({O}_{1},{O}_{2},{O}_{3},\dots ,{O}_{n}\), and the weights of the parameters which are given by the gating network be \({w}_{1},{w}_{2},{w}_{3},\dots ,{w}_{n}\). The final output \({O}_{final}\) is given by in the Eq. (25):

where the weights \({w}_{i}\) that change their value in dependence to the input features which are currently set in the system. Such architecture keeps the AENN updated to the changing environment reducing false alarms but at the same time boosting the detection rate.

CNN-GRU

A CNN-GRU model with an attention mechanism helps predict load and power prices in power systems. This approach aims to create an effective energy storage policy while tackling the problems of limited historical data and the intricacies of previous deep learning methods when dealing with long sequences. What’s more, the model boosts the accuracy of the prediction.

Figure 13 illustrates the working process of CNN-GRU. Annoying real-time detection in dynamic settings, the CNN-GRU method leverages the hasty detection of Convolutional Neural Networks (CNNs) and the controllable propagation capability of Gated Recurrent Units (GRUs). CNNs as a result are great in spatial features where they identify patterns of the data that indicate an attack. While, the GRUs are capable of handling sequential data by identifying the temporal properties and dynamic patterns of the attacks. This combination enables the CNN-GRU model to learn from new data and adjust quickly on new type of attacks accordingly. Moreover, long-term memory offered by the GRU makes a classifier less likely to produce false positives, as all the noise is blocked. Altogether, working in parallel, CNN-GRU achieves reliable, low delay detection that respond to the shifting of threats in real-time mode. To explain how the CNN-GRU approach tackles real-time detection with precision and few false alarms, the convolutional layer in CNN pulls out spatial characteristics from the input data as shown in Eq. (26):

where, \({F}_{l}\left(i,j\right)\) stands for the feature map output at position \((i, j)\) in layer \(l\). \(X\) represents the input data. \(K\) signifies the convolutional kernel/filter. \(b\) denotes the bias term. \(\sigma\) indicates the activation function (e.g., ReLU). The GRU handles sequential data to capture temporal dependency and updates according to Eq. (27):

where, \({z}_{t}\) is the update and reset gates. \({h}_{t}\) is the hidden state at time \(t.\) \({x}_{t}\) is the input at time \(t.\) \(W, U\) and \(b\) are weights and biases. Combining CNN and GRU it gets in the Eq. (28) form:

where, \(F\) signifies the CNN’s feature map output. \(H\) depicts the GRU’s series of concealed states. After passing through CNN and GRU such that it reaches an Eq. (29):

where, \(\widehat{y}\) is the predicted class probabilities. \({W}_{c}\) and \({b}_{c}\) are the weights and biases of the final classification layer. These mathematical representations explain the methods by which CNN extracts spatial features while GRU captures temporal dependencies to achieve accurate real-time detection with fewer false positives.

RCNN-BiLSTM

It is a BiLSTM (Bi-directional Long Short-Term Memory)-RCNN (Region based Convolutional Neural Networks) that works like the above network. This is the source of where it was published from. Using Deep Learning Model with Attention Mechanism for ECG Based Psychological Stress Detection.

Figure 14 shows the basic structure of RCNN-BiLSTM. The combination of Region-based Convolutional Neural Networks (RCNN) and Bidirectional Long Short-Term Memory (BiLSTM) frameworks improves real-time detection in variable environments through the RCNN-BiLSTM algorithm. Extracting spatial features out of segmented regions of interest is what RCNN does effectively thus enabling the identification of unique attack patterns. However, it is through BiLSTM taking these features in time sequence that one will be able to note both previous and subsequent points which are crucial when understanding changing characteristics of an attack. Therefore, this model promptly responds even to new threats because it analyses spatial characteristics and temporal dependencies at once. With its ability to effectively adjust to changing patterns of attacks and having low false positives, the RCNN-BiLSTM algorithm offers precise detection statistics by combining detailed feature extraction from RCNN with context analysis from BiLSTM. Real-time detection problems are addressed by the RCNN-BiLSTM approach, in which the RCNN extracts features from the region of interest (ROIs) as shown in Eq. (30):

Stated as, \({P}_{i}\) is the given region proposal set. \(X\) is an example of an input. The extracted features do capture temporal dependencies that are processed by the BiLSTM and updated in Eq. (31):

where, \({h}_{t}^{f}\) is the forward hidden state at time \(t\), \({h}_{t}^{b}\) is the backward hidden state at time \(t\). \({LSTM}_{f,b}\) represents the forward and backward of the LSTM cell. \({h}_{t}\) is the combined hidden state of the both directions. After processing through RCNN and BiLSTM the final classification is expressed in the Eq. (32):

where, \(\widehat{y}\) is the predicted class probabilities. \({W}_{c}\) and \({b}_{c}\) are the weights and biases of the final classification layer. These equations illustrate how RCNN-BiLSTM leverages detailed feature extraction and temporal context to adapt to changing attack patterns, achieving accurate real-time detection with low false positives. AHEDNet operates as the final layer of the detection framework, where its dynamic, adaptive nature allows it to integrate the outputs of the previous stages (AWPA, MATA, and GMCO) and make accurate, real-time decisions about the presence of zero-day exploits. By continuously refining its understanding of what constitutes a threat, AHEDNet ensures high detection performance with minimal false alarms.

Results and discussion

The section presents this proposed methods’ performances on yet unseen vulnerabilities and, in extensiveness, details on accuracy, detection rates, and false positives from this approach. The discussion will look at how the method adapts to evolving attack vectors, its efficiency in real-time detection, and its ability to minimize false positives. These findings give insight toward much-needed enhancement in the efficacy of detecting zero-day exploit detection and response methodologies. The dataset is split into 70 and 80% of training also 30 and 20% of testing for both the datasets.

Dataset description

UGRansome dataset

A flexible cybersecurity resource, the UGRansome dataset is intended for the examination of ransomware and zero-day cyberattacks (https://www.kaggle.com/datasets/nkongolo/ugransome-dataset), especially those thathave cyclostationary patterns. Time stamps for tracking attack duration, flags for classifying attack kinds, protocol information for comprehending attack pathways, network flow details for observing data transfer patterns, and ransomware family classifications are just a few of the crucial elements included in this collection. Additionally, it estimates financial harm in both USD and bitcoins (BTC), offers information about the related virus, and uses quantitative clustering for pattern recognition.

Zero-day attack detection in logistics networks

This dataset includes 400,000 network traffic entries gathered from logistics networks at major U.S. airports, such as those in Washington and Texas which is included in (https://www.kaggle.com/datasets/datasetengineer/zero-day-attack-detection-in-logistics-networks). Because it offers a realistic perspective of network activity with both malicious and benign traffic, the dataset is a priceless tool for network analysis and cybersecurity academics and practitioners.

Overall performance metrics for 70 of training for dataset 1

Table 1 depicts the performance metrics for CNN, GRU, RNN, LSTM, and the proposed model with 70% of training. For most of these metrics, the proposed model achieves the best results on accuracy, precision, recall, F1 score, R2 score, MCC, Cohen’s Kappa, and Jaccard score. The highest accuracy has been achieved by RNN at 0.980143 than the proposed model at 0.988086. However, the proposed model outperforms RNN in all aspects: precision, recall, F1 score, R2 score, MCC, Cohen’s Kappa, and Jaccard score. The proposed model precision, recall and F1-score are 0.987976, 0.988298, 0.988089. Besides, GRU and LSTM also achieved quite good performance, but GRU slightly outperformed LSTM on most metrics. The CNN model tends to be the poorest among these other existing models. The overall performance of the proposed model is the best with a minimum Hamming loss of 0.011914.

Overall performance metrics for 80 of training for dataset 1

Table 2 depicts the performance metrics for CNN, GRU, RNN, LSTM, and a proposed model with an 80% of training. the proposed model outperformed the other models in terms of all the metrics it has towards depicting more power in handling the dataset. The maximum accuracy for the model proposed stands as 0.990469 while being higher than that of RNN at 0.983320. The proposed model also leads in precision with a score of 0.990628. The R2 score from the proposed model comes out to be 0.968296. The proposed model has the lowest hamming loss of 0.009531, which indicates the minimal rate of misclassification. By the proposed model, this would result in a Jaccard score of 0.981206.

Graphic representation for 70% and 80% of training of dataset 1

Figure 15 illlustrates Graphical representation of accuracy of suggested and other current models. In 70% of training, the proposed model outperforms with maximum accuracy of 0.988086. Its closest competitor was the RNN model that achieved an accuracy of 0.980143, thereby establishing that this is the best among the traditional models. For 80% of training also, the proposed model was leading with an accuracy of 0.990469. The RNN, too, remained aggressive, with an accuracy of 0.983320.

Figure 16 shows the graphical representation for Precision in the proposed model, along with other existing models. The proposed model gave the highest precision of 0.987976 for a training of 70%. It was followed closely by the RNN model at 0.980113. Lastly, the proposed model gave the highest precision of 0.990628 for 80% of training. RNN maintained a high precision score of 0.983125.

Figure 17 illustrates Graphical representation of Recall for proposed and other existing models. For 70% of training, the highest recall for the proposed model is 0.988298. The RNN model is coming near with a recall of 0.979658. The proposed model again topped during 80% of training with a recall score of 0.990435. The RNN maintained its recall at a competitive score of 0.983285.

Figure 18: Graphical representation of F1 score for proposed and other existing models. At 70% of training, the proposed model gave an F1 score of 0.988089, showing that precision and recall are well-balanced. The next best model is the RNN model with an F1 score of 0.979874. During 80% of training, the proposed model led the race with an F1 score of 0.990512.

Figure 19 presents the graphical representation of the R2 score developed by the proposed model, along with other existing models. For 70% of training, the proposed model developed the highest R2 score of 0.967138, showing how well the predicted and actual values fitted. The RNN model comes close with a developed R2 score of 0.950213. During 80% of training, the proposed model takes another lead in an R2 score of 0.968296. RNN keeps close competition in its R2 score to 0.961792.

Figure 20 represents the Graphical representation of MCC for proposed and other existing models. The proposed model reached a maximum MCC of 0.982166 for 70% of training. Its closest competitor is the RNN model, which recorded an MCC of 0.970148 for the same training percentile. In the case of 80% training, the proposed model wins with an MCC of 0.985715. Again, RNN is close with a MCC of0.974929.

Figure 21 shows the graphical representation for Cohen’s Kappa proposed and other existing models. For 70% training, the highest Cohen’s Kappa of 0.982121 was achieved by a proposed model. Next to this is RNN with a Cohen’s Kappa score of 0.970136. During 80% of training, the proposed model still tops the ranking with a Cohen’s Kappa of 0.985695, which again is followed by the RNN that achieved a Kappa of 0.974922.

Figure 22 presents a graph of Hamming Loss in the proposed model and other existing models. During 70% of training, the proposed model reached the minimum Hamming Loss of 0.011914. The RNN model showed the second-best Hamming Loss of 0.019857. During 80% of training, again the proposed model topped with the minimum Hamming Loss of 0.009531. The RNN continued to be the second-best performer, but now with a Hamming loss of 0.016680.

Figure 23 illustrates Graphical representation of Jaccard Score for the proposed and other existing models regarding 70% and 80% of training. The highest Jaccard Score for the proposed model is 0.976460 regarding 70% of training. The RNN model performs well and has a Jaccard Score of 0.960568 for 70% of training. For 80% of training, the proposed model has the highest Jaccard Score of 0.981206. Again, the performance of the RNN model is competitive with a Jaccard Score of 0.966976 regarding 80% of training.

Graphic representation for 70% and 80% of training of dataset 2

Figure 24 represents the Graphical representation of performance metrics for dataset 2. The performance metrics in Table 3 provide a comparative evaluation of the proposed model and four other models (CNN, GRU, RNN, LSTM) on 70% of training data from Dataset 2, showcasing the superior performance of the proposed model across nearly all metrics. The accuracy of the proposed model is the highest at 0.9819, indicating its ability to correctly classify zero-day exploits with greater reliability than the others, particularly outperforming RNN (0.95034) and GRU (0.94546). It also achieves the highest precision (0.9868) and recall (0.9813), which signify its capability to identify true positives accurately while minimizing false negatives, crucial for zero-day exploit detection. Furthermore, the proposed model outshines others in the F1 Score (0.9801), a balance of precision and recall, and the R2 Score (0.9654), indicating strong predictive power. Metrics such as MCC (0.9852) and Cohen’s Kappa (0.9768) reinforce its robustness in classification. The model achieves the lowest Hamming Loss (0.0209) and the highest Jaccard Score (0.9705), emphasizing its minimal misclassification rate and better overlap with the true positive class. Lastly, the computation cost of the proposed model is the lowest at 0.38, demonstrating its computational efficiency despite its superior performance. This underscores its capability to deliver high accuracy, precision, and reliability while maintaining computational and resource efficiency, making it the most effective choice among the compared models.

Table 4 provides a comparative analysis of the performance metrics for various models (CNN, GRU, RNN, LSTM) and the proposed model using 80% training data from Dataset 2, showing that the proposed model consistently outperforms others in accuracy, precision, recall, and computational efficiency. The accuracy of the proposed model is the highest at 0.9919, demonstrating its superior ability to classify zero-day exploits correctly, surpassing RNN (0.97005) and CNN (0.97505). It achieves the best precision (0.9968) and recall (0.9923), indicating its reliability in identifying true positives while avoiding false positives and negatives, which is critical in cybersecurity applications. Additionally, its F1 score (0.9921) reflects a perfect balance between precision and recall, while the R2 score (0.9784) signifies strong predictive reliability. Metrics like MCC (0.9902) and Cohen’s Kappa (0.9869) confirm the robustness of the proposed model’s predictions compared to the other methods. With the lowest Hamming Loss (0.0109) and the highest Jaccard Score (0.9822), the model demonstrates minimal misclassification and excellent overlap with the ground truth. The proposed model also exhibits the lowest computation cost (0.32), highlighting its computational efficiency compared to GRU (0.64), RNN (0.65), and CNN (0.56). This efficiency, combined with high performance across all metrics, establishes the proposed model as the most accurate, reliable, and cost-effective solution for zero-day exploit detection among the models tested.

The proposed model offers high accuracy, precision, and recall, efficiently detects zero-day exploits with minimal false positives, and has low computational cost due to its optimized architecture. Furthermore, the model’s complexity may increase implementation challenges, and its real-world applicability might require further testing on diverse datasets and dynamic environments.

Discussion

Theresearch presents a novel method for identifying zero-day vulnerabilities by combining a number of cutting-edge approaches, such as the Adaptive WavePCA-Autoencoder (AWPA) for pre-processing, which helps with feature extraction, dimensionality reduction, and denoising. A consideration of the shortcomings of current methods, such as PCA or t-SNE, could, nevertheless, bolster the case for AWPA’s superiority. Although PCA is frequently used to reduce dimensionality, AWPA’s autoencoding structure allows it to handle noise reduction and the nuances of feature extraction. The use of t-SNE in large datasets is limited due to its difficulties with scalability and global structure preservation, despite its effectiveness in visualizing high-dimensional data. In comparison, AWPA offers a more comprehensive and flexible solution by combining denoising with dimensionality reduction. The study could delve into greater detail on how the AWPA gets around these restrictions, especially with regard to scalability and generalization to unidentified threats, establishing it as a more effective and dependable tool for identifying zero-day exploits than conventional techniques.

Figure 25 show the Receiver Operating Characteristic (ROC) curves for a model’s performance on two datasets, with areas under the curve (AUC) of 0.97 (first graph) and 0.98 (second graph). The ROC curve evaluates the trade-off between the true positive rate (sensitivity) and the false positive rate. Both curves indicate strong performance, with the model achieving high AUC scores close to 1, reflecting excellent classification ability. The second graph (AUC = 0.98) slightly outperforms the first graph (AUC = 0.97), showcasing better discrimination capability.

Conclusion