Abstract

Recently, superpixel segmentation has been widely employed in hyperspectral image (HSI) classification for remote sensing. However, the structures of land covers in HSI commonly vary greatly, which makes it difficult for single-scale superpixel segmentation to fully fit land-cover boundaries. Moreover, the irregular shapes of superpixels pose a challenge for depth feature extraction. To overcome these issues, a multiscale superpixel depth feature extraction (MSDFE) method is proposed for HSI classification in this article. The method effectively explores and integrates the spatial-spectral information of land covers by adopting multiscale superpixel segmentation, constructing statistical features of superpixels, and conducting depth feature extraction. Specifically, to exploit the rich spatial information of HSI, multiscale superpixel segmentation is first applied to the HSI. Once superpixels at different scales are obtained, two-dimensional statistical features with a unified form are constructed for these superpixels with different spatial shapes. Based on these two-dimensional statistical features, a convolutional neural network is utilized to learn deeper features and classify them. Finally, an adaptive strategy is adopted to fuse the multiscale classification results. Experiments on three real hyperspectral datasets indicate the superiority of the proposed MSDFE method over several state-of-the-art methods.

Introduction

Hyperspectral images (HSI) contain spectral information with hundreds of continuous bands and two-dimensional spatial information, which can be widely applied in various fields, such as military reconnaissance, environmental monitoring, and precision agriculture1,2,3,4,5. In many remote sensing applications, it is necessary to classify each pixel in HSI6,7. However, the high-dimensional characteristics of HSI may lead to the Hughes phenomenon8, which decreases the classification performance. Furthermore, the complex spatial-spectral characteristics are difficult to articulate9,10. To alleviate these problems, various feature extraction methods for HSI have been explored.

Recently, deep learning models have shown great potential in HSI classification11. Deep learning methods utilize multi-layer neural networks, loosely inspired by the structure and function of the human brain, to extract abstract semantic features from data. For example, a recurrent neural network (RNN) has been proposed to learn discriminative features by treating the spectral feature as sequential data12. Hang et al.13 propose a cascaded RNN model utilizing gated recurrent units, which is further extended into a spectral-spatial joint model by incorporating convolutional layers. Liu et al.14 first explore the usefulness and effectiveness of a generative adversarial network (GAN) for HSI classification. Among the various deep learning models, convolutional neural networks (CNNs) are extensively deployed in HSI classification. CNNs execute convolutional operations on hyperspectral data framed within rectangular windows, which can extract deep semantic features combining spatial and spectral characteristics15. For instance, Roy et al.16 propose a hybrid spectral CNN (HybridSN), where a 3D CNN first performs joint spatial-spectral feature representation, and a 2D CNN further captures spatial representations at higher abstraction levels. Yu et al.17 adopt a simplified 2D-3D CNN architecture for HSI classification. Specifically, the 2-D convolutional layer extracts spatial features that encapsulate spectral information, while the 3-D CNN focuses on exploiting the correlation between bands. Lee et al.18 describe a contextual deep CNN, which forms joint spatial-spectral feature maps through multi-scale filters. Various CNN-based HSI feature extraction methods continue to emerge19,20,21. The above methods have demonstrated satisfactory classification performance, proving that CNNs can improve the representation of spatial-spectral features. However, these methods still have a key problem: most CNN methods extract spatial-spectral features through rectangular windows, which makes it difficult to characterize the irregularities of terrain boundaries. The limited ability of the rectangular window to describe boundary features may lead to misclassification of edge pixels.

To address the difficulty that rectangular windows have in capturing boundary information, superpixel segmentation technology is considered. Superpixel segmentation adaptively divides adjacent pixels in natural images with similar characteristics, such as color, brightness, and texture, into non-overlapping sub-regions22,23. Each sub-region exhibits high internal pixel similarity, thereby preserving the spatial structural information of the image more effectively24. Utilizing superpixel segmentation for feature extraction in HSI is a promising approach. For instance, in refs. 25 and 26, the segmented superpixels are combined with principal component analysis (PCA) for unsupervised feature extraction. Zhao et al.27 propose a superpixel-guided deformable convolution to make the shape of the deformable convolution align with the land-cover shape. Zhang et al.28 use superpixel-level hybrid discriminant analysis to exploit local and non-local spatial-spectral correlation information within and between superpixels for learning feature representations. However, the above-mentioned methods are based on single-scale superpixel segmentation. For single-scale superpixel segmentation, it is a challenge to determine the optimal number of segmented superpixels. Furthermore, single-scale segmentation may result in over-segmentation or under-segmentation of some local areas, leaving the complex boundary information of certain land covers insufficiently captured. This limitation ultimately hinders the improvement in classification performance.

To overcome the limitations of single-scale superpixel segmentation, a multiscale approach is introduced to capture richer and more comprehensive boundary information. Multiscale superpixel segmentation methods can obtain richer feature information at different spatial scales, thereby improving the accuracy of classification algorithms. For example, in refs. 29 and 30, multiscale superpixel-level data is used as a substitute for pixel-level data, where the average spectral vector of each superpixel is taken as its feature. Zhang et al.31 utilize multiscale superpixel-based sparse representation to acquire diverse spatial information through multiple scales of segmentation. Dundar et al.32 present multiscale superpixels and guided filters to get local information from different region scales. Wang et al.33 employ a multi-scale superpixel-guided structural profile method for HSI classification. Li et al.34 utilize a band-by-band adaptive multiscale superpixel feature extraction method to mitigate the difficulty of choosing the optimal superpixel scale, effectively harnessing available spectral and spatial information across bands. All these methods have been demonstrated to achieve satisfactory classification performance. However, when fusing multi-scale information, assigning an appropriate weight to each scale remains an open problem.

Based on the above comprehensive analysis, a novel multiscale superpixel depth feature extraction (MSDFE) method is proposed for HSI classification. Specifically, the superpixel segmentation method is applied to the dimensionality-reduced HSI to generate multi-scale 3D superpixel blocks. Then, two-dimensional statistical features, which are only determined by the spectral dimension, are constructed. After that, the statistical features of different scales are passed through a deep convolution module to extract deeper features. Finally, for each single-scale depth feature, the single-scale classification result of the HSI is obtained through a fully connected module, and an adaptive voting strategy is adopted to allocate weights and merge classification results from different scales. In this method, the statistical features effectively integrate the spatial-spectral information of the superpixel in the HSI. At the same time, the statistical features from different scales share the same size, which facilitates uniform input to the CNN model and performs deep feature extraction. Moreover, the adaptive fusion strategy comprehensively integrates multi-scale information, resulting in finer and more detailed predictions for HSI classification.

The rest of this article is structured as follows. The “Related works” Section briefly introduces the related works. In the “Proposed method” Section, the proposed MSDFE method is described in detail. In the “Experimental Results and Discussions” Section, the experimental results and analysis are provided. Finally, the conclusion is given in the “Conclusion” Section.

Related works

Simple linear iterative clustering

Simple linear iterative clustering (SLIC), proposed in 2010, adapts a K-means clustering approach. Despite its speed and simplicity, SLIC handles boundaries as well as or better than other segmentation methods35,36. It transforms color images into five-dimensional feature vectors composed of the CIELAB color space \([l\ a\ b]^T\) and the pixel’s position \([x\ y]^T.\) Then, it establishes a distance measurement standard and performs local clustering on the image pixels.

To create approximately equally sized superpixels, the distance between the centers of the superpixels is set as:

\[S = \sqrt{N/k} \tag{1}\]

where N is the number of pixels in the image and k represents the number of superpixels. Thus, it can be understood that the average area of the superpixel is N/k.

Subsequently, during the iteration process, each pixel j is associated with the closest cluster center whose search region overlaps the pixel’s location. Since the average spatial size of a superpixel is \(S \times S,\) each cluster center searches for similar pixels in a \(2S \times 2S\) region around it. To evaluate the distance \(d_s\) between pixel j and cluster center i, the algorithm fuses color proximity and spatial proximity into a single measure. In the standard SLIC formulation, \(d_s\) is defined as:

\[d_{lab} = \sqrt{(l_j - l_i)^2 + (a_j - a_i)^2 + (b_j - b_i)^2} \tag{2}\]

\[d_{xy} = \sqrt{(x_j - x_i)^2 + (y_j - y_i)^2} \tag{3}\]

\[d_s = d_{lab} + \frac{m}{S}\, d_{xy} \tag{4}\]

where \(d_{lab}\) is the color distance, \(d_{xy}\) is the spatial distance, and \(d_s\) is the final distance metric. Here \((l_j, a_j, b_j)\) and \((l_i, a_i, b_i)\) are the CIELAB color values of pixel j and cluster center i, respectively, and \((x_j, y_j)\) and \((x_i, y_i)\) are their spatial coordinates. The constant m controls the balance between color similarity and spatial proximity.

Once each pixel has been associated with the closest superpixel center, the average vector of all pixels belonging to the superpixel is calculated as the new cluster center. The Euclidean distance is then used to compute a residual error between the previous superpixel center and the new superpixel center. The process is repeated iteratively until the residual error falls below a predefined threshold.
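
The distance measure and the local assignment step described above can be sketched in a few lines of Python. This is a minimal illustration, not the reference SLIC implementation: it assumes the additive form \(d_s = d_{lab} + (m/S)\,d_{xy}\) used in the original SLIC report, and the function names `grid_interval`, `slic_distance`, and `assign_pixel`, as well as the toy values, are hypothetical.

```python
import math


def grid_interval(num_pixels, num_superpixels):
    """Approximate spacing S between superpixel centers, S = sqrt(N / k)."""
    return math.sqrt(num_pixels / num_superpixels)


def slic_distance(pixel, center, m, s):
    """Assumed additive SLIC distance d_s = d_lab + (m / S) * d_xy.

    pixel and center are (l, a, b, x, y) tuples.
    """
    d_lab = math.dist(pixel[:3], center[:3])  # color distance in CIELAB
    d_xy = math.dist(pixel[3:], center[3:])   # spatial distance in the image plane
    return d_lab + (m / s) * d_xy


def assign_pixel(pixel, centers, m, s):
    """Return the index of the closest center whose 2S x 2S window covers the pixel."""
    best_index, best_distance = None, math.inf
    for index, center in enumerate(centers):
        in_window = (abs(pixel[3] - center[3]) <= s
                     and abs(pixel[4] - center[4]) <= s)
        if not in_window:
            continue  # this center does not search the pixel's location
        distance = slic_distance(pixel, center, m, s)
        if distance < best_distance:
            best_index, best_distance = index, distance
    return best_index


# Toy example: 100 x 100 image split into 100 superpixels.
S = grid_interval(100 * 100, 100)
pixel = (50.0, 0.0, 0.0, 12.0, 9.0)
centers = [(50.0, 0.0, 0.0, 10.0, 10.0), (80.0, 0.0, 0.0, 12.0, 9.0)]
label = assign_pixel(pixel, centers, m=10.0, s=S)
```

In this toy case the pixel is assigned to the first center: the second center coincides with it spatially but differs strongly in color. Increasing m gives more weight to spatial proximity and therefore yields more compact superpixels.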

Covariance statistical feature

Covariance is a statistical measure that quantifies the linear relationship between two random variables X and Y: it describes how the two variables vary together. In its standard sample form, it is expressed as follows:

\[\mathrm{Cov}(X, Y) = \frac{1}{n-1} \sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y}) \tag{5}\]

where \(X_i\) and \(Y_i\) represent the sample values of the two variables, \(\bar{X}\) and \(\bar{Y}\) denote the means of X and Y, respectively, and n is the sample size. In the proposed method, the covariance statistic is used to describe the correlation between different bands of the HSI.

Proposed method

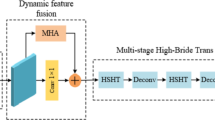

In this article, we propose a novel MSDFE method, which mainly consists of the following three parts: (1) generation of 3D superpixel maps; (2) multiscale deep feature extraction; and (3) fusion classification. The flowchart of the proposed MSDFE method is illustrated in Fig. 1 and the method details are provided as follows.

Generation of 3D superpixel maps

In HSI, neighboring pixels usually show similar spectral characteristics and high spatial correlation, so superpixel segmentation is an effective way to capture the spectral similarity and spatial correlation between pixels. As shown in Fig. 1, to select more informative bands and reduce computational complexity, the PCA method is applied to the original HSI. Specifically, given an HSI defined by \({\textbf {Z}} \in \mathbb {R} ^{M \times H \times K},\) the dimension-reduced image \({\textbf {X}} \in \mathbb {R}^{M \times H \times L}\) can be obtained by the PCA method, where M and H are the sizes of the spatial dimensions, K is the number of original spectral bands, and L represents the number of PCA principal components (\(L \ll K\)). Then the SLIC method is applied to the first three principal components to obtain a 2D superpixel map, which consists of m irregular and non-overlapping superpixel regions. The 2D superpixel map is finally combined with the dimension-reduced HSI to generate a 3D image labeled by superpixels. To obtain multiscale structural information, segmentation with different superpixel numbers is applied to the same HSI, which generates multi-scale superpixel maps.
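
As a rough illustration of how a 2D superpixel map is combined with the dimension-reduced image, the sketch below groups the L-dimensional pixel vectors by superpixel label and, for one pixel, collects the block that contains it at each scale. The label maps are assumed to be given (for example, produced by SLIC with different superpixel numbers); the helper names `superpixel_blocks` and `multiscale_blocks` and the toy data are hypothetical.

```python
from collections import defaultdict


def superpixel_blocks(label_map, reduced_image):
    """Group pixel vectors by superpixel label.

    label_map:     M x H nested list of integer labels (2D superpixel map)
    reduced_image: M x H x L nested list (dimension-reduced HSI)
    Returns a dict mapping each label to the list of its pixel vectors.
    """
    blocks = defaultdict(list)
    for row_labels, row_pixels in zip(label_map, reduced_image):
        for label, vector in zip(row_labels, row_pixels):
            blocks[label].append(vector)
    return dict(blocks)


def multiscale_blocks(label_maps, reduced_image, row, col):
    """For the pixel at (row, col), return the block containing it at each scale."""
    result = []
    for label_map in label_maps:
        blocks = superpixel_blocks(label_map, reduced_image)
        result.append(blocks[label_map[row][col]])
    return result


# Toy 2 x 3 image with L = 2 components and two segmentation scales.
image = [
    [[1.0, 2.0], [1.1, 2.1], [5.0, 6.0]],
    [[0.9, 1.9], [5.1, 6.1], [5.2, 6.2]],
]
coarse = [[0, 0, 0], [0, 0, 0]]   # one large superpixel
fine = [[0, 0, 1], [0, 1, 1]]     # two smaller superpixels
blocks_for_pixel = multiscale_blocks([coarse, fine], image, row=0, col=0)
block_sizes = [len(block) for block in blocks_for_pixel]
```

The same pixel belongs to blocks of different sizes and shapes at different scales, which is why a shape-independent descriptor is needed before the blocks can be fed to a CNN.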

Multiscale deep feature extraction

For each pixel, a series of superpixel blocks with different shapes is obtained by multiscale superpixel segmentation. Although different superpixel blocks have different shapes, their statistical features share the same size and simultaneously capture the spatial and spectral information of the superpixel blocks. Moreover, the shallow feature expression in a unified form can be further input into the deep network to mine the corresponding deep features. Therefore, for the multiscale superpixel blocks of the same pixel, two-dimensional statistical features are first constructed, and these features are then input into a deep convolution module to extract the multiscale deep features of the pixel. The 2D statistical feature generation for a superpixel block is illustrated in Fig. 2, where N is the number of pixels contained in a superpixel block, and L represents the number of dimension-reduced bands. The two-dimensional statistical feature map, which is the covariance matrix of the superpixel block, is calculated by Eq. (6). Note that the size of the obtained covariance matrix is determined only by the number of dimension-reduced bands L, which means that the covariance matrices coming from superpixel blocks with different spatial shapes share the same size. The covariance matrix on one scale, written here in its standard sample form, is computed as follows:

\[C = \frac{1}{N-1} \sum_{i=1}^{N} (x_i - \mu)(x_i - \mu)^T \in \mathbb{R}^{L \times L} \tag{6}\]

where \(x_i\) is the ith pixel within the superpixel block, and \(\mu\) denotes the mean spectral feature of the N pixels within the superpixel block. Moreover, assuming a total of n segmentation scales, \(C_k (k=1,\cdots ,n)\) denotes the kth covariance map, which is obtained with Eq. (6) from the kth-scale superpixel block containing the sample pixel.
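
A small sketch makes the size argument concrete: whatever the number of pixels N in a block, the covariance map has size \(L \times L\). The code below is an illustration in plain Python under one assumption that the text does not confirm, namely normalization by \(N-1\) (the unbiased sample form); the function name `covariance_map` and the toy blocks are hypothetical.

```python
def covariance_map(block):
    """L x L covariance matrix of a superpixel block.

    block is a list of N pixel vectors, each of length L.
    Normalization by N - 1 is an assumption (unbiased sample covariance).
    """
    n = len(block)
    if n < 2:
        raise ValueError("at least two pixels are needed for a sample covariance")
    num_bands = len(block[0])
    # Mean spectral vector of the block.
    mean = [sum(vector[b] for vector in block) / n for b in range(num_bands)]
    cov = [[0.0] * num_bands for _ in range(num_bands)]
    for vector in block:
        centered = [vector[b] - mean[b] for b in range(num_bands)]
        # Accumulate the outer product (x_i - mu)(x_i - mu)^T.
        for p in range(num_bands):
            for q in range(num_bands):
                cov[p][q] += centered[p] * centered[q]
    return [[value / (n - 1) for value in row] for row in cov]


# Two blocks with different pixel counts but the same number of bands (L = 2).
small_block = [[1.0, 2.0], [3.0, 6.0]]
large_block = [[1.0, 2.0], [2.0, 1.0], [3.0, 5.0], [4.0, 4.0], [5.0, 3.0]]
small_map = covariance_map(small_block)
large_map = covariance_map(large_block)
```

Both `small_map` and `large_map` are 2 x 2 matrices, so blocks of any shape can share one CNN input size.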

The obtained statistical feature, namely the covariance map, only represents the shallow feature of the superpixel block. Therefore, a deep network is considered to extract deeper features. As shown in Fig. 3, the statistical features are fed into a deep convolution module to extract deep features. The module consists of two convolutional layers followed by pooling layers. The ReLU activation functions are applied after each layer to introduce non-linearity. The module parameters are shown in Table 1. After the above process, multi-scale superpixel blocks of the same pixel obtain corresponding multi-scale depth features.

Fusion classification

For each single-scale depth feature, the single-scale classification result of HSI is obtained through a fully connected module, which contains two fully connected layers consisting of a Dense layer and an activation function layer. In order to obtain the final classification result, we adopt an adaptive multi-scale fusion strategy. Most multiscale decision fusion methods nowadays use majority voting with uniform weights, which indicates that each scale has the same impact on the predicted results. By this approach, scales with poor classification performance may have an excessive influence on the fused classification result, which will reduce the final classification performance. In light of this, an adaptive decision fusion strategy is employed to assign different weights to different scales. The weight distribution rule37 is expressed as follows:

where P represents the predicted probability of sample x for each category, \(P_k(y = c \mid x)\) represents the probability predicted at the kth scale that sample x belongs to category c, n is the number of scales, and Y is the number of categories. The weight coefficient \(\lambda _k\) of each scale is determined by Eq. (8), where \(X_k\) is the overall classification accuracy (OA) of the kth scale, and \(X_{max}\) and \(X_{min}\) represent the maximum and minimum OA values over all scales, respectively. Finally, the category with the highest probability in P is chosen as the final prediction.
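
The difference between uniform majority voting and accuracy-weighted fusion can be illustrated with a short sketch. The weighting below is only one plausible rule and is not claimed to be Eq. (8): it assumes a min-max rescaling of the per-scale OA values \(X_k\) between \(X_{min}\) and \(X_{max}\), with a hypothetical `floor` parameter so that the weakest scale keeps a small non-zero weight. The function names and numbers are illustrative.

```python
def minmax_weights(accuracies, floor=0.1):
    """HYPOTHETICAL weights: rescale per-scale OA values to the range [floor, 1]."""
    lowest, highest = min(accuracies), max(accuracies)
    if highest == lowest:
        return [1.0] * len(accuracies)  # all scales equally reliable
    span = highest - lowest
    return [floor + (1.0 - floor) * (a - lowest) / span for a in accuracies]


def fuse(probabilities, weights):
    """Weighted sum of per-scale class probabilities.

    probabilities[k][c] is P_k(y = c | x); returns (fused scores, predicted class).
    """
    num_classes = len(probabilities[0])
    fused = [
        sum(w * p[c] for w, p in zip(weights, probabilities))
        for c in range(num_classes)
    ]
    predicted = max(range(num_classes), key=fused.__getitem__)
    return fused, predicted


# Three scales, two classes: two weaker scales vote for class 0,
# the most accurate scale votes for class 1.
probabilities = [[0.6, 0.4], [0.2, 0.8], [0.55, 0.45]]
scale_accuracy = [0.90, 0.98, 0.80]

votes = [max(range(2), key=p.__getitem__) for p in probabilities]
majority = max(set(votes), key=votes.count)

weights = minmax_weights(scale_accuracy)
fused, predicted = fuse(probabilities, weights)
```

With these numbers, majority voting selects class 0, while the weighted fusion selects class 1 because the most accurate scale dominates. The fused scores are not renormalized here; dividing by the sum of the weights would not change the predicted class.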

Experimental results and discussions

Datasets

To verify the performance of the proposed method, three real hyperspectral image datasets are used in the experiments: the Indian Pines dataset, the Salinas dataset, and the Pavia University dataset.

(1) Indian Pines: The Indian Pines dataset was gathered by the AVIRIS sensor over the Indian Pines test site in northwestern Indiana, USA. The scene has a spatial size of \(145 \times 145\) pixels and 200 spectral reflectance bands after removing the bands covering the water absorption region. The wavelength ranges from 0.4 to 2.5 \(\mu\)m, and the spatial resolution is 20 m per pixel. The dataset contains 16 categories, and detailed information about the dataset categories is shown in Table 2.

(2) Salinas: The Salinas dataset was collected by the AVIRIS sensor over Salinas Valley, California, USA. The dataset contains 204 spectral bands after discarding 20 water absorption bands. The spatial size is \(512\times 217\) pixels, and the scene has a high spatial resolution (3.7 m per pixel). The dataset contains 16 categories, and detailed information about the dataset categories is shown in Table 2.

(3) Pavia University: The Pavia University dataset was captured by the ROSIS sensor during a flight campaign over the University of Pavia, Italy. The dataset has 103 spectral bands, a spatial size of \(610\times 340\) pixels, and a spatial resolution of 1.3 m per pixel. The dataset contains 9 categories, and detailed information about the dataset categories is shown in Table 2.

Experimental setup

To validate the performance of the proposed MSDFE method, various HSI classification methods are used for comparison, including 2D-CNN, multiscale covariance maps (MCMs)7, HybridSN16, spatial-spectral feature tokenization transformer (SSFTT)38, CNN-enhanced GCN (CEGCN)39, multilevel superpixel structured graph U-Nets (MSSGU)40, superpixel-based Brownian descriptor (SBD)41, superpixel-level hybrid discriminant analysis (SHDA)28, and attention multi-hop graph and multi-scale convolutional fusion network (AMGCFN)42. Considering that the proposed method is based on superpixel segmentation and utilizes a CNN for deep feature extraction, the compared methods focus on two aspects: feature learning based on superpixel segmentation and feature learning based on CNNs with fixed windows. Most of the compared methods (except for 2D-CNN, MCMs, HybridSN, and SSFTT) rely on superpixels. Among these methods, MCMs, MSSGU, and AMGCFN employ a multiscale strategy. Specifically, 2D-CNN, MCMs, HybridSN, and SSFTT extract spectral-spatial features by utilizing the spectral-spatial information within a fixed square window neighborhood. In CEGCN, MSSGU, SBD, SHDA, and AMGCFN, adaptive spatial structure information is obtained by employing superpixel segmentation to extract the spatial-spectral features. In CEGCN, the CNN and GCN branches are used to generate complementary spatial-spectral features for feature learning at the pixel and superpixel levels, respectively. In MSSGU, different-scale features are fused in a coarse-to-fine progressive manner to generate more subtle fused features for the pixelwise classification task. In SBD, the Brownian descriptor based on superpixels is used to extract linear and nonlinear spectral information. In SHDA, superpixels and discriminant analysis are integrated to learn feature representations.

For all the comparative algorithms, the corresponding public codes and consistent hyperparameters are employed to make the comparative experiments more convincing. The Xavier method is utilized to initialize all weights, while the biases are initialized to zero. The Adam optimizer is adopted for training. The learning rate is initially set to 0.001 and changes adaptively during the training process. The batch size is set to 100, and five samples per class are randomly selected as the training set. In addition, the experiments are conducted on a hardware environment composed of an i7-12400F CPU, 48 GB of RAM, and an NVIDIA GeForce RTX 4070 graphics processing unit (GPU) with 12 GB of video memory. All experiments are repeated ten times, and four evaluation metrics, namely the overall classification accuracy (OA), the average classification accuracy (AA), the kappa coefficient (\(\kappa\)), and the classification accuracy per category, are used to analyze the effectiveness of the comparative methods. The higher the values of these four metrics, the better the classification performance.
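
For reference, the four evaluation metrics can all be derived from a confusion matrix. The sketch below uses the standard definitions of OA, AA, and Cohen's kappa; the function name `classification_metrics` and the toy confusion matrix are hypothetical, and every class is assumed to have at least one test sample.

```python
def classification_metrics(confusion):
    """Compute OA, AA, kappa, and per-class accuracy.

    confusion[i][j] is the number of samples of true class i predicted as class j.
    """
    num_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(num_classes))

    # Overall accuracy: fraction of all samples classified correctly.
    oa = correct / total

    # Per-class accuracy and its unweighted mean (average accuracy).
    per_class = [confusion[i][i] / sum(confusion[i]) for i in range(num_classes)]
    aa = sum(per_class) / num_classes

    # Kappa: agreement corrected for the agreement expected by chance.
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(num_classes))
                for j in range(num_classes)]
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa, per_class


# Toy two-class example.
confusion = [[45, 5], [10, 40]]
oa, aa, kappa, per_class = classification_metrics(confusion)
```

OA is dominated by large classes, whereas AA weights every class equally, which is why both are reported for datasets with strongly unbalanced categories.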

Fig. 4. Parameter analysis results of the proposed method. (a) Influence of the reduced dimensionality L on the classification performance for the three datasets under the single-scale segmentation condition. (b) Influence of the parameter S, related to the average superpixel area, on the classification performance for the three datasets. (c) Influence of the number of superpixel scales n on the classification performance for the three datasets.

Parameter analysis

In this section, a detailed explanation of the important parameters in the proposed MSDFE method is provided. For single-scale superpixel segmentation, the reduced dimensionality L and the parameter S, which is related to the average superpixel area (i.e., the basic superpixel center spacing S in Eq. (1)) will be discussed. For multiscale information fusion, the number of scales n will be analyzed in detail. When the influence of one parameter is analyzed, the other parameters are fixed to the default values.

Firstly, the influence of the reduced dimensionality L on the classification results is analyzed. In the experiments, the parameter L is varied from 10 to 50 with a step of 1. Figure 4a presents the relationship between OA and the reduced dimensionality for the three datasets. As the number of dimensions L increases, the OA values first increase and then stabilize around a certain value. For the Indian Pines, Salinas, and Pavia University datasets, the OA values tend to stabilize when L is greater than 22, 23, and 26, respectively. Since the OA values are relatively high and stable for the three datasets when L is set to 30, the reduced dimensionality L is set to 30 in all comparative algorithms.

Then, the impact of the parameter S, which is related to the average superpixel area and reflects the distribution density of the superpixels, on the proposed MSDFE method is discussed. Larger spacing implies fewer superpixels and a coarser segmentation, while smaller spacing implies more superpixels and a finer segmentation. The variation of the OA values with the superpixel center spacing S for the three datasets is shown in Fig. 4b. In the experiments, the range of the parameter S is [3, 25], with a step size of 1. Superpixels that are too small may not effectively utilize the spatial information in a homogeneous region, while superpixels that are too large may contain pixels from different classes. As S increases, the OA values first rise, then remain relatively stable, and finally decrease continually. When S is around 11, the OA values remain relatively stable for the three datasets. Hence, the basic superpixel center spacing is set to 11.

Finally, the effect of the number of scales n on classification performance is evaluated. The different superpixel center spacings are obtained around the basic superpixel center spacing S by simultaneously adding and subtracting multiples of the step size 1. Figure 4c shows the relationship between classification performance and the number of scales for the three datasets. As n increases, the OA values initially increase continuously and then stabilize once n reaches a certain level. This is mainly because, with more scales, the features of different classes can be captured more effectively and the spatial structure in HSI can be expressed more comprehensively. However, an excessive number of scales may increase the redundancy of information within the samples, which can hinder the extraction of easily distinguishable features and thus affect classification. For the three datasets, the best classification results are obtained when n is 15. Therefore, the number of scales n is fixed to 15 for the proposed MSDFE method.
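
The scale construction can be made concrete with a short sketch. It assumes an odd number of scales placed symmetrically around the basic spacing (the text does not state how an even n would be arranged), and it converts each spacing S into an approximate superpixel number through \(k \approx N/S^2\), which follows from Eq. (1). The function names are hypothetical.

```python
def scale_spacings(base_spacing, num_scales):
    """Spacings centered on base_spacing with step 1.

    Assumes an odd number of scales, arranged symmetrically.
    """
    if num_scales % 2 == 0:
        raise ValueError("this sketch only handles an odd number of scales")
    half = num_scales // 2
    if base_spacing - half < 1:
        raise ValueError("spacings must stay positive")
    return list(range(base_spacing - half, base_spacing + half + 1))


def superpixel_count(num_pixels, spacing):
    """Approximate number of superpixels k = N / S^2 for spacing S."""
    return max(1, round(num_pixels / spacing ** 2))


# Settings reported in the text: basic spacing S = 11 and n = 15 scales.
spacings = scale_spacings(11, 15)

# Indian Pines has 145 x 145 pixels.
counts = [superpixel_count(145 * 145, s) for s in spacings]
```

Under these assumptions, the 15 scales correspond to spacings from 4 to 18, that is, from fine segmentations with many small superpixels to coarse segmentations with few large ones.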

Module ablation analysis

We conduct a module ablation analysis to verify the effectiveness of the different components of the proposed method. Specifically, the experiments are divided into four groups: (1) a baseline method, namely a 2DCNN that combines majority voting with covariance features from rectangular windows of different sizes; (2) the baseline method with the superpixel covariance map (SCM) module; (3) the baseline method with the adaptive decision fusion (ADF) module; and (4) the baseline framework combining SCM and ADF (i.e., the proposed MSDFE method).

The results of the ablation experiments are shown in Table 3 and the corresponding classification maps are provided in Figs. 5, 6, and 7. From the experimental results, it can be observed that the baseline method exhibits the poorest classification performance. Compared to the baseline, adding the ADF module improves classification accuracy by 1.06%, 0.08%, and 2.14%, respectively. This indicates that adaptive decision fusion effectively captures subtle differences across multiple scales and makes better use of multi-scale information. Additionally, the baseline method incorporating the SCM module achieves performance improvements of 19.53%, 0.81%, and 19.11%, respectively. It means that the superpixel covariance map effectively preserves the spatial-spectral information of HSI while capturing the boundary information of different land-cover types, significantly enhancing classification performance. To show the differences in the classification map more clearly, certain regions have been enlarged. It can be seen from the classification maps that the proposed method classifies the boundary pixels more accurately. For example, the classification map obtained by the proposed method on the Salinas data set is highly consistent with the ground truth. Therefore, it is further shown that the MSDFE method can effectively extract spatial information in HSI.

Comparison with other methods

In this section, the proposed MSDFE method is compared with the classic and state-of-the-art classification methods to verify its effectiveness. The parameters of various comparative algorithms are set according to the related research articles or open-source codes. For the three datasets used in the experiments, five samples from each class are randomly selected as the training set, while the remaining samples are used as the testing set. The validation set is not split from the training or testing set (except for CEGCN, MSSGU, and AMGCFN). This means that the proportions of the training sets for the Indian Pines, Salinas, and Pavia University datasets are 0.78%, 0.15%, and 0.11%, respectively. A validation set is set up in CEGCN, MSSGU, and AMGCFN methods, which shares the same number of samples as the training set and is included in the test set.
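
The stated training proportions can be checked with simple arithmetic. The sketch below assumes the commonly reported numbers of labeled samples for the three benchmark scenes (10249, 54129, and 42776); these totals are not given in the text and are an assumption here, as is the function name `training_proportion`.

```python
# (number of classes, total labeled samples); the totals are the commonly
# reported ground-truth sizes and are an assumption, not taken from the text.
DATASETS = {
    "Indian Pines": (16, 10249),
    "Salinas": (16, 54129),
    "Pavia University": (9, 42776),
}


def training_proportion(num_classes, total_labeled, per_class=5):
    """Percentage of labeled samples used for training."""
    return 100.0 * num_classes * per_class / total_labeled


proportions = {
    name: round(training_proportion(classes, total), 2)
    for name, (classes, total) in DATASETS.items()
}
```

With five samples per class, the rounded proportions agree with the 0.78%, 0.15%, and 0.11% quoted above.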

Fig. 8. Indian Pines dataset. (a) Ground truth. Classification maps obtained by different classification methods: (b) 2DCNN, OA = 61.76%, (c) MCMs, OA = 75.13%, (d) HybridSN, OA = 49.72%, (e) SSFTT, OA = 70.56%, (f) CEGCN, OA = 66.74%, (g) MSSGU, OA = 75.89%, (h) SBD, OA = 76.95%, (i) SHDA, OA = 82.03%, (j) AMGCFN, OA = 70.66%, (k) MSDFE, OA = 97.85%. (l) Labels.

Fig. 9. Salinas dataset. (a) Ground truth. Classification maps obtained by different classification methods: (b) 2DCNN, OA = 84.22%, (c) MCMs, OA = 90.32%, (d) HybridSN, OA = 90.78%, (e) SSFTT, OA = 93.99%, (f) CEGCN, OA = 92.79%, (g) MSSGU, OA = 94.91%, (h) SBD, OA = 94.30%, (i) SHDA, OA = 92.07%, (j) AMGCFN, OA = 92.91%, (k) MSDFE, OA = 97.85%. (l) Labels.

Fig. 10. Pavia University dataset. (a) Ground truth. Classification maps obtained by different classification methods: (b) 2DCNN, OA = 67.83%, (c) MCMs, OA = 70.30%, (d) HybridSN, OA = 62.08%, (e) SSFTT, OA = 63.50%, (f) CEGCN, OA = 84.97%, (g) MSSGU, OA = 83.96%, (h) SBD, OA = 78.46%, (i) SHDA, OA = 77.48%, (j) AMGCFN, OA = 85.46%, (k) MSDFE, OA = 97.85%. (l) Labels.

The quantitative results on the three datasets are listed in Tables 4, 5, and 6, with the best results highlighted in bold. The corresponding classification maps are provided in Figs. 8, 9, and 10. The results indicate that the MSDFE method exhibits outstanding classification accuracy and robustness, especially under conditions with complex categories and limited training samples.

For the Indian Pines dataset, the OA of the proposed method is 98.12%, which is 43.98% higher than that of the worst-performing method, HybridSN, and 16.17% higher than that of the best-performing comparison method, SHDA. For categories with highly similar spectral features but localized differences in spatial distribution, such as Corn-notill and Corn-mintill, the proposed method achieves high accuracies of 92.29% and 98.69%, respectively, which are much higher than those of the comparison methods. This high accuracy is mainly attributed to the fact that the MSDFE method aggregates spatially adjacent and spectrally similar pixels into regions through superpixel segmentation, thereby reducing the impact of spectral aliasing.

For the Salinas dataset, the proposed method shows an OA of 97.40%, along with an AA of 99.27% and a \(\kappa\) coefficient of 0.9734. These results surpass those of the best-performing comparison method, MCMs, by 3.95%, 2.28%, and 4.36%, respectively. Due to the uniform distribution of categories and the obvious spectral differences between categories in the Salinas dataset, most methods achieve good classification accuracy. However, the proposed MSDFE method still achieves the best performance because it effectively utilizes multi-scale superpixel features to capture complex boundaries and local details.

For the Pavia University dataset, methods that combine superpixels and deep learning, such as CEGCN, MSSGU, and AMGCFN, perform well, achieving a maximum OA of 89.17%. However, methods solely relying on CNN or superpixels perform poorly, with a maximum OA of 78.28%. In contrast, the proposed method demonstrates significant superiority on this dataset, achieving an OA of 98.23%, which surpasses the best result from the aforementioned methods by 9.06%. This may be attributed to the fact that the proposed method puts different scales of superpixels through a deep learning module and performs adaptive fusion to more accurately capture the complex spatial and spectral information of HSI.

The improvement in classification performance is also reflected in the classification maps generated by the proposed method, and some hard-to-distinguish regions are enlarged to display the details of the classification results. From these results, it can be seen that for the Indian Pines and Pavia University datasets, boundaries of complex categories are more clearly defined, with fewer misclassified regions. For the Salinas dataset, the boundary transitions between categories are smooth. Particularly for complex classes such as Lettuce-romaine, the proposed method accurately identifies subtle variations, further validating its remarkable enhancement of classification accuracy in HSI.

To further validate the proposed MSDFE method, we also investigate the influence of the number of training samples on all compared methods across the three datasets. In these experiments, we randomly select a given number of samples from each class to serve as the training set. The number of labeled samples selected per class ranges from 2 to 30, with a step size of 2 from 2 to 10 and a step size of 5 from 10 to 30. Because some categories of the Indian Pines dataset (Alfalfa, Grass-pasture-mowed, Oats) contain fewer than 50 labeled samples, half of the labeled samples in such a category are used for training whenever the requested number exceeds half of the category's total. As shown in Fig. 11, the performance of all considered HSI classification methods generally improves as the number of training samples increases. Most importantly, the proposed MSDFE method consistently outperforms all comparison methods. Even when the number of training samples is small, it shows a significant advantage in classification performance. These results further demonstrate that the proposed MSDFE method, by constructing covariance matrices and using a deep learning network to fuse spatial and spectral information from superpixels at different scales, obtains more discriminative features for HSI classification.
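The sampling protocol described above can be sketched as follows. This is a minimal illustration and not the authors' code: the function names, the use of Python's `random` module, and the rounding down of "half" for classes with an odd number of samples are all assumptions.

```python
import random


def training_sizes():
    """Per-class training sizes: step 2 from 2 to 10, then step 5 from 10 to 30."""
    return list(range(2, 10, 2)) + list(range(10, 31, 5))


def select_training_indices(labels, per_class, rng):
    """Randomly pick `per_class` sample indices from every class.

    If `per_class` exceeds half of a class's labeled samples, only half of
    that class is used (rounded down, which is an assumption).
    """
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    chosen = {}
    for label, indices in by_class.items():
        if per_class > len(indices) / 2:
            n = len(indices) // 2
        else:
            n = per_class
        chosen[label] = sorted(rng.sample(indices, n))
    return chosen


# Hypothetical label vector: a small class with 20 samples and a large one with 100.
labels = ["small"] * 20 + ["large"] * 100
rng = random.Random(0)
for size in training_sizes():
    picked = select_training_indices(labels, size, rng)
    print(size, {label: len(idx) for label, idx in picked.items()})
```

With 20 labeled samples in the small class, any requested size above 10 is capped at 10, while the large class always contributes the full requested number.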

Conclusion

In this article, a novel MSDFE method has been proposed for HSI classification. In this method, by constructing two-dimensional statistical features, the spatial-spectral features contained within each superpixel block can be naturally fused and effectively extracted. Moreover, the statistical features extracted from superpixel blocks of different shapes share the same size, which facilitates further learning of depth features through a unified CNN model. In addition, the complex structure of ground objects in HSI makes single-scale superpixel segmentation prone to over-segmentation or under-segmentation, which multiscale segmentation can effectively mitigate. Therefore, the proposed method uses multiscale superpixel segmentation to capture information at different scales and fuses it effectively, further enhancing the classification accuracy. Experiments on three real-world HSI datasets show that the proposed MSDFE method outperforms existing classical and state-of-the-art HSI classification methods, especially under small-sample conditions. In future work, we plan to fuse pixel-level features with multiscale superpixel-level features to construct more discriminative features, which may further improve classification performance. Moreover, we will also adaptively select the fusion scales to accommodate different datasets, which can better balance the computational cost and classification performance of the algorithm.
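To illustrate why statistical features of irregular superpixels share a common size, the following minimal sketch computes a band-by-band covariance matrix for each superpixel. It assumes a plain sample covariance as the two-dimensional statistical feature; the exact feature construction in MSDFE may differ, and the function name and the random toy data are hypothetical.

```python
import numpy as np


def superpixel_covariance(cube, segments, superpixel_id):
    """Return the B x B covariance matrix of the spectra inside one superpixel.

    cube:      (H, W, B) hyperspectral image
    segments:  (H, W) integer superpixel label map
    The superpixel must contain at least two pixels.
    """
    # Boolean indexing gathers the pixels of the region as (n_pixels, B),
    # whatever the spatial shape of the region is.
    spectra = cube[segments == superpixel_id]
    # rowvar=False treats each column (spectral band) as a variable.
    return np.cov(spectra, rowvar=False)


# Toy data: a 6 x 6 image with 4 bands and two superpixels of very different sizes.
rng = np.random.default_rng(0)
cube = rng.random((6, 6, 4))
segments = np.zeros((6, 6), dtype=int)
segments[0, :5] = 1  # superpixel 1 has 5 pixels, superpixel 0 has the remaining 31

for sp in (0, 1):
    feature = superpixel_covariance(cube, segments, sp)
    print(sp, int((segments == sp).sum()), feature.shape)
```

Both superpixels yield a 4 x 4 matrix even though they contain 31 and 5 pixels, so features of this kind can be fed to a single CNN with a fixed input size.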

Data availability

The datasets analyzed during the current study are available in https://www.ehu.eus/ccwintco/index.php?title=Hyperspectral_Remote_Sensing_Scenes.

References

Shimoni, M., Haelterman, R. & Perneel, C. Hypersectral imaging for military and security applications: Combining myriad processing and sensing techniques. IEEE Geosci. Remote Sensing Mag. 7, 101–117 (2019).

Gross, W. et al. A multi-temporal hyperspectral target detection experiment: Evaluation of military setups. In Target and Background Signatures VII 11865, 38–48 (SPIE, 2021).

Zheng, Z., Zhang, S., Song, H. & Yan, Q. 3d lightweight spatial-spectral attention network for hyperspectral image classification. In Chinese Conference on Pattern Recognition and Computer Vision (PRCV), 297–308 (Springer, 2023).

Grøtte, M. E., Birkeland, R. & Honoré-Livermore. Ocean color hyperspectral remote sensing with high resolution and low latency–the hypso-1 cubesat mission. IEEE Trans. Geosci. Remote Sens. 60, 1–19 (2022).

Wang, C., Liu, B. & Liu, L. A review of deep learning used in the hyperspectral image analysis for agriculture. Artif. Intell. Rev. 54, 5205–5253 (2021).

He, L., Li, J. & Liu, C. Recent advances on spectral-spatial hyperspectral image classification: An overview and new guidelines. IEEE Trans. Geosci. Remote Sens. 56, 1579–1597 (2017).

He, N., Paoletti, M. E. & Haut, J. M. Feature extraction with multiscale covariance maps for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 57, 755–769 (2018).

Hughes, G. On the mean accuracy of statistical pattern recognizers. IEEE Trans. Inf. Theory 14, 55–63 (1968).

Kumar, B., Dikshit, O. & Gupta, A. Feature extraction for hyperspectral image classification: A review. Int. J. Remote Sens. 41, 6248–6287 (2020).

Hong, D., Yokoya, N. & Chanussot, J. An augmented linear mixing model to address spectral variability for hyperspectral unmixing. IEEE Trans. Image Process. 28, 1923–1938 (2018).

Zhu, X. X., Tuia, D. & Mou, L. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sensing Mag. 5, 8–36 (2017).

Mou, L., Ghamisi, P. & Zhu, X. X. Deep recurrent neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 55, 3639–3655 (2017).

Hang, R., Liu, Q. & Hong, D. Cascaded recurrent neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 57, 5384–5394 (2019).

Zhu, L., Chen, Y., Ghamisi, P. & Benediktsson, J. A. Generative adversarial networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 56, 5046–5063 (2018).

Shi, C. & Pun, C.-M. Superpixel-based 3d deep neural networks for hyperspectral image classification. Pattern Recogn. 74, 600–616 (2018).

Roy, S. K., Krishna, G. & Dubey, S. R. Hybridsn: Exploring 3-d-2-d CNN feature hierarchy for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 17, 277–281 (2019).

Yu, C., Han, R. & Song, M. A simplified 2d–3d CNN architecture for hyperspectral image classification based on spatial-spectral fusion. IEEE J. Select. Topics Appl. Earth Observ. Remote Sensing 13, 2485–2501 (2020).

Lee, H. & Kwon, H. Going deeper with contextual CNN for hyperspectral image classification. IEEE Trans. Image Process. 26, 4843–4855 (2017).

Bhatti, U. A., Yu, Z. & Chanussot, J. Local similarity-based spatial-spectral fusion hyperspectral image classification with deep CNN and gabor filtering. IEEE Trans. Geosci. Remote Sens. 60, 1–15 (2021).

Anand, R. et al. Hybrid convolutional neural network (CNN) for Kennedy space center hyperspectral image. Aerospace Syst. 6, 71–78 (2023).

Paoletti, M. E., Moreno-Álvarez, S., Xue, Y., Haut, J. M. & Plaza, A. Aatt-CNN: Automatic attention-based convolutional neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 61, 1–18 (2023).

Ren & Malik. Learning a classification model for segmentation. In Proceedings Ninth IEEE International Conference on Computer Vision, 10–17 vol.1 (2003).

Zhang, S., Lu, T. & Fu, W. Superpixel-level hybrid discriminant analysis for hyperspectral image feature extraction. IEEE Trans. Geosci. Remote Sens. 60, 1–13 (2022).

Jia, S., Deng, X., Xu, M., Zhou, J. & Jia, X. Superpixel-level weighted label propagation for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 58, 5077–5091 (2020).

Jiang, J., Ma, J. & Chen, C. Superpca: A superpixelwise pca approach for unsupervised feature extraction of hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 56, 4581–4593 (2018).

Zhang, X., Jiang, X. & Jiang, J. Spectral-spatial and superpixelwise PCA for unsupervised feature extraction of hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 60, 1–10 (2021).

Zhao, C., Zhu, W. & Feng, S. Superpixel guided deformable convolution network for hyperspectral image classification. IEEE Trans. Image Process. 31, 3838–3851 (2022).

Zhang, S., Lu, T. & Fu, W. Superpixel-level hybrid discriminant analysis for hyperspectral image feature extraction. IEEE Trans. Geosci. Remote Sens. 60, 1–13 (2022).

Yu, H., Gao, L. & Liao, W. Multiscale superpixel-level subspace-based support vector machines for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 14, 2142–2146 (2017).

Li, S., Ni, L. & Jia, X. Multi-scale superpixel spectral-spatial classification of hyperspectral images. Int. J. Remote Sens. 37, 4905–4922 (2016).

Zhang, S., Li, S. & Fu, W. Multiscale superpixel-based sparse representation for hyperspectral image classification. Remote Sensing 9, 139 (2017).

Dundar, T. & Ince, T. Sparse representation-based hyperspectral image classification using multiscale superpixels and guided filter. IEEE Geosci. Remote Sens. Lett. 16, 246–250 (2018).

Wang, N., Zeng, X. & Duan, Y. Multi-scale superpixel-guided structural profiles for hyperspectral image classification. Sensors 22, 8502 (2022).

Li, J., Sheng, H., Xu, M., Liu, S. & Zeng, Z. Bams-fe: Band-by-band adaptive multiscale superpixel feature extraction for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 61, 1–15 (2023).

Kodinariya, T. M. et al. Review on determining number of cluster in k-means clustering. Int. J. 1, 90–95 (2013).

Wu, K.-L. & Yang, M.-S. Mean shift-based clustering. Pattern Recogn. 40, 3035–3052 (2007).

Ye, Z., Dong, R., Chen, H. & Bai, L. Adjustive decision fusion approaches for hyperspectral image classification. J. Image Graph. 26, 6899–6910 (2021).

Sun, L., Zhao, G. & Zheng, Y. Spectral-spatial feature tokenization transformer for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 60, 1–14 (2022).

Liu, Q., Xiao, L. & Yang, J. CNN-enhanced graph convolutional network with pixel-and superpixel-level feature fusion for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 59, 8657–8671 (2020).

Liu, Q., Xiao, L. & Yang, J. Multilevel superpixel structured graph u-nets for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 60, 1–15 (2021).

Zhang, S., Lu, T. & Li, S. Superpixel-based Brownian descriptor for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 60, 1–12 (2021).

Zhou, H. et al. Attention multihop graph and multiscale convolutional fusion network for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 61, 1–14 (2023).

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 62461027; in part by the Natural Science Foundation of Hunan Province under Grant 2024JJ7393; in part by the Hunan Provincial Education Department Key Research Program under Grant 22A0371; and in part by the Graduate Research Project of Jishou University under Grant Jdy23040.

Author information

Contributions

Conceptualization, Q.Y.; methodology, Q.Y. and S.Z.; software, Q.Y.; validation, Q.Y.; formal analysis, X.C. and Z.Z.; investigation, Q.Y.; writing-review and editing, Q.Y. and S.Z.; visualization, Q.Y. and X.C.; funding acquisition, Q.Y. and S.Z. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yan, Q., Zhang, S., Chen, X. et al. Multiscale superpixel depth feature extraction for hyperspectral image classification. Sci Rep 15, 13529 (2025). https://doi.org/10.1038/s41598-025-90228-4

DOI: https://doi.org/10.1038/s41598-025-90228-4