Abstract

Research on basic emotions has expanded beyond the traditional six categories, identifying over 20 distinct emotional states. However, differentiating some emotions remains challenging because their facial expressions partially overlap. Grief and sadness are two such emotions that are difficult to distinguish. This study investigated the behavioral and neural mechanisms of grief perception in the prefrontal cortex (PFC), comparing it with sadness. Participants categorized emotional facial images in grief and sadness conditions and rated them on valence, arousal, and dominance scales. While participants viewed the emotional facial images, PFC hemodynamic activity was measured using functional near-infrared spectroscopy (fNIRS). Explicit behavioral responses showed no significant differences; however, implicit measures (reaction times) revealed distinctions between grief and sadness perception. Furthermore, fNIRS results indicated increased oxy-Hb in the right dorsolateral PFC for grief condition images compared to sadness condition images. Additionally, cultural differences were observed, with Asian participants showing higher oxy-Hb responses in the dorsal PFC for unpleasant facial stimuli in grief conditions. These findings support cultural variability in emotion perception and regulation. The combination of behavioral reaction time and neuroimaging data suggests distinct automatic, implicit perceptual and neural processing mechanisms for grief and sadness.

Introduction

Conveying your emotions through cues such as body language and facial expressions is an important aspect of interacting with others. You may also choose to explicitly convey your feelings by telling your friends or family members how you feel, thus providing information upon which they can act. Understanding the emotional expressions of others is also important in responding appropriately in a given situation. Darwin’s "The Expression of the Emotions in Man and Animals" laid the foundation for the emotion theory, suggesting the innateness and universality of certain emotions and their expressions across cultures and species1. Since then, much research has been devoted to understanding its complexity. Previous research has suggested the existence of six basic universal emotions. These are anger, disgust, fear, happiness, sadness, and surprise2. These, they argue, have unique facial expressions and physiological correlates, and can be observed in distinct cultural groups, as supported by Ekman3, Panksepp4, and Tracy and Randles5. More recently, Keltner and colleagues6 reported more than 20 distinct facial expressions representing different emotional categories, each with a unique and recognizable configuration. Based on this body of recent research, we can say that there are more than six basic emotions, each with distinctive physiological states and subjective evaluations and prototypical expressions associated with them.

A vital source of information for decoding cues of emotion is the face. However, the perception of emotions in a face can be influenced by a variety of factors, such as visual scenes, voices, bodies, cultural background, and even the words used7. Recent studies have indicated that human faces may not always accurately reflect their self-reported emotional experiences8,9. For example, Aviezer and colleagues10 reported that an identical facial expression can convey different emotions depending on the context. Considering all these studies, it becomes clear that contextual information is necessary when conducting research on emotion perception.

The research presented in this paper centers on understanding human emotions, particularly the similarities and differences between grief and sadness. Grief is a very emotionally challenging experience that, when viewed superficially, resembles other emotions, particularly sadness11. Perhaps that is why so many of us use the words sadness and grief interchangeably. In this study, we examined the behavioral mechanisms and neural correlates of grief perception in the PFC and compared them with those of sadness. Grief occurs after the loss of a closely attached individual and is irreversible, while sadness is a low-arousal emotion generally associated with failure and powerlessness12. As one of the basic emotions, sadness has been studied extensively, although the results are more mixed than for other basic emotions. In the following sections, we describe the background of this research.

Grief

Grief is defined as a psychobiological response to bereavement whose hallmark is a combination of yearning and sadness, along with thoughts, memories, and images of the deceased person13. Few studies have investigated the neural mechanisms of grief. In one study, grief was elicited by photographs of the deceased versus a stranger, combined with words specific to the death event versus neutral words. The photos activated the superior lingual gyrus, insula, dorsal anterior cingulate cortex, inferior temporal gyrus, and fusiform gyrus, whereas the words activated the precuneus, precentral gyrus, midbrain, and vermis14. Another study explored the brain areas associated with mourning by inducing it with iconography (symbols used to represent an idea) and revealed activation of cortical areas commonly observed in studies of social cognition (temporoparietal junction, superior temporal gyrus, and inferior temporal lobe), empathy for pain (somatosensory cortex), and loss (precuneus, middle/posterior cingulate gyrus)15. Limitations of these studies on the biological basis of grief include small sample sizes, culture-specific stimuli, and samples drawn only from Western populations, which limits generalizability.

Sadness

Sadness, a basic emotion, has been extensively studied across cultures with mixed findings16,17. Its characteristics are considered the least clear among basic emotions. Previous research has shown that sadness can decrease peripheral nervous system activity while increasing analytical thinking abilities16,17. Exposure to sadness-inducing stimuli typically increases physiological responses like skin conductance and heart rate18,19,20,21,22. Brain imaging studies associate sadness with various regions: the subgenual frontal cortex (affective change and cardiovascular control), posterior cingulate cortex (executive and cognitive processes), prefrontal cortex (decreased activity in higher-order executive functions), and temporal lobe and insula, including the right amygdala (increased activity, linked to physiological arousal and automatic emotional processing)23. These findings suggest complex neural and physiological mechanisms underlying sadness experience and perception.

Differences between grief and sadness

Human experience includes inevitable and universal feelings of sadness and grief. While the two terms are often used interchangeably because they are conceptually and phenomenally so similar, it is important to understand that they are not the same. In his book "The Expression of the Emotions in Man and Animals," Darwin made a distinction between grief and sorrow, describing grief as “frantic” and “energetic” and sorrow as more “resigned” and “languid.” However, in contemporary emotion research, this distinction is often overlooked, and sadness is typically viewed as a singular, basic emotion. In the twentieth century, a small number of studies attempted to understand the difference between grief and sadness and reported that they are different and have distinct functions24. Parker and colleagues25 defined sadness as a temporary feeling of sorrow without decreased self-esteem, while grief involves heartache from a seemingly permanent break in a social bond. Recent investigations have demonstrated that loss-related and failure-related sadness produce distinct subjective evaluations, physiological reactions, and emotional word encodings20,26. Furthermore, Tsikandilakis and colleagues27 identified melancholy, misery, bereavement, and despair as distinguishable emotional states associated with sadness, each exhibiting dissociable physiological responses. These findings underscore the complexity and nuanced nature of sadness and grief as separate emotional experiences.

Present study

Our study used functional near-infrared spectroscopy to investigate the neural mechanisms of grief and sadness, alongside behavioral responses. To our knowledge, only one prior brain imaging study, by Shirai and Soshi28, has attempted to differentiate these emotions, using EEG. Their research examined the neurological processing of loss-related and failure-related sadness, finding distinct middle-phase neurophysiological processing for each. Failure contexts showed greater contextual conflict, while loss contexts demonstrated stronger congruency effects under sadness bias. Late-phase neural processing, however, supported similarities between these emotions. It is important to recognize certain constraints of the study conducted by Shirai and Soshi28. First, the onomatopoeic emotional words used in that research conveyed the manner of crying and were formulated within the context of Japanese culture. Given that the manner of crying may differ across cultures29, this could influence the differentiation between sadness subtypes, leading to different patterns of event-related potential (ERP) effects in different cultural groups.

In our study, we define loss-related sadness as a separate concept or emotional state that is best categorized as grief. Failure-related sadness can be defined or categorized as sadness. While long-term sadness can result in depression, little is known about distinguishing sadness from grief, which can lead to complicated grief. Understanding the differences between these emotions and their neural correlates can help with related disorders and long-term treatment.

Research aim

To date, no standard method has been developed to study grief’s basic behavioral and neural mechanisms and attempt to differentiate it from other overlapping emotions. Considering the importance of contextual cues based on previous research, in this study, faces expressing basic emotion sadness were presented as a target, preceded by contextual prime stimuli (graveyards/caskets for grief condition, natural neutral scenes for sadness condition). We aimed to differentiate grief and sadness in behavioural and neural responses. fNIRS was applied to the PFC region to explore underlying neural mechanisms, measuring oxy/deoxy hemoglobin correlated with cortical neural activity.

Results

Behavioral results

Weak association between stimulus type and emotion categorization in grief and sadness perception

The results, illustrated in Fig. 1, show the effect of stimulus type. A chi-square analysis showed a significant association between stimulus type (sadness and grief images) and categories (Sadness, Anger, Disgust, Happiness, Fear, Grief, Neutral, Surprise), χ2 (7, N = 840) = 18.68, p < 0.01. However, post hoc tests (Bonferroni) did not show any difference between grief stimuli categorized with the emotional word grief and sadness stimuli categorized with the emotional word sad. Furthermore, we computed Cramér's V to measure the strength of association between stimuli and emotion categorization given the eight categorization options, and the results showed a very weak association between stimuli and categorization (Cramér's V = 0.1496).
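As an illustration of how the effect size relates to the test statistic, the sketch below recomputes Cramér's V from the reported χ2 and N using the standard formula V = sqrt(χ2 / (N · (min(rows, columns) − 1))). The 2 × 8 table shape (two stimulus types by eight categories) is inferred from the description above, and the function name `cramers_v` is hypothetical; this is not the authors' analysis script.

```python
import math


def cramers_v(chi2: float, n: int, n_rows: int, n_cols: int) -> float:
    """Cramér's V for an n_rows x n_cols contingency table."""
    k = min(n_rows, n_cols) - 1  # smaller table dimension minus one
    if n <= 0 or k <= 0:
        raise ValueError("need n > 0 and at least a 2 x 2 table")
    return math.sqrt(chi2 / (n * k))


# Reported values: chi2 = 18.68, N = 840.
# Assumed table shape: 2 stimulus types x 8 response categories.
v = cramers_v(18.68, 840, n_rows=2, n_cols=8)
print(round(v, 2))  # 0.15
```

To two decimal places this agrees with the reported value, and it falls in the range conventionally read as a weak association.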

Valence, arousal, and dominance ratings indicate no difference between grief and sadness

Wilcoxon signed rank tests showed significant differences between positive and neutral stimuli in valence (Z = 4.02, p < 0.001) and arousal ratings (Z = 3.01, p < 0.002). Positive stimuli were rated as more pleasant and arousing than neutral stimuli. However, no significant difference was found in dominance ratings (Z = 0.8, p < 0.41) (Figs. S2–S4). In contrast, comparisons between grief and sadness stimuli showed no statistically significant differences in valence (Z = 0.6, p < 0.5), arousal (Z = 0.7, p < 0.9), or dominance (Z = 1.2, p < 0.2) ratings. Participants reported similar emotional experiences for both conditions, suggesting a lack of distinguishable impact between grief and sadness stimuli on these emotional dimensions (Figs. S5–S7).

Significantly faster reaction times to grief vs. sadness condition emotional facial images

While explicit behavioral responses showed no significant differences, our extended analysis of an implicit behavioural measure, reaction time (RT), revealed important distinctions. RT results (Wilcoxon signed rank test) for valence, arousal, and dominance demonstrated significant differences between grief and sadness conditions. Participants consistently responded faster to grief-related stimuli across all measures (valence: V = 21, p = 0.0004; arousal: V = 31, p = 0.0021; dominance: V = 19, p = 0.0002), suggesting enhanced cognitive processing for grief-related content (Fig. 2a–c). We also observed faster RTs for positive target stimuli than for neutral stimuli in the control conditions (Fig. S8a–c).
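For readers unfamiliar with the V statistic, the following minimal sketch computes it for paired data as the sum of the ranks of the positive differences, with zero differences dropped and tied absolute differences given average ranks (the convention used by, for example, R's `wilcox.test`). The reaction times are made-up values in milliseconds for six hypothetical participants, not data from this study, and the function name `signed_rank_v` is an assumption.

```python
def signed_rank_v(x, y):
    """Wilcoxon signed-rank V: sum of ranks of the positive paired differences x - y."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return sum(r for r, d in zip(ranks, diffs) if d > 0)


# Hypothetical RTs in ms (illustration only).
grief_rt = [1800, 2100, 1900, 2400, 2000, 1700]
sad_rt = [2300, 2000, 2600, 2900, 2800, 2100]
print(signed_rank_v(grief_rt, sad_rt))  # 1.0
```

With differences taken as grief minus sadness, a V far below its maximum of n(n + 1)/2 means that most participants were faster in the grief condition. The order of subtraction used in the reported tests is not stated, so that reading is an assumption.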

fNIRS results

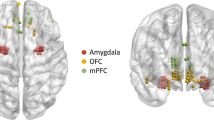

The results of the fNIRS analysis showed region-specific PFC activity in response to emotion-eliciting images. Region R6, corresponding to the right PFrd (Fig. 3), showed significantly higher oxy-Hb for grief-related stimulus conditions than for sadness (linear mixed model [LMM], p < 0.0001; post hoc (Tukey), GR-SD, estimate = −56, p < 0.0001) (Table 1, Figs. 4 and 5). This effect was observed in the dorsal PFC region, roughly corresponding to Brodmann areas 10/9/8. However, such differences were not observed for deoxy-Hb (Table 2). Given these preliminary results, we suggest that the overlapping emotions grief and sadness differ at the level of neural processing.

Diagram illustrating the emitter and detector positions. The red square indicates the emitters, and the blue circles indicate the positions of the detectors, which are located 3 cm apart from the emitters. 1–10 indicate the areas between emitters and detectors that have been recorded. The right side of the image shows the corresponding regions (1–10) being recorded.

Radar chart showing the mean difference of Oxy-Hb (a) and Deoxy-Hb (b) change for grief (red) and sadness (green) conditions for all participants across all channels. R1 to R10 represent the recorded brain regions/sites (for details, see Fig. 3).

Furthermore, we identified a significant main effect of ethnicity (LMM, estimate = −52.92, SE = 18.20, p = 0.009), as well as an interaction between stimuli and ethnicity (LMM, estimate = 54.20, SE = 7.07, p < 0.001), on oxy-Hb levels in the dorsal PFC region (Table 1; Fig. 6). Post hoc analysis using Tukey’s method for p-value adjustment revealed that Asian participants exhibited a significantly higher oxyhemoglobin (oxy-Hb) response to facial stimuli preceded by death-related images (grief condition) than to facial stimuli preceded by neutral images (sad condition) (estimate = 56.91, SE = 5, p < 0.0001). Additionally, significant differences were observed between Asian and Western participants. The oxy-Hb response of Asian participants to grief condition images was notably higher than the response of Western participants to grief condition images (estimate = 52.92, SE = 18.20, p = 0.042) and to sad condition images (estimate = 55.63, SE = 18.21, p = 0.031).
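The reported coefficients and post hoc contrasts can be related to each other with simple arithmetic. The sketch below assumes treatment (dummy) coding with Asian participants in the grief condition as the reference cell, and assumes that the stimulus coefficient equals the sign-flipped Asian grief-versus-sad contrast (−56.91). Neither assumption is stated in the text, so this is only a consistency check and not the authors' model specification. The function name `cell_offset` is hypothetical.

```python
# Reported LMM estimates for oxy-Hb in the dorsal PFC.
ETHNICITY = -52.92    # main effect of ethnicity (assumed: Western vs Asian)
INTERACTION = 54.20   # stimulus x ethnicity interaction
# Assumption: stimulus effect (sad vs grief) in the reference group,
# taken from the reported Asian grief-vs-sad post hoc contrast.
STIMULUS = -56.91


def cell_offset(sad: bool, western: bool) -> float:
    """Predicted oxy-Hb relative to the assumed reference cell (Asian, grief)."""
    value = STIMULUS * sad + ETHNICITY * western + INTERACTION * (sad and western)
    return round(value, 2)


print(cell_offset(sad=False, western=True))  # -52.92
print(cell_offset(sad=True, western=True))   # -55.63
```

Under these assumptions, the Western grief and Western sad cells lie 52.92 and 55.63 below the Asian grief cell, which matches the magnitudes of the two between-group contrasts reported above.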

Discussion

This study investigated the behavioral and neural mechanisms of grief and sadness, two similar but distinct emotions. Previous research demonstrates that contextual information significantly influences emotion perception, even in early stages10. We hypothesized that grief-related contextual cues (graveyards/caskets) would lead to categorization of negative facial expressions as grief, while neutral contextual images would result in sadness categorization. We further expected each emotion to show a different neural signature, depending on the context with which it was associated.

Unlike previous studies, our behavioral data showed no significant difference in sad and grief emotion categorization despite contextual cues. This could be due to the separate presentation of context and faces, which reduces ecological validity compared to studies like that of Aviezer and colleagues10, where emotional faces and context were paired, making the stimuli more natural and ecologically relevant. Additionally, cultural and linguistic factors may influence emotion categorization, primarily through top-down mechanisms. In our study, this potential effect was considered by recruiting both Japanese and Caucasian participants. While the Japanese language distinguishes between sadness (かなし, ‘kanashi’) and grief (悲嘆, ‘hitan’)30, the everyday usage of these terms may not consistently reflect this linguistic differentiation. This discrepancy between formal language and colloquial use could impact how participants categorize and process these emotions.

While explicit behavioral responses for valence, arousal, and dominance showed no significant differences, our extended analysis of an implicit behavioural measure, reaction time (RT), revealed important distinctions. RT results for valence, arousal, and dominance demonstrated significant differences between grief and sadness conditions. Participants consistently responded faster to grief-related stimuli across all three measures, suggesting enhanced cognitive processing for grief-related content. These findings corroborate previous research on basic emotion recognition31, which reported significant RT differences across basic emotions, with sadness eliciting slower responses compared to other emotions. The observed discrepancy in implicit (RT) measures may indicate subtle processing differences between grief and sadness. Furthermore, the faster response times to grief-condition images compared to sadness-condition images extend Carroll and Russell’s32 concept that context significantly influences emotion perception. This supports the view that situational information can override facial cues in emotional interpretation, highlighting the nuanced nature of emotional recognition and the importance of considering multiple information sources in emotion research. The faster response times to grief-condition images may also be due to the specificity of the emotional context, with grief uniquely associated with loss, facilitating rapid cognitive processing. Finally, the discrepancy between explicit and implicit measures in our study aligns with previous research33, suggesting that implicit measures can effectively capture emotional states when explicit self-reports may not. Mauss and Robinson34 proposed that implicit and explicit measures capture distinct aspects of emotion. These findings underscore the importance of employing both measurement types in emotion research for comprehensive insights.

Our fNIRS data showed a significant difference between grief and sadness conditions. Brain imaging results showed significantly increased oxygenated hemoglobin in the right dorsolateral prefrontal cortex (dlPFC) in response to grief image conditions compared to sadness images. Previous studies using fNIRS have shown that oxy-Hb levels increase in the PFC region in response to the experience, recognition, and regulation of negative emotions35,36,37,38,39,40. Danushka and colleagues built a model using fNIRS data to demonstrate its capability for discriminating between affective states on valence and arousal, and their model’s prediction of valence relied heavily on Brodmann regions 9 and 4641, which correspond to the dorsolateral prefrontal cortex (DLPFC). They concluded that the reason could be the involvement of the DLPFC in emotion regulation. It is worth pointing out that in the current experiment, participants were instructed to categorize or label the external stimuli rather than their own internal affective state. Affect labeling is a process that involves consciously converting either one’s internal emotional experience or an external evocative stimulus into a linguistic symbol, which can be challenging. Despite the effort needed, affect labeling can serve as an implicit form of emotion regulation. This is because its counterintuitive effects can occur without conscious awareness42. Therefore, while affect labeling may feel like an explicit process due to the effort involved, it can still be considered a form of emotion regulation. In the present study, participants had to categorize the emotion (a form of labeling) that might have caused automatic reappraisal and regulation of their emotion subconsciously, resulting in increased oxy-Hb for the grief condition in comparison to sadness. Thus, these results suggest that grief and sadness are different at least at the level of engagement of the dlPFC.

One can speculate that this after effect may be associated with priming stimuli rather than face stimuli. Given the time sensitivity of electroencephalography (EEG), in an event-related potential study, researchers investigated how emotional scenes impact the early processing of faces. They found that the N170 component, associated with face processing, showed larger amplitudes for faces in fearful scenes compared to happy and neutral scenes. Notably, N170 amplitudes were significantly higher for fearful faces within fearful scenes than for fearful faces in happy scenes. They concluded that facial expressions and scene context are combined during early face processing stages43. However, there exists a distinction between the present study and the aforementioned research, as the previous study introduced face and context stimuli simultaneously, whereas we presented a priming stimulus independent of the target stimulus. Additionally, we incorporated an interstimulus interval of two seconds, effectively eliminating the influence of contextual stimuli on the initial processing of facial targets. It is worth noting that fNIRS, in comparison to EEG, suffers from a limited temporal resolution, which poses challenges in capturing millisecond temporal changes. However, these findings suggest that death-related primes can significantly influence neural responses to facial stimuli, even with temporal separation, highlighting the enduring impact of emotional context on face processing.

In the current study, we also observed hemispheric asymmetry; a significant effect was found only in the right hemisphere. Such results partially support the right hemisphere hypothesis (dominance of the right hemisphere for processing all emotions) and valence-specific hypothesis that explains the relative dominance of the right hemisphere for negative affect processing44,45. These findings align with previous studies showing elevated BOLD signals in the right DLPFC for unpleasant emotional experiences46 and increased activation in the right frontopolar region for negative emotions47. Additionally, our results corroborate fMRI studies associating downregulation of negative emotion with increased right lateral PFC activity48. While in previous study participants were not explicitly instructed to downregulate emotions, this may reflect a natural tendency to reduce negative emotional experiences.

Additionally, we also observed cultural differences in the emotion perception of the presented stimuli. Ethnicity significantly impacted oxy-Hb levels in the dorsal PFC, with an interaction between stimuli and ethnicity. Asian participants showed higher oxy-Hb responses in the dorsal PFC for facial stimuli preceded by death-related images (grief condition) compared to neutral images (sad condition). They also demonstrated significantly higher oxy-Hb levels than Western participants in response to grief condition images. This effect persisted when comparing Asian participants’ responses to grief conditions with Western participants’ responses to sad conditions. The ongoing debate on cultural differences in emotion perception explores the universality hypothesis, which suggests that basic emotions are universally expressed and recognized, and the social constructivist view, which argues that different human groups adapt to their unique environments, resulting in cultural variability in emotions. In this study, disparity in culture might be associated with the latter. Previous research by Masuda and colleagues49 revealed that Asian individuals, specifically Japanese individuals, have a broader focus when assessing others’ emotions compared to Westerners, who primarily rely on facial expressions without considering contextual information. This suggests that cultural variability influences emotion perception, with Japanese individuals being more sensitive to emotional context while Americans show lower sensitivity. Further, the disparity in emotional face perception between Asian and Western participants may be attributed to cultural factors such as display rules, cultural norms, and emotional accents. Western cultures encourage the expression of emotions, leading to more intense displays of negative emotions. 
Therefore, Westerners may anticipate greater intensity in negative emotional displays, which may influence their perception of emotional faces following death-related primes.

Overall, while explicit behavioral responses for categorization along with valence, arousal, and dominance showed no significant differences, interestingly implicit behavioural measures revealed important distinctions between grief and sadness. Further, our brain imaging results showed significantly increased oxygenated hemoglobin in the right dorsolateral prefrontal cortex in response to grief images compared to sadness images. Additionally, cultural differences in the emotion perception of the presented stimuli shed light on the cultural variability in the perception of these two emotions (grief vs sadness).

This study was subject to several limitations. First, the relatively small sample size was a notable constraint. However, this was mitigated by a high-density data collection design, which yielded 200 data points per block per participant. This approach aligns with neuroimaging research practices, where numerous observations per participant often compensate for smaller sample sizes50. Robust statistical methods, including mixed-effects models suited for nested data structures, were utilized to account for both within-subject and between-subject variability. Second, the age range of the participants was limited, with the majority being around 30 years old, which restricts the generalizability of the results. Further studies are needed with a wider age spectrum, encompassing young adults and older individuals, to enhance the generalizability of the results. Third, the study was constrained to two categories of contextual images: neutral and death-related. This limitation was partly to avoid long trials due to concerns about potential headaches from prolonged fNIRS cap use. In future research, a more comprehensive understanding of how context affects perception of sadness and grief emotion could be achieved by incorporating a broader range of contextual images, including positive ones. Future studies could benefit from integrating and comparing facial images displaying different emotions with a range of contextual stimuli (e.g., death-related, neutral, and positive) to yield more nuanced outcomes.

Conclusion and implications

Together, the behavioral reaction time and neuroimaging data indicate perceptual and neural processing differences between grief and sadness, despite similarities in conscious emotion reporting. These findings suggest distinct automatic implicit mechanisms for these emotions. However, generalizing these findings requires caution. Further research is encouraged to investigate these emotions as potentially distinct entities, utilizing varied approaches, stimuli, and rigorous testing protocols to validate and expand upon these observations.

Our findings suggest questioning the long-lasting basic emotion approach and rethinking having more than six emotions that are perceived and regulated by a separate set of neural circuits, resulting in different brain activations for each. Some recent studies have attempted to differentiate these overlapping emotions20,27, with the purpose of differentiating sadness into subtypes. This can have numerous implications in the context of differentiating emotional states and further in clinical applications in diagnosing emotion-related disorders such as depression and prolonged grief disorder. These observed areas in response to grief and sadness stimuli can be used as biomarkers for understanding the perception of grief and sadness, along with the regulation of these emotions in the normal population and compared with the clinical population for further development of newer treatments.

Methods

Participants

Twenty-one participants, twelve females and nine males, with ages ranging from 23 to 41 (28.95 ± 4.52; mean ± SD), participated in the experiment. Among all, eleven were from Asian cultural backgrounds (Chinese and Japanese students), and ten participants were from Western cultural backgrounds (Table 3). All the participants were right-handed and had normal vision or corrected-to-normal vision. Before participating in the experiment, each participant received a form for reporting any psychological and neurological disorders and was only included for participation when reported to be free from any abnormalities.

The human ethics committee of Kyoto University Primate Research Institute approved the project. Prior to the experiment, all participants provided informed consent and received a monetary incentive of 1000 JPY (equivalent to approximately 8 USD). They were instructed to avoid consuming coffee for at least two hours before the start of the experiment.

Materials and equipment

Our study comprised two experimental conditions (grief and sadness) and two control conditions (positive and neutral). The stimuli for the experimental conditions consisted of forty facial images expressing sadness. Twenty of these negative-valence faces were Caucasian, ten were Chinese, and ten were Japanese. The Caucasian images were taken from “The Karolinska Directed Emotional Faces” (KDEF) database, a set of pictures of humans displaying seven facial emotional expressions (neutral, happy, angry, afraid, disgusted, sad, surprised) from five angles (full left profile, half left profile, straight, half right profile, full right profile)51. From this database, we chose twenty straight-angle pictures expressing sadness, balanced for model sex (10 male and 10 female models). To balance the stimuli between Asians and Caucasians, the remaining twenty images were drawn from Chinese and Japanese databases. For the Chinese pictures, we used the “Tsinghua facial expression database,” which comprises Chinese young and older adults displaying eight facial emotional expressions: neutral, happiness, anger, disgust, surprise, fear, content, and sadness52. From this database, we selected ten images of young Chinese adults displaying sadness. For the Japanese pictures, we used “The Japanese Female Facial Expression (JAFFE)” database, which contains images of seven facial expressions (6 basic facial expressions + 1 neutral); from it, we selected ten images displaying sadness53. In addition to the facial images, twenty non-social priming/contextual images were employed: ten death-related (e.g., caskets, graveyards) and ten neutral (e.g., fields, streets) images, all sourced from the internet and matched for size, resolution, and brightness. Emotional responses were assessed using the Self-Assessment Manikin (SAM; Fig. S1) scale54, which measures valence, arousal, and dominance.
The control conditions comprised forty facial images (twenty positive, twenty neutral) from the same databases as the experimental conditions. Unlike the experimental conditions, the control conditions used no contextual images. Functional near-infrared spectroscopy (fNIRS) was used across all conditions to measure hemodynamic activity in the brain, with the control conditions serving to validate fNIRS as an appropriate measure for distinguishing emotional and non-emotional states. The experiment totaled eighty trials divided into four blocks, each defined by stimulus type (positive, neutral, grief, sadness) and consisting of twenty trials.

Procedure

Participants were seated in front of a computer screen and given instructions (see S1 for details). In the experimental conditions (grief and sadness), each trial began with a white central fixation cross displayed for 300 ms, followed by a 300 ms presentation of the prime stimulus. After a 2-s inter-stimulus interval (ISI), the target stimulus (emotional facial image) was presented for 20 s (Fig. 7). In the control conditions the trial sequence was the same, except that the priming stimulus was omitted. Stimuli were presented in pseudorandom order within a block, and the order of the blocks was counterbalanced across participants. Participants were instructed to perceive and evaluate the emotional facial images using the 9-point SAM scale (Fig. S1) across three dimensions: valence (1 most unpleasant to 9 most pleasant), arousal (1 most relaxing to 9 most stimulating), and dominance (1 most submissive to 9 most dominant). They were also instructed to categorize each facial expression using one of eight given options: Sadness, Anger, Disgust, Happy, Fear, Grief, Neutral, Surprise. While participants observed the target images, oxygenated and deoxygenated (oxy and deoxy) hemoglobin (Hb) dynamics in the PFC were recorded using fNIRS (Fig. 3) to investigate hemodynamic changes across the experimental conditions. Participants were given a tutorial and had the chance to practice before the main experiment. After each block, all participants were given a break to rest and to maintain their performance.
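The trial timing described above can be laid out as a simple timeline. The following is a minimal sketch rather than the presentation script used in the study: the durations come from the text, while the function names are hypothetical and the assumption that control trials retain the 2-s ISI (with only the prime removed) is ours.

```python
# Durations in milliseconds, as stated in the Procedure text.
FIXATION_MS = 300
PRIME_MS = 300
ISI_MS = 2000
TARGET_MS = 20000


def trial_timeline(has_prime):
    """Return a list of (event, onset_ms, duration_ms) tuples for one trial.

    has_prime=True  -> experimental trial (grief or sadness condition)
    has_prime=False -> control trial; ASSUMPTION: the ISI is kept and only
                       the prime is dropped.
    """
    events = [("fixation", FIXATION_MS)]
    if has_prime:
        events.append(("prime", PRIME_MS))
    events.append(("isi", ISI_MS))
    events.append(("target", TARGET_MS))

    timeline = []
    onset = 0
    for name, duration in events:
        timeline.append((name, onset, duration))
        onset += duration
    return timeline


def trial_duration_ms(has_prime):
    """Total duration of one trial, excluding the rating phase."""
    _, onset, duration = trial_timeline(has_prime)[-1]
    return onset + duration


if __name__ == "__main__":
    for event in trial_timeline(has_prime=True):
        print(event)
    print("experimental trial:", trial_duration_ms(True), "ms")
    print("control trial:", trial_duration_ms(False), "ms")
```

Under these assumptions the target face appears 2.6 s after trial onset in the experimental conditions and 2.3 s after trial onset in the control conditions.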

Basic emotions are considered universal and recognizable from facial expression alone, whereas emotions such as grief often require contextual cues for accurate interpretation. Previous studies have shown that emotion perception is malleable and context dependent. The same facial expression can be categorized differently depending on contextual cues10,43, highlighting the interplay between visual processing of facial features and cognitive integration of contextual information in emotion recognition. We hypothesized that altering the contextual information would lead to the same sad facial expression being perceived differently. In the grief condition, we presented images of caskets and graveyards, which are associated with death, to potentially shift the perception of sad faces towards grief. Conversely, in the sadness condition, we displayed neutral natural scenes that provided no additional contextual information. We assumed that, without relevant contextual cues, participants would perceive the faces as expressing sadness based solely on facial information, given that sadness is a basic emotion typically recognizable from facial expressions alone.

Behavioral data analysis

For the behavioral data, a chi-square test was performed to test for an association between the presented emotional stimuli and emotion categorization. We hypothesized that presenting similar unpleasant facial stimuli (targets) together with contextual information associated with either grief or sadness would lead participants to categorize the faces as the emotion associated with the specific context in which the target was presented.

H0: Contextual information and facial stimuli are independent, thus resulting in no difference in categorization of sadness and grief along with no difference in neuronal activity.

H1: Contextual information and facial stimuli are associated, thus resulting in differences in the categorization of sadness and grief along with neuronal activity differences.
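To make the test of H0 against H1 concrete, the sketch below computes the chi-square statistic of independence for a contingency table of condition by chosen category. It is an illustration only: the counts are invented for the example and are not data from this study, and the function name is hypothetical.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table.

    table: list of rows, each a list of observed counts.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    if n == 0 or 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs at least one observation")

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected

    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, dof


if __name__ == "__main__":
    # HYPOTHETICAL counts: rows are context (grief, sadness),
    # columns are the category chosen ("Grief", "Sadness").
    counts = [[30, 10],
              [10, 30]]
    stat, dof = chi_square_independence(counts)
    print(f"chi-square = {stat:.2f}, dof = {dof}")
```

The statistic is then compared with the chi-square distribution with the returned degrees of freedom; a large value relative to that distribution is evidence against H0.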

To investigate potential differences in participants’ evaluations of valence, arousal, and dominance between the grief and sadness condition stimuli, as well as in the associated reaction times (RT), we employed a Wilcoxon signed-rank test. Because the control conditions (positive and neutral stimuli) lacked contextual images, they were not directly comparable to the experimental conditions (grief and sadness). We therefore analyzed the control conditions separately using the same Wilcoxon signed-rank test.
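For readers unfamiliar with the procedure, the following is a minimal sketch of a paired Wilcoxon signed-rank test, not the authors' analysis code (the analyses were run in R). The ratings are invented for illustration, and the p-value uses a plain normal approximation without tie or continuity correction, which is rough for small samples.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Paired Wilcoxon signed-rank test.

    Returns (w_plus, w_minus, z, p_two_sided). Zero differences are dropped;
    tied absolute differences receive average ranks.
    """
    if len(x) != len(y):
        raise ValueError("x and y must be paired and of equal length")
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, averaging ranks within ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)

    # Normal approximation (ASSUMPTION: no tie or continuity correction).
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (min(w_plus, w_minus) - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))
    return w_plus, w_minus, z, p


if __name__ == "__main__":
    # HYPOTHETICAL valence ratings from five participants.
    grief = [3, 2, 4, 3, 5]
    sadness = [4, 2, 3, 5, 2]
    print(wilcoxon_signed_rank(grief, sadness))
```

The same function applies unchanged to reaction times, since the test only uses the signs and ranks of the paired differences.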

fNIRS data acquisition and processing

An fNIRS device (NIRO-200, Hamamatsu Photonics K.K., Japan) with eight probes allowing ten points of measurement was used to measure oxygenation and deoxygenation of blood hemoglobin in the left and right prefrontal cortical regions (Fig. 3). The experimental conditions followed a 2 (blood hemoglobin: oxy-Hb and deoxy-Hb) × 2 (images: grief (GR); sadness (SD)) × 10 (channels/regions) factorial design. The control conditions followed a 2 (blood hemoglobin: oxy-Hb and deoxy-Hb) × 2 (images: neutral (NU); positive (POS)) × 10 (channels/regions) factorial design. The fNIRS system employed two emitters with laser pulse wavelengths of 775, 810, and 850 nm, and eight detectors, positioned over the frontal lobe with a 3 cm source-detector separation. This configuration allowed simultaneous recording of oxygenated (oxy-Hb) and deoxygenated (deoxy-Hb) hemoglobin concentrations at a sampling rate of 0.5 Hz while participants observed the emotional facial images.

fNIRS data analysis

Data preprocessing involved three key steps. First, unresponsive channels caused by poor scalp coupling were identified by straight-line patterns in the time-series graph and excluded from further analysis. Second, artifacts (sudden amplitude changes) were corrected based on visual inspection of the raw time-series data. Finally, a 20-s moving average was applied to attenuate high-frequency components such as motion artifacts. All data analysis was performed in R (version 3.6.2)55, and the graphs were created using the ggplot2 package in R.
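The smoothing step can be sketched as follows. This is an illustration under stated assumptions, not the authors' R code: at the 0.5 Hz sampling rate reported for the fNIRS recording, a 20-s window spans 10 samples, and we assume a trailing window that only returns values once a full window is available. The function name and the sample values are hypothetical.

```python
def moving_average(signal, window_s=20.0, sampling_hz=0.5):
    """Moving average over full windows only.

    window_s * sampling_hz gives the window length in samples
    (20 s at 0.5 Hz -> 10 samples). Returns len(signal) - window + 1 values,
    or an empty list if the signal is shorter than one window.
    """
    window = int(round(window_s * sampling_hz))
    if window < 1:
        raise ValueError("window must cover at least one sample")
    if len(signal) < window:
        return []

    running_sum = sum(signal[:window])
    smoothed = [running_sum / window]
    for i in range(window, len(signal)):
        # Slide the window by one sample: add the new value, drop the oldest.
        running_sum += signal[i] - signal[i - window]
        smoothed.append(running_sum / window)
    return smoothed


if __name__ == "__main__":
    # HYPOTHETICAL oxy-Hb samples (arbitrary units), 12 samples = 24 s at 0.5 Hz.
    oxy_hb = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
    print(moving_average(oxy_hb))
```

A centered window, or padding at the edges, would be an equally plausible reading of the text; the source does not specify which variant was used.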

To validate fNIRS as a measure for differentiating the neural mechanisms in prefrontal cortex (PFC) regions that underlie the perception of affective (positive) and non-affective (neutral) stimuli in the control conditions, we employed linear mixed-effects models (LMMs). Hemodynamic response (HDR) served as the dependent variable, with control condition (positive and neutral facial images) and channel as predictor variables. Participant number was included as a random factor to account for individual variability. This approach allowed us to assess the sensitivity of fNIRS in detecting distinct neural activation patterns associated with the processing of affective and non-affective visual stimuli.

Our main interest was in the experimental conditions, namely the neural mechanism of grief perception in the PFC and its comparison with an overlapping emotion, sadness. LMMs were run using the lme function (nlme R package), with HDR as the dependent variable; emotion condition (facial images in the sadness and grief blocks), channel (PFC region), and ethnicity as predictor variables; and participant number as a random factor. The values for the 10 channels were not entered into the model simultaneously; rather, each channel was entered individually. Post hoc Tukey’s HSD tests were used to explore the specific effects of the predictor variables on HDR. LMMs were compared using Akaike’s information criterion (AIC), R² values, and ANOVA.
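As a reading aid, one plausible form of the per-channel model is written out below. It is a sketch under assumptions: the text specifies the predictors and the random factor but not whether interaction terms or random slopes were included, so only main effects and a random intercept per participant are shown. Here i indexes participants and j indexes observations within a participant.

```latex
\begin{align}
\mathrm{HDR}_{ij} &= \beta_0 + \beta_1\,\mathrm{Condition}_{ij} + \beta_2\,\mathrm{Ethnicity}_{i} + u_i + \varepsilon_{ij},\\
u_i &\sim \mathcal{N}(0,\sigma_u^2), \qquad \varepsilon_{ij} \sim \mathcal{N}(0,\sigma^2),\\
\mathrm{AIC} &= 2k - 2\ln\hat{L}.
\end{align}
```

In the AIC expression, k is the number of estimated parameters and \hat{L} is the maximized likelihood of the fitted model; when candidate models are compared, the one with the lower AIC is preferred.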

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Darwin, C. The Expression of the Emotions in Man and Animals (Murray, 1872).

Ekman, P. & Friesen, W. V. Constants across cultures in the face and emotion. J. Pers. Soc. Psychol. 17, 124–129 (1971).

Ekman, P. Facial expressions. Handb. Cogn. Emot. 16, 301–306 (1999).

Panksepp, J. Cross-species affective neuroscience decoding of the primal affective experiences of humans and related animals. PLoS ONE 6(9), e21236 (2011).

Tracy, J. L. & Randles, D. Four models of basic emotions: a review of Ekman and Cordaro, Izard, Levenson, and Panksepp and Watt. Emot. Rev. 3(4), 397–405 (2011).

Keltner, D., Sauter, D., Tracy, J. & Cowen, A. Emotional expression: Advances in basic emotion theory. J. Nonverbal Behav. 43, 133–160 (2019).

Barrett, L. F., Mesquita, B. & Gendron, M. Context in emotion perception. Curr. Dir. Psychol. Sci. 20(5), 286–290 (2011).

Dols, J. M. F. & Russell, J. A. (eds) The Science of Facial Expression (Oxford University Press, 2017).

Gendron, M., Crivelli, C. & Barrett, L. F. Universality reconsidered: Diversity in making meaning of facial expressions. Curr. Dir. Psychol. Sci. 27(4), 211–219 (2018).

Aviezer, H. et al. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol. Sci. 19(7), 724–732 (2008).

Lazarus, R. S. Emotion and Adaptation (Oxford University Press, 1991).

Huron, D. On the functions of sadness and grief. In The Function of Emotions: When and Why Emotions Help Us, 59–91 (2018).

Shear, M. K. Grief and mourning gone awry: Pathway and course of complicated grief. Dialogs Clin. Neurosci. 14, 119–128 (2012).

Gündel, H., O’Connor, M. F., Littrell, L., Fort, C. & Lane, R. D. Functional neuroanatomy of grief: An FMRI study. Am. J. Psychiatry 160(11), 1946–1953 (2003).

Labek, K. et al. The iconography of mourning and its neural correlates: A functional neuroimaging study. Soc. Cogn. Affect. Neurosci. 12(8), 1303–1313 (2017).

Welling, H. An evolutionary function of the depressive reaction: The cognitive map hypothesis. New Ideas Psychol. 21(2), 147–156 (2003).

Overskeid, G. The slave of passions: Experiencing problems and selecting solutions. Rev. Gen. Psychol. 4(3), 284–309 (2000).

Robinson, J. L. & Demaree, H. A. Experiencing and regulating sadness: Physiological and cognitive effects. Brain Cogn. 70(1), 13–20 (2009).

Banks, S. J., Bellerose, J., Douglas, D. & Jones-Gotman, M. Bilateral skin conductance responses to emotional faces. Appl. Psychophysiol. Biofeedback 37(3), 145–152 (2012).

Shirai, M. & Suzuki, N. Is sadness only one emotion? Psychological and physiological responses to sadness induced by two different situations: Loss of some one and failure to achieve a goal. Front. Psychol. 8, 288. https://doi.org/10.3389/fpsyg.2017.00288 (2017).

Rottenberg, J. & Gross, J. J. When emotion goes wrong: Realizing the promise of affective science. Clin. Psychol. Sci. Pract. 10(2), 227–232 (2003).

Tsikandilakis, M., Bali, P., Derrfuss, J. & Chapman, P. Anger and hostility: Are they different? An analytical exploration of facial-expressive differences, and physiological and facial-emotional responses. Cogn. Emot. 34(3), 581–595 (2020).

Arias, J. A. et al. The neuroscience of sadness: A multidisciplinary synthesis and collaborative review. Neurosci. Biobehav. Rev. 111, 199–228 (2020).

Bonanno, G. A., Goorin, L. & Coifman, K. G. Sadness and Grief (Springer, 2008).

Parker, G., Paterson, A. & Hadzi-Pavlovic, D. Emotional response patterns of depression, grief, sadness, and stress to differing life events: A quantitative analysis. J. Affect. Disord. 175, 229–232 (2015).

Shirai, M., Soshi, T. & Suzuki, N. Knowledge of sadness: Emotion-related behavioral words differently encode loss and failure sadness. Curr. Psychol. https://doi.org/10.1007/s12144-018-0010-9 (2018).

Tsikandilakis, M. et al. “The many faces of sorrow”: An empirical exploration of the psychological plurality of sadness. Curr. Psychol. 43(5), 3999–4015 (2024).

Shirai, M. & Soshi, T. Neurophysiological foundations of loss and failure sadness differently modulate emotional conceptual processing. J. Gen. Psychol. 149(1), 29–56 (2022).

Vingerhoets, A. J. J. M. Why Only Humans Weep: Unraveling the Mysteries of Tears (Oxford University Press, 2013).

Klass, D. Continuing bonds in the resolution of grief in Japan and North America. Am. Behav. Sci. 44(5), 742–763 (2001).

Kosonogov, V. & Titova, A. Recognition of all basic emotions varies in accuracy and reaction time: A new verbal method of measurement. Int. J. Psychol. 54(5), 582–588 (2019).

Carroll, J. M. & Russell, J. A. Do facial expressions signal specific emotions? Judging emotion from the face in context. J. Personal. Soc. Psychol. 70(2), 205 (1996).

Bartoszek, G. & Cervone, D. Toward an implicit measure of emotions: Ratings of abstract images reveal distinct emotional states. Cogn. Emot. 31(7), 1377–1391 (2017).

Mauss, I. B. & Robinson, M. D. Measures of emotion: A review. Cogn. Emot. 23(2), 209–237. https://doi.org/10.1080/02699930802204677 (2009).

Ernst, L. H. et al. Prefrontal activation patterns of automatic and regulated approach: Avoidance reactions: A functional near-infrared spectroscopy (fNIRS) study. Cortex 49(1), 131–142 (2013).

Giles, G. E. et al. Endurance exercise enhances emotional valence and emotion regulation. Front. Hum. Neurosci. 12, 398–411 (2018).

Herrmann, M. J., Ehlis, A. C. & Fallgatter, A. J. Prefrontal activation through task requirements of emotional induction measured with NIRS. Biol. Psychol. 64(3), 255–263 (2003).

Hosokawa, M., Nakadoi, Y., Watanabe, Y., Sumitani, S. & Ohmori, T. J. P. Association of autism tendency and hemodynamic changes in the prefrontal cortex during facial expression stimuli measured by multichannel near infrared spectroscopy. Psychiatry Clin. Neurosci. 69(3), 145–152 (2015).

Ozawa, S., Matsuda, G. & Hiraki, K. Negative emotion modulates prefrontal cortex activity during a working memory task: A NIRS study. Front. Hum. Neurosci. 8, 46 (2014).

Roos, A., Robertson, F., Lochner, C., Vythilingum, B. & Stein, D. J. Altered prefrontal cortical function during processing of fear-relevant stimuli in pregnancy. Behav. Brain Res. 222(1), 200–205 (2011).

Bandara, D., Velipasalar, S., Bratt, S. & Hirshfield, L. Building predictive models of emotion with functional near-infrared spectroscopy. Int. J. Hum. Comput. Stud. 110, 75–85 (2018).

Gyurak, A. & Etkin, A. A neurobiological model of implicit and explicit emotion regulation. Handb. Emot. Regul. 2, 76–90 (2014).

Righart, R. & De Gelder, B. Rapid influence of emotional scenes on encoding of facial expressions: An ERP study. Soc. Cogn. Affect. Neurosci. 3(3), 270–278 (2008).

Borod, J. C. et al. Right hemisphere emotional perception: Evidence across multiple channels. Neuropsychology 12(3), 446 (1998).

Davidson, R. J. Anterior cerebral asymmetry and the nature of emotion. Brain Cogn. 20(1), 125–151 (1992).

Colibazzi, T. et al. Neural systems subserving valence and arousal during the experience of induced emotions. Emotion 10(3), 377 (2010).

Balconi, M., Vanutelli, M. E. & Grippa, E. Resting state and personality component (BIS/BAS) predict the brain activity (EEG and fNIRS measure) in response to emotional cues. Brain Behav. 7(5), e00686 (2017).

Ochsner, K. N. et al. For better or for worse: neural systems supporting the cognitive down and upregulation of negative emotion. Neuroimage 23(2), 483–499 (2004).

Masuda, T. et al. Placing the face in context: Cultural differences in the perception of facial emotion. J. Personal. Soc. Psychol. 94(3), 365 (2008).

Condy, E. E. et al. Characterizing the action-observation network through functional near-infrared spectroscopy: A review. Front. Hum. Neurosci. 15, 627983 (2021).

Lundqvist, D., & Litton, J. E. The Averaged Karolinska Directed Emotional Faces—AKDEF, CD ROM from Department of Clinical Neuroscience, Psychology Section, Karolinska Institutet (1998). ISBN 91-630-7164-9.

Yang, T. et al. Tsinghua facial expression database: A database of facial expressions in Chinese young and older women and men: Development and validation. PLoS ONE 15(4), e0231304. https://doi.org/10.1371/journal.pone.0231304 (2020).

Lyons, M., Akamatsu, S., Kamachi, M. & Gyoba, J. Coding facial expressions with Gabor wavelets. In Proceedings Third IEEE International Conference on Automatic Face and Gesture Recognition, 200–205 (IEEE, 1998).

Bradley, M. M. & Lang, P. J. Measuring emotion: The self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 25(1), 49–59 (1994).

R Core Team. R: A Language and Environment for Statistical Computing. (R Foundation for Statistical Computing, 2019). https://www.R-project.org/.

Acknowledgements

This study was supported by the Japan Student Services Organization (JASSO), the Kyoto University Foundation, and the Daiko Foundation. We would like to express our gratitude to the following individuals for their valuable contributions: Dr. Yukiori Goto for providing laboratory equipment; Mona Claes, Yui Asaoka, and Sanjana Kadam for their assistance in gathering data; Dr. André Gonçalves for his feedback on the study’s design; Dr. Takuya Yoshiike and Dr. Gao Jie for their support and feedback at every stage of the manuscript; Dr. Michael Huffman for his meticulous language proofreading; and Eric Buchwald for his support with coding. We are very thankful to all participants for their participation and cooperation in the experiment, and to the anonymous reviewers for their valuable feedback.

Author information

Contributions

S.T. conceptualized the study, collected the data, performed the statistical analysis, interpreted the results, and prepared the initial draft of the manuscript. I.A. supervised this study, reviewed, and edited the manuscript. H.I. reviewed the manuscript. Please direct any correspondence, requests for materials, or inquiries pertaining to this study to S.T.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

All experiments were conducted in accordance with the Declaration of Helsinki and the Ethical Guidelines for Medical and Health Research Involving Human Subjects by the Japanese Ministry of Health, Labor, and Welfare, and approved by the Kyoto University Primate Research Institute Human Ethics Committee (no. 2018-16).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tripathi, S., Imai, H. & Adachi, I. Behavioral (reaction time) and prefrontal cortex response revealed differences in grief vs. sadness perception. Sci Rep 15, 6356 (2025). https://doi.org/10.1038/s41598-025-90387-4

DOI: https://doi.org/10.1038/s41598-025-90387-4
