Abstract

Ensuring safety in geotechnical engineering has consistently posed challenges because of the inherent variability of soil. For slope stability problems, on-site testing is both costly and time-intensive, since it requires sophisticated equipment (which must be acquired and transported) and considerable logistics. Consequently, simulation models based on soft computing offer a practical and valuable alternative. In this research work, the learning abilities of Class Noise Two (CN2), Stochastic Gradient Descent (SGD), the Group Method of Data Handling (GMDH) and an artificial neural network (ANN) have been investigated for predicting the factor of safety (FOS) of slopes. This was done through literature search, data curation and data sorting. A total of three hundred and forty-nine (349) data entries on the FOS of slopes, which had been used together in a previous research work, were collected from the literature and sorted to remove outliers and physically illogical results. After sorting, 296 realistic data entries remained. The previous work, which had included the unrealistic entries, used unit weight, γ (kN/m3), cohesion, C (kPa), angle of internal friction, ϕ (°), slope angle, β (°), slope height, H (m), and pore water pressure ratio, ru, as the studied parameters, which formed the independent variables. After careful checks, the initial results showed poor correlation between the FOS and the individual factors, so the factors were combined into three non-dimensional parameters based on an understanding of the physics of flows: C/(γ·H), cohesion divided by unit weight times slope height; tan(ϕ)/tan(β), the tangent of the internal friction angle divided by the tangent of the slope angle; and ρ/(γ·H), water pressure divided by unit weight times slope height. These are deployed as inputs, with FOS, the safety factor of the slope, as the output.
At the end of the exercise, the ANN outclassed the other techniques, with an SSE of 62%, MAE of 0.27, MSE of 0.21, RMSE of 0.46, average total error of 24% and R2 of 0.946, making it the decisive intelligent model in this exercise. However, GMDH, which ranks second, has one advantage over the ANN: it produces a closed-form equation that allows the model to be applied manually in the design of slope stability problems. Overall, the present models outperformed the eleven (11) models of the previous work owing to the sorting and elimination of unrealistic data entries deposited in the literature, the use of dimensionless combinations of the studied slope stability parameters, and the superiority of the selected machine learning techniques.


Introduction

Background and literature review

Forecasting slope stability is a crucial task in geotechnical engineering, and it can be accomplished through either physics-based or data-driven approaches1,2. Physics-based methods rely on soil mechanics principles, such as limit equilibrium analysis and shear strength theories, and typically focus on slope-specific analyses3,4. Data-driven approaches, on the other hand, predict slope stability conditions by learning the relationships between influential factors and stability outcomes from historical observations of slope failures, which requires large datasets that can be challenging to obtain4,5,6. Three practical and efficient strategies for incorporating geotechnical domain knowledge into data-driven models for slope stability prediction have been investigated: a hybrid knowledge-data model, knowledge-based model initiation, and a knowledge-guided loss function7,8. These approaches have been compared against pure data-driven models and domain knowledge–based models, including a physics-based solution chart and a physics-based empirical model9. The benchmark database in that comparison comprises slope stability case histories compiled from the literature, and model performance was evaluated using five-fold cross-validation10. The validation results indicate that machine learning models surpass domain knowledge–based models across several evaluation metrics11, and that the three knowledge-informed methods outperform both domain knowledge–based models and pure data-driven models12. Notably, hybrid knowledge-data models and knowledge-guided loss functions reduce the discrepancies between predicted slope stability conditions and reported factor-of-safety values, aligning more closely with the underlying physics of slope stability13.
That work offers an initial assessment of the benefits of integrating domain knowledge and data-driven methods in geotechnical engineering applications, using slope stability prediction as an illustrative example10.
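Five-fold cross-validation, the evaluation protocol mentioned above, can be sketched without any external library. This is a minimal illustration under stated assumptions: the function names `k_fold_indices` and `cross_validation_splits` and the fixed random seed are hypothetical, and the 296-record example simply reuses the size of the curated database described in the abstract.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Shuffle sample indices and split them into k near-equal folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        # The first `extra` folds receive one additional sample.
        size = base + (1 if i < extra else 0)
        folds.append(indices[start:start + size])
        start += size
    return folds


def cross_validation_splits(n_samples, k=5, seed=0):
    """Yield (train, validation) index lists; each fold is held out exactly once."""
    folds = k_fold_indices(n_samples, k, seed)
    for i, val in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val


# Example: 296 records split into 5 folds; a model would be fitted on `train`
# and scored on `val` in each round, and the 5 scores averaged.
for train, val in cross_validation_splits(296, k=5):
    print(len(train), len(val))
```

Shuffling before splitting matters when records compiled from the literature are grouped by source, since contiguous folds would otherwise hold out whole studies at a time.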

Several types of mass movement can occur as geophysical flows on slopes, including landslides, debris flows, rockfalls and avalanches14. Geophysical flows are typically driven by gravity acting on soil, rock or snow and can be triggered by factors such as heavy rainfall, snowmelt, seismic activity or human activities15,19. Predicting and managing geophysical flows on slopes involves understanding the complex interactions between geological, hydrological and geotechnical factors20,21. In slope stability analysis, a thorough geotechnical analysis is conducted to assess the stability of the slope18,19,22,23,24. This may involve evaluating the shear strength of the soil or rock mass, the pore water pressures and the slope geometry24,25. Various methods, such as limit equilibrium analysis, slope stability charts and numerical modeling, can be used to assess the potential for slope failure26,27 (Olamide et al., 2024; Rabbani et al., 2024b). The role of water in slope stability must also be considered28: heavy rainfall, snowmelt or changes in groundwater level can significantly increase the likelihood of slope failure by raising pore water pressures and reducing the effective stress within the slope29. Understanding the geological characteristics of the slope, including the type of rock or soil, the presence of geological structures (e.g., faults, joints) and the potential for weathering and erosion, is also important30, because these factors affect the stability of the slope and the likelihood of geophysical flows20,31. Potential triggering mechanisms, such as intense rainfall, rapid snowmelt, seismic activity or human activities (e.g., construction or excavation), should likewise be identified32, since understanding the triggers of slope failure is crucial for predicting and preparing for geophysical flows33,34,35.
For certain types of geophysical flow, such as debris flows and avalanches, it is important to model the runout behavior in order to understand the potential impact area and the forces involved9,36,37,38,39. This may involve empirical relationships, numerical modeling or a combination of both5. Early warning systems can be developed to monitor and detect conditions that could lead to geophysical flows40,41; this might involve installing instrumentation to measure rainfall, pore water pressures, slope movement or seismic activity42,43,44. Appropriate mitigation measures should then be identified and implemented to reduce the associated risk45. These can include structural measures (e.g., retaining walls, barriers) and non-structural measures (e.g., land use planning, reforestation, drainage management)46. Engagement with local communities raises awareness of the risks associated with geophysical flows and supports emergency response plans that mitigate the potential impact on human life and infrastructure47. Ultimately, predicting and managing geophysical flows on slopes requires a comprehensive understanding of the geological, hydrological and geotechnical factors that contribute to slope instability39, which often calls for a multidisciplinary approach integrating the expertise of geologists, geotechnical engineers, hydrologists and other relevant professionals48. Slope instability can give rise to a variety of geohazards that pose significant risks to infrastructure, communities and the environment. Landslides are among the most common of these geohazards49. They involve the downslope movement of rock, soil and debris under the influence of gravity50, can be triggered by heavy rainfall, rapid snowmelt, seismic activity or human activities33, and pose risks to infrastructure, transportation routes and human safety.
Rockfalls occur when rock fragments detach from a steep rock face and fall to the base of the slope44. They can be triggered by weathering, freeze–thaw cycles, seismic activity, or human interference. Rockfalls pose hazards to infrastructure, roads, and individuals in the vicinity of steep rock slopes. Debris flows, also known as mudflows, are fast-moving mixtures of water, rock, soil, and organic matter. They can be triggered by intense rainfall, snowmelt, or the failure of debris dams51. Debris flows can cause significant damage to infrastructure and pose risks to human life, particularly in mountainous regions. Slope instability can lead to erosion, which can result in the loss of soil and sediment from the slope46. This can lead to downstream sedimentation, increased flood risks, and the loss of agricultural land. Instability in slopes can lead to the undermining of infrastructure such as buildings, roads, and utility lines48. This can occur gradually over time or suddenly during a slope failure event. Slope instability can result in the displacement of large volumes of soil and rock into rivers and lakes, leading to sedimentation, altered water courses, and increased flood hazards32. Instability can trigger secondary hazards such as tsunamis, if large volumes of material are displaced into bodies of water, or seismic events, if significant mass movements occur in seismically active regions18.

Mitigation and management of these geohazards often involve a combination of structural and non-structural measures, including slope stabilization, construction of retaining structures, land use planning, early warning systems and emergency preparedness12. Geotechnical investigations, risk assessments and ongoing monitoring are crucial for identifying and addressing slope instability and its associated geohazards11,38. The factor of safety is a central concept when assessing the stability of slopes and the potential for geophysical flows3. In geotechnical and geophysical engineering, it measures how close a slope is to failure6 and is used to assess the likelihood of slope failure and of geophysical flows such as landslides, debris flows or avalanches24,52. Geotechnical engineers and geoscientists use various methods to calculate it, typically by analyzing the forces acting on the slope, including the shear strength of the soil or rock, the slope geometry and external forces such as water pressure or seismic loading49. The factor of safety is the ratio of the resisting forces to the driving forces, so a value less than 1 indicates that the slope is potentially unstable and at risk of failure23. For example, a factor of safety of 0.8 means that the driving forces are 1.25 times (1/0.8) the resisting forces, indicating a high risk of slope failure and a potential geophysical flow event5,16,43. Changes in pore water pressure due to rainfall or snowmelt, seismic activity or human activities can significantly affect the factor of safety48.
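The arithmetic behind the 0.8 example follows directly from the definition of the factor of safety as resisting over driving forces. The sketch below is a minimal illustration; the helper names and the force values are hypothetical, and the three-way label only restates the FOS = 1 threshold used in the text.

```python
def factor_of_safety(resisting, driving):
    """FOS = resisting forces / driving forces (both in the same units, e.g. kN)."""
    if driving <= 0:
        raise ValueError("driving force must be positive")
    return resisting / driving


def driving_to_resisting(fos):
    """How many times the driving forces exceed the resisting forces."""
    return 1.0 / fos


def stability_state(fos):
    """Coarse label based on the FOS = 1 threshold described in the text."""
    if fos > 1.0:
        return "stable"
    if fos < 1.0:
        return "potentially unstable"
    return "limiting equilibrium"


# Hypothetical forces: 800 kN resisting, 1000 kN driving.
fos = factor_of_safety(resisting=800.0, driving=1000.0)
print(fos, driving_to_resisting(fos), stability_state(fos))
```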
Rapid changes in these external factors can lead to a decrease in the factor of safety, increasing the likelihood of geophysical flows49. In the case of certain geophysical flows, such as debris flows and avalanches, the dynamic nature of the flow must be considered when assessing the factor of safety14. This involves evaluating the impact of the flowing mass on structures and infrastructure downslope, as well as the potential for increased driving forces during flow propagation. The factor of safety is an integral part of risk assessment for geophysical flows9. It helps in determining the level of risk associated with potential slope failure and geophysical flow events, guiding decisions related to land use planning, infrastructure development, and emergency preparedness34. In summary, the factor of safety is a critical parameter when assessing the potential for geophysical flows on slopes49,53,54. It provides a quantitative measure of slope stability and is essential for understanding and managing the risks associated with geophysical flow events53. Geotechnical and geophysical engineers, along with other relevant experts, use the factor of safety as a fundamental tool in evaluating and mitigating the potential for slope instability and geophysical flows.

The factor of safety (FOS) is a critical concept in geotechnical engineering and slope stability analysis. It is used to assess the stability of slopes and is defined as the ratio of the resisting forces to the driving forces acting on a slope55. A factor of safety greater than 1 indicates that the resisting forces are greater than the driving forces, suggesting that the slope is stable3. Conversely, a factor of safety less than 1 indicates that the driving forces exceed the resisting forces, implying that the slope is potentially unstable and at risk of failure30. Calculating the factor of safety for slopes involves considering various factors, including the shear strength of the soil or rock, the slope geometry, external loads (such as water pressure or seismic loads), and other relevant parameters56,57. The factor of safety can be determined using different methods, including analytical approaches, limit equilibrium methods, and numerical modeling58. Understanding the shear strength properties of the soil or rock mass is fundamental to calculating the factor of safety38,39. This involves conducting laboratory and field tests to determine parameters such as cohesion and internal friction angle48. The geometry of the slope, including its inclination and shape, influences the distribution of forces and the potential for instability58. External factors, such as water infiltration, seismic activity, or dynamic loads, can significantly affect the stability of a slope. These forces must be considered when calculating the factor of safety. Different methods, such as the method of slices, Bishop’s method, or numerical modeling using finite element or finite difference techniques, can be employed to calculate the factor of safety for slopes10. However, it’s important to account for uncertainty in input parameters and to conduct sensitivity analyses to assess the impact of variations in soil properties, slope geometry, and external loads on the factor of safety.
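How cohesion, friction, slope angle and pore pressure combine in the factor of safety can be illustrated with the classical infinite-slope model. This model is not the method used in this study, but it shows why the three dimensionless groups adopted here are natural inputs. For a planar slip surface at vertical depth h with pore pressure ratio r_u = u/(γh), FOS = c/(γh sin β cos β) + (1 − r_u sec²β) tan ϕ/tan β, so the FOS depends only on c/(γh), tan ϕ/tan β, r_u and β. A minimal sketch, assuming that model; equating h with the slope height H is a simplification, and the function names are illustrative.

```python
import math


def dimensionless_groups(c, gamma, h, phi_deg, beta_deg, r_u):
    """Return (c/(gamma*h), tan(phi)/tan(beta), r_u)."""
    cohesion_number = c / (gamma * h)
    friction_ratio = math.tan(math.radians(phi_deg)) / math.tan(math.radians(beta_deg))
    return cohesion_number, friction_ratio, r_u


def infinite_slope_fos(c, gamma, h, phi_deg, beta_deg, r_u):
    """Classical infinite-slope FOS (an assumed model, not this study's method).

    h is the vertical depth of the planar slip surface (here loosely equated
    with slope height H), and r_u = u / (gamma * h) is the pore pressure ratio:
        FOS = c/(gamma*h*sin(b)*cos(b)) + (1 - r_u/cos(b)**2) * tan(phi)/tan(b)
    """
    if not 0 < beta_deg < 90:
        raise ValueError("slope angle must lie strictly between 0 and 90 degrees")
    beta = math.radians(beta_deg)
    cohesion_number, friction_ratio, r_u = dimensionless_groups(
        c, gamma, h, phi_deg, beta_deg, r_u)
    cohesion_term = cohesion_number / (math.sin(beta) * math.cos(beta))
    friction_term = (1.0 - r_u / math.cos(beta) ** 2) * friction_ratio
    return cohesion_term + friction_term


# Hypothetical slope: c = 10 kPa, gamma = 20 kN/m3, h = 5 m, phi = beta = 30 deg,
# first dry (r_u = 0) and then with r_u = 0.3 to show the effect of pore pressure.
print(infinite_slope_fos(c=10.0, gamma=20.0, h=5.0, phi_deg=30.0, beta_deg=30.0, r_u=0.0))
print(infinite_slope_fos(c=10.0, gamma=20.0, h=5.0, phi_deg=30.0, beta_deg=30.0, r_u=0.3))
```

Writing the function in terms of the dimensionless groups, rather than the raw parameters, makes explicit that two slopes with the same groups and slope angle have the same infinite-slope FOS.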

The factor of safety plays a crucial role in slope stability assessment, engineering design and risk management3. It is used to make decisions regarding slope reinforcement, slope angles and the design of structures on or near slopes8, and it forms the basis for risk assessment and mitigation strategies related to potential slope failure and associated hazards59. Ultimately, the factor of safety is a key tool for geotechnical engineers and geoscientists in evaluating and managing the stability of slopes, embankments and other natural and engineered landforms6. Forecasting the factor of safety of slopes and predicting geophysical flows involves complex analysis that typically requires a multidisciplinary approach56,58,60,61. Gathering data on the geological and geotechnical properties of the slope, including soil type, rock type, strength parameters, groundwater conditions and any relevant geophysical data, is a very important stage of such an investigation30. Equally important is a detailed site investigation to understand the local geological and geotechnical conditions30, which may involve drilling, sampling and laboratory testing of soil and rock samples62,63,64,65,66,67,68,69,70. Geotechnical engineering principles are then used to analyze the stability of the slope61, with methods such as limit equilibrium analysis, finite element analysis or distinct element modeling to assess the factor of safety45. Depending on the type of geophysical flow of interest (e.g., landslides, debris flows or avalanches), appropriate models and methods are required to predict the initiation, propagation and runout of the flow9,34; these may involve numerical modeling, empirical relationships or a combination of both71,72,73,74,75. The factor of safety of the slope is then calculated from the results of the geotechnical analysis72; it is a measure of how close the slope is to failure61,62,63,64.
A factor of safety less than 1 indicates that failure is likely65,66,67,68. The consequences of potential geophysical flow events should be considered through a risk assessment of the potential impact on infrastructure, human life and the environment67. Potential mitigation strategies are then developed and evaluated to reduce the associated risk68. These might include structural measures, such as retaining walls or erosion control structures, as well as non-structural measures, such as land use planning and early warning systems69. A monitoring plan should be implemented to continuously assess the stability of the slope and to provide early warning of impending geophysical flow events70. Because geophysical conditions can change over time due to natural and anthropogenic factors75, the slope’s stability and the effectiveness of any implemented mitigation measures must be evaluated continually3,70. The specific methods and tools used for forecasting and prediction depend on the unique characteristics of the site and the type of geophysical flow (slope failure) concerned75.

Aminpour et al.55 examined the performance of five machine learning models, namely support vector machines (SVM), k-nearest neighbors (k-NN), multilayer perceptron (MLP), random forest (RF) and decision tree (DT), in predicting slope safety factors (FS). That study aimed to assess and improve these models for FS calculations, which are vital for designing slope stabilization measures. FS was predicted from geo-engineering index parameters. The MLP model performed best, with a precision of 0.938 and an accuracy of 0.90, and reported error measures of MAE = 0.103367, MSE = 0.102566 and RMSE = 0.098470. Sahoo et al.6 demonstrated the application of machine learning methodologies to anticipate slope failures in mining operations. Slope stability was assessed using random forest, support vector classifier and logistic regression techniques on a dataset comprising the cohesion, friction angle and unit weight of the designed slopes, and the performance of the models in predicting the factor of safety was analyzed and compared. Khajehzadeh and Keawsawasvong7 developed a hybrid machine learning methodology to reliably predict the factor of safety (FOS) of earth slopes. The Global-Best Artificial Electric Field (GBAEF) approach was employed to optimize and fine-tune a Support Vector Regression (SVR) model. The model used 153 data sets and a case study from Chamoli District, Uttarakhand, for comparison with conventional slope stability methodologies, and the results showed a 7% improvement in FOS prediction accuracy and a strong correlation between observed and predicted FOS.
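The error measures quoted in these studies and in the abstract (MAE, MSE, RMSE and R²) follow standard definitions, sketched below for paired observed and predicted FOS values. By definition RMSE is the square root of MSE, which gives a quick consistency check on reported figures. This is a minimal sketch under assumptions: R² is computed as the coefficient of determination (some studies instead report the squared Pearson correlation), SSE is the raw sum of squared errors rather than a percentage, and the sample values are hypothetical.

```python
import math


def regression_metrics(observed, predicted):
    """Return SSE, MAE, MSE, RMSE and R^2 for paired FOS values."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(observed)
    residuals = [o - p for o, p in zip(observed, predicted)]
    sse = sum(r * r for r in residuals)
    mae = sum(abs(r) for r in residuals) / n
    mse = sse / n
    rmse = math.sqrt(mse)  # RMSE is always the square root of MSE
    mean_obs = sum(observed) / n
    sst = sum((o - mean_obs) ** 2 for o in observed)
    # Coefficient of determination; undefined when all observations are equal.
    r2 = 1.0 - sse / sst if sst > 0 else float("nan")
    return {"SSE": sse, "MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}


observed = [1.2, 0.9, 1.5, 1.1]   # hypothetical FOS values
predicted = [1.1, 1.0, 1.4, 1.3]
print(regression_metrics(observed, predicted))
```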
Aminpour et al.55 evaluated the effectiveness of machine learning (ML) models and artificial neural networks in predicting the probability of failure from random field slope stability simulations. The study investigated soil heterogeneity and the anisotropy (directional dependence) of soil properties in both uniform and stratified slopes, and showed that ML predictions are highly accurate across a range of heterogeneity and anisotropy conditions: the error in the predicted probability of failure was only 0.46% for the remaining unobserved 95% of the data, while computational speed increased by around 100 times. Wang et al.8 studied slope stability in open-pit mines in China, emphasizing its notable influence on both efficiency and safety. They used a deep learning algorithm to analyze the stability of large-scale mines under wet conditions, taking into account a 15% probability of landslide danger. The safety coefficient obtained by the algorithm was 7% lower than that of previous methods, while the stability factor met the specified requirements, suggesting that deep learning can improve the forecasting of slope stability factors and safety in open-pit mine engineering. Zhang et al.19 introduced an ensemble learning approach to forecast slope stability in landslide events and applied it to 786 landslide cases in Yunyang County, China. XGBoost and RF outperformed SVM and LR in forecasting slope stability, and profile shape was identified as the most critical determinant, making the approach promising for other landslide-prone regions.
Mahmoodzadeh et al.3 applied six machine learning algorithms to predict the factor of safety (FOS) using a dataset of 327 slope cases from Iran. The GPR model achieved the highest accuracy, with R2 = 0.8139, RMSE = 0.160893 and MAPE = 7.209772%. A backward selection method was used to assess the individual contribution of each parameter, and φ and γ were found to be the most and least influential parameters, respectively. Pei et al.73 investigated three approaches to incorporating geotechnical domain expertise into data-driven models for slope stability prediction: a hybrid knowledge-data model, knowledge-based model initiation and a knowledge-guided loss function. When compared with data-driven and domain knowledge–based models, the machine learning models performed better than the domain knowledge–based models, and the hybrid knowledge-data models and knowledge-guided loss function reduced the discrepancies in the forecasted slope stability conditions, achieving closer alignment with the fundamental physics of slope stability. Their paper presents an initial evaluation of the benefits of combining domain expertise with data-driven techniques in geotechnical engineering. Meng et al.10 used artificial neural networks, implemented in a software application known as SlopeLab, to forecast the stability of three-dimensional slopes. The model uses the dimensionless parameters of traditional slope stability charts and is trained on a dataset derived from stability charts for cohesive and cohesive-frictional soils.
The trained models were assessed using the correlation coefficient and the root mean square error. The software helps engineers rapidly estimate the effect of three-dimensional geometry, particularly when the FS3D/FS2D ratio is significant. Chakraborty and Goswami71 introduced a model for estimating the stability of slopes in three dimensions, using input parameters related to geotechnical properties and geometric characteristics and producing three-dimensional critical safety factors (Fcs) as output. Compared with standard analytical methods, the predicted values closely matched the analytical values and correlated strongly with the input variables, demonstrating the capability of computational tools such as regression analysis and neural networks for intricate engineering problems. Zhao et al.75 introduced predictive models for the run-out distance of clay slopes using the material point method (MPM). The model replicates the progressive deterioration of slopes, accounting for tension-induced soil weakening. The study used a dataset of 100 ground motions from the NGA-West2 database and found that peak ground velocity correlates most strongly with run-out distance for the categories in which failure does not occur. The model also forecasts run-out distance for various slope angles, using parameters such as H, unit weight, residual cohesion and residual friction angle. Hsiao et al.72 presented a pre-trained machine learning algorithm that estimates safety factors and the trace of slope slip surfaces, using artificial and convolutional neural networks to predict safety factors and slip surfaces.
The convolutional neural network model is superior in accuracy and efficiency for complicated scenarios, particularly random field problems involving spatial relationships, and consequently requires significantly less time than the standard random finite element method. In this paper, four machine learning techniques, namely the artificial neural network (ANN), the group method of data handling (GMDH), stochastic gradient descent (SGD) and Class Noise Two (CN2), are deployed to predict the factor of safety of slopes under failure-state conditions.

Limitations of selected machine learning techniques

Using Class Noise Two (CN2), Stochastic Gradient Descent (SGD), Group Method of Data Handling (GMDH), and artificial neural networks (ANN) to evaluate slope behavior for geophysical flow predictions involves specific limitations related to each method’s characteristics, the complexity of the task, and the nature of the data. CN2, a rule-based learning algorithm, may struggle with capturing highly nonlinear and dynamic relationships often present in geophysical data, such as the interactions between soil properties, hydrology, and slope geometry. While CN2 is interpretable due to its rule-based structure, it might oversimplify complex relationships, leading to suboptimal predictive performance when dealing with intricate geophysical processes. SGD, a common optimization technique for training machine learning models, including ANNs, relies on iteratively minimizing a loss function. Although it is computationally efficient and scalable, it is sensitive to hyperparameter tuning, particularly the learning rate, and can converge to suboptimal solutions if not properly configured. For geophysical flow prediction, where data may exhibit non-stationarity and high variability, SGD’s reliance on consistent gradients can result in instability or poor convergence, especially in small or noisy datasets. GMDH, a self-organizing modeling approach, can identify complex nonlinear relationships by iteratively selecting and combining variables. However, it is computationally intensive and may overfit the data, especially when applied to datasets with limited size or high levels of noise. Its dependence on predefined criteria for selecting models or variables can also lead to suboptimal results if those criteria are not well-suited to the problem at hand. Additionally, GMDH can be challenging to interpret, limiting its practical utility for decision-making in slope management. 
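The pairwise, self-organizing fitting attributed to GMDH above can be sketched with one layer of the classical Ivakhnenko scheme: each pair of inputs (x_i, x_j) is fitted with the quadratic y = a0 + a1 x_i + a2 x_j + a3 x_i x_j + a4 x_i² + a5 x_j² by least squares on a training subset, and the candidate neurons are ranked by their error on a separate validation subset (the external selection criterion). Each retained neuron is an explicit polynomial, which is what makes a closed-form GMDH equation possible. This is a minimal sketch under assumptions: a single layer only (no stacking or stopping rule), a tiny ridge term added for numerical stability, synthetic data, and illustrative function names; it does not reproduce the configuration used in this study.

```python
import itertools
import random


def _solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def _terms(xi, xj):
    # Ivakhnenko quadratic basis: 1, xi, xj, xi*xj, xi^2, xj^2
    return [1.0, xi, xj, xi * xj, xi * xi, xj * xj]


def fit_neuron(X, y, i, j, ridge=1e-9):
    """Least-squares coefficients of one neuron on input columns i and j."""
    rows = [_terms(x[i], x[j]) for x in X]
    size = len(rows[0])
    # Normal equations (A^T A + ridge*I) a = A^T y; the tiny ridge is an assumption.
    ata = [[sum(r[p] * r[q] for r in rows) + (ridge if p == q else 0.0)
            for q in range(size)] for p in range(size)]
    aty = [sum(r[p] * t for r, t in zip(rows, y)) for p in range(size)]
    return _solve(ata, aty)


def predict_neuron(coef, x, i, j):
    return sum(c * t for c, t in zip(coef, _terms(x[i], x[j])))


def gmdh_layer(X_train, y_train, X_val, y_val, keep=3):
    """Fit a neuron for every input pair and rank them by validation MSE."""
    ranked = []
    for i, j in itertools.combinations(range(len(X_train[0])), 2):
        coef = fit_neuron(X_train, y_train, i, j)
        mse = sum((predict_neuron(coef, x, i, j) - t) ** 2
                  for x, t in zip(X_val, y_val)) / len(y_val)
        ranked.append((mse, (i, j), coef))
    ranked.sort(key=lambda item: item[0])
    return ranked[:keep]


# Hypothetical data: three inputs; the target depends only on inputs 0 and 1.
rng = random.Random(1)
X_train = [[rng.random() for _ in range(3)] for _ in range(40)]
X_val = [[rng.random() for _ in range(3)] for _ in range(20)]


def target(x):
    return 1.0 + 0.5 * x[0] - 0.3 * x[1] + 2.0 * x[0] * x[1] + 0.1 * x[1] ** 2


best = gmdh_layer(X_train, [target(x) for x in X_train],
                  X_val, [target(x) for x in X_val])
for mse, pair, coef in best:
    print(pair, f"validation MSE = {mse:.3e}")
```

Ranking on held-out data rather than on the training fit is the safeguard against the overfitting risk noted above; with small datasets the validation subset itself becomes the limiting factor.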
ANNs, while powerful for capturing complex patterns and nonlinearities, have several limitations when applied to geophysical flow predictions. They require large amounts of data for effective training, and their performance can degrade with insufficient or imbalanced datasets, which are common in slope stability studies. ANNs also act as “black-box” models, making it difficult to interpret their predictions or understand the underlying relationships between input variables. This lack of interpretability can hinder their adoption in practical applications where transparency is critical. Additionally, ANNs are computationally expensive and prone to overfitting, especially when dealing with high-dimensional data or when noise and outliers are present. Overall, while CN2, SGD, GMDH, and ANN offer valuable capabilities for modeling slope behavior, their limitations in handling nonlinearity, noise, small datasets, interpretability, and computational efficiency highlight the need for careful preprocessing, model selection, and validation to ensure reliable geophysical flow predictions. Combining these methods with domain expertise or hybrid approaches may help mitigate these challenges.

Methodology

Governing equations of slope failure

The governing equations of slope failure are typically derived from soil mechanics and geotechnical engineering principles. Slope stability analysis involves assessing the stability of natural or engineered slopes to prevent failures like landslides or slope collapse. The analysis considers factors such as soil properties, slope geometry, and external loading conditions. Slope stability analysis applies the following concepts: Shear Strength Equations: The shear strength of the soil is a critical parameter in slope stability analysis. Common shear strength equations include the Mohr–Coulomb equation, which relates shear strength (τ) to normal stress (σn):

$$\tau = c + \sigma_n \tan\phi$$

where c is the cohesion and ϕ is the angle of internal friction.

Factor of Safety (FOS): The factor of safety is a ratio that compares the resisting forces to the driving forces in a slope. It is defined as:

$$\mathrm{FOS} = \frac{\text{Resisting forces}}{\text{Driving forces}}$$

In slope stability analysis, the resisting force refers to the forces within the soil that resist downslope movement or failure of the slope, and it is crucial in determining the factor of safety. For a stable slope, the factor of safety should be greater than 1, indicating that the resisting forces are sufficient to prevent failure. If it is less than 1, the driving forces (e.g., downslope gravitational forces) exceed the resisting forces, and the slope may be prone to failure. The driving force, in turn, refers to the forces that contribute to downslope movement and tend to induce slope failure, so its determination is equally crucial in assessing the overall stability of the slope. The primary driving force is gravity acting on the mass of soil or rock on the slope; it can be resolved into a component normal to the potential slip surface and a component along it, and the latter drives the soil downslope. A comprehensive assessment therefore evaluates both resisting and driving forces together with soil properties, slope geometry and external loading conditions. A further development is Bishop’s method, a common method of slope stability analysis, in which the factor of safety (FOS) is expressed as:

where c′ and ϕ′ are the effective cohesion and effective friction angle, N is the stability number, σ′ is the vertical effective stress, W is the weight of the potential sliding mass, and β is the slope angle. The stability number used in this formulation was proposed by Taylor as:

where γ is the unit weight of the soil and H is the height of the slope. Critical Slip Surface: Determining the critical slip surface is a crucial step in slope stability analysis; it involves finding the potential sliding surface that results in the lowest factor of safety. These equations and methods are foundational in geotechnical engineering for assessing slope stability and preventing slope failure. In practice, the analysis may use limit equilibrium methods or numerical methods such as the finite element method to account for the complex geometry and material behavior of real slopes. The input parameters for these analyses must be obtained from laboratory and field tests that are expensive, time-consuming, and logistically demanding. For this reason, a global database was compiled from the literature to develop cheaper predictive models of slope failure that can support preliminary design and construction, as well as the monitoring of the performance of slope stability infrastructure.
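As a minimal illustration of the resisting/driving ratio, the sketch below combines the Mohr–Coulomb criterion with the textbook infinite-slope model. The infinite-slope model is an assumption chosen only because it has a closed form; it is not the Bishop-type analysis described above. Pore pressure is taken as u = ru·γ·z on a slip plane at depth z, and the function names are hypothetical.

```python
import math


def mohr_coulomb_strength(c: float, sigma_n: float, phi_deg: float) -> float:
    """Mohr-Coulomb shear strength tau = c + sigma_n * tan(phi), in kPa."""
    return c + sigma_n * math.tan(math.radians(phi_deg))


def infinite_slope_fos(gamma: float, z: float, c: float, phi_deg: float,
                       beta_deg: float, r_u: float = 0.0) -> float:
    """Factor of safety on a plane at depth z parallel to an infinite slope.

    FOS = resisting shear stress / driving shear stress, with the pore
    pressure taken as u = r_u * gamma * z (illustrative simplification).
    """
    beta = math.radians(beta_deg)
    sigma_n = gamma * z * math.cos(beta) ** 2                   # total normal stress on the plane
    u = r_u * gamma * z                                         # pore water pressure
    resisting = mohr_coulomb_strength(c, sigma_n - u, phi_deg)  # effective-stress strength
    driving = gamma * z * math.sin(beta) * math.cos(beta)       # downslope shear stress
    return resisting / driving


# gamma = 20 kN/m3, z = 5 m, c = 10 kPa, phi = 30 deg, beta = 25 deg, dry slope
print(round(infinite_slope_fos(20, 5, 10, 30, 25), 3))  # prints 1.499
```

For a dry, cohesionless slope the result reduces to tan ϕ/tan β, and the cohesion term equals c/(γ z sin β cos β). Ratios of this kind motivate the dimensionless inputs used later in this work.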

Theoretical framework of machine learning

ANN

An Artificial Neural Network (ANN) is a computational model inspired by the structure and functioning of the biological neural networks found in the human brain. ANNs are a subset of machine learning models used for various tasks, including pattern recognition, classification, regression, and optimization. The network consists of interconnected nodes, also known as neurons or artificial neurons, organized into layers. Neurons are the basic computational units of an ANN. They receive input signals, process them, and produce an output signal. ANNs are typically organized into layers, including an input layer, one or more hidden layers, and an output layer. The input layer receives input data, the hidden layers process this information, and the output layer produces the final result. Weights and Connections: Each connection between neurons is associated with a weight. These weights are parameters that the network learns during the training process. The weights determine the strength and influence of the connections between neurons. Activation Function: Neurons apply an activation function to the weighted sum of their inputs to produce an output. Common activation functions include sigmoid, hyperbolic tangent (tanh), and rectified linear unit (ReLU). Feedforward Propagation: During the forward pass, input data is processed layer by layer through the network, producing an output. The input signal is multiplied by the weights, and the result is passed through the activation function. ANNs learn from data through a training process, often using a method called backpropagation. Backpropagation involves adjusting the weights based on the error between the predicted output and the actual target output, with the goal of minimizing this error. Loss Function: A loss function is used to quantify the difference between the predicted output and the actual target output. 
During training, the goal is to minimize this loss, and the optimization algorithm adjusts the weights accordingly. Bias: Each neuron typically has an associated bias term, which allows the network to model relationships even when all input values are zero. The typical architecture of the ANN is presented in Fig. 1. Artificial Neural Networks can be designed in various architectures, including feedforward neural networks, recurrent neural networks (RNNs), convolutional neural networks (CNNs), and more. They have been successful in solving complex problems across different domains, such as image and speech recognition, natural language processing, and various other tasks in machine learning and artificial intelligence. Mathematically, every neuron computes the weighted sum of its inputs, adds its bias, and applies an activation function to the result:

$$Y = f\left(\sum_{i} w_i x_i + b\right)$$

where Y is the output, wi are the weights, xi are the inputs, b is the bias term, and f is the activation function. The architecture of the ANN is shown in Fig. 1.
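The neuron equation above can be sketched in a few lines of Python. This is an illustrative assumption, not the network used in this study: the weights and bias are arbitrary, and tanh is the default activation only because it is the activation reported for the ANN models in the results. The function names are hypothetical.

```python
import math
from typing import Callable, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def relu(z: float) -> float:
    return max(0.0, z)


def neuron_output(x: Sequence[float], w: Sequence[float], b: float,
                  f: Callable[[float], float] = math.tanh) -> float:
    """Y = f(sum_i w_i * x_i + b) for a single artificial neuron."""
    if len(x) != len(w):
        raise ValueError("inputs and weights must have the same length")
    z = sum(wi * xi for wi, xi in zip(w, x)) + b   # weighted sum plus bias
    return f(z)


def dense_layer(x, weights, biases, f=math.tanh):
    """Apply neuron_output for every neuron (one weight row per neuron)."""
    return [neuron_output(x, w_row, b, f) for w_row, b in zip(weights, biases)]


# Three inputs, as in the slope model; weights and bias are arbitrary example values.
x = [0.2, -0.5, 0.1]
print(neuron_output(x, [0.4, 0.3, -0.2], b=0.1))
```

A hidden layer is simply this computation repeated for each neuron, which is what `dense_layer` does.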

Stochastic Gradient Descent (SGD)

Stochastic Gradient Descent is a variant of the gradient descent optimization algorithm used to minimize the cost function of machine learning models; its framework is presented in Fig. 2. Unlike traditional (batch) gradient descent, which computes the gradient over the entire dataset, SGD updates the model parameters using the gradient of the cost function computed on a single randomly selected sample or on a small, randomly selected subset of the data (a mini-batch). This introduces randomness into the optimization process. The general update rule for a parameter θ in SGD is:

$$\theta_{new} = \theta_{old} - \eta \, \nabla J\left(\theta_{old}, X_i, Y_i\right)$$

where \({\theta }_{old}\) is the current value of the parameter, \({\theta }_{new}\) is the updated value, \(\eta\) is the learning rate, which sets the step size in the parameter space, and \(\nabla J\left({\theta }_{old}, {X}_{i}, {Y}_{i}\right)\) is the gradient of the cost function J with respect to the parameter, computed on the mini-batch of data (Xi, Yi). The key advantage of SGD is its efficiency, especially for large datasets, because it updates the model parameters more frequently using smaller batches of data. This can lead to faster convergence and reduced computation time compared with batch gradient descent. In addition, the stochastic nature of the updates can help the optimizer escape shallow local minima and explore the parameter space more effectively. However, the same randomness introduces noise that makes the optimization path more erratic. To address this, learning rate schedules and momentum-based techniques are often used to adjust the learning rate and smooth the updates. Mini-batch SGD is a compromise between the computational efficiency of single-sample SGD and the stability of batch gradient descent.
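The update rule can be illustrated with a minimal mini-batch SGD loop for a linear model with a mean-squared-error cost. The data, learning rate, batch size, and function name are hypothetical; the sketch only shows how θ_new = θ_old − η∇J is applied to one random mini-batch at a time.

```python
import random


def sgd_linear_regression(X, y, lr=0.05, epochs=200, batch_size=8, seed=0):
    """Fit y ~ w.x + b by mini-batch SGD on the mean-squared-error cost.

    Each update applies theta_new = theta_old - lr * grad J(theta_old; X_i, Y_i),
    where the gradient is computed on one randomly drawn mini-batch.
    """
    rng = random.Random(seed)
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    indices = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(indices)                         # new random order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w = [0.0] * n_features
            grad_b = 0.0
            for i in batch:
                err = sum(wj * xj for wj, xj in zip(w, X[i])) + b - y[i]
                for j in range(n_features):
                    grad_w[j] += 2.0 * err * X[i][j] / len(batch)
                grad_b += 2.0 * err / len(batch)
            w = [wj - lr * gj for wj, gj in zip(w, grad_w)]   # parameter update
            b -= lr * grad_b
    return w, b


# Hypothetical noise-free data generated from y = 1.5*x1 - 0.5*x2 + 0.3
rng = random.Random(1)
X = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(100)]
y = [1.5 * a - 0.5 * c + 0.3 for a, c in X]
w, b = sgd_linear_regression(X, y)
print([round(v, 2) for v in w], round(b, 2))
```

Because the synthetic data are noise-free, the estimates approach the generating coefficients (1.5, −0.5, 0.3); with noisy data a decaying learning rate would normally be needed to settle the parameters.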

GMDH

The Group Method of Data Handling (GMDH) is a family of machine learning algorithms used for data analysis, feature selection, and modeling as shown in the framework in Fig. 3. It was initially proposed by Alexey Grigorievich Ivakhnenko in the 1960s. GMDH belongs to the category of self-organizing models, and its main goal is to automatically select the most relevant features and construct a model that best describes the relationships within the data. The GMDH algorithm typically operates in the following manner: Data Preprocessing: Standardize or normalize the input data to ensure that all variables are on a comparable scale. Initialization: Initialize the GMDH process by creating a set of simple models using individual input variables. Model Expansion: Iteratively expand the set of models by combining and refining them. This involves considering combinations of input variables and adjusting model parameters to improve performance. Model Selection: Evaluate the performance of each model using a specific criterion (e.g., accuracy, correlation, error). Select the models that exhibit the best performance. Convergence: Repeat the expansion and selection steps until a convergence criterion is met, or until a desired level of model complexity is reached. Final Model: The final GMDH model is a set of interconnected models that collectively represent the relationships within the data. GMDH can be applied to both regression and classification problems. The algorithm is particularly known for its ability to automatically select relevant features and construct models without a priori knowledge of the underlying relationships in the data. While GMDH has been widely used, it may face challenges with overfitting, especially in the presence of noise in the data. Various modifications and enhancements to the original GMDH algorithm have been proposed over the years to address these issues and improve its overall performance.

The Group Method of Data Handling (GMDH) algorithm constructs and evaluates multiple models of increasing complexity. The specific details vary with the GMDH variant and the problem being addressed, but a general representation of the algorithm is as follows. Initialization: Create a set of simple models using individual input variables. Model Expansion: Iteratively expand the set of models by combining and refining them. For a given model M, the expansion involves adjusting the model parameters and considering combinations of input variables:

$$M_{new} = \mathrm{Expand}(M)$$

where Expand(·) is a function that expands a model to create a new, more complex model.

Model Selection: Evaluate the performance of each model against a specific criterion (e.g., accuracy, correlation, error) and select the models that perform best:

$$M_{best} = \mathrm{SelectBestModel}(M_1, M_2, \ldots, M_n)$$

where SelectBestModel(·) is a function that selects the best-performing models.

Convergence: Repeat the expansion and selection steps until a convergence criterion is met or until a desired level of model complexity is reached. Final Model: The final GMDH model consists of the selected models from the previous step. The specific expressions for the model expansion and selection steps will depend on the details of the GMDH variant being used. GMDH may involve polynomial approximations, neural network-like structures, or other methods for combining and refining models. The essence of GMDH lies in the automatic selection and combination of features to construct models that capture the underlying relationships in the data. The mathematics behind GMDH can be complex and may involve optimization techniques, polynomial coefficients, and other parameters specific to the chosen GMDH variant. The detailed mathematical formulations are often specific to the particular GMDH algorithm being implemented.
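One common concrete form of the Expand and Select steps is the classic Ivakhnenko scheme, sketched below under that assumption. Every pair of inputs (xi, xj) is fitted with the quadratic partial model y = a0 + a1·xi + a2·xj + a3·xi·xj + a4·xi² + a5·xj² by least squares on a training subset, and the pairs are ranked by their error on a separate validation subset (the external criterion). Only one layer is shown; in a full GMDH network the outputs of the kept models become the inputs of the next layer until the validation error stops improving. All names and the synthetic data are hypothetical, and this is not the GMDH Shell implementation used in the study.

```python
import random
from itertools import combinations


def solve_linear(A, rhs):
    """Solve A x = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def quad_terms(xi, xj):
    """Ivakhnenko partial-polynomial basis: 1, xi, xj, xi*xj, xi^2, xj^2."""
    return [1.0, xi, xj, xi * xj, xi * xi, xj * xj]


def fit_partial(X, y, i, j, ridge=1e-9):
    """Least-squares coefficients of the quadratic partial model on inputs i, j."""
    rows = [quad_terms(x[i], x[j]) for x in X]
    p = len(rows[0])
    A = [[sum(row[u] * row[v] for row in rows) + (ridge if u == v else 0.0)
          for v in range(p)] for u in range(p)]
    rhs = [sum(row[u] * t for row, t in zip(rows, y)) for u in range(p)]
    return solve_linear(A, rhs)


def predict_partial(coef, x, i, j):
    return sum(a * t for a, t in zip(coef, quad_terms(x[i], x[j])))


def gmdh_layer(X_train, y_train, X_val, y_val, keep=2):
    """One GMDH layer: fit a partial model for every input pair (expansion) and
    keep the `keep` pairs with the lowest validation MSE (external selection)."""
    candidates = []
    for i, j in combinations(range(len(X_train[0])), 2):
        coef = fit_partial(X_train, y_train, i, j)
        mse = sum((predict_partial(coef, x, i, j) - t) ** 2
                  for x, t in zip(X_val, y_val)) / len(y_val)
        candidates.append((mse, (i, j), coef))
    candidates.sort(key=lambda cand: cand[0])
    return candidates[:keep]


# Hypothetical data: the target depends only on inputs 0 and 1.
rng = random.Random(0)
X = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(80)]
y = [1.0 + 2.0 * x[0] * x[1] + x[0] ** 2 for x in X]
best = gmdh_layer(X[:60], y[:60], X[60:], y[60:])
print(best[0][1])  # prints (0, 1)
```

The selected pair is the one whose partial polynomial can reproduce the target; the other pairs miss either the product term or the squared term and score worse on the validation subset.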

CN2

CN2 (Class Noise Two) is a machine learning technique for rule-based classification and a popular algorithm for inducing classification rules from data. The CN2 algorithm was proposed by Peter Clark and Tim Niblett in 1989. CN2 belongs to a family of rule-based machine learning algorithms that build decision rules from data, as shown in the working framework in Fig. 4. CN2 starts with an empty rule set and generates rules from the training data. The algorithm iteratively expands the rules by adding conditions that improve their accuracy: it considers the conditions that can be added to a rule, evaluates their impact on rule accuracy, and adds the condition that provides the greatest improvement. After expanding the rules, CN2 prunes them to remove conditions that do not significantly improve accuracy, which helps to prevent overfitting. The algorithm then selects the best rules based on accuracy and other criteria, and the selected rules form the final rule set. CN2 has mechanisms to handle noise and outliers in the data and can disregard noisy instances during rule induction. The algorithm is particularly well suited to datasets with discrete attributes and is commonly used in domains where interpretable rules are desirable, including medical diagnosis, fraud detection, and other areas where understanding the decision-making process is crucial. The rules generated by CN2 take the form of “IF–THEN” statements, which makes them easy to interpret. While CN2 is effective in certain scenarios, it may not perform as well as other algorithms on datasets with continuous attributes or on very large datasets. Depending on the characteristics of the data, other rule-based or non-rule-based algorithms might be more suitable.
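To make the rule-evaluation step more concrete, the sketch below scores a candidate IF–THEN rule with measures associated with CN2: the entropy of the class distribution the rule covers, a likelihood-ratio statistic comparing that distribution with the class priors (used by CN2 as a significance test), and the Laplace accuracy estimate adopted in later CN2 versions. It covers only rule scoring, not the full beam search, and the attributes, data, and function names are hypothetical.

```python
import math
from collections import Counter


def covers(rule, example):
    """A rule is a conjunction of attribute tests: {attribute: required value}."""
    return all(example.get(attr) == value for attr, value in rule.items())


def class_counts(rule, data):
    """Class distribution of the training examples covered by the rule."""
    return Counter(label for example, label in data if covers(rule, example))


def laplace_accuracy(counts, predicted, n_classes):
    """Laplace estimate (n_c + 1) / (n_tot + k) of the rule's accuracy."""
    return (counts[predicted] + 1) / (sum(counts.values()) + n_classes)


def entropy(counts):
    """Entropy (bits) of the covered class distribution; lower means purer."""
    n_tot = sum(counts.values())
    return -sum(n / n_tot * math.log2(n / n_tot) for n in counts.values() if n)


def likelihood_ratio(counts, priors):
    """2 * sum_i f_i * ln(f_i / e_i), where e_i is the count expected from the
    class priors; large values mean the covered distribution is significant."""
    n_tot = sum(counts.values())
    total = sum(priors.values())
    return 2.0 * sum(f * math.log(f / (n_tot * priors[label] / total))
                     for label, f in counts.items() if f)


# Hypothetical, discretised slope records: (attributes, class label)
data = [
    ({"tan_ratio": "high", "cohesion": "high"}, "stable"),
    ({"tan_ratio": "high", "cohesion": "low"}, "stable"),
    ({"tan_ratio": "high", "cohesion": "low"}, "stable"),
    ({"tan_ratio": "high", "cohesion": "high"}, "stable"),
    ({"tan_ratio": "low", "cohesion": "high"}, "stable"),
    ({"tan_ratio": "low", "cohesion": "low"}, "unstable"),
    ({"tan_ratio": "low", "cohesion": "low"}, "unstable"),
    ({"tan_ratio": "high", "cohesion": "low"}, "unstable"),
]
priors = Counter(label for _, label in data)
rule = {"tan_ratio": "high"}            # candidate: IF tan_ratio = high THEN stable
counts = class_counts(rule, data)
print(counts, laplace_accuracy(counts, "stable", 2), likelihood_ratio(counts, priors))
```

Within a beam search, a specialization such as adding cohesion = high would be scored in the same way; on these hypothetical records that rule covers two stable examples and has a Laplace accuracy of 0.75.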

Data gathering and statistical analysis

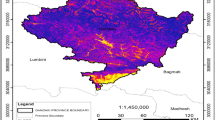

A total of three hundred and forty-nine (349) data entries on the FOS of slopes were collected from the literature5 and sorted to remove odd values and illogical results; all 349 entries had been used together in a previous research work by Lin et al.5. After sorting, 296 realistic data entries remained. These 296 records, collected from the literature for slopes with different configurations together with their safety factors, originally contained the following parameters: unit weight, γ (kN/m3), cohesion, C (kPa), angle of internal friction (Φ°), slope angle (\({\Theta}\)°), slope height, H (m), and pore water pressure ratio, ru, as shown in Fig. 5 (after Lin et al.5). Careful checks showed poor correlation and poor internal consistency between FOS and the individual factors, so the factors were combined into three non-dimensional parameters: C/γh (cohesion divided by unit weight times slope height), tan(ϕ)/tan(β) (tangent of the internal friction angle divided by tangent of the slope angle), and ρ/γh (water pressure divided by unit weight times slope height). These are used as inputs, with FOS, the safety factor of the slope, as the output, as illustrated in Fig. 5. The records were divided into a training set (250 records) and a validation set (46 records). Tables 1 and 2 summarize their statistical characteristics and the Pearson correlation matrix. Finally, Fig. 6 shows the histograms of the inputs and outputs, and Fig. 7 shows the relations between the inputs and the outputs.
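The mapping from the raw parameters to the three dimensionless inputs, and a 250/46 split, can be sketched as follows. The field names are hypothetical, and the text does not say whether the split was random, so the seeded shuffle is an assumption. The text also does not state how the water-pressure term ρ was obtained from the recorded ru, so ρ is taken here as a given field in kPa; if ρ were the pore pressure ru·γ·H, the third group would reduce to ru.

```python
import math
import random


def dimensionless_inputs(record):
    """Map one raw slope record to the three non-dimensional model inputs.

    Hypothetical field names: gamma (kN/m3), c (kPa), phi_deg, beta_deg,
    H (m) and rho (kPa), the water-pressure term.
    """
    gamma_h = record["gamma"] * record["H"]          # kN/m3 * m = kPa
    return (
        record["c"] / gamma_h,                                    # C / (gamma h)
        math.tan(math.radians(record["phi_deg"]))
        / math.tan(math.radians(record["beta_deg"])),             # tan(phi) / tan(beta)
        record["rho"] / gamma_h,                                  # rho / (gamma h)
    )


def train_validation_split(records, n_train=250, seed=42):
    """Shuffle a copy of the records and split it; a random split is an assumption."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


example = {"gamma": 20.0, "c": 10.0, "phi_deg": 30.0, "beta_deg": 25.0,
           "H": 5.0, "rho": 0.0}
print(dimensionless_inputs(example))
train, val = train_validation_split(range(296))
print(len(train), len(val))  # prints 250 46
```

Working with these ratios removes the units from the inputs, so records from different sources can be compared directly.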

Homogeneous finite slopes failure mechanism10.

Research framework and performance evaluation

Figure 8 presents the research framework of this project. Four machine learning techniques, ANN, GMDH, CN2, and SGD, were used to predict the factor of safety (FOS) of soil slopes from the collected database. All four models predict FOS from C/γh, tan(ϕ)/tan(β), and ρ/γh. The following performance indices were used to compare the predicted and calculated FOS values: mean absolute error (MAE), mean squared error (MSE), root mean squared error (RMSE), sum of squared errors (SSE), and the coefficient of determination (R2).

Sensitivity analysis and performance evaluation

Hoffman and Gardner’s sensitivity analysis is a systematic method for evaluating the influence of input variables on the output of a model. It is particularly useful in complex systems where multiple variables interact in nonlinear ways. The method aims to identify which inputs have the most significant impact on model predictions, enabling a better understanding of the system and prioritization of influential factors. The analysis involves generating a large number of input–output combinations through random sampling or structured variation of the input variables. By varying one input at a time or examining combinations of inputs, the method measures how changes in each variable affect the output, so that the sensitivity of the model to specific inputs can be quantified with metrics such as partial correlation coefficients or variance-based indices. The method can handle high-dimensional models and complex relationships, which makes it applicable in fields such as environmental modeling, engineering, and finance. However, it requires computationally intensive simulations, especially for large datasets or models with many variables. Despite this, it remains a valuable tool for understanding model behavior, identifying critical variables, and improving model design or decision-making. A preliminary sensitivity analysis was carried out on the collected database to estimate the impact of each input on the FOS values. The “single variable per time” technique is used to determine the sensitivity index (SI) of each input using Hoffman and Gardner’s formula76:

$$SI = \frac{Y_{max} - Y_{min}}{Y_{max}}$$

where Ymax and Ymin are the maximum and minimum output (FOS) values obtained when the input is varied over its range while the other inputs are held constant.
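A one-at-a-time computation of this index might look like the following sketch. Holding the other inputs at their means and sweeping each input over its observed values are assumptions, since the text does not state the reference values; `model` stands for any trained predictor of FOS, and the surrogate used in the example is hypothetical.

```python
from statistics import mean


def sensitivity_indices(model, X):
    """One-at-a-time sensitivity index SI = (Ymax - Ymin) / Ymax per input.

    Input j is swept over the values observed in X while every other input is
    held at its mean (an assumption); `model` maps an input list to a scalar
    output such as FOS, which must be non-zero at its maximum.
    """
    n_inputs = len(X[0])
    means = [mean(row[j] for row in X) for j in range(n_inputs)]
    indices = []
    for j in range(n_inputs):
        outputs = []
        for row in X:
            probe = list(means)
            probe[j] = row[j]                      # vary only input j
            outputs.append(model(probe))
        y_max, y_min = max(outputs), min(outputs)
        indices.append((y_max - y_min) / y_max)
    return indices


# Hypothetical surrogate model that ignores its second input
X = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]]
print(sensitivity_indices(lambda x: 1.0 + 2.0 * x[0], X))  # prints [0.8, 0.0]
```

An input that does not affect the output receives SI = 0, while the most influential input has the largest index.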

The precision of the FOS predictions and the associated errors were then quantified with the following performance indices:

SSE denotes the sum of squared errors.

The sum of squared errors (SSE) quantifies the overall discrepancy between the predicted and actual values in a regression or optimization problem and is commonly used in linear regression. For a set of observed values and the corresponding predicted values, it is calculated as:

$$SSE = \sum_{i=1}^{N} \left(y_i - x_i\right)^2$$

where N is the number of data points, \({y}_{i}\) is the actual value, and \({x}_{i}\) is the predicted value.

Mean Absolute Error (MAE) is a metric used to evaluate the performance of a regression model. It measures the average absolute difference between the predicted and actual values and is given by:

$$MAE = \frac{1}{N} \sum_{i=1}^{N} \left| y_i - x_i \right|$$

where N is the total number of data points, \({y}_{i}\) is the actual (experimental) value, and \({x}_{i}\) is the predicted value of the ith data point. The formula takes the absolute difference between each predicted value and its corresponding actual value, sums these absolute differences, and averages them over all observations. MAE provides a straightforward and interpretable measure of the average prediction error. Because the errors are not squared, it is less sensitive to outliers than the Mean Squared Error (MSE) or the Sum of Squared Errors (SSE), which makes it robust in cases where outliers might otherwise dominate the evaluation. The smaller the MAE, the better the model performance. MAE is expressed in the same units as the original data, which makes it easy to interpret and compare across models or datasets.

Mean Squared Error (MSE) is a common metric for evaluating the performance of a regression model. It measures the average squared difference between the predicted and actual values:

$$MSE = \frac{1}{N} \sum_{i=1}^{N} \left(y_i - x_i\right)^2$$

where N is the number of data points, \({y}_{i}\) is the actual value, and \({x}_{i}\) is the predicted value. The formula squares the difference between each predicted value and its corresponding actual value, sums these squared differences, and averages them over all observations. Because the differences are squared, MSE is sensitive to outliers: larger errors have a disproportionately large impact on the overall score.

The Root Mean Squared Error (RMSE) is a metric used to evaluate the performance of a regression model. It is derived from the Mean Squared Error (MSE) and gives a more interpretable measure by taking the square root of the average squared difference between predicted and actual values. RMSE can be interpreted as the typical deviation of a target data point from the model’s prediction, and a lower RMSE indicates a better-performing model. The formula for RMSE is given in Eq. 14:

$$RMSE = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left(y_i - x_i\right)^2}$$

The formula squares the difference between each predicted value and its corresponding actual value, averages these squared differences, and then takes the square root. RMSE is expressed in the same units as the original data, which makes it directly comparable to the scale of the dependent variable. Lower RMSE values indicate better model performance, as they represent smaller average differences between predicted and actual values. RMSE is widely used in regression tasks and is particularly useful when larger errors should be emphasized, as it penalizes them more heavily than the Mean Absolute Error (MAE). However, it is sensitive to outliers, so the nature of the data and the goals of the modeling task should be considered carefully. The coefficient of determination, R-squared (R2), is a statistical measure of the proportion of the variance in the dependent variable that is predictable from the independent variables in a regression model. It is a common metric for assessing the goodness of fit of a regression model and is given by:

$$R^2 = 1 - \frac{SSR}{SST} = 1 - \frac{\sum_{i=1}^{N} \left(y_i - x_i\right)^2}{\sum_{i=1}^{N} \left(y_i - \bar{y}\right)^2}$$

where SSR is the residual sum of squares, SST is the total sum of squares, and \(\bar{y}\) is the mean of the actual values.

This formula compares the performance of the regression model with a simple model that predicts the mean of the dependent variable for every observation. If the model fits the data well, SSR will be much smaller than SST, leading to a higher R-squared value. For a least-squares fit with an intercept, R-squared ranges from 0 to 1, where 0 indicates that the model explains none of the variance in the dependent variable and 1 indicates perfect prediction; when it is computed for predictions from other models or on new data, it can be negative. R-squared alone does not provide information about the significance of individual coefficients or the appropriateness of the model, and a high R-squared does not necessarily mean that the model is well specified or has predictive power. To account for the number of predictors, the adjusted R-squared corrects the R-squared value for the model’s degrees of freedom. Overall, R-squared is a valuable metric for assessing the overall fit of a regression model, but it should be used together with other diagnostic tools to evaluate the model’s performance thoroughly.
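The five indices defined above can be computed together with the standard library, as in the sketch below. It follows the convention used here (yi actual, xi predicted) and treats SSR in the R² formula as the residual sum of squares. The function name and example values are hypothetical.

```python
import math


def regression_metrics(actual, predicted):
    """Return SSE, MAE, MSE, RMSE and R2 (y_i = actual, x_i = predicted)."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("need two non-empty sequences of equal length")
    n = len(actual)
    residuals = [y - x for y, x in zip(actual, predicted)]
    sse = sum(r * r for r in residuals)            # sum of squared errors
    mae = sum(abs(r) for r in residuals) / n       # mean absolute error
    mse = sse / n                                  # mean squared error
    rmse = math.sqrt(mse)                          # root mean squared error
    y_bar = sum(actual) / n
    sst = sum((y - y_bar) ** 2 for y in actual)    # total sum of squares
    if sst == 0:
        raise ValueError("R2 is undefined when all actual values are equal")
    r2 = 1.0 - sse / sst                           # residual sum (SSR) equals SSE here
    return {"SSE": sse, "MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}


scores = regression_metrics([1.0, 1.5, 2.0, 2.5], [1.1, 1.4, 2.2, 2.4])
print({k: round(v, 4) for k, v in scores.items()})
```

Computing all indices from the same residual list keeps them mutually consistent, for example RMSE is always the square root of MSE.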

Results and discussion

ANN model result analysis

Four models (3–4–1, 3–5–1, 3–6–1, and 3–7–1) were developed using the ANN technique. All models used normalization to the range −1.0 to 1.0 and the hyperbolic tangent (tanh) activation function, and were trained with the back-propagation (BP) algorithm. The hidden layer started with four neurons, and the number of neurons was gradually increased to seven. The final network layout, with the 3–7–1 topology, is illustrated in Fig. 9. The reduction in error (%) with increasing number of neurons and the relations between calculated and predicted values are shown in Fig. 10. These models were used to predict the FOS values, and the weight matrix of the ANN is given in Table 3. The average error (%) over the total dataset and the R2 value are 24% and 0.946, respectively. The performance evaluation of the ANN model also produced an MAE of 0.27, an RMSE of 0.46, and a parametric line relation of 0.972x. Very few outliers fall outside the ±30% fit envelope around the best fit of the model. The relative importance of each input parameter is illustrated in Fig. 11, which indicates that C/γh and tan(ϕ)/tan(β) have the main impact on FoS. This shows the influence of the ratio of the tangent of the friction angle to the tangent of the slope angle on slope stability; the slope geometry, as the denominator of this ratio, is a decisive parameter. The ratio of soil cohesion to the product of unit weight and slope height also has a strong influence on slope behavior under the studied conditions, closely following the friction ratio. The ANN model for forecasting the factor of safety (FoS) of slopes shows excellent performance, with higher accuracy and reliability than the CN2 and GMDH models.
The ANN model’s performance and its implications for slope stability assessment can be summarized as follows. The average error of 24% is the mean percentage deviation of the predictions from the actual values and is lower than those of CN2 (47%) and GMDH (28%); it is the lowest among the considered models. The R2 value of 0.946 indicates that 94.6% of the variability in the FoS dataset is explained by the model, which shows strong predictive power and exceeds GMDH (0.926) and CN2 (0.876). The MAE of 0.27 is lower than those of GMDH (0.30) and CN2 (0.47), and the RMSE of 0.46, which weights larger errors more heavily, is lower than those of GMDH (0.53) and CN2 (0.89), showing better handling of outliers. Together, these metrics confirm the model’s effectiveness in reducing both absolute and larger errors. The parametric line relation of 0.972x indicates a near one-to-one linear relationship between predicted and actual values, and most predictions fall within the ±30% fit envelope with very few outliers, which makes the model suitable for practical applications. Although the average error is relatively low, further reduction would be beneficial for highly sensitive slope stability assessments, especially for critical infrastructure. ANN models are also computationally intensive and may require more resources for training and validation than simpler models such as CN2.
The ANN model’s strong performance metrics make it well suited to slope stability forecasting, especially where accurate FoS predictions are critical. With few outliers and a high degree of accuracy, it is reliable across diverse slope conditions and geophysical flow contexts, providing engineers and geotechnical experts with a robust tool for assessing slope safety and planning timely and effective risk mitigation. Future work could incorporate additional geotechnical parameters (e.g., rock strength and drainage conditions) to enhance the model’s robustness; use cross-validation and external datasets to confirm its generalizability and guard against overfitting; combine the ANN with other methods (e.g., GMDH or ensemble models) to capture complex nonlinear relationships more effectively; and develop user-friendly software tools for real-time FoS prediction to aid field engineers. In summary, the ANN model offers the best performance for forecasting the factor of safety of slopes among the considered models. Its average error of 24%, R2 value of 0.946, and few outliers outside the ±30% fit envelope demonstrate its reliability and accuracy, making it a highly effective tool for slope stability analysis in geophysical flows. Further refinement and integration into practical applications could maximize its potential for real-world engineering challenges.
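A 3–7–1 network of the kind described above (inputs scaled to [−1, 1], tanh hidden units, back-propagation) can be sketched in plain Python. The linear output unit, weight initialization, learning rate, epoch count, and synthetic data are assumptions for illustration; this is not the trained model whose weights appear in Table 3, and the class and function names are hypothetical.

```python
import math
import random


def minmax_scale(values, lo=-1.0, hi=1.0):
    """Linearly scale values to [lo, hi]; also return (min, max) for inverse scaling."""
    v_min, v_max = min(values), max(values)
    span = (v_max - v_min) or 1.0                  # avoid division by zero
    return [lo + (hi - lo) * (v - v_min) / span for v in values], (v_min, v_max)


class TinyMLP:
    """n_in-n_hidden-1 network: tanh hidden layer, linear output unit."""

    def __init__(self, n_in=3, n_hidden=7, seed=0):
        rng = random.Random(seed)
        self.W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.W2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.W1, self.b1)]
        return h, sum(w * hj for w, hj in zip(self.W2, h)) + self.b2

    def predict(self, x):
        return self.forward(x)[1]

    def train(self, X, Y, lr=0.05, epochs=200, seed=0):
        """Per-sample back-propagation of the loss 0.5 * (y - t)^2."""
        rng = random.Random(seed)
        order = list(range(len(X)))
        for _ in range(epochs):
            rng.shuffle(order)
            for i in order:
                x, t = X[i], Y[i]
                h, y = self.forward(x)
                d_out = y - t                                     # dLoss/dy
                for j, hj in enumerate(h):
                    d_hid = d_out * self.W2[j] * (1.0 - hj * hj)  # tanh' = 1 - tanh^2
                    self.W2[j] -= lr * d_out * hj
                    for k, xk in enumerate(x):
                        self.W1[j][k] -= lr * d_hid * xk
                    self.b1[j] -= lr * d_hid
                self.b2 -= lr * d_out


def mse(model, X, Y):
    return sum((model.predict(x) - t) ** 2 for x, t in zip(X, Y)) / len(X)


# Hypothetical inputs already scaled to [-1, 1] and a smooth synthetic target
rng = random.Random(1)
X = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(100)]
Y = [0.5 * x[0] - 0.3 * x[1] + 0.2 * x[2] ** 2 for x in X]
net = TinyMLP(n_in=3, n_hidden=7)
before = mse(net, X, Y)
net.train(X, Y)
print(round(before, 4), round(mse(net, X, Y), 4))
```

Replacing the synthetic inputs with the three scaled dimensionless ratios and the target with FOS (scaled by `minmax_scale` and mapped back with the returned minimum and maximum) would reproduce the general workflow, not the reported results.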

GMDH model result analysis

Three GMDH models were developed using the “GMDH Shell 3” software, with the order of the activation function increasing from linear to cubic. The reduction in error (%) with increasing number of neurons is presented in Fig. 12a. The final model is given in Eq. (16). This closed-form equation represents the overall behavior of the factor of safety of the studied soils across the global field entries. It can be applied manually, by trial and error, in the design of stable slopes within the boundary conditions of the selected parameters and their ratios. The average error (%) over the total dataset and the R2 value are 28% and 0.926, respectively. The relations between calculated and predicted values are shown in Fig. 12b. The performance evaluation of the GMDH model also produced an MAE of 0.30, an RMSE of 0.53, and a parametric line relation of 0.963x. Very few outliers fall outside the ±30% fit envelope around the best fit of the model. The GMDH model for forecasting the factor of safety (FoS) of slopes thus performs better than the CN2 model. Its average error of 28%, the average percentage deviation of predictions from actual values, is a notable improvement on the CN2 model (47%). The R2 value of 0.926 indicates that 92.6% of the variance in the FoS data is explained by the model, signifying strong predictive capability. The MAE of 0.30 is lower than that of CN2 (0.47), indicating better accuracy, and the RMSE of 0.53 is lower than that of CN2 (0.89), reflecting a reduced impact of outliers. The parametric line relation of 0.963x indicates a near one-to-one linear relationship between predicted and actual values.
The predictions largely fall within the ±30% fit envelope, demonstrating high reliability with only a few outliers. The lower MAE (0.30) and RMSE (0.53) confirm the model’s superior performance over CN2, particularly in handling deviations and outliers, and the high R2 value (0.926) makes it suitable for slope stability forecasting. Nevertheless, the average error of 28% remains significant for highly sensitive slope stability assessments, where safety margins are critical, and high R2 values in GMDH models may occasionally signal overfitting, which calls for careful validation with external datasets. The GMDH model’s low error metrics and high R2 value make it a robust tool for slope stability assessment and risk management in geophysical flows, suitable for varied slope types and environmental conditions given its few outliers and reliable performance within the ±30% envelope. It can also support decision-making by providing FoS predictions for planning and mitigation, particularly in areas prone to landslides or slope failures. Future work could include diverse slope profiles, environmental conditions, and failure scenarios to improve generalizability; introduce additional variables (e.g., soil composition and groundwater levels) to enhance prediction accuracy; employ k-fold cross-validation and test the model on external datasets to confirm robustness and minimize overfitting; and investigate the remaining outliers and higher errors for specific conditions to identify and mitigate potential model weaknesses.
Combining GMDH with other techniques (e.g., neural networks or genetic algorithms) could also help capture complex nonlinear relationships. Overall, the GMDH model offers a significant improvement over the CN2 model, with a lower average error (28%), a higher R2 (0.926), and reduced MAE (0.30) and RMSE (0.53). Its strong parametric line relation (0.963x) and few outliers outside the ±30% fit envelope make it a reliable and accurate tool for forecasting the factor of safety in slope stability assessments. While the model performs well, further refinement and validation would extend its applicability to geophysical flow management and high-risk scenarios.
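The text reports a parametric line relation (e.g. 0.963x) and a ±30% fit envelope without defining how they are computed. A plausible reading, assumed in the sketch below, is a least-squares line through the origin of predicted against calculated FOS, with slope k equal to the sum of calculated × predicted divided by the sum of calculated squared, together with the share of points whose prediction lies within ±30% of the calculated value. The function names and example data are hypothetical.

```python
def slope_through_origin(actual, predicted):
    """Least-squares k in predicted ~ k * actual (line forced through the origin)."""
    return sum(a * p for a, p in zip(actual, predicted)) / sum(a * a for a in actual)


def share_within_envelope(actual, predicted, tol=0.30):
    """Fraction of points whose prediction lies within +/- tol (relative) of the actual value."""
    inside = sum(1 for a, p in zip(actual, predicted) if abs(p - a) <= tol * abs(a))
    return inside / len(actual)


actual = [1.0, 2.0, 3.0, 1.5]
predicted = [0.9, 1.8, 2.7, 2.5]          # the last point is a hypothetical outlier
print(round(slope_through_origin(actual, predicted), 3), share_within_envelope(actual, predicted))
```

A slope k below 1 indicates systematic under-prediction, while the envelope share counts how many individual points stay within the ±30% band regardless of that trend.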

CN2 model result analysis

As with the GMDH model, the CN2 Rule Induction models were developed using the “Orange” software. The beam width started at 2 and was increased to 6. The reduction in error (%) with increasing beam width and the relations between calculated and predicted values are shown in Fig. 13. The final model includes 155 successive “IF condition” statements. The average error (%) over the total dataset and the R2 value are 31% and 0.917, respectively. The performance evaluation of the CN2 model also produced an MAE of 0.22, an RMSE of 0.58, and a parametric line relation of 0.888x. Very few outliers fall outside the ±30% fit envelope around the best fit of the model. The CN2 model for forecasting the factor of safety (FoS) of slopes shows promising performance but also highlights areas for improvement. Its metrics and applicability to geophysical flow predictions are analyzed below. An average error of 47% indicates the mean percentage deviation of predictions from actual values across the dataset. The R2 value of 0.876 shows that 87.6% of the variability in FoS is explained by the model. The MAE of 0.47 reflects the average magnitude of the prediction errors, and the RMSE of 0.89, which penalizes larger errors more than MAE, gives insight into prediction reliability. The parametric line relation of 0.876x indicates a strong linear correlation between predicted and observed values, and the ±30% fit envelope shows that most predictions fall within 30% of the actual values. Outliers are few, suggesting reasonable predictive consistency. The R2 value of 0.876 indicates strong explanatory power, suggesting that the model captures most of the variability in the FoS of slopes, and the parametric line relation (0.876x) supports the high correlation between predicted and actual values.
The MAE of 0.22 shows that, on average, the model’s predictions are close to the actual values in absolute terms. The RMSE of 0.58, more than twice the MAE, indicates that a small number of large errors (outliers) contribute disproportionately to the overall error. Because most predictions fall within the ± 30% envelope of the best fit, the model is reliable for practical applications with tolerable margins of error. The average error of 31%, however, is comparatively high and reflects variability across the dataset, which might stem from underrepresented or poorly modeled data points. The outliers outside the ± 30% envelope, although few, mark conditions under which the model struggles, likely because of unaccounted factors or noise in the dataset. Despite the relatively high average error, the strong R2 and low MAE suggest that the CN2 model is applicable to slope stability forecasting in non-critical situations or where a margin of error is acceptable. The model provides a framework for preliminary risk assessment of slopes, supporting decision-making in geophysical flow management and mitigation, and the consistency within the ± 30% fit envelope helps identify potentially unstable slopes with reasonable confidence. Several improvements are possible. Additional geophysical parameters (e.g., soil type, moisture content, vegetation cover) could be included to reduce variability and enhance precision. The high average error could be addressed by improving data quality, increasing the dataset size, or applying feature engineering to better capture complex relationships. Outliers could be managed by analyzing the conditions that produce them, applying robust regression techniques, and segmenting the dataset by slope type or environmental conditions. Finally, k-fold cross-validation or an independent dataset could be used to confirm generalizability and guard against overfitting.
The CN2 model could also be combined with other machine learning techniques (e.g., Random Forest or ANN) to improve the handling of nonlinearities and interactions. Overall, the CN2 model achieves strong predictive power, as shown by its R2 value (0.917) and parametric line relation (0.888x). However, its average error (31%) and RMSE (0.58) suggest that it may require refinement for more precise applications. For practical purposes, the model is suitable for general slope stability assessments, particularly when combined with expert judgment, and addressing its limitations through additional inputs and error mitigation techniques could significantly enhance its performance in geophysical flow forecasting.
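The performance metrics used throughout this section can be computed as in the following minimal Python sketch. Their exact definitions are not stated in this section, so the following are assumptions: the average error (%) is taken as the mean absolute percentage deviation from the observed FOS, R2 as 1 − SSE/SST, the parametric line relation as the slope k of a least-squares line predicted = k × observed through the origin, and the ± 30% envelope as |predicted − observed| ≤ 0.3 × observed. The four data points are hypothetical.

```python
import math


def fos_metrics(observed, predicted, envelope=0.30):
    """Error metrics for FOS predictions (definitions are assumptions, see text)."""
    n = len(observed)
    if n == 0 or n != len(predicted):
        raise ValueError("observed and predicted must be non-empty and of equal length")
    errors = [p - o for o, p in zip(observed, predicted)]
    sse = sum(e * e for e in errors)
    mean_obs = sum(observed) / n
    sst = sum((o - mean_obs) ** 2 for o in observed)
    return {
        # mean absolute percentage deviation, assumed to be the "average error %"
        "average_error_pct": 100.0 * sum(abs(e) / o for e, o in zip(errors, observed)) / n,
        "mae": sum(abs(e) for e in errors) / n,
        "mse": sse / n,
        "rmse": math.sqrt(sse / n),
        # coefficient of determination, 1 - SSE/SST
        "r2": 1.0 - sse / sst,
        # slope k of the least-squares line predicted = k * observed through the origin
        "line_slope": sum(o * p for o, p in zip(observed, predicted))
        / sum(o * o for o in observed),
        # share of points whose error lies within +/- envelope of the observed value
        "within_envelope": sum(abs(e) <= envelope * o for e, o in zip(errors, observed)) / n,
    }


observed = [1.0, 1.5, 2.0, 2.5]   # hypothetical FOS values
predicted = [1.1, 1.4, 2.2, 2.4]  # hypothetical model output
for name, value in fos_metrics(observed, predicted).items():
    print(f"{name}: {value:.4f}")
```

Comparing RMSE with MAE is a quick diagnostic: a ratio well above 1, such as 0.58/0.22 for CN2, signals that a few large errors dominate the squared-error metrics.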

SGD model result analysis

Four SGD models were developed using the “Orange” software with the modified Huber loss function and “Elastic net” regularization, with the mixing factor increased from 0.1 to 0.4. The reduction in error (%) with increasing mixing factor and the relation between calculated and predicted values are illustrated in Fig. 14. The average error (%) over the total dataset and the R2 value are 47% and 0.876, respectively. Further, the performance evaluation of the SGD model produced an MAE of 0.47, an RMSE of 0.89 and a parametric line relation of 0.876x. Very few outliers fall outside the ± 30% fit envelope around the best fit of the model. The Stochastic Gradient Descent (SGD) model for forecasting the factor of safety (FoS) of slopes shows reasonable predictive performance but is outperformed by the ANN and GMDH models. The average error of 47% indicates a relatively large deviation between predicted and actual values, i.e., moderate accuracy. The R2 value of 0.876 means that 87.6% of the variance in the dataset is explained by the model, an acceptable but not optimal predictive capability. The MAE of 0.47 is the average magnitude of the errors and indicates moderate precision, while the RMSE of 0.89, which penalizes significant deviations more heavily, reveals larger errors than those of the more advanced models. The parametric line relation of 0.876x indicates a moderate linear agreement between predicted and actual FoS values. Most predictions fall within the ± 30% envelope, with very few outliers, indicating reasonable reliability for general applications. SGD is computationally efficient and easy to implement, making it suitable for quick evaluations or resource-limited settings. With an R2 value of 0.876, the model captures a significant proportion of the variability in the dataset, though not as effectively as the ANN or GMDH.
The few outliers outside the ± 30% envelope suggest consistent performance across the dataset. The main weaknesses are as follows. The relatively large average error (47%) indicates that predictions often deviate significantly from actual values, reducing reliability for precise applications. The MAE of 0.47 and RMSE of 0.89 are higher than those of the ANN (0.27, 0.46) and GMDH (0.30, 0.53), reflecting lower overall accuracy. Although acceptable, the R2 value (0.876) is noticeably lower than those of the ANN (0.946) and GMDH (0.926), suggesting that the model may not fully capture the complexity of FoS prediction. The SGD model is therefore best suited to preliminary assessments or less critical slope stability analyses where precision is less important, and its simplicity and efficiency make it a good choice for rapid predictions or limited computational resources. It provides a general understanding of slope stability, but its results should be supplemented by more accurate models (e.g., ANN or GMDH) for critical decisions. Possible improvements include adding geotechnical and environmental parameters (e.g., drainage patterns, soil heterogeneity) to enhance predictive accuracy; tuning the regularization further (the Elastic net already combines L1 and L2 penalties, so its strength and a wider range of mixing factors could be explored) to reduce overfitting and improve generalization; combining SGD with ensemble methods (e.g., bagging or boosting) to increase robustness and accuracy; and using SGD alongside more complex models (e.g., ANN) as a benchmark to identify specific areas for improvement. In summary, the SGD model shows moderate performance, with an average error of 47%, an R2 of 0.876 and consistent predictions within a ± 30% fit envelope. While it is computationally efficient and suitable for preliminary slope stability assessments, it falls short of the GMDH and ANN models in accuracy and reliability, and more advanced models are recommended for critical geophysical flow and slope stability applications to ensure precision and robustness.
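A minimal pure-Python sketch of the SGD setup described above: a linear model trained by stochastic gradient descent with an Elastic net penalty, sweeping the mixing factor from 0.1 to 0.4. Two assumptions differ from the text and are stated plainly. First, the modified Huber loss is normally a classification loss (as in scikit-learn's `SGDClassifier`), so the sketch swaps in the ordinary Huber loss for regression. Second, the mixing factor is taken as the L1 share of the penalty, analogous to scikit-learn's `l1_ratio`. The learning rate, penalty strength, epoch count and the synthetic data (from a hypothetical linear FOS relation) are illustrative choices; Orange's own learner may use different defaults, and the sketch does not reproduce the paper's results.

```python
import random


def huber_gradient(residual, epsilon=1.0):
    """Derivative of the ordinary Huber loss with respect to the prediction."""
    if abs(residual) <= epsilon:
        return residual
    return epsilon if residual > 0 else -epsilon


def sign(value):
    return (value > 0) - (value < 0)


def train_sgd(X, y, l1_ratio, alpha=1e-4, learning_rate=0.05, epochs=200, epsilon=1.0, seed=0):
    """Linear model trained by plain SGD on the Huber loss plus an Elastic net penalty.

    Penalty: alpha * (l1_ratio * |w| + (1 - l1_ratio) * w**2 / 2), applied to the
    weights only; l1_ratio plays the role of the mixing factor.
    """
    rng = random.Random(seed)
    weights = [0.0] * len(X[0])
    bias = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the samples in a new random order every epoch
        for i in order:
            prediction = bias + sum(w * x for w, x in zip(weights, X[i]))
            g = huber_gradient(prediction - y[i], epsilon)
            for j, x in enumerate(X[i]):
                penalty = alpha * (l1_ratio * sign(weights[j]) + (1 - l1_ratio) * weights[j])
                weights[j] -= learning_rate * (g * x + penalty)
            bias -= learning_rate * g
    return weights, bias


def mean_absolute_error(X, y, weights, bias):
    predictions = [bias + sum(w * x for w, x in zip(weights, row)) for row in X]
    return sum(abs(p - t) for p, t in zip(predictions, y)) / len(y)


# Hypothetical data: columns stand in for C/(gamma*h), tan(phi)/tan(beta), rho/(gamma*h).
data_rng = random.Random(42)
X = [[data_rng.uniform(0.0, 0.3), data_rng.uniform(0.5, 1.5), data_rng.uniform(0.0, 0.5)]
     for _ in range(80)]
y = [0.3 + 2.0 * c + 0.9 * t - 1.0 * r + data_rng.gauss(0.0, 0.02) for c, t, r in X]

# Sweep the mixing factor from 0.1 to 0.4, as in the four models described in the text.
for mixing in (0.1, 0.2, 0.3, 0.4):
    w, b = train_sgd(X, y, l1_ratio=mixing)
    print(f"mixing={mixing}: MAE={mean_absolute_error(X, y, w, b):.3f}")
```

On these linear, low-noise synthetic data every mixing factor fits well; a linear learner of this kind has no mechanism for the nonlinear interactions that ANN or GMDH can represent.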

In general, the performance of the models in comparison with the collected field data entries is summarized in Table 4 and, as a Taylor chart, in Fig. 15. The Taylor chart corroborates the best-fit lines drawn for each model in the previous graphical illustrations. Table 4 shows that the ANN outclassed the other techniques with an SSE of 62%, MAE of 0.27, MSE of 0.21, RMSE of 0.46, average total error of 24% and R2 of 0.946, making it the decisive intelligent model in this exercise. However, the GMDH, which ranks second, has one advantage over the ANN: it yields a closed-form equation that allows the model to be applied manually in the design of slope stability problems. The previous study reported in the literature (Lin et al., 2021) used eleven (11) different machine learning techniques, with support vector regression with a polynomial kernel (SVR-poly) as the decisive model; that best model had an R2 of 0.8640, an MAE of 0.208 and an MSE of 1.5531. All four models of the present work (ANN, GMDH, CN2 and SGD) exceed this R2, with values between 0.876 and 0.946. Several factors are responsible for this improvement: the sorting and elimination of illogical and unrealistic data entries from the field data deposited in the literature 5, the use of dimensionless combinations of the studied slope stability parameters, and the superior learning abilities of the selected techniques.
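A Taylor chart places each model using three statistics computed against the observed FOS: the standard deviation σ_m of its predictions, their Pearson correlation R with the observations, and the centred RMS difference E′. These are linked by E′² = σ_m² + σ_r² − 2σ_mσ_rR, where σ_r is the standard deviation of the observations, so each model appears at radius σ_m and angle arccos R. A minimal Python sketch, assuming population (1/n) statistics and hypothetical data:

```python
import math


def taylor_statistics(reference, model):
    """Statistics plotted on a Taylor diagram (population, i.e. 1/n, definitions)."""
    n = len(reference)
    mean_r = sum(reference) / n
    mean_m = sum(model) / n
    dev_r = [r - mean_r for r in reference]
    dev_m = [m - mean_m for m in model]
    sd_r = math.sqrt(sum(d * d for d in dev_r) / n)
    sd_m = math.sqrt(sum(d * d for d in dev_m) / n)
    corr = sum(a * b for a, b in zip(dev_r, dev_m)) / (n * sd_r * sd_m)
    # centred RMS difference: RMS of the differences after removing each mean (bias)
    crmsd = math.sqrt(sum((b - a) ** 2 for a, b in zip(dev_r, dev_m)) / n)
    return {
        "sd_ref": sd_r,
        "sd_model": sd_m,
        "corr": corr,
        "crmsd": crmsd,
        # polar position of the model point: radius sd_model, angle arccos(corr)
        "angle_rad": math.acos(max(-1.0, min(1.0, corr))),
    }


observed = [1.0, 1.5, 2.0, 2.5, 3.0]   # hypothetical FOS values
predicted = [1.1, 1.4, 2.2, 2.4, 3.2]  # hypothetical model output
print(taylor_statistics(observed, predicted))
```

A model closer to the reference point on the chart (radius σ_r, angle 0) has both a higher correlation and a smaller centred RMS difference, which is why the chart can be read alongside the best-fit lines.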

Sensitivity analysis results

Accordingly, the sensitivity index (SI) values are 0.86, 0.92 and 0.29 for C/γh, tan(ϕ)/tan(β) and ρ/γh, respectively. A sensitivity index of 1.0 indicates complete sensitivity, whereas a sensitivity index below 0.01 indicates that the model is insensitive to changes in the parameter 76. These values indicate the relative importance of the three parameters in influencing slope behavior for geophysical flow predictions: they represent the degree to which variations in each parameter affect the model’s output, with higher values indicating greater sensitivity. The value of 0.92 for tan(ϕ)/tan(β) shows that this parameter has the strongest influence on slope behavior. This ratio reflects the interplay between the soil’s internal friction angle (ϕ) and the slope angle (β), which are critical in determining stability. Its high sensitivity means that small changes in the relationship between these angles can substantially affect slope stability, so these parameters must be estimated accurately in geophysical flow models. The value of 0.86 for C/γh shows that the ratio of cohesion (C) to the product of unit weight (γ) and slope height (h) is also highly influential. Cohesion contributes to the soil’s resistance to failure, and its ratio to γh represents the stabilizing forces relative to the driving forces; this high sensitivity therefore means that variations in cohesion, slope height or soil unit weight significantly affect slope stability predictions. In contrast, the value of 0.29 for ρ/γh indicates that this parameter has a relatively smaller influence on slope behavior. The ratio of pore water pressure (ρ) to γh is thus a less critical factor in the overall stability assessment.
While pore water pressure variations can affect slope stability, the lower sensitivity suggests that their impact is less pronounced than that of the frictional and cohesive properties of the soil. The analysis highlights the dominant roles of tan(ϕ)/tan(β) and C/γh in determining slope behavior, underscoring the need for precise measurement and careful consideration of these parameters in geophysical flow predictions. It also suggests that efforts to improve model accuracy should prioritize these high-sensitivity factors over lower-sensitivity parameters such as ρ/γh.
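The formula behind the sensitivity index is not given in this section. A common one-at-a-time definition that matches the interpretation above (1.0 for complete sensitivity, below 0.01 for insensitivity) is SI = (D_max − D_min)/D_max, where D_max and D_min are the largest and smallest outputs obtained as one input is swept over its range while the others are held at baseline values. The Python sketch below assumes that definition; the surrogate model `toy_fos`, its coefficients, the baseline values, the ranges and the keys `c_gh`, `tan_ratio` and `rho_gh` are hypothetical and are not the paper's ANN or GMDH.

```python
def sensitivity_indices(model, baseline, ranges, steps=11):
    """One-at-a-time index SI = (D_max - D_min) / D_max for each input.

    Each input is swept over its (low, high) range while the others stay at
    their baseline values; D_max and D_min are the largest and smallest outputs.
    """
    indices = {}
    for name, (low, high) in ranges.items():
        outputs = []
        for k in range(steps):
            point = dict(baseline)
            point[name] = low + (high - low) * k / (steps - 1)
            outputs.append(model(point))
        d_max, d_min = max(outputs), min(outputs)
        if d_max <= 0:
            raise ValueError("SI assumes a positive output such as the FOS")
        indices[name] = (d_max - d_min) / d_max
    return indices


def toy_fos(x):
    """Hypothetical linear FOS surrogate, NOT the ANN/GMDH model of the paper."""
    return 0.4 + 3.0 * x["c_gh"] + 1.0 * x["tan_ratio"] - 1.5 * x["rho_gh"]


baseline = {"c_gh": 0.1, "tan_ratio": 1.0, "rho_gh": 0.2}
ranges = {"c_gh": (0.0, 0.3), "tan_ratio": (0.5, 1.5), "rho_gh": (0.0, 0.5)}
for name, si in sensitivity_indices(toy_fos, baseline, ranges).items():
    print(f"{name}: SI = {si:.2f}")
```

The index depends on the chosen ranges and baseline, so values computed this way are comparable only when every input is swept over its own realistic range from the dataset.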

Conclusions

This research aims to predict the factor of safety (FOS) of slopes using C/γh, tan(ϕ)/tan(β) and ρ/γh for the purposes of geophysical flow predictions. The techniques used were ANN, GMDH, CN2 and SGD. The results of comparing the accuracies of the developed models can be summarized in the following points:

- The ANN model gave the most accurate predictions, with an accuracy of 76% (i.e., 100% minus its average error of 24%), followed by the GMDH model (72%), the CN2 model (69%) and finally the SGD model (53%).

- In terms of complexity, the GMDH model is the simplest, with only one closed-form equation, followed by the ANN model with a 4 × 7 weight matrix, then the CN2 model with 155 “IF condition” statements, and finally the SGD model, treated as a black box.

- Both the correlation and sensitivity analyses showed that C/γh and tan(ϕ)/tan(β) have the main influence on the FoS, with ρ/γh having a smaller effect.

- These results show that cohesion (C) is an important factor in slope stability design for geophysical flow considerations, and that the ANN, with the most reliable predictions, is the decisive model.

- The developed models are valid within the considered range of parameter values; beyond this range, the prediction accuracy should be verified.

Data availability

The supporting data for this research project is available from the corresponding author on reasonable request.

References

Rabbani, A., Samui, P. & Kumari, S. A novel hybrid model of augmented grey wolf optimizer and artificial neural network for predicting shear strength of soil. Model. Earth Syst. Environ. 9, 2327–2347. https://doi.org/10.1007/s40808-022-01610-4 (2023).

Onyelowe, K. C., Sujatha, E. R., Aneke, F. I. & Ebid, A. M. Solving geophysical flow problems in Luxembourg: SPH constitutive review. Cogent Eng. https://doi.org/10.1080/23311916.2022.2122158 (2022).

Mahmoodzadeh, A. et al. Prediction of safety factors for slope stability: Comparison of machine learning techniques. Nat. Hazards 111, 1771–1799. https://doi.org/10.1007/s11069-021-05115-8 (2022).

Uyanık, O. Soil liquefaction analysis based on soil and earthquake parameters. J. Appl. Geophys. 176, 104004. https://doi.org/10.1016/j.jappgeo.2020.104004 (2020).

Lin, S., Zheng, H., Han, C., Han, B. & Li, W. Evaluation and prediction of slope stability using machine learning approaches. Front. Struct. Civil Eng. https://doi.org/10.1007/s11709-021-0742-8 (2021).

Sahoo, A. K., Pramanik, J., Jayanthu, S. & Samal, A. K. Slope stability predictions using machine learning techniques. In 2022 4th International Conference on Advances in Computing, Communication Control and Networking (ICAC3N), Greater Noida, India, 133–137. https://doi.org/10.1109/ICAC3N56670.2022.10074079 (2022).

Khajehzadeh, M. & Keawsawasvong, S. Predicting slope safety using an optimized machine learning model. Heliyon 9, e23012. https://doi.org/10.1016/j.heliyon.2023.e23012 (2023).

Wang, S., Zhang, Z. & Wang, C. Prediction of stability coefficient of open-pit mine slope based on artificial intelligence deep learning algorithm. Sci. Rep. 13, 12017. https://doi.org/10.1038/s41598-023-38896-y (2023).

Cao, Z., Youd, T. L. & Yuan, X. Gravelly soils that liquefied during 2008 Wenchuan, China earthquake, Ms = 8.0. Soil Dyn. Earthq. Eng. 31, 1132–1143 (2011).

Meng, J., Mattsson, H. & Laue, J. Three-dimensional slope stability predictions using artificial neural networks. Int. J. Numer. Anal. Methods Geomech. 45, 1988–2000. https://doi.org/10.1002/nag.3252 (2021).