Abstract

In a fiercely competitive marketplace, companies must understand and fulfil user needs to succeed. By mining potential user needs from User Generated Content (UGC) on social media platforms, businesses can design products that match those needs and capture market share quickly. In this paper, the collected UGC is first preprocessed through deduplication, word segmentation, and stop-word removal. Latent Dirichlet Allocation (LDA) is then employed to extract product attribute features from UGC, cluster them to identify user needs, and classify documents accordingly. The Bidirectional Encoder Representations from Transformers (BERT) model is used for word vector feature extraction on the categorized documents, and Long Short-Term Memory (LSTM) is used for sentiment analysis of user needs, taking user interaction metrics into account. Finally, a Correlation Analysis-Vector Autoregressive-Markov (CA-VAR-Markov) model is constructed to forecast the evolution of user needs, and the Analytical Kano (A-Kano) model is applied for an in-depth analysis to propose strategies for product design optimization. In the case study, UGC from “Autohome” is used to predict user needs for the NIO EC6. Compared with LSTM and ARIMA, the proposed model yields more accurate predictions. Based on the prediction results and the A-Kano model, suggestions are put forward for optimizing the NIO EC6. The results indicate that the proposed methods for identifying and predicting user needs can effectively predict the development trend of user needs, providing a reference for enterprises to optimize their products.


Introduction

In an era of intensifying market competition, companies are under mounting pressure to not only identify but also swiftly address user needs to foster their growth and development1. Traditional approaches to gathering user needs, such as interviews2 and surveys3, are often marred by inefficiency and inaccuracy, demanding considerable investment in terms of human resources and financial expenditure. The digital information age has witnessed a paradigm shift, with users increasingly taking to social networking platforms to voice their opinions. UGC, encompassing text, images, audio, video, emoticons, and other forms of expression, serves as a valuable repository of user sentiment and preference4. These user reviews on review platforms are instrumental, functioning as both a reference for prospective buyers and as market intelligence for businesses engaged in product development. Scholars have started to pay attention to the research on online reviews. Enterprises can obtain the product features that users are concerned about and their satisfaction with these features through UGC, thus guiding the optimization direction of product design. However, the sheer volume of UGC often results in user needs being identified at a macro level, which leaves designers without the granular guidance needed for targeted design optimization. Furthermore, it remains an open question whether UGC can entirely supplant conventional methods for obtaining user needs5, or if a hybrid approach could yield more precise and comprehensive insights.

The Kano model, introduced by Japanese professor Noriaki Kano, stands as an effective analytical tool for enhancing product user satisfaction6. Within the realm of product design and development, this model aids designers in capturing and categorizing user needs based on scientific assessment methods. By quantifying the importance of user needs, it elucidates the nexus between user satisfaction and product attributes, thereby enabling products to meet user needs more effectively.

Sentiment analysis, a pivotal subset of natural language processing, aims to uncover and quantify user needs for product attributes by mining the positive or negative sentiments expressed in user comments7. Current research is increasingly focused on analyzing emotional preferences within complex sentences and uncovering implicit user emotions8. By refining techniques for emotional preference analysis, a wealth of valuable information can be extracted from the diverse expressions found in user comments. However, interaction metrics such as likes, comments, and shares also reflect user emotional preferences and can serve as a more direct indicator of user preferences and needs for products. Consequently, it is imperative to consider the impact of user interaction indicators when conducting sentiment analysis based on UGC.

The dynamic nature of user needs, influenced by the changing times, underscores the significance of predicting user needs. Existing research often employs statistical models9, machine learning (ML) models10, and deep learning models11 to predict user needs. These models typically predict the development trend of a single user need in isolation. However, user needs are interconnected12, and predicting a single user need without considering its interrelations may compromise the accuracy of the prediction. The VAR model, comprised of multiple time series variables, is adept at analyzing and forecasting the development trends of multiple interrelated variables13. This paper utilizes the VAR model to predict the development trends of multiple user needs simultaneously. CA can determine whether multiple variables exert influence on each other14, which is employed before VAR prediction to identify variables with correlated relationships. Concurrently, when applying the VAR model to predict user needs, it is assumed that the future trend of the variables will mirror the past. Nevertheless, the future trend of user needs often bears little resemblance to the past and is more significantly impacted by the present. Furthermore, when applying the VAR model to predict user needs, extreme situations such as scarce data on user needs may disrupt the correlation between user needs, leading to inaccurate prediction outcomes. The Markov model, which predicts future changes based on the current state, is better suited for predicting samples with substantial fluctuations15. Therefore, to accurately predict user needs, this paper establishes a CA-VAR-Markov model.

The main contributions of this paper are as follows:

(1) Based on obtaining user needs from UGC, the CA-VAR-Markov model is utilized to predict user needs. Meanwhile, to verify the accuracy of need prediction, the A-Kano model is applied to deeply explore user needs, to obtain accurate and comprehensive user needs, providing specific and clear guidance for the optimized design of products;

(2) When conducting sentiment analysis of user needs, user interaction indicators are considered to correct user sentiment indicators;

(3) Before applying the VAR model for prediction, CA is used to determine the need indicators with mutual influence relationships to input into the VAR model, and the Markov model is used to correct the prediction results of the VAR model, constructing a CA-VAR-Markov model framework to make up for the shortcomings of using the VAR model alone for prediction.

The rest of the paper is organized as follows. Sect. “Literature review” reviews the literature, Sect. “Methodology” details the methodology, Sect. “Case Studies” presents the case study and result analysis, and Sect. “Discussion” concludes.

Literature review

User need mining

The accurate and comprehensive extraction of user needs is fundamental to determining the direction of product optimization. UGC, which contains a wealth of feedback from users on products, has attracted significant attention from both academia and industry in terms of how to derive effective user needs. The initial steps involve processing UGC to obtain high-quality textual content. For instance, Yuan et al. employed the term frequency-inverse document frequency (TF-IDF) to assess the significance of words within a document set, thereby identifying female users’ needs regarding the front design of a vehicle16. Lai et al. utilized Word2Vec to process text into word vectors. This facilitated the clustering of synonyms and reduced the complexity of text processing17. Subsequent research has shifted towards uncovering users’ implicit needs from UGC. Zhou et al. proposed a two-tier model for eliciting latent customer needs through use case reasoning18. Zhang et al. extracted insights into user needs from a vast array of UGC to pinpoint product features that require enhancement for product redesign19. Despite these advances, products with limited UGC also necessitate competitiveness enhancement based on user needs. Yang et al. suggested extracting user needs for products with a short market life from small sample data to improve product design20. It is evident that extracting user needs from UGC is a viable approach. However, there is an absence of research confirming whether UGC can entirely replace traditional methods of obtaining user needs. A synergistic combination of the two approaches might be more effective.

Traditional methods for obtaining user needs include interviews, surveys, and focus group discussions. These methods offer an intuitive understanding of users’ real experiences with products and allow for a deeper exploration of more detailed and specific needs. Sun et al. conducted qualitative research on the behavior and needs of takeaway food box users through interviews and surveys21, yet lacked a refined division and analysis of user needs. Kumar et al. employed a fuzzy Kano model questionnaire to identify and obtain the needs of individuals with mobility disabilities for assistive products22. Koomsap et al. examined common pitfalls in using the Kano model and proposed improvements23, but did not account for the importance users place on need factors. The A-Kano model extends the traditional Kano model by incorporating Kano indices, which quantify attention and satisfaction while categorizing user needs, leading to a better determination of product features and aligning value between consumers and producers. Lizarelli et al. applied the A-Kano model to elicit user needs in entrepreneurial education services24. Therefore, this paper employs the A-Kano model to validate the accuracy of user needs acquisition through UGC and to further delve into understanding user needs, proposing detailed strategic plans for the optimization of the company’s products.

Sentiment analysis

Sentiment analysis, a crucial subset of natural language processing, aims to uncover and quantify user needs for product attributes by mining positive or negative sentiments expressed by users in comments25. Sentiment analysis can be categorized into document-based, sentence-based, and aspect-based according to the granularity26. In user need analysis, aspect-based sentiment analysis (ABSA) is frequently utilized, which first employs feature extraction techniques to identify product features that users care about from online comments, and then applies sentiment analysis methods to assess their emotional preferences for different features27. In feature extraction, machine learning methods are often employed, such as LDA and SVM. Sha et al. applied the LDA model to extract product features from comments28, but LDA is based on the bag-of-words model and does not consider word order, neglecting context, which is detrimental to subsequent sentiment classification. The BERT model is a pre-trained model based on the Transformer architecture, capable of understanding text context and offering advantages in processing the complex semantics of natural language. Lai et al. used the BERT model to rapidly and accurately analyze the emotional needs of smart cockpit users from online comments29. Therefore, this paper utilizes the BERT model for word vector definition after LDA topic classification, facilitating subsequent sentiment analysis.

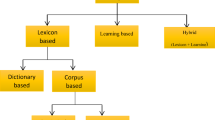

Regarding emotional preference analysis, there are currently three predominant research methods: dictionary-based, traditional machine learning-based, and deep learning-based approaches30. Cho et al. integrated multiple sentiment dictionaries for product comment sentiment analysis across various domains31. Although this integration outperforms a single dictionary, the method is constrained by the quality and coverage of the sentiment dictionaries. In ML, Naive Bayes achieves excellent performance in text classification. Wang et al. constructed a multilayer Naive Bayes model based on multidimensional Naive Bayes models to analyze users’ retweeting sentiment tendencies towards a microblog32. However, ML-based sentiment analysis often fails to make full use of the surrounding text and thus disregards contextual semantics. Du et al. employed deep learning methods such as LSTM for sentiment analysis of user comments, which can learn contextual features and exhibit superior performance25. Many scholars also conduct multi-dimensional sentiment analysis of UGC33, which enables a more comprehensive understanding of user needs. Nevertheless, existing research often overlooks comment interaction indicators, even though these indicators are a more direct expression of users’ emotions towards product features. Although such expressions are often not in textual form, the user emotions they reflect should not be disregarded. Therefore, when conducting aspect-level sentiment analysis of user needs, this paper employs LDA for feature extraction and LSTM for sentiment analysis, and incorporates the impact of interaction indicators on sentiment analysis.

User need prediction

Numerous scholars have explored how to mine valuable information from UGC and construct prediction models. Ma et al. employed K-means clustering to predict users’ preferences for innovative features of smart connected vehicles from a cultural perspective34. Song et al. integrated the Kano model, grey theory, and Markov chains to predict customer needs and provided a case study for predicting the needs of mobile phone users, thereby demonstrating the feasibility of their method35. However, user needs are implicit, and mining users’ implicit needs is pivotal to need prediction. Jing et al. proposed utilizing EEG data to capture users’ implicit needs and employed backpropagation neural networks to predict user needs36. Yet, such methods are prone to randomness in prediction outcomes due to small data samples, whereas UGC sample data is abundant, rendering it an apt data source for user need analysis. Yakubu et al. proposed a method for forecasting the future significance of product attributes based on online customer reviews and Google Trends37. Zhang et al. used the Grey-Markov model to predict user needs in the short term based on online comments38. In summary, methods for predicting user needs in the product design domain include grey prediction, LSTM, and Markov methods. These methods disregard exogenous variables when predicting user needs and only consider endogenous variables. Moreover, when considering endogenous variables, they solely focus on the development trend of a single need, neglecting the interrelationship between needs. When designing products, user needs are often diverse and interconnected17. It is essential to consider the interrelationship between needs when predicting user needs. Chen et al. used CA to adjust and quantify user needs and combined it with the designer’s experience to generate product concepts39. Liu et al. proposed a forecast method within the Quality Function Deployment (QFD) framework based on compositional time series and the VAR model to capture and forecast the volatility of customer needs40. The VAR model is a time series prediction model that accounts for the interrelationship between multiple variables. Therefore, this paper constructs a CA-VAR-Markov model to incorporate the interrelationship between user needs to accurately predict the development trend of user needs, thereby offering suggestions for future product optimization.

Methodology

Extensive scholarly research has been conducted on the mining of user needs, and prediction models have been established based on the extracted user needs. However, the majority of these studies have overlooked the interrelationships between various user needs, leading to inaccurate predictions. This paper analyses and predicts user needs in a four-stage process: UGC mining and processing, quantification of user needs indicators, user need prediction, and verification of prediction results. Initially, UGC is obtained and processed, which involves deduplication, tokenization, and the removal of stop words. Secondly, LDA is utilized for topic classification, and the BERT model is employed for feature extraction. Based on the word frequency and sentiment score of product features, the user attention value and user satisfaction value are calculated, taking into account user interaction indicators. Subsequently, correlation analysis is performed on various user need indicators, and a CA-VAR-Markov model is constructed for user need prediction to dynamically analyze the changing trends of user needs. Finally, the results of the prediction model are verified using the A-Kano model, and user needs are deeply explored to propose specific strategies for product optimization. The flowchart of user needs analysis and prediction is depicted in Fig. 1.

UGC mining and processing

Compared to traditional methods of obtaining user needs, UGC offers the advantages of abundant data and timely acquisition41. Therefore, this paper selects UGC as the data source. Because online users are numerous and diverse, the quality of the UGC they publish varies. To ensure the accuracy of subsequent text analysis, the collected UGC is processed as follows: (a) Removal of useless content: Comments that only express the user’s purchase intent and do not reflect user needs are removed manually, as are comments describing other products unrelated to the purchased product. (b) Removal of emoticons: Emoticons convey emotional attitudes generally consistent with the preceding text, but they impair the readability of the data and can produce garbled results; hence, emoticons are removed in this paper. (c) Tokenization: After filtering, the UGC is split into sentences, and word segmentation is performed on each single-sentence comment. (d) Removal of stop words: Meaningless particles, conjunctions, and similar words are removed from the text, together with platform-related, brand-related, and other words unrelated to product features, according to the language environment of the data source.
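The automatable parts of steps (b) to (d), together with deduplication, can be sketched as follows. This is a minimal illustration under stated assumptions, not the pipeline used in the paper: the stop-word list, the emoji pattern, and the regex-based tokenizer are all hypothetical stand-ins (Chinese text would need a dedicated word segmenter), and step (a) remains a manual filter.

```python
import re

# Hypothetical stop-word list; a real list would be tailored to the platform and language.
STOP_WORDS = {"the", "a", "is", "and", "of", "autohome", "nio"}

# Rough pattern covering common Unicode emoji blocks (an assumption, not exhaustive).
EMOJI_PATTERN = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")


def preprocess(comments):
    """Deduplicate comments, strip emoticons, split sentences, tokenize, drop stop words."""
    seen = set()
    cleaned = []
    for text in comments:
        key = text.strip().lower()
        if not key or key in seen:  # deduplication (case-insensitive)
            continue
        seen.add(key)
        text = EMOJI_PATTERN.sub("", text)  # (b) remove emoticons
        for sentence in re.split(r"[.!?。！？]+", text):  # (c) sentence splitting
            tokens = re.findall(r"\w+", sentence.lower())  # simple word tokenization
            tokens = [t for t in tokens if t not in STOP_WORDS]  # (d) stop-word removal
            if tokens:
                cleaned.append(tokens)
    return cleaned


sample = [
    "The range is great 😀. Seats are comfy!",
    "the range is great 😀. seats are comfy!",  # duplicate after lowercasing
    "NIO of the",  # only stop words, so it is dropped
]
tokens = preprocess(sample)
```

Each surviving sentence becomes one token list, which matches the single-sentence granularity described in step (c).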

Quantification of user need indicators

The implicit nature of user sentiment increases the difficulty of sentiment analysis42. When conducting ABSA of UGC, the first step is to use LDA to identify document topics. After obtaining the "topic-document set" distribution matrix, documents are classified into topics based on the distribution, and then word vector feature extraction is performed on the classified results. Subsequently, LSTM is used for sentiment analysis. User attention reflects the importance of product functions, and user satisfaction reflects the degree to which they meet user needs, so considering both factors together gives a better analysis of user sentiment. User attention is quantified from the word frequency of product feature words. User satisfaction is calculated by passing the LSTM output through a fully connected layer followed by Softmax. Finally, the attention value and satisfaction value are corrected by considering user interaction indicators. The sentiment analysis process is illustrated in Fig. 2.

User needs clustering

The LDA model is a topic model proposed by Blei et al. in 200343. As an unsupervised machine learning method, when a given document set is input into the model, the probability distribution of topics for each document in the document set can be obtained. Based on this probability distribution of topics, tasks such as text classification and topic clustering can be carried out44. The structure of the LDA model is shown in Fig. 3. The description of product features in UGC is diverse. By combining the results of LDA topic classification and manually merging feature words that may express the same user need, a user needs set, denoted \(R\), is established, and each user need is represented by \({R}_{i}\). A product has \(n\) user needs, which can be represented as \(R=\left\{{R}_{1},{R}_{2},\cdots ,{R}_{n}\right\}\). A user need includes \(m\) product features, indexed by \(j=1,2,\cdots ,m\). Each product feature of a user need is represented by \({C}_{i,j}\), and the feature set of the product is represented by \(C=\left\{{C}_{1,1},{C}_{1,2},\cdots ,{C}_{i,j},\cdots ,{C}_{n,m}\right\}.\)
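Classifying documents by their topic distribution can be illustrated with a small sketch. It assumes each document is assigned to its most probable topic, which is one common reading of "classified into topics based on the distribution"; the distribution values and need labels below are made up for illustration.

```python
def assign_topics(doc_topic, need_names):
    """Assign each document to the user need (topic) with the highest probability."""
    groups = {name: [] for name in need_names}
    for doc_id, dist in enumerate(doc_topic):
        best = max(range(len(dist)), key=dist.__getitem__)  # index of the largest probability
        groups[need_names[best]].append(doc_id)
    return groups


# Rows are documents, columns are topics; each row sums to 1 (illustrative values).
doc_topic = [
    [0.7, 0.2, 0.1],
    [0.1, 0.1, 0.8],
    [0.3, 0.6, 0.1],
    [0.5, 0.4, 0.1],
]
needs = ["range", "interior", "price"]  # hypothetical labels for R_1, R_2, R_3
groups = assign_topics(doc_topic, needs)
```

The resulting groups play the role of the classified "topic-document set" that later stages consume.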

Calculation of user attention

According to the clustering results of the LDA topic model, each \({C}_{i,j}\) is mapped to \({R}_{i}\), and the word frequency of product features \({f}_{i,j}^{*}\) is calculated based on TF-IDF values. Considering the impact of user interaction indicators, the corrected product feature word frequency is given by Formula (1). Since the values of user interaction indicators vary greatly and cannot be used directly as evaluation scores, a score is assigned to each interaction indicator to calculate the final score. If a comment containing a product feature word has the corresponding interaction indicator, it is scored 1, otherwise 0. The calculation for the number of likes (Formula (2)) is used as an example. The attention value of the \(i\)-th user need, \({g}_{i}^{*}\), is measured by the sum of the word frequencies of all product features under that user need. To facilitate subsequent use of the data, user attention is standardized. Standardization is a linear transformation based on the mean and standard deviation of the data: after standardization, the data have a mean of 0 and a standard deviation of 1. Because the transformation is linear, it does not change the shape of the distribution, so the result follows a standard normal distribution only if the original data were normally distributed. Standardized data can improve the performance of machine learning and other models and prevent features with a large numerical range from dominating model training. The standardized attention value is denoted \({g}_{i}\) and is calculated as shown in Formula (3).

That is, the score of an interaction indicator is the ratio of its reverse rank to the number of comments that have interaction indicators.
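The standardization of attention values can be sketched as a z-score, assuming Formula (3) takes the usual form \({g}_{i}=\left({g}_{i}^{*}-\overline{g}\right)/\sigma\). Using the population standard deviation is an assumption, and the sample values are illustrative.

```python
from statistics import mean, pstdev


def standardize(values):
    """Z-score standardization: subtract the mean, divide by the standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation (an assumption)
    if sigma == 0:
        return [0.0 for _ in values]  # constant series: no spread to scale by
    return [(v - mu) / sigma for v in values]


attention_raw = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # illustrative g_i* values
attention = standardize(attention_raw)
```

For this sample the mean is 5 and the standard deviation is 2, so the standardized series has mean 0 and standard deviation 1 while keeping the same ordering and shape.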

Calculation of user satisfaction

The "topic-document set" classified by LDA is input into the BERT model. The BERT pre-trained language model, based on a bidirectional Transformer encoder, consists of stacked Transformer units, where the output of one layer serves as the input for the next layer, with each Transformer encoding unit operating independently45. The BERT layer mainly includes two parts: BERT embedding and BERT encoding. The BERT embedding layer is composed of word embeddings, segment embeddings, and position embeddings, which are summed together to achieve text vectorization46. The structure of the BERT model is shown in Fig. 4.

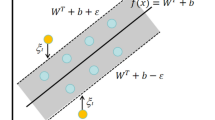

LSTM introduces storage and memory functions based on the Recurrent Neural Network, controlling information input and output through a gating mechanism, selectively forgetting certain information47. An LSTM unit includes an input gate \(i\), a forget gate \(f\), an output gate \(O\), and a memory cell \(c\). The forget gate determines which information should be discarded from the current memory cell. The specific structure is shown in Fig. 5. The specific calculation formula is as follows:
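Assuming the standard LSTM formulation, with \({h}_{t-1}\) the previous hidden state and \({x}_{t}\) the current input (both symbols are assumptions introduced here), the forget gate can be written as:

\[
{f}_{t}=\sigma \left({W}_{f}\cdot \left[{h}_{t-1},{x}_{t}\right]+{b}_{f}\right)
\]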

where \(\sigma\) is the sigmoid function; \({W}_{f}\) represents the weights of the forget gate; \({b}_{f}\) represents the bias of the forget gate.

The input gate determines which information should be added to the current memory cell, with the specific expression as follows:
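In the standard LSTM formulation (assumed here), the input gate \({i}_{t}\) and the candidate memory \({\widetilde{c}}_{t}\) are computed from the previous hidden state \({h}_{t-1}\) and the current input \({x}_{t}\), where \({W}_{i}\), \({W}_{c}\), \({b}_{i}\), and \({b}_{c}\) are the corresponding weights and biases (symbols introduced for illustration):

\[
{i}_{t}=\sigma \left({W}_{i}\cdot \left[{h}_{t-1},{x}_{t}\right]+{b}_{i}\right),\qquad {\widetilde{c}}_{t}=\tanh\left({W}_{c}\cdot \left[{h}_{t-1},{x}_{t}\right]+{b}_{c}\right)
\]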

After the information passes through the forget gate and the input gate, the current memory cell state needs to be updated, calculated as follows:
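Under the same standard formulation (an assumption), the new cell state combines the retained part of the previous state \({c}_{t-1}\), weighted by the forget gate \({f}_{t}\), with the candidate memory \({\widetilde{c}}_{t}\), weighted by the input gate \({i}_{t}\), where \(\odot\) denotes element-wise multiplication:

\[
{c}_{t}={f}_{t}\odot {c}_{t-1}+{i}_{t}\odot {\widetilde{c}}_{t}
\]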

The output gate determines which feature information the current memory cell should output, with the calculation formula as follows:
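In the standard LSTM formulation (assumed here), the output gate \({O}_{t}\) and the hidden state \({h}_{t}\) are computed as follows, where \({W}_{O}\) and \({b}_{O}\) are the weights and bias of the output gate, \({c}_{t}\) is the updated cell state, and \(\odot\) denotes element-wise multiplication (symbols introduced for illustration):

\[
{O}_{t}=\sigma \left({W}_{O}\cdot \left[{h}_{t-1},{x}_{t}\right]+{b}_{O}\right),\qquad {h}_{t}={O}_{t}\odot \tanh\left({c}_{t}\right)
\]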

The output layer feeds the feature vector produced by the LSTM model into a fully connected layer for dimensionality reduction. The fully connected layer outputs one value per sentiment label category, which accomplishes the sentiment label prediction task. Finally, Softmax is used for normalization to obtain the sentiment polarity of the text, which represents the initial user satisfaction score \({Satisfaction}_{Score}\). Additionally, for UGC with additional comments, the LSTM model is used to calculate the additional user satisfaction score \({Satisfaction}_{Score}^{\prime}\). Considering both scores, the final user satisfaction \({s}_{i}^{*}\) is obtained, with the specific calculation formula as follows:

To facilitate subsequent use of the data, user satisfaction is normalized. Normalization maps the data to a specified interval, usually [0, 1]. After normalization, data from different features are dimensionless and share a comparable scale, which makes them convenient to compare and analyze. The normalized satisfaction is denoted \({s}_{i}\) and is given by Formula (11):
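A minimal sketch of this normalization, assuming Formula (11) takes the common min-max form \({s}_{i}=\left({s}_{i}^{*}-{s}_{\min}^{*}\right)/\left({s}_{\max}^{*}-{s}_{\min}^{*}\right)\); the function name and sample scores are illustrative.

```python
def min_max_normalize(values, low=0.0, high=1.0):
    """Map values linearly onto [low, high] using their minimum and maximum."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        return [low for _ in values]  # constant series: map everything to the lower bound
    scale = (high - low) / (v_max - v_min)
    return [low + (v - v_min) * scale for v in values]


satisfaction_raw = [0.2, 0.5, 0.8]  # illustrative s_i* values
satisfaction = min_max_normalize(satisfaction_raw)
```

Unlike z-score standardization, this mapping is bounded, so a single extreme value compresses all other scores towards one end of the interval.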

User needs prediction

Correlation analysis between user needs

CA is utilized to study the relationships between quantitative data, including whether a relationship exists and how close it is48. This analysis aids in understanding the mutual influence between variables, thereby better predicting and explaining phenomena. Correlation coefficients are calculated to determine whether the data are correlated. The correlation coefficient used in this paper is Pearson’s correlation coefficient \(r\). Each correlation coefficient is denoted \({r}_{o}\), with \({r}_{o}\in \left\{{r}_{1},{r}_{2},\cdots ,{r}_{{n}^{2}}\right\}\), and its calculation formula is:
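Pearson’s coefficient for two series \(x\) and \(y\) is the sum of products of their deviations from the mean, divided by the square root of the product of their sums of squared deviations. A minimal sketch follows; the function name and sample series are illustrative and not taken from the case study.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long series."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("series must have the same length of at least 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


attention_a = [1.0, 2.0, 3.0, 4.0]  # illustrative indicator series
attention_b = [2.0, 4.0, 6.0, 8.0]  # moves with attention_a
attention_c = [8.0, 6.0, 4.0, 2.0]  # moves against attention_a
r_ab = pearson_r(attention_a, attention_b)
r_ac = pearson_r(attention_a, attention_c)
```

Pairs of need indicators whose coefficient is large in absolute value are the candidates for joint input to the VAR model.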

VAR model for user needs prediction

The VAR model is an econometric model for systems composed of multiple interrelated variables, and it does not require deciding in advance whether a variable is exogenous or endogenous. This paper uses the VAR model because it can both study the interrelationships between the influencing variables and predict their development trends49. The expression of a VAR model with \(N\) variables and lag order \(k\) is:
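In its standard form, which matches the first-order example used in the stability step, the model reads:

\[
{Y}_{t}=\mu +{\Pi }_{1}{Y}_{t-1}+{\Pi }_{2}{Y}_{t-2}+\cdots +{\Pi }_{k}{Y}_{t-k}+{u}_{t},\qquad {u}_{t}\sim \mathrm{IID}\left(0,\Omega \right)
\]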

where \({Y}_{t}\) is an \(N\times 1\) time series column vector, \({Y}_{t}=\left({g}_{1,t},{g}_{2,t},\cdots ,{g}_{n,t}\right)\cup \left({s}_{1,t},{s}_{2,t},\cdots ,{s}_{n,t}\right)\), \(\mu\) is an \(N\times 1\) constant term column vector, \({\Pi }_{1},\cdots ,{\Pi }_{k}\) are \(N\times N\) parameter matrices, and \({u}_{t}\sim \mathrm{IID}\left(0,\Omega \right)\) is an \(N\times 1\) random error column vector. The elements of \({u}_{t}\) are not autocorrelated, but the random error terms of different equations may be correlated with each other.

The VAR model construction process for user needs prediction is as follows:

(1) Unit root test. The unit root test checks the stationarity of the time series. A VAR model can only be established on stationary series. This paper uses the Augmented Dickey-Fuller (ADF) test to check the stationarity of the user needs time series. To illustrate briefly with a regression having two lagged difference terms (values of the previous two periods):
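Assuming the usual ADF regression with a constant and a time trend, and assuming the ordering of the coefficients shown here, the test regression is:

\[
\Delta {Y}_{t}={\beta }_{1}+{\beta }_{2}t+{\beta }_{3}{Y}_{t-1}+{\beta }_{4}\Delta {Y}_{t-1}+{\beta }_{5}\Delta {Y}_{t-2}+{\varepsilon }_{t}
\]

Under this form, the null hypothesis of a unit root corresponds to \({\beta }_{3}=0\), and rejecting it indicates that the series is stationary.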

where \(\Delta {Y}_{t}\) is the first difference of the sequence, \({Y}_{t-1}\) is the first lag of the sequence, \(\Delta {Y}_{t-1}\) and \(\Delta {Y}_{t-2}\) are the first and second lagged difference terms, \({\beta }_{1}\), \({\beta }_{2}\), \({\beta }_{3}\), \({\beta }_{4}\), \({\beta }_{5}\) are coefficients (including the constant term), \({\varepsilon }_{t}\) is the random error term, and \(t\) is the time trend term.

(2) Select the lag order. In addition to meeting the stationarity condition, establishing a VAR model requires an appropriate lag order \(k\). If the lag order is too small, the error term will be strongly autocorrelated, leading to inconsistent parameter estimates. Increasing \(k\) appropriately can eliminate the autocorrelation in the error term, but \(k\) should not be too large either: too large a value reduces the degrees of freedom and directly affects the efficiency of the parameter estimates. This paper selects \(k\) by jointly considering the Akaike Information Criterion (AIC), Bayesian Information Criterion (BIC), Final Prediction Error Criterion (FPE), and Hannan-Quinn Information Criterion (HQIC).

(3) Judge model stability. The necessary and sufficient condition for the stability of the VAR model is that all eigenvalues of the companion matrix lie inside the unit circle. For example, for the first-order model \({Y}_{t}=\mu +{\Pi }_{1}{Y}_{t-1}+{u}_{t}\), for which \(A\left(L\right)=\left(I-{\Pi }_{1}L\right)\), the model is stable if the roots of the characteristic equation \(\left|{\Pi }_{1}-\lambda I\right|=0\), that is, the eigenvalues of \({\Pi }_{1}\), are all inside the unit circle.
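For a two-variable, first-order model the stability condition can be checked by hand. The sketch below computes the eigenvalues of a 2×2 coefficient matrix from its trace and determinant and tests whether their moduli are below 1; the matrices are illustrative and not estimated from the case data.

```python
import cmath


def eigenvalues_2x2(matrix):
    """Eigenvalues of a 2x2 matrix from lambda^2 - trace*lambda + det = 0."""
    (a, b), (c, d) = matrix
    trace = a + d
    det = a * d - b * c
    disc = cmath.sqrt(trace * trace - 4 * det)  # complex sqrt handles complex roots
    return (trace + disc) / 2, (trace - disc) / 2


def is_stable(matrix):
    """A VAR(1) model is stable when every eigenvalue lies inside the unit circle."""
    return all(abs(lam) < 1 for lam in eigenvalues_2x2(matrix))


pi_stable = [[0.5, 0.1], [0.2, 0.3]]    # illustrative coefficient matrix
pi_unstable = [[1.1, 0.0], [0.0, 0.5]]  # one eigenvalue equals 1.1
stable_ok = is_stable(pi_stable)
unstable_ok = is_stable(pi_unstable)
```

For larger systems or higher lag orders, the same test applies to the eigenvalues of the companion matrix, which would normally be computed with a numerical linear algebra library.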

(4) Granger causality test. The Granger causality test is used to test whether there is a causal relationship between the endogenous variables in the VAR model. The null hypothesis is that variable \(x\) does not Granger-cause variable \(y\), and the alternative hypothesis is that variable \(x\) Granger-causes variable \(y\).

(5) Impulse response and variance decomposition. Impulse response analysis examines the dynamics of the whole model: it traces the short-term and long-term effects on the system when one of the influencing factors changes suddenly. Variance decomposition analyses the extent to which changes in one endogenous variable contribute to changes in the entire system or in a particular endogenous variable.

(6) Forecasting. When making forecasts, introducing too few or too many influencing factors can lead to inaccurate forecast results. Therefore, the user needs data with mutual influence obtained from the above correlation analysis are input into the model one by one to predict the future development trend of user needs.
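Once the coefficients have been estimated, multi-step forecasts follow by iterating the model equation with the error term set to its expected value of zero. The sketch below does this for a first-order model; the constant vector, coefficient matrix, and starting values are illustrative rather than estimated from the case data.

```python
def var1_forecast(mu, pi1, y_last, steps):
    """Iterate Y_{t+1} = mu + Pi_1 * Y_t for the requested number of steps."""
    forecasts = []
    y = list(y_last)
    for _ in range(steps):
        y = [
            mu[i] + sum(pi1[i][j] * y[j] for j in range(len(y)))
            for i in range(len(mu))
        ]
        forecasts.append(y)
    return forecasts


mu = [0.1, 0.2]                   # illustrative constant terms
pi1 = [[0.5, 0.1], [0.2, 0.3]]    # illustrative coefficient matrix
y_last = [1.0, 2.0]               # last observed values of two need indicators
path = var1_forecast(mu, pi1, y_last, steps=2)
```

Each forecast feeds the next step, so forecast errors accumulate with the horizon, which is one reason a residual correction step is useful.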

Markov model improves the predicted value

The Markov model is a prediction method based on Markov chains, suitable for predicting event sequences with time dependence. The core idea of this model is "memorylessness," meaning that the future state depends only on the current state and is independent of all previous historical states. This characteristic makes the Markov model particularly suitable for analyzing and predicting time series data, especially in areas such as financial market analysis, speech recognition, and natural language processing50. A Markov model is established for the residual sequence of the user needs attention and satisfaction values predicted by the VAR model. The specific steps are as follows:

(1) Determine the state intervals. The residual sequence between the predicted values of the VAR model and the true values is divided into state intervals. If the number of intervals is too small, residuals with large differences may be assigned to the same state, which loses error information and fails to reflect the deviation of the model’s predictions accurately. If there are too many intervals, the data in each interval may be too sparse to estimate the probabilities and transitions of each interval reliably, which also affects prediction accuracy. Predictions were attempted with different numbers of intervals. Balancing prediction accuracy against computational complexity, the residual sequence is divided into four state intervals of equal length, that is, \(E=\left\{1,2,3,4\right\}\), and each residual state interval has its corresponding upper and lower limits, that is:

where \({E}_{i}\) indicates the \(i\) th residual state interval; \({a}_{i}\) and \({b}_{i}\) indicate the upper and lower limits of the \(i\) th residual state interval, respectively.

(2) Determine the initial state probability. At the initial state \((k=0)\), the probability of each residual state occurring is the initial state probability, represented by \({\pi }_{i}\left(0\right)\), and its calculation formula is:

\({\pi }_{i}\left(0\right)=\frac{{N}_{i}}{N}\)

where \(N\) indicates the total number of residuals in the residual sequence, and \({N}_{i}\) indicates the number of residuals that fall in state \({E}_{i}\).

(3) Compute the state transition probability matrix. Computing the state transition probability matrix \(P\) means finding the probability \({P}_{i,j}\) \((i,j=1,2,\cdots ,n)\) of each state transitioning to every state. Each \({P}_{i,j}\) is estimated by using frequency to approximate probability, and the formulas for \({P}_{i,j}\) and \(P\) are as follows:

\({P}_{i,j}=\frac{{M}_{i,j}}{{M}_{i}}\)

\(P=\left(\begin{array}{ccc}{P}_{1,1}& \cdots & {P}_{1,n}\\ \vdots & \ddots & \vdots \\ {P}_{n,1}& \cdots & {P}_{n,n}\end{array}\right)\)

where \({M}_{i,j}\) indicates the number of times state \({E}_{i}\) transitions to state \({E}_{j}\), and \({M}_{i}\) indicates the total number of occurrences of state \({E}_{i}\).

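(4) Improve the predicted value. After the \(t\)th forecast period, the probability of being in residual state interval \({E}_{i}\) is \({\pi }_{i}\left(t\right)\). According to the properties of the Markov model, it is calculated as:

\(\pi \left(t\right)=\pi \left(0\right){P}^{t}\)

where \(\pi \left(t\right)=\left({\pi }_{1}\left(t\right),\cdots ,{\pi }_{n}\left(t\right)\right)\) is the vector of state probabilities after \(t\) periods.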

The residual state interval with the maximum probability after the \(t\)th forecast period is then identified and used to correct the forecast value. The calculation formula is:

where \({R}_{i}^{\prime}(t)\) denotes the value predicted by the VAR model for the \(t\)th period and \({R}_{i}(t)\) denotes the value after correction with the Markov model.
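Steps (1) to (4) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation used in this paper: all function names are hypothetical, residuals are assumed to be actual minus predicted values, transition frequencies are normalized by the number of outgoing transitions so that each row of the matrix sums to one, and the correction adds the midpoint of the most probable residual interval to the VAR forecast, which is one common convention.

```python
from collections import Counter


def state_intervals(residuals, n_states=4):
    """Split the residual range into n_states intervals of equal length."""
    low, high = min(residuals), max(residuals)
    width = (high - low) / n_states
    return [(low + k * width, low + (k + 1) * width) for k in range(n_states)]


def assign_states(residuals, intervals):
    """Return the 0-based index of the interval containing each residual."""
    low = intervals[0][0]
    width = intervals[0][1] - low
    last = len(intervals) - 1
    if width == 0:  # all residuals identical: everything falls in state 0
        return [0 for _ in residuals]
    return [min(int((r - low) / width), last) for r in residuals]


def initial_probabilities(states, n_states):
    """Relative frequency of each state in the residual sequence."""
    counts = Counter(states)
    return [counts[i] / len(states) for i in range(n_states)]


def transition_matrix(states, n_states):
    """Estimate P[i][j] from the observed transition frequencies."""
    counts = [[0] * n_states for _ in range(n_states)]
    for current, following in zip(states, states[1:]):
        counts[current][following] += 1
    matrix = []
    for row in counts:
        total = sum(row)  # assumption: normalize by outgoing transitions
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix


def step(pi, matrix):
    """One Markov step: return the row vector pi multiplied by the matrix."""
    n = len(pi)
    return [sum(pi[i] * matrix[i][j] for i in range(n)) for j in range(n)]


def correct_forecast(var_forecast, pi_t, intervals):
    """Shift the VAR forecast by the midpoint of the most probable interval."""
    best = max(range(len(pi_t)), key=pi_t.__getitem__)
    lower, upper = intervals[best]
    return var_forecast + (lower + upper) / 2  # assumed correction rule
```

Applying `step` repeatedly gives the state probabilities several periods ahead, and `correct_forecast` is then called once per forecast period.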

Verification of prediction results

To address the potential bias in predicting user needs using UGC and the vagueness in product design improvement strategies, traditional methods of obtaining user needs are used for verification, and specific design improvement strategies are proposed. The A-Kano model expands the traditional Kano model by introducing Kano indices, Kano allocators, and configuration coefficients24. It also adds an evaluation indicator for "user’s perception of the importance of needs" in the Kano questionnaire, thereby presenting the priority of user needs more scientifically. The specific steps for using the A-Kano model to categorize user needs are as follows:

(1) Develop a questionnaire. Based on the high-frequency product feature words in UGC, use the Affinity Diagram method to determine the content of the questionnaire. At the same time, use asymmetric scaling to assign values to the questionnaire options to achieve quantification of user satisfaction, using a 10-level equal interval scale to quantify the respondents’ attention to a certain need.

(2) Quantification of need indicators. If the set of user needs is \(R=\left\{{r}_{i}|1,2,\cdots ,i\right\}\), the set of respondents is \(T=\left\{{t}_{j}|1,2,\cdots ,j\right\}\), then the evaluation set of respondents for the needs is \({e}_{ij}=\left({x}_{ij},{y}_{ij},{w}_{ij}\right)\), where \({x}_{ij}\) indicates the user’s score when the need is not available, \({y}_{ij}\) indicates the user’s score when the need is available, and \({w}_{ij}\) indicates the user’s score for the importance of the need. To avoid the effect of extreme values, the mean value of the ratings when the need is not available \(\overline{{X}_{i}}\) and the mean value of the ratings when the need is available \(\overline{{Y}_{i}}\) are calculated.

where \(j\) indicates the number of respondents, \(w=\sum_{j=1}^{j}{w}_{ij}\).

The A-Kano model characterizes the user’s preference for need \({r}_{i}\) with vector \(\overrightarrow{{\gamma }_{i}}\), i.e., \({r}_{i}\sim \overrightarrow{{\gamma }_{i}}\equiv \left({\gamma }_{i},{\alpha }_{i}\right)\), where \({\gamma }_{i}\) is the magnitude of vector \(\overrightarrow{{\gamma }_{i}}\), which represents the importance index, and \({\alpha }_{i}\) is the angle between vector \(\overrightarrow{{\gamma }_{i}}\) and the horizontal axis, which represents the satisfaction index. Their calculation formulas are:

(3) Categorization of need attributes. The A-Kano model classifies user needs into four categories: Attractive, One-dimensional, Must-be, and Indifferent, based on the following rules:

Based on the need classification criteria, the distance interval of \({\gamma }_{i}\), and the angular range of \({\alpha }_{i}\), a need distribution map can be drawn.

(4) Calculation of need priority. Different attributes of user needs imply different priorities, i.e., Must-be > One-dimensional > Attractive > Indifferent. However, needs of the same type must also be prioritized, which the A-Kano model does through the configuration coefficient:

where \({\rho }_{i}\) is the configuration coefficient for need \({r}_{i}\). A larger value of \({\rho }_{i}\) indicates that need \({r}_{i}\) has a higher priority among the needs with the same attribute.
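The quantification and categorization steps can be illustrated with a short Python sketch. Because the exact formulas are not reproduced in this section, several points are assumptions made only for illustration: the means are taken as importance-weighted averages normalized by the sum of the weights, the magnitude and angle are computed from those means in the usual polar form, and the thresholds `gamma_0`, `alpha_low`, and `alpha_high` as well as the ordering of the rules are hypothetical placeholders. The configuration coefficient is not covered.

```python
import math


def kano_vector(x_scores, y_scores, weights):
    """Return (gamma, alpha in degrees) for one need.

    x_scores: ratings when the need is not available
    y_scores: ratings when the need is available
    weights:  importance ratings given by the same respondents
    """
    w = sum(weights)
    # Assumed form: importance-weighted means of the two rating series.
    x_bar = sum(wj * xj for wj, xj in zip(weights, x_scores)) / w
    y_bar = sum(wj * yj for wj, yj in zip(weights, y_scores)) / w
    gamma = math.hypot(x_bar, y_bar)                    # vector magnitude
    alpha = math.degrees(math.atan2(y_bar, x_bar))      # angle to the x-axis
    return gamma, alpha


def classify(gamma, alpha, gamma_0, alpha_low, alpha_high):
    """Assign a Kano category from hypothetical magnitude/angle thresholds."""
    if gamma <= gamma_0:
        return "Indifferent"
    if alpha <= alpha_low:
        return "Must-be"
    if alpha <= alpha_high:
        return "One-dimensional"
    return "Attractive"
```

In practice the thresholds would be taken from the classification rules of the A-Kano model rather than chosen freely.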

Case studies

In recent years, SUVs have emerged as a popular vehicle choice among consumers, leading to a continuous rise in their sales51. Understanding future user needs for SUVs is crucial for automotive companies. Influenced by the new Moore’s Law, emerging players in car manufacturing are consistently shortening product iteration cycles52, with some models undergoing facelifts in less than 12 months. As a leading automotive internet company in China, Autohome’s platform has a vast amount of user data, covering users of all ages and consumption levels. These data provide valuable information resources for the platform. This paper selects the UGC related to the NIO EC6 from “Autohome” for case analysis and proposes strategic recommendations for optimizing the design of the NIO EC6 to verify the feasibility of the proposed methodology. First, Python is used to crawl and process the UGC of the NIO EC6. Then, the LDA is used to extract and classify product features and summarize user needs. Next, the BERT-LSTM model is used to quantify user need indicators, calculating user attention and satisfaction. Finally, the CA-VAR-Markov model for user needs prediction is established to predict the changing trends of user needs for the NIO EC6, and the A-Kano model is used to verify the forecast results and propose specific strategies for design optimization.

Mining and processing UGC for the NIO EC6

The UGC of the NIO EC6 is crawled first. The crawling time range is from July 2021 to June 2024, a total of 36 months of data, and 5,268 pieces of UGC are crawled, including original reviews, view counts, like counts, the number of additional reviews, and the content of additional reviews. When screening UGC samples, in addition to manually removing content irrelevant to the NIO EC6 and emoticons, more stringent screening criteria are formulated. A review must contain at least 30 words to ensure that it carries sufficient user-need information. At the same time, reviews containing keywords such as “need”, “improvement”, and “hope” are preferentially retained, as these reviews are more likely to directly reflect users’ needs and expectations for the product, thus improving the relevance of the samples to the research topic. After screening, 5,230 pieces of UGC remain. Regular expressions written in Python are used to remove emoticons, and Jieba is then used for word segmentation. To achieve more accurate word segmentation, the Language Technology Platform developed by the Social Computing and Information Retrieval Research Center of Harbin Institute of Technology is further used for word segmentation processing. For stop words, a comprehensive stop word library is constructed from the Chinese general stop word list in combination with the characteristics of the automotive field. The difference set operation is performed between the set of segmented words and the set of stop words, which removes stop words such as “and”, platform words like “Autohome”, and product names such as “NIO”, all of which are irrelevant to product features. An example of the UGC processing results is shown in Table 1. The data is then divided into 18 periods of two months each.
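The cleaning pipeline described above can be sketched as follows. This is only an illustration: the emoji character ranges are a rough assumption rather than the pattern used in this paper, the length check counts characters as a simple proxy for the 30-word threshold, and the tokenizer is passed in as a parameter so that a segmenter such as `jieba.lcut` can be supplied in practice. The function name `preprocess` is hypothetical.

```python
import re

# Rough emoji and pictograph ranges (an assumption, not the authors' pattern).
EMOJI_PATTERN = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")


def preprocess(reviews, tokenize, stop_words, min_length=30):
    """Deduplicate, strip emoji, filter short reviews, segment, drop stop words.

    reviews:    iterable of raw review strings
    tokenize:   callable mapping a string to a list of tokens
    stop_words: set of tokens to discard
    min_length: minimum review length (characters used as a proxy)
    """
    seen = set()
    processed = []
    for text in reviews:
        text = EMOJI_PATTERN.sub("", text).strip()
        if len(text) < min_length or text in seen:
            continue  # too short, or a duplicate of an earlier review
        seen.add(text)
        tokens = [t for t in tokenize(text) if t.strip() and t not in stop_words]
        processed.append(tokens)
    return processed
```

Keeping the tokenizer as an argument makes it easy to compare different segmenters on the same cleaned text.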

Quantifying user need indicators for the NIO EC6

User needs clustering for the NIO EC6

After conducting LDA topic analysis with a Python program, the distribution probability of each document within the document set corresponding to various topics was obtained, facilitating the subsequent classification of documents by topic. Several parameters must be set when using LDA, including the number of topics \(K\), Dirichlet prior parameters \(\alpha\) and \(\beta\), the number of topic display feature words \(W\), and the maximum number of iterations. This study uses default settings, that is, \(\alpha\) is 0.1, \(\beta\) is 0.01, the number of iterations is 3000, and \(W\) is 100. The number of topics \(K\) is determined through multiple experiments with \(K\in \{2,3,4,\ldots ,10\}\). After manually checking the words under each topic, it was found that \(K=5\) gave the best topic classification, meeting expectations. The product features mapped to user needs were identified based on the probability distribution of keywords across topics. The 50 most frequent product features were categorized into five user needs: Space (\({R}_{1}\)), Configuration (\({R}_{2}\)), Energy Consumption (\({R}_{3}\)), Operation (\({R}_{4}\)), and Decoration (\({R}_{5}\)), as shown in Table 2.

User attention calculations for the NIO EC6

User attention towards a specific need is quantified by summing the term frequencies of all product feature keywords associated with that need, represented by TF-IDF. TF-IDF is a widely used method for assessing keyword importance, consisting of two components: TF, which represents the frequency of a word in a document, and IDF, which measures the general significance of a word. The importance of a word to a document or corpus is evaluated by multiplying TF and IDF. The higher the TF-IDF value, the more significant the word is within the document53. The attention values for each user need are calculated according to Formula (5), as shown in Table 3. As can be seen from the data in the table, users pay the most attention to \({g}_{3}\). This may be because the automotive industry is developing toward new energy, and the energy consumption index is one of the core criteria for measuring the performance of new energy vehicles. Because the NIO EC6 is an electric vehicle, users naturally expect it to have low energy consumption and a long cruising range. Users pay relatively less attention to \({g}_{4}\). This may be because users can quickly get familiar with and adapt to the vehicle’s operation functions; as long as there are no obvious defects in operation, it will not attract special attention from users. A network relationship diagram is a tool that visually presents various elements and their interrelationships in graphical form. The network relationship diagram of product feature words is drawn using the NetworkX and matplotlib libraries, as shown in Fig. 6. It can be seen from the figure that the keywords are interrelated, which supports the necessity of considering the mutual influence among needs when predicting user needs.
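The idea of summing keyword TF-IDF values per need can be sketched as below. This is a simplified illustration and not Formula (5): it uses the plain variant with TF as relative frequency and IDF as \(\log(N/\mathrm{df})\), it ignores any interaction indicators, and the function name and data layout are hypothetical.

```python
import math
from collections import Counter


def tfidf_attention(documents, need_keywords):
    """Sum TF-IDF over the keywords of each need.

    documents:     list of token lists (one list per review)
    need_keywords: dict mapping a need label to a set of feature keywords
    """
    n_docs = len(documents)
    doc_freq = Counter()
    for tokens in documents:
        doc_freq.update(set(tokens))  # count each word once per document

    attention = {need: 0.0 for need in need_keywords}
    for tokens in documents:
        if not tokens:
            continue
        counts = Counter(tokens)
        for need, keywords in need_keywords.items():
            for word in keywords:
                if word in counts:
                    tf = counts[word] / len(tokens)
                    idf = math.log(n_docs / doc_freq[word])
                    attention[need] += tf * idf
    return attention
```

With this plain IDF, a keyword that appears in every document contributes zero, so smoothed variants are often preferred on small corpora.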

User satisfaction calculations for the NIO EC6

To calculate user satisfaction, the topic documents classified by LDA are processed using the Hugging Face open-source pre-trained BERT model. This model produces 768-dimensional word vectors, uses 12 Transformer layers, employs the ReLU activation function, and has a maximum training sequence length of 256. The model has about 110 million parameters in total. The experiment uses the AdamX optimizer, a learning rate of \(2\times {10}^{-5}\), a training batch size of 16, and the cross-entropy loss function. The LSTM hidden layer size is 768, the dropout rate is 0.2, the number of memory layers is 2, and the training set and test set ratio is 8:2. The emotional scores of the original UGC and additional UGC of the NIO EC6 are normalized by Softmax, and the final user satisfaction value is obtained according to Formula (11), as shown in Table 4. As can be seen from the data in the table, users have the highest satisfaction with \({s}_{3}\). Decoration is a need that users can directly see and touch. Its effect is intuitive and obvious, making it easy to leave a deep impression on users. When the vehicle’s decoration meets users’ aesthetic expectations, they will quickly develop a favorable impression and satisfaction. Users’ satisfaction with \({s}_{4}\) is relatively low. This may be due to some deficiencies in the operation functions of the NIO EC6, which affect the user experience. For example, the interaction design of the in-car system is not user-friendly enough, resulting in cumbersome operation and slow response; or some driver-assistance functions are not intelligent enough to meet users’ needs well, thus reducing users’ satisfaction with the operation.

Predicting user need for the NIO EC6

After obtaining the user need indicators for 18 periods, a correlation analysis is conducted on the need indicators within these 18 periods. The correlated need indicators are grouped and used as the input for the subsequent VAR model. Because the Markov model is used to correct the predicted indicators, the residual sequence must be obtained first. The need indicator data of periods 1 to 8 are input into the VAR model to predict the need indicators for the 10 periods from 9 to 18, and the predicted values are compared with the actual values to obtain the residual sequence. Finally, the need indicators of all 18 periods are input into the VAR model by group to predict the indicator values for the next 6 periods, which are corrected according to the residual sequence to obtain the final predicted values. The schematic diagram of the prediction is shown in Fig. 7.

Correlation analysis to determine prediction groups

Before employing the VAR model to predict user needs, CA is conducted to determine the relationships between the attention and satisfaction of various user needs. This paper utilizes SPSS 26.0 for analysis, calculating correlation coefficients using Pearson, Spearman, and Kendall’s tau methods. Table 5 displays the results obtained using Spearman’s method. The data indicate that the p-values between the attention and satisfaction values of user needs are predominantly less than 0.01, with all correlation coefficients being positive, signifying a positive correlation between them. To ensure accurate predictions, the input data for the VAR model should not be excessive. Based on the correlation coefficients, the attention and satisfaction of user needs are grouped into four categories for input into the VAR model. The groups are formed as follows: \(\left({g}_{1},{g}_{2},{g}_{3}\right)\), \(\left({g}_{4},{g}_{5}\right)\), \(\left({s}_{1},{s}_{2}\right)\), and \(\left({s}_{3},{s}_{4},{s}_{5}\right)\).
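For readers who want to reproduce a rank correlation outside SPSS, the following pure-Python sketch computes a Spearman coefficient as the Pearson correlation of average ranks. It is an illustration only: the function names are hypothetical, no p-value is computed, and a constant series would cause a division by zero.

```python
def ranks(values):
    """Average 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    std_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (std_x * std_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))
```

Indicator series whose pairwise coefficients are high and significant would then be placed in the same input group for the VAR model.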

VAR-Markov model prediction

Utilizing the group relationships, the VAR model is employed to predict six periods into the future. Taking the first group as an example, the lag order is selected as two lags. The Granger causality test shows that there is a long-term cointegration relationship among the variables, and there is no spurious regression. The AR root test shows that all variable roots are within the unit circle, and the model is in a stable state. The final forecast results are shown in Table 6.

To facilitate the subsequent improvement of the forecast results by the Markov model, taking \({g}_{1}\) as an example, the VAR model uses data from the first eight periods as raw data to predict the values for the next ten periods. The forecast results are compared with the actual values, and the residuals are calculated. The residuals are divided into 4 equal state intervals. The 4 residual state intervals for \({g}_{1}\) are \({E}_{{g}_{1}^{1}}(-0.0065,-0.0052)\), \({E}_{{g}_{1}^{2}}(-0.0052,-0.0039)\), \({E}_{{g}_{1}^{3}}(-0.0039,-0.0026)\), and \({E}_{{g}_{1}^{4}}(-0.0026,-0.0013)\). The residual state intervals for \({g}_{1}\) in the 10 periods are \({E}_{{g}_{1}^{4}}\), \({E}_{{g}_{1}^{3}}\), \({E}_{{g}_{1}^{4}}\), \({E}_{{g}_{1}^{3}}\), \({E}_{{g}_{1}^{2}}\), \({E}_{{g}_{1}^{2}}\), \({E}_{{g}_{1}^{3}}\), \({E}_{{g}_{1}^{1}}\), \({E}_{{g}_{1}^{3}}\), and \({E}_{{g}_{1}^{1}}\). The specific results are shown in Table 7.

Based on the data in Table 7, the initial probabilities of each state \({\pi }_{{g}_{1}}\left(0\right)=\left(\frac{1}{5},\frac{1}{5},\frac{2}{5},\frac{1}{5}\right)\) for \({g}_{1}\) are calculated according to Formula (14). The state transition probability matrix \({P}_{{g}_{1}}\) for \({g}_{1}\) is calculated according to Formulas (15) and (16).

From Table 6, the VAR model’s one-period-ahead predicted value \({g}_{1}(19)\) is 0.015. At this point, the probability distribution over the residual state intervals is \({\pi }_{{g}_{1}}\left(1\right)={\pi }_{{g}_{1}}\left(0\right){P}_{{g}_{1}}=\left(\frac{1}{10},\frac{1}{5},\frac{1}{2},\frac{1}{5}\right)\), which shows that \({g}_{1}(19)\) is most likely to be in the residual state interval \({E}_{{g}_{1}^{3}}\). The VAR-predicted value of \({g}_{1}\) is corrected on this basis, using the formula:

Similarly, the attention and satisfaction values of other user needs are corrected, and the results are shown in Table 8.

Validating the predictions of the NIO EC6

A-Kano model to deeply analyze the user needs of NIO EC6

To test the accuracy of predicting user needs through UGC, the traditional user need-obtaining method, the Kano model, is used for verification. Based on the high-frequency product feature words in the NIO EC6 UGC, such as “cruising range”, “interior”, “brakes”, etc., the KJ method is adopted to determine the content of the questionnaire. The questionnaire covers various aspects of vehicle needs, including vehicle space, configuration, energy consumption, operation, and decoration. The asymmetric scaling method is used to assign values to the questionnaire options. A 10-level equidistant scale is used to quantify the subjects’ attention to a certain need, ranging from 1 point (completely not concerned) to 10 points (extremely concerned). For the satisfaction evaluation, a 10-level scale is also used, with 1 point indicating very dissatisfied and 10 points indicating very satisfied. This quantifies users’ attitudes towards different needs more precisely. Five automotive design experts were invited to conduct a preliminary evaluation and optimization of the questionnaire to ensure that the questions accurately reflect user needs. The questionnaire was distributed to 100 respondents, including 10 teachers and 20 students majoring in transportation design; the remaining 70 respondents were all NIO EC6 owners. Such a sample composition takes into account both professional opinions and actual user experiences. After collating and analyzing the questionnaire results, a need distribution map is drawn according to the need attribute classification rules of the A-Kano model, and the user need priority is calculated according to Formula (25). The need distribution map is shown in Fig. 8. As can be seen from the figure, \({R}_{8}\), \({R}_{9}\), \({R}_{14}\), and \({R}_{15}\) are Attractive needs, \({R}_{1}\), \({R}_{2}\), \({R}_{3}\), \({R}_{7}\), and \({R}_{12}\) are One-dimensional needs, and \({R}_{4}\), \({R}_{5}\), \({R}_{6}\), \({R}_{10}\), \({R}_{11}\), and \({R}_{13}\) are Must-be needs. A comparative analysis is carried out between the user needs predicted based on UGC and the user needs obtained based on the A-Kano model, as shown in Table 9. From the data in the table, it can be seen that Must-be needs are mostly concentrated in \({R}_{2}\) and \({R}_{4}\), where attention shows an upward trend and satisfaction shows a downward trend. One-dimensional needs are concentrated in \({R}_{1}\), where both attention and satisfaction show a downward trend. Attractive needs are concentrated in \({R}_{3}\) and \({R}_{5}\), where both attention and satisfaction show an upward trend.

Model verification

To further assess the accuracy of the prediction model, the LSTM forecast model and the Autoregressive Integrated Moving Average (ARIMA) model are selected for a comparative analysis of forecast accuracy. Taking \({s}_{1}\) as an example, Fig. 9 illustrates the comparison between the actual values and the forecasted values from each model. As can be seen from the figure, the curve of predicted values of the CA-VAR-Markov model is closer to the curve of true values. The mean absolute percentage error (MAPE), root mean squared error (RMSE), and mean absolute error (MAE) are employed to evaluate the forecast accuracy. The forecast accuracy metrics for the three models are summarized in Table 10. The smaller the values of MAPE, RMSE, and MAE, the closer the forecast values align with the actual values, indicating higher model accuracy. The CA-VAR-Markov model, which considers the interrelationships between variables, demonstrates superior predictive capabilities for future development trends. Its average MAE values for user attention and user satisfaction are 0.0032 and 0.1857, respectively, which are the lowest among the three models, signifying that the CA-VAR-Markov model exhibits high forecast accuracy and meets practical application needs. At the same time, the computational efficiency of the model and its adaptability to different data distributions are also important criteria for evaluating the model’s performance. Computational efficiency is measured by recording the running time of each model during training and prediction, as well as resources such as occupied memory space. The calculation process of the CA-VAR-Markov model is relatively complex. As the number of variables increases, the computational load will increase, and the computational efficiency may decrease. Due to its complex network structure and large number of parameters, the LSTM model requires a relatively long time for training and prediction, resulting in high computational costs. The ARIMA model has a relatively simple calculation process and high computational efficiency, but its accuracy is relatively low. Adaptability to different data distributions is assessed by analyzing data distributions with different characteristics and observing the performance changes of each model. The CA-VAR-Markov model has strong adaptability to data distributions and can handle various types of data distributions. During the experiment, the LSTM model encountered the problem of overfitting, which affected the model’s adaptability. The ARIMA model is suitable for data with a stationary and normal distribution.
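The three accuracy metrics used in the comparison follow their standard definitions and can be computed as in the sketch below. The function names are illustrative, MAPE is expressed in percent, and the actual values are assumed to be nonzero.

```python
import math


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent (actual values nonzero)."""
    ratios = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100 * ratios / len(actual)
```

Because MAPE divides by the actual value, it is scale-free, whereas MAE and RMSE are in the units of the indicator; this is why MAE values for attention and satisfaction are not directly comparable.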

Discussion

This paper employs the CA-VAR-Markov model to predict user needs from UGC and utilizes the A-Kano model for an in-depth analysis to propose optimization design strategies for the NIO EC6. The predictions indicate that \({g}_{2}\) and \({g}_{4}\) are expected to rise in the next six periods, while \({s}_{2}\) and \({s}_{4}\) are anticipated to decline. Moreover, Must-be needs are predominantly concentrated in \({R}_{2}\) and \({R}_{4}\) where attention is increasing and satisfaction is decreasing, signaling that the current offerings in these areas no longer meet user expectations. It is recommended that the company enhance its research and development efforts in these areas. Specifically, prioritizing the optimization of the braking system in terms of Configuration and reducing cabin noise are suggested. For Operation, simplifying the human–machine interaction process to decrease the learning curve for users is essential. Additionally, optimizing the power mode is necessary. In terms of \({R}_{1}\), targeted adjustments are needed, particularly ensuring ample storage space in the vehicle’s trunk, as this is a significant user concern. Finally, for \({R}_{3}\) and \({R}_{5}\), the A-Kano model analysis indicates that many corresponding needs are Attractive needs. Meeting these functional needs could substantially boost user satisfaction. Therefore, it is advised that the NIO EC6 continues to enhance its endurance capabilities while maintaining the current battery-swapping policy and diversifies color options for interior and exterior designs to enrich personalization.

Conclusion

In this paper, the LDA topic model is applied to cluster product features in UGC to obtain user needs. Taking user interaction indicators into account, the attention degree of user needs is calculated from the keyword frequencies obtained by TF-IDF. The BERT-LSTM model is adopted to calculate the satisfaction degree of user needs. Finally, user need indicators are input into the CA-VAR-Markov model to predict the development trend of user needs. The A-Kano model is used to conduct an in-depth analysis of user needs, and the NIO EC6 is taken as a case for verification, guiding enterprises to optimize product design. The research contributions are as follows:

(1) Based on obtaining user needs from UGC, the A-Kano model is simultaneously used to refine the need classification. By integrating these two methods, more accurate user needs can be obtained. At the same time, specific strategic suggestions can be put forward for enterprises to optimize product solutions;

(2) When conducting sentiment analysis on user needs, user interactions can often convey users’ emotional preferences more strongly. In this paper, interaction indicators are integrated into TF-IDF to obtain user attention. The sentiment analysis of additional reviews is incorporated into the satisfaction index using BERT-LSTM, to more accurately capture the emotional inclination of user needs and provide data support for accurately predicting user needs;

(3) When predicting user needs based on UGC, the mutual influence relationships among various needs are taken into account. CA is used to identify the need indicators with mutual influence relationships for input into the VAR model. The VAR model can consider these relationships and output prediction results. Meanwhile, the Markov model is employed to correct the prediction results of the VAR model, compensating for the defect of the fixed and single-trend prediction of the VAR model. The use of the integrated prediction model enhances the accuracy of the prediction results.

However, there are still some limitations in this paper that need improvement:

(1) The data source of user needs in this paper mainly considers UGC. Besides UGC, other data sources may also reflect users’ future needs, such as transaction data. Transaction data can provide valuable insights into consumers’ purchasing behaviors and long-term needs. By integrating these additional data sources, future research can achieve a more comprehensive understanding of user needs and behaviors in the context of electric vehicles;

(2) In addition, for products that have just been launched and lack a large amount of UGC, it is necessary to conduct further research on whether the accuracy rate of the model proposed in this paper can reach the expected level when used for prediction. When predicting the need for products with a small amount of UGC, it may be possible to integrate a model specifically designed for predicting with a small amount of data, such as the grey prediction model, based on the prediction model proposed in this paper;

(3) In the face of new user needs or need changes brought about by new technologies, the predictive ability of this research method is limited. In the future, we will explore the introduction of cutting-edge deep-learning architectures, such as new Transformer-based models. By integrating domain knowledge and industry trends, we aim to more effectively predict new user needs and respond promptly to market changes.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Wang, T. X. & Zhou, M. Y. A method for product form design of integrating interactive genetic algorithm with the interval hesitation time and user satisfaction. Int. J. Industrial Ergono. https://doi.org/10.1016/j.ergon.2019.102901 (2020).

Volokha, V. & Derevitskii, I. In 10th International Young Scientists Conference in Computational Science (YSC). 102–111 (Elsevier Science Bv, 2021).

Bavdaz, M., Giesen, D., Moore, D. L., Smith, P. A. & Jones, J. Qualitative testing for official establishment survey questionnaires. Surv. Res. Methods 13, 267–288. https://doi.org/10.18148/srm/2019.v13i3.7366 (2019).

Xu, Y. S. & Yin, J. W. Collaborative recommendation with user generated content. Eng. Appl. Artif. Intell. 45, 281–294. https://doi.org/10.1016/j.engappai.2015.07.012 (2015).

Nasrabadi, M. A., Beauregard, Y. & Ekhlassi, A. The implication of user-generated content in new product development process: A systematic literature review and future research agenda. Technol. Forecast. Soc. Chang. 206, 19. https://doi.org/10.1016/j.techfore.2024.123551 (2024).

Xu, Q. L. et al. An analytical Kano model for customer need analysis. Design Stud. 30, 87–110. https://doi.org/10.1016/j.destud.2008.07.001 (2009).

Mowlaei, M. E., Abadeh, M. S. & Keshavarz, H. Aspect-based sentiment analysis using adaptive aspect-based lexicons. Expert Syst. Appl. 148, 13. https://doi.org/10.1016/j.eswa.2020.113234 (2020).

Yang, Z. Y., Wang, B., Li, X. M., Wang, W. T. & Ouyang, J. H. S3MAP: Semisupervised aspect-based sentiment analysis with masked aspect prediction. Knowledge-Based Syst. 269, 11. https://doi.org/10.1016/j.knosys.2023.110513 (2023).

Luo, S. J., Zhang, Y. F., Zhang, J. & Xu, J. H. A User biology preference prediction model based on the perceptual evaluations of designers for biologically inspired design. Symmetry-Basel 12, 17. https://doi.org/10.3390/sym12111860 (2020).

Ketipov, R., Angelova, V., Doukovska, L. & Schnalle, R. Predicting user behavior in e-Commerce using machine learning. Cybern. Inf. Technol. 23, 89–101. https://doi.org/10.2478/cait-2023-0026 (2023).

Kim, D. et al. How should the results of artificial intelligence be explained to users?- Research on consumer preferences in user-centered explainable artificial intelligence. Technol. Forecast. Soc. Chang. 188, 8. https://doi.org/10.1016/j.techfore.2023.122343 (2023).

Hassenzahl, M., Diefenbach, S. & Göritz, A. Needs, affect, and interactive products - Facets of user experience. Interact. Comput. 22, 353–362. https://doi.org/10.1016/j.intcom.2010.04.002 (2010).

Qin, D. Rise of VAR modelling approach. J. Econ. Surv. 25, 156–174. https://doi.org/10.1111/j.1467-6419.2010.00637.x (2011).

Kirchner-Krath, J. et al. Uncovering the theoretical basis of user types: An empirical analysis and critical discussion of user typologies in research on tailored gameful design. Int. J. Hum.-Comput. Stud. 190, 15. https://doi.org/10.1016/j.ijhcs.2024.103314 (2024).

Walk, S. et al. How to apply Markov chains for modeling sequential edit patterns in collaborative ontology-engineering projects. Int. J. Hum.-Comput. Stud. 84, 51–66. https://doi.org/10.1016/j.ijhcs.2015.07.006 (2015).

Yuan, B. K., Wu, K., Wu, X. Y. & Yang, C. X. Form generative approach for front face design of electric vehicle under female aesthetic preferences. Adv. Eng. Inform. 62, 16. https://doi.org/10.1016/j.aei.2024.102571 (2024).

Lai, X. J. et al. The analytics of product-design requirements using dynamic internet data: application to Chinese smartphone market. Int. J. Prod. Res. 57, 5660–5684. https://doi.org/10.1080/00207543.2018.1541200 (2019).

Zhou, F., Jiao, R. J. & Linsey, J. S. Latent customer needs elicitation by use case analogical reasoning from sentiment analysis of online product reviews. J. Mech. Des. 137, 12. https://doi.org/10.1115/1.4030159 (2015).

Zhang, L., Chu, X. N. & Xue, D. Y. Identification of the to-be-improved product features based on online reviews for product redesign. Int. J. Prod. Res. 57, 2464–2479. https://doi.org/10.1080/00207543.2018.1521019 (2019).

Cong, Y. F. et al. A small sample data-driven method: User needs elicitation from online reviews in new product iteration. Adv. Eng. Inform. 56, 14. https://doi.org/10.1016/j.aei.2023.101953 (2023).

Sun, H., Yang, Q. H. & Wu, Y. Q. Evaluation and design of reusable takeaway containers based on the AHP-FCE model. Sustainability 15, 21. https://doi.org/10.3390/su15032191 (2023).

Kumar, Y., Singh, R. & Kataria, C. An approach to identify assistive technology attributes for people with locomotor disability by using a refined Kano model. J. Eng. Des. 35, 901–920. https://doi.org/10.1080/09544828.2024.2347115 (2024).

Koomsap, P., Dharmerathne, B. R. Y. & Ayutthaya, D. H. N. Examination of common mistakes for successful leveraging the Kano model and proposal for enhancement. J. Eng. Des. https://doi.org/10.1080/09544828.2023.2245533 (2023).

Lizarelli, F. L., Osiro, L., Ganga, G. M. D., Mendes, G. H. S. & Paz, G. R. Integration of SERVQUAL, Analytical Kano, and QFD using fuzzy approaches to support improvement decisions in an entrepreneurial education service. Appl. Soft. Comput. 112, 15. https://doi.org/10.1016/j.asoc.2021.107786 (2021).

Du, Y. F., Liu, D. & Duan, H. X. A textual data-driven method to identify and prioritise user preferences based on regret/rejoicing perception for smart and connected products. Int. J. Prod. Res. 60, 4176–4196. https://doi.org/10.1080/00207543.2021.2023776 (2022).

Jin, J., Liu, Y., Ji, P. & Liu, H. G. Understanding big consumer opinion data for market-driven product design. Int. J. Prod. Res. 54, 3019–3041. https://doi.org/10.1080/00207543.2016.1154208 (2016).

Zhang, W. X., Li, X., Deng, Y., Bing, L. D. & Lam, W. A survey on aspect-based sentiment analysis: Tasks, methods, and challenges. IEEE Trans. Knowl. Data Eng. 35, 11019–11038. https://doi.org/10.1109/tkde.2022.3230975 (2023).

Sha, K. X., Li, Y. P., Dong, Y. A. & Zhang, N. Modelling the dynamics of customer requirements considering their lability and sensitivity in product development. Adv. Eng. Inform. 59, 16. https://doi.org/10.1016/j.aei.2023.102296 (2024).

Lai, X. J. et al. Kansei engineering for the intelligent connected vehicle functions: An online and offline data mining approach. Adv. Eng. Inform. 61, 20. https://doi.org/10.1016/j.aei.2024.102467 (2024).

Zhou, F., Jiao, J. X. R., Yang, X. J. & Lei, B. Y. Augmenting feature model through customer preference mining by hybrid sentiment analysis. Expert Syst. Appl. 89, 306–317. https://doi.org/10.1016/j.eswa.2017.07.021 (2017).

Cho, H., Kim, S., Lee, J. & Lee, J. S. Data-driven integration of multiple sentiment dictionaries for lexicon-based sentiment classification of product reviews. Knowledge-Based Syst. 71, 61–71. https://doi.org/10.1016/j.knosys.2014.06.001 (2014).

Wang, T. et al. A novel user-generated content-driven and Kano model focused framework to explore the impact mechanism of continuance intention to use mobile APPs. Comput. Hum. Behav. 157, 16. https://doi.org/10.1016/j.chb.2024.108252 (2024).

Bi, J. W., Liu, Y., Fan, Z. P. & Cambria, E. Modelling customer satisfaction from online reviews using ensemble neural network and effect-based Kano model. Int. J. Prod. Res. 57, 7068–7088. https://doi.org/10.1080/00207543.2019.1574989 (2019).

Ma, J., Gong, Y. Q. & Xu, W. X. Predicting user preference for innovative features in intelligent connected vehicles from a cultural perspective. World Electr. Vehicle J. 15, 28. https://doi.org/10.3390/wevj15040130 (2024).

Song, W. Y., Ming, X. G. & Xu, Z. T. Integrating Kano model and grey-Markov chain to predict customer requirement states. Proc. Inst. Mech. Eng. Part B-J. Eng. Manuf. 227, 1232–1244. https://doi.org/10.1177/0954405413485365 (2013).

Jing, L. T. et al. Data-driven implicit design preference prediction model for product concept evaluation via BP neural network and EEG. Adv. Eng. Inform. 58, 27. https://doi.org/10.1016/j.aei.2023.102213 (2023).

Yakubu, H. & Kwong, C. K. Forecasting the importance of product attributes using online customer reviews and Google Trends. Technol. Forecast. Soc. Chang. 171, 13. https://doi.org/10.1016/j.techfore.2021.120983 (2021).

Zhang, N., Qin, L., Yu, P., Gao, W. & Li, Y. P. Grey-Markov model of user demands prediction based on online reviews. J. Eng. Des. 34, 487–521. https://doi.org/10.1080/09544828.2023.2233058 (2023).

Chen, C. H. & Yan, W. An in-process customer utility prediction system for product conceptualisation. Expert Syst. Appl. 34, 2555–2567. https://doi.org/10.1016/j.eswa.2007.04.019 (2008).

Liu, H. J., Xu, K. X. & Pan, Z. H. In 11th ASME International Manufacturing Science and Engineering Conference (MSEC). (American Society of Mechanical Engineers, 2016).

Wang, Z. X., Zheng, P., Li, X. Y. & Chen, C. H. Implications of data-driven product design: From information age towards intelligence age. Adv. Eng. Inform. 54, 18. https://doi.org/10.1016/j.aei.2022.101793 (2022).

Wang, Z., Gao, P. & Chu, X. N. Sentiment analysis from customer-generated online videos on product review using topic modeling and multi-attention BLSTM. Adv. Eng. Inform. 52, 11. https://doi.org/10.1016/j.aei.2022.101588 (2022).

Blei, D. M., Ng, A. Y. & Jordan, M. I. Latent Dirichlet allocation. J. Machine Learn. Res. 3, 993–1022. https://doi.org/10.1162/jmlr.2003.3.4-5.993 (2003).

Yi, K., Zhou, Z. B., Wu, Y. Q., Zhang, Q. Y. & Li, X. Empathic connectivity of exhibition technology and users in the digital transformation: An integrated method of social network analysis and LDA model. Adv. Eng. Inform. 56, 13. https://doi.org/10.1016/j.aei.2023.102019 (2023).

Zhao, A. P. & Yu, Y. Knowledge-enabled BERT for aspect-based sentiment analysis. Knowledge-Based Syst. 227, 8. https://doi.org/10.1016/j.knosys.2021.107220 (2021).

Chen, C. & Morkos, B. Exploring topic modelling for generalising design requirements in complex design. J. Eng. Des. 34, 922–940. https://doi.org/10.1080/09544828.2023.2268850 (2023).

Behera, R. K., Jena, M., Rath, S. K. & Misra, S. Co-LSTM: Convolutional LSTM model for sentiment analysis in social big data. Inf. Process. Manage. 58, 18. https://doi.org/10.1016/j.ipm.2020.102435 (2021).

Ke, J. et al. Discovering e-commerce user groups from online comments: An emotional correlation analysis-based clustering method. Comput. Electr. Eng. 113, 15. https://doi.org/10.1016/j.compeleceng.2023.109035 (2024).

Athanasopoulos, G., Guillén, O. T. D., Issler, J. V. & Vahid, F. Model selection, estimation and forecasting in VAR models with short-run and long-run restrictions. J. Econom. 164, 116–129. https://doi.org/10.1016/j.jeconom.2011.02.009 (2011).

Ororbia, M. E. & Warn, G. P. Design synthesis through a Markov decision process and reinforcement learning framework. J. Comput. Inf. Sci. Eng. 22, 10. https://doi.org/10.1115/1.4051598 (2022).

Zeng, D., Miao, J. J., Tang, C. G., Long, Y. X. & He, M. E. Hybrid gene regulatory network for product styling construction in interactive evolutionary design. J. Eng. Des. 34, 986–1012. https://doi.org/10.1080/09544828.2023.2205809 (2023).

Garikapati, D. & Shetiya, S. S. Autonomous vehicles: Evolution of artificial intelligence and the current industry landscape. Big Data Cogn. Comput. 8, 25. https://doi.org/10.3390/bdcc8040042 (2024).

Trappey, A. J. C., Trappey, C. V., Chiang, T. A. & Huang, Y. H. Ontology-based neural network for patent knowledge management in design collaboration. Int. J. Prod. Res. 51, 1992–2005. https://doi.org/10.1080/00207543.2012.701775 (2013).

Funding

This work was supported by the Sichuan Provincial Key Laboratory Open Subject Program (23DMAKL06) and the National Natural Science Foundation of China (52465024).

Author information

Contributions

L.L. and B.M. wrote the main manuscript; B.M. conducted data collection and analysis using application software; L.L. created the charts. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, L., Ma, B. CA-VAR-Markov model of user needs prediction based on user generated content. Sci Rep 15, 7716 (2025). https://doi.org/10.1038/s41598-025-92173-8

DOI: https://doi.org/10.1038/s41598-025-92173-8