Abstract

The capability parameters of third-party testing institutions not only serve as a critical reflection of their technical and quality management capabilities but also form the key basis for categorizing their testing abilities. However, current Chinese phrase-based descriptions of these capability parameters are influenced by diverse expression styles and varying internal standards, making it difficult to establish consistent criteria for classifying testing capabilities. This inconsistency presents notable difficulties for clients and regulatory bodies. Therefore, leveraging clustering techniques to uncover the intrinsic relationships and latent information between testing capabilities and their corresponding parameters is one of the crucial approaches to achieving scientific and reasonable classification of testing capabilities. Traditional text feature extraction methods suffer from several limitations, including sparse features, high-dimensional features, and lack of semantic information. These shortcomings complicate the classification and analysis of testing capability descriptions. To address this issue, this study focuses on the “products and testing objects” within the capability parameters of Chinese testing institutions as the research subject and proposes a joint model based on BERT and semi-supervised K-Means clustering. This model employs BERT to extract textual features from Chinese descriptive phrases and combines them with a small number of labeled samples for semi-supervised K-Means clustering analysis. The clustering results are then used to train a multi-output Softmax classifier, thereby enabling the classification of testing capabilities for third-party institutions. Experimental results demonstrate that the proposed method outperforms traditional methods such as TF-IDF and one-hot encoding when applied to the Chinese description datasets of testing institutions. Specifically, it exhibits advantages in reducing the dimensionality of textual features and enhancing clustering performance. When the proportion of labeled samples accounts for 10% of the total sample size, the method achieves optimal clustering results, with an average classifier accuracy of 89.8%.

Similar content being viewed by others

Introduction

Third-party testing organizations are not directly involved in the production and sale of products, the test report issued by the relative impartiality of the inspection and testing industry has become an indispensable part of the development. Since the introduction of third-party testing in European countries in the early 15th century, the French International Bureau of Inspection (Bureau Veritas, BV), Swiss General Notary Public (SGS), the British Tianxiang Group (Intertek, ITS) and other organizations to develop rapidly, the market scale has gradually expanded, the international head of the organization’s business expanded to more than 100 countries and regions around the world. However, the large volume of inspection and testing business, certification and accreditation classification and description of a variety of different organizations in the classification standards, expression habits, report exhaustiveness and other standards, resulting in inconsistency in the expression of inspection and testing capabilities library and other practical problems, the user and the testing organization of mutual recognition of the information between the impact of a certain degree.

Chinese third-party testing institutions conduct testing work in specific fields according to standard documents such as RB/T 214–20171and CNAS-CL01-G001:20182. The ability parameter is a key metric for evaluating an organization’s testing capabilities and is typically described using Chinese phrases. Compared to long sentences, these phrases are concise yet rich in meaning, reflecting the compact nature of Chinese. However, they often lack complete grammatical structures, sometimes containing only basic subject-predicate elements or even omitting necessary components3,4. Therefore, such phrases need to rely on the context to complement the information in order to make a full meaning. There are two challenges in assessing the consistency of agencies’ testing capacity against capacity parameters, which further complicate the assessment: first, different classification criteria and presentation practices among agencies; The second is the linguistic characteristics of Chinese phrases, such as noun ellipsis, ambiguity and diversification of description. Natural Language Processing (NLP) and clustering algorithms can address these challenges by uncovering internal connections and potential information within capability parameters. Traditional text clustering algorithms represent text using vector spaces characterized by sparse features, high dimensionality, and a lack of semantic information. While dimensionality reduction improves clustering for long texts, it is less effective for short texts. Currently, the Chinese inspection and testing industry lacks mature research utilizing BERT and K-Means for feature extraction and classification, there is an urgent need to develop models based on clustering algorithms to analyze Chinese phrase descriptions of testing capabilities. This would enhance the consistency and accuracy of assessments of third-party testing organizations’ capabilities.

Currently, feature extraction and classification using models such as BERT and K-Means clustering algorithms have been applied in many fields.Acheampong F A5et al. combined active learning methods with deep learning models such as BERT to effectively classify users’ short, disjointed, and mixed-coded Chinese text descriptions in courier pickup and delivery service scenarios. The BERT6pre-trained model has excellent performance in various tasks in natural language7,8,9,10, including applications in the Chinese context, Lai Y11proposed a novel framework called Lattice-Bert for NLU tasks in Chinese scenarios; Wang Z12et al. proposed a BERT-based automatic Chinese text classification method; Li M13and Li H14used BERT model to extract text features to analyse the classification performance and generalization ability in the scenarios of sentiment analysis of Chinese comments and long text classification, respectively. It can be seen that the BERT language model features perform better in both extraction and classification. K-Means clustering algorithm is widely used because of its simple idea and better results15,16,17, but the clustering algorithm divides the class clusters on the basis of no information other than data features. Some studies have shown that introducing some background information in the process of clustering can improve the results of clustering18,19,20, and this method is called semi-supervised clustering. In general, semi-supervised clustering introduces a small amount of supervision in the first stage of the clustering process, and integrates the generation by executing multiple semi-supervised clustering algorithms.Lai21 proposes a new framework for generating the basic partition in an unsupervised manner and assigning different weights to each cluster of the basic partition, which does not need to rerun semi-supervised clustering algorithms when there are limited supervised variations, and it is more Adaptable.

In view of the significant performance of BERT language model, K-Means clustering algorithm and semi-supervised clustering in text feature extraction, classification and clustering, this paper adopts the BERT pre-training model as the method of extracting word vectors, combined with semi-supervised K-Means+Softmax classification model based on the characteristics of sparse descriptive features of the Chinese phrases of the testing ability and the brevity of the text. A joint model of BERT+semi-supervised K-Means+Softmax classifier is proposed to classify the testing ability of multiple third-party testing organizations scientifically and reasonably from the dimension of “products and testing objects”, and evaluate the results. The research results are aimed at efficiently and accurately categorizing the testing capabilities of third-party testing organizations, and providing powerful data support and basis for users to choose the organizations.

Methods

Model

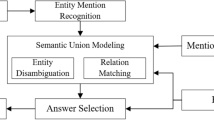

In this paper, the Chinese detection ability with Chinese is clustered, and a model framework is designed for Chinese phrases after data preprocessing. The framework model consists of feature extraction layer, clustering layer and classification layer, as shown in Figure 1.

-

1.

Feature Extraction Layer: Leveraging the bert-base-chinese pre-trained model, this layer transforms hard-to-quantify textual data into 768-dimensional numerical vectors, facilitating subsequent calculations and analyses by the models. The input to this layer is a preprocessed dataset, wherein Chinese phrases are processed through the model to obtain feature vectors for the text. The collection of these vectors constitutes the output of this layer.

-

2.

Clustering layer: The label set prior information is used to guide K-Means algorithm to cluster unlabeled data. This layer takes the unlabeled text features output by the feature extraction layer, the text features of the tag set and their corresponding labels as inputs. By introducing the prior knowledge through the tag set, the clustering process can be better consistent with the actual structure existing in the data. First, the label set is used to initialize the cluster center, then the unlabeled set is divided into samples, and the cluster center is recalculated. When the cluster center no longer changes or the number of iterations reaches the upper limit, the sample division process is ended; otherwise, the output of this layer is the clustering result of the training set, the training set, the test set and the number of labels k made based on this result will be input into the classification layer.

-

3.

Classification layer: This layer consists of a fully connected layer and softmax classifier, which outputs the classification results. Specifically, this layer receives the text feature vector generated by the previous layer as input, first performs linear transformation through the fully connected layer, and then uses Softmax function to convert the transformed output into a form representing the probability distribution of various categories, so as to realize the prediction of text categories. Among them, the fully connected layer is configured with 786 input units and the number of output units is k. Softmax classifier also has k output units, each unit represents a possible class, and the resulting probability value indicates the probability that the input text belongs to the corresponding class.

Feature extraction layer

In this paper, the pre-trained BERT model bert_base_chinese (hereinafter referred to as BERT_Chinese) in Huggingface22 library is used for feature extraction when dealing with Chinese descriptionsy (i.e., “product and test object” data). The model is optimized specifically for Chinese text.The BERT_Chinese model effectively captures the rich semantic information and potential associations in the text through its bi-directional Transformer encoder structure, which provides high-quality feature vectors for subsequent processing.

For Chinese text needs to be transformed before inputting into the BERT model, the text is transformed into a sequence of embeddings by a pre-trained word embedding model23, which consists of word embeddings (token embeddings), sentence embeddings and position embeddings, the input representation of BERT_Chinese is shown in Figure 2, where [CLS] and [SEP] are two special tokens that are added during the word embedding process, with [CLS] located at the beginning of the text and [SEP] at the end of each sentence. The word embeddings of the embedding sequence are used to characterise the semantic information of the text, the sentence embeddings are used to characterise the global semantic information of the text and merge it with the semantic information of the word embeddings, and the positional embeddings are used to characterise the differences in the semantic information carried by words appearing at different positions in the text24.

Clustering layer

The clustering part uses the semi-supervised K-means algorithm to achieve clustering. Semi-supervised K-Means improve the initialization sensitivity and local optimality of K-means means clustering algorithm by introducing information of partially known category labels. In this paper, the main idea of the semi-supervised K-Means model is to introduce part of the data with label information to guide the clustering process of unlabeled samples by initializing the center point. When determining the clustering centre (centroid), the distribution of the labelled samples is given priority, and known category samples are selected as the initial clustering centre to better capture the boundaries between different categories. The basic principle of the K-means mean clustering algorithm is as follows:

-

1.

Suppose there are n sample points \(\left\{ x_{1},x_{2},x_{3},x_{4},\dots ,x_{n}\right\}\), where each sample point\(x_{i}\) belongs to a vector in d-dimensional space.

-

2.

Define a center of mass vector\(\left\{ m_{1},m_{2},m_{3},m_{4},\dots ,m_{k}\right\}\), where \(m_{i}\) is a d-dimensional vector denoting the \(i\)th center of mass.

-

3.

The goal of the algorithm is to partition these sample points into \(k\) sets, each representing a category, while minimizing the sum of the Euclidean distances between all sample points and the centers of mass of the category to which they belong.

-

4.

The goal of the algorithm is to minimize the following loss function:

$$\begin{aligned} dis(x_i,m_j )=\sqrt{ {\textstyle \sum _{t=1}^{d}(x_{it}-m_{jt})^2}} \end{aligned}$$(1)In the above equation (1), \(x_i\) denotes the \(i\)th data object, \(1 \le i\le n\), and \(m_j\)denotes the \(j\)th centre of mass, \(1\le j \le k\) . \(x_{it}\) denotes the \(t\)th dimension of the \(i\)th data object, \(1 \le t\le d\), and \(m_{jt}\) denotes the \(t\)th dimension of the \(j\)th centre of mass, \(1 \le t\le d\).

Based on the K-means algorithm, the semi-supervised K-means clustering algorithm(hereinafter referred to as semi-KMeans) used in this paper generates initial clustering centers by introducing label sets L and label sets L-labels to guide the clustering process of unlabeled data. The algorithm is implemented as follows:

Input: data set \(D\), number of clusters \(k\), label set \(L\), label set \(L-labels\).

Step 1. Initialize centroids :

- Generates a centroid set \(C\) based on \(L\) and \(L-labels\).

- If the number of categories of the label set labels is less than k, the data is randomly selected from the data set D and the label set \(L\) is merged to generate an initial centroid set \(C\).

Step 2. Initialize assign samples:

- Calculate the distance from each sample \(x\) in the dataset \(D\) to each centroid, assigning \(x\) to the cluster corresponding to the nearest centroid.

Step 3. Iterate through the following steps until convergence:

- Calculate the Euclidean distance from each sample \(x\) in the dataset \(D\) to each centroid, assigning \(x\) to the cluster corresponding to the nearest centroid.

- Calculate the average of all samples in the cluster as the new centroid number.

Output: The clustering results.

Classification layer

Softmax classifier is a commonly used multi-classifier, and it works as shown in equation (2) and equation (3), where \(k\) is the number of clusters obtained by the clustering layer, and \(h(x_{m})\) is the probability that the current phrase belongs to the category \(y_{m}\) :

The processing process of the classification layer is shown in Figures3. Suppose that the text features obtained by the feature extraction layer are \(O=\{X_{1},X_{2},X_{3},\dots ,X_{i}\}, I \in [0,785]\), the whole connection layer handles the eigenvector \(O_{k} = \{x_{1},x_{2},x_{3},\dots ,x_{k}\}\). Input \(O_{k}\) into the Softmax classifier and obtain the probability that the data belong to any category by formula \(h(x_{m})\). After processing with the max function, obtain the prediction label \(y_{m},m \in [0,k]\) of the data.

Results

Data set

The data used in this paper are sourced from the Information Database of Competence Parameters of China’s Inspection and Testing Organisations (hereinafter referred to as the “Capability Parameter Information”). This database encompasses several key sectors, including light industry, pharmaceuticals, information technology, and agriculture and agricultural products.

The data source25 for the “ Parameter Information” comes from the “Laboratory Accreditation Management System,” an official platform for the qualification management of third-party laboratories. This system focuses on recording and managing information related to laboratory qualifications, primarily based on China Metrology Accreditation (CMA) standards. According to statistics, this data set includes approximately 5 million detailed records of capability parameters provided by 14,507 inspection and testing institutions. Although data entry is strictly controlled for necessity, there are no clear guidelines for standardized format, leading to variable overall data quality. An in-depth review revealed that the format of data items representing the attributes of “products and test objects” (hereinafter referred to as “categories”) was relatively standardized. Given this, we selected this data item as the core focus of our study from the original 5 million samples. Using interval sampling, we extracted 10,000 representative samples to construct the experimental dataset used in this paper.

The Chinese phrases in the experimental dataset include both test items and products, for example, the phrase “multifunctional belt” describes the product and “hazardous substances in PVC artificial leather” describes the test item, and the forms of the phrase descriptions also have their own characteristics. The form of description of phrases is also distinctive. Most of the phrases in the dataset have one or several combinations of the following forms: noun, noun + [conjunction] + noun, noun determiner + noun, noun phrase abbreviation. For example:

-

1.

“Furniture leather” is a “noun + noun” form;

-

2.

“Polyester silk” is a “noun” form;

-

3.

“Underwear” is a “noun phrase abbreviation”, it actually means “underwear and Panties” in Chinese;

-

4.

“Blast furnace with anhydrous artillery mud” can be understood as “noun + [conjunction] + noun”form, just like “blast furnace + [with] + anhydrous artillery mud”;

-

5.

“Ultra-high molecular weight polyethylene enthalpy of melting crystallinity and melting point” is a phrase combining various forms, the meaning of which is: to test the “Melting Enthalpy”, “Crystallinity” and “melting point” of “ultra-high molecular weight polyethylene”. “melting point”, in which “ultra-high molecular weight” is a noun determiner used to modify “polyethylene”, “Melting Enthalpy”, “Crystallinity” and “melting point” are all nouns;

So we need to pay attention to the processing of nouns and connectives in data cleaning, Figure is a visual explanation of the Chinese phrases mentioned in the first and fourth dot.

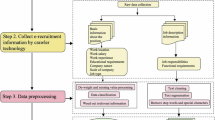

In this paper, a systematic pre-processing and cleaning exercise is implemented for the dataset used, as shown in Figure . The pre-processing steps are as follows:

-

Step 1. Removal of anomalous samples: In the “category” attribute of “capability parameter information”, some of the samples appear in the form of pure numbers or symbols, which do not have practical interpretability. Therefore, this paper chooses to discard such samples. At the same time, the samples containing missing data are also deleted to ensure the validity and completeness of the subsequent analyses.

-

Step 2. Duplicate Samples Removal: In view of the actual business situation, when the testing capacity of the laboratory is expanded, changed or involves the relocation of equipment/address, etc., the relevant capacity parameters will be recorded several times in the application for accreditation, which results in several records with the same “category”. Since these duplicate records do not contribute to the objectives of this study, this paper adopts the approach of retaining only one unique record for de-duplication.

-

Step 3. Text Noise Removal: Some of the samples contain a mixture of punctuation marks, English letters, numbers and special symbols, such as “30/25mm Plastic Anti-theft Top”,“70% Imidacloprid Water-dispersible Granules”,“Gasoline Vehicles Pollutants Emissions On-Board Diagnostic System (OBD) Inspection”, etc. Although these elements express specific specifications or acronyms to a certain extent, the removal of these elements does not affect their expression of the meaning of “category”. Therefore, this paper uses regular expression technology to replace the English letters, numbers and special symbols in the text with spaces, thus achieving effective noise reduction.

-

Step 4. Remove Stop Words: In the Chinese context, a sentence usually consists of several words, not all of which have actual meaning. For example, words such as “and”, “of”, etc. are excluded because they do not convey clear concepts in many cases. For this reason, this paper firstly uses Jieba to segment the text, and then filters the segmentation results with the Chinese deactivation word list to eliminate the stop words with no substantive content.

After the above series of data preprocessing measures, the final data set contains 6326 valid samples. The comparison before and after some text processing is shown in Table 1, Figure 6 shows the Chinese text before and after preprocessing. We divided the processed data set into test set and training set according to the ratio of 3:7, in which the test set contained 1917 samples and the training set contained 4409 samples. In addition, in order to evaluate the effect of the model in the future, this paper labels the data of the test set under the guidance of domain experts, including 5 labels: “Textile products”, “Information Technology”, “Medicine and Healthcare”, “Household Goods” and “Agrochemicals”.

Experimental comparison and analysis

In the experiment, the code is implemented in python3.11, torch 2.1 was installed for calling BERT model for training and feature extraction, JieBa 0.42.1 was used for data preprocessing for disambiguation, scikit-learn 1.2.2 was used for calling clustering algorithms and calculating clustering metrics. The BERT_Chinese model uses default Settings except for the core hyperparameters that need to be tuned.

Model tuning

The hyperparameters of the Bert model have an important impact on the performance of the model. The core hyperparameters in this paper include Epoch, Pad size and Batch size, while the default values are used for the remaining hyperparameters. Some experiments will be conducted in this section to optimize the model and find the optimal values of the parameters, The meaning of the core parameters is shown in the following Table 2.

We conducted three experiments to study the influence of the above hyperparameters on the model effect, so as to select the best hyperparameters. On the data, we divided the data set into the training set and the test set in a ratio of 7:3.

-

1.

Epoch Size

The Epoch Size is the number of training iterations specific to the training set, and in general, the performance of the model improves as the number of iterations increases. However, if the epoch is too large, the group will have overfitting problems, so it is important to choose the right number of iterations. Figure 7 shows the relationship between the epoch and F1. The F1 scores also increase with the increase of training iterations, which indicates that the classification performance of the model becomes better. When the epoch is 32, the model performs best, and when the epoch is 56, the model stops training and the accuracy decreases.

-

2.

Pad Size

Pad Size is the length of each sentence that the model will process. The basic processing principle is short filling and long cutting. If a sentence is shorter than the set length, then it is filled with 0. If the length of the sentence is greater than the set point, the sentence is truncated to get the set point. The size of the Pad has a large impact on the performance of the model, so an appropriate value should be chosen. If the Pad is too small, a large amount of information in the data will be lost. Instead, a lot of meaningless zeros will be filled in. According to the sample character length information in the data set collected in Table 3, the sample character length in this experiment accounted for \(75\%\) of the samples whose character length was less than 20 characters, and \(25\%\) of the samples whose character length was between 20 characters and 86 characters. Taking it all together, we set the maximum length of text for the input model to 44 characters, a value that neither loses too much information nor fills in too many zeros.

-

3.

Batch Size

Batch Size is the number of training samples sent to the neural network each time. The batch size affects the memory usage efficiency and memory capacity. When the batch is large, the memory required is also large. At the same time, the convergence time of the model can be shortened. To find a balance between efficiency and memory capacity, Batch size should be chosen carefully. In this experiment, the batch size was gradually increased from 16 to 256 on the premise of correcting and fixing other hyperparameters. The experimental results are shown in the Figure 8. According to the model tuning experiment in this chapter, we set the three core parameters of the model as shown in Table 4 to obtain the optimal model performance after tuning. The loss and accuracy rate under different training rounds are shown in Figure 9. As the loss curve gradually converges and smoothen.

Evaluation metrics

In order to evaluate the performance of the clustering algorithm in the clustering process, this paper selects two indexes, standard Mutual information (NMI) and adjusted Rand coefficient (ARI), to evaluate the performance of the clustering algorithm. NMI is a metric used to evaluate the similarity between clustering results and true labels, providing an overall measure of similarity. However, it does not indicate which specific parts match well or poorly, lacking fine-grained feedback. ARI is a metric used to assess the consistency between clustering results and true labels. It may exhibit bias when dealing with imbalanced datasets. If some categories contain significantly more samples than others, ARI might favor the larger categories, thereby affecting the overall accuracy of the evaluation. For semi-supervised clustering, different labeling ratios can lead to varying NMI and ARI results. In general, the larger the value of NMI and ARI, the better the clustering effect. The calculation formula of the two evaluation indexes is as follows:

\(H\left( X\right)\) and \(H\left( Y\right)\) are the entropy of \(X\), \(Y\) respectively. In equation (7), \(n_{ij}\) is the number of elements belonging to both cluster \(i\) and cluster \(j\), \(n_{i}\) is the number of elements that belong to cluster \(i\), \(m_{j}\) is the number of elements belonging to the cluster \(j\), \(n\) is the total number of elements.The \(NMI\) and \(ARI\) takes values between 0 and 1, the larger the value the better the clustering effect.

In addition, we use the word cloud image to visualize the class clusters, which is convenient to display the class clusters intuitively. Word cloud map is a form of expression that reflects the importance or gravity of words. In this paper, Jieba segmentation is used to convert the original text into a list of words, only the naming words are retained, and the frequency of occurrence of each word in various clusters is counted. In the generated word cloud map, the font size of the word represents the importance of the word, and the larger the font size indicates that the word is more representative of this kind of cluster.

The results of classification are visualized in the form of confusion matrix. Taking binary classification as an example, the true value and predicted value are mainly divided into two kinds of results, namely true and false. The predicted and true values are mixed in pairs to get the matrix shown in Figure 16. With the confusion matrix, the following metrics can be obtained as TP-Ture Positive (sample is normal, predicted to be normal), FP-False Positive (sample is anomalous, predicted to be normal), TN-True Negative (sample is anomalous, predicted to be anomalous), and FN-False Negative (sample is normal, predicted to be anomalous), and the Precision, Recall and F1 score as shown in the equation (8),(9) and (10).

Experiment and analysis

Bert pre-trained model for feature extraction mainly relies on two parts of Bert model Output: the Last Hidden State and the Pooler Output. The Last Hidden State layer is the senttion-level representation obtained after a special pooling layer in BERT model, which represents the hidden state of the last layer of the model and can be used for feature representation of various tasks. Pooler Output can be understood as the semantic feature vector representation of the whole sentence. Based on this, there are four ways to generate text features:

-

1.

BERT_cls: which uses Bert to output the CLS tag of the last hidden state .

-

2.

BERT_first-last-avg: the first and last layer average method, which takes the average of the first and last layer in the hidden state of the last layer output by Bert.

-

3.

BERT_last-avg: the third method takes the average of the tail layer in the last hidden state of Bert output as the text feature.

-

4.

BERT_all-avg: this method takes the Output of Pooler Output layer of Bert model as the text feature.

In order to better analyze the effect of the BERT_last-avg+semi-KMeans Method proposed in this paper (referred to as proposed method in the experiment) in feature extraction and clustering, the following two groups of ablation experiments were designed: The labeled test set is selected as the experimental data, and the text features are extracted by TF-IDF, unique thermal coding and the above four methods, and the text representation vectors generated by these methods are input into the semi-supervised K-means clustering algorithm, which aims to compare the influence of different feature extraction methods on the clustering effect. The second group: choose KMeans and Agglomerative Clutsering as the control of semi-KMeans, and use the text features extracted by BERT_last-avg as the input of the above clustering methods. In terms of data, the labeled test set is selected as the experimental data, and the label set for the semi-KMeans is produced by taking \(10\%\) of the data set. The label labeling work is completed under the guidance of experts in the field. The specific characteristics of the data set are described in the Table 5. Each method is run 10 times, and the average result of 10 times is taken as the final result, and 9 groups of clustering results are obtained.

The experimental results are shown in Table 6, Figures 10, 11, 12, 13, 14and 15 show the word cloud maps representing the electronic information categories in the results of each method. In all six cases, the larger words are “device”, “wireless”, “system”, and “digital”, “communication”, which indicates that the category label they represent is electronic information. In the word cloud of one-hot encoded terms, the words with larger fonts are “chemical reagents” and “kits”. The word cloud also includes terms such as “knitting”, “textiles” and “cosmetics”, which are unrelated to electronic information. This indicates that the cluster contains a large number of samples from other categories and does not adequately represent electronic information. In contrast, the word clouds generated using the TF-IDF method and the BERT method almost exclusively contain terms related to electronic information, clearly representing this category. Additionally, the word cloud from the BERT method contains fewer and more concise terms compared to the TF-IDF method, indicating that its text is more cohesive and representative. Among BERT variants, proposed method has the highest \(NMI\) value and \(ARI\) value, which are 0.526 and 0.399 respectively, while BERT_all-avg method has the lowest 0.019, and its word cloud map is heavily mixed with other categories of words. This difference may be caused by the fact that the Pooler Output is biased towards macro and lacks micro features. The above results show that the clustering results obtained by proposed method for feature extraction are more cohesive and representative. The experimental results of group 2 show that compared with KMeans and Agglomerative, the \(NMI\) index of proposed method increases by 0.074 and 0.151, respectively, and \(ARI\) index increases by 0.038 and 0.156, which indicates that the centroid initialized by the label set can improve the clustering effect of unlabeled data.

In addition, three different proportions of label sets, \(10\%\), \(20\%\) and \(30\%\), were set respectively in this paper to verify the influence of label proportions on the clustering results of proposed method. The experimental results are shown in the Table7, \(NMI\) and \(ARI\) indexes show a downward trend, and the best index value is obtained at \(10\%\) of the proportion of labels. This result shows that the higher the proportion of label sets is not the better, in fact, the clustering effect is best at the proportion of \(10\%\).

Finally, the results obtained by proposed method are made into a training set and input into the classification layer to train the classifier, which is then used to classify the test set. As shown in table 11, the performance of the classifier on each label was evaluated with an average accuracy of \(89.8\%\), with the highest accuracy of \(96.03\%\) for the label “Information Technology” and the lowest accuracy of \(82.37\%\) for the label “Household goods”. Combined with the confusion matrix in Figure 17 Some samples have been misclassified:

-

30 samples of the “Textile products” label were classified as “Household Goods”;

-

13 samples of the “Information Technology” label were classified as “Medicine and Healthcare”;

-

12 samples of the “Medicine and Healthcare” label are classified as “Agrochemicals” and 15 samples are classified as “Household Goods”;

-

22 samples of the “Household Goods” label were classified as “Textile products”;

-

25 samples of the “Agrochemicals” label were classified as “Medicine and Healthcare” and 24 samples are classified as “Household Goods”.

It can be seen that there are obvious misclassification between the labels “Textile products” and “Household Goods” (Group 1) and between the labels “Agrochemicals” and “Medicine and Healthcare” (Group 2), and some samples from both groups are given in Table 9 , Figure 18 shows the Chinese text of the misclassified samples. From the perspective of samples, the samples of group 1 all have phrases related to “shoes” and “clothing”, while the samples of group 2 all have phrases related to “chemicals”. These phrases have a certain degree of semantic crossover, and some samples can even belong to two categories at the same time. You can then construct and divide labels on the system, for example, build a tree label system or multi-type labels.

Based on the preliminary experiments, we further constructed datasets comprising 2, 3, and 4 different industries to evaluate the model’s generalization capability from the textile and electronics sectors to multiple industries. As shown in Table 10, as the number of industries involved increases, the average accuracy of the model shows a gradual downward trend. This phenomenon can be attributed to an increase in the degree of overlap between industries. When only the textile and electronics industries are involved, the feature differences between them are significant, with low rates of overlap, allowing the model to achieve a high accuracy rate of \(98\%\). However, as more industries are added (such as Medicine and Healthcare, Household Goods and Agrochemicals), features begin to overlap among these industries. Particularly, the Chinese phrase expression similarities between the textile and consumer goods industries increase the probability of misclassification, leading to a decline in the model’s average accuracy to \(89.8\%\). Our research findings indicate that when there is less overlapping territory between industries, the model remains capable of effectively identifying and distinguishing various types of data. However, as the number of involved industries grows, the inevitable increase in feature overlap poses a challenge to the accuracy of classification by the model, causing its average accuracy to gradually decrease. This finding warrants further investigation to develop more robust models that can adapt to multiple industries.

To assess the contribution of preprocessing procedures, a comparison was made between the effectiveness of clustering and the classification performance of the dataset before and after preprocessing. As shown in Table 8, the NMI and ARI indicators for clustering performance show small differences before and after preprocessing. This may be attributed to the limited influence of high-frequency words such as conjunctions on clustering outcomes. Regarding the classification tasks, Table 12 illustrates the classification performance before preprocessing, whereas Table 11 presents the performance after preprocessing. In the unprocessed dataset, the model achieved an overall accuracy of \(87.45\%\). After preprocessing, this accuracy improved to \(89.8\%\), marking an increase of approximately \(2.35\%\). The experimental findings demonstrate that preprocessing textual data can enhance data quality and bolster the model’s comprehension capabilities, thereby improving classification performance.

Discussion of results

In the experiment, the BERT pre-training model was used to extract the text features of the “product and test object” attribute in the Chinese phrase description competence set of a third-party detection institution. Under the guidance of a small amount of labeled data, the semi-supervised K-Means clustering algorithm was used to label the unlabeled data. Finally, the results are made into a training set for training the classifier to classify the capability set. The experimental results show that the clustering effect of the semi-supervised K-Means clustering algorithm which introduces \(10\%\) labeled data to initialize the centroid is better than that of Kmeans and Agglomerative methods. Compared with traditional TF-IDF and thermal coding methods, BERT pre-training model has obvious advantages in text feature extraction. The text features obtained by converting the output of BERT pre-trained model using BERT_last-avg method have good clustering effect in subsequent clustering tasks, and the classifier trained using clustering results also has good performance on the training set, with an average accuracy of \(89.8\%\).

Discussion

Aiming at the sparse features, high latitude features and no semantic information in Chinese short text features extracted by traditional text clustering algorithm, a joint model of BERT+ semi-supervised K-Means+Softmax classifier is proposed, and the detection ability of Chinese phrase description is studied. The results show that by comparing NMI, ARI and word cloud map, BERT pre-trained model is superior to traditional TF-IDF and unique thermal coding method in text feature extraction, and semi-supervised K-Means clustering is superior to K-Means and Agglomerative. Among the four methods for generating text features in BERT pre-trained models, BERT_last-layer-average has the best results and BERT_all-avg has the worst results, whose word cloud shows a large number of samples mixed with other categories in its class cluster. A BERT pre-trained model is usually used to extract features from the text of “products and test objects”, and 5 clustering labels are obtained by partially labeled data and semi-supervised K-Means. The classifier successfully divides the ability set into 5 categories, and the average accuracy of classification results is \(89.8\%\).

In the collection of Chinese description capability sets for third-party testing institutions in the fields of textile products, information technology, medicine and healthcare, household goods, and agrochemicals, the proposed method can effectively collect and classify testing capabilities using partially annotated data. The results demonstrate usability and effectiveness but also highlight areas requiring further research:

-

1.

Enhance the diversity and quality of data sets, collect multidimensional information including equipment, personnel qualifications, order quantity, etc., and improve the reliability and accuracy of the model.

-

2.

The current classification labels rely on expert guidance to determine the labeled data, and their rationality is influenced by the expertise, experience, and subjective biases of the experts. Therefore, further research is needed to reduce the impact of human factors and develop a more scientific, rational, and hierarchical classification system.

-

3.

Although the collection and classification of testing institutions’ capabilities in five specific fields within the Chinese language context have been met, how to address the classification of capabilities across more industry sectors in other language environments warrants further investigation

Data availability

Some or all data, models, or code generated or used during this study are available on request from the corresponding author, Q.J., upon reasonable request.

References

CAAC. rb/t t214-2017 “competence assessment for inspection body and laboratory mandatoryapproval-genaral requirements for inspection body and laboratory”. https://std.samr.gov.cn/hb/search/stdHBDetailed?id=8B1827F23E37BB19E05397BE0A0AB44A. 2023.

CNAS. cnas-cl01-g001:2018 “application of cnas-cl01<accreditation criteria for the competency of testing and calibration laboratories>”. https://www.cnas.org.cn/rkgf/sysrk/rkyyzz/2018/03/889071.shtml. 2023.

Wenhui, H. et al. Construction of a concurrent corpus for a chinese amr annotation system and recognition of concurrent structures. Journal of Tsinghua University (Science and Technology) 61, 920–926 (2021).

Her, O.-S. & Tsai, H.-C. Left is right, right is not: On the constituency of the classifier phrase in chinese. Language and Linguistics 21, 1–32 (2020).

Acheampong, F. A., Nunoo-Mensah, H. & Chen, W. Transformer models for text-based emotion detection: a review of bert-based approaches. Artificial Intelligence Review 54, 5789–5829 (2021).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018).

Liu, W., Fu, X., Zhang, Y. & Xiao, W. Lexicon enhanced chinese sequence labeling using bert adapter. arXiv preprint arXiv:2105.07148 (2021).

Sun, L., Wang, J., Zhang, K., Su, Y. & Weng, F. Rpbert: a text-image relation propagation-based bert model for multimodal ner. In Proceedings of the AAAI conference on artificial intelligence 35, 13860–13868 (2021).

Prabhu, S., Mohamed, M. & Misra, H. Multi-class text classification using bert-based active learning. arXiv preprint arXiv:2104.14289 (2021).

Briskilal, J. & Subalalitha, C. An ensemble model for classifying idioms and literal texts using bert and roberta. Information Processing & Management 59, 102756 (2022).

Lai, Y., Liu, Y., Feng, Y., Huang, S. & Zhao, D. Lattice-bert: leveraging multi-granularity representations in chinese pre-trained language models. arXiv preprint arXiv:2104.07204 (2021).

Wang, Z., Wang, L., Huang, C., Sun, S. & Luo, X. Bert-based chinese text classification for emergency management with a novel loss function. Applied Intelligence 53, 10417–10428 (2023).

Li, M., Chen, L., Zhao, J. & Li, Q. Sentiment analysis of chinese stock reviews based on bert model. Applied Intelligence 51, 5016–5024 (2021).

Li, H., Wang, J., Lu, Y., Zhu, H. & Ma, J. Chinese multicategory sentiment of e-commerce analysis based on deep learning. Electronics 12, 4259 (2023).

Zhu, J., Huang, S., Shi, Y., Wu, K. & Wang, Y. A method of k-means clustering based on tf-idf for software requirements documents written in chinese language. IEICE TRANSACTIONS on Information and Systems 105, 736–754 (2022).

Song, C., Guo, J., Gholizadeh, F. & Zhuang, J. Quantitative analysis of food safety policy-based on text mining methods. Foods 11, 3421 (2022).

Zhou, Q., Lei, Y., Du, H. & Tao, Y. Public concerns and attitudes towards autism on chinese social media based on k-means algorithm. Scientific Reports 13, 15173 (2023).

Kang, Z. et al. Structured graph learning for clustering and semi-supervised classification. Pattern Recognition 110, 107627 (2021).

Aamir, M. & Zaidi, S. M. A. Clustering based semi-supervised machine learning for ddos attack classification. Journal of King Saud University-Computer and Information Sciences 33, 436–446 (2021).

Bortoloti, F. D., de Oliveira, E. & Ciarelli, P. M. Supervised kernel density estimation k-means. Expert Systems with Applications 168, 114350 (2021).

Lai, Y. et al. An adaptive robust semi-supervised clustering framework using weighted consensus of random k-means ensemble. IEEE Transactions on Knowledge and Data Engineering (2021).

Inc., H. F. Hugging face hub documentation. https://hugginglace.co/docs/hub/index. 2023.

Wang, B. et al. On position embeddings in bert. In International Conference on Learning Representations (2020).

Li, B. et al. On the sentence embeddings from pre-trained language models. arXiv preprint arXiv:2011.05864 (2020).

DATA.ZJ.GOV.CN. Inspection and testing organizations accreditation certificate_capability parameter information. https://data.zjzwfw.gov.cn/jdop_front/detail/data.do?iid=16309. 2023.

Acknowledgements

This work has been supported by the Science and Technology Planned Project of the State Administration for Market Regulation “Research on Key Technologies for Non-Invasive Fusion Sensing Based Smart Supervision of Detection Behavio(number:CY2023213)”,and“Chu Ying” Project (Core Project) of Zhejiang Administration for Market Supervision“Research and Demonstration Application of Key Technologies for Smart Supervision of Testing Organizations(number:2022MK057)

Author information

Authors and Affiliations

Contributions

G.X.: Conceptualization, Methodology, Supervision, Validation, Project administration, Funding acquisition, Writing-review & editing. Q.C.: Conceptualization, Methodology, Data curation, Writing-original draft. S.J.: Investigation, Data curation, Writing-original draft, Visualization. X.F.: Project administration, Investigation. Y.W.: Investigation, Data curation. Q.J.: Conceptualization, Methodology, Supervision, Validation, Project administration, Writing-review. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xu, G., Chen, Q., Jiang, S. et al. Analyzing the capability description of testing institution in Chinese phrase using a joint approach of semi-supervised K-Means clustering and BERT. Sci Rep 15, 11331 (2025). https://doi.org/10.1038/s41598-025-92296-y

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-92296-y