Abstract

The predictive performance of probabilistic pavement condition deterioration is critical for effective maintenance and rehabilitation decisions. Currently, numerous improved models exist, but few rely on probabilistic models to improve pavement deterioration prediction. Therefore, this study proposed an improved probabilistic model for pavement deterioration prediction based on the coupling of Bayesian neural network (BNN) and cuckoo search (CS) algorithm. The model prediction performance is evaluated against two metrics: determination coefficient (R2) and standard deviation (stability). Finally, based on the data from the pavement management system in Shanxi Province, it was verified that the CS-BNN model outperforms the genetic algorithm-BNN, particle swarm optimization-BNN, and BNN models in terms of the two metrics. Sensitivity analysis further confirms the robustness of the CS-BNN model. The findings indicate that the CS-BNN model provides more reliable predictions with lower uncertainty, aiding road engineers in optimizing maintenance schedules and costs.

Similar content being viewed by others

Introduction

Pavement condition prediction plays a crucial role in the field of transportation infrastructure management. Accurate prediction of road pavement condition deterioration significantly impacts the development of annual maintenance and rehabilitation decision-making plans1,2,3,4. A reliable pavement condition deterioration prediction model is essential not only for optimizing maintenance and rehabilitation costs but also for ensuring the safety and serviceability of road networks for users.

Despite the availability of various pavement condition deterioration prediction models, there is a critical need for improved probabilistic models that address predictive accuracy and uncertainty robustness. Existing deterministic models fail to account for the inherent uncertainty in pavement evolution, while tradition probabilistic approaches often produce predictions with poor stability under varying conditions. These limitations can lead to suboptimal maintenance and rehabilitation decisions. Deterministic models tend to maintenance and rehabilitation decisions that underestimate the required costs and overestimate the post-maintenance pavement condition levels5, whereas current probabilistic prediction models often result in maintenance and rehabilitation decisions that overestimate the required costs and underestimate the post-maintenance pavement condition levels.

To address this gap, this study proposed a novel Cuckoo Search-Bayesian Neural Network (CS-BNN) model, which combines the global optimization capabilities of the CS algorithm with the probabilistic framework of BNNs. The primary objectives of this study are to develop a probabilistic pavement condition deterioration prediction model that improves predictive accuracy and uncertainty robustness. The proposed prediction model is a more reliable and accurate tool for pavement infrastructure management, ultimately contributing to more cost-effective and timely maintenance decisions.

Literature review

Over the years, numerous methods have been developed for pavement condition prediction. These can be broadly classified into several categories. For example, Uddin6 categorized them as deterministic, probabilistic and artificial neural network models. The Pavement Management Guide7 grouped them into deterministic, probabilistic, Bayesian, and subjective (or expert-based) models. Pavement performance prediction models can also be classified into deterministic, probabilistic, and hybrid types8. Among these models, the deterministic and probabilistic models attract the greatest attention9,10.

Deterministic models are models where a predicted variable (or variables) are obtained from some independent variables, generally by mean of regression analysis7. The advantage of deterministic models lies in their simplicity and interpretability. However, they fail to account for the inherent uncertainty in pavement evolution, as pavement behavior is recognized to be probabilistic in nature11,12,13,14.

To address this uncertainty, probabilistic model have been developed. The stochastic process approach, which includes Markov methods and Gaussian process regression15,16, is commonly used. Markov-based models rely on the transition probability matrix to predict the transfer of pavement states. The transition probability matrix can be obtained through various methods such as back-calculation13, empirical methods17, optimization algorithms18 and simulation-based approaches19,20. Although they have an advantage in computational complexity, they are limited to a finite number of discrete variables, while most pavement condition indicators are continuous. Gaussian process regression, on the other hand, is a non-parametric model that can handle continuous values. It has been used in various aspects of pavement prediction, such as predicting the international roughness index (IRI) of flexible pavements21 and estimating the structural capacity of flexible pavements22. Gaussian process regression excels at modeling complex, non-linear relationships and provides uncertainty estimates, but it suffers from high computational complexity, scales poorly with large datasets, and is highly sensitive to the choice of kernel and hyperparameters.

In recent decades, machine learning algorithms have gained significant attention in pavement performance prediction8,23,24,25. Neural network models, a typical type in machine learning, have shown high prediction accuracy. Guo et al.26 constructed a multi-output prediction model for rigid pavement deterioration using the correlation between four pavement condition indicators (IRI, faulting, longitudinal crack and transverse crack) and the neural network theory, and verified that the prediction accuracy of the multi-output model is higher than that of the single-output model. Recurrent neural networks, another type of neural network-related method, are suitable for road pavement data with time series characteristics. Sun et al.27 established the semi-rigid asphalt pavement performance multi-output prediction model based on Long Short-Term Memory. In addition, the transformer model, which is also a type of neural network-related method, has better prediction accuracy. Han et al.28 proposed a predictive model for road health indicators (rutting depth, surface texture depth, center point deflection and deflection basin area of 5t falling weight deflectometer) based on improved transformer network, and they validated that the proposed model had higher predictive accuracy than recurrent neural network-based and artificial neural network-based prediction models. Although these neural network-related methods are able to achieve high prediction accuracy, the mathematical mapping from inputs to output in neural networks is not easily interpreted by humans, leading to low trustworthiness of its applications in practices. Moreover, these neural network-related methods, like deterministic models, are unable to address the uncertainty in the pavement evolution process.

By combining neural networks with Bayesian theory, a probabilistic Bayesian neural network (BNN) can be further obtained. The BNN can be used to develop probabilistic pavement performance prediction models, which are modeled in two way: probabilistic weights and structure29. For the BNN with probabilistic weights, its weight values are not definite values but probability distributions30. Thus, the same set of input values may correspond to different output values. For the BNN with probabilistic structure, the dropout is applied at both training and testing processes, resulting in a variable (i.e., probabilistic) model structure31. BNNs offer advantages such as the ability to quantify uncertainty, which is crucial in pavement performance prediction.

Furthermore, some researchers had tried to improve the pavement performance prediction models by relying on existing algorithms32,33,34. Wang and Li35 proposed a fuzzy regression method to determine the coefficients of the gray prediction model, so as to construct a fuzzy and gray-based IRI prediction model. They proved that the hybrid model was superior to the Mechanistic-Empirical Pavement Design Guide model and gray models. Deng and Shi36 coupled feed-forward neural networks with particle swarm optimization to obtain an improved pavement rutting prediction model. They verified that the improved model achieved better performance in terms of accuracy, reproducibility, and robustness. Similarly, there are other improved pavement performance prediction models, such as, the neural network model optimized by genetic algorithms37,38,39, the support vector regression model optimized by particle filter40 and by genetic algorithm41, and the gene expression programming-neural network model42.

Despite these advancements, there is a significant gap in the development of probabilistic prediction models that focus on prediction accuracy and uncertainty robustness simultaneously. Therefore, this study proposed a novel probabilistic pavement condition deterioration prediction model.

Traditional probabilistic models, such as Markov-based models and Gaussian process regression, are either limited in their ability to handle continuous variables or suffer from high computational complexity. In contrast, BNNs combine the expressive power of neural networks with the probabilistic framework of Bayesian methods, enabling them to capture complex nonlinear relationships and quantify uncertainty effectively24,43. These properties make BNNs particularly well-suited for addressing the uncertainty associated with pavement condition evolution, motivating our choice to improve upon this model. To further improve the probabilistic model, the authors attempted to combine an optimization algorithm with a BNN to form a new hybrid prediction model.

The Cuckoo search (CS) algorithm is a nature-inspired optimization algorithm44. It has several advantages compared with other optimization methods like the Genetic Algorithm (GA) and the Particle Swarm Optimization (PSO) algorithm. The CS algorithm has a better balance between exploration and exploitation, which means it can search a wider solution space in the initial stages and then focus on refining the best-found solution more effectively. It also has fewer parameters to adjust, making it more convenient to use. In addition, it had shown better performance in dealing with complex optimization problems45,46. Therefore, the CS was chosen to be combined with the BNN to improve probabilistic pavement condition deterioration prediction models in terms of the goodness-of-fit and stability.

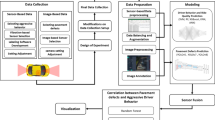

Data collection and methods

Data collection

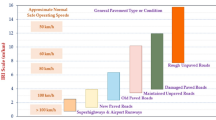

In this study, data related to asphalt pavements of freeways in the Shanxi Pavement Management System were used to validate pavement condition deterioration prediction models. The data variables are described in Table 1. In this study, a total of 5223 roadway pavement data were collected, of which randomly 80% were used as training data and 20% as validation data.

For the base courses, although three courses are shown in Fig. 1, two courses (Base courses I and II) are also a common structural type. In the collected data, the materials of base courses are usually mixtures of cement, lime, industrial waste or asphalt with soil or gravel. So, it has five different combinations: mixtures of cement with soil or gravel (base course I and II), mixtures of cement with soil or gravel (base course I) + mixtures of lime with soil or gravel (base course II), mixtures of cement with soil or gravel (base course I) + mixtures of industrial waste with soil or gravel (base course II), mixtures of asphalt with soil or gravel (base course I) + mixtures of cement with soil or gravel (base courses II and III), and mixtures of lime with soil or gravel (base course I) + mixtures of cement with soil or gravel (base course II) + mixtures of industrial waste with soil or gravel (base course III).

According to China’s highway climate zoning standards, Shanxi Province is located in the dry and wet transition zone of the Loess Plateau. The daily average temperature > 25 ℃ and < 0 ℃ are defined as high and low temperatures, respectively. In the definition of the number of consecutive high/low temperatures, the 3-day threshold is based on the experience of local engineers.

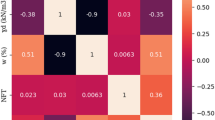

To help readers further understand the above variables, Table 2 shows descriptive statistics for numerical variables.

Proposed prediction model

The core of the proposed prediction model is the coupling of a Bayesian neural network with a cuckoo search algorithm, so it is necessary to introduce the Bayesian neural network and cuckoo search algorithm.

Bayesian neural network

For a given training set \(D=\left\{X,Y\right\}\), the key to obtain the probability distribution of weights \(P\left(w|D\right)\). Under the Bayesian framework, \(P\left(w|D\right)\) is expressed by Eq. (1).

where \(P\left(w\right)\) is a prior distribution; \(P\left(D|w\right)\) is a model likelihood; \(P\left(D\right)\) is a marginal likelihood; and \(w\) is model weights. Prior distribution represents initial belief or assumption about the possible values of a model parameter before observing any data. Likelihood measures the probability of observing the current data given specific parameter values, reflecting how well the parameters explain the data.

For a new input \({x}^{*}\) and an output \({y}^{*}\), the predictive distribution is defined as Eq. (2).

However, \(P\left(w|D\right)\) is intractable for any real-scale neural networks47. Instead, the posterior distribution of \(P\left(w|D\right)\) can be approximated by a simple distribution of \(q\left(w|\theta \right)\), parameterized by \(\theta\). Naturally, \(P\left(w|D\right)\) and \(q\left(w|\theta \right)\) are expected to as similar to each other as possible, and the difference between the two distributions is measured using the Kullback–Leibler (KL) divergence, i.e., minimizing the KL divergence value, as shown in Eq. (3).

Further derivation of Eq. (3) yields Eqs. (4)–(6).

Then, the minimization objective is \(\mathcal{F}\left(D,\theta \right)\), as shown in Eq. (7).

The variational distribution \(q\left(w|\theta \right)\) is usually recommended to use the product of Gaussian distributions47. Then, the BNN with with probabilistic weights can be obtained30, as shown in Fig. 2a. However, the approach performs poorly in practice due to the large number of weight parameters required. In contrast, Monte Carlo dropout is another simpler method of variational inference by a product of Bernoulli distribution. Monte Carlo dropout applies dropout at both training and testing steps, i.e., the BNN with probabilistic structure in Fig. 2b.

Cuckoo search algorithm

The CS algorithm in this study consists of five parts in Fig. 344,45,46:

-

(1)

Generation of a group of initial solutions. Each element in a solution represents a weight or bias value in BNN, as shown in Fig. 4. The initial solutions are randomly sampled on a truncated normal distribution.

-

(2)

Computation of fitness. The weight and bias values in each solution are substituted into the BNN model for training, and the determination coefficient of the BNN model is the fitness value.

-

(3)

Creation of new solutions by Lévy flight. When generating new solutions, the theoretical formulae of Lévy flight are shown in Eqs. (8)–(12).

$$x_{i}^{{\left( {g + 1} \right)}} = x_{i}^{{\left( g \right)}} + \alpha \otimes {\text{L}}\mathop {\text{e}}\limits^{\prime } {\text{vy}}\left( \beta \right)$$(8)in which:

$${\text{L}}\mathop {\text{e}}\limits^{\prime } {\text{vy}}\left( \beta \right) = \frac{\mu }{{\left| v \right|^{{\left( {1/\beta } \right)}} }}$$(9)$$\mu \sim N\left(0,{\sigma }^{2}\right)$$(10)$$v\sim N\left(\text{0,1}\right)$$(11)$$\sigma ={\left\{\frac{\Gamma \left(1+\beta \right)\text{sin}\left(\frac{\pi \beta }{2}\right)}{\beta\Gamma \left(\frac{1+\beta }{2}\right){2}^{\frac{\beta -1}{2}}}\right\}}^{\frac{1}{\beta }}$$(12)where \({x}_{i}^{\left(g\right)}\) is the \({i}^{th}\) element of the \({g}^{th}\) generation in a solution; \({x}_{i}^{\left(g+1\right)}\) is the \({i}^{th}\) element of the \({\left(g+1\right)}^{th}\) generation in a solution; \(\alpha >0\) is the step size; generally, \(\alpha =1\); the product \(\otimes\) is entry-wise multiplications; \(\mu\), \(v\) are random values subject to normal distributions; \(\Gamma \left(z\right)\) is the Gamma function, and \(\Gamma \left(z\right)={\int }_{0}^{+\infty }{t}^{z-1}{e}^{-t}dt\). \(\beta\) is a constant value, and \(\beta =1.5\).

-

(4)

Creation of new solutions by random walk. When generating new solutions, the theoretical formula of random walk is shown in Eq. (13).

$${x}_{i}^{\left(g+1\right)}={x}_{i}^{\left(g\right)}+\alpha \otimes \text{H}\left({P}_{a}-\varepsilon \right)\otimes \left[{x}_{j}^{\left(g\right)}-{x}_{k}^{\left(g\right)}\right]$$(13)where \({x}_{j}^{\left(g\right)}\) and \({x}_{k}^{\left(g\right)}\) are two different solutions selected by random permutation at the \({g}^{th}\) generation; \({P}_{a}\) denotes the probability of being updated by the random walk. \(\text{H}\left(z\right)\) is a Heaviside function; \(\varepsilon\) is a random value sampled from a standard normal distribution.

-

(5)

Update of solutions by elite selection. After the operations of Lévy flight and random walk, new solutions are obtained. The two fitness values of each new solution and each old solution are compared in sequence. If the fitness value of the new solution is better than the old solution, the new solution replaces the old solution; otherwise, the old solution remains unchanged.

Pavement deterioration prediction model based on CS-BNN

The development of the proposed prediction model consists of three steps (Fig. 5). Firstly, the road pavement data are preprocessed in two steps: data cleaning and normalization. Road pavement data containing outliers (e.g., cases where the road surface is not be treated but the condition unexpectedly improves) or missing values are removed. The 5223 road pavement data mentioned before are the cleaned data. And the road pavement data are normalized using z-score method. The theoretical equation for the z-score method is shown in Eq. (14).

where \({x}_{k}^{*}\), \({x}_{k}\), \({\mu }_{k}\), \({\sigma }_{k}\) are normalized value, initial value, mean, standard deviation of the \({k}^{\text{th}}\) variable, respectively.

Then, the best initial solution (i.e., weights and biases for BNN) is searched by the CS algorithm. The specific steps are (a) randomly generating multiple sets of solutions (weights and biases), (b) maximizing the determination coefficient of the BNN during the training process as the optimization objective, and (c) searching for a set of optimal initial weight and bias values by CS.

Finally, the pavement deterioration prediction model is constructed based on BNN. Using the best initial solution and preprocessed data involving road pavement, the BNN model (which incorporates Monte Carlo dropout during both training and testing) is retrained to finally obtain an improved probabilistic prediction model for pavement deterioration.

Comparison of the proposed prediction model

To verify the superiority of the proposed prediction model, three comparison models were developed48. Constructing a BNN-based pavement deterioration prediction model as the first comparison model can visualize the advantages brought by the CS algorithm. Since existing studies indicate that GA37 and PSO36 have similar advantages to the CS algorithm, pavement deterioration prediction models based on GA-BNN and PSO-BNN are constructed as the second and third comparative models. The detailed implementation of the BNN, GA-BNN, and PSO-BNN is provided in ESM Appendix A to ensure transparency and fairness in the comparison.

Pavement deterioration prediction model based on BNN

In contrast to the proposed prediction model, the initial weights and biases of the BNN-based model are sampled from a truncated normal distribution instead of being obtained by the CS algorithm. The rest of the modeling steps are the same as the proposed prediction model.

Pavement deterioration prediction model based on GA-BNN

In contrast to the proposed prediction model, the GA-BNN model generates next-generation solutions through crossover and mutation operations, rather than Lévy flight and random walk. The rest of the modeling steps are the same as the proposed prediction model. The crossover and mutation operations in a GA are schematically shown in Fig. 6.

Pavement deterioration prediction model based on PSO-BNN

In the process of searching for the best solution, the PSO-BNN model generates the next generation of solutions by utilizing individual extremes and population extremes49, rather than Lévy flight and random walk. The rest of the modeling steps are the same as the proposed prediction model.

The theoretical expressions for generating next generation solutions are shown in Eqs. (15) and (16), and the processes are graphically illustrated in Fig. 7.

where \({x}_{i}^{k+1}\) and \({x}_{i}^{k}\) are the position vector of the \({i}^{th}\) solution in the \({\left(k+1\right)}^{th}\) and \({k}^{th}\) iteration; \({v}_{i}^{k+1}\) and \({v}_{i}^{k}\) are the velocity vector of the \({i}^{th}\) solution in the \({\left(k+1\right)}^{th}\) and \({k}^{th}\) iteration; \({p}_{i}^{k}\) represents the personal best position of the \({i}^{th}\) solution in the past \(k\) iterations; \({g}^{k}\) represents the global best position of all solutions in the past \(k\) iterations; \(\omega\) is an inertia coefficient; \({c}_{1}\) and \({c}_{2}\) are individual learning factors and group learning factors. \({r}_{1,i}^{k}\) and \({r}_{2,i}^{k}\) are random values in the interval \(\left[\text{0,1}\right]\) for the \({i}^{th}\) solution in the \({k}^{th}\) iteration.

Two model evaluation indicators

In this study, the determination coefficient (R2) and Standard Deviation (SD) are used as the primary metrics to evaluate the goodness-of-fit and stability of these prediction models, respectively. R2 was chosen because it effectively quantifies the proportion of variance in pavement condition data explained by these probabilistic models, providing a clear measure of predictive accuracy. SD was selected to assess the stability of model predictions, as it directly reflects the dispersion of outputs. However, it is important to acknowledge the limitations of these metrics. R2 does not account for the magnitude of prediction errors and can be influenced by outliers or model complexity. Similarly, SD may not fully capture the distribution of uncertainties, especially in non-symmetric data. Alternative metrics, such as root mean squared error and confidence intervals, could provide additional insights and are recommended for future studies. Despite these limitations, R2 and SD remain appropriate for this research objectives, as they align with common practices in pavement condition modeling and provide a robust basis for comparing model performance.

Theoretical formula for R250 is shown in Eq. (17).

where \(N\) is the total number of predicted or true values; \(n\) is the serial number of \(N\); \(M\) indicates the probabilistic prediction model is run \(M\) times for a set of input values; \(m\) is the serial number of \(M\); \({y}_{n}^{t}\) denotes the \({n}^{th}\) true value;\({y}^{a}\) is the average of all \({y}_{n}^{t}\) values; \({y}_{n,m}^{p}\) represents the \({n}^{th}\) predicted value in the \({m}^{th}\) run.

For a set of input values, the proposed probabilistic prediction model is run \(M\) times to obtain \(M\) output values, and then the standard deviation is computed. Finally, the mean of the \(N\) standard deviations is computed as the prediction stability. The formula50 for prediction stability is shown in Eq. (18).

Results and discussion

Selection of hyperparameters

For comparability, the hyperparameters of the BNN in the CS-BNN, BNN, GA-BNN and PSO-BNN models are the same. The hyperparameters include the number of hidden layers and neurons, optimizer, activation functions, learning rate, batch size, number of epochs, simulation times, dropout probability. Simulation times refer to the \(M\) in Eqs. (17) and (18). The dropout probability is the probability that each neuron in the hidden layer is randomly dropped out during training and testing processes. One hidden layer is chosen for this study because it can model almost all nonlinear relationships. The hyperparameters in Table 3 were determined through iterative experimentation. A range of values for each hyperparameter was tested, and those maximizing model performance were selected. Specifically, the learning rate was evaluated in the range of [0.01, 0.2], and the dropout probability was tested in the range of [0.1, 0.5]. The final values (learning rate = 0.1, dropout probability = 0.3) were chosen based on their ability to maximize the R2.

For the selection of coefficient values in the CS, GA and PSO algorithms, several sets of alternative values were initially selected by referring to previous studies36,44,45,46,51,52, and then the final coefficient values were determined by several attempts based on the principle of maximizing the R2.

In the three algorithms, the number of solutions during the iterative search was set to 50 and the number of iterative searches was set to 50. Each element (weight or bias) in solutions has a value in the range of \([-2, 2]\), corresponding to the initial weights and biases obtained by random sampling from a truncated normal distribution.

In the CS algorithm, the probability (\({P}_{a}\)) of the random walk is 0.25. In the GA algorithm, the probabilities of crossover and mutation are 0.9 and 0.1, respectively. In the PSO algorithm, the inertia coefficient \(\omega\) is 1; individual and group learning factors (\({c}_{1}\), \({c}_{2}\)) are both 1.5; and the range of velocity vector values (\({v}_{i}^{k}\)) is \([-0.2, 0.2]\).

Numerical results

Results of the proposed prediction model (CS-BNN)

With the objective of maximizing of the R2 obtained from the training data, the cuckoo algorithm performed 50 searches, and produced the results shown in Fig. 8. The maximum value of 0.772 is reached in the 24th search process, and the optimization result consistently remains at that value. This indicates that the number of iterative searches set to 50 is sufficient.

The corresponding searched initial weights and biases are shown in Table 4, totaling 145 values. The BNN model in this study has a total of 10 neurons in the input layer, 12 neurons in the hidden layer and 1 neuron in the output layer. Thus, the weights from the input layer to the hidden layer are 10*12 matrices and the biases are 12*1 matrices corresponding to the values of the 1st to 120th terms and the 121st to 132nd terms, respectively. The weights from the hidden layer to the output layer are 12*1 matrices and the bias is 1*1 matrix corresponding to the values of the 133rd to the 144th terms and the 145th term, respectively.

Based on the searched initial weights and biases, the BNN model was retrained using the training data. Then, the R2 and stability of the retrained model were computed using the testing data. The R2 and stability (SD) values are 0.778 and 1.806, respectively. The relationship between the true PCI values and the predicted mean values of the testing data is shown in Fig. 9. Moreover, the correlation coefficient between the true PCI values and the predicted mean values is calculated to be 0.8815. Figure 9 shows the better correlation coefficient between the predicted and true PCI values of the CS-BNN model, demonstrating its potential to provide reliable predictions for pavement management decisions.

Superiority of the proposed prediction model

In order to verify the superiority of the pavement deterioration prediction model based on CS-BNN, three comparison models were established. Using the same training data, three pavement deterioration prediction models based on BNN, GA-BNN and PSO-BNN were trained. Subsequently, the R2 and stability (SD) were computed using validation data. A larger R2 value means higher goodness-of-fit (predictive accuracy), while a smaller SD value indicates better stability. The relationship between the true PCI values and the predicted mean values from the GA-BNN, PSO-BNN, and BNN models is illustrated in Fig. 10. Correspondingly, the three correlation coefficients are calculated. The proposed CS-BNN model is compared with the three comparative models in terms of the R2, standard deviation, and correlation coefficient, as shown in Fig. 11. The larger R2 values and correlation coefficient values mean higher goodness-of-fit, while the smaller SD values indicate better stability.

According to Fig. 11, three conclusions can be obtained: (1) the goodness-of-fit and stability of CS-BNN, GA-BNN and PSO-BNN are better than that of the BNN model; (2) the goodness-of-fit and stability of the CS-BNN prediction model are better than the other three prediction models; and (3) as the goodness-of-fit decreases, the stability deteriorates roughly.

For the first conclusion, the reason behind it was explored. In contrast to the BNN model, the CS-BNN, GA-BNN and PSO-BNN models have an extra step of searching for initial weights and biases. To better help readers understand the reason for the first conclusion, the search variable was assumed to be one-dimensional, as shown in Fig. 12. If the extra step is ignored, the randomly generated initial weights and biases are most likely to be the initial point 1. Then, the BNN model will converge to the local minimum point 1. Based on CS, GA, and PSO, the initial point 2 can be searched with high probability. So, the BNN model will converge to the local minimum point 2. This results in the superiority of the CS-BNN, GA-BNN and PSO-BNN over the BNN model.

For the second conclusion, two reasons behind it were explored. The first reason is that CS has fewer parameters than GA and PSO45,46. The second reason is the exploratory moves based on global search (Lévy flight) and local search (random walk), which are more efficient for large search spaces. It is these two reasons that cause CS-BNN to outperform GA-BNN and PSO-BNN prediction models. In addition, the second conclusion is able to demonstrate the superiority of the proposed prediction model.

For the third conclusion, the mechanism behind it was also revealed. Data from two road sections were substituted into the trained CS-BNN model, and the computations were repeated 10 times. Then, the relationship between predicted and true values was plotted in Fig. 13. Due to the inability to calculate R2 for individual road sections, the Mean Square Error (MSE) was used to characterize the goodness-of-fit of individual road sections. The theoretical formula is shown in Eq. (19). The smaller the MSE value, the higher the goodness-of-fit. Comparing the relationship between the predicted and true values of road sections 1 and 2, it can be intuitively judged that when the goodness-of-fit is better, predicted values are closer to true values. In the meantime, the dispersion of predicted values decreases, and naturally the stability becomes better. On the contrary, the goodness-of-fit decreases, and the stability deteriorates roughly.

The superior goodness-of-fit and stability of the CS-BNN model have significant practical implications for pavement management. By providing more reliable predictions of pavement condition evolution, the model enables engineers to optimize maintenance timing by accurately identifying when interventions are needed, thereby preventing premature or delayed actions that could lead to higher costs or reduced pavement performance. Additionally, it allows for a better assessment of maintenance effectiveness by estimating the long-term impact of different strategies, supporting the selection of the most cost-effective solutions. Furthermore, the model improves budget planning by reducing uncertainty in cost estimations, enabling agencies to allocate resources more efficiently and prioritize high-impact projects. These benefits collectively demonstrate the potential of the CS-BNN model to enhance data-driven decision-making in pavement management.

Analysis of the proposed prediction model

As mentioned above, the CS algorithm has fewer parameters, i.e., one parameter—the probability (\({P}_{a}\)) of the random walk. In this study, \({P}_{a}\) had been taken to be 0.25. In order to analyze the effect of the \({P}_{a}\) on the proposed prediction model (CS-BNN), four additional had been selected: 0.05, 0.15, 0.35, and 0.45. Then, the goodness-of-fit and stability of the proposed prediction model under different \({P}_{a}\) values were computed separately, as shown in Table 5. It can be intuitively seen that the \({P}_{a}\) seems to have an insignificant effect on the goodness-of-fit and stability of the proposed model, which matches the findings of the previous study45.

Sensitivity analysis of the CS-BNN model

In order to verify the robustness of the CS-BNN model, small changes of -5% ~ + 5% were made to each numerical input variable, and the changes in the output values were observed, i.e., sensitivity analysis. In this case, the median of each input variable is used as the base case, and the predicted PCI values are shown in Fig. 14. The 0% in the horizontal coordinate indicates the selected median (base case) of input variables, and − 4%, − 2%, 2%, and 4% indicate a decrease of 4%, a decrease of 2%, an increase of 2%, an increase of 4%, respectively, on the basis of the base case. It can be seen that the predicted PCI values did not oscillate drastically when each of the 9 numerical input variables changed slightly, which can demonstrate the robustness of the CS-BNN model. In addition, the predicted PCI increase gradually as the PCI of previous year increases, suggesting that the PCI of previous year is relatively sensitive for the CS-BNN model compared to the other eight variables. The high sensitivity of the PCI of previous year can be attributed to its direct and strong relationship with the current pavement condition. Pavement performance is inherently time-dependent, and historical condition data provides a robust baseline for predicting future performance. In contrast, variables such as the total low temperature days have a more indirect impact, as their effects are often cumulative and long-term. The CS-BNN model prioritizes variables with a strong and direct relationship to the target variable, which explains why the PCI of previous year exhibits higher sensitivity. The high sensitivity of the PCI of previous year means that when collecting the PCI value, errors in that value should be minimized to improve the goodness-of-fit and stability of the CS-BNN prediction model.

Conclusions and future work

This study proposed an improved probabilistic prediction model for pavement deterioration based on CS-BNN, which demonstrated superior performance in terms of goodness-of-fit and stability compared with GA-BNN, PSO-BNN and BNN models. The major findings in this study can be summarized as follows.

-

1.

The pavement deterioration prediction model based on CS-BNN outperforms these based on GA-BNN, PSO-BNN and BNN in terms of the goodness-of-fit and stability.

-

2.

The goodness-of-fit and stability of pavement deterioration probabilistic prediction models are roughly positively correlated.

-

3.

The probability (\({P}_{a}\)) of random walk has an insignificant effect on the goodness-of-fit and stability of the proposed pavement deterioration prediction model.

The CS-BNN model’s superior performance in predicting pavement deterioration provides a more reliable tool for infrastructure managers. This improvement can lead to better decision-making in maintenance and rehabilitation scheduling, resource allocation, and long-term pavement management strategies. The positive correlation between the goodness-of-fit and the stability simplifies the model optimization process, as improving one metric simultaneously enhances the other, eliminating the need for trade-offs. The insensitivity to random walk probability simplifies the application of the CS-BNN model in practice. This reduces the complexity of model calibration and makes it more accessible for real-world implementation. The findings collectively contribute to advancing pavement management practices by providing a more accurate and robust tool for predicting pavement condition deterioration.

One of the key challenges in current pavement management practices is that existing prediction models often fail to account for uncertainties, leading to underestimation of maintenance costs and overestimation of post-maintenance performance. The proposed CS-BNN model addresses this issue by incorporating uncertainty quantification through its Bayesian framework, while simultaneously achieving superior goodness-of-fit and stability in predictions. This improvement enables more accurate estimation of required maintenance budgets and more realistic expectations of pavement performance after maintenance, ultimately supporting better decision-making for road engineers and infrastructure managers.

In future studies, it is worthwhile to extend the application of CS-BNN to other transportation infrastructures such as railroads, bridges and tunnels. Rail track degradation is influenced by factors such as dynamic loads, track geometry, and environmental conditions. The CS-BNN model could be adapted to predict rail track degradation, addressing challenges related to maintenance scheduling and safety. Bridge deterioration involves complex interactions between material aging, traffic loads, and environmental stressors. The CS-BNN model could be used to predict concrete cracking or steel corrosion, helping prioritize rehabilitation efforts and extend service life. Tunnel degradation often involves issues like lining cracks, water infiltration, and ground movement. The CS-BNN model could predict these defects, supporting proactive maintenance and reducing the risk of sudden failures.

Moreover, the CS algorithm can be coupled not only with BNN, but perhaps also with other deep learning algorithms to enhance the model performance, such as Long Short-Term Memory (LSTM), transformer, and so on. Pavement deterioration is a time-dependent process influenced by historical conditions (e.g., traffic loads, weather patterns). LSTM’s ability to capture temporal dependencies makes it ideal for modeling such sequential data, improving the accuracy of long-term predictions. Transformers excel at handling complex, non-linear relationships in data through their self-attention mechanisms. This capability is valuable for pavement deterioration prediction, where multiple factors (e.g., material properties, environmental conditions) must be considered simultaneously.

Due to insufficient years of modeling data, the current CS-BNN model can only predict PCI for the next year. To solve this problem, data from sufficient years should be collected as much as possible to develop a reliable multi-year PCI prediction model based on the CS-BNN theory in the future. Besides, there are 4 strategies to overcome the data limitations: synthetic data generation, transfer learning, data augmentation, collaborative data sharing. Techniques such as generative adversarial networks or Monte Carlo simulations can be used to generate synthetic pavement condition data, supplementing limited real-world data. Transfer learning can address data scarcity by leveraging pre-trained models from related domains (e.g., bridge or railroad deterioration) that have larger datasets. By fine-tuning these models on the available pavement data, the dependency on large amounts of pavement-specific data can be reduced while still achieving reliable predictions. Existing data can be augmented by introducing variations (e.g., noise, scaling) to simulate different conditions, increasing the diversity of the training dataset. Partnerships with other agencies or regions can facilitate the pooling of pavement condition data, expanding the dataset available.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Madanat, S. Optimal infrastructure management decisions under uncertainty. Transport. Res. Part C Emerg. Technol. 1(1), 77–88 (1993).

Prozzi, J. A. & Madanat, S. Development of pavement performance models by combining experimental and field data. J. Infrastruct. Syst. 10(1), 9–22 (2004).

Sadeghi, J., Najafabadi, E. R. & Kaboli, M. E. Development of degradation model for urban asphalt pavement. Int. J. Pavement Eng. 18(8), 659–667 (2017).

Lin, L. et al. A new FCM-XGBoost system for predicting Pavement Condition Index. Expert Syst. Appl. 249, 123696 (2024).

Chootinan, P., Chen, A., Horrocks, M. R. & Bolling, D. A multi-year pavement maintenance program using a stochastic simulation-based genetic algorithm approach. Transport. Res. Part A Policy Practice 40(9), 725–743 (2006).

W. Uddin. Pavement management systems. in The Handbook of Highway Engineering (T. F. Fwa, Ed.) (Taylor & Francis, 2006).

AASHTO. in Pavement Management Guide, 2nd edn. (American Association of State Highway and Transportation Officials, 2012).

Justo-Silva, R., Ferreira, A. & Flintsch, G. Review on machine learning techniques for developing pavement performance prediction models. Sustainability 13(9), 5248 (2021).

Alaswadko, N. & Hwayyis, K. An approach to investigate the supplementary inconsistency between time series data for predicting road pavement performance models. Int. J. Pavement Eng. 24(2), 2045017 (2023).

Perez-Acebo, H., Montes-Redondo, M., Appelt, A. & Findley, D. J. A simplified skid resistance predicting model for a freeway network to be used in a pavement management system. Int. J. Pavement Eng. 24(2), 2020266 (2023).

Li, N., Haas, R. & Xie, W.-C. Development of a new asphalt pavement performance prediction model. Can. J. Civ. Eng. 24(4), 547–559 (1997).

Hong, F. Asphalt pavement overlay service life reliability assessment based on non-destructive technologies. Struct. Infrastruct. Eng. 10(6), 767–776 (2014).

Abaza, K. A. Back-calculation of transition probabilities for Markovian-based pavement performance prediction models. Int. J. Pavement Eng. 17(3), 253–264 (2016).

Abaza, K. A. Simplified staged-homogenous Markov model for flexible pavement performance prediction. Road Mater. Pavement Design 17(2), 365–381 (2016).

Dong, Q., Chen, X., Dong, S. & Ni, F. Data analysis in pavement engineering: An overview. IEEE Trans. Intell. Transport. Syst. 23(11), 22020–22039 (2021).

Shehadeh, A., Alshboul, O. & Tamimi, M. Integrating climate change predictions into infrastructure degradation modelling using advanced Markovian frameworks to enhanced resilience. J. Environ. Manag. 368, 1222234 (2024).

Abaza, K. A. Empirical approach for estimating the pavement transition probabilities used in non-homogenous Markov chains. Int. J. Pavement Eng. 18(2), 128–137 (2017).

Ortiz-García, J. J., Costello, S. B. & Snaith, M. S. Derivation of transition probability matrices for pavement deterioration modeling. J. Transport. Eng. 132(2), 141–161 (2006).

Osorio-Lird, A., Chamorro, A., Videla, C., Tighe, S. & Torres-Machi, C. Application of Markov chains and Monte Carlo simulations for developing pavement performance models for urban network management. Struct. Infrastruct. Eng. 14(9), 1169–1181 (2018).

M. S. Yamany, D. M. Abraham, & S. Labi. Probabilistic modeling of pavement performance using Markov chains: A state-of-the-art review. in ASCE International Conference on Transportation & Development, Alexandria, Virginia, USA, 2019: American Society of Civil Engineers.

Kaloop, M. R., El-Badawy, S. M., Hu, J. W. & El-Hakim, R. T. A. International Roughness Index prediction for flexible pavements using novel machine learning techniques. Eng. Appl. Artif. Intell. 122, 106007 (2023).

Karballaeezadeh, N., GhasemzadehTehrani, H., MohammadzadehShadmehri, D. & Shamshirband, S. Estimation of flexible pavement structural capacity using machine learning techniques. Front. Struct. Civ. Eng. 14(5), 1083–1096 (2020).

Alonso-Solorzano, A., Perez-Acebo, H., Findley, D. J. & Gonzalo-Orden, H. Transition probability matrices for pavement deterioration modelling with variable duty cycle times. Int. J. Pavement Eng. 24(2), 2278694 (2023).

Ziari, H., Sobhani, J., Ayoubinejad, J. & Hartmann, T. Prediction of IRI in short and long terms for flexible pavements: ANN and GMDH methods. Int. J. Pavement Eng. 17(9), 776–788 (2016).

Choi, J. H., Adams, T. M. & Bahia, H. U. Pavement roughness modeling using back-propagation neural networks. Comput.-Aided Civ. Infrastruct. Eng. 19(4), 295–303 (2004).

Guo, F., Zhao, X., Gregory, J. & Kirchain, R. A weighted multi-output neural network model for the prediction of rigid pavement deterioration. Int. J. Pavement Eng. 23(8), 2631–2643 (2021).

X. Sun, H. Wang, & S. Mei. Explainable highway performance degradation prediction model based on LSTM. Adv. Eng. Inform. 61 (2024).

Han, C. et al. Asphalt pavement health prediction based on improved transformer network. IEEE Trans. Intell. Transport. Syst. 24(4), 4482–4493 (2023).

F. Xiao. Research on expressway asphalt pavement maintenance management and optimization decision-making. in Ph.D. dissertation, Department of Roadway Engineering, Southeast University, Nanjing, Jiangsu Province (2023).

Xiao, F., Chen, X., Cheng, J., Yang, S. & Ma, Y. Establishment of probabilistic prediction models for pavement deterioration based on Bayesian neural network. Int. J. Pavement Eng. 24(2), 1–16 (2023).

Yao, L., Leng, Z., Jiang, J. & Ni, F. Modelling of pavement performance evolution considering uncertainty and interpretability: A machine learning based framework. Int. J. Pavement Eng. 23(14), 5211–5226 (2021).

Song, Y., Wang, Y. D., Hu, X. & Liu, J. An efficient and explainable ensemble learning model for asphalt pavement condition prediction based on LTPP dataset. IEEE Trans. Intell. Transport. Syst. 23(11), 22084–22093 (2022).

Mizutani, D. & Yuan, X.-X. Infrastructure deterioration modeling with an inhomogeneous continuous time Markov chain: A latent state approach with analytic transition probabilities. Comput.-Aided Civ. Infrastruct. Eng. 38(13), 1727–1892 (2023).

Ehsani, M., Hamidian, P., Hajikarimi, P. & Nejad, F. M. Optimized prediction models for faulting failure of Jointed Plain concrete pavement using the metaheuristic optimization algorithms. Construct. Build. Mater. 364, 129948 (2023).

Wang, K. C. P. & Li, Q. Pavement smoothness prediction based on fuzzy and Gray theories. Comput.-Aided Civ. Infrastruct. Eng. 26(1), 69–76 (2011).

Deng, Y. & Shi, X. An accurate, reproducible and robust model to predict the rutting of asphalt pavement: Neural networks coupled with particle swarm optimization. IEEE Trans. Intell. Transport. Syst. 23(11), 22063–22072 (2022).

Karlaftis, A. G. & Badr, A. Predicting asphalt pavement crack initiation following rehabilitation treatments. Transport. Res. Part C Emerg. Technol. 55(SI), 510–517 (2015).

Sun, Z., Hao, X., Li, W., Huyan, J. & Sun, H. Asphalt pavement friction coefficient prediction method based on genetic-algorithm-improved neural network (GAI-NN) model. Can. J. Civ. Eng. 49(1), 109–120 (2022).

Askari, A., Hajikarimi, P., Ehsani, M. & Nejad, F. M. Prediction of rutting deterioration in flexible pavements using artificial neural network and genetic algorithm. AMIRKABIR J. Civ. Eng. 54(9), 3581–3602 (2022).

Karballaeezadeh, N. et al. Prediction of remaining service life of pavement using an optimized support vector machine (case study of Semnan-Firuzkuh road). Eng. Appl. Comput. Fluid Mech. 13(1), 188–198 (2019).

Alnaqbi, A., Al-Khateeb, G. & Zeiada, W. A hybrid approach of support vector regression with genetic algorithm optimization for predicting spalling in continuously reinforced concrete pavement. J. Build. Pathol. Rehabilit. 9, 1–17 (2024).

Mazari, M. & Rodriguez, D. D. Prediction of pavement roughness using a hybrid gene expression programming-neural network technique. J. Traffic Transport. Eng. (Engl. Edn.) 3(5), 448–455 (2016).

Alatoom, Y. I. & Al-Suleiman, T. I. Development of pavement roughness models using Artificial Neural Network (ANN). Int. J. Pavement Eng. 23(13), 4622–4637 (2022).

X.-S. Yang & S. Deb. Cuckoo search via levy flights. in Presented at the World Congress on Nature and Biologically Inspired Computing, Coimbatore, INDIA (2009).

Yang, X.-S. & Deb, S. Engineering optimisation by cuckoo search. Int. J. Math. Modell. Numer. Optimisation 1(4), 330–343 (2010).

Xiao, F. et al. A binary cuckoo search for combinatorial optimization in multiyear pavement maintenance programs. Adv. Civ. Eng. 2020, 8851325 (2020).

S. Ryu, Y. Kwon, & W. Y. Kim. Uncertainty quantification of molecular property prediction with Bayesian neural networks. p. arXiv:1903.08375. Accessed on: March 01, 2022. https://ui.adsabs.harvard.edu/abs/2019arXiv190308375R

Guo, W. et al. A new method for solving parameter mutation analysis in periodic structure bandgap calculation. Eur. J. Mech. A/Solids 111(2025), 105572 (2025).

Shehadeh, A., Alshboul, O., Al-Shboul, K. F. & Tatari, O. An expert system for highway construction: Multi-objective optimization using enhanced particle swarm for optimal equipment management. Expert Syst. Appl. 249(Part B), 123621 (2024).

D. C. Montgomery & G. C. Runger. in Applied Statistics and Probability for Engineers, 5th Edn. (Wiley, 2010).

Chan, W. T., Fwa, T. F. & Tan, C. Y. Road-maintenance planning using genetic algorithms. I: Formulation. J. Transport. Eng. 120(5), 693–709 (1994).

Fwa, T., Chan, W. & Tan, C. Genetic-algorithm programming of road maintenance and rehabilitation. J. Transport. Eng. 122(3), 246–253 (1996).

Funding

This work was supported by the Science and Technology Project of Jiangxi Provincial Department of Transportation under Grant No. 2024QN009, and in part by the National Natural Science Foundation of China under Grant 52268068, and the two Key Projects for Scientific and Technological Cooperation Scheme of Jiangxi Province under Grants 20212BDH80022 and 20223BBH80002.

Author information

Authors and Affiliations

Contributions

Feng Xiao and Biying Shi conceived the study and designed the framework. Feng Xiao and Di Yang developed the methodology and supervised data collection. Feng Xiao and Jie Gao analyzed the data and contributed to result interpretation. Feng Xiao wrote the initial draft and incorporated key findings. Jie Gao and Huapeng Chen reviewed and edited the manuscript for language and style, ensuring compliance with journal guidelines. All authors participated in discussions, revised the work, and approved the final version, with each making unique and essential contributions to the research’s success.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xiao, F., Shi, B., Gao, J. et al. Enhanced probabilistic prediction of pavement deterioration using Bayesian neural networks and cuckoo search optimization. Sci Rep 15, 8665 (2025). https://doi.org/10.1038/s41598-025-92469-9

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-92469-9