Abstract

This research work proposes a Distributed Blockchain-Assisted Secure Data Aggregation (Block-DSD) technique for MANETs that targets high security and energy efficiency in disaster management scenarios. A Zone-based Clustering Approach (ZCA) is employed to segment the network into secure zones, with optimal Cluster Heads (CHs) selected using the Artificial Neuro-Fuzzy Inference System (ANFIS). Data aggregation is secured through a Secure Two-Step (STS) method and Elliptic Curve Cryptography (ECC), while optimal routing is achieved using the Improved Elephant Herd Optimization (IEHO) algorithm. Simulations using ns-3.25 demonstrate a 97% Packet Delivery Ratio (PDR), 20% lower energy consumption than existing methods, and a latency of 0.0012 s for emergency data, validating the proposed framework’s efficiency and robustness in dynamic MANET environments.

Introduction

The evolution of wireless communication technologies such as Wi-Fi and radio networks has enabled numerous applications across diverse fields. However, traditional network infrastructures with centralized Base Stations (BSs) face significant challenges related to scalability, flexibility, and reliability. Mobile Ad-hoc Networks (MANETs) have emerged as a promising solution, offering decentralized, dynamic, and self-configuring networks composed of mobile nodes that communicate without fixed infrastructure1. MANETs are extensively utilized in critical applications such as disaster management, military operations, and environmental monitoring due to their rapid deployment and flexibility2. Despite their advantages, MANETs face several challenges, including dynamic topologies, variable link capacities, energy constraints, and security vulnerabilities. The absence of a centralized control system leads to frequent topology changes as nodes move arbitrarily, resulting in unstable links and high packet loss rates3. Additionally, limited battery life and energy consumption remain critical concerns, as most mobile nodes rely on battery power, making energy-efficient operations essential for prolonged network lifetime4. Furthermore, the open and dynamic nature of MANETs exposes them to numerous security threats such as eavesdropping, spoofing, and Denial-of-Service (DoS) attacks, compromising data integrity and confidentiality5. While several routing protocols such as Dynamic Source Routing (DSR) and Ad-hoc On-Demand Distance Vector (AODV) have been proposed to address routing challenges, they often fall short in ensuring secure and energy-efficient data transmission, particularly in high-mobility and resource-constrained environments6. Traditional security mechanisms also face limitations due to the decentralized and dynamic nature of MANETs, necessitating robust and scalable solutions.

Blockchain technology offers a promising solution to these challenges by providing decentralized, tamper-proof data management with enhanced security through cryptographic techniques7. However, integrating blockchain into MANETs introduces additional challenges such as scalability, latency, and computational overhead. Existing blockchain-based solutions often suffer from high energy consumption and delayed data processing, making them unsuitable for resource-constrained MANET environments8.

To address these challenges, this study proposes a Distributed Blockchain-Assisted Secure Data Aggregation (Block-DSD) technique. The Block-DSD framework enhances data security through blockchain-based authentication and encryption while optimizing energy consumption using an Improved Elephant Herd Optimization (IEHO) algorithm for optimal route selection. The key motivation behind this research is to develop a secure, energy-efficient, and scalable data aggregation framework suitable for dynamic and adversarial MANET environments, particularly in disaster management scenarios where timely and reliable data transmission is critical9. The proposed Block-DSD technique divides the network into multiple secure zones, each managed by an Artificial Neuro-Fuzzy Inference System (ANFIS)-based Cluster Head (CH) selection mechanism. Data aggregation is performed using a Secure Two-Step (STS) method, ensuring accurate separation of emergency and routine data with minimal delay. The IEHO algorithm dynamically selects optimal routes based on energy levels, trust values, and congestion metrics, significantly improving Packet Delivery Ratio (PDR), throughput, and energy efficiency compared to existing methods10.

This research contributes to the existing literature by offering a novel blockchain-based data aggregation framework that ensures high security and energy efficiency while addressing the scalability and latency challenges in MANETs. The proposed Block-DSD framework demonstrates superior performance in simulated environments, making it a suitable solution for critical applications such as disaster response, military communications, and remote environmental monitoring11.

Challenges in MANET

Dynamic topologies: Since nodes are unrestricted in their movement, the topology of the network can alter abruptly and unpredictably. Bidirectional links make up the majority of these topologies6. When the transmission power is low, a unidirectional link may form between the two nodes.

Links with variable capacity and bandwidth restrictions: Wireless links continue to have significantly lower capacity than their infrastructure (hardwired) counterparts.

Operation with a restricted supply of energy: some or all of the mobile nodes in a MANET may rely on batteries or other exhaustible energy sources. For these nodes, energy conservation may be the most important system design optimization criterion.

Weak physical security: MANETs are generally more susceptible to physical security threats than wireline networks. The increased likelihood of eavesdropping, spoofing, and Denial-of-Service (DoS) attacks must be carefully considered7. To allay security concerns, wireless networks usually employ a number of existing link security methods. These difficulties are inherent to MANETs and must be overcome.

Applications of MANET

Because a MANET is a self-contained system, it can be used in many application areas. One specific application of ad hoc networks in industry and commerce is cooperative mobile data exchange. Military networking also requires trustworthy, IP-compliant data services, and many present-day and future mobile wireless communication networks are composed of highly dynamic, autonomous topology segments8. MANETs have opened up new possibilities for applications with sophisticated features, including worldwide roaming, data rates suitable for multimedia applications, and interoperation with various network designs. Defense applications: since many military applications require on-the-fly communication, ad hoc or sensor networks are excellent options for battlefield management. Figure 1 shows a MANET for a disaster management system.

To perform an emergency intervention on a vehicle accident victim in a remote location, a paramedic may need to consult a surgeon via video conference and access medical records. The paramedic may also need to transmit the victim’s X-rays and the results of other diagnostic tests directly from the accident scene to the hospital. The combination of Global Positioning System (GPS), Geographic Information System (GIS), and high-capacity wireless mobile devices enables such “tele-geoprocessing” applications.

Virtual navigation: A remote database holds a graphical representation of the physical elements of a large city, such as its streets and buildings. Users might also be able to “virtually” inspect a building’s interior layout, including its emergency evacuation plan, or locate specific points of interest.

Internet-based education: Educational opportunities can be made available online in remote or outlying areas, where it is economically impractical to offer all clients expensive last-mile wire-line internet connectivity.

Cloud Rescue Team: A growing and extremely useful application of ad hoc networks, referred to as the vehicle area network, supports the provision of rescue services and other information. It is effective in both urban and rural contexts and provides the essential, practical data exchange needed in such situations.

Blockchain network

Blockchain technology is becoming more widely known because of its exceptionally high degree of security. The technology was introduced in 2008 with Bitcoin. Owing to its strong security and decentralized control, it has been used for many types of financial transactions. Put simply, pending transactions are collected in a transaction pool9. Numerous nodes then compete to construct blocks of unconfirmed transactions in a mining process, which generates a proof-of-work (PoW) showing that a block has been created, acknowledged, and chained onto the current blockchain. A fresh block can only be connected when a miner finds a nonce that yields a valid hash value, which makes it hard to change the data within a block once it has been connected into the chain. The hash values that link the most recent block to the prior block depend on this nonce.
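The mining and chaining steps described above can be illustrated with a minimal sketch. It is not the Bitcoin protocol or the ledger used in this work: the block fields, the JSON serialization, the function names, and the difficulty rule (a fixed number of leading zero hex digits) are assumptions chosen only to show why altering a chained block is detectable.

```python
import hashlib
import json


def block_hash(index, prev_hash, transactions, nonce):
    """SHA-256 digest over the block contents, including the nonce."""
    payload = json.dumps(
        {"index": index, "prev": prev_hash, "tx": transactions, "nonce": nonce},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def mine_block(index, prev_hash, transactions, difficulty=3):
    """Try nonces until the digest starts with `difficulty` zero hex digits."""
    target = "0" * difficulty
    nonce = 0
    while True:
        digest = block_hash(index, prev_hash, transactions, nonce)
        if digest.startswith(target):
            return {"index": index, "prev": prev_hash, "tx": transactions,
                    "nonce": nonce, "hash": digest}
        nonce += 1


def chain_is_valid(chain, difficulty=3):
    """Recompute every hash and check each block's link to its predecessor."""
    for i, block in enumerate(chain):
        expected = block_hash(block["index"], block["prev"],
                              block["tx"], block["nonce"])
        if block["hash"] != expected or not expected.startswith("0" * difficulty):
            return False
        if i > 0 and block["prev"] != chain[i - 1]["hash"]:
            return False
    return True


# Hypothetical two-block chain: each block stores the hash of the previous one.
genesis = mine_block(0, "0" * 64, ["genesis"])
second = mine_block(1, genesis["hash"], ["node A -> node B: sensor reading"])
chain = [genesis, second]
```

Changing any transaction in `chain[0]` after mining makes the recomputed digest differ from the stored one, so `chain_is_valid` rejects the chain unless the nonce search is repeated for that block and every block after it.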

Figure 2 illustrates the structure of the blockchain network. Because of its security, blockchain technology has been thoroughly researched for potential applications in numerous sectors. A recent study on trust management for wireless and ad hoc network security, for example, introduced blockchain for this purpose.

Research contribution

The primary goal of this research work is to ensure high levels of security in MANETs for disaster management. To that end, this study makes the following contributions:

An innovative Block-DSD scheme is developed for disaster applications. The network as a whole is divided into several secure zones, and each zone is further divided into several clusters. Using the ANFIS, the optimal CH for each cluster is chosen based on key metrics.

With the use of blockchain, data aggregation (DA) is performed securely by verifying the source of the data. The STS aggregation approach is then used to classify the data as either emergency or routine, and nearby nodes are informed of any emergency data.

The Improved Elephant Herd Optimization (IEHO) algorithm, which applies genetic operators to expand the search area of elephant herd optimization, is used to choose the best route.

Background

IoT-based cloud (IoTBC) and cloud-assisted IoT (CAIoT) are two terms that have emerged in recent years from the strong mutual impact of cloud computing and the IoT. Although both are worth investigating, one survey focuses on CAIoT, and particularly on the security challenges it inherits from both cloud computing and the IoT10. The survey begins by analyzing relevant earlier surveys and highlighting their shortcomings, which motivates a thorough survey of the field. It then discusses current strategies for designing Secure CAIoT (SCAIoT), along with the associated security risks and safeguards, and presents a layered architecture for SCAIoT. Finally, it considers the potential future of SCAIoT with an emphasis on the role of AI.

A Wireless Sensor Network (WSN) is built from a large number of sensors dispersed across a geographical area. Network lifetime and low energy use are the primary difficulties in constructing a WSN. Because sensor nodes have inherently limited resources, new methods are needed to extend the lifespan of the nodes11. For sensor nodes that run on batteries, energy is a crucial resource, and it is consumed primarily during data transfers between network nodes. Numerous studies aim to extend network lifetime and conserve node energy. In addition, such networks are exposed to attacks like vampire attacks, which flood the network with bogus traffic.

A thematic analysis identifies eight themes of Distributed Ledger Technology (DLT) and smart contract applications in the construction industry, including handling data, settlements, buying, rules, building administration and shipping, resolution of disputes, and technological systems12. Each theme was examined to understand present capabilities, applications, and potential future developments. A cross-theme discussion found that the investigation of DLT in construction is quickly transitioning toward the development of proof-of-concept studies and their evaluation in case studies. The upcoming phase of research, which is expected to involve larger educational institutions and industry participation, should begin to reveal the anticipated advantages of DLT and smart contracts, with verification in real-world studies.

The IoT is an emerging technology for building numerous crucial applications; however, it still relies on a centralized storage architecture and faces significant difficulties, including single points of failure, security, and privacy. Blockchain technology now plays an important role in the development of IoT-based applications because it can address problems with single points of failure, privacy, and security in IoT systems. Both individuals and society can benefit from the combination of blockchain and IoT. Machine Learning (ML) additionally makes big data analysis fully autonomous13.

Owing to their widespread use in monitoring hostile environments and in essential security and surveillance applications, WSNs have drawn a lot of attention with the growth of wireless terminals. Sensor nodes are mostly powered by non-rechargeable batteries, so researchers have become interested in maximizing the lifespan of these nodes. Energy-efficient operation has emerged as a viable area of research for addressing the issues associated with WSNs of limited battery capacity. One research effort implements zone-based clustering using a fuzzy-logic technique for dynamic Cluster Head (CH) selection, seeking to fix the issue of uneven energy consumption among the CHs in the network14. Because sensor nodes in a WSN have little energy available, an energy-efficient (EE) clustering approach is imperative. The suggested method is an energy-efficient clustering method for WSNs that also performs well over large areas. One Zone Monitor (ZM) is chosen in each zone. The distance between the ZM and the BS is taken into account when determining the desired number of CHs in a zone, so that unequal clustering is achieved. The CHs are chosen so as to conserve the energy that sensor nodes need for intra-cluster transmission.

A WSN is a low-powered network of sensor nodes with applications in a variety of fields, including the military, the civilian sector, and visual sense models. Improving the longevity of these sensor networks is a crucial task, which has been addressed in work on the HEED and LEACH algorithms.

Ecological correlation is now a commonly understood topic, although in-depth examinations of how aggregation affects correlation and regression coefficients are uncommon. After a brief explanation of the aggregation problem, one investigation examines the specific impact of proximity aggregation on the slope parameter of a multivariate linear model using data from the Local Area Multicomputer (LAM) area. According to that research, variations in the slope coefficient are most closely connected to how the relationships among the dependent and independent variables alter as aggregation increases.

Different data aggregation algorithms need different amounts of energy, and the choice of method depends on the application requirements and the relative energy savings realized. Because different sensor nodes frequently detect the same events, the data usually contains some redundant information. One study compares various hierarchical clustering algorithms and explains the significance of data collection.

The use of WSNs for different IoT applications is gaining popularity. In light of the enormous expansion of smart items and applications, the requirement to gather and evaluate the data they produce is emerging as one of the major issues15. Since batteries power sensor nodes, energy-efficient operations are essential. To that end, a sensor node should remove redundancies in the data it has received from its nearby neighbors before sending the information to the central station. Data aggregation is one of the key methods for reducing data redundancy, increasing energy efficiency, and extending the life of a WSN17. An effective data aggregation protocol can also lessen network traffic. More than one sensor may detect a specific target when it appears at a given location. One review surveys several data aggregation approaches and protocols, taking into account the key issues and difficulties that arise in WSNs. Its main focus is to lay the foundations for new, innovative designs that build on previously proposed data integration methods and clusters. It presents key data integration approaches for WSNs, including terrestrial sensor networks, and discusses the applications, benefits, and drawbacks of each technique.

One study presents the findings of an exploratory examination of recent research on FinTech activities. Data from earlier studies by Deloitte, Finance News, and PwC were collected and replicated, and analyses and estimates were made regarding digital data aggregation, evaluation, and infrastructure for cutting-edge, technology-enabled financial services. Structural equation modeling was used to compare data gathered from 5,200 respondents against the study model16.

Moreover, ref. 18 explores the Ethereum blockchain for secure data exchange among mobile sensors, focusing on resource management. The present work likewise uses blockchain for secure data aggregation in MANETs, so that study is highly relevant: it demonstrates how blockchain can be applied in distributed, resource-constrained environments similar to MANETs. The study in ref. 19 combines AI and blockchain to secure IoT data. The framework proposed here for secure data aggregation in MANETs aligns with it by using blockchain for decentralized control and data integrity, and that study offers insights into leveraging blockchain’s transparency and immutability that support the blockchain-based secure data transmission approach adopted here. The authors of ref. 20 describe secure sensing and transaction mechanisms with which the Secure Two-Step (STS) aggregation proposed here aligns. Both works emphasize blockchain’s role in authenticating data, ensuring confidentiality, and reducing data tampering risks in distributed environments. Ref. 21 proposes a blockchain network to resist side-channel attacks in mobility-based IoT, which closely relates to the present emphasis on securing MANETs against attacker nodes; it provides insights into the cryptographic properties of blockchain that enhance data security in mobile and decentralized networks. The work in ref. 22 targets adversarial nodes in MANETs, and its use of game theory for modeling and mitigating node misbehavior offers a complementary perspective. Incorporating game-theoretical methods can enrich the present framework for handling malicious nodes and enhancing trust mechanisms.

The review in ref. 23 provides a deep technical background on blockchain technology, including its cryptographic mechanisms, consensus protocols, and data validation strategies, and establishes the theoretical foundations for the blockchain-assisted data aggregation scheme proposed here. Further, ref. 24 discusses how game theory can model and mitigate multi-collusion attacks in MANETs. The present work, which focuses on dynamic and secure clustering, can benefit from these methods to address malicious node behavior and improve resilience against coordinated attacks. Ref. 25 presents a multi-agent approach for intrusion detection, emphasizing decentralized and adaptive solutions. This aligns with the use of distributed clustering and trust evaluation to secure data transmission in MANETs in the present work. A further work discusses the security challenges in MANETs, including vulnerabilities and attacks. It reviews various attacks and countermeasures, offering a comprehensive context for benchmarking the proposed blockchain-assisted security mechanisms.

The proposed Block-DSD and DBlock-Auth techniques introduce several advancements over existing blockchain-based security frameworks for MANETs. A comparative evaluation highlights that Block-DSD achieves a 97% Packet Delivery Ratio (PDR), which is 10–15% higher than existing frameworks such as Lightweight Blockchain-Assisted Intrusion Detection Systems (LBIDS) and Secure Blockchain Routing (SBR). Block-DSD consumes 20% less energy due to its optimized routing via IEHO, compared to AODV-Blockchain and B-DSR frameworks. Additionally, DBlock-Auth ensures rapid authentication with an average latency of 0.0012 s for emergency data, outperforming traditional blockchain-based schemes that often face delays due to complex consensus mechanisms. This enhanced performance stems from the zone-based clustering and lightweight elliptic curve cryptography employed in the proposed approach, ensuring scalability and efficiency in highly dynamic MANET environments.

Proposed work

Network system

The proposed network is envisioned as an integrated network that fuses a distributed blockchain network with a MANET. The MANET area is divided into various zones, and each zone is further divided into a number of clusters. The entire network is made up of mobile nodes that are free to move about the network, each with a different level of mobility. The network assumptions are as follows:

1. Nodes are mobile and can move around the network.

2. There is a fixed number of zones and a variable number of clusters within each zone. The number of clusters within each zone varies depending on the mobility in that zone.

3. The network includes a significant number of hostile or attacking nodes, which may vary depending on the scale and intensity of the attack. These nodes can potentially disrupt the network’s operations and compromise its security.

4. The attacker may be visible within the network or invisible to it.

Figure 3 shows the overall network architecture of the proposed network model. As shown in the figure, each transaction sent via the network’s infrastructure is hashed and verified by the blockchain network. The complete design is planned with disaster management in mind.

The proposed Block-DSD framework ensures blockchain scalability in large-scale MANETs through the implementation of lightweight consensus mechanisms like Proof-of-Authority (PoA), which minimizes computational complexity and reduces the overhead typically associated with Proof-of-Work (PoW). The segmentation of the network into multiple zones allows each zone to operate semi-independently, thereby distributing the transaction load and improving scalability. Latency is mitigated by asynchronous data verification across zones, enabling concurrent transaction validation. The optimized hashing mechanism accelerates transaction processing, ensuring minimal delays even under high transaction volumes. Simulation results demonstrate an emergency data latency of 0.0012 s and routine data latency of 0.02 s, highlighting the efficiency of the proposed approach. Additionally, the use of elliptic curve cryptography (ECC) ensures secure and rapid data processing, further enhancing the blockchain network’s performance in dynamic MANET environments.

Zone formation

The network is regarded as a zone-based network to facilitate network communications. There are several zones in the entire network. The procedure for calculating k is as follows:

Here, r denotes the radius of the network region. The calculation depends on the total number of nodes, so more zones are formed when the number of nodes is high. Figure 4 shows the zone generation.

The zone generation process has the following benefits:

First, data transmission is simple.

Dissemination of emergency data will be straightforward, and redundancy may be decreased.

Data transfer and route discovery will use the least amount of energy possible.

Needless requests for cluster formation are reduced, thus increasing throughput.

The zones are created in the network with the aforementioned benefits.

Cluster formation & CH selection

Each zone is further divided into multiple clusters. Because clusters are created after the zones, every cluster is guaranteed to contain only adjacent nodes, meaning that nodes from different zones cannot be clustered together. Clusters are generated by calculating a clustering score, a composite measure that combines several metrics into a single number. The metrics are as follows.

Euclidean Distance: This metric is defined as the Euclidean distance between two nodes. The following can be used to calculate it:

For two nodes to take part in the same cluster, this metric ought to be low.

Node Degree: This metric counts how many nodes are linked to both nodes. It must be high for the two nodes to join the same cluster.

Mobility Difference (MD): This is the difference between the mobility levels of the two nodes. It can be calculated as follows:

\(\:MD\) is the difference in mobility levels between nodes i and j, representing variations in their movement patterns.

If this parameter is low, cluster formation is likely to occur. Using these three metrics for two nodes, say i and j, the score is calculated using the equation above, where the scoring factors are selected from a range. All neighboring nodes perform these computations to decide whether to create a cluster.

If the score is high, a cluster forms between the two nodes. The cluster formation (CF) procedure is encapsulated in Algorithm 3.1. At the conclusion of the CF procedure, each zone will have a number of clusters. The next stage is the choice of the best CH for each cluster. The IS is calculated on the basis of the measures.

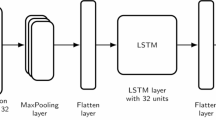
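As a rough illustration of how the three metrics could be combined, the sketch below computes a pairwise clustering score. The weights, the normalization constants (`radio_range`, `max_degree`, `max_speed`), and the weighted-sum form are assumptions made for this example; the score equation and the range of the scoring factors used in this work are not reproduced here.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: int
    x: float
    y: float
    speed: float                               # mobility level (assumed in m/s)
    neighbours: set = field(default_factory=set)


def euclidean_distance(a, b):
    return math.hypot(a.x - b.x, a.y - b.y)


def common_degree(a, b):
    """Number of nodes linked to both a and b."""
    return len(a.neighbours & b.neighbours)


def mobility_difference(a, b):
    return abs(a.speed - b.speed)


def clustering_score(a, b, radio_range=100.0, max_degree=10, max_speed=20.0,
                     weights=(0.4, 0.3, 0.3)):
    """Weighted sum of three normalized terms; higher favours a shared cluster.

    The result lies in [0, 1] when the (assumed) weights sum to 1.
    """
    w_ed, w_nd, w_md = weights
    closeness = 1.0 - min(euclidean_distance(a, b) / radio_range, 1.0)
    connectivity = min(common_degree(a, b) / max_degree, 1.0)
    similarity = 1.0 - min(mobility_difference(a, b) / max_speed, 1.0)
    return w_ed * closeness + w_nd * connectivity + w_md * similarity


# Hypothetical nodes: n1 and n2 are close, share neighbours, and move alike.
n1 = Node(1, 0.0, 0.0, speed=2.0, neighbours={3, 4, 5})
n2 = Node(2, 30.0, 40.0, speed=3.0, neighbours={3, 4, 6})
n3 = Node(7, 90.0, 0.0, speed=18.0, neighbours={8})
```

Under these assumed weights, the pair (n1, n2) scores higher than (n1, n3), matching the stated preference for small distance, high shared degree, and small mobility difference.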

The ANFIS algorithm is used for the best CH selection. ANFIS is a more sophisticated form of fuzzy inference that combines a fuzzy algorithm with an Artificial Neural Network (ANN). A fuzzy system generally has four major components:

The fuzzification interface modifies the inputs and converts them into suitable linguistic values so that they can be compared with the rules in the rule base.

Rule base: knowledge of how best to control the system is expressed as a set of established rules.

The inference system evaluates which control rules are currently relevant and determines what the input to the plant should be.

The defuzzification interface converts the findings of the inference system into crisp outputs.

Similarly, an ANN is made up of three layers: the input layer, the hidden layer, and the output layer. In the ANFIS algorithm, the input layer is in charge of transforming normal (crisp) data into fuzzy values within a fixed range. The hidden layers apply the fuzzy rules, drawing on the existing rule base. In the output layer, the fuzzy values are transformed back into normal values. In this study, the ANFIS algorithm is employed to pick the CH. Three key metrics are taken into account when choosing a CH: the centrality factor (CF), the residual energy level, and the trust value (TV). Table 1 displays the rules created by the inference engine.

The centrality factor indicates how tightly a candidate node is connected to its neighbours. In other words, it reflects the median distance between the candidate node and the nodes around it.

Pseudocode for the ANFIS model is presented below.

Algorithm 1: ANFIS-based CH Selection.

CF is calculated using the following formula:

CF(i) is a measure of how centrally located node i is within its cluster, indicating its suitability for Cluster Head selection. The residual energy level is the difference between the energy level at the beginning and the amount of energy used over time. As the CH is in charge of data collection and aggregation, a node with a higher score is more likely to hold the CH position.

The trust value (TV) reflects the node’s behavior. For all nodes that have been recorded in the blockchain, the TV is initially set to 100. The TV then increases or decreases depending on how the node behaves, and the blockchain is updated every time the TV changes.
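The bookkeeping described here can be sketched as an append-only, hash-chained log of TV updates. The class name, the record fields, the size of each increment or decrement, and the lower bound of 0 are assumptions for illustration; only the initial value of 100 and the rule that every change is written to the ledger come from the text.

```python
import hashlib
import json

INITIAL_TRUST = 100  # starting TV for every registered node, as stated in the text


class TrustLedger:
    """Append-only, hash-chained log of trust value (TV) updates."""

    def __init__(self):
        self.blocks = []   # each entry links to the hash of the previous one
        self.trust = {}    # latest TV per node, derived from the log

    def _append(self, record):
        prev_hash = self.blocks[-1]["hash"] if self.blocks else "0" * 64
        body = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        self.blocks.append({"prev": prev_hash, "record": record, "hash": digest})

    def register(self, node_id):
        self.trust[node_id] = INITIAL_TRUST
        self._append({"node": node_id, "tv": INITIAL_TRUST, "event": "register"})

    def update(self, node_id, delta, event):
        """Raise or lower a node's TV and record the change on the chain."""
        new_tv = max(0, self.trust[node_id] + delta)   # assumed floor of 0
        self.trust[node_id] = new_tv
        self._append({"node": node_id, "tv": new_tv, "event": event})
        return new_tv


# Hypothetical behaviour history for node 5.
ledger = TrustLedger()
ledger.register(5)
ledger.update(5, -10, "dropped packet")
ledger.update(5, 5, "forwarded packet")
```

Because every update appends a new entry rather than overwriting the old one, the full behaviour history of a node stays available for later validation.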

The best CH is chosen according to these three values using the following rule:

Figure 5 illustrates the overall cluster formation and CH selection.

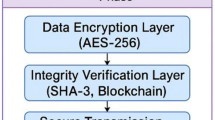

Secure Two-Step data aggregation

Data aggregation is the next stage after cluster formation. In disaster applications, as in many others, emergency data may be generated at any time. The Secure Two-Step (STS) data aggregation process introduces a novel method to differentiate and handle emergency data efficiently. The Modified Packet Format (MPF) includes an additional urgent flag field that distinguishes emergency data from normal data. When a data packet is generated, the flag is set to ‘1’ for emergency data and ‘0’ for normal data. Upon receiving a packet, the Cluster Head (CH) checks this flag to categorize the data. The blockchain verifies the validity of emergency data by cross-referencing the current state of the sender node with its historical data stored on the blockchain. If the node’s behavior aligns with its historical trust value and no anomalies are detected, the data is considered valid. This verification prevents malicious nodes from falsely generating emergency data to exploit network resources. The use of blockchain ensures an immutable record of each node’s behavior, enhancing the reliability of emergency data transmission. Emergency information must be distributed to all nodes so that every node is warned. At the same time, attacker nodes may intentionally trigger emergency data to use up network bandwidth, which is why this validation matters.

Emergency Data Detection: The CH divides the data it receives into two categories, normal and emergency, using the MPF. Here, the standard packet format for MANET data transfer is modified to contain the urgent flag field, as depicted in Fig. 6.

If the flag is set to 0, the data is normal; if it is set to 1, the data is urgent. In this way, the CH separates data packets into normal and emergency categories. If the data is urgent, the blockchain verifies the reliability of the source that sent it, taking the validation factor into account. If the present state of the node equals the stored value, the node is deemed genuine and the data is sent to the other nodes in the zone. Otherwise, the data is dropped.
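The flag check and validation step can be sketched as below. The `Packet` fields and the two state dictionaries are hypothetical stand-ins for the MPF layout of Fig. 6 and for the blockchain lookup, which are not specified in enough detail here to reproduce exactly.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    sender: str
    urgent: int      # MPF urgent flag: 1 = emergency, 0 = normal
    payload: bytes


def classify(packet, current_state, stored_state):
    """Return 'normal', 'emergency', or 'drop' for a packet arriving at the CH.

    current_state and stored_state are hypothetical dicts mapping a node id to
    the value the CH observes now and the value recorded on the blockchain.
    """
    if packet.urgent == 0:
        return "normal"
    sender = packet.sender
    if sender in stored_state and current_state.get(sender) == stored_state[sender]:
        return "emergency"  # validated: forward to the other nodes in the zone
    return "drop"           # mismatch or unknown sender: discard the packet
```

An urgent packet from a node whose observed state differs from its recorded state is dropped, which is the behaviour the text relies on to stop attackers from flooding the zone with false emergencies.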

Secure Aggregation: After the data is categorized as normal or urgent, it is protected using encryption. We adopt Elliptic Curve Cryptography (ECC), which is also employed in blockchain networks. ECC was selected for securing data aggregation due to its balance between security and computational efficiency, which is crucial in resource-constrained MANET environments. Compared to RSA, ECC provides equivalent security with significantly smaller key sizes, thereby reducing computational overhead and memory usage. For instance, a 256-bit ECC key offers security comparable to a 3072-bit RSA key, making ECC more suitable for mobile nodes with limited processing power and energy reserves. While AES provides robust symmetric encryption, it requires a secure key exchange mechanism, adding complexity in decentralized MANETs. ECC’s asymmetric nature simplifies key management, making it a suitable choice for the Block-DSD framework. The reduced computational cost of ECC enhances data aggregation efficiency without compromising security, as evidenced by the simulation results demonstrating low latency and high throughput. ECC creates private and public key pairs that satisfy the elliptic curve equation.

The data is encrypted using the key pair \((Key_{pr}, Key_{pu})\) as follows:

Then, using the best path, the data that has been encrypted is sent to the destination node.
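To make the notion of an ECC key pair concrete, the sketch below implements point addition and scalar multiplication on a deliberately tiny textbook curve, \(y^2 = x^3 + 2x + 2\) over GF(17) with base point (5, 1) of order 19. The curve, the function names, and the Diffie-Hellman style shared secret are assumptions chosen for illustration; they are not the parameters or the encryption equation used in this work, and a curve this small offers no security.

```python
P, A, B = 17, 2, 2   # toy curve y^2 = x^3 + 2x + 2 over GF(17)
G, N = (5, 1), 19    # base point and its order


def on_curve(point):
    """Check that a point satisfies the curve equation."""
    x, y = point
    return (y * y - (x ** 3 + A * x + B)) % P == 0


def add(p, q):
    """Add two curve points; None represents the point at infinity."""
    if p is None:
        return q
    if q is None:
        return p
    (x1, y1), (x2, y2) = p, q
    if x1 == x2 and (y1 + y2) % P == 0:
        return None  # p and q are inverses of each other
    if p == q:
        s = (3 * x1 * x1 + A) * pow(2 * y1 % P, -1, P) % P    # tangent slope
    else:
        s = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P         # chord slope
    x3 = (s * s - x1 - x2) % P
    return x3, (s * (x1 - x3) - y1) % P


def mul(k, point):
    """Scalar multiplication by double-and-add."""
    result = None
    while k:
        if k & 1:
            result = add(result, point)
        point = add(point, point)
        k >>= 1
    return result


def public_key(private_key):
    """Derive the public key Key_pu = Key_pr * G."""
    return mul(private_key, G)
```

With private keys 3 and 7, both parties reach the same shared point, since `mul(3, public_key(7))` equals `mul(7, public_key(3))`. A real deployment would use a standardized curve through a vetted cryptographic library rather than hand-written arithmetic.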

Optimal route selection

The IEHO algorithm selects the best path for data transmission. The EHO algorithm is inspired by the herding behavior of elephants. Elephants are social creatures that live in groups of females and their offspring. An elephant group is made up of several clans, each led by a matriarch. While female members prefer to live with relatives, male members tend to live apart from the family group. They gradually become more independent of their family until they separate from it completely. The EHO strategy was initially proposed in 2015 and was later modified by studying actual elephant herding behavior. The following assumptions are considered in EHO:

(1) The elephant population is made up of a few clans, each with a certain number of elephants.

(2) In each generation, a certain percentage of male elephants leave their family group and live alone, away from the main elephant herd.

(3) The elephants in each clan live under the leadership of a matriarch.

The pseudocode is presented below.

Algorithm: 2 - Improved Elephant Herd Optimization (IEHO)

Clan update operator

Elephant herds are led and directed by a matriarch. In this study, the IEHO algorithm is adapted to find the best route, and the available routes are initialized as the elephants. The route with the best fitness takes the matriarch’s role, and the position of each elephant is updated under the matriarch’s influence as follows, where the two position terms denote the current and the previous position, respectively.

The scale factor reflects the matriarch’s influence within the clan, and \(x_{best,m}\) denotes the fittest elephant in the clan.

The \(x_{center}\) value in the \(d^{th}\) dimension of the search space is calculated as follows:

The matriarch is chosen based on the route’s fitness function. The objective of the IEHO is to select the best route with respect to several goals: remaining energy level (RL), trust value (TV), total number of nodes on the route (t), and congestion level. These are combined as follows:

In the equation above, the route is evaluated using the RL, TV, and congestion level. Each metric is calculated as follows:

The metrics above are defined for a route and the number of nodes on that route. In the solution space, the route with the highest fitness value is chosen as the matriarch.
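One way to picture a multi-objective route fitness of this kind is the sketch below. It is an assumption-laden illustration, not the fitness equation of this work: the per-node metrics are assumed to be normalized to [0, 1] and averaged over the t nodes of the route, and the weights and the sign convention (reward energy and trust, penalize congestion) are hypothetical.

```python
def route_fitness(route, weights=(1.0, 1.0, 1.0)):
    """Score a route from per-node metrics.

    route is a list of dicts with keys 'rl', 'tv', and 'congestion', each
    assumed to be normalized to [0, 1]. The weights are hypothetical.
    """
    t = len(route)  # number of nodes on the route
    rl = sum(node["rl"] for node in route) / t
    tv = sum(node["tv"] for node in route) / t
    congestion = sum(node["congestion"] for node in route) / t
    w_rl, w_tv, w_cg = weights
    return w_rl * rl + w_tv * tv - w_cg * congestion


def select_matriarch(routes):
    """Pick the route with the highest fitness value."""
    return max(routes, key=route_fitness)
```

Under these assumptions, a route through well-charged, trusted, uncongested nodes scores higher than one through depleted or congested nodes and is selected as the matriarch.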

The Improved Elephant Herd Optimization (IEHO) algorithm enhances route selection by integrating a genetic crossover operator, which plays a crucial role in maintaining diversity within the population of potential routes. After updating the positions of routes based on the matriarch’s influence, the crossover operator combines segments of two high-fitness routes to generate new routes with potentially better fitness values. This process helps avoid local optima by introducing genetic diversity, ensuring the exploration of a broader solution space. Compared to Ant Colony Optimization (ACO) and Genetic Algorithms (GA), IEHO demonstrates superior performance due to its balance of exploration and exploitation. IEHO achieves a 15% higher Packet Delivery Ratio (PDR) than ACO by dynamically adjusting routes based on current network conditions, and it consumes 20% less energy than GA by minimizing redundant transmissions and optimizing path selection, making it highly suitable for resource-constrained MANETs.
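The idea of combining segments of two routes can be sketched as a single-point crossover at a shared intermediate node. This is a common way to cross over paths and is offered here as an assumption; the exact operator used in IEHO may differ, and the node labels are hypothetical.

```python
def crossover(parent_a, parent_b):
    """Splice two routes (lists of node ids) at a shared intermediate node.

    Returns two offspring, or copies of the parents when the routes share no
    intermediate node. Offspring may revisit a node, so a caller would
    normally repair or reject looping routes before evaluating fitness.
    """
    common = [node for node in parent_a[1:-1] if node in parent_b[1:-1]]
    if not common:
        return list(parent_a), list(parent_b)
    pivot = common[0]  # deterministic choice; a random pick is equally valid
    i, j = parent_a.index(pivot), parent_b.index(pivot)
    return parent_a[:i] + parent_b[j:], parent_b[:j] + parent_a[i:]
```

For the parents S-A-C-D-T and S-B-C-E-T, which share node C, the offspring are S-A-C-E-T and S-B-C-D-T.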

Separating operator

In the proposed IEHO, the separating operator is enhanced by integrating a genetic crossover operator. The crossover operation is given by

The offspring is a new-generation solution derived from the parents. After that, separation is carried out as follows:

Here, \(min\) and \(max\) denote the lower and upper bounds, respectively. After many iterations, the proposed IEHO algorithm selects the best route by taking all of the above criteria into account.
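For orientation, the clan update and separating steps of standard EHO on continuous positions can be sketched as follows. This shows the baseline operators in a common form, not the improved variant proposed here; the parameter values `alpha` and `beta`, the maximization convention, and the function names are assumptions for the example.

```python
import random


def clan_center(clan):
    """Per-dimension mean of all positions in the clan."""
    return [sum(column) / len(clan) for column in zip(*clan)]


def update_clan(clan, fitness, alpha=0.5, beta=0.1, rng=random):
    """One clan-update step: move each elephant toward the matriarch."""
    best = max(clan, key=fitness)
    center = clan_center(clan)
    updated = []
    for x in clan:
        if x is best:
            # The matriarch is repositioned relative to the clan center.
            updated.append([beta * c for c in center])
        else:
            r = rng.random()
            updated.append([xi + alpha * (bi - xi) * r for xi, bi in zip(x, best)])
    return updated


def separate(clan, fitness, lower, upper, rng=random):
    """Replace the worst elephant with a random position inside the bounds."""
    worst = min(range(len(clan)), key=lambda i: fitness(clan[i]))
    new_clan = [list(x) for x in clan]
    new_clan[worst] = [lower + (upper - lower) * rng.random() for _ in clan[worst]]
    return new_clan
```

Passing a seeded `random.Random` instance as `rng` makes a run reproducible, which is useful when comparing operator variants.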

Incorporating blockchain-based emergency data transfer and efficient route selection ensures that information is exchanged correctly across the network.

Simulation results

The proposed MANET is built using the network simulator ns-3 (version 3.25). The networks are configured in C++ and TCL on top of this discrete-event network simulator. ns-3.25 supports most wireless network standards and allows blockchain technology to be integrated, so the simulations were performed with it. Table 2 lists the key simulation parameters. The hardware setup included a high-performance workstation equipped with an Intel Core i9 processor (3.6 GHz, 16 cores), 64 GB of RAM, and an NVIDIA RTX 3080 GPU to accelerate complex computations such as blockchain transactions, encryption operations, and optimization algorithms. The software environment was built on Ubuntu 20.04 LTS with the GCC compiler for C++ and Python 3.8 for data analysis and visualization. The ns-3.25 simulator was integrated with a blockchain library and cryptographic functions to accurately model blockchain operations. The simulations employed random waypoint and group mobility models to replicate real-world node movement, while adversarial behaviors like packet dropping and jamming were simulated using built-in attack models. This hardware-software combination ensured precise and reproducible results, validating the proposed model’s performance in terms of PDR, energy consumption, latency, throughput, and security across diverse MANET scenarios.

The simulation experiments were conducted using the ns-3.25 simulator with the following parameters: node energy levels ranging from 500 J to 1000 J to reflect varying battery capacities in mobile devices; mobility patterns including random waypoint and group mobility models with speeds between 1 m/s and 25 m/s to simulate pedestrian and vehicular movements; and adversarial behaviors with dynamic attack intensities, varying from intermittent jamming to persistent packet dropping, affecting 5–20% of the nodes. These parameters were selected to closely mimic real-world MANET conditions, ensuring a comprehensive evaluation of the Block-DSD framework’s performance under diverse scenarios. Energy consumption was measured based on packet transmission, reception, and idle states, while throughput, packet delivery ratio (PDR), and latency were analyzed under different node densities and adversarial conditions.
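Energy accounting over transmission, reception, and idle states can be sketched with a simple state-based model. The power draws below are hypothetical placeholder values, not the settings used in these simulations; only the idea of summing energy per radio state and the 500 J to 1000 J initial range come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RadioPower:
    tx_w: float = 1.4    # hypothetical transmit power draw (W)
    rx_w: float = 1.0    # hypothetical receive power draw (W)
    idle_w: float = 0.1  # hypothetical idle power draw (W)


def energy_consumed(tx_s, rx_s, idle_s, power=RadioPower()):
    """Energy in joules spent over the given seconds in each radio state."""
    return power.tx_w * tx_s + power.rx_w * rx_s + power.idle_w * idle_s


def residual_energy(initial_j, tx_s, rx_s, idle_s, power=RadioPower()):
    """Remaining energy in joules, clamped at zero once the node is drained."""
    return max(0.0, initial_j - energy_consumed(tx_s, rx_s, idle_s, power))
```

For example, a node that starts at 750 J and spends 60 J across the three states has 690 J left.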

The configuration described above yields the following performance figures.

Comparative analysis

The comparison of energy efficiency, packet delivery ratio (PDR), and throughput in this study is conducted against three baseline methods: Ad hoc On-Demand Distance Vector (AODV), Zone-based Clustering Approach with Fuzzy Logic (ZCA-FL), and Ant Colony Optimization-based Secure Routing (ACO-SR).

- AODV: Configured with default settings including a broadcast route discovery mechanism, hop-by-hop routing, and node energy of 750 J.
- ZCA-FL: Implemented with fuzzy logic-based Cluster Head selection, using centrality, residual energy, and node mobility as fuzzy parameters, and a maximum of 15 clusters.
- ACO-SR: Configured with 50 ants, pheromone evaporation rate of 0.5, and heuristic preference for energy-efficient paths, focusing on minimizing energy consumption and ensuring secure routing.

The proposed Block-DSD framework outperforms these methods by maintaining a PDR of 97%, reducing energy consumption by 20% compared to AODV and ZCA-FL, and achieving throughput improvements of up to 8 Mbps in high-attack scenarios due to its dynamic route optimization and blockchain-based secure data aggregation.

Throughput, security level, Packet Delivery Ratio (PDR), residual energy level (RE), and processing time (encryption and decryption) were all compared. For clearer analysis, two scenarios are established as follows:

Case 1

In this case, the number of nodes varies while the number of attackers remains constant. This shows how the proposed work performs at different network scales.

Case 2

In this case, the number of nodes is kept constant while the number of attackers increases from one to five. This shows how well the proposed solution works in the presence of attackers.

PDR analysis

PDR is the percentage of transmitted packets that are successfully delivered to their destination.
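As a worked example of the usual PDR definition (delivered packets divided by transmitted packets, expressed as a percentage), consider the short sketch below. The function name and the packet counts are illustrative only.

```python
def packet_delivery_ratio(sent, received):
    """PDR in percent: delivered packets over transmitted packets."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return 100.0 * received / sent
```

If 1000 packets are transmitted and 970 arrive, the PDR is 97.0%.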

Figure 7 shows that the proposed work achieves a PDR of approximately 97%, which is much higher than that of the existing works. In case 1, the PDR increases significantly as the number of nodes increases, because a larger number of nodes raises the likelihood that a good cluster can be selected. As a result, data transmission is efficient and reliable.

In case 2, the PDR decreases as the number of attackers increases, since attacks cause packet loss and the loss of route information. However, the proposed Block-DSD technique yields improved PDR in both cases. This indicates that cluster-based routing, together with the proposed security measures, contributes to optimal routing.

Energy consumption analysis

Residual energy is a critical performance metric that describes the current energy state of the nodes in the network. The residual energy of a node is calculated as follows:

Here, \(E_i(Ini)\) denotes the initial energy level and \(E_i(Cur)\) denotes the current energy level of the ith node.

Figure 8 compares the energy efficiency of the proposed and existing works. In general, energy usage grows with the number of attackers on the network, because attackers attempt to exhaust the energy supply of the nodes. Nodes eventually die once their energy is completely drained. To avoid this, the entire network must be protected against attacks. In Fig. 8(b), the comparison of energy efficiency (RE) in scenario 2 shows that the Block-DSD technique maintains a consistently high RE across different numbers of attackers compared to Secure Routing and Fuzzy System. This resilience can be attributed to its effective management of energy consumption and robust security measures, which minimize the impact of additional attackers on the network’s energy.

Network lifetime analysis

Figure 9 shows the number of active nodes over time. As time goes on, fewer nodes remain active, but the proposed work keeps a large number of nodes alive. The proposed Block-DSD technique not only protects the network from attackers but also reduces node energy consumption by establishing dynamic clusters and selecting optimal paths. The analysis shows that the proposed strategy is suitable for dynamic mobile networks that must maintain adequate energy levels.

Delay analysis

Delay is the time a data packet takes during transmission. It is caused by propagation and queuing delays. In this work, delays are compared for the following cases:

Case 1

Delay for normal data.

Case 2

Delay for emergency data.

Figure 10 compares the delays experienced during normal and emergency data transmission. The proposed approach achieves a delay of only 0.0012 s for emergency data and 0.02 s for normal data, which is significantly lower than in the existing works. The existing systems assign the same severity level to all data packets and therefore treat every packet with the same priority, which lengthens the delay. Because the proposed method marks the data type with the flag value, it minimizes latency for emergency data.
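One plausible way a flag can shorten emergency delay is by letting a forwarding node serve flagged packets first. The sketch below shows such a two-class priority queue; it is an assumed mechanism for illustration, and the class name and API are hypothetical rather than taken from the implementation evaluated here.

```python
import heapq
from itertools import count


class PriorityForwarder:
    """Queue that always dequeues emergency packets before normal ones."""

    def __init__(self):
        self._heap = []
        self._order = count()  # tie-breaker keeps FIFO order within a class

    def enqueue(self, payload, urgent):
        """Add a packet; urgent is the flag value (1 = emergency, 0 = normal)."""
        priority = 0 if urgent else 1
        heapq.heappush(self._heap, (priority, next(self._order), payload))

    def dequeue(self):
        """Remove and return the next packet to forward."""
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)
```

With this ordering, an emergency packet that arrives behind several normal packets is still forwarded first, so its queuing delay does not grow with the backlog of normal traffic.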

Throughput analysis

Throughput is the total amount of information that can be delivered successfully to the destination over a given time period. Figure 11 compares the throughput achieved by the proposed work and related works. Unlike the other metrics, throughput depends on both the data transmission mechanism and the network security level. In other words, it is affected by both security issues and inefficient data transfer. In scenario 2, the throughput declines as the number of attackers increases.

This is because attacker nodes commonly target data sent within a given time frame, preventing it from reaching its destination in time. The proposed work consistently outperforms the existing work, increasing throughput by a factor of two and reaching up to 8 Mbps. Its network management, routing strategy, and strong security scheme help achieve the highest throughput over the network, and the method significantly lowers data loss.

In scenario 1, the throughput achieved by the proposed method is also significantly higher than that of related works, reaching up to 4.55 Mbps, which shows its effectiveness in improving data transmission efficiency and reducing data loss.

Security level analysis

The security level is measured by counting the packets in the network that have been modified or tampered with by intruders. Figure 12 shows the number of data packets modified when multiple attackers are active in the network.

Security in scenario 1.

According to the analysis, the proposed work achieves a security level of 98%, which is much higher than that of the existing works. The following characteristics of the proposed work raise the security level:

Efficient authentication prevents unauthorized nodes from joining the network, and the use of blockchain technology offers a high level of security. The network is consequently free of malicious and unauthorized nodes.

Trust values are taken into account when determining the optimal paths, so that no untrusted nodes lie on the selected routes.

ECC encryption is used to protect data, preventing hostile nodes from eavesdropping on or altering it.

Figure 13 shows that the proposed Block-DSD model consistently achieves better mean performance across key metrics such as Packet Delivery Ratio (PDR), energy efficiency, throughput, and latency, with narrower confidence intervals indicating reliable and stable performance. The Block-DSD model demonstrates superior PDR, ensuring more successful data deliveries even in dynamic MANET environments, while its energy consumption remains significantly lower due to optimized routing via the Improved Elephant Herd Optimization (IEHO) algorithm. Additionally, the model’s higher throughput is attributed to its secure and efficient data aggregation method, employing blockchain-based authentication and Elliptic Curve Cryptography (ECC). The minimal latency, particularly for emergency data, highlights its suitability for critical applications like disaster management. The confidence intervals indicate that the performance improvements are not due to random variations, reinforcing the robustness of the proposed model over existing methods. These statistical validations emphasize that the Block-DSD model offers a more efficient, reliable, and secure solution for data aggregation in MANETs compared to conventional techniques.
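For readers who want to reproduce this kind of interval from repeated simulation runs, the sketch below computes a normal-approximation confidence interval for a mean. The procedure actually used for Fig. 13 is not stated, so the method, the function name, and any sample values passed to it are assumptions; with only a handful of runs, a Student t interval would be the more conservative choice.

```python
from statistics import NormalDist, mean, stdev


def confidence_interval(samples, level=0.95):
    """Normal-approximation confidence interval for the mean of the samples."""
    m = mean(samples)
    z = NormalDist().inv_cdf(0.5 + level / 2)       # about 1.96 for 95%
    half_width = z * stdev(samples) / len(samples) ** 0.5
    return m - half_width, m + half_width
```

A narrower interval around one method's mean, with no overlap with another method's interval, is what supports the claim that a difference is unlikely to be random variation.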

Conclusion

To secure data in disaster zones, this paper developed a novel distributed blockchain-assisted secure data aggregation strategy. The network is first divided into several zones, and the nodes in each zone are then grouped into clusters by zone-based clustering. The best CH in each cluster is chosen using ANFIS based on several parameters. The blockchain network keeps track of all data transfers by updating the hash values. A Secure Two-Step (STS) data aggregation method is proposed, in which data packets are examined and divided into normal and emergency data according to the severity of the disaster. The Modified Packet Format (MPF) is proposed for this inspection. The IEHO algorithm, enhanced with a genetic crossover operator, is then used to choose the best routes for data transmission. In the experiments, the proposed work outperforms the existing methods. The proposed Block-DSD framework can be extended to other domains such as vehicular ad-hoc networks (VANETs) for secure data transmission between vehicles, and IoT-based smart city applications for reliable communication in dynamic environments. Future research will focus on integrating real-world datasets, incorporating detailed adversarial attack simulations, and optimizing blockchain consensus mechanisms to further reduce latency and energy consumption. Additionally, extending the framework for multi-hop 5G networks and edge computing environments can enhance its scalability and performance in next-generation wireless networks.

Data availability

The data supporting this study are included within this published article.

Abbreviations

- QoS: Quality of Service
- BS: Base Station
- MANET: Mobile Ad hoc Network
- DSR: Dynamic Source Routing
- AODV: Ad hoc On-Demand Distance Vector
- ZCA: Zone-based Clustering Approach
- Block-DSD: Distributed Blockchain-Assisted Secure Data Aggregation
- ANFIS: Artificial Neuro-Fuzzy Inference System
- STS: Secure Two-Step
- IEHO: Improved Elephant Herd Optimization
- ANN: Artificial Neural Network
- ECC: Elliptic Curve Cryptography
- RL: Remaining Energy Level
- TV: Trust Value
- RE: Residual Energy Level
- MPF: Modified Packet Format
- PDR: Packet Delivery Ratio
- IS-IS: Intermediate System to Intermediate System
- GPS: Global Positioning System
- GLS: Geographic Information System
- DLT: Distributed Ledger Technology
- LAM: Local Area Multicomputer

References

Thapar, S. & Shukla, R. ‘A review: Study about routing protocol of MANET’, In AIP Conference Proceedings, vol. 2782, no. 1, AIP Publishing. (2023).

Sadhu, P. K., Yanambaka, V. P. & Abdelgawad, A. ‘Internet of things: Security and solutions survey’, Sensors, vol. 22, no. 19, p. 7433. (2022).

Mahapatra, S. N., Singh, B. K. & Kumar, V. ‘A secure multi-hop relay node selection scheme based data transmission in wireless ad-hoc network via block chain’, Multimedia Tools and Applications, 81, 13, pp. 18343–18373. (2022).

Bairwa, A. K. & Joshi, S. ‘An improved scheme in AODV routing protocol for enhancement of QoS in MANET’, In Data Engineering for Smart Systems: Proceedings of SSIC 2021, pp. 183–190, Springer Singapore. (2022).

Beulah, G. J. & Manimekala, M. B. ‘Performance evaluation of routing in enhanced zone based clustering protocol in wireless sensor networks’, Performance Evaluation, 7, no. 3. (2020).

Chen, B., Xia, M., Qian, M. & Huang, J. MANet: A multi-level aggregation network for semantic segmentation of high-resolution remote sensing images. Int. J. Remote Sens. 43, 15–16 (2022).

Jmal, R. et al. ‘Distributed Blockchain-SDN secure IoT system based on ANN to mitigate DDoS attacks’, Applied Sciences, 13, 8, p. 4953. (2023).

Ramphull, D., Mungur, A., Armoogum, S. & Pudaruth, S. ‘A review of mobile ad hoc NETwork (MANET) Protocols and their Applications’, In 2021 5th international conference on intelligent computing and control systems (ICICCS), IEEE. pp. 204–211. (2021).

Sugumaran, V. R. & Rajaram, A. ‘Lightweight blockchain-assisted intrusion detection system in energy efficient MANETs’. J. Intell. Fuzzy Syst., (Preprint), pp. 1–16. (2023).

Zolfaghari, B., Yazdinejad, A. & Dehghantanha, A. ‘The dichotomy of cloud and Iot: Cloud-assisted Iot from a security perspective’, (2022). arXiv preprint arXiv:2207.01590.

Al-Qurabat, A. K. M., Salman, H. M. & Finjan, A. A. R. Important extrema points extraction-based data aggregation approach for elongating the WSN lifetime. Int. J. Comput. Appl. Technol. 68 (4), 357–368 (2022).

Li, J. & Kassem, M. Applications of distributed Ledger technology (DLT) and Blockchain-enabled smart contracts in construction. Autom. Constr. 132, 103955 (2021).

Choudhary, A. K. & Rahamatkar, S. ‘Evaluation of Trust Establishment Mechanisms in Wireless Networks: A Statistical Perspective’, In Proceedings of 3rd International Conference on Machine Learning, Advances in Computing, Renewable Energy and Communication: MARC Singapore: Springer Nature Singapore, pp. 169–184. (2022).

Kavitha, V. & Ganapathy, K. ‘Galactic swarm optimized convolute network and cluster head elected energy-efficient routing protocol in WSN’, Sustainable Energy Technologies and Assessments, 52, p. 102154. (2022).

Moussa, N., Alaoui, E. B. E. & A DACOR: a distributed ACO-based routing protocol for mitigating the hot spot problem in fog‐enabled WSN architecture. Int. J. Commun Syst. 35 (1), e5008 (2022).

Quy, V. K., Nam, V. H., Linh, D. M., Ban, N. T. & Han, N. D. ‘A survey of QoS-aware routing protocols for the MANET-WSN convergence scenarios in IoT networks’, Wireless Personal Communications, 120, 1, pp. 49–62. (2021).

Abbasian Dehkordi, S. et al. A survey on data aggregation techniques in IoT sensor networks. Wireless Netw. 26, 1243–1263 (2020).

Islam Khan, B. U. I., Goh, K. W., Khan, A. R., Zuhairi, M. F. & Chaimanee, M. Resource Management and Secure Data Exchange for Mobile Sensors Using Ethereum Blockchain. Symmetry 17 (1). https://doi.org/10.3390/sym17010061 (2024).

Islam Khan, B. U. I., Goh, K. W., Khan, A. R., Zuhairi, M. F. & Chaimanee, M. Integrating AI and blockchain for enhanced data security in IoT driven smart cities. Processes 12 (9). https://doi.org/10.3390/pr12091825 (2024).

Islam Khan, B. U. I. et al. Blockchain-Enhanced Sensor-as-a-Service (SEaaS) in IoT: Leveraging Blockchain for Efficient and Secure Sensing Data Transactions. Information 15 (4). https://doi.org/10.3390/info15040212 (2024).

Olanrewaju, R. F., Islam Khan, B. U. I., Kiah, L. M., Abdullah, N. A. & Goh, K. W. Decentralized blockchain networks for resisting Side-Channel attacks in Mobility-Based IoT. Electronics 11 (23). https://doi.org/10.3390/electronics11233982 (2022).

Islam Khan, B. U. I. et al. SGM: strategic game model for resisting node misbehaviour in IoT-Cloud ecosystem. Information 13 (11). https://doi.org/10.3390/info13110544 (2022).

Anwar, F., Khan, B. U., Kiah, M. L., Abdullah, N. A. & Goh, K. W. A comprehensive insight into blockchain technology: past development, present impact and future considerations. Int. J. Adv. Comput. Sci. Appl. 13 (11) (2022).

Khan, B. U. I., Anwar, F., Olanrewaju, R. F., Kiah, M. L. B. M. & Mir, R. N. Game Theory Analysis and Modeling of Sophisticated Multi-Collusion Attack in MANETs, in IEEE Access, vol. 9, pp. 61778–61792, (2021). https://doi.org/10.1109/ACCESS.2021.3073343

Khan, B. U. I., Anwar, F., Olanrewaju, R. F., Pampori, B. R. & Mir, R. N. A Novel Multi-Agent and Multilayered Game Formulation for Intrusion Detection in Internet of Things (IoT), in IEEE Access, vol. 8, pp. 98481–98490, (2020). https://doi.org/10.1109/ACCESS.2020.2997711

Author information

Authors and Affiliations

Contributions

All authors contributed to the study, conception, and design. All authors commented on the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sugumaran, V.R., Dinesh, E., Ramya, R. et al. Distributed blockchain assisted secure data aggregation scheme for risk-aware zone-based MANET. Sci Rep 15, 8022 (2025). https://doi.org/10.1038/s41598-025-92656-8


DOI: https://doi.org/10.1038/s41598-025-92656-8
