Abstract

Modern agriculture faces several key problems that IoT-based smart farming aims to address, such as water shortage, plant diseases, and pest attacks. Artificial intelligence (AI) technology is therefore combined with the Internet of Things (IoT) to develop agricultural use cases and to make the agriculture industry more robust and ecologically conscious. Various IoT smart agriculture techniques, such as drip irrigation, plant disease detection, and pest detection, have been developed to address these challenges. Several agricultural platforms, such as drones and robots, have been deployed to apply these techniques in the field, but they come with their own limitations. This paper proposes an AI-IoT smart agriculture pivot as a good candidate for plant disease detection and treatment without the limitations of drones and robots. It presents a new IoT system architecture and a hardware pilot, built on an existing central pivot, that runs deep learning (DL) models for plant disease detection across multiple crops and controls the pivot's actuators for plant disease treatment. For plant disease detection, the paper augments a dataset of 25,940 images to classify 11 classes of plant leaves using a pre-trained ResNet50 model, which achieves a testing accuracy of 99.8% and compares favorably with other traditional works. Experimentally, the F1-score, recall, and precision of the ResNet50 model were 99.91%, 99.92%, and 100%, respectively.

Introduction

Demand for agricultural goods keeps rising because of rapid population growth worldwide1. Crop production is expected to need to double by 2050, which means it should increase by 2.4% each year2. However, about 20 to 40% of crop yield is lost every year to plant diseases and insect pests, and the shortage of the water resources needed to grow plants adds to these losses. Plant disease identification has therefore emerged as a critical concern in recent years3. Among the variety of fruits and vegetables consumed, tomatoes, potatoes, and bell peppers are particularly significant, so timely identification of diseases affecting their leaves is critical. Plant leaves are the primary means of detecting infections, since most disease symptoms appear visibly on the leaves4.

An automated image-based disease detection and classification mechanism is essential for ecosystem health and for ensuring food production. Advanced techniques such as image processing and DL, which overcome challenges encountered with conventional machine learning (ML)5, offer promising approaches to disease identification and management and could revolutionize agricultural practice. By analyzing plant images with advanced technology, they eliminate reliance on subjective judgment and deliver more reliable results. Timely identification of crop diseases is crucial for taking the necessary action and preventing extensive damage in large cultivated areas.

Adaptive transfer learning, a branch of deep learning, can address these ML limitations. It can achieve better performance on the target task with less data and fewer computational resources than training a model from scratch6. This not only reduces the demand for extensive computational resources but also improves the model's ability to generalize to diverse datasets. Because of these advantages, this paper adopts transfer learning in DL for plant disease detection.

A diverse range of advanced techniques has been developed not only to address plant diseases but also to conserve irrigation water and mitigate the spread of pests. These techniques must be carried on agricultural platforms such as drones and robots to handle farming operations in the field. Unmanned aerial vehicles (UAVs), or drones, are becoming a promising technology for remote agriculture applications. UAVs are remotely controlled and wirelessly connected using wireless sensor network (WSN) technology, flying over wide areas of a field for agriculture applications such as plant disease detection and pest control. There are more than 250 UAV models worldwide in the agriculture field7,8,9. Depending on the required agriculture use case, UAVs may be equipped with an RGB camera, a hyperspectral camera, or a multispectral camera to capture aerial images of the cultivated field, providing an extensive dataset for developing DL algorithms for plant disease prediction, pesticide spraying, and plant growth monitoring10,11,12.

Most UAV models, with their different characteristics and attributes, can perform two main agricultural operations: (1) aerial imaging for real-time monitoring of crop production and the vegetation landscape, and (2) spraying liquids (fertilizer, chemical pesticides, water) for plant disease treatment, pest control, and irrigation, respectively13. However, it is difficult in practice to design and implement a single UAV model that performs both major operations. Characteristics such as endurance, weight, speed, and cost should be considered and evaluated against the type of farming operation and its requirements before a UAV model is designed. UAVs also have many limitations, including high cost, short battery life, and limited communication distance, and their flight time is sometimes insufficient to complete all the farming operations required of them14.

On the other hand, human labor could be replaced by robots for better, smarter management of crop yield in farming operations. Robots perform multiple tasks that save time, human effort, and cost across many agriculture use cases15. They can determine the right time for irrigation, seed sowing, weeding, fertilization, pesticide spraying against pest attacks, and plant disease detection13,16,17,18. However, robots working in ground operations face many limitations, such as obstacles, rough or uneven terrain, path planning, speed, and the need to perform multiple agricultural tasks with limited hardware15,19.

Unlike works3,4,5,6, this paper exploits both image processing and deep learning techniques for robust plant disease detection. Both techniques are set up on the proposed hardware pilot, which also performs plant disease treatment. The hardware pilot is mounted on the existing central pivot instead of on UAVs or robots, filling the gap left by the limitations of UAVs and agricultural robots. Combining image processing and deep learning with the hardware pilot on a central pivot is called the artificial intelligence-Internet of Things (AI-IoT) smart agriculture pivot.

Unlike UAVs, the proposed AI-IoT smart agriculture pivot can perform both main agricultural missions that otherwise require different UAV models, and it covers different regions of a farm field13. The pivot's height is close to that of the crops, so it can easily reach tall crops and spray liquid for plant disease treatment, pest control, and irrigation. Its very long arm covers a large cultivated area, so it can perform most of the aerial farming operations of UAVs, such as imaging for plant disease detection, without a multispectral or hyperspectral camera, which is expensive and consumes high power. At the same time, the proposed pivot performs all farming operations at a low altitude of about 2 to 3 m above the ground, avoiding the limitations of robots.

In this paper, the proposed AI-IoT smart agriculture pivot performs plant disease detection and treatment using both image processing and DL. The proposed detection and treatment tasks allow the ResNet50 model to make robust decisions with high accuracy and low loss, and both detection and treatment run on the pivot itself, without cloud or edge computing.

The main contributions of this paper are as follows:

-

Proposing an AI-IoT smart agriculture pivot system architecture for plant disease detection and treatment.

-

Design and implementation of a hardware pilot to prove the concept.

-

Pre-processing images before training, testing, and validating using a ResNet50 deep learning model.

-

Classifying leaf images into 3 healthy and 8 diseased classes using a pre-trained ResNet50.

-

Spraying fertilizer to treat the detected plant diseases.

-

Designing a mobile application that gives farmers advice and guidelines on plant disease detection and treatment.

The paper is organized as follows: Section “Related work” reviews related work. Section “Methodology” presents the methodology, including the proposed AI-IoT smart agriculture pivot architecture and hardware pilot. Section “Experimental setup analysis” describes the experimental setup, including data augmentation, plant disease detection, plant disease treatment, and the mobile App. Section “Experimental results” explains the experimental results. Finally, Section “Conclusion” presents the conclusion and future work.

Related work

This section reviews previous articles on the DL models widely used for plant disease detection, as well as works that bring advanced technologies such as IoT to the traditional central pivot for plant disease treatment.

For plant disease detection, a convolutional neural network model was presented that detects and identifies plant leaf diseases from visual data to improve accuracy, generality, and overall training efficiency. The experimental results showed that the proposed model outperformed previous models, with a classification accuracy of 99.23%20.

Sun et al.21 proposed a convolutional neural network architecture, FL-EfficientNet (Focal Loss EfficientNet), for multi-category identification of plant disease images. The experiment used the public New Plant Diseases Dataset (NPDD) and compared FL-EfficientNet with three models: ResNet50, DenseNet169, and EfficientNet. FL-EfficientNet identified ten diseases of five crop types with an accuracy of 99.72%. In22, R. Santhana Krishnan and E. Julie proposed an enhanced convolutional neural network (CNN) based on Visual Geometry Group-16 (VGG-16) for potato leaf disease classification. The convolutional layers of VGG-16, along with Inception and squeeze-and-excitation (SE) blocks, were used for classification. This model achieved the highest classification accuracy of 99.3%.

Jerome et al.23 discussed a DL-based assessment convolutional neural network (A-CNN) method to distinguish healthy from unhealthy plant leaves. The method was applied to detect widespread plant diseases and achieved an accuracy of 92%. In24, T.-Y. Lee et al. presented a high-efficiency CNN architecture tailored for potato disease detection. The authors built a training set using image processing techniques and used the Adam optimizer and cross-entropy loss, with softmax as the final decision function. This CNN achieved an accuracy of 99.53%.

Agarwal et al.25 developed a CNN model for potato crop disease classification. The architecture comprised four convolution layers with 32, 16, and 8 filters in the respective layers and achieved an accuracy of 99.8%. In26, M. Umer et al. developed a five-layer CNN model for automatic detection of plant disease from leaf images; 20,000 augmented images were generated to improve training, reaching an accuracy of 99.99%. M. S. Hossain et al.27 proposed a CNN model that obtained accuracies of 99.44% for training, 97.34% for validation, and 96.88% for testing. For plant disease treatment, the DL models mentioned above, or other DL techniques, must be carried on a hardware pilot mounted on a traditional central pivot.

A central pivot is a mechanical and electrical system for efficient water irrigation. It irrigates quite uniformly, spraying an equal quantity of water over the farm land, and is therefore best suited to circular farm land. Its control unit (CU) irrigates the field through sprinklers with the same water quantity and flow rate. In28, A. Shilpa et al. used IoT technology to develop a version of the traditional pivot, called center pivot irrigation (CPI), a class of self-propelled irrigation system that determines the percentage of water irrigation for each segmented region of a circular farm land according to its topography and soil type. CPI can be used on uneven topographic fields, with no crop limitation. Conventional circular irrigation covers about 76% of the field, while adding an end gun to the system increases the irrigated area and reduces the unirrigated area. IoT with the self-propelled CPI system was proposed to monitor temperature and soil moisture in the farm and to send the data through an internet gateway to a database for storage, analysis, and decision making, that is, whether or not to irrigate.

For both water irrigation and fertilization, but without plant disease detection, Badreldeen et al.29 updated the central pivot system (CPS) with IoT technology by adding rain and humidity sensors to improve water irrigation management and save water resources.

For crop irrigation monitoring, Matilla et al.30 presented center pivot irrigation monitoring systems based on IoT and two low-cost technologies, LoRaWAN and GPS, that report the pivot state on demand, notify the farmer of changes in its operation such as an unexpected stop, and monitor the pivot sensors. These wireless technologies solve the long-distance communication problem expected across wide and remote fields, enabling a successful irrigation monitoring system. The work explained the electrical interfacing between LoRaWAN, GPS, and the CU of the pivot and gave a case study to prove the concept. Packet loss and received packets were evaluated to analyze their effect on system operation.

O. Debauche et al.31 proposed a multi-agent system (MAS) based on a Kubernetes and Docker architecture32 that adapts AI algorithms to heterogeneous clusters at the edge level, computing water use and analyzing videos of plant diseases near the pivot, and that minimizes the processing time required for rule-based decision making.

Several AI algorithms were tested on water requirement data and the PlantVillage database to validate the proposed architecture. However, pest detection was not considered in these works. Table 1 summarizes the state of the art from previous studies.

Methodology

The proposed work includes the AI-IoT system architecture and the hardware pilot. The proposed AI-IoT smart agriculture pivot system for plant disease detection and treatment is shown in Fig. 1.

The proposed system architecture

The proposed system architecture consists of three major network layers: the sensor field layer, the communication layer, and the application layer. The sensor field layer includes all hardware components associated with the traditional central pivot in the farm field, such as the controller, sensors, actuators, and cameras. It is the physical layer that performs both monitoring and actuation in the farm field. Monitoring collects real-time data about the farm field from sensors and cameras, such as temperature, humidity, rain, soil moisture, and images of plant leaves. Actuation controls and manages the hardware components through actuators such as sprinklers to carry out decisions such as water irrigation, pest control, and plant disease detection. Actuation may also be operated from the application layer.

The communication layer connects the sensors and cameras of the central pivot in the sensor field layer to the user interface in the application layer through the internet cloud. Communication happens in one of two ways: the central pivot can connect directly to the cloud over conventional cellular networks, or it can connect indirectly through LoRaWAN or Wi-Fi with a gateway. The application layer includes the web browser and the mobile App. The web application stores and logs all data sent from the sensor field layer in its MySQL database. It can also retrieve the logged data from the database to the user interface (web browser), display the data graphically over time, and apply statistical analysis to discover new, useful information about the farm field status. The mobile App periodically receives real-time monitoring data from the sensors and cameras in the sensor field layer and assists the farmer in monitoring and controlling the farm field. It lets the farmer remotely view status reports on plant diseases in the farm field and their treatment, such as the regions with diseased and healthy plants, irrigation dates, dates of fertilizer spraying, and the times the sprinklers were turned ON and OFF. In addition, it enables the farmer to manually control the proposed pivot in the sensor field layer through its actuators.
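To make the logging path of the application layer concrete, the following is a minimal sketch of how readings from the sensor field layer might be serialized and stored. It assumes a JSON payload with hypothetical field names, and it uses Python's built-in sqlite3 as a stand-in for the MySQL database named above, so it runs without a database server.

```python
import json
import sqlite3
from datetime import datetime, timezone

def make_reading(temperature_c, humidity_pct, soil_moisture_pct, raining, label=None):
    """Build one sensor-field-layer reading (field names are hypothetical)."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "temperature_c": temperature_c,
        "humidity_pct": humidity_pct,
        "soil_moisture_pct": soil_moisture_pct,
        "raining": raining,
        "leaf_label": label,  # classification result, if an image was processed
    }

def open_log(path=":memory:"):
    """Open the log store; sqlite3 stands in for the MySQL database."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS readings (ts TEXT, payload TEXT)")
    return conn

def log_reading(conn, reading):
    """Append one reading as a JSON document keyed by its timestamp."""
    conn.execute(
        "INSERT INTO readings (ts, payload) VALUES (?, ?)",
        (reading["timestamp"], json.dumps(reading)),
    )
    conn.commit()

def history(conn):
    """Return all readings in time order, e.g. for plotting or statistics."""
    rows = conn.execute("SELECT payload FROM readings ORDER BY ts, rowid").fetchall()
    return [json.loads(payload) for (payload,) in rows]
```

Storing each reading as a timestamped document keeps the schema stable when sensors are added; a relational layout with one column per sensor would be an equally reasonable choice for MySQL.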

The proposed hardware pilot

Unlike previous works28,29,30,31,32, the proposed hardware pilot is designed and implemented on the central pivot itself, as part of the sensor field layer of Fig. 1, for plant disease detection and treatment. Following the proposed system architecture, the hardware pilot consists of three interdependent levels: the physical level, the communication level, and the application level.

Physical level

As shown in Table 2, the proposed pivot includes a controller, actuators (two pipelines, sprinklers, and a water pump), a camera, and a communication module (SIM800L GSM/GPRS). The central pivot is equipped with two pipelines: one for fresh-water irrigation and the other for spraying fertilizer on regions affected by plant diseases. The two parallel pipelines run along the pivot arm and end in paired sprinklers, so that both irrigation and fertilizer spraying are possible.

In this proposal, cameras should be deployed at different places along the pivot arm (pivot point, pivot tires, and pivot end) to cover all regions of the farm field. The cameras are placed at the low altitude of the central pivot to avoid the robotics limitations mentioned above15,19. An RGB camera (Raspberry Pi camera) is sufficient to capture precise images of plant leaves, without the very expensive multispectral or hyperspectral cameras carried by many drones10,11,12. The cameras are connected to the Raspberry Pi controller, located at the tower of the pivot point.

The CU of the conventional central pivot is replaced by the Raspberry Pi controller, which performs all the irrigation operations of the traditional pivot30, inspects the crop for plant disease detection, and sprays the required fertilizer for plant disease treatment.

The Raspberry Pi controller can run most machine learning (ML) and deep learning (DL) models. It supports many open-source Python libraries, such as scikit-learn, PyTorch, Keras, and Pandas, which are used to create the ResNet50 model, a residual DL model installed on the Raspberry Pi to train, validate, and test the large number of plant leaf images from the NPDD source and perform plant disease detection.

Communication level

The role of the Raspberry Pi controller extends to the communication layer of the proposed architecture through its communication unit, called the communication level. At this level, the Raspberry Pi is equipped with several communication technologies (Ethernet, Bluetooth, a GSM/GPRS module, and Wi-Fi), which enable it to communicate remotely with the application layer through the internet. Each technology is selected according to its communication range. The proposed hardware pilot uses the SIM800L GSM/GPRS module, one of several wireless technologies widely used for long-distance communication, to send the classified images to the mobile App in the application layer over existing cellular network towers33.
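The SIM800L is driven over a serial UART with standard GSM AT commands. The sketch below shows one plausible way to push a classification result as an SMS in text mode (`AT+CMGF=1`, then `AT+CMGS` with the body terminated by Ctrl+Z). This is an assumption, since the text does not say whether results travel as SMS or over GPRS; the recipient number and message wording are hypothetical, and `port` can be any object with `write()` and `read()`, such as a pyserial `Serial` instance.

```python
CTRL_Z = b"\x1a"  # byte that terminates the SMS body after AT+CMGS

def sms_commands(phone_number, text):
    """Return the raw byte strings written to the modem, in order."""
    return [
        b"AT\r",                                   # check that the modem responds
        b"AT+CMGF=1\r",                            # select SMS text mode
        f'AT+CMGS="{phone_number}"\r'.encode(),    # start a message to the recipient
        text.encode("ascii", "replace") + CTRL_Z,  # message body, then Ctrl+Z to send
    ]

def send_sms(port, phone_number, text):
    """Write each command to the serial port and collect the raw replies.

    A hypothetical helper: a production version should parse the replies
    and wait for 'OK' and the '>' prompt before sending the next command.
    """
    responses = []
    for cmd in sms_commands(phone_number, text):
        port.write(cmd)
        responses.append(port.read(64))
    return responses
```

On the Pi, `port` could be `serial.Serial("/dev/serial0", 9600, timeout=1)` from pyserial, for example `send_sms(port, "+10000000000", "Tomato: diseased (Late blight)")`.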

Application level

The mobile App was built in this work using the Flutter framework and Python code to diagnose healthy or diseased leaves of pepper, potato, and tomato plants. It can also distinguish among different leaf diseases based on the ResNet50 DL model built into the Raspberry Pi controller.

The Raspberry Pi interfaces with the camera to capture plant leaf images at the physical level. The collected images are augmented to extract their important features before classification. The augmented images are used to train, validate, and test the ResNet50 model, which identifies whether a leaf is healthy or diseased for plant disease detection. At the communication level, the Raspberry Pi controller uses its SIM800L GSM/GPRS module to transfer the classification result (healthy/diseased) to the mobile App in the application layer.

Experimental setup analysis

In this section, the hardware pilot of the proposed AI-IoT smart agriculture pivot is set up and operated experimentally for plant disease detection and treatment through the following processes: data augmentation, plant disease detection, plant disease treatment, and mobile application design.

Data augmentation

The captured images must be preprocessed to extract precise, clear image features before plant disease classification. Augmentation enables the ResNet50 classifier to learn the features of incoming images with very high accuracy and low loss. Images are enhanced using the following image processing techniques: rescaling, shear range, zoom range, horizontal flip, and resizing. Rescaling normalizes the pixel values from the range 0 to 255 to the range 0 to 1 by dividing each pixel value by 255, which makes the classifier converge faster during training. For example, if the original pixel values of a captured image are [120, 105], the rescaled pixel values become [0.471, 0.412].
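The rescaling arithmetic can be checked with a few lines of Python; the helper name is illustrative.

```python
def rescale(pixels, scale=255.0):
    """Map 8-bit pixel values in [0, 255] to [0, 1] by dividing by 255."""
    return [p / scale for p in pixels]

print([round(v, 3) for v in rescale([120, 105])])  # [0.471, 0.412]
```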

Shearing is a transformation that displaces each point in a fixed direction, effectively skewing the image. A shear range of 0.2 means the image is randomly sheared by an angle of up to 0.2 radians (approximately 11 degrees), introducing variability that makes the model robust to such transformations in real-world scenarios. A zoom range of 0.2 applies a random zoom of about ± 20% of the image size. This enables the model to recognize objects regardless of variations in their size, simulating a camera that is closer or farther away. Figure 2 illustrates the histogram of the original image pixels, while Fig. 3 shows the histogram of the image pixels after shearing with a range of 0.2, showing its impact on the training dataset compared with the original image pixels.
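The sampling of the random shear and zoom parameters can be sketched as follows. This is an illustrative re-implementation that follows the ranges stated in the text (shear angle in radians, zoom factors drawn from [0.8, 1.2]); it is not the internal code of any augmentation library, and the function names are hypothetical.

```python
import math
import random

SHEAR_RANGE = 0.2  # maximum shear angle in radians, as stated in the text
ZOOM_RANGE = 0.2   # zoom factors drawn from [1 - 0.2, 1 + 0.2]

def sample_shear_zoom(rng):
    """Draw one random shear angle and one zoom factor per axis."""
    shear = rng.uniform(-SHEAR_RANGE, SHEAR_RANGE)
    zx = rng.uniform(1 - ZOOM_RANGE, 1 + ZOOM_RANGE)
    zy = rng.uniform(1 - ZOOM_RANGE, 1 + ZOOM_RANGE)
    return shear, zx, zy

def affine_matrix(shear, zx, zy):
    """2x2 matrix for a horizontal shear followed by per-axis zoom:
    (x, y) -> (zx * (x + tan(shear) * y), zy * y)."""
    return [[zx, zx * math.tan(shear)],
            [0.0, zy]]

def transform_point(m, x, y):
    """Apply the 2x2 matrix to one pixel coordinate."""
    return (m[0][0] * x + m[0][1] * y, m[1][0] * x + m[1][1] * y)

print(round(math.degrees(SHEAR_RANGE), 2))  # 11.46
```

A full augmentation step would apply the sampled matrix to every pixel coordinate and interpolate the source image; the sketch stops at the parameters because those are what the shear and zoom ranges control.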

Horizontal flipping randomly mirrors images from left to right. It is a common augmentation when the left-to-right orientation of objects in the images does not imply a different category. It effectively doubles the variety of horizontal orientations the model encounters, improving generalization. Finally, all images are resized from 256 × 256 pixels to a uniform 224 × 224 pixels, as specified by the target_size parameter, giving the consistent input shape that the model requires for classification. The dataset used is available in the Kaggle repository: https://www.kaggle.com/datasets/vipoooool/new-plant-diseases-dataset.
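Horizontal flipping and resizing can likewise be sketched on an image stored as a list of pixel rows. The resize below uses nearest-neighbour sampling, which is an assumption, since the text does not state the interpolation method; the function names are illustrative.

```python
import random

def horizontal_flip(image):
    """Mirror every row left to right (image = list of pixel rows)."""
    return [list(reversed(row)) for row in image]

def random_flip(image, rng, p=0.5):
    """Flip with probability p, as done during training-time augmentation."""
    return horizontal_flip(image) if rng.random() < p else image

def resize_nearest(image, out_h=224, out_w=224):
    """Nearest-neighbour resize, e.g. from 256 x 256 to 224 x 224."""
    in_h, in_w = len(image), len(image[0])
    return [[image[(r * in_h) // out_h][(c * in_w) // out_w]
             for c in range(out_w)]
            for r in range(out_h)]
```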

Plant diseases detection

A robust deep learning model for plant disease classification is developed on the Raspberry Pi controller from the features extracted from the augmented images. We employ the ResNet50 model on the Raspberry Pi controller to analyze plant leaves and diagnose the disease type, providing a valuable tool for plant growth in the agricultural field. ResNet50 has emerged as a powerful deep learning model for the following reasons: (1) its ability to learn complex patterns from image data; (2) its deep residual learning framework, which enables training of deeper networks without the degradation problem; (3) a ResNet50 pre-trained on the ImageNet dataset provides rich features learned from a vast and diverse set of natural images, making it an ideal starting point for plant disease detection; and (4) its transfer learning ability allows a ResNet50 pre-trained on large-scale datasets to be fine-tuned for specific tasks, making it adaptable to diverse image classification problems.

The proposed ResNet50 model structure

As shown in Fig. 4, the ResNet50 model is structured from 50 layers, including a series of convolutional, pooling, and fully connected layers35. The convolutional layers are grouped into residual blocks with shortcut connections, which let the deep network learn residual functions and address the vanishing gradient problem. The output of a residual block can be described mathematically by Eq. (1)36:

\[ y = F(x, W) + x \quad (1) \]

where y is the output of the block, x is the input to the residual block, F(x,W) represents the operations performed within the block, typically convolutional layers, batch normalization, and activation functions, and W denotes the weights of the convolutional layers within the block. The input x is added to the output of F(x,W), which gives the block its “residual” nature and enables the network to learn identity functions effectively, and thus deeper feature representations without degradation.
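Eq. (1) can be illustrated on plain vectors. In this sketch F is a hypothetical two-layer transform (linear, ReLU, linear) standing in for the convolution, batch normalization, and activation layers of a real ResNet50 block, and the original ResNet's ReLU after the addition is omitted to match Eq. (1) as written. With all weights set to zero, F(x, W) = 0 and the block reduces to the identity, which is why residual blocks can pass signals through many layers without degradation.

```python
def matvec(w, x):
    """Multiply matrix w (list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def relu(v):
    """Element-wise ReLU."""
    return [max(0.0, a) for a in v]

def residual_block(x, w1, w2):
    """Eq. (1): y = F(x, W) + x, with F = W2 . relu(W1 . x) as a toy stand-in."""
    fx = matvec(w2, relu(matvec(w1, x)))     # residual branch F(x, W)
    return [f + xi for f, xi in zip(fx, x)]  # shortcut connection adds x
```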

Fig. 4. The proposed ResNet50 deep learning model structure35.

After the convolutional layers, ResNet50 typically uses a global average pooling (GAP) layer to reduce each feature map to a single number by averaging its elements. This reduces the spatial dimensions of the input feature maps while summarizing the essential statistics of each channel. Equation (2) gives the GAP layer37:

\[ \mathrm{GAP} = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} x_{ij} \quad (2) \]

where H and W are the height and width of the feature map, respectively, and \(x_{ij}\) is the value at position (i, j) in the feature map. After the GAP layer, a fully connected layer uses the reduced features from the GAP layer to produce the final detection, followed by a softmax activation function that selects among multiple output classes. This layer acts as a classifier that maps the reduced features of the plant image to one of the output classes. The output of the fully connected layer is given in Eq. (3)38:

\[ y = W_{fc} \cdot \mathrm{GAP} + b_{fc} \quad (3) \]

where \(\mathrm{GAP}\) is the output of the GAP layer, serving as the input to the fully connected layer, \(W_{fc}\) is the weight matrix of the fully connected layer, and \(b_{fc}\) is its bias vector.
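The classification head of Eqs. (2) and (3) can be traced on toy numbers. The following is a plain-Python sketch: GAP averages each channel's H × W map, the fully connected layer applies a weight matrix and bias, and softmax turns the result into class probabilities. In ResNet50 the GAP input for a 224 × 224 image is 2048 feature maps of size 7 × 7, and for this task the output layer would have 11 units.

```python
import math

def global_average_pool(feature_maps):
    """Eq. (2): average each H x W map to one number per channel."""
    return [sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
            for fmap in feature_maps]

def fully_connected(z, w_fc, b_fc):
    """Eq. (3): W_fc . z + b_fc, one output per row of W_fc."""
    return [sum(w * zi for w, zi in zip(row, z)) + b
            for row, b in zip(w_fc, b_fc)]

def softmax(logits):
    """Convert logits to probabilities (max subtracted for numerical stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]
```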

ResNet50 model programming

The ResNet50 classifier is adapted, trained, validated, and tested in Python on the Raspberry Pi controller for plant disease detection and classification. Adapting ResNet50 requires initializing the model and replacing the fully connected layer at the top of the ResNet50 structure with extra layers. Table 3 lists the initialization parameters of the ResNet50 model and the extra layers added to the original structure. About 25,940 images from the New Plant Diseases Dataset are used to train, validate, and test the model for plant disease detection.
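One common way to realize such an adaptation in Keras is sketched below. The head (global average pooling followed by an 11-unit softmax layer), the frozen backbone, and the Adam optimizer with categorical cross-entropy are assumptions for illustration; the paper's actual extra layers and settings are those listed in Tables 3 and 5. TensorFlow is imported inside the function so the module can be loaded without it.

```python
NUM_CLASSES = 11             # 3 healthy + 8 diseased classes
INPUT_SHAPE = (224, 224, 3)  # resized RGB leaf images

def build_model(num_classes=NUM_CLASSES, weights="imagenet", freeze_base=True):
    """Transfer-learning classifier on a ResNet50 backbone (illustrative head)."""
    import tensorflow as tf  # lazy import: only needed when a model is built

    base = tf.keras.applications.ResNet50(
        include_top=False,      # drop the 1000-class ImageNet classifier
        weights=weights,        # "imagenet" for pre-trained features, None for random
        input_shape=INPUT_SHAPE,
    )
    base.trainable = not freeze_base  # reuse ImageNet features, train only the head
    x = tf.keras.layers.GlobalAveragePooling2D()(base.output)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(inputs=base.input, outputs=outputs)
    model.compile(optimizer="adam",
                  loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

`build_model()` downloads the ImageNet weights on first use, while `build_model(weights=None)` builds the same architecture with random weights, which is convenient for checking shapes. After the head has converged, unfreezing some of the top backbone layers and recompiling with a lower learning rate is a common fine-tuning step.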

The dataset comprises 11 classes, 8 diseased and 3 healthy, as listed in Table 4. It is split 70%, 20%, and 10% for training, validation, and testing, that is, 18,158, 5,188, and 2,594 images, respectively. Each subset contains all 11 classes. Table 5 lists the training parameter settings.
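The split sizes follow directly from the percentages; using integer arithmetic avoids floating-point rounding surprises. The helper name is illustrative.

```python
def split_counts(total, percents):
    """Images per subset from integer percentages; any rounding
    remainder is given to the first subset (here, training)."""
    counts = {name: total * pct // 100 for name, pct in percents.items()}
    first = next(iter(counts))
    counts[first] += total - sum(counts.values())
    return counts

print(split_counts(25_940, {"train": 70, "validation": 20, "test": 10}))
# {'train': 18158, 'validation': 5188, 'test': 2594}
```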

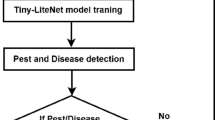

Plant diseases treatment

Once detection and classification are complete, the ResNet50 model can determine the status (healthy/diseased) of any new image captured by a camera. If a new image is classified as diseased, the Raspberry Pi uses the actuators (water pump, sprinklers, two pipelines, water tank, fertilizer tank, and switches) for plant disease treatment. As shown in Fig. 3, the Raspberry Pi controller is connected to a water pump motor and electronic switches to control the treatment process. It runs the water pump to draw liquid from the fertilizer tank into its dedicated pipeline and opens the sprinklers through the electronic switches to spray the liquid over the farm regions where diseased plants were detected. Otherwise, if the new image is classified as healthy, the Raspberry Pi turns off the water pump and closes the sprinklers through the electronic switches (solenoid valves). Table 6 summarizes the workflow of the treatment process using the Raspberry Pi controller.
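The treatment rule in this paragraph can be expressed as a small control function. This is a hypothetical sketch: the `Actuators` class stands in for the relay-driven pump and solenoid valves (on the Pi these would be GPIO outputs), class names are assumed to end in "healthy" for the three healthy classes, and opening the valve before starting the pump is a design choice of the sketch rather than something the text specifies.

```python
from dataclasses import dataclass, field

@dataclass
class Actuators:
    """Hypothetical stand-in for the pump and fertilizer solenoid valve."""
    pump_on: bool = False
    fertilizer_valve_open: bool = False
    log: list = field(default_factory=list)

    def set_pump(self, on):
        self.pump_on = on
        self.log.append(("pump", on))

    def set_fertilizer_valve(self, is_open):
        self.fertilizer_valve_open = is_open
        self.log.append(("fertilizer_valve", is_open))

def is_healthy(label):
    """Assumes the healthy class names end in 'healthy'."""
    return label.lower().endswith("healthy")

def treat(label, act):
    """Spray fertilizer where a diseased leaf is detected; otherwise stop."""
    if is_healthy(label):
        act.set_fertilizer_valve(False)  # close the sprinklers
        act.set_pump(False)              # and stop the pump
    else:
        act.set_fertilizer_valve(True)   # open the valve before pumping
        act.set_pump(True)
    return act.pump_on
```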

In addition, the Raspberry Pi controller acts as the traditional CU of the central pivot for water irrigation. Figure 5 shows the interfacing among the Raspberry Pi, sprinklers, and water pump to water the plants according to the scheduled irrigation periods and the crop type. As shown in Fig. 5, the Raspberry Pi controller runs the water pump to draw fresh water from the water tank into its dedicated pipeline and opens the corresponding sprinklers through the electronic switches to water the farm land with the water quantity and irrigation periods required for plant growth. Otherwise, it turns off the water pump and the sprinklers.
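Scheduled irrigation can be sketched in the same way. The schedule below, with two 30-minute windows per day for a crop named "tomato", is purely hypothetical; in practice the windows and durations would come from the water requirements of each crop.

```python
from datetime import datetime, time

# Hypothetical watering windows (start inclusive, end exclusive) per crop.
SCHEDULES = {
    "tomato": [(time(6, 0), time(6, 30)), (time(18, 0), time(18, 30))],
}

def irrigation_on(now, crop, schedules=SCHEDULES):
    """True if `now` (a datetime) falls inside one of the crop's watering windows."""
    t = now.time()
    return any(start <= t < end for start, end in schedules.get(crop, []))
```

The controller loop would call `irrigation_on(datetime.now(), crop)` periodically and switch the fresh-water pump and valves accordingly.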

Mobile application

Figure 6a shows the front-end page of the PLANTUM mobile App implemented in the application layer of the proposed AI-IoT smart agriculture pivot. PLANTUM lets the farmer test whether a pepper, potato, or tomato leaf is healthy or diseased and identify the type of plant disease using either the scan operation or the upload operation. In the scan operation, the App scans a real leaf image and classifies it into one of the 11 classes (3 healthy or 8 diseased), as shown in Fig. 6b,c, respectively. Figure 6b shows a healthy bell pepper leaf scanned by PLANTUM. The image is first captured by the App and then sent to the Raspberry Pi controller in the sensor field layer, where the ResNet50 model classifies it.

The ResNet50 model classifies the captured image as a healthy bell pepper, and this classification is returned to the PLANTUM App, which displays it to the farmer. Figure 6c shows a diseased bell pepper leaf with bacterial spot, scanned by the PLANTUM App. The scanned image is sent to the Raspberry Pi controller for classification. The ResNet50 model detects bacterial spot on the leaf as one of the 8 diseased classes, and the result is sent back to the PLANTUM App. The upload operation is similar to the scan operation, except that the PLANTUM App uploads existing plant images, such as images from agriculture websites, for plant disease classification.
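The encoding of a classification result for the PLANTUM App is not specified. One possible sketch assumes a compact JSON payload sent over the GPRS link. Its assumptions:

- The field names (`crop`, `status`, `disease`, `confidence`) are hypothetical.
- Label parsing assumes the `Crop___Condition` folder naming of the Kaggle New Plant Diseases Dataset.

```python
import json
from typing import Dict, Optional


def parse_label(label: str) -> Dict[str, str]:
    """Split a 'Crop___Condition' label (e.g. 'Tomato___Late_blight') into readable parts."""
    crop, _, condition = label.partition("___")
    return {"crop": crop.replace("_", " "), "condition": condition.replace("_", " ")}


def build_result_message(label: str, confidence: float) -> str:
    """Encode one classification result as compact JSON for the mobile App."""
    parts = parse_label(label)
    healthy = parts["condition"].lower() == "healthy"
    disease: Optional[str] = None if healthy else parts["condition"]
    message = {
        "crop": parts["crop"],
        "status": "healthy" if healthy else "diseased",
        "disease": disease,
        "confidence": round(confidence, 4),
    }
    # Compact separators keep the payload small over the GPRS link.
    return json.dumps(message, separators=(",", ":"))


if __name__ == "__main__":
    print(build_result_message("Pepper,_bell___Bacterial_spot", 0.99871))
    # {"crop":"Pepper, bell","status":"diseased","disease":"Bacterial spot","confidence":0.9987}
```

The same message serves both the scan and the upload operations. Only the image source differs.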

After building the proposed hardware pilot in our experimental lab model, we integrated the data augmentation, plant disease detection, plant disease treatment, and mobile App processes to realize the operation of the pilot as follows:

- Monitoring images of plant leaves in the farm field, captured by a camera attached to the Raspberry Pi.
- Augmenting the images (rescaling, shearing, zooming, flipping, and resizing) with Python code on the Raspberry Pi controller so that the model learns precise, robust features.
- Classifying each image (the plant disease detection process) into one of the 11 leaf classes using a ResNet50 model adapted, configured, and programmed in Python on the Raspberry Pi controller.
- Controlling the central pivot (the plant disease treatment process) through the Raspberry Pi controller and its actuators (sprinklers, pipelines, water tank, and electronic switches).
- Sending the leaf classification result from the Raspberry Pi controller to the PLANTUM mobile App using the SIM800L GSM/GPRS module.
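The augmentation operations in the list above can be illustrated on a single-channel image stored as nested lists. This is a dependency-free sketch with nearest-neighbour sampling. The zoom factor, shear coefficient, and output size are illustrative assumptions, since the pipeline's parameters are not given here. In practice a library routine would normally be used; for example, Keras's `ImageDataGenerator` exposes `rescale`, `shear_range`, `zoom_range`, and `horizontal_flip` arguments.

```python
from typing import List

Image = List[List[float]]  # single-channel image as rows of pixel values


def rescale(img: Image, factor: float = 1 / 255) -> Image:
    """Scale pixel intensities, e.g. from [0, 255] to [0, 1]."""
    return [[p * factor for p in row] for row in img]


def horizontal_flip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def resize(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize to out_h x out_w."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def zoom(img: Image, factor: float) -> Image:
    """Zoom in by `factor` (> 1): crop the centre, then resize back to the input size."""
    h, w = len(img), len(img[0])
    crop_h, crop_w = max(1, round(h / factor)), max(1, round(w / factor))
    top, left = (h - crop_h) // 2, (w - crop_w) // 2
    crop = [row[left:left + crop_w] for row in img[top:top + crop_h]]
    return resize(crop, h, w)


def shear(img: Image, k: float, fill: float = 0.0) -> Image:
    """Horizontal shear: output pixel (r, c) samples input column c - k * (r - centre)."""
    h, w = len(img), len(img[0])
    centre = (h - 1) / 2
    out = []
    for r in range(h):
        shift = k * (r - centre)
        row = []
        for c in range(w):
            src = round(c - shift)
            # Pixels sheared in from outside the image get the fill value.
            row.append(img[r][src] if 0 <= src < w else fill)
        out.append(row)
    return out


if __name__ == "__main__":
    demo = [[r * 4 + c for c in range(4)] for r in range(4)]
    print(horizontal_flip(demo)[0])  # [3, 2, 1, 0]
    print(zoom(demo, 2.0)[0])        # [5, 5, 6, 6]
```

During training, the zoom factor and shear coefficient would normally be drawn at random from a range for each image. Fixed values are used here so the output is deterministic.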

Experimental results

This section analyzes and evaluates the data augmentation and plant disease detection processes configured in the experimental setup, with the aim of achieving optimal plant disease detection.

Data augmentation results

Figures 7 and 8 show the data augmentation results for zooming and horizontal flipping, respectively. As shown in Fig. 7, zooming into the original image helps the model detect plant diseases more precisely. Figure 8 shows the transformation of the original image into a horizontally flipped image. This transformation diversifies the training dataset and improves model robustness.

Plant diseases detection results

The performance of the ResNet50 model for plant disease detection is reported through three measures: model accuracy, the confusion matrix, and the classification report.

Table 7 reports the accuracy of the ResNet50 model during training, validation, and testing on the 25,940 augmented images. The model was run in Python on the Raspberry Pi controller to classify each image into one of the 11 plant classes. The confusion matrix (Fig. 9) compares the predicted classes with the actual classes for the 2,594 testing images across the 11 classes.

The classification report in Table 8 lists the precision, recall, and F1-score for each of the 11 plant classes on the 2,594 testing images. Precision close to 1.00 indicates that the model makes very few false-positive errors for a class, whereas lower precision indicates more false positives. Recall close to 1.00 indicates that the model captures a high proportion of the actual positive instances of a class, whereas lower recall indicates more missed instances. The F1-score, which balances precision and recall, is consistently high across classes, showing the model's overall robustness in classification.

The high accuracy, together with balanced precision, recall, and F1-scores across classes, underscores the effectiveness of our model in classifying plant diseases. This performance is crucial for real-world applications, where accurate disease detection is paramount for effective crop management and yield optimization. The evaluation metrics in the classification report are calculated using Equations (4) to (7)39.
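Equations (4) to (7) are not reproduced in this section. The standard definitions of the four metrics are given below for a multi-class problem, in the order in which the next paragraph describes them. Each is computed per class by treating that class as positive and all others as negative. TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives. We assume, but cannot confirm from this section alone, that these match Equations (4) to (7).

```latex
\begin{align}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
\text{F1-score}  &= 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}
\end{align}
```

Substituting the first two into the last gives the equivalent form $\text{F1} = 2TP / (2TP + FP + FN)$.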

These metrics are essential in deep learning, particularly for classification models, as they help assess the performance of a model in various scenarios. Accuracy measures the overall correctness of the model by calculating the ratio of correctly predicted observations to the total number of observations. It provides a general measure of model performance but may not always be the best metric when dealing with imbalanced datasets. Precision focuses on the reliability of positive predictions by determining the proportion of correctly predicted positive cases out of all predicted positive cases. It is particularly important in situations where false positives need to be minimized, such as in medical diagnoses or fraud detection. Recall evaluates the model’s ability to correctly identify actual positive cases. It is calculated as the ratio of correctly predicted positive cases to the total number of actual positive cases. This metric is crucial when false negatives carry significant consequences, such as in disease detection or security applications. Finally, the F1-score serves as a balanced measure by taking the harmonic mean of precision and recall. It is especially useful for handling imbalanced datasets, where a high accuracy alone may not accurately reflect model performance. The F1-score ensures that both precision and recall are considered, making it a more comprehensive evaluation metric.
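The per-class metrics described above can be computed directly from a confusion matrix, as scikit-learn's `classification_report` does. The sketch below is dependency-free. Rows are assumed to index actual classes and columns predicted classes. The 3-class matrix in the usage example is a made-up toy, not data from Fig. 9.

```python
from typing import Dict, List

Matrix = List[List[int]]  # cm[actual][predicted]


def per_class_metrics(cm: Matrix) -> List[Dict[str, float]]:
    """One-vs-rest precision, recall and F1 for each class of a confusion matrix."""
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                       # actually k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append({"precision": precision, "recall": recall, "f1": f1})
    return results


def accuracy(cm: Matrix) -> float:
    """Fraction of all samples on the diagonal (correct predictions)."""
    total = sum(sum(row) for row in cm)
    return sum(cm[k][k] for k in range(len(cm))) / total


def macro_average(metrics: List[Dict[str, float]], key: str) -> float:
    """Unweighted mean of one metric across classes."""
    return sum(m[key] for m in metrics) / len(metrics)


if __name__ == "__main__":
    toy_cm = [[50, 0, 0],
              [0, 48, 2],
              [0, 1, 49]]
    print(f"accuracy = {accuracy(toy_cm):.3f}")  # accuracy = 0.980
    for k, m in enumerate(per_class_metrics(toy_cm)):
        print(k, {name: round(v, 4) for name, v in m.items()})
```

Averaging F1 over classes (macro F1) is generally not the same as taking the harmonic mean of macro-averaged precision and recall. Reports should therefore state which averaging is used.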

As seen in Fig. 9, most classes exhibit diagonal dominance, indicating that the model's predictions align well with the actual classes. In contrast, the few dark-colored off-diagonal elements record very small numbers of misclassifications. The diseased classes “Pepper, bell__Bacterial_spot”, “Potato__Late_blight”, and “Tomato__Tomato_Yellow_Leaf_Curl_Virus” achieve perfect predictions (precision, recall, and F1-score of 1.00) with zero misclassifications, reflecting the strong ability of the ResNet50 model to distinguish these classes.

Figure 10 shows the training and testing accuracy of the ResNet50 model over 30 epochs. The orange training curve starts at 52.34% and reaches 100%, while the green testing curve starts at 62.97% and rises to 99.8%. Figure 11 shows the training and testing loss of the ResNet50 model. The training loss decreases from 1.1838 to 0.00001 after 30 epochs, while the testing loss decreases from 0.8635 to 0.0244.

Finally, other DL models were trained, validated, and tested under the same conditions as the ResNet50 model. These conditions include the data augmentation techniques, image size, number of images, splitting ratio (70%, 20%, 10%), number of classes, and configuration parameters. ResNet50 outperforms the other DL models owing to the characteristics discussed in the previous section. As listed in Table 9, the accuracy of the ResNet50 model is higher than that of the VGG, CNN, and MobileNet models. Table 10 compares the proposed work with related works under the same conditions.
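A seeded shuffle followed by a 70/20/10 cut is one simple way to produce the split described above. The function name and seed are illustrative assumptions, and whether the split was stratified by class is not stated. Applied to 25,940 images, the ratios give 18,158 training, 5,188 validation, and 2,594 testing images. The last figure matches the test-set size reported earlier in this section.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(items: Sequence[T],
                  ratios: Tuple[float, float, float] = (0.7, 0.2, 0.1),
                  seed: int = 42) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once with a fixed seed, then cut into train/validation/test parts."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = round(n * ratios[0])
    n_val = round(n * ratios[1])
    # The test split takes the remainder so no sample is lost to rounding.
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    tr, va, te = split_dataset(range(25940))
    print(len(tr), len(va), len(te))  # 18158 5188 2594
```

A stratified variant would apply the same cut within each class. Splitting the original images before augmenting them also keeps augmented copies of one leaf from appearing in more than one split.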

Conclusion

This paper presents plant disease detection and treatment based on a proposed AI-IoT Smart Agriculture Pivot, with the aim of addressing crop production losses and food shortages in agriculture. The paper also reviewed enhancement techniques and technologies widely used for plant disease detection, together with their related works. It introduced the most common agricultural devices used for plant disease treatment, such as UAVs, robotics, and pivots. The proposed AI-IoT Smart Agriculture Pivot was architected, designed, implemented, programmed in Python, and controlled by a Raspberry Pi controller to achieve plant disease detection and treatment. Its software includes data augmentation and a ResNet50 DL model for training, validation, and testing on the augmented images from the NPDD source. The ResNet50 model achieves high accuracy in classifying the 11 plant classes compared with other DL models and outperforms related works on plant disease detection. Experimentally, ResNet50 achieved a testing accuracy of 99.8%, with an F1-score, recall, and precision of 99.91%, 99.92%, and 100%, respectively. A mobile application was developed to provide farmers with expert advice and guidelines on plant disease detection and treatment. Furthermore, the paper presents the automated spraying of fertilizers to treat identified plant diseases, with the Raspberry Pi controller driving the implemented hardware pilot to spray the liquid over the farmland. In future work, we aim to improve the water irrigation process of the proposed AI-IoT smart agriculture pivot relative to the traditional central pivot in order to preserve water resources. We also plan to extend the proposed pivot to pest-attack control and treatment.

Data availability

The dataset used in this paper is available in the Kaggle repository at https://www.kaggle.com/datasets/vipoooool/new-plant-diseases-dataset.

References

Fróna, D., Szenderák, J. & Rákos, M. H. The challenge of feeding the world. Sustainability 11(20), 5816 (2019).

Ray, D. K., Mueller, N. D., West, P. C. & Foley, J. A. Yield trends are insufficient to double global crop production by 2050. PLoS One 8(6), 1–8 (2013).

Ristaino, J. B. et al. The persistent threat of emerging plant disease pandemics to global food security. Proc. Natl. Acad. Sci. USA 118(23), e2022239118 (2021).

Hassan, S. M., Maji, A. K., Jasiński, M., Leonowicz, Z. & Jasińska, E. Identification of plant-leaf diseases using CNN and transfer-learning approach. Electronics 10(12), 1388 (2021).

Meena, S. D., Kumar, K. A. Y., Mandava, D., Sri, K. B., Panda, L. et al. Plant diseases detection using transfer learning. In Proceedings of fourth international conference on computer and communication technologies, pp. 1–16 (2023).

Ali, A., Yaseen, M., Aljanabi, M. & Abed, S. Transfer learning: A new promising techniques. Mesopotamian J. Big Data 2023, 29–30 (2023).

Deore, B. D., Kasar, P., Bhamare, M. A. & Sahane, M. Drones for smart agriculture: A technical report. Int. J. Res. Appl. Sci. Eng. Technol. (IJRASET) 6(4), 341–346 (2018).

Unpaprom, Y., Dussadeeb, N. & Ramaraj, R. Modern agriculture drones, The Development of Smart Farmers, pp. 13–19 (2018).

Talaviya, T., Shah, D., Patel, N., Yagnik, H. & Shah, M. Implementation of artificial intelligence in agriculture for optimisation of irrigation and application of pesticides and herbicides. Artif. Intell. Agric. 4, 58–73 (2020).

Qazi, S., Khawaja, B. A. & Farooq, Q. U. IoT-equipped and AI-enabled next generation smart agriculture: A critical review, current challenges and future trends. IEEE Access 10, 21219–21235 (2022).

Xiang, H. & Tian, L. Development of a low-cost agricultural remote sensing system based on an autonomous unmanned aerial vehicle (UAV). Biosys. Eng. 108(2), 174–190 (2011).

Boursianis, A. D. et al. Internet of Things (IoT) and agricultural unmanned aerial vehicles (UAVs) in smart farming: A comprehensive review. Internet Things 18, 100187 (2020).

Friha, O., Ferrag, M. A., Shu, L., Maglaras, L. & Wang, X. Internet of Things for the future of smart agriculture: A comprehensive survey of emerging technologies. IEEE/CAA J. Automatica Sinica 8(4), 718–752 (2021).

Kumar, A. et al. Artificial intelligence, internet of Things (IoT) and smart agriculture for sustainable farming: A review. Ann. Plant Sci. 11(11), 5512–5564 (2022).

Alzubi, A. A. & Galyna, K. Artificial intelligence and internet of Things for sustainable farming and smart agriculture. IEEE Access 11, 78686–78692 (2023).

Barnett, J., Duke, M., Au, C. K. & Lim, S. H. Work distribution of multiple cartesian robot arms for kiwifruit harvesting. Comput. Electron. Agric. 169, 105202 (2020).

Steward, B. L., Gai, J. Y. & Tang, L. The use of agricultural robots in weed management and control. In Robotics and automation for improving agriculture, pp. 1–25 (Burleigh Dodds Science Publishing, Cambridge, UK, 2019).

Santos, P. G. D. et al. Fleets of robots for environmentally-safe pest control in agriculture. Precision Agric. 18(4), 574–614 (2017).

Ponce, D. F. Y., Salcedo, J. V., Montalvo, P. D. R. & Sanchis, J. Mobile robotics in smart farming: Current trends and applications. Front. Artif. Intell. 6, 1–13 (2023).

Banerjee, S. & Mondal, A. An intelligent approach towards plant leaf disease detection through different convolutional neural Networks. Int. J. Intell. Syst. Appl. Eng. 12(2), 536–546 (2024).

Sun, X. et al. Research on plant disease identification based on CNN. Cognitive Robot. 2, 155–163 (2022).

Krishnan, R. & Julie, E. Computer aided detection of leaf disease in agriculture using convolution neural network-based squeeze and excitation network. Automatika 64(4), 1038–1053 (2023).

Jerome, N., Jothiraj, S., Kandasamy, S. & Ramachandran, D. An effective approach for plant disease detection using assessment-based convolutional neural networks (A-CNN). J. Adv. Res. Appl. Sci. Eng. Technol. 31(3), 155–172 (2023).

Lee, T. Y., Lin, I. A., Yu, J. Y., Yang, J. M. & Chang, Y. C. High efficiency disease detection for potato leaf with convolutional neural network. SN Comput. Sci. 2, 297 (2021).

Agarwal, M., Sinha, A., Gupta, S., Mishra, D. & Mishra, R. Potato crop disease classification using convolutional neural network. Smart Syst. IoT: Innovat. Comput. 141, 391–400 (2019).

Mustafa, H. et al. Pepper bell leaf disease detection and classification using optimized convolutional neural network. Multimedia Tools Appl. 82, 12065–12080 (2023).

Mahamud, F. et al. Bell pepper leaf disease classification using convolutional neural network. Conf. Intell. Comput. Optimiz. 569, 75–86 (2022).

Shilpa, A., Muneeswaran, V. & Rathinam, D. D. K. A precise and autonomous irrigation system for agriculture: IoT based self-propelled center pivot irrigation system. In International conference on advanced computing & communication systems (ICACCS), pp. 533–538, Coimbatore, India, (2019).

Ragab, M. A., Badreldeen, M. M., Sedhom, A. & Mamdouh, W. M. IoT based smart irrigation system. Int. J. Ind. Sustain. Dev. (IJISD) 3(1), 76–86 (2022).

Matilla, D. M., Murciego, Á. L., Bravo, D. M. J., Mendes, A. S. & Leithardt, V. R. Q. Low cost center pivot irrigation monitoring systems based on IoT and LoRaWAN technologies. In 2020 IEEE international workshop on metrology for agriculture and forestry (MetroAgriFor), pp. 262–267, Trento, Italy, (2020).

Debauche, O. et al. Edge AI-IoT pivot irrigation, plant diseases, and pests identification. Int. Conf. Emerg. Ubiquitous Syst. Pervasive Netw. (EUSPN) 177, 40–48 (2020).

Debauche, O., Mahmoudi, S., Mahmoudi, S. A., Manneback, P. & Lebeau, F. A new edge Architecture for AI-IoT services deployment. Int. Conf. Mobile Syst. Pervasive Comput. (MobiSPC) 175, 10–19 (2020).

SIM800L datasheet. https://cdn.shopify.com/s/files/1/0672/9409/files/Datasheet_SIM800L.pdf?v=1601544539. Accessed June 2024.

Raspberry Pi 4 datasheet. https://datasheets.raspberrypi.com/rpi4/raspberry-pi-4-datasheet.pdf. Accessed June 2024.

Bhatti, A. et al. Explicit content detection system: An approach towards a safe and ethical environment. Appl. Comput. Intell. Soft Comput. 2018, 1–13 (2018).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In IEEE Conference on computer vision and pattern recognition (CVPR), pp. 770–778, Las Vegas, NV, USA, (2016).

Krishnan, R. & Julie, E. Computer aided detection of leaf disease in agriculture using convolution neural network-based squeeze and excitation network. Automatika 64(4), 1038–1053 (2023).

He, K., Zhang, X., Ren, S. & Sun, J. Delving deep into rectifiers: surpassing human-level performance on imagenet classification. In 2015 IEEE International Conference on Computer Vision (ICCV), pp. 1026–1034, Santiago, Chile, (2015).

Mohsen, S. et al. Brain tumor classification using hybrid single image super-resolution technique with resnext101_32 × 8d and vgg19 pre-trained models. IEEE Access 11, 55582–55595 (2023).

Acknowledgements

The authors extend their appreciation to Taif University, Saudi Arabia, for supporting this work through project number (TU-DSPP-2024-17).

Author information

Contributions

All authors contributed to writing the original draft of the manuscript, conceptualization, methodology, design of the proposed work, analysis of the experimental results, software, dataset collection, resources, visualization, implementation, similarity reduction, manuscript editing, review of the writing and grammar, validation of the results, and supervision of the proposed work.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ibrahim, A.S., Mohsen, S., Selim, I.M. et al. AI-IoT based smart agriculture pivot for plant diseases detection and treatment. Sci Rep 15, 16576 (2025). https://doi.org/10.1038/s41598-025-98454-6

DOI: https://doi.org/10.1038/s41598-025-98454-6
