Abstract

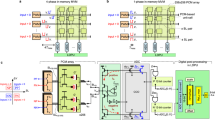

Large language models (LLMs), with their remarkable generative capacities, have greatly impacted a range of fields, but they face scalability challenges due to their large parameter counts, which result in high costs for training and inference. The trend of increasing model sizes is exacerbating these challenges, particularly in terms of memory footprint, latency and energy consumption. Here we explore the deployment of ‘mixture of experts’ (MoEs) networks—networks that use conditional computing to keep computational demands low despite having many parameters—on three-dimensional (3D) non-volatile memory (NVM)-based analog in-memory computing (AIMC) hardware. When combined with the MoE architecture, this hardware, utilizing stacked NVM devices arranged in a crossbar array, offers a solution to the parameter-fetching bottleneck typical in traditional models deployed on conventional von-Neumann-based architectures. By simulating the deployment of MoEs on an abstract 3D AIMC system, we demonstrate that, due to their conditional compute mechanism, MoEs are inherently better suited to this hardware than conventional, dense model architectures. Our findings suggest that MoEs, in conjunction with emerging 3D NVM-based AIMC, can substantially reduce the inference costs of state-of-the-art LLMs, making them more accessible and energy-efficient.
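The conditional-compute idea in the abstract can be made concrete with a small sketch. The snippet below is illustrative only: the layer sizes, the number of experts, and the softmax top-k gate are assumptions chosen for clarity, not the configuration or gating function used in the paper. It shows that a router activates only a few experts per token, so the weights used per token are a fraction of the weights stored.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def top_k_route(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Assumption: softmax gating with renormalization over the selected experts.
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def ffn_params(d_model, d_hidden):
    """Weight count of a two-layer feed-forward block (biases ignored)."""
    return 2 * d_model * d_hidden


# Hypothetical sizes, chosen only for illustration.
D_MODEL, D_HIDDEN, NUM_EXPERTS, TOP_K = 512, 2048, 8, 2

random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in router output
routes = top_k_route(logits, TOP_K)

total_params = NUM_EXPERTS * ffn_params(D_MODEL, D_HIDDEN)  # weights that must be stored
active_params = TOP_K * ffn_params(D_MODEL, D_HIDDEN)       # weights touched for one token

print("selected experts and weights:", routes)
print(f"stored expert weights: {total_params}, used per token: {active_params}")
```

With these assumed sizes, each token touches a quarter of the stored expert weights (2 of 8 experts). On hardware where weights stay resident in memory, the unused experts do not have to be fetched, which is the property the abstract relies on.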

Data availability

Data were generated by the presented simulator and by evaluating the models trained on the WikiText-103 dataset. The WikiText-103 dataset is publicly available at https://huggingface.co/datasets/Salesforce/wikitext. Source data are provided with this paper (ref. 57).

Code availability

The code used to generate the results of this study is publicly available from https://github.com/IBM/analog-moe (ref. 58) and https://github.com/IBM/3D-CiM-LLM-Inference-Simulator (ref. 59).

References

Jiang, A. Q. et al. Mixtral of experts. Preprint at https://arxiv.org/abs/2401.04088 (2024).

Touvron, H. et al. Llama 2: open foundation and fine-tuned chat models. Preprint at https://arxiv.org/abs/2307.09288 (2024).

Gemini Team Google et al. Gemini: a family of highly capable multimodal models. Preprint at https://arxiv.org/abs/2312.11805 (2023).

Brown, T. B. et al. Language models are few-shot learners. In Proc. Advances in Neural Information Processing Systems Vol. 33 (eds Larochelle, H. et al.) 1877–1901 (Curran Associates, 2020).

Kaplan, J. et al. Scaling laws for neural language models. Preprint at https://arxiv.org/abs/2001.08361 (2020).

Hoffmann, J. et al. An empirical analysis of compute-optimal large language model training. In Proc. Advances in Neural Information Processing Systems Vol. 35 (eds Koyejo, S. et al.) (Curran Associates, 2022).

Chowdhery, A. et al. PaLM: scaling language modeling with pathways. J. Mach. Learn. Res. 24, 11324–11436 (2023).

Jordan, M. & Jacobs, R. Hierarchical mixtures of experts and the EM algorithm. In Proc. 1993 International Conference on Neural Networks (IJCNN-93-Nagoya, Japan) Vol. 2, 1339–1344 (IEEE, 1993); https://doi.org/10.1109/IJCNN.1993.716791

Jacobs, R. A., Jordan, M. I., Nowlan, S. J. & Hinton, G. E. Adaptive mixtures of local experts. Neural Comput. 3, 79–87 (1991).

Shazeer, N. et al. Outrageously large neural networks: the sparsely-gated mixture-of-experts layer. In Proc. International Conference on Learning Representations (ICLR, 2017); https://openreview.net/forum?id=B1ckMDqlg

Fedus, W., Zoph, B. & Shazeer, N. Switch transformers: scaling to trillion parameter models with simple and efficient sparsity. J. Mach. Learn. Res. 23, 5232–5270 (2022).

Raffel, C. et al. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 21, 5485–5551 (2020).

Du, N. et al. GLaM: efficient scaling of language models with mixture-of-experts. In Proc. 39th International Conference on Machine Learning, Proceedings of Machine Learning Research Vol. 162, 5547–5569 (PMLR, 2022).

Clark, A. et al. Unified scaling laws for routed language models. In Proc. 39th International Conference on Machine Learning Vol. 162 (eds Chaudhuri, K. et al.) 4057–4086 (PMLR, 2022).

Ludziejewski, J. et al. Scaling laws for fine-grained mixture of experts. In Proc. ICLR 2024 Workshop on Mathematical and Empirical Understanding of Foundation Models (PMLR, 2024); https://openreview.net/forum?id=Iizr8qwH7J

Csordás, R., Irie, K. & Schmidhuber, J. Approximating two-layer feedforward networks for efficient transformers. In Proc. Association for Computational Linguistics: EMNLP 2023 (eds Bouamor, H. et al.) 674–692 (ACL, 2023); https://doi.org/10.18653/v1/2023.findings-emnlp.49

Reuther, A. et al. AI and ML accelerator survey and trends. In Proc. 2022 IEEE High Performance Extreme Computing Conference (HPEC) 1–10 (IEEE, 2022); https://doi.org/10.1109/HPEC55821.2022.9926331

Sebastian, A., Le Gallo, M., Khaddam-Aljameh, R. & Eleftheriou, E. Memory devices and applications for in-memory computing. Nat. Nanotechnol. 15, 529–544 (2020).

Lanza, M. et al. Memristive technologies for data storage, computation, encryption and radio-frequency communication. Science 376, eabj9979 (2022).

Mannocci, P. et al. In-memory computing with emerging memory devices: status and outlook. APL Mach. Learn. 1, 010902 (2023).

Huang, Y. et al. Memristor-based hardware accelerators for artificial intelligence. Nat. Rev. Electr. Eng. 1, 286–299 (2024).

Le Gallo, M. et al. A 64-core mixed-signal in-memory compute chip based on phase-change memory for deep neural network inference. Nat. Electron. 6, 680–693 (2023).

Ambrogio, S. et al. An analog-AI chip for energy-efficient speech recognition and transcription. Nature 620, 768–775 (2023).

Wan, W. et al. A compute-in-memory chip based on resistive random-access memory. Nature 608, 504–512 (2022).

Zhang, W. et al. Edge learning using a fully integrated neuro-inspired memristor chip. Science 381, 1205–1211 (2023).

Wen, T.-H. et al. Fusion of memristor and digital compute-in-memory processing for energy-efficient edge computing. Science 384, 325–332 (2024).

Fick, L., Skrzyniarz, S., Parikh, M., Henry, M. B. & Fick, D. Analog matrix processor for edge AI real-time video analytics. In Proc. 2022 IEEE International Solid-State Circuits Conference (ISSCC) Vol. 65, 260–262 (IEEE, 2022); https://doi.org/10.1109/ISSCC42614.2022.9731773

Arnaud, F. et al. High density embedded PCM cell in 28 nm FDSOI technology for automotive micro-controller applications. In Proc. 2020 IEEE International Electron Devices Meeting (IEDM) 24.2.1–24.2.4 (IEEE, 2020); https://doi.org/10.1109/IEDM13553.2020.9371934

Lee, S. et al. A 1 Tb 4b/cell 64-stacked-WL 3D NAND flash memory with 12 MB/s program throughput. In Proc. 2018 IEEE International Solid-State Circuits Conference (ISSCC) 340–342 (IEEE, 2018); https://doi.org/10.1109/ISSCC.2018.8310323

Park, J.-W. et al. A 176-stacked 512 Gb 3b/cell 3D-NAND flash with 10.8 Gb/mm2 density with a peripheral circuit under cell array architecture. In Proc. 2021 IEEE International Solid-State Circuits Conference (ISSCC) Vol. 64, 422–423 (IEEE, 2021); https://doi.org/10.1109/ISSCC42613.2021.9365809

Lee, S.-T. & Lee, J.-H. Neuromorphic computing using NAND flash memory architecture with pulse width modulation scheme. Front. Neurosci. 14, 571292 (2020).

Bavandpour, M., Sahay, S., Mahmoodi, M. R. & Strukov, D. B. 3D-aCortex: an ultra-compact energy-efficient neurocomputing platform based on commercial 3D-NAND flash memories. Neuromorphic Comput. Eng. 1, 014001 (2021).

Shim, W. & Yu, S. Technological design of 3D NAND-based compute-in-memory architecture for GB-scale deep neural network. IEEE Electron Device Lett. 42, 160–163 (2020).

Hsieh, C.-C. et al. Chip demonstration of a high-density (43 Gb) and high-search-bandwidth (300 Gb/s) 3D NAND based in-memory search accelerator for Ternary Content Addressable Memory (TCAM) and proximity search of Hamming distance. In Proc. 2023 IEEE Symposium on VLSI Technology and Circuits (VLSI Technology and Circuits) 1–2 (IEEE, 2023); https://doi.org/10.23919/VLSITechnologyandCir57934.2023.10185361

Huo, Q. et al. A computing-in-memory macro based on three-dimensional resistive random-access memory. Nat. Electron. 5, 469–477 (2022).

Jain, S. et al. A heterogeneous and programmable compute-in-memory accelerator architecture for analog-AI using dense 2-D mesh. IEEE Trans. Very Large Scale Integr. VLSI Syst. 31, 114–127 (2023).

Cui, C. et al. A survey on multimodal large language models for autonomous driving. In Proc. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) Workshops 958–979 (IEEE, 2024).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In Proc. 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies Vol. 1, 4171–4186 (ACL, 2019); https://doi.org/10.18653/v1/N19-1423

Kim, W., Son, B. & Kim, I. ViLT: vision-and-language transformer without convolution or region supervision. In Proc. 38th International Conference on Machine Learning Vol. 139 (eds Meila, M. & Zhang, T.) 5583–5594 (PMLR, 2021); https://proceedings.mlr.press/v139/kim21k.html

Alayrac, J.-B. et al. Flamingo: a visual language model for few-shot learning. In Proc. Advances in Neural Information Processing Systems Vol. 35 (eds Koyejo, S. et al.) 23716–23736 (Curran Associates, 2022); https://proceedings.neurips.cc/paper_files/paper/2022/file/960a172bc7fbf0177ccccbb411a7d800-Paper-Conference.pdf

Pope, R. et al. Efficiently scaling transformer inference. In Proc. Machine Learning and Systems Vol. 5 (eds Song, D. et al.) 606–624 (Curran Associates, 2023); https://proceedings.mlsys.org/paper_files/paper/2023/file/c4be71ab8d24cdfb45e3d06dbfca2780-Paper-mlsys2023.pdf

Choquette, J., Gandhi, W., Giroux, O., Stam, N. & Krashinsky, R. NVIDIA A100 Tensor Core GPU: performance and innovation. IEEE Micro 41, 29–35 (2021).

Radford, A. et al. Language models are unsupervised multitask learners. Semantic Scholar https://api.semanticscholar.org/CorpusID:160025533 (2019).

Merity, S., Xiong, C., Bradbury, J. & Socher, R. Pointer sentinel mixture models. In Proc. International Conference on Learning Representations (ICLR, 2017); https://openreview.net/forum?id=Byj72udxe

Vasilopoulos, A. et al. Exploiting the state dependency of conductance variations in memristive devices for accurate in-memory computing. IEEE Trans. Electron Devices 70, 6279–6285 (2023).

Paszke, A. et al. PyTorch: an imperative style, high-performance deep learning library. In Proc. Advances in Neural Information Processing Systems Vol. 32, 8024–8035 (Curran Associates, 2019).

Reed, J. K., DeVito, Z., He, H., Ussery, A. & Ansel, J. Torch.fx: practical program capture and transformation for deep learning in Python. Preprint at https://arxiv.org/abs/2112.08429 (2021).

Vaswani, A. et al. Attention is all you need. In Proc. Advances in Neural Information Processing Systems Vol. 30 (eds Guyon, I. et al.) (Curran Associates, 2017); https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf

Fisher, J. A. Trace scheduling: a technique for global microcode compaction. IEEE Trans. Comput. C-30, 478–490 (1981).

Bernstein, D. & Rodeh, M. Global instruction scheduling for superscalar machines. In Proc. ACM SIGPLAN 1991 Conference on Programming Language Design and Implementation PLDI ’91 241–255 (ACM, 1991); https://doi.org/10.1145/113445.113466

Joshi, V. et al. Accurate deep neural network inference using computational phase-change memory. Nat. Commun. 11, 2473 (2020).

Kudo, T. & Richardson, J. SentencePiece: a simple and language independent subword tokenizer and detokenizer for neural text processing. In Proc. 2018 Conference on Empirical Methods in Natural Language Processing: System Demonstrations (eds Blanco, E. & Lu, W.) 66–71 (Association for Computational Linguistics, 2018).

Tillet, P., Kung, H. T. & Cox, D. Triton: an intermediate language and compiler for tiled neural network computations. In Proc. 3rd ACM SIGPLAN International Workshop on Machine Learning and Programming Languages 10–19 (ACM, 2019); https://doi.org/10.1145/3315508.3329973

Le Gallo, M. et al. Using the IBM analog in-memory hardware acceleration kit for neural network training and inference. APL Mach. Learn. 1, 041102 (2023).

Büchel, J. et al. AIHWKIT-lightning: a scalable HW-aware training toolkit for analog in-memory computing. In Proc. Advances in Neural Information Processing Systems 2024 Workshop, Machine Learning with new Compute Paradigms (Curran Associates, 2024); https://openreview.net/forum?id=QNdxOgGmhR

Büchel, J. et al. Gradient descent-based programming of analog in-memory computing cores. In Proc. 2022 International Electron Devices Meeting (IEDM) 33.1.1–33.1.4 (IEEE, 2022); https://doi.org/10.1109/IEDM45625.2022.10019486

Büchel, J. Source data for figures in ‘Efficient scaling of large language models with mixture of experts and 3D analog in-memory computing’. Zenodo https://doi.org/10.5281/zenodo.14146703 (2024).

Büchel, J. IBM/analog-moe: code release. Zenodo https://doi.org/10.5281/zenodo.14025079 (2024).

Büchel, J. & Vasilopoulos, A. IBM/3D-CiM-LLM-Inference-Simulator: code release. Zenodo https://doi.org/10.5281/zenodo.14025077 (2024).

Goda, A. 3D NAND technology achievements and future scaling perspectives. IEEE Trans. Electron Devices 67, 1373–1381 (2020).

Lacaita, A. L., Spinelli, A. S. & Compagnoni, C. M. High-density solid-state storage: a long path to success. In Proc. 2021 IEEE Latin America Electron Devices Conference (LAEDC) 1–4 (IEEE, 2021); https://doi.org/10.1109/LAEDC51812.2021.9437865

Shoeybi, M. et al. Megatron-LM: training multi-billion parameter language models using model parallelism. Preprint at https://arxiv.org/abs/1909.08053 (2020).

Acknowledgements

We thank G. Atwood, A. Goda and D. Mills from Micron for fruitful discussions and technical insights. We also thank R. Csordás from Stanford for valuable help in the reproduction of the Sigma-MoE results. We thank P. Diener for helping with illustrations. We also thank J. Burns from IBM and M. Helm from Micron for managerial support. We received no specific funding for this work.

Author information

Contributions

J.B., A.V., I.B., A.R., M.L.G. and A.S. initiated the research effort. J.B. and A.V. implemented the high-level simulator. J.B. implemented the GPU kernels for training MoEs and conducted the experiments. W.A.S., J.B. and G.W.B. compared the high-level simulator to a more detailed simulator developed for 2D AIMC. B.F. and H.C. provided insights on 3D in-memory computing. H.T., V.N. and A.S. provided managerial support. J.B. and A.V. wrote the manuscript with input from all authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Computational Science thanks Erika Covi, Anand Subramoney and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editor: Jie Pan, in collaboration with the Nature Computational Science team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Notes 1–8.

Supplementary Data 1

Source data of Supplementary Fig. 1.

Supplementary Data 2

Source data of Supplementary Fig. 2.

Supplementary Data 3

Source data of Supplementary Fig. 3.

Supplementary Data 4

Source data of Supplementary Fig. 4.

Supplementary Data 5

Source data of Supplementary Fig. 5.

Supplementary Data 6

Source data of Supplementary Fig. 6.

Supplementary Data 7

Source data of Supplementary Fig. 7.

Supplementary Data 8

Source data of Supplementary Fig. 8.

Supplementary Data 9

Source data of Supplementary Fig. 9.

Supplementary Data 10

Source data of Supplementary Fig. 10.

Source data

Source Data Fig. 1

Source data of Fig. 1.

Source Data Fig. 3

Source data of Fig. 3.

Source Data Fig. 4

Source data of Fig. 4.

Source Data Fig. 5

Source data of Fig. 5.

Source Data Fig. 6

Source data of Fig. 6.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Büchel, J., Vasilopoulos, A., Simon, W.A. et al. Efficient scaling of large language models with mixture of experts and 3D analog in-memory computing. Nat Comput Sci 5, 13–26 (2025). https://doi.org/10.1038/s43588-024-00753-x

DOI: https://doi.org/10.1038/s43588-024-00753-x

This article is cited by

- Compute-in-memory implementation of state space models for event sequence processing. Nature Communications (2026)

- Efficient large language model with analog in-memory computing. Nature Computational Science (2025)

- The design of analogue in-memory computing tiles. Nature Electronics (2025)

- Evolution of Neural Network Models and Computing-in-Memory Architectures. Archives of Computational Methods in Engineering (2025)

- Revisiting the Role of Review Articles in the Age of AI-Agents: Integrating AI-Reasoning and AI-Synthesis Reshaping the Future of Scientific Publishing. Bratislava Medical Journal (2025)