Abstract

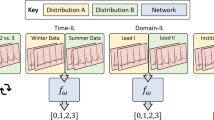

The advent of vision–language models fosters interactive conversations between artificial intelligence-enabled models and humans. However, applying these models in the clinic faces challenges related to large-scale training data as well as financial and computational resources. Here we propose CLOVER, a cost-effective instruction learning framework for conversational pathology. CLOVER trains a lightweight module and uses instruction tuning while freezing the parameters of the large language model. Instead of using costly GPT-4, we propose well-designed prompts on GPT-3.5 for building generation-based instructions, emphasizing the utility of pathological knowledge derived from Internet sources. We construct a high-quality set of template-based instructions in the context of digital pathology. Using two benchmark datasets, our findings reveal the strength of hybrid-form, pathological visual question–answer instructions. CLOVER outperforms baselines that possess 37 times more training parameters and exhibits few-shot capacity on an external clinical dataset. CLOVER could thus accelerate the adoption of rapid conversational applications in digital pathology.
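As a rough illustration of what "hybrid-form" instructions could look like in practice, the sketch below mixes template-based records (a fixed question paired with an existing caption) with generation-based question–answer records. Everything in it is an assumption made for illustration: the question templates, the `Instruction` record, the function names and the example captions are hypothetical and are not taken from the CLOVER code base, and the generation-based pairs are passed in precomputed rather than produced by a language model.

```python
import random
from dataclasses import dataclass

# Hypothetical question templates (assumption; not the paper's actual templates).
QUESTION_TEMPLATES = [
    "What does this pathology image show?",
    "Describe the findings in this pathology image.",
    "Can you summarize what is visible in this slide?",
]


@dataclass
class Instruction:
    image_path: str
    question: str
    answer: str
    source: str  # "template" or "generated"


def template_instruction(image_path, caption, rng):
    """Pair an existing caption with a randomly chosen question template."""
    return Instruction(image_path, rng.choice(QUESTION_TEMPLATES), caption, "template")


def hybrid_instructions(captioned_images, generated_qa, seed=0):
    """Mix template-based records with generation-based question-answer records.

    captioned_images: iterable of (image_path, caption) pairs.
    generated_qa: iterable of (image_path, question, answer) triples, assumed
        to have been produced beforehand (for example by prompting an LLM).
    """
    rng = random.Random(seed)
    records = [template_instruction(path, cap, rng) for path, cap in captioned_images]
    records += [Instruction(path, q, a, "generated") for path, q, a in generated_qa]
    rng.shuffle(records)  # interleave the two instruction forms
    return records


if __name__ == "__main__":
    # Made-up example inputs, for demonstration only.
    demo = hybrid_instructions(
        [("a.png", "Tumor cells with frequent mitotic figures.")],
        [("b.png", "Is necrosis present?", "Yes.")],
    )
    for record in demo:
        print(record.source, "|", record.question, "|", record.answer)
```

Keeping the `source` field on each record makes it straightforward to compare template-only, generation-only and hybrid training sets, which is the kind of comparison the abstract alludes to.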

Data availability

QUILT-1M, QUILT-VQA and Quilt-instruct (ref. 27) can be accessed via GitHub at https://quilt1m.github.io/. LLaVA-Med-Pathology (ref. 18) can be accessed via GitHub at https://github.com/microsoft/LLaVA-Med. PathVQA (ref. 34) can be downloaded via HuggingFace at https://huggingface.co/datasets/flaviagiammarino/path-vqa. The clinical dataset from Xinhua Hospital is available upon request from the corresponding author (zhangshaoting@pjlab.org.cn) owing to the hospital's privacy protection restrictions. Requests will be reviewed to ensure confidentiality. A data-sharing agreement must be signed before data release. Source data are provided with this paper.

Code availability

The code, instruction datasets and models are publicly available via GitHub at https://github.com/JLINEkai/CLOVER (ref. 57).

References

Zhang, Y. et al. Data-centric foundation models in computational healthcare: a survey. Preprint at https://arxiv.org/abs/2401.02458 (2024).

van Sonsbeek, T., Derakhshani, M. M., Najdenkoska, I., Snoek, C. G. M. & Worring, M. Open-ended medical visual question answering through prefix tuning of language models. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2023 Lecture Notes in Computer Science, vol 14224 (eds Greenspan, H. et al.) https://doi.org/10.1007/978-3-031-43904-9_70 (Springer, Cham, 2023).

Li, P., Liu, G., Tan, L., Liao, J. & Zhong, S. Self-supervised vision-language pretraining for medial visual question answering. In IEEE 20th International Symposium on Biomedical Imaging https://doi.org/10.1109/ISBI53787.2023.10230743 (IEEE, 2023).

Thirunavukarasu, A. J. et al. Large language models in medicine. Nat. Med. 29, 1930–1940 (2023).

Singhal, K. et al. Large language models encode clinical knowledge. Nature 620, 172–180 (2023).

Wei, J. et al. Chain-of-thought prompting elicits reasoning in large language models. In 36th Conference on Neural Information Processing Systems (NeurIPS 2022) https://openreview.net/pdf?id=_VjQlMeSB_J (NeurIPS, 2022).

Schulman, J. et al. ChatGPT: optimizing language models for dialogue. OpenAI Blog https://openai.com/index/chatgpt (2022).

Achiam, J. et al. GPT-4 technical report. Preprint at https://arxiv.org/abs/2303.08774 (2023).

Liu, H., Li, C., Wu, Q. & Lee, Y. J. Visual instruction tuning. In 37th Conference on Neural Information Processing Systems (NeurIPS 2023) https://proceedings.neurips.cc/paper_files/paper/2023/file/6dcf277ea32ce3288914faf369fe6de0-Paper-Conference.pdf (NeurIPS, 2023).

Alayrac, J.-B. et al. Flamingo: a visual language model for few-shot learning. In 36th Conference on Neural Information Processing Systems (NeurIPS 2022) https://papers.neurips.cc/paper_files/paper/2022/file/960a172bc7fbf0177ccccbb411a7d800-Paper-Conference.pdf (NeurIPS, 2022).

Koh, J. Y., Salakhutdinov, R. & Fried, D. Grounding language models to images for multimodal generation. In International Conference on Machine Learning 17283–17300 (PMLR, 2023).

Ye, Q. et al. mPLUG-Owl: modularization empowers large language models with multimodality. Preprint at https://arxiv.org/abs/2304.14178 (2023).

Song, A. H. et al. Artificial intelligence for digital and computational pathology. Nat. Rev. Bioeng. 1, 930–949 (2023).

Schwalbe, N. & Wahl, B. Artificial intelligence and the future of global health. The Lancet 395, 1579–1586 (2020).

Baxi, V., Edwards, R., Montalto, M. & Saha, S. Digital pathology and artificial intelligence in translational medicine and clinical practice. Mod. Pathol. 35, 23–32 (2022).

Wang, X. et al. Editorial for special issue on foundation models for medical image analysis. Med. Image Anal. https://doi.org/10.1016/j.media.2024.103389 (2024).

Zhang, S. & Metaxas, D. On the challenges and perspectives of foundation models for medical image analysis. Med. Image Anal. 91, 102996 (2024).

Li, C. et al. LLaVA-Med: training a large language-and-vision assistant for biomedicine in one day. In 37th Conference on Neural Information Processing Systems (NeurIPS 2023) https://papers.neurips.cc/paper_files/paper/2023/file/5abcdf8ecdcacba028c6662789194572-Paper-Datasets_and_Benchmarks.pdf (NeurIPS, 2023).

Seyfioglu, M. S., Ikezogwo, W. O., Ghezloo, F., Krishna, R. & Shapiro, L. Quilt-LLaVA: visual instruction tuning by extracting localized narratives from open-source histopathology videos. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 13183–13192 (IEEE, 2024).

Wu, C. et al. PMC-LLaMA: toward building open-source language models for medicine. J. Am. Med. Inform. Assoc. https://doi.org/10.1093/jamia/ocae045 (2024).

Moor, M. et al. Med-Flamingo: a multimodal medical few-shot learner. In Proc. 3rd Machine Learning for Health Symposium 353–367 (PMLR, 2023).

Wang, X. et al. A pathology foundation model for cancer diagnosis and prognosis prediction. Nature 634, 970–978 (2024).

Lu, M. Y. et al. A visual-language foundation model for computational pathology. Nat. Med. 30, 863–874 (2024).

Lu, M. Y. et al. A multimodal generative AI copilot for human pathology. Nature 634, 466–473 (2024).

Xu, Y. et al. A multimodal knowledge-enhanced whole-slide pathology foundation model. Preprint at https://arxiv.org/abs/2407.15362 (2024).

Zhang, S. et al. Large-scale domain-specific pretraining for biomedical vision-language processing. Preprint at https://arxiv.org/abs/2303.00915 (2023).

Ikezogwo, W. O. et al. Quilt-1M: one million image-text pairs for histopathology. In 37th Conference on Neural Information Processing Systems (NeurIPS 2023) https://proceedings.neurips.cc/paper_files/paper/2023/file/775ec578876fa6812c062644964b9870-Paper-Datasets_and_Benchmarks.pdf (NeurIPS, 2023).

Huang, Z., Bianchi, F., Yuksekgonul, M., Montine, T. J. & Zou, J. A visual–language foundation model for pathology image analysis using medical Twitter. Nat. Med. 29, 2307–2316 (2023).

Radford, A. et al. Learning transferable visual models from natural language supervision. In Proc. International Conference on Machine Learning 8748–8763 (PMLR, 2021).

Gao, Y., Gu, D., Zhou, M. & Metaxas, D. Aligning human knowledge with visual concepts towards explainable medical image classification. In International Conference on Medical Image Computing and Computer-Assisted Intervention 46–56 (Springer, 2024).

Zhang, Y. et al. Text-guided foundation model adaptation for pathological image classification. In Proc. International Conference on Medical Image Computing and Computer-Assisted Intervention 272–282 (Springer, 2023).

Ding, K., Zhou, M., Metaxas, D. N. & Zhang, S. Pathology-and-genomics multimodal transformer for survival outcome prediction. In Proc. International Conference on Medical Image Computing and Computer-Assisted Intervention 622–631 (Springer, 2023).

Li, J., Li, D., Savarese, S. & Hoi, S. BLIP-2: bootstrapping language-image pre-training with frozen image encoders and large language models. In International Conference on Machine Learning 19730–19742 (PMLR, 2023).

He, X. et al. PathVQA: 30000+ questions for medical visual question answering. Preprint at https://arxiv.org/abs/2003.10286 (2020).

Dubey, A. et al. The Llama 3 herd of models. Preprint at https://arxiv.org/abs/2407.21783 (2024).

Chen, X. et al. Recent advances and clinical applications of deep learning in medical image analysis. Med. Image Anal. 79, 102444 (2022).

Chang, Q. et al. Mining multi-center heterogeneous medical data with distributed synthetic learning. Nat. Commun. 14, 5510 (2023).

Graikos, A. et al. Learned representation-guided diffusion models for large-image generation. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 8532–8542 (IEEE, 2024).

Ding, K. et al. A large-scale synthetic pathological dataset for deep learning-enabled segmentation of breast cancer. Sci. Data 10, 231 (2023).

Sun, Y. et al. PathGen-1.6M: 1.6 million pathology image-text pairs generation through multi-agent collaboration. In Thirteenth International Conference on Learning Representations (ICLR 2025) https://openreview.net/pdf?id=rFpZnn11gj (ICLR, 2025).

Chung, H. W. et al. Scaling instruction-finetuned language models. J. Mach. Learn. Res. 25, 1–53 (2024).

Liu, H., Li, C., Li, Y. & Lee, Y. J. Improved baselines with visual instruction tuning. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 26296–26306 (IEEE, 2024).

Peng, B., Li, C., He, P., Galley, M. & Gao, J. Instruction tuning with GPT-4. Preprint at https://arxiv.org/abs/2304.03277 (2023).

Zhou, C. et al. LIMA: less is more for alignment. In 37th Conference on Neural Information Processing Systems (NeurIPS 2023) https://proceedings.neurips.cc/paper_files/paper/2023/file/ac662d74829e4407ce1d126477f4a03a-Paper-Conference.pdf (NeurIPS, 2023).

Wei, J. et al. Finetuned language models are zero-shot learners. In Tenth International Conference on Learning Representations https://openreview.net/pdf?id=gEZrGCozdqR (ICLR, 2022).

Chen, P., Zhu, C., Zheng, S., Li, H. & Yang, L. WSI-VQA: interpreting whole slide images by generative visual question answering. In Proc. European Conference on Computer Vision 401–417 (2025).

Ding, K., Zhou, M., Wang, H., Zhang, S. & Metaxas, D. N. Spatially aware graph neural networks and cross-level molecular profile prediction in colon cancer histopathology: a retrospective multi-cohort study. Lancet Digit. Health 4, 787–795 (2022).

Hu, E. J. et al. LoRA: low-rank adaptation of large language models. In Tenth International Conference on Learning Representations https://openreview.net/pdf?id=nZeVKeeFYf9 (ICLR, 2022).

Jin, Y. et al. Efficient multimodal large language models: a survey. Preprint at https://arxiv.org/abs/2405.10739 (2024).

Liu, H. et al. Few-shot parameter-efficient fine-tuning is better and cheaper than in-context learning. In 36th Conference on Neural Information Processing Systems (NeurIPS 2022) https://openreview.net/pdf?id=rBCvMG-JsPd (2022).

Vaswani, A. et al. Attention is all you need. In 31st Conference on Neural Information Processing Systems (NIPS 2017) https://papers.nips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf (2017).

Li, J., Li, D., Xiong, C. & Hoi, S. BLIP: bootstrapping language-image pre-training for unified vision-language understanding and generation. In Proc. International Conference on Machine Learning 12888–12900 (PMLR, 2022).

Fang, Y. et al. EVA: exploring the limits of masked visual representation learning at scale. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 19358–19369 (IEEE, 2023).

Chiang, W.-L. et al. Vicuna: an open-source chatbot impressing GPT-4 with 90% ChatGPT quality. LMSYS Org https://vicuna.lmsys.org (2023).

Bazi, Y., Rahhal, M. M. A., Bashmal, L. & Zuair, M. Vision–language model for visual question answering in medical imagery. Bioengineering 10, 380 (2023).

Liu, Y., Wang, Z., Xu, D. & Zhou, L. Q2ATransformer: improving medical VQA via an answer querying decoder. In Proc. International Conference on Information Processing in Medical Imaging 445–456 (Springer Nature, 2023).

Chen, K. et al. Cost-effective instruction learning for pathology vision and language analysis. Zenodo https://doi.org/10.5281/zenodo.15081542 (2025).

Acknowledgements

This study is supported in part by Shanghai Artificial Intelligence Laboratory (M.L. and S.Z.) and the Centre for Perceptual and Interactive Intelligence Ltd under the Innovation and Technology Commission’s InnoHK (S.Z.).

Author information

Contributions

K.C., M.L., M.Z. and S.Z. are major contributors to drafting and revising the manuscript for content and analyzing the data. F.Y., L.M., X.S., L.W., X.W., L.Z. and Z.W. played major roles in the acquisition of data. K.C., M.L., M.Z., F.Y., X.S., L.W., L.M. and X.W. substantially revised the manuscript. K.C., M.L., M.Z. and S.Z. conceptualized and designed the study. M.L., L.Z. and Z.W. interpreted the data. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Computational Science thanks Hao Chen, Prateek Verma and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available. Primary Handling Editor: Ananya Rastogi, in collaboration with the Nature Computational Science team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Related Works, Tables 1–9 and Fig. 1.

Source data

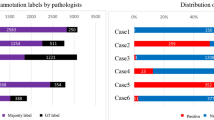

Source Data Fig. 2.

Statistical source data for Fig. 2.

Source Data Fig. 3.

Statistical source data for Fig. 3.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, K., Liu, M., Yan, F. et al. Cost-effective instruction learning for pathology vision and language analysis. Nat Comput Sci 5, 524–533 (2025). https://doi.org/10.1038/s43588-025-00818-5

DOI: https://doi.org/10.1038/s43588-025-00818-5