Abstract

Integrating prior epidemiological knowledge embedded within mechanistic models with the data-mining capabilities of artificial intelligence (AI) offers transformative potential for epidemiological modeling. While the fusion of AI and traditional mechanistic approaches is rapidly advancing, efforts remain fragmented. This scoping review provides a comprehensive overview of emerging integrated models applied across the spectrum of infectious diseases. Through systematic search strategies, we identified 245 eligible studies from 15,460 records. Our review highlights the practical value of integrated models, including advances in disease forecasting, model parameterization, and calibration. However, key research gaps remain. These include the need for better incorporation of realistic decision-making considerations, expanded exploration of diverse datasets, and further investigation into biological and socio-behavioral mechanisms. Addressing these gaps will unlock the synergistic potential of AI and mechanistic modeling to enhance understanding of disease dynamics and support more effective public health planning and response.

Introduction

Epidemiological modeling is a powerful tool for understanding the dynamics of infectious diseases and guiding public health decisions and policies1,2,3,4,5. Mechanistic models, grounded in the known governing laws and physical principles of disease transmission, have been widely used to investigate various infectious diseases, including respiratory infections6,7,8, sexually transmitted diseases9,10, and vector-borne diseases11,12. Unlike empirical models, which primarily focus on data fitting without necessarily incorporating the underlying causes of observed patterns, mechanistic models aim to explain how and why epidemics unfold.

Despite their utility for predicting and controlling the spread of infectious diseases, traditional mechanistic models, such as the classical susceptible-infected-recovered (SIR) structure, face several challenges. First, the reliability of these models depends heavily on the accuracy of estimated parameters governing transmission dynamics5,13,14. However, current models are often constrained by simplifications and data availability. For example, disease transmissibility, though modeled as dynamic, is frequently calibrated using lagged and potentially incomplete death or hospitalization data. Similarly, human contact patterns, crucial for understanding transmission, are often assumed to be static due to limited access to high-quality, real-time data. Furthermore, the impact of interventions is typically modeled using linear terms, failing to fully capture the complex interplay between public responses and pathogen evolution. Second, despite the wealth of epidemiological knowledge encoded in unstructured and multimodal data sources (e.g., satellite imagery, social media, electronic health records), their incorporation into mechanistic models has largely relied on manual feature extraction, hindering the effective utilization of the richness of these data15,16,17. Third, the rise of big data18,19 has spurred the development of more complex mechanistic models that offer granular and detailed descriptions of disease dynamics20,21, but also increase the computational resources required for model calibration and validation, epidemic simulation, and optimization.

Recent advances in artificial intelligence (AI), especially machine learning (ML) and deep learning (DL), offer promising ways to overcome the challenges and limitations of traditional epidemiological modeling using mechanistic models22,23,24,25,26. AI techniques demonstrate exceptional capabilities in predicting future outcomes, processing diverse databases, and extracting nuanced patterns and insights from big data. Various AI-based approaches have been successfully deployed for healthcare applications27,28,29,30, including medical image analysis, drug discovery, clinical outcome prediction, and treatment optimization. The potential of AI to transform epidemiological modeling has been actively explored across disciplines31,32,33,34. One line of research focuses on purely AI-driven predictive models35,36,37, as alternatives to traditional mechanistic models. Although these predictive models may perform well in short-term epidemic forecasting, their lack of underlying mechanisms limits their utility for long-term planning and scenario analysis. Integrated models, which combine the data-mining capabilities of AI techniques with the explanatory power of mechanistic models, are gaining significant attention. Despite the wide spectrum of AI methods, current integrations with mechanistic epidemiological models are predominantly limited to traditional statistical models, particularly for parameter inference and model calibration38,39,40,41. Explorations of emerging ML and DL techniques, though promising and rapidly expanding, remain fragmented due to the complexity of these techniques and interdisciplinary communication challenges. Bridging this gap is crucial to fully harnessing the power of AI to advance epidemiological modeling.

Existing reviews on emerging AI applications in infectious disease management have primarily focused on clinical aspects (e.g., diagnosis and treatment), drug discovery, and purely AI-driven predictive models18,42,43,44,45. Some reviews have provided overviews of AI applications in infectious disease surveillance46,47,48, offering glimpses of the integration of AI and mechanistic models; however, a comprehensive review dedicated specifically to this integration is lacking. This scoping review aims to address this gap by systematically synthesizing literature in this emerging field. We identify solutions from various disciplines with the potential to address immediate needs in epidemiological modeling, outline the gaps between research and real-world applications, and highlight promising research directions for utilizing integrated models to provide data-driven policy guidance.

Results

Study selection and characteristics

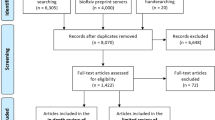

Our search produced 15,460 studies (15,422 from database search, 17 through backward citation search, and 21 through manual search of relevant journals and conference proceedings). After eliminating 6267 duplicates, 9193 studies were screened. Of these, 807 studies advanced to the full-text review, and 245 studies were ultimately included in this scoping review (Methods, Fig. 1). The characteristics of these studies are provided in Supplementary Appendix 5.

The studies spanned various application areas of integrated models for diverse infectious diseases. Overall, 26 infectious diseases were investigated using integrated models (Supplementary Appendix 6). The majority of these studies focused on COVID-19 (148 studies, 60%), followed by influenza (18 studies, 7%), dengue (4 studies, 2%), and HIV (3 studies, 1%). Additionally, 56 studies (23%) used hypothetical disease scenarios to demonstrate method applicability rather than investigating specific diseases. The recent surge in COVID-19 research has notably increased the volume of studies integrating AI with epidemiological models, with 217 (89%) of the included studies published between 2020 and 2023. Despite the increase in research study volume, the distribution of application areas for integrated models remained consistent over time (Fig. 2). We grouped the application areas into six primary categories (Fig. 2, Box 1, Supplementary Appendix 6): infectious disease forecasting (86 studies, 35%), model parameterization and calibration (77 studies, 31%), and disease intervention assessment and optimization (72 studies, 29%), followed by retrospective epidemic course analysis (16 studies, 7%), transmission inference (9 studies, 4%), and outbreak detection (7 studies, 3%). These categories are not mutually exclusive, indicating that a single integrated model can serve multiple application areas.

A list of investigated infectious diseases can be found in Supplementary Appendix 6.

Infectious disease forecasting

Among the included studies, 86 reported on the use of integrated models for infectious disease forecasting (Supplementary Appendix 7). Nearly all of these studies validated their proposed forecasting frameworks with real-world datasets, and 76 studies (88%) used COVID-19 datasets.

One study used a hierarchical clustering approach to group regions with similar disease activity patterns, partially determined by epidemiological models49. This approach allowed for the identification of regions with synchronized disease activity and the generation of cluster-based predictions. One study predicted case numbers using tree-based models, with input features informed by an epidemiological model50. Two studies leveraged tree-based methods, trained on synthetic datasets generated by epidemiological models, to discern the relationship between early-phase outbreak situation metrics and future epidemic outcomes51,52. Six studies employed ensemble learning frameworks that combined forecasts from AI and epidemiological models to improve forecasting performance53,54,55,56,57,58. Long short-term memory (LSTM) networks, the most frequently used AI method in these ensembles, are adept at learning temporal dependencies from time-series data, thereby complementing mechanistic models in generating robust forecasts. Of these six studies, four used weighted averaging to combine forecasts based on historical model performance53,54,55,57; one utilized stacking, where an LSTM-based meta-model was trained to learn the optimal way to integrate forecasts from epidemiological models56; and one employed boosting, where a neural network learned to correct errors in the epidemiological model’s forecasts58.
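As a rough illustration of the weighted-averaging ensembles described above, the following sketch weights each model by the inverse of its historical mean absolute error. The weighting rule, the function names (`inverse_error_weights`, `combine`), and all numbers are assumptions made for illustration; the reviewed studies may have used different weighting schemes.

```python
import numpy as np

def inverse_error_weights(past_forecasts, past_observed):
    """Weight each model by the inverse of its historical mean absolute error."""
    errors = np.mean(np.abs(past_forecasts - past_observed), axis=1)  # one MAE per model
    inv = 1.0 / (errors + 1e-9)  # small constant avoids division by zero
    return inv / inv.sum()       # normalize so the weights sum to one

def combine(forecasts, weights):
    """Weighted average of the models' new forecasts (rows are models)."""
    return weights @ forecasts

# Hypothetical weekly case counts: rows are models, columns are past weeks.
past_observed = np.array([100.0, 120.0, 150.0, 170.0])
past_forecasts = np.array([
    [110.0, 130.0, 160.0, 180.0],  # mechanistic model, historical MAE = 10
    [95.0, 115.0, 145.0, 165.0],   # LSTM-style model, historical MAE = 5
])
weights = inverse_error_weights(past_forecasts, past_observed)

# New two-week-ahead forecasts from each model, combined with the learned weights.
new_forecasts = np.array([[200.0, 220.0], [190.0, 205.0]])
ensemble = combine(new_forecasts, weights)
```

In this toy setup the model with the smaller historical error receives roughly twice the weight of the other. Stacking and boosting would replace the fixed weighting rule with a trained meta-model or an error-correcting model, respectively.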

Twenty-nine studies forecasted epidemic trajectories based on physics-informed neural networks (PINNs; n = 9)32,59,60,61,62,63,64,65,66, epidemiology-aware AI models (EAAMs; n = 11)67,68,69,70,71,72,73,74,75,76,77, and synthetically-trained AI models (n = 9)78,79,80,81,82,83,84,85,86. PINNs represented state variables and other time-varying parameters as neural networks with the input time \(t\). The loss function of PINNs consists of two components: (i) the data loss, reflecting the disparity between neural network outputs and actual data, and (ii) the residual loss, ensuring adherence to disease transmission mechanisms represented by differential equations. By incorporating epidemiological knowledge into neural networks through residual loss, PINNs exhibit enhanced performance in parameter inference and disease forecasting. PINNs extrapolated future state variables using time steps over the forecast period as input. In contrast, EAAMs offered more adaptable model structures and knowledge-infusing frameworks that extended standard AI models, such as recurrent neural networks (RNNs) and graph neural networks (GNNs), by assimilating epidemiological knowledge into the architectures, loss functions, and training processes of AI models. Synthetically-trained AI models employed time series or spatiotemporal forecasting models, such as LSTM networks and GNNs, for infectious disease forecasting. These models acquire epidemiological insights by learning transmission mechanisms from synthetic datasets generated by epidemiological models. Such integrated approaches surmount the limitations of methodological frameworks that rely solely on mechanistic or AI models, which may be computationally impractical when surveillance data are noisy or sparse.
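To make the two-part PINN loss concrete, one possible form is sketched below for an SIR model expressed in population proportions. The notation is assumed here for illustration and is not taken from any specific reviewed study: \(\hat{S}, \hat{I}, \hat{R}\) are the neural network outputs, \(I^{\mathrm{obs}}\) is the observed infected proportion at times \(t_i\), \(\tau_j\) are collocation points, \(\beta\) and \(\gamma\) are the transmission and recovery rates, and \(\lambda\) is a weighting hyperparameter.

\[
\mathcal{L} = \underbrace{\frac{1}{N}\sum_{i=1}^{N}\left(\hat{I}(t_i) - I^{\mathrm{obs}}(t_i)\right)^2}_{\text{data loss}} + \lambda\,\underbrace{\frac{1}{M}\sum_{j=1}^{M}\left[r_S(\tau_j)^2 + r_I(\tau_j)^2 + r_R(\tau_j)^2\right]}_{\text{residual loss}}
\]

\[
r_S = \frac{d\hat{S}}{dt} + \beta \hat{S}\hat{I}, \qquad
r_I = \frac{d\hat{I}}{dt} - \beta \hat{S}\hat{I} + \gamma \hat{I}, \qquad
r_R = \frac{d\hat{R}}{dt} - \gamma \hat{I}
\]

The residuals vanish exactly when the network outputs satisfy the SIR equations, so minimizing \(\mathcal{L}\) trades off fit to data against consistency with the assumed transmission mechanism. When \(\beta\) or \(\gamma\) are unknown, they can be treated as additional trainable quantities.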

Forty-seven studies adopted AI-augmented epidemiological models, which replaced parts of epidemiological models (e.g., model parameters, derivatives, or derivative orders) with AI components. These AI components were used to predict future values of those parts, which were then fed back into the epidemiological model to produce forecasts. Among these, eight studies employed end-to-end training of AI-augmented epidemiological models87,88,89,90,91,92,93,94. In this setting, the model parts approximated directly or indirectly by AI models were inserted into numerical solvers to produce estimated observational data, and the AI models were trained by minimizing a loss function based on the difference between actual and estimated observations. Thirty-seven studies forecasted unknown components in epidemiological models based on supervised learning frameworks, where AI models (primarily RNNs) were trained on synthetic data generated by epidemiological models or historical component values95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127,128,129,130,131. Two studies did not specify their component learning frameworks132,133.
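The end-to-end idea can be sketched in a few lines, under strong simplifying assumptions. Here a tiny parametric function (`beta_net`, a hypothetical stand-in for a neural network) supplies a time-varying transmission rate to a forward-Euler SIR solver, and the loss compares simulated and observed infections. Random search replaces gradient-based training purely to keep the example dependency-free; none of these choices are drawn from the reviewed studies.

```python
import numpy as np

def beta_net(t, params):
    """Stand-in for a neural network: maps time to a transmission rate via a scaled sigmoid."""
    w, b, scale = params
    return scale / (1.0 + np.exp(-(w * t + b)))

def simulate_infected(params, gamma=0.1, i0=0.01, days=60):
    """Forward-Euler SIR solver (proportions, one-day steps) with beta(t) from beta_net."""
    s, i = 1.0 - i0, i0
    infected = []
    for day in range(days):
        beta = beta_net(float(day), params)
        new_inf = beta * s * i
        new_rec = gamma * i
        s, i = s - new_inf, i + new_inf - new_rec
        infected.append(i)
    return np.array(infected)

def loss(params, observed):
    """Mean squared difference between estimated and actual observations."""
    return float(np.mean((simulate_infected(params) - observed) ** 2))

# Synthetic "observations" generated from known parameters (transmission declining over time).
true_params = (-0.1, 2.0, 0.5)
observed = simulate_infected(true_params)

# Crude training loop: random search stands in for gradient-based end-to-end training.
rng = np.random.default_rng(0)
best = np.array([0.0, 0.0, 0.3])
best_loss = loss(best, observed)
for _ in range(500):
    candidate = best + rng.normal(scale=0.05, size=3)
    candidate_loss = loss(candidate, observed)
    if candidate_loss < best_loss:
        best, best_loss = candidate, candidate_loss
```

In practice the solver would be written in an automatic-differentiation framework so that gradients of the loss can flow through the numerical integration into the network weights.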

Model parameterization and calibration

Seventy-seven studies explored the use of integrated models for parameterization or calibration of epidemiological models (Supplementary Appendix 8). Among these, four studies employed AI techniques to improve observational data by extracting auxiliary information from non-traditional surveillance sources, such as social media content and search trend data134,135,136,137. The improved observational data were then used for precise parameterization and calibration of epidemiological models, investigating diseases including COVID-19 and influenza. Two of these studies utilized support vector machines (SVMs) or tree-based methods to generate disease activity data by inferring individuals’ health status from social media content134,136. Another study employed tree-based methods to refine observed data and estimate unobserved data by supplementing traditional surveillance data (specifically, laboratory-confirmed influenza hospitalizations) with search trend data137. The final study used tree-based methods to determine the relative importance of various non-pharmacological interventions in modifying the transmission rate within the epidemiological model135.

The remaining 73 studies implemented AI-enhanced calibration methods using three main approaches: surrogate modeling (n = 13), synthetically-trained scenario classifiers (n = 5), and direct parameter calibration (n = 56). In surrogate modeling-based calibration methods, lightweight AI-based surrogates of epidemiological models were integrated into Bayesian138,139,140 or simulation-based optimization frameworks86,141,142 to achieve efficient parameter inference, thereby replacing computationally intensive processes. Trained on datasets generated by epidemiological models with varying input parameters, these surrogates learned the relationships between inputs and simulated outputs, thereby accelerating model fitting. Most of these studies (9 out of 13) developed surrogates for agent/individual-based models, primarily due to their high computational demands. Neural networks were the most frequently used surrogates (10 of 13 studies). Five studies evaluated the performance of AI-based surrogates using simulation datasets generated by disease-specific models. Scenario classifier-based methods reframe parameter inference as classification tasks. To predict epidemic scenarios characterized by sets of parameters, classifiers are trained on synthetic data from epidemiological models143,144,145,146,147. This approach has been applied to calibrate epidemiological models for diseases such as influenza and tomato spotted wilt virus infection. Common classification algorithms, including tree-based methods (4 out of 5) and SVMs (2 out of 5), were frequently employed.
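A minimal sketch of surrogate-based calibration follows, assuming a simple SIR simulator as the "expensive" model and a polynomial as the lightweight surrogate (the reviewed studies mostly used neural networks and agent-based models). The function `sir_peak`, the parameter ranges, and the grid search are illustrative assumptions.

```python
import numpy as np

def sir_peak(beta, gamma=0.1, i0=0.001, days=300):
    """Simulator stand-in: peak infected proportion from a forward-Euler SIR run."""
    s, i, peak = 1.0 - i0, i0, i0
    for _ in range(days):
        new_inf = beta * s * i
        new_rec = gamma * i
        s, i = s - new_inf, i + new_inf - new_rec
        peak = max(peak, i)
    return peak

# 1. Build a training set by running the simulator over a range of input parameters.
train_betas = np.linspace(0.15, 0.6, 30)
train_peaks = np.array([sir_peak(b) for b in train_betas])

# 2. Fit a lightweight surrogate of the input-output relationship.
surrogate = np.poly1d(np.polyfit(train_betas, train_peaks, deg=5))

# 3. Calibrate against an observed peak using only cheap surrogate evaluations.
observed_peak = sir_peak(0.33)  # pretend this came from surveillance data
grid = np.linspace(0.15, 0.6, 2001)
beta_hat = grid[np.argmin((surrogate(grid) - observed_peak) ** 2)]
```

The simulator is called only 30 times to build the training set, while the calibration step evaluates the surrogate 2001 times. The same pattern applies when the surrogate is embedded in a Bayesian or simulation-based optimization loop.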

Of the 56 studies employing direct parameter calibration methods, 29 leveraged PINNs32,59,60,61,62,63,64,65,66,131,148,149,150,151,152,153,154,155,156,157,158,159,160,161,162,163,164,165,166, 3 used EAAMs73,74,167, 18 adopted AI-augmented epidemiological models87,89,91,92,93,94,168,169,170,171,172,173,174,175,176,177,178,179, 4 employed Bayesian neural networks180,181,182,183, and 2 used synthetically-trained neural networks95,139. In PINN or EAAM-based methods, parameters in epidemiological models were either represented by AI models or set as trainable weights, enabling parameter estimations to be updated during training. Among studies employing AI-augmented epidemiological models, 17 utilized neural networks to estimate model parameters87,89,91,92,93,168,169,170,171,172,173,174,175,176,177,178,179, while one study utilized a regression method to predict model parameters with unobserved features estimated by tree-based methods94. These AI-augmented models underwent end-to-end training, similar to the approach discussed in the Infectious Disease Forecasting section. Bayesian neural networks leverage the capabilities of neural networks in handling high-dimensional data to enhance parameter inference in Bayesian approaches, including variational and simulation-based inference. These approaches attempt to approximate the posterior distribution of model parameters given observed data, especially when faced with intractable likelihood or marginal likelihood. One study employed variational inference to jointly infer unknown parameters and latent diffusion processes in the epidemiological model181. An RNN formed part of the variational approximation of the joint posterior distribution of the parameters and diffusion processes, conditional on the observed data. This approximation was optimized by maximizing the evidence lower bound (ELBO). 
Three studies utilized simulation-based inference techniques to approximate the posterior when the likelihood, implicitly defined by epidemiological models, was intractable180,182,183. These techniques include neural density estimation methods and neural network-based approximate Bayesian computation184. Methods utilizing synthetically-trained neural networks, by contrast, involved training on labeled datasets generated by epidemiological models to directly predict parameter values from observational data.
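For the variational approach described above, the ELBO takes the following general form. The notation is assumed here for illustration: \(y\) denotes the observed data, \(\theta\) the unknown parameters, \(x\) the latent diffusion processes, and \(q_\phi\) the variational approximation with trainable weights \(\phi\) (for instance, those of an RNN).

\[
\log p(y) \;\ge\; \mathrm{ELBO}(\phi) = \mathbb{E}_{q_\phi(\theta, x \mid y)}\left[\log p(y, x, \theta) - \log q_\phi(\theta, x \mid y)\right]
\]

The gap between \(\log p(y)\) and the ELBO equals the Kullback-Leibler divergence from \(q_\phi(\theta, x \mid y)\) to the true posterior \(p(\theta, x \mid y)\), so maximizing the ELBO over \(\phi\) drives the approximation toward the posterior without requiring the intractable marginal likelihood.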

Among 77 studies that focused on model parameterization and calibration, 28 used well-calibrated models for retrospective disease intervention assessment or future projections32,59,62,64,87,92,134,135,136,137,147,148,150,151,152,156,160,161,163,164,165,167,168,169,170,174,176,177. Retrospective assessments were achieved by analyzing the fitted values of parameters affected by interventions. Parameter values over the projection horizon were set as their final values in the training window.

Disease intervention assessment and optimization

Seventy-two studies leveraged integrated models to assess (n = 13) or optimize (n = 59) the impact of interventions (Supplementary Appendix 9). To speed up estimation of intervention effectiveness, seven of these studies constructed neural network-based or tree-based surrogates of epidemiological models185,186,187,188,189,190,191. In four studies, AI-augmented epidemiological models were utilized to establish relationships between control measures and epidemiological parameters. AI models, including tree-based methods, SVMs, and neural networks, were trained for this purpose31,192,193,194. The impact of control strategies was then assessed by incorporating the parameter values estimated by these AI models into epidemiological models. Additionally, one study employed a cluster-based framework to assess the effectiveness of large-scale interventions. K-means clustering was used to identify representative areas with distinct archetypes195, after which an agent-based model was used to evaluate the impact of interventions on these areas. Three studies utilized a game-theoretic approach to assess196 or optimize197,198 control measures using agent-based models. In these studies, the Nash equilibrium was derived using a neural network approach. Of the 13 studies utilizing integrated models for intervention assessment, six investigated COVID-19, one studied malaria, one plague, one dengue, and one HIV. Three studies did not investigate any specific disease.

In addition to the game theory-based optimization frameworks197,198, intervention optimization in integrated models also used reinforcement learning (RL) (38 studies)34,199,200,201,202,203,204,205,206,207,208,209,210,211,212,213,214,215,216,217,218,219,220,221,222,223,224,225,226,227,228,229,230,231,232,233,234,235, key node finding (10 studies)236,237,238,239,240,241,242,243,244,245, optimal control theory (6 studies)93,212,246,247,248,249, Markov decision process (MDP) (1 study)250, and surrogate modeling (3 studies)251,252,253 frameworks. In RL-based frameworks, RL environments were constructed based on epidemiological models to assess the impact of different intervention strategies. Through interactions with these environments, RL agents learned the optimal intervention strategy. In key node finding-based frameworks, AI models were utilized to identify the optimal set of individuals for interventions based on their attributes. With the exception of one study that used an AI-augmented epidemiological model to derive node importance236, all other studies employed synthetically-trained AI models, primarily GNNs (7 out of 9 studies). These models were trained on datasets generated by epidemiological models, featuring various source node sets and transmission network structures, to identify key nodes in unobserved scenarios.
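To illustrate how an RL environment can be built on top of an epidemiological model, the sketch below wraps a discrete-time SIR model in a `reset`/`step` interface. The class `SIREnv`, the binary action, the reward definition, and all parameter values are hypothetical choices for illustration. Two fixed policies are compared in place of a trained RL agent.

```python
import numpy as np

class SIREnv:
    """Toy RL environment wrapping a discrete-time SIR model (population proportions)."""

    def __init__(self, beta=0.3, gamma=0.1, i0=0.01, horizon=100, effect=0.6, cost=0.002):
        self.beta, self.gamma, self.i0 = beta, gamma, i0
        self.horizon, self.effect, self.cost = horizon, effect, cost
        self.reset()

    def reset(self):
        """Start a new episode and return the initial state (S, I)."""
        self.s, self.i, self.t = 1.0 - self.i0, self.i0, 0
        return np.array([self.s, self.i])

    def step(self, action):
        """action = 1 applies the intervention (beta scaled by 1 - effect); 0 does nothing."""
        beta = self.beta * (1.0 - self.effect * action)
        new_inf = beta * self.s * self.i
        new_rec = self.gamma * self.i
        self.s -= new_inf
        self.i += new_inf - new_rec
        self.t += 1
        reward = -new_inf - self.cost * action  # penalize new infections and intervention cost
        done = self.t >= self.horizon
        return np.array([self.s, self.i]), reward, done

def run_episode(env, policy):
    """Roll out one episode under a policy mapping state to action; return the total reward."""
    state, total, done = env.reset(), 0.0, False
    while not done:
        state, reward, done = env.step(policy(state))
        total += reward
    return total

env = SIREnv()
return_never = run_episode(env, lambda state: 0)
return_threshold = run_episode(env, lambda state: int(state[1] > 0.02))
```

An RL agent would interact with the same `step` interface, updating its policy from the observed states and rewards rather than following a fixed rule. The reward here encodes a simple trade-off between infections and intervention cost; realistic objectives are typically richer.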

In optimal control problem frameworks applied to intervention optimization, obtaining optimal control signals is typically challenging due to complexities arising from the underlying transmission dynamics. To address this challenge, one study developed a reduced model for an agent-based model, where neural networks were employed to approximate transition rates among individuals in different compartments246. Other studies used neural networks to approximate decision variables, thereby transforming the optimal control problem into a parameter learning problem. Identifying the optimal control strategy parallels training neural networks to minimize the loss function designed based on the objective function of the intervention optimization problem.

In MDP-based frameworks, the optimal intervention problem was translated into a discrete-time MDP, where neural networks approximated time-dependent control strategies as a function of current states, similar to the training strategy in the optimal control theory-based frameworks. In surrogate frameworks for intervention optimization, AI models were trained to improve the computational efficiency of identifying optimal control strategies. These AI models, often tree-based methods, learned decision rules from computationally intensive non-AI techniques such as search-based optimization methods.

Among the 59 studies utilizing integrated models for intervention optimization, 25 investigated COVID-19, and one each investigated malaria, foot-and-mouth disease, influenza, HIV, porcine reproductive and respiratory syndrome, and Zymoseptoria tritici infection. Additionally, 28 studies proposed general methodological frameworks without investigating any specific disease.

Retrospective epidemic course analysis

Sixteen studies leveraged integrated models to retrospectively analyze past epidemics using surrogate modeling frameworks (Supplementary Appendix 10). In 14 of these studies, surrogate models were used to identify key factors influencing transmission dynamics. This was done by training the models to recognize dependencies between various factors and the corresponding simulation outputs254,255,256,257,258,259,260,261,262,263,264,265,266,267. Two studies used surrogate models to understand how individual characteristics and behaviors relate during epidemics268,269. Most (13 out of 16) studies adopted tree-based models. This framework was applied to investigate COVID-19 (4 studies), influenza (3 studies), dengue (1 study), enterovirus infection (1 study), brucellosis (1 study), foot-and-mouth disease (1 study), smallpox (1 study), pertussis (1 study), SARS (1 study), and varicella zoster virus infection (1 study).

Transmission inference

Nine studies investigated the use of integrated models for transmission inference (Supplementary Appendix 11), focusing on source localization (n = 4)270,271,272,273, determining the underlying transmission network or pattern (n = 2)236,274, inferring the health status of unobserved individuals (n = 1)275, reconstructing disease evolution dynamics (n = 1)276, and inferring incidence from death records (n = 1)33.

One study defined the COVID-19 transmission mechanism based on the renewal equation33. This knowledge was then incorporated into the loss function of a convolutional neural network, which connected death records with incidence data, similar to the PINNs/EAAMs described previously. The other eight studies relied on individual-based disease models. Due to challenges in obtaining real-world individual-level disease transmission networks, the majority (6 out of 8 studies) proposed general methodological frameworks and evaluated their performance using hypothetical networks and disease transmission scenarios. Only two studies validated their methods using real-world COVID-19 or tuberculosis datasets272,274.
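For reference, the renewal equation mentioned above is commonly written in the discrete-time form below. The notation is assumed here, and the exact formulation in the cited study may differ: \(I_t\) is the incidence at time \(t\), \(R_t\) the time-varying reproduction number, and \(w_s\) the generation-interval distribution.

\[
I_t = R_t \sum_{s=1}^{t} I_{t-s}\, w_s
\]

One common way to link incidence to death records, again stated as an assumption rather than as the cited study's method, is a second convolution with an infection fatality ratio \(\mathrm{IFR}\) and an infection-to-death delay distribution \(\pi_s\):

\[
D_t = \mathrm{IFR} \sum_{s=1}^{t} I_{t-s}\, \pi_s
\]

Penalizing deviations from relations of this kind in the loss function is what allows a neural network mapping deaths to incidence to remain consistent with the assumed transmission mechanism.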

Among studies employing individual-level models, one study formulated transmission inference as an optimal control problem with the unknown network structure treated as the control variable, the underlying transmission dynamics as constraints, and the difference between actual and estimated observations as the objective function236. The problem was then solved using a neural network approach. Another study employed a tree-based classifier to infer the health status of unobserved individuals based on their attributes. Disease propagation properties derived from epidemiological models were used as features in the classifier275.

The remaining six studies employed AI models trained on datasets generated by epidemiological models. These trained models were then applied to unseen synthetic or real-world data to infer transmission dynamics270,271,272,273,274,276, such as generating source probability distribution272,273 or identifying the underlying transmission pattern (e.g., homogeneous transmission and super-spreader transmission)274. GNN-based models were used in 5 of the 6 studies, owing to their ability to learn the intricate structures of transmission networks and dynamics on networks.

Outbreak detection

Seven studies reported on the use of integrated models for outbreak detection (Supplementary Appendix 12). Among these, two studies formulated the problem of COVID-19 outbreak detection as a classification problem, where tree-based, SVM-based, or multilayer perceptron (MLP) classifiers were trained to predict the outbreak risk level in a region based on its associated features277,278. Three studies estimated the outbreak risk of vector-borne diseases using epidemiological models parameterized by tree-based or Natural Language Processing (NLP) methods279,280,281. The final two studies gauged influenza outbreak risks using posterior probabilities of epidemiological models in the presence and absence of outbreaks282,283. Specifically, NLP methods were used to extract patient diagnosis data from emergency department reports, which were then input into Bayesian frameworks to derive the posterior probabilities for model selection.
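The model-selection step in the last approach can be illustrated with Bayes' rule over two candidate models. The sketch below assumes that log marginal likelihoods under an outbreak model and a no-outbreak model are already available; the function name, the numbers, the prior, and the 0.5 decision threshold are all hypothetical.

```python
import numpy as np

def posterior_model_probs(log_marginal_likelihoods, prior_probs):
    """Bayes' rule over candidate models, computed in log space for numerical stability."""
    log_post = np.asarray(log_marginal_likelihoods, dtype=float) + np.log(prior_probs)
    log_post -= log_post.max()  # subtract the maximum before exponentiating
    post = np.exp(log_post)
    return post / post.sum()

# Hypothetical log marginal likelihoods of the data under each model.
log_ml = [-105.0, -102.0]  # [no-outbreak model, outbreak model]
prior = [0.9, 0.1]         # assumed prior belief that outbreaks are rare
probs = posterior_model_probs(log_ml, prior)
outbreak_flag = probs[1] > 0.5
```

With these illustrative numbers, the data favor the outbreak model strongly enough to overcome the skeptical prior, giving a posterior outbreak probability of about 0.69.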

Summary of integration methodologies

We identified nine primary methodological frameworks (Fig. 3, Supplementary Appendix 16), with surrogate modeling/synthetically-trained AI models comprising the largest proportion at 28% (68 studies). AI-augmented epidemiological models accounted for 26% (64 studies), AI-enhanced optimization frameworks made up 20% (48 studies), and PINNs and EAAMs collectively represented 17% (42 studies). Epidemiological models utilizing improved observational data appeared in 4% (9 studies), Bayesian neural networks in 3% (7 studies), ensemble learning frameworks in 2% (6 studies), AI models incorporating epidemiological input features in 1% (3 studies), and cluster-based transmission analysis frameworks in 1% (2 studies).

Nine primary frameworks were identified, including AI-augmented epidemiological models (a), epidemiological models with improved observational data (b), PINNs/EAAMs (c), AI models incorporating epidemiological input features (d), surrogate modeling/synthetically-trained AI models (e), ensemble learning frameworks (f), Bayesian neural networks (g), AI-enhanced optimization frameworks (h), and cluster-based transmission analysis frameworks (i). Details of these frameworks can be found in Supplementary Appendix 16. PINNs physics-informed neural networks, EAAMs epidemiology-aware AI models.

Seven integration approaches were adopted across methodological frameworks (Fig. 4). Nearly half of the studies (112) employed AI models to learn unknown components of epidemiological models, enabling the incorporation of time-varying components and diverse datasets into disease modeling. Another common approach (76 studies) was training AI models on data generated by epidemiological models, in order to learn disease transmission mechanisms, build surrogates for faster estimation and evaluation of model outcomes, or overcome the limitations of scarce and low-quality real-world data by leveraging synthetic datasets. Additionally, 73 studies integrated epidemiological knowledge into the inputs, loss functions, architectures, and learning processes of AI models. Forty-seven studies utilized AI models, primarily within RL and optimal control theory-based frameworks, to determine optimal decisions under dynamic disease spreading processes. Only ten studies employed AI models to enhance observational data by extracting auxiliary information from non-traditional surveillance data, while six studies combined AI and epidemiological models through ensemble modeling frameworks to improve epidemic forecasting performance. Finally, one study used clustering methods to decompose large-scale epidemiological models.

The weight of each edge is proportional to the number of studies. Edges between application areas and methodological frameworks are colored based on application areas. Edges between methodological frameworks and integration approaches are colored based on integration approaches. Source data for the Sankey diagram can be found in Supplementary Appendix 14. PINNs physics-informed neural networks, EAAMs epidemiology-aware AI models.

Measures of quality

Among the 178 articles published in peer-reviewed journals, 14 (8%) did not list an impact factor, and 15 (8%) lacked a listed h5-index in Google Scholar (Supplementary Appendix 13). Seventy-four articles (42%) were published in quartile 1 (Q1) journals, meaning their impact factors were higher than those of at least 75% of journals in the same subject domain. Of the 67 articles published in the proceedings of conferences, workshops, or symposiums, 15 (22%) lacked a listed h5-index in Google Scholar. Additionally, 75 articles (26 published in peer-reviewed journals and 49 published in the proceedings of conferences, workshops, or symposiums) did not have citation information in Web of Science.

Discussion

The rapid expansion of big data and advancements in computational capacity have greatly broadened the integration of AI techniques with mechanistic epidemiological modeling. This scoping review identified and synthesized contributions to this burgeoning field. Among the 245 studies reviewed, nearly 90% were published during the past four years, propelled by the surge in COVID-19 research. We identified 26 infectious diseases that have been investigated using integrated models, with COVID-19 research constituting 60% of the studies. The applications of integrated models fell into six primary areas: infectious disease forecasting, model parameterization and calibration, disease intervention assessment and optimization, retrospective epidemic course analysis, transmission inference, and outbreak detection. The majority of studies focused on the first three categories. In contrast, fewer studies addressed retrospective epidemic course analysis, with notably limited research on transmission inference and outbreak detection, highlighting potential areas for future exploration. The majority of studies validated their proposed frameworks using real-world datasets (Supplementary Appendix 5). However, studies on transmission inference or intervention optimization often relied on synthetic data due to a sparsity of real-world data required for validation.

Integrated models have successfully addressed challenges that mechanistic models face in continuously evolving epidemiological situations. This success has been achieved by leveraging AI techniques (Supplementary Appendix 15) to extract valuable information from diverse databases, uncover hidden spatiotemporal dependencies within high-dimensional data, discern complex relationships between variables and outcomes of interest, effectively learn and transfer knowledge embedded in the data, and introduce methodological innovations within established Bayesian and optimization frameworks.

Our review identified significant gaps and opportunities in the literature regarding the use of AI in mechanistic epidemiological modeling. First, among the six application areas identified in our review, integrated models stand out for their practical potential to revolutionize disease forecasting, model parameterization, and calibration in the near future. Traditional mechanistic models, grounded in human knowledge of disease progression and pathogen characteristics, have been constrained by their inflexibility in rapidly refining model structures and parameters to reflect current disease landscapes and policy priorities5,13. Continuous model refinement is resource-intensive and time-consuming, potentially leading to more complex models that are difficult to calibrate. Empowered by AI’s ability to handle diverse datasets and approximate complex functions, integrated models offer crucial and timely solutions. These advancements enable the effective utilization of widely available, yet dynamically evolving and intrinsically noisy, non-traditional surveillance data and facilitate the calibration of increasingly sophisticated mechanistic models with numerous free parameters. By contrast, although many studies focus on intervention optimization, most remain theoretical, with limited demonstrations of practical applicability. For example, despite the heterogeneity in intervention objectives, most studies formulated the optimization problem using oversimplified assumptions about disease transmission dynamics, decision-making processes, and the costs and impacts of intervention strategies. Realistic considerations for decision-makers, such as the reasonableness of model assumptions, the feasibility of intervention strategies, public responses, and the trade-offs between disease and socioeconomic outcomes, were frequently overlooked. Although several studies attempted to incorporate economic factors into decision-making processes to balance health benefits and costs, significant gaps remain between proof-of-concept methods and real-world applications.

Second, while big data has great potential to enhance these models, the integration of non-traditional surveillance data such as social media content, search queries, medical reports, and satellite imagery remains limited. These data types could significantly augment or even replace traditional data sources in some contexts. For example, the transmission of climate-sensitive vector-borne diseases is influenced by a variety of climate and environmental factors. However, existing mechanistic models often utilize basic weather data, such as temperature, rainfall, and humidity11,284. These data, primarily collected by meteorological institutions, can be limited by spatial coverage and temporal resolution. Satellite imagery presents a valuable supplement, offering real-time, high-resolution data for a wide range of climate and environmental variables47,285. Previous studies have shown the potential of integrating satellite data into disease surveillance and forecasting models, utilizing purely AI approaches286,287,288. This highlights the ability of AI to extract rich information from satellite data, enabling mechanistic models to build more comprehensive and dynamic representations of abiotic and biotic drivers of disease transmission.

Third, disease transmission is a complex process influenced by a confluence of epidemiological, biological, and socio-behavioral factors. However, existing integrated models focus predominantly on epidemiological aspects, often neglecting the intricate interplays between biological and socio-behavioral processes5,289. This omission constrains the models’ utility for in-depth analysis, long-term forecasting, and strategic decision-making. These observations underscore the need for broader data integration and the development of new analytical tools capable of generating detailed, timely, and high-resolution insights into disease dynamics and evolution, policy impacts, and population behavior. The successful application of AI techniques across related fields290,291,292, including sociology and biology, creates opportunities to bridge this gap. For instance, agent-based models, equipped with large language models to enable human-like reasoning and decision-making, have demonstrated remarkable success in replicating human behaviors293. Incorporating such advancements into agent-based models of infectious diseases, which often rely on rule-based methods, has the potential to improve the realism of simulations in capturing complex human behaviors during epidemics294. Furthermore, machine learning and deep learning methods, trained on the rapidly growing volume of biological data, exhibit great promise for forecasting viral evolutionary dynamics and understanding immunity landscapes295,296.

Fourth, our review reveals a research landscape that is currently concentrated on directly transmitted diseases (especially COVID-19). The dominance of COVID-19 research is likely attributable to the vast amounts of data collected and shared during the pandemic. However, such extensive data may not be available for less prevalent or local diseases, particularly in low- and middle-income countries with limited surveillance capabilities. The practicality and scalability of most methodological frameworks depend on the availability of abundant, high-quality data, which is often lacking. For instance, GNN-based methods require individual-level contact networks that are frequently unavailable. Therefore, it is crucial to invest both in enhanced disease surveillance and in research on modeling techniques capable of handling incomplete and noisy data.

Moreover, this narrow focus on direct transmission raises concerns about the generalizability of existing integrated models to diseases with indirect transmission routes. While these models offer valuable insights into common modeling challenges faced across disease types (such as model calibration, parameter estimation, and capturing the non-linear impacts of interventions), their lack of consideration of more complex transmission mechanisms limits their full potential. For example, models for vector-borne diseases should account for the vector population dynamics and the interactions between vector and human populations. Similarly, models for water-borne diseases require a detailed representation of environmental factors, such as sanitation infrastructure and contamination pathways, and human behavioral factors, such as hygiene practices and access to clean water sources. Traditional mechanistic models, constrained by simplified assumptions and parameter uncertainties, face challenges in fully capturing these complexities. By leveraging AI’s ability to integrate diverse data, learn complex patterns, and generate accurate predictions, integrated models can potentially enhance our understanding of underlying transmission mechanisms, striking a balance between model simplification and realism. This would enable the application of integrated models to a broader spectrum of infectious diseases, each with unique challenges requiring tailored AI solutions.

Finally, while this review identified a diverse range of methodological frameworks, many studies lacked rigorous evaluations of robustness, sensitivity, and generalizability—all crucial for real-world application. Addressing these deficiencies in performance evaluation is essential to increase model transparency and reliability, ultimately fostering public trust and facilitating wider adoption of these models. Moreover, the proliferation of methodological frameworks raises important questions about the relative performance of integrated, purely AI, and traditional mechanistic models. While integrated models often outperform simple mechanistic models (e.g., classical SIR systems), they may not surpass those that capture sufficient realism for practical decision-making. Additionally, although traditional statistical models fall outside the scope of AI techniques considered in our review, their integration into mechanistic epidemiological models remains valuable38. However, comparisons between these integrated approaches, especially those incorporating traditional statistical models versus those based on ML/DL, are rarely discussed. For example, while LSTM networks, with their ability to capture temporal dependencies, were frequently used in AI-augmented epidemiological models to predict dynamic model parameters, traditional statistical models such as autoregressive integrated moving average (ARIMA) models may be more robust when faced with noisy and scarce time series data297. Therefore, future research is needed to rigorously examine the limitations and comparative utility of AI-assisted and well-established traditional disease transmission models to guide effective model selection.

In conclusion, AI techniques and mechanistic epidemiological models can synergistically enhance one another, leveraging the strength of AI methods to learn complex input-output relationships while incorporating the prior epidemiological knowledge embedded within mechanistic models. This scoping review systematically synthesizes the literature in this field and identifies diverse applications of integrated models, including disease forecasting, model calibration, and intervention optimization. While highlighting promising methodological advancements with practical potential, our review also reveals significant gaps in the current literature. These include the need for rigorous evaluation and comparison of methodologies, better incorporation of domain expertise in guiding the development of integrated models for policy-relevant decision-making, and expanded exploration of diverse datasets and underlying biological and socio-behavioral mechanisms. By addressing these challenges through interdisciplinary collaboration, we can unlock the full potential of AI to enrich the toolkit for epidemiological modeling, ultimately enhancing our ability to understand, prevent, mitigate, and respond to infectious disease outbreaks.

Methods

Overview

We conducted a scoping review to synthesize the literature on the integration of AI techniques, specifically ML and DL models, with mechanistic epidemiological models of infectious disease dynamics. Our methodology adhered to the framework proposed by the Joanna Briggs Institute (JBI)298, and our reporting followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR)299. The protocol was registered prospectively with the Open Science Framework (registration https://doi.org/10.17605/OSF.IO/E8ZG7) on October 9, 2023. Our review focuses on three key questions: (i) In which areas of epidemiological modeling have integrated models been applied? (ii) What infectious diseases have been modeled using integrated models? (iii) How have AI techniques and mechanistic epidemiological models been integrated?

Eligibility criteria

Our review included studies that integrated AI techniques with mechanistic models of infectious disease, irrespective of the disease type or research objective. We included studies published in English that underwent peer review, whether in journals or in the proceedings of conferences, workshops, or symposiums. Seven types of mechanistic models, commonly used in epidemiological modeling, were considered eligible for this review: compartmental models, individual/agent-based models, metapopulation models, cellular automata, renewal equations, chain binomial models, and branching processes. Eligible AI techniques include all ML and DL models, excluding statistical models and fuzzy logic systems. We define “integrated models” as those combining mechanistic epidemiological models with AI techniques specifically for transmission analysis and disease intervention optimization. Studies that constructed AI techniques as alternative modeling methods, compared the performance of AI techniques with mechanistic models, or used mechanistic models solely to generate validation/testing datasets for AI techniques were not considered eligible under this definition. We excluded studies that did not use eligible AI techniques or mechanistic models, did not integrate AI techniques with mechanistic models, or were not original research (e.g., reviews, commentaries, and editorial notes). Studies lacking methodological details, numerical results, or accessible full texts were excluded. Detailed eligibility criteria and justifications are provided in Supplementary Appendix 1.

Search strategy

We conducted searches across six databases: PubMed, Embase (Ovid), Web of Science, Scopus, IEEE Xplore, and ACM Digital Library (the ACM Full-Text collection). Our search combined six categories of terms (“AI”, “Epidemic modeling”, “Modeling”, “Infectious disease”, “Infectious agents”, and “Spreading”) using Boolean operators. We employed three kinds of search strings: (“AI” AND “Epidemic modeling”), (“AI” AND “Modeling” AND “Infectious disease”), and (“AI” AND “Modeling” AND “Infectious agents” AND “Spreading”). Within each category, terms were linked by the Boolean operator “OR.” Restrictions on search functionality within IEEE Xplore and ACM Digital Library required multiple separate searches. We used the Polyglot tool300 to translate search syntax across databases. Details of the search strings for each database can be found in Supplementary Appendix 2.
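The way the three search strings are assembled can be sketched in a few lines of Python. This is only an illustration of the Boolean structure described above: the term lists are hypothetical placeholders (the actual terms are in Supplementary Appendix 2), the helper names `or_group` and `build_query` are our own, and database-specific syntax (field tags, truncation, proximity operators) is deliberately left out.

```python
# Hypothetical placeholder terms for each category; the review's real
# term lists are given in Supplementary Appendix 2.
CATEGORIES = {
    "AI": ["machine learning", "deep learning"],
    "Epidemic modeling": ["epidemic model", "compartmental model"],
    "Modeling": ["model", "simulation"],
    "Infectious disease": ["infectious disease", "communicable disease"],
    "Infectious agents": ["virus", "pathogen"],
    "Spreading": ["transmission", "spread"],
}

# The three category combinations named in the text.
COMBINATIONS = [
    ("AI", "Epidemic modeling"),
    ("AI", "Modeling", "Infectious disease"),
    ("AI", "Modeling", "Infectious agents", "Spreading"),
]


def or_group(terms):
    """Link the terms of one category with OR, quoting each phrase."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(combination, categories=CATEGORIES):
    """Link the OR-groups of the named categories with AND."""
    return " AND ".join(or_group(categories[name]) for name in combination)


queries = [build_query(combination) for combination in COMBINATIONS]
for query in queries:
    print(query)
```

Keeping the term lists separate from the combinations means a change to one category propagates to every search string that uses it, which is convenient when a search is re-run at several dates.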

Our initial literature search commenced on October 6, 2023. To ensure comprehensive results, we updated this search on November 7, 2023 and December 6, 2023 to include newly published literature. We also conducted manual searches of relevant journals and conference proceedings (e.g., Nature Machine Intelligence and the ACM SIGKDD Conference on Knowledge Discovery and Data Mining). Finally, we reviewed the references of all included studies to identify additional relevant studies.

Selection of sources of evidence

All retrieved records were initially imported into EndNote (version 20.1)301 to remove duplicates before being transferred to Covidence302 for screening and processing. Three reviewers (YY, AP, and CB) screened titles and abstracts during the primary screening stage, using the ASReview software (version 1.5)303, an open-source, ML-assisted tool for active learning and systematic reviews, to aid in decision-making (Supplementary Appendix 3). Full-text screening was conducted independently by six reviewers (YY, AP, CB, DMS, RR, and AS), with any discrepancies resolved through team discussion, ensuring adherence to the inclusion and exclusion criteria throughout the review process.

Data charting and data items

We extracted data from studies satisfying the inclusion criteria using Covidence and exported the data to Google Forms for further analysis. We piloted a standardized data extraction form with two members of the team (YY and AP) on three studies to ensure it captured all necessary information; the form is available in Supplementary Appendix 4. The data extraction form was first completed by one reviewer (YY), and subsequently verified by another reviewer (AP) for correctness, with any discrepancies resolved through discussion.

Quality assessment

The quality of each included study was assessed through the 2022 journal impact factor (not applicable for proceedings of conferences, workshops, or symposiums), the h5-index of the publication (journal, conference, workshop, or symposium) from Google Scholar, and the number of citations listed in Web of Science as of November 13, 2024. The h-index is an author-level metric that measures both the productivity and citation impact of an author's publications; the h5-index applies the same calculation to the articles a venue published in the last 5 complete years (2018–2022).
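To make the h5-index concrete, the following minimal Python sketch computes it from a list of (publication year, citation count) pairs. It assumes the standard definition of the h-index (the largest h such that h items each have at least h citations); the function names and the citation counts are invented for illustration and are not drawn from any venue in this review.

```python
def h_index(citations):
    """Largest h such that h items have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def h5_index(articles, last_year, window=5):
    """h-index over articles published in the `window` years ending at `last_year`."""
    first_year = last_year - window + 1
    recent = [count for year, count in articles if first_year <= year <= last_year]
    return h_index(recent)


# Made-up (year, citations) pairs for a hypothetical venue.
articles = [(2017, 90), (2018, 12), (2019, 7), (2020, 5), (2021, 3), (2022, 1), (2022, 0)]
print(h5_index(articles, last_year=2022))  # prints 3
```

In this toy example the highly cited 2017 article falls outside the 2018–2022 window, so it does not contribute to the h5-index.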

Synthesis of results

We tabulated and summarized the characteristics of eligible studies by grouping them based on their application area, methodological framework, and integration approach. The application area encompassed the specific use of integrated models, such as outbreak detection and forecasting. We also identified the advantages of integrated models and highlighted the most commonly used AI techniques.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

All data generated or analyzed during this study are included in this published article (and its supplementary information files).

References

Kermack, W. O., McKendrick, A. G. & Walker, G. T. A contribution to the mathematical theory of epidemics. Proc. R. Soc. Lond. Ser. A, Containing Pap. A Math. Phys. Character 115, 700–721 (1927).

Riley, S. Large-scale spatial-transmission models of infectious disease. Science 316, 1298–1301 (2007).

Grassly, N. C. & Fraser, C. Mathematical models of infectious disease transmission. Nat. Rev. Microbiol. 6, 477–487 (2008).

Heesterbeek, H. et al. Modeling infectious disease dynamics in the complex landscape of global health. Science 347, aaa4339 (2015).

Becker, A. D. et al. Development and dissemination of infectious disease dynamic transmission models during the COVID-19 pandemic: what can we learn from other pathogens and how can we move forward? Lancet Digital Health 3, e41–e50 (2021).

Bedford, T. et al. Global circulation patterns of seasonal influenza viruses vary with antigenic drift. Nature 523, 217–220 (2015).

Moghadas, S. M. et al. Projecting hospital utilization during the COVID-19 outbreaks in the United States. Proc. Natl Acad. Sci. USA 117, 9122–9126 (2020).

Ye, Y. et al. Equitable access to COVID-19 vaccines makes a life-saving difference to all countries. Nat. Hum. Behav. 6, 207–216 (2022).

Eames, K. T. D. & Keeling, M. J. Modeling dynamic and network heterogeneities in the spread of sexually transmitted diseases. Proc. Natl Acad. Sci. USA 99, 13330–13335 (2002).

Marshall, B. D. L. et al. Potential effectiveness of long-acting injectable pre-exposure prophylaxis for HIV prevention in men who have sex with men: a modelling study. Lancet HIV 5, e498–e505 (2018).

Caldwell, J. M. et al. Climate predicts geographic and temporal variation in mosquito-borne disease dynamics on two continents. Nat. Commun. 12, 1233 (2021).

Thompson, H. A. et al. Seasonal use case for the RTS,S/AS01 malaria vaccine: a mathematical modelling study. Lancet Glob. Health 10, e1782–e1792 (2022).

Holmdahl, I. & Buckee, C. Wrong but useful - what covid-19 epidemiologic models can and cannot tell us. N. Engl. J. Med. 383, 303–305 (2020).

Wei, Y. et al. Better modelling of infectious diseases: lessons from covid-19 in China. BMJ 375, n2365 (2021).

Budd, J. et al. Digital technologies in the public-health response to COVID-19. Nat. Med. 26, 1183–1192 (2020).

Whitelaw, S., Mamas, M. A., Topol, E. & Van Spall, H. G. C. Applications of digital technology in COVID-19 pandemic planning and response. Lancet Digit Health 2, e435–e440 (2020).

Pandit, J. A., Radin, J. M., Quer, G. & Topol, E. J. Smartphone apps in the COVID-19 pandemic. Nat. Biotechnol. 40, 1013–1022 (2022).

Zhang, Q., Gao, J., Wu, J. T., Cao, Z. & Dajun Zeng, D. Data science approaches to confronting the COVID-19 pandemic: a narrative review. Philos. Trans. A Math. Phys. Eng. Sci. 380, 20210127 (2022).

Buckee, C. Improving epidemic surveillance and response: big data is dead, long live big data. Lancet Digit Health 2, e218–e220 (2020).

Prasad, P. V. et al. Multimodeling approach to evaluating the efficacy of layering pharmaceutical and nonpharmaceutical interventions for influenza pandemics. Proc. Natl Acad. Sci. USA 120, e2300590120 (2023).

Pangallo, M. et al. The unequal effects of the health–economy trade-off during the COVID-19 pandemic. Nat. Hum. Behav. 8, 264–275 (2024).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Jordan, M. I. & Mitchell, T. M. Machine learning: Trends, perspectives, and prospects. Science 349, 255–260 (2015).

Hirschberg, J. & Manning, C. D. Advances in natural language processing. Science 349, 261–266 (2015).

Jumper, J. et al. Highly accurate protein structure prediction with AlphaFold. Nature 596, 583–589 (2021).

Davies, A. et al. Advancing mathematics by guiding human intuition with AI. Nature 600, 70–74 (2021).

Yu, K.-H., Beam, A. L. & Kohane, I. S. Artificial intelligence in healthcare. Nat. Biomed. Eng. 2, 719–731 (2018).

Komorowski, M., Celi, L. A., Badawi, O., Gordon, A. C. & Faisal, A. A. The Artificial Intelligence Clinician learns optimal treatment strategies for sepsis in intensive care. Nat. Med. 24, 1716–1720 (2018).

Rajpurkar, P., Chen, E., Banerjee, O. & Topol, E. AI in health and medicine. Nat. Med. 28, 31–38 (2022).

Adebamowo, C. A. et al. The promise of data science for health research in Africa. Nat. Commun. 14, 6084 (2023).

Ghamizi, S. et al. Data-driven Simulation and Optimization for Covid-19 Exit Strategies. in Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining 3434–3442 (Association for Computing Machinery, New York, NY, USA, 2020).

Kharazmi, E. et al. Identifiability and predictability of integer- and fractional-order epidemiological models using physics-informed neural networks. Nat. Computational Sci. 1, 744–753 (2021).

Vilar, J. M. G. & Saiz, L. Dynamics-informed deconvolutional neural networks for super-resolution identification of regime changes in epidemiological time series. Sci. Adv. 9, eadf0673 (2023).

Yao, Y., Zhou, H., Cao, Z., Zeng, D. D. & Zhang, Q. Optimal adaptive nonpharmaceutical interventions to mitigate the outbreak of respiratory infections following the COVID-19 pandemic: a deep reinforcement learning study in Hong Kong, China. J. Am. Med. Inform. Assoc. 30, 1543–1551 (2023).

Kapoor, A. et al. Examining COVID-19 forecasting using spatio-temporal Graph Neural Networks. arXiv [cs.LG] (2020).

Gao, J. et al. Evidence-driven spatiotemporal COVID-19 hospitalization prediction with Ising dynamics. Nat. Commun. 14, 3093 (2023).

Panagopoulos, G., Nikolentzos, G. & Vazirgiannis, M. Transfer graph neural networks for pandemic forecasting. Proc. Conf. AAAI Artif. Intell. 35, 4838–4845 (2021).

Rodríguez, A. et al. Machine learning for data-centric epidemic forecasting. Nat. Mach. Intell. 6, 1122–1131 (2024).

Andersson, H. & Britton, T. Stochastic Epidemic Models and Their Statistical Analysis. (Springer, New York, NY, 2000).

Ionides, E. L., Bretó, C. & King, A. A. Inference for nonlinear dynamical systems. Proc. Natl Acad. Sci. USA 103, 18438–18443 (2006).

Farah, M., Birrell, P., Conti, S. & Angelis, D. D. Bayesian emulation and calibration of a dynamic epidemic model for A/H1N1 influenza. J. Am. Stat. Assoc. 109, 1398–1411 (2014).

Syrowatka, A. et al. Leveraging artificial intelligence for pandemic preparedness and response: a scoping review to identify key use cases. NPJ Digit. Med. 4, 96 (2021).

Alfred, R. & Obit, J. H. The roles of machine learning methods in limiting the spread of deadly diseases: A systematic review. Heliyon 7, e07371 (2021).

Syeda, H. B. et al. Role of Machine Learning Techniques to Tackle the COVID-19 Crisis: Systematic Review. JMIR Med. Inf. 9, e23811 (2021).

Adly, A. S., Adly, A. S. & Adly, M. S. Approaches Based on Artificial Intelligence and the Internet of Intelligent Things to Prevent the Spread of COVID-19: Scoping Review. J. Med. Internet Res. 22, e19104 (2020).

Brownstein, J. S., Rader, B., Astley, C. M. & Tian, H. Advances in Artificial Intelligence for Infectious-Disease Surveillance. N. Engl. J. Med. 388, 1597–1607 (2023).

Pley, C., Evans, M., Lowe, R., Montgomery, H. & Yacoub, S. Digital and technological innovation in vector-borne disease surveillance to predict, detect, and control climate-driven outbreaks. Lancet Planet Health 5, e739–e745 (2021).

Xiang, Y. et al. Application of artificial intelligence and machine learning for HIV prevention interventions. Lancet HIV 9, e54–e62 (2022).

Poirier, C. et al. Real-time forecasting of the COVID-19 outbreak in Chinese provinces: Machine learning approach using novel digital data and estimates from mechanistic models. J. Med. Internet Res. 22, e20285 (2020).

Fan, X.-R., Zuo, J., He, W.-T. & Liu, W. Stacking based prediction of COVID-19 Pandemic by integrating infectious disease dynamics model and traditional machine learning. In Proceedings of the 2022 5th International Conference on Big Data and Internet of Things 20–26 (Association for Computing Machinery, New York, NY, USA, 2022).

Garner, M. G. et al. Early Decision Indicators for Foot-and-Mouth Disease Outbreaks in Non-Endemic Countries. Front Vet. Sci. 3, 109 (2016).

Kondapalli, A. R., Koganti, H., Challagundla, S. K., Guntaka, C. S. R. & Biswas, S. Machine learning predictions of COVID-19 second wave end-times in Indian states. Indian J. Phys. Proc. Indian Assoc. Cultiv Sci. (2004) 96, 2547–2555 (2022).

Kandula, S. et al. Evaluation of mechanistic and statistical methods in forecasting influenza-like illness. J. R. Soc. Interface 15, 20180174 (2018).

Adiga, A. et al. All Models Are Useful: Bayesian Ensembling for Robust High Resolution COVID-19 Forecasting. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (Association for Computing Machinery, 2021). https://doi.org/10.1101/2021.03.12.21253495.

Nadler, P., Arcucci, R. & Guo, Y. A Neural SIR Model for Global Forecasting. In Proceedings of the Machine Learning for Health NeurIPS Workshop, in Proceedings of Machine Learning Research. Vol. 136, 254–266 (PMLR, 2020). Available from https://proceedings.mlr.press/v136/nadler20a.html.

Maniamfu, P. & Kameyama, K. LSTM-based Forecasting using Policy Stringency and Time-varying Parameters of the SIR Model for COVID-19. In 2023 19th IEEE International Colloquium on Signal Processing & Its Applications (CSPA) 111–116 (IEEE, 2023).

Adiga, A. et al. Enhancing COVID-19 Ensemble Forecasting Model Performance Using Auxiliary Data Sources. In 2022 IEEE International Conference on Big Data (Big Data) 1594–1603 (IEEE, Osaka, Japan, 2022). https://doi.org/10.1109/BigData55660.2022.10020579.

Delli Compagni, R., Cheng, Z., Russo, S. & Van Boeckel, T. P. A hybrid Neural Network-SEIR model for forecasting intensive care occupancy in Switzerland during COVID-19 epidemics. PLoS One 17, e0263789 (2022).

Barmparis, G. D. & Tsironis, G. P. Physics‐informed machine learning for the COVID‐19 pandemic: Adherence to social distancing and short‐term predictions for eight countries. Quant. Biol. 10, 139–149 (2022).

De Rosa, M., Giampaolo, F., Piccialli, F. & Cuomo, S. Modelling the COVID-19 infection rate through a Physics-Informed learning approach. In 2023 31st Euromicro International Conference on Parallel, Distributed and Network-Based Processing (PDP) 212–218 (IEEE, 2023).

Torku, T., Khaliq, A. & Rihan, F. SEINN: A deep learning algorithm for the stochastic epidemic model. Math. Biosci. Eng. 20, 16330–16361 (2023).

Berkhahn, S. & Ehrhardt, M. A physics-informed neural network to model COVID-19 infection and hospitalization scenarios. Adv. Contin. Discret Model 2022, 61 (2022).

Rodríguez, A., Cui, J., Ramakrishnan, N., Adhikari, B. & Aditya Prakash, B. EINNs: Epidemiologically-Informed Neural Networks. AAAI 37, 14453–14460 (2023).

Shaier, S., Raissi, M. & Seshaiyer, P. Data-driven approaches for predicting spread of infectious diseases through DINNs: Disease Informed Neural Networks. Lett. Biomath. 9, 71–105 (2022).

Bertaglia, G., Lu, C., Pareschi, L. & Zhu, X. Asymptotic-Preserving Neural Networks for multiscale hyperbolic models of epidemic spread. Math. Models Methods Appl. Sci. 32, 1949–1985 (2022).

Malinzi, J., Gwebu, S. & Motsa, S. Determining COVID-19 Dynamics Using Physics Informed Neural Networks. Axioms 11, 121 (2022).

Liu, P., Han, Q. & Yang, X. Rolling Iterative Prediction for Correlated Multivariate Time Series. In Data Science 433–452 (Springer Nature Singapore, 2023).

Otadi, M. & Mosleh, M. Universal approximation method for the solution of integral equations. Math. Sci. 11, 181–187 (2017).

Liu, M., Liu, Y. & Liu, J. Epidemiology-aware Deep Learning for Infectious Disease Dynamics Prediction. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management 4084–4088 (Association for Computing Machinery, New York, NY, USA, 2023).

Cao, Q. et al. MepoGNN: Metapopulation Epidemic Forecasting with Graph Neural Networks. In Machine Learning and Knowledge Discovery in Databases 453–468 (Springer Nature Switzerland, 2023).

Sun, C., Kumarasamy, V. K., Liang, Y., Wu, D. & Wang, Y. Using a Layered Ensemble of Physics-Guided Graph Attention Networks to Predict COVID-19 Trends. Appl. Artif. Intell. 36, 2055989 (2022).

Gao, J. et al. STAN: spatio-temporal attention network for pandemic prediction using real-world evidence. J. Am. Med. Inform. Assoc. 28, 733–743 (2021).

Zheng, Y., Li, Z., Xin, J. & Zhou, G. A spatial-temporal graph based hybrid infectious disease model with application to COVID-19. Int Conf Pattern Recognit Appl. Method abs/2010.09077 (2020).

Ma, J. et al. Enhancing Online Epidemic Supervising System by Compartmental and GRU Fusion Model. Mobile Information Systems 2022, 3303854 (2022).

Wang, L. et al. CausalGNN: Causal-Based Graph Neural Networks for Spatio-Temporal Epidemic Forecasting. AAAI 36, 12191–12199 (2022).

Nguyen, C. et al. BeCaked+: An Explainable AI Model to Forecast Delta-Spreading Covid-19 Situations for Ho Chi Minh City. In Proceedings of the ICR’22 International Conference on Innovations in Computing Research 53–64 (Springer International Publishing, 2022).

Nguyen, D. Q. et al. BeCaked: An Explainable Artificial Intelligence Model for COVID-19 Forecasting. Sci. Rep. 12, 7969 (2022).

Wang, L., Chen, J. & Marathe, M. TDEFSI: Theory-guided Deep Learning-based Epidemic Forecasting with Synthetic Information. ACM Trans. Spat. Algorithms Syst. 6, 1–39 (2020).

Zhan, C., Wu, Z., Wen, Q., Gao, Y. & Zhang, H. Optimizing Broad Learning System Hyper-parameters through Particle Swarm Optimization for Predicting COVID-19 in 184 Countries. In 2020 IEEE International Conference on E-health Networking, Application & Services (HEALTHCOM) 1–6 (IEEE, 2021).

Wang, D., Zhang, S. & Wang, L. Deep Epidemiological Modeling by Black-box Knowledge Distillation: An Accurate Deep Learning Model for COVID-19. AAAI 35, 15424–15430 (2021).

Bogacsovics, G. et al. Replacing the SIR epidemic model with a neural network and training it further to increase prediction accuracy. Ann. Math. Inform. 53, 73–91 (2021).

Wang, H. et al. Predicting the Epidemics Trend of COVID-19 Using Epidemiological-Based Generative Adversarial Networks. IEEE J. Sel. Top. Signal Process. 16, 276–288 (2022).

Murphy, C., Laurence, E. & Allard, A. Deep learning of contagion dynamics on complex networks. Nat. Commun. 12, 4720 (2021).

Zhang, T. & Li, J. Understanding and predicting the spatio-temporal spread of COVID-19 via integrating diffusive graph embedding and compartmental models. Trans. GIS 25, 3025–3047 (2021).

Quilodrán-Casas, C. et al. Digital twins based on bidirectional LSTM and GAN for modelling the COVID-19 pandemic. Neurocomputing 470, 11–28 (2022).

Silva, V. L. S., Heaney, C. E., Li, Y. & Pain, C. C. Data Assimilation Predictive GAN (DA-PredGAN) Applied to a Spatio-Temporal Compartmental Model in Epidemiology. J. Sci. Comput. 94, 25 (2023).

Bhouri, M. A. et al. COVID-19 dynamics across the US: A deep learning study of human mobility and social behavior. Comput. Methods Appl. Mech. Eng. 382, 113891 (2021).

Wang, H. et al. Neural-SEIR: A flexible data-driven framework for precise prediction of epidemic disease. Math. Biosci. Eng. 20, 16807–16823 (2023).

Chopra, A. et al. Differentiable Agent-based Epidemiology. In Proceedings of the 2023 International Conference on Autonomous Agents and Multiagent Systems (AAMAS '23) 1848–1857 (International Foundation for Autonomous Agents and Multiagent Systems, Richland, SC, 2023).

Yang, Y., Kiyavash, N., Song, L. & He, N. The devil is in the detail: A framework for macroscopic prediction via microscopic models. Adv. Neural Inf. Process. Syst. 33, 8006–8016 (2020).

Amaral, F., Casaca, W., Oishi, C. M. & Cuminato, J. A. Towards Providing Effective Data-Driven Responses to Predict the Covid-19 in São Paulo and Brazil. Sensors 21, 540 (2021).

He, Z. & Cai, Z. Quantifying the Effect of Quarantine Control and Optimizing its Cost in COVID-19 Pandemic. IEEE/ACM Trans. Comput. Biol. Bioinform. PP, 1–11.

Yin, S., Wu, J. & Song, P. Optimal control by deep learning techniques and its applications on epidemic models. J. Math. Biol. 86, 36 (2023).

Arık, S. Ö. et al. A prospective evaluation of AI-augmented epidemiology to forecast COVID-19 in the USA and Japan. NPJ Digit. Med. 4, 146 (2021).

Petrica, M. & Popescu, I. Inverse problem for parameters identification in a modified SIRD epidemic model using ensemble neural networks. BioData Min. 16, 22 (2023).

Liu, X., Wang, W., Hou, B. & Feng, N. Prediction of Active Cases of COVID-19 Based on small-scale-KNN-LSTM. In 2023 35th Chinese Control and Decision Conference (CCDC) 2031–2036 (IEEE, 2023).

Rahnsch, B. & Taghizadeh, L. Network-based uncertainty quantification for mathematical models in epidemiology. J. Theor. Biol. 577, 111671 (2024).

Kumar, N. & Susan, S. Epidemic Modeling using Hybrid of Time-varying SIRD, Particle Swarm Optimization, and Deep Learning. In 2023 14th International Conference on Computing Communication and Networking Technologies (ICCCNT) 1–7 (IEEE, 2023).

Ji, J. et al. Climate-dependent effectiveness of nonpharmaceutical interventions on COVID-19 mitigation. Math. Biosci. 366, 109087 (2023).

Vega, R., Flores, L. & Greiner, R. SIMLR: Machine Learning inside the SIR Model for COVID-19 Forecasting. Forecasting 4, 72–94 (2022).

Chen, Y., Liu, J. & Yu, B. COVID-19 Trend Prediction Using CLS-Net Hybrid Model. In 2023 12th International Conference of Information and Communication Technology (ICTech) 277–282 (IEEE, 2023).

Alsmadi, M. et al. Susceptible exposed infectious recovered-machine learning for COVID-19 prediction in Saudi Arabia. Int. J. Electr. Comput. Eng. (IJECE) https://doi.org/10.11591/ijece.v13i4.pp4761-4776 (2023).

Qiu, Y. et al. Prediction and Analysis of Infectious Diseases Based on M-SIR Model. In 2022 5th International Conference on Pattern Recognition and Artificial Intelligence (PRAI) 225–234 (IEEE, 2022).

Mu, C., Teo, J. & Cheong, J. Modelling Singapore’s Covid-19 Pandemic Using SEIRQV and Hybrid Epidemiological Models. In IRC-SET 2022 559–575 (Springer Nature Singapore, 2023).

Wang, L. et al. Machine learning spatio-temporal epidemiological model to evaluate Germany-county-level COVID-19 risk. Mach. Learn.: Sci. Technol. 2, 035031 (2021).

Wu, W. Computer intelligent prediction method of COVID-19 based on improved SEIR model and machine learning. In 2022 IEEE 2nd International Conference on Power, Electronics and Computer Applications (ICPECA) 934–938 (IEEE, 2022).

Yao, S. Assessment on the anti-epidemic measures: Mathematical model establishment for COVID-19 epidemic and application of recurrent neural network. In 2022 7th International Conference on Intelligent Computing and Signal Processing (ICSP) 1584–1590 (IEEE, 2022).

Zhang, G. & Liu, X. Prediction and control of COVID-19 spreading based on a hybrid intelligent model. PLoS One 16, e0246360 (2021).

Wyss, A. & Hidalgo, A. Modeling COVID-19 Using a Modified SVIR Compartmental Model and LSTM-Estimated Parameters. Mathematics 11, 1436 (2023).

Gadewadikar, J. & Marshall, J. A methodology for parameter estimation in system dynamics models using artificial intelligence. Syst. Eng. 27, 253–266 (2024).

Zisad, S. N., Hossain, M. S., Hossain, M. S. & Andersson, K. An Integrated Neural Network and SEIR Model to Predict COVID-19. Algorithms 14, 94 (2021).

Merkelbach, K. et al. HybridML: Open source platform for hybrid modeling. Comput. Chem. Eng. 160, 107736 (2022).

Muñoz, L. et al. A hybrid system for pandemic evolution prediction. ADCAIJ 11, 111–128 (2022).

Castillo Ossa, L. F. et al. A Hybrid Model for COVID-19 Monitoring and Prediction. Electronics 10, 799 (2021).

Jiang, R. et al. Countrywide Origin-Destination Matrix Prediction and Its Application for COVID-19. In Machine Learning and Knowledge Discovery in Databases. Applied Data Science Track 319–334 (Springer International Publishing, 2021).

Yasami, A., Beigi, A. & Yousefpour, A. Application of long short-term memory neural network and optimal control to variable-order fractional model of HIV/AIDS. Eur. Phys. J. Spec. Top. 231, 1875–1884 (2022).

Liao, Z. et al. SIRVD-DL: A COVID-19 deep learning prediction model based on time-dependent SIRVD. Comput. Biol. Med. 138, 104868 (2021).

Zheng, N. et al. Predicting COVID-19 in China Using Hybrid AI Model. IEEE Trans. Cybern. 50, 2891–2904 (2020).

Watson, G. L. et al. Pandemic velocity: Forecasting COVID-19 in the US with a machine learning & Bayesian time series compartmental model. PLoS Comput. Biol. 17, e1008837 (2021).

Liu, X.-D. et al. Nesting the SIRV model with NAR, LSTM and statistical methods to fit and predict COVID-19 epidemic trend in Africa. BMC Public Health 23, 138 (2023).

Wang, X., Wang, H., Ramazi, P., Nah, K. & Lewis, M. From Policy to Prediction: Forecasting COVID-19 Dynamics Under Imperfect Vaccination. Bull. Math. Biol. 84, 90 (2022).

Wang, X., Wang, H., Ramazi, P., Nah, K. & Lewis, M. A Hypothesis-Free Bridging of Disease Dynamics and Non-pharmaceutical Policies. Bull. Math. Biol. 84, 57 (2022).

Deng, Q. Dynamics and Development of the COVID-19 Epidemic in the United States: A Compartmental Model Enhanced With Deep Learning Techniques. J. Med. Internet Res. 22, e21173 (2020).

Kim, J., Matsunami, K., Okamura, K., Badr, S. & Sugiyama, H. Determination of critical decision points for COVID-19 measures in Japan. Sci. Rep. 11, 16416 (2021).

Gupta, A. & Katarya, R. A deep-SIQRV epidemic model for COVID-19 to access the impact of prevention and control measures. Comput. Biol. Chem. 107, 107941 (2023).

Bousquet, A., Conrad, W. H., Sadat, S. O., Vardanyan, N. & Hong, Y. Deep learning forecasting using time-varying parameters of the SIRD model for Covid-19. Sci. Rep. 12, 3030 (2022).

Feng, L., Chen, Z., Harold, A. L. Jr., Furati, K. & Khaliq, A. Data driven time-varying SEIR-LSTM/GRU algorithms to track the spread of COVID-19. Math. Biosci. Eng. 19, 8935–8962 (2022).

Ding, Z., Sha, F., Zhang, Y. & Yang, Z. Biology-Informed Recurrent Neural Network for Pandemic Prediction Using Multimodal Data. Biomimetics 8, 158 (2023).

Khan, J. I., Ullah, F. & Lee, S. Attention based parameter estimation and states forecasting of COVID-19 pandemic using modified SIQRD Model. Chaos Solitons Fractals 165, 112818 (2022).

Kumaresan, M., Kumar, M. S. & Muthukumar, N. Analysis of mobility based COVID-19 epidemic model using Federated Multitask Learning. Math. Biosci. Eng. 19, 9983–10005 (2022).

Long, J., Khaliq, A. Q. M. & Furati, K. M. Identification and prediction of time-varying parameters of COVID-19 model: a data-driven deep learning approach. Int. J. Comput. Math. 98, 1617–1632 (2021).

Farooq, J. & Bazaz, M. A. A deep learning algorithm for modeling and forecasting of COVID-19 in five worst affected states of India. Alex. Eng. J. 60, 587–596 (2021).

Soemsap, T., Wongthanavasu, S. & Satimai, W. Forecasting number of dengue patients using cellular automata model. In 2014 International Electrical Engineering Congress (iEECON) 1–4 (IEEE, 2014).

Tuarob, S., Tucker, C. S., Salathe, M. & Ram, N. Modeling Individual-Level Infection Dynamics Using Social Network Information. In Proceedings of the 24th ACM International on Conference on Information and Knowledge Management 1501–1510 (Association for Computing Machinery, New York, NY, USA, 2015).

Solares-Hernández, P. A., Garibo-i-Orts, Ò., Conejero, J. A. & Manzano, F. A. Adaptation of the COVASIM model to incorporate non-pharmaceutical interventions: Application to the Dominican Republic during the second wave of COVID-19. Appl. Math. Nonlin. Sci. 8, 2319–2332 (2023).

Rosato, C. et al. Extracting Self-Reported COVID-19 Symptom Tweets and Twitter Movement Mobility Origin/Destination Matrices to Inform Disease Models. Information 14, 170 (2023).

Kandula, S., Pei, S. & Shaman, J. Improved forecasts of influenza-associated hospitalization rates with Google Search Trends. J. R. Soc. Interface 16, 20190080 (2019).

Reiker, T. et al. Emulator-based Bayesian optimization for efficient multi-objective calibration of an individual-based model of malaria. Nat. Commun. 12, 7212 (2021).

Jørgensen, A. C. S., Ghosh, A., Sturrock, M. & Shahrezaei, V. Efficient Bayesian inference for stochastic agent-based models. PLoS Comput. Biol. 18, e1009508 (2022).

Kwok, W. M., Streftaris, G. & Dass, S. C. Laplace based Bayesian inference for ordinary differential equation models using regularized artificial neural networks. Stat. Comput. 33, 124 (2023).

Anirudh, R. et al. Accurate Calibration of Agent-based Epidemiological Models with Neural Network Surrogates. In Proceedings of the 1st Workshop on Healthcare AI and COVID-19, ICML 2022 (eds. Xu, P. et al.) vol. 184 54–62 (PMLR, 2022).

Perumal, R. & van Zyl, T. L. Surrogate-assisted strategies: the parameterisation of an infectious disease agent-based model. Neural Comput. Appl. https://doi.org/10.1007/s00521-022-07476-y (2022).

Nsoesie, E. O., Beckman, R., Marathe, M. & Lewis, B. Prediction of an Epidemic Curve: A Supervised Classification Approach. Stat. Commun. Infect. Dis. 3, 5 (2011).

Harrison, G. et al. Identifying Complicated Contagion Scenarios from Cascade Data. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining 4135–4145 (Association for Computing Machinery, New York, NY, USA, 2023).

Augusta, C., Deardon, R. & Taylor, G. Deep learning for supervised classification of spatial epidemics. Spat. Spatiotemporal Epidemiol. 29, 187–198 (2019).

Pokharel, G. & Deardon, R. Supervised learning and prediction of spatial epidemics. Spat. Spatiotemporal Epidemiol. 11, 59–77 (2014).

Nsoesie, E. O., Leman, S. C. & Marathe, M. V. A Dirichlet process model for classifying and forecasting epidemic curves. BMC Infect. Dis. 14, 12 (2014).

Torku, T. K., Khaliq, A. Q. M. & Furati, K. M. Deep-Data-Driven Neural Networks for COVID-19 Vaccine Efficacy. Epidemiologia (Basel) 2, 564–586 (2021).

Ghosh, S., Ogueda-Oliva, A., Ghosh, A., Banerjee, M. & Seshaiyer, P. Understanding the implications of under-reporting, vaccine efficiency and social behavior on the post-pandemic spread using physics informed neural networks: A case study of China. PLoS One 18, e0290368 (2023).

Cai, M., Karniadakis, G. E. & Li, C. Fractional SEIR model and data-driven predictions of COVID-19 dynamics of Omicron variant. Chaos 32, 071101 (2022).

Oluwasakin, E. O. & Khaliq, A. Q. M. Data-Driven Deep Learning Neural Networks for Predicting the Number of Individuals Infected by COVID-19 Omicron Variant. Epidemiologia (Basel) 4, 420–453 (2023).

He, M., Tang, S. & Xiao, Y. Combining the dynamic model and deep neural networks to identify the intensity of interventions during COVID-19 pandemic. PLoS Comput. Biol. 19, e1011535 (2023).

Raissi, M., Ramezani, N. & Seshaiyer, P. On parameter estimation approaches for predicting disease transmission through optimization, deep learning and statistical inference methods. Lett. Biomath. 6, 1–26 (2019).

Nguyen, L., Raissi, M. & Seshaiyer, P. Modeling, Analysis and Physics Informed Neural Network approaches for studying the dynamics of COVID-19 involving human-human and human-pathogen interaction. Comput. Math. Biophys. 10, 1–17 (2022).

Grimm, V., Heinlein, A., Klawonn, A., Lanser, M. & Weber, J. Estimating the Time-Dependent Contact Rate of SIR and SEIR Models in Mathematical Epidemiology Using Physics-Informed Neural Networks. Electron. Trans. Numer. Anal. 56, 1–27 (2022).

Jamiluddin, M. S., Mohd, M. H., Ahmad, N. A. & Musa, K. I. Situational analysis for COVID-19: Estimating transmission dynamics in Malaysia using an SIR-type model with neural network approach. Sains Malays. 50, 2469–2478 (2021).

Heldmann, F., Berkhahn, S., Ehrhardt, M. & Klamroth, K. PINN training using biobjective optimization: The trade-off between data loss and residual loss. J. Comput. Phys. 488, 112211 (2023).

Schiassi, E., De Florio, M., D’Ambrosio, A., Mortari, D. & Furfaro, R. Physics-Informed Neural Networks and Functional Interpolation for Data-Driven Parameters Discovery of Epidemiological Compartmental Models. Mathematics 9, 2069 (2021).

Angeli, M., Neofotistos, G., Mattheakis, M. & Kaxiras, E. Modeling the effect of the vaccination campaign on the COVID-19 pandemic. Chaos Solitons Fractals 154, 111621 (2022).

Ning, X., Li, X.-A., Wei, Y. & Chen, F. Euler iteration augmented physics-informed neural networks for time-varying parameter estimation of the epidemic compartmental model. Front. Phys. 10, 1062554 (2022).

He, M., Tang, B., Xiao, Y. & Tang, S. Transmission dynamics informed neural network with application to COVID-19 infections. Comput. Biol. Med. 165, 107431 (2023).

Jung, S. Y., Jo, H., Son, H. & Hwang, H. J. Real-World Implications of a Rapidly Responsive COVID-19 Spread Model with Time-Dependent Parameters via Deep Learning: Model Development and Validation. J. Med. Internet Res. 22, e19907 (2020).