Abstract

Animals often exhibit rapid action changes in context-switching environments. This study hypothesized that, compared to the expected outcome, an unexpected outcome leads to distinctly different action-selection strategies to guide rapid adaptation. We designed behavioral measures differentiating between trial-by-trial dynamics after expected and unexpected events. In various reversal learning data with different rodent species and task complexities, conventional learning models failed to replicate the choice behavior following an unexpected outcome. This discrepancy was resolved by the proposed model with two different decision variables contingent on outcome expectation: the support-stay and conflict-shift bias. Electrophysiological data analyses revealed that striatal neurons encode our model’s key variables. Furthermore, the inactivation of striatal direct and indirect pathways neutralizes the effect of past expected and unexpected outcomes, respectively, on the action-selection strategy following an unexpected outcome. Our study suggests unique roles of the striatum in arbitrating between different action selection strategies for few-shot adaptation.

Introduction

Common reinforcement learning (RL) models explain that animals gradually learn to take specific actions to maximize the reward. This also allows them to adapt to a changing environment. Several neural substrates, including the prefrontal cortex (PFC)1, hippocampus2, and striatum3, have been implicated in adaptive behavior.

Various reversal learning tasks are used to study adaptive behavior4. In a probabilistic reward learning task, subjects learn to associate each action with a specific reward probability determined by the task context, which remains constant for several trials. Then the action-reward probabilities are reversed without explicit cues, necessitating adaptation to a new context.

During an experiment, rational subjects make choices they believe will lead to rewards based on their estimated context. Receiving an actual reward confirms their belief about the current context. However, the absence of an expected reward does not necessarily invalidate their context estimation. This unexpected outcome suggests at least two possibilities. First, the context estimation remains valid, and the lack of reward is simply a noisy event due to environmental uncertainty. In this case, there is no need to change the choice behavior. Second, the context has actually changed, necessitating rapid adaptation through inferring a new context and adjusting associated choices. This thought experiment suggests that unexpected outcomes may initiate complex behavioral dynamics associated with context adaptation, which cannot be adequately explained by conventional learning models. We term this the unexpected event-driven few-shot adaptation hypothesis.

To look for behavioral evidence of few-shot adaptation guided by unexpected events, we designed behavioral dynamics profiles, measures that differentiate between trial-by-trial dynamics after an unexpected event and those after an expected one. Applying these measures to behavioral data collected from rats during the two-step task5 showed that the predictions of various reinforcement learning (RL) models explain choice behavior after an expected event but fail to explain it after an unexpected one. This finding supports our hypothesis that animals often exhibit abrupt and rapid changes in choice behavior depending on whether the last event was expected.

To better understand the complex behavioral dynamics of rapid adaptation guided by expected and unexpected events, we propose a computational model that learns two decision variables, the support-stay bias and the conflict-shift bias. We call it the support-stay, conflict-shift (SSCS) model. The SSCS model replicates the behavioral patterns following both an unexpected and an expected event, qualitatively and quantitatively better than various RL models. The prediction of the SSCS model is also confirmed by the multi-trial history regression analysis, which explains the choice behavior after an unexpected event as a function of past expected and unexpected events, across different forms of reversal tasks (two-step task5, two-armed bandit task6,7, and T-maze task8) and species (rat5,8 and mouse6,7).

Using electrophysiological data from rats8, we found two pools of medium-spiny neurons (MSNs) in the dorsomedial and ventral striatum encoding the trial-by-trial changes of the support-stay and conflict-shift biases. A further analysis of behavioral data from mice under inactivation of D1R- and D2R-expressing MSNs7 revealed that each inactivation substantially alters the effect of past expected and unexpected outcomes on choice behavior after unexpected ones, elucidating the dissociable roles of different MSN types in conveying the associations between past outcomes and the action-selection strategy after unexpected outcomes.

Results

Unexpected event-driven few-shot adaptation hypothesis

In a context-switching environment with binary choices and probabilistic reward (Fig. 1a left), a subject who infers the current task context as T1 (p1 > p2; marked as a red square in the reward probability plot) is likely to choose the action A1 since it leads to the outcome state S1 with a higher reward probability (p1). Suppose a reward is not offered, contrary to its expectation. Although this unexpected outcome can be regarded as a noisy event due to the probabilistic nature of the reward function, it could also indicate the context switching to T2 (p2 > p1; marked as a blue square in the reward probability plot). This cognitive process might urge action A2 at the trial right after, which can lead to highly rapid adaptation. Such contextual behavior patterns contradict the prediction of the conventional RL models that choices are made based on values of decision variables accumulated over several past trials.

a Alternative role of the unexpected event. Left for an example reversal learning task with two contexts and two actions. p1/p2 is the probability of receiving a reward after arriving at the outcome state S1/S2, respectively. Right for the reward probability plot depicting the context reversal. At the task context T1, p1 > p2. On the other hand, in the task context T2, p1 < p2. b, c Key terminology for behavioral measures. b The definition of action support/conflict events. c The two-step task5; Left for the definition of positive/negative actions with the task diagram. Here, the current task context is T1 (p1 > p2). Right for the summary table of 4 event types.

Based on this thought experiment, we hypothesize that the occurrence of an expected and unexpected event triggers a distinctly different action-selection strategy. To examine this, we proposed behavioral measures that can differentiate between trial-by-trial dynamics after an unexpected event and those after an expected event. As a prerequisite for our measures, for each context, we defined an action typically leading to a positive (or negative) outcome as the positive (or negative) action. For example, in the task shown in Fig. 1a, if the current task context is T1, then A1 and A2 are positive and negative actions, respectively. The subject’s action is followed by transitioning to a specific outcome state according to a certain transition probability. The outcome state is associated with a specific reward probability. The event where the actual outcome matches (or mismatches) the subject’s expectation is defined as a support (or conflict) event (Fig. 1b). Here, the subject interprets A1/A2 as a positive/negative action, assuming the current context as T1. If a reward is received after choosing A1, this event is classified as a support event. This is because the actual outcome matches the subject’s expectation that A1 will lead to a reward.

Reframing choice behavior from an event-type perspective

To examine whether animal choice behavior after support/conflict events can be explained by the RL model, we analyzed the rat behavior during the two-step task5 using various RL models (model-free (MF), model-based (MB), and latent-state models). The two-step task is a paradigm commonly used to distinguish between the influences of MF and MB reinforcement learning on animal behavior.

In each trial, the subject chooses between two actions, A1 and A2, associated with the outcome state S1 or S2 (Fig. 1c left). Each action leads to one outcome state with high probability (e.g., A1 leads to S1 with probability 0.8, a “common” transition) and to the other outcome state with low probability (e.g., A1 leads to S2 with probability 0.2, an “uncommon” transition). S1 and S2 have different probabilities of yielding a reward: p1 for S1 and p2 for S2, which are determined by the task context at the current trial. The task context remains constant for several trials before changing unpredictably and without explicit cues, an event called a “reversal.” When a reversal occurs, the values of p1 and p2 are swapped.

Suppose the current task context is T1, where p1 > p2 (Fig. 1c left). Here, A1 is defined as the “positive” action (the 1st column of Table in Fig. 1c) because this action leads to a reward with a higher probability. When a subject chooses a positive action (e.g., “I am making this choice because I think the current context is T1.”), the “positive support” event (S+ of Table in Fig. 1c) refers to the outcome confirming one’s prediction about the context (e.g., “After the common transition, the reward is given exactly as I expected, suggesting that current context is T1”), whereas the “positive conflict” event (C+ of Table in Fig. 1c) is the outcome contradicting one’s prediction (e.g., “After the common transition, the reward was omitted contrary to my expectation, suggesting that the context has switched to T2, where p1 < p2”).

In the same context T1, A2 is defined as the “negative” action (the 2nd column of table in Fig. 1c) as it is less likely to lead to a reward. When the subject chooses a negative action for a certain reason (e.g., “I am making this choice because I suspect the context has switched from T1 to T2.”), the “negative support” event (S− of table in Fig. 1c) refers to the outcome confirming one’s context prediction (e.g., “After the common transition, the reward is given exactly as I expected, reinforcing my prediction of T1 → T2.”), whereas the “negative conflict” event (C− of table in Fig. 1c) means that the outcome is not what one predicted (e.g., “After the common transition, the reward was omitted contrary to my expectation, suggesting the current context is still T1.”).

This terminology enables us to classify all possible action-state transitions of the two-step task (table in Fig. 1c). The episodes of (1) being rewarded after a common transition (Common-Rewarded) and (2) reward omission after an uncommon transition (Uncommon-Omission) can be classified as support events (the 1st row of table in Fig. 1c). Likewise, the episodes of (1) reward omission after a common transition (Common-Omission) and (2) being rewarded after an uncommon transition (Uncommon-Rewarded) are classified as conflict events (the 2nd row of table in Fig. 1c).
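The classification in the table of Fig. 1c can be written compactly. The following Python sketch is illustrative only: the function name and its boolean arguments are hypothetical labels introduced here, not part of the original analysis code. It assumes that the positive/negative status of the chosen action has already been determined from the current task context.

```python
def classify_event(chose_positive: bool, common: bool, rewarded: bool) -> str:
    """Label one two-step trial as 'S+', 'C+', 'S-' or 'C-'.

    Support: Common-Rewarded or Uncommon-Omission.
    Conflict: Common-Omission or Uncommon-Rewarded.
    """
    kind = "S" if common == rewarded else "C"
    sign = "+" if chose_positive else "-"
    return kind + sign


# Positive action, common transition, reward omitted: a positive conflict event.
print(classify_event(chose_positive=True, common=True, rewarded=False))
```

The single comparison `common == rewarded` covers all four rows of the table, because a support event is exactly the case where the transition type and the reward outcome agree.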

RL models fail to predict animals’ choice behavior after an unexpected event

To evaluate how well the animal’s actual behavior agrees with the predictions of RL theory, an MB model was fitted to the rat’s behavioral data, since it was shown to be the most dominant behavioral component in this task following the analyses in ref. 5. After each trial, the model first updates the outcome value V using the Rescorla-Wagner (RW) learning rule:

\[V\leftarrow V+\alpha (r-V)\]

where r is a binary variable indicating reward delivery, and α is a learning rate. The RW learning rule9, derived from the RW model10, has been widely utilized to estimate the action values for the MF RL8,11,12,13,14,15,16 and the state values for the MB RL17,18,19.

The MB model computes the action value by multiplying the transition matrix with the outcome value. This implies that the event types (S+ or C+) affect the outcome value updates, which subsequently affects the relative action value of the positive action, defined as a positive action value minus a negative action value (relative action value hereinafter)20.

Specifically, the S+ event leads to an increase in the relative action value, whereas the C+ event decreases it. For instance, the model chooses a positive action A1 in the current task context T1 and receives the reward after a common transition (S+ event). The model increases the outcome value V of the outcome state S1 by α(1 − V), resulting in an increase in the action value of A1 that commonly leads to S1. Consequently, the relative action value increases. Likewise, when the reward was omitted after an uncommon transition (S+ event), the outcome value V of the outcome state S2 decreases to V + α(0 − V). This decreases the action value of the negative action A2 that commonly leads to S2. As a result, the relative action value increases.
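The two S+ cases and the contrasting C+ case can be checked numerically. The sketch below is a minimal illustration, not the fitted model: the learning rate and the initial outcome values are assumed for the example, and the function names are hypothetical. Only the 0.8/0.2 transition probabilities come from the task description.

```python
# Assumed for illustration only: learning rate and starting outcome values.
ALPHA = 0.3
TRANSITION = [[0.8, 0.2],   # P(S1 | A1), P(S2 | A1)
              [0.2, 0.8]]   # P(S1 | A2), P(S2 | A2)


def rw_update(v, r, alpha=ALPHA):
    """Rescorla-Wagner rule: move V toward the binary reward r."""
    return v + alpha * (r - v)


def action_values(outcome_values):
    """MB action values: transition matrix times outcome values."""
    return [sum(p * v for p, v in zip(row, outcome_values)) for row in TRANSITION]


def relative_value(outcome_values):
    """Value of the positive action A1 minus the negative action A2 (context T1)."""
    q = action_values(outcome_values)
    return q[0] - q[1]


start = [0.5, 0.5]                                        # V(S1), V(S2)
common_rewarded = [rw_update(start[0], 1), start[1]]      # A1 -> S1, reward (S+)
uncommon_omission = [start[0], rw_update(start[1], 0)]    # A1 -> S2, no reward (S+)
common_omission = [rw_update(start[0], 0), start[1]]      # A1 -> S1, no reward (C+)

for name, v in [("S+ common-rewarded", common_rewarded),
                ("S+ uncommon-omission", uncommon_omission),
                ("C+ common-omission", common_omission)]:
    print(name, relative_value(v) - relative_value(start))
```

Under these assumed values, both S+ cases raise the relative action value and the C+ case lowers it, which is the qualitative behavior described above.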

First, to examine the dynamics behind rats’ choices when they experienced S+ events consistently, we used the choice inconsistency (CI; Fig. 2a), defined as the probability of choosing the negative action (at trial t, marked as a blue rectangle) as a function of the number of S+ repetitions (NS), when the subject experienced S+ events NS times until the last trial (from trial t − NS to t − 1, marked as a blue bold line on the trial axis). The CI quantifies how often rats make a choice that contradicts the supporting evidence provided by preceding trials (prior S+ events), even when the context remains unchanged.
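As a rough sketch of how such a profile could be computed, the Python function below estimates CI(NS) from a sequence of per-trial event labels. It rests on assumptions that the text does not settle: events are coded as the strings 'S+', 'C+', 'S-' and 'C-', the NS trials before the probe trial must all be S+ while earlier trials are unconstrained, and context reversals are not handled. The function and argument names are hypothetical.

```python
def choice_inconsistency(events, chose_negative, max_ns=5):
    """Estimate CI(NS): P(negative action at trial t | trials t-NS..t-1 were all S+).

    events[t]         -- event label of trial t ('S+', 'C+', 'S-' or 'C-')
    chose_negative[t] -- True if the negative action was chosen at trial t
    Returns a dict {NS: probability} for every NS with at least one probe trial.
    """
    ci = {}
    for ns in range(1, max_ns + 1):
        probes = [chose_negative[t]
                  for t in range(ns, len(events))
                  if all(e == "S+" for e in events[t - ns:t])]
        if probes:
            ci[ns] = sum(probes) / len(probes)
    return ci


# Toy sequence, invented for illustration.
events = ["S+", "S+", "C+", "S+", "S-"]
chose_negative = [False, False, False, False, True]
print(choice_inconsistency(events, chose_negative))
```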

a–c Behavioral dynamics profiles. Left for the conceptual diagram of the individual profile, middle for the result from the fitted model-based RL model behavior, and right for the result from rat behavior. In the conceptual diagram, each event is depicted as a rectangle above the trial axis, indicating when it happened (trial index). S+ and C+ events are marked in gray and white, respectively. Each profile consists of two components: (1) the event sequence leading up to the last t − 1th trial, represented by a bold line on the trial axis, and (2) the probe trial at the current tth trial, where the probability of choosing the negative action is assessed; a Choice inconsistency (CI). b Effect of conflict event (CE). c Conditional effect of conflict event (Conditional CE). Decreases in behavioral dynamics profiles and their finite differences were assessed using paired two-sample permutation tests. The difference between CE-C and CE-S was examined using a linear mixed-effects model, with the number of S+ repetitions (NS) and type (CE-C or CE-S) as fixed factors and subject as a random effect. The main effects were tested using paired two-sample permutation tests. d Multi-trial history regression analysis; Left for the regression weights estimated from the fitted model-based RL model behavior, and right for the regression weights estimated from rat behavior. Regression weights were tested against zero using paired two-sample permutation tests. e Support-stay index (SSI); Left for conceptual diagram, and right for SSI computed from the fitted model-based RL model behavior and rat’s behavior. f Conflict-shift index (CSI); Left for conceptual diagram, and right for CSI computed from the fitted model-based RL model behavior and rat’s behavior. SSI and CSI were tested against zero using paired two-sample permutation tests. All statistical tests were two-sided and corrected for multiple comparisons using the Benjamini–Yekutieli procedure. 
All panels show data from n = 21 rats. Error bars indicate mean ± s.e.m. ***P < 0.001. See Supplementary Table 2 for full statistical information. Source data are provided as a Source Data file.

The MB model predicts that CI(NS) will decrease as NS increases. Experiencing S+ events consecutively increases the relative action value, reducing the probability of choosing the negative action (CI). Further, the MB model estimates that its finite difference, CI(NS) − CI(NS − 1), will also decrease as NS increases. The above theoretical predictions of the MB model are confirmed by the CI profile measured from the simulated behavior of the fitted MB model (Fig. 2a, middle). Both the CI and its finite difference continually decrease significantly until NS = 5.

These trends are also observed in the rats’ profile (Fig. 2a, right) until NS = 2. The lack of a significant decrease in other NS can be attributed to the limited number of trials, both in the model’s simulated behavior and the animal’s actual behavior. These results imply that the RW learning rule can reliably describe the rats’ action selection after they have experienced a sequence consisting solely of S+ events.

Next, we introduced a behavioral profile called the effect of conflict event (CE) to examine the dynamics behind rats’ action-selection strategy when a C+ event takes place following multiple S+ events (Fig. 2b). The CE quantifies how often rats perceive a noisy event (C+ event), which is caused by a probabilistic transition or reward delivery, as evidence of context switching, even though the context does not change. It is defined as the probability of choosing the negative action (at trial t, marked as an orange rectangle) immediately after the C+ event (at trial t − 1, marked as red on the trial axis) as a function of NS, where NS(≥0) is the number of successive S+ events (from trial t − NS − 1 to t − 2, marked as an orange bold line on the trial axis) preceding the C+ event. NS = 0 means no S+ event occurred immediately before the C+ event at trial t − 1.

The MB model predicts that, similar to CI, both the CE and its finite difference will decrease as NS increases. These predictions are confirmed by the CE profile measured from the simulated behavior of the fitted MB model (Fig. 2b, middle). The CE and its finite difference decrease significantly until NS = 3. These trends are also observed in the rats’ profile (Fig. 2b, right) until NS = 2.

The action-selection strategy after the conflict event can be understood by analyzing its interaction with events in multiple past trials, rather than the most recent trial only. For this, we defined a more detailed behavioral profile based on CE, called conditional effect of conflict event (Conditional CE; Fig. 2c). The conditional CE is divided into two cases, depending on whether the event sequence of CE (Fig. 2b, marked as an orange bold line on the trial axis) is preceded by a C+ event (CE-C; Fig. 2c left top) or an S+ event (CE-S; Fig. 2c left bottom) at trial t − NS − 2 (marked as red on the trial axis), where NS is the number of successive S+ events (from trial t − NS − 1 to t − 2, marked as a bold line on the trial axis) preceding the C+ event (at trial t − 1).
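The CE and its two conditional variants differ only in what is required of trial t − NS − 2, so a single function with an optional argument can sketch all three. As with any such illustration, the details are assumptions: events are coded as strings ('S+', 'C+', 'S-', 'C-'), context reversals are ignored, and the function and argument names are hypothetical.

```python
def conflict_effect(events, chose_negative, ns, preceded_by=None):
    """P(negative action at t | C+ at t-1, S+ at trials t-NS-1..t-2).

    preceded_by=None  -> CE
    preceded_by='C+'  -> CE-C (trial t-NS-2 was a C+ event)
    preceded_by='S+'  -> CE-S (trial t-NS-2 was an S+ event)
    Returns None when no trial matches the pattern.
    """
    probes = []
    for t in range(ns + 1, len(events)):
        if events[t - 1] != "C+":
            continue
        if any(e != "S+" for e in events[t - 1 - ns:t - 1]):
            continue
        if preceded_by is not None:
            k = t - ns - 2
            if k < 0 or events[k] != preceded_by:
                continue
        probes.append(chose_negative[t])
    return sum(probes) / len(probes) if probes else None


# Toy sequence, invented for illustration.
events = ["C+", "S+", "C+", "S-", "S+", "S+", "C+", "S+"]
chose_negative = [False, False, False, True, False, False, False, False]
ce_c = conflict_effect(events, chose_negative, ns=1, preceded_by="C+")
ce_s = conflict_effect(events, chose_negative, ns=1, preceded_by="S+")
print(ce_c - ce_s)  # the difference between CE-C and CE-S at NS = 1
```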

The MB model predicts the following about conditional CE: for the same NS, CE-C will be higher than CE-S. The event sequence of CE-S (Fig. 2c left bottom, marked as an orange bold line on the trial axis) contains one more S+ event than the event sequence of CE-C (Fig. 2c left top, marked as a yellow bold line on the trial axis). This additional S+ event further increases the relative action value. Consequently, the relative action value after experiencing the event sequence of CE-S will be larger than that after CE-C. Thus, the probability of choosing the negative action in the former case (CE-S) will be lower than in the latter case (CE-C).

Furthermore, as NS increases, the difference between CE-C and CE-S (ΔCE) will shrink. The more successive S+ events that occur, the greater the accumulated increase in the relative action value. Against this cumulative growth of the relative action value, the effect of the different initial events (C+ or S+ event, marked as red on the trial axis) on the choice probability becomes increasingly negligible as NS increases (Fig. 2c middle).

The above theoretical predictions of the MB model are confirmed by the conditional CE profiles measured from the simulated behavior of the fitted MB model (Fig. 2c middle). ΔCE is significantly positive and shows a decreasing trend at any NS.

These two predictions made by the MB model are in sharp contrast with the rat data (Fig. 2c right). First, the rats’ CE-C was not significantly higher than their CE-S at any NS. Second, ΔCE decreases significantly only when NS changes from 0 to 1. Specifically, ΔCE is not significantly different from 0 at NS = 0, but becomes significantly negative at NS = 1. To rule out potential biases, we balanced CE-S with CE-C by excluding cases where the event sequence for CE-C is preceded by the S+ event. Despite this adjustment, the observed discrepancies between the MB model and rats persisted (Supplementary Fig. 1).

Event-type-specific behavioral dynamics underlying few-shot adaptation

Such behavioral patterns show a defining characteristic of rats’ few-shot adaptation: unexpected event-guided confirmation bias. At trial t − NS − 2 (Fig. 2c left, marked as red on the trial axis), rats become more affected by the S+ event following their decision to stay with the same action after a C+ event (affirming their belief despite the noisy event), compared to when they decided to simply stay after an S+ event. This effect lasts until rats experience the C+ event again, leading to CE-C ≤ CE-S at NS ≥ 1.

The following example scenario can capture this explanation: At trial t − NS − 2 (Fig. 2c left, marked as red on the trial axis), rats may still choose the same action after experiencing a C+ event that signals a potential context reversal. If it is followed by an S+ event, rats interpret this sequence of events as evidence that the context does not change. Thereafter, the unexpected event-guided confirmation bias weakens the association between the conflict event and action switch. Rats are less likely to interpret a following C+ event as a sign of the context switch. Instead, they attribute it to the inherent randomness in reward delivery and transition, decreasing the probability of switching to negative action in response to future C+ events. This collectively suggests that the action-selection strategy after the conflict event should be described by not only the effect of the latest trial but also the interaction effect between the latest and past trials.

We also investigated the MF model alongside the MB model by analyzing how different conflict events influence the action-selection strategy, finding that the MF model fails to replicate animal behavior after the conflict event (Supplementary Fig. 2).

To further examine the behavioral dynamics underlying action-selection strategy after a conflict event (Fig. 2b, c) in a more generalized setting that accommodates both positive and negative actions, we employed a multi-trial history regression analysis based on ref. 5. We used a logistic regression model that approximates the action-selection strategy after the conflict event, the conditional probability of choosing the positive action (a+) given that the subject experienced the conflict (C) event in the previous trial (P(at = a+∣et−1 ∈ C)). The regression model represents this action-selection strategy as a parametric function of recent trials and their event types (Fig. 2d top and Supplementary Fig. 3).

Trials from the past are indexed by the variable τ. An event that occurred τ trials ago can be one of two types: support (S) and conflict (C) event. For each τ, each of these trial types is assigned a corresponding weight (βS(τ) and βC(τ), respectively). A positive weight indicates a greater likelihood that the subject will make the same choice. For example, βS(2) > 0 indicates that the subject is more likely to choose the same action that was made two trials ago, in which the support event occurred. Conversely, a negative weight indicates a higher likelihood that the subject will make the opposite choice to the one made τ trials ago. Note that there is no βS(1) term in the logistic regression model (Fig. 2d top), as the model focuses exclusively on the effect of past events on the action-selection strategy when a conflict event, rather than a support event, occurred one trial ago.
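One way to picture the regressors is to build them for a single probe trial. The sketch below is an assumption-laden illustration, since the exact regression formula appears only in Fig. 2d: each predictor is coded as +1 if the positive action was chosen τ trials ago and −1 if the negative action was chosen, and is set to 0 when the event τ trials ago was not of the corresponding type. With the positive action as the regression target, this coding makes a positive weight mean "repeat the same choice". The function name, the column labels, and the maximum lag are hypothetical.

```python
def history_design_row(events, t, max_lag=5):
    """Predictors for probe trial t, or None if trial t-1 was not a conflict event.

    events[k] is a two-character label such as 'S+' or 'C-':
    event type (S/C) followed by the chosen action (+/-).
    """
    if t < max_lag or events[t - 1][0] != "C":
        return None
    row = {}
    for tau in range(1, max_lag + 1):
        kind, sign = events[t - tau]          # e.g. 'C+' -> ('C', '+')
        action = 1 if sign == "+" else -1
        if tau > 1:                           # no beta_S(1) term: trial t-1 is a conflict event
            row[f"S{tau}"] = action if kind == "S" else 0
        row[f"C{tau}"] = action if kind == "C" else 0
    return row


# Toy history for trials 0..4; the probe trial is t = 5.
print(history_design_row(["S+", "C-", "S+", "S-", "C+"], t=5))
```

Rows built this way, paired with a binary target indicating whether the positive action was chosen at trial t, could then be passed to any standard logistic regression routine to estimate βS(τ) and βC(τ).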

In terms of βS(τ), the MB model predicts that it will be positive at any τ. After experiencing a support event, the MB model increases the relative action value of the corresponding action, which increases the probability of choosing the same action in the future. These predictions are confirmed by the βS computed from the simulated behavior of the fitted MB model (Fig. 2d, bottom left, blue curve). These trends are also observed in the rats’ profile (Fig. 2d, bottom right, blue curve) until τ = 4. To quantify the general effect of past support events (τ > 1) on choosing the same action, we defined the support-stay index (SSI) as the average of regression weights βS(τ > 1) (Fig. 2e left). We confirmed that SSIs computed from both the MB model and the rat are significantly positive (Fig. 2e right). The MB model also predicts that βC(τ) will be negative at any τ, which is confirmed by the βC measured from the simulated behavior of the fitted MB model (Fig. 2d, bottom left, orange curve). This prediction, however, does not match the rat data (Fig. 2d, bottom right, orange curve), which show significantly negative βC only at τ = 1 and positive βC otherwise. This result implies that the effect of past conflict events on the action-selection strategy after the conflict event depends on when the past event occurred. A conflict event 1 trial ago after choosing one action leads rats to switch to the alternative action (βC(τ = 1) < 0). In contrast, a conflict event more than 1 trial ago leads them to repeat the same action (βC(τ > 1) > 0). Note that the MB model does not accommodate this effect. The effects of past conflict events are also consistent with our interpretation of the discrepancies observed in the conditional CE, CE-C ≤ CE-S at NS ≥ 1.

To quantify the general effect of past conflict events (τ > 1) on choosing the alternative action, we defined the conflict-shift index (CSI) as the average of regression weights βC(τ > 1) multiplied by −1 (Fig. 2f left). The CSI computed from the MB model is significantly positive, whereas the CSI of the rat is significantly negative (Fig. 2f right). This result corroborates our finding that the past conflict events (τ > 1) guide rats to stay on this action, which cannot be explained by the MB model.
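Both indices are simple averages over the lagged regression weights, as the short sketch below shows. The weights in the example are made up purely to illustrate the sign conventions and are not estimates from any dataset; the function names are hypothetical.

```python
def support_stay_index(beta_s):
    """SSI: mean of beta_S(tau) over tau > 1. beta_s maps lag tau -> weight."""
    weights = [b for tau, b in beta_s.items() if tau > 1]
    return sum(weights) / len(weights)


def conflict_shift_index(beta_c):
    """CSI: mean of beta_C(tau) over tau > 1, multiplied by -1."""
    weights = [b for tau, b in beta_c.items() if tau > 1]
    return -sum(weights) / len(weights)


# Invented weights: beta_C is negative at tau = 1 and positive at longer lags,
# so the CSI comes out negative (past conflict events favor staying).
beta_s = {2: 0.5, 3: 0.3}
beta_c = {1: -1.0, 2: 0.2, 3: 0.4}
print(support_stay_index(beta_s), conflict_shift_index(beta_c))
```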

Taken together, we found behavioral evidence that support and conflict events guide animal action-selection strategies differently, contradicting the predictions of conventional RL models. This motivates us to design a dual-process model to accommodate support and conflict events in a distinctly different manner (Fig. 3a).

a The mechanisms of the support-stay, conflict-shift (SSCS) model. After each trial, the model performs (1) conflict bias update, (2) event-type-dependent action selection, (3) support bias update, and (4) setting a flag for the conflict bias update in the next trial. Each variable’s superscript indicates the trial index, e.g. et represents the event type (support or conflict event) the model experienced at the tth trial. ft represents the flag at the tth trial. b The rat’s choice behavior after the reversal and representations of the SSCS model; Left for the choice behavior after reversal of rat (Black) and the SSCS model (Gray). The x-intercept and time constant of the exponential curve fitted to rat behavior and SSCS model behavior were compared using paired two-sample permutation tests and Spearman correlation analysis. Right for the modulation of decision variables, the support bias difference \({b}_{{{\rm{S}}}}^{+}-{b}_{{{\rm{S}}}}^{-}\) (Top) and the conflict bias difference \({b}_{{{\rm{C}}}}^{+}-{b}_{{{\rm{C}}}}^{-}\) (Bottom). The positive and negative actions are determined by the context after the reversal. The dotted lines indicate the trial when the context reversal occurs, the trial when the quantity of interest exceeds 0, and the time constant of the exponential curve fitted to the quantity of interest after the context reversal from the left. b shows data from n = 21 rats. All statistical tests were two-sided. Error bars indicate mean ± s.e.m. See Supplementary Table 2 for full statistical information. Source data are provided as a Source Data file. SSCS, support-stay, conflict-shift model.

Computational model for event-type-dependent action-selection strategy

We designed a support-stay, conflict-shift (SSCS) model to understand how support and conflict events guide action-selection strategy differently. In the SSCS model, each action is associated with two decision variables: support-stay bias (support bias hereinafter) and conflict-shift bias (conflict bias hereinafter). The first and the second variables accommodate the patterns of CI (Fig. 2a) and conditional CE/βC (Fig. 2c, d), respectively.

The support bias is defined as the likelihood of taking the same action following a support event, encoding the association between the support event and the chosen action. The conflict bias is defined as the likelihood of switching to the other action following a conflict event, encoding the association between the conflict event and the chosen action.

In each trial, the model updates the conflict bias (gC: \({b}_{{{\rm{C}}}}^{t}\to {b}_{{{\rm{C}}}}^{t+1}\), Process 1 in Fig. 3a), based on the support/conflict event (et) and the flag (ft). The flag is a simple gating function to determine whether the model repeats the same action despite a previous conflict event. Note that this update reflects behavioral patterns of conditional CE (Fig. 2c) and the effect of past conflict events (βC of Fig. 2d). The model then chooses the next action (at+1, Process 2 in Fig. 3a) based on the support bias (\({b}_{{{\rm{S}}}}^{t}\)) or the conflict bias (\({g}_{{{\rm{C}}}}({b}_{{{\rm{C}}}}^{t})\)) following a support or conflict event, respectively. The model then updates the support biases (gS: \({b}_{{{\rm{S}}}}^{t}\to {b}_{{{\rm{S}}}}^{t+1}\), Process 3 in Fig. 3a), specifically increasing the support bias of the chosen action (at+1) and decreasing that of the alternative action. Finally, a flag (ft+1, Process 4 in Fig. 3a) is set to indicate whether the model repeats the same action in the next trial despite a conflict event. The flag serves as a mental note to bet on the possibility that there was no context change.
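The order of the four processes can be made concrete with a schematic step function. This is only a sketch of the control flow: the functional forms of gS and gC are not given in this section, so the additive updates, the sigmoid choice rule, the step size, and all names below are placeholders assumed for illustration, not the model's actual equations.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sscs_step(state, action, event, rng, lr=0.1):
    """One pass through Processes 1-4. `event` is 'S' or 'C'; actions are 0 or 1.

    state holds per-action support biases 'b_s', conflict biases 'b_c',
    and the boolean 'flag'. All update rules here are placeholders.
    """
    b_s, b_c = state["b_s"], state["b_c"]
    # (1) Conflict bias update, gated by the flag (placeholder rule): staying
    #     despite a conflict and then seeing support weakens conflict-shift.
    if state["flag"]:
        b_c[action] += -lr if event == "S" else lr
    # (2) Event-type-dependent action selection.
    if event == "S":
        stay = rng.random() < sigmoid(b_s[action])     # stay driven by support bias
    else:
        stay = rng.random() >= sigmoid(b_c[action])    # shift driven by conflict bias
    next_action = action if stay else 1 - action
    # (3) Support bias update: raise the chosen action, lower the alternative.
    b_s[next_action] += lr
    b_s[1 - next_action] -= lr
    # (4) Flag: did the model repeat its action despite a conflict event?
    state["flag"] = (event == "C") and stay
    return next_action


state = {"b_s": [0.0, 0.0], "b_c": [0.0, 0.0], "flag": False}
rng = random.Random(0)
a = sscs_step(state, action=0, event="C", rng=rng)
a = sscs_step(state, action=a, event="S", rng=rng)
```

Keeping the flag in the state, rather than recomputing it from the history, mirrors its description as a mental note carried from one trial to the next.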

To investigate how the SSCS model represents animal behavior, we first measured the rat’s choice behavior during the trials around the context reversal and compared it with that of the simulated behavior of the SSCS model. After the context reversal, rats rapidly adapt to a new context. After 3 trials, the choice probability of the positive action P(+) is significantly higher than that of the negative action P(−). The exponential curve fitted to their difference (choice bias), P(+) − P(−), converges to the upper asymptote with the average time constant of 5 trials (Fig. 3b left black line). The SSCS model accurately describes this adaptation (Fig. 3b left gray line). Neither the x-intercepts nor the time constants differed significantly between rat behavior and the SSCS model, and both showed a significant positive correlation between the two.

Among the two decision variables, the support bias was the one that showed a faster response to the context reversal. After 4 trials, the support bias of the positive action \({b}_{{{\rm{S}}}}^{+}\) becomes significantly higher than the support bias of the negative action \({b}_{{{\rm{S}}}}^{-}\), and their difference \({b}_{{{\rm{S}}}}^{+}-{b}_{{{\rm{S}}}}^{-}\) converges to the upper asymptote with the average time constant of 6 trials (Fig. 3b right top).

On the other hand, the conflict bias exhibited slower dynamics. After 8 trials, the conflict bias of the positive action \({b}_{{{\rm{C}}}}^{+}\) becomes significantly lower than the conflict bias of the negative action \({b}_{{{\rm{C}}}}^{-}\), and their difference \({b}_{{{\rm{C}}}}^{+}-{b}_{{{\rm{C}}}}^{-}\) converges to the lower asymptote with the average time constant of 12 trials (Fig. 3b right bottom).

The SSCS model explains rats’ few-shot adaptation

The SSCS model was compared against six other models: (1) the model-free RL (MF) model21, (2) the model-based RL (MB) model22, (3) the latent-state (LT) model23, (4) the meta-learning (MTL) model24, (5) the asymmetric Bayesian learning (ABL) model25, and (6) the reduced (RD) model5.

The MF model selects the next action based on the action value updated following the RW learning rule. The MB model is based on the MF model, except that its action value prediction incorporates the model of the environment. The LT model selects the next action based on the most probable task context, while its probability is inferred by the Bayesian update rule. The MTL model builds upon the MB model but modulates RPE magnitude and negative outcome learning rate based on expected and unexpected uncertainty. The ABL model is extended from the LT model by assuming that the task context inference is asymmetrically influenced by the receipt and omission of rewards. The RD model adopts a mixture-of-agents approach, where the action value is calculated by a weighted average of several different ‘agents’ implementing different behavioral strategies, including model-based planning, novelty preference, bias, and perseveration.
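As a concrete illustration of the simplest baseline, the RW (Rescorla-Wagner) rule moves the value of the chosen action toward the received reward by a fraction given by the learning rate. The sketch below pairs it with a softmax choice rule; the softmax policy, the parameter values, and the function names are assumptions for illustration and may differ from the fitted MF model.

```python
import math

def rw_update(q, action, reward, alpha):
    """Rescorla-Wagner update: move the chosen action's value toward the reward."""
    q = list(q)
    q[action] += alpha * (reward - q[action])
    return q

def softmax_policy(q, beta):
    """Choice probabilities from action values with inverse temperature beta."""
    m = max(q)  # subtract the maximum for numerical stability
    w = [math.exp(beta * (v - m)) for v in q]
    z = sum(w)
    return [x / z for x in w]

# Illustrative (action, reward) sequence: two rewarded choices of action 0,
# one omission, then a rewarded choice of action 1.
q = [0.0, 0.0]
for action, reward in [(0, 1), (0, 1), (0, 0), (1, 1)]:
    q = rw_update(q, action, reward, alpha=0.5)
probs = softmax_policy(q, beta=3.0)
```

Because each outcome changes the value only incrementally, a single omission after repeated rewards lowers the chosen action's value without reversing the preference unless the learning rate is large.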

Baseline models were chosen to ensure a comprehensive and fair comparison by incorporating a broad spectrum of computational perspectives. First, we grounded our selection in empirical evidence, choosing models that have been recognized as the “best” in previous studies for specific tasks analyzed in our work. In the two-step task, we adopted the reduced model as detailed in ref. 5. Next, considering that the two-step task incorporates the transition uncertainty between the chosen action and the arrived outcome state, we considered different action-selection strategies used in popular value-based decision-making tasks. The models include model-free21, model-based22, Bayesian ideal observer23,25, meta-learning24, and mixture-of-agents approaches5.

Each model was fitted to the behavioral data of each animal individually. The normalized BIC score and the normalized cross-validation likelihood were used to compare the different models’ predictability. We confirmed that all models showed significantly higher scores than the chance level in both measures. The RD and SSCS models showed the highest scores, followed by the ABL, MTL, MB, LT, and MF models (Fig. 4a top for normalized cross-validation likelihood, Supplementary Fig. 4 for normalized BIC score).
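For orientation, the two kinds of score can be computed from the per-trial probabilities that a fitted model assigns to the observed choices. The sketch below uses the standard BIC definition; the normalization shown (the geometric-mean likelihood per trial, for which chance is 0.5 in a two-choice task) is a common convention and an assumption here, since the exact normalization used in this study is not restated in this section. All names and numbers are illustrative.

```python
import math

def log_likelihood(choice_probs):
    """Sum of log probabilities the model assigned to the observed choices."""
    return sum(math.log(p) for p in choice_probs)

def bic(log_lik, n_params, n_trials):
    """Bayesian information criterion: lower values indicate a better trade-off
    between fit and model complexity."""
    return n_params * math.log(n_trials) - 2.0 * log_lik

def normalized_likelihood(log_lik, n_trials):
    """Geometric-mean likelihood per trial (assumed normalization)."""
    return math.exp(log_lik / n_trials)

# Made-up probabilities assigned to four observed choices.
probs = [0.8, 0.7, 0.9, 0.6]
ll = log_likelihood(probs)
score_bic = bic(ll, n_params=2, n_trials=len(probs))
score_norm = normalized_likelihood(ll, n_trials=len(probs))

# A model that always predicts 0.5 sits exactly at the chance level.
chance = normalized_likelihood(log_likelihood([0.5] * 10), 10)
```

For cross-validation, the same normalized likelihood would be computed on held-out trials using parameters fitted to the remaining trials.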

a Model predictability comparison using normalized cross-validation likelihood and time constant of the choice bias. In the normalized cross-validation likelihood, a white bar indicates a significantly lower value than the highest model (P < 0.05). In the time constant, a white bar indicates a significantly different value than the rat’s time constant (P < 0.05). b Comparison of behavioral dynamics profiles between animal and top 2 models. Filled dots indicate significantly different model predictions from rat behavior (P < 0.05), while blank dots indicate insignificant differences (P > 0.05). c–f Model explainability comparison in multi-trial history regression analysis by measuring similarity measures computed from rat behavior and simulated behavior of the fitted model. c βS. βS of the RD model, βS of the SSCS model, and the comparison of cosine similarity across baseline models. d Model explainability comparison by measuring the RMSE and Spearman correlation coefficient between SSI computed from rat’s behavior and simulated behavior of the fitted model. e βC. βC of the RD model, βC of the SSCS model, and the comparison of cosine similarity across baseline models. f Model explainability comparison by measuring the RMSE and Spearman correlation coefficient between CSI computed from rat’s behavior and simulated behavior of the fitted model. In RMSE, a white bar indicates significantly higher values than the lowest model (P < 0.05). In cosine similarity and Spearman correlation coefficient, a white bar indicates significantly lower values than the highest model (P < 0.05). Model comparisons were performed using paired two-sample permutation tests and Dunn and Clark’s Z tests. All statistical tests were two-sided and corrected for multiple comparisons using the Benjamini–Yekutieli procedure. All panels show data from n = 21 rats. Error bars indicate mean ± s.e.m., while for the Spearman correlation coefficient, they represent mean ± bootstrap standard error. 
See Supplementary Table 2 for full statistical information. Source data are provided as a Source Data file. MF, model-free RL model21; MB, model-based RL model22; LT, latent-state model23; MTL, meta-learning model24; ABL, asymmetric Bayesian learning model25; RD, reduced model5; SSCS, support-stay, conflict-shift model.
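The Benjamini–Yekutieli correction named in the caption above controls the false discovery rate under arbitrary dependence between tests. A minimal sketch of the adjusted P value computation follows; the function name and the example P values are illustrative and are not taken from the study.

```python
def benjamini_yekutieli(p_values):
    """Return Benjamini-Yekutieli adjusted P values in the original order."""
    m = len(p_values)
    c_m = sum(1.0 / i for i in range(1, m + 1))  # harmonic correction factor
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest P value down, enforcing monotonicity and capping at 1.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m * c_m / rank)
        adjusted[idx] = running_min
    return adjusted

adjusted = benjamini_yekutieli([0.01, 0.04, 0.03])
```

A test is then declared significant when its adjusted P value falls below the chosen threshold (0.05 in the captions here).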

To measure the adaptation speed of the animal or the fitted model, we computed the choice bias (Fig. 3b left) and fitted an exponential curve to it. Comparing the time constants of the animals and the fitted models (Fig. 4a bottom) showed that the models following the MB strategy (MB, LT, and RD models) exhibited significantly smaller time constants than the rats, in contrast to the other types of RL models (ABL, MF, and MTL models).

This implies that conventional models capable of adaptation predict unrealistically fast adaptation compared to animal behavior. Notably, our SSCS model has nearly the same time constant as that of the animal behavior. This result underscores the capability of the SSCS model to reflect the temporal dynamics of behavioral adaptation observed in animals.

We also ran behavioral recoverability tests, in which we computed behavioral dynamics profiles (CI, CE, and conditional CE) from the simulated behavior of the fitted models and compared them with those of rats. Our model showed the most similar behavioral dynamics profiles to rats (Supplementary Figs. 5 and 6). Specifically, the SSCS model exhibited a quantitatively better prediction than the RD model, especially in CI and ΔCE profiles (Fig. 4b). The results suggest that the two key variables of our model serve to predict rapid context-switching behavior, above and beyond the predictions made by previously known variables of the RD model, including novelty preference, bias, and perseveration.

Next, we conducted behavioral recoverability tests, in which we computed βS and βC from the simulated behavior of fitted models and compared them with those of rats. We found that various models replicate βS with similar accuracy (Fig. 4c, and Supplementary Fig. 7a). By and large, the SSCS model and various baseline models exhibit similar explainability of SSI (Fig. 4d and Supplementary Fig. 7c). On the other hand, the SSCS model replicates βC (Fig. 4e, and Supplementary Fig. 7b) most accurately. The direct comparison of CSI between rats and the fitted models showed that the SSCS model explains CSI most accurately (Fig. 4f and Supplementary Fig. 7d).
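The three similarity measures used in these comparisons (RMSE, cosine similarity, and the Spearman correlation coefficient) can be sketched as follows. The regression-weight vectors in the example are made-up numbers for illustration only, and the helper names are not from the study.

```python
import math

def rmse(x, y):
    """Root-mean-square error between two equal-length sequences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))

def cosine_similarity(x, y):
    """Cosine of the angle between two vectors (1 means identical direction)."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y)

def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the rank-transformed data."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)

# Made-up regression weights over four past trials (animal vs. fitted model).
animal_beta = [0.9, 0.5, 0.3, 0.1]
model_beta = [0.8, 0.6, 0.2, 0.1]
error = rmse(animal_beta, model_beta)
cosine = cosine_similarity(animal_beta, model_beta)
rho = spearman(animal_beta, model_beta)
```

Cosine similarity compares the shape of the weight profile across past trials, whereas RMSE is also sensitive to overall magnitude; Spearman correlation captures only rank agreement.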

In addition to these cognitive models, we considered two types of biologically plausible neural networks implementing the PFC-basal ganglia function25,26. In both models, PFC recurrent networks learn to infer the hidden task context by predicting an upcoming state, while basal ganglia rectified linear units learn the corresponding value and appropriate action using the RL mechanism. In one network model (NN model with direct input), the PFC network received full information, the preceding state, and action26. On the other hand, the PFC network of another model (NN model with gated input) received only the information about the preceding state, gated by whether the reward was given25.

After training these models to maximize their reward, we conducted additional analyses computed from the simulated behavior. First, we examined whether the behavioral dynamics profiles of the two trained neural network models can replicate the trends shown in rats’ profiles (Supplementary Fig. 8a–c). Although the model’s predictions are by and large aligned with the rats’ behavioral profiles, the NN model with direct input shows that [CE-C]>[CE-S] significantly at NS = 0 and 1 (Supplementary Fig. 8c middle), which does not match with the rats’ profile, [CE-C] ≤ [CE-S] at every NS (Supplementary Fig. 8c left).

Second, to validate whether two events (CO and UR events) in the C+ category differently affect the action-selection strategy, we compared the behavioral dynamics profiles from the trained models. Here, in every profile of the NN model with gated input, we observed that there are significant differences between CO and UR events (Supplementary Fig. 8d–f right), which do not align with the rat behavior (Supplementary Fig. 8d–f left).

Furthermore, when we compared βC and CSI, neither NN model replicated the rats’ results (Supplementary Fig. 8h). Taken together (Supplementary Fig. 8), we found that the two NN models could not replicate the animal behavior in the two-step task, especially in terms of representing the action-selection strategy after the conflict event.

In conclusion, we introduced the SSCS model, a computational framework that captures how animals leverage support and conflict events to guide their action-selection strategies. The SSCS model outperformed existing models in explaining animal behavior, particularly while describing the animal’s action-selection strategy after the conflict event (Fig. 3b and 4a–b, e–f). These findings strongly support our hypothesis that animals differentiate their action-selection strategies following conflict events compared to those following support events, especially within a context-switching environment.

The SSCS model characterizes the behavior of different species in simpler tasks

To test whether our findings are replicated in another experiment, we conducted the same analyses on an independent dataset where mice perform a two-armed bandit task with context reversal6. In each trial, the mouse chooses between two actions, A1 and A2. A1 and A2 have different probabilities of yielding a reward: p for A1 and 1 − p for A2, which is determined by the task context at the current trial. When the context reversal happens, the reward probabilities allocated to each action become switched. The same mice performed several sessions with different values of higher reward probability p, fixed to one of three values (0.7, 0.8, 0.9) during each session (Fig. 5a). In this task, we also classified possible events into four categories. After choosing the positive action, we classified the event when the reward was given or omitted as an S+ event and a C+ event, respectively.
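The task structure described above can be sketched as a small simulation. This is an illustrative sketch only: the fixed block length for reversals, the win-stay lose-shift placeholder policy, and all names are assumptions, and only the S+ and C+ labels defined in this paragraph are assigned (events after the negative action are left as "other").

```python
import random

def simulate_bandit(n_trials, p, block_len, policy, seed=0):
    """Two-armed bandit with uncued reversals every `block_len` trials (assumed schedule)."""
    rng = random.Random(seed)
    positive = 0  # index of the action currently rewarded with probability p
    history = []
    for t in range(n_trials):
        if t > 0 and t % block_len == 0:
            positive = 1 - positive  # context reversal swaps the reward probabilities
        action = policy(history, rng)
        p_reward = p if action == positive else 1.0 - p
        reward = 1 if rng.random() < p_reward else 0
        if action == positive:
            event = "S+" if reward else "C+"  # reward given vs. omitted
        else:
            event = "other"  # negative-action events, not labeled in this sketch
        history.append((action, reward, event))
    return history

def win_stay_lose_shift(history, rng):
    """Placeholder policy: repeat a rewarded action, switch after an omission."""
    if not history:
        return rng.randrange(2)
    action, reward, _ = history[-1]
    return action if reward else 1 - action

history = simulate_bandit(n_trials=200, p=0.8, block_len=20,
                          policy=win_stay_lose_shift, seed=1)
n_conflict = sum(1 for _, _, event in history if event == "C+")
```

Any choice model can be substituted for the placeholder policy, as long as it maps the trial history to the next action.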

a Task structure description. b Model explainability comparison by measuring the RMSE between behavioral dynamics profiles computed from mouse behavior and simulated behavior of the fitted model. CI, CE, CE-C, CE-S, and ΔCE from the left. Comparison of MF and SLRP models with respect to the SSCS model from the top. c–f Model explainability comparison in multi-trial history regression analysis by measuring similarity measures computed from mouse behavior and simulated behavior of the fitted model. In RMSE and cosine similarity, comparison of MF and SLRP models with respect to the SSCS model from the left. c Cosine similarity between βS. d Model explainability comparison with respect to SSI. Left for RMSE between SSI. Right for repeated-measures correlation coefficient91 between SSI. e Cosine similarity between βC. f Model explainability comparison with respect to CSI. Left for RMSE between CSI. Right for repeated-measures correlation coefficient91 between CSI. RMSE and cosine similarity comparisons between models were performed using a linear mixed-effects model, with reward probability (p) and model type as fixed factors and subject as a random effect. Estimated marginal means were compared with degrees of freedom adjusted by the Satterthwaite method for main effects and simple main effects tests. SSI and CSI computed from mouse and model behavior across different p were compared using repeated-measures correlation analysis. All statistical tests were two-sided and corrected for multiple comparisons using the Benjamini–Yekutieli procedure. b–f show data from n = 6 mice. Error bars indicate mean ± s.e.m., while for the repeated-measures correlation coefficient, they represent mean ± bootstrap standard error. *P < 0.05, **P < 0.01, ***P < 0.001. See Supplementary Table 2 for full statistical information. Source data are provided as a Source Data file. 
MF, model-free RL model21; SLRP, stochastic logistic regression policy model6; SSCS, support-stay, conflict-shift model.

From analyses of behavioral data, we found that mice also showed the behavioral patterns observed in the two-step task consistently across different values of p: consecutive decreases in CI (Supplementary Fig. 9a) and reversal of the sign of the difference between CE-C and CE-S (Supplementary Fig. 9c).

In the two-armed bandit task6, we considered the stochastic logistic regression policy (SLRP) model as the best model based on the BIC score comparison detailed in the original study6. Unlike the two-step task, this task does not involve transition uncertainty from the chosen action to the outcome state, so we confined ourselves to models that do not utilize transition information, such as the MF model21.

As a result, we compared our SSCS model with two other models: (1) the MF model21, which selects the next action based on action values updated using the RW learning rule, and (2) the SLRP model6, which uses a logistic regression model incorporating choice and choice-reward interaction terms to capture mice’s stochastic and efficient action-switching behavior after context reversal.

All the models were fitted to the behavioral data of each animal individually for each value of p. Detailed comparisons showed that the SSCS model most accurately predicts various profiles (CE, ΔCE, SSI, βC) at every value of p (Fig. 5b–f and Supplementary Fig. 10). These results support the conclusion that our model, which is based on a policy that arbitrates between the support and conflict biases, better describes animal behavior in context reversal consistently across different species, task complexities, and environmental parameters.

The SSCS model explains the activity of medium-spiny neurons

After confirming that the SSCS model accurately replicates the event-type-dependent action-selection strategy across species and task complexity (Figs. 4 and 5, Supplementary Figs. 1, 4–7, 10), we sought to investigate its neural substrates. During value-based decision-making, the striatum is known to integrate reward-related information8, evaluate the value of different options16, and execute appropriate actions based on expected rewards7. In particular, the dorsomedial striatum (DMS) has traditionally been implicated in action selection in context-changing environments7,8, and it is known to be associated with flexible behavior during value-based decision-making27.

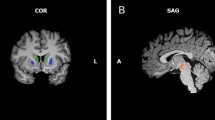

Various RL models, such as the model-free RL (MF) model21, and the differential forgetting Q-learning (DFQ) model28, are frequently employed to investigate whether neurons encode the decision variables, such as action value or state value, during reversal learning tasks11,15,20,27,29,30. However, these models have not been tested for their ability to replicate the animal’s event-type-dependent action-selection strategies. We hypothesized that the striatum guides the event-type-dependent action-selection strategy. To examine this, we reanalyzed previously published datasets of rat behavior during a T-maze task with context reversal, a spatial navigation task that resembles the previous two-armed bandit task (Fig. 5)8. In this experiment, the activities of medium-spiny neurons (MSNs) in the DMS were simultaneously recorded (Fig. 6a left).

a Task configuration8. The numbered S marks within the T-maze indicate the position of photo-beam sensors, which represent the boundaries between different sections within a single trial. b Distribution of neurons encoding decision and task variables (n = 201 neurons from 3 rats). c Behavioral dynamics profiles from rats and fitted SSCS model (n = 3 rats). CI, CE, and Conditional CE from the left. The difference between CE-C and CE-S was examined using a linear mixed-effects model, with the number of S+ repetitions (NS) and type (CE-C or CE-S) as fixed factors and subject as a random effect. Estimated marginal means were compared with degrees of freedom adjusted by the Satterthwaite method for main effects and simple main effects tests. d Regression weights from the multi-trial history regression analysis from rats and fitted SSCS model (n = 3 rats). βS and βC from the left. Regression weights were tested against zero using a linear mixed-effects model, with the trials in the past (τ) as the fixed factor and the subject as a random effect. Estimated marginal means were compared with degrees of freedom adjusted by the Satterthwaite method for post-hoc tests. e, f Example temporal dynamics sorted and colored by the value of the corresponding decision variable. Modulations of firing rates are listed in the order of prepare, action, reward, and update sections from the left; e Normalized firing rate of support bias neurons. f Normalized firing rate of conflict bias neurons. All statistical tests were two-sided and corrected for multiple comparisons using the Benjamini–Yekutieli procedure. Error bars indicate mean ± s.e.m. See Supplementary Table 2 for full statistical information. Source data are provided as a Source Data file.

To validate this hypothesis at the behavioral level, we computed behavioral dynamics profiles and performed multi-trial history regression analyses. We classified possible events into four categories identical to those used in the two-armed bandit task. For the T-maze task with context reversal8, we considered the differential forgetting Q-learning (DFQ) model28 as the best model, in accordance with the BIC score comparison conducted in the original study8. Similar to the two-armed bandit task6, this task does not involve transition uncertainty from the chosen action to the outcome state, so models that do not account for probabilistic transitions, such as the MF model21, are suitable for comparison.

Therefore, our SSCS model was compared with: (1) the MF model21, which uses the RW learning rule to update action values, and (2) the DFQ model28, which extends the MF model by incorporating a forgetting rate for the unchosen action and applying distinct update rules to the chosen action based on whether it was rewarded.
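The difference between the two baselines can be made concrete with a sketch of a DFQ-style update. The parameterization below (learning rate `alpha1` for the chosen action, forgetting rate `alpha2` for the unchosen action, and outcome strengths `kappa1` and `kappa2` for rewarded and unrewarded trials) follows one common formulation of differential forgetting Q-learning and is an assumption here; the equations actually fitted in this study may differ.

```python
def dfq_update(q, action, reward, alpha1, alpha2, kappa1, kappa2):
    """One DFQ-style update of the action values (assumed parameterization).

    Chosen action: pulled toward +kappa1 after a reward, toward -kappa2 after an omission.
    Unchosen action: decays toward zero at the forgetting rate alpha2.
    """
    new_q = []
    for i, value in enumerate(q):
        if i == action:
            target = kappa1 if reward else -kappa2
            new_q.append((1.0 - alpha1) * value + alpha1 * target)
        else:
            new_q.append((1.0 - alpha2) * value)
    return new_q

params = dict(alpha1=0.4, alpha2=0.1, kappa1=1.0, kappa2=0.5)
q_rewarded = dfq_update([0.5, 0.5], action=0, reward=1, **params)
q_omitted = dfq_update([0.5, 0.5], action=0, reward=0, **params)
```

With `alpha2 = 0` and `kappa2 = 0`, this update reduces to the RW rule with reward magnitude `kappa1`, which is why the DFQ model can be regarded as an extension of the MF model.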

First, the analysis of the rats’ behavioral dynamics profiles revealed that CE-C is significantly lower than CE-S (Fig. 6c top, 3rd column). The SSCS model is the only model that successfully replicates this trend (Fig. 6c bottom, 3rd column), unlike the MF (Supplementary Fig. 11b top, 3rd column) and DFQ (Supplementary Fig. 11c top, 3rd column) models, in which CE-C is significantly higher than CE-S at every NS. Second, from multi-trial history regression analyses, we observed that the rats’ βC fluctuates significantly, crossing zero, across different τ values. It was significantly negative only at τ = 1, but became significantly positive from τ = 2 onward (Fig. 6d top right). Once again, only the SSCS model accurately replicates this finding (Fig. 6d bottom right), in contrast to the MF (Supplementary Fig. 11f right) and DFQ models (Supplementary Fig. 11g right).

These results (Fig. 6c, d and Supplementary Fig. 11) demonstrate that rats utilized the event-type-dependent action-selection strategy. The RL models, including the MF and DFQ models that are widely used to decode the neural representation of decision variables, failed to replicate the action-selection strategy after the conflict event. In contrast, our SSCS model successfully replicated these behavior patterns.

We then examined MSN activity to determine whether the striatum represents the event-type-dependent action-selection strategy by analyzing its correlations with the key decision variables of the SSCS model, the support and conflict biases. The entire T-maze was divided into four sections: prepare, action, reward, and update (Fig. 6a right). The average firing rates of MSNs were computed during each trial. For each trial and each section, the average firing rates of MSNs were also computed while rats were passing through the corresponding section.

To accommodate temporal correlations for identifying striatal representation31, we employed the autoregressive exogenous (ARX) model, a mathematical framework for time series analysis and system identification. The ARX model accounts for such correlations by including an autoregressive term in the fitted model32. This approach has been validated in ref. 30 for effectively mitigating false identifications related to temporal correlations.

For each neuron, we fit an ARX model to evaluate how much the average firing rates of neural data are explained by the decision variables (support and conflict bias) of the SSCS model and task variables (choice and reward as confounding variables) on a trial-by-trial basis. A neuron was considered to represent a specific variable if the ARX regression coefficient associated with that variable was significantly different from zero, as determined using a block-wise permutation test8.
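The structure of such a regression can be sketched with ordinary least squares: the firing rate on each trial is regressed on its own value on preceding trials (the autoregressive part) and on the trial-by-trial exogenous variables. The sketch below is illustrative only; the model order, the single synthetic exogenous variable, and the helper names are assumptions, and the block-wise permutation test used for significance is not implemented.

```python
def solve_linear_system(a, b):
    """Gaussian elimination with partial pivoting for a small square system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_arx(rate, exog, order=1):
    """Least-squares fit of rate[t] = c + sum_k a_k * rate[t-k] + sum_j b_j * exog[j][t].

    Returns coefficients in the order [c, a_1..a_order, b_1..b_J].
    """
    rows, targets = [], []
    for t in range(order, len(rate)):
        lags = [rate[t - k] for k in range(1, order + 1)]
        rows.append([1.0] + lags + [series[t] for series in exog])
        targets.append(rate[t])
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(p)]
    return solve_linear_system(xtx, xty)

# Synthetic, noise-free example: one exogenous variable standing in for a decision variable.
exog_x = [((7 * t) % 5) / 5.0 for t in range(40)]
rate = [1.0]
for t in range(1, 40):
    rate.append(0.5 + 0.6 * rate[t - 1] + 2.0 * exog_x[t])
coef = fit_arx(rate, [exog_x], order=1)
```

In an analysis of this kind, the coefficient on each exogenous variable would then be compared against a null distribution to decide whether the neuron encodes that variable.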

In addition to the previously reported choice- and reward-encoding neurons, we found two sets of neurons encoding trial-by-trial changes of support bias (Fig. 6b, e and Supplementary Fig. 12a) or conflict bias (Fig. 6b, f and Supplementary Fig. 12b). Distinct sets of neurons representing either support or conflict biases were consistently identified in each section of the T-maze (Fig. 6b, e, f, and Supplementary Fig. 12a, b). Notably, a proportion of MSNs in the ventral striatum (VS) also represent the support bias or conflict bias (Supplementary Fig. 12c, d).

Taken together, these findings demonstrate that the striatum represents the event-type-dependent action-selection strategy captured by the SSCS model. In particular, the striatum represents the distinct action-selection strategy after the conflict event, a behavioral dynamic overlooked by the other models.

Mice exhibit event-type-dependent action-selection strategy, independent of MSN inactivation

We further examined the possibility that different types of MSNs could provide a more detailed account of the striatal representation of the event-type-dependent action-selection strategy. We considered two types of MSNs, D1 receptor-expressing MSNs (D1-MSNs) and D2 receptor-expressing MSNs (D2-MSNs), distinguished by their connectivity and expression profile of dopaminergic receptors33. D1-MSNs are part of the direct pathway, facilitating desired actions and selecting actions that lead to positive outcomes. On the other hand, D2-MSNs are part of the indirect pathway, associated with inhibiting competing actions and suppressing actions linked with negative outcomes34,35,36. The balance between the direct and indirect pathway activity is crucial for flexible behavior and adaptive decision-making since it helps to optimize the behavior in response to changing environments7,35,36.

These functional segregations could be related to the action-selection strategy after the conflict event, a key process underlying flexible behavior in a context-switching environment. This motivated us to investigate another behavioral study in which mice performed a two-armed bandit task with context reversal (Fig. 7a left) while they were under reversible inactivation of D1-MSNs or D2-MSNs in DMS, respectively (Fig. 7a right)7. The analysis aimed to assess if MSN inactivation leads to deficits in this strategy, as well as to explore the differential contributions of D1-MSNs and D2-MSNs to this process.

a Description of the two-armed bandit task with reversal task and reversible inactivation used in the experiment7. b–d Behavioral dynamics profile comparisons between the mice in the control condition and fitted MF model (n = 39 mice). Left for results from simulated behavior of fitted MF model. Right for results from mice behavior in control condition; b Choice inconsistency (CI). c Effect of conflict event (CE). d Conditional effect of conflict event (Conditional CE). e–g Behavioral dynamics profiles from mice behavior in inactivation condition. Left for results from mice behavior in D1-MSN inactivation (n = 20 D1R-Cre mice). Right for results from mice behavior in D2-MSN inactivation (n = 19 D2R-Cre mice). e CI. f CE. g Conditional CE. Decreases in behavioral dynamics profiles and their finite differences were assessed using paired two-sample permutation tests. The difference between CE-C and CE-S was examined using a linear mixed-effects model, with the number of S+ repetitions (NS) and type (CE-C or CE-S) as fixed factors and subject as a random effect. The main effects were tested using paired two-sample permutation tests. All statistical tests were two-sided and corrected for multiple comparisons using the Benjamini–Yekutieli procedure. Error bars indicate mean ± s.e.m. See Supplementary Table 2 for full statistical information. Source data are provided as a Source Data file. MF, model-free RL model21.

In the two-armed bandit task with MSN inactivation7, we considered the MF model as the best model based on the BIC score comparison performed in the original study7. Additionally, previous studies employing this task8,37 have demonstrated that the DFQ model outperforms the MF model, prompting us to also include the DFQ model for comparison.

Our model was evaluated against: (1) the MF model21, which updates action values through the RW learning rule, and (2) the DFQ model28, which extends the MF model by incorporating a forgetting mechanism for the unchosen action and separate update rules for the chosen action depending on whether it was rewarded. All the models were fitted to the behavioral data of each animal individually and separately for different pharmacological conditions.

To investigate whether mice employ different action-selection strategies after a conflict event compared to after a support event, we applied the behavioral dynamics profiles to the mouse behavior under the control condition. We classified possible events into four categories identical to those used in the two-armed bandit task (Fig. 7a middle). Unlike the two-step task incorporating the probabilistic transition from a chosen action to an outcome state, the two-armed bandit task with context reversal does not have such uncertainty. Therefore, we selected the MF model as a baseline. The MF model increases the relative action value after experiencing an S+ event and decreases it after a C+ event. Also, after each trial, the MF model updates its action values based on the RW learning rule. Therefore, the MF model yields the same predictions as those demonstrated by the MB model in the two-step task (Fig. 2).

First, the MF model predicts that CI(NS) and its finite difference will decrease as NS increases. These predictions are confirmed by the CI profile measured from the simulated behavior of the fitted MF model. Both CI and its finite difference continually decrease significantly until NS = 5 (Fig. 7b left). These trends are also observed in the mice profile until NS = 2 (Fig. 7b right).

Second, the MF model predicts that CE(NS) and its finite difference will decrease as NS increases. These are confirmed by the CE profile measured from the simulated behavior of the fitted MF model. Both CE and its finite difference continually decrease significantly until NS = 4 (Fig. 7c left). These trends are also observed in the mice profile until NS = 1 (Fig. 7c right).

Third, the MF model predicts that CE-C(NS) will be higher than CE-S(NS) for any NS. These predictions are confirmed by the conditional CE profile measured from the simulated behavior of the fitted MF model. CE-C is significantly higher than CE-S until NS = 2 (Fig. 7d left). In the mouse profile, however, CE-C and CE-S were not significantly different (Fig. 7d right).

The alignment observed in CI/CE (Fig. 7e, f) and the discrepancies observed in conditional CE (Fig. 7g) also manifested in mouse behavior during the inactivation of D1-MSNs or D2-MSNs. In particular, after D1- or D2-MSN inactivation, CE-C becomes significantly lower than CE-S. Furthermore, the direct comparison of mouse behavioral dynamics profiles between control and inactivation conditions revealed that D1-MSN inactivation significantly increases CI (Supplementary Fig. 13a left), CE (Supplementary Fig. 13b left), and CE-S (Supplementary Fig. 13d left). Importantly, D1-MSN inactivation did not significantly alter CE-C (Supplementary Fig. 13c left), demonstrating the specificity of the observed effects. D2-MSN inactivation did not significantly influence any of the assessed behavioral dynamics profiles (Supplementary Fig. 13a–d right).

The findings suggest that mice exhibit a different action-selection strategy after the conflict event (Fig. 7), consistent with our previous analyses involving various tasks (Figs. 2, 4–6). Moreover, it is noteworthy that this strategy also appears after D1-MSNs or D2-MSNs are inactivated (Fig. 7e–g). However, only the inactivation of D1-MSNs increases some behavioral dynamics profiles, implying distinct roles of D1- and D2-MSNs in the event-type-dependent action-selection strategy of mice (Supplementary Fig. 13).

The SSCS model replicates type-specific contributions of MSNs to the action-selection strategy after the conflict event

To further investigate the dissociable contributions of D1-MSNs and D2-MSNs to the event-type-dependent action-selection strategy, we examined how past events influenced the action-selection strategy following a conflict event and how these relationships were altered by MSN inactivation.

First, to investigate the effect of past support events, we computed the regression weights βS and SSI from the actual behavior of mice under control and inactivation conditions. βS showed continual decay, regardless of inactivation (Fig. 8a).

a–c Effect of type-specific inactivation to the regression weight βS and SSI; a, b Effect of type-specific inactivation to the regression weight βS. βS before and after D1-MSN inactivation, and βS before and after D2-MSN inactivation from the left; a βS from mouse actual behavior. b βS from simulated behavior of fitted SSCS model. c The effect of inactivation on SSI and replications of the SSCS model. d–f Effect of type-specific inactivation to the regression weight βC and CSI; d, e Effect of type-specific inactivation to the regression weight βC. βC before and after D1-MSN inactivation, and βC before and after D2-MSN inactivation from the left; d βC from mouse actual behavior. e βC from simulated behavior of fitted SSCS model. f The effect of inactivation on CSI and replications of the SSCS model. g, h Model explainability comparison by measuring the Spearman correlation coefficient between the measure computed from the mouse behavior and simulated behavior of the fitted model. D1+, D1−, D2+, and D2− from the left. g Model explainability comparison on SSI. h Model explainability comparison on CSI. In the Spearman correlation coefficient, white bars indicate significantly lower values than the highest model (P < 0.05). SSI and CSI comparisons between control and inactivation conditions were evaluated using paired two-sample permutation tests. Spearman correlation coefficients between models were compared using Dunn and Clark’s Z tests. All statistical tests were two-sided and corrected for multiple comparisons using the Benjamini–Yekutieli procedure. Panels show data from n = 20 D1R-Cre mice (D1+/D1−) or n = 19 D2R-Cre mice (D2+/D2−). Error bars indicate mean ± s.e.m., while for the Spearman correlation coefficient, they represent mean ± bootstrap standard error. *P < 0.05, **P < 0.01, ***P < 0.001. See Supplementary Table 2 for full statistical information. Source data are provided as a Source Data file. 
MF, model-free RL model5; DFQ, Q-learning with different forgetting model28; SSCS, support-stay, conflict-shift model; D1+, D1-MSN control; D1−, D1-MSN inactivation; D2+, D2-MSN control; D2−, D2-MSN inactivation.

After the inactivation of D1-MSNs, the SSI from mouse behavior decreased significantly (Fig. 8c 1st column). In contrast, the effect of D2-MSN inactivation on SSI was marginal (Fig. 8c 2nd column). This implies that the effect of past support events on choosing the same action is attenuated specifically after D1-MSN inactivation.

Second, to investigate the effect of past conflict events, we computed the regression weights βC and CSI from mouse behavior under control and inactivation conditions. Regardless of the targeted type (D1-MSN or D2-MSN) and condition (control or inactivation), βC in mice started near 0, increased significantly at τ = 2, and then decayed continuously as τ increased (Fig. 8d).

After the inactivation of D1-MSNs, the change in CSI from mouse behavior was not significant (Fig. 8f 1st column). In contrast, after the inactivation of D2-MSNs, the CSI from mouse behavior decreased significantly (Fig. 8f 2nd column). This implies that the effect of past conflict events on choosing the same action increases after D2-MSN inactivation specifically.
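The control-versus-inactivation comparisons of SSI and CSI rely on paired permutation tests with Benjamini–Yekutieli correction, as stated in the figure legend. The following is a minimal sketch of how such a procedure could look, not the authors' implementation. It assumes a sign-flip scheme on per-animal differences and the mean difference as the test statistic. The function names, the number of permutations, and the toy values are all hypothetical.

```python
import random


def paired_permutation_test(control, inactivation, n_perm=10000, seed=0):
    """Two-sided sign-flip permutation test on the mean paired difference.

    Assumption: each animal contributes one value per condition, and the
    test statistic is the absolute mean of the per-animal differences.
    """
    if len(control) != len(inactivation):
        raise ValueError("paired samples must have equal length")
    rng = random.Random(seed)
    diffs = [b - a for a, b in zip(control, inactivation)]
    observed = abs(sum(diffs) / len(diffs))
    hits = 0
    for _ in range(n_perm):
        # Under the null hypothesis the condition labels are exchangeable
        # within an animal, so each difference keeps or flips its sign.
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(sum(flipped) / len(flipped)) >= observed:
            hits += 1
    # The add-one correction keeps the estimated p-value strictly positive.
    return (hits + 1) / (n_perm + 1)


def benjamini_yekutieli(p_values):
    """Return Benjamini-Yekutieli adjusted p-values in the input order."""
    m = len(p_values)
    # Harmonic-sum penalty that makes the procedure valid under dependence.
    c_m = sum(1.0 / k for k in range(1, m + 1))
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Step from the largest p-value down, enforcing monotone adjusted values.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m * c_m / rank)
        adjusted[idx] = running_min
    return adjusted


if __name__ == "__main__":
    # Toy per-animal index values, invented purely for illustration.
    toy_control = [0.62, 0.58, 0.71, 0.66, 0.60, 0.69]
    toy_inactivation = [0.51, 0.49, 0.63, 0.55, 0.57, 0.58]
    p = paired_permutation_test(toy_control, toy_inactivation, n_perm=2000)
    print(benjamini_yekutieli([p, 0.20, 0.04]))
```

The sign-flip scheme is chosen here because the same animals are measured in both conditions. A test on unpaired groups would instead shuffle condition labels across animals.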

To investigate whether the SSCS model can replicate these findings, we performed multi-trial history regression analyses on the simulated behavior of the fitted SSCS model. We found that the SSCS model accurately replicated (1) similar trends in βS (Fig. 8b) and βC (Fig. 8e) regardless of conditions (D1+, D1−, D2+, and D2−), (2) a specific decrease in SSI after D1-MSN inactivation (Fig. 8c 3rd column), and (3) a selective decrease in CSI after D2-MSN inactivation (Fig. 8f 4th column). Furthermore, the SSI and CSI values from the SSCS model showed significant positive correlations with those of the mice (Fig. 8g for SSI, Fig. 8h for CSI) across different conditions (D1+, D1−, D2+, and D2−).

Collectively, these results suggest neural substrates conveying the associations between past events and the action-selection strategy after the conflict event. D1-MSNs selectively transfer the effect of past support events, whereas D2-MSNs specifically transfer the effect of past conflict events.

To validate whether the observed deficits following MSN inactivations are specifically attributed to impairments in the event-type-dependent action-selection strategy represented by the SSCS model, we conducted model explainability comparisons. Specifically, we performed multi-trial history regression analyses on simulated behaviors of different models and compared these regression results with those derived from actual mouse behavior.

First, we computed the regression weights βS and SSI from the simulated behavior of the fitted models under control and inactivation conditions. All models exhibited a continual decay in βS regardless of inactivation (Supplementary Fig. 15a for D1-MSN, Supplementary Fig. 15f for D2-MSN), mirroring the trends observed in mice. Consistent with this, the cosine similarity of βS between mice and the fitted models did not differ significantly across the different models (Supplementary Fig. 15b for D1-MSN, Supplementary Fig. 15g for D2-MSN).
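As an illustration of the cosine similarity used to compare regression-weight profiles, the sketch below treats each β profile as a vector indexed by the lag τ. The function name and the example vectors are hypothetical and are not values from the study.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length weight vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have equal length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    # Hypothetical decaying weight profiles over lags tau = 1..5.
    beta_animal = [0.90, 0.55, 0.30, 0.18, 0.10]
    beta_model = [0.80, 0.50, 0.33, 0.15, 0.12]
    print(cosine_similarity(beta_animal, beta_model))
```

Because cosine similarity ignores overall scale, two profiles with the same shape but different magnitudes score close to 1. It therefore captures agreement in the trend across τ and not agreement in absolute weight values.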

A selective decrease in SSI following D1-MSN inactivation (Supplementary Fig. 15c), but not D2-MSN inactivation (Supplementary Fig. 15h), was observed across simulations of all three models. When we compared model explainability for SSI under D1-MSN manipulation (Supplementary Fig. 14a, c), the models performed similarly in terms of RMSE (Supplementary Fig. 15d) and Spearman correlation coefficient (Supplementary Fig. 15e), regardless of inactivation. This pattern was also observed for D2-MSN manipulations (Supplementary Fig. 15i for RMSE, and Supplementary Fig. 15j for Spearman correlation coefficient).

Second, we computed the regression weights βC and CSI from the simulated behavior of the fitted models under control and inactivation conditions. Across all targeted MSN types (D1-MSN or D2-MSN) and conditions (control or inactivation), the SSCS model most accurately replicated the βC trends (Supplementary Fig. 16a 4th column for D1-MSN, Supplementary Fig. 16f 4th column for D2-MSN) observed in mice (Supplementary Fig. 16a 1st column for D1-MSN, Supplementary Fig. 16f 1st column for D2-MSN). This was supported by significantly higher cosine similarity of βC between mice and the fitted SSCS model relative to the other models across conditions (Supplementary Fig. 16b for D1-MSN, Supplementary Fig. 16g for D2-MSN).

A selective decrease in CSI following D2-MSN inactivation (Supplementary Fig. 16h), but not D1-MSN inactivation (Supplementary Fig. 16c), was also consistent with simulations from the SSCS model. When comparing the model explainability of CSI for D1-MSN manipulations (Supplementary Fig. 14b, d), we found that the SSCS model had a significantly lower RMSE under the inactivation condition (Supplementary Fig. 16d right) and a higher Spearman correlation coefficient across both conditions (Supplementary Fig. 16e) than the other models. Furthermore, the SSCS model exhibited a significantly lower RMSE (Supplementary Fig. 16i) and a higher Spearman correlation coefficient (Supplementary Fig. 16j) for both the D2-MSN control and inactivation conditions.
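The two explainability metrics used for SSI and CSI, RMSE and the Spearman correlation coefficient, can be sketched as follows. This is an illustrative implementation, not the authors' code. It assumes one index value per animal from the behavior and one from the fitted model's simulation, and all names and numbers are hypothetical.

```python
import math


def rmse(observed, simulated):
    """Root-mean-square error between paired observed and simulated values."""
    if len(observed) != len(simulated):
        raise ValueError("inputs must have equal length")
    squared = [(a - b) ** 2 for a, b in zip(observed, simulated)]
    return math.sqrt(sum(squared) / len(squared))


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y):
        raise ValueError("inputs must have equal length")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0.0 or sy == 0.0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


if __name__ == "__main__":
    # Hypothetical per-animal index values from behavior and simulation.
    index_animal = [0.42, 0.35, 0.51, 0.29, 0.47]
    index_model = [0.40, 0.31, 0.55, 0.33, 0.44]
    print(rmse(index_animal, index_model), spearman(index_animal, index_model))
```

The two metrics are complementary. RMSE penalizes differences in absolute value, whereas the Spearman coefficient only asks whether animals with larger index values are also assigned larger values by the model.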

These results indicate that the observed behavioral changes in mice following striatal inactivation are specifically linked to impairments in the event-type-dependent action-selection strategy, providing causal evidence for its essential role. Given this causal evidence and our findings that the striatum represents the event-type-dependent action-selection strategy (Fig. 6), these results underscore that the striatum encodes the event-type-dependent action-selection strategy. This highlights its critical function in guiding flexible, context-driven behavior.

Discussion

This study demonstrated how rodents achieve flexible behavior at the behavioral, computational, and neural levels. We hypothesized that animals achieve this by operating different action-selection strategies after experiencing support and conflict events. Using behavioral dynamics profiles to track the trial-by-trial dynamics of the action-selection strategy after one type of event, we showed that conventional RL models fail to explain the action-selection strategy after the conflict event (Fig. 2). In contrast, the computational model that implements the learning rules we inferred from behavioral dynamics profiles makes quantitatively successful predictions about flexible behavior patterns in the four independent datasets5,6,7,8 (Figs. 3–7 and Supplementary Figs. 1, 4–7, 10–11, 14–16). Interestingly, MSNs in both DMS and VS were found to encode not only choice and reward information but also the two key decision variables of our computational model, suggesting that the striatum may serve as the information hub of flexible behavior (Fig. 6 and Supplementary Fig. 12). Moreover, our model explains the distinct contributions of different MSN types to the action-selection strategy after the conflict event; D1-MSNs selectively transfer the effect of past support events (Fig. 8c and Supplementary Fig. 14a, c), whereas D2-MSNs specifically transfer the effect of past conflict events (Fig. 8f and Supplementary Fig. 14b, d).

Previously, several regression-based behavioral profiles5,38,39 have been proposed, but they mainly aimed to distinguish between MF and MB RL5,39 and have several limitations23,40. The proposed behavioral dynamics profiles can characterize the trial-by-trial dynamics of the action-selection strategy following events that support or conflict with the subject’s inferred task context. Moreover, these profiles reflect how a model updates its decision variable, such as action value, outcome value, or belief. Therefore, these profiles can be used to compare the update rules of different models and to evaluate whether a model’s update rule reflects the animal’s behavior. Using these measures, we found that the animals’ action-selection strategy following an unexpected event does not match conventional RL models; this result corroborates an alternative view that animals might have different action-selection strategies for handling support and conflict events. Furthermore, our behavioral measures can serve as complementary tools alongside traditional methods, such as likelihood-based model comparison.

Another significant contribution of our work is that we generalized the idea of behavioral flexibility, which earlier discussions have mainly examined in discrimination tasks41,42,43,44. To this end, we investigated the dynamics of the action-selection strategy after the conflict event, which is vital to achieving behavioral flexibility. In general, it is hard to evaluate whether two measures quantify different aspects of the same behavioral policy, which can lead to multicollinearity45,46,47. To better explain the action-selection strategy after the conflict event without such issues, we introduced multi-trial history regression, which discriminates between the effects of past support events and past conflict events on the action-selection strategy after the conflict event. Notably, our model consistently replicated the effects of past support events and past conflict events computed from animal behavior in independent datasets across different species and task complexities5,6,7,8.

Our support-stay, conflict-shift model underpinning the animal behavior in reversal learning tasks has been evaluated at the behavioral, computational, and neural levels. Specifically, a proportion of MSNs in both DMS and VS reflects the gain control effect of the two decision variables of our computational model, support and conflict bias, and the striatal direct and indirect pathways are shown to contribute to maintaining flexible behavior differentially.

The neural evidence robustly supports our conclusions regarding the striatum’s pivotal role in the action-selection strategy following the conflict event. The results from behavioral and neural analyses in rats (Fig. 6 and Supplementary Figs. 11 and 12) reinforce the idea that the striatum encodes the distinct action-selection strategy after the conflict event, which previous models had overlooked. Through analyses of mouse behavior that assessed the impact of selective inactivation of D1- and D2-MSNs, we have clarified the striatal association with the action-selection strategy after the conflict event (Figs. 7 and 8). Collectively, these findings strongly support the hypothesis that the striatum engages in the action-selection strategy after the conflict event, as evidenced across different species (rat for MSN recording, mouse for MSN inactivation), methodologies (electrophysiology for MSN recording, pharmacogenetics for MSN inactivation), and tasks (T-maze task for MSN recording, two-armed bandit task for MSN inactivation).

Previously, numerous studies have related different types of dopamine receptors to behavioral flexibility36,48,49,50, but they mainly focused on manipulating neurons and examining simple behavioral effects. In contrast, we linked neuron- and circuit-level activity with our model, which implements support and conflict biases whose update rules are inferred from animal behavior. This could provide an integrative framework capable of producing more viable predictions. For example, our model can explain a recent finding on the role of striatal direct and indirect pathways in updating action selection during goal-directed behavior35, including the pathway-specific association of task variables with neuronal signals and the results of optogenetic stimulation. In the long run, the support-stay, conflict-shift model can help deepen our understanding of how the striatal dopaminergic system manages flexible behavior.

It would be interesting to show that our model can generalize to situations accommodating various choices and a hierarchical structure between adjacent states. Recently, several papers have discussed the generalization of binary choices to multiple ones by incorporating the Bayesian ideal observer model51. However, such inductive reasoning requires an assessment by objective behavioral measures to preclude any hasty generalization fallacy. In this regard, one experiment52 showed that the Bayesian ideal observer model could not explain captured discrepancies in a three-alternative visual categorization task.

There are two major directions in which insights from our findings can be extended. What neurons encode depends on both the brain region in which they are located and how much experience has accumulated in the task environment. First, since the dorsal and ventral striatum receive different inputs53,54,55 and send different outputs56,57, it is necessary to investigate how the distribution of neurons encoding decision variables varies along the dorsoventral axis58,59,60 and how their dynamics change throughout training61,62.

Second, without explicit cues indicating different contexts, subjects must actively infer the task structure63,64,65 based on their past experiences66,67,68,69. After continual evaluations to determine whether the inferred structure can explain underlying variances17,70,71,72,73,74, this reasoning may lead to the true task structure26,75. While the SSCS model fits the broader definition of model-based RL models by utilizing task structure, it can be viewed as a form of meta-learning, as it learns to choose between contexts/tasks and associated actions simultaneously. Furthermore, our model advances the concept of biological meta-RL by providing detailed computational principles underlying event-type-dependent action-selection for rapid animal adaptation15.

Overall, our support-stay, conflict-shift model can provide an expanded interpretation of the action-selection strategy after the conflict event guiding behavioral flexibility and shed light on the multi-dimensional role of the striatum and related dopaminergic control in driving flexible behavior.

Methods

Terms for behavioral analyses

Our study considers behavioral datasets derived from several previous studies5,6,7,8. The tasks in these datasets share five key components:

-

Action: The task contains two possible actions.

-

Transition: Each action leads to an outcome state according to the state-action-state transition probability.

-

Outcome state: Each action leads to one of two potential outcome states.

-