Abstract

Small language models (SLMs) offer an efficient alternative for structured information extraction. We present SLM-MATRIX, a multi-path collaborative reasoning and verification framework based on SLMs, designed to extract material names, numerical values, and physical units from materials science literature. The framework integrates three complementary reasoning paths: a multi-agent collaborative path, a generator–discriminator path, and a dual cross-verification path. SLM-MATRIX achieves an accuracy of 92.85% on the BulkModulus dataset and 77.68% on the MatSynTriplet dataset, both outperforming conventional methods and single-path models. Moreover, experiments on general reasoning benchmarks such as GSM8K and SVAMP validate the framework’s strong generalization capability. Ablation studies evaluate the effects of agent number, Mixture-of-Agents (MoA) depth, and discriminator design on overall performance. Overall, SLM-MATRIX presents an effective approach for high-quality material information extraction in resource-constrained settings and offers new insights into structured scientific text understanding tasks.

Introduction

In recent years, large language models (LLMs) have achieved remarkable breakthroughs in natural language understanding and generation domains1,2,3,4,5. Through extensive pretraining and reinforcement learning from human feedback, these models are now capable of producing coherent and practical language outputs6. Meanwhile, in materials science, automated data extraction plays a crucial role in building comprehensive databases and accelerating knowledge discovery. However, traditional approaches often rely on hand-crafted rules, templates, or fine-tuned models, which are costly, less adaptable, and limited in generalization. Indeed, conventional methods for automated data extraction, such as rule-based parsing and template matching, typically perform well on structured content but face limitations when handling scientific texts characterized by long-range dependencies, implicit semantics, and cross-paragraph reasoning. For example, Kim et al. identified synthesis parameters using syntactic parse trees combined with rule sets7, while Mavracic et al. extended ChemDataExtractor 2.0 to support table parsing for extracting physical quantities and semantic relations8. These techniques often fail to generalize across document formats and domains due to their reliance on brittle rules and heuristics.

With the emergence of generative LLMs, researchers began to explore more scalable extraction methods. These models make it possible to extract information such as material names, key property values, and corresponding units with minimal domain expertise, using only carefully designed prompts. For instance, Polak et al. proposed ChatExtract9, a framework that demonstrates the potential of advanced generative LLMs for efficient data extraction. The core of this method lies in a set of engineered prompts that guide the LLM to identify and extract target information from relevant sentences. More importantly, it introduces a series of follow-up questions to repeatedly verify the accuracy of the extracted data. This interactive verification mechanism effectively mitigates the inherent hallucination problem of LLMs. In evaluations on materials-related datasets, ChatExtract combined with GPT-4 achieved precision and recall rates approaching 90%. Broadly speaking, generative LLMs have demonstrated strong performance across a wide range of natural language processing tasks, largely attributed to the Transformer architecture10 and large-scale pretraining. The GPT series models4 have shown excellent generalization in both zero-shot and few-shot settings, and are now widely applied in diverse domains. Ouyang et al.6 further improved model response quality by introducing reinforcement learning from human feedback. To enhance reasoning capabilities, Wang et al.11 proposed the self-consistency strategy, which improves accuracy by generating multiple reasoning paths and selecting the most consistent answer.
Despite these advancements, current LLM-based extraction methods face three major challenges in practical deployment: (1) High computational and API costs – running proprietary models like GPT-4 for large-scale literature mining is prohibitively expensive for many academic environments; (2) Heavy reliance on annotated data – many approaches still require task-specific fine-tuning with high-quality labeled datasets, which is time-consuming and difficult to scale across diverse materials systems or experimental conditions12; (3) Limited reasoning ability under complex semantics – LLMs often struggle with multivariable coupling, implicit expressions, or contradictions in text, even when equipped with advanced techniques like Chain-of-Thought (CoT)13 prompting, leading to hallucinated or incorrect outputs14.

In response to these challenges, recent research has turned to small language models (SLMs) and multi-agent reasoning strategies. As the scale of LLMs increases, the trade-off between model size and efficiency has become more evident. To address this, SLMs such as the LLaMA series proposed by Meta—including LLaMA-3B, LLaMA-7B, and LLaMA-11B—as well as models like OPT-1 and OpenFlamingo15, offer significantly reduced resource requirements while maintaining competitive performance. These models are particularly suitable for tasks such as entity recognition in materials science texts. Nevertheless, SLMs face performance bottlenecks in multi-step reasoning and implicit relation modeling, necessitating structural enhancements for improved effectiveness. To address these issues, researchers have begun exploring multi-model collaborative reasoning strategies. It has been observed that outputs from different LLMs exhibit a degree of complementarity, such that cooperative interactions among models can improve overall reasoning performance16. Recent methods like rStar17 demonstrate that even without fine-tuning or high-end model supervision, self-play generation–discrimination mechanisms can achieve strong reasoning capabilities. Among these enhancements, two core mechanisms have been explored to further boost reasoning capacity in lightweight settings. The first is the application of Monte Carlo Tree Search (MCTS), as adopted by the rStar framework, which constructs a multi-branch search tree and leverages language model scoring mechanisms to select the most semantically coherent answer. The second is the generator–discriminator architecture, originally adapted from generative adversarial networks (GANs)18, which enables consistency validation and error filtering. When coupled with MCTS, this strategy notably improved the reasoning accuracy of LLaMA2-7B from 24.34% to 63.91%.

Building on these insights, we propose SLM-MATRIX, a mixture-of-agents collaborative reasoning and verification framework based on small language models (SLMs). Our goal is to enable accurate and low-cost materials information extraction without the need for model fine-tuning. The framework integrates three core reasoning paths: (i) Mixture-of-Agents collaboration path (MoA)16: a layered agent system where multiple parallel proposers generate candidate answers, followed by iterative aggregation and optimization by a central aggregator. (ii) Generation–discrimination path enhanced by Monte Carlo Tree Search (MCTS): a generator explores the answer space while a discriminator evaluates candidate quality; MCTS efficiently navigates the complex reasoning space, and a consistency-check mechanism improves discriminator reliability. (iii) Dual cross-verification: a final layer that compares and filters candidate answers from the two previous paths to ensure robustness and correctness based on mutual verification. Importantly, SLM-MATRIX operates entirely on open-source small language models, without requiring any model fine-tuning or expensive GPU resources. Despite this, our experiments on the BulkModulus dataset demonstrate a high accuracy of 92.85%, significantly outperforming single-SLM baselines and approaching the performance of proprietary models such as GPT-4o (99.44%).
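
The layered proposer and aggregator flow of path (i) can be pictured with a minimal sketch. It assumes that each proposer layer receives the previous layer's candidates as extra context, which follows the general MoA design rather than a detail stated here. The names `Agent`, `build_prompt`, and `run_moa` are hypothetical, and the agents are plain callables standing in for SLM calls.

```python
from typing import Callable, List

# Hypothetical stand-in for an SLM call: maps a prompt string to an answer string.
Agent = Callable[[str], str]


def build_prompt(question: str, previous: List[str]) -> str:
    """Attach the previous layer's candidate answers to the question (assumed format)."""
    if not previous:
        return question
    refs = "\n".join(f"[{i + 1}] {answer}" for i, answer in enumerate(previous))
    return f"{question}\n\nCandidate answers from the previous layer:\n{refs}"


def run_moa(question: str, proposer_layers: List[List[Agent]], aggregator: Agent) -> str:
    """Run each proposer layer in turn, then let the aggregator produce the final answer."""
    candidates: List[str] = []
    for layer in proposer_layers:
        prompt = build_prompt(question, candidates)
        # Every proposer in the layer answers the same prompt independently.
        candidates = [agent(prompt) for agent in layer]
    return aggregator(build_prompt(question, candidates))
```

In a real deployment each `Agent` would wrap an inference call to one of the open-source models; the sketch only fixes the order in which information flows between layers.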

Results

Experimental setup

To evaluate SLM-MATRIX’s performance across varied reasoning tasks, we select two domain-specific datasets in materials science for structured information extraction, along with four widely used mathematical reasoning benchmarks. The BulkModulus Dataset9 contains 179 material–property–unit triples related to bulk modulus values. The dataset is characterized by semantically complex expressions and high similarity among numerical parameters, providing a robust challenge for assessing the structured extraction capabilities of domain-specific models. The MatSynTriplet Dataset is a refined subset based on the Materials Science Procedural Text Corpus (MSPTC) introduced by Mysore et al.19 It targets the evaluation of extraction performance in material synthesis scenarios. The original corpus comprises texts from 30 journal articles, covering synthesis procedures, experimental conditions (e.g., temperature, reaction time, atmosphere), and quantitative usage of reagents/materials. To enhance SLM-MATRIX’s ability to parse complex synthesis language, we employ the Gemini-2.0-Flash model to semantically segment the original paragraphs into a fine-grained collection of 115 short texts. Each fragment focuses on one or more concrete synthesis actions, blending domain-specific terminology, quantitative parameters, and hybrid structured-natural language formats. The MatSynTriplet dataset is used in this study to validate the system’s generalizability in masked-reconstruction consistency and complex triplet extraction.

For general mathematical reasoning, GSM8K20 and SVAMP21 focus on elementary-level arithmetic word problems, testing models’ abilities in basic numerical reasoning and interpreting natural language descriptions of mathematical relationships. GSM-Hard22 is a harder variant of GSM8K in which the numbers in the questions are replaced with larger, less common values, allowing evaluation of model robustness under increased arithmetic difficulty. The MATH dataset23 covers a wider range of problems from middle to high school competition-level mathematics, spanning algebra, geometry, and more. It serves to comprehensively test advanced mathematical reasoning and problem-solving abilities. Together, these diverse datasets help evaluate SLM-MATRIX’s overall performance in both domain-specific knowledge extraction and general logical-numerical reasoning.

To ensure reliability and reproducibility, all experiments on materials science datasets are run independently three times, and the reported accuracy metrics are the mean and standard deviation over these trials. In our study, SLM-MATRIX is built entirely on open-source small language models: Qwen2.5-7B, Mistral-7B, and Gemma-2-9B-it as Proposers, LLaMA-3.2-11B-Vision as both Aggregator and Discriminator, and LLaMA-3.1-8B-Instruct-Turbo as the Generator. All inference tasks were executed via the Together platform, without any fine-tuning. The MoA module uses an architecture of three proposer layers plus one aggregator layer. Each proposer layer includes three SLM agents generating answers in parallel, followed by aggregation using a composite scoring function. The key hyperparameters are as follows: (a) All models use a maximum generation length (max_tokens) of 2048 and a temperature of 0.7 to balance accuracy and diversity. (b) All agents follow predefined system prompts that guide their behavior in terms of role identity, material domain context, and output structure. Prompt templates are included in Supplementary Note 1. (c) During the trajectory generation and evaluation phase, we incorporated MCTS to enhance solution space exploration. In the MCTS configuration, the number of rollouts for each input is 16, and each simulation generates one reasoning trajectory; the number of reasoning paths is 3; and the search depth of each path is 5, to balance semantic completeness and computational cost. Path selection follows the classic UCT strategy with an exploration constant c of 1.5, in line with the best practices of the rStar framework.
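
For readers unfamiliar with UCT, the selection rule can be sketched as follows. This is the textbook form (mean reward plus an exploration bonus scaled by c), shown with the exploration constant of 1.5 reported above; the function names and the `(total_reward, visits)` representation of a child node are assumptions made for illustration, not the framework's actual data structures.

```python
import math
from typing import List, Tuple


def uct_score(total_reward: float, visits: int, parent_visits: int, c: float = 1.5) -> float:
    """Textbook UCT: mean reward plus c * sqrt(ln(parent visits) / child visits)."""
    if visits == 0:
        # Unvisited children are tried first.
        return math.inf
    exploit = total_reward / visits
    explore = c * math.sqrt(math.log(parent_visits) / visits)
    return exploit + explore


def select_child(children: List[Tuple[float, int]], parent_visits: int, c: float = 1.5) -> int:
    """Return the index of the child (total_reward, visits) with the highest UCT score."""
    return max(
        range(len(children)),
        key=lambda i: uct_score(children[i][0], children[i][1], parent_visits, c),
    )
```

A larger c pushes the search toward rarely visited reasoning actions, while a smaller c concentrates rollouts on actions that already scored well.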

To precisely guide MCTS behavior, we developed two types of specialized prompts. The Space Definition Prompt is provided to the Generator and defines the allowable reasoning actions (A1–A5), mimicking human analytical strategies. It constrains and guides the generator to simulate domain-expert decision flows. The Discriminator Prompt is provided to the Discriminator and outlines the criteria for evaluating the generated reasoning path. The discriminator performs a mask-and-reconstruct consistency check: it masks the conclusion of a reasoning path and attempts to reconstruct it using the preceding steps. This enables effective assessment of the path’s logical coherence and reasoning quality. Full prompt texts for both components are provided in Supplementary Note 2 and Supplementary Note 3, respectively. In addition to these general prompts, specific prompt designs tailored for the MatSynTriplet dataset experiments are detailed in Supplementary Note 9.

In the aggregator agent of the multi-agent collaborative reasoning path, we apply the previously defined composite scoring function (see Eq. (13)) to evaluate each candidate answer \({A}_{i}\). The relevance weight \(\alpha\) and the consistency weight \(\beta\) are set to 0.7 and 0.3, respectively. We selected these values based on experiments using a 60-sample validation set from the BulkModulus dataset. Results showed that the configuration \(\alpha\) = 0.7, \(\beta\) = 0.3 achieves an effective trade-off between accuracy, consistency, and trajectory diversity, and was thus adopted as the final setting. Finally, within the dual-path verification mechanism, the system cross-compares outputs from the MoA and the generator–discriminator paths. Only when both paths produce structurally consistent results—i.e., identical material names, values, and units—is the answer accepted. Otherwise, the system initiates up to three rounds of iterative refinement using the MoA path. If consensus is still not reached, the task enters a human-in-the-loop resolution phase.
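
The acceptance logic of the dual-path verification described above can be sketched as a short loop. This is a minimal illustration under stated assumptions: `Triple`, `normalize`, `cross_verify`, and the `refine` callback (standing in for one round of MoA refinement) are hypothetical names, and comparing fields after trimming whitespace is an assumed reading of "identical material names, values, and units".

```python
from typing import Callable, NamedTuple, Optional, Tuple


class Triple(NamedTuple):
    material: str
    value: str
    unit: str


def normalize(t: Triple) -> Tuple[str, ...]:
    """Assumed comparison key: fields with surrounding whitespace removed (case is kept)."""
    return tuple(field.strip() for field in t)


def cross_verify(
    moa_answer: Triple,
    mcts_answer: Triple,
    refine: Callable[[Triple, Triple], Triple],
    max_rounds: int = 3,
) -> Tuple[Optional[Triple], bool]:
    """Return (accepted triple, needs_human); the triple is None if no consensus is reached."""
    if normalize(moa_answer) == normalize(mcts_answer):
        return moa_answer, False
    for _ in range(max_rounds):
        # One refinement round on the MoA path, given the competing MCTS answer.
        moa_answer = refine(moa_answer, mcts_answer)
        if normalize(moa_answer) == normalize(mcts_answer):
            return moa_answer, False
    # Still no agreement after max_rounds: hand over to human-in-the-loop resolution.
    return None, True
```

Case is deliberately preserved in `normalize`, since units such as MPa and mPa differ only by capitalization.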

Benchmark Results

To comprehensively evaluate the SLM-MATRIX framework, which is built on fully open-source SLMs, we systematically compare it with three categories of representative baseline methods. The first category is multi-agent collaboration, represented by MoA methods. The second category involves single-round reasoning, focusing on Chain-of-Thought (CoT) strategies such as zero-shot CoT and few-shot CoT13. The third category covers multi-round enhancements, including techniques such as Tree of Thoughts (ToT)24 and Reasoning via Planning (RAP)25. For consistency and fairness, the prompt templates and planning logic of all baselines (described in Supplementary Note 4) follow their official implementations.

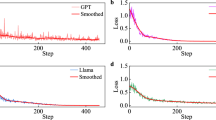

We tested on both mathematical reasoning datasets and material datasets. The performance of SLM-MATRIX alongside baseline methods on four widely used mathematical reasoning datasets, including GSM8K, GSM-Hard, SVAMP, and MATH, is summarized in Table 1. These results highlight several key findings regarding SLM-MATRIX’s capabilities. Notably, SLM-MATRIX demonstrates superior overall accuracy, achieving the highest score of 92.7% on SVAMP and significantly outperforming all other methods. Furthermore, on more complex tasks such as GSM8K and MATH, multi-model collaborative approaches like MoA and SLM-MATRIX clearly surpass single-model strategies. For instance, on GSM8K, SLM-MATRIX improved performance to 90.83%, compared to MoA’s 87.19% and the 84.23% achieved by the best-performing single model (Qwen2.5-7B + Few-shot CoT). A similar advantage was observed on the MATH dataset, where SLM-MATRIX reached 61.8%, exceeding MoA’s 58.4%. As shown in Fig. 1, across all datasets and models, while MoA consistently outperformed individual models, SLM-MATRIX consistently delivered the highest accuracy in every case. This underscores that SLM-MATRIX not only effectively integrates the advantages of different SLMs but also surpasses both single-model and naive ensemble strategies in overall reasoning capability. Moreover, the results show that common prompt strategies like Zero-shot CoT, Few-shot CoT, ToT, and RAP exhibit varying effectiveness across different models, with no single prompt method consistently dominating. In contrast, SLM-MATRIX effectively resolves this inconsistency, showcasing its robust performance.

SLM-MATRIX also demonstrates strong performance on domain-specific tasks. As shown in Table 2, on the BulkModulus dataset it achieves an accuracy of 92.85% (±2.05%), significantly outperforming all SLM-based baseline methods. Stage 1: The initial multi-agent collaborative reasoning (MoA) stage yields an accuracy of 87.90% (±1.71%), surpassing all individual model results. Stage 2: The generator–discriminator reasoning module, enhanced by MCTS, leads to a substantial performance gain by diversifying reasoning actions and applying consistency validation across trajectories, effectively expanding the solution space and improving answer precision. Stage 3: Final cross-verification between the MoA and MCTS-generated paths ensures the reliability of the extracted results, bringing the overall accuracy to 92.85%, averaged across three independent runs. As shown in Fig. 2, GPT-4o achieves higher accuracy (98.32–99.44%), but SLM-MATRIX reaches a comparable level without relying on proprietary models, narrowing the performance gap to approximately 5.5 to 6.6 percentage points. This highlights the framework’s practical value and cost-effectiveness in low-resource scenarios. In terms of automation capability, only 2.98% (±0.85%) of samples (averaged across three independent trials) required human adjudication even after up to K = 3 rounds of refinement. This indicates that the system can automatically achieve trajectory agreement in most cases, demonstrating a high level of autonomous decision-making and practical deployability in real-world, low-resource environments. On the MatSynTriplet dataset (115 samples), as shown in Table 3, SLM-MATRIX reaches an accuracy of 77.68% (±1.81%), outperforming the MoA path alone at 69.86% (±2.66%), and remaining reasonably close to GPT-4o’s 85.35%. This dataset includes diverse experimental conditions, material ratios, and process descriptions, featuring high textual complexity and dense information, making extraction notably more challenging.
The performance drop is mainly attributed to the diversity of parameter types, strong contextual dependencies, entity name ambiguity, and interference from non-target numerical values. Although the newly designed prompts improved the completeness of structured extraction, they still show limited adaptability to certain complex syntactic structures. Automation evaluation results show that 5.22% (±1.74%) of samples failed to converge during iterative validation and required human intervention—slightly higher than BulkModulus but still relatively low. These results suggest that SLM-MATRIX possesses strong generalization ability and a high degree of automation when handling complex materials synthesis information.

The bar chart compares the accuracy of various language models and reasoning strategies. Our proposed framework, SLM-MATRIX (highlighted in red), is benchmarked against the state-of-the-art model GPT-4o (orange bars) and other open-source models (blue bars). The y-axis lists the combination of a base model and a reasoning strategy. The x-axis represents the task accuracy in percent. The vertical dashed line indicates the performance level achieved by SLM-MATRIX for easy comparison against all other methods.

Ablation studies

Our framework’s performance was further analyzed through a series of ablation studies to understand the contribution of key components and design choices.

Impact of the number of proposer models. The investigation into the impact of proposer model quantity revealed significant effects on system performance in the MATH reasoning task, as detailed in Table 4. A key observation across all configurations was that the multi-proposer strategy, which utilizes multiple heterogeneous models, consistently outperformed single-proposer setups employing the same model. For instance, within the three-layer MoA structure, increasing the number of proposers from 2 to 4 resulted in an accuracy improvement from 57.6% to 59.8%. The most notable difference was observed in the 4-layer, 4-model configuration; here, the multi-proposer scheme achieved an accuracy of 61.2%, a 3.8 percentage point gain compared to the single-proposer baseline of 57.4%. This finding strongly supports the notion that, particularly in complex reasoning tasks, aggregating diverse perspectives from multiple heterogeneous models is more effective than relying on the diversity achievable with a single model alone.

Effect of MoA layer depth. Further examination focused on the effect of MoA layer depth. As indicated in Table 4, increasing the number of MoA layers under the multi-proposer setting consistently led to performance improvements. The overall system accuracy steadily improved as the number of layers increased from 2 to 4, confirming the effectiveness of MoA in information integration and reasoning optimization. For instance, with a 4-model configuration, accuracy rose from 58.2% (2 layers) to 61.2% (4 layers). A similar trend was observed in the 3-model setting, where accuracy increased from 57.4% (2 layers) to 60.2% (4 layers). In contrast, under single-proposer settings, the marginal gains diminished more noticeably, suggesting that the benefits of deeper structures are constrained by a lack of model diversity. For example, in the 3-model single-proposer setting, the performance gain from 2 to 3 layers (52.0% → 56.2%) was much larger than that from 3 to 4 layers (56.2% → 57.0%). Overall, moderate increases in MoA depth enhance system performance, with the best results achieved through a balanced optimization of both depth (layers) and width (model diversity).

Role adaptability of different models. The suitability of five different models for Proposer and Aggregator roles within the MoA framework was also evaluated using controlled variable tests, with results summarized in Table 5. Significant performance differences emerged based on role assignment. Notably, LLaMA-3.2-11B-Vision demonstrated strong integration capabilities, performing best as an Aggregator with an accuracy of 59.2%. Qwen2.5-7B also showed better performance as an aggregator (55.8%) compared to its role as a proposer (53.2%). Conversely, Gemma-2-9B-it and Mistral-7B were more effective as Proposers, with Gemma-2-9B-it achieving 58.2% in this role and both models exhibiting strong candidate generation but lower aggregator performance (Mistral-7B showing 49.6% as an aggregator). Other models, such as LLaMA-3.1-8B, displayed relatively moderate performance across both roles. These findings indicate clear role preferences among the models, supporting the use of hybrid configurations—for example, pairing Gemma-2-9B-it for proposing with LLaMA-3.2-11B-Vision for aggregation—to significantly improve overall performance. This provides empirical guidance for role assignment in multi-agent collaboration frameworks like MoA.

Effectiveness of the SLM-MATRIX generator. To assess the generator component of SLM-MATRIX, its effectiveness was compared against two baseline methods, Reasoning via Planning (RAP) and Self-Consistency (SC@64), alongside an evaluation of different answer verification mechanisms. The results, presented in Table 6, show that SLM-MATRIX consistently outperformed both RAP and SC@64 on the GSM8K and BulkModulus datasets. For example, when using LLaMA-3.1-8B-Instruct-Turbo, SLM-MATRIX achieved an accuracy of 90.83% on GSM8K, which was substantially higher than RAP (76.35%) and SC@64 (86.43%). Furthermore, SLM-MATRIX’s trajectory selection and verification mechanism demonstrated clear advantages in both accuracy and stability over traditional majority voting (Maj) approaches, especially when dealing with complex reasoning tasks. These outcomes further validate the efficacy of the generator-discriminator design in ensuring reliable answer selection.
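
For reference, the majority-voting baseline (as used by self-consistency, where SC@64 votes over 64 sampled answers) reduces to a frequency count. The sketch below is a generic illustration, not the evaluation code behind Table 6; `majority_vote` is a hypothetical name, and ties are broken by first occurrence as a simplifying assumption.

```python
from collections import Counter
from typing import List, Optional


def majority_vote(answers: List[str]) -> Optional[str]:
    """Return the most frequent non-empty answer, or None if there are no answers."""
    counts = Counter(a.strip() for a in answers if a.strip())
    if not counts:
        return None
    # most_common keeps first-seen order among equally frequent answers.
    return counts.most_common(1)[0][0]
```

Majority voting only looks at final answers, whereas the discriminator-based selection also inspects the reasoning that led to them.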

Effectiveness of the SLM-MATRIX discriminator. We also evaluated the influence of different discriminator models on answer verification results. In this experiment, LLaMA-3.1-8B-Instruct-Turbo served as the generator, while several open-source small language models were tested as discriminators for the consistency verification task within the collaborative reasoning process. The results, detailed in Table 7, indicate that while the choice of discriminator does exert some influence on final performance, the overall differences are relatively small, suggesting strong compatibility of the SLM-MATRIX framework with various discriminator models. Among the tested discriminators, LLaMA-3.2-11B-Vision achieved the highest accuracy at 90.83%, outperforming the traditional majority voting strategy (87.49%) by 3.34 percentage points. However, its advantage over other models, such as Qwen2.5-7B (90.14%) and Gemma-2-9B-it (89.61%), was marginal. These findings suggest that SLM-MATRIX can maintain high verification accuracy without necessitating large or high-performance models; even smaller-scale language models are capable of yielding reliable and stable results within this verification framework.

Proposer agent combination diversity. The impact of Proposer Agent diversity within the multi-agent collaborative reasoning path on the overall performance of SLM-MATRIX was assessed through a model combination diversity experiment. For this, the number of proposers was fixed at three, and three distinct combination strategies were compared on the BulkModulus dataset. The first, a benchmark heterogeneous combination, served as the default SLM-MATRIX configuration and included Qwen2.5-7B-Instruct-Turbo, Gemma-2-9B-it, and Mistral-7B-Instruct, representing high model heterogeneity. The second, a low-diversity combination, used three identical instances of Qwen2.5-7B-Instruct-Turbo as Proposer Agents to reduce inter-model differences and highlight potential gains from heterogeneity. The third, an alternative heterogeneous combination, replaced Qwen2.5-7B-Instruct-Turbo in the benchmark setup with Qwen2.5-Coder-7B (a model optimized for code generation), keeping Mistral-7B-Instruct and Gemma-2-9B-it, to examine the effect of introducing a task-specialized model. The experimental results for these strategies are presented in Table 8. The benchmark heterogeneous combination outperformed the low-diversity combination (composed of three identical Qwen2.5-7B-Instruct-Turbo models) by 12.4% in accuracy. This significant margin indicates that model heterogeneity among Proposer Agents is crucial for enhancing collaborative reasoning performance. Heterogeneous models provide diverse interpretations and reasoning perspectives, leading to a wider range of candidate answers. This helps expand the solution space, mitigate systematic errors from model homogeneity, and ultimately supply higher-quality inputs for downstream aggregation and verification, thereby improving overall accuracy and robustness.
In the alternative heterogeneous combination, substituting Qwen2.5-7B-Instruct-Turbo with Qwen2.5-Coder-7B yielded an accuracy of 90.88%—slightly below the benchmark but notably better than the low-diversity setup. This suggests that while a model with strong coding capabilities introduces a new dimension of heterogeneity, its effectiveness in the current materials information extraction task might be limited, underscoring the importance of aligning model specialization with task characteristics to translate architectural diversity into performance gains.

Effectiveness of the mask–reconstruct discriminator strategy. Finally, the effectiveness of the mask–reconstruct discriminator strategy proposed within the SLM-MATRIX framework was evaluated through a comparative experiment on the BulkModulus dataset against a direct path evaluation baseline, with results summarized in Table 9. In the experimental group (i.e., the default SLM-MATRIX configuration), the complete mask–reconstruct workflow was applied: after generating N candidate trajectories using the MCTS generator, the discriminator masked the final structured output \({S}_{d}\) of each trajectory and attempted to reconstruct it as \({S}_{d}^{{\prime} }\) based on the preceding path \({t}_{{prefix}}\). The consistency score between \({S}_{d}\) and \({S}_{d}^{{\prime} }\) was used as the criterion for evaluating path quality, and the trajectory with the highest score was selected as the final output. In contrast, the baseline group removed the masking step entirely. The discriminator directly scored the full trajectory—including the final answer—based on overall quality and selected the best-scoring path. The results show that the mask–reconstruct strategy achieved a significantly higher accuracy of 92.85% (±2.05%), compared to 88.45% (±1.80%) for the direct evaluation strategy. Additionally, the proportion of cases requiring manual adjudication during the final dual verification stage dropped from 5.77% to 2.98%, indicating enhanced decision reliability. Notably, even without the mask–reconstruct mechanism, the MCTS path still contributes performance gains when used in dual-path cross-verification with the MoA path, demonstrating the intrinsic benefit of the MCTS framework. However, the integration of the mask–reconstruct discriminator yields a more substantial performance improvement, highlighting its superior ability to detect logical inconsistencies, hallucinations, and missing information within the reasoning process.
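
The mask–reconstruct selection described above can be sketched in a few lines. This is a hedged illustration: the `reconstruct` callback stands in for the discriminator model, which sees only the prefix, and the exact-match `consistency` score is an assumption, since the actual consistency measure is not specified here. `Trajectory` and `select_trajectory` are hypothetical names.

```python
from typing import Callable, List, Optional, Tuple

# A trajectory is (t_prefix, S_d): the reasoning steps and the final structured output.
Trajectory = Tuple[List[str], str]


def consistency(s_d: str, s_d_prime: str) -> float:
    """Assumed score: 1.0 on a whitespace-insensitive exact match, otherwise 0.0."""
    return 1.0 if s_d.split() == s_d_prime.split() else 0.0


def select_trajectory(
    trajectories: List[Trajectory],
    reconstruct: Callable[[List[str]], str],
) -> Optional[Tuple[Trajectory, float]]:
    """Mask each final output, rebuild it from the prefix, and keep the best match."""
    best: Optional[Tuple[Trajectory, float]] = None
    for prefix, s_d in trajectories:
        # The discriminator never sees s_d; it must rebuild it from the prefix alone.
        s_d_prime = reconstruct(prefix)
        score = consistency(s_d, s_d_prime)
        if best is None or score > best[1]:
            best = ((prefix, s_d), score)
    return best
```

The direct-evaluation baseline would instead pass the full trajectory, final answer included, to a scoring call, so the discriminator would never be forced to rederive the conclusion.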

Failure case analysis

To gain a deeper understanding of the limitations and potential areas for improvement in SLM-MATRIX, we conducted a detailed analysis of failure cases on the BulkModulus dataset. Among 15 representative failed samples, we identified five major categories of errors, as summarized in Table 10.

The most prevalent error type was Complex Semantic Misinterpretation (33.33%), where the model failed to correctly interpret conditional statements, long-range dependencies, or semantically ambiguous expressions. The next most common issues were Numerical Value Extraction Errors (20.00%) and Triplet Association Errors (20.00%). The former included missing or misread values, or incomplete extraction of ranges, while the latter involved incorrect matching between materials, values, and units. In addition, Unit Extraction or Association Errors and Material Name Identification Errors each accounted for 13.33% of failures, reflecting challenges in handling unit ambiguity (e.g., GPa vs. MPa) and rare or complex material nomenclature. Similar error patterns were observed on the MatSynTriplet dataset, particularly in cases involving structurally complex experimental conditions or non-standard material expressions. Specific cases have been provided in Supplementary Note 8.

These findings suggest that SLM-MATRIX still has room for improvement in the following areas: handling of complex semantics, long-range entity associations, accurate numerical parsing, and resolution of inter-model conflicts. Future work may focus on integrating materials science knowledge graphs to improve recognition of rare entities, adopting enhanced contextual modeling (e.g., long-range attention or graph neural networks) to strengthen inter-sentence reasoning, and refining the numerical parsing module to support error margins, unit conversions, and contextual disambiguation. Furthermore, introducing more fine-grained trust evaluation and cross-verification mechanisms between agents may improve robustness in cases of conflicting outputs.

Computational cost and efficiency analysis

To assess the practical deployment cost and runtime efficiency of SLM-MATRIX, we measured its average inference time and estimated API token consumption on the BulkModulus dataset. Results are shown in Table 11.

SLM-MATRIX exhibited an average inference time of 38.8 seconds per sample, roughly 2.2 times that of MoA (17.3 seconds) and 7.2 times that of a single SLM baseline (5.4 seconds). This increase is primarily due to the framework’s complex multi-agent collaboration process, including multi-round MoA interactions, multi-path MCTS reasoning, and dual-path cross-verification. Despite the increased time cost, SLM-MATRIX improves accuracy from 87.90% (MoA) to 92.85%, a gain of 4.95 percentage points that represents a clear “accuracy-for-time” trade-off. In terms of API cost, SLM-MATRIX incurs an estimated cost of $0.0004763 per sample, roughly one-sixth that of GPT-4o ($0.00298 per sample), while avoiding any dependency on closed-source models or fine-tuning. These results indicate that SLM-MATRIX offers strong cost-effectiveness and transparency, making it well-suited for scientific and industrial applications where accuracy, reproducibility, and budget constraints are critical.
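The trade-off can be made concrete with a short calculation. The sketch below uses only the figures quoted in this section; the variable names and the 100,000-sample projection are illustrative assumptions, not part of the reported experiments.

```python
# Figures quoted in the text for the BulkModulus dataset.
seconds_per_sample = {"SLM-MATRIX": 38.8, "MoA": 17.3, "single SLM": 5.4}
accuracy_pct = {"SLM-MATRIX": 92.85, "MoA": 87.90}
cost_usd_per_sample = {"SLM-MATRIX": 0.0004763, "GPT-4o": 0.00298}

# Latency multipliers of the full framework relative to its cheaper alternatives.
slowdown_vs_moa = seconds_per_sample["SLM-MATRIX"] / seconds_per_sample["MoA"]
slowdown_vs_single = seconds_per_sample["SLM-MATRIX"] / seconds_per_sample["single SLM"]

# Accuracy gained over MoA, in percentage points.
accuracy_gain_points = accuracy_pct["SLM-MATRIX"] - accuracy_pct["MoA"]

# How many times more expensive GPT-4o is per sample.
cost_ratio = cost_usd_per_sample["GPT-4o"] / cost_usd_per_sample["SLM-MATRIX"]

# Illustrative projection (assumption): API cost of a 100,000-sample corpus.
n_samples = 100_000
projected_cost_usd = {name: cost * n_samples for name, cost in cost_usd_per_sample.items()}

print(f"slowdown vs MoA: {slowdown_vs_moa:.2f}x, vs single SLM: {slowdown_vs_single:.2f}x")
print(f"accuracy gain over MoA: {accuracy_gain_points:.2f} points")
print(f"GPT-4o / SLM-MATRIX cost ratio: {cost_ratio:.2f}")
```

Under these figures the framework costs about 2.2 times the latency of MoA for a 4.95-point accuracy gain, at roughly one-sixth of the per-sample GPT-4o cost.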

Discussion

This study demonstrates that the proposed SLM-MATRIX, a multi-path collaborative framework based on SLMs, can achieve performance comparable to leading LLMs in materials science information extraction while operating at a significantly lower resource cost. On the BulkModulus dataset, SLM-MATRIX achieved an accuracy of 92.85% (±2.05%). Notably, this result not only outperforms its own core component MoA (87.90% ±1.71%) but also exceeds all baseline methods based on standard prompting strategies (e.g., CoT, ToT, RAP) using individual SLMs. For example, the best single-SLM baseline, LLaMA-3.1-8B Instruct-Turbo with Zero-shot CoT, achieved 84.76% accuracy, while other models ranged from 61.01% to 79.89%. On the more challenging MatSynTriplet dataset, which includes diverse experimental conditions and complex material compositions, SLM-MATRIX maintained robust performance with 77.68% (±1.81%) accuracy, outperforming the MoA path (69.86% ±2.66%) and approaching GPT-4o’s benchmark (85.35%). Although SLM-MATRIX remains approximately 6.6 percentage points behind GPT-4o on BulkModulus (which achieved 99.44% under Few-shot CoT), it has narrowed the performance gap significantly while requiring no fine-tuning or closed-source dependencies. Moreover, the system demonstrated strong automation capability: on average, only 2.98% (±0.85%) of BulkModulus samples and 5.22% (±1.74%) of MatSynTriplet samples required human intervention after three rounds of verification, indicating strong autonomous consistency convergence even under uncertain semantic or rare material naming conditions. In terms of cost-effectiveness, the estimated API cost per sample for SLM-MATRIX is only $0.00048, roughly six times lower than that for GPT-4o ($0.00298/sample) under the same conditions. This highlights the framework’s practical value in resource-constrained or large-scale deployments, particularly for scientific tasks demanding high transparency, interpretability, and affordability.

The superior performance of SLM-MATRIX arises not from any single component but from the synergy among its three core reasoning paths. The MoA path alone demonstrates strong inter-model synergy; by integrating multiple heterogeneous SLMs as proposers and aggregators, it achieves 87.90% accuracy on the BulkModulus dataset, outperforming any individual SLM under standard prompting. As shown in Table 2, even the best single-SLM baseline (LLaMA-3.1-8B + Zero-shot CoT) only achieved 84.76%, while other models such as Gemma-2-9B-it (79.89%), Qwen2.5-7B (76.97%), and Mistral-7B (61.01%) performed considerably worse. This indicates that model diversity and cross-architecture integration provide meaningful advantages over single-model reasoning. The MCTS-driven Generator–Discriminator Path complements MoA by further enhancing performance, with SLM-MATRIX reaching 92.85%, a 4.95-point improvement over MoA alone. This path uses a self-play-inspired approach to explore diverse reasoning trajectories and generate candidate answers that may uncover relations and logic chains missed even by multi-model collaboration, proving particularly beneficial for tasks involving implicit dependencies or nuanced numeric reasoning. The Dual Cross-Verification Path conducts consistency checks between outputs from the MoA and MCTS paths, filtering hallucinated or unreliable results to ensure only the most trustworthy outputs are selected. These three paths form a synergistic structure where MoA provides breadth via inter-model consensus, the MCTS path provides depth via exploration, and cross-verification ensures output quality. The combination of model complementarity, strategic fusion, and multi-result validation enables SLM-MATRIX to surpass both individual models and isolated reasoning strategies.

SLM-MATRIX’s strengths extend beyond materials science, as its reasoning capabilities generalize effectively to open-domain mathematical and logical reasoning tasks. On GSM8K, SLM-MATRIX achieves 90.83% accuracy, outperforming MoA (87.19%) by 3.64 percentage points and the best single-SLM baseline (Qwen2.5-7B + Few-shot CoT, 84.23%) by 6.60 percentage points. On the more challenging GSM-Hard dataset, it scores 73.24%, ahead of MoA (68.76%) and Gemma-2-9B-it + Zero-shot CoT (66.34%). On SVAMP, SLM-MATRIX reaches 92.7%, improving on MoA (88.3%) and the best single-SLM baseline (Gemma-2-9B-it + Few-shot CoT, 86.7%). Even on the most demanding MATH dataset, SLM-MATRIX achieves 61.8%, surpassing MoA (58.4%) and Qwen2.5-7B + Zero-shot CoT (57.4%). These results confirm that SLM-MATRIX is not just domain-adapted but also a general-purpose reasoning framework.

Despite its strong performance, SLM-MATRIX has several limitations that inform future research. Current task coverage is limited to the BulkModulus and MatSynTriplet datasets and does not encompass more complex, multi-step workflows or structure–property extraction tasks. Future evaluations should include larger-scale and more diverse corpora, for example, those involving synthesis procedures, multi-phase compositions, and implicit inter-property dependencies; multimodal data (e.g., XRD plots, SEM images, Raman spectra), essential in real-world materials science literature, should also be incorporated. While ablation studies were conducted on BulkModulus, further validation on more complex datasets like MatSynTriplet is needed to fully assess each module’s adaptability, complementarity, and optimization space. The present framework is unimodal, processing only text and ignoring valuable non-textual information such as chemical structures, diagrams, and tabular data. Future versions may integrate vision modules or graph-based models to achieve cross-modal information extraction. Additionally, SLM combinations are currently fixed; adaptive model selection or dynamic routing based on document types and task demands could significantly improve system efficiency and scalability. Interpretability also remains limited; although multi-agent interaction and MCTS enhance performance, the complexity of internal logic paths reduces transparency.

In summary, this work presented SLM-MATRIX, a multi-path collaborative reasoning and verification framework for automated materials science information extraction. Built entirely on open-source small language models, SLM-MATRIX achieves high-precision extraction of materials entities and properties without requiring model fine-tuning or closed-source dependencies. The framework integrates a multi-agent collaboration path (MoA) with a multi-layer proposer–aggregator architecture for improved stability, a generator–discriminator path enhanced by Monte Carlo Tree Search (MCTS) and mask–reconstruct consistency verification for deep trajectory exploration, and a dual cross-verification mechanism for final answer quality assurance. Experiments demonstrated that SLM-MATRIX achieves 92.85% accuracy on BulkModulus and 77.68% on MatSynTriplet, outperforming standard prompting methods and approaching GPT-4o’s upper-bound performance. Notably, the framework operates entirely on open-access models with a cost of only $0.00048 per sample, compared to GPT-4o’s $0.00298. It shows strong generalization across reasoning benchmarks (GSM8K, GSM-Hard, SVAMP, MATH) and requires minimal human intervention (2.98%–5.22%), validating its reliability and cost-efficiency for scalable deployment. The ablation studies confirmed the contributions of proposer diversity, MoA depth, and component configurations to overall performance. A key advantage is that SLM-MATRIX requires no closed-source LLMs or fine-tuning, offering high reasoning utility with strong flexibility for real-world deployment. Future work will explore the scalability of this framework to broader materials science corpora, its extension to multimodal inputs like diagrams and formulas, and its application in other domains such as biomedical research, environmental science, and legal document processing.

Methods

Overview

To address the limitations of traditional approaches in automated data extraction, we propose SLM-MATRIX. The framework is designed to (1) minimize computational cost, (2) improve reasoning accuracy, and (3) handle complex, domain-specific data more effectively. As shown in Fig. 3, SLM-MATRIX integrates three complementary reasoning paths:

Fig. 3. a Tokenizer: Transforms materials-related text into structured token sequences using word embeddings and self-attention. b Mixture-of-Agents (MoA) module: A hierarchical ensemble of heterogeneous SLMs generates candidate answers across multiple layers; an aggregator integrates them into the final output. c Generation–Discrimination module: Constructs multiple reasoning trajectories via a generator and evaluates their reliability using a discriminator with masked reconstruction and MCTS-based optimization. d Answer verification module: Compares outputs from both reasoning paths; if inconsistent, triggers iterative refinement until semantic agreement is achieved.

Mixture-of-agents collaborative reasoning: This path is organized into three hierarchical layers (Layers 1–3), each containing multiple open-source SLM agents (e.g., Agents A₁,₁–A₃,₃). These agents function as Proposer Agents to generate candidate answers in parallel, which are subsequently evaluated and aggregated by a central Aggregator Agent.

Generator–discriminator reasoning: A Generator (S₁) produces multiple reasoning trajectories, which are assessed by a Discriminator (S₂) using masked reconstruction and MCTS to optimize output consistency and accuracy.

Dual verification: Outputs from the first two paths are cross-compared. If the results are consistent, the final answer is returned. Otherwise, the process enters an iterative feedback loop and reruns the MoA collaborative path for further optimization. Upon reaching a pre-defined iteration limit, human verification is invoked.

SLM-MATRIX operates without fine-tuning and leverages the structured characteristics of materials science texts—standardized terminology, numerical precision, and unit conventions—to ensure efficient and reliable information extraction.

Multi-agent collaborative reasoning path

To improve structured data extraction from scientific texts, we introduce a collaborative reasoning mechanism that combines multiple Small Language Models (SLMs) with diverse strengths.

As shown in Fig. 4, the framework design for this path involves several key components. Proposer Agents, a set of open-source SLMs (e.g., Qwen, Mistral, Gemma), are employed in parallel to generate candidate answers. The parameter sizes and architectural features of these models are summarized in Table 12. Each proposer independently generates a candidate answer based on the input materials text, a process formalized as:

\[{A}_{i}={P}_{i}(T),\quad i=1,\ldots ,n \tag{1}\]

where \({P}_{i}\) denotes the generation function of the \(i\)-th proposer, \(T\) represents the input materials data, and \(n\) is the number of proposers.

Subsequently, an Aggregator Agent, instantiated using LLaMA-3.2-11B-Vision, performs a joint evaluation of these candidate answers with respect to semantic consistency and information coverage to select the best answer. The scoring function for this evaluation considers two key aspects: (1) the relevance of a candidate answer to the original input text, and (2) the complementarity among different candidate answers. This scoring formula is defined as:

\[{Score}({A}_{i})=\alpha \cdot {Rel}\left({A}_{i},T\right)+\beta \cdot {Cov}({A}_{i},A) \tag{2}\]

where \({Rel}\left({A}_{i},T\right)\) measures the relevance of the candidate answer \({A}_{i}\) to the input data \(T\), and \({Cov}({A}_{i},A)\) evaluates the complementarity of \({A}_{i}\) with respect to other candidate answers in the set \(A\).

The aggregator selects the answer \({A}^{* }\) with the highest score as the output and passes it to the next round of proposers for iterative refinement; \(\alpha\) and \(\beta\) are empirical coefficients that balance the trade-off between relevance and diversity. This scoring mechanism serves as the basis for optimizing the final output through controlled integration, maintaining a balance between accuracy and diversity. In practice, we adopt a reference-aware generative aggregation strategy, in which the aggregator LLM receives all candidate answers as in-context references and performs implicit evaluation, selection, and structural reorganization, thereby producing the final output. A detailed illustration of the MoA generation process is provided in Supplementary Note 5.
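The weighted scoring and selection step can be illustrated with a minimal sketch. The text does not specify how relevance and complementarity are computed (in practice the aggregator LLM evaluates candidates implicitly), so `toy_relevance` and `toy_coverage` below are hypothetical token-overlap stand-ins, and the weights of 0.5 are assumed values rather than the paper's coefficients.

```python
from typing import Callable, Sequence, Set


def tokens(s: str) -> Set[str]:
    """Lower-cased whitespace tokens; a deliberately crude tokenizer."""
    return set(s.lower().split())


def toy_relevance(candidate: str, text: str) -> float:
    """Hypothetical Rel: share of candidate tokens that occur in the input text."""
    cand = tokens(candidate)
    return len(cand & tokens(text)) / len(cand) if cand else 0.0


def toy_coverage(candidate: str, candidates: Sequence[str]) -> float:
    """Hypothetical Cov: share of all candidate tokens covered by this candidate."""
    union = set().union(*(tokens(c) for c in candidates))
    return len(tokens(candidate)) / len(union) if union else 0.0


def composite_score(candidate: str, text: str, candidates: Sequence[str],
                    rel: Callable[[str, str], float] = toy_relevance,
                    cov: Callable[[str, Sequence[str]], float] = toy_coverage,
                    alpha: float = 0.5, beta: float = 0.5) -> float:
    """Weighted sum of relevance and complementarity (alpha, beta assumed)."""
    return alpha * rel(candidate, text) + beta * cov(candidate, candidates)


def select_best(text: str, candidates: Sequence[str]) -> str:
    """Return the candidate with the highest composite score."""
    return max(candidates, key=lambda a: composite_score(a, text, candidates))


text = "The bulk modulus of CeO2 is 176.9 GPa"
candidates = ["CeO2 176.9 GPa", "CeO2 176.9", "PrO2 200 MPa"]
best = select_best(text, candidates)
```

With these toy measures the complete triplet wins because it is fully grounded in the text and covers more of the pooled candidate content than the answer that omits the unit.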

The reasoning workflow of this multi-agent collaborative path consists of three main stages. First, in Candidate Answer Generation, a set of candidate answers,

\[A=\{{A}_{1},{A}_{2},\ldots ,{A}_{n}\} \tag{3}\]

is independently generated by multiple proposer agents based on the input text, covering materials-related entities such as material names, numerical values, and units.

Second, during Candidate Evaluation and Selection, the aggregator agent evaluates this candidate set using the composite scoring function (as defined in Eq. 2) and selects the optimal answer \({A}^{* }\).

Finally, through Iterative Optimization and Convergence, the system iteratively refines the output via multiple rounds of the “propose–aggregate” cycle until convergence is achieved. The final answer is defined as:

\[{A}_{{final}}={A}^{*(k)} \tag{4}\]

where \({A}^{*(k)}\) is the answer selected by the aggregator in the last round and \(k\) is the maximum number of iterations, dynamically set based on task complexity in practice.

This path harnesses the complementary strengths of diverse models to improve robustness in handling complex structures and implicit relationships in materials texts.
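The three stages can be sketched as a simple loop. This is a minimal illustration under stated assumptions: proposers and the aggregator are passed in as plain callables (hypothetical stand-ins for the SLM API calls), and convergence is assumed to mean that the aggregated answer no longer changes between rounds, which the text does not define explicitly.

```python
from typing import Callable, List, Optional, Sequence

# Hypothetical interfaces: a proposer sees the text and the previous best answer;
# the aggregator sees the text and all candidates of the current round.
Proposer = Callable[[str, Optional[str]], str]
Aggregator = Callable[[str, List[str]], str]


def propose_aggregate(text: str, proposers: Sequence[Proposer],
                      aggregator: Aggregator, k: int = 3) -> Optional[str]:
    """Run at most k propose-aggregate rounds and return the last selected answer."""
    best: Optional[str] = None
    for _ in range(k):
        # Stage 1: every proposer generates a candidate independently.
        candidates = [propose(text, best) for propose in proposers]
        # Stage 2: the aggregator evaluates the candidates and selects one.
        new_best = aggregator(text, candidates)
        # Stage 3: stop early once the selection is stable (assumed criterion).
        if new_best == best:
            break
        best = new_best
    return best
```

Feeding the previous selection back to the proposers mirrors the description that the aggregator's output is passed to the next round for refinement; how that answer is embedded in each prompt is left to the caller.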

Generator–discriminator mechanism based on MCTS

To enhance the diversity and accuracy of generated reasoning paths, we introduce a generator–discriminator mechanism based on MCTS. Through collaborative interaction between a Generator and a Discriminator, this mechanism significantly improves the quality of reasoning without requiring any fine-tuning.

Focusing on the task of structured information extraction, the framework constructs a reasoning search tree, where each path from the root node to a leaf node represents a candidate reasoning trajectory. The Generator incrementally builds reasoning paths by prompting the language model with natural language instructions, while the Discriminator evaluates the semantic consistency and logical soundness of the generated paths. As illustrated in Fig. 5, the system simulates human-like reasoning behavior by leveraging a set of heuristic reasoning actions (A1–A5), which mimic how researchers analyze materials-related texts. These actions include material identification, parameter extraction, sub-question generation, and consistency verification. Using the example of AgSbTe₂, the framework demonstrates the step-by-step construction of triplets for its bulk modulus (B₀) and pressure derivative (Bp), showcasing the generation of interpretable and structured scientific information. The step-by-step generation process within the MCTS framework is further detailed in Supplementary Note 6.

Fig. 5. The figure illustrates how SLM-MATRIX extracts structured material–value–unit triples from scientific text through a sequence of reasoning actions. The process follows three stages: a Material identification (A1–A2); b Value and unit extraction (A3–A4); c Triple construction and validation (A5–A2). Using the example of AgSbTe₂’s bulk modulus (B₀) and pressure derivative (Bp), the model decomposes the task into sub-questions, refines reasoning paths, and assembles standardized outputs through iterative actions.

The MCTS implementation within SLM-MATRIX for generating high-quality reasoning paths during the materials data extraction stage involves several components. A core aspect is the construction of the search tree, where the input material text is treated as the root node. From this root, the reasoning process is progressively expanded. Each path from the root to a leaf node constitutes a complete reasoning trajectory, which can be denoted as:

\[T=x\oplus {S}_{1}\oplus {S}_{2}\oplus \cdots \oplus {S}_{d} \tag{5}\]

where \(x\) is the initial query, \({S}_{i}\) denotes the \(i\)-th reasoning step, and \(d\) is the number of steps in the trajectory.

The MCTS implementation also features a specific action space design to improve the flexibility and controllability of reasoning. SLM-MATRIX defines five categories of human-inspired reasoning actions (A1–A5) that simulate typical analytical behaviors in material data extraction tasks. These actions are dynamically invoked by the language model through structured prompt templates. At each node in the MCTS tree, the system uses a uniform template such as: “Please generate the next action based on the current reasoning history.” The current trajectory state is embedded into the prompt, guiding the model to produce the next step, which may take the form of either a structured instruction (e.g., extracting numerical values) or a natural language sub-question (e.g., “What materials are mentioned in the text?”). The five actions are defined as follows and illustrated with candidate trajectories in Fig. 6.

Fig. 6. For materials science queries, the generator explores multiple reasoning paths using Monte Carlo Tree Search (MCTS). Each trajectory represents a distinct sequence of reasoning actions (e.g., A3 → A3, A5 → A5, A4 → A5), composed of intermediate steps that collectively construct structured material–value–unit triples. All paths ultimately converge on the accurate extraction of key entities, such as CeO₂: 176.9 GPa; PrO₂: 176.9 GPa; and Th₀.₆Pr₀.₄O₂₋ₓ: 175 ± 12 GPa.

A1: Material Identification. This action enables the model to quickly identify key materials mentioned in the text. Leveraging the existing context, the LLM proposes the next step in reasoning, such as recognizing chemical substances or discovering material compositions. It guides the model to locate material entities like CeO₂, PrO₂, or (Th₀.₆Pr₀.₄)O₂₋ₓ, forming the foundation for downstream property extraction. For instance, in Trajectory 1 of Fig. 6, the model initiates the process by asking: “What materials are mentioned in the text?”

A2: Inference Completion and Property Extraction. Based on the material names identified in the previous step, the model is guided to complete the property extraction. Unlike full-chain reasoning, this action simplifies the process by prompting the model to finish the remaining inference steps and return structured outputs. In Fig. 6, the model extracts numerical values and units once the material name is known, yielding structured triplets.

A3: Problem Decomposition and Sub-question Exploration. This action decomposes complex property extraction tasks into a series of simpler sub-questions. The model is guided to sequentially resolve each sub-task, such as first identifying materials, then extracting values and units. In Trajectory 1 of Fig. 6, the model first answers “What materials are mentioned?”, and then follows up with “What are their corresponding values?”, demonstrating a compositional reasoning chain.

A4: Accuracy Enhancement via Reevaluation. When uncertainty or inconsistency is detected in previous steps, the model is prompted to reevaluate its answers using few-shot CoT (Chain-of-Thought) strategies. As shown in Trajectory 3, the model reassesses the extraction strategy and employs refined prompts to improve the precision of the resulting triplets.

A5: Problem Reformulation and Output Verification. When the model detects potential misinterpretation of the original query (e.g., missing units or malformed values), this action instructs it to restate the problem and verify the outputs accordingly. In Trajectory 2, the model is prompted with instructions like: “Ensure each triplet includes a material, a numerical value, and a unit.”, triggering problem reformulation and output validation.
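The action space and the uniform prompt template can be represented compactly. The sketch below is illustrative only: the action names and the template sentence are taken from the text, while the `Action` enum, the `build_prompt` helper, and the prompt layout are hypothetical and may differ from the released code.

```python
from enum import Enum
from typing import List


class Action(Enum):
    """The five human-inspired reasoning actions described above."""
    A1 = "Material Identification"
    A2 = "Inference Completion and Property Extraction"
    A3 = "Problem Decomposition and Sub-question Exploration"
    A4 = "Accuracy Enhancement via Reevaluation"
    A5 = "Problem Reformulation and Output Verification"


# Uniform instruction quoted in the text.
TEMPLATE = "Please generate the next action based on the current reasoning history."


def build_prompt(source_text: str, history: List[str]) -> str:
    """Embed the current trajectory state into the prompt (layout is assumed)."""
    lines = ["Text:", source_text, "", "Reasoning history:"]
    if history:
        lines += [f"Step {i}: {step}" for i, step in enumerate(history, start=1)]
    else:
        lines.append("(none)")
    lines += ["", "Available actions:"]
    lines += [f"{action.name}: {action.value}" for action in Action]
    lines += ["", TEMPLATE]
    return "\n".join(lines)


prompt = build_prompt(
    "The bulk modulus of CeO2 is 176.9 GPa.",
    ["What materials are mentioned in the text?"],
)
```

Keeping the template fixed and varying only the embedded history makes each tree node's prompt a deterministic function of its trajectory, which simplifies caching and replay.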

The MCTS process employs UCT-based node selection and expansion to balance exploration and exploitation, thereby selecting the most promising node for creating new branches. The standard Upper Confidence Bound for Trees (UCT) function is applied as follows:

\[{UCT}(s,a)=Q(s,a)+c\sqrt{\frac{\ln {N}_{{parent}}(s)}{N(s,a)}} \tag{6}\]

where \(Q(s,a)\) is the average reward obtained by taking action \(a\) in state \(s\), \({N}_{{parent}}(s)\) is the visit count of the parent node, \(N(s,a)\) is the visit count of the state–action pair, and \(c\) is the exploration coefficient.
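A minimal sketch of UCT-based selection follows, taking the Q-value as the average reward, as the text specifies for Eq. (6). The exploration coefficient of 1.4 and the convention that unvisited actions are tried first are common defaults assumed here, not values reported in the paper.

```python
import math
from typing import Dict, Hashable, Tuple


def uct(q: float, n_parent: int, n: int, c: float = 1.4) -> float:
    """UCT value: average reward plus an exploration bonus.

    q        -- average reward Q(s, a)
    n_parent -- visit count of the parent node
    n        -- visit count N(s, a)
    c        -- exploration coefficient (1.4 is an assumed default)
    """
    if n == 0:
        return math.inf  # assumed convention: explore unvisited actions first
    return q + c * math.sqrt(math.log(n_parent) / n)


def select_action(stats: Dict[Hashable, Tuple[float, int]],
                  n_parent: int, c: float = 1.4) -> Hashable:
    """Pick the action with the highest UCT value from {action: (q, n)}."""
    return max(stats, key=lambda a: uct(stats[a][0], n_parent, stats[a][1], c))
```

A larger `c` shifts the search toward rarely tried actions, while `c = 0` reduces selection to greedy exploitation of the current Q-values.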

Following node expansion, reward backpropagation and update procedures are executed. After each simulation, the terminal leaf node \({S}_{L}\) receives a scalar reward \({R}_{L}\), which reflects the overall quality of the generated reasoning path. This reward is then backpropagated to update the statistics of each visited state–action pair \(({s}_{i},{a}_{i})\) along the path. This involves updating the visit count:

\[N({s}_{i},{a}_{i})\leftarrow N({s}_{i},{a}_{i})+1 \tag{7}\]

and updating the accumulated reward:

\[W({s}_{i},{a}_{i})\leftarrow W({s}_{i},{a}_{i})+{R}_{L} \tag{8}\]

Here, \(N({s}_{i},{a}_{i})\) is the total number of times action \({a}_{i}\) has been taken from state \({s}_{i}\), and \(W({s}_{i},{a}_{i})\) is the total reward accumulated through all such simulations. The average reward (i.e., Q-value) used in Eq. (6) is computed as:

\[Q({s}_{i},{a}_{i})=\frac{W({s}_{i},{a}_{i})}{N({s}_{i},{a}_{i})} \tag{9}\]

In the material data extraction task, the terminal reward \({R}_{L}\) is determined by evaluating the completeness, format compliance, and correctness of the extracted triplets. This reward-guided backpropagation mechanism enables MCTS to learn which reasoning paths tend to yield higher-quality outputs, gradually guiding the search process to converge toward more reliable and informative candidate answers.
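The update rule described above is short enough to sketch directly. The `EdgeStats` container and the dictionary keyed by (state, action) pairs are illustrative choices; the terminal reward is passed in as a number because the text does not give the formula that combines completeness, format compliance, and correctness.

```python
from dataclasses import dataclass
from typing import Dict, Hashable, Sequence, Tuple

Edge = Tuple[Hashable, Hashable]  # a (state, action) pair


@dataclass
class EdgeStats:
    visits: int = 0            # N(s, a)
    total_reward: float = 0.0  # W(s, a)

    @property
    def q(self) -> float:
        """Average reward Q(s, a) = W(s, a) / N(s, a); 0.0 before any visit."""
        return self.total_reward / self.visits if self.visits else 0.0


def backpropagate(stats: Dict[Edge, EdgeStats],
                  path: Sequence[Edge], reward: float) -> None:
    """Add one visit and the terminal reward to every edge on the path."""
    for edge in path:
        entry = stats.setdefault(edge, EdgeStats())
        entry.visits += 1
        entry.total_reward += reward


stats: Dict[Edge, EdgeStats] = {}
path = [("root", "A1"), ("s1", "A2")]
backpropagate(stats, path, reward=1.0)      # a simulation that scored well
backpropagate(stats, path[:1], reward=0.0)  # a second simulation through A1 only
```

After these two simulations the shared first edge has an average reward of 0.5, while the edge visited only by the successful simulation keeps 1.0, so later selections favor the second step of the first trajectory.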

Discriminator-based validation and dual consistency mechanism

To improve the reliability of reasoning results, the SLM-MATRIX framework introduces a consistency verification strategy based on trajectory masking and reconstruction. This method deliberately masks key information in the reasoning path and then checks whether the model can logically recover it, thereby evaluating the internal consistency of the reasoning process in a more generalizable manner. Compared to judging only the final output, this strategy provides deeper insights into the stability and reasoning soundness of the model. A detailed workflow of the MCTS consistency verification process is available in Supplementary Note 7.

The validation procedure begins with N candidate reasoning trajectories generated by the MCTS-based Generator (with N = 3 in this study). For each trajectory, the system performs a deterministic, one-time masking of the final structured output, that is, the set of extracted material-property-unit triplets. The masking is conducted through explicit substitution, without introducing randomness in position or masking ratio, to ensure controllability and reproducibility. The masked trajectory (retaining the earlier reasoning steps) is then passed to the Discriminator, which is prompted to reconstruct the full structured output without access to the final conclusion. The Discriminator generates a reconstructed output denoted as \({S}_{d}^{{\prime} }\). The original trajectory

\[T=x\oplus {S}_{1}\oplus {S}_{2}\oplus \cdots \oplus {S}_{d} \tag{10}\]

is then compared with the reconstructed full path

\[{T}^{{\prime} }=x\oplus {S}_{1}\oplus {S}_{2}\oplus \cdots \oplus {S}_{d-1}\oplus {S}_{d}^{{\prime} } \tag{11}\]

to evaluate the model’s reasoning consistency and stability under incomplete information.
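The deterministic masking step can be sketched as follows. A trajectory is represented as a list of strings whose last element is the final structured output; the `[MASKED]` placeholder and the callable standing in for the Discriminator are hypothetical choices for illustration.

```python
from typing import Callable, List

MASK = "[MASKED]"  # assumed placeholder token for the hidden final output


def mask_final_step(trajectory: List[str]) -> List[str]:
    """Return a copy of the trajectory with only its final step replaced.

    The substitution is explicit and position-fixed (always the last step),
    so repeated calls on the same input give the same result.
    """
    if not trajectory:
        raise ValueError("trajectory must contain at least one step")
    return trajectory[:-1] + [MASK]


def reconstruct(trajectory: List[str],
                discriminator: Callable[[List[str]], str]) -> List[str]:
    """Ask the discriminator to fill in the masked final step."""
    masked = mask_final_step(trajectory)
    return masked[:-1] + [discriminator(masked)]


original = [
    "What materials are mentioned in the text?",
    "What are their corresponding values?",
    "CeO2, 176.9, GPa",
]
masked = mask_final_step(original)
```

Because the earlier steps are passed through unchanged, any difference between the original and reconstructed trajectories is confined to the final structured output, which is what the consistency score then measures.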

Regarding handling multi-attribute extraction, in the current MCTS-based trajectory generation mechanism, the final reasoning step \({S}_{d}\) typically attempts to aggregate all identified material entities and their associated triplets in a single output. Thus, in the masking stage, the system masks the entire aggregated result as a whole. Accordingly, the Discriminator is required to reconstruct all relevant property triplets based on the preceding reasoning steps. However, we observe that when the number of target attributes is large or when dependencies among attributes are complex, this whole-output masking and reconstruction strategy can significantly increase the difficulty of the task. To address this, we propose staged masking as a potential future improvement. This strategy decomposes the final output \({S}_{d}\) into attribute-level sub-conclusions and applies masking and reconstruction to each sub-output independently. This design is expected to reduce the reasoning burden per round, provide finer-grained consistency evaluation, and improve the model’s robustness and interpretability in complex extraction tasks.

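The consistency scoring mechanism evaluates the agreement between the original output \({S}_{d}\) and the reconstructed output \({S}_{d}^{{\prime} }\). The system extracts all triplets from both outputs and calculates the consistency score as:

\[{Consistency}\left({S}_{d},{S}_{d}^{{\prime} }\right)=\frac{\left|\{\text{triplets of }{S}_{d}\text{ correctly reconstructed in }{S}_{d}^{{\prime} }\}\right|}{\left|\{\text{triplets of }{S}_{d}\}\right|} \tag{12}\]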

A triplet is considered correctly reconstructed only if its numerical value and unit are exactly matched, and its material name is semantically equivalent. To avoid false negatives due to expression variations (e.g., chemical formulas vs. abbreviations), the system leverages the Discriminator to assess referential equivalence between material entities. If the original output is empty, a score of 0 is assigned—unless the reconstruction is also empty, in which case the result is considered Not Applicable.
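These matching rules can be sketched in a few lines. The score is taken here as the fraction of original triplets that are correctly reconstructed, which is one reading consistent with the empty-output rule above. In the framework the Discriminator judges whether two material names refer to the same entity; the whitespace-insensitive comparison below is a simplified, hypothetical stand-in, and the erroneous reconstruction in the example is invented for illustration.

```python
from typing import Callable, NamedTuple, Optional, Sequence


class Triplet(NamedTuple):
    material: str
    value: str  # kept as text so that forms like "175 ± 12" survive unchanged
    unit: str


def same_name(a: str, b: str) -> bool:
    """Stand-in equivalence test: ignore spaces but keep case (Co is not CO)."""
    return a.replace(" ", "") == b.replace(" ", "")


def consistency(original: Sequence[Triplet], reconstructed: Sequence[Triplet],
                same_material: Callable[[str, str], bool] = same_name
                ) -> Optional[float]:
    """Fraction of original triplets that were correctly reconstructed.

    Returns None (Not Applicable) when both outputs are empty and 0.0 when
    only the original output is empty.
    """
    if not original:
        return None if not reconstructed else 0.0
    matched = sum(
        any(o.value == r.value and o.unit == r.unit
            and same_material(o.material, r.material) for r in reconstructed)
        for o in original
    )
    return matched / len(original)


original = [Triplet("CeO2", "176.9", "GPa"),
            Triplet("PrO2", "176.9", "GPa"),
            Triplet("Th0.6Pr0.4O2-x", "175 ± 12", "GPa")]
reconstructed = [Triplet(" CeO2 ", "176.9", "GPa"),
                 Triplet("PrO2", "176.9", "MPa"),   # illustrative unit error
                 Triplet("Th0.6Pr0.4O2-x", "175 ± 12", "GPa")]
score = consistency(original, reconstructed)
```

Here two of the three original triplets are recovered, so the score is 2/3; the mismatched unit alone is enough to reject the PrO2 triplet under the exact-match rule.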

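For path selection and final trajectory determination, each candidate trajectory \(T\) receives a final score calculated by combining its MCTS reward and consistency score:

\[{{Score}}_{{final}}(T)=\alpha \cdot {{Reward}}_{{MCTS}}(T)+\beta \cdot {Consistency}\left({S}_{d},{S}_{d}^{{\prime} }\right) \tag{13}\]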

Here, \({{Reward}}_{{MCTS}}(T)\) reflects the relevance between the generated answer and the input text, and \({Consistency}\left({S}_{d},{S}_{d}^{{\prime} }\right)\) measures the logical consistency under masking. The weights \(\alpha\) and \(\beta\) balance the contributions of generation quality and reasoning consistency, respectively. The trajectory with the highest final score is selected as the final output. Fig. 7 presents two representative cases: Trajectory 1, where all material-property triplets are correctly reconstructed, yielding a high consistency score and labeled as Consistent; and Trajectory 2, where errors in extracting PrO₂ and ThO₂ attributes lead to a low consistency score and an Inconsistent label. These results demonstrate the practical effectiveness of the consistency verification mechanism in assessing reasoning robustness.
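Trajectory selection then reduces to a weighted sum and an argmax. In the sketch below the equal weights, the numeric rewards, and the decision to treat a Not Applicable consistency score as zero are all assumptions made for illustration; the paper does not report the weight values.

```python
from typing import NamedTuple, Optional, Sequence


class Candidate(NamedTuple):
    name: str
    mcts_reward: float
    consistency: Optional[float]  # None stands for "Not Applicable"


def final_score(candidate: Candidate, alpha: float = 0.5, beta: float = 0.5) -> float:
    """Weighted combination of MCTS reward and masking consistency."""
    cons = candidate.consistency if candidate.consistency is not None else 0.0
    return alpha * candidate.mcts_reward + beta * cons


def select_trajectory(candidates: Sequence[Candidate],
                      alpha: float = 0.5, beta: float = 0.5) -> Candidate:
    """Return the candidate trajectory with the highest final score."""
    return max(candidates, key=lambda c: final_score(c, alpha, beta))


# Illustrative values only: N = 3 candidate trajectories as in the study.
candidates = [
    Candidate("Trajectory 1", mcts_reward=0.8, consistency=1.0),
    Candidate("Trajectory 2", mcts_reward=0.9, consistency=0.33),
    Candidate("Trajectory 3", mcts_reward=0.7, consistency=None),
]
chosen = select_trajectory(candidates)
```

In this toy setting the fully consistent trajectory is chosen even though another candidate has a higher MCTS reward, which is the behavior the consistency term is meant to produce.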

Fig. 7. This figure illustrates the consistency evaluation between the original reasoning path generated by the generator and the reconstructed path produced by the discriminator after masking the final structured output. Trajectory 1 is classified as “Consistent” due to alignment with the original content, while Trajectory 2 is marked as “Inconsistent” due to mismatched values for PrO₂ and ThO₂. The results highlight the discriminator’s effectiveness in detecting semantic deviations and ensuring output reliability.

To ensure the rigor of the final output, the SLM-MATRIX framework incorporates a mechanism for answer consistency verification and final path selection. This involves cross-verification between the MoA and Generator–Discriminator paths, where the outputs generated by the Mixture-of-Agents (MoA) path and the Generator–Discriminator (G–D) path are compared. If the material names, numerical values, and units are all consistent, the outputs are considered structurally equivalent, as illustrated in Fig. 8. For iterative refinement and establishing termination criteria, if substantial differences are detected in key fields (e.g., mismatched values or missing attributes), an iterative optimization process is triggered. This process reinitiates the cross-verification mechanism and re-runs the MoA collaborative reasoning path. If inconsistency remains, iteration continues up to a maximum of K rounds. If consistency is not achieved within K iterations, the system enters a manual adjudication mode. Finally, a standardized output format is used, where the final answer is presented in a unified format consisting of material name, property value, and unit (see Fig. 8).
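The verification loop can be sketched as below. The two paths are passed in as callables (hypothetical stand-ins for the MoA and G–D pipelines), triplets are compared as sets after trimming whitespace, and the default of three rounds follows the three verification rounds mentioned in the Discussion; whether the first comparison counts as a round is an assumption of this sketch.

```python
from typing import Callable, FrozenSet, Iterable, NamedTuple, Optional, Tuple

Triplet = Tuple[str, str, str]  # (material name, property value, unit)
Path = Callable[[str], Iterable[Triplet]]


def normalize(triplets: Iterable[Triplet]) -> FrozenSet[Triplet]:
    """Trim whitespace only; case is preserved because it matters in formulas and units."""
    return frozenset((m.strip(), v.strip(), u.strip()) for m, v, u in triplets)


class Verdict(NamedTuple):
    answer: Optional[FrozenSet[Triplet]]
    rounds: int
    needs_human: bool


def cross_verify(text: str, run_moa: Path, run_gd: Path, max_rounds: int = 3) -> Verdict:
    """Compare both paths, re-running MoA until they agree or K rounds pass."""
    gd_output = normalize(run_gd(text))
    for round_no in range(1, max_rounds + 1):
        moa_output = normalize(run_moa(text))
        if moa_output == gd_output:
            # Names, values, and units all match: return the unified answer.
            return Verdict(moa_output, round_no, needs_human=False)
    # No agreement within K rounds: hand over to manual adjudication.
    return Verdict(None, max_rounds, needs_human=True)
```

Returning the round count alongside the answer makes it straightforward to report how often samples converge immediately, after refinement, or only with human intervention.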

Fig. 8. This figure illustrates the final consistency verification stage in the extraction of material triples. It compares the outputs of the discriminator, the MoA (consistency-based) model, and the integrated framework. All methods converge on the same two triples for the O–BN material, confirming the correctness and consistency of the extracted results.

Data availability

The datasets analyzed during the current study are publicly available. The BulkModulus dataset was sourced from Polak et al. The MatSynTriplet dataset was refined from the Materials Science Procedural Text Corpus (MSPTC) as introduced by Mysore et al. The general mathematical reasoning datasets used include GSM8K, SVAMP, GSM-Hard, and MATH. All other data used in this study were derived from previously published research articles, which are cited accordingly within the manuscript.

Code availability

The code developed to reproduce the findings of this study is openly available in a public repository at https://github.com/AmberGTP5/SLM-MATRIX. This repository represents the version of the code used for this publication. The codebase is part of a larger, ongoing research project and may be updated in the future. The code relies on third-party models accessed via APIs, and the availability of these specific models may change over time. The repository’s documentation includes detailed instructions for running the code with the original model identifiers and provides guidance on adapting it to currently available alternatives.

References

Zhang, S. et al. OPT: Open Pre-trained Transformer Language Models. Preprint at https://doi.org/10.48550/arXiv.2205.01068 (2022).

Chowdhery, A. et al. PaLM: Scaling Language Modeling with Pathways. J. Mach. Learn. Res. 24, 1–113 (2023).

Touvron, H. et al. LLaMA: Open and Efficient Foundation Language Models. Preprint at https://doi.org/10.48550/arXiv.2302.13971 (2023).

Brown, T. et al. Language Models are Few-Shot Learners. in Advances in Neural Information Processing Systems vol. 33 1877–1901 (Curran Associates, Inc., 2020).

OpenAI et al. GPT-4 Technical Report. Preprint at https://doi.org/10.48550/arXiv.2303.08774 (2024).

Ouyang, L. et al. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 35, 27730–27744 (2022).

Kim, E. et al. Materials Synthesis Insights from Scientific Literature via Text Extraction and Machine Learning. Chem. Mater. 29, 9436–9444 (2017).

Mavračić, J., Court, C. J., Isazawa, T., Elliott, S. R. & Cole, J. M. ChemDataExtractor 2.0: Autopopulated Ontologies for Materials Science. J. Chem. Inf. Model. 61, 4280–4289 (2021).

Polak, M. P. & Morgan, D. Extracting accurate materials data from research papers with conversational language models and prompt engineering. Nat. Commun. 15, 1569 (2024).

Vaswani, A. et al. Attention is All you Need. In Advances in Neural Information Processing Systems vol. 30 (Curran Associates, Inc., 2017).

Wang, X. et al. Self-Consistency Improves Chain of Thought Reasoning in Language Models. In: The Eleventh International Conference on Learning Representations Preprint at https://doi.org/10.48550/arXiv.2203.11171 (2023).

Gururangan, S. et al. Don’t Stop Pretraining: Adapt Language Models to Domains and Tasks. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics 8342–8360 (2020).

Wei, J. et al. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 35, 24824–24837 (2022).

Valmeekam, K., Olmo, A., Sreedharan, S. & Kambhampati, S. Large Language Models Still Can’t Plan (A Benchmark for LLMs on Planning and Reasoning about Change). (2022).

Alayrac, J.-B. et al. Flamingo: a Visual Language Model for Few-Shot Learning. Adv. Neural Inf. Process. Syst. 35, 23716–23736 (2022).

Wang, J., Wang, J., Athiwaratkun, B., Zhang, C. & Zou, J. Mixture-of-Agents Enhances Large Language Model Capabilities. (2024).

Qi, Z. et al. Mutual Reasoning Makes Smaller LLMs Stronger Problem-Solvers. CoRR Preprint at https://doi.org/10.48550/arXiv.2408.06195 (2024).

Goodfellow, I. J. et al. Generative Adversarial Nets. in Advances in Neural Information Processing Systems vol. 27 (Curran Associates, Inc., 2014).

Mysore, S. et al. The Materials Science Procedural Text Corpus: Annotating Materials Synthesis Procedures with Shallow Semantic Structures. in Proceedings of the 13th Linguistic Annotation Workshop (eds. Friedrich, A., Zeyrek, D. & Hoek, J.) 56–64 (Association for Computational Linguistics, Florence, Italy, 2019). https://doi.org/10.18653/v1/W19-4007

Cobbe, K. et al. Training Verifiers to Solve Math Word Problems. Preprint at https://doi.org/10.48550/arXiv.2110.14168 (2021).

Patel, A., Bhattamishra, S. & Goyal, N. Are NLP Models really able to Solve Simple Math Word Problems? in Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies 2080–2094 (2021).

Gao, L. et al. PAL: Program-aided Language Models. in Proceedings of the 40th International Conference on Machine Learning 10764–10799 (PMLR, 2023).

Hendrycks, D. et al. Measuring Mathematical Problem Solving With the MATH Dataset. in Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 2). Preprint at https://doi.org/10.48550/arXiv.2103.03874 (2021).

Yao, S. et al. Tree of Thoughts: Deliberate Problem Solving with Large Language Models. Adv. Neural Inf. Process. Syst. 36, 11809–11822 (2023).

Hao, S. et al. Reasoning with Language Model is Planning with World Model. in Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing 8154–8173 (2023).

Acknowledgements

Not applicable.

Author information

Contributions

X.L. and Z.H. conceived the initial idea and designed the framework. X.L., Z.H., S.Q., and C.P. developed the methodology and performed the experiments. X.L. and Z.H. analyzed the results and wrote the initial draft of the manuscript. S.Q. and C.P. contributed to data curation and software implementation. X.M. supervised the project, provided critical feedback, and revised the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, X., Huang, Z., Quan, S. et al. SLM-MATRIX: a multi-agent trajectory reasoning and verification framework for enhancing language models in materials data extraction. npj Comput Mater 11, 241 (2025). https://doi.org/10.1038/s41524-025-01719-x

DOI: https://doi.org/10.1038/s41524-025-01719-x