Abstract

Photonic computing has emerged as a promising next-generation processor technology, and diffraction-based architectures show particular potential for large-scale parallel processing. However, the lack of on-chip reconfigurability poses significant obstacles to general-purpose computing, restricting the adaptability of these architectures to diverse advanced applications. Here we propose a diffractive tensorized unit (DTU), a fully reconfigurable photonic processor that supports million-TOPS general-purpose computing. The DTU uses a tensor factorization approach to perform complex matrix multiplication through clustered diffractive tensor cores, and each diffractive tensor core employs a near-core modulation mechanism to activate dynamic temporal diffractive connections. Experiments confirm that the DTU overcomes the long-standing generality and scalability constraints of diffractive computing, realizing general-purpose computing with a mean absolute error of 10⁻⁶ for arbitrary matrix multiplications of size 1,024. Compared with state-of-the-art solutions, the DTU not only achieves competitive accuracy on challenging tasks such as natural language generation and cross-modal recognition but also delivers a 1,000× improvement in computing throughput over conventional electronic processors. The proposed DTU represents a leap forward in general-purpose photonic computing, paving the way for further advances in large-scale artificial intelligence.
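The abstract does not specify the DTU's factorization or its optical mapping. As a purely software illustration of the general idea, a large matrix–vector product can be carried out through a chain of small factorized cores instead of one monolithic matrix. The sketch below uses the standard tensor-train (TT) matrix format with TT-SVD (Oseledets, "Tensor-train decomposition"). It then checks the result with the same metric the abstract reports, the mean absolute error (MAE).

This is not the authors' method. The function names `tt_matrix_decompose` and `tt_matvec` are hypothetical, and the 4 × 4 × 4 mode split of a 64 × 64 matrix is an arbitrary assumption chosen to keep the example small.

```python
import numpy as np


def tt_matrix_decompose(W, row_dims, col_dims, max_rank=None):
    """Factorize W (prod(row_dims) x prod(col_dims)) into TT-matrix cores.

    Illustrative TT-SVD: W is reshaped into a tensor with paired (row, column)
    modes and split by successive SVDs. Core k has shape (r_{k-1}, m_k, n_k, r_k).
    With max_rank=None nothing is truncated, so the factorization is exact up
    to floating-point error.
    """
    d = len(row_dims)
    T = W.reshape(*row_dims, *col_dims)
    # Interleave modes: (m1..md, n1..nd) -> (m1, n1, m2, n2, ..., md, nd)
    T = T.transpose([i for k in range(d) for i in (k, d + k)])
    cores, rank, C = [], 1, T
    for k in range(d - 1):
        C = C.reshape(rank * row_dims[k] * col_dims[k], -1)
        U, S, Vt = np.linalg.svd(C, full_matrices=False)
        r = len(S) if max_rank is None else min(max_rank, len(S))
        cores.append(U[:, :r].reshape(rank, row_dims[k], col_dims[k], r))
        C = S[:r, None] * Vt[:r]  # carry the remainder to the next core
        rank = r
    cores.append(C.reshape(rank, row_dims[-1], col_dims[-1], 1))
    return cores


def tt_matvec(cores, x):
    """Compute y = W @ x core by core, without rebuilding W."""
    # Z[p, r, q]: p = row modes produced so far, r = current TT rank,
    # q = column modes not yet contracted (flattened, C order)
    Z = x.reshape(1, 1, -1)
    for G in cores:
        r_prev, m, n, r_next = G.shape
        P = Z.shape[0]
        Z = Z.reshape(P, r_prev, n, -1)         # split off column mode j_k
        Z = np.einsum("prnq,rmns->pmsq", Z, G)  # contract rank and j_k
        Z = Z.reshape(P * m, r_next, -1)        # append row mode i_k
    return Z.reshape(-1)


# Usage: a random 64 x 64 matrix split as (4, 4, 4) x (4, 4, 4).
# 1,024 = 4**5 would work the same way with five modes per side.
rng = np.random.default_rng(0)
row_dims = col_dims = (4, 4, 4)
W = rng.standard_normal((64, 64))
x = rng.standard_normal(64)
cores = tt_matrix_decompose(W, row_dims, col_dims)
y_tt = tt_matvec(cores, x)
mae = np.mean(np.abs(y_tt - W @ x))
print(f"MAE: {mae:.1e}")
```

Without truncation the printed MAE sits at floating-point level, well below 10⁻⁶. Passing a small `max_rank` shrinks the cores and the parameter count. A random `W` is not low-rank, though, so truncation raises the error. Matrices with structure compress far better.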

Data availability

All data required to evaluate the conclusions of this study are presented in the Article or its Supplementary Information. The data are also available from Dryad at https://doi.org/10.5061/dryad.7d7wm387c (ref. 67). The DTU test sample can be provided upon signing of a material transfer agreement.

Code availability

All relevant code is available from the corresponding author upon reasonable request.

References

Jaeger, H., Noheda, B. & van der Wiel, W. G. Toward a formal theory for computing machines made out of whatever physics offers. Nat. Commun. 14, 4911 (2023).

Brunner, D. & Psaltis, D. Competitive photonic neural networks. Nat. Photon. 15, 323–324 (2021).

Huang, C. et al. Prospects and applications of photonic neural networks. Adv. Phys. X 7, 1981155 (2022).

Fang, L. et al. Engram-driven videography. Engineering 25, 101–109 (2023).

McMahon, P. L. The physics of optical computing. Nat. Rev. Phys. 5, 717–734 (2023).

Shastri, B. J. et al. Photonics for artificial intelligence and neuromorphic computing. Nat. Photon. 15, 102–114 (2021).

Xue, Z. et al. Fully forward mode training for optical neural networks. Nature 632, 280–286 (2024).

Shen, Y. et al. Deep learning with coherent nanophotonic circuits. Nat. Photon. 11, 441–446 (2017).

Meng, X. et al. Compact optical convolution processing unit based on multimode interference. Nat. Commun. 14, 3000 (2023).

Feldmann, J. et al. Parallel convolutional processing using an integrated photonic tensor core. Nature 589, 52–58 (2021).

Ashtiani, F., Geers, A. J. & Aflatouni, F. An on-chip photonic deep neural network for image classification. Nature 606, 501–506 (2022).

Fyrillas, A., Faure, O., Maring, N., Senellart, J. & Belabas, N. Scalable machine learning-assisted clear-box characterization for optimally controlled photonic circuits. Optica 11, 427 (2024).

Wetzstein, G. et al. Inference in artificial intelligence with deep optics and photonics. Nature 588, 39–47 (2020).

Zhou, H. et al. Photonic matrix multiplication lights up photonic accelerator and beyond. Light Sci. Appl. 11, 30 (2022).

Lin, X. et al. All-optical machine learning using diffractive deep neural networks. Science 361, 1004–1008 (2018).

Zhou, T. et al. Large-scale neuromorphic optoelectronic computing with a reconfigurable diffractive processing unit. Nat. Photon. 15, 367–373 (2021).

Ambrogio, S. et al. An analog-AI chip for energy-efficient speech recognition and transcription. Nature 620, 768–775 (2023).

Liu, C. et al. A programmable diffractive deep neural network based on a digital-coding metasurface array. Nat. Electron. 5, 113–122 (2022).

Wu, T., Menarini, M., Gao, Z. & Feng, L. Lithography-free reconfigurable integrated photonic processor. Nat. Photon. 17, 710–716 (2023).

Zuo, C. & Chen, Q. Exploiting optical degrees of freedom for information multiplexing in diffractive neural networks. Light Sci. Appl. 11, 208 (2022).

Zhang, Z. et al. Space–time projection enabled ultrafast all‐optical diffractive neural network. Laser Photon. Rev. 18, 2301367 (2024).

Luo, Y. et al. Design of task-specific optical systems using broadband diffractive neural networks. Light Sci. Appl. 8, 112 (2019).

Luo, X. et al. Metasurface-enabled on-chip multiplexed diffractive neural networks in the visible. Light Sci. Appl. 11, 158 (2022).

Kulce, O., Mengu, D., Rivenson, Y. & Ozcan, A. All-optical information-processing capacity of diffractive surfaces. Light Sci. Appl. 10, 25 (2021).

Hu, J. et al. Diffractive optical computing in free space. Nat. Commun. 15, 1525 (2024).

Rahman, M. S. S., Yang, X., Li, J., Bai, B. & Ozcan, A. Universal linear intensity transformations using spatially incoherent diffractive processors. Light Sci. Appl. 12, 195 (2023).

Kulce, O., Mengu, D., Rivenson, Y. & Ozcan, A. All-optical synthesis of an arbitrary linear transformation using diffractive surfaces. Light Sci. Appl. 10, 196 (2021).

Cheng, Y. et al. Photonic neuromorphic architecture for tens-of-task lifelong learning. Light Sci. Appl. 13, 56 (2024).

Xu, Z. et al. Large-scale photonic chiplet Taichi empowers 160-TOPS/W artificial general intelligence. Science 384, 202–209 (2024).

Gu, T., Kim, H. J., Rivero-Baleine, C. & Hu, J. Reconfigurable metasurfaces towards commercial success. Nat. Photon. 17, 48–58 (2023).

Yao, Y., Wei, Y., Dong, J., Li, M. & Zhang, X. Large-scale reconfigurable integrated circuits for wideband analog photonic computing. Photonics 10, 300 (2023).

Nemati, A., Wang, Q., Hong, M. H. & Teng, J. H. Tunable and reconfigurable metasurfaces and metadevices. Opto-Electron. Adv. 1, 1–25 (2018).

Qu, Y., Lian, H., Ding, C., Liu, H. & Liu, L. High-frame-rate reconfigurable diffractive neural network based on superpixels. Opt. Lett. 48, 1–4 (2023).

Yang, G. et al. Nonlocal phase-change metaoptics for reconfigurable nonvolatile image processing. Light Sci. Appl. 14, 182 (2025).

Dinsdale, N. J. et al. Deep learning enabled design of complex transmission matrices for universal optical components. ACS Photonics 8, 283–295 (2021).

Li, Q., Sun, Y. & Zhang, X. Single-layer universal optical computing. Phys. Rev. A 109, 053527 (2024).

Giamougiannis, G. et al. A coherent photonic crossbar for scalable universal linear optics. J. Light. Technol. 41, 2425–2442 (2023).

Yang, Y., Krompass, D. & Tresp, V. Tensor-train recurrent neural networks for video classification. In Proc. 34th International Conference on Machine Learning https://proceedings.mlr.press/v70/yang17e/yang17e.pdf (PMLR, 2017).

Cheng, Y., Li, G., Wong, N., Chen, H. & Yu, H. DEEPEYE: a deeply tensor-compressed neural network for video comprehension on terminal devices. ACM Trans. Embed. Comput. Syst. 19, 1–25 (2020).

Miscuglio, M. & Sorger, V. J. Photonic tensor cores for machine learning. Appl. Phys. Rev. 7, 031404 (2020).

Wang, Y. et al. An energy-efficient nonvolatile in-memory computing architecture for extreme learning machine by domain-wall nanowire devices. IEEE Trans. Nanotechnol. 14, 998–1012 (2015).

Cheng, Y., Wang, C., Chen, H.-B. & Yu, H. A large-scale in-memory computing for deep neural network with trained quantization. Integration 69, 345–355 (2019).

Krizhevsky, A. et al. Learning multiple layers of features from tiny images. University of Toronto https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf (2009).

Deng, J. et al. ImageNet: a large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, 2009); https://doi.org/10.1109/CVPR.2009.5206848

Oseledets, I. V. Tensor-train decomposition. SIAM J. Sci. Comput. 33, 2295–2317 (2011).

Cheng, Y., Yang, Y., Chen, H.-B., Wong, N. & Yu, H. S3-Net: a fast scene understanding network by single-shot segmentation for autonomous driving. ACM Trans. Intell. Syst. Technol. 12, 1–19 (2021).

de Saint-Exupéry, A. The Little Prince and Letter to a Hostage (Penguin UK, 2021).

Rong, X. word2vec parameter learning explained. Preprint at https://arxiv.org/abs/1411.2738 (2014).

Graves, A., Jaitly, N. & Mohamed, A. Hybrid speech recognition with Deep Bidirectional LSTM. In 2013 IEEE Workshop on Automatic Speech Recognition and Understanding 273–278 (IEEE, 2013); https://doi.org/10.1109/ASRU.2013.6707742

Gesmundo, A. & Dean, J. An evolutionary approach to dynamic introduction of tasks in large-scale multitask learning systems. Preprint at https://arxiv.org/abs/2205.12755 (2022).

Plath, J., Sinclair, G. & Curnutt, K. The 100 Greatest Literary Characters (Bloomsbury, 2019).

Carroll, L. Alice’s Adventures in Wonderland (Broadview Press, 2011).

Baum, L. F. The Wonderful Wizard of Oz (Broadview Press, 2024).

Abdi, H. & Williams, L. J. Principal component analysis. WIREs Comput. Stat. 2, 433–459 (2010).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 770–778 (IEEE, 2016).

Wang, B. Dataset for couplets. GitHub https://github.com/wb14123/couplet-dataset (2018).

michaelarman. Poems Dataset (NLP). Kaggle https://www.kaggle.com/datasets/michaelarman/poemsdataset (2020).

Karvelis, P., Gavrilis, D., Georgoulas, G. & Stylios, C. Topic recommendation using Doc2Vec. In 2018 International Joint Conference on Neural Networks (IJCNN) 1–6 (IEEE, 2018); https://doi.org/10.1109/IJCNN.2018.8489513

Chen, D. & Dolan, W. Collecting highly parallel data for paraphrase evaluation. In Proc. 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies (eds Lin, D. et al.) 190–200 (Association for Computational Linguistics, 2011).

Abu-El-Haija, S. et al. YouTube-8M: a large-scale video classification benchmark. Preprint at https://arxiv.org/abs/1609.08675 (2016).

Yang, A. et al. Vid2Seq: large-scale pretraining of a visual language model for dense video captioning. Preprint at https://arxiv.org/abs/2302.14115 (2023).

Liang, Y., Zhu, L., Wang, X. & Yang, Y. IcoCap: improving video captioning by compounding images. IEEE Trans. Multimed. 26, 4389–4400 (2024).

Xu, J., Mei, T., Yao, T. & Rui, Y. MSR-VTT: a large video description dataset for bridging video and language. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 5288–5296 (IEEE, 2016); https://doi.org/10.1109/CVPR.2016.571

Schuldt, C., Laptev, I. & Caputo, B. Recognizing human actions: a local SVM approach. In Proc. 17th International Conference on Pattern Recognition (ICPR 2004) (IEEE, 2004); https://doi.org/10.1109/ICPR.2004.1334462

Srivastava, N., Mansimov, E. & Salakhutdinov, R. Unsupervised learning of video representations using LSTMs. Preprint at https://arxiv.org/abs/1502.04681 (2015).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998).

Wang, C. et al. Diffractive tensorized unit for million-TOPS general-purpose computing. Dryad https://doi.org/10.5061/dryad.7d7wm387c (2025).

Acknowledgements

This work is supported in part by the National Science and Technology Major Project (contract no. 2021ZD0109903), in part by the Natural Science Foundation of China (NSFC) (contract nos. 62125106, 62407026 and 62205176), in part by the Beijing Outstanding Young Scientist Program (contract no. JWZQ20240101009) and in part by the XPLORER PRIZE.

Author information

Contributions

L.F. initiated the project. L.F. and Q.D. supervised the project. L.F. and C.W. conceived the idea. C.W. designed the photonic integrated circuit. Y.C. performed the simulations. Z.X., C.W. and Y.C. constructed the experimental system and conducted the on-chip task validations. All the authors analysed the results. C.W., Y.C., Z.X. and L.F. prepared the manuscript with input from all the authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Photonics thanks Shengxi Huang and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

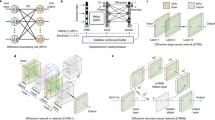

Extended Data Fig. 1 Modeling analysis and physical architecture of a DTC.

a, Schematic structure and b, mathematical expression of on-chip modulation technologies. c, Physical architecture of the proposed DTC, with the analysis in the inset representing two classical applications of optical devices, as preliminarily validated by the numerical simulations in d and e.

Extended Data Fig. 2 Chip layout and CMOS-compatible fabrication.

a, Chip designed with commercially available processes (29 layout layers). b, Layout of the CMOS-compatible chip on the silicon-on-insulator (SOI) platform. c, Passive process layers, including the designs of optical devices, and d, active process layers, including the designs of electrical devices; parts of the fabricated chip are shown in optical photographs.

Extended Data Fig. 3 Integrated optoelectronic chip testing platform.

a, Constructed multifunctional experimental platform, which supports both calibration and validation of the chip. b, Example collection of the infrared spots from the OMA through the spy GCs. c, Example collection of the converted voltages from the PDA, obtained through the signal collector. d, Developed software for setup integration and chip testing. e, Key parts of the highly integrated experimental setup. (T-laser, tunable laser; EDFA, erbium-doped fiber amplifier; OPC, optical polarization controller; FA, fiber array; EC, edge coupler; OSP, optical splitter; GCA, grating coupler array; OMA, optical modulator array; DONN, diffractive optical neural network; PDA, photon detector array; PIC, photonic integrated circuit; NIR, near-infrared spectroscopy; IVC, current–voltage converter; TIA, transimpedance amplifier; MVS, multichannel voltage signal source.)

Extended Data Fig. 4 Detailed computational framework of benchmark models with the best settings for the DTU.

Computing network structures for a, word prediction, b, image classification and c, video captioning tasks. Generally, the structure contains multimode computational blocks for diffractive propagation, including a one-dimensional tensor core, a two-dimensional tensor chain, a three-dimensional tensor array and a four-dimensional tensor cluster. Each basic block performs near-core modulation with recurrent flows and tensor connections, which are configured with flexible input and output parameters.

Supplementary information

Supplementary Information

Supplementary Notes 1–20, Supplementary Figs. 1–17 and Supplementary Tables 1–4.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, C., Cheng, Y., Xu, Z. et al. Diffractive tensorized unit for million-TOPS general-purpose computing. Nat. Photon. 19, 1078–1087 (2025). https://doi.org/10.1038/s41566-025-01749-3

DOI: https://doi.org/10.1038/s41566-025-01749-3