Abstract

High-resolution tissue imaging is often compromised by sample-induced optical aberrations that degrade resolution and contrast. Although wavefront sensor-based adaptive optics (AO) can measure these aberrations, such hardware solutions are typically complex, expensive to implement and slow when serially mapping spatially varying aberrations across large fields of view. Here we introduce AOViFT (adaptive optical vision Fourier transformer)—a machine learning-based aberration sensing framework built around a three-dimensional multistage vision transformer that operates on Fourier domain embeddings. AOViFT infers aberrations and restores diffraction-limited performance in puncta-labeled specimens with substantially reduced computational cost, training time and memory footprint compared to conventional architectures or real-space networks. We validated AOViFT on live gene-edited zebrafish embryos, demonstrating its ability to correct spatially varying aberrations using either a deformable mirror or postacquisition deconvolution. By eliminating the need for a guide star and wavefront sensing hardware and simplifying the experimental workflow, AOViFT lowers technical barriers for high-resolution volumetric microscopy across diverse biological samples.


Main

As we peer deeper into living organisms to reveal their inner workings, our view is increasingly compromised by sample-induced optical aberrations. Numerous AO methods compensate for these aberrations with a wavefront shaping device driven by a measurement of the sample-induced aberration1. These methods differ in their complexity, generality, robustness and practicality. In our laboratory, we have had dependable success using a Shack–Hartmann (SH) sensor to measure the aberrations imparted on a guide star (GS) created by two-photon excitation (TPE) fluorescence within the specimen2, and we have used this approach extensively in adaptive optical (AO) lattice light sheet (LLS) microscopy (AO-LLSM) to study four-dimensional (4D) subcellular dynamic processes within the native environment of whole multicellular organisms3.

Several recent approaches dispense with the cost and complexity of hardware-based wavefront measurement in favor of directly inferring aberrations from the microscope images themselves through machine learning (ML)4,5,6,7,8 (Supplementary Table 1). Based on our experience with a variety of specimens, any ML-AO approach suitable for AO-LLSM must meet the following specifications:

(1) Speed: to maximize the range of spatiotemporal events that can be visualized, the time for the ML model to infer the aberrations across any volume should be less than the time needed to image it, typically a few seconds in LLSM for a volume that encompasses a handful of cells.

(2) Robustness: the model must accurately predict the vast majority of aberrations encountered in practice; for AO-LLSM in zebrafish embryos, these are typically up to 5λ peak-to-valley (P–V), where λ is the free-space wavelength, in any combination of the first 15 Zernike modes (\({Z}_{0}^{\,0}\) through \({Z}_{4}^{\,\pm 4}\); Supplementary Fig. 1).

(3) Accuracy: the method should be able to recover close to the theoretical three-dimensional (3D) resolution limits of the microscope, regardless of the distribution of spatial frequencies within the specimen.

(4) Noninvasiveness: the method should provide accurate correction without unduly depleting the fluorescence photon budget within the specimen or perturbing its native physiology.
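The mode range in specification (2) is easiest to pin down with a single-index convention. The following is a minimal Python sketch, assuming ANSI/OSA ordering, j = (n(n + 2) + m)/2 (our choice of convention; the text only names the modes). Under this ordering the first 15 modes (j = 0 to 14) are exactly the radial orders n ≤ 4, Mode 14 is \({Z}_{4}^{\,4}\), and removing piston, tip, tilt and defocus leaves 11 modes. The helper names are illustrative.

```python
# Map between the ANSI/OSA single index j and the double index (n, m).
# Assumes ANSI Z80.28 ordering: j = (n * (n + 2) + m) / 2.


def ansi_index(n: int, m: int) -> int:
    """Return the ANSI/OSA single index j for Zernike mode Z_n^m."""
    if n < 0 or abs(m) > n or (n - m) % 2:
        raise ValueError(f"invalid Zernike indices n={n}, m={m}")
    return (n * (n + 2) + m) // 2


def nm_from_ansi(j: int) -> tuple[int, int]:
    """Inverse mapping: ANSI index j -> (n, m)."""
    n = 0
    while (n + 1) * (n + 2) // 2 <= j:  # find the radial order containing j
        n += 1
    return n, 2 * j - n * (n + 2)


# The first 15 modes (j = 0..14) span radial orders n = 0..4.
FIRST_15 = [nm_from_ansi(j) for j in range(15)]

# Piston, tip, tilt and defocus are excluded from correction.
EXCLUDED = {(0, 0), (1, -1), (1, 1), (2, 0)}
CORRECTABLE = [nm for nm in FIRST_15 if nm not in EXCLUDED]

print(FIRST_15[14], len(CORRECTABLE))  # (4, 4) 11
```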

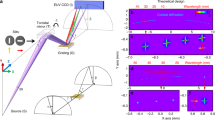

As none of the aforementioned ML-AO methods meet all these specifications, we endeavored to create one better suited to the needs of AO-LLSM. Our baseline model architecture, selected from an ablation study (Supplementary Note A, Supplementary Figs. 2–7), contains two transformer stages with patches of 32 and 16 pixels, respectively (Fig. 1c).

a, AOViFT correction. An aberrated 3D volume is preprocessed and cast into a Fourier embedding, which is passed to a 3D vision transformer model to predict the detection wavefront. A DM compensates for this aberration, enabling acquisition of a corrected volume (D, depth; H, height; W, width). b, The Fourier embedding, \({\mathcal{E}}\). The Fourier transform of the 3D volume is embedded into a lower-dimensional space (\({\mathcal{E}}\in {{\mathbb{R}}}^{\ell \times d\times d}\)), consisting of three amplitude planes (α1, α2, α3) and three phase planes (φ1, φ2, φ3), each of size d × d, where d is the Fourier embedding size. c, AOViFT model. The Fourier embedding is input to a dual-stage 3D vision transformer model. At each stage, the ℓ Fourier planes are tiled into k patches (Patchify), and a radially encoded positional embedding is applied to each patch. These patches are passed through n Transformer layers. At the end of each stage, a residual connection is added, and the patches are merged back to the shape matching the stage input (Merge patches). After all stages, the resulting patches are pooled (GlobalAvgPool) and connected with a dense layer to output the z Zernike coefficients.
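The caption does not specify how the 3D spectrum is reduced to six planes. As a hypothetical illustration only, the sketch below takes the amplitude and phase of the three central orthogonal slices of the centered 3D FFT, crops them to d × d, and then tiles the planes into non-overlapping patches and reassembles them, mirroring the Patchify and Merge patches steps. It is not the AOViFT preprocessing: the slice choice, the per-plane amplitude normalization and d = 64 are our assumptions, and the radial positional embedding and transformer layers are omitted.

```python
import numpy as np


def fourier_embedding(volume: np.ndarray, d: int) -> np.ndarray:
    """Hypothetical (6, d, d) embedding: amplitude and phase of the three
    central orthogonal slices (XY, XZ, YZ) of the centered 3D FFT."""
    D, H, W = volume.shape
    if min(D, H, W) < d:
        raise ValueError("volume must be at least d voxels along each axis")
    f = np.fft.fftshift(np.fft.fftn(volume))
    cz, cy, cx = D // 2, H // 2, W // 2
    slices = [f[cz, :, :], f[:, cy, :], f[:, :, cx]]  # XY, XZ, YZ planes

    def crop(plane):
        r0 = plane.shape[0] // 2 - d // 2
        c0 = plane.shape[1] // 2 - d // 2
        return plane[r0:r0 + d, c0:c0 + d]

    planes = [crop(s) for s in slices]
    amps = [np.abs(p) / (np.abs(p).max() + 1e-12) for p in planes]  # alpha_1..3
    phases = [np.angle(p) for p in planes]                           # phi_1..3
    return np.stack(amps + phases).astype(np.float32)


def patchify(embedding: np.ndarray, p: int) -> np.ndarray:
    """Tile each of the l planes into p x p patches -> (l * k, p * p), k = (d // p) ** 2."""
    l, d, _ = embedding.shape
    if d % p:
        raise ValueError("patch size must divide the embedding size")
    g = d // p
    x = embedding.reshape(l, g, p, g, p).transpose(0, 1, 3, 2, 4)
    return x.reshape(l * g * g, p * p)


def merge_patches(patches: np.ndarray, l: int, d: int) -> np.ndarray:
    """Inverse of patchify: reassemble patches into shape (l, d, d)."""
    p = int(round(np.sqrt(patches.shape[1])))
    g = d // p
    x = patches.reshape(l, g, g, p, p).transpose(0, 1, 3, 2, 4)
    return x.reshape(l, d, d)


rng = np.random.default_rng(0)
emb = fourier_embedding(rng.random((64, 64, 64)), d=64)
print(emb.shape, patchify(emb, 32).shape, patchify(emb, 16).shape)
```

With d = 64, the 32-pixel first stage yields 6 × 4 = 24 patches and the 16-pixel second stage yields 6 × 16 = 96, which shows how the second stage works at a finer scale over the same planes.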

Priors can greatly improve the performance of any ML approach. Our method relies on the prior that each isoplanatic subvolume (that is, a subvolume throughout which the aberration is effectively the same) within the larger volume of interest contains one or more fluorescent puncta of truly subdiffractive size. Here we introduce these puncta by using genome-edited specimens expressing fluorescent protein-fused versions of AP2, an adapter protein ubiquitously present at clathrin-coated pits (CCPs) on the plasma membrane of all cells (Methods). Although this entails a one-time upfront cost for each specimen type, it noninvasively produces a robust signal for AO correction that does not preclude simultaneously imaging another subcellular target in the same fluorescence channel, provided the two are computationally separable9.

Results

Benchmark comparisons of AOViFT to other architectures

We created five variants of AOViFT by varying the numbers of layers and heads in each stage (Supplementary Table 2) to explore the tradeoffs between model size (number of parameters and memory footprint), speed (floating-point operations (FLOPs) required, training time and latency) and prediction accuracy (Supplementary Fig. 3). To compare these to existing state-of-the-art architectures, we developed 3D versions of ViT and ConvNeXt for AO inference in three and four different size variants, respectively (Supplementary Note B). We trained all models with the same set of 2 × 10⁶ synthetic image volumes chosen to capture the full diversity of aberrations and imaging conditions likely to be encountered in AO-LLSM (Methods) and tested AOViFT on a separate set of 10⁵ image volumes created to find the limits of its accuracy when presented with an even larger range of aberration magnitudes, signal-to-noise ratio (SNR) and number of fluorescent puncta (Supplementary Note C). We also tested the performance of all models and variants on 10⁴ image volumes from a test set that contained only a single punctum in each (Fig. 2, Supplementary Figs. 8–9 and Supplementary Table 3).

a–d, Comparison of model variants ConvNeXt-T/S/B/L (blue), ViT/16-S/B (orange) and AOViFT-T/S/B/L/H (gray). a, Total number of trainable parameters. b, Maximum predictions per second, using a batch size of 1,024 on a single A100 GPU. Higher values are better. c, Training time on eight H100 GPUs. d, Median λ RMS residuals over 10,000 test samples after one correction, with aberrations ranging between 0.2λ and 0.4λ, simulated with 50,000 to 200,000 integrated photons. e,f, Median λ RMS residuals using the Small variant of the AOViFT model for a single bead over a wide range of SNR. g,h, Median λ RMS residuals using the Small variant of the AOViFT model for several beads (up to 150 beads), simulated at photon levels from 50,000 to 200,000 per bead. Lower values are better for all performance indicators listed here, except for b. CDF, cumulative distribution function; KDE, kernel density estimation.

Although all models but the smallest variant of ConvNeXt were able to reduce the median residual error in a single iteration of aberration prediction to less than the diffraction limit (Fig. 2d), AOViFT excelled in its parsimonious use of compute resources: training time using a node with eight NVIDIA H100 GPUs (Fig. 2c), training FLOPs (Supplementary Fig. 8c) and memory footprint (Supplementary Fig. 8f). This reflects the benefits of our multistage architecture: faster convergence by learning features across different scales, accurate prediction even at comparatively modest model size (Fig. 2a), highest inference rate among the models tested (Fig. 2b) and fastest single-shot inference time (‘latency’; Supplementary Fig. 8h). Given its small size and low latency, we chose the Small variant of AOViFT as our primary model for evaluation.

In silico evaluations of AOViFT

Diffraction-limited performance is conventionally defined by wavefront distortions below ≈0.075λ root mean square (RMS) or λ/4 peak-to-valley, corresponding to a Strehl ratio of 0.8 under the Rayleigh quarter-wave criterion10,11. In silico evaluation using the 10⁴ single punctum test images shows that AOViFT recovers diffraction-limited performance in a single iteration in nearly all trials where the initial aberration is <0.30λ RMS and the integrated signal is >5 × 10⁴ photons (Fig. 2e). The corrective range increases to 0.40, 0.50, 0.55 and 0.6λ RMS for two to five iterations, respectively, although ~5 × 10⁴ photons remains the minimum required signal (Fig. 2f and Supplementary Fig. 10). This is comparable to the signal needed for SH wavefront sensing2,3 and at least three times lower than that needed for PhaseRetrieval. In comparison, PhaseNet4 and PhaseRetrieval12 extend the diffraction-limited range only slightly (initial aberration <0.15λ RMS) after a single iteration on the same test data (Supplementary Fig. 11a,b) and, unlike AOViFT, do not appreciably increase this range after several iterations (Supplementary Fig. 12a–f). PhaseRetrieval does reduce residuals after a single iteration over a much broader range of initial aberration than AOViFT, and this trend continues with further iterations, albeit never back to the diffraction limit (Supplementary Fig. 12a–c). However, this advantage is lost if the fiducial bead is not centered in the field of view (FOV), and the predictive power of PhaseNet is lost completely under the same circumstances (Supplementary Fig. 11d,e) because the widefield 3D image of the bead is then clipped. Furthermore, PhaseRetrieval and PhaseNet assume a priori that only a single bead is present. AOViFT is trained on one to five puncta falling anywhere within the FOV but, thanks to a normalization step that eliminates phase fringes arising from several puncta (Supplementary Fig. 24d), produces inferences comparable in accuracy to those for a single punctum for up to 150 puncta, provided their mean nearest neighbor distance is >400 nm (Fig. 2g,h and Supplementary Fig. 13). Indeed, AOViFT relies on the combined signal of several native but dim subdiffractive biological assemblies such as CCPs to achieve accurate inferences.
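The ≈0.075λ RMS threshold and the Strehl ratio of 0.8 are linked by the extended Maréchal approximation, S ≈ exp[−(2πσ)²], where σ is the RMS wavefront error in waves. The short Python check below (helper names are ours) converts a residual quoted in λ RMS into an approximate Strehl ratio; the approximation is only reliable for small aberrations, roughly S above 0.1.

```python
import math


def strehl_marechal(rms_waves: float) -> float:
    """Extended Marechal approximation: S ~ exp(-(2*pi*sigma)^2), sigma in waves RMS."""
    return math.exp(-(2.0 * math.pi * rms_waves) ** 2)


def is_diffraction_limited(rms_waves: float, threshold: float = 0.075) -> bool:
    """Conventional criterion used in the text: residual below ~0.075 waves RMS."""
    return rms_waves < threshold


for sigma in (0.075, 0.15, 0.30):
    print(f"{sigma:.3f} waves RMS -> Strehl ~ {strehl_marechal(sigma):.2f}")
# 0.075 waves RMS -> Strehl ~ 0.80
# 0.150 waves RMS -> Strehl ~ 0.41
# 0.300 waves RMS -> Strehl ~ 0.03
```

By this measure, a residual of 0.069λ RMS (as reported for the cell experiments below) sits just inside the diffraction limit, whereas the 0.30λ RMS single-iteration ceiling corresponds to a Strehl ratio of only a few percent before correction.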

Experimental characterization on fiducial beads

We performed all experiments using the AO-LLSM microscope schematized in Supplementary Fig. 14. For initial characterization of the ability of AOViFT to correct a wide range of possible aberrations, we performed 66 separate experiments wherein we:

(1) introduced aberration by applying to the deformable mirror (DM) one of the 66 possible combinations of one or two Zernike modes (from the first 15, excluding piston, tip/tilt and defocus), with each mode set to 0.2λ RMS amplitude;

(2) used AO-LLSM with the MBSq-35 LLS excitation profile of Supplementary Table 6 to image a field of 100-nm-diameter fluorescent beads with this aberration;

(3) used AOViFT to predict the aberration;

(4) applied the corrective pattern to the DM; and

(5) repeated steps (2)–(4) for five iterations.
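The count of 66 follows from the 11 modes that remain after excluding piston, tip/tilt and defocus: 11 single-mode configurations plus C(11, 2) = 55 two-mode configurations. The enumeration, together with a skeleton of the iterative acquire, predict and correct cycle, can be sketched in Python. The callables `acquire`, `predict` and `apply_to_dm` are hypothetical stand-ins for the microscope and the model, and the accumulate-and-subtract bookkeeping is our assumption about how such a loop could be organized.

```python
from itertools import combinations

# Zernike modes with n <= 4, in ANSI order, minus piston, tip, tilt and defocus.
EXCLUDED = {(0, 0), (1, -1), (1, 1), (2, 0)}
MODES = [(n, m) for n in range(5) for m in range(-n, n + 1, 2) if (n, m) not in EXCLUDED]

AMPLITUDE = 0.2  # per-mode amplitude in waves RMS

# Every single mode and every unordered pair of distinct modes.
CONFIGS = [dict.fromkeys(combo, AMPLITUDE)
           for k in (1, 2) for combo in combinations(MODES, k)]

print(len(MODES), len(CONFIGS))  # 11 66


def closed_loop(acquire, predict, apply_to_dm, iterations=5):
    """Iterate image -> predict -> correct. Estimates are dicts
    {(n, m): residual amplitude in waves RMS}; all callables are hypothetical."""
    correction, history = {}, []
    for _ in range(iterations):
        estimate = predict(acquire())       # residual aberration still present
        history.append(estimate)
        for mode, amp in estimate.items():  # cancel it on top of the current DM pattern
            correction[mode] = correction.get(mode, 0.0) - amp
        apply_to_dm(dict(correction))
    return correction, history
```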

In 45 cases, we recovered diffraction-limited performance within two iterations (Fig. 3), and in 11 more cases within five iterations (Supplementary Fig. 15). In the remaining ten cases, aberrations were reduced by at least 50% after five iterations.

a–l, Four examples where the initial aberration was applied artificially by the DM. a,b,c, O-Astig and H-Coma \(({Z}_{n = 2}^{\,m = \,{-}\!2}+{Z}_{n = 3}^{\,m = 1})\); d,e,f, O-Quadrafoil and P-Spherical \(({Z}_{n = 4}^{\,m = \,{-}\!4}+{Z}_{n = 4}^{\,m = 0})\); g,h,i, V-Astig and V-Trefoil \(({Z}_{n = 2}^{\,m = 2}+{Z}_{n = 3}^{\,m = \,{-}\!3})\); j,k,l, V-Coma and O-Astig2 \(({Z}_{n = 3}^{\,m = \,{-}1}+{Z}_{n = 4}^{\,m =\, {-}\!2})\). Iteration 0 shows the XY maximum intensity projection (MIP) of four beads with initial aberration imaged using LLS, providing initial conditions for AOViFT predictions (a,d,g,j). Iteration 1 shows the resulting field of beads after applying the AOViFT prediction to the DM (b,e,h,k). Iteration 2 shows the results after applying the AOViFT prediction measured from Iteration 1 (c,f,i,l). Insets: the AOViFT-predicted wavefront over the NA = 1.0 pupil with a dashed line at NA = 0.85. m, Heatmap of the residual aberrations (measured by PhaseRetrieval on an isolated bead) after applying AOViFT predictions, starting with a single Zernike mode up to Mode 14 (\({Z}_{n = 4}^{\,m = 4}\)) across up to five iterations.

Correction of aberrations on live cultured cells

We next tested the ability of AOViFT to correct aberrations during live cell imaging under biologically relevant conditions of limited signal, dense puncta and specimen motion. To this end, we applied aberrations to the DM and imaged cultured SUM159 human breast-cancer-derived cells gene edited to produce endogenous levels of the clathrin adapter protein AP2 tagged with eGFP. This yielded numerous membrane-bound CCPs at various stages of maturation that were suitable for aberration measurement. In one example (Fig. 4a), we applied a 2.9λ peak-to-valley (P–V) aberration to the DM consisting of a mix of horizontal coma and oblique trefoil (\({Z}_{3}^{1}\) and \({Z}_{3}^{3}\)), and recovered near diffraction-limited performance after two iterations (Supplementary Table 4). Peak signal at the CCPs increased twofold to threefold postcorrection, and the spatial frequency content as seen in orthoslices through the 3D fast Fourier transform (FFT) (insets at bottom) increased in every iteration. In another case (Fig. 4b), we reduced a 3.1λ P–V aberration composed of a combination of horizontal coma and primary spherical (\({Z}_{3}^{1}\) and \({Z}_{4}^{\,0}\)) to 0.069λ RMS after two iterations, increasing CCP signal by threefold to fourfold (Supplementary Table 4). Four more examples of correction on cells and fiducial beads after applying single modes of 1λ P–V aberration are given in Supplementary Fig. 16 and five more examples of two-mode correction are shown in Supplementary Fig. 17.
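Because aberrations are quoted here as P–V but elsewhere as RMS, it helps to see that their ratio depends on the mode mix: for orthonormal Zernike modes the RMS values add in quadrature, whereas the P–V must be evaluated over the pupil. A minimal numerical sketch, assuming ANSI normalization (unit RMS per mode over the unit disk) and coefficients in waves; the helper names and the 0.2-wave example values are illustrative and do not correspond to the experiments above.

```python
import math

import numpy as np


def zernike(n: int, m: int, rho: np.ndarray, theta: np.ndarray) -> np.ndarray:
    """ANSI-normalized Zernike polynomial Z_n^m (unit RMS over the unit disk)."""
    ma = abs(m)
    radial = np.zeros_like(rho)
    for k in range((n - ma) // 2 + 1):
        c = ((-1) ** k * math.factorial(n - k)
             / (math.factorial(k)
                * math.factorial((n + ma) // 2 - k)
                * math.factorial((n - ma) // 2 - k)))
        radial += c * rho ** (n - 2 * k)
    norm = math.sqrt(n + 1 if m == 0 else 2 * (n + 1))
    angular = np.cos(ma * theta) if m >= 0 else np.sin(ma * theta)
    return norm * radial * angular


def wavefront_stats(coeffs: dict, samples: int = 513) -> tuple[float, float]:
    """P-V and RMS (in waves) of sum(a * Z_n^m) over the unit pupil.
    `coeffs` maps (n, m) to an amplitude in waves RMS."""
    x = np.linspace(-1.0, 1.0, samples)
    xx, yy = np.meshgrid(x, x)
    rho, theta = np.hypot(xx, yy), np.arctan2(yy, xx)
    w = np.zeros_like(rho)
    for (n, m), a in coeffs.items():
        w += a * zernike(n, m, rho, theta)
    w = w[rho <= 1.0]  # keep only points inside the pupil
    return float(w.max() - w.min()), float(np.sqrt(np.mean((w - w.mean()) ** 2)))


# Horizontal coma plus primary spherical, 0.2 waves RMS each:
pv, rms = wavefront_stats({(3, 1): 0.2, (4, 0): 0.2})
print(f"P-V = {pv:.2f} waves, RMS = {rms:.3f} waves")
```

For a single mode the P–V/RMS ratio is fixed by its shape (for example 1.5√5 ≈ 3.35 for primary spherical and 2√6 ≈ 4.90 for astigmatism), so the same RMS budget can correspond to quite different P–V values.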

a, 3D volume of SUM159-AP2 cells, represented as xyMIPs and yzMIPs, covering a 15.7 × 55.6 × 25.6 μm³ FOV after applying a 2.9λ P–V aberration to the DM. This aberration combines horizontal coma \({Z}_{3}^{1}\) and oblique trefoil \({Z}_{3}^{3}\). b, xyMIPs and yzMIPs of a similar FOV with a 3.1λ P–V aberration composed of horizontal coma (\({Z}_{3}^{1}\)) and primary spherical (\({Z}_{4}^{\,0}\)). In both cases, near diffraction-limited performance was recovered after two iterations. Insets: FFTs and corresponding wavefronts for each iteration.

In vivo correction of native aberration within a zebrafish embryo

As a transparent vertebrate, the zebrafish is a popular model organism for imaging studies. However, the spatially heterogeneous refractive index within multicellular organisms and the refractive index discontinuity at their surfaces relative to the imaging medium produce aberrations that vary throughout their interiors (Fig. 5a). We corrected a ~2λ P–V aberration in one such region (Fig. 5b, top) with AOViFT (Fig. 5b, bottom) near the notochord of a transgenic zebrafish embryo 72 h postfertilization expressing AP2-mNeonGreen in CCPs at the membranes of all cells (ap2s1:ap2s1-mNeonGreenbk800; Methods), and recovered spatial frequencies across the corrected volume (FFTs at right) comparable to those obtained by SH correction over the same region (Fig. 5b, middle).

a, xyMIP of a 72-hpf gene-edited zebrafish embryo expressing endogenous AP2-mNeonGreen, exhibiting native and spatially varying aberrations near the notochord. b, Enlarged view of the dashed blue box in a. The xyMIPs and yzMIPs, along with the corresponding FFTs of a 12.5 × 12.5 × 12.8 μm³ FOV, show ~2λ P–V of sample-induced aberration without AO (top row), corrected by SH (middle row) and corrected by AOViFT (bottom row). The contrast for each volume was scaled to its 1st and 99.99th percentile intensity values. c, xyMIPs and yzMIPs of a different gene-edited zebrafish embryo expressing exogenous AP2-mNeonGreen and injected with mRNA for mChilada-Cox8a (to visualize mitochondria). The AP2 signal was used to infer the underlying aberration, and the same correction was applied to both channels. Top: ~1.5λ P–V aberration; middle: AOViFT correction after two iterations. The third and fourth rows present the results of OMW deconvolution of the uncorrected and AOViFT-corrected volumes, respectively.

In a second embryo expressing AP2-mNeonGreen in CCPs and mChilada-Cox8a in mitochondria (Fig. 5c), we used the mNeonGreen signal to correct a ~1.5λ P–V aberration (top row) in one region, which provided an aberration-corrected view of both CCPs and mitochondria (second row). Deconvolving the aberrated images with an assumed ideal point spread function (PSF) only amplified high-frequency artifacts (third row), whereas deconvolving the aberration-corrected images provided a more accurate representation of sample structure (bottom row) by compensating for the known attenuation of high spatial frequencies in the ideal optical transfer function (OTF).

Correction of spatially varying aberrations in vivo

With GS-illuminated SH sensors, aberration measurement is not accurate unless it is confined to a single isoplanatic region. However, these are often much smaller than the volume of interest, and their boundaries are not generally known a priori. Consequently, microscopists are often forced to map aberrations by serial SH measurement over many small, tiled subregions whose dimensions are a matter of educated guesswork. On the other hand, with AOViFT we generated a complete map of 204 aberrations (Fig. 6a) at 6.3-μm intervals over 37 × 211 × 12.8 μm³ in a live zebrafish embryo 48 hpf (Fig. 6b,d) in ~1.5 min on a single node of four A100 graphics processing units (GPUs). Unfortunately, it is not possible to apply a corrective pattern to a single pupil conjugate DM and thereby correct this spatially varying aberration across the entire FOV. One option would be to apply each aberration in turn and image the tiles one by one, or together in groups of similar aberration. Although slow, this would recover the full information of which the microscope is capable. However, a much faster and simpler alternative is to deconvolve each raw image tile with its own unique aberrated PSF (Fig. 6c,e). This does not recover full diffraction-limited performance, but it does suppress aberration-induced artifacts and provides a more faithful representation of the underlying sample structure (Fig. 6f–h).
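The tile count can be checked by tiling the FOV at the 6.3-μm pitch: ⌈37/6.3⌉ = 6 tiles by ⌈211/6.3⌉ = 34 tiles, one tile deep, gives 204. A small Python helper, assuming partial edge tiles are kept and rounded up (our assumption; the text does not say how edges are handled):

```python
import math


def tile_grid(fov_um, pitch_um):
    """Tiles per axis and in total when a FOV is tiled at a fixed pitch.
    Partial tiles at the far edge are kept (rounded up), an assumption."""
    per_axis = [max(1, math.ceil(extent / pitch)) for extent, pitch in zip(fov_um, pitch_um)]
    return per_axis, math.prod(per_axis)


# 37 x 211 x 12.8 um^3 at a 6.3-um lateral pitch, one tile through the 12.8-um depth
print(tile_grid((37.0, 211.0, 12.8), (6.3, 6.3, 12.8)))  # ([6, 34, 1], 204)
```

Since every tile is inferred independently, the 204 inferences can be batched or spread across GPUs, which is what makes mapping an entire volume in about a minute and a half practical.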

a, Isoplanatic patch map determined by AOViFT for 204 tiles (6.3 × 6.3 × 12.8 μm³ each), spanning a 37 × 211 × 12.8 μm³ FOV in a live, gene-edited zebrafish embryo expressing endogenous AP2-mNeonGreen. The yellow box marks areas with insufficient spatial features to accurately determine aberrations; an ideal PSF was used for OMW deconvolution in these regions. b, xyMIP of the AP2 signal without AO. c, xyMIP of each tile after deconvolution with spatially varying PSFs predicted by AOViFT. d,e, Raw (d) and deconvolved (e) xyMIPs of the mitochondria channel for the same region. f, Enlarged view of the wavefronts within the black dashed box in a. g,h, Zoomed-in views of AP2 (g) and mitochondria (h) structures from b–e, comparing No AO to OMW deconvolution using either an ideal PSF or spatially varying tile-specific aberrated PSFs predicted by AOViFT.

Discussion

AOViFT provides accurate mapping of spatially varying sample-induced aberrations in specimens having subdiffractive puncta. Although AOViFT can be slower than SH sensing for a single region of interest, it gains a substantial net speed advantage when mapping several regions of interest across a large FOV, because inference on the different regions can be parallelized (Supplementary Table 5). Moreover, its throughput can be further increased by distributing GPU processing across several nodes and by compiling the model with TensorRT (https://docs.nvidia.com/deeplearning/tensorrt/pdf/TensorRT-Developer-Guide.pdf) for optimized inference. Unlike AOViFT, SH measurement with a TPE GS has the key advantage of being agnostic to the fluorescence distribution within each isoplanatic region, but it requires additional hardware (TPE laser, galvos, SH sensor), and the TPE power level must be carefully monitored to minimize photodamage. In addition, since the isoplanatic regions are not known a priori, the initial measurement grid for SH sensing must be very dense to accurately map aberrations and their rate of change across the FOV, which costs additional time and photon budget. Conversely, AOViFT determines the aberration map from a single large 3D image volume and can therefore iteratively adjust tile sizes or positions in silico as needed until the map converges to an accurate solution.

Although trained for a specific LLS type (Supplementary Table 6), AOViFT retained predictive capability when tested in silico with other light sheets as well (Supplementary Fig. 20). Although training specifically for such light sheets might increase the predictive range even further, a more fruitful path might be to augment the synthetic training data with light sheets axially offset from the detection focal plane to replace the closed-loop hardware-based mitigation of such offsets needed now3. Future models might leverage ubiquitous subcellular markers, such as plasma membranes or organelles, rather than genetically expressed diffraction-limited puncta, provided these markers contain sufficient high spatial frequency content for accurate inference of aberrations. Finally, to enhance generalizability of AOViFT and reduce overfitting to narrowly defined imaging scenarios, future models should incorporate a more diverse range of light sheets, specimen types and labeling strategies.

Development of AOViFT highlighted the challenges of constructing a 3D transformer-based architecture for AO correction. Each iteration of model design, training and testing required specialized simulated data pipelines, large GPU resources and extensive hyperparameter tuning—leading to lengthy model development cycles. A key bottleneck is the absence of universally applicable, large pretrained models for volumetric imaging data—a limitation that extends beyond AO applications.

Unlike the natural image domain, where ViT benefited from extensive training on standardized two-dimensional (2D) datasets, a comparable ‘foundation model’ for 3D microscopy is pending the collection of similar datasets. This gap severely limits how far and how quickly new methods like AOViFT can be generalized. Although our work highlights the feasibility of building a solution for a given task (for example, AO corrections under specific imaging conditions), adapting to new scenarios such as new sample types, microscope geometries or aberration ranges typically requires substantial retraining and additional data curation.

These limitations highlight the need for pretrained foundation models in volumetric microscopy. We consider AOViFT—a 3D vision transformer model—as a stepping stone towards the more ambitious goal of creating a 4D model pretrained on massive volumetric microscopy datasets. Such a model could be fine-tuned for tasks across spatial (from molecules to organisms) and temporal (from stochastic molecular kinetics to embryonic development) scales13. Realizing this vision would require petabytes of high-quality curated 4D datasets and significant computational resources. However, successful implementation would dramatically shorten development timelines, improve generalization and reduce the overhead of custom training for varied experimental setups or microscope configurations.

Methods

AO-LLS microscope

Imaging was performed using an AO-LLS microscope similar to one described previously3 (Supplementary Fig. 14 and Supplementary Table 7). Briefly, 488-nm and 560-nm lasers (500 mW 2RU-VFL-P-500-488-B1R and 1,000 mW 2RU-VFL-P-1000-560-B1R, MPB Communications Inc.) were modulated using an acousto-optical tunable filter (Quanta-Tech, AA OptoElectronic, AOTFnC-400.650-CPCh-TN) and shaped into a stripe by a Powell lens (Laserline Optics Canada, LOCP-8.9R20-2.0) and a pair of 50- and 250-mm cylindrical lenses (25-mm diameter; Thorlabs, ACY254-050 and LJ1267RM-A). The stripe illuminated a reflective, phase-only, gray-scale spatial light modulator (Meadowlark Optics, AVR Optics, P1920-0635-HDMI; 1,920 × 1,152 pixels) located at a sample conjugate plane. An eight-bit phase pattern written to the spatial light modulator generated the desired light-sheet pattern in the sample, and an annular mask (Thorlabs Imaging) at a pupil conjugate plane blocked unwanted diffraction orders before the light passed through the excitation objective (Thorlabs, TL20X-MPL). A pair of pupil conjugate galvanometer mirrors (Cambridge Technology, Novanta Photonics, 6SD11226 and 6SD11587) scanned the light sheet at the sample plane. The sample was positioned at the common foci of the excitation and detection objectives by a three-axis XYZ stage (Smaract; MLS-3252-S, SLS-5252-S and SLS-5252-S). Fluorescence emission from the sample was collected by a detection objective (Zeiss, ×20 1.0 numerical aperture (NA), 421452-9800-000), reflected off a pupil conjugate DM (ALPAO, DM69) that applied aberration corrections, and then recorded on two sample conjugate cameras (Hamamatsu ORCA Fusion).

SH measurements (Supplementary Fig. 18) were performed on the same microscope by localizing the intensity maxima (on a Hamamatsu ORCA Fusion camera) formed by the emitted light after passage through a pupil conjugate lenslet array (Edmund Optics, 64-479). The positional shifts of these maxima relative to those seen with no specimen present encode the local gradients of the pupil wavefront2, from which the wavefront phase can then be reconstructed.
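The step from spot shifts to a wavefront can be illustrated as a least-squares modal fit: each spot shift divided by the lenslet focal length estimates the local wavefront slope at that lenslet, and a set of modes with known gradients is fitted to all slopes at once. The sketch below is a generic textbook reconstructor with a hypothetical five-mode, unnormalized polynomial basis; it is not necessarily the procedure of ref. 2.

```python
import numpy as np

# Hypothetical low-order basis on normalized pupil coordinates (x, y):
# each entry is (name, dW/dx, dW/dy).
BASIS = [
    ("tip",      lambda x, y: np.ones_like(x),  lambda x, y: np.zeros_like(x)),  # W = x
    ("tilt",     lambda x, y: np.zeros_like(x), lambda x, y: np.ones_like(x)),   # W = y
    ("defocus",  lambda x, y: 4 * x,            lambda x, y: 4 * y),             # W = 2(x^2 + y^2) - 1
    ("astig_0",  lambda x, y: 2 * x,            lambda x, y: -2 * y),            # W = x^2 - y^2
    ("astig_45", lambda x, y: 2 * y,            lambda x, y: 2 * x),             # W = 2xy
]


def reconstruct(x, y, slope_x, slope_y):
    """Least-squares modal fit of slopes measured at lenslet centers (x, y).
    Slopes are spot shifts divided by the lenslet focal length (consistent units)."""
    gx = np.stack([dx(x, y) for _, dx, _ in BASIS], axis=1)
    gy = np.stack([dy(x, y) for _, _, dy in BASIS], axis=1)
    A = np.vstack([gx, gy])                  # (2 * n_lenslets, n_modes)
    b = np.concatenate([slope_x, slope_y])
    coeffs, *_ = np.linalg.lstsq(A, b, rcond=None)
    return dict(zip((name for name, *_ in BASIS), coeffs))
```

In practice the basis would be the Zernike modes of interest and the lenslet positions would be mapped to the pupil from the optical layout; the least-squares structure stays the same.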

Integration with microscope

AOViFT inference is performed routinely on the microscope acquisition PC (Intel Xeon, W5-3425, Windows 11, 512 GB RAM, NVIDIA A6000 with 48 GB VRAM). Inferences are made in an Ubuntu Docker container based on the TensorFlow NGC Container (24.02-tf2-py3) running in parallel with the microscope control software. Data communication between AOViFT and the microscope control software is handled through the computer’s file system. Image files and command-line parameters are passed to the model, and an output text file reports the resultant DM actuator values (Supplementary Fig. 19). When a volume is large enough to require tiling and dozens of volumes need to be processed, model inferences are parallelized and run on a SLURM compute cluster of four nodes, each containing four NVIDIA A100 80-GB GPUs.
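Because the handoff goes through the file system, the control side reduces to waiting for the output file to appear and parsing it. A minimal Python sketch; the file name, the whitespace-separated one-value-per-actuator format and the count of 69 values (one per actuator of a 69-actuator mirror) are our assumptions, not the actual AOViFT output specification.

```python
import time
from pathlib import Path


def wait_for_file(path: Path, timeout_s: float = 60.0, poll_s: float = 0.1) -> Path:
    """Poll the shared file system until `path` exists and its size is stable,
    so that a file still being written is not read prematurely."""
    deadline = time.monotonic() + timeout_s
    last_size = -1
    while time.monotonic() < deadline:
        if path.exists():
            size = path.stat().st_size
            if size == last_size and size > 0:
                return path
            last_size = size
        time.sleep(poll_s)
    raise TimeoutError(f"{path} did not appear within {timeout_s} s")


def read_actuators(path: Path, n_actuators: int = 69) -> list[float]:
    """Parse whitespace-separated actuator values (hypothetical format) and check the count."""
    values = [float(tok) for tok in path.read_text().split()]
    if len(values) != n_actuators:
        raise ValueError(f"expected {n_actuators} values, got {len(values)}")
    return values
```

Checking for a stable, nonzero file size is a simple guard against reading a partially written file; an atomic rename by the writer would be a more robust alternative.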

Fluorescent beads and cells expressing fluorescent endocytic adapter AP2

The 25-mm coverslips (Thorlabs, CG15XH) used for imaging beads, cells and zebrafish embryos were first cleaned by sonication in 70% ethanol followed by Milli-Q water, each for at least 30 min. They were then stored in Milli-Q water until use. Gene-edited SUM159-AP2-eGFP cells14 were grown in Dulbecco’s modified Eagle’s medium (DMEM)/F12 with GlutaMAX (Gibco, 10565018) supplemented with 5% fetal bovine serum (FBS; Avantor Seradigm, 89510-186), 10 mM HEPES (Gibco, 15630080), 1 μg ml⁻¹ hydrocortisone (Sigma, H0888) and 5 μg ml⁻¹ insulin (Sigma, I9278). Fluorescent beads (0.2-μm diameter, Invitrogen FluoSpheres Carboxylate-Modified Microspheres, 505/515 nm, cat. no. F8811, or 0.2-μm diameter Tetraspeck, Thermo Fisher Scientific Invitrogen, T7280), alone or with cells at 30–50% confluency, were deposited onto plasma-treated and poly-d-lysine (Sigma-Aldrich, P0899)-treated 25-mm coverslips. Cells were cultured under standard conditions (37 °C, 5% CO₂, 100% humidity) with twice-weekly passaging. The SUM159-AP2-eGFP cells were imaged at 37 °C in Leibovitz’s L-15 medium without phenol red (Gibco, 21083027) with 5% FBS (American Type Culture Collection, SCRR-30-2020), 100 μM Trolox (Tocris, 6002) and 100 μg ml⁻¹ Primocin (InvivoGen, ant-pm-1). Aberrations of approximately 1λ P–V were induced using a DM in ten configurations of Zernike modes (\({Z}_{2}^{2}\), \({Z}_{3}^{-3}\), \({Z}_{3}^{-1}\), \({Z}_{4}^{\,0}\) and their pairwise combinations). Widefield PSFs were collected from 0.2-μm fluorescent beads to confirm the applied aberrations and the residual aberrations after correction (Supplementary Table 7).

Zebrafish embryos expressing fluorescent AP2 and mitochondria

Genome-edited ap2s1-expressing zebrafish (genome editing of ap2s1, ap2s1:ap2s1-mNeonGreenbk800; Supplementary Note D) were injected with cox8-mChilada mRNA for two-color experiments. The N-terminal 34 amino acids of Cox8a were cloned into a pMTB backbone with a linker and the mChilada coding sequence on the C terminus (unpublished, gift from N. Shaner). The plasmid was linearized, and mRNA was synthesized using an SP6 mMessage mMachine transcription kit (Thermo Fisher). RNA was purified using an RNeasy kit (Qiagen), and embryos were injected with 2 nl of 10 ng μl⁻¹ Cox8a-mChilada, 100 mM KCl, 0.1% phenol red, 0.1 mM EDTA and 1 mM Tris, pH 7.5. Zebrafish embryos were first nanoinjected with 3 nl of a solution containing 0.86 ng μl⁻¹ α-bungarotoxin protein, 1.43× PBS and 0.14% phenol red. The injected embryos were mounted for imaging using a custom, volcano-shaped agarose mount. Each mount was constructed by solidifying a few drops of 1.2% (w/w) high-melting agarose (Invitrogen UltraPure Agarose, 16500-100, in 1× Danieau buffer) between a 25-mm glass coverslip and a 3D-printed mold (Formlabs Form 3+, printed in clear v.4 resin). This created ridges that formed a narrow groove. A hair loop was used to orient the embryo within the agarose groove, with its left lateral side facing upward. Subsequently, 10–20 μl of 0.5% (w/w) low-melt agarose (Invitrogen UltraPure LMP Agarose, 16520-100, in 1× Danieau buffer), preheated to 40 °C and containing 0.2-μm Tetraspeck microspheres, was added on top of the embryo. This layer solidified around the embryo to secure it while providing fiducial beads for locating the sample. Once the low-melt agarose solidified, the volcano-shaped mount was held by a custom sample holder for imaging. The embryo was oriented so that its anterior–posterior axis lay parallel to the sample x axis, with the anterior end facing the excitation objective and the posterior end facing the detection objective. The microscope objectives and the sample were fully submerged in a ~50 ml bath of Danieau buffer, ensuring that the embryo remained in buffered medium. Measurements for AOViFT and SH were performed serially on the same FOV to compare the aberration corrections of both methods (Supplementary Table 7).

Spatially varying deconvolution

To compensate for sample-induced aberrations postacquisition, we performed a tile-based spatially varying deconvolution on each 3D volume. Each volume was first subdivided into several 3D tiles approximating isoplanatic patches. An AOViFT-predicted PSF (for compensation) or an ideal PSF (for no compensation) was assigned to each tile, and aberrations were corrected using OTF masked Wiener (OMW) deconvolution15. To minimize boundary artifacts during deconvolution, each tile was extended by half the PSF width (32 pixels) at each boundary; after deconvolution, these overlaps were removed and the deconvolved core regions were stitched together to form the final corrected volume. All computations were done in MATLAB v.2024a (Mathworks).
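The tiling scheme above (pad each core tile, deconvolve with the tile's own PSF, crop the padding, stitch) can be sketched in Python with NumPy, although the original computations used MATLAB. The deconvolution step here is a plain Wiener filter with frequencies outside the OTF support set to zero, a simplified stand-in for OMW deconvolution rather than its published implementation; the `snr` and `support` parameters and the dictionary of PSFs keyed by tile origin are our assumptions.

```python
from itertools import product

import numpy as np


def otf_for_shape(psf: np.ndarray, shape: tuple) -> np.ndarray:
    """Center a PSF in a zero array of `shape`, normalize it, and return its OTF."""
    if any(p > s for p, s in zip(psf.shape, shape)):
        raise ValueError("PSF must fit inside the (padded) tile")
    buf = np.zeros(shape)
    idx = tuple(slice(s // 2 - p // 2, s // 2 - p // 2 + p) for p, s in zip(psf.shape, shape))
    buf[idx] = psf / psf.sum()
    return np.fft.fftn(np.fft.ifftshift(buf))  # move the PSF center to index 0


def masked_wiener(tile: np.ndarray, otf: np.ndarray, snr: float = 100.0,
                  support: float = 1e-3) -> np.ndarray:
    """Wiener filter that zeroes frequencies where |OTF| is below `support` * max."""
    mag = np.abs(otf)
    inside = mag > support * mag.max()
    filt = np.where(inside, np.conj(otf) / (mag ** 2 + 1.0 / snr), 0.0)
    return np.real(np.fft.ifftn(np.fft.fftn(tile) * filt))


def tiled_deconvolve(volume: np.ndarray, psfs: dict, tile: tuple, pad: int = 32,
                     **wiener_kw) -> np.ndarray:
    """Deconvolve each core tile with its own PSF and stitch the results.
    `psfs` maps each tile origin (z0, y0, x0) to a PSF. Cores are grown by `pad`
    voxels per side (clipped at the volume edge); the overlap is discarded."""
    out = np.zeros(volume.shape)
    starts = [range(0, n, t) for n, t in zip(volume.shape, tile)]
    for origin in product(*starts):
        core = [(o, min(o + t, n)) for o, t, n in zip(origin, tile, volume.shape)]
        ext = [(max(0, a - pad), min(n, b + pad)) for (a, b), n in zip(core, volume.shape)]
        block = volume[tuple(slice(a, b) for a, b in ext)]
        dec = masked_wiener(block, otf_for_shape(psfs[origin], block.shape), **wiener_kw)
        crop = tuple(slice(a - ea, b - ea) for (a, b), (ea, _) in zip(core, ext))
        out[tuple(slice(a, b) for a, b in core)] = dec[crop]
    return out
```

Discarding the padded margins means each stitched voxel comes from a tile in which it sat at least `pad` voxels from the FFT's periodic boundary (except at the outer edges of the volume), which is why the overlap is set to about half the PSF width.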

Synthetic training/testing datasets

To train a model for predicting optical aberrations from images of subdiffractive objects in biological samples, we generated synthetic datasets encompassing a range of relevant variables (for example, aberration modes and amplitudes, number and density of puncta, SNR). This synthetic dataset generation procedure is as follows.

For a single subdiffractive punctum, the electric field in the rear pupil of the detection objective is given by:

$$E(k_x,k_y)=A(k_x,k_y)\,e^{i\phi(k_x,k_y)}$$

where A(kx, ky) is the pupil amplitude at pupil coordinates (kx, ky) and ϕ(kx, ky) is the pupil phase. Under aberration-free conditions, ϕ(kx, ky) is constant. We can determine A(kx, ky) empirically by acquiring a widefield image of an isolated subdiffractive object (a 100-nm fluorescent bead), performing phase retrieval12,16 and applying the opposite of the retrieved phase with a pupil-conjugate DM so that ϕ(kx, ky) becomes constant.

The electric field for the image of a single aberrated punctum is:

$$E_{\mathrm{abb}}(k_x,k_y)=A(k_x,k_y)\,e^{i[\phi(k_x,k_y)+\phi_{\mathrm{abb}}(k_x,k_y)]}$$

where ϕabb(kx, ky) is a weighted sum of Zernike modes, each with its own amplitude:

$$\phi_{\mathrm{abb}}(k_x,k_y)=\sum_{n,m}\alpha_{n}^{m}\,Z_{n}^{m}(k_x,k_y)$$

Empirically, the aberrations induced by zebrafish on the microscopes used here are well described by combinations of 11 of the first 15 Zernike modes17 (those with radial order n ≤ 4; Supplementary Fig. 1), excluding piston (\({Z}_{0}^{\,0}\)), tip (\({Z}_{1}^{-1}\)), tilt (\({Z}_{1}^{1}\)) and defocus (\({Z}_{2}^{\,0}\)), as these represent phase offsets or sample translation. The distributions and amplitudes of the remaining modes are used to build the training set, as discussed below.
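The 11 modes follow directly from the exclusion rule above. The short Python sketch below enumerates all (n, m) pairs with n ≤ 4 and drops piston, tip, tilt and defocus; the OSA/ANSI single-index mapping j = (n(n + 2) + m)/2 is a common convention added only for orientation and is not taken from the paper.

```python
def ansi_index(n, m):
    """OSA/ANSI single index j = (n(n + 2) + m) / 2 (convention, not from the paper)."""
    return (n * (n + 2) + m) // 2

EXCLUDED = {(0, 0), (1, -1), (1, 1), (2, 0)}  # piston, tip, tilt, defocus

def included_modes(max_order=4):
    """All (n, m) with n <= max_order and m = -n, -n + 2, ..., n, minus EXCLUDED."""
    return [(n, m) for n in range(max_order + 1)
            for m in range(-n, n + 1, 2) if (n, m) not in EXCLUDED]

modes = included_modes()
print(len(modes), [ansi_index(n, m) for n, m in modes])
# 11 [3, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14]
```

These are the astigmatism, coma, trefoil, primary spherical, secondary astigmatism and quadrafoil terms.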

The aberrated 3D detection PSF of a subdiffractive punctum is approximated by:
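
$$\mathrm{PSF}_{\mathrm{det}}(x,y,z)=\left|\mathcal{F}_{2\mathrm{D}}^{-1}\left\{E_{\mathrm{abb}}(k_x,k_y)\,e^{ik_z z}\right\}\right|^{2}$$
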
where \({k}_{z}=\sqrt{{(\frac{2\pi \eta }{\lambda })}^{2}-{k}_{x}^{2}-{k}_{y}^{2}}\), η is the refractive index of the imaging medium and λ is the free-space wavelength of the fluorescence emission.

For light sheet microscopy, the aberrated 3D overall PSF is:

$$\mathrm{PSF}(x,y,z)=\mathrm{PSF}_{\mathrm{exc}}(z)\,\mathrm{PSF}_{\mathrm{det}}(x,y,z)$$

where PSFexc(z) is given by the cross-section of the swept light sheet used for imaging. Examples of these PSFs are shown in Supplementary Fig. 20 I–V, with MBSq-35 in Supplementary Table 6 used for training and imaging (see ref. 18 for additional information on these light sheets).
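The two PSF models above can be sketched with NumPy using angular-spectrum propagation: each z plane is the squared magnitude of the inverse 2D FFT of the pupil field multiplied by exp(ikz z), and the overall PSF is that stack weighted by the excitation profile. All numerical values are assumptions for illustration (detection NA 1.0, η = 1.33, λ = 510 nm, and the 125-nm lateral and 200-nm axial sampling of the training volumes). The pupil amplitude A is taken as a uniform disk rather than measured, and a Gaussian sheet profile of hypothetical 1-μm FWHM stands in for the MBSq-35 cross-section.

```python
import numpy as np

def detection_psf(pupil_phase, shape=(64, 64, 64), dxy=0.125, dz=0.2,
                  wavelength=0.510, n_medium=1.33, na=1.0):
    """|inverse 2D FFT of A * exp(i(phi_abb + kz z))|^2 per z plane (lengths in um)."""
    nz, ny, nx = shape
    kx = 2 * np.pi * np.fft.fftfreq(nx, d=dxy)
    ky = 2 * np.pi * np.fft.fftfreq(ny, d=dxy)
    KX, KY = np.meshgrid(kx, ky)  # arrays of shape (ny, nx)
    kr2 = KX**2 + KY**2
    # Hypothetical uniform pupil amplitude A inside the NA cutoff.
    amplitude = (kr2 <= (2 * np.pi * na / wavelength) ** 2).astype(float)
    kz = np.sqrt(np.maximum((2 * np.pi * n_medium / wavelength) ** 2 - kr2, 0.0))
    phase = pupil_phase(KX, KY)  # phi_abb in radians; constant phi dropped
    psf = np.empty(shape)
    for i in range(nz):
        z = (i - nz // 2) * dz
        field = np.fft.ifft2(amplitude * np.exp(1j * (phase + kz * z)))
        psf[i] = np.abs(np.fft.fftshift(field)) ** 2
    return psf / psf.sum()


def overall_psf(psf_det, dz=0.2, sheet_fwhm=1.0):
    """PSF = PSF_exc(z) * PSF_det, with a Gaussian stand-in for the light sheet."""
    nz = psf_det.shape[0]
    z = (np.arange(nz) - nz // 2) * dz
    sigma = sheet_fwhm / (2 * np.sqrt(2 * np.log(2)))
    psf = np.exp(-z**2 / (2 * sigma**2))[:, None, None] * psf_det
    return psf / psf.sum()


flat = lambda KX, KY: np.zeros_like(KX)  # aberration-free pupil phase
psf = overall_psf(detection_psf(flat))
```

An aberrated PSF follows by passing a `pupil_phase` callable built from the Zernike sum; that construction is not shown here.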

Each synthetic training volume V is 64 × 64 × 64 voxels in size, spanning 8 × 8 × 12.8 μm3 (with 125 × 125 × 200 nm3 voxels), and contains J puncta, with J drawn uniformly from 1 to 5, located at random points (xj, yj, zj) within the volume. Each punctum is modeled as a Gaussian with a full width at half maximum wj chosen randomly from {100, 200, 300, 400} nm, allowing for features slightly larger than the diffraction limit. The image of each punctum is generated by convolving it with the aberrated PSF:
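The object model above (a random number of Gaussian puncta at random positions) can be sketched with NumPy as follows. The helper names are hypothetical. Normalizing each Gaussian by its analytic integral, rather than by the sum over the voxels that fall inside V, is one way to let puncta near the border contribute only part of their signal, in the spirit of the SV correction described below. Convolution with the aberrated PSF is left out to keep the sketch short.

```python
import numpy as np

SHAPE = (64, 64, 64)                         # (z, y, x) voxels
VOXEL_NM = np.array([200.0, 125.0, 125.0])   # (z, y, x) voxel size in nm
FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))

def sample_puncta(rng):
    """Return (center_in_voxels, fwhm_nm) pairs for J ~ U{1, ..., 5} puncta."""
    n_puncta = int(rng.integers(1, 6))
    return [(rng.uniform(0, SHAPE), float(rng.choice([100, 200, 300, 400])))
            for _ in range(n_puncta)]

def render_objects(puncta):
    """Sum of unit-integral 3D Gaussians (before convolution with the PSF)."""
    grid = np.meshgrid(*[np.arange(n) for n in SHAPE], indexing="ij")
    volume = np.zeros(SHAPE)
    for center, fwhm_nm in puncta:
        sigma = fwhm_nm * FWHM_TO_SIGMA / VOXEL_NM  # per-axis sigma in voxels
        r2 = sum(((g - c) / s) ** 2 for g, c, s in zip(grid, center, sigma))
        # Analytic normalization: a punctum near the border keeps only the
        # fraction of its signal that falls inside V.
        volume += np.exp(-0.5 * r2) / ((2 * np.pi) ** 1.5 * np.prod(sigma))
    return volume

rng = np.random.default_rng(1)
puncta = sample_puncta(rng)
objects = render_objects(puncta)
```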

The integrated photon count No per punctum was drawn uniformly from 1 to 200,000 photons. The total intensity distribution is:

where

Because the signal from an aberrated punctum can extend beyond the boundary of V, the total signal SV within V is:

After accounting for partial signal contributions (SV), the photons per voxel were converted to camera counts by applying the quantum efficiency QE, Poisson shot noise η and camera read noise ϵ to arrive at the final synthetic training example:
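The photon-to-counts conversion can be sketched as below. The QE of 0.8, the 1-count read noise and the unit camera gain are hypothetical placeholders rather than the camera parameters used for AOViFT. Read noise is modeled as zero-mean Gaussian, a common simplification.

```python
import numpy as np

def to_camera_counts(expected_photons, rng, qe=0.8, read_noise=1.0):
    """Apply QE, Poisson shot noise and Gaussian read noise (hypothetical values)."""
    detected = rng.poisson(qe * expected_photons)  # shot noise on detected photons
    read = rng.normal(0.0, read_noise, size=np.shape(expected_photons))
    return detected + read                         # counts, assuming unit gain

rng = np.random.default_rng(0)
image = np.zeros((64, 64, 64))
image[32, 32, 32] = 1.0                  # stand-in for a normalized punctum image
n_photons = rng.uniform(1, 200_000)      # N_o drawn uniformly from 1 to 200,000
counts = to_camera_counts(n_photons * image, rng)
```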

Zernike distributions

To ensure that the training set covers a diverse range of potential aberrations, the amplitudes of the 11 included aberration modes (shown in color in Supplementary Fig. 1) for each training example were drawn, with equal probability, from one of four distributions:

(1) Single mode (Supplementary Fig. 21b). One mode is chosen at random, with amplitude α drawn uniformly from 0 ≤ α ≤ 0.5 λ RMS.

(2) Bimodal (Supplementary Fig. 21c). A target total amplitude αt is drawn uniformly from 0 ≤ αt ≤ 0.5 λ RMS, and a partitioning factor ϵ is drawn uniformly from 0 ≤ ϵ ≤ 1. The amplitudes of the two modes are then α1 = ϵαt and α2 = (1 − ϵ)αt.

(3) Powerlaw (Supplementary Fig. 21d). A target total amplitude αt is drawn uniformly from 0 ≤ αt ≤ 0.5 λ RMS. The initial partitioning factors ϵn for the modes are obtained from a Lomax (that is, Pareto II) distribution19:

$${\epsilon }_{n}=\frac{\gamma }{{({x}_{n}+1)}^{\gamma +1}}\quad {\rm{where}}\quad \gamma =0.75$$(11)

where each xn is drawn uniformly from 0 ≤ xn ≤ 1. The factors are then renormalized:

$${\epsilon }_{n}^{{\prime} }=\frac{{\epsilon }_{n}}{\mathop{\sum }\nolimits_{n = 1}^{11}{\epsilon }_{n}}$$(12)

and the final amplitudes of the modes are \({\alpha }_{n}={\epsilon }_{n}^{{\prime} }{\alpha }_{t}\).

(4) Dirichlet (Supplementary Fig. 21e). A target total amplitude αt is drawn uniformly from 0 ≤ αt ≤ 0.5 λ RMS. The initial partitioning factors ϵn for the modes are drawn uniformly from 0 ≤ ϵn ≤ 1 and then renormalized:

$${\epsilon }_{n}^{{\prime} }=\frac{{\epsilon }_{n}}{\mathop{\sum }\nolimits_{n = 1}^{11}{\epsilon }_{n}}$$(13)

and the final amplitudes of the modes are \({\alpha }_{n}={\epsilon }_{n}^{{\prime} }{\alpha }_{t}\).

Together, the training examples from these four distributions create a diverse set of overall aberration amplitudes and number of significant modes in the training data, with all 11 modes contributing equally across the dataset (Supplementary Fig. 21a).
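The four amplitude distributions can be sketched in plain Python. Two details are assumptions not fixed by the text: the modes receiving nonzero amplitude in the single-mode and bimodal cases are picked uniformly at random, and all amplitudes are kept nonnegative (the text does not say how signs are assigned). The powerlaw branch evaluates the Lomax density of Eq. 11 at uniform xn, exactly as written above, and the Dirichlet branch renormalizes uniform draws as described.

```python
import random

N_MODES = 11
MAX_RMS = 0.5  # waves RMS

def single_mode(rng):
    amps = [0.0] * N_MODES
    amps[rng.randrange(N_MODES)] = rng.uniform(0.0, MAX_RMS)
    return amps

def bimodal(rng):
    total, eps = rng.uniform(0.0, MAX_RMS), rng.uniform(0.0, 1.0)
    i, j = rng.sample(range(N_MODES), 2)  # two distinct modes (assumed uniform)
    amps = [0.0] * N_MODES
    amps[i], amps[j] = eps * total, (1.0 - eps) * total
    return amps

def _split(weights, total):
    """Renormalize the partitioning factors and scale them by the target total."""
    s = sum(weights)
    return [w / s * total for w in weights]

def powerlaw(rng, gamma=0.75):
    total = rng.uniform(0.0, MAX_RMS)
    # Lomax (Pareto II) density evaluated at x_n ~ U(0, 1), as in Eq. 11
    weights = [gamma / (rng.uniform(0.0, 1.0) + 1.0) ** (gamma + 1.0)
               for _ in range(N_MODES)]
    return _split(weights, total)

def dirichlet(rng):
    total = rng.uniform(0.0, MAX_RMS)
    return _split([rng.uniform(0.0, 1.0) for _ in range(N_MODES)], total)

def sample_amplitudes(rng):
    """Choose one of the four distributions with equal probability."""
    return rng.choice([single_mode, bimodal, powerlaw, dirichlet])(rng)

amps = sample_amplitudes(random.Random(0))
```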

Training dataset

For model training, a dataset of 2 million synthetic 3D volumes was created, with aberration magnitudes uniformly sampled from 0.0 to 0.5 λ RMS (at wavelength λ = 510 nm), the number of objects uniformly distributed between 1 and 5, and between 1 and 200,000 integrated photons per object.

Test dataset

To evaluate our models, we created a test dataset of 100,000 3D volumes. The parameter distributions matched those of the training set, except that the aberration magnitude extended up to 1.0 λ RMS and the integrated photons up to 500,000. To test the operational limits of our models, this test dataset also included up to 150 objects per volume.

Fourier embedding

Most ML vision models operate on real-space representations of the data, which have no clearly defined bounds on image size and no explicit descriptors of their content. Instead, we used Fourier domain embeddings (Supplementary Fig. 24), which are bounded by the microscope’s OTF. Aberrations within an isoplanatic patch affect all photons within that patch globally, producing a unique, learnable ‘fingerprint’ pattern in the FFT amplitude and phase (Supplementary Note A.1 and Supplementary Figs. 5–7).

Preprocessing

To create Fourier embeddings (Fig. 1b) for our model, we preprocess the input 3D image stack W of CCPs within an isoplanatic region to suppress noise and edge artifacts (Fig. 1a),

The preprocessing module (ϒ) begins with a set of filters that extract the sharp-edged objects revealing the aberration signatures: a Gaussian high-pass filter to remove inhomogeneous background and a Fourier-domain low-pass filter with its cutoff set at the detection NA limit (σ = 3 voxels). A Tukey window (cosine fraction = 0.5, in \(\hat{x}\hat{y}\) only) is then applied to suppress FFT edge artifacts arising from the volume borders. No windowing is applied along the axial direction, \(\hat{z}\), because the embeddings are constructed near kz = 0, where aberration information is maximized.
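A NumPy sketch of the three filtering steps follows. How the stated σ = 3 voxels and the NA-limited cutoff map onto the two filters is not fully specified, so here `sigma_vox` sets the width of the Gaussian whose blurred copy is subtracted (high-pass), and `cutoff` is a hypothetical isotropic radius in cycles per voxel. The Tukey window is written out explicitly to avoid a SciPy dependency.

```python
import numpy as np

def tukey(n, alpha=0.5):
    """1D Tukey (tapered cosine) window; alpha is the tapered fraction."""
    x = np.linspace(0.0, 1.0, n)
    w = np.ones(n)
    left, right = x < alpha / 2, x > 1 - alpha / 2
    w[left] = 0.5 * (1 + np.cos(2 * np.pi / alpha * (x[left] - alpha / 2)))
    w[right] = 0.5 * (1 + np.cos(2 * np.pi / alpha * (x[right] - 1 + alpha / 2)))
    return w

def preprocess(volume, sigma_vox=3.0, cutoff=0.4):
    """Gaussian high-pass, Fourier low-pass, then a Tukey taper in x and y only."""
    v = np.asarray(volume, dtype=float)
    freqs = np.meshgrid(*[np.fft.fftfreq(n) for n in v.shape], indexing="ij")
    f2 = sum(f**2 for f in freqs)  # squared frequency in cycles/voxel
    spectrum = np.fft.fftn(v)
    # High-pass: subtract a Gaussian-blurred copy (the blur's transfer function).
    spectrum *= 1.0 - np.exp(-2 * np.pi**2 * sigma_vox**2 * f2)
    # Low-pass: zero frequencies beyond a hypothetical NA-limited cutoff.
    spectrum *= f2 <= cutoff**2
    v = np.real(np.fft.ifftn(spectrum))
    # Axes are (z, y, x); no taper is applied along z.
    window = np.outer(tukey(v.shape[1]), tukey(v.shape[2]))
    return v * window[None, :, :]
```

A constant background is removed entirely by the high-pass step, and the taper forces the lateral borders to zero.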

Embedding

Once preprocessed, the ratio of the resulting 3D FFT amplitude to the 3D FFT amplitude of the ideal PSF (which undergoes identical preprocessing) is used to generate the amplitude embedding, α(kz), at each kz plane:

where \({\mathcal{F}}\) denotes the 3D Fourier transform. The most useful information is located at kz = 0, the principal plane at the midpoint of the \(\hat{{k}_{z}}\) axis. Three 2D planes, α1, α2 and α3, along the \(\hat{{k}_{z}}\) axis are needed to capture axial information and are used as inputs to the model as follows:

where α1 is the principal (kx, ky) plane, α2 is the mean of five consecutive 2D planes starting from the principal plane and α3 is the mean of five consecutive 2D planes starting from the kz = 5 plane (Supplementary Figs. 7 and 24a,c).
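The amplitude embedding can be sketched as follows, assuming both inputs have already been preprocessed identically and have the same shape. Interpreting "the kz = 5 plane" as the plane five samples above the principal plane is an assumption, as is the small `eps` guarding against division by near-zero reference amplitudes.

```python
import numpy as np

def amplitude_embedding(volume, ideal_psf, offset=5, n_avg=5, eps=1e-12):
    """Stack (alpha1, alpha2, alpha3) from the ratio of 3D FFT amplitudes."""
    amp = np.abs(np.fft.fftshift(np.fft.fftn(volume)))
    ref = np.abs(np.fft.fftshift(np.fft.fftn(ideal_psf)))
    ratio = amp / (ref + eps)                 # alpha(kz) for every kz plane
    c = ratio.shape[0] // 2                   # principal plane, kz = 0
    alpha1 = ratio[c]
    alpha2 = ratio[c:c + n_avg].mean(axis=0)  # five planes from kz = 0
    alpha3 = ratio[c + offset:c + offset + n_avg].mean(axis=0)  # five from kz = 5
    return np.stack([alpha1, alpha2, alpha3])
```

For an unaberrated input identical to the reference, every plane is close to 1; scaling the input by a constant scales the embedding by the same constant.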

For the phase embedding, φ, we first remove the interference between multiple puncta in the FOV, which can obscure the aberration signature in the phase image. To do so, puncta are detected in real space with peak local maxima (PLM; https://scikit-image.org/docs/stable/auto_examples/segmentation/plot_peak_local_max.html) applied to a normalized cross-correlation (NCC; https://scikit-image.org/docs/stable/auto_examples/registration/plot_masked_register_translation.html) of the volume with a kernel cropped around the highest peak in V. The neighboring voxels around the detected puncta peaks are masked off, creating a volume, \({\mathcal{S}}\). The OTF with interference removed, \({\tau }^{{\prime} }\), can then be obtained, as well as a real-space reconstructed volume, \({V}^{{\prime} }\), through inverse FFT,

The phase φ(kz) at each kz plane is then given by the unwrapped phase of τ at that plane (Supplementary Fig. 24b,d). We calculate the three phase embeddings in the same manner as our amplitude embedding such that:

Combining the six planes together, we define the input to the model as a Fourier embedding,
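Putting the amplitude and phase planes together, a simplified version of the six-plane embedding can be sketched as below. It omits the PLM/NCC interference removal and uses the wrapped phase from `np.angle`; a 2D phase unwrapper (for example, `skimage.restoration.unwrap_phase`) would be needed to follow the text. The volume is `ifftshift`-ed before the FFT, assuming the punctum signal is centered, so that a symmetric, unaberrated PSF yields zero phase.

```python
import numpy as np

def three_planes(stack, offset=5, n_avg=5):
    """Principal kz plane, mean of five planes from it, mean of five from kz = 5."""
    c = stack.shape[0] // 2
    return [stack[c], stack[c:c + n_avg].mean(axis=0),
            stack[c + offset:c + offset + n_avg].mean(axis=0)]

def fourier_embedding(volume, ideal_psf, eps=1e-12):
    """Six (d, d) planes: three amplitude-ratio planes, then three phase planes."""
    # ifftshift moves the (assumed centered) signal to the origin before the FFT.
    otf = np.fft.fftshift(np.fft.fftn(np.fft.ifftshift(volume)))
    ref = np.abs(np.fft.fftshift(np.fft.fftn(np.fft.ifftshift(ideal_psf))))
    amplitude = np.abs(otf) / (ref + eps)
    phase = np.angle(otf)  # wrapped phase; unwrapping is not done here
    return np.stack(three_planes(amplitude) + three_planes(phase))

g = np.arange(32) - 16
zz, yy, xx = np.meshgrid(g, g, g, indexing="ij")
gaussian_psf = np.exp(-(zz**2 + yy**2 + xx**2) / 2.0)  # toy unaberrated PSF
emb = fourier_embedding(gaussian_psf, gaussian_psf)
```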

A notable advantage of this approach is that, although the signal from each individual CCP is weak, those in the same isoplanatic region contain near-identical spatial frequency distributions that add together to yield Fourier embeddings of high SNR suitable for accurate inference of the underlying aberration (Supplementary Fig. 6).

AO vision Fourier transformer

Below, we outline the key components of AOViFT, which uses a 3D multistage vision transformer architecture. This model efficiently captures Fourier domain features at several spatial scales, enabling robust aberration prediction.

Multistage

Recent advances in attention-based transformers have demonstrated scalability, generalizability and multi-modality for a range of computer vision applications20,21,22,23,24.

Multiscale (or hierarchical) vision transformers, such as Swin25 and MViT26, use specialized modules (for example, shifted-window partitioning25 and hybrid window attention27) to excel at a variety of detection tasks on 2D natural images with supervised training on ImageNet28. These variants are more efficient than their ViT counterparts in terms of FLOPs and number of parameters, but they rely on such specialized modules to achieve this. Hiera29 showed that these designs can be streamlined without loss of performance by leveraging large-scale self-supervised pretraining.

Current multiscale architectures use a feature pyramid network scheme30—downsampling the spatial resolution of the image for each stage while expanding the embedding size for deeper layers. Instead, in our work, we use Ω stages and do not downsample during any of the stages, but rather select different patch sizes for each stage (Fig. 1). This allows the embedding dimension within each stage to be fixed to the number of voxels in the patch of that stage, rather than expanding with increasing depth as in some hierarchical models.

Patch encoding

The input to the model is the Fourier embedding, a 3D tensor \({\mathcal{E}}\in {{\mathbb{R}}}^{\ell \times d\times d}\), where ℓ = 6 is the number of 2D planes each with a height and width of d. For each model stage, i, patchifying begins by dividing the input tensor \({\mathcal{E}}\) into nonoverlapping 2D tiles (each pi × pi) that are each flattened into a one-dimensional patch for a total of ki patches in a plane. After patchifying, the input tensor is transformed into \({x}_{p}\in {{\mathbb{R}}}^{\ell \times {k}_{i}\times {p}_{i}^{2}}\) (Fig. 1b).
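Patchifying a plane stack is a reshape followed by a transpose. The NumPy sketch assumes d = 64 (matching the 64 × 64 × 64 training volumes) and row-major patch order, so that ki = (d/pi)²; neither choice is stated explicitly in the text.

```python
import numpy as np

def patchify(embedding, p):
    """(ell, d, d) -> (ell, k, p * p), with k = (d // p) ** 2 row-major tiles."""
    ell, d, d2 = embedding.shape
    if d != d2 or d % p:
        raise ValueError("planes must be square with d divisible by p")
    g = d // p
    # (ell, gy, py, gx, px) -> (ell, gy, gx, py, px) -> (ell, k, p * p)
    tiles = embedding.reshape(ell, g, p, g, p).transpose(0, 1, 3, 2, 4)
    return tiles.reshape(ell, g * g, p * p)

E = np.random.default_rng(0).random((6, 64, 64))  # stand-in Fourier embedding
print(patchify(E, 32).shape, patchify(E, 16).shape)
# (6, 4, 1024) (6, 16, 256)
```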

The original ViT model uses a stack of consecutive transformer layers with a fixed patch size, in which each layer captures local and global dependencies between patches through self-attention20. The computation required by the self-attention layers scales quadratically with the number of patches (that is, the sequence length). Thus, although a smaller patch size can capture visual patterns at finer resolution, a larger patch size is computationally cheaper.

Our baseline model uses a two-stage design with patch sizes of 32 and 16 pixels, respectively (Fig. 1c). Supplementary Note A shows an ablation study using several stages with patch sizes ranging between 8 and 32 pixels.
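The trade-off between the two stages can be made concrete with a back-of-the-envelope count of the multiply-adds in the attention score matrix QKᵀ, which is the sequence length squared times the embedding size. The sketch assumes d = 64 and treats all ℓ·ki patches as a single sequence; both are assumptions for illustration.

```python
def stage_attention_cost(p, d=64, ell=6):
    """Return (patches per plane, sequence length, multiply-adds in Q K^T)."""
    k = (d // p) ** 2  # k_i nonoverlapping p x p patches per plane
    seq = ell * k      # assumed: the patches of all planes form one sequence
    dim = p * p        # embedding size epsilon_i = p_i^2
    return k, seq, seq * seq * dim

for p in (32, 16, 8):
    print(p, stage_attention_cost(p))
# 32 (4, 24, 589824)
# 16 (16, 96, 2359296)
# 8 (64, 384, 9437184)
```

Because ki = (d/pi)² and εi = pi², this cost equals ℓ²d⁴/pi², so halving the patch size quadruples the score-matrix cost. Attending within each plane separately changes the constant but not the 1/pi² scaling.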

Positional encoding

Rather than adopting the Cartesian positional encoding of ViT20, we use a polar coordinate system (r, θ) to encode the position of each patch. This choice is motivated by the radial symmetries of the Zernike polynomials and the efficiencies gained in NeRF31, coordinate-based MLPs32 and RoFormer33. For a given plane in \({\mathcal{E}}\) (Eq. 27), the radial positional encoding vector (RPE) is calculated for every patch,

where (r, θ) are the polar coordinates for the center of each patch, and m = 16. All patches and their positional encoding are then mapped into a sequence of learnable linear projections \(\zeta \in {{\mathbb{R}}}^{\ell \times {k}_{i}\times {p}_{i}^{2}}\) that we use as our input to the transformer layers in the model.
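Because the RPE equation itself is not reproduced here, the sketch below is only a hypothetical Fourier-feature encoding in the spirit of NeRF. It uses m = 16 octave frequencies for r and m integer angular harmonics for θ, which keep the encoding 2π-periodic like the cos mθ and sin mθ factors of Zernike polynomials. The patch grid (d = 64, pi = 16) and the normalization of r are also assumptions.

```python
import numpy as np

def patch_centers_polar(d=64, p=16):
    """(r, theta) of patch centers in row-major patch order; origin at plane center."""
    offsets = (np.arange(d // p) + 0.5) * p - d / 2
    yy, xx = np.meshgrid(offsets, offsets, indexing="ij")
    r = np.hypot(xx, yy) / (d / 2)  # r = 1 at the midpoints of the plane edges
    theta = np.arctan2(yy, xx)
    return r.ravel(), theta.ravel()

def radial_positional_encoding(r, theta, m=16):
    """Hypothetical encoding: octave frequencies in r, integer harmonics in theta."""
    octaves = np.pi * 2.0 ** np.arange(m)  # NeRF-style frequencies for r
    harmonics = np.arange(1, m + 1)        # angular orders 1..m
    r_feat = r[:, None] * octaves[None, :]
    t_feat = theta[:, None] * harmonics[None, :]
    return np.concatenate([np.sin(r_feat), np.cos(r_feat),
                           np.sin(t_feat), np.cos(t_feat)], axis=1)

r, theta = patch_centers_polar()
rpe = radial_positional_encoding(r, theta)  # one 4m-vector per patch
```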

Transformer building blocks

Each stage has n transformer layers. Each layer contains a multihead attention (MHA) block with h heads that maps the interdependencies between patches, followed by a multilayer perceptron (MLP) block that learns the relationships between pixels within a patch. The stage’s embedding size, \({\epsilon }_{i}={p}_{i}^{2}\), is set to match the number of voxels in a patch for that stage. The MLP block is four times wider than the embedding size (Supplementary Fig. 2c). Layer normalization (LN)34 is applied before each step, and a skip (residual) connection35 is added after each step:
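
$${\zeta }^{{\prime} }=\zeta +\mathrm{MHA}(\mathrm{LN}(\zeta )),\qquad {\zeta }^{{\prime\prime} }={\zeta }^{{\prime} }+\mathrm{MLP}(\mathrm{LN}({\zeta }^{{\prime} }))$$
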
In addition to the skip connections in each transformer layer, we also add a skip connection between the input and output of each stage. We use a dropout rate of 0.1 for each dense layer36 and stochastic depth rate of 0.1 (ref. 37). The patches from the final stage are pooled using a global average along the last dimension and passed to a fully connected layer to output z Zernike coefficients.
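A forward-pass sketch of one stage in NumPy follows: pre-LN transformer layers with an MLP four times wider than the embedding, residual connections after each step, a skip connection across the stage and the global-average-pooling head. Assumptions not stated in the text: the ℓ × ki patches are flattened into one sequence, GELU is used as the MLP activation, biases are omitted inside the layers, and dropout and stochastic depth (training-only) are left out. The weights are random, so the outputs are meaningless; the point is the data flow and tensor shapes.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    mu = x.mean(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(x.var(axis=-1, keepdims=True) + eps)

def gelu(x):
    # tanh approximation of GELU (the activation is an assumption)
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def mha(x, w_qkv, w_out, heads):
    """Multihead self-attention over a (seq, dim) sequence of patch tokens."""
    seq, dim = x.shape
    dh = dim // heads
    q, k, v = (t.reshape(seq, heads, dh).transpose(1, 0, 2)
               for t in np.split(x @ w_qkv, 3, axis=-1))
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh))  # (heads, seq, seq)
    return (attn @ v).transpose(1, 0, 2).reshape(seq, dim) @ w_out

def transformer_layer(x, p, heads):
    # LN before each step, residual connection after each step.
    x = x + mha(layer_norm(x), p["w_qkv"], p["w_out"], heads)
    return x + gelu(layer_norm(x) @ p["w1"]) @ p["w2"]

def init_layer(dim, rng, scale=0.02):
    shapes = {"w_qkv": (dim, 3 * dim), "w_out": (dim, dim),
              "w1": (dim, 4 * dim), "w2": (4 * dim, dim)}  # MLP is 4x wider
    return {name: rng.normal(0.0, scale, s) for name, s in shapes.items()}

def run_stage(x, layers, heads):
    out = x
    for p in layers:
        out = transformer_layer(out, p, heads)
    return x + out  # additional skip connection across the whole stage

def zernike_head(x, w_fc, b_fc):
    # Global average along the last (embedding) dimension, then a dense layer.
    return x.mean(axis=-1) @ w_fc + b_fc

rng = np.random.default_rng(0)
tokens = rng.normal(size=(6 * 16, 256))  # stand-in for zeta at the p_i = 16 stage
layers = [init_layer(256, rng) for _ in range(2)]
out = run_stage(tokens, layers, heads=8)
coeffs = zernike_head(out, rng.normal(0.0, 0.02, (96, 11)), np.zeros(11))
```

Here 11 output coefficients is a hypothetical choice of z.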

Attention modules

We use self-attention38 as the default attention module for all transformer layers in our model. Complementary to our approach, recent studies have explored alternative attention methods that reduce the quadratic scaling of self-attention23,39,40. Our architecture is compatible with these attention mechanisms, which could further improve the model’s efficiency.

In silico evaluations

Supplementary Note A shows an ablation study of our synthetic data simulator (Supplementary Note A.2), our multistage design (Supplementary Note A.3), our training dataset size (Supplementary Note A.4 and Supplementary Fig. 2) and details of our training hyperparameters (Supplementary Note A.5, Supplementary Table 2 and Supplementary Table 8). We also introduce a new way of measuring prediction confidence of our model using digital rotations in Supplementary Note A.6 (Supplementary Fig. 23).

We present a detailed cost analysis benchmark comparing our architecture with other widely used models such as ConvNeXt41 and ViT20 in Supplementary Note B. To further diagnose our model’s performance, we carried out a series of experiments to understand our model’s sensitivity to SNR (Supplementary Note C.1 and Supplementary Figs. 24–25), generalizability to other light sheets (Supplementary Note C.2), number of objects in the FOV (Supplementary Note C.3) and object size (Supplementary Note C.4 and Supplementary Fig. 26).

Ethics approval and consent to participate

All zebrafish husbandry and experiments were done in accordance with protocols approved by the University of California, Berkeley’s Animal Care and Use Committee and following standard protocols (animal use protocol numbers AUP-2019-09-12560-1 (Upadhyayula laboratory), AUP-2020-10-13737-1 (Swinburne laboratory) and AUP-2021-05-14347-1 (Zebrafish Facility Core Protocol)). All zebrafish used in this study were embryos younger than 72 h postfertilization. Sex determination was not a factor in our experiments.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

Data for demos are available in our GitHub repository at https://github.com/cell-observatory/aovift. The full training and testing datasets are too large to be hosted on public repositories; they can be shared upon reasonable request. Our synthetic data generator is also available at https://github.com/cell-observatory/beads_simulator, enabling users to simulate their own datasets for training and evaluation.

Code availability

Source code for training and evaluation (and all pretrained models) is available at https://github.com/cell-observatory/aovift. A Docker image is available at https://github.com/cell-observatory/aovift/pkgs/container/aovift. Deconvolution was performed using PetaKit5D (https://github.com/abcucberkeley/PetaKit5D).

References

Zhang, Q. et al. Adaptive optics for optical microscopy. Biomed. Opt. Express 14, 1732–1756 (2023).

Wang, K. et al. Rapid adaptive optical recovery of optimal resolution over large volumes. Nat. Methods 11, 625–628 (2014).

Liu, T.-L. et al. Observing the cell in its native state: imaging subcellular dynamics in multicellular organisms. Science 360, 1392 (2018).

Saha, D. et al. Practical sensorless aberration estimation for 3D microscopy with deep learning. Opt. Express 28, 29044–29053 (2020).

Rai, M. R., Li, C., Ghashghaei, H. T. & Greenbaum, A. Deep learning-based adaptive optics for light sheet fluorescence microscopy. Biomed. Opt. Express 14, 2905–2919 (2023).

Zhang, P. et al. Deep learning-driven adaptive optics for single-molecule localization microscopy. Nat. Methods 20, 1748–1758 (2023).

Hu, Q. et al. Universal adaptive optics for microscopy through embedded neural network control. Light Sci. Appl. 12, 270 (2023).

Kang, I., Zhang, Q., Yu, S. X. & Ji, N. Coordinate-based neural representations for computational adaptive optics in widefield microscopy. Nat. Mach. Intell. 6, 714–725 (2024).

Ashesh, A. et al. Microsplit: Semantic unmixing of fluorescent microscopy data. Preprint at bioRxiv https://doi.org/10.1101/2025.02.10.637323 (2025).

Mahajan, V. N. Strehl ratio for primary aberrations: some analytical results for circular and annular pupils. J. Opt. Soc. Am. 72, 1258–1266 (1982).

Bentley, J. & Olson, C. Field Guide to Lens Design (SPIE Press, 2012).

Hanser, B. M., Gustafsson, M. G. L., Agard, D. A. & Sedat, J. W. Phase-retrieved pupil functions in wide-field fluorescence microscopy. J. Microsc. 216, 32–48 (2004).

Betzig, E. A cell observatory to reveal the subcellular foundations of life. Nat. Methods 22, 646–649 (2025).

Aguet, F. et al. Membrane dynamics of dividing cells imaged by lattice light-sheet microscopy. Mol. Biol. Cell 27, 3418–3435 (2016).

Ruan, X. et al. Image processing tools for petabyte-scale light sheet microscopy data. Nat. Methods 21, 2342–2352 (2024).

Campbell, H. I., Zhang, S., Greenaway, A. H. & Restaino, S. Generalized phase diversity for wave-front sensing. Opt. Lett. 29, 2707–2709 (2004).

Lakshminarayanan, V. & Fleck, A. Zernike polynomials: a guide. J. Mod. Opt. 58, 545–561 (2011).

Liu, G. et al. Characterization, comparison, and optimization of lattice light sheets. Sci. Adv. 9, 6623 (2023).

Lomax, K. S. Business failures: another example of the analysis of failure data. J. Am. Stat. Assoc. 49, 847–852 (1954).

Dosovitskiy, A. et al. An image is worth 16 × 16 words: transformers for image recognition at scale. In Proc. International Conference on Learning Representations (ICLR, 2021).

Carion, N. et al. End-to-end object detection with transformers. In European Conference on Computer Vision (eds Vedaldi, A. et al.) 213–229 (Springer, 2020).

Arnab, A. et al. Vivit: a video vision transformer. In Proc. IEEE/CVF International Conference on Computer Vision 6836–6846 (IEEE, 2021).

Cheng, B., Misra, I., Schwing, A. G., Kirillov, A. & Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 1290–1299 (IEEE, 2022).

He, K. et al. Masked autoencoders are scalable vision learners. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 16000–16009 (IEEE, 2022).

Liu, Z. et al. Swin transformer: hierarchical vision transformer using shifted windows. In Proc. IEEE/CVF International Conference on Computer Vision 10012–10022 (IEEE, 2021).

Fan, H. et al. Multiscale vision transformers. In Proc. IEEE/CVF International Conference on Computer Vision 6824–6835 (IEEE, 2021).

Li, Y. et al. Mvitv2: improved multiscale vision transformers for classification and detection. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 4804–4814 (IEEE, 2022).

Deng, J. et al. Imagenet: a large-scale hierarchical image database. In Proc. 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, 2009).

Ryali, C. et al. Hiera: a hierarchical vision transformer without the bells-and-whistles. In Proc. International Conference on Machine Learning 29441–29454 (PMLR, 2023).

Lin, T.-Y. et al. Feature pyramid networks for object detection. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 2117–2125 (IEEE, 2017).

Mildenhall, B. et al. Nerf: representing scenes as neural radiance fields for view synthesis. Commun. ACM 65, 99–106 (2021).

Tancik, M. et al. in Advances in Neural Information Processing Systems Vol. 33 (eds Larochelle, H. et al.) 7537–7547 (Curran Associates, 2020).

Su, J. et al. Roformer: enhanced transformer with rotary position embedding. Neurocomputing 568, 127063 (2024).

Ba, L. J., Kiros, J. R. & Hinton, G. E. Layer normalization. Preprint at https://arxiv.org/abs/1607.06450 (2016).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 770–778 (IEEE, 2016).

Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I. & Salakhutdinov, R. Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 15, 1929–1958 (2014).

Huang, G., Sun, Y., Liu, Z., Sedra, D. & Weinberger, K. Q. Deep networks with stochastic depth. In Proc. Computer Vision–ECCV 2016: 14th European Conference Part IV 14. 646–661 (Springer, 2016).

Vaswani, A. et al. in Advances in Neural Information Processing Systems Vol. 30 (eds Guyon, I. et al.) 2440–2448 (Curran Associates, 2017).

Dao, T., Fu, D., Ermon, S., Rudra, A. & Ré, C. in Advances in Neural Information Processing Systems (NeurIPS 2022) Vol. 35 (eds Koyejo, S. et al.) 16344–16359 (Curran Associates, 2022).

Yang, J., Li, C., Dai, X. & Gao, J. in Advances in Neural Information Processing Systems (NeurIPS 2022) Vol. 35 (eds Koyejo, S. et al.) 4203–4217 (Curran Associates, 2022).

Liu, Z. et al. A convnet for the 2020s. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 11976–11986 (IEEE, 2022).

Acknowledgements

We thank X. Ruan, M. Mueller, P. Zwart and H. York for helpful discussions and comments. SUM159 cells used in this study were a gift from the Kirchhausen laboratory. We thank N. Shaner for providing the mChilada fluorescent protein plasmid to I.A.S., which was used to generate reagents for this study. We gratefully acknowledge the support of this work by the Laboratory Directed Research and Development (LDRD) Program of Lawrence Berkeley National Laboratory under US Department of Energy contract no. DE-AC02-05CH11231. We thank J. White for managing our computing cluster. T.A., G.L., F.G., J.L.H. and S.U. are partially supported by the Philomathia Foundation (awarded to E.B. and S.U.). T.A. and G.L. are partially supported by the Chan Zuckerberg Initiative (awarded to S.U.). T.A. and S.U. are partially supported by Lawrence Berkeley National Laboratory’s LDRD programs 7647437 and 7721359 (awarded to S.U.). T.A., D.E.M. and E.B. are supported by HHMI (awarded to E.B.). C. Shirazinnejad and D.G.D. are partially supported by NIH Grant R35GM118149 (awarded to D.G.D.). C. Simmons, I.S.A. and I.A.S. are partially supported by NIH Grant 1R01DC021710 (awarded to I.A.S.). F.G. is partially funded by the Feodor Lynen Research Fellowship, Humboldt Foundation. S.U. is funded by the Chan Zuckerberg Initiative Imaging Scientist program 2019-198142 and 2021-244163. E.B. is an HHMI Investigator. S.U. is a Chan Zuckerberg Biohub–San Francisco Investigator.

Author information

Contributions

T.A. designed the models and developed the training pipelines and evaluation benchmarks. D.E.M. designed the preprocessing algorithms and developed the microscope software for the imaging experiments. T.A. and D.E.M. designed the Fourier embedding and developed the synthetic data generator for training and validation. C. Shirazinnejad, C. Simmons, I.S.A., S.E.W., N.H., E. Hong, E. Huang, E.S.B., A.N.K., D.G.D. and I.A.S. generated the zebrafish reagents. A.N.K. prepared the cultured SUM159 cells. C. Shirazinnejad, J.L.H., K.A. and A.M.-J. prepared samples. G.L. and J.L.H. performed the imaging experiments with zebrafish. J.L.H. and K.A. performed the imaging experiments with cells. G.L., K.A. and F.G. performed the imaging experiments with beads. T.A., D.E.M. and S.U. performed analysis and prepared figures. T.A. wrote the paper with input from all co-authors. D.E.M., E.B. and S.U. edited the paper. T.A., E.B. and S.U. supervised the project.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Methods thanks Gordon Love, Chang Qiao and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available. Primary Handling Editor: Rita Strack, in collaboration with the Nature Methods team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Notes A–D, Figs. 1–26 and Tables 1–8.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alshaabi, T., Milkie, D.E., Liu, G. et al. Fourier-based three-dimensional multistage transformer for aberration correction in multicellular specimens. Nat Methods 22, 2171–2179 (2025). https://doi.org/10.1038/s41592-025-02844-7

DOI: https://doi.org/10.1038/s41592-025-02844-7