Abstract

In this study, we propose a novel keyword spotting method that integrates a dynamic convolution model with a cross-frontend mutual learning strategy. The dynamic convolution model enables the adaptive capture of diverse and time-varying acoustic patterns, while the mutual learning strategy effectively leverages complementary features extracted from multiple audio frontends to enhance the model’s generalization across different input conditions. Experimental results on the public Google Speech Commands dataset demonstrate that the proposed method achieves 97% accuracy with only 62K parameters and 6.11M FLOPs. Furthermore, the method shows strong robustness in noisy environments, maintaining reliable recognition performance even under challenging conditions with low signal-to-noise ratio. These findings highlight the efficiency and robustness of the proposed approach, making it a promising solution for real-world keyword spotting applications.

Similar content being viewed by others

Introduction

With the rapid development of technology, voice-based human–computer interaction, which once existed only in the realm of science fiction, has now permeated our daily lives. Intelligent voice assistants, such as Amazon’s Alexa and Apple’s Siri, have been widely applied across various fields of society. These intelligent systems are activated by specific wake-up words, triggering the Automatic Speech Recognition (ASR) module upon detecting predefined keywords, thereby significantly reducing the computational resources required for continuous operation of ASR systems1. Within this technological framework, Keyword Spotting (KWS), as a critical component of ASR, is primarily responsible for accurately capturing a predefined set of keywords in continuous audio streams2. The application scope of KWS is extensive, ranging from wake-up functionalities in smart devices to speech data analysis, audio content search, and intelligent phone navigation3.

Keyword Spotting technology has undergone years of development. One of its early approaches was based on Large Vocabulary Continuous Speech Recognition (LVCSR) systems4. While this method offers the flexibility to handle dynamically changing or undefined keywords, it requires substantial computational resources5, making it unsuitable for resource-constrained small electronic devices. As a more attractive alternative, the keyword/filler Hidden Markov Model (HMM) approach6 was proposed, which models acoustic features using Gaussian Mixture Models to generate the likelihood values for state emissions in keyword/filler HMMs. In 2014, the first deep learning-based KWS system was introduced7, marking a milestone in this field. The advantage of deep learning models lies in their scalability, allowing complexity to be adjusted according to computational constraints8, while achieving significant performance improvements with minimal space requirements. Thanks to their powerful performance, deep KWS systems quickly found applications in consumer electronics and spurred extensive related research9,10,11,12,13.

As a foundational technology for voice interaction, KWS systems are typically deployed in environments with strict computational resource constraints. Compared to other acoustic models, KWS systems must achieve higher computational efficiency and lower resource consumption while maintaining recognition performance. Current mainstream approaches generally transform input audio into spectrogram representations and process them using compact neural networks. However, while this simplified processing improves computational efficiency, it may also compromise the overall system performance. To enhance model performance under limited resources, researchers have proposed various innovative solutions. On the one hand, model compression techniques, such as knowledge distillation14, transfer the superior characteristics of large models to resource-constrained smaller networks. On the other hand, specialized network structures, such as deep residual structures15, depthwise separable convolutions16, and attention mechanisms17, have been designed to optimize computational efficiency. These methods alleviate, to some extent, the conflict between computational resource limitations and model performance.

However, in practical applications, KWS systems face more complex challenges. First, the diversity and unpredictability of environmental noise severely impact system reliability. Second, the dynamic nature of audio signals demands stronger adaptability from the model. Existing static network structures, while performing well under ideal conditions, often struggle to handle dynamically changing audio signals, making it difficult to balance computational efficiency and recognition accuracy.

Based on an in-depth analysis of these challenges, this study proposes an innovative solution by designing an adaptive network structure based on dynamic convolution18. Unlike traditional static convolutions, this structure dynamically adjusts convolution kernel parameters according to input features, enabling intelligent allocation of computational resources. This design allows the model to adaptively capture time-varying features in audio signals, reducing computation for simple samples while dedicating more resources to complex ones, thereby improving computational efficiency while maintaining high recognition performance. Furthermore, a cross-frontend mutual learning strategy is introduced to enhance model robustness. The core idea of this strategy is to explore how to leverage different audio frontends to extract diverse feature representations and promote knowledge exchange among these representations through deep mutual learning19. This method attempts to organically combine diverse feature extraction with the mutual learning strategy to balance the trade-off between computational efficiency and recognition performance, while improving the model’s robustness in complex environments. Through this exploratory study, we aim to validate the potential of this strategy in addressing noisy environments and provide new insights and methods for the field of audio processing.

Related work

In deep learning-based KWS systems, there are typically two core components: the preprocessing stage and the neural network-based classifier. The preprocessing stage plays a crucial role in the audio classification process, directly influencing subsequent processing steps and significantly impacting the system’s recognition accuracy and computational complexity. At this stage, various feature extraction methods have been proposed. Among them, features extracted using perceptually motivated Mel-scale filterbanks, such as log-Mel spectral coefficients and Mel-frequency cepstral coefficients(MFCC)20,21, have been widely used for decades in the fields of ASR and KWS. In deep learning-driven KWS systems, these speech features are typically normalized to have zero mean and unit variance before being fed into the acoustic model. This normalization stabilizes and accelerates the training process while improving the model’s generalization ability. Mel-scale-related features are among the most commonly used speech features in KWS, often paired with their first- and second-order derivatives to effectively capture the temporal and spectral information of speech signals. Moreover, deep learning models can leverage the spectro-temporal correlations of speech signals22 to further enhance system performance.

In recent years, various novel feature extraction methods have been proposed. Unlike traditional handcrafted features such as MFCC and log-Mel, these new methods optimize the feature extraction process according to specific tasks and training criteria, showing promise as alternatives to these well-established features. For instance, in23, the acoustic model parameters are jointly optimized with the cut-off frequencies of a filterbank based on sinc-convolutions (SincConv). Similarly, the authors of24 investigated two filterbank learning-based feature extraction methods: one learns the filterbank matrix in the power spectral domain, while the other learns the parameters of a gammachirp filterbank25, which is motivated by psychoacoustics. Zeghidour et al. introduced a novel principled, lightweight, and fully learnable architecture26 that can serve as a direct replacement for Mel filterbanks. Paola Vitolo et al. propose a convolutional autoencoder-based audio feature extraction method27 that improves classification accuracy in noisy environments while significantly reducing computational complexity for keyword spotting systems. These general-purpose learnable audio classification frontends can automatically learn and extract features from audio signals, demonstrating excellent performance across various classification tasks and exhibiting strong adaptability and robustness.

In the classifier section, the success of deep learning in the field of speech recognition has driven the widespread application of deep neural networks (DNNs), which provide efficient and space-saving solutions. The residual learning structure proposed by He et al.15 for image recognition has been widely adopted. This structure introduces a series of shortcut connections across non-contiguous layers, facilitating the training of deeper models. Howard et al.16 utilized depthwise separable convolutions to reduce the computational cost of standard CNNs by decomposing standard convolutions into depthwise convolutions and pointwise convolutions. Tang and Lin28 were the first to explore the application of deep residual learning in deep KWS. They also incorporated dilated convolutions to enlarge the receptive field of the network, enabling it to capture longer time–frequency patterns without increasing the number of parameters. Choi et al.29 proposed using one-dimensional convolutions along the time axis, which significantly reduced computational costs compared to two-dimensional convolutions. Within the deep residual learning framework (TC-ResNet), they processed MFCC features as input channels, enabling the network to capture both high-frequency and low-frequency features simultaneously with a relatively shallow architecture.

Some studies have achieved higher accuracy in keyword spotting by employing advanced architectures such as Transformers30. In early work, Berg et al. introduced the Keyword Transformer (KWT)31, showcasing the potential of self-attentional architectures for this task. Building on this, Ding et al. proposed a lightweight variant, LETR32, which reduced computational complexity and improved efficiency for resource-constrained devices. Further advancements expanded Transformer applications to multimodal scenarios. Li et al. introduced the Audio-Visual Keyword Transformer (AVKT)33, integrating audio and video inputs to enhance keyword detection and enable basic keyword localization. Similarly, Gao et al. leveraged self-supervised speech representation learning (S3RL)34 to demonstrate the effectiveness of lightweight Transformers in resource-limited environments, achieving notable performance gains by improving utterance-level distinction. The use of Transformers has also extended to multimodal sentiment analysis. Qiu et al. proposed a Joint Chained Interactive Attention Mechanism(VAE-JCIA, Video Audio Essay-Joint Chain Interactive Attention)35, which extracts consistent emotional features across video, audio, and text modalities, significantly enhancing classification performance. Additionally, Lei et al. developed a multilingual customized keyword spotting method based on Similar-Pair Contrastive Learning (SPCL)36, effectively addressing noise interference while balancing parameter efficiency and performance.

In recent years, dynamic feature extraction has achieved significant advancements in the field of deep learning. Chen et al.37 proposed Dynamic Convolution, which enables the adaptive aggregation of convolution kernels through attention mechanisms, providing a new paradigm for feature extraction. Compared to traditional static convolutions, Dynamic Convolution offers two major advantages: first, it can dynamically adjust the weights of convolution kernels based on input features, providing more flexible feature extraction capabilities; second, by dynamically combining parallel convolution kernels, it significantly enhances the model’s expressive power while maintaining computational efficiency. This method has achieved remarkable success in the field of computer vision, particularly in image classification tasks. However, existing studies are primarily limited to applications within single model architectures, failing to fully explore the potential value of Dynamic Convolution in collaborative learning frameworks.

Deep Mutual Learning (DML)19, an innovative collaborative training strategy, was first proposed by Zhang et al. Unlike the traditional teacher–student network paradigm, DML establishes an equal multi-model collaborative learning framework. Its core mechanism is to promote bidirectional knowledge transfer between models by minimizing the Kullback–Leibler (KL) divergence between their output distributions. The study demonstrates that this approach not only enhances the generalization ability of individual models but also achieves overall performance superior to independently trained models. However, existing research on mutual learning primarily focuses on knowledge exchange between networks with static structures, leaving the exploration of its integration with dynamic feature extraction mechanisms unexplored.

Although dynamic convolution and deep mutual learning have each demonstrated remarkable potential in model architecture design and training strategies, how to effectively integrate these two techniques-especially in the context of complex and dynamic keyword spotting tasks-remains a research direction in urgent need of deeper exploration. KWS tasks not only require precise capture of intricate acoustic features but also demand adaptability to diverse real-world application scenarios, presenting a valuable opportunity for technological integration. We note that the adaptive feature extraction mechanism of dynamic convolution has the potential to capture subtle changes in acoustic features, which is particularly important for improving the accuracy of keyword recognition. At the same time, the mutual learning framework provides the possibility of feature sharing between different dynamic convolution models, thereby achieving performance improvement at the model collaboration level.

In light of this, we propose a forward-looking exploration: integrating the adaptive feature extraction advantages of dynamic convolution with the collaborative training mechanism of deep mutual learning. We firmly believe that this combination will unleash significant synergistic effects, enabling models to not only adapt sensitively to the dynamic variations of input features but also strengthen and enhance overall robustness and recognition performance through deep knowledge sharing and mutual complementarity.

Proposed method

Model architecture

The model structure proposed in this study is shown in Fig. 1. The overall network design draws inspiration from Temporal Convolution29 and MobileNetV238, aiming to achieve excellent performance while maintaining low computational complexity. The model processes the input speech features as a time series, with the input feature dimensions represented as \(\textrm{X} \in {\mathbb {R}}^{T \times 1 \times F}\), where T denotes the time steps, 1 represents the single-channel input, and F is the feature dimension. In the initial stage of the model, a convolutional layer (CONV) combined with batch normalization (BN) is employed to quickly capture the primary features of the input. The convolutional layer extracts local features through a sliding window mechanism, while batch normalization effectively reduces feature distribution shifts through standardization, accelerating the training process and improving generalization performance. The introduction of batch normalization not only reduces the sensitivity of the network to weight initialization but also lays a solid foundation for the extraction of deeper features in subsequent layers.

Building upon this foundation, the network further designs an efficient feature extraction module by integrating depthwise separable convolution and residual structures. The core components include the Inverted Bottleneck Block (IBB), the Dynamic Convolution Module, and the Stacked Layers structure. The IBB adopts a three-stage structure of expansion-depthwise convolution–projection, specifically consisting of a \(1 \times 1\) convolution for channel expansion, a \(1 \times 9\) depthwise separable convolution for feature extraction, and a \(1 \times 1\) convolution for dimensionality reduction and projection. By setting the stride to 1, this structure preserves the spatial dimensions of the feature map, thereby maintaining strong feature representation capabilities and retaining detailed information while reducing computational complexity.

In the design of the Dynamic Convolution Module, this study incorporates a filter generation network and dynamic convolution operations. The filter generation network is based on the squeeze-and-excitation mechanism39, where global spatial information is compressed via average pooling. The compressed features are then processed by a two-layer fully connected network, with a ReLU activation function in between. Finally, a softmax function is applied to generate normalized attention weights for three convolution kernels. This design enables the model to dynamically adjust the convolution parameters according to the characteristics of the input data, significantly enhancing the model’s feature representation capability and adaptability to varying environments. In this design, attention over kernel37 plays a key role by dynamically assigning importance to different convolution kernels. This mechanism ensures that the model can focus on the most relevant kernels for a given input, effectively combining their contributions to extract meaningful features and improve overall performance. The dynamic convolution block achieves adaptive feature extraction and fusion by using three different convolution kernels in parallel and dynamically adjusting their weights through the attention mechanism. This allows the model to better meet the demands of different scenarios.

Figure 2 illustrates the detailed structure of the stacked layers, which form an efficient multi-scale feature extraction system by alternating between S1 and S2 blocks. The S1 module achieves efficient feature extraction by integrating channel expansion, temporal dimension processing, and residual connections. First, a \(1 \times 1\) convolution is used for channel expansion, which increases the feature dimensions while balancing performance and computational efficiency. Then, a lightweight \(1 \times 9\) depthwise convolution extracts critical information along the temporal dimension while preserving the integrity of spatial features. Finally, residual connections retain the original input features, and the combination with nonlinear activation further enhances the representation capability. This overall design strikes a good balance between efficiency, robustness, and computational cost, laying the foundation for improved model performance. The S2 block incorporates a downsampling mechanism, where a depthwise separable convolution with a stride of 2 and a \(1 \times 1\) convolution for residual connections are used to match dimensions. This effectively reduces the size of the feature map and lowers computational complexity. The alternating use of S1 and S2 blocks creates a complete multi-scale feature learning system: the S1 block focuses on capturing detailed and contextual information by maintaining the feature map dimensions, while the S2 block enhances the model’s abstract representation capability through downsampling. This design not only optimizes computational efficiency but also significantly improves the model’s ability to learn features at different scales, enhancing its generalization and overall performance.

Finally, the network compresses the spatial dimensions through a Temporal Average Pooling (TAP) layer, followed by a fully connected (FC) layer to map the features into the target class space, generating the final logits output. This carefully designed network architecture demonstrates significant advantages in terms of computational efficiency, feature representation, environmental adaptability, and model generalization. It provides an efficient and powerful solution for lightweight speech recognition tasks.

Cross-frontend optimization method

In the field of audio processing, feature extraction, as a critical step for efficient audio analysis, directly impacts the effectiveness and performance of subsequent processing. This study proposes an innovative cross-frontend model optimization method, which integrates multiple feature extraction techniques to optimize the feature extraction process and enhance the overall performance of the model, as illustrated in Fig. 3.

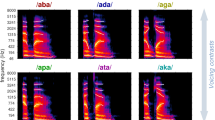

The processing flow of this method begins with the frontend processing of raw audio, focusing on two representative feature extraction techniques: the traditional Mel-Frequency Cepstral Coefficients (MFCC) and the novel Learnable Audio Frontend (LEAF). MFCC, as a classical and widely adopted speech feature extraction technique, is deeply aligned with the characteristics of the human auditory system. Specifically, MFCC simulates the human ear’s perception mechanism for different sound frequencies by first performing a Short-Time Fourier Transform (STFT) on the signal, then analyzing the spectrum using a Mel filter bank, and finally applying a Discrete Cosine Transform (DCT) to obtain the final feature coefficients. This cepstral-based method effectively extracts key features of speech signals and is recognized as one of the most effective algorithms for speech feature extraction.

In contrast to traditional methods, LEAF offers a novel learnable audio frontend solution, serving as a powerful alternative to traditional Mel-filterbanks. The core advantage of LEAF lies in its end-to-end learnable nature, where a unified frontend model can be trained to adaptively learn optimal feature representations. In practice, LEAF has demonstrated exceptional performance across various audio signal processing tasks, including speech, environmental sound, and animal sound classification. Compared to manually designed Mel-filterbanks, LEAF not only exhibits greater flexibility and efficiency but also automatically adjusts its parameters according to specific tasks, thereby obtaining more suitable feature representations.

To fully leverage the strengths of both feature extraction methods, this study innovatively adopts a Deep Mutual Learning (DML) strategy. This approach enables two models with different frontend processing techniques to learn from and optimize each other during training through a bidirectional knowledge distillation process. Specifically, the Kullback–Leibler (KL) divergence is utilized to measure the difference between the output probability distributions of the two models, facilitating mutual knowledge transfer. Let \(\mathbf {p_{1}}\) and \(\mathbf {p_{2}}\) represent the output probabilities of the two models, respectively. The KL divergence from \(\mathbf {p_{1}}\) to \(\mathbf {p_{2}}\) is calculated as follows:

Where N is the number of samples, M is the number of classes, \({p_{2}^{m}}(\mathbf {x_i})\) is the probability of the m-th class for the i-th sample predicted by the second model, and \({p_{1}^{m}}(\mathbf {x_i})\) is the corresponding probability predicted by the first model. This divergence quantifies how closely the output distribution of one model approximates that of the other.

Based on this, the loss functions of the two networks are defined as \(L_{1}\) and \(L_{2}\):

\(L_{c1}\) and \(L_{c2}\) denote the cross-entropy (CE) loss functions of the two models, which minimize the discrepancy between the predicted probability distributions and the true labels. The mutual learning strategy, in contrast to traditional knowledge distillation that relies on a pre-trained teacher model, enables equal collaboration between models by dynamically sharing prediction distributions. This bidirectional information exchange allows the models to learn from each other’s feature representations, thereby introducing a novel paradigm for collaborative learning and paving the way for simultaneous performance improvement.

To explore the effectiveness of this approach, we developed two independent yet complementary frontend models based on LEAF and MFCC, with bidirectional knowledge transfer facilitated through the mutual learning strategy. LEAF’s adaptability is designed to capture complex audio features, while MFCC’s stability provides a robust feature foundation. By integrating these characteristics during collaboration, the proposed method aims to leverage their complementary strengths, potentially enhancing both the generalization and environmental adaptability of the system.

To clarify, while both LEAF and MFCC-based models are integral to the mutual learning strategy proposed in this study, the MFCC-based model was preliminarily considered for potential application scenarios due to its computational efficiency and robustness. However, the actual contributions and complementary strengths of these models are further investigated in the subsequent ablation studies, which aim to provide a comprehensive evaluation of their roles within the proposed framework. These findings will guide future decisions regarding the optimal deployment of the system in real-world environments.

Experiments

Experimental settings

We evaluate our method using Google’s Speech Commands Dataset40, which contains about 65K one-second-long utterance files of 30 different keywords from thousands of people. Following Google’s implementation, we seek to discriminate among 12 classes: “yes,” “no,” “up,” “down,” “left,” “right,” “on,” “off,” “stop,” “go”, unknown, or silence. The dataset is split into 80% for training set, 10% for validation set and 10% for test set according to the SHA-1 hashed name of the audio files. In terms of experimental implementation, we used the PyTorch framework for model training and evaluation. Specifically, the training configuration employed the Adam optimizer with an initial learning rate of 0.01, a total of 50 training epochs, and a batch size of 100. In this study, two backbone models were designed and trained, with the feature maps obtained from the audio frontend processing being fed into these two models separately. A comparative analysis was conducted to evaluate the performance of different models. Additionally, we specifically designed a series of experiments to validate the effectiveness of the mutual learning strategy, focusing on its contribution to improving model performance.

Ablation studies

This study designed two different network architectures, TempConvNet and DynTempConvNet, and utilized two distinct audio frontends, LEAF and MFCC, to comprehensively evaluate the performance of models under different configurations. As shown in Table 1, by introducing the dynamic convolution, DynTempConvNet achieved stable performance improvements while only increasing FLOPs by 0.63M (from 5.48 to 6.11M) and parameters by 10K (from 52 to 62K) compared to the baseline TempConvNet. This result indicates that dynamic convolution can indeed extract features more effectively, demonstrating its advantages in feature extraction.

From the experimental data in Table 1, it can be observed that on the v1 dataset, DynTempConvNet with the MFCC frontend achieved the best performance of 96.50%, while on the v2 dataset, DynTempConvNet with the LEAF frontend reached the highest accuracy of 97.27%. These results fully demonstrate that dynamic convolution consistently maintains performance advantages across different audio frontends and datasets. It is worth noting that, despite changes in the model structure, both the LEAF and MFCC frontends were able to adapt well to the network structures, showcasing excellent adaptability.

Mutual learning with homogeneous frontends

To fully validate the potential of the models, this experiment adopted identical model structures and audio frontends, while introducing the mutual learning strategy. By sharing and optimizing each other’s knowledge, the experiment explored the effectiveness of homogeneous frontends.

Tables 2 and 3 present the results on the v1 and v2 datasets. The experimental results show that, compared to independent training, models employing the mutual learning strategy exhibited stable performance improvements across various configurations. When using the LEAF frontend, the mutual learning strategy brought varying degrees of performance improvement to both the TempConvNet and DynTempConvNet structures. Similarly, when using the MFCC frontend, both network structures achieved significant performance enhancements, with DynTempConvNet achieving the best performance.

These results further validate two important findings. First, the mutual learning strategy demonstrates universal effectiveness within homogeneous model structures. Second, even with identical network structures, mutual learning can still develop differentiated optimization paths, thereby achieving greater performance improvements.

Mutual learning with heterogeneous frontends

This experiment adopted identical model structures and different audio frontends, while introducing the mutual learning strategy to explore the effects of heterogeneous frontends by sharing and optimizing each other’s knowledge.

Tables 4 and 5 present the results of experiments using heterogeneous frontends (LEAF and MFCC) but identical network structures on the v1 and v2 datasets. These results also highlight differences between the v1 and v2 datasets in terms of how mutual learning benefits each frontend.

For the v1 dataset, the model with the MFCC frontend consistently achieved greater improvements compared to the LEAF frontend, especially with the TempConvNet structure (+0.43% vs. +0.1%) and DynTempConvNet structure (+0.5% vs. +0.13%). This suggests that the MFCC frontend benefited more from the complementary features provided by LEAF, which may capture richer or more diverse representations on this dataset.

In contrast, for the v2 dataset, the improvements were more balanced. With the TempConvNet structure, the LEAF frontend showed slightly larger gains (+0.32% vs. +0.17%), while with the DynTempConvNet structure, both frontends achieved similar improvements (+0.25% vs. +0.23%). This indicates that on v2, the features extracted by LEAF and MFCC were more equally complementary, allowing both models to benefit similarly from mutual learning.

These observations demonstrate that the effectiveness of mutual learning with heterogeneous frontends depends on the characteristics of the dataset. In datasets like v1, where one frontend captures more diverse or complementary features, the other frontend can benefit more significantly. Meanwhile, on datasets like v2, where the feature complementarity is more balanced, both frontends achieve comparable improvements.

In summary, the mutual learning strategy effectively leverages the complementary nature of heterogeneous frontends, enabling the models to learn richer and more robust feature representations. The DynTempConvNet structure, in particular, demonstrates strong adaptability and fusion capabilities, resulting in consistent performance gains across datasets.

Performance comparison of models

Table 6 presents a comprehensive performance comparison between DynTempConvNet and current mainstream models, clearly showcasing the competitive advantages of the proposed model through detailed data on parameter count, computational complexity, and accuracy.

From the perspective of parameter efficiency, DynTempConvNet utilizes only 62K parameters, which is comparable to the lightweight model TENet6 (54K) and significantly lower than larger models such as TCNet14 (305K) and F-DARTS (188K), demonstrating excellent parameter efficiency.Compared to Transformer-based models like KWT-1 (607K) and LeTR-128 (617K), DynTempConvNet achieves a substantial reduction in parameter count while delivering competitive or even superior performance. Although Transformer models have demonstrated exceptional capabilities in various tasks, their relatively high parameter count and computational complexity often make them less suitable for resource-constrained scenarios. DynTempConvNet, on the other hand, strikes a better balance between efficiency and performance, making it a more practical choice for lightweight keyword spotting tasks.

In terms of computational complexity, DynTempConvNet achieves FLOPs of 6.11M, which is at a relatively moderate level. Compared to models like DyConv (7.69M) and TCNet14 (8.26M), the proposed model maintains a relatively low computational overhead while delivering superior performance. This characteristic fully reflects the efficiency of the model design, offering greater potential for practical application scenarios.

In terms of performance, DynTempConvNet achieved remarkable results on both datasets. Specifically, the model reached a best accuracy of 97.17% on the V1 dataset and an even higher accuracy of 97.56% on the V2 dataset, surpassing all the compared models. These experimental results strongly demonstrate that DynTempConvNet not only has advantages in efficiency but also maintains a leading position in performance.

Performance analysis across SNRs

In this study, we conducted an in-depth evaluation of the robustness of the proposed method under unseen noise conditions. Specifically, we added noise samples from the Urban44 and WHAM45 datasets to the Google Speech Commands dataset at different signal-to-noise ratios (SNRs). The models were then tested under various SNR levels to assess their robustness to unseen noise. The benchmark model used in the experiments was TCNet14, and we also included TENet6, which has a comparable number of parameters, for comparison. Additionally, we compared the performance of using mutual learning (DynTempConvNet+DML) and not using the mutual learning strategy (DynTempConvNet). The experimental results are presented in Tables 7 and 8.

We observed significant differences and trends in the performance of the v1 and v2 versions of the models under various SNR conditions. Firstly, for the v1 dataset, DynTempConvNet+DML demonstrated outstanding performance across all SNR conditions, especially in low-SNR environments (e.g., Urban 0 dB and WHAM 0 dB), where it significantly outperformed other models. This result indicates that DynTempConvNet+DML exhibits strong robustness and adaptability in handling complex noisy environments. Secondly, although DynTempConvNet did not adopt the DML technique, its performance closely followed, showcasing its inherent advantages in architectural design. In contrast, TENet6 and TCNet14 performed relatively weaker under extreme noise conditions (e.g., Urban 0 dB and WHAM 0 dB), with a noticeable decline in performance.

In the analysis of the v2 dataset, the overall performance showed significant improvement compared to v1. DynTempConvNet+DML continued to maintain its leading position in v2, achieving even better results under all SNR conditions, particularly in low-SNR scenarios, where its noise adaptability was further enhanced. Additionally, DynTempConvNet also exhibited notable improvements in v2, especially under moderate noise conditions (e.g., Urban 5 dB and WHAM 5 dB). In comparison, while TENet6 and TCNet14 showed some improvement, their performance enhancements were less pronounced than the first two models, though they still achieved certain gains under moderate noise conditions (e.g., Urban 10 dB and WHAM 10 dB).

From a comprehensive analysis of v1 and v2 datasets, the v2 version outperformed v1 in most cases, indicating that the model’s optimization and upgrades have made significant progress in handling noise. In both v1 and v2, DynTempConvNet+DML consistently delivered the best performance, especially under extreme noise conditions, fully validating its robustness and stability. DynTempConvNet also demonstrated strong adaptability, closely following DynTempConvNet + DML, highlighting its potential in architectural design. In contrast, while TENet6 and TCNet14 showed some improvement, they consistently lagged behind the DynTempConvNet series, indicating room for improvement in their performance under complex noise environments.

In summary, the improvements in the v2 version have enhanced the performance of all models in noisy environments, with the DynTempConvNet series showing the most significant gains. In particular, DynTempConvNet+DML achieved the best performance across all SNR conditions, demonstrating great potential in audio processing tasks, whether in high-SNR or low-SNR scenarios. Therefore, in practical applications, DynTempConvNet+DML is a highly recommended model, especially for scenarios requiring the handling of complex noisy environments.

Additionally, to further validate performance under lower SNR conditions, we utilized the QUT dataset46, which contains audio data with living room noise, to test the performance of different models under various SNR conditions. The tested models included DynTempConvNet+DML, DynTempConvNet, TENet6, and TCNet14, with each model having two versions (v1 and v2). The SNR range spanned from 30 to \(-12.5\) dB, covering a wide range of conditions from relatively clean audio environments to extreme noise environments. This approach allowed us to comprehensively evaluate the models’ performance in different noise environments, providing a foundation for further model optimization and practical applications. The trends are illustrated in Figs. 4 and 5.

Under high SNR conditions (30 dB and 20 dB), the performance of all models was very close, with accuracy rates remaining above 95%. DynTempConvNet+DML v2 achieved the best performance at 30 dB (97.15%) and maintained high performance at 20 dB. This indicates that in relatively clean audio environments, all models can effectively handle noise, with minimal performance differences.

As the SNR decreased to 10 dB and 0 dB, significant differences in model performance began to emerge. The v2 versions of DynTempConvNet+DML and DynTempConvNet outperformed other models and versions under these conditions. This suggests that these models exhibit better robustness when handling moderate noise levels.

Under extremely low SNR conditions (\(-10\) dB and \(-12.5\) dB), the performance of all models declined significantly. However, DynTempConvNet+DML v2 maintained relatively high accuracy rates at \(-10\) dB and \(-12.5\) dB (75.00% and 67.75%, respectively). In contrast, TCNet14 experienced the most severe performance drop under low SNR conditions, with its v1 version achieving only 53.43% accuracy at \(-12.5\) dB.

A comprehensive comparison with existing methods shows that DynTempConvNet successfully achieves a balance between lightweight design and high performance. Its ability to maintain low resource consumption while achieving or even surpassing the performance of larger-scale models demonstrates its strong practical value and potential for widespread application. This achievement not only validates the effectiveness of the proposed method but also provides new ideas and references for research in related fields.

Overall, DynTempConvNet+DML v2 performed excellently under all test conditions, particularly in low-SNR environments, where it exhibited strong robustness and adaptability. In comparison, while other models and versions performed well under high-SNR conditions, their performance deteriorated more significantly as noise levels increased. These analyses provide a clearer understanding of the strengths and weaknesses of different models in handling noisy audio data, offering important insights for future model optimization and selection.

Conclusion

In this study, we propose a novel cross-frontend keyword spotting method based on a dynamic convolution model. By dynamically adjusting convolutional filters, the method effectively captures diverse acoustic patterns. Combined with a mutual learning strategy, it enhances generalization across different frontend conditions, achieving robust keyword spotting.

The results demonstrate that dynamic convolution and the mutual learning strategy are pivotal in improving performance and noise resistance. The mutual learning strategy leverages the complementarity of features extracted by different audio frontends, significantly enhancing model performance. Meanwhile, the dynamic convolution structure facilitates effective feature fusion across heterogeneous frontends, improving adaptability to diverse input conditions. Notably, the method achieves strong noise resistance under low SNR conditions, offering an efficient and robust solution for keyword spotting in real-world scenarios.

However, it is worth noting that the proposed method may face challenges related to asymmetric knowledge transfer between models during the mutual learning process, which could affect the balance of learning. Additionally, when the feature spaces of different frontends overlap significantly, the mutual learning process may result in redundant information exchange. These limitations highlight directions for future work, such as designing mechanisms to balance knowledge transfer and encouraging the models to learn more complementary features.

Data availability

The datasets used and/or analysed during the current study available from the corresponding author on reasonable request.

References

Michaely, A. H., Zhang, X., Simko, G., Parada, C. & Aleksic, P. Keyword spotting for google assistant using contextual speech recognition. In 2017 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU) 272–278 (IEEE, 2017).

Vinyals, O. & Wegmann, S. Chasing the metric: Smoothing learning algorithms for keyword detection. In 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 3301–3305 (IEEE, 2014).

Zhuang, Y., Chang, X., Qian, Y. & Yu, K. Unrestricted vocabulary keyword spotting using LSTM-CTC. In Interspeech 938–942 (2016).

Weintraub, M. Keyword-spotting using SRI’s decipher large-vocabulary speech-recognition system. In 1993 IEEE International Conference on Acoustics, Speech, and Signal Processing, vol. 2, 463–466 (IEEE, 1993).

Shan, C., Zhang, J., Wang, Y. & Xie, L. Attention-based end-to-end models for small-footprint keyword spotting. arXiv:1803.10916 (2018).

Rohlicek, J. R., Russell, W., Roukos, S. & Gish, H. Continuous hidden Markov modeling for speaker-independent word spotting. In International Conference on Acoustics, Speech, and Signal Processing 627–630 (IEEE, 1989).

Chen, G., Parada, C. & Heigold, G. Small-footprint keyword spotting using deep neural networks. In 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 4087–4091 (IEEE, 2014).

Wang, X. et al. Adversarial examples for improving end-to-end attention-based small-footprint keyword spotting. In ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 6366–6370 (IEEE, 2019).

Sainath, T. N. & Parada, C. Convolutional neural networks for small-footprint keyword spotting. In Interspeech 1478–1482 (2015).

Sun, M. et al. Max-pooling loss training of long short-term memory networks for small-footprint keyword spotting. In 2016 IEEE Spoken Language Technology Workshop (SLT) 474–480 (IEEE, 2016).

Tang, R. & Lin, J. Deep residual learning for small-footprint keyword spotting. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 5484–5488 (IEEE, 2018).

Alvarez, R. & Park, H.-J. End-to-end streaming keyword spotting. In ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 6336–6340 (IEEE, 2019).

Rybakov, O., Kononenko, N., Subrahmanya, N., Visontai, M. & Laurenzo, S. Streaming keyword spotting on mobile devices. arXiv:2005.06720 (2020).

Song, Z., Liu, Q., Yang, Q. & Li, H. Knowledge distillation for in-memory keyword spotting model. In INTERSPEECH 4128–4132 (2022).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision And Pattern Recognition 770–778 (2016).

Howard, A. G. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv:1704.04861 (2017).

Luo, R. et al. Multi-layer attention mechanism for speech keyword recognition. arXiv:1907.04536 (2019).

Jia, X., De Brabandere, B., Tuytelaars, T. & Gool, L. V. Dynamic filter networks. Adv. Neural Inf. Process. Syst. 29 (2016).

Zhang, Y., Xiang, T., Hospedales, T. M. & Lu, H. Deep mutual learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4320–4328 (2018).

Volkmann, J., Stevens, S. & Newman, E. A scale for the measurement of the psychological magnitude pitch. J. Acoust. Soc. Am. 8, 208–208. https://doi.org/10.1121/1.1901999 (2005).

Davis, S. & Mermelstein, P. Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE Trans. Acoust. Speech Signal Process. 28, 357–366 (1980).

Watanabe, S., Delcroix, M., Metze, F. & Hershey, J. R. New Era for Robust Speech Recognition (Springer, Cham, 2017).

Ravanelli, M. & Bengio, Y. Speaker recognition from raw waveform with sincnet. In 2018 IEEE Spoken Language Technology Workshop (SLT) 1021–1028 (IEEE, 2018).

López-Espejo, I., Tan, Z.-H. & Jensen, J. Exploring filterbank learning for keyword spotting. In 2020 28th European Signal Processing Conference (EUSIPCO) 331–335 (IEEE, 2021).

Irino, T. & Unoki, M. An analysis/synthesis auditory filterbank based on an IIR implementation of the gammachirp. J. Acoust. Soc. Jpn. (E) 20, 397–406 (1999).

Zeghidour, N., Teboul, O., Quitry, F. D. C. & Tagliasacchi, M. Leaf: A learnable frontend for audio classification. arXiv:2101.08596 (2021).

Vitolo, P., Liguori, R., Di Benedetto, L., Rubino, A. & Licciardo, G. D. Automatic audio feature extraction for keyword spotting. IEEE Signal Process. Lett. 31, 161–165 (2023).

Tang, R. & Lin, J. Deep residual learning for small-footprint keyword spotting. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 5484–5488 (IEEE, 2018).

Choi, S. et al. Temporal convolution for real-time keyword spotting on mobile devices. In Interspeech (2019).

Berg, A., O’Connor, M. & Cruz, M. T. Keyword transformer: A self-attention model for keyword spotting. In Interspeech (2021).

Berg, A., O’Connor, M. & Cruz, M. T. Keyword transformer: A self-attention model for keyword spotting. In Interspeech. https://doi.org/10.21437/interspeech.2021-1286 (ISCA, 2021).

Ding, K., Zong, M., Li, J. & Li, B. Letr: A lightweight and efficient transformer for keyword spotting. In ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 7987–7991. https://doi.org/10.1109/ICASSP43922.2022.9747295 (2022).

Li, Y. et al. Audio-visual keyword transformer for unconstrained sentence-level keyword spotting. CAAI Trans. Intell. Technol. 9, 142–152 (2024).

Gao, C., Gu, Y., Caliva, F. & Liu, Y. Self-supervised speech representation learning for keyword-spotting with light-weight transformers (2023). arXiv:2303.04255.

Qiu, K. et al. A multimodal sentiment analysis approach based on a joint chained interactive attention mechanism. Electronics 13 https://doi.org/10.3390/electronics13101922 (2024).

Lei, L., Yuan, G., Yu, H., Kong, D. & He, Y. Multilingual customized keyword spotting using similar-pair contrastive learning. IEEE/ACM Trans. Audio Speech Lang. Process. 31, 2437–2447. https://doi.org/10.1109/TASLP.2023.3284523 (2023).

Chen, Y. et al. Dynamic convolution: Attention over convolution kernels. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 11027–11036 (2019).

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4510–4520 (2018).

Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 7132–7141 (2018).

Warden, P. Speech commands: A dataset for limited-vocabulary speech recognition. arXiv:1804.03209 (2018).

Wu, F., Fan, A., Baevski, A., Dauphin, Y. N. & Auli, M. Pay less attention with lightweight and dynamic convolutions. arXiv:1901.10430 (2019).

Li, X., Wei, X. & Qin, X. Small-footprint keyword spotting with multi-scale temporal convolution. arXiv:2010.09960 (2020).

Zhang, B. et al. Autokws: Keyword spotting with differentiable architecture search. In ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2830–2834 (IEEE, 2021).

Salamon, J., Jacoby, C. & Bello, J. P. A dataset and taxonomy for urban sound research. In Proceedings of the 22nd ACM International Conference on Multimedia (2014).

Wichern, G. et al. Wham!: Extending speech separation to noisy environments. In Interspeech (2019).

Dean, D., Sridharan, S., Vogt, R. & Mason, M. The QUT-NOISE-TIMIT corpus for the evaluation of voice activity detection algorithms. In Interspeech (2010).

Acknowledgement

This work is supported by The Key Research and Development Program of Guangxi (No. AD25069071) .

Author information

Authors and Affiliations

Contributions

Rongqi Liu: investigation, formal analysis, validation, visualization, software, writing—original draft. Wenkang Chen: conceptualization, data curation, visualization, writing—review and editing. Xuejun Zhang: data curation, methodology, writing—review and editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, R., Chen, W. & Zhang, X. Dynamic convolution models for cross-frontend keyword spotting. Sci Rep 15, 16745 (2025). https://doi.org/10.1038/s41598-025-00304-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-00304-y