Abstract

This study aims to forecast the spread of acute diarrhoea and dengue diseases in India by conducting a comparative analysis of statistical, mathematical (compartmental), and deep learning time series models. Utilizing weekly reported cases and fatalities from January 1, 2011, to Week 33, 2024, we evaluated ten forecasting techniques, including Regression, Bayesian Linear Regression with MultiOutputRegressor + XGBoost, SIR model, Prophet, N-BEATS, GluonTS, LSTM, Seq2Seq, and the ARIMA statistical model. Performance was assessed using mean absolute percentage error (MAPE) and root mean square error (RMSE). Our findings indicate that the ARIMA model excels in predicting acute diarrhoeal disease cases, achieving an RMSE of 317.7 and a MAPE of 2.4. Conversely, the Seq2Seq model outperforms others in forecasting dengue cases, with an RMSE of 399.1 and a MAPE of 6.3. Additionally, models such as N-BEATS and LSTM demonstrated strong predictive capabilities, while traditional models like Regression and the SIR compartmental model showed higher error rates. This research underscores the importance of selecting appropriate forecasting models to enhance disease prediction accuracy, thereby providing valuable insights for policymakers to effectively allocate healthcare resources and implement targeted intervention strategies.

Similar content being viewed by others

Introduction

Without a vaccine or cure for many infectious diseases, the health infrastructure and services must be carefully planned to ensure the best possible outcomes. Forecasting can control the spread of diseases. Thus, estimating the total number of confirmed cases is crucial to planning the housing supply in the healthcare system and building additional medical facilities if needed. We can estimate short-term and long-term infected cases using mathematics, statistics and machine learning tools. With this, we can effectively plan and estimate the need for additional materials and resources to deal with the outbreak. This estimate of the expected burden on the healthcare system is critical for managing medical facilities and other necessary resources in a timely and effective manner to combat the pandemic. These forecasts can help determine the scope of the outbreak and the number of preventive actions.

Studies estimate that the risk of dengue (a mosquito-borne viral disease) fever covers a population of over 3.9 billion people1. There are 128 countries where the disease is endemic (Africa, Eastern Mediterranean, South Asia, South-East Asia and Western Pacific)2,3,4. Models from the time series forecasting and machine learning category are broadly used to forecast dengue5,6. For one to twelve weeks ahead forecasting, models use meteorological factors as covariates, such as precipitation and temperature, which is then clubbed with the historical dengue data7,8,9,10,11,12. Multiple trends and outliers pose a serious challenge in forecasting complex requirements from conventional time series models such as autoregressive integrated moving average (ARIMA)13,14,15,16.

Numerous fields, such as geography, ecology, and epidemiology, have relied on optimization17 and machine learning (ML) methods in the last two decades to derive useful information from massive amounts of heterogeneous datasets. It is possible to include many correlated variables in machine learning models. These models can incorporate intricate interactions and relationships between these variables without assuming the underlying function’s form18,19. This problem-solving approach is observed to be more flexible. Deep learning, a part of machine learning involving neural networks, has been demonstrated to be an excellent function approximator in complex problems of speech/text recognition, computer vision, natural language processing and so on. Commonly used neural network architectures are convolutional neural network (CNN), recurrent neural network (RNN), fully connected deep neural network (FCDNN), transformers etc. These function approximators can be used to forecast diseases. World Health Organization (WHO) estimates 2.2 million deaths from acute diarrheal disease from 1.7 billion total cases20.

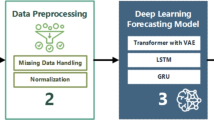

Figure 1 is the flowchart that illustrates the complete workflow of the study. The process starts with sourcing weekly disease data on acute diarrhoea and dengue cases from the National Centre for Disease Control (NCDC). The data undergoes preprocessing (cleaning, normalization, and transformation) and is used to develop forecasting models. These models, including statistical (ARIMA), mathematical (SIR), and deep learning models (LSTM, Seq2Seq), are evaluated using metrics like RMSE and MAPE. A comparative analysis of the models determines the best model for forecasting. Finally, the results are analyzed to derive actionable insights that inform public health decisions. The flowchart provides a clear overview of these sequential processes.

This work is the first to present the comparative analysis of different statistical, mathematical (compartmental), and deep learning models for Indian disease data sets. The notable contributions of the proposed study are as follows:

-

Collected disease trends data in raw form from the Indian government website. Data is converted into a weekly format of reported cases and fatalities from January 1, 2009, to week 33 of 2024, which we have used to accurately predict new incidents and death cases.

-

To further enhance the accuracy and relevance of this study, we have incorporated the latest available data up to week 33 of 2024. This inclusion allows the models to leverage the most recent trends in disease spread, ensuring that the findings reflect current scenarios and provide actionable insights for policymakers. The updated dataset strengthens the study’s applicability by addressing evolving patterns in acute diarrhoea and dengue cases, making it more aligned with real-time needs in public health planning.

-

Examined the sequence-to-sequence (Seq2Seq) model and government data on disease trends to evaluate and forecast the disease prevalence over several weeks. Predictions for multiple time steps have been computed using the Seq2Seq model.

-

The proposed study is evaluated by comparing ten different disease spread forecasting techniques, Regression, Bayesian Linear Regression MultiOutputRegressor21 + XGBoost22, SIR model23, Prophet24, NBEATS25, Gluonts26, LSTM, Seq2Seq, ARIMA statistical model24, and Seq2Seq model using two evaluation parameters: mean absolute percentage error (MAPE) and root mean square error (RMSE).

The main contribution of this manuscript is given below:

-

Public Health Significance: Forecasting the spread of infectious diseases, including acute diarrhea and dengue, is critical for public health authorities tasked with managing limited healthcare resources and strategizing effective interventions. India, with its diverse socio-environmental conditions and seasonal disease patterns, frequently experiences outbreaks of these conditions. By accurately predicting future case counts, public health officials can pre-emptively allocate medical supplies, ensure adequate hospital bed capacity, and implement targeted prevention measures (e.g., vector control for dengue, sanitation improvements for diarrhea).

-

Data-Driven Policy and Intervention Strategies: Effective epidemic forecasting models enable evidence-based decision-making. When policymakers understand when and where disease cases are likely to surge, they can initiate timely vaccination drives, distribute rehydration solutions and vector control measures, and raise community awareness campaigns. Such proactive approaches can significantly reduce morbidity, mortality, and the socioeconomic burden of disease outbreaks.

-

Bridging Research Gaps: While several modeling techniques have been developed for infectious disease forecasting, there is a lack of comprehensive comparative studies that evaluate the performance of diverse model categories statistical, mathematical (compartmental), and deep learning on the same dataset in the Indian context. Our work addresses this gap by providing a systematic, side-by-side evaluation. This perspective is essential for identifying which modeling approaches are most accurate, robust, and suitable given the complexity and variability of local disease data.

-

Complex Data and Disease Dynamics: Acute diarrheal and dengue diseases are influenced by multiple, interrelated factors such as climate conditions, population density, hygiene standards, and regional healthcare infrastructure. Traditional models alone may not capture these nonlinear, long-term dependencies, while deep learning approaches can sometimes lack interpretability. By comparing various models, our study highlights the strengths and limitations of each approach. This helps practitioners choose the most appropriate tools for specific forecasting needs, time horizons, and data conditions.

-

Future-Proofing Public Health Planning: The landscapes of public health challenges are continuously evolving due to climate change, population mobility, and the emergence of new disease variants. Having a suite of validated forecasting methods at one’s disposal provides flexibility and resilience in adapting to changing conditions. Our study not only suggests which models perform best under current scenarios but also provides a framework that can be extended to incorporate newer data sources (e.g., environmental factors) and improved algorithms as they become available.

-

Contributions to the Academic and Policy Communities:By demonstrating the comparative performance of multiple models using real-world data, our study offers a benchmark for future research. It encourages researchers to develop hybrid or improved models and guides policymakers towards more data-driven strategies. This dual impact—academic advancement and policymaking support—further justifies the need for this research.

Despite significant advances in statistical, mathematical, and machine learning models for disease forecasting, several critical research gaps remain. Current forecasting models often struggle with:

-

1.

Effectively handling sparse and noisy datasets, which is common in real-world disease surveillance systems.

-

2.

Addressing the variability and seasonality inherent in diseases such as acute diarrhoea and dengue, which are influenced by multiple external factors like climate and population density.

-

3.

Providing a comprehensive comparison of various forecasting techniques to identify the most effective approaches under different scenarios.

-

4.

Offering models tailored to regional datasets, particularly for developing nations like India, where disease dynamics are unique due to socio-environmental factors.

This study bridges these gaps by systematically evaluating statistical, mathematical, and deep learning models for forecasting disease spread in India, aiming to improve the precision and applicability of predictive models in such contexts.

The study hypothesizes that integrating statistical, mathematical (compartmental), and deep learning models can improve the accuracy, robustness, and reliability of disease forecasting. By leveraging the strengths of each modeling approach and addressing their individual limitations, the study aims to create a framework that is adaptable to diverse datasets and provides actionable insights for public health planning.

While it is true that the foundational methods, statistical models like ARIMA, compartmental models like SIR, and deep learning architectures such as LSTM and Seq2Seq are well-established techniques, our study’s novelty lies in the integrative approach applied to a specific and underexplored epidemiological context, as well as the development of a comprehensive workflow that addresses practical considerations in disease forecasting. We have taken several steps to ensure that our work extends beyond merely applying known methods:

-

1.

Contextual Novelty in Disease Forecasting:

Although these modeling techniques have been individually employed for various diseases and regions, our study applies them specifically to acute diarrheal disease and dengue in India, where high-quality longitudinal forecasting studies are still relatively limited. By focusing on these diseases, we provide targeted insights that are highly relevant for regional public health policy, enhancing the practical impact of our work.

-

2.

Systematic, Multimethod Comparison:

Instead of relying on a single model category or method, we present a systematic comparison across a broad spectrum of models—statistical, mathematical (compartmental), and deep learning. Such a comprehensive evaluation is less common in the literature, allowing us to benchmark the strengths and limitations of each approach under identical data and evaluation conditions. This comparative methodology ensures we provide a more robust understanding of which models work best under different scenarios.

-

3.

Unified Evaluation Framework with Long-Term Data:

We apply these methods to a multi-year dataset (now extended up to Week 33, 2024), spanning over a decade of disease incidence. This extended temporal coverage and uniform evaluation framework provide an enriched environment to observe how these models handle complex seasonal patterns, changing disease dynamics, and variations in reporting standards over time. The long-term data provides additional nuance and rigor in model evaluation, ensuring our findings are not just snapshot analyses but meaningful insights across time.

-

4.

Guidance for Practitioners and Policymakers:

While many studies focus on improving well-known algorithms, our work is uniquely positioned to provide actionable knowledge for public health practitioners and policymakers. By elucidating which models excel in short-term versus long-term forecasting, and by highlighting practical considerations for data preparation and model selection, we deliver knowledge that can be directly applied to decision making in public health settings, rather than just theoretical model evaluation.

-

5.

Establishing Baselines for Future Research:

Our comprehensive suite of methods and transparent reporting of outcomes establishes a benchmark for future research. Researchers can use our findings as a reference point to develop novel hybrid models, incorporate external factors (such as climate or mobility data), or apply emerging techniques to improve upon the baselines we have established, thus advancing the field of disease forecasting.

While the individual models used are well-established in the literature, the novelty of this study stems from its integrated application, thorough comparison, extended temporal analysis, and specific epidemiological context, all of which together contribute valuable insights for public health forecasting and strategic decision-making.

The paper aims to estimate the scenario of acute diarrhoea and dengue disease spread in India. The study uses statistical, mathematical (compartmental), and deep learning time series models to forecast the spread of these diseases. The predictions are based on the number of reported cases and fatalities between January 1, 2011, and 33rd week of 2024. The content compares ten different techniques for disease spread forecast, including regression, Bayesian linear regression, SIR model, Prophet, NBEATS, Gluonts, LSTM, Seq2Seq, and ARIMA statistical model. The study aims to provide insights that can help policymakers develop and monitor strategies to combat these diseases.

The paper is organized as follows. The “Literature work” section provides a basic introduction and literature review. The “Methodology” section discusses the theoretical background and the methodologies used. The “Experimental setups” section describes the data and its analysis. The “Experimental results and analysis” section presents the comparative studies. This is followed by the “Discussion” section. Finally, the “Conclusion” section summarizes the key findings of the study.

Literature work

Since Kermack and McKendrick’s27 pioneering work in 1972, many researchers have turned to mathematical modelling of infectious illnesses and epidemics as a potent tool for examining disease traits and monitoring disease spread. This method facilitates making the greatest decisions and creating the finest regulations. Numerous models have been created to investigate the dynamics of how infectious diseases spread, including the Ronald Ross model for malaria28, the Capasso and Pareri-Fontana model for cholera29, the Hethcote and Yorke model for gonorrhoea30, ebola, the H1N1 model, and many others31,32. The COVID-19 disease has also been the subject of several mathematical models33.

Disease transmission through time and place has been tracked, predicted, and tracked using artificial intelligence34. Akhtar et al. estimated the COVID-19 pandemic’s duration with the help of dynamic artificial neural networks (ANN)35. This approach was used to foresee the 2015 Zika virus pandemic. HealthMap and BlueDot were created using ML algorithms to precisely anticipate the virus outbreak36,37. Studies show that an influenza prognosis model based on current Twitter data can aid in halting further epidemics38,39. In the same year, an ML-based prediction model XGBoost was utilised to recognise a sickness related to coronavirus in a patient40. Epidemiological time series have long been predicted using AI models. Popular time series deep learning models are Recurrent neural networks (RNNs) and long-short-term memory (LSTM) networks41,42,43.

Recent advancements in infectious disease forecasting have leveraged machine learning (ML), deep learning (DL), and mathematical models to address challenges in epidemiology and public health. For instance, Saleem et al.44 provided a systematic review of ML, DL, and mathematical approaches for analyzing and forecasting COVID-19, emphasizing hybrid methods while highlighting challenges in data accuracy and scalability. Islam et al.45 showcased the potential of integrating mathematical modeling with ML techniques to predict dengue outbreaks using time-varying contact rates. Similarly, Keshavamurthy et al.46 reviewed ML and DL applications in predicting infectious diseases, focusing on biopreparedness and public health response. Bousquet et al.47 demonstrated the enhancement of prediction accuracy by incorporating dynamic parameters in deep learning models using the SIRD framework for COVID-19. Rakhshan et al.48 combined recurrent dynamic models with ML to analyze global outbreaks, emphasizing the benefits of time-series analysis. Malhotra and Goel49 reviewed the evolution of infectious disease modeling, highlighting the shift from traditional methods to evolutionary algorithms for improved forecasting. Ijeh et al.50 explored predictive modeling techniques, emphasizing the importance of high-quality data and addressing challenges related to data accuracy and implementation. Kaur et al.51 focused on AI techniques for modeling vector-borne diseases, showcasing their potential in mitigating disease spread. Singh et al.52 compared ML and time-series regression models for monkeypox forecasting, demonstrating the strength of ML in handling complex trends. Mun˜oz-Organero53 introduced a space-distributed traffic-enhanced LSTM model for COVID-19 forecasting, emphasizing the role of mobility data in improving predictions. Ogueda-Oliva et al.54 applied physics-informed neural networks for modeling infectious disease dynamics during travel, integrating physical and epidemiological principles. Kosma et al.55 explored neural ordinary differential equations (ODEs) for epidemic modeling, effectively capturing dynamic processes in disease transmission. Finally, Ning et al.56 proposed epidemiological prior-informed deep neural networks (Epi-DNNs) that combined domain knowledge with DL techniques to enhance the modeling of COVID-19 dynamics. Collectively, these studies underscore the transformative potential of advanced computational methods in forecasting infectious diseases.

To train high-dimensional complex functions by a series of non-linear transformations, the deep-learning models utilize ANNs with various architectures. Compared to conventional shallow ANN models, FCDNNs often have more hidden layers (more structure), which enables them to recognise more intricate associations. Two crucial members of the deep learning family are CNNs and RNNs. CNN models generally work well with spatial data (for example, images). However, it may analyse input sequences utilising their internal state (memory), which improves the ability to grasp temporal dependencies. Long-range associations can be learned sequentially via the more effective RNN variation known as long short-term memory (LSTM).

In many short-term prediction problems, including time prediction, LSTM has drawn much interest due to its advantages in handling sequence dependencies. However, most suggested frameworks favour stacking naive LSTM units for sequential modelling. Although LSTM modules can record temporary dependencies, organising many-to-many structures by stacking multiple LSTM layers still requires numerous essential constraints. Under the many-to-many structure, the length of the target sequence, for instance, can only be equal to (or less than) the length of the input sequence. The model’s flexibility and ability to generalise may be severely limited because the input and target sequences are likely to be of varying lengths.

Furthermore, the simple many-to-many structures won’t see complete sequences when producing the outputs of the second last step (middle outputs). For many multi-step prediction tasks, this results in restrictions and irrationality, especially for the unidirectional LSTM that typically serves as the predetermination. Additionally, even though LSTM is the optimised version of the conventional native RNN, its output will drop to some extent if the input sequence is longer. Fortunately, sequential modelling architectures have significantly improved since the introduction of Sequence-to-Sequence (Seq2Seq)/encoder-decoder in recent years.

The statistics model known as the autoregressive moving average (ARMA) is generalised in the ARIMA model (particularly in time series analyses). These two models were developed to predict future data points in time series data and better understand it. The ARIMA model can be used in several cases where data demonstrate a nonstationary mean. That is, the non-stationary mean function can be removed with the initial differentiation step (which corresponds to the “integrated” section of the model) when used once or more than one time (i.e., the trend). In these circumstances, many statistical and mathematical models are frequently applied57,58,59,60,61,62.

Table 1 to include a comprehensive overview of key literature on epidemic prediction methods using statistical, mathematical (compartmental) and deep learning techniques. This table consolidates a range of approaches, including both traditional statistical models and more advanced computational techniques, highlighting the diversity of epidemic forecasting algorithms used in prior studies. The table is organized by author and publication source, specifying the particular disease or epidemic type under consideration (e.g., influenza, dengue, COVID-19, or other emerging infections). Each reference identifies the modeling approach adopted, such as time series methods (e.g., SARIMA, ARIMA/ARIMAX), machine learning algorithms (e.g., Random Forest, Bayesian Networks), deep learning architectures (e.g., ANN, LSTM, Seq2Seq), and well-established mathematical compartmental frameworks (e.g., SIR, SEIR). Additionally, the table includes cutting-edge techniques like fuzzy logic models, ensemble wavelet neural networks, deep transfer learning, and adapted frameworks (e.g., ETAS models for seismic events). It provides an overview of key literature on epidemic prediction methods across various infectious diseases. By providing this concise yet comprehensive snapshot of the literature, the table illustrates the breadth of contemporary epidemic forecasting research, the range of data-driven and theoretical approaches, and emerging trends in leveraging complex computational methods to enhance predictive accuracy and inform public health decision-making.

Methodology

This section discusses the various techniques to forecast the propagation scenario of different diseases. This includes the preliminary methods we developed for ARIMA, SIR, basic LSTM and Seq2Seq model mechanism prediction models.

ARIMA model

The ARIMA models are methods for time-series scans used widely for infectious disease prediction in the time domain to improve forecast accuracy. The autocorrelation (AC) and partial autocorrelation (PAC) simulations are performed to create a stationary time series. This estimates the autocorrelation order, moving average order and difference order. The model requires current and historical information from residual series to consider past values by acquiring AR and MA. According to the ARIMA model, a linear model can successfully capture a linear pattern from various illness series. If the sequence is in line with the decomposition hypothesis, decomposition methods perform better. The inconvenience of the model is that linear relations can only be derived from the time series results. Events like the effects of weather and social interactions, where several factors may influence, are not working well. ARIMA model is limited by the absence of any uncertainty or intermediate shifts in the prediction periods.

The time series data for the ARIMA model is denoted by Yt where t is a time step, and the series is assumed to be independent variables based on time. Yt = f(t) represents a deterministic time-series function. Similarly, Yt = X(t) represents a stochastic time series function with X being a random variable. For the process of stationary time series forecasting, the ARMA model is generally considered. Box Jenkins (ARMA) forecasting model is also a popular choice in stationary time series analysis because of its high prediction efficiency. Another technique that uses time series data as input for future prediction is autoregression AR(p). It uses the last p time step data as input, which is fed to a regression multiplier with coefficients ϕ of AR. Then, a white noise ω in the form of random error is added along with the mean µ of the time series. The equation obtained in the AR(p) model is given as:

This shows the AR part of the ARMA model. For every variable from a time series, the Moving Average’s MA(q) polynomial function and technique are not included. There are three sections of MA. The first component is the series’ mean (µ). The second is the sum of a product of the model residual (ω) with a finite number of MA coefficients (θ). The final component is white noise or uniform random error. Hence, the MA(q) model can be written as:

Thus, the polynomials AR(p) and MA(q), together forms the ARMA(p, q) model which is given by:

This can be further shortened to

The notation ARMA(p, q) stands for the predicted value at time t. Here, p indicates the total autoregressive lags, which is also the order of AR polynomial. The total number of moving average lags is MA models’ order, given by q. The mean of the time series data is represented by µ. The coefficients of AR(p) estimate the coefficients of MA(q).

The first stage in developing a model like ARIMA (ARIMA(p, d, q)) is to determine whether or not time series statistical stationarity can be attained. The following stage estimates the values of p and q in AR and MA models. The fundamental premise of this model is that the anticipated value of the variable Yt results from a linear equation of a number of prior observations clubbed with random errors. When a process Xt satisfies the form in Eq. 5, it is an ARIMA(p, d, q):

That is, after differencing a non-seasonal process d time, the process Xt should be stationary. The values of p, d, and q change up until the completion of the training phase of the ARIMA model utilising the supplied dataset. Like RNNs, previous or past values are used to forecast the next values or future. Mathematically, it can be expressed as:

Long short-term memory (LSTM) networks

Recurrent neural networks are capable of learning to make decisions based on historical data. However, due to problems of vanishing gradients, traditional simple RNN models are limited in their ability to handle very long sequences93.

Figure 2 shows the LSTM’s unit internal structure. LSTM units have several gates controlling the information flow, which aids in capturing long-range relationships. The LSTM unit’s ability to store memory for a very long time and optionally allow information to pass through has been demonstrated using the Eqs. 7–12.

Equations 7, 8, and 9 define update gate control, forget gate control and output gate control, respectively. Equations 10, 11, and 12 demonstrate the updation of the memory cell state ct and output at. Wua, Wux, Wfa, Wfx, Woa, Wox, Wca, Wcx, bu, bf, bo, and bc are all trainable parameters, where Wua, Wux, Wfa, Wfx, Woa, Wox, Wca, and Wcx are weighted matrices governing the connection from corresponding inputs to hidden layer while bu, bf, bo, and bc are bias terms. The sigmoid function (sigma) and the hyperbolic tangent function (tanh) are both non-linear activation functions that have been described by the following formulas:

According to Eq. 8, before ignoring gate control, information is first obtained from both the current step input xt and the prior step output at−1. The combined information is then sent to a sigmoid activation function. Each value in the cell state ct−1 is converted by the sigmoid function into a number between 0 and 1. Whereas ut serves as a filter and is a potential replacement for the memory cell, the update gate decides what new data will be stored in the cell state. A new cell state is produced by multiplying the previous cell state by ft and then adding it to the filtered candidate.

Sequence to sequence (Seq2Seq) model

There are numerous RNN model architectures that can be used for various applications. The four most typical RNN model architectures are depicted in Fig. 3. Both Fig. 3a, c depict many-to-one RNN models, which means that at the final time step, only one output is present in these models. As seen in Fig. 3b, d, another typical type of many-to-many design has input and output sequences that have the same length. When using a many-to-many architecture, RNN/LSTM layers scan the input sequence and generate output sequences of the same or different lengths. The single-layer RNN models shown in Fig. 3a, b are another example. As shown in Fig. 3c, d, building a deeper RNN model by stacking additional layers can occasionally aid in learning more complicated functions.

Although it is possible that the above-mentioned architectures do not apply in many practical cases where the output sequence length is higher than one and varies from the input sequence length, this is highly unlikely. Therefore, a more adaptable architecture that can handle any input or output sequence is required. Sutskever et al. refined the term “RNN encoder-decoder network,” which was previously developed by94. The Seq2Seq (Sequence-to-Sequence) model has gained significant attention in recent years for its ability to effectively model and predict sequential data. In the field of epidemiology, where accurate and timely predictions of infectious disease outbreaks are crucial for effective intervention planning, Seq2Seq models have emerged as a promising tool. This paper presents a comprehensive description of the Seq2Seq model applied to the task of epidemic prediction of infectious diseases. Infectious diseases pose a significant threat to public health, necessitating accurate prediction models to anticipate and mitigate potential outbreaks. The Seq2Seq model, a type of recurrent neural network (RNN), has demonstrated remarkable performance in various natural language processing tasks. Leveraging its ability to capture temporal dependencies, the Seq2Seq model is adapted to the field of epidemiology to predict the progression and spread of infectious diseases.

Model architecture

The Seq2Seq model consists of two main components: an encoder and a decoder. The encoder processes the input sequence, typically representing historical epidemiological data, and encodes it into a fixed-length vector representation called the context vector. The encoder can be implemented using recurrent neural networks such as Long Short-term Memory (LSTM) or Gated Recurrent Units (GRU), which effectively capture temporal information. The decoder, also an RNN-based network, takes the context vector as input and generates predictions for future time steps. At each decoding step, the model´s output is fed back as input to predict the subsequent time step. This iterative process enables the model to capture the sequential dynamics of infectious disease outbreaks. The Seq2Seq model is trained to increase, given an input sequence, the conditional probability of a target sequence, which could be described by the following equation:

Data Preprocessing: Historical epidemiological data is collected to train the Seq2Seq model, including variables such as the number of cases, geographical information, demographic data, climate factors, and interventions implemented. The data is pre-processed by normalizing numerical features, encoding categorical variables, and partitioning the dataset into training, validation, and testing sets.

Training: The training process involves optimizing the model´s parameters to minimize the discrepancy between predicted and actual epidemic patterns. This is achieved through the use of a loss function, such as mean squared error or cross-entropy loss, which quantifies the disparity between predicted and ground-truth values. The model is trained using gradient-based optimization algorithms like stochastic gradient descent (SGD) or Adam, which iteratively update the weights to minimize the loss.

Prediction and Evaluation: Once trained, the Seq2Seq model can be deployed for epidemic prediction as seen in Fig. 4. Given a new input sequence, the model generates predictions for future time steps. These predictions can inform public health officials and policymakers about the potential trajectory of an infectious disease outbreak, allowing them to make informed decisions regarding resource allocation, intervention strategies, and public awareness campaigns. The Seq2Seq model has been proven remarkably efficient for different kinds, especially if the input and output sequences vary in length. In the last few years, the Seq2Seq model has constantly been improved, and the notion of attention mechanism is one of the essential ideas in profound learning. Sometimes, it becomes difficult to store all information in the input when the input sequence is very long for the basic Seq2Seq model, thereby reducing the performance of decoding and encoding.

Selection criteria for methods

We have expanded the Methodology section to explicitly state the criteria used to select each prediction model (statistical, mathematical/compartmental, and deep learning). The choice of methods was driven by the complexity and nature of the data, as well as the need to evaluate both short-term and long-term predictive capabilities.

-

1.

Statistical Models (e.g., ARIMA):

Rationale: Selected for their robustness in handling linear time-series patterns and well-established use in epidemiological forecasting.

Justification: ARIMA models are capable of effectively capturing seasonality, trends, and autocorrelation within disease incidence data, making them suitable for short-term predictions and serving as a strong benchmark model for comparison.

-

2.

Mathematical (Compartmental) Models (e.g., SIR):

Rationale: Employed to understand disease dynamics through epidemiological parameters and compartmental structures.

Justification: Although these models may not always excel in long-range predictions due to simplified assumptions, they provide valuable insight into disease transmission mechanisms and serve as interpretative baselines for complex diseases, especially when empirical data is limited.

-

3.

Deep Learning Models (e.g., LSTM, Seq2Seq):

Rationale: Implemented due to their ability to model nonlinear relationships and capture long-term dependencies in complex time-series data.

Justification: LSTM and Seq2Seq architectures are well-suited for learning intricate temporal patterns, especially when sequences are long or when capturing subtle fluctuations beyond linear trends is necessary. Seq2Seq, in particular, was chosen for its capacity to handle variable-length input and output sequences, making it more flexible and robust for forecasting multiple future time steps.

Feature selection and data preparation

In this manuscript, we now clearly delineate the criteria for feature selection and data preprocessing:

-

Disease Incidence Data: Weekly reported cases and fatalities for acute diarrheal disease and dengue were selected as the primary features because they are the most direct indicators of disease spread.

-

Temporal Resolution: Data were aggregated and standardized on a weekly basis to ensure consistency and reduce noise. This granularity aligns with public health reporting intervals and resource planning cycles.

-

Normalization and Encoding: We applied Min–Max normalization to ensure that all input variables share a similar scale, facilitating faster convergence during model training. Time-based categorical features (e.g., day of the week) were one-hot encoded to capture any cyclical patterns without introducing bias.

-

Exclusion of Irrelevant or Incomplete Data: Noisy, incomplete, or redundant data points were removed or corrected to maintain data integrity. Only features that demonstrated epidemiological relevance (e.g., weekly counts of cases/deaths) and consistency over the selected time span were retained.

Data allocation for model training and testing

We allocated 80% of the total weekly data samples for model training and the remaining 20% for testing. This ratio was chosen to ensure that the models have sufficient historical information to learn underlying disease patterns while still retaining a meaningful segment of the data that the models have not seen during training. Using this hold-out test set allows us to independently evaluate the predictive performance and generalizability of each model. We have explicitly stated the number of samples corresponding to these percentages, providing precise counts once the final dataset configuration (including data through Week 33, 2024) is established. This ensures transparency in how we balanced model development and evaluation, and provides a clear basis for comparing the performance of the different forecasting methods under consistent conditions.

Training procedures for the prediction models

We have now included a detailed, step-by-step description of the training methodology:

-

1.

Data Split: The dataset from January 1, 2011, to 33rd week of 2024, was split into training (80%) and testing (20%) subsets. This split ensures that the models learn from historical patterns and are then evaluated on unseen data, which helps test their generalizability.

-

2.

Hyperparameter Tuning: For ARIMA, parameters (p, d, q) were determined using autocorrelation and partial autocorrelation plots to achieve stationarity and optimal fit.

-

For deep learning models (LSTM, Seq2Seq), hyperparameters such as learning rate, number of layers, hidden units, and sequence lengths were optimized via iterative experimentation and validation-based early stopping to avoid overfitting.

-

-

3.

Model Training:

ARIMA and Statistical Models: These models were fit iteratively, adjusting parameters to minimize residual errors.

SIR (Compartmental Model): The model was initialized with known epidemiological parameters and fitted to actual case trajectories through nonlinear least squares to estimate parameters that best replicate observed data trends.

LSTM and Seq2Seq Deep Learning Models:

-

Implemented using Python-based deep learning frameworks (e.g., TensorFlow or PyTorch).

-

Utilized backpropagation through time (BPTT) for training RNN-based architectures.

-

Adopted Adam optimizer with an initial learning rate of 0.001 and exponential decay rates of 0.9 and 0.999 for momentum.

-

Employed a training period of 100 epochs, with early stopping conditioned on validation loss improvements to prevent overfitting. This ensures models generalize well without memorizing noise in the data.

-

-

4.

Evaluation Metrics:

-

Performance was assessed using RMSE, MAPE, R2, and MAE for all methods. This multi-metric evaluation allowed us to objectively compare predictive accuracy and interpretability across models, ensuring a fair and robust assessment of their forecasting capabilities.

-

Experimental setups

The Python 3.6.5 64-bit compiler has been used for experiments in the Spyder 3.2.8 Python development environment. Table S1 shows the system’s configuration. The I/O cost has not been measured.

Description of the database

The data has been obtained from the Indian government’s data centre, the National Centre for Disease Control (NCDC). The NCDC manages more than 50 diseases week by week data, including the number of cases and deaths. We have taken cases of Acute Diarrheal Disease and Dengue Fever. We took these two diseases because, compared to other diseases, there are more outbreaks and deaths. The rest of the disease outbreak cases are sparse, with sometimes very few outbreaks in a week and no death cases, so we chose acute diarrhoeal disease and Dengue Disease, which are the most common in India.

The dataset used in this manuscript is from January 1, 2011, to Week 33, 2024. The dataset has a total of 520 samples, and each sample contains information on the weekly number of cases and deaths of acute diarrheal disease and dengue fever. A few samples of the dataset are shown in Table S2.

Difficulties during data preparation: Predicting model uses all values in the dataset to learn data patterns. Hence, minimisation of noise and enhancement of data accuracy and uniformity is necessary. The initial data set is unclean, with many incorrect and incomplete data. We have first found and eliminated the incomplete, inaccurate, and inconsistent data. Inconsistent data from the Indian government website contains some misspellings and erroneous data. We have also eliminated the redundant and unused data. We know that date-wise cases could not be used directly; hence, we changed it to day-wise or week-wise, which is more informative.

Dataset preparation

The weekly dataset from January 1, 2011, to Week 33, 2024, was used. The Min–Max normalisation has been used for scaling the data into the range shown in Eq. 16. Scaling was performed to speed up learning and convergence during training. The sliding window approach has been used to sample the scaled data. It also reshapes it into the 3-D tensors with the required form. Additionally, external information like time of day and day of week, i.e. the categorical variables, have been translated into the one-hot encoding form.

Data cleaning and preprocessing

Data source documentation

-

Original Source: We explicitly state the official source of the data (National Centre for Disease Control, India) and the exact URL or repository from which the raw weekly case and fatality counts for acute diarrheal disease and dengue were obtained.

-

Data Span and Updates: The revised text now includes the temporal coverage (January 1, 2011, to Week 33, 2024) and notes on when the dataset was last updated.

Data cleaning steps

-

Handling Missing Values: We describe the approach used to identify missing values in weekly reports. If any week’s data were incomplete or absent, we clarify how these instances were addressed—either through careful cross-verification with official records, interpolation where justified, or exclusion if data could not be reliably recovered.

-

Correcting Inconsistencies: We outline how spelling errors, date mismatches, and any anomalous spikes (e.g., reporting delays leading to sudden jumps in case counts) were identified and resolved. For instance, if a particular week showed an extraordinarily high count that could not be corroborated by neighbouring weeks or official corrections, it was flagged and addressed by consulting supplementary sources or official errata, if available.

Normalization and scaling

-

Temporal Aggregation: We detail how daily or event-based records were aggregated into a uniform weekly format, ensuring consistent intervals for the entire dataset.

-

Data Transformation: We describe the normalization techniques (e.g., Min–Max normalization) applied to scale the input features before model training to maintain uniform data ranges and enhance the convergence speed of the algorithms.

Reproducibility and record-keeping

-

Code and Scripts: We mention that the code snippets used for data cleaning and preprocessing are available upon request or in a supplementary material section, ensuring that other researchers can replicate our data preparation steps.

-

Detailed Logs: We keep a log of all changes made to the raw dataset, including the date of correction, nature of the inconsistency, and the rationale behind any modifications. This log can be shared as supplementary information, further strengthening the reproducibility of our study.

Training model

We use Python libraries, such as pandas, NumPy, etc., for all the experimental studies. The hardware configuration of the system is reported in Table S1.

During the training phase, we randomly selected 80% of the data as a training set, and the remaining 20% was used for model performance validation. The model is only trained in a training set for 100 epochs. At the same time, the early-stopping mechanism is used to monitor the validation losses so that overfitting problems can be prevented. The efficient algorithm for optimising the Adam loss function is used to improve the level at which the learning rate is set to 0.001. In addition, the exponential decay rates for the first and second-moment estimates are set to 0.9 and 0.999, respectively.

Selection of predictors (inputs)

The main inputs used for our models are the historical weekly counts of disease cases and fatalities for both acute diarrheal disease and dengue. We chose these inputs because:

-

1.

Epidemiological Relevance:

Weekly counts of cases and fatalities directly reflect disease burden and progression, making them the most relevant indicators for predicting future trends.

-

2.

Data Availability and Reliability:

These variables are consistently reported and maintained by the National Centre for Disease Control (NCDC) in India. Using well-documented, regularly updated official data ensures data quality and enhances model reliability.

-

3.

Temporal Consistency:

By focusing on weekly granularity, we standardize the input data and align it with the planning and surveillance cycles of public health authorities. This uniformity assists in capturing seasonal patterns and longer-term trends inherent in disease dynamics.

-

4.

Avoiding High-Dimensionality and Noise:

We prioritized essential epidemiological indicators to maintain a manageable feature set, reduce overfitting risk, and ensure the model concentrates on core drivers of disease spread. Supplementary factors (e.g., climate data, demographics) could be included in future work if consistently available and validated.

Selection of responses (outputs)

The primary outputs are future weekly disease incidence (cases) and fatalities. This choice is driven by:

-

1.

Practical Utility:

Predicting the number of cases and fatalities allows policymakers, healthcare administrators, and stakeholders to assess imminent healthcare resource needs, such as hospital beds, medications, and staffing.

-

2.

Direct Impact:

Forecasts of cases and fatalities have immediate implications for public health interventions. Anticipating disease surges helps in timely responses, including targeted vaccination drives, increased diagnostic testing, and community awareness campaigns.

-

3.

Comparative Evaluation:

Using the same response variables (i.e., predicted future cases/fatalities) across different modeling techniques enables a straightforward comparison of model performance and forecasting accuracy.

By grounding our input and output selections in epidemiological relevance, data quality, and practical applicability, we ensure that the resulting models serve as effective tools for evidence-based decision-making and public health planning.

Evaluation criteria

The following metrics are adopted to evaluate the prediction performance of the proposed system:

Mean absolute percentage error (MAPE)

MAPE is a widely used metric to evaluate the accuracy of forecasting models. It expresses the error as a percentage of the actual value, providing a measure of relative error. The formula for MAPE is:

where yi represents the ith real value (actual value), xi represents the ith predicted value.

A lower MAPE value indicates better forecasting accuracy. This metric is particularly useful when noticeable errors are undesirable, and it provides a clear percentage error between the predicted and actual values.

Mean absolute error (MAE)

The Mean Absolute Error (MAE) is another important metric for evaluating the forecasting performance of the model. It calculates the average of the absolute differences between predicted and actual values. The formula for MAE is:

where \({y}_{i}\) represents the ith real value (actual value), \({x}_{i}\) represents the ith predicted value.

The MAE gives an intuitive sense of the magnitude of the prediction errors. A lower MAE indicates better accuracy, as it suggests that the predicted values are closer to the actual values on average.

Root mean square error (RMSE)

Root Mean Square Error (RMSE) is a metric that calculates the square root of the average squared differences between predicted and actual values. It is highly sensitive to large errors and is useful when large deviations are particularly undesirable. The formula for RMSE is:

where yi represents the ith real value (actual value), xi represents the ith predicted value.

RMSE provides a measure of the magnitude of error in the same units as the original data. A lower RMSE value signifies better model accuracy, as it reflects smaller deviations between predicted and actual values.

R-Square (R2)

R-Square (R2) is a statistical metric that represents the proportion of the variance in the dependent variable that is predictable from the independent variables. It provides an indication of how well the model fits the data. The R2 value ranges from 0 to 1, where 1 indicates perfect prediction accuracy and 0 indicates that the model does not explain any of the variance in the data. The formula for R2 is:

where \({y}_{i}\) represents the ith real value (actual value), \({x}_{i}\) represents the ith predicted value, \(\underline{y}\) represents the mean of the actual values.

A higher R2 value indicates better model performance, as it means that a larger proportion of the variance in the actual data can be explained by the model’s predictions.

Results and analysis

ARIMA model

The term “autoregressive” refers to the lags of the stationary series in the forecasting equation. The term “moving average” refers to lags in predicted error rates. An “integrated” version of a stationary series is a time series that needs to be differentiated in order to be made stationary. The ARIMA model is referred to as a “ARIMA(p,d,q)” model. Here, p defines total autoregressive terms. The amount of non-seasonal deviations required for stationarity has been denoted by d. q has been used to define the total lag forecast errors in prediction. For this analysis we have used ARIMA(1, 1, 1). Weekly data has been collected from the year 2011 to Week 33, 2024. We have shown the weekly data in the below Table S2. We have trained the model for up to 400 weeks and predicted the data for the next 120 weeks. ARIMA model gives good prediction results, as shown in Fig. 5. ARIMA model predicts the four cases: (1) Acute diarrhoeal disease cases, (2) Acute diarrhoeal disease death cases, (3) Dengue cases, and (4) Dengue death cases. Figures 5, 6, 7, and 8 show the spread prediction of acute diarrhoeal disease and dengue disease cases.

The model is based on the ARIMA(1, 1, 1) model, which has one AR term, one first difference term, and one MA term, respectively. A first difference has been used to explain a linear trend in the data. ARIMA(1, 1, 1) predicts well, as shown in Figs. 5, 6, 7 and 8. For 120 weeks, the model predicts acute diarrhoeal disease cases, Dengue cases, acute diarrhoeal disease death cases, and Dengue death cases. The majority of the prediction values are within the prediction range. Any organisation or government can make future decisions to control or mitigate the disease based on this analysis.

SIR model, LSTM model and Seq2Seq model

We have shown the infected spread scenario in the result section. This section presents a comparative study of the SIR model, LSTM model, and Seq2Seq model. Figure 9 shows the real spread scenario of acute diarrhoeal and Dengue disease cases from 2011 to Week 33, 2024.

Figure 10 shows the final comparison of the SIR model, LSTM model, and Seq2Seq model week-wise actual spread scenario prediction of acute diarrhoeal disease 2020–2024 flu season. Figure 11 shows the final comparison of the SIR model, LSTM model, and Seq2Seq model week-wise real spread scenario prediction of Dengue disease 20,202,024 flu season.

SIR calculates the probable number of infected people over time in a closed population. The prediction from the SIR has been found to be unsatisfactory in real-life scenarios. It has also been found that the SIR’s prediction outcome varies significantly due to the absence of numerous crucial characteristics.

Figures 10 and 11 show that LSTM models do not perform well in prediction. When the data sequence is large, and the dataset amount is insufficient, the LSTM model does not perform well. In these cases, training data is inadequate to train the model and achieve better prediction results. Seq2seq model outperforms the LSTM model for long sequences and small data sets. For long-sequenced data, the Seq2Seq model predicts well. Figure 11 shows that Seq2Seq prediction is very close to the real scenario. As per Fig. 11, the Seq2Seq model outperforms the LSTM and SIR models.

As per Table 2, the desired results (lowest RMSE) are highlighted in bold. In the case of conventional methods such as ARIMA, SIR, LSTM and Seq2Seq, it is possible to predict short-term prospects. For acute diarrhoeal disease cases, the ARIMA model performs best compared to the SIR model, LSTM, and Seq2Seq model. For Dengue cases, the Seq2Seq model outperforms the SIR, LSTM, and ARIMA models. Table 3 compares the predicted value to the actual data. It demonstrates that the expected value is nearly identical to the real value when using the Seq2Seq model. For cases of acute diarrhoeal disease cases, ARIMA outperforms the SIR, LSTM, and Seq2Seq models. Seq2Seq outperforms the SIR, ARIMA, and LSTM models for dengue cases.

Observations

-

1.

Proposed Model (Seq2Seq) achieves the lowest RMSE, MAPE, and MAE, and the highest R2 for both diseases, indicating its superior performance.

-

2.

ARIMA, LSTM, and NBEATS also perform well, with high correlation coefficients and low error rates.

-

3.

Regression and SIR models exhibit significantly higher errors and lower R2 values, reflecting poorer predictive performance.

The comparative analysis of different prediction models in Table 2 illustrates their effectiveness in forecasting Acute Diarrhoeal Disease and Dengue cases using key performance metrics: RMSE (Root Mean Square Error), MAPE (Mean Absolute Percentage Error), MAE (Mean Absolute Error), and R2 (Correlation Coefficient). Among the models, the Proposed Model (Seq2Seq) consistently outperforms others, achieving the lowest RMSE, MAPE, and MAE values, as well as the highest R2 values. Specifically, for Acute Diarrhoeal Disease cases, the Seq2Seq model recorded an RMSE of 385.515, a MAPE of 2.789%, and an MAE of 320, with a correlation coefficient (R2) of 0.96. For Dengue cases, it maintained superior performance with an RMSE of 399.134, a MAPE of 6.354%, and an MAE of 340, also achieving a correlation coefficient of 0.95. These results highlight the Seq2Seq model’s ability to provide accurate predictions and strong correlation with actual outcomes.

Other notable models include ARIMA (1,1,1), LSTM, and NBEATS, which also performed well with relatively low error rates and high R2 values. For instance, the ARIMA (1,1,1) model achieved an RMSE of 317.707 for Acute Diarrhoeal Disease and 422.551 for Dengue, with a high R2 of 0.95 and 0.94, respectively. The LSTM model delivered comparable accuracy, achieving an RMSE of 431.316 for Acute Diarrhoeal Disease and 573.651 for Dengue, paired with high correlation coefficients. These results indicate that advanced machine learning and time-series forecasting models can effectively capture complex patterns in disease prediction, making them suitable alternatives to traditional regression-based approaches. Figure 12a, b displays the training and validation Mean Absolute Error (MAE) loss curves over time for two diseases: acute diarrheal disease (part a) and dengue (part b). Each subplot illustrates the progression of the MAE loss during the model training process, indicating how well the models predict the respective disease cases as training progresses.

In contrast, simpler models like Regression, Bayesian Linear Regression, and the SIR model demonstrated significantly higher error rates and lower correlation coefficients. The RMSE for Regression reached 20,366.625 for Acute Diarrhoeal Disease and 27,087.611 for Dengue, with corresponding R2 values of 0.45 and 0.40, reflecting limited predictive accuracy. These traditional models struggle to capture the intricacies of disease spread and temporal patterns, highlighting their inadequacy for precise forecasting tasks compared to modern machine learning approaches. This comparative study emphasizes the importance of selecting robust models like Seq2Seq for accurate disease prediction, especially when dealing with critical health data.

Table 3 presents the comparative analysis of predicted versus actual cases for Acute Diarrhoeal Disease and Dengue during the year 2024. The table contains weekly data for both diseases, showing how the predictions align with the actual reported cases.

-

Acute Diarrhoeal Disease: The predicted and actual cases show some variation across the weeks. In most cases, the predicted number of Acute Diarrhoeal Disease cases is close to the actual values, with minor discrepancies. For example, in Week 24, the predicted value is 84, which is slightly higher than the actual value of 81, while in Week 33, the predicted cases are 26, which is marginally higher than the actual count of 24. This indicates a fairly accurate prediction model with small deviations.

-

Dengue Cases: For Dengue, the predicted values are generally in close alignment with the actual cases reported. In Weeks 24, 25, 26, and 28, the predicted values are almost identical to the actual values, demonstrating the model’s strong performance in forecasting Dengue cases. However, in Week 29, the prediction slightly overestimates the number of Dengue cases (predicted: 60, actual: 63), but the overall trend reflects a reliable forecasting model.

The data in this table highlights the importance of accurate prediction models for public health management, showing how predictions can be close to actual case numbers, though occasional deviations occur. This information can help in evaluating the effectiveness of the forecasting models for disease prevention and resource allocation in healthcare settings.

Key findings per model

-

1.

Regression:

-

Strengths: Simple and interpretable, offering a straightforward approach to forecasting.

-

Limitations: High error rates (RMSE of 20,366.625 for Acute Diarrhoeal Disease and 27,087.611 for Dengue) and low correlation coefficients (R2 of 0.45 and 0.40). The model struggles to capture complex, non-linear relationships, making it unsuitable for precise disease forecasting.

-

-

2.

Bayesian Linear Regression:

-

Strengths: Improved performance over basic regression, with reduced RMSE (9,310.285 for Acute Diarrhoeal Disease and 12,382.679 for Dengue) and higher R2 values (0.72 and 0.68). It offers probabilistic insights into predictions.

-

Limitations: Still limited in capturing highly non-linear patterns and interactions, resulting in moderate error rates compared to advanced models.

-

-

3.

MultiOutputRegressor + XGBoost:

-

Strengths: Enhanced predictive power using ensemble techniques, yielding RMSE values of 9,775.794 (Acute Diarrhoeal Disease) and 13,001.806 (Dengue). Offers flexibility and handles large datasets well.

-

Limitations: Higher computational complexity and error rates compared to deep learning methods. R2 values (0.70 and 0.65) indicate moderate correlation, making it suitable for less complex forecasting tasks.

-

-

4.

SIR (Susceptible-Infectious-Recovered):

-

Strengths: Epidemiologically interpretable, useful for understanding disease dynamics.

-

Limitations: High RMSE (17,727.026 for Acute Diarrhoeal Disease and 23,576.944 for Dengue) and low R2 values (0.50 and 0.45). The model lacks adaptability to real-world data fluctuations, limiting its use for precise, data-driven forecasting.

-

-

5.

ARIMA (1,1,1):

-

Strengths: Excellent for time-series forecasting, with low RMSE (317.707 for Acute Diarrhoeal Disease and 422.551 for Dengue) and high R2 values (0.95 and 0.94). Effective for data with clear trends and seasonality.

-

Limitations: Limited to linear patterns and struggles with long-term predictions or highly non-linear data.

-

-

6.

Prophet:

-

Strengths: User-friendly with solid performance (RMSE of 698.220 for Acute Diarrhoeal Disease and 928.633 for Dengue) and R2 values of 0.90 and 0.88. Effective for handling missing data and seasonality.

-

Limitations: Slightly less accurate than LSTM and Seq2Seq models. May not capture complex temporal dependencies as effectively.

-

-

7.

NBEATS:

-

Strengths: Strong performance with low RMSE (438.316 for Acute Diarrhoeal Disease and 582.961 for Dengue) and high R2 values (0.93 and 0.91). Well-suited for complex time-series data.

-

Limitations: Requires substantial computational power and large datasets for optimal performance.

-

-

8.

Gluonts:

-

Strengths: Flexible and efficient for probabilistic time-series forecasting.

-

Limitations: Higher RMSE (1,163.370 for Acute Diarrhoeal Disease and 1,547.282 for Dengue) and moderate R2 values (0.85 and 0.80) compared to other deep learning models, indicating it may be less effective for high-precision tasks.

-

-

9.

LSTM (Long Short-Term Memory):

-

Strengths: Excellent for capturing long-term dependencies in sequential data, with low RMSE (431.316 for Acute Diarrhoeal Disease and 573.651 for Dengue) and high R2 values (0.94 and 0.92). Effective for non-linear patterns.

-

Limitations: Computationally intensive and requires fine-tuning of hyperparameters for optimal performance.

-

-

10.

Proposed Model (Seq2Seq):

-

Strengths: Outperforms all other models with the lowest RMSE (385.515 for Acute Diarrhoeal Disease and 399.134 for Dengue) and highest R2 values (0.96 and 0.95). Ideal for capturing complex temporal and non-linear patterns.

-

Limitations: Higher computational requirements; may need significant training data for best results.

-

The Proposed Model (Seq2Seq), LSTM, and ARIMA offer the most accurate predictions for disease forecasting. Simpler models like Regression and SIR may be useful for basic analysis but fall short in predictive accuracy for real-world applications. Each model has its strengths depending on the complexity of the data and forecasting needs.

Discussion

Based on the results, we have found that the ARIMA model performed better in short-term prediction. For short-term prediction, the ARIMA model outperforms the SIR, LSTM, and Seq2Seq models. We have also found that Seq2Seq neural networks are a reliable model for epidemic prediction. Seq2Seq outperforms the SIR and LSTM models in predicting the flu information and shows promising results in predicting disease. The current dataset has a small amount of data and a long sequence. That is why Seq2Seq models perform well in this context. As a result, we can conclude that Seq2Seq is a viable option for this dataset type. As a result, we conclude that ARIMA outperforms LSTM and Seq2Seq in short-term forecasting while Seq2Seq outperform in long-term forecasting.

This discussion section provides a clearer understanding of how our comparative analysis of models, and the identification of more accurate forecasting approaches, can translate into tangible improvements in epidemic management and population health outcomes.

Linking Results to Public Health Planning and Intervention Strategies: We have expanded the Discussion to go beyond the performance metrics of the models and delve into their practical implications for public health. Specifically, we have included commentary on how accurate short-term and long-term forecasts can assist in:

-

Resource Allocation: Predictive models that reliably indicate impending surges in disease cases can guide health authorities in pre-positioning resources—such as hospital beds, medical supplies, and personnel—in regions forecasted to experience increased caseloads.

-

Timely Interventions: By identifying potential hotspots or periods of heightened transmission, policymakers can implement preventive measures (e.g., intensified sanitation efforts for acute diarrhea, targeted vector control strategies for dengue) proactively.

-

Public Awareness Campaigns: Forecasts can inform public health messaging and education campaigns, helping communities understand the importance of preventive actions (such as safe drinking water, proper waste disposal, and personal protective measures against mosquitoes) at the most critical times.

Each model’s strengths and limitations in the context of disease forecasting

-

1.

ARIMA Model:

-

Strengths: Well-suited for time-series data exhibiting linear trends and seasonal patterns. It provides easily interpretable parameters, making it useful as a baseline or benchmark.

-

Limitations: ARIMA’s linear structure may not fully capture complex, nonlinear disease dynamics or shifting epidemiological conditions over extended horizons.

-

-

2.

SIR (Compartmental) Model:

-

Strengths: Offers epidemiological interpretability by linking model parameters to disease transmission rates. Useful for understanding underlying disease mechanisms.

-

Limitations: Simplifying assumptions (e.g., homogeneous mixing of the population) and lack of adaptability to external factors can limit predictive accuracy, especially for long-term forecasts.

-

-

3.

LSTM (Long Short-Term Memory) Model:

-

Strengths: Capable of learning nonlinear patterns and capturing long-range dependencies, potentially outperforming traditional models when data exhibit complex temporal structures.

-

Limitations: Relatively data-hungry and can be difficult to interpret. Performance may be less stable when dealing with smaller datasets or rapidly changing conditions.

-

-

4.

Seq2Seq Model:

-

Strengths: Flexible input–output sequencing, effective at modeling nonlinearities and capturing intricate temporal relationships. Often excels in forecasting multiple time steps ahead and dealing with evolving trends.

-

Limitations: Reduced interpretability compared to simpler models. It may also require more careful hyperparameter tuning and computational resources.

-

Including these concise summaries for each model will help readers quickly identify which method might be most appropriate for their specific forecasting goals, data conditions, and resource constraints. These additions have been incorporated into the revised manuscript to enhance clarity and practical relevance.

Bias and limitations

Our models are subject to biases from data quality issues, simplified assumptions, unanticipated environmental changes, and evolving public health interventions, which can affect prediction accuracy. These limitations highlight the challenges in fully capturing the complexities of disease dynamics and ensuring reliable forecasts.

-

1.

Data Availability and Quality:

Since our models rely on publicly reported weekly cases and fatalities, any underreporting, delays in data entry, or inconsistencies in record-keeping can introduce biases. For instance, if certain regions or time periods are systematically underrepresented due to reporting issues, the models may produce predictions that are overly optimistic or fail to capture localized outbreaks. Inconsistent or incomplete data can result in underfitting or overfitting, affecting the accuracy of predictions in regions with limited data availability.

-

2.

Model Assumptions and Simplifications:

Simplified assumptions in compartmental models (e.g., homogeneous population mixing) can bias results by overlooking heterogeneity in population density, socioeconomic conditions, or healthcare access. For example, assuming equal mixing of individuals in a population may not account for urban-rural differences or the varying efficacy of healthcare infrastructure across different areas. Similarly, statistical and deep learning models assume that historical patterns will persist and may not adapt rapidly to sudden shifts in disease dynamics, such as the emergence of new variants or changes in transmission dynamics. This could lead to biased forecasts when there are significant deviations from historical trends.

-

3.

External Environmental Factors:

Environmental changes, such as abrupt climate shifts, flooding, droughts, or changes in vector ecology for diseases like dengue, can significantly influence disease transmission dynamics. Because our models primarily focus on historical incidence data, they may not fully account for sudden environmental changes that were not present in the training set. For example, a sudden heatwave or a drought could affect vector populations and influence dengue transmission, but these events may not have been captured in the historical data, leading to predictions that underestimate or overestimate disease spread in future scenarios influenced by evolving environmental conditions.

-

4.

Evolving Public Health Interventions:

Shifts in public health policies, prevention strategies, and resource allocation (e.g., improved sanitation, insecticide spraying, or vaccine distribution) can alter disease trajectories. Our models did not incorporate these factors explicitly, due to data unavailability or the complexity of quantifying such interventions. As a result, the predictions may not reflect recent changes in healthcare infrastructure or response efforts, leading to biased forecasts in regions where public health measures have dramatically altered disease dynamics.

These biases and limitations underscore the necessity for cautious interpretation of model predictions and highlight areas for future improvement, such as integrating more diverse data sources, refining model assumptions, and incorporating real-time information on environmental and public health factors.

Model selection rationale

-

1.

ARIMA (Auto-Regressive Integrated Moving Average):

-

Rationale: The ARIMA model is a well-established statistical method widely used for time-series forecasting, especially when historical patterns and autocorrelation structures are present. Our weekly disease incidence and fatality data exhibit seasonal patterns and trends that can often be captured effectively by ARIMA.

-

Data Characteristics: The relatively stable and long-term historical data for acute diarrheal and dengue cases allowed ARIMA to leverage autocorrelation and seasonality. By fitting ARIMA to the historical sequence, we aimed to establish a robust baseline, as it can capture linear relationships and known seasonal fluctuations in disease incidence.

-

-

2.

Seq2Seq (Sequence-to-Sequence Neural Network):

-

Rationale: Unlike ARIMA, Seq2Seq models are better equipped to capture complex, nonlinear relationships and long-term dependencies within the data. Given that infectious disease patterns can be influenced by multifaceted factors, such as climatic changes, intervention strategies, and transmission dynamics simple linear models may not fully exploit underlying patterns when they become more intricate over time.

-

Data Characteristics: Our dataset spans multiple years and includes periods with nonlinear trends, variable seasonality, and shifting disease prevalence. Seq2Seq architectures can handle arbitrary input–output sequence lengths and model nonlinear temporal dynamics more effectively than traditional statistical methods. This capability is particularly beneficial for forecasting longer horizons or when dealing with evolving transmission patterns that may not be strictly periodic or linear.

-

-

3.

Comparison Between ARIMA and Seq2Seq in This Context:

-

We selected ARIMA to serve as a strong, interpretable benchmark that effectively models linear components and known seasonal effects.

-

We chose Seq2Seq to explore whether incorporating a deep learning approach could reveal more subtle, nonlinear patterns in the time series and potentially improve long-horizon forecasts.

-

-

4.

Other Models Used: We also included compartmental (SIR) and LSTM models, among others, to ensure a broad comparative analysis. The SIR model provides a theoretical epidemiological perspective, capturing disease dynamics on a mechanistic level. LSTM, like Seq2Seq, is a deep learning approach designed to model sequence data, but Seq2Seq tends to handle variable-length input–output sequences more flexibly.

By selecting a diverse range of models ranging from traditional statistical methods like ARIMA to advanced deep learning approaches such as Seq2Seq, we aimed to understand which techniques are best suited to capturing the complex temporal patterns observed in disease incidence data. These justifications have been included in the revised manuscript to strengthen our rationale for model choice and better align our methodological decisions with the unique characteristics of the dataset.

Limitations of the present study

All the limitations of the present study are given below:

-

1.

Data Scope and Quality: The analysis relies on weekly aggregated case and fatality data obtained from publicly available sources. Although these sources are reliable and officially maintained, there are inherent limitations related to variations in reporting accuracy, underreporting, and lag times in data release. These factors can influence the precision and timeliness of the predictions made by our models. The accuracy of our results is inherently dependent on the completeness and consistency of the data.

-

2.

Lack of Additional Predictors: Our study primarily focused on time-series data regarding disease incidence and fatalities. While this is crucial for forecasting, additional predictors (e.g., climate variability, population mobility, healthcare interventions, socio-economic factors, and vaccination coverage) were not incorporated into the models. The unavailability or inconsistency of such data during the study period limited the scope of our models. Including these variables in future work could significantly improve model accuracy, interpretability, and relevance to public health planning.

-

3.

Generalizability Across Regions and Diseases: While we have focused on two specific diseases (acute diarrheal disease and dengue) within the Indian context, it is important to note that our findings may not be directly applicable to other regions or diseases with different transmission dynamics and data availability patterns. The methods and models applied in this study may need to be tailored for each specific context to ensure their generalizability and accuracy.

-

4.

Model Complexity and Interpretability: Deep learning models such as Seq2Seq exhibited strong predictive performance but are often viewed as “black box” approaches. While these models offer powerful predictions, their interpretability is limited compared to simpler, more transparent models such as statistical and compartmental models. Future research could benefit from integrating explainable AI techniques or domain-specific knowledge to enhance the interpretability of these complex models, making them more accessible for policy implementation and decision-making.

-

5.