Abstract

Sliding Mode Control (SMC) is a controller design method that provides robust and stable control of systems. To improve the performance of SMC, this paper applies a hybrid enhanced particle swarm optimization algorithm (HEPSO) to optimize the SMC parameters \(c_{1}\), \(c_{2}\), \(\varepsilon\) and \(k\); the resulting controller is denoted HEPSO-SMC. HEPSO integrates three strategies: adaptive inertia weights (AIW), unified factor enhancement (UFE), and global optimal particle training (GOPT). HEPSO is validated in simulation on the CEC2022 suite of twelve benchmark functions, and the results show that it outperforms other PSO variants in convergence speed and accuracy. HEPSO-SMC is then verified in simulation on a two-joint manipulator. Comparisons with PSO-SMC, IPSO-SMC, and UPS-SMC illustrate the effectiveness and robustness of HEPSO-SMC.


Introduction

Over the past three decades, robotic manipulators have witnessed remarkable technological evolution, transitioning from simple repetitive actuators to autonomous systems capable of handling complex industrial tasks. This paradigm shift has positioned them as indispensable assets in modern manufacturing ecosystems, effectively addressing labor shortages while enhancing operational precision. They play a crucial role across various industries, including manufacturing, logistics, and healthcare1,2,3,4. As technology advances and labor costs increase, manipulators must evolve to become more flexible, precise, and intelligent to remain competitive. However, operational disturbances can impede their tracking control. Therefore, it is essential to develop control algorithms that can effectively manage these uncertainties and ensure stable performance, as this is vital for enhancing productivity and safety5,6.

Currently, the controllers used in manipulator systems include Sliding Mode Control (SMC)7, Neural Network Controllers (NNC)8, and Proportional Integral Derivative (PID) controllers9. The simplicity of the PID controller is a significant advantage, prompting many researchers to combine it with other methods to enhance control performance10,11,12. NNC also has numerous applications in manipulator control13. However, the performance of a PID controller depends heavily on its parameter settings, while the effectiveness of an NNC depends on the accuracy of the training samples used in its design, whether it is trained online or offline. Therefore, designing an efficient manipulator controller remains a challenge.

SMC is known for its robustness and insensitivity to changes in system parameters and to external disturbances, allowing the system state to be adjusted rapidly. As the manipulator industry grows, so does the use of SMC in manipulators14,15. However, traditional SMC parameter tuning relies heavily on manual experience and trial and error, which is time-consuming and labor-intensive and often fails to reach a global optimum. It also tends to yield low tuning efficiency and, because of human factors, can lead to system instability. In response, intelligent algorithms that search for the parameters automatically have emerged as a promising solution: they explore the parameter space efficiently and are designed to escape local optima in pursuit of the global optimum16. For example, Han et al.17 applied a hybrid of Gray Wolf Optimization (GWO) and the Whale Optimization Algorithm (WOA) to a manipulator system, optimizing the parameters of both the controller and the observer to enhance performance and stability. Similarly, Gad et al.18 proposed an SMC strategy that improves manipulator tracking accuracy by dynamically fine-tuning the control parameters with a Genetic Algorithm (GA). Particle Swarm Optimization (PSO), a classical swarm intelligence algorithm, is also widely applied to the sliding mode control of manipulators. Tlijani et al.19 proposed an enhanced SMC method based on a higher-order PSO algorithm to address jittery trajectories and poor tracking performance in a three-joint rigid manipulator. Meanwhile, Zahra et al.20 used PSO to determine the optimal controller parameters, and their simulation results show that the resulting trajectory tracking method for a two-link manipulator significantly outperforms the traditional SMC strategy.

PSO algorithms offer strong global search capability and simple implementation. However, they are prone to local optima and sensitive to parameter settings. Many researchers have therefore worked on improving PSO, and various strategies have been shown to yield significant performance gains21,22,23,24,25,26. This paper introduces a new PSO variant, HEPSO, which integrates three strategies: AIW, UFE, and GOPT (AIW and UFE are refinements of strategies from the literature, whereas GOPT is proposed for the first time in this paper). These improvements strengthen PSO's global search capability and convergence speed while reducing its sensitivity to parameter settings. When applied to sliding mode controller parameter optimization, HEPSO improves optimization efficiency and precision as well as system robustness and stability, providing a more efficient and reliable approach to designing complex control systems. The contributions of this paper are as follows:

(1) The particle swarm algorithm is improved through multiple strategies, yielding a new variant: HEPSO.

(2) HEPSO is integrated with a manipulator SMC to form HEPSO-SMC, which enhances tracking accuracy.

The main sections of this paper are organized as follows: Section “Introduction” introduces the significance of the research and discusses the current development of SMC for manipulators; Section “Proposed PSO” outlines the basic principles of PSO and the improved HEPSO, details the enhancement strategies, and evaluates the comparative performance of HEPSO against other PSO variants using the CEC2022 benchmark functions; Section “Manipulators modeling and proposed SMC” presents the dynamic model of manipulators along with the design of the SMC system; Section “Simulation experiment” compares the optimization performance of HEPSO with other PSO variants for the SMC of the manipulators, and demonstrates HEPSO’s superiority in terms of the fitness of the objective function, as well as in combined comparisons of angular position tracking and angular velocity tracking; Section “Conclusions” concludes the findings of the paper.

Proposed PSO

This section introduces the basic principles of PSO and HEPSO. It also provides a detailed explanation of the three strategies employed in HEPSO. The performance of HEPSO is evaluated against other PSO variants using the CEC2022 benchmark functions. Lastly, HEPSO’s superior performance is illustrated through adaptive convergence plots and a performance radar plot.

Original PSO

Introducing an inertia weight into PSO significantly improves its convergence performance27. This refinement has made PSO a preferred choice in the field, leading to its widespread recognition and application to a variety of optimization problems.

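The update rules for the velocity \({v}_{i}^{t}\) and position \({x}_{i}^{t}\) of particle i are given by Eq. (1) and Eq. (2), respectively. Figure 1 shows a schematic diagram of the motion of the particle swarm.

$$v_{i,j}^{t + 1} = wv_{i,j}^{t} + c_{1} r_{1} \left( {p_{i,j}^{t} - x_{i,j}^{t} } \right) + c_{2} r_{2} \left( {g_{i,j}^{t} - x_{i,j}^{t} } \right)$$(1)

$$x_{i,j}^{t + 1} = x_{i,j}^{t} + v_{i,j}^{t + 1}$$(2)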

where w denotes the inertia weight, which determines how much of the previous velocity is retained; \(c_{1}\) and \(c_{2}\) are the two acceleration coefficients that weight the learning from \(p_{i,j}^{t}\) (the personal best position) and \(g_{i,j}^{t}\) (the global best position), respectively; \(r_{1}\) and \(r_{2}\) are two random numbers uniformly distributed in the interval [0,1]; and \(x_{i,j}^{t}\) and \(v_{i,j}^{t}\) denote the j-th dimensional components of \(x_{i}^{t}\) and \(v_{i}^{t}\), respectively.
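As a minimal sketch of Eqs. (1) and (2), the NumPy function below performs one synchronous update of the whole swarm. The name `pso_step` and the optional clamp `v_max` are illustrative assumptions; the defaults w = 0.7 and c1 = c2 = 1.5 mirror the PSO settings reported in the Parameter setting subsection.

```python
import numpy as np

def pso_step(x, v, pbest, gbest, w=0.7, c1=1.5, c2=1.5, v_max=None, rng=None):
    """One inertia-weight PSO update, Eqs. (1)-(2), for the whole swarm.

    x, v, pbest: arrays of shape (n_particles, dim); gbest: shape (dim,).
    r1 and r2 are drawn independently per particle and per dimension.
    """
    rng = np.random.default_rng() if rng is None else rng
    r1 = rng.random(x.shape)
    r2 = rng.random(x.shape)
    v_new = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)   # Eq. (1)
    if v_max is not None:
        v_new = np.clip(v_new, -v_max, v_max)    # optional velocity limit
    x_new = x + v_new                                                # Eq. (2)
    return x_new, v_new

# Usage: minimize the sphere function sum(x**2) with a fixed seed.
rng = np.random.default_rng(1)
x = rng.uniform(-5, 5, size=(20, 3))
v = np.zeros_like(x)
pbest = x.copy()
pfit = np.sum(pbest**2, axis=1)
for _ in range(100):
    gbest = pbest[np.argmin(pfit)]
    x, v = pso_step(x, v, pbest, gbest, rng=rng)
    fit = np.sum(x**2, axis=1)
    better = fit < pfit                          # update personal bests
    pbest[better], pfit[better] = x[better], fit[better]
print(pfit.min())
```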

HEPSO

This paper presents HEPSO, which employs multiple strategies to optimize the parameters of the manipulator SMC: the AIW, UFE, and GOPT strategies, each of which is described in detail in the following sections.

AIW

To reduce ineffective iterations of PSO, enhance its convergence performance, and balance global exploration with local exploitation, the Adaptive Inertia Weight (AIW) strategy is introduced. AIW monitors the motion state of the particle swarm in real time and adjusts the inertia weight accordingly. This paper integrates the AIW28 strategy into PSO to achieve these objectives.

where the maximum inertia weight is \(w_{\max } = 0.9\), the minimum inertia weight is \(w_{\min } = 0.4\), \(fitness\) is the current fitness value of the particle, \(f_{avg}\) is the current average fitness of the swarm, and \(f_{\min }\) is the current minimum fitness of the swarm.

Replacing the constant inertia weight w with Eq. (3) adjusts each particle's weight according to its fitness relative to the minimum and average fitness of the population. This improves the search for the global optimum while maintaining population diversity. The strategy schematic is shown in Fig. 2.
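Eq. (3) itself is not reproduced in this section, so the sketch below assumes a commonly used fitness-based adaptive weight that is consistent with the description above (minimization): particles whose fitness is no worse than the swarm average receive a weight rising linearly from \(w_{\min }\) at \(f_{\min }\) to \(w_{\max }\) at \(f_{avg}\), and worse-than-average particles keep \(w_{\max }\). Treat this form, and the name `adaptive_inertia`, as assumptions rather than the paper's exact Eq. (3).

```python
import numpy as np

def adaptive_inertia(fitness, w_max=0.9, w_min=0.4):
    """Per-particle adaptive inertia weight for minimization (ASSUMED form).

    Particles no worse than the swarm average get a weight that rises linearly
    from w_min (at the best fitness) to w_max (at the average fitness);
    worse-than-average particles keep w_max to favor exploration.
    """
    fitness = np.asarray(fitness, dtype=float)
    f_min, f_avg = fitness.min(), fitness.mean()
    w = np.full_like(fitness, w_max)
    better = fitness <= f_avg
    if f_avg > f_min:
        w[better] = w_min + (w_max - w_min) * (fitness[better] - f_min) / (f_avg - f_min)
    else:
        w[better] = w_min   # degenerate case: all fitness values are equal
    return w

print(adaptive_inertia([1.0, 2.0, 3.0, 10.0]))  # weights 0.4, ~0.567, ~0.733, 0.9
```

Good particles thus exploit with a small weight, while poor particles keep a large weight and continue exploring.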

UFE

In standard PSO, particles may move away from the central region between their personal best and global best positions, which makes the search less efficient. Unified Particle Swarm (UPS)29 addresses this shortcoming by introducing a unified factor that balances the cognitive and social components, directing particles toward the central point between their personal and global best positions, as illustrated in Fig. 3. In this paper, we further improve the selection of the unified factor, propose an adaptive selection mechanism for it, and introduce the Unified Factor Evolution (UFE) strategy.

where \(c_{3}\) is the unified factor.

where \(c_{3old}\) is \(c_{3}\) before the update; \(c_{3new}\) is \(c_{3}\) after the update; \(fitnessgbest\) is the current global optimal fitness; and \(fitnesspbest\) is the current individual optimal fitness.

A larger \(c_{3}\) strengthens individual learning and global search capability, while a smaller \(c_{3}\) strengthens social learning and local convergence speed. Adjusting \(c_{3}\) dynamically produces a smooth transition from exploration to exploitation. Initially, \(c_{3}\) is drawn uniformly at random from [0, 1] (Eq. (6)). It is then updated from the current global and individual optimal fitness using Eq. (7), which keeps \(c_{3}\) within [0, 1]. The strategy schematic is shown in Fig. 4.
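Eqs. (4), (5) and (7) are not reproduced in this section, so the sketch below only illustrates the idea under an assumed velocity form: \(c_{3}\) scales the cognitive term and \(1 - c_{3}\) the social term, matching the statement that a larger \(c_{3}\) favors individual learning. The initialization follows Eq. (6) (one factor per particle, drawn uniformly from [0, 1]); the adaptive update of Eq. (7) is not implemented, and the factor passed in is simply clipped to [0, 1]. The names `init_c3` and `ufe_velocity` are illustrative.

```python
import numpy as np

def init_c3(n_particles, rng):
    # Eq. (6): one unified factor per particle, drawn uniformly from [0, 1].
    return rng.uniform(0.0, 1.0, size=(n_particles, 1))

def ufe_velocity(x, v, pbest, gbest, c3, w, c1=1.5, c2=1.5, rng=None):
    """Velocity update with a unified factor c3 (ASSUMED form, not Eqs. (4)-(5)).

    x, v, pbest: (n_particles, dim); gbest: (dim,); c3: (n_particles, 1);
    w: scalar or (n_particles, 1). c3 weights the cognitive (pbest) term and
    (1 - c3) the social (gbest) term.
    """
    rng = np.random.default_rng() if rng is None else rng
    c3 = np.clip(c3, 0.0, 1.0)              # keep the factor inside [0, 1]
    r1 = rng.random(x.shape)
    r2 = rng.random(x.shape)
    return (w * v
            + c3 * c1 * r1 * (pbest - x)
            + (1.0 - c3) * c2 * r2 * (gbest - x))
```

With \(c_{3} = 1\) the particle follows only its own experience, and with \(c_{3} = 0\) only the swarm's best, so intermediate values interpolate between the two pulls.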

GOPT

In the early phase of the search, when particle fitness values lie predominantly in suboptimal regions, the Laplace distribution offers strong global exploration because of its heavy tails: it occasionally applies large perturbations to elite particles, which increases population diversity and helps the swarm escape local optima. In later iterations, as the swarm approaches near-optimal solutions, the sharp peak of the distribution around its location parameter means that most perturbations are small, enabling precise local exploitation. This dual-phase perturbation mechanism balances exploration and exploitation adaptively and improves both the precision and the stability of the algorithm30,31. The mechanism is particularly useful for high-dimensional multimodal problems, where it coordinates global search breadth with local refinement depth and thereby increases the probability of locating the global optimum. Based on this analysis, this paper proposes the GOPT strategy.

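The probability density function of the Laplace distribution is:

$$f\left( {x\left| {u,b} \right.} \right) = \frac{1}{2b}\exp \left( { - \frac{{\left| {x - u} \right|}}{b}} \right)$$(8)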

where u is the location parameter, which sets the center of the distribution, and b > 0 is the scale parameter, which sets its spread and therefore the magnitude of the samples.

where \(laplace\_noise\) is the generated Laplace noise; \(gbest\) is the global optimal particle; \(\dim\) is the problem dimension; \(gbest_{old}\) is the global optimal particle before updating and \(gbest_{new}\) is the global optimal particle after updating.

The parameter u sets the center of the Laplace distribution, i.e., the expected value of the random perturbation. Setting it to the current \(gbest\) concentrates the perturbation around \(gbest\), making effective use of the available information. The parameter b controls the shape of the distribution and the strength of the perturbation: a smaller b gives a more concentrated distribution and milder perturbations, while a larger b gives a more scattered distribution and stronger perturbations. A larger b is therefore suited to complex problems and a smaller b to simpler ones. The strategy schematic is shown in Fig. 5.
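A minimal sketch of a GOPT refinement step follows. Eqs. (9) and (10) are not reproduced here, so the sketch assumes that the location parameter is u = gbest (implemented as zero-mean Laplace noise added to gbest, which is equivalent), that one candidate is drawn per call, and that the candidate replaces gbest only if it improves the objective (greedy acceptance). NumPy's `Generator.laplace(loc, scale, size)` draws the noise; `gopt_refine` and `sphere` are illustrative names.

```python
import numpy as np

def gopt_refine(gbest, f_gbest, objective, b=0.1, rng=None):
    """Perturb the global best with Laplace noise and keep it only if better.

    ASSUMPTIONS: u = gbest, a single candidate per call, greedy acceptance.
    The paper's exact Eqs. (9)-(10) may differ.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Zero-mean noise added to gbest is the same as sampling with u = gbest.
    laplace_noise = rng.laplace(loc=0.0, scale=b, size=gbest.shape)
    candidate = gbest + laplace_noise
    f_candidate = objective(candidate)
    if f_candidate < f_gbest:          # greedy acceptance (assumption)
        return candidate, f_candidate
    return gbest, f_gbest

def sphere(z):
    return float(np.sum(z**2))

rng = np.random.default_rng(0)
g = np.ones(5)
f = sphere(g)
history = [f]
for _ in range(200):
    g, f = gopt_refine(g, f, sphere, b=0.1, rng=rng)
    history.append(f)
print(history[0], history[-1])   # the best fitness never increases
```

Because acceptance is greedy, the perturbation can only improve gbest; the scale b trades off how far each probe reaches against how often it lands close enough to help.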

The pseudo-code for the HEPSO, which is based on multiple strategies, is presented in Table 1.

HEPSO verification

To verify the algorithm's performance, we compared it on the widely used CEC2022 benchmark suite32. The suite includes unimodal and multimodal optimization problems, among other function types, allowing a thorough evaluation of the algorithm's adaptability and robustness. All experiments were run on a laptop with an Intel Core i5-8265U processor using MATLAB R2022b.

The CEC2022 suite includes 12 single-objective benchmark functions, each available in 2, 10, and 20 dimensions to accommodate optimization problems of varying complexity. In this paper, we selected the highest dimension, 20. The names and numerical requirements of the benchmark functions used in this study are presented in Table 2.

To verify the superior performance of the hybrid strategy, we conducted a series of comparison experiments. To ensure fairness, we carefully selected parameters to maximize the effectiveness of the PSO algorithm. Through these well-designed experiments, we aim to comprehensively evaluate the actual performance and synergy of different strategies in solving optimization problems. The specific parameters chosen include a population size of 100, a maximum of 3000 iterations, a dimension of 20, and 30 independent runs. We compare the algorithms’ performance based on the mean difference and standard deviation obtained from the experiments.

The results in Tables 3 and 4 show that, compared with PSO, the GOPT strategy performs better on 9 of the 12 test functions, the AIW strategy on 9, and the UFE strategy on 7. Each strategy therefore improves the performance of PSO and contributes positively to the overall algorithm.

To verify the performance of the complete HEPSO algorithm, this paper compares HEPSO with PSO, PSO-ST33, UPS29, and WOA34.

The results of the 20-dimensional comparison experiments are presented in Tables 5, 6, and Fig. 6, indicating that HEPSO outperforms the four other algorithms on 7 out of 12 benchmark functions. In direct comparison with PSO, HEPSO demonstrates superior performance in 11 out of 12 functions. These findings underscore HEPSO’s effectiveness in multidimensional optimization problems and further validate the robustness and potential of its algorithmic design.

Although HEPSO performs slightly worse than PSO on the F2 function, the data in Table 7 show that only HEPSO finds the optimal value. This underscores HEPSO's adaptability and effectiveness in high-dimensional optimization problems and supports its potential for addressing complex challenges.

The performance of swarm intelligence algorithms can be assessed with several key indicators. The Number of Fitness Evaluations (NFE) is the total number of objective-function evaluations needed to find the optimal solution; Optimization Time (OT) measures how long it takes to find that solution, reflecting efficiency; Stability of Optimization (SO) evaluates how consistently the algorithm reaches solutions across runs, indicating robustness; Optimization Accuracy (OA) assesses how close the solution found is to the true optimum, showing precision; Coverage (C) describes the distribution of the solutions explored in the solution space, highlighting exploration capability; and Coverage Rate (CR) counts the number of new solutions found per unit time, indicating search speed. Together, these six metrics give a comprehensive evaluation of the algorithms. In this paper, each of the five algorithms is scored on each metric according to its rank, on a uniform scale from 0.2 to 1, as detailed in Table 8. The corresponding comparative radar charts are presented in Fig. 7.
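The rank-based scoring can be sketched as follows. The sketch assumes that the best-ranked of n algorithms scores 1.0 and the worst 1/n (0.2 for the five algorithms compared here), that ties are broken by order of appearance, and that lower is better for NFE, OT, SO and the error-based OA while higher is better for C and CR; the function name `rank_scores` is illustrative.

```python
import numpy as np

def rank_scores(values, lower_is_better=True):
    """Map one metric's values for n algorithms to scores n/n, ..., 1/n.

    ASSUMPTIONS: best rank -> 1.0, worst rank -> 1/n; ties broken by order.
    """
    values = np.asarray(values, dtype=float)
    n = values.size
    keys = values if lower_is_better else -values
    order = np.argsort(keys, kind="stable")    # indices from best to worst
    scores = np.empty(n)
    scores[order] = np.arange(n, 0, -1) / n    # 1.0, (n-1)/n, ..., 1/n
    return scores
```

Summing such scores over several metrics gives a cumulative score (CS) per algorithm, so an algorithm that ranks first everywhere accumulates the maximum.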

Optimization accuracy and stability are typically represented by the mean difference and the standard deviation, while coverage and coverage rate are generally compared on the basis of optimization accuracy. Therefore, we first assess the number of fitness evaluations, optimization time, optimization stability, and optimization accuracy through their cumulative scores (CS), and then compare the algorithms on optimization accuracy, coverage, and coverage rate; the best values are highlighted in bold in the tables. As shown in Tables 8 and 9 and Fig. 7, HEPSO achieves the best overall performance on 10 of the 12 benchmark functions, an optimal rate above 80% (10/12 ≈ 83%), whereas the highest optimal rate among the other algorithms is below 10%.

In summary, HEPSO performed strongly in experimental comparisons with the standard particle swarm algorithm, its variants, and recently proposed advanced algorithms. Its superior results on most benchmark functions indicate a clear improvement over the compared algorithms. This performance is not limited to specific benchmarks: HEPSO remained effective across a range of evaluations, which further attests to its adaptability and robustness.

Manipulators modeling and proposed SMC

This paper takes the articulated manipulator as the object of research because it is highly adaptable to different working environments and task requirements. With its high degree of flexibility and freedom, the articulated manipulator is widely used in industrial automation. In this section, we describe in detail the dynamics of the articulated manipulator, design the SMC for it, and prove the stability of the SMC using the Lyapunov function. Finally, we select the controller parameters to be optimized and design the objective function.

Dynamic modeling for manipulators

Consider first an n-joint manipulator, whose dynamics are governed by the following second-order nonlinear differential equation (11):

where \(q,\dot{q},\ddot{q} \in R^{n}\) are the joint angular displacement, angular velocity, and angular acceleration, respectively; \(M\left( q \right) \in R^{n \times n}\) is the inertia matrix of the manipulator; \(H\left( {q,\dot{q}} \right) \in R^{n \times n}\) represents the centrifugal and Coriolis forces of the manipulator; \(G\left( q \right) \in R^{n}\) is the gravity term; \(F\left( {\dot{q}} \right) \in R^{n}\) is the frictional torque; \(\tau \in R^{n}\) is the control torque; and \(\tau_{d} \in R^{n}\) is the external disturbance.

The dynamics of the manipulator are characterized by the following properties:

Property 1: There exist positive constants \(l_{1}\) and \(l_{2}\) such that:

Property 2: The matrix in Eq. (13) is skew-symmetric:

Property 3: The gravity term \(G\left( q \right)\) satisfies the following relation:

Property 4: There exists a parameter vector \(P\) such that \(M\left( q \right)\), \(H\left( {q,\dot{q}} \right)\), \(G\left( q \right)\), and \(F\left( {\dot{q}} \right)\) satisfy the linear relationship

where \(\Phi \left( {q,q,\rho ,\theta } \right) \in R^{n \times m}\) is the regression matrix, whose entries are known functions of the generalized coordinates of the manipulator and their derivatives, and \(P \in R^{m}\) is the vector of unknown constant parameters describing the mass characteristics of the manipulator.
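Property 2 can be checked numerically for a concrete arm. Eq. (16) and Eqs. (37)-(39) are not reproduced in this section, so the sketch below assumes a textbook planar two-link arm with point masses at the link tips, uses the masses and lengths given in the Parameter setting subsection, and assumes that Eq. (13) is the matrix \(\dot{M}\left( q \right) - 2H\left( {q,\dot{q}} \right)\). It approximates \(\dot{M}\) with a central difference along \(\dot{q}\) and checks that \(N + N^{T}\) vanishes. The function names are illustrative.

```python
import numpy as np

# ASSUMED model: planar two-link arm, point masses at the link tips
# (not necessarily the paper's Eqs. (37)-(39)).
m1 = m2 = 0.5   # kg
l1 = l2 = 1.0   # m

def inertia(q):
    a, b, c = (m1 + m2) * l1**2, m2 * l2**2, m2 * l1 * l2
    cq2 = np.cos(q[1])
    return np.array([[a + b + 2 * c * cq2, b + c * cq2],
                     [b + c * cq2, b]])

def coriolis(q, dq):
    h = m2 * l1 * l2 * np.sin(q[1])
    return np.array([[-h * dq[1], -h * (dq[0] + dq[1])],
                     [h * dq[0], 0.0]])

def skew_residual(q, dq, delta=1e-6):
    """Return N + N^T for N = dM/dt - 2H; it is zero when Property 2 holds."""
    # dM/dt = (dM/dq) dq, approximated by a central difference along dq.
    dM = (inertia(q + delta * dq) - inertia(q - delta * dq)) / (2 * delta)
    N = dM - 2 * coriolis(q, dq)
    return N + N.T

rng = np.random.default_rng(0)
for _ in range(5):
    q, dq = rng.normal(size=2), rng.normal(size=2)
    print(np.abs(skew_residual(q, dq)).max())   # only finite-difference round-off
```

For this model the residual is zero analytically; the printed values only reflect the numerical differentiation error.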

Having discussed the general dynamics of an n-joint manipulator, we now focus on the specific case of a bi-articulated manipulator. The mathematical model of this manipulator is given by Eq. (16) 35:

where \(d\left( t \right)\) is external interference.

The actual plant is:

Lumping parameter variations, modeling errors, and uncertainties into a single external perturbation \(f\left( {q,\dot{q},t} \right)\) gives:

SMC for manipulators

Let the angle commands of the two joints of the manipulator be \(q_{d1}\) and \(q_{d2}\). The tracking errors of the two joints are then

The switching function is:

With \(C = \left( {\begin{array}{*{20}c} {c_{1} } & 1 \\ {c_{2} } & 1 \\ \end{array} } \right)\), we obtain:

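There are three commonly used convergence laws: the constant-rate convergence law, Eq. (23); the exponential convergence law, Eq. (24); and the power convergence law, Eq. (25).

$$\dot{s} = - \varepsilon {\text{sgn}} \left( s \right),\quad \varepsilon > 0$$(23)

$$\dot{s} = - \varepsilon {\text{sgn}} \left( s \right) - ks,\quad \varepsilon > 0,\;k > 0$$(24)

$$\dot{s} = - k\left| s \right|^{\alpha } {\text{sgn}} \left( s \right),\quad k > 0,\;0 < \alpha < 1$$(25)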

The exponential convergence law is chosen for this system:

$$\dot{s} = - \varepsilon {\text{sgn}} \left( s \right) - ks,\quad \varepsilon > 0,\;k > 0$$(26)

where \({\text{sgn}} \left( s \right)\) is the sign function, applied elementwise to s.

Combining Eq. (22) with Eq. (26) gives the control law as:

Because \(f\) is unknown in this control law, an estimate \(f_{c}\) is used in practice. The upper and lower bounds of \(f\) are \(f_{u}\) and \(f_{l}\).

Replacing \(f\) with \(f_{c}\) gives:

Substituting Eq. (28) into Eq. (22) yields:

Taking the upper bound of \(f\) as \(\overline{f} = \left( {\begin{array}{*{20}c} {\overline{{f_{1} }} } & {\overline{{f_{2} }} } \\ \end{array} } \right)^{T}\), \(f_{c}\) is designed as:

Choose the Lyapunov function \(V = \frac{1}{2}s^{T} s\). Differentiating it and substituting Eq. (29) gives:

Because \(\varepsilon > 0\), the sign function gives \(s^{T} \varepsilon {\text{sgn}} \left( s \right) = \varepsilon \sum\nolimits_{i} {\left| {s_{i} } \right|} \ge 0\), so \(- s^{T} \varepsilon {\text{sgn}} \left( s \right)\) is always non-positive. Likewise, since \(k > 0\), \(ks^{T} s \ge 0\), and therefore \(- ks^{T} s \le 0\).

From Eq. (29), the design of \(f_{c}\) ensures that the term \(M^{ - 1} \left( {f_{c} - f} \right)\) cannot make \(\dot{V}\) positive. Thus, the stability of the system is ensured.

In summary, \(\dot{V} = s^{T} \dot{s} \le 0\), and therefore the sliding mode control system of the bi-articulated manipulator is stable36.
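To see the exponential convergence law at work, the sketch below simulates a closed loop under several stated assumptions: a textbook planar two-link arm with point masses at the link tips stands in for Eq. (16), there is no disturbance (so \(f = f_{c} = 0\)), the errors are \(e = q_{d} - q\), and each row \(\left( {c_{i} \;\;1} \right)\) of C acts on \(\left( {e_{i} ,\dot{e}_{i} } \right)\), giving \(s_{i} = c_{i} e_{i} + \dot{e}_{i}\). Requiring \(\dot{s} = - \varepsilon {\text{sgn}} \left( s \right) - ks\) then yields \(\tau = M\left( {\Lambda \dot{e} + \ddot{q}_{d} + \varepsilon {\text{sgn}} \left( s \right) + ks} \right) + H\dot{q} + G\) with \(\Lambda = {\text{diag}}\left( {c_{1} ,c_{2} } \right)\); this is a derivation made for the sketch, not a transcription of Eq. (27). The reference trajectory and initial state follow the Parameter setting subsection, while the gains are placeholders from the middle of the search ranges, not the optimized values of Table 11.

```python
import numpy as np

# ASSUMED point-mass two-link model; no disturbance (f = f_c = 0).
m1 = m2 = 0.5
l1 = l2 = 1.0
g = 9.8

def model(q, dq):
    a, b, c = (m1 + m2) * l1**2, m2 * l2**2, m2 * l1 * l2
    M = np.array([[a + b + 2 * c * np.cos(q[1]), b + c * np.cos(q[1])],
                  [b + c * np.cos(q[1]), b]])
    h = c * np.sin(q[1])
    H = np.array([[-h * dq[1], -h * (dq[0] + dq[1])], [h * dq[0], 0.0]])
    G = np.array([(m1 + m2) * g * l1 * np.cos(q[0]) + m2 * g * l2 * np.cos(q[0] + q[1]),
                  m2 * g * l2 * np.cos(q[0] + q[1])])
    return M, H, G

def reference(t):
    # q_d1 = cos t, q_d2 = sin t, with first and second derivatives.
    qd = np.array([np.cos(t), np.sin(t)])
    dqd = np.array([-np.sin(t), np.cos(t)])
    return qd, dqd, -qd

def smc_torque(q, dq, t, c=(6.0, 6.0), eps=0.6, k=6.0):
    """Exponential-convergence-law SMC with s_i = c_i e_i + de_i (sketch)."""
    qd, dqd, ddqd = reference(t)
    lam = np.diag(c)
    e, de = qd - q, dqd - dq
    s = lam @ e + de
    M, H, G = model(q, dq)
    # Chosen so that ds/dt = -eps*sgn(s) - k*s for the nominal model.
    return M @ (lam @ de + ddqd + eps * np.sign(s) + k * s) + H @ dq + G

def simulate(T=10.0, dt=1e-3):
    q, dq = np.array([0.5, 0.5]), np.array([0.0, 0.0])   # initial state
    for n in range(int(round(T / dt))):
        tau = smc_torque(q, dq, n * dt)
        M, H, G = model(q, dq)
        ddq = np.linalg.solve(M, tau - H @ dq - G)        # plant dynamics
        q, dq = q + dt * dq, dq + dt * ddq                # explicit Euler step
    return q, dq, reference(T)[0]

q_end, dq_end, qd_end = simulate()
print(np.abs(qd_end - q_end).max())   # small residual tracking error
```

The residual error that remains comes from the explicit Euler step and the discontinuous sign term, which makes s chatter in a thin band around zero; a larger \(\varepsilon\) widens that band.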

HEPSO-SMC

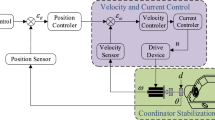

The goal of using HEPSO to tune the SMC of the double-jointed manipulator is to find the parameter values that optimize the overall performance of the control system. The block diagram of the HEPSO-SMC parameter optimization process is presented in Fig. 8.

The parameter matrix C (that is, \(c_{1}\) and \(c_{2}\)) defines the sliding mode surface, which is key to the stability and performance of the system; the choice of this surface strongly affects the dynamic response and control performance. With an appropriate C, the system state reaches the sliding mode surface quickly and smoothly, which enables effective control of the system state and improves the system's stability and reliability.

The parameter \(\varepsilon\) is a key component of the convergence law and plays a crucial role in limiting jitter, which can destabilize the system. Because \(\varepsilon\) is the gain of the switching term \(\varepsilon {\text{sgn}} \left( s \right)\), it also governs how quickly the system reaches the sliding mode surface: a larger \(\varepsilon\) speeds up reaching but amplifies jitter. An appropriate value of \(\varepsilon\) lets the system approach the sliding mode surface more smoothly, improving its resilience to unforeseen conditions and external disturbances.

The parameter k of the exponential convergence law also strongly influences system performance, because it regulates how fast the system state reaches the sliding mode surface and approaches the desired state or trajectory. From a design perspective, rapid convergence is important because it shortens the time the system needs to settle. An appropriate value of k therefore provides fast convergence while preserving stability and avoiding oscillatory or divergent behavior.

To summarize, the optimization of the switching function matrix C, \(\varepsilon\), and k within the convergence law is essential for the SMC system. Properly tuning these parameters ensures that the system state reaches the sliding mode surface promptly, smoothly, and accurately, leading to effective and reliable control outcomes.

Performance index

In control system design, the choice of an appropriate objective function is critical. The most commonly used integral performance criteria are based on the relationship between the system's control error and time37:

(1) Integral of squared error (ISE):

$$ISE = \int_{0}^{\infty } {e^{2} \left( t \right)} dt$$(32)

(2) Integral of absolute error (IAE):

$$IAE = \int_{0}^{\infty } {\left| {e\left( t \right)} \right|} dt$$(33)

(3) Integral of time-weighted squared error (ITSE):

$$ITSE = \int_{0}^{\infty } {te^{2} \left( t \right)} dt$$(34)

(4) Integral of time-weighted absolute error (ITAE):

$$ITAE = \int_{0}^{\infty } {t\left| {e\left( t \right)} \right|} dt$$(35)
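For sampled simulation data, Eqs. (32)-(35) have to be evaluated on a finite horizon. The sketch below truncates the upper limit to the last sample time and applies the trapezoidal rule; the name `integral_indices` is illustrative. For \(e\left( t \right) = e^{ - t}\) the exact values are ISE = 1/2, IAE = 1, ITSE = 1/4 and ITAE = 1, which gives a quick sanity check.

```python
import numpy as np

def integral_indices(t, e):
    """Approximate ISE, IAE, ITSE and ITAE (Eqs. (32)-(35)).

    t: increasing 1-D sample times; e: tracking error sampled at t.
    The infinite upper limit is truncated to t[-1].
    """
    t = np.asarray(t, dtype=float)
    e = np.asarray(e, dtype=float)

    def trap(y):
        # Trapezoidal rule on the (possibly non-uniform) grid t.
        return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(t)))

    return {
        "ISE": trap(e**2),
        "IAE": trap(np.abs(e)),
        "ITSE": trap(t * e**2),
        "ITAE": trap(t * np.abs(e)),
    }

t = np.linspace(0.0, 20.0, 20001)
print(integral_indices(t, np.exp(-t)))
```

The time-weighted criteria (ITSE, ITAE) penalize errors that persist late in the response more heavily than the unweighted ones.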

Smaller values of these objective functions indicate better controller performance, that is, more precise, stable, and reliable control. However, these criteria are used mainly for tuning PID controllers, and the design goals of SMC differ from those of PID control: SMC aims at fast response, good tracking performance, little output jitter, and low energy consumption in the control output. To account for this, the squared control input is added to the ITAE criterion. In addition, to avoid overshoot, a penalty function \(\sigma\) tailored to this phenomenon is integrated into the optimization. The objective function can therefore be expressed as follows:

where \(\omega_{1} = 0.1\), \(\omega_{2} = 0.001\), and \(\omega_{3} = 100\) are the weighting factors of the error and output terms; \(e\left( t \right)\) is the system tracking error; and \(u\left( t \right)\) is the controller output.
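Eq. (36) is not reproduced in this section, so the following is only a hypothetical reading of the description above: a time-weighted absolute error term weighted by \(\omega_{1}\), a squared control-input term weighted by \(\omega_{2}\), and an overshoot penalty \(\sigma\) weighted by \(\omega_{3}\). The sketch assumes that \(\sigma\) integrates the part of each joint's error whose sign is opposite to its initial error, i.e. the part after the joint has crossed past its reference; this definition and the name `smc_cost` are assumptions.

```python
import numpy as np

W1, W2, W3 = 0.1, 0.001, 100.0   # weighting factors given in the text

def trap(t, y):
    # Trapezoidal rule on the sample grid t.
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(t)))

def smc_cost(t, e, u):
    """HYPOTHETICAL form of Eq. (36): ITAE + control effort + overshoot penalty.

    t: (N,) sample times; e, u: (N,) or (N, n_joints) errors and controller outputs.
    """
    t = np.asarray(t, dtype=float)
    e = np.asarray(e, dtype=float).reshape(len(t), -1)
    u = np.asarray(u, dtype=float).reshape(len(t), -1)
    itae = trap(t, t * np.abs(e).sum(axis=1))
    effort = trap(t, (u**2).sum(axis=1))
    # Assumed sigma: error of opposite sign to e(0), i.e. past the reference.
    overshoot = np.maximum(0.0, -e * np.sign(e[0]))
    sigma = trap(t, overshoot.sum(axis=1))
    return W1 * itae + W2 * effort + W3 * sigma

t = np.linspace(0.0, 20.0, 20001)
print(smc_cost(t, np.exp(-t), np.zeros_like(t)))   # no overshoot, no effort
```

The large \(\omega_{3}\) makes any crossing of the reference expensive, which pushes the optimizer toward overshoot-free parameter sets.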

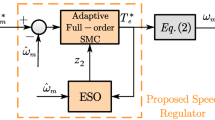

Flow scheme of HEPSO-SMC

The HEPSO optimization process for the SMC is depicted in Figs. 9 and 10. The process begins by initializing the particle swarm parameters, which are assigned to the SMC. The Simulink model is then executed through an S-function, and the resulting data are returned to HEPSO for iterative updating. The inertia weights are computed with the adaptive formula, and the velocity and position updates use the adaptive unified factor strategy. The global best particle is then further trained by the GOPT strategy. Once the iterations converge to a satisfactory solution, the optimal SMC parameter combination is output.
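The loop in Figs. 9 and 10 can be outlined as follows. This is a simplified, hypothetical sketch rather than the pseudo-code of Table 1: the Simulink evaluation is replaced by a stand-in function `surrogate_cost`, the inertia weight follows an assumed fitness-based rule, the velocity uses an assumed \(c_{3}\)-weighted split between the cognitive and social terms with \(c_{3}\) fixed after uniform initialization (the adaptive update of Eq. (7) is omitted), and GOPT is modeled as one greedy Laplace perturbation of gbest per iteration. The swarm size, iteration count, learning factors, velocity limit, and search box follow the Parameter setting subsection; the Laplace scale b = 0.05 and the name `hepso_sketch` are assumptions.

```python
import numpy as np

# Search box for (c1, c2, epsilon, k), from the Parameter setting subsection.
LOWER = np.array([4.0, 4.0, 0.4, 4.0])
UPPER = np.array([8.0, 8.0, 0.8, 8.0])

def hepso_sketch(cost, n_particles=30, iters=50, w_max=0.9, w_min=0.4,
                 c1=1.5, c2=1.5, v_max=0.5, b=0.05, seed=0):
    rng = np.random.default_rng(seed)
    dim = LOWER.size
    x = rng.uniform(LOWER, UPPER, size=(n_particles, dim))
    v = np.zeros_like(x)
    c3 = rng.uniform(0.0, 1.0, size=(n_particles, 1))      # Eq. (6)
    fit = np.array([cost(p) for p in x])
    pbest, pfit = x.copy(), fit.copy()
    gi = int(np.argmin(pfit))
    gbest, gfit = pbest[gi].copy(), pfit[gi]
    for _ in range(iters):
        # AIW (assumed form): small weight for good particles, w_max otherwise.
        f_min, f_avg = fit.min(), fit.mean()
        w = np.full(n_particles, w_max)
        better = fit <= f_avg
        if f_avg > f_min:
            w[better] = w_min + (w_max - w_min) * (fit[better] - f_min) / (f_avg - f_min)
        else:
            w[better] = w_min
        # UFE-style update (assumed form), clipped to velocity and box limits.
        r1, r2 = rng.random(x.shape), rng.random(x.shape)
        v = (w[:, None] * v + c3 * c1 * r1 * (pbest - x)
             + (1.0 - c3) * c2 * r2 * (gbest - x))
        v = np.clip(v, -v_max, v_max)
        x = np.clip(x + v, LOWER, UPPER)
        fit = np.array([cost(p) for p in x])
        improved = fit < pfit
        pbest[improved], pfit[improved] = x[improved], fit[improved]
        gi = int(np.argmin(pfit))
        if pfit[gi] < gfit:
            gbest, gfit = pbest[gi].copy(), pfit[gi]
        # GOPT (assumed form): one Laplace probe around gbest, kept if better.
        cand = np.clip(gbest + rng.laplace(0.0, b, size=dim), LOWER, UPPER)
        f_cand = cost(cand)
        if f_cand < gfit:
            gbest, gfit = cand, f_cand
    return gbest, gfit

def surrogate_cost(p):
    # HYPOTHETICAL stand-in for "run the Simulink model and evaluate Eq. (36)".
    target = np.array([6.0, 5.0, 0.5, 7.0])
    return float(np.sum((p - target) ** 2))

best, best_cost = hepso_sketch(surrogate_cost)
print(best, best_cost)
```

In a real implementation, `surrogate_cost` would be replaced by a function that runs the closed-loop simulation for the given (c1, c2, ε, k) and returns the objective value; every other step of the loop stays the same.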

Simulation experiment

This section first specifies the ideal trajectory of the manipulator and the parameter configurations of the different controller algorithms. It then compares the fitness values that the different controllers achieve on the objective function of the bi-articulated manipulator system, and finally compares their tracking performance, highlighting the superior performance of HEPSO-SMC.

Parameter setting

The ideal angle commands for the bi-articulated manipulator are \(q_{d1} = \cos t\) and \(q_{d2} = \sin t\), and the initial state of the system is \(\left( {\begin{array}{*{20}c} {q_{1} } & {\dot{q}_{1} } & {q_{2} } & {\dot{q}_{2} } \\ \end{array} } \right) = \left( {\begin{array}{*{20}c} {0.5} & 0 & {0.5} & 0 \\ \end{array} } \right)\). The simulation parameters of the manipulator are \(m_{1} = m_{2} = 0.5\;({\text{kg}})\), \(l_{1} = l_{2} = 1\;({\text{m}})\), and \(g = 9.8\;({\text{N/kg}})\). From these data, the mass inertia matrix, the ensemble force matrix, and the disturbance matrix are obtained as Eqs. (37), (38), and (39), respectively, and are substituted into Eq. (16). The two-joint manipulator is shown in Fig. 11.

To validate the control performance of HEPSO-SMC, it was compared with three SMCs optimized by other intelligent algorithms: PSO-SMC, IPSO-SMC, and UPS-SMC. IPSO and UPS incorporate the strategies that this paper adapts from prior work, whereas the third strategy (GOPT), which is the original contribution of this paper, is not included in these baselines.

After simulation and verification in MATLAB R2022b, the following settings were identified as the best for each algorithm. For PSO, the learning factors are \(c_{1} = c_{2} = 1.5\), the inertia weight is \(w = 0.7\), the particle velocity limits are \(v_{\max } = 0.5\) and \(v_{\min } = - 0.5\), the swarm size is 30, and the number of iterations is 50. For IPSO, UPS, and HEPSO, the inertia weight bounds are \(w_{\max } = 0.9\) and \(w_{\min } = 0.4\); \(c_{3}\) is selected randomly within [0,1] for UPS and adaptively within [0,1] for HEPSO. All remaining parameters follow the PSO settings.

After this initial parameterization, the search range for each SMC parameter is set to \(c_{1} \in \left[ {4,8} \right]\), \(c_{2} \in \left[ {4,8} \right]\), \(\varepsilon \in \left[ {0.4,0.8} \right]\), and \(k \in \left[ {4,8} \right]\). The fitness values obtained by each algorithm are illustrated in Fig. 12 and listed in Table 10, where the optimal fitness values are computed from the objective function of Eq. (36). Figure 12 shows that HEPSO achieves the best fitness value among the tested algorithms, indicating that it has the strongest optimization capability. After several simulation runs, the optimal parameters of the controlled system are presented in Table 11.

Result analysis

Comparison and verification with other methods

Figures 13 and 14 compare the joint rotation angles and angular velocities achieved by the four algorithm-optimized controllers, and HEPSO-SMC clearly tracks best. Compared with the other three controllers, it reaches the ideal trajectory faster and then follows it more closely. This advantage in both response speed and tracking accuracy results from the efficiency of its optimization algorithm, which enables precise control of the joint angles in a relatively short time.

Comparative validation with legacy approaches

Before optimization, the SMC parameters were tuned empirically (Table 12). The tracking performance of HEPSO-SMC was then compared with that of this unoptimized SMC (Figs. 15, 16, and 17; Table 13). All three figures show that HEPSO-SMC tracks both faster and more precisely than the unoptimized SMC.
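The "time" and "precision" aspects of tracking can be quantified in several ways. One common choice, assumed here rather than taken from Table 13, is the settling time into a tolerance band around the reference and the root-mean-square (RMS) tracking error. The helper functions below are hypothetical and operate on equally sampled joint-angle signals.

```python
import math


def rms_error(ref, actual):
    """Root-mean-square tracking error between two equally sampled signals."""
    if len(ref) != len(actual) or not ref:
        raise ValueError("signals must be non-empty and the same length")
    return math.sqrt(sum((r - a) ** 2 for r, a in zip(ref, actual)) / len(ref))


def settling_time(t, ref, actual, tol):
    """First time after which |ref - actual| stays within tol until the end.
    Returns None if the error is outside the band at the final sample."""
    settled_at = None
    for ti, r, a in zip(t, ref, actual):
        if abs(r - a) <= tol:
            if settled_at is None:
                settled_at = ti   # error entered the band
        else:
            settled_at = None     # error left the band: reset
    return settled_at


if __name__ == "__main__":
    # Synthetic illustration only: reference sin(t), response with a decaying error
    t = [i * 0.01 for i in range(501)]
    ref = [math.sin(x) for x in t]
    act = [math.sin(x) - math.exp(-5 * x) for x in t]
    print("RMS error:", rms_error(ref, act))
    print("settling time (tol 0.01):", settling_time(t, ref, act, 0.01))
```

Resetting `settled_at` whenever the error leaves the band ensures that a brief pass through the tolerance band during an oscillation is not mistaken for settling.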

Figures 18 and 19 and Table 14 show the output of each controller, which is also the input to the manipulator system. The two controllers behave quite differently. The SMC output (Fig. 18) responds rapidly at first but then shows substantial overshoot and sustained oscillation, fluctuating between −4 and 4 with a standard deviation of approximately 3. This large output variability after the initial transient degrades system stability and control precision.

In contrast, the HEPSO-SMC output (Fig. 19) follows a more stable trajectory, with minimal overshoot, fluctuations confined to the narrower range of −2 to 2, and a standard deviation of about 1. This suggests that HEPSO-SMC reaches steady state more efficiently and remains stable throughout operation. Although the rapid initial response of the SMC is an advantage, its prolonged oscillations delay stabilization, which is generally undesirable in practical applications.
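The fluctuation range and standard deviation quoted above can be computed from a logged controller output over a chosen steady-state window. The sketch below assumes the population standard deviation (the text does not say which estimator is used) and a hypothetical window start index `start`. It also shows the arithmetic behind a range comparison: narrowing from [−4, 4] to [−2, 2] halves the peak-to-peak width from 8 to 4, a 50% reduction.

```python
import statistics


def variability(u, start=0):
    """Fluctuation range (min, max), peak-to-peak width and population
    standard deviation of a sampled control signal u, from index `start` on."""
    window = u[start:]
    lo, hi = min(window), max(window)
    return {"min": lo, "max": hi, "peak_to_peak": hi - lo,
            "std": statistics.pstdev(window)}


def relative_reduction(before, after):
    """Fractional reduction from `before` to `after` (0.5 means 50%)."""
    return (before - after) / before


if __name__ == "__main__":
    # Range widths quoted in the text: [-4, 4] for SMC, [-2, 2] for HEPSO-SMC
    print(relative_reduction(4 - (-4), 2 - (-2)))  # prints 0.5
```

Choosing `start` after the initial transient matters: including the start-up spike would inflate both the range and the standard deviation of either controller.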

HEPSO-SMC therefore stabilizes faster and shortens the transition period, indicating better adaptability to changes in the input signal and sustained high-precision control. By significantly reducing output variability, the HEPSO-SMC design addresses a key limitation of traditional SMC and improves both system stability and control accuracy.

In conclusion, HEPSO-SMC delivers better output performance in both dynamic response and steady-state behavior. It is therefore better suited to applications that require both rapid response and high stability, where precise and swift control is critical.

Conclusions

This study aimed to enhance the tracking control performance of manipulators. We proposed HEPSO, a novel particle swarm optimization variant that combines three strategies (AIW, UFE, and GOPT), and compared it with PSO, IPSO, UPS, and WOA on the CEC2022 benchmark functions. HEPSO showed improved convergence speed and accuracy: it achieved an optimal rate exceeding 80%, whereas the highest optimal rate among the other algorithms was below 10%. In trajectory tracking experiments on the 2-joint manipulator SMC system, HEPSO-SMC showed better tracking capability and robustness than PSO-SMC, IPSO-SMC, and UPS-SMC. Compared with manual parameter tuning, our approach performs significantly better: the fluctuation range of the tracking error is reduced by 50% and the stability of the controller is increased by 50%, which can be attributed to the design of the proposed controller. These results improve the performance of SMC in tracking applications and offer a new perspective for future work in the SMC domain. However, this paper does not address extreme scenarios such as accidental collisions or component degradation; future research will focus on improving control performance under these conditions.

Data availability

The data are available upon request by contacting lzw20230221@163.com.

References

Joo, J. et al. Parallel 2-DoF manipulator for wall-cleaning applications. Autom. Constr. 101, 209–217 (2019).

Bayle, B., Fourquet, J. Y. & Renaud, M. Manipulability of wheeled mobile manipulators: Application to motion generation. Int. J. Robot. Res. 22(7–8), 565–581 (2003).

Yao, Z. et al. Data-driven control of hydraulic manipulators by reinforcement learning. IEEE/ASME Trans. Mechatron. (2023).

Aghili, F. Robust impedance-matching of manipulators interacting with uncertain environments: Application to task verification of the space station’s dexterous manipulator. IEEE/ASME Trans. Mechatron. 24(4), 1565–1576 (2019).

Ghodsian, N. et al. Mobile manipulators in industry 4.0: A review of developments for industrial applications. Sensors 23, 8026 (2023).

Zhang, Y. et al. A passivity-based approach for kinematic control of manipulators with constraints. IEEE Trans. Ind. Inform. 16(5), 3029–3038 (2019).

Yu, X., Feng, Y. & Man, Z. Terminal sliding mode control–an overview. IEEE Open J. Ind. Electron. Soc. 2, 36–52 (2020).

Narendra, K. S. Neural networks for control theory and practice. Proc. IEEE 84, 1385–1406 (1996).

Chao, C. T. et al. Equivalence between fuzzy PID controllers and conventional PID controllers. Appl. Sci. 7, 513 (2017).

Kuc, T. Y. & Han, W. G. An adaptive PID learning control of robot manipulators. Automatica 36, 717–725 (2000).

Ardeshiri, R. R. et al. Robotic manipulator control based on an optimal fractional-order fuzzy PID approach: SiL real-time simulation. Soft Comput. 24, 3849–3860 (2020).

Rani, K. & Kumar, N. Intelligent controller for hybrid force and position control of robot manipulators using RBF neural network. Int. J. Dyn. Control 7, 767–775 (2019).

Liu, H. et al. Adaptive control of manipulator based on neural network. Neural Comput. Appl. 33, 4077–4085 (2021).

Chávez-Vázquez, S. et al. Trajectory tracking of Stanford robot manipulator by fractional-order sliding mode control. Appl. Math. Model. 120, 436–462 (2023).

Zhang, Y. et al. Adaptive non-singular fast terminal sliding mode based on prescribed performance for robot manipulators. Asian J. Control 25, 3253–3268 (2023).

Zhang, J. et al. High-order sliding mode control for three-joint rigid manipulators based on an improved particle swarm optimization neural network. Mathematics 10, 3418 (2022).

Han, S. I. Fractional-order sliding mode constraint control for manipulator systems using grey wolf and whale optimization algorithms. Int. J. Control Autom. Syst. 19, 676–686 (2021).

Gad, M. et al. Optimized power rate sliding mode control for a robot manipulator using genetic algorithms. Int. J. Control Autom. Syst. 22, 3166–3176 (2024).

Tlijani, H., Jouila, A. & Nouri, K. Optimized sliding mode control based on cuckoo search algorithm: Application for 2DF robot manipulator. Cybern. Syst. (2023).

Zahra, K. A. & Abdalla, T. Y. A PSO optimized RBFNN and STSMC scheme for path tracking of robot manipulator. Bull. Electr. Eng. Inform. 12, 2733–2744 (2023).

Zibaei, H. & Mesgari, M. S. Improved discrete particle swarm optimization using bee algorithm and multi-parent crossover method (case study: Allocation problem and benchmark functions). Preprint at http://arxiv.org/abs/2403.10684 (2024).

Fang, X. et al. An improved near-field weighted subspace fitting algorithm based on niche particle swarm optimization for ultrasonic guided wave multi-damage localization. Mech. Syst. Signal Process. 215, 111403 (2024).

Han, B., Li, B. & Qin, C. A novel hybrid particle swarm optimization with marine predators. Swarm Evol. Comput. 83, 101375 (2023).

Gu, Q. et al. A constrained multi-objective evolutionary algorithm based on decomposition with improved constrained dominance principle. Swarm Evol. Comput. 75, 101162 (2022).

Wang, F., Wang, X. & Sun, S. A reinforcement learning level-based particle swarm optimization algorithm for large-scale optimization. Inf. Sci. 602, 298–312 (2022).

Xu, H. et al. A hybrid differential evolution particle swarm optimization algorithm based on dynamic strategies. Sci. Rep. 15, 4518. https://doi.org/10.1038/s41598-024-82648-5 (2025).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In Proceedings of ICNN’95 - International Conference on Neural Networks Vol. 4, 1942–1948 (1995).

Harrison, K. R., Engelbrecht, A. P. & Ombuki-Berman, B. M. Self-adaptive particle swarm optimization: A review and analysis of convergence. Swarm Intell. 12, 187–226 (2018).

Tsai, H. C. Unified particle swarm delivers high efficiency to particle swarm optimization. Appl. Soft Comput. 55, 371–383 (2017).

Tian, Z. & Gai, M. Football team training algorithm: A novel sport-inspired meta-heuristic optimization algorithm for global optimization. Expert Syst. Appl. 245, 123088 (2024).

Cardoso, D. M., Jacobs, D. P. & Trevisan, V. Laplacian distribution and domination. Graphs Comb. 33, 1283–1295 (2017).

Bujok, P. & Kolenovsky, P. Eigen crossover in cooperative model of evolutionary algorithms applied to CEC 2022 single objective numerical optimisation. In Proceedings of 2022 IEEE Congress on Evolutionary Computation (CEC) 1–8 (2022).

Kiani, T. et al. An improved particle swarm optimization with chaotic inertia weight and acceleration coefficients for optimal extraction of PV models parameters. Energies 14, 2980 (2021).

Nasiri, J. & Khiyabani, F. M. A whale optimization algorithm (WOA) approach for clustering. Cogent Math. Stat. 5, 1483565 (2018).

Jouila, A. & Nouri, K. An adaptive robust nonsingular fast terminal sliding mode controller based on wavelet neural network for a 2-DOF robotic arm. J. Frankl. Inst. 357, 13259–13282 (2020).

Jie, W. et al. Terminal sliding mode control with sliding perturbation observer for a hydraulic robot manipulator. IFAC-PapersOnLine 51, 7–12 (2018).

Zhang, D. L., Tang, Y. G. & Guan, X. P. Optimum design of fractional order PID controller for an AVR system using an improved artificial bee colony algorithm. Acta Autom. Sin. 40, 973–979 (2014).

Acknowledgements

This work was supported by Liaoning Provincial Department of Education Basic Research Projects for Higher Education Institutions, China (LJKZ0301).

Author information

Contributions

Zhongwei Liu wrote the main manuscript text and He Wang reviewed it. Zhongwei Liu, Tianyu Zhang and Sibo Huang validated the algorithm, and He Wang revised the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, Z., Zhang, T., Huang, S. et al. HEPSO-SMC: a sliding mode controller optimized by hybrid enhanced particle swarm algorithm for manipulators. Sci Rep 15, 16580 (2025). https://doi.org/10.1038/s41598-025-00728-6
