Abstract

Effective analysis of medical data is essential for understanding complex healthcare phenomena. Probability distribution models offer a structured approach to uncover patterns in such data, particularly for studying disease progression, survival analysis and many more. In this study, we explore a novel probability distribution model, derived by applying the DUS transformation to the standard Rayleigh distribution. We thoroughly investigate the statistical properties of the proposed model and derive key reliability measures to demonstrate its applicability in reliability analysis. To ensure precise parameter estimation, various estimation methods are evaluated, and their effectiveness is assessed through a detailed simulation study using bias, mean squared error, and mean relative error as performance criteria. The developed model’s practical applicability is demonstrated with an analysis of COVID-19 data, comparing its performance with several well-known distributions. The results highlight the flexibility and accuracy of the model, establishing it as a powerful and reliable tool for advanced statistical modelling in healthcare research.

Similar content being viewed by others

Introduction

In the realm of medical science, the accurate analysis and interpretation of data are crucial for advancing healthcare outcomes, improving treatment strategies, and enhancing clinical decision-making. Medical data, however, is often complex, high-dimensional, and riddled with uncertainties arising from biological variability, environmental influences, and limitations in data collection. To address these challenges, probability distribution models provide a powerful and flexible approach for analysing and interpreting medical data. These models not only account for the inherent randomness in medical phenomena but also offer structured ways to quantify uncertainty, predict outcomes, and make evidence-based decisions. This research article focuses on the application of probability distribution models in the context of medical science data with special reference to COVID-19. The COVID-19 pandemic has underscored the importance of effective data analysis in medical science, particularly in understanding the spread, impact, and mitigation of infectious diseases. Such data encompassing infection rates, recovery times, mortality, and transmission patterns, is inherently stochastic, meaning it involves elements of randomness. Probability distributions provide a framework for modelling these random phenomena and understanding their behaviour over time. By fitting appropriate distributions to the observed data, researchers can predict outcomes like future case counts, hospitalization rates, or the probability of transmission under different conditions. Thus, from modelling disease spread and patient survival rates to assessing treatment efficiency and risk factors, probability distribution models play a vital role in capturing real-world variability in clinical and epidemiological data.

In recent years, researchers have increasingly focused on developing families of probability distributions for modelling medical data. Notable contributions in this area include the innovative lifetime distribution introduced by Almongy et al.1, which merges the Rayleigh distribution with the extended odd Weibull family to form the extended odd Weibull Rayleigh distribution, specifically aimed at modelling COVID-19 mortality rates. Additionally, Sindhu et al.2 explored a generalization of the Gumbel type-II distribution for analysing COVID-19 data, while in another study Sindhu et al.3 developed an exponentiated transformation of Gumbel type-II to handle two datasets of COVID-19 death cases. Liu et al.4 proposed a novel statistical model known as the arcsine modified Weibull distribution, demonstrating its effectiveness through COVID-19 data modelling. Kilai et al.5 introduced a new flexible statistical model for analysing COVID-19 mortality rates. Hossam et al.6 presented an extension of the Gumbel distribution, incorporating a new alpha power transformation method to enhance its application to COVID-19 data. Gemeay et al.7 contributed by proposing a two-parameter statistical distribution, combining exponential and gamma distributions, and demonstrated its superiority using COVID-19 datasets. Recently, Alomair et al.8 introduced the exponentiated XLindley distribution, showcasing its applicability through three real-world datasets, including COVID-19 mortality rates, precipitation measurements, and failure times for repairable items. Further advancements in probability distributions include the exponentiated Chen distribution, as examined by Dey et al.9, who investigated its properties and estimation methods while applying it to real-world datasets to assess its potential for statistical analysis. Additionally, Dey et al.10 studied the generalized exponential distribution, particularly in relation to ozone data. Rather and Subramanian11 introduced the exponentiated Mukherjee-Islam distribution, demonstrating its efficiency through real-world applications. Following this, Rather and Özel12 explored the weighted Power Lindley distribution. Moreover, Rather and Özel13 continued their work with the study of a new length-biased Power Lindley distribution, including an analysis of its properties and applications. Rather et al.14 proposed a new class of probability distribution called the exponentiated Ailamujia distribution, finding that it offers a superior fit compared to traditional distributions. Singh et al.15 examined the exponentiated Nadarajah–Haghighi distribution, while Ahmad et al.16 developed a novel Sin-G class of distributions, including an illustration involving the Lomax distribution. Qayoom and Rather17 contributed by exploring the Weighted Transmuted Mukherjee-Islam distribution, along with a comprehensive study of the length-biased Transmuted distribution18 as an extension of the Mukherjee-Islam distribution.

In this research article, we aim to develop a new extension of the Rayleigh distribution using the DUS transformation approach initially proposed by Kumar et al.19. The DUS transformation has been extensively studied by numerous researchers to create enhanced probability models for the analysis and interpretation of real-world data. Notable contributions in this area include those by Tripathi et al.20, Abujarad et al.21, Kavya and Manoharan22, Gul et al.23, Deepti and Chacko24, Gauthami and Chacko25, Karakaya et al.26, Thomas and Chacko27 and Gül et al.28. More recently, Qayoom et al.29 extended the DUS transformation to the Lindley distribution, demonstrating its utility in evaluating and enhancing system reliability.

New extension of Rayleigh distribution

The Rayleigh distribution is a continuous probability distribution named after the British Scientist Lord Rayleigh30 and is characterized by its scale parameter, which influences the shape and spread of the data. The distribution is widely utilized across various fields, including life testing experiments, communication theory, medical testing and clinical studies, reliability analysis, applied statistics and many more fields. Given its importance and the aim to enhance its versatility, several researchers have proposed extensions to the Rayleigh distribution. Notably, Kundu and Raqab31 introduced the generalized Rayleigh distribution. MirMostafaee et al.32 presented a new extension called the Marshall–Olkin extended generalized Rayleigh distribution, which builds on the framework established by Marshall and Olkin33. Additionally, Rashwan34 examined the Kumaraswamy Rayleigh distribution. Further contributions include Ateeq et al.35, who derived the Rayleigh-Rayleigh distribution (RRD) using the transformed transformer technique. Bantan et al.36 explored the Unit-Rayleigh distribution, assessing its significance through real-life datasets. Falgore et al.37 developed the inverse Lomax-Rayleigh distribution for modelling medical data. More recently, Ahmad et al.38 introduced a new family of distributions inspired by the hyperbolic Sine function generator, with the Rayleigh distribution serving as the base for the newly established hyperbolic Sine-Rayleigh distribution.

Consider a random variable \(V\) following Rayleigh distribution with parameter \(\tau > 0\), then the probability density function (PDF) of \(V\) is given by

Here \(\tau > 0\) is the scale parameter characterizing the shape and spread of the distribution. The associated cumulative distribution function (CDF) is given by \(F_{V} (v) = 1 - e^{{ - \frac{{v^{2} }}{{2 \tau^{2} }}}}\).

In this section, we will generalize the PDF of Rayleigh distribution by following DUS transformation approach suggested by Kumar et al.19. So, the PDF of the new generalized Rayleigh distribution is

From now onwards this new generalized Rayleigh distribution expressed by Eq. (1) will be called as DUS Rayleigh distribution. Tripathi and Agiwal39 have also studied and discussed this new generalization of Rayleigh distribution. The behavior of the DUS Rayleigh distribution for different values of its parameter is graphically presented in Figs. 1 and 2 as below:

From the above graphical representation of the PDF of DUS Rayleigh distribution, it can be observed that the distribution is positively skewed (right-skewed), that is, longer tail on the right. For small \(\tau\), the curve is sharply peaked near small values of variable V, indicating that most values are concentrated in a narrow range around the mode of the distribution. For large \(\tau\), the curve becomes more stretched out, with a broader range of values. This implies that the values are less concentrated around the mode.

The corresponding cumulative distribution function (CDF) of DUS Rayleigh distribution is given by

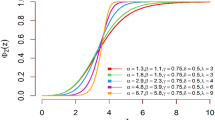

The graphical representation of the CDF of DUS Rayleigh distribution is shown in Figs. 3 and 4.

It can be observed that the CDF Curve stretches in between 0 and 1. This implies that the expression 4 is a valid CDF. From the graphical representation of the CDF of DUS Rayleigh distribution, the CDF shifts right as \(\tau\) increases. This means that for the same value \(\nu\) of given random variable V, the probability of finding a value below \(\nu\) decreases. For small \(\tau\), the curve rises steeply at small values of \(\nu\), meaning that most values are clustered around a lower range. For large \(\tau\), the curve shifts right, meaning that values are more spread out and higher values are more likely, that is, the probability of small values is lower. In other words, it can be interpreted that larger \(\tau\) values make the CDF increase more gradually, spreading the probability over a wider range.

Statistical properties

In this section, some of the general statistical properties of the newly developed probability distribution will be explored. These properties include quantile function, moments of the distribution, coefficient of variation, measure of skewness, measure of kurtosis and incomplete moments. In this section, we will also compute moment generating function, characteristics function and cumulant generating function for the explored DUS Rayleigh distribution.

Quantile function

A quantile function provides the value below which a given percentage of data falls. Given a cumulative distribution function \(F_{X} (x) = P(X \le x) = u\) such that \(u \in (0,1)\) of a continuous random variable \(X\), then the quantile function denoted by \(Q(u)\) for \(u \in (0, 1)\) is defined as

For the DUS Rayleigh distribution, the quantile function is obtained by determining the value of \(V = v\) for which \(G_{V} (v) = u\), that is

which is the required expression of quantile function for DUS Rayleigh distribution and is very essential for assessing behaviour of the distribution with the help of simulation study.

Moments

Moments are essential mathematical tools used to describe and analyze the properties of datasets or mathematical functions, offering a mathematical framework to capture essential properties like central tendency, spread (variability) and shape. Their versatility makes them indispensable across diverse fields, enabling deeper insights and more precise predictions in both theoretical and applied research.

Now, the \(r^{th}\) moment about origin of the given model is

Using Eq. (1) in the above expression, we get

Put \(\frac{{v^{2} }}{{2 \tau^{2} }} = z\), so \(dv = (2 )^{{ - \frac{1}{2}}} \tau z^{{ - \frac{1}{2}}} dz\).

When \(v \to 0, {\text{then}} z \to 0\) and, when \(v \to \infty , {\text{then}} z \to \infty\), therefore

Putting \(r = 1, 2, 3, {\text{and}} 4\) in Eq. (3) we obtain first four moments about origin and are expressed as

So, the variance \((\mu_{2} )\) and coefficient of variation \(({\text{C}}{\text{.V}})\) for the given model respectively are calculated as

\(\mu_{2} = \sum\limits_{k = 0}^{\infty } {\frac{{( - 1)^{k} }}{k!}\left( {\frac{e}{e - 1}} \right) \frac{{2 (\tau )^{2} }}{{(k + 1)^{2} }}} - \left( {\sum\limits_{k = 0}^{\infty } {\frac{{( - 1)^{k} }}{k!}\left( {\frac{e}{e - 1}} \right) \frac{{(\sqrt 2 \tau ) \Gamma \left( \frac{3}{2} \right)}}{{(k + 1)^{\frac{3}{2}} }}} } \right)^{2}\) and

Moreover, the coefficient of skewness \((\gamma_{1} )\) and the coefficient of kurtosis \((\gamma_{2} )\) for the explored model are given by

The behavior of the mean, variance, C.V, coefficient of skewness and kurtosis for the DUS Rayleigh distribution for different values of the parameter involved in the distribution is presented in Table 1 below:

Incomplete moments

The \(r^{th}\) incomplete moment about origin for the given model is given by

After following the steps used in the computation of moments of the distribution, the resultant expression for incomplete moments is given by

Moment generating function

The moment generating function of the given model is computed as \(M_{V} (t) = E(e^{tv} ) = \int\limits_{0}^{\infty } {e^{tv} } g(v; \tau )dv\)

Similarly, the characteristics function and cumulant generating function of given model are given by

Reliability analysis measures

In this section we will explain various measures needed for studying reliability analysis to evaluate the performance of a component or system. These measures include survival function, hazard rate, mean residual life, mean past life, and stress-strength reliability. All these measures will be derived in relation to the DUS Rayleigh distribution.

Survival function and hazard function

The survival function for the DUS Rayleigh distribution is given by

It can be further simplified as

The graphical behaviour of the survival function for different parameter values is illustrated in Figs. 5 and 6. It can be observed from graphical representation of the survival function based on DUS Rayleigh distribution that for small \(\tau\), the survival function declines sharply, indicating that failures occur early and survival probability decreases rapidly. This can be interpreted as the system has low reliability and short lifespan. On the other hand for large \(\tau\), the survival function shifts right and declines more slowly, implies that failures are spread over a longer duration and survival remains high for a longer time. This indicates that the system is more reliable, with a longer lifespan.

The hazard function based on DUS Rayleigh distributions expressed as a ratio of PDF of DUS Rayleigh distribution and its survival function. Mathematically, it is expressed as

Mean residual life

Mean residual life (MRL) is a measure that describe “the expected remaining lifetime of a system, component or individual given that it has survived up to a certain time point”. In simple words MRL states “How much more time can the system be expected to function, given that it is still operational at time \(T\)”. Mathematically, for a non-negative random variable \(T\) denoting the failure time of a component or system and follows DUS Rayleigh distribution, then the MRL at time \(T\) is given by

On substituting the expression of PDF of DUS Rayleigh distribution shown in Eq. (1), the MRL at time \(T\) becomes

Let \(\frac{{v^{2} }}{{2 \tau^{2} }}(k + 1) = z\), then \(dv = \left( {\frac{{ \tau^{2} }}{k + 1}} \right)\left( {\frac{{2 z \tau^{2} }}{k + 1}} \right)^{{ - \frac{1}{2}}} dz\).

When \(v \to t, {\text{then}} z \to \frac{{t^{2} }}{{2 \tau^{2} }}(k + 1)\) and when \(v \to \infty ,{\text{then}} z \to \infty\), therefore

where, \(G_{T} (t)\) represents the CDF of DUS Rayleigh distribution given in Eq. (2).

Mean past life

The mean past life (MPL) at time measures “the expected amount of time that the system or component has already operated, given that it is still functioning at time \(T\)”. In other words, it explains “how long has the system or component been operating on average, given that it is still functioning at time \(T\)”. Suppose that the non-negative random variable \(T\) denoting the failure time of a component or system follows DUS Rayleigh distribution. Then the MPL is given by

Using Eq. (1), then MPL can be expressed as

Let \(\frac{{v^{2} }}{{2 \tau^{2} }}(k + 1) = z\), then \(dv = \left( {\frac{{ \tau^{2} }}{k + 1}} \right)\left( {\frac{{2 z \tau^{2} }}{k + 1}} \right)^{{ - \frac{1}{2}}} dz\).

When \(v \to t, {\text{then}} z \to \frac{{t^{2} }}{{2 \tau^{2} }}(k + 1)\) and when \(v \to 0,{\text{then}} z \to 0\), therefore

Where, \(G_{T} (t)\) denotes the CDF of DUS Rayleigh distribution given in Eq. (2).

Stress-strength reliability

Stress-strength reliability provides an estimate of the probability that a component or system will not fail when exposed to a given stress or load. The purpose is to compare strength with stress, that is, the capacity of the system to withstand the actual load applied to the component or system. This approach is essential in designing reliable products as it accounts for the variability in both stress and strength distribution.

Let the random variable \(V_{1}\) represents the strength of the component or system, that is, the maximum load or pressure a component or system can endure and let the random variable \(V_{2}\) denotes the stress level, that is, the actual load that the component experiences in operation. Then stress-strength reliability \(R\)(say) is the probability that the strength exceeds the stress and is mathematically expressed as

Under the assumption that \(V_{1}\) and \(V_{2}\) follows DUS Rayleigh distribution with parameters \(\tau_{1}\) and \(\tau_{2}\) respectively. Therefore, the stress-strength reliability can be written as \(R = \int\limits_{0}^{\infty } {\left( {\frac{e}{e - 1}} \right)\frac{v}{{(\tau_{2} )^{2} }}e^{{ - \left( {\frac{{v^{2} }}{{2 (\tau_{2} )^{2} }} + e^{{ - \frac{{v^{2} }}{{2 (\tau_{2} )^{2} }}}} } \right)}} } \left( {\frac{e}{e - 1}} \right) \left( {1 - e^{{ - e^{{ - \frac{{v^{2} }}{{2 (\tau_{1} )^{2} }}}} }} } \right)dv\).

After simplification we get

Order statistics

Let \(V_{1} , V_{2} , V_{3} ,..., V_{n}\) be a random sample of size \(n\) attaining the values \(v_{1} , v_{2} , v_{3} , ..., v_{n}\) from DUS Rayleigh distribution. Order statistics simply represents the ordered values of the data set. So, the ordered statistics for the given random sample is enumerated as

where,

\(V_{(1)} = Min \left( {V_{1} , V_{2} , V_{3} ,..., V_{n} } \right)\), and \(V_{(n)} = Max \left( {V_{1} , V_{2} , V_{3} ,..., V_{n} } \right)\).

The PDF of \(i^{th}\) ordered statistics for DUS Rayleigh is given by

On using Eqs. (1) and (2), then the above expression of the PDF of \(i^{th}\) ordered statistics becomes

On substituting \(r = 1, {\text{and}} n\) in Eq. (4), we get the PDF of minimum and maximum ordered statistics respectively for the given model and are expressed as.

Entropy measures

Entropy is a fundamental concept in statistics and information theory that provides a quantitative measure of uncertainty, randomness, and information content associated with a random variable or phenomenon. It represents the average amount of information produced by a stochastic source of data and reflects how unpredictable the variable is. Entropy measures find a broad range of application across various domains such as, machine learning, information theory, cluster analysis, economics and finance, engineering and many more, making it essential for both theoretical exploration and practical implementation in various domains. In this section, Renyi and Tsalli’s measure of entropy are explored based on DUS Rayleigh distribution.

The Renyi entropy40 for the given model is

The Tsalli’s entropy41 for the given distribution is given by

After simplification we get

Estimation methods

In this section various methods for estimating parameter of the explored probability distribution will be discussed. These methods include maximum likelihood estimation, Anderson–Darling estimation, Right-tailed Anderson–Darling estimation, Left-tailed Anderson–Darling estimation, Cramer-von Mises estimation, least squares estimation, weighted least squares estimation, maximum product of spacing estimation, minimum spacing absolute distance estimation and minimum spacing absolute log-distance estimation. All these methods provide an estimate of the parameter either by maximizing or minimizing an objective function. The objective function that is to be maximized or minimized is a function of parameter and the random samples drawn from the given population.

Maximum likelihood estimation

Under maximum likelihood estimation method, the likelihood function of a random sample is the required objective function that is to be maximized to estimate unknown parameter. Consider a random sample \(V_{1} , V_{2} , V_{3} ,\ldots, V_{m}\) of size \(m\) assuming the values \(v_{1} ,v_{2} , v_{3} ,\ldots, v_{m}\) respectively drawn from DUS Rayleigh distribution. Then the likelihood function of \(V_{1} , V_{2} , V_{3} ,\ldots, V_{m}\) defined as joint probability density function of \(V_{1} , V_{2} , V_{3} ,\ldots, V_{m}\) is given by

Taking logarithm on both sides of the above expression we get

Differentiating above equation partially with respect to \(\tau\) and equating to zero we get

On solving the above equation, we obtain estimate of the given parameter of the distribution under maximum likelihood estimation method.

Anderson–Darling estimation

The Anderson–Darling estimation method minimizes the Anderson–Darling statistic to find the value of parameter that provides best fit to the distribution. For a random sample \(V_{1} , V_{2} , V_{3} ,\ldots, V_{m}\) of size \(m\) taking the values \(v_{1} ,v_{2} , v_{3} ,\ldots, v_{m}\) and are arranged in ascending order as \(V_{(1)} , V_{(2)} , V_{(3)} ,\ldots, V_{(m)}\) with theoretical CDF, the Anderson–Darling statistic denoted by \(A_{D} (v; \tau )\)(say) is given by

Right-tailed and left-tailed Anderson–Darling estimations

Like the Anderson–Darling estimation method, the right-tailed Anderson–Darling and left-tailed Anderson–Darling estimation methods provide an estimate of the parameter that minimizes the modified Anderson–Darling statistic. For right-tailed Anderson–Darling estimation method, the objective function to be minimized is

Similarly, for left-tailed Anderson–Darling estimation method, the objective function to be minimized is

Cramer-von Mises estimation

In the Cramer-von Mises estimation method, the parameters of the underlying distribution are estimated by minimizing the Cramer-von Mises Statistic used to study goodness-of-fit of the distribution. Given a random sample sorted in increasing order with theoretical CDF, the Cramer-von Mises statistics is expressed as

Least squares estimation

In the case of least squares estimation method for probability distributions, parameters are estimated by minimizing the squared differences between the empirical cumulative distribution and the theoretical cumulative distribution of the chosen distribution. The objective function in this case which is to minimized is mathematically expressed as

Weighted least squares estimation

Weighted least square estimation method is an extension of least squares estimation method. The objective function that is to be minimized in order to obtain estimate of the unknown parameter under weighted least squares estimation method is equal to

Maximum product of spacing estimation

The maximum product of spacing estimation method aims to maximize the product of spacing-the differences between successive values of the theoretical cumulative distribution function evaluated at the ordered data points. The method of maximum product of spacing provides an estimate of unknown parameter by maximizing the following objective function

where \(\Psi_{l} = G_{{V_{(l)} }} (v; \tau ) - G_{{V_{(l - 1)} }} (v; \tau )\) and \(G_{{V_{(l)} }} (v; \tau )\) is the CDF of \(l^{th}\) ordered statistics.

Minimum spacing absolute distance and Minimum spacing absolute log-distance estimation

Similar to maximum product of spacing estimation method, the objective function that is to be minimized for obtaining estimate of the parameter under minimum spacing absolute distance estimation method and minimum spacing absolute log-distance estimation method respectively are given in Eqs. (5) and (6) as below:

where \(\Psi_{l} = G_{{V_{(l)} }} (v; \tau ) - G_{{V_{(l - 1)} }} (v; \tau )\) and \(G_{{V_{(l)} }} (v; \tau )\) is the CDF of \(l^{th}\) ordered statistics.

And,

Data analysis and real data application

Simulation study

In this section, we will conduct simulation study to understand the behavior and compare performance of all the estimating approaches discussed in “Estimation methods” section for estimating parameter of the DUS Rayleigh distribution. For the comparative study, we calculate bias, mean squared error (MSE) and mean relative error (MRE) for the estimate of the parameter under different estimation technique for various samples generated using Monte Carlo simulation in R-software42. We investigated the performance of the distribution’s parameter estimator across a variety of sample sizes, including 25, 50, 75, 100, 150, 250, 400, and 600. By examining both small and large sample sizes, we aimed to assess how the estimator behaves across different data scales. Larger sample sizes are particularly valuable for evaluating the stability and reliability of the estimator, as they provide more consistent estimates by reducing the influence of random variability and outliers. They also offer insights into the model’s ability to generalize to larger, more complex datasets. Although smaller sample sizes, such as 25, 50 may be sufficient for initial assessments, they may not fully capture the complexities of the model’s behaviour in more varied datasets. Consequently, our study sought to provide a comprehensive evaluation by incorporating a broad range of sample sizes, allowing us to draw more robust and generalizable conclusions about the estimator’s accuracy and reliability across different data scales.

The simulation process is repeated 1000 times to generate various samples of size 25, 50, 75, 150,250, 400 and 600 from DUS Rayleigh distribution using Inverse CDF technique. The initial parameter values-0.5, 0.75, 1.25, 1.75, 2.5, and 4.0 are chosen to evaluate the flexibility and robustness of different estimation methods under varying conditions. The rationale behind selecting these values allows us to test whether an estimation method performs consistently across different scales. Some estimation methods may perform well for small parameters but struggle with larger values. A flexible estimator should maintain accuracy and stability across the entire range of chosen parameter values. From this simulation study, the values of bias, MSE, and MRE of estimated parameter under different estimations approaches are presented in Tables 2, 3, 4, 5, 6, 7 and are computed using following mathematical expressions respectively:

Where, \(k\), is number of iterations in the simulation process, and \(\hat{\tau }\) is an estimate of the parameter under particular estimation method. The standard error of the parameter estimate can be calculated as

In terms of MSE and Bias, standard error of the parameter estimate can be also expressed as \(SE(\hat{\tau }) = \sqrt {MSE - (Bias)^{2} }\).

The estimation methods used in this simulation study to estimate bias, MSE, and MRE of estimated parameter are represented as:

\(\Delta_{1}\) = Maximum likelihood estimation method,

\(\Delta_{2}\) = Anderson–Darling estimation method,

\(\Delta_{3}\) = Cramer-von Mises estimation method,

\(\Delta_{4}\) = Maximum product of spacing estimation method,

\(\Delta_{5}\) = Least squares estimation method,

\(\Delta_{6}\) = Weighted least square estimation method,

\(\Delta_{7}\) = Right-tailed Anderson–Darling estimation method,

\(\Delta_{8}\) = Left-tailed Anderson–Darling estimation method,

\(\Delta_{9}\) = Minimum spacing absolute distance estimation method, and

\(\Delta_{10}\) = Minimum spacing absolute log-distance estimation method.

To compare the performance of different estimation approaches, we have assigned ranks to the values of bias, MSE, and MRE on the basis of their value in decreasing order for different estimation methods corresponding to same sample size.

Now, in Table 8, we separately present the ranks (partial ranks) assigned to summed ranks of bias, MSE, and MRE in aggregate under different estimation methods according to the decreasing order corresponding to same sample size. The overall rank for the estimation method is obtained and is mentioned in last row of Table 8.

It can be observed from Tables 2, 3, 4, 5, 6, 7, that the bias of the estimated parameter under each estimation method decreases as the sample size increases, that is, the estimated parameter gets closer to its true value. Furthermore, the value of MSE and MRE also show downward trend as we increase sample size. This declining trend ensures that the estimated parameter value becomes consistent and stable with increased sample size.

The partial ranks and the overall ranks of the various estimation methods are presented in Table 8. From the overall ranks in Table 8, we observed that the rank of estimation method \(\Delta_{1}\) is minimum among all methods followed by \(\Delta_{7}\), \(\Delta_{4}\), \(\Delta_{2}\),\(\Delta_{6}\), \(\Delta_{3}\), \(\Delta_{5}\), \(\Delta_{10}\), \(\Delta_{8}\), and \(\Delta_{9}\). So, we can conclude that the maximum likelihood parameter estimation method \(\Delta_{1}\) is superior for estimating parameter of DUS Rayleigh distribution with minimum bias, MSE and MRE as compared to other methods mentioned. Moreover, in Fig. 7, Fig. 8 and Fig. 9, we have graphically presented the bias, MSE, and MRE respectively of the estimated parameter under various estimation techniques computed in Table 1 for different sample values. From these figures, it can be observed that bias, MSE and MRE of the estimated parameter reduces as we increase sample size. This graphical representation also indicates that maximum likelihood estimation method is better for estimating parameter of DUS Rayleigh distribution as compared to other estimation methods because of minimum bias, MSE and MRE.

Real data application

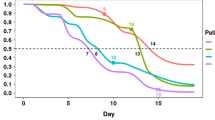

In this section we will demonstrate the performance and significance of the newly explored probability distribution namely DUS Rayleigh distribution (DRD) for analysing real life scenarios. The real-life dataset used in this study is based on COVID-19 about Italy recorded for 111 days from 1 April to 20 July 2020 and the values in the data represents the ratio between daily new deaths and daily new cases. The given dataset presented in Table 9was used by Hassan et al.43 and was also studied by Hossam et al.6

To study whether the developed distribution provides better fit or not for analysing given dataset, various information criterions will be adapted and the performance of the distribution will be compared with other well-known distributions present in the literature. These distributions include Rayleigh distribution (RD), Weibull distribution (WD), Alpha Power Lindely distribution (APLD), and novel Alpha Power Gumbel distribution (NAPGD).

The information criterions used to measure the performance and determine better fit model for modelling real life dataset include Log-likelihood (-2logL), Akaike information criterion (AIC), Corrected Akaike information criterion (CAIC), Bayesian information criterion (BIC), Hannan-Quinn information criterion (HQIC), Cramér-von Misses Statistic (CVMS), Anderson Darling Statistic (ADS), Kolmogorov Smirnov (K-S) test, and K-S Test P-value. These measures are defined as

\({\text{AIC}} = - 2\ln (L) + 2\Theta\), \({\text{CAIC}} = {\text{AIC}} + \frac{{2\Theta^{2} + 2\Theta }}{n - \Theta - 1}\), \({\text{BIC}} = - 2\ln (L) + \Theta \ln (n)\), and \({\text{HQIC}} = - 2\ln (L) + 2\Theta \ln \left\{ {\ln (n)} \right\}\).

where \(\Theta\) represents number of parameters involved in the model and \(n\) denotes the number of observations in the given data set.

The summary statistics for the given data set are presented in Table 10. In Table 11, we have summarized the maximum likelihood estimator (MLE) and corresponding standard error of the parameter(s) of the distributions mentioned above for underlying real-life dataset. The performance criterion values for each of the distribution are highlighted in Table 12.

On the basis of information criterion measures, the distribution with least value of these measures offers best fit for modelling given real life dataset. From Table 12, it can be observed that DUS Rayleigh distribution (DRD) has minimum information criterion values compared to Rayleigh distribution (RD), Weibull distribution (WD), Alpha Power Lindely distribution (APLD), and novel Alpha Power Gumbel distribution (NAPGD). So, DUS Rayleigh distribution provides better fit than these distributions. Also, the minimum values of goodness-of-fit measures Cramér-von Misses Statistic (CVMS), Anderson Darling Statistic (ADS) and Kolmogorov Smirnov (K-S) test together with its p-value suggest that DUS Rayleigh distribution shows a very good fit than base distribution as well as other well-known distributions. Furthermore, the better performance of the DUS Rayleigh distribution is also explained by various graphical plots as shown in Figs. 10, 11, 12, 13, 14, 15, and 16. The behaviour of estimated CDF, estimated survival function, P-P plot, Q-Q plot, and TTT-plot explains that the newly developed model is superior and suitable for modelling given dataset. Figure 16 represents Failure function plot of DRD based on given data set.

Conclusion

This paper presents the DUS Rayleigh distribution as an extension of the standard Rayleigh distribution developed through DUS transformation approach. In this study, the essential statistical properties associated with the developed distribution are examined. To derive accurate and reliable parameter estimate for the introduced model, the multiple parameter estimation strategies are discussed. Through extensive simulation studies, we assess the efficiency and stability of these methods, ultimately identifying the maximum likelihood estimation method (\(\Delta_{1}\)) as the most reliable approach characterized by its low bias, minimal MSE, and reduced MRE. The utility of the DUS Rayleigh distribution is further demonstrated through its application to empirical data, specifically in the context of the COVID-19 pandemic. Comparative analysis against several well-established distributions including Rayleigh distribution (RD), Weibull distribution (WD), Alpha Power Lindely distribution (APLD), and novel Alpha Power Gumbel distribution (NAPGD), indicate that the DUS Rayleigh distribution provides a superior fit, effectively capturing the underlying data complexities. Such flexibility of the DUS Rayleigh distribution makes it a significant tool across various disciplines. Its effectiveness extends beyond medical data analysis to applications in risk management, environmental research, engineering projects, and financial modeling.

Future research could explore the theoretical development of the DUS Rayleigh distribution, investigating potential extensions, generalizations, and alternative formulations. This could include in-depth analysis of Bayesian approaches for parameter estimation, as well as the integration of survival analysis techniques under various censoring conditions and reliability modelling in scenarios involving risk assessment and decision-making. Such investigations would not only enhance the theoretical understanding of the distribution but also expand its real-world applications. Comparative analyses with other complex distributions could further highlight its versatility and adaptability. Furthermore, fostering interdisciplinary collaborations, particularly with fields like actuarial science, epidemiology, bioinformatics, machine learning, economics, engineering, and environmental sciences could lead to significant advancements in the practical implementation and broader adoption of the DUS Rayleigh distribution, offering new avenues for solving complex, domain-specific challenges.

Data availability

The data that supports the findings of this study are available within the article.

References

Almongy, H. M., Almetwally, E. M., Aljohani, H. M., Alghamdi, A. S. & Hafez, E. A new extended Rayleigh distribution with applications of COVID-19 data. Results Phys. 23, 104012. https://doi.org/10.1016/j.rinp.2021.104012 (2021).

Sindhu, T. N., Shafiq, A. & Al-Mdallal, Q. M. On the analysis of number of deaths due to COVID−19 outbreak data, using a new class of distributions. Results Phys. 21, 103747. https://doi.org/10.1016/j.rinp.2020.103747 (2021).

Sindhu, T. N., Shafiq, A. & Al-Mdallal, Q. M. Exponentiated transformation of Gumbel Type-II distribution for modeling COVID-19 data. Alex. Eng. J. 60(1), 671–689. https://doi.org/10.1016/j.aej.2020.09.060 (2021).

Liu, X. et al. Modeling the survival times of the COVID-19 patients with a new statistical model: A case study from China. PLoS ONE 16(7), e0254999. https://doi.org/10.1371/journal.pone.0254999 (2021).

Kilai, M., Waititu, G. A., Kibira, W. A., El-Raouf, M. A. & Abushal, T. A. A new versatile modification of the Rayleigh distribution for modeling COVID-19 mortality rates. Results Phys. 35, 105260. https://doi.org/10.1016/j.rinp.2022.105260 (2022).

Hossam, E. et al. A novel extension of Gumbel distribution: Statistical inference with COVID-19 application. Alex. Eng. J. 61(11), 8823–8842. https://doi.org/10.1016/j.aej.2022.01.071 (2022).

Gemeay, A. M. et al. General two-parameter distribution: Statistical properties, estimation, and application on COVID-19. PLoS ONE 18(2), e0281474. https://doi.org/10.1371/journal.pone.0281474 (2023).

Alomair, A. M., Ahmed, M., Tariq, S., Ahsan-Ul-Haq, M. & Talib, J. An Exponentiated XLindley distribution with properties, inference and applications. Heliyon 10(3), e25472. https://doi.org/10.1016/j.heliyon.2024.e25472 (2024).

Dey, S., Kumar, D., Ramos, P. L. & Louzada, F. Exponentiated Chen distribution: Properties and estimation. Commun. Stat. Simul. Comput. 46(10), 8118–8139. https://doi.org/10.1080/03610918.2016.1267752 (2017).

Dey, S., Alzaatreh, A., Zhang, C. & Kumar, D. A new extension of generalized Exponential distribution with application to ozone data. Ozone Sci. Eng. 39(4), 273–285. https://doi.org/10.1080/01919512.2017.1308817 (2017).

Rather, A. A. & Subramanian, C. Exponentiated Mukherjee-Islam distribution. J. Stat. Appl. Probab. 7(2), 357–361. https://doi.org/10.18576/jsap/070213 (2018).

Rather, A. A. & Özel, G. The Weighted Power Lindley distribution with applications on the life time data. Pak. J. Stat. Oper. Res. 225–237 https://doi.org/10.18187/pjsor.v16i2.2931 (2020).

Rather, A. A. & Ozel, G. A new length-biased power Lindley distribution with properties and its applications. J. Stat. Manag. Syst. 25(1), 23–42. https://doi.org/10.1080/09720510.2021.1920665 (2021).

Rather, A. A., Subramanian, C., Al-Omari, A. I. & Alanzi, A. R. A. Exponentiated Ailamujia distribution with statistical inference and applications of medical data. J. Stat. Manag. Syst. 25(4), 907–925. https://doi.org/10.1080/09720510.2021.1966206 (2022).

Singh, B., Alam, I., Rather, A. A. & Alam, M. Linear combination of order statistics of exponentiated Nadarajah-Haghighi distribution and their applications. Lobachevskii J. Math. 44(11), 4839–4848. https://doi.org/10.1134/s1995080223110318 (2023).

Ahmad, A. et al. Novel Sin-G class of distributions with an illustration of Lomax distribution: Properties and data analysis. AIP Adv. https://doi.org/10.1063/5.0180263 (2024).

Qayoom, D. & Rather, A. A. Weighted transmuted Mukherjee-Islam distribution with statistical properties. Reliab. Theory Appl. 19(2(78)), 124–137. https://doi.org/10.24412/1932-2321-2024-278-124-137 (2024).

Qayoom, D. & Rather, A. A. A comprehensive study of length-biased transmuted distribution. Reliab. Theory Appl. 19(2(78)), 291–304. https://doi.org/10.24412/1932-2321-2024-278-291-304 (2024).

Kumar, D., Singh, U. & Singh, S. K. A method of proposing new distribution and its application to bladder cancer patients data. J. Stat. Appl. Probab. Lett. 2(3), 235–245 (2015).

Tripathi, A., Singh, U. & Singh, S. K. Inferences for the DUS-exponential distribution based on upper record values. Ann. Data Sci. 8(2), 387–403. https://doi.org/10.1007/s40745-019-00231-6 (2019).

AbuJarad, M. H., Khan, A. A. & AbuJarad, E. S. A. Bayesian survival analysis of generalized DUS exponential distribution. Austrian J. Stat. 49(5), 80–95. https://doi.org/10.17713/ajs.v49i5.927 (2020).

Kavya, P. & Manoharan, M. On a generalized lifetime model using DUS transformation. In Infosys Science Foundation Series, 281–291 https://doi.org/10.1007/978-981-15-5951-8_17 (2020).

Gül, H. H., Acıtaş, Ş., Şenoğlu, B., andBayrak, H. DUS inverse Weibull distribution and its applications. In 19th International Symposium on Econometrics, Operation Research and Statistics, Antalya, Turkey, 743–745 (2018).

Deepthi, K. S. & Chacko, V. M. An upside-down bathtub-shaped failure rate model using a DUS transformation of Lomax distribution. In CRC Press eBooks-Stochastic Models in Reliability Engineering, Taylor & Francis Group, 81–100. https://doi.org/10.1201/9780429331527-6 (2020).

Payyoor, G. & Chacko, V. M. DUS transformation of Inverse Weibull distribution: An upside-down failure rate model. Reliab. Theory Appl. 16(2(62)), 58–71. https://doi.org/10.24412/1932-2321-2021-262-58-71 (2021).

Karakaya, K., Kinaci, I., Kus, C. & Akdogan, Y. On the DUS-Kumaraswamy distribution. ISTATISTIK J. Turk. Stat. Assoc. 13(1), 29–38 (2021).

Thomas, B. & Chacko, V. M. Power generalized DUS transformation of exponential distribution. Int. J. Stat. Reliab. Eng. 9(1), 1–12. https://doi.org/10.48550/arxiv.2111.14627 (2022).

Gül, H. H., Acıtaş, Ş, Bayrak, H. & Şenoğlu, B. DUS inverse Weibull distribution and parameter estimation in regression model. Süleyman Demirel Üniversitesi Fen Bilimleri Enstitüsü Dergisi 27(1), 42–50. https://doi.org/10.19113/sdufenbed.1107862 (2023).

Qayoom, D., Rather, A. A., Alsadat, N., Hussam, E. & Gemeay, A. M. A new class of Lindley distribution: System reliability, simulation and applications. Heliyon https://doi.org/10.1016/j.heliyon.2024.e38335 (2024).

Rayleigh, L. On the resultant of a large number of vibrations of the same pitch and of arbitrary phase. Lond. Edinb. Dublin Philos. Mag. J. Sci. 10(60), 73–78. https://doi.org/10.1080/14786448008626893 (1880).

Kundu, D. & Raqab, M. Z. Generalized Rayleigh distribution: different methods of estimations. Comput. Stat. Data Anal. 49(1), 187–200. https://doi.org/10.1016/j.csda.2004.05.008 (2005).

MirMostafaee, S. M. T. K., Mahdizadeh, M. & Lemonte, A. J. The Marshall-Olkin extended generalized Rayleigh distribution: Properties and applications. Commun. Stat. Theory Methods 46(2), 653–671. https://doi.org/10.1080/03610926.2014.1002937 (2016).

Marshall, A. W. & Olkin, I. A new method for adding a parameter to a family of distributions with application to the exponential and Weibull families. Biometrika 84(3), 641–652. https://doi.org/10.1093/biomet/84.3.641 (1997).

Rashwan, N. I. A note on Kumaraswamy exponentiated Rayleigh distribution. J. Stat. Theory Appl. 15(3), 286–295. https://doi.org/10.2991/jsta.2016.15.3.8 (2016).

Ateeq, K., Qasim, T. B. & Alvi, A. R. An extension of Rayleigh distribution and applications. Cogent Math. Stat. 6(1), 1622191. https://doi.org/10.1080/25742558.2019.1622191 (2019).

Bantan, R. A. et al. Some new facts about the Unit-Rayleigh distribution with applications. Mathematics 8(11), 1954. https://doi.org/10.3390/math8111954 (2020).

Falgore, J. Y., Isah, M. N. & Abdulsalam, H. A. Inverse Lomax-Rayleigh distribution with application. Heliyon 7(11), e08383. https://doi.org/10.1016/j.heliyon.2021.e08383 (2021).

Ahmad, A. et al. New hyperbolic Sine-generator with an example of Rayleigh distribution: Simulation and data analysis in industry. Alex. Eng. J. 73, 415–426. https://doi.org/10.1016/j.aej.2023.04.048 (2023).

Tripathi, H. & Agiwal, V. A new version of univariate Rayleigh distribution: Properties, estimation and it’s application. Int. J. Syst. Assur. Eng. Manag. https://doi.org/10.1007/s13198-024-02543-0 (2024).

Alfréd, R. On measures of information and entropy. In Proceedings of the fourth Berkeley Symposium on Mathematics, Statistics and Probability 1960, 547–561 (1961).

Constantino, T. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 52(1–2), 479–487 (1988).

R Core Team. R: A Language and Environment for Statistical Computing. https://www.R-project.org/ (R Foundation for Statistical Computing, 2023).

Hassan, A. S., Almetwally, E. M. & Ibrahim, G. M. Kumaraswamy Inverted Topp-Leone distribution with applications to COVID-19 data. Comput. Mater. Continua 68(1), 337–358. https://doi.org/10.32604/cmc.2021.013971 (2021).

Acknowledgements

Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R734), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Author information

Authors and Affiliations

Contributions

All authors have worked equally to write and review the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Qayoom, D., Rather, A.A., Alqasem, O.A. et al. Development of a novel extension of Rayleigh distribution with application to COVID-19 data. Sci Rep 15, 18535 (2025). https://doi.org/10.1038/s41598-025-03645-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-03645-w