Abstract

This study refines the Extended Unified Theory of Acceptance and Use of Technology (UTAUT2) to explore the factors influencing the adoption and utilization of artificial intelligence (AI) by Chinese mathematics teachers in STEM education, aiming to promote broader integration of AI within this domain. Utilising structural equation modelling (SEM) on survey data collected from 503 in-service mathematics teachers across China, the findings indicate that performance expectancy (PE), hedonic motivation (HM), and price value (PV) significantly affect teachers’ behavioural intention (BI). Moreover, the study finds that effort expectancy (EE), facilitating conditions (FC), and price value (PV) significantly influence teachers’ actual usage behaviour (UB). Notably, price value emerges as a crucial factor influencing both BI and UB, underscoring the importance teachers place on balancing the benefits of AI teaching tools with the time investment required for their adoption.

Introduction

Recently, the application of AI in various fields has garnered attention, particularly in STEM education, where it is fostering a transformative paradigm shift1,2,3,4. With the advancement of machine learning and large language models (LLMs), artificial intelligence technologies offer interactive learning opportunities that significantly enhance students’ creativity, critical thinking, collaboration, and problem-solving skills5,6,7,8. This convergence of artificial intelligence and STEM education redefines the roles of educators from mere knowledge transmitters to effective learning facilitators and collaborators1,9,10, while empowering students to become active participants in constructing and applying knowledge independently.

However, the successful integration of AI into education ultimately depends on teachers’ acceptance and willingness to adopt these technologies6,11,12,13,14. Mathematics, as the foundation of all STEM disciplines, is critical in this transformation; however, mathematics is often underrepresented in STEM education research15,16,17,18. Current research primarily focuses on assessing mathematics teachers’ readiness to adopt technology19,20,21. However, there is a notable gap in attention to their acceptance of artificial intelligence specifically within an interdisciplinary STEM framework. Consequently, this study seeks to address the significant research gap by examining the principal factors that influence mathematics teachers’ acceptance and adoption of artificial intelligence technologies in the field of STEM education.

Extensive research has explored the adoption and continued use of educational technology by higher education faculty. For example, Jeyaraj et al.22 integrated the TAM, TPACK, and UTAUT models to analyze the factors influencing sustained technology use among higher education instructors in emerging economies. Similarly, Al-Adwan et al.23 focused on educators’ perceptions, experiences, and barriers to using educational technologies. However, the present study shifts the focus to the Chinese context, specifically investigating K–12 mathematics teachers’ initial intentions to adopt and use artificial intelligence (AI) technologies within STEM education. At present, the application of AI in STEM teaching at the K–12 level in China remains in its early stages, and teachers generally lack sufficient understanding, application skills, and integration capabilities related to AI2,24. Therefore, identifying the key factors that influence mathematics teachers’ adoption of AI technologies is of great theoretical and practical significance for promoting innovation in STEM education and achieving effective integration of AI technologies.

This study aims to address two core research questions: (1) What are the key determinants of K–12 mathematics teachers’ intention to adopt AI technologies in STEM education? (2) What factors influence their actual acceptance behavior when integrating AI into interdisciplinary STEM teaching?

To explore these underlying mechanisms, this study adopts the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2), a comprehensive theoretical framework that has been widely validated in educational research contexts25,26. UTAUT2 extends the original UTAUT model27 and is particularly well-suited for understanding voluntary technology adoption in learning environments28.

This study aims to contribute to the field in three ways: (1) identifying the key factors that influence mathematics teachers’ adoption of AI technologies in STEM education; (2) providing theoretical support for targeted professional development programs to enhance the application of AI in STEM teaching; and (3) offering practical recommendations for policymakers and technology developers to better support mathematics teachers in AI-driven educational environments.

Literature review and hypothesis development

The role and application of AI in STEM education

In today’s digital landscape, artificial intelligence (AI) is emerging as a transformative force in education, particularly within STEM education29. AI integration in STEM education reshapes traditional teaching by incorporating intelligent technologies and data-driven strategies6,30,31,32,33. AI and STEM education are inherently connected: while STEM provides a context for technological literacy development, AI serves as a catalyst that enhances interdisciplinary learning and supports critical and computational thinking34,35. This synergy enables students to actively engage with AI tools to explore cross-disciplinary concepts and apply knowledge in practical, meaningful ways.

Research has examined AI in STEM education. Xu and Ouyang2 reviewed 71 studies from 2011 to 2021, identifying six application areas: learning prediction, intelligent tutoring systems, behavior monitoring, automated assessment, personalized learning recommendations, and teaching management. Their findings revealed that most applications target higher education, especially in computing and engineering, enhancing student engagement and critical thinking. However, K–12 implementation, particularly in mathematics and science, faces challenges like inadequate infrastructure, low teacher proficiency, and insufficient long-term effectiveness data. Similarly, Yang et al.3 analyzed 186 papers from 2013 to 2023, outlining four integration paths: enhancing inquiry-based learning, merging with emerging technologies (e.g., AR/VR), applying practical AI tools, and improving student motivation. Yet, they noted that K–12 research is sparse and regionally uneven due to technological constraints.

Mathematics teachers’ perceptions and adoption of AI in STEM

Research shows that the adoption of AI among mathematics teachers is shaped by personal psychological factors (such as self-efficacy and attitudes toward AI) as well as external conditions (including policy support, availability of infrastructure, and cultural context)11,12. Wang et al.36 noted that self-efficacy and perceived usefulness strongly affect teachers’ adoption behaviors. Furthermore, differences across educational settings and countries are evident, as indicated by Xu and Ouyang2, who found that K–12 mathematics classrooms significantly lag behind higher education in AI implementation due to limited teacher readiness and institutional support.

Despite generative AI’s promising potential in personalized learning and student engagement37, mathematics teachers often face ongoing challenges, such as inadequate training, insufficient technical literacy, limited awareness of AI applications, and resource constraints38,39,40,41. These challenges are especially pronounced in K–12 contexts, emphasizing a significant gap in existing research, which has largely overlooked AI integration within this educational level and discipline.

Teachers’ acceptance of emerging technologies significantly influences their successful integration into classroom teaching. Previous research frequently employs theoretical frameworks such as the Technology Acceptance Model (TAM) and the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2) to explore teachers’ adoption behaviors towards Artificial Intelligence (AI). For instance, Nikou and Economides42 found that perceived ease of use, a core TAM factor, significantly affects teachers’ intention to use AI, alongside external support and outcome quality. Wang et al.36 indicated that teachers’ self-efficacy, expected benefits, and attitudes toward AI strongly influence their adoption decisions. Using the UTAUT2 model, Wijaya et al.43 identified performance expectancy as the primary factor shaping Chinese pre-service mathematics teachers’ intention to use AI chatbots, indicating a focus on practical teaching benefits over social influences or habitual factors. Similarly, Al Darayseh44, applying TAM, concluded that teachers generally show high acceptance of classroom AI integration, with minimal impact from anxiety.

As generative AI evolves, mathematics teachers increasingly recognize its potential in STEM education. Generative AI can enhance learner engagement, provide personalized learning experiences, track student performance, and alleviate anxiety, thereby promoting inclusive education37. However, teachers remain concerned about AI tools’ maintenance and costs, especially in budget-limited institutions. Additionally, integrating AI with STEM teaching significantly improves students’ capability development compared to traditional methods34, indicating AI’s critical role in transforming STEM educational practices.

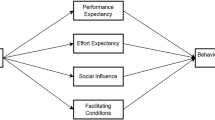

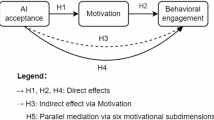

The literature review shows that various factors influence mathematics teachers’ acceptance of AI technologies in STEM education. This study utilizes the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2) as its framework. Developed by Venkatesh et al.45, UTAUT2 extends the original UTAUT model and is widely employed to examine technology adoption in educational contexts. The model has been extensively used to analyze user behavior across various technologies28,46. This study examines the effects of the key UTAUT2 constructs (Performance Expectancy, Effort Expectancy, Social Influence, Hedonic Motivation, Habit, Facilitating Conditions, and Price Value), together with Perceived Risk, on mathematics teachers’ behavioral intentions and usage behavior regarding AI in STEM education. Figure 1 illustrates the theoretical model.

Research hypotheses

The hypotheses proposed in this study are shown in Fig. 1.

Performance Expectancy (PE) is considered one of the core predictors in the UTAUT model47. Performance expectancy refers to the extent to which an individual believes that using technology will enhance their performance in their job or tasks45. This interpretation closely relates to the concept of perceived usefulness found in the Technology Acceptance Model48. Previous research on technology acceptance consistently demonstrates that Performance Expectancy significantly influences an individual’s willingness to embrace new technologies49. Therefore, this study hypothesizes that performance expectancy positively influences mathematics teachers’ behavioral intentions (H1a) and usage behavior (H2a) when adopting AI technologies in STEM education.

Effort Expectancy (EE) refers to the ease of use associated with technology27. Research has shown that when teachers view artificial intelligence as easy to use, they are more likely to integrate it into their teaching practices50. AI helps teachers identify students’ needs and optimize strategies via data analysis and feedback, encouraging adoption51. Therefore, this study hypothesizes that effort expectancy positively influences mathematics teachers’ behavioral intentions (H1b) and usage behavior (H2b) regarding the adoption of AI technologies in STEM education.

Social Influence (SI) refers to the degree to which individuals believe that important others, such as colleagues or supervisors, think they should adopt new technology27. Social norms and peer pressure significantly influence technology adoption. This study defines social influence as the extent to which teachers feel encouraged by peers and leaders to incorporate artificial intelligence into STEM teaching. It is a critical factor affecting technology usage behavior and positively impacts users’ willingness to adopt new technologies52. Therefore, this study hypothesizes that social influence positively affects mathematics teachers’ behavioral intentions (H1c) and usage behavior (H2c) in adopting AI technologies in STEM education.

Hedonic Motivation (HM) is a newly introduced variable in the UTAUT2 model, reflecting the enjoyment or satisfaction users experience when interacting with technology27. If using technology is enjoyable, it can strongly motivate its use26. The integration of AI into STEM education is emerging as a novel teaching approach for mathematics teachers, potentially offering a more enjoyable teaching experience for them and creating a more engaging and lively classroom for students6,53. Therefore, this study hypothesizes that hedonic motivation positively influences mathematics teachers’ behavioral intentions (H1d) and usage behavior (H2d) in adopting AI technologies in STEM education.

Perceived Risk (PR) refers to users’ subjective assessment of potential negative consequences or losses linked to the adoption of new technology. Many studies indicate that aspects related to perceived risk are variables that negatively affect consumers’ intentions to use technology54. Individuals perceive uncertainty before adopting a behavior, believing that it will not increase expected benefits55. Research shows that perceived risk significantly affects users’ behavioral intentions to use technology, and this impact is often negative56. Therefore, this study hypothesizes that Perceived Risk (PR) negatively influences mathematics teachers’ behavioral intentions (H1e) and usage behavior (H2e) in adopting AI technologies in STEM education.

Habit (HT) is defined as the degree to which an individual instinctively engages in certain behaviors as a result of prior learning27. It can also be viewed as user behavior that is directed toward future actions, representing a perceptual structure shaped by past experiences and outcomes47. The more familiar a person becomes with using technology, the more likely they are to continue its use57. Therefore, this study hypothesizes that Habit (HT) positively influences mathematics teachers’ behavioral intentions (H1f) and usage behavior (H2f) in adopting AI technologies in STEM education.

Facilitating Conditions (FC) refer to an individual’s perception of the availability of technical and organizational resources that enable and support the effective use of technology45. Research indicates that FC is a key factor influencing users’ behavioral intentions and actual usage behaviors. Organizations or environments that provide technical support and timely assistance can increase users’ acceptance of technological products, thereby fostering the adoption of new technologies25. Therefore, this study hypothesizes that Facilitating Conditions (FC) positively influence mathematics teachers’ behavioral intentions (H1g) and usage behavior (H2g) in adopting AI technologies in STEM education.

Price Value (PV) refers to the belief that the amount paid for a technological tool is matched by the benefits received. When the perceived benefits outweigh the perceived cost, Price Value (PV) is positive and is expected to positively influence Behavioral Intention (BI)45. In the integration of AI into STEM education, teachers often do not have to pay direct costs; the “price” they pay is instead the time spent learning, creating, and applying these materials. In this scenario, it is assumed that teachers will carefully weigh the relationship between benefits and costs. Since Price Value (PV) applies to voluntary practitioners58, this concept is suitable for scenarios involving the integration of AI into STEM education. Therefore, this study hypothesizes that Price Value (PV) positively influences mathematics teachers’ behavioral intentions (H1h) and usage behavior (H2h) in adopting AI technologies in STEM education.

Behavioral Intentions (BI) refer to the extent to which an individual is inclined, prepared, or willing to adopt and use a particular technology45. When users exhibit Behavioral Intentions (BI) while using innovative technologies, they are likely to engage in usage behaviors59. Behavioral Intentions can reasonably and accurately predict consumers’ future behaviors60. In this study, Behavioral Intentions represent the extent to which mathematics teachers are willing to integrate AI into their STEM teaching practices. Ultimately, Use Behavior pertains to the actual interaction with technology, reflecting how an individual’s intent to use the technology translates into practical application45. UTAUT2, developed by Venkatesh, explains how Use Behavior (UB) is influenced by Behavioral Intentions.

Use Behavior (UB) refers to the actual behavior of using AI in STEM Education, which can also be measured by the frequency of AI usage in STEM Education over a given period of time. In this study, Use Behavior is defined as mathematics teachers’ actual implementation and application of AI in their STEM education practices.

Previous research indicates that behavioral intention is a crucial determinant in predicting the actual use behavior of technology in education27. This relationship has been confirmed in studies examining teachers’ adoption of artificial intelligence in education61. Therefore, this study further hypothesizes that mathematics teachers’ behavioral intentions significantly and positively influence their actual use of AI in STEM education (H3).

Methodology

This study employs quantitative research methods to explore how to enhance K-12 mathematics teachers’ willingness and use behavior in integrating Artificial Intelligence (AI) into STEM education. In our experiments, informed consent was obtained from all participants, and all methods were performed in accordance with relevant guidelines and regulations.

Sampling

The sample included K–12 mathematics teachers from various age groups, education levels, and teaching stages (primary, junior high, junior vocational school, and high school), providing a diverse representation of frontline educators in China’s basic education system. This study conducted an online questionnaire survey to explore the attitudes and perceptions of Chinese mathematics teachers regarding the integration of Artificial Intelligence (AI) into STEM education. The survey was designed and distributed using the Wenjuanxing (https://www.wjx.cn) platform and was disseminated via social media tools such as WeChat to mathematics teachers in four provincial-level regions: Qinghai in Northwest China, Beijing in North China, Henan in Central China, and Hainan in Southern China. These regions were selected to ensure geographic diversity and to include perspectives from various economic development levels. Mathematics teachers in each region were recruited through convenience sampling. Although convenience sampling limits representativeness, the inclusion of economically diverse regions helps mitigate regional bias and increases the generalizability of findings across different educational contexts in China. Data collection occurred from October to December 2024 and covered both mathematics teachers who have used AI in STEM teaching activities and those who have not. To ensure participants fully understood the context of the survey, the questionnaire began with a video introduction and a written explanation about integrating AI in STEM education, and participants were asked to answer after reading the full explanation. A total of 503 questionnaires were retrieved. After excluding responses with missing data on core items or uniform answers (e.g., selecting “1” for all items), 474 valid samples were retained, resulting in a 94.2% effective response rate. Participants were grouped by age in ten-year intervals and were recruited on a voluntary basis. This sample size exceeds the minimum recommended for SEM and ensures sufficient power for analyzing the model’s eight latent constructs and 30 indicators62. The sample showed a significant gender imbalance, with far more female teachers (398) than male teachers (76). This imbalance is consistent with the overall population distribution in China’s teaching profession, where women hold the majority of teaching positions, especially in elementary and middle schools63. All respondents submitted their questionnaires digitally, and demographic details are provided in Table 1.
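The sampling figures above can be checked with a few lines of arithmetic. The sketch below uses only the counts reported in this section; the function name `effective_rate` is an illustrative choice, not part of the study's materials.

```python
# Counts taken from the sampling description in this section.
retrieved = 503           # questionnaires retrieved
valid = 474               # questionnaires retained after screening
female, male = 398, 76    # gender split of the valid sample


def effective_rate(valid_n: int, retrieved_n: int) -> float:
    """Share of retrieved questionnaires kept after screening, in percent."""
    return 100.0 * valid_n / retrieved_n


rate = effective_rate(valid, retrieved)
print(f"excluded responses: {retrieved - valid}")
print(f"effective response rate: {rate:.1f}%")  # 94.2%
print(f"female share of valid sample: {100 * female / valid:.1f}%")
```

The gender counts sum to the number of valid responses, which confirms that the reported split refers to the screened sample rather than to all retrieved questionnaires.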

Questionnaire design

A questionnaire survey method was used to meet the research objectives. The final questionnaire was organised into two sections: the first part collected demographic information, including teacher gender, age, region, K-12 education level, and frequency of AI usage (refer to Table 1). The second part, which is the main section of the questionnaire, based on the modified UTAUT2 model, included 30 items (3 items per construct) designed to assess mathematics teachers’ perceptions of the factors influencing their behavioural intentions and actual usage behaviours when using AI in STEM education. The survey employed a 5-point Likert scale, ranging from 1 (strongly disagree) to 5 (strongly agree). Drawing on previous research, all variables and the questionnaire were meticulously refined and revised43,45,64. The full list of questionnaire items is provided in the Supplementary Information.

Results

The preliminary data underwent screening using IBM SPSS 27, including tests for normality, reliability analysis, and exploratory factor analysis. Subsequently, confirmatory factor analysis and structural equation modeling were conducted using IBM AMOS 24 to validate the measurement model and examine the proposed relationships. The findings confirm that the reliability and validity of all constructs align with the recommended standards, ensuring the robustness of the measurement model. AMOS is software designed for Structural Equation Modeling65. SEM is a statistical method that allows researchers to explore intricate relationships between observed and latent variables. This study utilized AMOS software and adopted a covariance-based structural equation modeling (CB-SEM) approach, which is particularly suited for validating established theories. CB-SEM requires strict compliance with data assumptions, making it well-suited for hypothesis testing and theory validation. Conversely, PLS-SEM provides greater flexibility and is frequently employed for exploratory research and theory development66. If the goal of the research is to test and validate theories, then CB-SEM is the appropriate method to use. In contrast, for objectives focused on prediction and theory development, PLS-SEM is the better choice. Conceptually and practically, PLS-SEM resembles multiple regression analysis. Its primary aim is to maximize the explained variance in the dependent constructs while evaluating data quality based on the characteristics of the measurement model67.

Descriptive statistics and normality assessment

Descriptive statistics, including means, standard deviations, skewness, and kurtosis, were used to examine the distribution properties of the observed variables and to assess normality. Mean values ranged from 2.97 to 3.82, indicating general agreement with questionnaire statements. Standard deviations varied from 0.564 to 0.918, reflecting differing response dispersion across constructs. Skewness ranged from −0.808 to −0.057, and kurtosis from −0.545 to 1.01, all within the accepted thresholds68,69 of |skewness| < 1 and |kurtosis| < 2. These results suggest approximate univariate normality and meet assumptions for covariance-based structural equation modeling (CB-SEM), making the dataset suitable for further confirmatory modeling.
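As an illustration of the normality screening described above, the following sketch computes bias-adjusted sample skewness and excess kurtosis and compares them with the stated thresholds. It is a minimal example under assumptions: the estimator formulas are the adjusted forms commonly reported by statistical packages, the item responses are hypothetical, and the study's own values were produced in SPSS rather than with this code.

```python
from math import sqrt


def sample_skew_kurtosis(xs):
    """Bias-adjusted sample skewness and excess kurtosis of a list of numbers."""
    n = len(xs)
    if n < 4:
        raise ValueError("need at least 4 observations")
    mean = sum(xs) / n
    sd = sqrt(sum((x - mean) ** 2 for x in xs) / (n - 1))
    if sd == 0:
        raise ValueError("all observations are identical")
    z3 = sum(((x - mean) / sd) ** 3 for x in xs)
    z4 = sum(((x - mean) / sd) ** 4 for x in xs)
    skew = n / ((n - 1) * (n - 2)) * z3
    kurt = (n * (n + 1)) / ((n - 1) * (n - 2) * (n - 3)) * z4 \
        - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3))
    return skew, kurt


def within_thresholds(skew, kurt, max_skew=1.0, max_kurt=2.0):
    """Apply the |skewness| < 1 and |kurtosis| < 2 screening rule."""
    return abs(skew) < max_skew and abs(kurt) < max_kurt


# Hypothetical 5-point Likert responses for a single item (not study data).
responses = [4, 3, 5, 4, 2, 4, 3, 4, 5, 3, 4, 4]
skew, kurt = sample_skew_kurtosis(responses)
print(f"skewness={skew:.3f}, kurtosis={kurt:.3f}, ok={within_thresholds(skew, kurt)}")

# The most extreme values reported in this section also pass the rule.
print(within_thresholds(-0.808, 1.01))
```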

Reliability analysis

Table 3 presents the findings from the reliability analysis. The results show that all items have Corrected Item-Total Correlation (CITC) values exceeding 0.5, which indicates that each item correlates adequately with the remaining items of its construct, reflecting solid internal consistency70. All constructs have a Cronbach’s alpha value greater than 0.7, indicating good reliability of the questionnaire71. Furthermore, the Cronbach’s alpha for individual constructs does not improve upon removing any items, indicating good internal consistency in the data72. Therefore, the collected data are highly reliable and suitable for further analysis. The assessment of Common Method Bias (CMB) followed Kock’s73 framework, which indicates that CMB in Structural Equation Modeling (SEM) primarily stems from the measurement methods used. To evaluate this, a full collinearity test was conducted on all latent variables within the model, as shown in Table 3. The Variance Inflation Factors (VIF) derived from this analysis are below the critical threshold of 3.3 set by Kock73, signifying that CMB is not a major concern for this study and does not notably affect the model.
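To make the two reliability statistics concrete, the sketch below computes Cronbach's alpha and the corrected item-total correlation for one three-item construct. The response data are hypothetical and the helper names are illustrative; the study's own values come from SPSS.

```python
from math import sqrt
from statistics import mean, variance


def pearson(x, y):
    """Pearson correlation between two equally long lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def cronbach_alpha(items):
    """items: list of item columns, each a list with one score per respondent."""
    k = len(items)
    totals = [sum(row) for row in zip(*items)]
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


def citc(items, index):
    """Correlation of one item with the sum of the remaining items."""
    rest = [sum(v for j, v in enumerate(row) if j != index) for row in zip(*items)]
    return pearson(items[index], rest)


# Hypothetical responses of eight teachers to three items of one construct.
construct = [
    [4, 3, 5, 4, 2, 4, 3, 5],
    [4, 3, 4, 5, 2, 4, 3, 5],
    [5, 3, 4, 4, 1, 4, 2, 5],
]
print(f"alpha = {cronbach_alpha(construct):.3f}")
for i in range(len(construct)):
    print(f"CITC item {i + 1} = {citc(construct, i):.3f}")
```

Under the criteria in this section, a construct would be retained when alpha exceeds 0.7 and every CITC exceeds 0.5.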

Exploratory factor analysis

We conducted principal component analysis using the varimax rotation method for our exploratory factor analysis (see Table 4). The Kaiser–Meyer–Olkin (KMO) test returned a value exceeding 0.5, while Bartlett’s test of sphericity produced a p-value below 0.05. These findings indicate sufficient correlation among the items and allow us to reject the null hypothesis that the correlation matrix is an identity matrix, thereby confirming that the collected data are suitable for factor analysis74. The exploratory factor analysis findings indicated that each item’s communality value was above 0.5 within its construct, confirming that all items had strong correlations with others in the same construct75. After extraction, each construct revealed one factor with an eigenvalue above 1, and the cumulative variance explained was over 50%. Additionally, every item exhibited factor loadings exceeding 0.6. Thus, the data fulfil the unidimensionality requirement as indicated in previous research76.
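The unidimensionality criteria above (one eigenvalue above 1, more than 50% variance explained, loadings above 0.6, communalities above 0.5) can be illustrated for a single construct. The sketch below is an assumption-laden example: it extracts only the first principal component of a hypothetical item correlation matrix by power iteration, so rotation plays no role, and it does not check the size of the remaining eigenvalues.

```python
from math import sqrt


def first_component(corr, iterations=500):
    """Largest eigenvalue and unit eigenvector of a correlation matrix."""
    k = len(corr)
    vec = [1.0 / sqrt(k)] * k
    eigenvalue = 0.0
    for _ in range(iterations):
        nxt = [sum(corr[i][j] * vec[j] for j in range(k)) for i in range(k)]
        norm = sqrt(sum(v * v for v in nxt))
        vec = [v / norm for v in nxt]
        # Rayleigh quotient of the current unit vector.
        eigenvalue = sum(
            vec[i] * sum(corr[i][j] * vec[j] for j in range(k)) for i in range(k)
        )
    return eigenvalue, vec


def unidimensionality_report(corr):
    """Summarise the first component against the thresholds used in the text."""
    k = len(corr)
    eigenvalue, vec = first_component(corr)
    loadings = [abs(v) * sqrt(eigenvalue) for v in vec]
    communalities = [l * l for l in loadings]
    return {
        "eigenvalue": eigenvalue,
        "variance_explained": eigenvalue / k,
        "loadings": loadings,
        "communalities": communalities,
        "passes": eigenvalue > 1
        and eigenvalue / k > 0.5
        and all(l > 0.6 for l in loadings)
        and all(c > 0.5 for c in communalities),
    }


# Hypothetical correlation matrix for the three items of one construct.
corr = [
    [1.00, 0.60, 0.50],
    [0.60, 1.00, 0.55],
    [0.50, 0.55, 1.00],
]
report = unidimensionality_report(corr)
print({key: report[key] for key in ("eigenvalue", "variance_explained", "passes")})
```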

Confirmatory factor analysis

Confirmatory Factor Analysis (CFA) was performed to evaluate the model’s reliability and validity (Table 5). Compared to the benchmarks suggested by Hair et al.77, the model fit indices in this study satisfied the recommended thresholds, indicating a good model fit. The RMSEA and SRMR decreased by less than 0.05, whereas GFI, AGFI, NFI, and CFI saw an increase that stayed within 0.1. These results indicate that Common Method Bias (CMB) does not pose a significant problem in the dataset78.

Table 6 presents the results of the Confirmatory Factor Analysis (CFA). The findings show that all items had factor loadings greater than 0.6, and the squared multiple correlations (SMC) surpassed 0.4, satisfying the recommended criteria established in the study by Taylor and Todd79. The Average Variance Extracted (AVE) and Composite Reliability (CR) for each construct were calculated based on factor loadings. The results indicated that the AVE for each construct exceeded 0.4, while the CR values surpassed 0.6, suggesting that the data demonstrated sufficient convergent validity80.
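The convergent validity statistics above follow directly from the standardized factor loadings. The sketch below shows the standard formulas, AVE as the mean squared loading and CR as the squared sum of loadings divided by itself plus the summed error variances. The loadings are hypothetical and are not taken from Table 6.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)
    return total * total / (total * total + error)


# Hypothetical standardized loadings for a three-item construct.
loadings = [0.72, 0.81, 0.76]
smc = [l * l for l in loadings]  # squared multiple correlation of each item
ave = average_variance_extracted(loadings)
cr = composite_reliability(loadings)
print(f"SMC = {[round(v, 3) for v in smc]}")
print(f"AVE = {ave:.3f}, CR = {cr:.3f}")
print("meets the thresholds used in this section:", ave > 0.4 and cr > 0.6)
```

Because the SMC of an item loading on a single factor equals its squared standardized loading, the criteria of loadings above 0.6 and SMC above 0.4 are closely related: a loading of 0.6 corresponds to an SMC of 0.36.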

The Fornell–Larcker criterion was used to assess discriminant validity, as shown in Table 7. The diagonal elements of this table are the square roots of each construct’s Average Variance Extracted (AVE), while the off-diagonal elements are the Pearson correlation coefficients between constructs. According to the results, the square root of AVE for each construct exceeds its highest correlation with any other construct, suggesting that each construct shares more variance with its own indicators than with other constructs. Furthermore, all correlation coefficients remain below 0.8, a threshold frequently referenced in prior research to confirm discriminant validity81. This ensures that the constructs in the study maintain sufficient conceptual distinction, preventing excessive overlap and reinforcing the integrity of the measurement model82.
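The Fornell–Larcker comparison can be expressed as a simple check per construct. In the sketch below, the AVE values and inter-construct correlations are hypothetical stand-ins for the entries of Table 7, and the 0.8 ceiling mirrors the threshold mentioned in this section.

```python
from math import sqrt


def fornell_larcker(ave, correlations, max_corr=0.8):
    """Return, per construct, whether sqrt(AVE) exceeds its highest correlation.

    ave: dict mapping construct name to AVE.
    correlations: dict mapping (name_a, name_b) tuples to Pearson correlations.
    """
    results = {}
    for name, value in ave.items():
        related = [abs(r) for pair, r in correlations.items() if name in pair]
        highest = max(related) if related else 0.0
        results[name] = sqrt(value) > highest and highest < max_corr
    return results


# Hypothetical values for three constructs (not the study's Table 7).
ave = {"PE": 0.62, "EE": 0.58, "PV": 0.55}
correlations = {("PE", "EE"): 0.48, ("PE", "PV"): 0.61, ("EE", "PV"): 0.52}
for construct, ok in fornell_larcker(ave, correlations).items():
    print(f"{construct}: sqrt(AVE) = {sqrt(ave[construct]):.3f}, distinct = {ok}")
```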

Structural equation model

This study utilized maximum likelihood estimation to build the structural equation model (SEM). To ensure robust results, 2000 bootstrap samples were utilized, with 95% confidence intervals calculated for parameter estimation. The model fit indices shown in Table 8 demonstrate that all values meet the recommended thresholds from previous research, affirming that the model shows a strong fit to the data. These findings validate the appropriateness of the SEM framework for analyzing the relationships among the study variables77. The calculation results of the structural equation model are shown in Fig. 2 and Table 9.
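The bootstrap procedure mentioned above can be illustrated on a much simpler estimator. The sketch below computes a percentile bootstrap confidence interval for an ordinary least squares slope, used here only as a stand-in for an SEM path coefficient. The data, the seed, and the function names are assumptions for illustration, and AMOS may apply a different interval method (for example, bias-corrected intervals).

```python
import random


def ols_slope(xs, ys):
    """Least squares slope of ys regressed on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("predictor has no variance")
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx


def bootstrap_ci(xs, ys, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the slope."""
    rng = random.Random(seed)
    n = len(xs)
    estimates = []
    while len(estimates) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        bx = [xs[i] for i in idx]
        if len(set(bx)) < 2:
            continue  # skip degenerate resamples with a constant predictor
        estimates.append(ols_slope(bx, [ys[i] for i in idx]))
    estimates.sort()
    lower = estimates[max(0, round(alpha / 2 * n_boot))]
    upper = estimates[min(n_boot - 1, round((1 - alpha / 2) * n_boot) - 1)]
    return lower, upper


# Hypothetical composite scores: behavioural intention (x) and use behaviour (y).
intention = [2.0, 2.3, 2.7, 3.0, 3.3, 3.7, 4.0, 4.3, 4.7, 5.0]
usage = [1.8, 2.6, 2.4, 3.1, 3.0, 3.9, 3.6, 4.4, 4.2, 4.9]
low, high = bootstrap_ci(intention, usage)
print(f"slope = {ols_slope(intention, usage):.3f}, 95% CI = [{low:.3f}, {high:.3f}]")
```

An effect is conventionally treated as significant at the 5% level when the 95% interval excludes zero.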

The results indicate that only performance expectancy, hedonic motivation, and price value have a direct and significant effect on teachers’ behavioral intentions. In contrast, factors like effort expectancy, social influence, perceived risk, habit, and facilitating conditions do not significantly impact behavioral intention. Consequently, hypotheses H1a, H1d, and H1h are validated, whereas hypotheses H1b, H1c, H1e, H1f, and H1g are not backed by the data.

The research found that effort expectancy, facilitating conditions, and price value have a significant impact on teachers’ actual usage behavior. In contrast, performance expectancy, social influence, hedonic motivation, perceived risk, and habit do not significantly affect this behavior. Therefore, hypotheses H2b, H2g, and H2h are upheld, affirming that ease of use, supportive conditions, and cost factors are significant in the adoption process. Conversely, hypotheses H2a, H2c, H2d, H2e, and H2f lack support, suggesting these elements may not be crucial to teachers’ technology usage behavior.

The study revealed a significant positive relationship between behavioral intention and actual usage behavior, showing that the intention to utilize AI in STEM courses significantly predicts actual usage among mathematics teachers. Hypothesis H3 is therefore supported. This is consistent with earlier research that emphasizes behavioral intention as a vital and impactful element in technology adoption. These results highlight the need to foster positive attitudes towards technology to improve actual usage. The detailed relationships among the variables and comprehensive findings are illustrated in the final model in Fig. 3, titled “Path Analysis Results.”

Discussion

This study examined the factors affecting K–12 mathematics teachers’ adoption of AI in STEM education using the UTAUT2 model. Results indicate that Performance Expectancy, Hedonic Motivation, and Price Value significantly influence teachers’ behavioral intentions, while Effort Expectancy, Facilitating Conditions, and Price Value impact actual use. Conversely, Social Influence, Habit, and Perceived Risk had no significant effects. These results partly support the UTAUT2 framework, suggesting some factors may behave differently in K–12 STEM contexts. The following discussion elaborates on each hypothesis.

Performance expectancy

The results support H1a, showing that performance expectancy positively influences mathematics teachers’ behavioral intention to use AI in STEM education. This aligns with previous research suggesting that when teachers believe AI can enhance instructional effectiveness, they are more likely to consider adopting it83,84,85. In the context of STEM education, mathematics teachers may be especially motivated by AI’s ability to enhance efficiency in lesson planning, assessment, and student differentiation.

H2a was unsupported; performance expectancy did not significantly affect actual usage. This suggests a gap between intention and action, likely due to limited access to AI tools, lack of school support, or perceived implementation complexity. While teachers see AI as beneficial, real-world barriers may hinder consistent use. This aligns with previous research in STEM education, which shows that positive attitudes toward technology do not always result in classroom implementation due to practical constraints86,87,88. These findings underscore the importance of addressing real-world implementation challenges while reinforcing the perceived value of AI in education.

Effort expectancy

H1b was unsupported; effort expectancy did not significantly influence mathematics teachers’ intention to use AI in STEM education. This indicates that even if AI tools seem easy to use, this alone may not drive teachers’ adoption intentions. This finding contrasts with earlier research identifying effort expectancy as a key factor in technology adoption83,84. In STEM education, mathematics teachers may prioritize pedagogical value and classroom impact over perceived ease of use when forming their intentions.

H2b was supported, indicating that effort expectancy positively affects actual usage behavior. While ease of use may not initially motivate teachers to consider AI, it becomes crucial once they try it. If AI tools are perceived as user-friendly and requiring minimal effort, teachers are more likely to continue using them. This aligns with findings highlighting usability as essential for sustained technology use in STEM, where time and cognitive resources are limited89. These results emphasize the need for designing AI tools that are pedagogically meaningful and intuitive, aiding both initial use and long-term integration.

Social influence

H1c and H2c were unsupported; social influence did not significantly affect mathematics teachers’ behavioral intention or actual AI usage in STEM education. This suggests that encouragement from colleagues, administrators, or institutional culture may not decisively influence teachers’ decisions to adopt AI tools. These findings deviate from some previous studies89,90, which suggested that peer and organizational expectations could shape technology adoption, particularly in the early stages of diffusion.

In STEM education, this result may reflect the individualistic and expertise-driven nature of mathematics instruction, where teachers prioritize personal judgment over external opinions. Given the early stage of AI adoption in many schools, social norms around AI integration may not be well established, reducing their influence on teacher behavior2. Thus, creating a supportive culture around AI use—through communities of practice, peer modeling, and visible leadership—may be necessary before social influence can meaningfully affect adoption processes.

Hedonic motivation

The results support H1d, indicating that hedonic motivation significantly and positively influences mathematics teachers’ behavioral intention to use AI in STEM education. This suggests that when teachers find AI tools enjoyable or intrinsically satisfying to use, they are more likely to express a willingness to adopt them. This finding is consistent with previous studies showing that the pleasure or enjoyment derived from using new technologies can enhance adoption intentions, particularly during the early stages of diffusion83,85. In the context of STEM teaching, the novelty and interactivity of AI tools may stimulate positive emotional responses, thereby increasing teachers’ openness to technology integration.

H2d was unsupported; hedonic motivation did not significantly affect usage behavior. This shows that while mathematics teachers may be curious about AI, their classroom implementation relies more on practical factors like time constraints, curriculum fit, and technical support43,91,92. This intention–behavior gap is common in educational technology research and highlights the limitations of emotion-driven factors in overcoming real-world barriers93. Thus, to ensure sustained AI use in STEM education, it is crucial to supplement motivation-building strategies with concrete resources and implementation support.

Perceived risk

H1e and H2e were unsupported; perceived risk did not significantly affect mathematics teachers’ behavioral intention or actual usage of AI in STEM education. Concerns about negative outcomes, such as data privacy, job displacement, or ethics, may not be central to their AI adoption decisions. This finding contrasts with studies in other domains where perceived risk hinders technology uptake91.

One explanation lies in the institutional and cultural context of Chinese K–12 education. AI tools are often introduced through top-down selection by school administrators or education bureaus, who vet tools for safety and alignment with standards. Consequently, mathematics teachers are less likely to evaluate risks independently and may view approved tools as inherently trustworthy. Additionally, the controlled digital environment in schools and limited autonomy in tool selection may further reduce perceived uncertainty. This filtering may lead teachers to see AI not as disruptive, but as a sanctioned aid for instruction, particularly in structured STEM subjects, such as mathematics94.

Habit

H1f and H2f were both unsupported; habit did not significantly affect mathematics teachers’ behavioral intention or actual usage of AI in STEM education. For mathematics teachers in particular, AI tools are still in the early stages of integration and are not yet embedded in daily teaching practices, and teachers have yet to develop consistent routines for their use. The limited and irregular use among teachers suggests that AI habits are not yet established. This aligns with studies indicating that when technology is new or used sporadically, habits have a minimal influence on adoption decisions45,89. Without sustained exposure and repeated application, AI tools are unlikely to become part of teachers’ automatic instructional behaviors. Therefore, habit is unlikely to serve as a reliable driver of AI adoption until the technology is embedded in curriculum practices and supported by school-wide implementation structures; it may become more relevant in the later stages of adoption.

Facilitating conditions

H1g was unsupported; facilitating conditions did not significantly affect mathematics teachers’ intention to use AI in STEM education. This suggests that infrastructure, training, and support may not directly shape teachers’ willingness to adopt AI tools. One possible explanation is that when teachers evaluate whether to adopt AI, they may prioritize perceived usefulness or motivational factors over logistical considerations12.

H2g was supported, indicating that facilitating conditions significantly affect actual usage behavior. This reveals a gap between intention and action: support systems are crucial for enabling classroom use, even if they do not drive adoption intent95. Access to reliable technology, support, and training influences mathematics teachers’ follow-through in STEM education. These findings highlight the need to enhance practical support to close the gap between interest and implementation.

Price value

Both H1h and H2h were supported, showing that price value significantly impacts mathematics teachers’ intention and actual use of AI in STEM education. In China’s K–12 system, most AI tools are offered free by schools or platforms, so the perceived “value” for teachers refers to non-monetary costs like time investment, cognitive effort, and instructional alignment. When teachers believe that AI tools’ educational benefits outweigh the learning efforts, they are more likely to form a strong intention to use and apply these tools in practice40,89.

This finding indicates that AI tools must show clear instructional value while reducing the learning curve and operational complexity to gain acceptance among teachers. In STEM education, enhancing usability, integration, and pedagogical compatibility can increase perceived value and encourage classroom adoption.

Behavioral intentions and usage behavior

H3 was supported: behavioral intention significantly predicts actual AI usage among mathematics teachers in STEM education, confirming the UTAUT2 model’s assumption that intention drives behavior. In structured STEM classrooms, teachers intending to use AI are likely to act on that intention, given adequate support and resources. This underscores the need to strengthen teachers’ motivation to use AI and to ensure that conditions exist to translate intention into practice.

Implications

This study examined AI usage and intentions among mathematics teachers in STEM education using an adapted UTAUT2 model. The findings confirm the model’s applicability in K–12 STEM education and reveal key factors influencing teachers’ adoption of AI tools. This study provides significant contributions for researchers, educators, and technology developers to improve AI integration in STEM education.

Theoretical implications

This study offers several theoretical contributions. First, it adapts and empirically validates the UTAUT2 model within the context of K–12 mathematics teachers utilizing AI tools in STEM education, an area that has been infrequently examined in the existing literature. In contrast to prior studies that frequently identify multiple factors impacting behavioral intention40,96,97, our findings suggest that only a subset of UTAUT2 constructs (specifically, Performance Expectancy, Hedonic Motivation, and Price Value) serve as significant predictors of intention, with Performance Expectancy emerging as the predominant predictor. This observation underscores the distinctive motivational framework of STEM educators, who tend to prioritize instructional effectiveness and perceived advantages over social pressure or habitual influences.

Second, the differentiation between factors affecting intention and those determining actual behavior provides deeper insights into the intention–behavior gap, a persistent challenge within technology acceptance research. The significance of constructs such as Effort Expectancy and Facilitating Conditions solely for actual usage emphasizes the necessity of distinguishing between motivational influences and implementation facilitators within the UTAUT2 framework98,99.

Lastly, by reconceptualizing Price Value in terms of time and cognitive effort rather than financial expenditure—an adjustment that is particularly pertinent to the Chinese K–12 education system, where AI tools are generally provided at no cost—this study contributes to a more contextually relevant understanding of technology acceptance constructs91. These findings not only augment the explanatory capacity of UTAUT2 in educational environments but also lay the groundwork for future cross-cultural comparisons, especially regarding the role of practical constraints in the adoption of AI by educators.

Practical implications

This study offers several significant practical implications for fostering the adoption and effective utilization of artificial intelligence tools among mathematics educators within STEM education. Firstly, the finding that performance expectancy serves as the most robust predictor of behavioral intention underscores the necessity for developers and school leaders to articulate clearly how AI tools can enhance instructional effectiveness43. Demonstrating tangible benefits, such as improved lesson efficiency, differentiated instruction, and real-time student feedback, can fortify teachers’ willingness to embrace such technologies.

Secondly, the prominence of effort expectancy and facilitating conditions in forecasting actual usage behavior highlights the critical importance of mitigating technical barriers. AI tools must be designed with simplicity, intuitive interfaces, and seamless integration into existing teaching workflows. Furthermore, educational institutions should provide continuous technical support and targeted professional development, ensuring that educators feel equipped and supported in their effective use of AI tools96,100.

Thirdly, the reconceptualization of price value as primarily indicative of time and cognitive effort rather than financial cost bears direct implications for policy and training. In light of the substantial workload faced by K–12 educators, AI tools should be perceived not as an additional burden but as time-saving, workload-reducing assistants89,91. Training programs ought to elucidate not only how to utilize AI but also demonstrate how it can alleviate teaching pressures, such as through the automation of routine tasks or the enhancement of student engagement.

Finally, collaboration between AI developers and educational institutions is imperative. Engaging educators directly in the design and feedback process can ensure that AI tools are tailored to meet real classroom needs. By involving end-users—specifically mathematics teachers—in the piloting, refinement, and contextualization of AI applications, developers can create tools that are pedagogically relevant, user-friendly, and aligned with the specific goals of STEM education. Such partnerships also foster a sense of ownership among teachers, potentially leading to more sustainable and innovative applications of AI in the classroom97.

Limitations

This study presents several limitations. First, the sample was confined to K–12 mathematics educators in China, potentially limiting the generalizability of the findings to other subjects, educational levels, or cultural contexts. Second, the cross-sectional design captures teacher perceptions and behaviors at a singular point in time, without accounting for possible changes or long-term adoption patterns. Third, the reliance on self-reported data may introduce bias, as actual classroom usage behavior may differ from what respondents reported. Furthermore, the study did not differentiate between various types of AI tools, which may exhibit variability in terms of usability and impact. Lastly, while the UTAUT2 model provided a robust framework, other potentially relevant factors—such as data privacy concerns or students’ AI readiness—were not included and necessitate further exploration in subsequent research.

Conclusion

This research applied the UTAUT2 model to assess the relationship between mathematics teachers’ intentions and their actual implementation of AI in STEM education. The findings partly validate the model’s relevance in educational technology adoption, yet indicate that certain conventional pathways are not significant in explaining mathematics teachers’ AI usage behavior97. The study adapted and refined the UTAUT2 model specifically for mathematics education. While this may not represent a groundbreaking advance for the theory, it does empirically demonstrate the model’s adaptability and validity among mathematics teachers. Importantly, the theoretical conclusions from this study depend on the current state of AI development in STEM education. As technology progresses and teachers accumulate more experience, the impact of related variables on teacher behavior might change. This study finds that Performance Expectancy, Hedonic Motivation, and Price Value have a significant effect on mathematics teachers’ behavioral intentions, whereas Effort Expectancy, Social Influence, Perceived Risk, Habit, and Facilitating Conditions do not significantly affect these intentions in this context91. The study also revealed that time cost plays a crucial role in the Price Value perception among mathematics teachers. This implies that teachers prioritize whether AI tools can lessen their teaching burdens over the tools’ economic costs. In contrast, Effort Expectancy, Facilitating Conditions, and Price Value significantly influence actual usage behavior. This suggests that when mathematics teachers consider the long-term use of AI tools, the usability of the technology and the potential time savings are pivotal.
Additionally, while teachers’ intentions to use these tools do impact their actual usage behavior, the influence is limited. This limitation may be linked to the current ubiquity of AI tools and the ongoing adaptation process teachers undergo with technology. Finally, the study proposes that the key factors affecting mathematics teachers’ AI usage may continue to change as technology improves, policies evolve, and teacher training deepens. Future research could investigate how mathematics teachers across various educational stages or cultural contexts adapt to and embrace AI tools.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Ouyang, F. & Jiao, P. Artificial intelligence in education: the three paradigms. Computers Education: Artif. Intell. 2, 100020 (2021).

Xu, W. & Ouyang, F. The application of AI technologies in STEM education: A systematic review from 2011 to 2021. Int. J. STEM Educ. 9, 59 (2022).

Yang, Y., Sun, W., Sun, D. & Salas-Pilco, S. Z. Navigating the AI-enhanced STEM education landscape: a decade of insights, trends, and opportunities. Res. Sci. Technol. Educ. 1–25. https://doi.org/10.1080/02635143.2024.2370764 (2024).

Kohnke, S. & Zaugg, T. Artificial intelligence: an untapped opportunity for equity and access in STEM education. Educ. Sci. 15, 68 (2025).

Latif, E. et al. Artificial General Intelligence (AGI) for Education. (2023). https://doi.org/10.48550/arXiv.2304.12479

El Fathi, T., Saad, A., Larhzil, H., Lamri, D. & Al Ibrahmi, E. M. Integrating generative AI into STEM education: enhancing conceptual understanding, addressing misconceptions, and assessing student acceptance. Disciplinary Interdisciplinary Sci. Educ. Res. 7, 6 (2025).

Sari, J. M. & Purwanta, E. The implementation of artificial intelligence in STEM-based creative learning in the society 5.0 era. Tadris 6, 433–440 (2021).

Walter, Y. Embracing the future of artificial intelligence in the classroom: the relevance of AI literacy, prompt engineering, and critical thinking in modern education. Int. J. Educational Technol. High. Educ. 21, 15 (2024).

Cviko, A., McKenney, S. & Voogt, J. Teacher roles in designing technology-rich learning activities for early literacy: A cross-case analysis. Comput. Educ. 72, 68–79 (2014).

Mulyani, H., Istiaq, M. A., Shauki, E. R., Kurniati, F., & Arlinda, H. Transforming education: exploring the influence of generative AI on teaching performance. Cogent Education, 12(1), https://doi.org/10.1080/2331186X.2024.2448066 (2025).

Filiz, O., Kaya, M. H. & Adiguzel, T. Teachers and AI: Understanding the factors influencing AI integration in K-12 education. Educ. Inf. Technol. https://doi.org/10.1007/s10639-025-13463-2 (2025).

Hazzan-Bishara, A., Kol, O. & Levy, S. The factors affecting teachers’ adoption of AI technologies: A unified model of external and internal determinants. Educ. Inf. Technol. https://doi.org/10.1007/s10639-025-13393-z (2025).

Meylani, R. Artificial intelligence in the education of teachers: A qualitative synthesis of the cutting-edge research literature. J. Comput. Educ. Res. 12, 600–637 (2024).

Nazaretsky, T., Ariely, M., Cukurova, M. & Alexandron, G. Teachers’ trust in AI-powered educational technology and a professional development program to improve it. Br. J. Edu. Technol. 53, 914–931 (2022).

Brown, R. E. & Bogiages, C. A. Professional development through STEM integration: how early career math and science teachers respond to experiencing integrated STEM tasks. Int. J. Sci. Math. Educ. 17, 111–128 (2019).

Hwang, G. J. & Tu, Y. F. Roles and research trends of artificial intelligence in mathematics education: A bibliometric mapping analysis and systematic review. Mathematics 9, 584 (2021).

Martín-Páez, T., Aguilera, D., Perales-Palacios, F. J. & Vílchez-González, J. M. What are we talking about when we talk about STEM education? A review of literature. Sci. Educ. 103, 799–822 (2019).

Nicol, C., Thom, J. S., Doolittle, E., Glanfield, F. & Ghostkeeper, E. Mathematics education for STEM as place. ZDM Math. Educ. 55, 1231–1242 (2023).

Baya’a, N. & Daher, W. Mathematics teachers’ readiness to integrate ICT in the classroom. Int. J. Emerg. Technol. Learn. 8, 46–52 (2013).

Perifanou, M., Economides, A. A. & Nikou, S. A. Teachers’ views on integrating augmented reality in education: needs, opportunities, challenges and recommendations. Future Internet. 15, 20 (2023).

Li, M. & Li, Y. Investigating the interrelationships of technological pedagogical readiness using PLS-SEM: A study of primary mathematics teachers in China. J. Math. Teacher Educ. https://doi.org/10.1007/s10857-025-09694-2 (2025).

Jeyaraj, A., Dwivedi, Y. K. & Venkatesh, V. Intention in information systems adoption and use: current state and research directions. Int. J. Inf. Manag. 73, 102680 (2023).

Al-Adwan, A. S. et al. Closing the divide: exploring higher education teachers’ perspectives on educational technology. Inform. Dev. https://doi.org/10.1177/02666669241279181 (2024).

Kim, J. Types of teacher-AI collaboration in K-12 classroom instruction: Chinese teachers’ perspective. Educ. Inf. Technol. 29, 17433–17465 (2024).

Tseng, T. H., Lin, S., Wang, Y. S., & Liu, H. X. Investigating teachers’ adoption of MOOCs: the perspective of UTAUT2. Interactive Learning Environments, 30(4), 635–650. https://doi.org/10.1080/10494820.2019.1674888 (2019).

Du, W. & Liang, R. Teachers’ continued VR technology usage intention: an application of the UTAUT2 model. SAGE Open. https://doi.org/10.1177/21582440231220112 (2024).

Venkatesh, V., Morris, M. G., Davis, G. B. & Davis, F. D. User acceptance of information technology: toward a unified view. MIS Q. 27, 425–478 (2003).

Tamilmani, K., Rana, N. P., Wamba, S. F. & Dwivedi, R. The extended unified theory of acceptance and use of technology (UTAUT2): A systematic literature review and theory evaluation. Int. J. Inf. Manag. 57, 102269 (2021).

Sun, F., Tian, P., Sun, D., Fan, Y. & Yang, Y. Pre-service teachers’ inclination to integrate AI into STEM education: analysis of influencing factors. Br. J. Edu. Technol. 55, 2574–2596 (2024).

Chang, Y. S. & Chou, C. Integrating artificial intelligence into STEAM education. In Cognitive Cities (eds Shen, J. et al.) 469–474 (Springer, 2020). https://doi.org/10.1007/978-981-15-6113-9_52.

Darayseh, A. A. & Mersin, N. Integrating generative AI into STEM education: insights from science and mathematics teachers. Int. Electron. J. Math. Educ. 20, em0832 (2025).

Hwang, G. J., Xie, H., Wah, B. W. & Gašević, D. Vision, challenges, roles and research issues of artificial intelligence in education. Computers Education: Artif. Intell. 1, 100001 (2020).

Kanyike, J. The integration and impact of AI technologies in STEM education. (2024).

Huang, X. & Qiao, C. Enhancing computational thinking skills through artificial intelligence education at a STEAM high school. Sci. Educ. 33, 383–403 (2024).

Weng, X., Ye, H., Dai, Y., & Ng, O. Integrating Artificial Intelligence and Computational Thinking in Educational Contexts: A Systematic Review of Instructional Design and Student Learning Outcomes. Journal of Educational Computing Research, 62(6), 1420–1450. https://doi.org/10.1177/07356331241248686 (2024).

Wang, Y., Liu, C. & Tu, Y. F. Factors affecting the adoption of AI-based applications in higher education: an analysis of teachers perspectives using structural equation modeling. Educational Technol. Soc. 24, 116–129 (2021).

Kasneci, E. et al. ChatGPT for good? On opportunities and challenges of large Language models for education. Learn. Individual Differences. 103, 102274 (2023).

Zawacki-Richter, O., Marín, V. I., Bond, M. & Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education – where are the educators? Int. J. Educational Technol. High. Educ. 16, 39 (2019).

Das, S., Mutsuddi, I. & Ray, N. Artificial intelligence in adaptive education: A transformative approach. in 21–50 (2025). https://doi.org/10.4018/979-8-3693-8227-1.ch002

Li, M. & Manzari, E. AI utilization in primary mathematics education: A case study from a Southwestern Chinese City. Educ. Inf. Technol. https://doi.org/10.1007/s10639-025-13315-z (2025).

Smith-Mutegi, D., Mamo, Y., Kim, J., Crompton, H. & McConnell, M. Perceptions of STEM education and artificial intelligence: A Twitter (X) sentiment analysis. Int. J. STEM Educ. 12, 9 (2025).

Nikou, S. A. & Economides, A. A. Factors that influence behavioral intention to use mobile-based assessment: A STEM teachers’ perspective. Br. J. Edu. Technol. 50, 587–600 (2019).

Wijaya, T. T., Su, M., Cao, Y., Weinhandl, R. & Houghton, T. Examining Chinese preservice mathematics teachers’ adoption of AI chatbots for learning: unpacking perspectives through the UTAUT2 model. Educ. Inf. Technol. https://doi.org/10.1007/s10639-024-12837-2 (2024).

Al Darayseh, A. Acceptance of artificial intelligence in teaching science: science teachers’ perspective. Computers Education: Artif. Intell. 4, 100132 (2023).

Venkatesh, V., Thong, J. Y. L. & Xu, X. Consumer acceptance and use of information technology: extending the unified theory of acceptance and use of technology. MIS Q. 36, 157–178 (2012).

Wei, W. et al. Using the extended unified theory of acceptance and use of technology to explore how to increase users’ intention to take a Robotaxi. Humanit. Soc. Sci. Commun. 11, 1–14 (2024).

Palau-Saumell, R., Forgas-Coll, S., Sánchez-García, J. & Robres, E. User acceptance of mobile apps for restaurants: An expanded and extended UTAUT-2. Sustainability 11, 1210 (2019).

Davis, F. D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340 (1989).

Wangdi, T., & Rigdel, K. Exploring perceived factors influencing teachers’ behavioural intention to use ChatGPT for teaching. Journal of Education for Teaching, 51(1), 202–204. https://doi.org/10.1080/02607476.2024.2362197 (2025).

Molefi, R. R., Ayanwale, M. A., Kurata, L. & Chere-Masopha, J. Do in-service teachers accept artificial intelligence-driven technology? The mediating role of school support and resources. Computers Educ. Open. 6, 100191 (2024).

Wang, X., Xu, X., Zhang, Y., Hao, S. & Jie, W. Exploring the impact of artificial intelligence application in personalized learning environments: thematic analysis of undergraduates’ perceptions in China. Humanit. Soc. Sci. Commun. 11, 1–10 (2024).

Wijaya, T. T. et al. Factors affecting the use of digital mathematics textbooks in Indonesia. Mathematics 10, 1808 (2022).

Wang, S., Li, J. & Yuan, Y. The power of convergence: STEM education in the era of artificial intelligence. In Disciplinary and Interdisciplinary Education in STEM: Changes and Innovations (eds Li, Y. et al.) 63–80 (Springer Nature Switzerland, 2024). https://doi.org/10.1007/978-3-031-52924-5_4.

Featherman, M. S. & Pavlou, P. A. Predicting e-services adoption: A perceived risk facets perspective. Int. J. Hum. Comput. Stud. 59, 451–474 (2003).

Roselius, T. Consumer rankings of risk reduction methods. J. Mark. 35, 56–61 (1971).

Alalwan, A. A., Dwivedi, Y. K. & Williams, M. D. Customers’ intention and adoption of telebanking in Jordan. Inform. Syst. Manage. 33, 154–178 (2016).

Zhou, Y., Li, X. & Wijaya, T. T. Determinants of behavioral intention and use of interactive whiteboard by K-12 teachers in remote and rural areas. Front. Psychol. 13, 934423. https://doi.org/10.3389/fpsyg.2022.934423 (2022).

Tamilmani, K., Rana, N. P., Dwivedi, Y. K., Sahu, G. P. & Roderick, S. Exploring the role of ‘price value’ for understanding consumer adoption of technology. In Proceedings of the 22nd Pacific Asia Conference on Information Systems: Opportunities and Challenges for the Digitized Society: Are We Ready? (PACIS 2018) (2018).

Chatterjee, S., Rana, N. P., Khorana, S., Mikalef, P. & Sharma, A. Assessing organizational users’ intentions and behavior to AI integrated CRM systems: A meta-UTAUT approach. Inf. Syst. Front. 25, 1299–1313 (2023).

Dean, D. & Suhartanto, D. The formation of visitor behavioral intention to creative tourism: the role of push–pull motivation. Asia Pac. J. Tourism Res. 24, 393–403 (2019).

Li, W. et al. An explanatory study of factors influencing engagement in AI education at the K-12 level: an extension of the classic TAM model. Sci. Rep. 14, 13922 (2024).

Kline, R. Principles and Practice of Structural Equation Modeling (Guilford Press, 2010).

Zhou, Y. Exploring the gender imbalance in teaching staff in china: how to break gender stereotypes in education industry. J. Educ. Humanit. Social Sci. 12, 211–216 (2023).

Ramírez-Correa, P., Rondán-Cataluña, F. J., Arenas-Gaitán, J. & Martín-Velicia, F. Analysing the acceptation of online games in mobile devices: an application of UTAUT2. J. Retailing Consumer Serv. 50, 85–93 (2019).

AL-Fadhali, N. An AMOS-SEM approach to evaluating stakeholders’ influence on construction project delivery performance. Eng. Constr. Architectural Manage. 31, 638–661 (2022).

Dash, G. & Paul, J. CB-SEM vs PLS-SEM methods for research in social sciences and technology forecasting. Technol. Forecast. Soc. Chang. 173, 121092 (2021).

Hair Jr., J. F., Matthews, L. M., Matthews, R. L., & Sarstedt, M. PLS-SEM or CB-SEM: Updated guidelines on which method to use. International Journal of Multivariate Data Analysis, 1(2), 1-10 (2017).

Kim, H. Y. Statistical notes for clinical researchers: assessing normal distribution (2) using skewness and kurtosis. Restor. Dentistry Endodontics. 38, 52–54 (2013).

Kline, R. B. Principles and Practice of Structural Equation Modeling 4th edn (The Guilford Press, 2016).

Zijlmans, E. A. O., Tijmstra, J., van der Ark, L. A., & Sijtsma, K. Item-score reliability as a selection tool in test construction. Frontiers in Psychology, 9, 2298. https://doi.org/10.3389/fpsyg.2018.02298 (2019).

Agarwal, R. & Prasad, J. A conceptual and operational definition of personal innovativeness in the domain of information technology. Inform. Syst. Res. 9, 204–215 (1998).

Jansen, M. et al. Psychometrics of the observational scales of the Utrecht scale for evaluation of rehabilitation (USER): content and structural validity, internal consistency and reliability. Arch. Gerontol. Geriatr. 97, 104509 (2021).

Kock, N. Common method bias in PLS-SEM: A full collinearity assessment approach. Int. J. e-Collaboration. 11, 1–10 (2015).

Cheung, G. W., Cooper-Thomas, H. D., Lau, R. S. & Wang, L. C. Reporting reliability, convergent and discriminant validity with structural equation modeling: A review and best-practice recommendations. Asia Pac. J. Manag. 41, 745–783 (2024).

Klein, L. L., Alves, A. C., Abreu, M. F. & Feltrin, T. S. Lean management and sustainable practices in higher education institutions of Brazil and portugal: A cross country perspective. J. Clean. Prod. 342, 130868 (2022).

Kohli, A. K., Shervani, T. A. & Challagalla, G. N. Learning and performance orientation of salespeople: the role of supervisors. J. Mark. Res. 35, 263–274 (1998).

Hair, J. F. Multivariate Data Analysis (Pearson Prentice Hall, 2006).

Lee, S. B., Lee, S. C. & Suh, Y. H. Technostress from mobile communication and its impact on quality of life and productivity. Total Qual. Manage. Bus. Excellence. 27, 775–790 (2016).

Taylor, S. & Todd, P. Decomposition and crossover effects in the theory of planned behavior: A study of consumer adoption intentions. Int. J. Res. Mark. 12, 137–155 (1995).

Mandhani, J., Nayak, J. K., & Parida, M. Interrelationships among service quality factors of Metro Rail Transit System: An integrated Bayesian networks and PLS-SEM approach. Transportation Research Part A: Policy and Practice, 140, 320–336. https://doi.org/10.1016/j.tra.2020.08.014 (2020).

Provenzano, D., Washington, S. D., & Baraniuk, J. N. A machine learning approach to the differentiation of functional magnetic resonance imaging data of chronic fatigue syndrome (CFS) from a sedentary control. Frontiers in Computational Neuroscience, 14, 2. https://doi.org/10.3389/fncom.2020.00002 (2020).

Fornell, C. & Larcker, D. F. Structural equation models with unobservable variables and measurement error: algebra and statistics. J. Mark. Res. 18, 382–388 (1981).

Scherer, R., Siddiq, F. & Tondeur, J. The technology acceptance model (TAM): A meta-analytic structural equation modeling approach to explaining teachers’ adoption of digital technology in education. Comput. Educ. 128, 13–35 (2019).

Faraon, M., Rönkkö, K., Milrad, M. & Tsui, E. International perspectives on artificial intelligence in higher education: an explorative study of students’ intention to use ChatGPT across the Nordic countries and the USA. Educ. Inf. Technol. https://doi.org/10.1007/s10639-025-13492-x (2025).

Alshammari, S. H. & Alkhwaldi, A. F. An integrated approach using social support theory and technology acceptance model to investigate the sustainable use of digital learning technologies. Sci. Rep. 15, 342 (2025).

Arbulú Ballesteros, M. et al. The sustainable integration of AI in higher education: analyzing ChatGPT acceptance factors through an extended UTAUT2 framework in Peruvian universities. Sustainability 16, 10707 (2024).

Uygun, D. Teachers’ perspectives on artificial intelligence in education. Adv. Mob. Learn. Educational Res. 4, 931–939 (2024).

Zhao, J., Li, S. & Zhang, J. Understanding teachers’ adoption of AI technologies: an empirical study from Chinese middle schools. Systems 13, 302 (2025).

Elyakim, N. Bridging expectations and reality: addressing the price-value paradox in teachers’ AI integration. Educ. Inf. Technol. https://doi.org/10.1007/s10639-025-13466-z (2025).

Kurup, S. & Gupta, V. Factors influencing the AI adoption in organizations. Metamorphosis https://doi.org/10.1177/09726225221124035 (2022).

Hu, L., Wang, H. & Xin, Y. Factors influencing Chinese pre-service teachers’ adoption of generative AI in teaching: an empirical study based on UTAUT2 and PLS-SEM. Educ. Inf. Technol. https://doi.org/10.1007/s10639-025-13353-7 (2025).

Liu, N., Deng, W. & Ayub, A. F. M. Exploring the adoption of AI-enabled English learning applications among university students using extended UTAUT2 model. Educ. Inf. Technol. https://doi.org/10.1007/s10639-025-13349-3 (2025).

Zhao, Y. & Frank, K. A. Factors affecting technology uses in schools: an ecological perspective. Am. Educ. Res. J. 40, 807–840 (2003).

Watted, A. Teachers’ perceptions and intentions toward AI integration in education: insights from the UTAUT model. Power Syst. Technol. 49, 164–183 (2025).

Dahri, N. et al. Investigating AI-based academic support acceptance and its impact on students’ performance in Malaysian and Pakistani higher education institutions. Educ. Inf. Technol. 29, 18695–18744 (2024).

Md Zuber, M. & Mansor, M. A. UTAUT2 Questionnaire to Access Perception and Acceptance Degree among Primary School Mathematics Teachers on the Integration Programming in Mathematics Instruction. (2024).

Ateş, H. & Gündüzalp, C. Proposing a conceptual model for the adoption of artificial intelligence by teachers in STEM education. Interact. Learn. Environ. 1–27. https://doi.org/10.1080/10494820.2025.2457350 (2025).

Ali, I., Warraich, N. & Butt, K. Acceptance and use of artificial intelligence and AI-based applications in education: A meta-analysis and future direction. Inform. Dev. https://doi.org/10.1177/02666669241257206 (2024).

Bayrak, C. & Liman-Kaban, A. Understanding the adoption and usage of gamified web tools by K-12 teachers in Turkey: A structural equation model. Educ. Inf. Technol. 29, 24759–24781 (2024).

Weinhandl, R., Helm, C., Anđić, B. & Große, C. S. Uncovering mathematics teachers’ instructional anticipations in a digital one-to-one environment: A modified UTAUT study. Heliyon 10, e35381. https://doi.org/10.1016/j.heliyon.2024.e35381 (2024).

Funding

This research was supported by the National Research Institute for Mathematics Teaching Materials, Beijing, China (Project Number: 2023GH-ZDA-JJ-Y-04), and the Guangdong Provincial Education Science Planning (Project Number: 2024GXJK689).

Author information

Contributions

Conceptualization, Wen Du and Muwen Tang; Methodology, Yiming Cao; Software, Wen Du; Validation, Muwen Tang and Guofeng Wang; Formal Analysis, Muwen Tang; Investigation, Muwen Tang; Resources, Muwen Tang; Data Curation, Wen Du and Yiming Cao; Writing – Original Draft Preparation, all authors; Writing – Review and Editing, all authors; Visualization, Wen Du; Supervision, Fang Wang; Project Administration, Fang Wang. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was conducted in accordance with the principles of the Declaration of Helsinki, using non-invasive and anonymized data collection methods. Ethical approval was granted by the Institutional Review Board at Beijing Normal University, China (IRB approval number: 202408020). Informed consent was obtained from all participants prior to participation, ensuring their voluntary engagement and the ethical use of their data for research purposes.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Du, W., Cao, Y., Tang, M. et al. Factors influencing AI adoption by Chinese mathematics teachers in STEM education. Sci Rep 15, 20429 (2025). https://doi.org/10.1038/s41598-025-06476-x

DOI: https://doi.org/10.1038/s41598-025-06476-x
