Abstract

The individual adaptive behavioral interpretation of students’ learning behaviors is a vital link for instructional process interventions. Accurately recognizing learning behaviors and conducting a complete judgment of classroom meta-action sequences are essential for the individual adaptive behavioral interpretation of students’ learning behaviors. This paper proposes a learning behavior classification model based on classroom meta-action sequences (ConvTran-Fibo-CA-Enhanced). The model employs the Fibonacci sequence for location encoding to augment the positional attributes of classroom meta-action sequences. It also integrates Channel Attention and Data Augmentation techniques to improve the model’s ability to comprehend these sequences, thereby increasing the accuracy of learning behavior classification and verifying the completeness of classroom meta-action sequences. Experimental results show that the proposed model outperforms baseline models on human activity recognition public datasets and learning behavior classification and meta-action sequences completeness judgment datasets in smart classroom scenarios.

Similar content being viewed by others

Introduction

Learning behaviors consist of a sequence of fundamental classroom actions, such as “Take Phone,” “Take Pen and Write,” and “Lie on the Desk,” which are defined as classroom meta-actions. The individual adaptive behavioral interpretation of students’ learning behaviors is an important tool to assist teachers in their instruction. Since students’ learning behaviors are composed of a series of classroom meta-actions, accurately identifying learning behaviors and assessing the completeness of meta-action sequences (the order and integrity of specific meta-action combinations within the sequence) are crucial for providing individualized behavioral explanations for students.

Traditional human activity recognition (HAR) methods are based on converting specific body motion features into sensor signals, followed by perception and classification1, or through end-to-end deep learning frameworks that automatically extract feature representations of latent semantic information from time series data2. Both CNN and LSTM have demonstrated outstanding performance in deep learning models. Yang et al.3 proposed an automatic feature extraction method based on CNN, which uses convolution and pooling operations to capture significant features of sensor signals across different time scales from multi-channel time series data. All identified significant features are systematically unified across multiple channels and eventually mapped to different human activity categories. Shi et al.4 introduced ConvLSTM, which extends the fully connected LSTM (FC-LSTM) by incorporating convolutional structures in both input-to-state and state-to-state transitions, thus better capturing the spatiotemporal correlations in sequences. Ordóñez et al.5 proposed a general deep-learning framework for activity recognition based on convolutional and LSTM recurrent units. This framework is suitable for multi-modal wearable sensors and enables the natural fusion of sensor data. Foumani et al.6 introduced a multivariate time series classification approach, termed ConvTran, which integrates tAPE/eRPE with convolutional input encoding to enhance the positional and data embedding of time series data.

However, existing video-based understanding methods achieve relatively high accuracy in recognizing individual classroom meta-actions (e.g., “Take Pen and Write,” “Read a Book,” and “Look Up and Listen”); they struggle, however, with interpreting complex learning behaviors composed of sequences of multiple meta-actions (e.g., “Take Notes” and “Attend Lecture”)7. The same classroom meta-action can carry different meanings depending on the context. For instance, the “Talk” meta-action could indicate either distraction or responding to a question. Therefore, it is essential to consider the contextual sequence of actions in order to accurately interpret students’ learning behaviors.

To learn more discriminative feature representations and effectively extract features from classroom meta-action sequences, this paper introduces an attention mechanism to capture the key feature information within the meta-action sequences. We propose a learning behavior classification model based on classroom meta-action sequences (ConvTran-Fibo-CA-Enhanced). Specifically, by using surveillance images from smart classrooms, student meta-action time series data are obtained through the DPE-SAR network8 and then passed through the ConvTran-Fibo-CA-Enhanced model for learning behavior classification and completeness assessment of the classroom meta-action sequences. The main contributions of this study can be summarized as follows:

-

1.

A learning behavior classification model based on classroom meta-action sequences, named ConvTran-Fibo-CA, is proposed. This model enhances the positional features of elements in classroom meta-action sequences by utilizing Fibonacci Sequence Temporal Absolute Positional Encoding. Additionally, a channel attention mechanism is introduced to assign different weights to each channel in the feature map, significantly improving the model’s performance.

-

2.

A student learning behavior classification dataset, GUET5, was constructed in the context of smart classroom scenarios, and a meta-action sequence completeness judgment dataset was constructed based on GUET5. The ConvTran-Fibo-CA model was validated on publicly available human activity recognition datasets, demonstrating its effectiveness and reliability across various behavior recognition scenarios. This work offers a new technical approach to the fields of smart education and behavior analysis.

-

3.

The ConvTran-Fibo-CA model is enhanced by the introduction of the Focal Loss function to mitigate class imbalance and differences in classification difficulty, hence augmenting the model’s recognition accuracy.

The remainder of this paper is organized as follows: “Related work” introduces the related work. “Dataset” presents the datasets used for the experiments. “A learning behavior classification model based on classroom meta-action sequences” describes the learning behavior classification model based on classroom meta-action sequences. “Experimental results and analysis” outlines the experimental process and analyzes the results to evaluate the performance of the proposed algorithm. Finally, “Conclusions” concludes the work with an outlook.

Related work

Currently, learning behavior detection methods in smart classrooms are predominantly based on image recognition techniques derived from the YOLO series of models9,10. Pabba et al.11 proposed a learning behavior classification system that integrates human posture and facial features, categorizing student behaviors into “active” and “passive” classes by extracting body pose, proximity movement, and facial expressions. The integration of multimodal visual features has been shown to further enhance the accuracy of behavior recognition12. While the accuracy of vision-based learning behavior recognition continues to improve, existing research lacks an in-depth analysis of the constituent elements of learning behaviors themselves.

The individual adaptive behavioral interpretation of students’ learning behaviors is an extension of human activity recognition (HAR), which involves classifying learning behaviors and assessing the completeness of classroom meta-action sequences after recognizing the student’s classroom meta-actions. This field primarily focuses on multi-dimensional time series classification (MTSC) algorithms, including network structures such as CNN, LSTM, and Transformer. Mohammadi et al.13,14 reviewed the current state of the rapidly developing deep learning field in time series classification, revisiting the various network architectures and training methods used for these tasks while also discussing the challenges and opportunities faced when applying deep learning to time series data.

Due to its ability to effectively extract latent features from time series data, CNN has become a popular deep learning architecture in the field of multi-dimensional time series classification15,16, including full convolutional networks (FCN) and residual networks (ResNet). Researchers have proposed a lightweight Convolutional Neural Network with a Bi-directional Gated Recurrent Units (CNN-BiGRU) model for classifying human activities using inertial sensor data collected from wearable smart devices17. To effectively search for the optimal CNN architecture for HAR tasks, Genetic Algorithms (GA) have been applied to optimize CNN encoding pattern structures18.

The deep learning human activity recognition (HAR) architecture based on Convolutional Neural Networks and Long Short-Term Memory networks (CNN-LSTM) not only improves the prediction accuracy of human activities from raw data but also reduces the model complexity, effectively eliminating the need for advanced feature engineering19. This model integrates gating units into a single cell to control its internal memory. Most methods applied in HAR are variants of LSTM20,21. While existing CNN or LSTM-based models effectively extract features, they still have some drawbacks: 1) they require complex manual data preprocessing, and 2) they are limited to solving specific single-person activity recognition problems.

Compared to existing CNN and LSTM-based methods, Transformer-based approaches offer stronger data fitting ability, generalization, and scalability22,23. To capture both long-term and short-term dependencies, researchers have proposed a top-down feature fusion method that uses reverse attention for self-calibration throughout the learning process. This approach normalizes the attention module and dynamically adjusts the learning rate24. Additionally, research utilizing multi-scale convolutional hybrid attention models has proven effective in capturing not only individual human activity features but also the interactive behavioral features between people25,26. The two-stream self-attention network (TTN) captures both temporal dynamic features and spatial pattern features from sensor signals through two distinct information flows (time flow and space flow), thus extracting complementary feature sets to improve the model’s comprehension of human activity27,28.

Dataset

This paper uses a publicly available human activity recognition dataset composed of five real-world multivariate time series datasets, similar to those used in the learning behavior section, including the FingerMovement, HandMovementDirection(HMD), RacketSports, and Handwriting datasets from the UEA Repository29, for experiments. The data dimensions range from 2 to 1345, and the time series lengths vary from 8 to 17,984. The HAR dataset6 describes six daily activities performed by 36 users in a controlled laboratory environment using a smartphone placed in their pockets and sampled at a rate of 20Hz. The six activities are “walking,” “jogging,” “climbing stairs,” “sitting,” “standing,” and “lying.” The details are provided in Table 1:

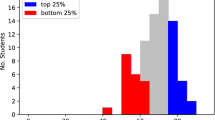

Since students’ learning behaviors consist of classroom meta-action sequences, the key foundational task for providing the individual adaptive behavioral interpretation of students’ learning behaviors is constructing a learning behavior classification dataset. Therefore, this paper constructs the student learning behavior classification dataset GUET5 under the smart classroom. This dataset is composed of classroom meta-action sequences from students enrolled in a course in 2023. It includes five learning behavior categories: “ Daydreaming,” “ Answering Questions,” “ Listening to the Lecture,” “ Taking Notes,” and “ Using A Phone.” The distribution of learning behaviors and classroom meta-actions is illustrated in Fig. 1. Detailed descriptions of each meta-action along with their associated key values are provided in Table 2.

The completeness judgment of classroom meta-action sequences is a crucial factor in delivering the individual adaptive behavioral interpretation of students’ learning behaviors. To validate the completeness of the meta-action sequences contained within learning behaviors, this paper constructs a meta-action sequence completeness judgment dataset based on GUET5. If the classroom meta-action sequence meets the criteria for learning behavior classification, it is considered “complete”; otherwise, it is regarded as “incomplete.” The details are provided in Table 3.

In Table 3, the asterisk (*) denotes indispensable meta-actions, the caret (\(^{\wedge }\)) indicates the number of times a meta-action is repeated, and the arrows represent the sequence of the meta-actions.

A learning behavior classification model based on classroom meta-action sequences

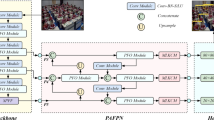

ConvTran-Fibo-CA-enhanced overall architecture

This paper uses student classroom meta-action sequences obtained from high-definition cameras in a smart classroom as the input for the learning behavior classification model based on classroom meta-action sequences (ConvTran-Fibo-CA-enhanced). The overall architecture of the model is shown in Fig. 2:

First, the T-frame images obtained from high-definition cameras in the smart classroom are used as input to the DPE-SAR model to generate the student classroom meta-action sequences. The learning behavior with the shape of \(L \times d_x\) is extracted from it as input data.M time filters are applied, and the output of the time filters is convolved with filters of shape \(d_x \times M\) in the \({d_{model}}\) space to capture the correlations between variables in the multivariate time series, and an input embedding of size \({d_{model}}\) is then constructed. Before passing the input embeddings into the MHA block, Fibonacci position encoding is added to the input embedding vectors, enabling the model to capture the positional features of the time series. The size of the embedding vector is \({d_{model}}\), which is the same as that of the input embeddings.

In the Multi-Head Attention (MHA), the input with \(L \times {d_{\bmod el}}\) dimensions is first transformed into a shape of \(L \times {d_z} \times 3\) through a linear layer. This transformation generates the user-defined qkv matrices, where \({d_z}\) represents the model’s dimensionality. Each of the three matrices, with a shape of \(L \times {d_z}\), represents the query (q), key (k), and value (v) matrices. These q, k, and v matrices are reshaped into a shape of \(h \times L \times {d_z} / h\) to represent h attention heads, with each attention head responsible for capturing different latent features in the time series. After computing the Multi-Head Attention, Channel Attention (CA) is applied to further enhance the sequence information features, enabling the model to focus more effectively on the most relevant parts of the sequence.

The FFN (Feed-Forward Network) is a multi-layer perceptron block consisting of two linear layers, with Gaussian Error Linear Units (GELUs) serving as the activation function. The input to the FFN block is passed through a skip connection, producing the final output of the Transformer block. Finally, before the fully connected layer, max pooling and global average pooling (GAP) are applied to the output of the ELU activation function from the final layer. This process results in the complete learning behavior classification and completeness judgment model based on classroom meta-action sequences.

Fibonacci sequence temporal absolute positional encoding

In the Transformer, since it does not incorporate recursion or convolution, the self-attention layer is unable to retain the positional information of the time series. However, local positional information, that is, the order of the time series, is crucial. Therefore, it is crucial to focus on positional encoding methods to enhance the positional features of the sequence. Absolute positional encoding was originally proposed for natural language processing and typically employs high embedding dimensions, such as 512 or 1024, to encode the positions of inputs30. As shown in Eq. (1):

In this equation, PE represents the positional encoding, and pos refers to the position of a token in the time series. Each token’s positional encoding is a vector, where i denotes the index of each element in this vector, and \({d_{model}}\) represents the dimensionality of the positional encoding vector. To mitigate the anisotropy phenomenon that arises as \({d_{model}}\) increases, Temporal Absolute Positional Encoding (tAPE) is proposed, with the specific formula shown in Eq. (2):

In Eq. (2), k represents the position of an element in the sequence, L denotes the total length of the sequence, and \(\omega _k^{\text {new}}\) represents the phase encoding representation after applying Temporal Absolute Positional Encoding (tAPE).

Fibonacci numbers, as a feature enhancement technique, are widely applied in prediction tasks31. The specific formula is presented in Eq. (3):

The learning behavior classification model, based on the complete classroom action sequence (ConvTran-Fibo-CA), enhances the sequence’s positional feature information by utilizing the Fibonacci sequence for positional encoding. Specifically, positional encoding is performed using Eq. (4) when the sequence is \(\mathrm{{i}} \notin \text {Fibonacci}\), and Eq. (5) is used when the sequence is \(\mathrm{{i}} \in \text {Fibonacci}\).

Efficient relative positional encoding

Efficient Relative Positional Encoding (eRPE) is a relative positional encoding technique that operates independently of input embeddings. It represents an improvement over the relative vector encoding32, with the specific formula provided in Eq. (6):

In Eq. (6), L is the sequence length, \(A_{i,j}\) is the attention weight, and \(w_{i - j}\) is a learnable scalar that represents the relative positional weight between position i and position j. The Vector and eRPE network structure is shown in Fig. 3:

Channel attention

The attention mechanism enables neural networks to capture deep spatiotemporal features hierarchically, assisting the model in better understanding patterns and trends in time series data and distinguishing different learning behaviors. Channel Attention captures statistical features across channels using global average pooling and global maximum pooling. These features are subsequently used to compute the attention weights for each channel. By emphasizing the relationships between channels, Channel Attention enhances the model’s ability to discern the significance of different features within classroom meta-action sequence data33. Equation (7) presents the computational formula for channel attention.

In Eq. (7), F represents the feature map of input size \(H \times W \times C\), and \(\sigma\) denotes the activation function. The input feature map F is processed through both global average pooling and global max pooling to generate two feature maps, which are then fed into a shared multi-layer perceptron (SharedMLP) that includes two 1D convolutional layers (fc1 and fc2) and a ReLU activation function. The outputs from the SharedMLP are added together and passed through a Sigmoid function to obtain the final channel attention weights \(M_c\). The network structure of the channel attention mechanism is illustrated in Fig. 4:

Data augmentation

Given the class imbalance in the GUET5 completeness judgment dataset, this paper incorporates the Focal Loss function into the ConvTran-Fibo-CA model for data augmentation, resulting in the ConvTran-Fibo-CA-Enhanced model. The specific formulas for Focal Loss are presented in Eqs. (8) and (9).

In Eq. (8), y takes values \(\{1, -1\}\), representing positive and negative samples, respectively. p is the model’s predicted probability for the label, where if \(p > 0.5\), the sample is classified as positive; otherwise, it is classified as negative. In Eq. (9), \(\gamma\) is the modulation factor, and \(\gamma\) takes values in the range [0,5]. When \(\gamma = 0\), the function is equivalent to the cross-entropy (CE) loss. The larger the value of \(\gamma\), the more the model focuses on hard and easy samples. By introducing the \(\alpha _t\) parameter, the loss weights for positive and negative samples can be adjusted, thereby balancing their impact on the model’s training process.

Experimental results and analysis

The experimental setup of this paper is shown in Table 4.

In this study, experiments were conducted using a Transformer-based model. The total number of iterations was set to 100 or 300 epochs, with a batch size of 128. The learning rate was set to 0.001, dropout was set to 0.01, and the optimizer used was RAdam.

Learning behavior classification experiment

ConvTran was used as the baseline model for comparison to assess the advantages and effectiveness of the proposed classroom-based complete meta-action sequence learning behavior classification model (ConvTran-Fibo-CA). Experiments were conducted on five public datasets as well as the GUET5 student learning behavior classification dataset in a smart classroom environment. Accuracy and Precision were used as the evaluation metrics for the models. The comparison results with the baseline model are presented in the curve shown in Fig. 5.

Figure 5 illustrates the training results of the two models on five public datasets, with the x-axis representing the number of iterations (epochs) and the y-axis showing the prediction accuracy every 25 epochs. As shown in Fig. 5c, d , the accuracy of the proposed model is identical to that of the baseline model on the HandMovementDirection and RacketSports datasets. In contrast, as shown in Fig. 5a, b, e, ConvTran-Fibo-CA achieves accuracy improvements of 1.563%, 0.627% and 10%, respectively, on the remaining four public datasets.

From Fig. 5a, d , it can be observed that the proposed model and the baseline model achieve high accuracy on the HAR and RacketSports datasets, both around 90%, indicating that the models perform well in predicting behaviors involving large body movements. As analyzed from Fig. 5b, c, e , for behaviors with smaller body movements (e.g., finger motions), both models show relatively lower prediction accuracy.

Table 5 presents the accuracy comparison between the two models on the test sets of the public datasets, where “Avg Train” refers to the number of samples in the training set. The detailed analysis is as follows: On the HandMovementDirection and RacketSports datasets, although both models exhibit the same maximum accuracy on the training curves, ConvTran-Fibo-CA demonstrates superior performance on the test set compared to the baseline model ConvTran. This result effectively validates that, with the enhancement of positional features through Fibonacci Sequence Temporal Absolute Positional Encoding and the introduction of channel attention, ConvTran-Fibo-CA is better able to focus on understanding the features of time series. Consequently, it provides more accurate predictions for human activity recognition tasks, highlighting the superiority of the proposed model for this task.

Ablation experiment

To evaluate the effectiveness of the Fibonacci sequence-based temporal absolute positional encoding, this paper conducts a comparative experimental study between the proposed encoding method and the conventional absolute positional encoding. The experimental results are presented in Fig. 6.

Figure 6 presents a comparison of positional encodings at Position 1 and Position 30. The horizontal axis represents the dimension index of the encoding vector, while the vertical axis denotes the corresponding value of each dimension. It can be observed that under the conventional absolute positional encoding scheme, the embedding vectors of Positions 1 and 30 become nearly identical beyond Dimension 30. In contrast, after applying the Fibonacci sequence-based temporal absolute positional encoding, the embedding vectors of these two positions exhibit noticeable differences across most dimensions.

To evaluate the effectiveness of ConvTran-Fibo-CA for classifying student learning behaviors in smart classrooms and to assess the validity of the proposed modules, ablation experiments were conducted using the GUET5 learning behavior classification dataset. The results are presented in Fig. 7:

Figure 7 presents the training comparison curves of the models on the GUET5 dataset, with the x-axis representing the number of iterations and the y-axis indicating the prediction accuracy every 25 epochs. A detailed analysis is as follows: on the GUET5 dataset, the three models proposed in this paper (ConvTran-Fibo, ConvTran-CA, and ConvTran-Fibo-CA) all outperform the baseline model ConvTran. This result further confirms the effectiveness of Fibonacci number-based positional encoding in enhancing positional features. It demonstrates that, with the introduction of channel attention, ConvTran-Fibo-CA is more effective at extracting features from time series, resulting in higher prediction accuracy. This demonstrates the superiority of the proposed model in the task of learning behavior classification.

The performance metrics of the proposed model, ConvTran-Fibo-CA, and the baseline model, ConvTran, on the GUET5 dataset are presented in Table 6. The detailed analysis is as follows:

-

1.

For Fibonacci sequence temporal absolute positional encoding, the results from ConvTran-Fibo show that, compared to the baseline model ConvTran, ConvTran-Fibo achieves a 1.25% improvement in accuracy and a 2.09% improvement in precision. The application of Fibonacci sequence position encoding strengthens the positional features of the time series.

-

2.

For channel attention, the results from ConvTran-CA show a 2.08% increase in accuracy and a 2.7% increase in precision compared to the baseline model, ConvTran. Incorporating channel attention improves the model’s ability to capture and refine features in time series data.

-

3.

For the ConvTran-Fibo-CA model, a comparison with the baseline model ConvTran reveals a 2.5% improvement in accuracy and a 2.85% improvement in precision. These results validate the effectiveness of the proposed model for student learning behavior classification in smart teaching environments.

Judgment of the completeness of meta-action sequences in learning behaviors

In order to verify the completeness of the classroom meta-action sequences contained in learning behaviors, comparative experiments were conducted on the GUET5 completeness judgment dataset. The experimental results are shown in Fig. 8:

Figure 8 presents the training results of the models on the GUET5 Completeness Judgment dataset, with the x-axis denoting the number of iterations and the y-axis indicating the prediction Accuracy at every 25 epochs. From the analysis of Fig. 8, it can be observed that, on the GUET5 dataset, ConvTran-Fibo-CA demonstrates a 0.416% improvement in terms of accuracy compared to the baseline model, ConvTran. Additionally, after applying data augmentation, ConvTran-Fibo-CA-Enhanced exhibits a 0.834% improvement in training performance compared to ConvTran-Fibo-CA.

Table 7 presents the test results of three models on the GUET5 Completeness Judgment dataset. The analysis is as follows: Focal Loss enhances the models performance by introducing a modulation factor that enables the model to place greater emphasis on difficult-to-classify samples. Furthermore, by balancing the loss for positive and negative samples via the \(\alpha _t\) parameter, the models performance is further improved in tackling the class imbalance issue.

As shown in Fig. 9, after applying the FocalLoss function for data augmentation, the accuracy for “Incomplete” increased by 0.38461%, and the accuracy for “Complete” increased by 1.4018%. Incorporating the FocalLoss function into ConvTran-Fibo-CA-Enhanced allows the model to better capture the features of imbalanced classes, thereby improving its accuracy in predicting the completeness of classroom meta-action sequences.

Limitations

The completeness judgment experiment of classroom meta-actions in this model was conducted only on the GUET5 dataset. While the dataset covers a range of learning behaviors typically found in classroom environments, it may not comprehensively represent all potential learning behaviors. Future work will focus on continuously improving the dataset and establishing more comprehensive criteria for the completeness of meta-action sequences within learning behaviors. Additionally, the proposed model is currently unable to detect the timing of learning behaviors; it only recognizes pre-extracted behavior segments. In future work, we plan to introduce a learning behavior detection module to enable the model to operate in a fully automated manner.

Conclusions

The individual adaptive behavioral interpretation of students’ learning behaviors plays a crucial role in assistive teaching. Learning behaviors are composed of complex sequences of classroom meta-actions. Accurately identifying students’ learning behaviors and assessing the completeness of these meta-action sequences are essential tasks in achieving the individual adaptive behavioral interpretation of students’ learning behaviors. This paper proposes a learning behavior classification model based on classroom meta-action sequences (ConvTran-Fibo-CA-Enhanced). Specifically, by utilizing high-definition cameras in smart classrooms to capture students’ images, identifying the students’ classroom meta-action sequences, and applying Fibonacci numbers for temporal absolute position encoding, we enhance the positional features of these sequences. Additionally, a channel attention mechanism is employed to strengthen the model’s ability to capture features from classroom meta-action sequences, thus improving the model’s accuracy in learning behavior classification. Moreover, to assess the completeness of classroom meta-action sequences, this paper introduces the FocalLoss function for data augmentation, further improving the accuracy in identifying imbalanced samples.

Experimental results show that the proposed model outperforms the baseline model in terms of accuracy on publicly available human activity recognition datasets and the GUET5 student learning behavior classification dataset from smart classrooms. These results demonstrate the effectiveness of the ConvTran-Fibo-CA-Enhanced model in learning behavior classification and completeness judgment based on classroom meta-action sequences, confirming its suitability for smart classroom scenarios. The model presented in this paper demonstrates its potential in real-world applications, offering valuable insights for future research and applications related to student learning behavior classification and the individual adaptive behavioral interpretation of students’ learning behaviors.

Data availability

The data are available from the corresponding author on reasonable request.

References

Saha, A., Rajak, S., Saha, J. & Chowdhury, C. A survey of machine learning and meta-heuristics approaches for sensor-based human activity recognition systems. J. Ambient Intell. Hum. Comput. 15, 29–56 (2024).

Dentamaro, V., Gattulli, V., Impedovo, D. & Manca, F. Human activity recognition with smartphone-integrated sensors: A survey. Expert Syst. Appl. 123–143 (2024).

Yang, J., Nguyen, M. N., San, P. P., Li, X. & Krishnaswamy, S. Deep convolutional neural networks on multichannel time series for human activity recognition. In Ijcai. Vol. 15. 3995–4001 (2015).

Shi, X. et al. Convolutional lstm network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 28 (2015).

Ordóñez, F. J. & Roggen, D. Deep convolutional and lstm recurrent neural networks for multimodal wearable activity recognition. Sensors 16, 115 (2016).

Foumani, N. M., Tan, C. W., Webb, G. I. & Salehi, M. Improving position encoding of transformers for multivariate time series classification. Data Min. Knowl. Discov. 38, 22–48 (2024).

Sun, B. et al. Student class behavior dataset: A video dataset for recognizing, detecting, and captioning students’ behaviors in classroom scenes. Neural Comput. Appl. 33, 8335–8354 (2021).

Shou, Z. et al. A dynamic position embedding-based model for student classroom complete meta-action recognition. Sensors 24, 5371 (2024).

Sheng, X., Li, S. & Chan, S. Real-time classroom student behavior detection based on improved yolov8s. Sci. Rep. 15, 14470 (2025).

Han, L., Ma, X., Dai, M. & Bai, L. A wad-yolov8-based method for classroom student behavior detection. Sci. Rep. 15, 9655 (2025).

Pabba, C., Bhardwaj, V. & Kumar, P. A visual intelligent system for students’ behavior classification using body pose and facial features in a smart classroom. Multimed. Tools Appl. 83, 36975–37005 (2024).

Zhou, Y., Wang, J. & Zhang, J. A multimodal image recognition system for student behavior analysis in smart classrooms in universities. Traitement Signal 41 (2024).

Mohammadi Foumani, N. et al. Deep learning for time series classification and extrinsic regression: A current survey. ACM Comput. Surv. 56, 1–45 (2024).

Kumar, P., Chauhan, S. & Awasthi, L. K. Human activity recognition (HAR) using deep learning: Review, methodologies, progress and future research directions. Arch. Comput. Methods Eng. 31, 179–219 (2024).

Wang, Z., Yan, W. & Oates, T. Time series classification from scratch with deep neural networks: A strong baseline. In 2017 International Joint Conference on Neural Networks (IJCNN). 1578–1585 (IEEE, 2017).

Yao, M. et al. Revisiting large-kernel cnn design via structural re-parameterization for sensor-based human activity recognition. IEEE Sens. J. (2024).

Imran, H. A., Riaz, Q., Hussain, M. & Tahir, H. & Arshad, R. A powerful combination for human activity recognition. IEEE Sens. J. Smart-Wear. Sens. CNN-Bigru Model (2023).

Ismail, W. N., Alsalamah, H. A., Hassan, M. M. & Mohamed, E. Auto-HAR: An adaptive human activity recognition framework using an automated cnn architecture design. Heliyon 9 (2023).

Khatun, M. A. et al. Deep cnn-lstm with self-attention model for human activity recognition using wearable sensor. IEEE J. Transl. Eng. Health Med. 10, 1–16 (2022).

Mutegeki, R. & Han, D. S. A cnn-lstm approach to human activity recognition. In 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC). 362–366 (IEEE, 2020).

Hamad, R. A., Hidalgo, A. S., Bouguelia, M.-R., Estevez, M. E. & Quero, J. M. Efficient activity recognition in smart homes using delayed fuzzy temporal windows on binary sensors. IEEE J. Biomed. Health Inform. 24, 387–395 (2019).

Wolf, T. Transformers: State-of-the-art natural language processing. arXiv preprint arXiv:1910.03771 (2020).

Yeh, C. et al. Attentionviz: A global view of transformer attention. In IEEE Transactions on Visualization and Computer Graphics (2023).

Pramanik, R., Sikdar, R. & Sarkar, R. Transformer-based deep reverse attention network for multi-sensory human activity recognition. Eng. Appl. Artif. Intell. 122, 106150 (2023).

Mehmood, F., Chen, E., Abbas, T., Akbar, M. A. & Khan, A. A. Automatically human action recognition (HAR) with view variation from skeleton means of adaptive transformer network. Soft Comput. 1–20 (2023).

Liu, Y. et al. Transtm: A device-free method based on time-streaming multiscale transformer for human activity recognition. Defence Technol. 32, 619–628 (2024).

Li, B. et al. Two-stream convolution augmented transformer for human activity recognition. Proc. AAAI Conf. Artif. Intell. 35, 286–293 (2021).

Du, W., Côté, D. & Liu, Y. Saits: Self-attention-based imputation for time series. Expert Syst. Appl. 219, 119619 (2023).

Bagnall, A. et al. The UEA multivariate time series classification archive, 2018. arXiv preprint arXiv:1811.00075 (2018).

Mekruksavanich, S. & Jitpattanakul, A. Hybrid convolution neural network with channel attention mechanism for sensor-based human activity recognition. Sci. Rep. 13, 12067 (2023).

Pereira, J. L. J., Francisco, M. B., Ma, B. J., Gomes, G. F. & Lorena, A. C. Golden Lichtenberg algorithm: A Fibonacci sequence approach applied to feature selection. Neural Comput. Appl. 36, 20493–20511 (2024).

Shaw, P., Uszkoreit, J. & Vaswani, A. Self-attention with relative position representations. arXiv preprint arXiv:1803.02155 (2018).

Xu, S. et al. Channel attention for sensor-based activity recognition: Embedding features into all frequencies in DCT domain. IEEE Trans. Knowl. Data Eng. 35, 12497–12512 (2023).

Funding

This work was supported by The National Natural Science Foundation of China(62177012). This research was supported by Guangxi Natural Science Foundation under Grant No. 2024GXNSFDA010048. Supported by the Project of Guangxi Wireless Broadband Communication and Signal Processing Key Laboratory(GXKL06240107). Innovation Project of Guangxi Graduate Education(YCBZ2024160).

Author information

Authors and Affiliations

Contributions

Conceptualization, Z.S. and X.Y.; methodology, X.Y.; software, X.Y.; validation, D.L., J.M. and H.Z.; formal analysis, H.Z.; investigation, H.Y.; resources, Z.S.; data curation, X.Y.; writing original draft preparation, X.Y.; writing review and editing, Z.W.; visualization, X.Y.; supervision, Z.S.; project administration, Z.S.; funding acquisition, Z.S. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval and consent to participate

This study adhered to the principles outlined in the Helsinki Declaration. All participants were thoroughly informed about the purpose and procedures of the research, and written informed consent was obtained from each participant before the experiment commenced.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Shou, Z., Yuan, X., Li, D. et al. A learning behavior classification model based on classroom meta-action sequences. Sci Rep 15, 22226 (2025). https://doi.org/10.1038/s41598-025-06901-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-06901-1