Abstract

Accurate prediction of crash injury severity and understanding the seriousness of multi-classification injuries is vital for informing authorities and the public. This Knowledge is crucial for enhancing road safety and reducing congestion, as different levels of injury necessitate distinct interventions, policies and responses to support sustainable transportation. Existing ML techniques often face class imbalance issues, resulting in suboptimal performance. Multi-class imbalance, more challenging than two-class imbalance, is frequently overlooked in traffic risk assessments. To accurately estimate the multi-class accident injuries and comprehend their severity we proposed a novel method called Bayesian Optimized Dynamic Ensemble Selection for Multi-Class Imbalance (DES-MI) with Ensemble Imbalance Learning (EIL), which involves; generating a pool of base classifiers with EIL methods and utilizing DES-MI to choose suitable classifiers. Utilizing homogeneous and heterogeneous pools of EIL classifiers, our findings demonstrate that DES-MI with EIL considerably enhances classification performance for datasets with multi-class imbalances. DES-MI with Heterogeneous EIL outperformed in performances while DES-MI with BRF achieved notable results in homogeneous ensembles. Important variables including road user gender, occupant age, month, airbag deployment, and road profile are also identified using SHAP for interpretability. Our DES-MI model with EIL classifiers and SHAP, by addressing multi-class imbalance, offers insightful information to stakeholders in road traffic safety by supporting the development of safer, efficient and sustainable urban road transport systems.

Similar content being viewed by others

Introduction

The Traffic accidents cause an alarming number of injuries and fatalities annually, making them a major global public safety concern. The severity of this problem is evident, with an estimated 20 to 50 million people suffering non-fatal injuries and 1.19 million lives lost annually as a result of traffic accidents. Road accidents, furthermore, are the biggest cause of death among 5-29-year-olds, underlining the urgent need for measures to restrict and reduce severity. This study aims to directly address this pressing issue by developing innovative predictive models that can effectively reduce the severity of traffic-related injuries. Victims of road crashes experience enormous pain and injury; besides they are costly. Sustainable development goals (SDGs) demand immediate action to reduce road fatalities worldwide by 50% by 20301. Our research is particularly relevant as it aligns with the global agenda of enhancing road safety and sustainability, addressing both societal and economic challenges. To meet with the agenda of halving the road fatalities, there is a dire need of sustainable road transport systems for the development of safer, efficient, and resilient urban environments. The critical challenge that persists is to appropriately manage crash risks and reduce road congestion. Thus, accurate assessment and forecasting of vehicle crash injury severity are pivotal to inform transportation authorities and stakeholders, thereby enhancing road traffic safety, and contributing to the sustainability of transportation systems.

Meanwhile, researchers are trying to come up with new methods that will help improve road safety and mitigate accident consequences by developing modern forecasting techniques capable of predicting crash injuries. However, despite advancements in smart applications and models that enhance safety and performance, completely preventing accidents or effectively managing traffic remains challenging2. In the domain of road safety research, it is critical to comprehend and forecast the severity of multi-level injuries sustained in traffic accidents to implement efficient mitigation techniques. This highlights the significant gap in the literature where many studies do not adequately address multi-class injury severity, which is essential for crafting targeted interventions. Comprehending the seriousness of multiclassification injuries is essential, as varying injury levels necessitate distinct interventions, policies, and responses. However, even with the recent increase in the use of machine learning techniques to forecast crash severity, a significant issue that is commonly overlooked is the severe imbalance that exists in accident datasets3,4.

In multiclass contexts, this imbalance is most apparent when there are relatively fewer incidents of serious injury or death compared to those of minor injury or property damage alone. Training classifiers is significantly hampered by multiclass imbalance because models typically predict occurrences of the majority class more accurately than those of the minority class5,6,7. Consequently, neglecting this imbalance undermines the predictive capacity of classifiers, especially for crashes with higher severity. Therefore, it is crucial and imperative to develop such models that can improve predictive performances in the case of multi-class situations and that can efficiently facilitate safety actions that are targeted at each class.

Addressing the class imbalance issue typically involves employing three main strategies: a data-level, an algorithm-level, and ensemble learning. Despite various strategies explored in the literature, many fail to tackle the complexities of multi-class data effectively. Ensemble level approaches rely on resampling data and leveraging the advantages of several algorithms simultaneously to address the issue of imbalance7.

In reviewing the literature, it is evident that focusing imbalance nature of accident data, researchers have employed various strategies, including resampling techniques like up-sampling minority classes8,9,10,11 under-sampling majority classes3,8,10,12 and data augmentation to generate synthetic data points4,10,11,13,14,15,16,17,18,19,20,21,22,23 to mitigate class imbalance. Ensemble methods18,24 have also been used to combine multiple classifiers and improve predictive performance, particularly for underrepresented classes. Table 1 presents recent studies from the past five years identified in the literature that have used imbalanced datasets.

Road accident severity modeling has received a lot of attention in previous research but despite notable achievements in crash prediction and safety enhancement, several limitations and gaps remain in performance evaluation and crash prediction. A review of the literature (provided) reveals several key issues:

First, most studies2,25,26,27,28,29,30,31,32,33,34,35 do not consider the multi-class problem or the handling of imbalanced data. Second, studies3,4,11,13,20,24,36 that do focus on balancing data typically address binary class problems, which is because binary classes are easier to handle and yield better prediction performance. This oversight limits the scope of safety interventions and fails to account for the full range of injury severity. The dependent variable with only two choices is extremely basic and has a major constraint, because no two injuries are the same from a safety perspective, and a two-class problem is unable to capture the entire range of injury severity. There is a vast variety of injuries in real-world scenarios, from minor cuts to life-threatening situations or fatalities and to aggregate all injury levels into a single two-class category would be oversimplified. Third, some studies8,9,12,14,15,16,17,22,23,30,37,38 that address more than binary class and balancing often only scratch the surface by using tertiary class problem, failing to delve deeply into the issues of multi class and address the root causes comprehensively. This lack of depth limits the potential for targeted safety interventions that could address specific types of injuries more effectively. Fourth, even when solutions based on sampling strategies are proposed by some researchers, they often rely on techniques such as random under-sampling2,8,10,12,20 random over-sampling8,9,10,11,38 or data augmentation methods4,10,11,14,15,16,17,18,19,20,21,22,23,37. These conventional approaches may not be sufficient to tackle the unique challenges posed by multi-class imbalances in road accident data, as these approaches have significant drawbacks; under-sampling can result in the loss of valuable information, while over-sampling can lead to overfitting and increased learning time5,6,7,39,40. Over-sampling minority classes can also cause classifiers to overfocus on specific instances, leading to generalization problems3. Data augmentation techniques like SMOTE and ADASYN may generate unrealistic instances, such as interpolated labels that do not fit the categorical nature of the data5,40,41.

In the context of existing literature on crash injury severity, this article provides significant contributions in addressing imbalanced data in multi-class crash severity scenarios. Specifically; The study investigates a set of ensemble imbalance learning (EIL) strategies that integrate algorithm-level and data-level cost-sensitive learning to enhance predictive performance, especially for the minority class. This integration is critical for developing robust predictive models that can accurately reflect the complexities of real-world traffic accidents. A novel technique called dynamic ensemble-selection multi-class imbalance with EIL as base, is utilized, which selects a set of appropriate classifiers for each query sample based on the classifier’s competency.

Actually, in an ensemble learning system, static and dynamic ensemble selection are considered as the two primary and robust techniques. The former typically chooses the most suitable single classifier for a query instance based on the average performance of base classifiers, while later dynamically assembles a classifier system comprising several competent classifiers and selects the classifier based on its competence for a given instance42,43. Despite the widespread application and notable performance of traditional static ensemble methods, these ensemble approaches may encounter challenges when confronted with complex multi-class imbalance data distributions. However, dynamic ensemble selection (DES) methods have gained considerable attention owing to their effectiveness and efficiency. DES dynamically identifies a classifier for each test data instance, allowing it to leverage the benefits of less biased and low-variance predictions provided by diverse machine learning approaches simultaneously44,45. Multi-class imbalance and data heterogeneity present significant challenges in road traffic safety analysis. Combining dynamic ensemble selection with ensemble imbalance learning offers a promising solution to address these issues.

To the best of our knowledge, research in the field has yet to tackle the challenge of multi-class imbalance by concurrently incorporating state-of-the-art ensemble imbalance learning (EIL) strategies with dynamic ensemble selection (DES) methods, largely unexplored in the existing literature. Hence, this paper introduces an impactful approach termed DES-MI with EIL, which stands for dynamic ensemble selection for multi-class imbalanced datasets integrated with ensemble imbalance learning strategies, to handle multi-class imbalanced datasets.

The following sums up the primary objectives:

-

Generating a pool of base classifiers (both homogeneous and heterogeneous) using ensemble imbalance learning (EIL) strategies including Self-Paced Ensemble (SPE), RUS Boost Classifier (RBC), Balanced Random Forest (BRF) and Over Boost Classifiers (OBC), with diversity which is achieved by hybridly integrating techniques at the algorithm and data levels, as well as by incorporating different numbers of estimators into the ensemble imbalance framework.

-

Introducing a dynamic scheme for multi-class imbalance (DES-MI) that dynamically selects the most competent candidate classifiers from ensembles of classifier systems based on their competence. Utilizing Bayesian optimization strategy with an expected improvement acquisition function to enhance the predicted competence and various parameters of the DES method. The optimal classifier, demonstrating higher competence and greater efficacy in classifying minority classes, is selected for evaluation and predictions.

-

Evaluating each factor’s local impact and global contribution to the occurrence of traffic crashes in the context of a multi-class scenario using the SHapely Additive exPalantion (SHAP) interpretation approach.

To achieve the specified research objectives, we employ a dataset covering the years 2009 to 2015 obtained from the National Automotive Sampling System (NASS) General Estimates Data System (GES) of the United States, focusing on incidents categorized as No-Injury, Possible Injury, Minor Injury and Serious Injury within the context of multi-class imbalance. Additionally, we conduct a comparative analysis of ensemble imbalance learning (EIL) classifiers with and without utilizing Dynamic Ensemble Selection for multi-class imbalance (DES-MI) algorithm. Model performance was evaluated using Precision, Recall, F1 score, and G-mean scores for each class, supported by confusion matrices and AUC scores. Our findings illustrate that integrating more reliable and intelligent DES-MI with EIL techniques results in superior predictive capabilities compared to traditional static ensemble learning methods. The remainder of the paper is organized as follows. Linked works are reviewed in Section II. Section III outlines our approach and the resources used. The experiments conducted are presented in Section IV, along with a discussion. Section V offers concluding observations and study limitations.

Materials and methods

Research framework

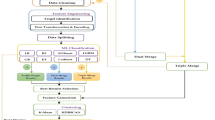

The study introduces a methodology tailored to address multi-class imbalance problems in road traffic safety research, which are notably more complex than binary imbalanced issues. The research introduces the DES-MI algorithm, a cutting-edge approach, with ensemble imbalance learning strategies designed for imbalanced datasets as base classifiers. This approach facilitates the effective use of resources by offering accurate and useful information for managing traffic safety and to promote sustainable transportation. The analysis was conducted utilizing open-source Python packages, including DESlib 0.3 (DESlib · PyPI), imbens.ensemble 0.2.1 (imbalanced-ensemble · PyPI) and SHAP 0.45 (shap · PyPI) among others. These tools were integral to the implementation of the proposed machine learning models, facilitating data preprocessing and subsequent analytical procedures. Specifically, the DESlib package was employed for dynamic ensemble selection, while imbens.ensemble provided various ensemble imbalance learning techniques to address data imbalance. Additionally, SHAP was utilized for interpretability, enabling a deeper understanding of feature contributions to model predictions. This comprehensive approach ensured a robust analysis aligned with our research objectives, clearly demonstrating how the data was systematically handled and analyzed throughout the study. The complete workflow of the proposed methodology is depicted in Fig. 1, detailing each step of the process from data preprocessing to the final interpolation of the optimal model.

The goal is to improve the prediction of crash risks in scenarios where classes are unevenly distributed. The effectiveness of each Ensemble Imbalance Learning algorithm and DES-MI with homogeneous and heterogeneous ensemble of base EIL classifiers is measured using a comprehensive set of metrics, including Precision, Recall, F1 Score and G-Mean Score, which are substantiated by confusion matrices and AUC scores.

Data description and preprocessing

The dataset utilized in this study is derived from the General Estimate System (GES) of the National Automotive Sampling System (NASS), encompassing police reports of car accidents from 2009 to 2015. Given its integration of trauma center and engineering information, the dataset includes diverse attributes such as patient vital signs, hospital records, laboratory tests, and treatment orders, vehicle manufacturing and model details, were excluded due to their lack of relevance to accident-causing factors. Crash severity is recorded using the KABCO injury classification represented in Fig. 2.

Accidents with unknown severity, totaling only 75 cases, were included in the possible injury category for further analysis while Fatal injuries, due to the low count with only 31 cases, were combined with Serious injuries to facilitate model fitting and testing and to reduce misclassification. Consequently, the selected 18 independent features classified into categories as personal, road-related, environmental, temporal and vehicular characteristics, are summarized in Table 2.

Intuitively, this study addresses the multi-class imbalance problem in crash severity by considering class scenarios categorized as No Injury (O), Possible Injury (C), Minor Injury (B), and Serious Injury (K + A). For model training and testing, the dataset was divided into two subsets: 70% for training and 30% for testing.

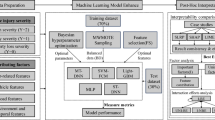

Ensemble imbalance learning (EIL)

Ensemble methods are pivotal in addressing the class imbalance problem in machine learning by leveraging the combined strengths of multiple classifiers to enhance overall accuracy. These methods work by training several classifiers and amalgamating their decisions to yield a single output, thereby boosting the performance of individual classifiers. The number of approaches shown in Fig. 3, which integrate ensemble methods with data-level and cost-sensitive techniques, have shown promising results. This highlights the critical importance of ensemble methods in developing robust classifiers capable of handling imbalanced datasets in various application domains46.

In addressing the challenge of class imbalance in machine learning, Liu et al.47 introduced Ensemble Class-imbalanced Learning. Their proposed framework leverages ensemble learning techniques to improve the performance of classifiers on imbalanced datasets. By combining resampling methods, algorithmic modifications like reweighting or cost-sensitive learning and hybrid approaches, EIL offers a comprehensive solution to bias reduction towards majority classes. Implemented in Python, it provides accessible tools for preprocessing, model training and evaluation, specifically tailored to enhance the detection and prediction capabilities for minority classes. Hence, EIL is a valuable technique for applications in areas where the cost of misclassifying minority instances can be significant.

In our investigation, we utilized a variety of Ensemble Imbalance Learning (EIL) classifiers, including Easy Ensemble, Self-Paced Ensemble, Balance Cascade, Balanced Random Forest, RUS Boost, Over Boost, and Ada U Boost Classifier, as base classifiers for DES-MI within both homogeneous and heterogeneous ensemble frameworks for multi-class classification tasks. Upon evaluating their performances, we identified that certain algorithms delivered significantly superior results. Consequently, we have chosen the Balanced Random Forest48 from the bagging-based ensemble, RUS Boost49 and Over Boost46,50 focusing on under-sampling and over-sampling from boosting-based ensembles, and the Self-Paced Ensemble51 from hybrid ensembles of these EIL algorithms. These algorithms will be considered as base classifiers within the dynamic ensemble selection (DES-MI) approach.

Balanced random forest (BRF)

Chen, Liaw, and Breiman48 explore in their groundbreaking work how to overcome the difficulties caused by imbalanced datasets by utilizing Random Forest, a potent ensemble learning technique. They introduce advanced methods such as Balanced Random Forest, which balances bootstrap samples through under sampling the majority class or oversampling the minority class, and cost-sensitive learning, which increases misclassification costs for the minority class to enhance sensitivity. These approaches enable Random Forest to effectively handle class imbalance, making it particularly valuable for applications in risk management, such as crash severity analysis.

RUS boost classifier (RBC)

In their influential paper, Seiffert et al.49 introduce RUS Boost, a hybrid ensemble learning method designed to address class imbalance. RUS Boost combines random under-sampling of the majority class with the AdaBoost algorithm, thereby enhancing the classifier’s sensitivity to the minority class. By integrating these techniques, RUS Boost effectively mitigates bias towards the majority class while maintaining robust performance. Because of this, it is especially useful for risk management applications like crash severity analysis, where it is crucial to correctly identify occurrences of the minority class.

Over boost classifier (OBC)

Over Boost classifier is an innovative ensemble method designed to address class imbalance. It integrates random over-sampling with the boosting algorithm, creating synthetic instances of the minority class to balance the dataset during training. The classifier’s performance in the minority class is improved by this hybrid strategy, greatly enhancing the effectiveness of classification as a whole. By focusing on both data-level (over sampling) and algorithm-level (boosting) techniques, Over Boost effectively mitigates the bias towards the majority class. This makes it particularly valuable in critical applications such as medical diagnosis, risk management and fraud detection, where accurate identification of minority class instances is essential46,50.

Self-Paced ensemble (SPE)

Liu et al.51 introduce Self-Paced Ensemble, an innovative ensemble learning framework designed to address the challenges of highly imbalanced and massive data classification. SPE progressively harmonizes data hardness through under sampling in a self-paced manner, starting with the easiest examples from the majority class and gradually incorporating more challenging examples based on their predicted difficulty. This curriculum learning approach allows the model to capture simple patterns first before tackling harder instances and minority class examples. SPE maintains computational efficiency by employing strictly balanced under sampling without distance calculations. It gradually focuses on more difficult examples in an ensemble context, providing a reliable and effective method for learning from complex imbalanced data.

Pool of classifiers

Classifier pool generation is a pivotal step for dynamic ensemble selection methods. This process involves strategies for selecting or updating ensemble members to enhance performance over time, making it essential for adapting to changing conditions and maintaining predictive accuracy. For the base classifier in our DES-MI method, we have considered two scenarios for generating a pool of classifiers;

-

Homogeneous ensemble:

This involved initializing a pool of classifiers for each selected EIL method – BRF, RBC, OBC and SPE – each configured with 100 estimators with 10 instances for each classifier, ensuring diversity within the ensemble. This homogeneous pool forms a critical component of our methodology, enabling dynamic selection and adaptation based on classifiers’ performance and the characteristics of the current data.

-

Heterogeneous ensemble: This approach involves pooling all the aforementioned EIL methods but with varying numbers of estimators. This strategy aims to combine the strengths of different algorithms, further enhancing the ensemble’s overall performance and adaptability to diverse data scenarios.

Dynamic ensemble selection

Dynamic ensemble selection is a promising strategy for improving the performance of classification algorithms on multi-class imbalanced datasets. The key idea is to dynamically select the most competent ensemble of classifiers from a pool for each test instance, rather than using a single ensemble for all instances. This approach aims to leverage the strengths of different ensembles for different regions of the input space, thereby enhancing the overall classification accuracy. As shown in Fig. 4, the dynamic ensemble selection process typically involves the following steps42,43.

(i). Generate a pool of diverse base classifiers or ensembles using different learning algorithms or data sampling techniques. (ii). Define a measure to estimate the competence or local expertise of each base classifier/ensemble for a given test instance. (iii). Select the k-most competent base classifier(s) or ensemble(s) from the pool for the current test instance based on the competence estimates. ( iv). Combine the predictions of the selected base classifier(s) or ensemble(s) to obtain the final prediction for the test instance.

Dynamic Ensemble Selection for Multi-class Imbalance (DES-MI) offers several advantages for multi-class imbalanced datasets: (a). It can effectively handle the class imbalance problem by selecting ensembles that are specialized in classifying minority class instances. b). It can improve the overall classification performance by exploiting the strengths of different ensembles for different regions of the input space. (c). It can adapt to the local characteristics of the data, providing more accurate predictions for complex and non-linear decision boundaries specifically in case of imbalance datasets4.

-

Hyperparameter tuning: During the Dynamic Ensemble Selection phase, the goal is to choose proficient and diverse classifiers for each test sample. This is achieved using Bayesian optimization with the open-source Hyperopt library (hyperopt.PyPI), focusing on key parameters: the region of competence, pct_accuracy, alpha and instance hardness. These parameters are crucial for tailoring the DES-MI method to the dataset’s characteristics, optimizing classifier selection, and ensuring robust performance. Using the Expected Improvement acquisition function to improve F1 Score if the classifier is considered competent, determined by these optimal parameters, is then incorporated into the ensemble and trained on the data, finalizing the process to achieve high predictive accuracy for multi-class imbalanced datasets.

Models performance evaluation

Given the inherent class imbalance within our multi-class dataset, it is crucial to employ a range of performance metrics to effectively evaluate predictions. Model performance is assessed using Precision, Recall, F1 Score and G-mean Score for each class, supported by confusion matrices and AUC scores. These metrics are particularly important in the context of imbalanced data as they offer a nuanced assessment of model performance across all classes and are emphasized due to their ability to capture the performance of models in identifying both minority and majority classes accurately. Both static ‘Ensemble Imbalance Learning’ and dynamic ensemble ‘DES-MI with EIL’ methods are systematically evaluated and compared with the help of aforementioned metrices to ensure comprehensive performance evaluation. Below are concise descriptions of each evaluation metric (with the expressions expressed in Eqs. 1–4) used to assess injury severity prediction in the context of multi-class imbalanced data:Precision

-

Precision: Precision measures the proportion of true positive predictions among all positive predictions, as shown in Eq. (1). It is crucial for understanding the accuracy of the model in predicting positive instances and is particularly important in the context of imbalanced data where false positives can be costly.

.

-

Recall: Also known as sensitivity or true positive rate, Recall is crucial in the context of imbalanced datasets as it measures the model’s capability to detect positive instances (minority class) without misclassifying them as negative instances (majority class), as demonstrated in Eq. (2).

.

-

F1 score: The F1 Score as shown in Eq. (3), is the harmonic mean of Precision and Recall, providing a single metric that balances both. It is particularly useful for imbalanced datasets as it combines the strengths of both Precision and Recall, offering a comprehensive measure of a model’s performance.

.

-

G-Mean score: G-Mean measures the geometric mean of sensitivity (Recall) and specificity, providing a balanced assessment of the model’s performance across all classes, as depicted in Eq. (4). This metric is important for ensuring that the model performs well for both majority and minority classes.

.

These performance indicators guarantee a comprehensive and precise evaluation of the model’s efficacy in managing datasets with imbalanced classes and hence allows for well-informed decision-making.

Optimal model interpretation by SHAP

For interpretation of models, SHapley Additive exPlanations (SHAP), developed by Lundberg et al. (2017)52 is most frequently adopted as it explains the predictions of instances by computing the importance of each feature, interaction with others, feature dependance and also its percent contribution to the occurrence of event. SHAP enhances the interpretability of machine learning models used in crash injury severity prediction by quantifying the significance of each feature.

The SHAP mechanism uses an interpretable approximation that is a linear function of feature values, via additive factors attribution strategy, as shown in Eq. (5).

.

where N is the number of input attributes, øo is the base value, and øi is the Shapley value for the i-th feature. The output of the original model is obtained by adding the values of all feature characteristics.

The theorem for the SHAP analysis approach, has a game-theory foundation for interpreting machine learning models. The main goal is to interpret the marginal contribution of each feature to the model output from both local and global perspectives. Locally, SHAP provides precise explanations of how each feature impacts specific predictions, while globally, it highlights overarching patterns in feature importance across the dataset. The Shapley value for each feature’s contribution is represented by Eq. (6).

.

where i is the Shapley value for feature i, f represents the black-box model, δ denotes a subset of features, and N is the total number of features. This equation ensures that each feature’s contribution is fairly and accurately assessed, providing valuable insights into the model’s decision-making process.

Results and discussion

In this study, we focused on multi-class imbalance problem in crash severity forecasting using data from the NASS-GES. Given the significant imbalance in the dataset, where Serious and Fatal injuries account for only 239 and 31 cases respectively, compared to substantially higher counts in the other three classes, fitting algorithms directly to this data with Serious and Fatal injuries as separate classes could lead to underrepresentation of these minority classes. To address this issue, we combined the Fatal injury class with the Serious injury class during the analysis and fitting of algorithms. In our study, crash severity was aggregated into four categories: No Injury, Possible Injury, Minor Injury, and Serious Injury.

Additionally, the dataset was split into training/validation (70%) and test sets (30%) to ensure consistent analysis and prediction accuracy. This partitioning enabled us to thoroughly test and validate the performance of our models, ensuring they are reliable and accurate for crash severity forecasting. We evaluated the performance of our models’ using precision, recall, F1-score and G-mean score, along with the confusion matrix and AUC-ROC curve. Notably, we utilized Ensemble Imbalance Learning (EIL) as the base classifiers for the Dynamic Ensemble Selection for Multi-class Imbalance (DES-MI) model in our multi-class crash severity analysis. We separately assessed the performance of the base classifiers and the DES-MI model with EIL classifiers, employing both homogeneous and heterogeneous pools of base algorithms.

The primary objective of this study is to leverage and evaluate the performance of Ensemble Imbalance Learning (EIL) classifiers in conjunction with the Dynamic Ensemble Selection for Multi-class Imbalance (DES-MI) method. To assess their robustness and effectiveness in addressing multi-class imbalance, we also incorporated widely recognized traditional data balancing techniques, including SMOTE, SMOTE Tomek, SMOTEENN, and ADASYN, employing a Bagging Classifier as the foundational model for DES-MI. The Bagging technique facilitates the construction of a diverse ensemble of classifiers by randomly selecting different subsets of the training data for each classifier’s training53. This methodological approach enables a comparative analysis of EIL classifiers against these established data handling techniques, thereby demonstrating how EIL can surpass alternative strategies in effectively managing multi-class imbalance.

Performance evaluation of EIL strategies

The evaluation of advanced Ensemble Imbalance Learning models, including BRF, RBC, OBC and SPE, was initially performed as standalone models. Subsequently, these algorithms were employed as base estimators for the DES-MI model. Although the standalone models demonstrated adequate performance in addressing class imbalance when applied to the imbalanced dataset, the prediction performance improved when used as base estimators for the DES-MI model.

Given the high degree of data imbalance, classifier models may struggle to accurately predict the minority classes. Therefore, confusion matrices for each classifier are provided, and performance metrics focusing on each class are considered measures of their efficiency. Figure 5(a–d) presents the confusion matrices and AUC-ROC curves for the standalone EIL models.

Figure 5 illustrate the Confusion Matrices and AUC-ROC Curves for EIL algorithms, representing (a) for BRF model, (b) the RBC model, (c) OBC Model and (d) the SPE model, highlighting the performance for each class.

The BRF and OBC models attained the AUC-ROC with the highest values for each class as compared to other two classifiers. The confusion matrices for the standalone EIL models also reveal that OBC was more effective in correctly classifying minority classes compared to other classifiers. The ROC curves further illustrate that the area under the curve for the minority class is significantly greater than that for the majority class. Notably, although the SPE model lagged behind in terms of overall results, it performed well in predicting the Minor Injury class compared to other classifiers. The comparative evaluation of standalone ‘EIL’ methods versus DES-MI (EIL) will be presented in Table .

Performance evaluation of DES-MI(EIL)

Subsequently, the EIL algorithms – BRF, RBC, OBC, and SPE – were employed in conjunction with the Dynamic Ensemble Selection for Multi-class Imbalance (DES-MI) algorithm within both homogeneous and heterogeneous pools of base classifiers. Prior to evaluating the performance of DES-MI(EIL) with each classifier pool, to select the proficient and diverse classifiers for each test sample, Bayesian optimization with the Expected Improvement acquisition function was used to enhance F1 Score. The key parameters with their ranges, and optimal values are detailed in Table 3. These parameters are critical for tailoring the DES-MI method to the dataset’s characteristics, optimizing classifier selection, and ensuring robust performance. If a classifier is deemed competent, based on these optimal parameters, it is then incorporated into the ensemble for further processing.

Eventually, performance of DES-MI algorithm with homogeneous and heterogeneous pools of EIL classifiers was evaluated. The confusion matrices and AUC-ROC curves for DES-MI with each EIL classifier as a homogeneous ensemble are shown in Fig. 6(a-d), while those for the heterogeneous ensemble of the aforementioned classifiers is presented in Fig. 7.

Figure 6 illustrate the Confusion Matrices and AUC-ROC Curves for (a) DES-MI(BRF), (b) DES-MI(RBC), (c) DES-MI(OBC), (d) DES-MI(SPE), in homogeneous ensemble of EIL classifiers. It indicates a clear increase in the prediction accuracy for each class by 3 to 4%. Notably, DES-MI (BRF) achieved the highest ROC curve value for each class in this ensemble.

Conversely, DES-MI with a heterogeneous ensemble of EIL classifiers outperformed the homogeneous ensembles in predictive performance as predicted in Fig. 7. The confusion matrices for the DES-MI(EIL) models also reveal that all models were more effective in correctly classifying minority classes compared to standalone EIL classifiers. The ROC curves further illustrate that the area under the curve for the minority class is significantly greater than that for the majority class.

Models’ performance comparison

This research proposes the application of Dynamic Ensemble Selection for a multi-class imbalance strategy, utilizing various Ensemble Imbalance Learning (EIL) algorithms as base estimators to address the challenge of multi-class imbalance and predict the severity of vehicular crashes. In this study, we conducted a class-specific evaluation and comparison of the performance of the employed DES-MI(EIL) models.

It is noteworthy that previous studies18,19,33 have utilized conventional accuracy for comparing results in multi-class scenarios, which can be misleading since the model may neglect some classes. Consequently, we employed the precision, recall, F1-score, and G-mean score, with particular emphasis on the minority (Severe) classes in our study to comprehensively compare model performances, as these matrices provides a reliable measure of overall model performance that is not overly influenced by class distribution. The prediction results specific to every class are presented in Table 4.

It is evident from the results in Table 4 that, among the standalone EIL classifiers, BRF outperformed all other EIL classifiers in overall performance. In class-specific performance of stand-alone ensemble imbalance learning (EIL) classifiers although the BRF model demonstrates a superior performance, achieving the highest precision for the ‘No Injury’ class at 0.86 and an overall average precision of 0.68, it excels in predicting non-injury cases. Additionally, BRF achieves the highest recall for the ‘Serious Injury’ class at 0.52, which is crucial for minimizing false negatives in severe cases. Moreover, for the ‘Possible Injury’ class, the RBC model excels with the highest recall of 0.41, indicating better identification of these cases. The SPE leads in predicting the ‘Minor Injury’ class with a recall of 0.36, an F1 score of 0.26, and a G-Mean of 0.56, showcasing its strength in detecting less severe injuries. While the OBC model shows slightly better recall, F1, and G-Mean scores on average, indicating its strengths for forecasting ‘Serious Injury’ which make it the most compelling choice for applications where the cost of under-prediction is high, such as in crash injury severity prediction.

In the context of homogeneous and heterogeneous ensemble pools of EIL classifiers within the DES-MI framework, the analysis indicates that the DES-MI with Heterogeneous Ensemble of EIL classifiers outperform all other classifiers across all severity levels. It achieves the highest average precision (0.69), recall (0.58), F1 score (0.62) and G-mean (0.64). In comparison, the Balanced Random Forest classifier within the DES-MI framework utilizing a homogeneous ensemble of EIL classifiers, demonstrates superior performance compared to other homogeneous ensembles, with average precision, recall, F1 score and G-mean scores of 0.68, 0.54, 0.59 and 0.63 respectively, followed by DES-MI(RCB), DES-MI(SPE), and DES-MI(OBC), respectively. Notably, DES-MI(BRF) in case of homogeneous ensembles achieves the highest recall, F1, and G-mean scores for both minor and serious injury classes, highlighting its efficacy in accurately classifying severe injury cases and its robustness in handling imbalanced datasets. These findings underscore the nuanced capabilities of the DES-MI(BRF) classifier, establishing it as a pivotal tool in the predictive analysis of road traffic injury severity.

Although, the main purpose of this study is to implement and evaluate the performance of Ensemble Imbalance Learning (EIL) classifiers in conjunction with the Dynamic Ensemble Selection for Multi-class Imbalance (DES-MI) method. While our focus is on implementing EIL techniques to address multi-class imbalance in crash injury prediction, we have conducted a comparative analysis with widely used data balancing methods, including SMOTE, SMOTE Tomek, ADASYN, and SMOTEENN, utilizing a Bagging Classifier as the base for DES-MI. To assess and compare the performance of the DES-MI in conjunction with these data balancing techniques, we have included only the confusion matrix in our analysis. Figure 8 presents the confusion matrices for DES-MI method alongside these balancing techniques.

The confusion matrices in Fig. 8 reveals the predictive performance of DES-MI utilizing bagging classifier as base across multiple data treatment methods aimed at addressing class imbalance and to compare the results of proposed DES-MI (EIL) methods with these approaches. The results illustrate that across all balancing techniques, SMOTEENN demonstrates relatively better performance for minority classes compared to the other methods, however, there is a recurring misclassification of minority classes as majority classes. This indicates that these techniques, though popular for handling imbalanced data, may not sufficiently address the needs of multi-class problems where multiple minority classes are present. In comparison to the results presented in Figs. 5 and 6, our evaluation indicates that EIL methods, both independently and when integrated with DES-MI, surpass traditional data balancing techniques, particularly in their ability to predict minority classes and improve overall predictive accuracy across various injury severity levels.

The findings demonstrate that DES-MI combined with EIL significantly enhances classification performance for datasets characterized by multi-class imbalances. By delivering more accurate predictions, this approach supports the sustainability of transportation systems by informing more effective traffic safety interventions and alleviating the societal burden of road accidents. In culmination, DES-MI(EIL) classifier with heterogeneous ensemble shows superior performance, particularly in the critical ‘Serious Injury’ class. Its ability to maintain high performance metrics across all classes makes it the most suitable for addressing the multi-class imbalance problem, ensuring accurate identification of all severity levels, with a notable strength in recognizing serious injuries. Consequently, this model is highly valuable for applications that require precise injury severity prediction, making it ideal for scenarios where accurately identifying severe injuries is crucial.

Validation of model

The performance of any predictive model is largely determined by its ability to perform effectively on real-world data. In this study, we validated the proposed model using multiple datasets to ensure robustness and its applicability in different data scenarios. We performed validation through internal validation, external validation on intersection crash records, and external validation with driving style data.

For the internal validation, we utilized the same dataset while considering three classes: No Injury (0), Minor Injury (1), and Major Injury (2). The primary purpose of this validation was to assess how well the model performs with a more balanced and diverse class distribution.

We evaluated the model using the same standard performance metrics previously employed to assess the model’s performance. The results from the validation are summarized in Table 5.

For the external validation we used two different external datasets: Intersection Crash Records from the NASS-GES comprised of 3988 counts and 14 independent variables, categorized into three classes: No Injury (0), Minor Injury (1), and Major Injury (2), (External validation-1) and an open-source Driving Style Data comprising of 16,255 with 19 independent variables and three categories: Aggressive Driving (0), Normal Driving (1), and Vague Driving (2) (External Validation-2). These datasets were used to evaluate the model’s performance on unseen, real-world data.

The results from Table 5 demonstrates that DES-MI(EIL) outperforms other models, achieving the highest predictive performances for both the Internal validation and External validation-2, while DES-MI(OBC) has shown the competitive performance in external validation-1 with intersection cars records.

As the classifier ‘DES-MI(EIL)’ outperformed all other machine learning algorithms ‘stand-alone and combination’ in case of multi-class imbalance problems, it could be utilized along with SHAP analysis to present the feature importance and contribution of features in accidents for safety improvements.

Optimal model interpretation

Global feature interpretation

To thoroughly analyze the effect of traffic factors on injury severity likelihood, the SHAP technique is employed. The purpose of SHAP interpretation is to elucidate how a machine-learning model behaves across the entire spectrum of its input factors’ values. Global interpretation involves analyzing the overall impact of each feature on the model’s predictions across the entire dataset. This is achieved by averaging the SHAP values for each feature, offering insights into the relative importance of different risk factors. The global interpretation helps identify which features have the most significant influence on the model’s outcomes and provides a holistic view of the model’s behavior.

This study employs the optimal DES-MI(EIL) heterogeneous ensemble model to evaluate the significance of each risk factor to the model’s estimation. Figure 9 illustrates the influence of these risk factors, determined by averaging the absolute Shapley values across the training dataset.

The analysis reveals that road user gender and age have the most substantial effects on accident severity, followed by the month of the year, vehicle age, and road profile. Conversely, factors such as drug involvement, accident type, road alignment, road work zones, and alcohol involvement exhibit minimal impact on incident severity. These findings highlight the importance of each risk factor while emphasizing the necessity of understanding how each contributing factor influences crash severity. The use of local feature importance further underscores the critical role of these risk factors in shaping the outcomes and interpretations of the model. These insights gained from our DES-MI(EIL) model with SHAP offer valuable information for stakeholders in traffic safety, supporting informed decision-making and effective policy development. This aligns with the principles of sustainable development, as it promotes collaborative governance arrangements and enhances the overall safety and reliability of transportation systems and infrastructures.

Local feature interpretation

Local interpretation focuses on understanding individual predictions. By examining the SHAP values for a specific instance, it is possible to determine the contribution of each feature to that particular prediction. To interpret which features are most influential for a particular prediction, such as an individual injury case, and to understand how they interact to lead to the model’s final decision, we have utilized the SHAP force plot. It is an effective method for enhancing the transparency and comprehensibility of machine learning models, at a local level.

In the context of the force plot, the ‘Base Value’ serves as the reference point from which feature contributions are measured, typically representing the average model output across the dataset. In our study, the base values are; 0.3809 for ‘No Injury’, 0.2266 for ‘Possible Injury’, 0.1953 for ‘Minor Injury and 0.1971 for serious injury. These values indicate that, the model predicts the probability of no injury to be approximately 38.09%, a possible injury ‘22.66%’, minor injury ‘19.53%’ and 19.71% for a serious injury in a random case from the training data.

Figure 10 represents the model’s findings for all four levels of injury severity that were chosen by calculating the probabilities for each injury severity level separately and selecting the cases with their maximum likelihood.

Figure 10 (a) shows a model prediction with a 67% probability of being a serious injury. The color intensity and length of the boxes represent the impact magnitude of each feature on the predicted injury severity. The most influential features for predicting the likelihood of a “Serious Injury” in the selected instance include “Gender (0: female)”, “Weather_Condition (2: Snow, Hail)”, “Month_of_Year (0: January)”, “Road_Surface_Condition (1: Wet)”, and “Occupant_Age (1: 20 to 29 years)”. The values next to these features indicate their respective contributions to the prediction.

Figure 10 (b & c) represent scenarios of Minor and Possible injury cases, with likelihoods of 65% and 54%, respectively. In both cases, “Gender (0: female)” is the most significant predictor. For Minor Injury case, other contributing factors include “Road_Junction (1: Intersection)”, “Month_of_Year (7: June)”, “Occupant_Age (4: 50–59 years)”, and “Road_Profile (0: Level)”. For Possible Injury cases, significant predictors include “Road_Traffic_Way (0: 2-way not divided)”, “Occupant_Age (1: 20–29 years)”, and “Month_of_Year (7: June)”. Similarly, Fig. 10(d) illustrates the factors contributing to a No Injury outcome, highlighting “Occupant_Age (3: 40 to 49 years)”, “Month_of_Year (5: April)”, and a level road within a non-intersection area as the dominant predictors.

The force plot provided represents the maximum probability of a specific injury outcome for each independently chosen instance. To gain a deeper understanding, a random instance is selected to examine how the model predicts the behavior of each injury aspect in the selected instance and to identify the factors contributing to each outcome, as shown in Fig. 11. The depicted instance classifies the different injury severity levels with a likelihood of 44% for Serious Injury, 24% for Minor Injury, 17% for Possible Injury, and a 15% probability of No Injury.

Figure 11 (a) demonstrates that factors such as Alcohol Involvement (1: Involved), Time of Day (0: Night), Light Condition (1: Dark with road lights), Bag Deployment (1: Not deployed), Month of Year (4: March), and Type of Day (0: Weekends) significantly contribute to the prediction of a Serious Injury. Conversely, Road Profile impacts against this suspected outcome, which is reelected in part (d) of Fig. 11, slightly countering the likelihood of non-injury or a property damage case only. Moreover, Fig. 11(b & c) illustrate the influential factors for Minor and Possible injury scenarios. For Minor Injury, features like bag-deployment, month of the year, occupant age in red indicates the contribution positively towards the prediction of the suspected injury, while features in blue contribute against it. Similarly, for Possible Injury, road junction and road profile are the features have a positive impact on the prediction of this injury.

To add transparency to the model’s prediction and complimenting the SHAP’s interpretations, the Fig. 12 provides a more granular understanding by visualizing the exact influence of individual features with LIME, for the same instance demonstrated in Fig. 11.

Figure 12 demonstrates the local interpretations for an instance, with prediction probabilities aligns closely with those generated by SHAP. In the analysis, the model predicts a 44% probability for Serious Injury, with Alcohol Involvement, Bag Deployment, Light Condition, and Month of Year shown as significant contributors, indicated by the red bars. These factors strongly favor the prediction of Serious Injury, similar to the SHAP analysis, where these features also had a notable positive impact, while Road Profile (as in part d of fig.) acts against it, consistent with SHAP’s outcome of Road Profile having an influence against Serious Injury.

For Minor Injury (24% probability), indicated by green bars in Fig. 12 (b), features like Bag Deployment, Month of the year, Road Traffic Way, and Occupant age positively contribute, while the Possible Injury outcome (17% probability), shown in Fig. 12 (c) is primarily influenced by Road Junctions, Road profile, Occupant Age, and Accident Type. These outcomes also align with SHAP, where Bag Deployment, Month of the Year and Occupant Age also influenced the prediction. Finally, for No Injury (15% probability), the blue bars represented in Fig. 12 (d), presents features such as Occupant Age, Accident Type, Vehicle age and Road Work Zone other than the Road Profile (consistent with SHAP) as contributing factors.

In summary, this LIME analysis provides a complementary view to SHAP, breaking down the instance-specific influences of each feature on different injury outcomes, with color-coded bars offering a clear visual of which features positively or negatively impact each predicted injury level. Together, SHAP and LIME provide a holistic view of feature importance and contribution, increasing interpretability consistency across different crash instances. However, it is important to note that while SHAP and LIME provide valuable insights into feature contributions at an instance level, local interpretability can vary between predictions. The influence of a particular feature may differ depending on the specific instance analyzed, making it crucial to interpret local explanations within the context of individual cases rather than generalizing findings across the entire dataset.

Implications

Road Traffic safety is a critical concern globally for policy makers, governments and road safety authorities. This study’s findings offer valuable insights for traffic managers and policy makers to enhance road safety by contributing significantly at both the predictive and practical application levels. By employing the Dynamic Ensemble Selection with Ensemble Imbalance Learning (DES-MI(EIL)) model, optimized for multi-class imbalance, the research provides accurate predictions of injury severity, particularly excelling in identifying serious injuries. Through interpretative tools like SHAP and LIME, the study reveals key factors affecting injury severity, such as road user demographics, road profile, vehicle age, and seasonal trends. Traffic managers can utilize these insights to implement targeted pre-emptive actions, including awareness campaigns for high-risk demographics, seasonal safety initiatives, and strategic infrastructure improvements in high-risk areas. Furthermore, the model supports reactive measures by informing resource allocation in high-risk zones and during critical times, enhancing emergency response effectiveness. Ultimately, this predictive approach aids policymakers in developing evidence-based interventions, ensuring safer transportation systems and reducing the societal impacts of road traffic injuries.

In addition to the predictive and practical contributions highlighted, the DES-MI(EIL) model has strong potential for real-world deployment in traffic management systems. It can be integrated into real-time applications such as automated traffic monitoring, and autonomous vehicles to predict injury severity in real-time scenarios. This model could be helpful to guide emergency response teams by providing timely, data-driven insights into crash severity. Additionally, it can help optimize resource allocation in emergency departments and improve decision-making during critical traffic incidents.

Conclusions and recommendations

This study addresses the critical challenge of multi-class imbalance in crash severity prediction, where different levels of injury severity necessitate distinct interventions, policies, and responses, using the National Automotive Sampling System (NASS) General Estimates System (GES) dataset. The primary focus was on improving the predictive accuracy for serious injuries, which are often underrepresented in such datasets. We employed advanced Ensemble Imbalance Learning (EIL) techniques, such as Balanced Random Forest, RUS Boost, Over Boost and Self-Paced Ensemble classifiers both as standalone models and as base estimators for the Dynamic Ensemble Selection for Multi-class Imbalance (DES-MI) algorithm. Our analysis revealed that while standalone EIL models provided adequate performance, the DES-MI model, particularly with a heterogeneous ensemble of EIL classifiers, exhibited superior predictive capabilities.

Moreover, unlike previous studies that identified influential factors without considering different injury severity levels separately, our research addressed this limitation by utilizing local feature interpretation techniques. By applying SHAP values for local interpretation and then complimenting with LIME, we examined the specific contributions of features to individual predictions for each severity level. This approach provided detailed insights into the factors influencing each class of injury severity, highlighting the varying impacts of different features across severity levels. The global and local interpretations using SHAP values provided valuable insights into the influence of various traffic factors on injury severity.

Lastly, the study acknowledges several limitations that necessitate future attention. Notably, excluding unknown counts from the dataset resulted in lost information and reduced sample size, limiting the dataset’s full utilization. Moreover, combining fatal injuries with serious injuries requires separate analysis to accurately discern their distinct impacts. Future research should prioritize achieving a balanced dataset with increased representation of serious and fatal injury cases to enhance the reliability and predictive accuracy of models used in multi-class injury severity assessment. Additionally, we recognize the potential benefits of incorporating deep generative models in our future work to effectively address data imbalance. These models may enhance predictive accuracy when integrated with Dynamic Ensemble Selection (DES) techniques. By leveraging these advanced methodologies, subsequent studies could further strengthen the robustness of injury severity predictions and yield more reliable insights for traffic safety interventions.

Data availability

The datasets analyzed during the current study are available from the corresponding author on reasonable request.

References

Global Status Report on Road Safety 2023, World Health Org. (2023).

Sattar, K. A., Ishak, I. & Affendey, L. S. Mohd rum, S. N. B. Road crash injury severity prediction using a graph neural network framework. IEEE Access. 12, 37540–37556 (2024).

Fiorentini, N. & Losa, M. Handling imbalanced data in road crash severity prediction by machine learning algorithms. Infrastructures (Basel) 5, (2020).

Tahfim, S. A. S. & Chen, Y. Comparison of Cluster-Based sampling approaches for imbalanced data of crashes involving large trucks. Information (Switzerland) 15, (2024).

García, S., Zhang, Z. L., Altalhi, A., Alshomrani, S. & Herrera, F. Dynamic ensemble selection for multi-class imbalanced datasets. Inf. Sci.. 446, 445 (2018).

Hakim, M., Hamid, A., Yusoff, M., Mohamed, A. & Alam, S. Survey on highly imbalanced Multi-Class data. IJACSA) Int. J. Adv. Comput. Sci. Appl. vol. 13 www.ijacsa.thesai.org

Leevy, J. L., Khoshgoftaar, T. M. & Bauder, R. A. & Seliya, N. A survey on addressing high-class imbalance in big data. J. Big Data 5, (2018).

Raja, K., Kaliyaperumal, K., Velmurugan, L. & Thanappan, S. Forecasting road traffic accident using deep artificial neural network approach in case of oromia special zone. Soft Comput. 27, 16179–16199 (2023).

Aldhari, I. et al. Severity prediction of highway crashes in Saudi Arabia using machine learning techniques. Applied Sci. 13, (2023).

Li, Y. et al. Crash injury severity prediction considering data imbalance: A Wasserstein generative adversarial network with gradient penalty approach. Accid Anal. Prev. 192, (2023).

Kuo, P. F., Hsu, W. T., Lord, D. & Putra, I. G. B. Classification of autonomous vehicle crash severity: solving the problems of imbalanced datasets and small sample size. Accid Anal. Prev. 205, (2024).

Kim, S., Lym, Y. & Kim, K. J. Developing crash severity model handling class imbalance and implementing ordered nature: focusing on elderly drivers. Int. J. Environ. Res. Public. Health. 18, 1–22 (2021).

Chen, H., Chen, H., Zhou, R., Liu, Z. & Sun, X. Exploring the Mechanism of Crashes with Autonomous Vehicles Using Machine Learning. Math. Probl. Eng. (2021). (2021).

Sangare, M., Gupta, S., Bouzefrane, S., Banerjee, S. & Muhlethaler, P. Exploring the forecasting approach for road accidents: analytical measures with hybrid machine learning. Expert Syst. Appl. 167, (2021).

Pérez-Sala, L., Curado, M., Tortosa, L. & Vicent, J. F. Deep learning model of convolutional neural networks powered by a genetic algorithm for prevention of traffic accidents severity. Chaos Solitons Fractals 169, (2023).

Mohammadpour, S. I., Khedmati, M. & Zada, M. J. H. Classification of truck-involved crash severity: dealing with missing, imbalanced, and high dimensional safety data. PLoS One 18, (2023).

Vanishkorn, B. & Supanich, W. Crash Severity Classification Prediction and Factors Affecting Analysis of Highway Accidents. in 9th International Conference on Advanced Informatics: Concepts, Theory and Applications, ICAICTA 2022 (Institute of Electrical and Electronics Engineers Inc., 2022). (Institute of Electrical and Electronics Engineers Inc.,). (2022). https://doi.org/10.1109/ICAICTA56449.2022.9932998

Roudnitski, A. Evaluating road crash severity prediction with balanced ensemble models. Findings https://doi.org/10.32866/001c.116820 (2024).

Ahmed, S., Hossain, M. A., Ray, S. K., Bhuiyan, M. M. I. & Sabuj, S. R. A study on road accident prediction and contributing factors using explainable machine learning models: analysis and performance. Transp Res. Interdiscip Perspect 19, (2023).

Chen, J. et al. A novel generative adversarial network for improving crash severity modeling with imbalanced data. Transp Res. Part. C Emerg. Technol 164, (2024).

Wang, X., Su, Y., Zheng, Z. & Xu, L. Prediction and interpretive of motor vehicle traffic crashes severity based on random forest optimized by meta-heuristic algorithm. Heliyon 10, (2024).

Cheng, C., Chen, S., Ma, Y., Qiao, F. & Xie, Z. Crash severity prediction and interpretation for road determinants based on a hybrid method. J. Transp. Saf. Secur. https://doi.org/10.1080/19439962.2024.2364661 (2024).

Ogungbire, A. & Pulugurtha, S. S. Effectiveness of data imbalance treatment in Weather-Related crash severity analysis. Transp. Res. Record: J. Transp. Res. Board. https://doi.org/10.1177/03611981241239962 (2024).

Asadi, R. et al. Self-Paced Ensemble-SHAP approach for the classification and interpretation of crash severity in work zone areas. Sustainability 15, (2023).

Yuan, C. et al. Application of explainable machine learning for real-time safety analysis toward a connected vehicle environment. Accid Anal. Prev. 171, (2022).

Niyogisubizo, J. et al. A Novel Stacking Framework Based On Hybrid of Gradient Boosting-Adaptive Boosting-Multilayer Perceptron for Crash Injury Severity Prediction and Analysis. in. IEEE 4th International Conference on Electronics and Communication Engineering, ICECE 2021 352–356 (Institute of Electrical and Electronics Engineers Inc., 2021). (2021). https://doi.org/10.1109/ICECE54449.2021.9674567

Obasi, I. C. & Benson, C. Evaluating the effectiveness of machine learning techniques in forecasting the severity of traffic accidents. Heliyon 9, (2023).

Islam, M. K. et al. Predicting road crash severity using classifier models and crash hotspots. Applied Sci. 12, (2022).

Niyogisubizo, J. et al. Predicting crash injury severity in smart cities: a novel computational approach with wide and deep learning model. Int. J. Intell. Transp. Syst. Res. 21, 240–258 (2023).

Liu, L., Zhang, X., Liu, Y., Zhu, W. & Zhao, B. An Ensemble of Multiple Boosting Methods Based on Classifier-Specific Soft Voting for Intelligent Vehicle Crash Injury Severity Prediction. in Proceedings – 2020 IEEE 6th International Conference on Big Data Computing Service and Applications, BigDataService 2020 17–24Institute of Electrical and Electronics Engineers Inc., (2020). https://doi.org/10.1109/BigDataService49289.2020.00011

Ren, Q., Xu, M., Zhou, B. & Chung, S. H. Traffic Safety Assessment and Injury Severity Analysis for Undivided Two-Way Highway–Rail Grade Crossings. Mathematics 12, (2024).

Megnidio-Tchoukouegno, M. & Adedeji, J. A. Machine learning for road traffic accident improvement and environmental resource management in the transportation sector. Sustainability 15, (2023).

Wahab, L. & Jiang, H. Severity prediction of motorcycle crashes with machine learning methods. Int. J. Crashworthiness. 25, 485–492 (2020).

Aboulola, O. I. Improving traffic accident severity prediction using MobileNet transfer learning model and SHAP XAI technique. PLoS One 19, (2024).

Shao, Y. et al. Injury severity prediction and exploration of behavior-cause relationships in automotive crashes using natural Language processing and extreme gradient boosting. Eng Appl. Artif. Intell. 133, (2024).

Aziz, K. et al. Road traffic crash severity analysis: A Bayesian-Optimized dynamic ensemble selection guided by instance hardness and region of competence strategy. IEEE Access. https://doi.org/10.1109/ACCESS.2024.3465489 (2024).

Chakraborty, M., Gates, T. & Sinha, S. Causal Analysis and Classification of Traffic Crash Injury Severity Using Machine Learning Algorithms.

Yang, D., Dong, T. & Wang, P. Crash severity analysis: A data-enhanced double layer stacking model using semantic Understanding. Heliyon 10, (2024).

He, H. & Garcia, E. A. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 21, 1263–1284 (2009).

Gao, X. et al. An ensemble imbalanced classification method based on model dynamic selection driven by data partition hybrid sampling. Expert Syst. Appl. 160, (2020).

Roy, A., Cruz, R. M. O., Sabourin, R. & Cavalcanti, G. D. C. A study on combining dynamic selection and data preprocessing for imbalance learning. Neurocomputing 286, 179–192 (2018).

Ko, A. H. R., Sabourin, R. & Britto, A. S. From dynamic classifier selection to dynamic ensemble selection. Pattern Recognit. 41, 1718–1731 (2008).

Cruz, R. M. O., Sabourin, R. & Cavalcanti, G. D. C. Dynamic classifier selection: recent advances and perspectives. Inform. Fusion. 41, 195–216 (2018).

Yu-Quan, Z., Ji-Shun, O. & Geng, C. Hai-Ping, Y. Dynamic weighting ensemble classifiers based on cross-validation. Neural Comput. Appl. 20, 309–317 (2011).

Woloszynski, T., Kurzynski, M., Podsiadlo, P. & Stachowiak, G. W. A measure of competence based on random classification for dynamic ensemble selection. Inform. Fusion. 13, 207–213 (2012).

Galar, M., Fernandez, A., Barrenechea, E., Bustince, H. & Herrera, F. A review on ensembles for the class imbalance problem: Bagging-, boosting-, and hybrid-based approaches. IEEE Transactions on Systems, Man and Cybernetics Part C: Applications and Reviews vol. 42 463–484 Preprint at (2012). https://doi.org/10.1109/TSMCC.2011.2161285

Liu, Z., Kang, J., Tong, H. & Chang, Y. IMBENS: Ensemble Class-imbalanced Learning in Python. (2021).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Seiffert, C., Khoshgoftaar, T. M., Van Hulse, J., Napolitano, A. & RUSBoost A hybrid approach to alleviating class imbalance. IEEE Trans. Syst. Man. Cybernetics Part. Syst. Hum. 40, 185–197 (2010).

Newaz, A., Hassan, S. & Haq, F. S. An empirical analysis of the efficacy of different sampling techniques for imbalanced classification. arXiv preprint arXiv:2208.11852 (2022).

Liu, Z. et al. Self-paced ensemble for highly imbalanced massive data classification. in Proceedings - International Conference on Data Engineering vols. -April 841–852 (IEEE Computer Society). (2020).

Lundberg, S., Lundberg, S. M., Allen, P. G. & Lee, S. I. A Unified Approach to Interpreting Model Predictions ChromNet View Project Shapley Additive ExPlanations (SHAP) View Project A Unified Approach to Interpreting Model Predictions. (2017). https://github.com/slundberg/shap

Wang, Y., Zhang, J. & Yan, W. An enhanced dynamic ensemble selection classifier for imbalance classification with application to China corporation bond default prediction. IEEE Access. 11, 32082–32094 (2023).

Acknowledgements

The authors extend their appreciation to Taif University, Saudi Arabia, for supporting this work through project number (TU-DSPP-2024-33) and also Ministry of Science and Higher Education of the Russian Federation for supporting this work and providing expert support.

Funding

This research was funded by Taif University, Saudi Arabia, Project No. (TU-DSPP-2024-33). Also, this research was partially funded by the Ministry of Science and Higher Education of Russian Federation (Funding No. FSFM-2024-0025).

Author information

Authors and Affiliations

Contributions

K.A and F.C done the data curation, K.A performed formal analysis, methodology and writing original draft, F.C done the validation and supervision. M.A and M.S.K done the visualization, review and editing. M.M.S.S had reviewed the content, done investigation, validation and funding acquisition. H.A done the visualization and funding acquisition. Manuscript reviewed by all authors.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Aziz, K., Chen, F., Ahmad, M. et al. An interpretable dynamic ensemble selection multiclass imbalance approach with ensemble imbalance learning for predicting road crash injury severity. Sci Rep 15, 24666 (2025). https://doi.org/10.1038/s41598-025-08935-x

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-08935-x