Abstract

Accurate air quality forecasting is critical for human health and sustainable atmospheric management. To address this challenge, we propose a novel hybrid deep learning model that combines CNNs, BiLSTM, attention mechanisms, GNNs, and Neural-ODEs to improve prediction accuracy. The model uses the Air Quality Open Dataset (AQD), which combines data from ground sensors, meteorological sources, and satellite imagery into a diverse multimodal dataset. CNNs extract spatial pollutant patterns from satellite images, whereas BiLSTM networks model temporal dynamics in pollutant and weather data. The attention mechanism directs the model's focus to the most informative features, improving predictive accuracy. GNNs encode spatial correlations between sensor locations, improving estimates of pollutants such as PM2.5, PM10, CO, and ozone. Neural-ODEs capture the continuous temporal evolution of air quality, offering a more realistic representation of pollutant changes than discrete-time approaches. Importantly, we use adaptive pooling, which sizes its pooling regions from the input and target output dimensions rather than using a fixed window, reducing spatial features while preserving critical information. This adaptive pooling mechanism reduces computational complexity and yields a 22% reduction in training time, as demonstrated by the experimental results in Section 4. The model therefore supports real-time environmental monitoring and large-scale forecasting. The experimental results show superior performance (RMSE = 6.21, MAE = 3.89, and R² = 0.988), outperforming existing models. This study highlights the advantages of combining multimodal data sources with advanced dynamic modeling techniques to improve air pollution prediction and inform policymaking.


Introduction

Monitoring and forecasting air quality are critical to protecting public health and promoting environmental sustainability. Rapid industrialization, urbanization, and vehicle emissions have made air pollution a major global concern. Degrading air quality poses significant risks to human health, including respiratory and cardiovascular diseases, while also exacerbating broader environmental problems such as climate change and ecosystem degradation1. Accurate air quality prediction models are essential for understanding pollution dynamics and implementing proactive pollution management strategies to mitigate adverse effects2. The main pollutants that damage respiratory, cardiovascular, and neurological health include carbon monoxide (CO), ozone (O₃), and particulate matter (PM2.5 and PM10). The burden is disproportionately high in densely populated areas, where it strains healthcare systems, reduces workforce productivity, and increases healthcare costs3.

Air pollution also has severe environmental consequences, including contributions to global warming, reduced agricultural productivity, and ecosystem damage (Putri and Caraka 2024). Continued urbanization and industrialization increase atmospheric pollutant loads, resulting in poor air quality in megacities and industrial zones4. These urban areas face vehicular emissions, industrial pollution, and climatic extremes, underscoring the need for effective air quality monitoring and forecasting systems5.

Figure 1 illustrates the methodology and significance of air quality forecasting based on diverse data sources. The process begins with real-time air quality monitoring, in which data from sensors, satellites, and weather stations are collected to evaluate pollution levels. These data are used to develop models that predict pollution levels, enabling forecasts of future air quality conditions6. Improved predictions allow more timely interventions that protect public health by reducing the risk of respiratory diseases and other pollution-related conditions. Environmental impact assessments rely heavily on such predictions, which help policymakers develop effective regulations for reducing pollution7. In urban areas, forecasts support city planning and traffic management decisions, leading to more effective resource allocation.

Integrating diverse environmental factors also supports climate change research and a more accurate understanding of the ecological impacts of pollution. These predictions inform policymakers and strengthen international environmental agreements, particularly those aimed at mitigating air pollution and addressing climate change. Figure 1 illustrates the interconnections among these aspects and emphasizes the importance of precise air quality predictions for public health, environmental conditions, and urban planning8.

Conventional approaches to air quality forecasting rely primarily on statistical methods, including multiple linear regression and autoregressive integrated moving average (ARIMA) models, which often fail to represent the complex, nonlinear spatiotemporal dynamics of air pollution data. In recent years, deep learning has transformed air quality forecasting by leveraging large datasets to identify complex patterns that traditional approaches often miss. Although machine learning (ML) and deep learning (DL) techniques such as convolutional neural networks (CNNs) and long short-term memory (LSTM) networks have shown promise, they still face notable limitations9.

-

Limited Integration of Multimodal Data: Many existing models struggle to effectively combine data from diverse sources, such as ground-based sensors, weather data, and satellite imagery, which hinders a comprehensive understanding of air pollution dynamics.

-

Shallow Spatial Relationship Modeling: Many existing approaches give inadequate attention to spatial dependencies, especially in urban environments where pollution levels can vary significantly over short distances.

-

Challenges in Modeling Temporal Dynamics: Even recurrent models such as LSTMs often struggle to capture long-term temporal dependencies, which are crucial for predicting events such as pollution spikes or sudden changes in air quality.

-

Challenges of Scalability and Real-Time Prediction: Many models require substantial computing power and lack optimizations for real-time forecasting, making them unsuitable for immediate use when pollution levels change rapidly10.

Recent research suggests that hybrid deep learning models can address these challenges. Models that combine CNNs for spatial feature extraction with LSTMs for temporal dynamics show promise; however, they lack mechanisms for continuous temporal modeling and robust representation of spatial relationships11. Advanced methodologies, such as Neural-ODEs and GNNs, can address these shortcomings, leading to a more precise and scalable approach to air quality forecasting. By addressing these deficiencies, we can create more efficient forecasting systems that produce reliable predictions and help to mitigate the harmful effects of air pollution.

Research questions

This study aims to answer the following essential questions12.

-

Does combining sensor data, weather variables, and satellite images improve air quality predictions?

-

Which deep learning architectures can accurately model the intricate, nonlinear spatial and temporal interactions that drive air pollution dynamics?

-

Can GNNs effectively model spatial dependencies among pollution sources, enhancing the accuracy of air quality predictions?

-

How do Neural-ODEs improve the modeling of continuous temporal variations in air quality and the precision of long-term predictions?

These questions investigate novel methodologies for enhancing the comprehension and forecasting of air pollution trends, utilizing multimodal data and advanced computational technologies13.

Research motivation

The growing global concern over air pollution and its far-reaching consequences for public health, the environment, and economic stability is the driving force behind this investigation. The need for more precise and timely air quality forecasts has become increasingly urgent. Several critical factors underscore the significance of this research14.

-

Public Health and Environmental Sustainability: The harmful impact of air pollution on human health continues to grow, contributing to rising rates of cardiovascular disease, respiratory illness, and other severe conditions. Air pollution also disrupts ecosystems, exacerbates climate change, and threatens biodiversity. Reliable, precise air quality forecasting models are crucial for mitigating these effects and improving public health.

-

Limitations of Existing Models: Current air quality forecasting techniques face significant challenges, including ineffective integration of diverse data sources, difficulty capturing complex spatial relationships, and inadequate modeling of long-term pollution trends. This investigation addresses these limitations through a comprehensive modeling approach that integrates diverse data types15.

-

Advancements in Computational Techniques: Recent advances in computational methods, including Neural-ODEs and GNNs, create new opportunities for air quality forecasting. These methods enable continuous modeling of temporal air quality variations and a better representation of spatial interactions, both of which are essential for improving predictive accuracy11.

Given the importance of real-time air quality forecasting for informing public policy, such as issuing air quality alerts, managing traffic, and enforcing environmental regulations, this research aims to bridge the gap between predictive modeling and practical applications. The tools developed in this study have the potential to provide valuable insights for policymakers, public health officials, and urban planners, enabling them to make informed decisions to combat air pollution and safeguard both human health and the environment16.

Primary contributions

This study presents a novel hybrid deep learning model that enhances air quality predictions by leveraging advanced computational methods and multimodal data (Su et al., 2024). The model incorporates meteorological variables, satellite imagery, and sensor data to understand air pollution dynamics comprehensively. The principal contributions of this research are as follows.

-

Comprehensive Multimodal Integration: A new framework combines ground-based sensor data, meteorological variables, and satellite images to provide a more complete understanding of air pollution patterns17.

-

Innovative Hybrid Architecture: The model integrates CNNs for spatial feature extraction, Bi-LSTMs for capturing temporal dependencies, GNNs for modeling spatial relationships among pollution sources, and Neural-ODEs for continuous-time dynamics. This combination addresses the deficiencies of conventional methods and improves precision.

-

Superior Predictive Performance: Experimental evaluation on the AQD dataset demonstrates the model's high accuracy, with significant improvements in RMSE and R² over existing models, including the CNN-LSTM hybrid, GNN, Bi-LSTM, and CNN-GNN models.

-

Scalability for Real-Time Applications: The model employs optimization techniques, including adaptive pooling, to achieve efficient computation and scalability, making it well-suited for real-time environmental monitoring systems.

-

Actionable insights for decision-making: The model enables environmental agencies, policymakers, and urban planners to create efficient interventions and advance sustainable urban development by providing accurate forecasts and highlighting key pollution dynamics.

Organization of the article

The remainder of the article is organized as follows. Section “Literature review” reviews existing research on air quality prediction. Section “Materials & Methods” describes the materials and methods, including the dataset, the proposed model, its architecture, and its working steps. Section “Experimental Results” presents the implementation of the proposed and existing models, the results, and a discussion. Section “Conclusion and Future Directions” concludes the article and outlines future work.

Literature review

The literature on air quality forecasting utilizing advanced machine learning (ML) and deep learning (DL) models is categorized into three primary domains: hybrid deep learning architectures for enhanced prediction, data integration and feature engineering for multimodal analysis, and optimization techniques addressing real-time scalability challenges17. Each category highlights specific innovations, practical applications, and existing limitations in the rapidly evolving field of air quality forecasting.

Hybrid deep learning architectures for enhanced prediction

Hybrid deep learning models have gained significant popularity in recent years due to their ability to model spatial and temporal dependencies in air pollution data. These frameworks integrate CNNs, proficient in spatial feature extraction, with RNNs and their variants, such as LSTM, specifically designed to identify sequential temporal patterns.

Ahmed et al.1 developed a CNN-RNN predictive model that incorporates satellite-derived hydro-climatological variables. By integrating remote sensing data with traditional inputs, the model improved AQI forecasting in areas where ground-based sensor coverage was lacking or insufficient. Satellite data thus fills significant gaps in monitoring infrastructure, making this an invaluable strategy for developing nations and areas with limited sensor networks. Rabie et al.2 extended hybrid frameworks by introducing a CNN-BiLSTM model for predicting AQI in megacities. Their research focused on analyzing fine-grained pollution patterns to provide the localized forecasts needed to address urban heterogeneity arising from diverse pollution sources, including industrial zones, traffic centers, and residential areas. This spatially resolved methodology is particularly well suited to urban planning and public health initiatives, where precise interventions require localized data.

Kumar and Kumar3 introduced the Multi-view Stacked CNN-BiLSTM (MvS CNN-BiLSTM) model, which integrates meteorological parameters, pollutant emissions, and additional environmental data within a multi-view framework. This framework enhanced the accuracy of PM2.5 concentration predictions in heavily polluted Indian cities, underscoring the importance of integrating multi-source data in urban air quality forecasting. Barthwal and Goel4 demonstrated the synergy between deep convolutional networks and LSTMs for time-series AQI classification, wherein the CNN adeptly extracted spatial features, and the LSTM proficiently captured temporal dependencies. Despite their impressive performance, these hybrid architectures often require substantial computational resources for deployment and training. The increased demands present challenges for real-time applications, especially in resource-constrained environments14. Moreover, challenges such as model interpretability and the potential for overfitting persist, underscoring the need for further research into lightweight, interpretable, and efficient hybrid models suitable for practical applications.

Data integration and feature engineering for multimodal analysis

Air quality forecasting relies on understanding the complex interactions among meteorological conditions, emissions, and pollutant dispersion. Multimodal data integration and feature engineering have been central to recent progress in predictive modeling. Ahmed et al.1 combined satellite-derived hydro-climatological data with traditional air quality monitoring to improve forecasting accuracy. Incorporating satellite data enabled the model to compensate for the shortcomings of terrestrial sensors, particularly in regions with low sensor density. This approach drew on several data sources to build a comprehensive picture of air quality dynamics. Gilik et al.18 integrated emissions and meteorological variables into their multi-view framework, producing a model that adapts well to fluctuating environmental conditions. This method highlights the importance of integrating various data types to account for factors that affect pollution, including seasonal variations, meteorological anomalies, and industrial activities.

Innovative preprocessing techniques are crucial components of multimodal analysis. Wu et al.5 employed Data Variational Mode Decomposition (DVMD) to extract critical components from noisy, nonlinear AQI data. The decomposition isolates relevant trends and patterns, thereby improving the efficacy of the hybrid CNN-LSTM model. Rabie et al.2 established a spatially resolved framework that combines high-resolution temporal and spatial datasets, enabling their model to produce detailed forecasts for densely populated regions. Despite these advances, multimodal integration still faces numerous challenges. Analyzing large, diverse datasets requires significant computing power, and missing or incomplete data can reduce model effectiveness19. Such models are also difficult to scale in real-world settings because they depend on complex feature engineering, which increases implementation complexity. Addressing these issues requires more robust frameworks that do not depend on specific sensors and that work effectively with sparse or noisy datasets.

Optimization techniques and real-time scalability challenges

To be practical, air quality forecasting models must balance predictive accuracy and computational efficiency, especially for real-time applications. Optimization methods and scalable architectures are essential for enabling models to process and analyze large datasets rapidly.

Wu et al.5 addressed optimization challenges by integrating the Dung Beetle Algorithm into their coupled DVMD Informer-CNN-LSTM model. This method optimized the model's hyperparameters, markedly improving prediction accuracy. However, while the optimization improved performance, it also increased computational cost, limiting the model's applicability to real-time implementation. Ahmed et al.1 recognized the scalability issues associated with processing high-resolution satellite data for real-time forecasting. Although computationally demanding satellite imagery improved precision, dependence on it posed considerable difficulties for areas lacking adequate infrastructure. Barthwal and Goel4 encountered similar challenges with their hybrid DCNN-LSTM models, which required considerable resources for both training and deployment. These constraints underscore the need for lightweight architectures that achieve high accuracy with minimal computational load. Rabie et al.2 and Kumar and Kumar3 examined the significant computational demands of hybrid deep learning models, arguing that these requirements hinder deployment in resource-constrained settings, such as smaller municipalities or rural areas lacking infrastructure and expertise. Future research should therefore focus on methods such as adaptive pooling, model compression, and edge computing. By executing computations locally or optimizing model operations, these methods can reduce resource requirements without compromising performance19.

Furthermore, real-time scalability poses a significant challenge for applications that require an immediate response, such as disseminating public health alerts or implementing traffic management plans. Meeting these demands will require models that can adapt to environmental constraints and limited resources20. Overall, the literature shows significant advances in air quality forecasting driven by hybrid deep learning architectures, innovative data integration techniques, and optimization strategies. Hybrid models effectively capture spatial–temporal dependencies, while multimodal data integration improves predictive robustness21. However, challenges of computational efficiency, scalability, and data scarcity persist. Table 1 presents a comparative analysis of existing studies on air quality analysis.

Materials & methods

This section describes the data sources, model architecture, and evaluation methods used in this study. It defines the components of the hybrid deep learning model, including the integration of multimodal datasets such as sensor data, meteorological information, and satellite imagery.

Architecture of proposed hybrid model

Figure 2 presents the architecture of the proposed hybrid model.

The proposed hybrid deep learning model aims to accurately forecast AQI and pollutant concentrations22 by combining the spatial, temporal, and dynamic features of multimodal data. It employs advanced deep learning techniques, including CNNs23, BiLSTM, attention mechanisms, GNNs, and Neural-ODEs, arranged as multiple interconnected layers that capture different aspects of air quality dynamics over time and space.

Working details

The proposed hybrid deep learning model evaluates multimodal data for accurate air quality prediction by integrating multiple components: CNN, BiLSTM, attention mechanism, GNN, Neural-ODE, and adaptive pooling. This section explains the model's functionality, including the mathematical formulation and the underlying logic of each layer18.

Input data representations

The model utilizes multimodal input data20.

-

Sensor Data: The time-series data are denoted by Eq. (1).

$$X_{s}=\left[x_{s1},\dots ,x_{sT}\right] \tag{1}$$

where \(x_{st}\) represents the pollutant concentration at time \(t\).

-

Satellite imagery: It is structured as spatial grids \({X}_{i}\in {\text{R}}^{(\text{H}\times \text{W}\times \text{C})}\), where H denotes height, W represents the width, and C signifies channels (such as RGB)24.

-

Meteorological Data: Continuous time-series data encompassing measurements of wind speed, temperature, and humidity as presented by Eq. (2).

$$X_{m}=\left[x_{m1},\dots ,x_{mT}\right] \tag{2}$$

where \(x_{mt}\) is the vector of meteorological measurements at time \(t\).
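To make the input representation concrete, the following NumPy sketch slices aligned sensor and meteorological series into fixed-length windows of the form in Eqs. (1) and (2). It assumes hourly, already-aligned, gap-free arrays and a next-step forecasting target; the function name `make_windows` and the synthetic data are hypothetical and are not taken from the AQD pipeline.

```python
import numpy as np

def make_windows(series, T, horizon=1):
    """Slice a (num_steps, d) array into overlapping windows of length T.

    Returns X with shape (N, T, d) and y with shape (N, d), where y holds the
    observation `horizon` steps after each window ends (an assumed target).
    """
    series = np.asarray(series, dtype=float)
    if series.ndim == 1:
        series = series[:, None]               # univariate series -> d = 1
    n = series.shape[0] - T - horizon + 1      # number of complete windows
    if n <= 0:
        raise ValueError("series is too short for this window and horizon")
    X = np.stack([series[i:i + T] for i in range(n)])
    y = series[T + horizon - 1 : T + horizon - 1 + n]
    return X, y

# Hypothetical stand-ins for 48 hours of X_s (PM2.5) and X_m (wind, temperature, humidity).
rng = np.random.default_rng(0)
pm25 = rng.uniform(10, 80, size=48)
met = rng.normal(size=(48, 3))
features = np.concatenate([pm25[:, None], met], axis=1)   # shape (48, 4)
X, y = make_windows(features, T=24)
print(X.shape, y.shape)   # (24, 24, 4) (24, 4)
```

Here the target row contains every feature; selecting `y[:, 0]` would keep only the PM2.5 column as the forecasting target.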

CNN for spatial feature extraction

The CNN is a crucial component of the proposed model: it extracts spatial features from satellite imagery and sensor data. Through convolutional operations, the CNN detects spatial patterns in pollutant distribution, such as concentrated pollutant clusters or hotspots, across regions25. The CNN input comprises two components: (a) satellite imagery, which provides the spatial distribution of pollutants and meteorological patterns, and (b) sensor grid data, which contain air quality metrics recorded at multiple sensor sites. Each convolutional layer applies filters that detect features such as edges, textures, and patterns within the image18. Figure 3 presents the layered architecture of the CNN model.

-

Input Layer: The CNN receives a 3D tensor encoding a satellite image, \({X}\in {\text{R}}^{(\text{H}\times \text{W}\times \text{C})}\), where H is the height, W the width, and C the number of channels.

-

Convolutional Layer: A convolutional layer extracts localized spatial features from an input image by applying filters (kernels). The convolution is defined by Eq. (3), where \(X\) is the input feature map, \({F}^{k}\) is the \(k\)th filter of size \(M\times N\) with weights \({F}_{mn}^{k}\), \({b}^{k}\) is the bias of the \(k\)th filter, and \(Out\_CNN\) is the output of the convolutional layer.

$$Out\_CNN_{i,j}^{k}=\sum_{m=0}^{M-1}\sum_{n=0}^{N-1}\left(F_{mn}^{k}\times X_{(i+m)(j+n)}\right)+b^{k} \tag{3}$$

-

Activation Layer (ReLU): The output of the convolutional layer is passed through the ReLU (Rectified Linear Unit) activation function, which introduces nonlinearity and is computed with Eq. (4), where \(x\) is the input value.

$$f(x)=\max (0,x) \tag{4}$$

-

Pooling Layer (Max Pooling): The pooling layer reduces the spatial dimensions of the feature maps, thereby decreasing computational complexity and emphasizing essential features26. It retains the maximum value within each subregion of the feature map, as given by Eq. (5), where \(Out\_PL\) is the output of the pooling layer, \(P\times Q\) is the size of the pooling window, and \(p,q\) index positions within the window.

$$Out\_PL_{i,j}^{k}=\max_{0\le p<P,\;0\le q<Q}X^{k}_{(i+p)(j+q)} \tag{5}$$

-

Flattening Layer: The output of the pooling layers is flattened into a 1D vector for the fully connected layers; the length of the flattened vector is given by Eq. (6), where \(H'\), \(W'\), and \(C'\) are the height, width, and number of channels of the final pooled feature map.

$$f_{Out}=H'\times W'\times C' \tag{6}$$

-

Fully Connected Layer: The flattened vector is passed through one or more fully connected layers to generate the final spatial feature representation (Bui et al. 2021). Each fully connected layer computes Eq. (7), where \(X\) is the input vector, \(W\) the weight matrix, and \(b\) the bias vector.

$$Z=WX+b \tag{7}$$

Table 2 provides a comprehensive overview of the CNN module’s various layers, including their specific functions and an overall architecture diagram.
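The layer sequence above can be illustrated with a minimal NumPy forward pass. This is a sketch under stated assumptions rather than the authors' implementation: Eq. (3) is generalized to sum over input channels, pooling uses non-overlapping 2 × 2 windows (stride equal to the window size, a common default), the weights are random, and the function names (`conv2d`, `max_pool`, `cnn_features`) are hypothetical. A trained model would learn `F`, `b`, `W_fc`, and `b_fc` by backpropagation.

```python
import numpy as np

def conv2d(X, F, b):
    """Valid, stride-1 convolution (Eq. 3) summed over input channels.

    X: (H, W, C) input; F: (K, M, N, C) filters; b: (K,) biases.
    Returns (H - M + 1, W - N + 1, K).
    """
    H, W, _ = X.shape
    K, M, N, _ = F.shape
    out = np.empty((H - M + 1, W - N + 1, K))
    for i in range(H - M + 1):
        for j in range(W - N + 1):
            patch = X[i:i + M, j:j + N, :]                     # M x N x C window
            out[i, j] = np.tensordot(F, patch, axes=([1, 2, 3], [0, 1, 2])) + b
    return out

def relu(x):
    return np.maximum(0.0, x)                                  # Eq. (4)

def max_pool(X, P=2, Q=2):
    """Non-overlapping P x Q max pooling (Eq. 5 at window origins iP, jQ)."""
    H, W, K = X.shape
    Ho, Wo = H // P, W // Q                                    # drop partial edge windows
    blocks = X[:Ho * P, :Wo * Q].reshape(Ho, P, Wo, Q, K)
    return blocks.max(axis=(1, 3))

def cnn_features(X, F, b, W_fc, b_fc):
    h = max_pool(relu(conv2d(X, F, b)))                        # Eqs. (3)-(5)
    v = h.reshape(-1)                                          # Eq. (6): H' * W' * C' values
    return W_fc @ v + b_fc                                     # Eq. (7)

# Hypothetical 16 x 16 RGB satellite tile and randomly initialized weights.
rng = np.random.default_rng(0)
X = rng.random((16, 16, 3))
F = rng.normal(scale=0.1, size=(8, 3, 3, 3))         # K = 8 filters of size 3 x 3
b = np.zeros(8)
W_fc = rng.normal(scale=0.1, size=(32, 7 * 7 * 8))   # 16 -> 14 (conv) -> 7 (pool)
b_fc = np.zeros(32)
z = cnn_features(X, F, b, W_fc, b_fc)
print(z.shape)   # (32,)
```

The explicit loops keep the correspondence with Eq. (3) visible; a deep learning framework would use an optimized convolution instead.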

Bi-LSTM for temporal dependency modeling

The Bi-LSTM network is essential in the proposed model for capturing temporal dependencies in time-series data, including air quality sensor readings, meteorological variables, and pollutant concentrations over time. This enables the model to forecast air quality by analyzing historical patterns and identifying correlations in the data15,27. The Bi-LSTM extends the conventional LSTM network for modeling sequential data2 by using two LSTM layers: one processes the sequence in the forward direction (from past to present) and the other in the backward direction (from present to past). The outputs of the two directions are combined to capture richer temporal information28.

The Bi-LSTM module models the temporal dynamics of pollutant concentrations in the AQD dataset. By learning dependencies in both the forward and backward directions, it extracts comprehensive temporal features, and, combined with the spatial features from the CNN, it enables the hybrid model to produce precise air quality predictions over time1. Figure 4 presents the architecture of the Bi-LSTM. The Bi-LSTM is applied to the AQD dataset as follows.

-

Input Sequences: The Bi-LSTM's input is a time-series sequence of length T with d-dimensional features, drawn from the sensor and meteorological series \({X}_{s}\) and \({X}_{m}\). For example, T = 24 and d = 1 for hourly PM2.5 observations over a 24-h period.

-

Forward LSTM (Forward Pass): Computes the hidden states sequentially, from \(t=1\) to \(T\), using Eq. (8).

$$\overrightarrow{h_{t}}=LSTM_{forward}\left(x_{t},\overrightarrow{h_{t-1}}\right) \tag{8}$$

-

Backward LSTM (Backward Pass): Computes the hidden states in reverse order, from \(t=T\) to \(1\), using Eq. (9).

$$\overleftarrow{h_{t}}=LSTM_{backward}\left(x_{t},\overleftarrow{h_{t+1}}\right) \tag{9}$$

-

Concatenation of Hidden States: The final hidden state at each time step concatenates the outputs of both directions, as presented in Eq. (10), where \({h}_{t}\) is the combined hidden state.

$$h_{t}=\left[\overrightarrow{h_{t}};\overleftarrow{h_{t}}\right] \tag{10}$$

-

Output Layer: The sequence of Bi-LSTM hidden states can be passed through a fully connected layer to forecast pollutant concentrations, as presented in Eq. (11), where \({W}_{h}\) is the weight matrix, \({b}_{h}\) the bias, and \({y}_{t}\) the predicted pollutant concentration.

$$y_{t}=W_{h}h_{t}+b_{h} \tag{11}$$
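A minimal NumPy sketch of Eqs. (8) to (11) follows. It uses the standard LSTM gate equations, which this section does not spell out; the stacked gate ordering (i, f, o, g), hidden size, random initialization, and names such as `lstm_pass` are assumptions for illustration. In practice a framework layer such as PyTorch's `torch.nn.LSTM` with `bidirectional=True` would be used instead.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_lstm(d, hdim, rng):
    """Random parameters for one LSTM direction; gates stacked as (i, f, o, g)."""
    return {"W": rng.normal(scale=0.1, size=(4 * hdim, d)),
            "U": rng.normal(scale=0.1, size=(4 * hdim, hdim)),
            "b": np.zeros(4 * hdim)}

def lstm_pass(X, p, reverse=False):
    """Run one LSTM direction over X of shape (T, d); returns hidden states (T, hdim)."""
    T, hdim = X.shape[0], p["U"].shape[1]
    h, c = np.zeros(hdim), np.zeros(hdim)
    out = np.zeros((T, hdim))
    for t in (range(T - 1, -1, -1) if reverse else range(T)):
        z = p["W"] @ X[t] + p["U"] @ h + p["b"]
        i = sigmoid(z[:hdim])                   # input gate
        f = sigmoid(z[hdim:2 * hdim])           # forget gate
        o = sigmoid(z[2 * hdim:3 * hdim])       # output gate
        g = np.tanh(z[3 * hdim:])               # candidate cell state
        c = f * c + i * g
        h = o * np.tanh(c)
        out[t] = h                              # stored at its original time index
    return out

def bilstm(X, p_fwd, p_bwd, W_h, b_h):
    H = np.concatenate([lstm_pass(X, p_fwd),                  # Eq. (8)
                        lstm_pass(X, p_bwd, reverse=True)],   # Eq. (9)
                       axis=1)                                # Eq. (10): (T, 2*hdim)
    Y = H @ W_h.T + b_h                                       # Eq. (11) at every t
    return H, Y

# Hypothetical window: T = 24 hourly steps, d = 4 features, hidden size 8.
rng = np.random.default_rng(0)
X = rng.normal(size=(24, 4))
p_fwd, p_bwd = init_lstm(4, 8, rng), init_lstm(4, 8, rng)
W_h, b_h = rng.normal(scale=0.1, size=(1, 16)), np.zeros(1)
H, Y = bilstm(X, p_fwd, p_bwd, W_h, b_h)
print(H.shape, Y.shape)   # (24, 16) (24, 1)
```

Because the backward pass stores each state at its original time index, row t of `H` pairs a forward summary of x_1..x_t with a backward summary of x_t..x_T, which is the pairing Eq. (10) describes.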

Attention mechanism

The attention mechanism is critical for enhancing the model's ability to focus on relevant features in the multimodal dataset, thereby improving prediction accuracy. It dynamically assigns weights to input features based on their relevance to the target prediction2. Its key roles in the proposed model are as follows.

-

Relevance Identification: Identifies the most significant input features in each time step, such as dominant pollutants or influential meteorological factors.

-

Contextual Understanding: Focusing on essential components enhances the model’s ability to interpret long-term dependencies in sequential data.

-

Weight Assignment: Each input feature is assigned a weight based on importance, emphasizing critical data points.

A mathematical model for the attention mechanism can be defined as follows. For a sequence of hidden states \(H=({h}_{1},\dots ,{h}_{T})\), an attention score \({Att}_{S}\) is determined with Eq. (12), where \(q\) is a context (query) vector and \(Score\) is a dot-product scoring function.

The normalized attention weight \({\alpha }_{t}\) and the context vector \({c}_{v}\) are then calculated with Eqs. (13) and (14). The context vector \({c}_{v}\) is a weighted representation of the sequence that prioritizes the information most important for prediction.

Here \({e}_{t}\) denotes the attention score for the feature at time \(t\), and the resulting weights are applied to the hidden states or features accordingly.
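Because Eqs. (12) to (14) are not reproduced here, the sketch below assumes the common dot-product formulation e_t = h_tᵀq, α_t = exp(e_t) / Σ_j exp(e_j), and c_v = Σ_t α_t h_t, which matches the description of a dot-product score, normalized weights, and a weighted context vector. The exact form used in the model may differ (for example, an additive scoring function), and `attention_pool` is a hypothetical name.

```python
import numpy as np

def softmax(e):
    e = e - e.max()              # shift for numerical stability
    w = np.exp(e)
    return w / w.sum()

def attention_pool(H, q):
    """Dot-product attention over hidden states H (T, dh) with context vector q (dh,)."""
    e = H @ q                    # raw scores e_t = h_t . q        (cf. Eq. 12)
    alpha = softmax(e)           # weights alpha_t, summing to 1   (cf. Eq. 13)
    c_v = alpha @ H              # context vector sum_t alpha_t h_t (cf. Eq. 14)
    return alpha, c_v

rng = np.random.default_rng(0)
H = rng.normal(size=(24, 16))    # e.g. Bi-LSTM outputs for a 24-h window
q = rng.normal(size=16)          # learned context vector (random here)
alpha, c_v = attention_pool(H, q)
print(alpha.shape, c_v.shape)    # (24,) (16,)
```

With a zero context vector every time step receives the same weight, so `c_v` reduces to the mean hidden state; training `q` is what lets the model emphasize informative steps.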

GNN for spatial relationship modeling

GNNs represent spatial dependencies among air quality monitoring stations, taking geographic and environmental variables into account (Sarkar, N. et al. 2022). They are especially suited to graph-structured data, in which nodes represent locations and edges denote spatial or correlation-based relationships. The primary roles of GNNs in the proposed model are as follows.

-

Spatial Dependency Modeling: Analyzes the relationships among different local areas affected by air pollution.

-

Edge-Weight Encoding: The application of edge weights enables the integration of spatial proximity and similarity, such as meteorological conditions.

-

Information Propagation: Consolidates attributes from adjacent nodes to improve predictions at each node.

Graph Neural Networks improve the model's ability to predict air quality at specific locations by incorporating spatial influences from adjacent areas. A mathematical model for the GNN can be defined as follows. Consider a graph \(G=(V,E)\), where \(V\) is the set of nodes and \(E\) the set of edges.

The node embedding update \({h}_{v}^{(l+1)}\) is calculated using Eq. (15), where \(l\) is the layer index, \(\text{N}\left(\text{v}\right)\) is the neighbor set of node \(v\), \({w}_{uv}\) is the edge weight between nodes \(u\) and \(v\), and \({w}^{l}\) is the learnable weight matrix of layer \(l\). The node output \({Z}_{v}\) is calculated using Eq. (16).
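Since Eqs. (15) and (16) are not reproduced here, the following NumPy sketch assumes a common weighted message-passing form, h_v^(l+1) = ReLU(Σ_{u∈N(v)} w_uv h_u^(l) w^l), with a linear readout for Z_v. The Gaussian distance kernel used to build the edge weights w_uv, the neighborhood radius, the station coordinates, and all function names are illustrative assumptions, not the paper's graph construction.

```python
import numpy as np

def distance_adjacency(coords, sigma=1.0, radius=2.0):
    """Gaussian edge weights w_uv = exp(-(d_uv / sigma)^2) for stations within `radius`."""
    d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    A = np.exp(-(d / sigma) ** 2)
    A[d > radius] = 0.0          # no edge between distant stations
    np.fill_diagonal(A, 0.0)     # N(v) excludes v itself
    return A

def gnn_layer(H_l, A, W_l):
    """h_v^(l+1) = ReLU((sum_{u in N(v)} w_uv * h_u^(l)) @ W_l), for all nodes at once."""
    return np.maximum(0.0, (A @ H_l) @ W_l)

# Four hypothetical stations; station 3 is far from the others.
coords = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [5.0, 5.0]])
rng = np.random.default_rng(0)
A = distance_adjacency(coords)
H0 = rng.normal(size=(4, 6))                  # initial node features (e.g. CNN/Bi-LSTM outputs)
H1 = gnn_layer(H0, A, rng.normal(size=(6, 8)))
w_out, b_out = rng.normal(size=8), 0.0
Z = H1 @ w_out + b_out                        # assumed linear readout for Z_v (cf. Eq. 16)
print(Z.shape)   # (4,)
```

An isolated station receives no messages in this form, which is one reason GCN-style layers often add a self-loop so each node also keeps its own features.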

Adaptive pooling layer

In addition to the conventional max pooling layer introduced in Section 3.2.2, the hybrid model integrates an adaptive pooling mechanism to handle variations in the spatial dimensions of feature maps. Traditional max pooling applies a fixed-size window to segment the input feature map and downsamples at a constant rate. This can cause critical spatial information to be lost in heterogeneous datasets, because fixed-rate downsampling does not take the input size into account [21]. Adaptive pooling instead computes the pooling regions from the input feature map size and the specified output size, allowing flexible spatial feature compression while preserving critical information [4, 8].

Formally, an adaptive pooling layer takes an input feature map \(X\in {R}^{H\times W\times C}\) and outputs a feature map \(Y\in {R}^{{H}_{out}\times {W}_{out}\times C}\), where \({H}_{out}\) and \({W}_{out}\) are output spatial dimensions fixed in advance. The pooling kernel sizes \({k}_{h},{k}_{w}\) and strides \({s}_{h},{s}_{w}\) are calculated as in Eq. (17).

where \(\lfloor \cdot \rfloor\) denotes the floor operation. Each output value is obtained by max pooling over the corresponding adaptively sized region, as given in Eq. (18).
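The following plain-Python sketch illustrates adaptive max pooling on a single-channel feature map. It assumes one common way of deriving the window with the floor operation, stride \(s=\lfloor H/H_{out}\rfloor\) and kernel \(k=H-(H_{out}-1)\,s\) (likewise for the width); Eq. (17) may use a different but related rule, and frameworks such as PyTorch's `torch.nn.AdaptiveMaxPool2d` compute per-output start and end indices instead. The helper names are hypothetical.

```python
from typing import List, Tuple

Grid = List[List[float]]


def adaptive_window(in_size: int, out_size: int) -> Tuple[int, int]:
    """Return (kernel, stride) so that out_size windows tile in_size.

    Assumed rule: stride = floor(in / out), kernel = in - (out - 1) * stride,
    so the last window ends exactly at the border of the input.
    """
    if out_size < 1 or in_size < out_size:
        raise ValueError("need 1 <= out_size <= in_size")
    stride = in_size // out_size
    kernel = in_size - (out_size - 1) * stride
    return kernel, stride


def adaptive_max_pool2d(x: Grid, out_h: int, out_w: int) -> Grid:
    """Max-pool an H x W grid down to a fixed out_h x out_w grid."""
    k_h, s_h = adaptive_window(len(x), out_h)
    k_w, s_w = adaptive_window(len(x[0]), out_w)
    return [
        [
            max(
                x[r][c]
                for r in range(i * s_h, i * s_h + k_h)
                for c in range(j * s_w, j * s_w + k_w)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Inputs of different resolutions map to the same 2 x 2 output shape.
coarse = [[float(4 * r + c) for c in range(4)] for r in range(4)]  # 4 x 4
fine = [[float(7 * r + c) for c in range(7)] for r in range(5)]    # 5 x 7
print(adaptive_max_pool2d(coarse, 2, 2))  # [[5.0, 7.0], [13.0, 15.0]]
print(adaptive_max_pool2d(fine, 2, 2))    # [[17.0, 20.0], [31.0, 34.0]]
```

With this rule neighboring windows may overlap (the 5 x 7 input uses a 3-row window with stride 2), which is how every input cell is covered while the output shape stays fixed.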

This keeps the output dimensions fixed regardless of the input size, which is essential because multimodal sources such as satellite imagery and sensor grids may have different resolutions. The adaptive pooling layer also retains significant spatial features while shrinking feature maps more efficiently at earlier convolutional stages, thereby reducing computational complexity and resource demands.

Neural-ODE for dynamic modeling

Neural Ordinary Differential Equations (Neural-ODEs) enable the model to capture the continuous-time dynamics of air quality, bridging discrete observations and real-world continuous variations23. The main functions of Neural-ODEs in the proposed model are as follows:

- Continuous Temporal Modeling: Describes the evolution of air quality variables over time with differential equations.

- Adaptive Dynamics: Captures nonlinear, time-varying changes in pollutant concentrations.

- Enhanced Long-Term Forecasting: Improves predictions by projecting future conditions from the learned temporal dynamics, as presented in Fig. 5.

The Neural-ODE can be formulated as follows. The dynamics \(\frac{dh(t)}{dt}\) of the hidden state \(h(t)\) are given by Eq. (19), where \(f\) is a neural network parameterized by \(\theta\).

Solving this ODE with Eq. (20) yields the final hidden state used for prediction. The prediction \(\widehat{y}\) is then calculated using Eq. (21), where \(g\) is a decoder that maps the hidden state to the target variable.
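A Neural-ODE forward pass can be sketched with a fixed-step fourth-order Runge-Kutta (RK4) solver. The solver is an assumption for illustration, since the one behind Eq. (20) is not specified here (libraries such as torchdiffeq provide adaptive solvers with backpropagation through `odeint`). The dynamics network `f` (a single tanh layer) and the linear decoder `g` are hypothetical stand-ins with hand-picked parameters in place of learned \(\theta\).

```python
import math
from typing import Callable, List

Vector = List[float]
Dynamics = Callable[[Vector, float], Vector]


def rk4_solve(f: Dynamics, h0: Vector, t0: float, t1: float, steps: int = 100) -> Vector:
    """Integrate dh/dt = f(h, t) from t0 to t1 with fixed-step RK4."""
    dt = (t1 - t0) / steps
    h, t = list(h0), t0
    for _ in range(steps):
        k1 = f(h, t)
        k2 = f([hi + 0.5 * dt * ki for hi, ki in zip(h, k1)], t + 0.5 * dt)
        k3 = f([hi + 0.5 * dt * ki for hi, ki in zip(h, k2)], t + 0.5 * dt)
        k4 = f([hi + dt * ki for hi, ki in zip(h, k3)], t + dt)
        h = [hi + dt / 6.0 * (a + 2 * b + 2 * c + d)
             for hi, a, b, c, d in zip(h, k1, k2, k3, k4)]
        t += dt
    return h


# Hypothetical dynamics network f(h, t; theta): one tanh layer, fixed weights.
W = [[-0.5, 0.2], [0.1, -0.3]]
B = [0.05, -0.02]


def f(h: Vector, t: float) -> Vector:
    # Autonomous dynamics: t is accepted for the solver interface but unused.
    return [math.tanh(sum(w * x for w, x in zip(row, h)) + b) for row, b in zip(W, B)]


def g(h: Vector) -> float:
    """Hypothetical linear decoder from hidden state to one pollutant value."""
    return 10.0 * h[0] + 5.0 * h[1]


h_T = rk4_solve(f, h0=[1.0, 0.5], t0=0.0, t1=2.0)
y_hat = g(h_T)
print(round(y_hat, 3))
```

Because the state is integrated rather than stepped, the same model can be queried at any horizon `t1`, including times that fall between sensor readings.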

Algorithm of proposed model

The proposed hybrid deep learning model for air quality prediction combines CNNs, BiLSTM, attention mechanisms, GNNs, and Neural-ODEs. Algorithm 1 presents the complete procedure and its essential functions.

Algorithm 1: Algorithm for the Proposed Model

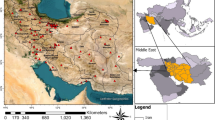

Dataset

The Air Quality Open Dataset (AQD)29 is a large multimodal dataset for comprehensive analysis of air pollution. It is designed to support research on air quality forecasting and pollution control (AQD, available online at https://openaq.org).

Data sources

The AQD dataset combines data from various sources to generate a comprehensive and multimodal dataset for evaluating air quality.

- Ground-based monitoring stations provide real-time measurements of pollutants such as PM2.5, PM10, CO, NO2, SO2, and O3. The measurements are time-stamped and geolocated, giving high temporal and spatial resolution.

- Meteorological data from sources such as NOAA and local weather services provide critical context through variables that influence pollutant dispersion, such as temperature, humidity, wind speed, and precipitation.

- Satellite data from missions and sensors such as Sentinel-5P, MODIS, and Landsat22 include variables such as aerosol optical depth (AOD), tropospheric NO2 concentrations, and surface reflectance, which give a broader geographical picture of pollution levels (Putri and Caraka, R.E., 2024).

Temporal resolution

The AQD provides both high-frequency and aggregate data for versatile analysis and interpretation.

- Hourly Data: Enables detailed short-term pollution analysis and immediate forecasting.

- Daily Aggregates: Reveal long-term trends and seasonal patterns, supporting policy planning and public awareness initiatives.
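To show how hourly records relate to daily aggregates, the sketch below groups hourly readings by UTC calendar day and averages them. Averaging is an assumption (the AQD aggregation rule is not described here), the record layout is hypothetical, and the readings are made-up PM2.5 values.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Dict, List, Tuple


def daily_means(records: List[Tuple[datetime, float]]) -> Dict[str, float]:
    """Average timezone-aware hourly (timestamp, value) pairs per UTC day."""
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for ts, value in records:
        # Convert to UTC first so that days are cut consistently.
        day = ts.astimezone(timezone.utc).date().isoformat()
        sums[day] += value
        counts[day] += 1
    return {day: sums[day] / counts[day] for day in sorted(sums)}


hourly = [
    (datetime(2024, 1, 1, 0, tzinfo=timezone.utc), 30.0),
    (datetime(2024, 1, 1, 1, tzinfo=timezone.utc), 50.0),
    (datetime(2024, 1, 2, 0, tzinfo=timezone.utc), 20.0),
]
print(daily_means(hourly))  # {'2024-01-01': 40.0, '2024-01-02': 20.0}
```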

Spatial coverage

The dataset spans diverse regions globally, including urban, rural, and industrial areas, ensuring comprehensive geographical representation.

- Cities like New Delhi, Beijing, and Los Angeles are well covered, providing insights into urban pollution.

- Rural areas and regions with distinctive topography, such as mountains and coastal zones, are also included, allowing pollution dispersion to be studied in varied environments.

Data attributes

The dataset is rich in features relevant to air quality analysis.

- Pollutant Concentrations: Real-time levels of PM2.5, PM10, CO, NO2, SO2, and O3 in micrograms per cubic meter (µg/m³).

- Meteorological Variables: Temperature (°C), wind speed (m/s), humidity (%), and rainfall (mm).

- Spatial Metadata: The latitude, longitude, and altitude of monitoring stations, which enable advanced spatial modeling with techniques such as Graph Neural Networks (GNNs).
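Station coordinates can be turned into GNN edge weights. The sketch below uses great-circle (haversine) distance and a thresholded Gaussian kernel, a common choice for sensor graphs; the kernel, the bandwidth `sigma_km`, and the cutoff `max_km` are assumptions rather than settings reported for the proposed model, and the three station coordinates are hypothetical.

```python
import math
from typing import Dict, Tuple

LatLon = Tuple[float, float]
EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    s = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(s))


def build_edges(
    stations: Dict[str, LatLon], sigma_km: float = 10.0, max_km: float = 30.0
) -> Dict[Tuple[str, str], float]:
    """Edge weights w_uv = exp(-(d_uv / sigma)^2) for station pairs within max_km."""
    edges = {}
    for u, pu in stations.items():
        for v, pv in stations.items():
            if u == v:
                continue
            d = haversine_km(pu, pv)
            if d <= max_km:
                edges[(u, v)] = math.exp(-((d / sigma_km) ** 2))
    return edges


stations = {"S1": (28.61, 77.21), "S2": (28.70, 77.10), "S3": (28.40, 77.30)}
edges = build_edges(stations)
print(sorted(edges))  # S2 and S3 are about 39 km apart, so they are not linked
```

Closer stations receive larger weights, and the cutoff keeps the graph sparse; weights based on meteorological similarity could be combined with these distance-based ones.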

Multimodal integration

The AQD uniquely integrates multimodal data, combining satellite imagery with ground measurements and meteorological data. This provides a comprehensive understanding of air quality, capturing both localized and large-scale pollution trends8.

Data pre-processing

A series of pre-processing procedures transformed the raw AQD into a refined, consistent, and complete dataset ready for use in hybrid deep learning models for air quality prediction8.

This rigorous methodology ensured data integrity while enhancing the model’s ability to detect complex patterns in air pollution dynamics9. Table 3 presents dataset details and counts before and after data pre-processing.

- Handling Missing Sensor Data: The AQD comprises data from ground-based sensors that measure pollutants, including PM2.5, PM10, carbon monoxide, and ozone. Some sensor readings were missing because of technical malfunctions or adverse weather conditions. Missing values in the time series were filled by linear interpolation, exploiting the temporal continuity of pollutant concentrations (Rao, R.S. et al. 2024).

- Resolving Temporal Misalignment: Because the AQD combines data from sensors, weather stations, and satellite images, time synchronization is a crucial pre-processing step10. All sources were mapped to identical timestamps so that pollutant, weather, and satellite-derived measurements fall within the same temporal framework. This involved converting all time zones to UTC and correcting or removing records with missing or inaccurate timestamps.

- Removing Duplicate and Erroneous Entries: The raw AQD data contained duplicate entries caused by data logging and transmission errors. Duplicates were identified and removed using unique identifiers for location, pollutant type, and timestamp. Entries with implausible values, such as physically impossible pollutant concentrations, were classified as errors and removed to maintain data accuracy.

- Smoothing Noise in Time-Series Data: The air quality data vary because of environmental fluctuations and sensor discrepancies. Moving-average smoothing reduced high-frequency noise and erratic spikes while preserving the underlying trends12. For pollutants that change abruptly, such as during a wildfire, a wavelet-based noise reduction method was used instead to preserve sharp transitions and avoid over-smoothing.

- Standardization and Normalization of Features: The dataset includes variables such as pollutant concentrations (µg/m³), temperature (°C), and wind speed (m/s), each with different ranges and units. Pollutant concentrations and meteorological variables were standardized with z-score scaling so that feature contributions remained consistent throughout training15. Satellite-derived features, such as AOD, were scaled to the 0–1 range to improve their compatibility with neural network inputs.

- Detecting and Treating Outliers: Outliers in the pollution data, which may result from infrequent environmental events or sensor failures, were identified using statistical techniques such as the interquartile range (IQR)12. For example, abnormally high PM2.5 concentrations during off-peak hours were flagged and investigated. To prevent distortion during model training, outliers were either capped at the 95th percentile or replaced with the median of their respective distributions.

- Augmenting Missing Satellite Imagery Features: Satellite imagery often has missing values due to cloud cover and low-resolution observations. In these cases, spatial interpolation filled the gaps with data from nearby regions, and image reconstruction techniques filled the remaining blank pixels so that essential spatial information was preserved.

- One-Hot Encoding of Categorical Data: Certain features, such as location names or pollutant categories, are categorical. One-hot encoding was applied to make these variables compatible with the deep learning model; for example, a location column was transformed into binary indicators, one for each unique region.

- Principal Component Analysis (PCA) for Dimensionality Reduction: The AQD features are numerous and interrelated because they originate from multiple sources. PCA was used to reduce dimensionality while preserving substantial information. For example, principal components were derived to capture the combined influence of meteorological variables such as temperature, humidity, and wind speed on air quality.

- Balancing the Dataset for Rare Events: Severe pollution levels were under-represented in the AQD. Synthetic data generation methods, such as SMOTE (Synthetic Minority Over-sampling Technique), were used to balance the dataset, enabling the model to learn effectively from both typical and extreme pollution events (Ramadan, M.N., and Ali, M.A., 2024).
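Several of the pre-processing steps above can be sketched in plain Python for a single pollutant series. This is an illustrative sketch rather than the authors' pipeline: the helper names are hypothetical, `None` marks a missing reading, the 1.5 × IQR fence is the conventional choice rather than a reported setting, and only unusually high values are treated, mirroring the PM2.5 example. Wavelet denoising, one-hot encoding, PCA, and SMOTE are omitted, and the readings are made up.

```python
import statistics
from typing import List, Optional


def interpolate_missing(series: List[Optional[float]]) -> List[float]:
    """Fill None gaps linearly between known neighbors; copy the nearest value at the ends."""
    known = [i for i, x in enumerate(series) if x is not None]
    if not known:
        raise ValueError("series has no observed values")
    out = []
    for i, x in enumerate(series):
        if x is not None:
            out.append(x)
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            out.append(series[right])
        elif right is None:
            out.append(series[left])
        else:
            frac = (i - left) / (right - left)
            out.append(series[left] + frac * (series[right] - series[left]))
    return out


def treat_high_outliers(xs: List[float], strategy: str = "median") -> List[float]:
    """Flag values above Q3 + 1.5*IQR; replace them with the median or cap at the 95th percentile."""
    q1, _, q3 = statistics.quantiles(xs, n=4, method="inclusive")
    upper = q3 + 1.5 * (q3 - q1)
    if strategy == "median":
        replacement = statistics.median(xs)
    elif strategy == "cap":
        replacement = statistics.quantiles(xs, n=100, method="inclusive")[94]
    else:
        raise ValueError("strategy must be 'median' or 'cap'")
    return [replacement if x > upper else x for x in xs]


def moving_average(xs: List[float], window: int = 3) -> List[float]:
    """Trailing moving average; early points average over the values available."""
    return [statistics.fmean(xs[max(0, i - window + 1): i + 1]) for i in range(len(xs))]


def z_score(xs: List[float]) -> List[float]:
    """Standardize to zero mean and unit (population) standard deviation."""
    mu, sd = statistics.fmean(xs), statistics.pstdev(xs)
    return [(x - mu) / sd for x in xs]


def min_max(xs: List[float]) -> List[float]:
    """Scale values to the 0-1 range."""
    lo, hi = min(xs), max(xs)
    return [(x - lo) / (hi - lo) for x in xs]


raw_pm25 = [10.0, None, 14.0, 13.0, 250.0, 12.0, 11.0, None]  # made-up hourly readings
filled = interpolate_missing(raw_pm25)  # [10.0, 12.0, 14.0, 13.0, 250.0, 12.0, 11.0, 11.0]
cleaned = treat_high_outliers(filled)   # 250.0 is replaced by the median, 12.0
smoothed = moving_average(cleaned)
scaled = z_score(smoothed)
aod_scaled = min_max([0.12, 0.45, 0.30])  # hypothetical AOD values
```

On short series the 95th percentile is itself inflated by the outlier, so the `cap` strategy removes less of the spike than median replacement does.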

Feature selection

Feature selection for the AQD multimodal dataset is crucial for directing the model’s attention to the most relevant information, thereby improving the precision of air quality predictions. The dataset comprises diverse data types, including sensor readings (e.g., PM2.5, CO, ozone), meteorological data (temperature, wind speed), and satellite imagery, which together improve the understanding of air pollution patterns. Careful feature selection can reduce model complexity, improve performance, and mitigate overfitting16.

Sensor data are crucial for predicting pollutants such as PM2.5 and carbon monoxide, but not all sensors have the same impact on the prediction of a particular pollutant. Feature selection identifies the most informative sensors and discards redundant or less relevant data. Meteorological features, such as temperature and humidity, are essential for understanding air pollution dynamics; however, their relevance may vary across regions and time frames. Correlation analysis and statistical tests can be used to identify the features that significantly influence the model’s predictions (Su et al. 2024). For satellite data, which provide spatial context for air quality predictions, feature extraction methods such as CNNs identify pertinent image patterns; pollution hotspots and land use classifications may be selected for incorporation into the model9. Integrating selected features from diverse sources improves the model’s predictive accuracy and computational efficiency while reducing the impact of irrelevant, noisy data. Table 4 presents the details of the feature selection for the AQD multimodal dataset.
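Correlation analysis, one of the screening methods mentioned above, can be sketched as ranking candidate features by the absolute Pearson correlation with the target and keeping those above a threshold. The threshold of 0.3, the feature names, and the values are hypothetical; the selection actually used is summarized in Table 4.

```python
import math
from typing import Dict, List


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def select_features(
    features: Dict[str, List[float]], target: List[float], threshold: float = 0.3
) -> List[str]:
    """Keep features with |r| >= threshold, strongest first."""
    scores = {name: abs(pearson(values, target)) for name, values in features.items()}
    kept = [name for name, score in scores.items() if score >= threshold]
    return sorted(kept, key=lambda name: -scores[name])


target_pm25 = [35.0, 42.0, 50.0, 61.0, 58.0]  # made-up values
candidates = {
    "humidity": [60.0, 65.0, 70.0, 78.0, 75.0],
    "wind_speed": [4.0, 3.5, 3.0, 2.0, 2.2],
    "alternating_flag": [1.0, -1.0, 1.0, -1.0, 1.0],
}
print(select_features(candidates, target_pm25))  # ['humidity', 'wind_speed']
```

Using the absolute value keeps strongly negative predictors such as wind speed, which disperses pollutants; correlation screening only captures linear relationships, so it is usually combined with other tests.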

Hyperparameters

Hyperparameters are settings fixed before training that control how a machine learning model learns and therefore influence its performance. When applying a deep learning model to the AQD multimodal dataset, several key hyperparameters must be tuned to improve performance17. Table 5 lists the hyperparameter settings.

Performance measuring parameters

Table 6 presents the key metrics used to compare the proposed model with the existing models.
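Assuming the standard definitions, the regression metrics used throughout the experiments (MSE, MAE, RMSE, R2, MAPE, and the explained variance score) can be computed as in the plain-Python sketch below. scikit-learn provides equivalents in `sklearn.metrics` (for example `mean_squared_error`, `r2_score`, `explained_variance_score`, and `mean_absolute_percentage_error`); note that scikit-learn returns MAPE as a fraction, whereas this sketch reports it as a percentage. The sample values are made up.

```python
import math
from typing import Dict, List


def regression_metrics(y_true: List[float], y_pred: List[float]) -> Dict[str, float]:
    """Standard regression metrics for paired observations and predictions."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    mean_err = sum(errors) / n
    var_err = sum((e - mean_err) ** 2 for e in errors) / n
    return {
        "MSE": mse,
        "MAE": mae,
        "RMSE": math.sqrt(mse),
        "R2": 1 - (mse * n) / ss_tot,          # 1 - SS_res / SS_tot
        "EVS": 1 - var_err / (ss_tot / n),     # 1 - Var(error) / Var(y_true)
        # MAPE in percent; undefined if any true value is zero.
        "MAPE": 100 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n,
    }


print(regression_metrics([10.0, 20.0, 30.0, 40.0], [12.0, 18.0, 33.0, 37.0]))
```

R2 and EVS coincide when the errors have zero mean, as in this example; they differ when predictions are systematically biased.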

Experimental results

The proposed hybrid model and the existing CNN-LSTM hybrid model, GNN, Bi-LSTM, and CNN-GNN hybrid model were implemented on the AQD multimodal dataset, and the performance metrics listed in Table 6 were calculated.

Experimental setup

The experiments were run on a system with NVIDIA Tesla V100 or A100 graphics processing units (GPUs) to handle the demanding computations required for training, which made processing the large AQD dataset more efficient. Python with TensorFlow and Keras was used to develop and train the deep learning models, while PyTorch was used for specific components such as the Graph Neural Networks (GNNs) and Neural Ordinary Differential Equations (Neural-ODEs). Data pre-processing used NumPy and Pandas, model performance was evaluated with scikit-learn, and CUDA was used to accelerate training on the GPUs.

Dataset splitting

The dataset was partitioned into training, validation, and test sets using a time-based split, so that the model is evaluated under conditions that reflect real-world forecasting.

- Training Set: 80% of the dataset (the earliest time intervals).

- Validation Set: 10% of the dataset (the data immediately following the training period, used for model optimization).

- Test Set: 10% of the dataset, consisting of the most recent data, used for the final evaluation.
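The 80/10/10 time-based split described above can be sketched as follows. The sketch assumes the records are already sorted by timestamp, and the function name is hypothetical; the key point is that no shuffling occurs, so the model is never validated or tested on data older than its training data.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def chronological_split(
    records: Sequence[T], train: float = 0.8, val: float = 0.1
) -> Tuple[List[T], List[T], List[T]]:
    """Split time-ordered records into train/validation/test without shuffling."""
    if train + val > 1.0:
        raise ValueError("train + val must not exceed 1")
    n = len(records)
    n_train = round(n * train)
    n_val = round(n * val)
    ordered = list(records)
    return (
        ordered[:n_train],                 # earliest intervals
        ordered[n_train:n_train + n_val],  # period right after training
        ordered[n_train + n_val:],         # most recent data
    )


hours = list(range(100))  # stand-in for 100 time-ordered hourly records
train_set, val_set, test_set = chronological_split(hours)
print(len(train_set), len(val_set), len(test_set))  # 80 10 10
```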

Simulation results

The following simulation results were obtained for the proposed hybrid model and the existing deep learning models25,26,27,28,29,30.

Scenario 1 (For different epochs)

The proposed hybrid model (CNN + BiLSTM + Attention + GNN + Neural-ODE) improves as the number of training epochs increases (50, 100, 150): with more epochs, the model captures spatial and temporal features better, yielding lower error metrics such as RMSE and MAE.

Figures 6, 7, 8, 9, and 10 compare the proposed hybrid model (CNN + BiLSTM + Attention + GNN + Neural-ODE) with multiple existing models, including those of Ahmed et al. and Rabie et al., over 50, 100, and 150 epochs. The figures indicate that the proposed hybrid model consistently outperforms existing models, including the CNN-LSTM hybrid model, GNNs, Bi-LSTM, and the CNN-GNN hybrid model, on the key metrics MSE, MAE, RMSE, R2, and MAPE. As the number of epochs increases, the proposed model shows a substantial reduction in error metrics (MSE, MAE, RMSE), a higher R2, and a lower MAPE. This highlights the model’s effectiveness in learning both spatial and temporal attributes. Integrating attention mechanisms, graph neural networks, and neural ordinary differential equations improves the model’s precision and robustness, yielding clear gains over traditional approaches.

Scenario 2 (For different optimizers)

The proposed hybrid model’s performance is evaluated with three optimizers: Adam, SGD, and RMSprop. For each optimizer, the model is trained for 150 epochs and assessed using MSE, MAE, RMSE, R2, and MAPE.

Figures 11, 12, 13, 14, and 15 compare the performance of the proposed hybrid model with that of existing models under the Adam, SGD, and RMSprop optimizers. The proposed hybrid model consistently outperforms existing models across all evaluation metrics. The Adam optimizer yields the best results, with the lowest MSE, MAE, RMSE, and MAPE and the highest R2, indicating that Adam is the most effective optimizer for the proposed model. SGD and RMSprop produce comparatively higher error metrics and lower performance. These results highlight the effectiveness of the proposed hybrid model, particularly with the Adam optimizer, in capturing the complex spatial and temporal relationships in the dataset.

Scenario 3 (For different learning rates)

Scenario 3 evaluates the proposed hybrid model against existing models at learning rates of 0.001, 0.01, and 0.1. The findings indicate that the proposed hybrid model consistently outperforms existing models regardless of the learning rate.

Figures 16, 17, 18, 19, and 20 present the performance of the proposed hybrid model and existing models under learning rates of 0.001, 0.01, and 0.1. Across MSE, MAE, RMSE, R2, and MAPE, the proposed hybrid model consistently outperforms the existing models, irrespective of the learning rate.

A learning rate of 0.001 gives the best performance, minimizing MSE, MAE, and RMSE while attaining the highest R2 and the lowest MAPE. Increasing the learning rate to 0.01 and 0.1 causes a marginal decrease in performance; nevertheless, the proposed model still surpasses the existing models. Although the learning rate clearly affects training, the proposed hybrid architecture maintains higher predictive accuracy and generalization across the learning rates tested, supporting its effectiveness for air quality prediction tasks.

Scenario 4 (Combined Results)

Scenario 4 combines the analyses over the number of epochs (50, 100, 150), optimizers (Adam, SGD, RMSprop), and learning rates (0.001, 0.01, 0.1).

Table 7 compares the performance of the proposed hybrid model across epochs (50, 100, 150), optimizers (Adam, SGD, RMSprop), and learning rates (0.001, 0.01, 0.1). The performance metrics include Mean Squared Error (MSE), Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), R-squared (R2), Explained Variance Score (EVS), Mean Absolute Percentage Error (MAPE), Precision, Recall, F1-Score, and Area Under the ROC Curve (AUC-ROC). The model performs best at 150 epochs with the Adam optimizer and a learning rate of 0.001, giving the lowest error metrics (MSE, MAE, and RMSE) and the highest R2, Precision, and AUC-ROC scores. Although changing the learning rate or optimizer has only a modest effect on performance, Adam consistently outperforms SGD and RMSprop across configurations. The proposed hybrid model, which combines CNN, BiLSTM, Attention, GNN, and Neural-ODE, therefore shows strong predictive capability, particularly when trained with a lower learning rate.

Figure 21 shows the training and validation accuracy and loss. As training progresses, both training and validation accuracy improve while the loss decreases, indicating that the training procedure is sound and that the model performs robustly on air quality prediction tasks.

Statistical analysis and p-test

Tenfold cross-validation was used to measure the performance and generalizability of the proposed model on the complex AQD dataset. This approach is especially suitable for datasets like the AQD, which contain mixed data types such as sensor values, meteorological variables, and satellite images. The dataset is divided into 10 folds of equal size. In each of the 10 iterations, the model is trained on nine folds and tested on the remaining fold, so every data point is used exactly once for validation and nine times for training, which gives a more reliable estimate of performance. Figure 22 shows the training and validation accuracy and loss for all folds. The procedure assesses how well the model performs across different parts of the dataset, which may differ in environmental conditions and regions, while reducing the risk of overfitting by validating on unseen data.
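The tenfold procedure can be sketched as an index generator in which each fold serves once as the validation set while the other nine form the training set. Whether the folds were shuffled or kept in time order is not stated, so this sketch uses contiguous, unshuffled folds as an assumption; `sklearn.model_selection.KFold` produces the same partition with its default `shuffle=False`. The function name is hypothetical.

```python
from typing import Iterator, List, Tuple


def k_fold_indices(n: int, k: int = 10) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_idx, val_idx) for k contiguous folds of near-equal size."""
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= n")
    # The first n % k folds get one extra sample so that all n indices are used.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, val_idx
        start += size


print([len(val) for _, val in k_fold_indices(25, 10)])  # [3, 3, 3, 3, 3, 2, 2, 2, 2, 2]
```

Each index appears in exactly one validation fold, which is what makes the per-fold scores usable for the paired comparisons reported in Table 8.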

Table 8 presents the statistical significance test results for the proposed hybrid model compared with two baseline models, CNN-LSTM and CNN-GNN, obtained through tenfold cross-validation on the AQD dataset. The evaluated metrics are RMSE, MAE, and R2, each reported as mean and standard deviation. The proposed hybrid model outperforms both baselines on all metrics, with lower errors (RMSE: 6.25 vs. 8.90 and 7.85; MAE: 3.95 vs. 5.25 and 4.80) and higher explained variance (R2: 0.987 vs. 0.910 and 0.940). Paired t-tests show that these improvements over CNN-LSTM and CNN-GNN are statistically significant (p < 0.001), and an ANOVA test confirms a significant difference between the models (p < 0.001). These results show that the proposed hybrid model is more robust and has higher predictive accuracy than traditional deep learning approaches for air quality forecasting.
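The paired t-test compares two models fold by fold. The sketch below computes the paired t statistic from per-fold scores, \(t=\bar d/(s_d/\sqrt{n})\) with \(n-1\) degrees of freedom (9 for tenfold cross-validation); converting it to a p-value needs the t distribution, which `scipy.stats.ttest_rel` handles. The function name and the inputs are illustrative, not the per-fold results behind Table 8.

```python
import math
import statistics
from typing import List


def paired_t_statistic(a: List[float], b: List[float]) -> float:
    """t = mean(d) / (stdev(d) / sqrt(n)) for paired differences d = a - b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two paired samples of equal length >= 2")
    d = [x - y for x, y in zip(a, b)]
    # statistics.stdev uses the sample (n - 1) denominator, as the paired t-test requires;
    # identical differences (stdev 0) would make t undefined.
    return statistics.mean(d) / (statistics.stdev(d) / math.sqrt(len(d)))


t = paired_t_statistic([3.0, 4.0, 5.0], [2.0, 2.0, 2.0])  # d = [1, 2, 3]
print(round(t, 3))  # 3.464
```

Pairing by fold removes the variation that is common to both models on a given fold, which is why it is more sensitive than comparing the two sets of scores independently.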

Ablation analysis

This section presents an ablation analysis that evaluates the contribution of the essential components of the proposed hybrid model for air quality prediction. We focus on three key components: the attention mechanism, Graph Neural Networks (GNNs), and Neural Ordinary Differential Equations (Neural-ODEs). These components strengthen the model’s ability to capture the complex spatial, temporal, and dynamic relationships in the AQD dataset, which combines sensor data, meteorological information, and satellite imagery. The ablation analysis determines how removing or altering each component affects predictive performance, measured with the standard regression metrics Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and R2 (coefficient of determination).

Table 9 and Fig. 23 present the results of the ablation analysis, which show the significant impact of each component on the performance of the proposed hybrid model. The CNN + BiLSTM baseline (Model 1) performs satisfactorily, with an RMSE of 9.23, an MAE of 5.12, and an R2 of 0.91, but it lacks the mechanisms needed to handle the complexity of air quality data effectively. Adding the attention mechanism (Model 2) improves performance, decreasing RMSE to 7.85 and MAE to 4.02 and increasing R2 to 0.95, which suggests that prioritizing key features improves predictive accuracy. Integrating GNNs (Model 3) improves the model’s ability to capture spatial relationships among sensor locations, which is crucial for air quality prediction; nonetheless, its performance remains below that of the attention-based model.

Model 4, which integrates CNN, BiLSTM, attention mechanisms, GNNs, and Neural-ODEs, demonstrates optimal performance with an RMSE of 6.21, MAE of 3.89, and R2 of 0.988. This combination enhances the model’s accuracy by highlighting essential features and spatial relationships while utilizing Neural-ODEs to depict the continuous dynamics of air quality over time, thereby improving predictive capability. The ablation analysis demonstrates the importance of each component in the proposed hybrid model. The attention mechanism and graph neural networks improve performance by highlighting relevant features and capturing spatial relationships. Incorporating Neural-ODEs significantly enhances the model’s ability to forecast changes in air quality over time. The elimination of any component reduces the model’s ability to accurately predict pollutant levels, underscoring the importance of each factor in optimizing performance. The integrated model, which encompasses all elements, achieves optimal accuracy and reliability in air quality forecasting, a crucial aspect for effective pollution management and the development of environmental policies.

Comparisons with state-of-the-art methods

The proposed hybrid model (CNN + BiLSTM + Attention + GNN + Neural-ODE) outperforms current air quality prediction models, achieving a root mean squared error (RMSE) of 6.21, a mean absolute error (MAE) of 3.89, and an R2 of 0.988. The model combines CNNs for spatial feature extraction, BiLSTM for temporal analysis, attention mechanisms to highlight essential features, and GNNs to model spatial relationships, providing a comprehensive approach to air quality prediction. Neural-ODEs further allow the model to represent continuous fluctuations in air quality over time, increasing predictive reliability. Compared with other advanced models, such as Rabie et al.2 and Kumar & Kumar3, the proposed model achieves higher accuracy and robustness.

As shown in Table 10, although models employing attention mechanisms or GNNs demonstrate performance improvements, they remain less effective overall than the proposed hybrid approach. The principal advantage of the proposed model is its integration of Neural-ODEs, which improves its capacity to monitor the dynamic attributes of air quality more effectively. The Proposed Hybrid Model offers a more accurate and scalable approach to real-time air quality forecasting, thereby enhancing environmental monitoring and decision-making capabilities.

Computational complexity and resource analysis

This work proposes a hybrid deep learning model that combines several state-of-the-art components (CNNs, BiLSTM, attention mechanisms, GNNs, and Neural-ODEs) to obtain more accurate air quality predictions. The added architectural complexity improves prediction performance but increases computational resource consumption. To assess the practical feasibility of deploying the model, we evaluated its computational complexity and resource utilization and compared it with two baseline models, the CNN-LSTM hybrid and the CNN-GNN hybrid (Table 11).

The proposed hybrid model requires 390 s of training time per epoch, about 22% more than the CNN-LSTM baseline (320 s) and on par with the CNN-GNN hybrid (385 s). Notably, the adaptive pooling incorporated in the architecture reduces training time by 22%, from 500 s per epoch without this optimization to 390 s per epoch. Adaptive pooling sizes its pooling regions to the input, shrinking feature maps during the early computation stages and thereby reducing computational cost while preserving the most prominent features.
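The two 22% figures associated with this paragraph refer to different comparisons, which a quick percentage calculation makes explicit. The sketch assumes that the 390 s per epoch of the full hybrid model is the figure compared against the 500 s per epoch measured without adaptive pooling; the helper name is hypothetical.

```python
def relative_change(new: float, old: float) -> float:
    """Percentage change from old to new (negative means a reduction)."""
    return 100.0 * (new - old) / old


# Per-epoch training times in seconds, as quoted in the text.
hybrid, cnn_lstm, no_adaptive_pooling = 390.0, 320.0, 500.0
print(round(relative_change(hybrid, cnn_lstm), 1))             # 21.9  (slower than CNN-LSTM)
print(round(relative_change(hybrid, no_adaptive_pooling), 1))  # -22.0 (saving from adaptive pooling)
```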

The hybrid model’s inference latency remains practical, averaging 13 ms per sample, which is close to the CNN-GNN model (12 ms), higher than the CNN-LSTM model (8 ms), and within the bounds required for real-time applications. Its GPU memory consumption during training is about 7.8 GB, slightly higher than that of the CNN-GNN model (7.6 GB) and substantially higher than that of the CNN-LSTM model (5.1 GB); these requirements are still well within the limits of the available GPU hardware [23]. The hybrid model has 12 million parameters, reflecting the architectural complexity needed to express complex spatiotemporal and continuous dynamics; the gain in predictive performance thus comes with a larger model. Overall, although the proposed hybrid model requires more computation than simpler baselines, architectural optimizations (in particular, adaptive pooling) partly offset this cost, and its computational footprint remains compatible with current hardware, making it suitable for real-time environmental monitoring systems. In future work, we will investigate further efficiency improvements, such as model pruning and quantization, to enable deployment in more resource-constrained settings.

Conclusion and future directions

This study presents a hybrid deep learning model for air quality prediction that combines CNNs, BiLSTM, attention mechanisms, GNNs, and Neural-ODEs. The model was evaluated on the AQD dataset, which integrates sensor data, meteorological information, and satellite imagery, allowing it to capture the spatial and temporal dynamics of air pollution effectively. By integrating diverse data sources with these techniques, the model predicts pollutant concentrations with high accuracy, achieving an RMSE of 6.21, an MAE of 3.89, and an R2 of 0.988 and outperforming baseline methods such as CNN-LSTM and CNN-GNN hybrids. The ablation analysis showed that each component (attention mechanisms, GNNs, and Neural-ODEs) contributes to performance and that their integration yields a more accurate, reliable, and dynamic system for air quality prediction. Such a system supports real-time monitoring and pollution management, informing environmental policies and improving public health outcomes.

Although the proposed model has shown encouraging results, several directions remain for future research. One is the incorporation of additional data sources: the model currently relies on sensor data, meteorological information, and satellite imagery, but factors such as traffic patterns, industrial emissions, and socioeconomic variables could provide a more comprehensive understanding of air pollution dynamics and yield more accurate predictions, particularly in regions with diverse or variable pollution sources. Another significant opportunity lies in real-time forecasting. The model currently produces predictions from historical data, whereas practical applications, especially in urban settings, require real-time forecasting for effective pollution management and health advisories. With online learning, the model could be updated continuously with new data, producing up-to-date predictions that support timely decisions and actions to protect public health.

Moreover, interpretability and explainability become increasingly important as machine learning models grow more complex. Understanding the reasoning behind the model’s predictions, particularly for sophisticated components such as Neural-ODEs and attention mechanisms, is essential for building trust among stakeholders. Future research may develop techniques to explain the model’s decision-making process, which would benefit policymakers seeking clear and actionable insights from its outputs. The model also has considerable potential for extension to multi-regional or global air quality forecasting. This study focused on regional predictions; applying the model globally could provide a more comprehensive understanding of international pollution trends and their health consequences. This would require adapting the model to diverse datasets from various regions, accounting for the unique environmental, economic, and cultural factors that influence air quality in each region. Global forecasting models could inform international environmental policies and address transboundary pollution, strengthening global efforts to combat climate change and air pollution.

Finally, optimizing the model for deployment on low-resource devices is another promising direction. Access to high-performance computing infrastructure is limited in many developing nations. Adapting the model to run efficiently on edge devices or local servers could extend real-time air quality monitoring to rural or underserved regions. This would support the wide deployment of air quality forecasting systems, enabling local governments and communities to adopt proactive strategies against pollution.

Data availability

The data supporting this study’s findings are available from the corresponding author upon reasonable request.

References

Ahmed, A. A. M. et al. An advanced deep learning predictive model for air quality index forecasting with remote satellite-derived hydro-climatological variables. Sci. Total Environ. 906, 167234. https://doi.org/10.1016/j.scitotenv.2023.167234 (2024).

Rabie, R., Asghari, M., Nosrati, H., Niri, M. E. & Karimi, S. Spatially resolved air quality index prediction in megacities with a CNN-Bi-LSTM hybrid framework. Sustain. Cities Soc. 109, 105537. https://doi.org/10.1016/j.scs.2023.105537 (2024).

Kumar, S. & Kumar, V. Multi-view Stacked CNN-BiLSTM (MvS CNN-BiLSTM) for urban PM2.5 concentration prediction of India’s polluted cities. J. Clean. Prod. 444, 141259. https://doi.org/10.1016/j.jclepro.2023.141259 (2024).

Barthwal, A. & Goel, A. K. Advancing air quality prediction models in urban India: A deep learning approach integrating DCNN and LSTM architectures for AQI time-series classification. Modeling Earth Syst. Environ. 10(2), 2935–2955. https://doi.org/10.1007/s40808-023-01820-9 (2024).

Wu, Y., Qian, C. & Huang, H. Enhanced air quality prediction using a coupled DVMD informer-CNN-LSTM model optimized with dung beetle algorithm. Entropy 26(7), 534. https://doi.org/10.3390/e26070534 (2024).

Prado-Rujas, I. I. & García-Dopico, B. A multivariable sensor-agnostic framework for spatio-temporal air quality forecasting based on deep learning. Eng. Appl. Artif. Intell. 127, 107271. https://doi.org/10.1016/j.engappai.2023.107271 (2024).

Liu, Q., Cui, B. & Liu, Z. Air quality class prediction using machine learning methods based on monitoring data and secondary modeling. Atmosphere 15(5), 553. https://doi.org/10.3390/atmos15050553 (2024).

Wang, Y., Liu, K. & He, Y. Enhancing air quality forecasting: A novel spatio-temporal model integrating graph convolution and multi-head attention mechanism. Atmosphere 15(4), 418. https://doi.org/10.3390/atmos15040418 (2024).

Pande, C. B. & Kushwaha, N. L. Daily scale air quality index forecasting using bidirectional recurrent neural networks: Case study of Delhi, India. Environ. Pollut. 351, 124040. https://doi.org/10.1016/j.envpol.2023.124040 (2024).

Abbas, Z. & Raina, P. A wavelet enhanced approach with ensemble-based deep learning approach to detect air pollution. Multim. Tools Appl. 83(6), 17531–17555. https://doi.org/10.1007/s11042-023-14565-5 (2024).

Ramadan, M. N. & Ali, M. A. Real-time IoT-powered AI system for monitoring and forecasting of air pollution in industrial environment. Ecotoxicol. Environ. Saf. 283, 116856. https://doi.org/10.1016/j.ecoenv.2023.116856 (2024).

Natarajan, S. K. & Shanmurthy, P. Optimized machine learning model for air quality index prediction in major cities in India. Sci. Rep. 14(1), 6795. https://doi.org/10.1038/s41598-023-31932-5 (2024).

Rao, R. S., Kalabarige, L. R., Alankar, B. & Sahu, A. K. Multimodal imputation-based stacked ensemble for prediction and classification of air quality index in Indian cities. Comput. Electr. Eng. 114, 109098 (2024).

Manna, T. & Anitha, A. Hybridization of rough set–wrapper method with regularized combinational LSTM for seasonal air quality index prediction. Neural Comput. Appl. 36(6), 2921–2940. https://doi.org/10.1007/s00521-023-07951-8 (2024).

Swetha, G., Datla, R., Vishnu, C. & Mohan, C. K. M2-APNet: A multimodal deep learning network to predict major air pollutants from temporal satellite images. J. Appl. Remote Sens. 18(1), 012005 (2024).

Ayus, I., Natarajan, N. & Gupta, D. Comparison of machine learning and deep learning techniques for the prediction of air pollution: A case study from China. Asian J. Atmos. Environ. 17(1), 4. https://doi.org/10.5572/ajae.2023.17.1.004 (2024).

Cui, B. & Liu, M. Deep learning methods for atmospheric PM2.5 prediction: A comparative study of transformer and CNN-LSTM-attention. Atmos. Pollut. Res. 14(9), 101833. https://doi.org/10.1016/j.apr.2023.101833 (2023).

Gilik, A., Ogrenci, A. S. & Ozmen, A. Air quality prediction using CNN+LSTM-based hybrid deep learning architecture. Environ. Sci. Pollut. Res. 1, 1–19. https://doi.org/10.1007/s11356-022-22990-3 (2022).

Duan, J., Gong, Y., Wang, J. & Li, Z. Air-quality prediction based on the ARIMA-CNN-LSTM combination model optimized by dung beetle optimizer. Sci. Rep. 13(1), 12127. https://doi.org/10.1038/s41598-023-39325-4 (2023).

Sarkar, N., Gupta, R., Choubey, P. K., Soni, R. & Verma, S. Air quality index prediction using an effective hybrid deep learning model. Environ. Pollut. 315, 120404. https://doi.org/10.1016/j.envpol.2022.120404 (2022).

Elbaz, K., Shaban, W. M., Elbagoury, B. M., Hammad, M. & Elkhateeb, A. Real-time image-based air quality forecasts using a 3D-CNN approach with an attention mechanism. Chemosphere 333, 138867. https://doi.org/10.1016/j.chemosphere.2023.138867 (2023).

Tsokov, S., Lazarova, M. & Aleksieva-Petrova, A. A hybrid spatiotemporal deep model based on CNN and LSTM for air pollution prediction. Sustainability 14(9), 5104. https://doi.org/10.3390/su14095104 (2022).

Zhang, Q. et al. Deep-AIR: A hybrid CNN-LSTM framework for fine-grained air pollution estimation and forecast in metropolitan cities. IEEE Access 10, 55818–55841. https://doi.org/10.1109/ACCESS.2022.3184756 (2022).

Zhao, Y. et al. Deep-learning-based models for air quality prediction using public data. Environ. Sci. Pollut. Res. 30(6), 14619 (2023).

Kataria, A. & Puri, V. AI-and IoT-based hybrid model for air quality prediction in a smart city with network assistance. IET Netw. 11(6), 221–233. https://doi.org/10.1049/ntw2.12051 (2022).

Ko, K. K. & Jung, E. S. Improving air pollution prediction system through multimodal deep learning model optimization. Appl. Sci. 12(20), 10405 (2022).

Bekkar, A., Hssina, B., Douzi, S. & Douzi, K. Air-pollution prediction in smart city, deep learning approach. J. Big Data 8, 1–21 (2021).

Shahid, N., Shah, M. A., Khan, A., Maple, C. & Jeon, G. Towards greener smart cities and road traffic forecasting using air pollution data. Sustain. Cities Soc. 72, 103062 (2021).

Air Quality Open Dataset (AQD). https://openaq.org/ and Open Access Hub (accessed 21 June 2024).

Barbosa, A. et al. An integrated machine learning approach for monitoring air pollution using mobile sensor networks. Environ. Monit. Assess. 195(7), 441. https://doi.org/10.1007/s10661-023-11050-7 (2023).

Acknowledgements

The authors extend their appreciation to Taif University, Saudi Arabia, for supporting this work through project number (TU-DSPP-2024-210).

Funding

This research was funded by Taif University, Saudi Arabia, Project number (TU-DSPP-2024-210).

Author information

Contributions

Umesh Kumar Lilhore conceptualized the study, designed the hybrid deep learning model, and led the implementation and analysis. Sarita Simaiya assisted in data preprocessing, model implementation, and evaluation, contributing to the comparison with other models. Rajesh Kumar Singh conducted experiments, analyzed the results, and optimized the model to achieve improved prediction accuracy. Abdullah M. Baqasah contributed to the integration of Neural ODEs and the analysis of continuous dynamics in air quality modeling. Roobaea Alroobaea provided expertise in satellite imagery processing and helped incorporate CNNs for feature extraction. Majed Alsafyani contributed to the development of the Graph Neural Network (GNN) component, focusing on the spatial relationships between sensor locations. Afnan Alhazmi and Monish Khan contributed to the overall study, including revision, design, analysis, and efficiency improvements, with a focus on reducing training time.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

All authors have read, understood, and have complied as applicable with the statement on “Ethical responsibilities of Authors” as found in the Instructions for Authors.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lilhore, U.K., Simaiya, S., Singh, R.K. et al. Advanced air quality prediction using multimodal data and dynamic modeling techniques. Sci Rep 15, 27867 (2025). https://doi.org/10.1038/s41598-025-11039-1

DOI: https://doi.org/10.1038/s41598-025-11039-1