Abstract

This study aims to establish and validate prediction models based on novel machine learning (ML) algorithms for augmented renal clearance (ARC) in critically ill patients with sepsis. Patients with sepsis were extracted from the Medical Information Mart for Intensive Care IV (MIMICIV) database. Seven ML algorithms were applied for model construction. The Shapley Additive Explanations (SHAP) method was used to explore the significant characteristics. Subgroup analysis was conducted to verify the robustness of the model. A total of 2673 septic patients were included in the analysis, of which 518 patients (19.4%) developed ARC within one week after ICU admission. The Extreme Gradient Boosting (XGBoost) model had the best predictive performance (AUC: 0.841) with the highest balanced accuracy (0.778) and the second-highest NPV (0.950). The maximum creatinine level, maximum blood urea nitrogen level, minimum creatinine level, and history of renal disease were found to be the four most significant parameters through SHAP analysis. The AUCs were higher than 0.75 in predicting ARC through subgroup analysis. The XGBoost ML prediction model might help clinicians to predict the onset of ARC early among septic patients and make timely dose adjustments to avoid therapeutic failure.

Similar content being viewed by others

Introduction

Augmented renal clearance (ARC) is characterized by the increased renal elimination of circulating solutes1,2. Previous studies have reported a prevalence of ARC ranging from 14 to 80% in general intensive care unit (ICU) patients, indicating its common occurence3,4,5,6,7,8,9,10. This phenomenon, while not yet fully understood, is likely linked to systemic inflammatory syndrome resulting in increased cardiac output, reduced vascular resistance and, ultimately, augmented renal blood flow11,12. ARC has also been associated with reserved renal function and increased ability to endure critical illness13. As ARC accelerates the glomerular filtration of renally eliminated drugs, it leads to decreased serum drug concentrations, increased risk of treatment failure, and the development of antibiotic resistance4,14,15,16. Early and adequate antibiotic administration is important for the treatment and prognosis of critically ill septic patients17,18. ARC is a phenomenon that renal clearance is accelerated beyond normal range and it has been historically under-reported. Considering an accurate assessment of renal function remains a challenge in critically ill patient, clinicians may overlook this medical complications and result in treatment failure. To avoid therapeutic failure, early identification of ARC and optimal adjustments in drug dosing are essential.

ARC is most commonly defined by creatinine clearance (CrCl) higher than 130 mL/min/1.73 m22,13,19,20,21,22. However, complex and time-consuming CrCl measurements are not routinely performed in the ICU for daily treatments12. To monitor glomerular filtration rate (GFR), various estimation formulas, such as the Cockcroft–Gault (CG) equation, Modification of Diet in Renal Disease (MDRD) Study equation, and the Chronic Kidney Disease Epidemiology Collaboration (CKD-EPI) equation, have been adopted in ICU daily monitoring; however, these formulas have been verified to be inaccurate for patients with ARC in ICU9,13,23,24,25. Therefore, a robust model for predicting ARC specifically in septic patients in ICU is still lacking.

In recent years, with the development of statistical theory and computer technology, machine learning (ML) techniques have become increasingly popular among medical professionals26. The clinical applications of ML range from early diagnosis to prognostic prediction, and various ML models have been beneficial in numerous diseases27,28,29,30,31. Meanwhile, the prediction results of machine learning methods may be superior to those obtained with classical logistic regression and Cox regression analyses32,33,34,35,36,37. However, the advantage of ML algorithms in predicting ARC in septic ICU patients has not yet been demonstrated. To this end, the main objective of this study was to develop and validate an optimal interpretable machine learning model for early prediction of ARC in septic ICU patients, in order to assist clinicians in making timely and accurate drug dosing adjustments, therefore helping to improve the prognosis of the patients.

Results

Patient characteristics

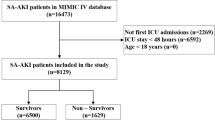

A total of 32,982 patients were diagnosed with sepsis on admission to ICU. However, 30,309 patients were excluded, in a stepwise manner, according to the exclusion criteria (Fig. 1). Finally, 2673 patients with sepsis were included in the final analysis, of which 518 patients (19.4%) had ARC within 7 days after ICU admission, and1532 of the 2673 patients (57.3%) were male. Blood infection (33.6%) was the most common among the specific infection sites. The median age was significantly lower (58 vs. 68; P < 0.001) in the ARC group at admission, compared with the non-ARC group. Patients from the ARC group were found to have a lower SOFA score (5 vs. 7; P < 0.001), less use of vasopressor (40.3% vs. 48.4%; P = 0.001), and more use of mechanical ventilation (73.6% vs. 62.8%; P < 0.001) on ICU admission, and they were more commonly complicated with cerebrovascular disease (24.7%). The baseline characteristics and laboratory data of the ARC and non-ARC groups are detailed in Supplementary TableS1.

The ARC group included survivors (ICU mortality: 12.4% vs. 21.2%; P < 0.001) with longer lengths of stay in ICU (ICU LOS: 9.64 vs. 6.68; P < 0.001), as detailed in Supplementary Table S2. Additionally, the average plasma concentration during antimicrobial therapy in the ARC group was significantly lower among patients administered with vancomycin (15 vs. 19; P < 0.001; see Supplementary Table S3).

Feature selection

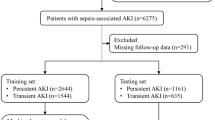

A total of 159 baseline clinical variables were used as candidate predictors for LASSO regression (Supplementary TableS1). Of these, 37 variables, including routinely available demographic, clinical characteristics, and laboratory data, were finally selected as statistically significant predictors of ARC through LASSO analysis (Fig. 2). Baseline characteristics of the selected variables are presented in Table 1. Based on the selected features, we built seven machine learning models: SVM, XGBoost, RF, LR, DT, KNN and ANN.

Model interpretation

We generated seven ML models to predict the development of ARC in patients with sepsis after admission to ICU. Figure 3 shows the discriminative performance of the seven models in terms of their ROC curves. Among the seven models, XGBoost (AUC = 0.841) showed the best predictive effect for ARC among septic patients in the validation cohort, outperforming random forest (AUC = 0.835), SVM (AUC = 0.753), decision tree (AUC = 0.772), ANN (AUC = 0.644), KNN (AUC = 0.516) and logistic regression (AUC = 0.830). We also used area under precision–recall curve (AUPRC) to compare performance between models and AUPRC are better for imbalanced datasets at assessing performance. The best-performing model on the test set based on AUPRC was the XGBoost (AUPRC 0.496), then comes logistic regression (AUPRC 0.487). random forest (AUPRC 0.470), SVM (AUPRC 0.436), decision tree (AUPRC 0.422), KNN (AUPRC 0.392) and ANN (AUPRC 0.194) (see Supplementary SFig. 1). We also evaluated the accuracy, sensitivity, specificity, PPV, NPV and kappa coefficient, as summarized in Table 2. The XGBoost model had the best discrimination with the highest balanced accuracy (0.778) and Kappa coefficient (0.397), as well as the second-highest NPV (0.950). To examine the calibration of the models, calibration curves for the seven ML models were generated, as shown in Supplementary SFig. 2. XGBoost model was well-calibrated in the validation set and achieved a low brier score of 0.144. Overall, these results indicate that the XGBoost model was the optimal model. As shown in Supplementary SFig. 3, our machine learning model clearly outperforms the ARCTIC score developed by Barletta et al.7 In trauma subset, our model shows a higher AUC compared with ARCTIC score (0.751 vs. 0.624).

Using the SHAP values, we hoped to gain more insight into how the XGBoost model predicts the development of ARC. In Fig. 4, the y-axis represents a feature and the x-axis location indicates the mean SHAP value. For the XGBoost model, the SHAP summary graphic reveals that the maximum creatinine level, maximum blood urea nitrogen level, minimum creatinine level, and history of renal disease are the four most significant parameters. We then took two random samples from the validation cohort and ran the LIME algorithm using the data (Fig. 5). The expected probability was 0.97 for non-ARC group and 0.70 for ARC group, according to the XGBoost model. Results for both samples confirmed the prediction ability of the XGBoost model.

Two examples for explaining individuals’ prediction results using the LIME algorithm. The length of the bar for each feature reflects the weight of that feature in the prediction. A longer bar represents a characteristic that contributes more to ARC (example 1) or to non-ARC (example 2). In this context, example 1 has a higher risk of ARC while there is a higher probability to have no ARC for example 2.

Subgroup analysis

A subgroup analysis was conducted based on age, SOFA score, BMI and history of CVD. Each AUC was more than 0.75 for ARC prediction, indicating robustness of the XGBoost model among patients from various subgroups. Notably, the XGBoost model for the subgroup of patients with SOFA higher than 6 (AUC = 0.843) showed the best prediction effect. More details regarding the subgroup analysis are shown in Table 3.

Discussion

In this study, we developed and verified seven machine learning prediction models estimating the risk of ARC after ICU admission. The XGBoost model showed good predictive accuracy in terms of discrimination and calibration, outperforming other ML models such as RF, SVM, DT, KNN, ANN, and LR models. The robustness of the model was then proved among patients with different age, BMI, severity of the disease or history of CVD through a subgroup analysis. Maximum creatinine level, maximum blood urea nitrogen (BUN) level, minimum creatinine level on ICU admission, and history of renal disease were the four most important variables for the XGBoost model, as determined through the feature importance evaluation based on SHAP values. In addition, the predictive ability of the model for individuals was then confirmed using the LIME algorithm. To the best of our knowledge, this is the first study to establish an ARC prediction model for septic patients using a machine learning algorithm, although machine learning has been widely applied to solve clinical problems38.

We found that the prevalence of ARC among septic ICU patients was 19.4% in the current study. Although the incidence of ARC was probably underestimated due to inclusion of ARC within 7 days after ICU admission only, the result was similar to the prevalence from 19.6 to 65% among critically ill patients in mixed ICUs according to previous studies13,39. Septic patients with ARC were found to be approximately 10 years younger than those without ARC, as well as with a lower SOFA score, less vasopressor use, and more mechanical ventilation use, according to this present study. Comparing to previous studies, Bilbao-Meseguer et al.13 reported younger age, polytrauma, lower severity illness, more mechanical ventilation use and less vasopressor use as risk factors through a systematic analysis. Udy et al.8 identified age younger than 50, trauma, and a modified SOFA score lower than 4 as significant risk factors for ARC among septic patients. As renal function reserve has been found to decrease with age, the association between younger age and higher probability of ARC among critically ill patients—especially those with sepsis—seems valid. However, the relevance of ARC and the physio-pathological basis of severe infection, particularly for sepsis, was not apparently demonstrated according to real-world clinical characteristics, besides similar risk factors through brief statistics. Further development of specific prediction models based on more intelligent algorithms is, therefore, required.

Septic patients with ARC, suggesting alteration of renal function associated with critical illness, seemed more likely to be associated with better outcomes1,40. More survivors within 28 days (17.2% vs. 31.0%; P < 0.001) were found in the ARC group in the present study. Renal function reserve—referring to the renal regulatory capacity for increasing GFR when exposed to stimuli—is considered as one of the most important mechanisms of ARC, which might partially explain such relevance5,13. The risk of failure to achieve an effective plasma concentration of drugs such as vancomycin in these patients—determined in part by increased renal blood flow and drug elimination—remains high in clinical practice41. Appropriate antimicrobial therapy is part of the foundation of sepsis management, especially for patients suffering from hyper-inflammation. Based on pathophysiological alterations in sepsis (especially those indicative of reserved renal function), timely optimized and individualized approaches targeting septic patients with ARC can be expected to lead to a higher probability of better prognosis42. Therefore, greater focus on ARC, more reliable risk factors and furtherly more applicable and accurate prediction model are in demand, considering the need to optimize therapeutic dosing in critically ill patients for routine ICU treatment.

Evaluation of renal function—especially early recognition of ARC based on separate biomarkers and basic calculations—has been limited, as previously developed models were neither appropriate for septic patients nor sufficiently interpretable. Previous equations including Cockcroft–Gault (CG), Modified CG, 4-variable Modification of Diet in Renal Disease (MDRD-4), and 6-variable Modification of Diet in Renal Disease (MDRD-6) were found to underestimate the measured value of CrCl value43,44. As 24-hour creatinine clearance (CrCl) is not part of common monitoring through daily tests in the ICU and is less accessible, and as application of the Chronic Kidney Disease Epidemiology Collaboration (CKD-EPI) formula as a substitute is also limited, the development of scales or models for the prediction of ARC is required. In this context, the Augmented Renal Clearance in Trauma Intensive Care (ARCTIC) scoring system was developed by Barletta et al.7 to predict ARC in trauma patients, with the score reaching an AUC of 0.813. The ARCTIC score was developed based on 133 traumatic patients with serum creatinine within the normal range (< 1.3 mg/dL); however, the ARCTIC score has been applied to a limited extent in critically ill patients with serum creatinine higher than 1.3 mg/dL. Huang et al.45 developed three models based on data from 2825 critically ill patients using the gradient boosting method machine learning algorithm to predict CrCl the next day, which inspired further modelling based on machine learning approaches for the prediction of outcomes concerning renal function in the ICU. The prediction model reported by Gijsen et al.39 was developed based on patients from adult medical, surgical, and cardiac surgery ICU, and was confirmed through external validation with an AUC of 0.88 and sensitivity of 84.1%. The development cohort was based on a heterogeneous population from mixed ICUs and, so, its applicability to specific populations in the ICU (e.g., septic patients) demanded further validation.

Accordingly, we developed an interpretable machine learning prediction model using various approaches and verified the robustness of the model through subgroup analyses in the present study. We focused on a cohort of septic patients, as well as subgroups of these patients divided in terms of their age, SOFA score, BMI, and history of CVD. AUPRC is based on precision and recall (also known as PPV and sensitivity, respectively) and is un-affected by class imbalance46. The XGBoost model was chosen, as it presented the best performance (AUC = 0.841, AUPRC = 0.496) for predicting ARC within 7 days among septic patients, outperforming the other six models. Moreover, SHAP values were used to reveal the most important variables for modelling, and the LIME algorithm was introduced to improve the interpretability of the prediction model, given the black-box nature of machine learning approaches. The SHAP summary graphic reveals that the maximum creatinine level, maximum blood urea nitrogen level, minimum creatinine level, history of renal disease and CCI score are the five most significant parameters. Both creatinine and urea nitrogen are mainly cleared by glomerular filtration and ARC is known as glomerular hyperfiltration or enhanced renal clearance which could manifested as decreased serum creatinine level and blood urea nitrogen level. Clinicians should pay attention to these renal function indicators to prepare for the occurrence of ARC. Both methods were utilized to help us partially explain the decision-making process. In particular, we also verified the robustness of the XGBoost model through subgroup analysis, indicating that more investigation on septic patients with more illness severity (SOFA score higher than 6) is necessary. Approximately half of the ARC cases occur within 3 days after ICU admission and most of the other cases occurred within 1 week47. The ARC predictor reported by Barletta et al.7 allows clinicians to identify patients who are likely to have ARC on the next day after evaluation during ICU stay. However, our ML model could reach an early prediction of ARC occurring within 7 days based on variables recorded on the first day after ICU admission. With the application of machine learning approaches, the model was built based on 37 variables selected through primary screening by experienced clinicians as well as LASSO regression to reduce overfitting during feature selection. Considering the collection and analysis of 37 variables, it is feasible to integrate the model application with the electronic health record (EHR). Deeply integrate with the hospital’s EHR system and develop dedicated interfaces to enable the model to automatically obtain real-time data of patients without the need for manual input by medical staff, thereby reducing the workload. When medical staff check the patient’s medical history, the system can automatically invoke the model for prediction and display the results on the interface, providing real-time reference for decision-making. The maximum and minimum creatinine level and the maximum BUN level on admission were identified as the most important variables for development of the model, which are routinely monitored intensively during ICU stay. ARC was found to be associated with baseline renal function, evaluated by serum creatinine and BUN on admission and history of renal disease in the present study, which is in accordance with the findings that the emergence of ARC indicates probability of renal function reserve1. Although some novel biomarkers are successively introduced for the investigation of renal function, serum creatinine is still considered as crucial indicator of glomerular filtration rate48.

There were some limitations in this study. First, the study was based on a retrospective dataset, which introduced bias due to clinical management referring to various guidelines over a wide range of time. Secondly, despite the fact that the machine learning model was made more interpretable using the SHAP and LIME approaches, the explanation relied on the specific variables used for model construction. Moreover, the data of MIMIC-IV came from a single center in the United States, which may affect the extension of the prediction model to other populations. Therefore, it is necessary to perform an external validation in an independent ICU population for further research of the extrapolation and discrimination ability of the model. Lastly, though we introduced the commonly adopted cutoff value, variation of the definition of ARC was discussed in some previous studies based on different measurement49,50. As such, application and interpretation of the prediction model should be carried out with caution. Future studies with more comprehensive data collection as well as more representative and larger cohorts are needed to further validate and refine the model.

Methods

Source of data

Data of patients with sepsis were extracted using the Structured Query Language from the Medical Information Mart for Intensive Care IV database Version 2.2 (MIMIC-IV v2.2 https://doi.org/10.13026/6mm1-ek67), a large US-based critical care database51. MIMIC-IV is a contemporary electronic health record dataset covering over 70,000 ICU admissions between 2008 and 2019. The database includes comprehensive information for each patient, such as demographic characteristics, length of stay, laboratory tests, imaging examination, vital signs, and so on. Data extraction was conducted by the researcher (T.W.) through qualified access (Certificate No.33461595 for the protection of human research participants examination) to MIMIC-IV database. As data have been de-identified in the MIMIC-IV database, the ethical approval statement was waived.

Participants and definition of ARC

According to the Third International Consensus Definitions for Sepsis and Septic Shock (Sepsis-3), patients with sepsis—defined as patients with probable infectious cause and an acute change in total SOFA scores of ≥ 2 within the first day of ICU admission—were included17. For patients re-admitted to ICU, we analyzed data from the first ICU stays only. Patients with age younger than 18 years old, ICU stays shorter than 48 h, or records without necessary variables for 24-hour creatinine clearance (CrCl) calculation were excluded. Patients receiving renal replacement therapy within 7 days after ICU admission were also excluded. ARC was defined as urinary 24-hour creatinine clearance (CrCl) ≥ 130 mL/min/1.73m2, according to previous studies13,19,20,21,22. 24-hour creatinine clearance (CrCl) was calculated using the urinary volume (UV, mL), mean urinary creatinine (UCr, mg/dL) concentration, and mean serum creatinine (SCr, mg/dL) concentration collected over 1 complete ICU day (1,440 min) and adjusted for an average body surface area using the Dubois and Dubois formula. 24-hour creatinine clearance (CrCl) = [UCr × UV]/[SCr × 1,440] × [1.73/(0.007184 × height [cm] 0.725 × weight [kg] 0.425)]). The participants were then separated into two groups, including an ARC group with 24-hour creatinine clearance (CrCl) > 130 mL/min/1.73m2 and a non-ARC group with 24-hour creatinine clearance (CrCl) ≤ 130 mL/min/1.73m2, based on laboratory variables and the aforementioned formula within 7 days after ICU admission. A patient with more than one record of 24-hour creatinine clearance (CrCl) > 130 mL/min/1.73m2 within 7 days after ICU admission was recognized as a patient with ARC.

Data extraction and processing

Demographic features including gender, age, ethnicity, and Body Mass Index (BMI) were extracted. Comorbidities including myocardial infarct, congestive heart failure, peripheral vascular disease, cerebrovascular disease (CVD), dementia, chronic pulmonary disease, rheumatic disease, peptic ulcer disease, diabetes, paraplegia, renal disease, tumor, liver disease, acquired immune deficiency syndrome (AIDS) and Charlson comorbidity index (CCI) were assessed. Infection sites, use of vasopressor, sepsis-related organ failure assessment (SOFA) and organ support were also obtained from the database. Laboratory data and vital signs within the first 24 h after ICU admission included heart rate, blood pressure, respiratory rate, percutaneous arterial oxygen saturation, partial pressure of oxygen, partial pressure of carbon dioxide, base excess, hematocrit, hemoglobin, platelet count, white blood cell count, red blood cell count, serum chloride, serum calcium, serum potassium, serum sodium, anion gap, blood urine nitrogen, alanine aminotransferase, alkaline phosphatase, aspartate transaminase and bilirubin (described in Supplementary TableS1). Both the maximum and minimum values of the laboratory variables were analyzed. No attempt was made to estimate the sample size of the study, as this was a hypothesis-generating epidemiological study. Instead, all eligible patients in the MIMIC-IV database were enrolled to achieve a maximized statistical power. Variables with > 30% missing values were excluded in order to minimize the bias resulting from missing data, while other variables with less than 30% missing values were processed using a multiple imputation method52.

Statistical analysis

Continuous variables are presented as the median with interquartile range (IQR) and were compared using the Wilcoxon rank-sum test. Categorial variables are presented as count and percentage and were compared using the Chi-square test or Fisher’s exact test, as appropriate53. Least absolute shrinkage and selection operator (LASSO) regression was performed to identify the significant clinical variables for feature selection, using 10-fold cross-validation to reduce overfitting54. The variables were then selected by using lamda minimum criteria in LASSO regression. Coefficient profiles for these features indicate the optimal point for retaining variables with non-zero coefficients. The sample size was furtherly verified to assure event per variable ratio of 10:1 in the models.

Seven ML algorithms—support vector machine (SVM), Extreme Gradient Boosting (XGBoost), decision tree (DT), random forest (RF), k-nearest neighbors (KNN), artificial neural network (ANN), and logistic regression (LR) —were employed for model construction. The data set was then randomly divided into a training set (70%) and a validation set (30%). In our cases, the model with the highest area under curve (AUC) of the receiver operating characteristic (ROC) curve was selected as the optimal candidate model. The prediction ability of the ML models was quantitatively measured using the AUC of the ROC curve, sensitivity, specificity, accuracy, positive predictive value (PPV), and negative predictive value (NPV)55. The Shapley Additive Explanations (SHAP) method was used to explore each characteristic’s significance in the optimal ML model’s output. Interpretability of models was further confirmed through the Local Interpretable Model-agnostic Explanations (LIME) algorithm.

Ridge regression, linear regression with a regularization term, can provide a fast and well-understood benchmark in and other linear regression such as LASSO regression56 is worth considering to develop a model rely on a minimal number of features within the available data. However, the relationships between features are often non-linear, models such as SVM can be a good choice for these cases. SVMs are powerful regression and classification models that can be used to perform both linear and nonlinear regression, which uses kernel functions to transform inseparable problems into separable problems57,58,59. The k-nearest neighbours classification60 can also provide a useful baseline performance marker compared with more complex models such as SVMs. Ensemble-based models such as random forests61 and XGBoost62 are both powerful non-linear models that have the advantages including providing feature importance estimates and requiring minimal hyperparameter tuning.

Subgroup analyses based on age, SOFA score, BMI and history of cerebrovascular disease (CVD) were then conducted. The medians of age, SOFA score, and BMI in the cohort were selected as cutoff values for subgrouping.

All statistical analyses were conducted using R version 4.3.3, and a two-tailed P-value below 0.05 was regarded as statistically significant.

Conclusion

In conclusion, the XGBoost model was the most effective model among all of the predictive ML models; it may help clinicians to predict the onset of ARC among patients with sepsis in ICU early and make timely dose adjustments to avoid treatment failure. Despite the promising performance of the obtained model, the results should be prospectively validated in more randomized controlled data sets.

Data availability

The data set supporting the findings of this study are available from the corresponding author on reasonable request.

References

Udy, A. A. et al. Augmented renal clearance: implications for antibacterial dosing in the critically ill. Clin. Pharmacokinet. 49, 1–16. https://doi.org/10.2165/11318140-000000000-00000 (2010).

Cook, A. M. & Hatton-Kolpek, J. Augmented Ren. Clear. Pharmacotherapy ; 39: 346–354. https://doi.org/10.1002/phar.2231 (2019).

Kawano, Y. et al. Augmented renal clearance in Japanese intensive care unit patients: a prospective study. J. Intensive Care. 4, 62. https://doi.org/10.1186/s40560-016-0187-7 (2016).

Carlier, M. et al. Meropenem and piperacillin/tazobactam prescribing in critically ill patients: does augmented renal clearance affect pharmacokinetic/pharmacodynamic target attainment when extended infusions are used? Crit. Care. 17, R84. https://doi.org/10.1186/cc12705 (2013).

Udy, A. A. et al. Augmented renal clearance in the ICU: results of a multicenter observational study of renal function in critically ill patients with normal plasma creatinine concentrations*. Crit. Care Med. 42, 520–527. https://doi.org/10.1097/ccm.0000000000000029 (2014).

Minville, V. et al. Increased creatinine clearance in polytrauma patients with normal serum creatinine: a retrospective observational study. Crit. Care. 15, R49. https://doi.org/10.1186/cc10013 (2011).

Barletta, J. F. et al. Identifying augmented renal clearance in trauma patients: validation of the augmented renal clearance in trauma intensive care scoring system. J. Trauma. Acute Care Surg. 82, 665–671. https://doi.org/10.1097/ta.0000000000001387 (2017).

Udy, A. A. et al. Augmented renal clearance in septic and traumatized patients with normal plasma creatinine concentrations: identifying at-risk patients. Crit. Care. 17, R35. https://doi.org/10.1186/cc12544 (2013).

Ruiz, S. et al. Screening of patients with augmented renal clearance in ICU: taking into account the CKD-EPI equation, the age, and the cause of admission. Ann. Intensive Care. 5, 49. https://doi.org/10.1186/s13613-015-0090-8 (2015).

Baptista, J. P. et al. Accuracy of the Estimation of glomerular filtration rate within a population of critically ill patients. J. Nephrol. 27, 403–410. https://doi.org/10.1007/s40620-013-0036-x (2014).

Sime, F. B., Udy, A. A. & Roberts, J. A. Augmented renal clearance in critically ill patients: etiology, definition and implications for beta-lactam dose optimization. Curr. Opin. Pharmacol. 24, 1–6. https://doi.org/10.1016/j.coph.2015.06.002 (2015).

Mahmoud, S. H. & Shen, C. Augmented renal clearance in critical illness: an important consideration in drug dosing. Pharmaceutics 9 https://doi.org/10.3390/pharmaceutics9030036 (2017).

Bilbao-Meseguer, I. et al. Augmented renal clearance in critically ill patients: A systematic review. Clin. Pharmacokinet. 57, 1107–1121. https://doi.org/10.1007/s40262-018-0636-7 (2018).

Claus, B. O. et al. Augmented renal clearance is a common finding with worse clinical outcome in critically ill patients receiving antimicrobial therapy. J. Crit. Care. 28, 695–700. https://doi.org/10.1016/j.jcrc.2013.03.003 (2013).

Carrié, C. et al. Increased β-Lactams dosing regimens improve clinical outcome in critically ill patients with augmented renal clearance treated for a first episode of hospital or ventilator-acquired pneumonia: a before and after study. Crit. Care. 23, 379. https://doi.org/10.1186/s13054-019-2621-4 (2019).

Cucci, M. et al. Incidence and risk factors associated with Multi-Drug-Resistant pathogens in a critically ill trauma population: A retrospective cohort study. Surg. Infect. (Larchmt). 21, 15–22. https://doi.org/10.1089/sur.2019.031 (2020).

Singer, M. et al. The third international consensus definitions for Sepsis and septic shock (Sepsis-3). Jama 315, 801–810. https://doi.org/10.1001/jama.2016.0287 (2016).

Rhodes, A. et al. Surviving Sepsis campaign: international guidelines for management of Sepsis and septic shock: 2016. Intensive Care Med. 43, 304–377. https://doi.org/10.1007/s00134-017-4683-6 (2017).

Baptista, J. P. et al. Prevalence and risk factors for augmented renal clearance in a population of critically ill patients. J. Intensive Care Med. 35, 1044–1052. https://doi.org/10.1177/0885066618809688 (2020).

Kawano, Y. et al. Outcomes in patients with infections and augmented renal clearance: A multicenter retrospective study. PLoS One. 13, e0208742. https://doi.org/10.1371/journal.pone.0208742 (2018).

Baptista, J. P. et al. Augmented renal clearance in septic patients and implications for Vancomycin optimisation. Int. J. Antimicrob. Agents. 39, 420–423. https://doi.org/10.1016/j.ijantimicag.2011.12.011 (2012).

Udy, A. A. et al. Subtherapeutic initial β-lactam concentrations in select critically ill patients: association between augmented renal clearance and low trough drug concentrations. Chest 142, 30–39. https://doi.org/10.1378/chest.11-1671 (2012).

Cockcroft, D. W. & Gault, M. H. Prediction of creatinine clearance from serum creatinine. Nephron 16, 31–41. https://doi.org/10.1159/000180580 (1976).

Levey, A. S. et al. A more accurate method to estimate glomerular filtration rate from serum creatinine: a new prediction equation. Modification of diet in renal disease study group. Ann. Intern. Med. 130, 461–470. https://doi.org/10.7326/0003-4819-130-6-199903160-00002 (1999).

Levey, A. S. et al. A new equation to estimate glomerular filtration rate. Ann. Intern. Med. 150, 604–612. https://doi.org/10.7326/0003-4819-150-9-200905050-00006 (2009).

Carlos, R. C., Kahn, C. E., Halabi, S. & Intelligence, A. Data Science: Big Data, Machine Learning, and J. Am. Coll. Radiol. ; 15: 497–498. https://doi.org/10.1016/j.jacr.2018.01.029 (2018).

Hou, N. et al. Predicting 30-days mortality for MIMIC-III patients with sepsis-3: a machine learning approach using XGboost. J. Transl Med. 18, 462. https://doi.org/10.1186/s12967-020-02620-5 (2020).

Du, M. et al. Comparison of the Tree-Based machine learning algorithms to Cox regression in predicting the survival of oral and pharyngeal cancers: analyses based on SEER database. Cancers (Basel). 12 https://doi.org/10.3390/cancers12102802 (2020).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444. https://doi.org/10.1038/nature14539 (2015).

Obermeyer, Z. & Emanuel, E. J. Predicting the Future - Big data, machine learning, and clinical medicine. N Engl. J. Med. 375, 1216–1219. https://doi.org/10.1056/NEJMp1606181 (2016).

Yang, F. et al. Using random forest for reliable classification and cost-sensitive learning for medical diagnosis. BMC Bioinform. 10 (Suppl 1), S22. https://doi.org/10.1186/1471-2105-10-s1-s22 (2009).

Linden, A. & Yarnold, P. R. Modeling time-to-event (survival) data using classification tree analysis. J. Eval Clin. Pract. 23, 1299–1308. https://doi.org/10.1111/jep.12779 (2017).

Hu, Y. J. et al. Decision tree-based learning to predict patient controlled analgesia consumption and readjustment. BMC Med. Inf. Decis. Mak. 12, 131. https://doi.org/10.1186/1472-6947-12-131 (2012).

Hsieh, M. H. et al. An artificial neural network model for predicting successful extubation in intensive care units. J. Clin. Med. 7 https://doi.org/10.3390/jcm7090240 (2018).

Flechet, M. et al. Machine learning versus physicians’ prediction of acute kidney injury in critically ill adults: a prospective evaluation of the akipredictor. Crit. Care. 23, 282. https://doi.org/10.1186/s13054-019-2563-x (2019).

Shillan, D. et al. Use of machine learning to analyse routinely collected intensive care unit data: a systematic review. Crit. Care. 23, 284. https://doi.org/10.1186/s13054-019-2564-9 (2019).

Zhang, Z., Ho, K. M. & Hong, Y. Machine learning for the prediction of volume responsiveness in patients with oliguric acute kidney injury in critical care. Crit. Care. 23, 112. https://doi.org/10.1186/s13054-019-2411-z (2019).

Ma, C. et al. A deep learning approach for chromium detection and characterization from soil hyperspectral data. Toxics 12 https://doi.org/10.3390/toxics12050357 (2024).

Gijsen, M. et al. Development and external validation of an online clinical prediction model for augmented renal clearance in adult mixed critically ill patients: the augmented renal clearance predictor. Crit. Care Med. 48, e1260–e1268. https://doi.org/10.1097/ccm.0000000000004667 (2020).

Di Giantomasso, D., May, C. N. & Bellomo, R. Vital organ blood flow during hyperdynamic sepsis. Chest 124, 1053–1059. https://doi.org/10.1378/chest.124.3.1053 (2003).

Baptista, J. P. et al. Decreasing the time to achieve therapeutic Vancomycin concentrations in critically ill patients: developing and testing of a dosing nomogram. Crit. Care. 18, 654. https://doi.org/10.1186/s13054-014-0654-2 (2014).

Udy, A. A. et al. Association between augmented renal clearance and clinical outcomes in patients receiving β-lactam antibiotic therapy by continuous or intermittent infusion: a nested cohort study of the BLING-II randomised, placebo-controlled, clinical trial. Int. J. Antimicrob. Agents. 49, 624–630. https://doi.org/10.1016/j.ijantimicag.2016.12.022 (2017).

Baptista, J. P. et al. A comparison of estimates of glomerular filtration in critically ill patients with augmented renal clearance. Crit. Care. 15, R139. https://doi.org/10.1186/cc10262 (2011).

Gijsen, M. et al. Can augmented renal clearance be detected using estimators of glomerular filtration rate? Crit. Care. 24, 359. https://doi.org/10.1186/s13054-020-03057-4 (2020).

Huang, C. Y. et al. Development and validation of the creatinine clearance predictor machine learning models in critically ill adults. Crit. Care. 27, 272. https://doi.org/10.1186/s13054-023-04553-z (2023).

Saito, T. & Rehmsmeier, M. The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS One. 10, e0118432. https://doi.org/10.1371/journal.pone.0118432 (2015).

Mikami, R. et al. Onset timing and duration of augmented renal clearance in a mixed intensive care unit. J. Intensive Care. 11, 13. https://doi.org/10.1186/s40560-023-00660-9 (2023).

Stevens, L. A. et al. Assessing kidney function–measured and estimated glomerular filtration rate. N Engl. J. Med. 354, 2473–2483. https://doi.org/10.1056/NEJMra054415 (2006).

Carrié, C. et al. Association between augmented renal clearance, antibiotic exposure and clinical outcome in critically ill septic patients receiving high doses of β-lactams administered by continuous infusion: a prospective observational study. Int. J. Antimicrob. Agents. 51, 443–449. https://doi.org/10.1016/j.ijantimicag.2017.11.013 (2018).

Lannou, A. et al. Renal response after traumatic brain injury: A pathophysiological relationship between augmented renal clearance and salt wasting syndrome? Anaesth. Crit. Care Pain Med. 39, 239–241. https://doi.org/10.1016/j.accpm.2019.11.001 (2020).

Johnson, A. E. W. et al. MIMIC-IV, a freely accessible electronic health record dataset. Sci. Data. 10, 1. https://doi.org/10.1038/s41597-022-01899-x (2023).

Zhang, Z. Multiple imputation with multivariate imputation by chained equation (MICE) package. Ann. Transl Med. 4, 30. https://doi.org/10.3978/j.issn.2305-5839.2015.12.63 (2016).

Zhang, Z. et al. Comparing baseline characteristics between groups: an introduction to the CBCgrps package. Ann. Transl Med. 5, 484. https://doi.org/10.21037/atm.2017.09.39 (2017).

Ramsay, I. S. et al. Model selection and prediction of outcomes in recent onset schizophrenia patients who undergo cognitive training. Schizophr Res. Cogn. 11, 1–5. https://doi.org/10.1016/j.scog.2017.10.001 (2018).

Guan, C. et al. Interpretable machine learning model for new-onset atrial fibrillation prediction in critically ill patients: a multi-center study. Crit. Care. (London, England). 28, 349. https://doi.org/10.1186/s13054-024-05138-0 (2024).

Tibshirani, R. Regression shrinkage and selection via the Lasso. J. Roy. Stat. Soc.: Ser. B (Methodol.). 58, 267–288. https://doi.org/10.1111/j.2517-6161.1996.tb02080.x (1996).

Noble, W. S. What is a support vector machine? Nat. Biotechnol. 24, 1565–1567 (2006).

Kircher, M. et al. A general framework for estimating the relative pathogenicity of human genetic variants. Nat. Genet. 46, 310–315. https://doi.org/10.1038/ng.2892 (2014).

Driscoll, M. K. et al. Robust and automated detection of subcellular morphological motifs in 3D microscopy images. Nat. Methods. 16, 1037–1044. https://doi.org/10.1038/s41592-019-0539-z (2019).

Bzdok, D., Krzywinski, M. & Altman, N. Machine learning: supervised methods. Nat. Methods. 15, 5–6. https://doi.org/10.1038/nmeth.4551 (2018).

Wang, C. & Zhang, Y. Improving scoring-docking-screening powers of protein-ligand scoring functions using random forest. J. Comput. Chem. 38, 169–177. https://doi.org/10.1002/jcc.24667 (2017).

Zeng, W., Wu, M. & Jiang, R. Prediction of enhancer-promoter interactions via natural Language processing. BMC Genom. 19, 84. https://doi.org/10.1186/s12864-018-4459-6 (2018).

Acknowledgements

This work was supported by National Natural Science Foundation of China [8822241033], Guangci discipline group construction of public health and disaster emergency center [XKQ-09]. This work was also supported by the Shanghai “Rising Stars of Medical Talents” Youth Development Program-Clinical Laboratory Practitioner Program.

Funding

This work was supported by Shanghai Municipal Health Commission’s Seed Program for Medical New Technology Research and Translation [2024ZZ2045]. National Natural Science Foundation of China [8822241033], Guangci discipline group construction of public health and disaster emergency center [XKQ-09]. This work was also supported by the Shanghai “Rising Stars of Medical Talents” Youth Development Program-Clinical Laboratory Practitioner Program.

Author information

Authors and Affiliations

Contributions

T.W. and R.Z. designed the study, conducted the analyses, and wrote the manuscript. T.W. extracted the data. Y.W. contributed to the data analyses and charting. D.D., X.W. and H.Q. contributed to the design of the work. J.W. and Y.L. assisted and supervised the conduction of the study, and edited the manuscript. All the authors approved the final version of this manuscript, and agreed to take responsibilities for the work.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval and consent to participate

As data have been de-identified in the MIMIC-IV database, the ethical approval statement was waived.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wu, T., Zhuang, RY., Wu, YZ. et al. Machine learning for the prediction of augmented renal clearance (ARC) in patients with sepsis in critical care units. Sci Rep 15, 26119 (2025). https://doi.org/10.1038/s41598-025-11313-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-11313-2