Abstract

By integrating electric vehicles (EVs), multi-microgrids (MMGs) can significantly enhance their resilient operation capabilities. However, existing works face challenges in formulating optimal routing and scheduling strategies for EVs, due to the spatial-temporal uncertainty of the distribution and transportation networks, as well as incomplete information. This paper addresses the coordination problem of EVs for the resilience enhancement of MMGs, using a distributed multi-agent deep reinforcement learning approach to minimize the load-shedding cost. Specifically, a coupled power-transportation network (CPTN) model is constructed to facilitate EV routing and scheduling for resilience enhancement, considering the uncertainties associated with distributed renewables, load profiles, and traffic flow. Then, the coordination problem of each EV is formulated as a partially observable Markov decision process, and an attention-based distributed multi-agent deep deterministic policy gradient method, namely AD-MADDPG, is proposed to learn the optimal strategies. The proposed method adopts a multi-actor, single-learner architecture to reduce training complexity, employs a convolutional neural network to capture spatial characteristics from the CPTN, and incorporates a long short-term memory network to derive temporal sequence features across multiple time steps, thereby enhancing the exploration efficiency of the action space. Simulation results on the modified IEEE 33-bus test feeder demonstrate that AD-MADDPG outperforms all other baselines in terms of load restoration, restoration fairness, and energy consumption under varying numbers of EVs, maximum discharging proportions, and maximum moving distances.

Introduction

Background and motivation

The continuous warming of the global climate poses significant challenges to the stable operation of power systems, increasing the occurrence of high-risk, low-probability extreme events1,2,3. To alleviate the impacts stemming from extreme events, enhancing the resilience of power systems has become a recent research focus4,5. Multi-microgrids (MMGs) architecture, comprising distributed renewable energy sources (DRES), demand response strategies, and flexible load resources, has emerged as a highly promising solution to enhance the resilience of distribution systems6. In the event of regional faults or outages in the utility grid, MMGs can operate in an off-grid mode, curtailing a portion of the load to maintain voltage and frequency within acceptable ranges, coordinating DRES to restore load as much as possible7. However, relying solely on the reconfiguration of distribution networks and intermittent DRES to maintain loads is challenging in practical operation, as their load restoration capabilities are limited and unstable. Moreover, multiple isolated microgrids may not be able to support each other, due to the random damage of distribution network lines.

Electric vehicles (EVs), with their excellent mobility and flexible charging and discharging capabilities, have been utilized in many recent studies to enhance the resilience of distribution networks8. The core issue in using EVs for the resilience enhancement of MMGs lies in optimizing the routing and charge-discharge scheduling of EVs so as to maximize load restoration while reducing energy consumption, subject to operational constraints9. To solve the optimal routing and dispatching problems, many model-based offline one-shot optimization methods have been proposed, such as mixed integer linear programming (MILP)10, stochastic programming11, and robust optimization12. However, comprehensively considering the uncertainties of DRES and flexible loads imposes significant computational burdens, which may reduce the methods’ responsiveness to dynamic load changes.

To improve the adaptability of methods to time-varying environments, model-free approaches such as deep reinforcement learning (DRL)13 have been developed, offering a way to solve the decision-making problems in the resilience enhancement of MMGs. DRL’s capability to deliver fast responses and accommodate uncertainties and contingencies is particularly advantageous: agents are trained to interact iteratively with the environment without prior knowledge and thereby learn the optimal policies for the dynamic scheduling of EVs. However, the existing DRL-based approaches14,15,16 usually treat the routing and scheduling of EVs separately, without considering their temporal and spatial coordination. Moreover, they simplify the routing of EVs to discrete path choices on a 2D vector map, ignoring the temporal and spatial characteristics of the movement process.

To address these research gaps, we aim to design a coordinated and efficient routing and dispatch strategy for EVs that contributes to the resilience enhancement of MMGs, extracts both temporal and spatial features, and improves responsiveness under incomplete information.

Literature review

Model-based methods

In recent years, model-based mathematical approaches have been extensively developed to model the routing and scheduling of EVs to enhance the resilience of power networks. In works17,18,19, a mixed-integer linear programming (MILP) model is proposed to achieve coordinated operation among EVs and MGs. In work20, a mixed-integer quadratically constrained programming (MIQCP) model is proposed to optimize the rerouting and dispatching of EVs for resilience enhancement in coupled traffic-electric networks. In work21, a two-stage stochastic optimization scheduling framework is proposed to coordinate the scheduling of EVs for the restoration of interconnected power-transportation systems under natural hazard risks. In works22,23, a robust optimization method is proposed to schedule the EVs’ mobility and their charging and discharging strategies to enhance the resilience of coupled networks by minimizing both investment and operational costs under uncertain traffic demands. In work24, a particle swarm optimization (PSO) algorithm is proposed to optimize the load dispatch of a microgrid containing EVs. In work25, a hybrid genetic algorithm and simulated annealing method (GA-SAA) is used to optimize the placement of EV charging stations, reducing power losses and maintaining acceptable voltage levels. In work26, four metaheuristic algorithms, including differential evolution (DE), PSO, whale optimization algorithm (WOA), and grey wolf optimizer (GWO), are applied to schedule the charging/discharging activities of EVs, reducing the daily costs. However, when the number of considered scenarios is large, addressing uncertainties through stochastic programming or robust optimization can impose a significant computational burden. Furthermore, heuristic algorithms cannot guarantee the accuracy of the solutions obtained.

Value-based DRL methods

Considering these limitations, DRL emerges as a data-driven and model-free approach27, offering a new paradigm for resilient operations involving MMGs and EVs. In one strand of the existing literature, value-based DRL methods have been adopted to learn the optimal routing and scheduling decisions of EVs. In references28,29, a deep Q-network (DQN) based model is proposed to dispatch a set of EVs to supply energy to different consumers at different locations, enhancing power system resilience while considering the uncertainties in power supply and demand. In references15,30, a double deep Q-network (DDQN)-based method is proposed to solve the routing and scheduling coordination problem of mobile energy storage systems in load restoration. In reference31, an enhanced dueling DDQN algorithm with a mixed penalty function is developed to optimize the energy management of an MG incorporating EVs. Although DQN and DDQN utilize deep neural networks to effectively handle high-dimensional state spaces and mitigate the curse of dimensionality, they still face significant challenges in balancing exploration and exploitation in such large spaces. Furthermore, their capability to handle continuous action spaces is limited, rendering them inadequate for addressing stochastic decision problems.

Policy-based DRL methods

The other strand of the literature, therefore, employs policy-based DRL methods that can directly optimize the probability of taking an action or the action value rather than estimating the Q-value function. In reference32, a decentralized Actor-Critic (AC) method is proposed to solve the routing and scheduling of a large fleet of EVs, addressing the scalability issues in large-scale smart grid systems. In reference33, a heterogeneous multi-agent hypergraph attention Actor-Critic (HMA-HGAAC) framework is proposed to solve the joint EV routing and battery charging scheduling problem in a transportation network with multiple battery swapping stations. In references34,35, a deep deterministic policy gradient (DDPG) method is proposed to enhance the resilience of EV charging stations in the presence of cyber uncertainties. In reference36, a twin-delayed deep deterministic policy gradient (TD3) method is developed to obtain the optimal driving strategy of EVs in a traffic scenario with multiple constraints. In reference37, a hybrid parameter sharing proximal policy optimization (H-PSPPO) method is proposed to compute discrete and continuous actions simultaneously, in line with the nature of EV routing and scheduling problems in power-transportation networks, aiming at enhancing power system resilience.

It can be concluded that, despite various types of DRL methods being applied to the routing and scheduling of EVs participating in the resilient operation of MMGs, the previous work exhibits the following potential limitations: i) existing studies typically treat EVs’ routing as a discrete action problem, without considering the extraction of spatial-temporal features of EVs’ movement within the transportation network; ii) the methods mentioned above may not deliver timely services for load restoration due to the increasing system scale and complexity in training within a multi-agent setting; iii) the inherent instability of the environment, coupled with the information asymmetry among multiple agents, exacerbates the difficulty of achieving stable learning dynamics and converging to optimal strategies.

Contribution

To address the above limitations and achieve multi-objective optimization, we propose an attention-based distributed multi-agent deep reinforcement learning (MADRL) algorithm with a multi-actor, single-learner framework to conduct the routing and scheduling of EVs. The main contributions of this paper can be summarized as follows:

- A coupled power-transportation network (CPTN) is constructed to investigate the coordination effect of EV routing and scheduling on the resilient load restoration of MMGs. Each EV routing and scheduling coordination problem is then formulated as a partially observable Markov decision process, exploiting the EVs’ temporal and spatial flexibility.

- An attention-based distributed multi-agent deep deterministic policy gradient method, namely AD-MADDPG, is proposed to solve the routing and scheduling coordination decision-making problem of EVs. This method employs a multi-actor, single-learner interaction architecture and utilizes a convolutional neural network (CNN) to extract spatial state features from the CPTN.

- To enhance the efficiency of action exploration and exploitation, a long short-term memory (LSTM) network is employed to capture multi-step temporal characteristics. The multi-step reward is calculated in combination with a prioritized experience replay mechanism, giving a more precise approximation.

- Comparative simulation results with other benchmark algorithms demonstrate that the proposed AD-MADDPG achieves superior performance in terms of load restoration, energy consumption, and restoration fairness.

Paper organization

The remainder of this paper is organized as follows. Section II presents the spatial-temporal network model of the CPTN. Section III describes the problem formulation of the optimal routing and scheduling of EVs. In Section IV, the proposed AD-MADDPG algorithm is introduced to improve the load restoration performance. Performance evaluations are carried out in Section V to demonstrate the effectiveness of AD-MADDPG. Finally, Section VI concludes the paper.

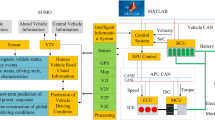

Spatial-temporal network modeling of coupled power-transportation network

The constructed spatial-temporal network model for the coupled power-transportation network (CPTN) is illustrated in Fig. 1. Considering disconnection from the utility grid, the radial network of MMGs operating in off-grid mode can be represented as a tree-topological graph \(\varvec{G}_{mg}=\left( \varvec{B},\varvec{L} \right)\), where \(\varvec{B}=\{1,2,...,B\}\) denotes the set of buses, and \(\varvec{L}=\{1,2,...,L\}\) indicates the set of branches. Similarly, the transportation network is modeled as a directed connected graph \(\varvec{G}_{tn}=(\varvec{V}_{tn},\varvec{E}_{tn})\), where \(\varvec{V}_{tn}=\{1,2,...,V\}\) represents the sequentially numbered vertices and \(\varvec{E}_{tn}=\{1,2,...,E\}\) denotes the edges of the connected graph, indicating road intersections (including origin and destination points) and directed road segments, respectively. The CPTN model also incorporates charging-discharging stations (CDSs) that couple MMGs and transportation networks together, defined as \(\varvec{H}=\{1,2,...,H\}\). Additionally, the set of microgrids is represented as \(\varvec{M}=\{1,2,...,M\}\).

During extreme events such as earthquakes, typhoons, prolonged icy weather, etc., which result in failures or power outages in the utility grid area, MMGs can operate in islanded mode, utilizing local DRES, diesel generation (DG) and energy storage system (ESS) to maintain power supply to critical loads. In this paper, we focus on the optimal routing and scheduling of EVs \(\varvec{I}=\{1, 2,..., I \}\) in the CPTN to enhance the resilient load restoration of isolated MMGs. Each MG monitors and collects information on bus and branch failures in the network, as well as the local generation and load, to form the supply-demand balance deviation requirements for the islanded operation of MGs. Additionally, each EV moves between different CDSs of MGs, considering the real-time traffic conditions of the transportation network, to facilitate load restoration after extreme events and ensure the stable operation of MMGs.

MMGs network modeling

After extreme events occur (e.g., line faults at B1-B2, B4-B5, B5-B6, B11-B12 in Fig. 6), the distribution network is segmented into multiple autonomous MGs. Each MG operates independently by leveraging local resources (DRES, DG, ESS) and internal grid reconfiguration capabilities38. MMGs typically shed a portion of the load as an emergency response to prevent sustained frequency and voltage decline when operating in islanded mode38. Subsequently, based on the locally available renewable energy generation capacity and dispatchable resources on the load side, MMGs gradually restore critical loads during the blackout period. We assume no internal bus-level damage within MGs, focusing on post-segmentation coordination. This simplification aligns with standard test systems (e.g., IEEE 33-bus) for resilience studies3,6. In this paper, the load restoration problem aims to maximize load recovery while minimizing economic costs, subject to safety constraints.

Operation costs of MG

The operation costs of each MG consist of three components: (1) DG generation cost, (2) load shedding cost, and (3) ESS battery degradation cost, as expressed in Eq. (1).

where \(\alpha _m\) and \(P_{m,t}^{dg}\) represent the unit generation cost and active power output of DG, respectively. \(c_{m}^{ls}\) and \(P_{m,t}^{ls}\) refer to the load shedding cost and the quantity of load shedding for MG m. \(c_{m}^{ess}\) and \(P_{m,t}^{essd}\) represent the battery degradation cost coefficient of ESS and the amount of energy discharged. The objective of each MG is to minimize its operational cost while satisfying operational constraints. Additionally, to prioritize the load restoration of islanded MG, we set \(c_{m}^{ls}\gg \alpha _m\) and \(c_{m}^{ls}\gg c_{m}^{ess}\), indicating that the penalty cost for load shedding is higher than the DG generation cost and ESS battery degradation cost.
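As a sketch of Eq. (1), one form consistent with the three components and symbols defined above is given here. It assumes that each cost is linear in its power term and that the total is summed over the scheduling horizon \(\varvec{T}\); both are assumptions, and the symbol \(C_m\) is introduced only for illustration.

```latex
C_{m} = \sum_{t\in T} \left( \alpha_m P_{m,t}^{dg} + c_{m}^{ls} P_{m,t}^{ls} + c_{m}^{ess} P_{m,t}^{essd} \right)
```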

Operation constraints

System-level constraints: The secure operation of MGs requires compliance with corresponding system-level power flow constraints as well as internal component-level constraints within the MGs. In this paper, the linearized DistFlow model39 is adopted to describe the power flow and voltage of MGs.

where Eqs. (2) and (3) represent the active power and reactive power from bus n to j at time step t, respectively. \(\varvec{B} _j\) is the set of buses that take bus j as the parent node. Equations (4) and (5) represent the voltage of bus and related constraints. Here, \(r_{nj}\) and \(w_{nj}\) represent the resistance and reactance of the branch (bus n to j), where the branch \(\left( n,j \right) \in \varvec{L}\).
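To illustrate how a linearized DistFlow model propagates power and voltage on a radial feeder, the following sketch uses the standard LinDistFlow form: the flow on a branch equals the net load downstream of it (losses neglected), and the voltage drop along a branch is \((r_{nj}P_{nj}+w_{nj}Q_{nj})/V_0\). The function name `lin_distflow`, the bus numbering convention, and the per-unit treatment are assumptions for illustration; the exact form of Eqs. (2)–(5) may differ.

```python
def lin_distflow(parent, r, w, p_load, q_load, v0=1.0):
    """Linearized DistFlow on a radial feeder (per-unit quantities).

    parent[j]: upstream bus of bus j (bus 0 is the root, parent[0] = -1).
    r[j], w[j]: resistance / reactance of branch (parent[j], j).
    p_load[j], q_load[j]: net active / reactive demand at bus j.
    Assumes buses are numbered so that parent[j] < j.
    """
    n = len(parent)
    P = list(p_load)  # P[j]: active flow on branch (parent[j], j); P[0] is the root injection
    Q = list(q_load)
    # Backward sweep: each branch carries its own load plus all downstream flows.
    for j in range(n - 1, 0, -1):
        P[parent[j]] += P[j]
        Q[parent[j]] += Q[j]
    # Forward sweep: linearized voltage drop along each branch.
    V = [v0] * n
    for j in range(1, n):
        V[j] = V[parent[j]] - (r[j] * P[j] + w[j] * Q[j]) / v0
    return P, Q, V


# Hypothetical 4-bus feeder: bus 1 hangs off the root, buses 2 and 3 hang off bus 1.
P, Q, V = lin_distflow(
    parent=[-1, 0, 1, 1],
    r=[0.0, 0.01, 0.02, 0.02],
    w=[0.0, 0.02, 0.04, 0.04],
    p_load=[0.0, 0.1, 0.2, 0.3],
    q_load=[0.0, 0.05, 0.1, 0.1],
)
```

Voltage limits such as those referred to in Eq. (5) would then be checked against the returned `V`.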

Component-level constraints: The operational constraints of each component (including DG, ESS, PV, WT) within the MGs are represented as follows:

where Eqs. (6) and (7) represent the active power and reactive power constraints of DG, while (8) and (9) represent the power constraints of ESS.

Transportation network modeling

The transportation network model is illustrated in Fig. 2. Each node \(e, g \in \varvec{V}_{tn}\) represents a road intersection, which could also serve as the origin or destination point of a journey. An edge \((e, g) \in \varvec{E}_{tn}\) denotes an available road connecting nodes e and g. For each road, the length \(D(e, g) \in \mathbb {R}^{\ge 0}\) determines the distance traveled along the road, while \(t_{rd}(e, g) \in \{1, 2, \ldots , T\}\) represents the travel time on the road without congestion. The maximum capacity of the road, \(V_{(e, g)}^{\max } \in \mathbb {R}^{\ge 0}\), indicates the maximum number of vehicles (per unit time) the road can accommodate without causing congestion from external traffic.

EVs traveling through the transportation network can both charge and discharge at the CDSs of MGs. When there is an excess of renewable energy generation in isolated MGs, EVs can serve as charging loads to absorb the surplus energy. Conversely, when there is insufficient generation in isolated MGs leading to load shedding, EVs can discharge, thereby enhancing the operational resilience of MGs. \(N_{h\in \varvec{H}}^{\max }\in \mathbb {N}\) denotes the maximum number of EVs that can charge or discharge simultaneously at CDS h. Although EVs may freely enter or exit the network, our model optimizes only the participating EVs, whose set is fixed during each scheduling horizon, consistent with real-world V2G contracts.

Real-time traffic flow

Due to the spatial-temporal dynamics of traffic flow in the transportation network, the travel time of road (e, g) at time t is significantly influenced by the real-time traffic volume. This paper models the impact of real-time traffic volume on road travel time as follows15:

where \(T_{\left( e,g \right) ,t}^{dri}\) represents the real-time travel time of the road (e, g), \(F_{\left( e,g \right) ,t}^{rt}\) denotes the real-time traffic flow, \(\delta ^{rd}\) and \(\rho ^{rd}\) represent congestion factors. \(d_{\left( e,g \right) ,t}^{rd}\) indicates the basic traffic volume40, i.e., the other types of vehicles with specific daily travel patterns in the transportation network. \(\sum _{i\in \varvec{I}}{N_{i,\left( e,g \right) ,t}^{rd}}\) represents the number of EVs participating in the resilient operation of islanded MGs on the road.
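The relation described above can be illustrated with a Bureau of Public Roads (BPR)-style congestion function, a common choice for linking flow to travel time. The functional form, the default values of `delta` and `rho`, and the function name are assumptions for illustration; the paper's own expression may differ.

```python
def travel_time(t_free, base_flow, n_evs, capacity, delta=0.15, rho=4.0):
    """BPR-style real-time travel time on road (e, g).

    t_free:    congestion-free travel time t_rd(e, g)
    base_flow: basic traffic volume d^rd of other vehicles
    n_evs:     number of participating EVs on the road (sum of N^rd over EVs)
    capacity:  maximum road capacity V^max
    delta/rho: congestion factors (default values are hypothetical)
    """
    flow = base_flow + n_evs  # real-time traffic flow F^rt
    return t_free * (1.0 + delta * (flow / capacity) ** rho)


# An empty road keeps its free-flow time; a road at capacity is slower.
empty = travel_time(t_free=10.0, base_flow=0, n_evs=0, capacity=100)
full = travel_time(t_free=10.0, base_flow=90, n_evs=10, capacity=100)
```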

Dispatching costs of EVs

As EVs move within the transportation network, they consume energy, and their travel time is also affected by real-time road congestion. Therefore, we consider the energy consumption cost, time cost, and battery degradation cost of EVs moving between different CDSs for charging and discharging, calculated as follows:

where \(d_{bat}^{ev}\) represents the battery depreciation cost per unit of charging/discharging for EVs, \(P_{i,t}^{evd}/P_{i,t}^{evc}\) indicates the discharging/charging power of EV i at time t. \(\kappa\) and \(\sigma\) represent the energy consumption cost per unit distance and the cost coefficient per unit time for EVs, respectively. \(D_{i,t}^{ev}\) and \(T_{i,t}^{dri}\) represent the moving distance and traveling time.
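A minimal sketch of the dispatching cost, assuming Eq. (15) is the sum of a battery degradation term, a distance-proportional energy term, and a time-proportional term. The additive form is inferred from the symbol definitions above, and the function name and numeric values are hypothetical.

```python
def ev_dispatch_cost(p_dis, p_ch, distance, drive_time, d_bat, kappa, sigma):
    """Per-step dispatching cost of one EV (assumed additive form).

    p_dis, p_ch: discharging / charging power P^evd, P^evc
    distance:    moving distance D^ev
    drive_time:  traveling time T^dri (already reflects congestion)
    d_bat:       battery depreciation cost per unit of charging/discharging
    kappa:       energy consumption cost per unit distance
    sigma:       cost coefficient per unit time
    """
    degradation = d_bat * (p_dis + p_ch)
    energy = kappa * distance
    time_cost = sigma * drive_time
    return degradation + energy + time_cost


cost = ev_dispatch_cost(p_dis=20.0, p_ch=0.0, distance=5.0, drive_time=0.5,
                        d_bat=0.05, kappa=0.2, sigma=1.0)
```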

Moreover, the constraints of routing and scheduling behaviors for EVs are represented as follows:

where Eqs. (16) and (17) represent the charging and discharging constraints of EVs. The binary variables \(\mu _{i,t}^{c}\) and \(\mu _{i,t}^{d}\) represent the charging and discharging decisions of EV. Equation (18) guarantees that the charging and discharging patterns of EV i cannot be triggered simultaneously. Equations (19) and (20) indicate the energy storage dynamic and the minimum and maximum energy storage levels of EVs.
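The constraints described above can be sketched as a single feasibility-checked update of an EV's stored energy. The efficiency parameters `eta_c` and `eta_d`, the step length `dt`, and the function name are assumptions not stated in the text; the structure (power bounds gated by binary decisions, mutual exclusivity, energy dynamics, and energy limits) follows the description of Eqs. (16)–(20).

```python
def step_ev_energy(energy, p_ch, p_dis, mu_c, mu_d, p_ch_max, p_dis_max,
                   e_min, e_max, eta_c=0.95, eta_d=0.95, dt=1.0):
    """Advance the stored energy of one EV by one time step, or raise if infeasible."""
    # Charging and discharging cannot be triggered simultaneously.
    if mu_c + mu_d > 1:
        raise ValueError("charging and discharging cannot be active together")
    # Power bounds, gated by the binary decisions mu_c and mu_d.
    if not (0.0 <= p_ch <= mu_c * p_ch_max and 0.0 <= p_dis <= mu_d * p_dis_max):
        raise ValueError("power outside allowed range")
    # Energy storage dynamics (efficiencies are an assumption).
    new_energy = energy + (eta_c * p_ch - p_dis / eta_d) * dt
    # Minimum and maximum energy storage levels.
    if not (e_min <= new_energy <= e_max):
        raise ValueError("energy level outside [e_min, e_max]")
    return new_energy


next_energy = step_ev_energy(energy=50.0, p_ch=10.0, p_dis=0.0, mu_c=1, mu_d=0,
                             p_ch_max=20.0, p_dis_max=20.0, e_min=10.0, e_max=90.0)
```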

Problem formulation

The key issue of this paper is to optimize the routing and charging-discharging scheduling of EVs in the CPTN. The goal is to achieve maximum load restoration in MMGs with the minimum energy consumption cost of the EVs.

POMDP modeling

To address the resilient load restoration problem of MMGs, the optimal routing and scheduling of EVs is formulated as a partially observable Markov decision process (POMDP)33, denoted as \(\langle \varvec{S}, \varvec{O}, \varvec{A}, \varvec{R}, \varUpsilon \rangle\). Then a distributed MADRL-based method is proposed to solve the optimization problem.

State space

The state space of the CPTN system is defined as \(\varvec{S} _t=\left\{ \varvec{S} _1, \varvec{S} _2, \varvec{S} _3 \right\}\), comprising three parts:

(1) The first channel of state \(\varvec{S}_1\) is shown in Fig. 3a, including the positions \(\left( x\left( h \right) , y\left( h \right) \right) _{h\in \varvec{H}}\) of the CDSs and the quantity of shedding load \(P_{h,t}^{ls}\), i.e., \(\varvec{S} _1=\left\{ \left( x\left( h \right) , y\left( h \right) , P_{h,t}^{ls} \right) \right\} _{h\in \varvec{H}, t\in \varvec{T}}\). Here, we utilize the index of CDSs to represent their corresponding MGs. Additionally, when \(P_{h,t}^{ls}\) is positive, it indicates that the MG corresponding to CDS h experiences load shedding; when \(P_{h,t}^{ls}\) is negative, it signifies that the MG corresponding to CDS h has surplus electricity.

(2) The second channel of state \(\varvec{S}_2\) is shown in Fig. 3b, including the positions \(\left( x\left( e \right) , y\left( e \right) \right) _{e\in \varvec{V}}\) of nodes, and the traffic volume \(F_{\left( e,g \right) ,t}^{rt}\) of road (e, g), i.e., \(\varvec{S} _2={\left\{ \left( x\left( e \right) , y\left( e \right) , F_{\left( e,g \right) ,t}^{rt} \right) \right\} }_{e\in \varvec{V}, t\in \varvec{T}}\).

(3) The third channel of state \(\varvec{S}_3\) is shown in Fig. 3c, which includes the positions \(\left( x\left( i \right) , y\left( i \right) \right) _{i\in \varvec{I}}\) and remaining energy \(E_{i,t}^{ev}\) of all EVs, i.e., \(\varvec{S} _3=\left\{ \left( x\left( i \right) , y\left( i \right) , E_{i,t}^{ev} \right) \right\} _{i\in \varvec{I}, t\in \varvec{T}}\).
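One way to turn the three channels above into an image-like input for a CNN is to rasterize them onto a common grid. The sketch below assumes integer grid coordinates and assigns each road's traffic volume to a single node cell; the grid size, this aggregation, and the function name `build_state` are illustrative assumptions rather than the paper's encoding.

```python
def build_state(grid, cds, nodes, evs):
    """Build a 3 x grid x grid state as nested lists.

    cds:   list of (x, y, P_ls)    -> channel 0 (load shedding at each CDS)
    nodes: list of (x, y, traffic) -> channel 1 (traffic volume near each node)
    evs:   list of (x, y, energy)  -> channel 2 (remaining energy of each EV)
    Coordinates are integer cell indices in [0, grid).
    """
    state = [[[0.0] * grid for _ in range(grid)] for _ in range(3)]
    for channel, items in enumerate((cds, nodes, evs)):
        for x, y, value in items:
            state[channel][y][x] += value  # EVs sharing a cell are summed
    return state


state = build_state(
    grid=4,
    cds=[(1, 2, 5.0)],
    nodes=[(0, 0, 3.0)],
    evs=[(1, 2, 40.0), (3, 3, 10.0)],
)
```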

Action space

The action space is defined as \(\varvec{A} \triangleq \left\{ a_{i,t}=\left( \theta _{i,t}, l_{i,t}, P_{i,t}^{sch} \right) _{i\in \varvec{I}} \right\}\), where \(\theta _{i,t}\) and \(l_{i,t}\) represent the moving direction and distance of EV i at time step t, respectively, and \(P_{i,t}^{sch}\in \left[ -1,1 \right]\) denotes the charging/discharging schedule of the EV. At each time slot t, each EV decides its direction \(\theta _{i,t}\in \left[ 0, 2\pi \right)\) and distance \(l_{i,t}\in \left[ 0, l_{\max } \right]\) of movement based on the load shedding of each MG and the real-time traffic conditions, aiming to achieve the maximum load restoration with minimum movement cost. Additionally, EVs must comply with driving regulations and travel along the roads, incurring penalties if the direction and distance of movement take them outside the boundaries (coordinates) of the roads. When an EV arrives at a specific CDS, \(P_{i,t}^{sch}\) gives its charging (positive) or discharging (negative) power as a fraction of the corresponding limit of the power range \([ -P_{i,\max }^{evd}, P_{i,\max }^{evc} ]\). Unlike existing work, this paper treats the direction and distance of the EV’s movement as continuous variables.

Reward function

The objective of this paper is to minimize the load reduction costs of isolated MGs in an energy-efficient way. Then, combining Eqs. (1) and (15), the reward function can be defined as follows:

where \(\omega _t\) denotes the fairness of load restoration in the MG, \(c_{m}^{ls}P_{m,t}^{ls}\) represents the load shedding cost of MG, \(C_{i,t}^{ev}\left( P_{i,t}^{evd} \right)\) and \(C_{i,t}^{ev}\left( P_{i,t}^{evc} \right)\) are the discharging and charging cost of EV i, respectively. Rewards incentivize load restoration (\(-c_{m}^{ls}P_{m,t}^{ls}\)) and efficient EV usage. Traffic congestion costs are inherently captured by \(T_{i,t}^{dri}\) in \(C_{i}^{ev}\) (Eq. 15).

Load restoration fairness

However, driven by the rational pursuit of profit, an EV is inclined to discharge at nearby MGs and then recharge at the closest ones, potentially neglecting MGs located in more remote areas. This tendency may result in insufficient or even no load restoration for certain MGs. Therefore, we introduce a fairness index \(\omega _t\) for load restoration across all isolated MGs, which can be represented by Jain’s fairness index41, defined as:

where \(P_{t}^{load}\left( \pi ,m \right)\) represents the load restoration amount of MG m under EV scheduling strategy \(\pi\). As the value of \(\omega _t\left( \pi \right) \in [ \frac{1}{M}, 1 ]\) increases, the load restoration process among different MGs becomes more equitable.
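Jain’s fairness index has the well-known form \(\left( \sum _m x_m \right) ^2 / \left( M \sum _m x_m^2 \right)\). The sketch below applies it to per-MG restoration amounts to show the two extremes of the range \([ \frac{1}{M}, 1 ]\). The function name and the convention used for an all-zero input are assumptions.

```python
def jain_fairness(restored):
    """Jain's fairness index over the load restored in each MG."""
    total = sum(restored)
    squares = sum(x * x for x in restored)
    if squares == 0:
        return 1.0  # assumption: an all-zero allocation is treated as perfectly even
    return total * total / (len(restored) * squares)


even = jain_fairness([1.0, 1.0, 1.0, 1.0])    # every MG restored equally
skewed = jain_fairness([4.0, 0.0, 0.0, 0.0])  # one MG receives everything
```

With M = 4, the even allocation reaches the upper bound 1 and the fully skewed allocation reaches the lower bound 1/M.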

According to the predetermined state transition \(\varUpsilon\) and reward function \(r_{i,t}\), the optimization problem in this paper can be formulated as:

where \(\gamma\) denotes the discount factor. At time slot t, each EV i makes decisions to learn the optimal scheduling policy \(\pi _{i}^{*}\), aiming to maximize its cumulative total reward, which can be defined as follows:

To solve the continuous optimal routing and scheduling problem of EV i, we propose a distributed multi-agent DRL (MADRL) method to learn the optimal policy \(\pi _{i}^{*}\) for each EV. However, the optimal routing decision of an EV involves the spatial features of the CPTN. Additionally, due to the limited maximum distance an EV can move within a single time slot t, reaching a CDS may require multiple steps. Therefore, exploiting both the spatial and temporal features of EV movements is critical to solving our problem. In this paper, we use a convolutional neural network (CNN) to capture the spatial characteristics and integrate a long short-term memory (LSTM) network to extract temporal features, thereby improving the long-term performance of our model.

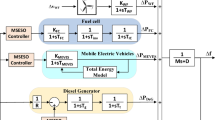

Distributed deep deterministic policy gradient framework

In this section, we propose an attention-based distributed multi-agent deep deterministic policy gradient (AD-MADDPG) framework to address the continuous routing and scheduling problem for each EV.

Attention-based distributed actor-critic with spatial state modeling

Each EV aims to navigate through the transportation network to reach the corresponding CDS associated with each isolated MG, minimizing load shedding losses for the MG during disconnection periods. Simultaneously, to reduce its energy consumption, EV i needs to optimize both its charging/discharging decisions and its movement paths within the transportation network. Therefore, acquiring effective spatial state information about the CPTN is crucial for the EV’s decision-making. To facilitate the extraction of spatial features from the CPTN, we divide the transportation network into a grid graph and utilize a CNN to extract the spatial information, as illustrated in Fig. 4.

Furthermore, for each EV i, four deep neural networks (DNNs) are implemented as Actor network \(\theta _{i}^{a}\), Target Actor network \(\theta _{i}^{ta}\), Critic network \(\theta _{i}^{c}\) and Target Critic network \(\theta _{i}^{tc}\). At each time slot t, EV i obtains an observation \(o_{i,t}\) and then the Actor \(\theta _{i}^{a}\) decides on an action \(a_{i,t}\) to execute according to the policy \(\pi _i\left( a_{i,t}\left| o_{i,t} \right. \right)\) combined with an attention mechanism. Here the attention mechanism learns a weight distribution \(W_i\) over the input observation and applies it to the original features, enabling the learning task to focus on the most important features, thereby improving efficiency. The attention mechanism is calculated as follows:

The attention mechanism can adaptively adjust the attention weights \(W_i\) and feature weighting based on changes in the environment and tasks, thereby optimizing policy selection and improving reinforcement learning performance.
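As an illustration of learning a weight distribution over the observation and applying it to the original features, the sketch below normalizes a set of scores with a softmax and rescales each feature by its weight. In a trained model the scores would come from a learned layer; here they are passed in directly, and both the softmax form and the function name are assumptions rather than the paper's exact formulation.

```python
import math


def attention_reweight(features, scores):
    """Turn scores into attention weights W_i (softmax) and reweight the features."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    weights = [e / z for e in exps]
    weighted = [w * f for w, f in zip(weights, features)]
    return weights, weighted


# Equal scores give uniform weights; a higher score shifts weight to that feature.
uniform_w, uniform_f = attention_reweight([2.0, 4.0], [0.0, 0.0])
focused_w, focused_f = attention_reweight([2.0, 4.0], [0.0, 3.0])
```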

After each EV takes actions according to its private observation, the corresponding reward \(r_{i,t}\) is calculated for each EV, and the state \(s_t\) transitions to \(s_{t+1}\). This transition includes the amount of load shedding \(P_{m,t}^{ls}\), the traffic volume \(F_{\left( e,g \right) ,t}^{rt}\) of road (e, g), the EV location \(\left( x\left( i \right) , y\left( i \right) \right) _{i\in \varvec{I}}\), and the remaining energy \(E_{i,t}^{ev}\) of each EV. At the end of each time slot t, the state transition tuple \((o_{i,t}, a_{i,t}, r_{i,t}, o_{i,t+1})\) generated from the interaction between EV and the environment is stored into the experience replay memory. After a fixed number of interactions, a mini-batch of state transitions is sampled from the experience replay memory to train the DNNs. Then, the critic network \(\theta _{i}^{c}\) is updated by minimizing the following loss function:

where the target Q-value \(y_{i,t}^{\theta _{i}^{tc}}\) is calculated by:

Finally, the actor network \(\theta _{i}^{a}\) is updated using gradients as:
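For orientation, the three update rules can be written in their standard single-agent DDPG form using this section's symbols. This is a sketch under that assumption; the paper's expressions may additionally involve the attention weights \(W_i\) or the multi-step reward introduced later.

```latex
% Critic loss: mean-squared temporal-difference error over a mini-batch
L\left(\theta_i^{c}\right) = \mathbb{E}\left[\left(y_{i,t}^{\theta_i^{tc}} - Q_i\left(o_{i,t}, a_{i,t} \mid \theta_i^{c}\right)\right)^2\right]

% Target Q-value from the target actor and target critic
y_{i,t}^{\theta_i^{tc}} = r_{i,t} + \gamma\, Q_i\left(o_{i,t+1}, \pi_i\left(o_{i,t+1} \mid \theta_i^{ta}\right) \mid \theta_i^{tc}\right)

% Deterministic policy gradient for the actor
\nabla_{\theta_i^{a}} J \approx \mathbb{E}\left[\nabla_{a} Q_i\left(o_{i,t}, a \mid \theta_i^{c}\right)\Big|_{a=\pi_i\left(o_{i,t} \mid \theta_i^{a}\right)} \nabla_{\theta_i^{a}} \pi_i\left(o_{i,t} \mid \theta_i^{a}\right)\right]
```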

Given the scale of the CPTN, EVs require significant time and numerous transitions to fully explore its spatial and temporal features. To reduce the training complexity and enable the agent to learn optimal routing and scheduling strategies efficiently, we adopt a multi-actor, single-learner training framework. Specifically, the learner is implemented using the DDPG method, which consists of four DNNs (see Fig. 4). Each independent actor (EV) copies the policy network parameters from the learner and updates its policy network periodically. Each actor maintains a local experience replay memory \(B_{i}^{local}\) to store the transitions generated from its local observations in sequence, while the learner utilizes a global memory \(B_{i}^{global}\) for each EV \(i\). Once \(B_{i}^{local}\) is full, it sends all its data to \(B_{i}^{global}\). Since the multiple actors and the single learner operate in parallel, this framework allows for distributed execution of exploration and learning tasks, improving efficiency.
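The hand-off between the local and global memories can be sketched as follows. The class name, the use of a plain list as the global memory, and clearing the local memory after the transfer are assumptions for illustration; a real implementation would also need synchronization between parallel actors and the learner.

```python
class LocalMemory:
    """Local replay memory of one actor that flushes to a global memory when full."""

    def __init__(self, capacity, global_memory):
        self.capacity = capacity
        self.global_memory = global_memory  # shared store read by the learner
        self.buffer = []

    def store(self, transition):
        """Store one transition (o_t, a_t, r_t, o_next) in arrival order."""
        self.buffer.append(transition)
        if len(self.buffer) >= self.capacity:
            # Local memory is full: send all data to the global memory.
            self.global_memory.extend(self.buffer)
            self.buffer.clear()  # assumption: the local memory is emptied afterwards


global_memory = []
local = LocalMemory(capacity=2, global_memory=global_memory)
local.store(("o0", "a0", 1.0, "o1"))  # stays local
local.store(("o1", "a1", 0.5, "o2"))  # triggers the flush
```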

LSTM-enhanced temporal sequence features extraction

Within a single time slot t, the immediate step reward \(r_{i,t}\) only reflects benefits or losses at that moment and cannot capture the cumulative rewards of the EV over multiple time slots, which is essential for long-distance travel (since reaching a CDS may require multiple steps due to the maximum distance constraint within a single time slot t). Therefore, relying solely on the value of previous observations and actions based on \(Q_i\left( o_{t+1}, a_{i,t+1} \right)\) may not be accurate during the initial stages of training. Additionally, some EVs may develop a tendency to rely on specific CDSs that are suboptimal or may frequently return to recharge without contributing to load restoration rewards. Hence, it is crucial to extract multi-step temporal sequence features to address this issue. To better capture the long-term impact of policy choices and help the agent evaluate the future value of its actions, this paper calculates the cumulative reward over multiple steps as follows:

Accordingly, the new transition takes \(o_{i,t}\) and \(o_{i,t+N-1}\) as its initial and final observations, giving \(\left( o_{i,t}, a_{i,t}, \lambda _{i,t}, o_{i,t+N-1} \right)\).
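The construction of such a multi-step transition can be sketched as follows. The sketch assumes the common discounted form of an N-step return, \(\lambda _{i,t}=\sum _{k=0}^{N-1}\gamma ^k r_{i,t+k}\); the discount factor `gamma` and the function names are illustrative assumptions and may differ from the exact expression used in the paper.

```python
def n_step_reward(rewards, t, n, gamma=0.99):
    """Discounted sum of r_t ... r_{t+n-1} (assumed form of lambda_{i,t})."""
    return sum((gamma ** k) * rewards[t + k] for k in range(n))


def n_step_transition(observations, actions, rewards, t, n, gamma=0.99):
    """Build (o_t, a_t, lambda_t, o_{t+n-1}) from one EV's trajectory."""
    lam = n_step_reward(rewards, t, n, gamma)
    return (observations[t], actions[t], lam, observations[t + n - 1])


# Toy trajectory for a single EV (placeholder values, not simulation data).
observations = ["o0", "o1", "o2", "o3"]
actions = ["a0", "a1", "a2", "a3"]
rewards = [1.0, 2.0, 3.0, 4.0]

transition = n_step_transition(observations, actions, rewards, t=0, n=3, gamma=0.5)
print(transition)  # ('o0', 'a0', 2.75, 'o2')
```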

To account for the multi-step reward for each EV i, with a transition spanning t to \(t+N-1\), we employ an LSTM network to capture additional temporal sequence information. Specifically, we feed two sequences of observations, \(\left\{ o_{i,t-\varepsilon +1}, o_{i,t-\varepsilon +2},\cdots , o_{i,t} \right\}\) and \(\left\{ o_{i,t+N-\varepsilon }, o_{i,t+N-\varepsilon +1},\cdots , o_{i,t+N-1} \right\}\), into the LSTM network to generate new observations \(\varPsi _{i,t}\) and \(\varPsi _{i,t+N-1}\), respectively, as shown in Fig. 5. This yields a new transition \(\left( \varPsi _{i,t}, a_{i,t}, \lambda _{i,t}, \varPsi _{i,t+N-1} \right)\) based on the LSTM network modeling. Here, \(\varepsilon\) is the LSTM sequence length. In this way, as an EV learns from mini-batches, it retrospectively considers multiple time slots, gaining insight into the cumulative impact of a sequence of actions and decisions. Additionally, the EV can simultaneously learn optimal charging or load restoration locations through this iterative process.
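The indexing of the two observation windows can be made concrete with a short sketch. Only the slicing is shown; the LSTM itself is omitted, and the function name and the use of a plain Python list as the observation history are assumptions for illustration.

```python
def lstm_input_windows(observations, t, n, eps):
    """Return the two length-eps windows ending at o_t and at o_{t+n-1}."""
    if t - eps + 1 < 0 or t + n > len(observations):
        raise ValueError("not enough observations for the requested windows")
    start_window = observations[t - eps + 1 : t + 1]  # o_{t-eps+1} ... o_t
    end_window = observations[t + n - eps : t + n]    # o_{t+n-eps} ... o_{t+n-1}
    return start_window, end_window


# Observation indices stand in for the observations themselves.
history = list(range(10))
start_window, end_window = lstm_input_windows(history, t=4, n=3, eps=3)
print(start_window, end_window)  # [2, 3, 4] [4, 5, 6]
```

Each window would then be passed through the LSTM to produce \(\varPsi _{i,t}\) and \(\varPsi _{i,t+N-1}\).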

Action clipping for safe exploration

When exploring the action space using DDPG, the physical system constraints of the EVs and MMGs must be satisfied. Otherwise, the resulting actions may threaten the safe operation of the MMGs and lead to severe consequences. Therefore, we clip the agents’ actions to ensure that the action exploration of EV agents complies with the constraints. In the load restoration problem of an isolated microgrid system, Eqs. (16)–(20) account for the constraints of EV charging/discharging scheduling. To satisfy these constraints, a Sigmoid activation function is used in the output layer of the Actor network, guaranteeing that the output actions \(\left[ \theta _{out,i,t},l_{out,i,t},P_{out,i,t}^{sch} \right]\) are normalized values between 0 and 1. These normalized values are then mapped back to absolute values within the operational range, expressed as follows:

where Eqs. (30) and (31) denote the action constraints for the EV’s movement direction and distance, respectively. Equation (32) represents the charging/discharging power constraint for the EV, while Eq. (33) ensures that the EV’s energy level at time step t always remains within its lower and upper bounds.
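One possible form of this mapping is sketched below. It is an illustration under stated assumptions rather than a reproduction of Eqs. (30)–(33): the direction is assumed to span \([0, 2\pi ]\), positive power is assumed to mean charging, conversion losses are ignored, and the parameter names (`l_max`, `p_max`, `e_min`, `e_max`, `dt`) are hypothetical. The default `dt=0.5` hours matches the scheduling interval used in the simulations.

```python
import math


def map_action(theta_out, l_out, p_out, energy, l_max, p_max, e_min, e_max, dt=0.5):
    """Map Sigmoid outputs in [0, 1] to a direction, a distance, and a safe power."""
    theta = 2.0 * math.pi * theta_out     # movement direction in radians
    distance = l_max * l_out              # movement distance in [0, l_max]
    power = (2.0 * p_out - 1.0) * p_max   # > 0 charging, < 0 discharging (assumed)

    # Clip the power so that energy + power * dt stays within [e_min, e_max].
    p_low = (e_min - energy) / dt
    p_high = (e_max - energy) / dt
    power = min(max(power, p_low), p_high)
    return theta, distance, power


# A nearly full battery limits charging; a nearly empty one limits discharging.
charge = map_action(0.5, 0.5, 1.0, energy=58.0, l_max=1.0, p_max=10.0, e_min=12.0, e_max=60.0)
discharge = map_action(0.5, 0.5, 0.0, energy=14.0, l_max=1.0, p_max=10.0, e_min=12.0, e_max=60.0)
print(charge[2], discharge[2])  # 4.0 -4.0
```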

Algorithm description

This subsection describes the AD-MADDPG algorithm for load restoration in isolated MMGs, which combines a CNN for spatial feature extraction with an LSTM that captures multi-step temporal sequence features. The single learner, based on the deep deterministic policy gradient (DDPG) method, replays mini-batches of transitions to update the policy network. The multiple actors operate independently within the environment, each evaluating a local policy \(\pi\) derived from the learner and recording the observed transition data in its local replay memory. Periodically and asynchronously, these actors transmit their local buffer data to the learner for each EV i. The training process of AD-MADDPG is described in Algorithms 1 and 2.

Performance evaluation

Simulation settings

To validate the performance of the proposed AD-MADDPG algorithm for resilient load restoration in EV-coordinated MMGs, we model the transportation network as a 2D square grid with dimensions of 32 \(\times\) 32 units. For the power network, we construct an off-grid operation scenario consisting of 5 MGs, based on a modified IEEE 33-bus test system, with the topological structure shown in Fig. 6. The CPTN includes 5 CDSs, which support EVs for charging and discharging. We assume that at 10:00 a.m., multiple faults occur, causing the MMGs to disconnect from the utility grid. The expected duration of the utility grid outage is 6 hours, with an EV scheduling interval of 0.5 hours (i.e., \(T = 12\)). Additionally, to validate the performance of EVs in participating in the load restoration of MMGs, we assume that the following lines are disconnected due to faults: B1-B2, B4-B5, B5-B6, and B11-B12. As a result, the MMGs are decomposed into 5 autonomously operating MGs, each utilizing internal DRES (e.g., PV and WT), DG, ESS and EVs to supply power to the loads within their respective islands. The technical parameters of each MG and EV are shown in Table 1.

For the DNN structure design, we use a 4-layer fully connected neural network with 2 hidden layers for the Actor, Critic, and Target networks. Each hidden layer has 64 neurons, and the activation function is ReLU. Additionally, we employ a CNN with 3 hidden layers to extract spatial features. In the i-th CNN layer, the number of filters is \(16\times 2^{\left( i-1 \right) }\), each of size \(3\times 3\) with stride 2. To prevent gradient explosion, batch normalization is applied in the CNN, and layer normalization is adopted in the LSTM. For the layer normalization in the LSTM, the gain is set to 1.0 and the shift is set to 0.0. The sequence length \(\varepsilon\) is chosen from the set \(\left\{ 2,3,4 \right\}\).
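To make the CNN configuration concrete, the following sketch computes the filter counts and feature-map sizes layer by layer. It assumes that the CNN input is the 32 \(\times\) 32 transportation grid and that no padding is used; neither assumption is stated in the text, so the resulting spatial sizes are indicative only.

```python
def conv_output_size(size, kernel=3, stride=2, padding=0):
    """Standard convolution output-size formula for one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def cnn_feature_shapes(input_size=32, num_layers=3):
    """Return (filters, height, width) after each of the CNN layers."""
    shapes = []
    size = input_size
    for i in range(1, num_layers + 1):
        filters = 16 * 2 ** (i - 1)  # 16 x 2^(i-1) filters in the i-th layer
        size = conv_output_size(size)
        shapes.append((filters, size, size))
    return shapes


shapes = cnn_feature_shapes()
print(shapes)  # [(16, 15, 15), (32, 7, 7), (64, 3, 3)]
```

Under these assumptions, the flattened feature vector after the third layer has 64 \(\times\) 3 \(\times\) 3 = 576 entries.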

To validate the effectiveness of the proposed AD-MADDPG algorithm, we compared it with several baseline algorithms, which are listed as follows:

- MADDQN (ref. 15): This method optimizes the routing and scheduling of mobile energy storage systems (MESS) for load restoration. To implement the MADDQN-based simulation, we discretized the routing and scheduling actions of the EVs to verify its convergence.

- MADDPG (ref. 35): It is regarded as the state-of-the-art method for multi-agent deep reinforcement learning, demonstrating superior performance in cooperative and competitive multi-agent environments compared to other DRL methods. In this paper, we employ MADDPG’s centralized training with decentralized execution to learn optimal routing and scheduling policies.

- AD-MADDPG w/o LSTM: In this version, the policy network relies only on the current observation \(o_{i,t}\) at time slot t, without the proposed LSTM-enhanced multi-step temporal sequence feature extraction.

Evaluation of AD-MADDPG

Convergence analysis

The training curves for the EVs are compared in Fig. 7. The left side of Fig. 7 shows the training loss for both the Actor and Critic networks, while the right side displays the average reward along with the standard deviation (shaded region) over 10000 episodes. The loss values for both the Actor and Critic networks are high at the beginning, because the initial approximation is imprecise, and then decrease rapidly. With the multi-actor, single-learner framework and the LSTM-enhanced multi-step temporal sequence feature extraction, the losses gradually converge to a smaller numerical range at around 4000 episodes, demonstrating the convergence of the proposed AD-MADDPG algorithm. Furthermore, the right side of Fig. 7 shows that AD-MADDPG outperforms the three baselines in terms of both the converged average reward and the convergence speed. Specifically, the converged average reward for AD-MADDPG is 21.5% higher than that of AD-MADDPG w/o LSTM. These results indicate that our method learns a more effective policy, maximizing load restoration in isolated MMGs while reducing the energy consumption of EVs.

Voltage analysis of MGs

The hourly voltage fluctuation curves for different buses in the MMGs are shown in Fig. 8. All bus voltages remain within the range of 0.95–1.05 p.u., confirming that each MG independently sustains a stable voltage profile. In particular, even Bus 6, which shows the largest fluctuations (±0.015 p.u.), stays strictly within the safe range. In addition, voltage fluctuations intensify during 08:00–19:00 due to concurrent spikes in MG load consumption and renewable generation (PV/WT). The increased PV/WT output elevates local voltages, while load surges create temporary drops. Our coordination framework dynamically balances these opposing effects through EV scheduling, ensuring overall stability.

Computational performance

The computational performance of the evaluated methods is shown in Table 2. MADDQN has the longest total training time, primarily because the number of DNNs increases nearly linearly with the number of EVs and because of its large-scale discrete action space. In contrast, MADDPG has the shortest training time, thanks to its deterministic policy updates and simplified action selection process. Additionally, the AD-MADDPG algorithm converges in the fewest episodes, benefiting from the multi-actor, single-learner architecture of MADRL and the LSTM-enhanced multi-step temporal sequence modeling. These results indicate that AD-MADDPG achieves higher action exploration efficiency during training by leveraging the multi-step expected discounted reward calculation. This leads to higher average rewards for EVs in load restoration and demonstrates superior computational performance within a reasonable training time.

Evaluation of load restoration

To verify the performance of EV scheduling policies for load restoration in islanded MMGs, we conducted a comparative analysis between scenarios with and without EV participation. The comparison results are illustrated in Fig. 9.

Fig. 9a shows that, when EVs do not participate, MG 1 must curtail DRES (wind and solar) power because of an abundance of renewable energy. However, when EVs are incorporated into MG 1’s operation scheduling, the surplus renewable energy is effectively utilized. After being fully charged in MG 1, these EVs can leverage their mobile energy storage capability to discharge in other MGs where power generation is insufficient. Fig. 9b demonstrates that MG 3 achieves a basic supply-demand balance without EVs. However, this balance comes at the cost of extensive diesel generator use and frequent ESS charge-discharge cycles, leading to high operational costs and significant carbon emissions. With the participation of EVs, the reliance on diesel generation and ESS scheduling in MG 3 is significantly reduced, resulting in substantial cost savings. Fig. 9c shows that, when EVs are not involved, MG 4 sheds a substantial amount of load to maintain power balance because of insufficient output from DRES. When EVs participate, they facilitate better load recovery by discharging their batteries into the MGs. Table 3 compares the total costs under the two strategies. Compared to Strategy 1 (without EV participation), Strategy 2 (with EV participation) reduces both DRES curtailment costs and load shedding costs, directly avoiding an economic loss of 42,659.33 CNY, which accounts for approximately 52.53% of the total cost under Strategy 1.

Accordingly, under the strategy where EVs do not participate in the operation scheduling of MMGs, due to the lack of effective energy transmission channels, the surplus DRES in MG 1 cannot support the load of MG 4, resulting in curtailment of wind and solar power in MG 1 and load shedding in MG 4. Conversely, when EVs participate in the scheduling of MMGs, the mobile energy storage feature of EVs can be fully utilized to establish temporary electricity transmission channels, thereby transferring surplus renewable energy from MG 1 to support critical loads in MG 4, enhancing the overall operational resilience of the entire MMGs.

Evaluation of EVs scheduling

To evaluate the scheduling performance of EVs, we compare the proposed AD-MADDPG with the three baselines (described in the Simulation settings subsection) in terms of the following three metrics.

- Load restoration ratio \(\sigma ^l\): determined as the ratio of the total amount of load restored to the initial amount of shed load over T time slots.

- Restoration fairness \(\omega _t\): defined by Eq. (22) to illustrate how evenly the load associated with MGs is restored by all EVs over T time slots.

- Energy consumption ratio \(\sigma ^e\): computed as the ratio of the total energy consumed (for movement) by all EVs to the initial energy reserve over T time slots.
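The two ratio metrics can be computed as in the following sketch. The function names, argument layout, and the numbers in the example are hypothetical and chosen only to illustrate the definitions; restoration fairness is omitted because it depends on Eq. (22), which is defined elsewhere in the paper.

```python
def load_restoration_ratio(restored_per_slot, initial_shed_load):
    """sigma^l: total load restored over T slots / initial amount of shed load."""
    return sum(restored_per_slot) / initial_shed_load


def energy_consumption_ratio(movement_energy_per_ev, initial_energy_per_ev):
    """sigma^e: total movement energy of all EVs / total initial energy reserve."""
    return sum(movement_energy_per_ev) / sum(initial_energy_per_ev)


# Placeholder values (not simulation results).
sigma_l = load_restoration_ratio([10.0, 20.0, 30.0], initial_shed_load=80.0)
sigma_e = energy_consumption_ratio([2.0, 3.0], [20.0, 30.0])
print(sigma_l, sigma_e)  # 0.75 0.1
```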

We conduct three sets of simulations by varying the number of EVs I, the maximum discharging proportion \(P^{sch}_{\max }\), and the maximum moving distance \(l_{\max }\) in a time slot. The comparison results in terms of load restoration ratio \(\sigma ^l\), restoration fairness \(\omega _t\), and energy consumption ratio \(\sigma ^e\) are illustrated in Fig. 10. As shown in Fig. 10a, we fixed \(P^{sch}_{\max }=70\%\) and \(l_{\max }=1.0\), while the number of EVs I changes from 20 to 60. In this case, AD-MADDPG consistently outperforms the other three baselines in terms of load restoration. For example, when \(I=30\), AD-MADDPG achieves a load restoration ratio of 0.924, compared to 0.814 achieved by the best baseline, AD-MADDPG w/o LSTM, a 13.5% improvement. On average, AD-MADDPG improves the load restoration ratio by 9.5%, 22.6%, and 41.5% over AD-MADDPG w/o LSTM, MADDPG, and MADDQN, respectively. In addition, as the number of EVs increases, the load restoration ratio, restoration fairness, and energy consumption all increase, the last because a larger fleet consumes more energy in total. However, benefiting from the LSTM-based N-step temporal sequence modeling and the multi-actor, single-learner architecture design, AD-MADDPG facilitates effective collaboration among multiple EVs. This leads to better scheduling strategies during the learning process, thereby improving the load restoration ratio and restoration fairness while keeping the increase in energy consumption relatively slow. Specifically, for the energy consumption ratio, AD-MADDPG achieves an average reduction of 8.9%, 17.8%, and 21.4% over AD-MADDPG w/o LSTM, MADDPG, and MADDQN, respectively.
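The 13.5% figure quoted for \(I=30\) is a relative improvement over the baseline value, which can be checked with a short calculation. The helper name below is illustrative.

```python
def relative_improvement(new, baseline):
    """Relative gain of `new` over `baseline`, as a fraction of the baseline."""
    return (new - baseline) / baseline


improvement = relative_improvement(0.924, 0.814)
print(f"{improvement:.1%}")  # 13.5%
```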

In Fig. 10b, we fixed \(I=30\), \(l_{\max }=1.0\), while the maximum discharging ratio \(P^{sch}_{\max }\) changes from 0.4 to 0.8 with a step size of 0.1. As can be seen, AD-MADDPG outperforms all baselines in terms of load restoration, restoration fairness, and energy consumption. This is because as the maximum discharging ratio increases, EVs can strategically adjust their discharging rates based on their remaining energy status during each time slot. This enables them to supply more electrical energy to MGs experiencing energy deficits, thereby increasing the load restoration ratio. Furthermore, by discharging more energy in each time slot, EVs can reduce the frequency of their movements required for charging and discharging, consequently lowering energy consumption costs and enhancing overall operational efficiency. These results demonstrate that our spatial-temporal cooperative method effectively trains multiple agents to cooperate in a distributed manner, thereby enhancing the load restoration ratio while reducing energy consumption and maintaining a high level of restoration fairness.

Figure 10c evaluates the impact of the maximum moving distance \(l_{\max }\) of EVs in a time slot on the three metrics. We fixed \(I=30\) and \(P^{sch}_{\max }=80\%\), while the maximum moving distance \(l_{\max }\) changes from 0.6 to 1.4 with a step size of 0.2. As can be observed from Fig. 10c, the proposed algorithm demonstrates a significant performance improvement over the three benchmark algorithms. Specifically, it achieves an average increase in load restoration ratio of 9.6%, 19.8%, and 25.5%, respectively. Additionally, it improves restoration fairness by 7.9%, 16.5%, and 40.9%, and reduces the energy consumption ratio by 8.2%, 15.7%, and 23.6% on average, respectively. As the maximum moving distance increases, the load restoration ratio of the MMGs and the restoration fairness gradually improve and then plateau, while the energy consumption ratio exhibits only slight fluctuations. This occurs because increasing the maximum moving distance of EVs within a single time slot allows the EVs to cover greater distances over the entire episode with a fixed number of slots. As a result, EVs can reach more distant MGs during the resilient load restoration process, providing more flexible routing and charge-discharge scheduling. This enhances the overall system’s collaborative performance.

Conclusion

This paper focuses on improving the resilient load restoration of MMGs by integrating EVs. The impact of EVs on load restoration of MMGs is analyzed using a constructed CPTN system, which couples the MMGs’ power network with the transportation network. To reduce load-shedding costs during off-grid operation and reduce the energy consumption of EVs, a distributed DDPG algorithm with a multi-actor, single-learner training structure is implemented to explore the performance of routing and charging-discharging scheduling. The main findings of this paper are summarized as follows:

- To investigate resilient load restoration in MMGs, we constructed a coupled power-transportation network. This model represents the off-grid operation of MMGs and the optimal routing and scheduling of EVs, taking into account the uncertainty factors of both MGs and the transportation network.

- To maximize the load restoration and restoration fairness among MMGs while minimizing the energy consumption of EVs, a distributed multi-agent DDPG approach, called AD-MADDPG, is proposed. This approach incorporates a CNN for spatial feature extraction and an LSTM for temporal sequence modeling. Furthermore, we improve AD-MADDPG by integrating a multi-actor, single-learner framework to increase the learning speed and quality.

- Simulation results demonstrate that: (1) the involvement of EVs in the resilient operation of MMGs significantly reduces the load shedding costs; (2) compared to benchmark algorithms, the proposed AD-MADDPG, which uses a multi-actor, single-learner framework along with LSTM-enhanced multi-step temporal feature extraction, effectively accelerates DNN training and facilitates the learning of multi-agent cooperation strategies, thereby improving load restoration and restoration fairness while reducing energy consumption.

In future work, we will provide a more detailed modeling of the uncertainty factors within transportation networks and analyze their impact on EV scheduling. Additionally, curriculum learning and parameter sharing will be integrated into the multi-actor single-learner framework to further enhance the learning efficiency of the distributed multi-agent training process, thereby establishing a robust foundation for the practical application of the AD-MADDPG algorithm in real-world environments.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

1. Xiong, H. et al. Resilience enhancement for distribution system with multiple non-anticipative uncertainties based on multi-stage dynamic programming. IEEE Trans. Smart Grid 15, 5706–5720 (2024).

2. Amini, F., Ghassemzadeh, S., Rostami, N. & Tabar, V. S. A stochastic two-stage microgrid formation strategy for enhancing distribution network resilience against earthquake event incorporating distributed energy resources, parking lots and responsive loads. Sustain. Cities Soc. 101, 105191 (2024).

3. Kamal, F., Chowdhury, B. H. & Lim, C. Networked microgrid scheduling for resilient operation. IEEE Trans. Ind. Appl. 60, 2290–2301 (2023).

4. Zhang, W. et al. Spatial-temporal resilience assessment of distribution systems under typhoon coupled with rainstorm events. IEEE Trans. Ind. Inform. 21, 188–197 (2024).

5. Shi, W., Liang, H. & Bittner, M. Data-driven resilience enhancement for power distribution systems against multishocks of earthquakes. IEEE Trans. Ind. Inform. 20, 7357–7369 (2024).

6. Qin, H. & Liu, T. Resilience enhancement of multi-microgrid system of systems based on distributed energy scheduling and network reconfiguration. J. Electr. Eng. Technol. 19, 2135–2147 (2024).

7. Monteiro, M. R., de Souza, A. Z. & Abdelaziz, M. Hierarchical load restoration for integrated transmission and distribution systems with multi-microgrids. IEEE Trans. Power Syst. 39, 7050–7063 (2024).

8. Liang, Z., Qian, T., Korkali, M., Glatt, R. & Hu, Q. A vehicle-to-grid planning framework incorporating electric vehicle user equilibrium and distribution network flexibility enhancement. Appl. Energy 376, 124231 (2024).

9. Zou, X., Wang, Y. & Strbac, G. A resilience-oriented pre-positioning approach for electric vehicle routing and scheduling in coupled energy and transport sectors. Sustain. Energy, Grids Netw. 39, 101484 (2024).

10. Mondal, S., Ghosh, P. & De, M. Evaluating the impact of coordinated multiple mobile emergency resources on distribution system resilience improvement. Sustain. Energy, Grids Netw. 38, 101252 (2024).

11. Kazemtarghi, A. & Mallik, A. A two-stage stochastic programming approach for electric energy procurement of EV charging station integrated with BESS and PV. Electr. Power Syst. Res. 232, 110411 (2024).

12. Xie, H., Gao, S., Zheng, J. & Huang, X. A three-stage robust dispatch model considering the multi-uncertainties of electric vehicles and a multi-energy microgrid. Int. J. Electr. Power Energy Syst. 157, 109778 (2024).

13. Chen, L., He, H., Jing, R., Xie, M. & Ye, K. Energy management in integrated energy system with electric vehicles as mobile energy storage: An approach using bi-level deep reinforcement learning. Energy 307, 132757 (2024).

14. Wang, Y., Qiu, D., He, Y., Zhou, Q. & Strbac, G. Multi-agent reinforcement learning for electric vehicle decarbonized routing and scheduling. Energy 284, 129335 (2023).

15. Wang, Y., Qiu, D. & Strbac, G. Multi-agent deep reinforcement learning for resilience-driven routing and scheduling of mobile energy storage systems. Appl. Energy 310, 118575 (2022).

16. Liu, L., Huang, Z. & Xu, J. Multi-agent deep reinforcement learning based scheduling approach for mobile charging in internet of electric vehicles. IEEE Trans. Mob. Comput. 23, 10130–10145 (2024).

17. Masrur, H., Shafie-Khah, M., Hossain, M. J. & Senjyu, T. Multi-energy microgrids incorporating EV integration: optimal design and resilient operation. IEEE Trans. Smart Grid 13, 3508–3518 (2022).

18. Ebadat-Parast, M., Nazari, M. H. & Hosseinian, S. H. Distribution system resilience enhancement through resilience-oriented optimal scheduling of multi-microgrids considering normal and emergency conditions interlink utilizing multi-objective programming. Sustain. Cities Soc. 76, 103467 (2022).

19. Wang, W., Xiong, X., He, Y., Hu, J. & Chen, H. Scheduling of separable mobile energy storage systems with mobile generators and fuel tankers to boost distribution system resilience. IEEE Trans. Smart Grid 13, 443–457 (2022).

20. Gan, W., Wen, J., Yan, M., Zhou, Y. & Yao, W. Enhancing resilience with electric vehicles charging redispatching and vehicle-to-grid in traffic-electric networks. IEEE Trans. Ind. Appl. 60, 953–965 (2023).

21. Kong, L., Zhang, H., Xie, D. & Dai, N. Leveraging electric vehicles to enhance resilience of interconnected power-transportation system under natural hazards. IEEE Trans. Transp. Electrif. 11, 1126–1140 (2024).

22. Wen, J., Gan, W., Chu, C.-C., Jiang, L. & Luo, J. Robust resilience enhancement by EV charging infrastructure planning in coupled power distribution and transportation systems. IEEE Trans. Smart Grid 16, 491–504 (2024).

23. Lu, Z., Xu, X., Yan, Z. & Shahidehpour, M. Multistage robust optimization of routing and scheduling of mobile energy storage in coupled transportation and power distribution networks. IEEE Trans. Transp. Electrif. 8, 2583–2594 (2021).

24. Zhang, X., Wang, Z. & Lu, Z. Multi-objective load dispatch for microgrid with electric vehicles using modified gravitational search and particle swarm optimization algorithm. Appl. Energy 306, 118018 (2022).

25. Kumar, B. A. et al. Hybrid genetic algorithm-simulated annealing based electric vehicle charging station placement for optimizing distribution network resilience. Sci. Rep. 14, 7637 (2024).

26. Shaheen, H. I., Rashed, G. I., Yang, B. & Yang, J. Optimal electric vehicle charging and discharging scheduling using metaheuristic algorithms: V2G approach for cost reduction and grid support. J. Energy Stor. 90, 111816 (2024).

27. Abid, M. S. et al. A novel multi-objective optimization based multi-agent deep reinforcement learning approach for microgrid resources planning. Appl. Energy 353, 122029 (2024).

28. Alqahtani, M. & Hu, M. Dynamic energy scheduling and routing of multiple electric vehicles using deep reinforcement learning. Energy 244, 122626 (2022).

29. Gautam, M., Bhusal, N. & Ben-Idris, M. Postdisaster routing of movable energy resources for enhanced distribution system resilience: A deep reinforcement learning-based approach. IEEE Ind. Appl. Mag. 30, 63–76 (2023).

30. Ding, Y., Chen, X. & Wang, J. Deep reinforcement learning-based method for joint optimization of mobile energy storage systems and power grid with high renewable energy sources. Batteries 9, 219 (2023).

31. Zhao, C., Li, Y., Zhang, Q. & Ren, L. Low carbon economic energy management method in a microgrid based on enhanced D3QN algorithm with mixed penalty function. IEEE Trans. Sustain. Energy https://doi.org/10.1109/TSTE.2025.3528952 (2025).

32. Alqahtani, M., Scott, M. J. & Hu, M. Dynamic energy scheduling and routing of a large fleet of electric vehicles using multi-agent reinforcement learning. Comput. Ind. Eng. 169, 108180 (2022).

33. Mao, S., Jin, J. & Xu, Y. Routing and charging scheduling for EV battery swapping systems: Hypergraph-based heterogeneous multiagent deep reinforcement learning. IEEE Trans. Smart Grid 15, 4903–4916 (2024).

34. Sepehrzad, R., Khodadadi, A., Adinehpour, S. & Karimi, M. A multi-agent deep reinforcement learning paradigm to improve the robustness and resilience of grid connected electric vehicle charging stations against the destructive effects of cyber-attacks. Energy 307, 132669 (2024).

35. Sepehrzad, R., Faraji, M. J., Al-Durra, A. & Sadabadi, M. S. Enhancing cyber-resilience in electric vehicle charging stations: A multi-agent deep reinforcement learning approach. IEEE Trans. Intell. Transp. Syst. 25, 18049–18062 (2024).

36. Wu, X., Li, J., Su, C., Fan, J. & Xu, M. A deep reinforcement learning based hierarchical eco-driving strategy for connected and automated HEVs. IEEE Trans. Veh. Technol. 72, 13901–13916 (2023).

37. Qiu, D., Wang, Y., Zhang, T., Sun, M. & Strbac, G. Hybrid multiagent reinforcement learning for electric vehicle resilience control towards a low-carbon transition. IEEE Trans. Ind. Inform. 18, 8258–8269 (2022).

38. Shen, F. et al. Transactive energy based sequential load restoration of distribution systems with networked microgrids under uncertainty. IEEE Trans. Smart Grid 15, 2601–2613 (2023).

39. Du, Y. & Wu, D. Deep reinforcement learning from demonstrations to assist service restoration in islanded microgrids. IEEE Trans. Sustain. Energy 13, 1062–1072 (2022).

40. Sun, Y., Chen, Z., Li, Z., Tian, W. & Shahidehpour, M. EV charging schedule in coupled constrained networks of transportation and power system. IEEE Trans. Smart Grid 10, 4706–4716 (2018).

41. Hussain, A. & Musilek, P. Fairness and utilitarianism in allocating energy to EVs during power contingencies using modified division rules. IEEE Trans. Sustain. Energy 13, 1444–1456 (2022).

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 62302154 and Grant 62306108, in part by the Natural Science Foundation of Hubei Province under Grant 2024AFB882, in part by the National Natural Science Foundation of China Cultivation Project under Grant 2024pygpzk09, in part by the Doctoral Scientific Research Foundation of Hubei University of Technology under Grant XJ2022006701, in part by the Key Research and Development Program of Hubei Province under Grant 2023BEB024, and in part by the Open Project Funding of the Key Laboratory of Intelligent Sensing System and Security (Hubei University), Ministry of Education under Grant KLISSS202404.

Author information

Contributions

Yuxin Wu wrote the manuscript and performed the data analysis; Ting Cai performed the formal analysis; Xiaoli Li performed the validation.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wu, Y., Cai, T. & Li, X. Resilience driven EV coordination in multiple microgrids using distributed deep reinforcement learning. Sci Rep 15, 27038 (2025). https://doi.org/10.1038/s41598-025-12471-z

DOI: https://doi.org/10.1038/s41598-025-12471-z
