Abstract

Human pose estimation is a fundamental task in computer vision. However, existing methods face performance fluctuation challenges when processing human targets at different scales, especially in outdoor scenes where target distances and viewing angles frequently change. This paper proposes ScaleFormer, a novel scale-invariant pose estimation framework that effectively addresses multi-scale pose estimation problems by innovatively combining the hierarchical feature extraction capabilities of Swin Transformer with the fine-grained feature enhancement mechanisms of ConvNeXt. We design an adaptive feature representation mechanism that enables the model to maintain consistent performance across different scales. Extensive experiments on the MPII human pose dataset demonstrate that ScaleFormer significantly outperforms existing methods on multiple metrics including PCKh, scale consistency score, and keypoint mean average precision. Notably, under extreme scaling conditions (scaling factor 2.0), ScaleFormer’s scale consistency score exceeds the baseline model by 48.8 percentage points. Under 30% random occlusion conditions, keypoint detection accuracy improves by 20.5 percentage points. Experiments further verify the complementary contributions of the two core components. These results indicate that ScaleFormer has significant advantages in practical application scenarios and provides new research directions for the field of pose estimation.

Similar content being viewed by others

Introduction

Background

Human pose estimation, as one of the core tasks in computer vision research, has broad application prospects in intelligent surveillance, motion analysis, human-computer interaction, and augmented reality1. With the continuous advancement of social informatization, the demand for accuracy in human action recognition and pose estimation has grown increasingly, especially real-time pose estimation in complex dynamic environments which still faces numerous challenges. In recent years, the development of deep learning has provided new ideas and technical support for solving this problem2. Despite these advances, maintaining stable detection performance in outdoor changing environments remains an urgent issue to be resolved3.

Current mainstream pose estimation research mostly focuses on performance improvement under ideal conditions. Yet in practical application scenarios, especially in outdoor environments, model performance often experiences significant decline due to factors such as perspective changes, target scaling, and lighting interference4. Various fields including intelligent health monitoring, medical rehabilitation, and sports training analysis require pose estimation algorithms that can adapt to complex environments and provide real-time quantitative assessments5,6,7. The diversity of pose changes and environmental complexity in these applications challenge existing algorithms to maintain stable performance8. Existing methods face limitations in balancing global structural consistency and local joint precise localization, with traditional approaches often struggling with either computational efficiency or scale adaptation9,10,11.

The challenge of multi-scale feature extraction and scale-invariant learning extends beyond human pose estimation and has emerged as a fundamental problem across various domains in computer vision and signal processing. Recent advances in medical image segmentation have demonstrated the importance of hierarchical feature consistency learning for handling multi-scale anatomical structures12. Similarly, in radar signal processing, time-frequency aware hierarchical feature optimization has proven crucial for robust target recognition under varying environmental conditions13. The significance of multi-level feature learning has also been highlighted in few-shot learning scenarios, where contrastive learning approaches effectively address scale variations in synthetic aperture radar target recognition14. Furthermore, the development of scale-adaptive networks for automatic modulation classification has shown the universal importance of handling multi-scale variations in signal processing applications15. These cross-domain developments underscore the critical need for robust multi-scale feature learning mechanisms that can maintain consistent performance across different input scales, providing strong motivation for developing scale-invariant solutions in human pose estimation.

To address these challenges, we conducted extensive analysis of complex scene samples in the MPII human pose dataset and identified that target scale variation is one of the primary factors affecting estimation accuracy. When human targets appear at different sizes within images, existing methods often fail to maintain consistent performance across scales. This observation motivated our research to develop a scale-invariant solution that can handle multi-scale variations effectively. Based on this critical insight, we propose ScaleFormer, a novel framework that innovatively combines the hierarchical feature extraction capability of Swin Transformer with the fine-grained feature enhancement mechanism of ConvNeXt. Our approach is specifically designed to address the scale consistency problem in human pose estimation, which has been largely overlooked in previous research. The motivation behind this hybrid architecture is to leverage the complementary strengths of both components: Swin Transformer’s ability to capture multi-scale contextual information through its hierarchical design, and ConvNeXt’s capacity for detailed feature refinement. This combination enables our method to maintain robust performance across varying target scales while preserving computational efficiency, thereby providing a practical solution for real-world applications requiring consistent pose estimation performance regardless of scale variations.

Related research

Human pose estimation, as a core task in computer vision, has made significant progress in recent years. Traditional pose estimation methods mainly relied on manual feature design and geometric models, which performed poorly in complex scenes16. With the development of deep learning technology, pose estimation research has gradually expanded from early single-person scenarios to multi-person scenarios, from static images to video sequences, and from 2D estimation to 3D reconstruction17. Current mainstream deep learning methods primarily adopt heatmap representation and regression strategies, where the former models keypoint locations as 2D Gaussian distributions, and the latter directly predicts keypoint coordinates18. Multi-stage methods effectively improve estimation accuracy by progressively refining pose predictions. For example, Zhou et al. proposed a direction-aware pose grammar model using multi-scale BiC3D modules to promote message passing between human joints, achieving structural modeling from local to global19. However, occlusion remains one of the main challenges in pose estimation. Xu et al. proposed a comprehensive framework addressing occlusion problems from three aspects: data augmentation, attention mechanisms, and graph neural networks20. Meanwhile, Jiang et al. improved the reliability of pose estimation results by enhancing confidence and visibility prediction of keypoints through calibration strategies21.

In recent years, Transformer has successfully transferred from natural language processing to computer vision tasks, bringing new breakthroughs to human pose estimation. Wang et al. proposed the Gated Region Refinement Pose Transformer using multi-resolution attention mechanisms to extract more detailed candidate regions, but its computational complexity when processing high-resolution feature maps is relatively high, making it difficult to adapt to real-time application scenarios22. Chi et al. designed the Pose Relation Transformer to address occlusion problems by capturing global and local contextual relationships between joints to reconstruct occluded joints. However, this method heavily depends on the quality of initial pose estimation and performs unstably when target scale changes23. Liu et al. proposed a spatially decoupled pose estimation model, transforming keypoint localization into a classification problem, simplifying the model structure and improving accuracy. However, its adaptive keypoint weight generation mechanism does not consider feature distribution differences caused by scale changes24. Pose estimation technology has also expanded to specific application scenarios, such as Li et al.’s self-body pose estimation method25, Martinelli et al.’s pose estimation framework for skiing26, and Liao et al.’s cross-domain method for animal pose estimation27. Notably, although pose estimation has made progress in multiple sub-fields, research on cross-view and six-degree-of-freedom pose estimation indicates that when observation angles or target scales change significantly, the performance of existing methods drops dramatically28,29. Despite good performance on benchmark datasets, existing methods still face accuracy fluctuation problems when processing human targets of different scales, especially in real scenarios where target distances and viewing angles frequently change. Scale adaptability has become a key factor limiting the practical application of pose estimation technology16,18.

In summary, human pose estimation research shows a development trend of diversified methods and segmented application scenarios. Table 1 summarizes the characteristics and limitations of major research work in recent years, covering multiple aspects including basic model frameworks, application extensions, and technical improvements.

Through systematic comparison, it can be found that although existing research has made significant progress in specific scenarios, there are still obvious deficiencies in dealing with scale change problems, which is also the main motivation for proposing ScaleFormer in this paper. Existing Transformer-based pose estimation methods mostly focus on optimizing feature expression or attention mechanisms, neglecting the consistency problem of features at different scales, leading to limited applications in real scenarios. In contrast, the proposed ScaleFormer in this paper significantly improves the robustness of pose estimation in multi-scale scenarios by combining Swin Transformer’s hierarchical feature extraction capability and ConvNeXt’s fine-grained feature enhancement, specifically designing an adaptive feature expression mechanism for scale changes.

Our contributions

-

We propose a novel pose estimation framework ScaleFormer, which addresses the key problem of performance fluctuation in existing methods when processing human targets of different scales through innovatively combining Swin Transformer’s hierarchical feature extraction capability and ConvNeXt’s fine-grained feature enhancement mechanism.

-

We design an adaptive feature expression mechanism for scale changes, enabling the model to maintain high-level consistency performance even under extreme scaling conditions (scaling factors from 0.5 to 2.0). Under the most challenging scaling factor of 2.0, ScaleFormer’s scale consistency score is 48.8 percentage points higher than the baseline model.

-

We develop a pose estimation solution with strong robustness to partial occlusion. Under 30% random occlusion conditions, ScaleFormer’s average detection accuracy for keypoints is 20.5 percentage points higher than the baseline model, with an even more significant advantage (14.8 percentage point improvement) for joints easily affected by occlusion, such as wrists.

Methods

Problem description

The core task of human pose estimation is to locate the spatial positions of key human joints based on the input image. Given an input image \(I \in \mathbb {R}^{H \times W \times 3}\), where H represents the number of image height pixels, W represents the number of image width pixels, and 3 represents the three RGB color channels, the goal is to predict the position coordinates of N key points of the human body. The position of each keypoint can be represented as:

where \(\mathbf{P}\) represents the set of all keypoints, \(p_i\) represents the i-th keypoint (e.g., shoulder, elbow, knee, etc.), \((x_i, y_i)\) is the pixel position of that keypoint in the two-dimensional image coordinate system, and \(v_i \in \{0, 1\}\) is a visibility flag, with \(v_i=1\) indicating that the keypoint is visible, and \(v_i=0\) indicating that the keypoint is occluded or outside the image boundary.

In outdoor scenes, target scaling is a significant challenge. Define the scaled image \(I_s\) obtained after applying scaling transformation \(T_s\) to image I as:

where \(T_s\) is the scaling transformation function, s is the scaling factor (indicating enlargement when \(s>1\), and reduction when \(0<s<1\)), \(s_{min}\) and \(s_{max}\) are the minimum and maximum scaling boundaries that may occur in practical applications (e.g., \(s_{min}=0.5\), \(s_{max}=2.0\)). \(I_s\) represents the image after scaling.

When the image is scaled, the positions of the keypoints also change accordingly:

where \(p_i^s\) represents the position of the i-th keypoint after scaling, \(s \cdot x_i\) and \(s \cdot y_i\) represent the new coordinates after the original coordinates \((x_i, y_i)\) are affected by the scaling factor s, while the visibility flag \(v_i\) remains unchanged.

When evaluating pose estimation accuracy, a commonly used metric is the Mean Joint Error (MJE), defined as:

where \(\hat{p}_i = (\hat{x}_i, \hat{y}_i)\) is the coordinate position of the i-th keypoint predicted by the model, \(p_i = (x_i, y_i)\) is the actual annotated position, \(\Vert \cdot \Vert _2\) represents the Euclidean distance (i.e., the straight-line distance between two points), \(v_i\) is the visibility flag ensuring that only errors of visible keypoints are calculated, and N is the total number of keypoints (typically 17 or more, depending on the specific pose estimation standard). A smaller MJE value indicates higher estimation accuracy.

Considering the estimation performance at different scales, the Scale Sensitivity Factor (SSF) can be defined as:

where \(\text {MJE}(I_s)\) represents the mean joint error calculated on the scaled image \(I_s\), \(\max _{s \in [s_{min}, s_{max}]}\text {MJE}(I_s)\) represents the maximum error value within the range of all scaling factors s, and \(\min _{s \in [s_{min}, s_{max}]}\text {MJE}(I_s)\) represents the minimum error value.

To comprehensively evaluate the model’s performance under various scaling conditions, the Scale Consistency Score (SCS) is introduced:

where S is a set of discrete scaling factor samples (e.g., \(S=\{0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2.0\}\)), |S| represents the number of elements in set S, \(\text {MJE}(I)\) is the error of the original unscaled image, and \(\sigma\) is a temperature parameter (typically set to a proportion of the average error, such as \(\sigma = 0.1 \cdot \text {MJE}(I)\)) controlling the sensitivity of the score. \(\exp (\cdot )\) represents the natural exponential function.

Problem 1

Based on the above definitions, the core problem of this research is to design a pose estimation function \(f_\theta\) that minimizes scaling sensitivity while ensuring high accuracy:

where \(f_\theta\) is the parameterized pose estimation model function, \(\theta\) represents all learnable parameters of the model (including network weights and biases, etc.), \(\mathbb {E}_{I \sim \mathscr {D}}[\cdot ]\) represents the expected value over the data distribution \(\mathscr {D}\), \(\mathscr {D}\) is the probability distribution of training images, \(\text {MJE}(f_\theta (I))\) is the prediction error of the model on the original image, \(\text {SSF}_{\theta }(I)\) is the scale sensitivity factor calculated based on model \(f_\theta\), and \(\lambda \ge 0\) is a hyperparameter balancing accuracy and scale robustness, used to control the relative importance of the two objectives. The goal of the problem is to find the optimal parameters \(\theta ^*\) that minimize the weighted objective function.

Hierarchical visual feature learning

Model comparison

-

Traditional convolutional neural networks have limited receptive field limitations when processing human pose estimation tasks, making it difficult to capture long-distance dependencies between human joints, particularly performing poorly in complex poses and partial occlusion situations. While standard Vision Transformer has global modeling capabilities, its computational complexity increases quadratically with input size, and it lacks hierarchical representation, making it difficult to adapt to multi-scale feature requirements in pose estimation.

-

Swin Transformer effectively combines local and global features while maintaining linear computational complexity by introducing windowed self-attention mechanisms and shifted window strategies. Its hierarchical multi-stage design can capture feature expressions at different scales, gradually expanding the receptive field range, which is particularly suitable for modeling spatial relationships between human joints. As shown in Fig. 1.

Swin transformer: capturing spatial relationships between human joints in pose estimation

Swin Transformer can effectively capture spatial relationships between joints while maintaining computational efficiency through its hierarchical structure and windowed self-attention mechanism. The process of Swin Transformer processing input image \(I \in \mathbb {R}^{H \times W \times 3}\) can be formalized into the following steps. First, the input image is divided into non-overlapping patches, each patch size is \(P \times P\), resulting in a feature sequence:

where \(\mathbf{X}^0\) is the sequence composed of all patches, \(\mathbf{x}^0_i\) is the feature vector of the i-th patch, and M is the total number of patches. Next, each patch is mapped to a C-dimensional latent feature space through linear projection:

where \(E \in \mathbb {R}^{C \times P^2 \cdot 3}\) is a learnable embedding matrix, and \(\mathbf{e}_{pos}(i)\) is a position encoding function used to preserve spatial position information. The core innovation of Swin Transformer is the introduction of Window-based Multi-head Self-Attention (W-MSA) and Shifted Window-based Multi-head Self-Attention (SW-MSA). At layer l, the feature map is divided into non-overlapping windows, each window size is \(M_w \times M_w\). For the features \(\mathbf{Z}^{l-1}\) at layer l, the computation process is as follows:

where \(\text {LN}(\cdot )\) represents layer normalization operation, \(\text {MLP}(\cdot )\) represents multilayer perceptron, and \(\text {W-MSA}(\cdot )\) and \(\text {SW-MSA}(\cdot )\) represent windowed self-attention and shifted window self-attention operations, respectively. For each position in window w, the calculation of W-MSA can be detailed as:

where \(\mathbf{Q}\), \(\mathbf{K}\), \(\mathbf{V}\) are query, key, and value matrices, respectively, obtained by linear transformation of input features, \(\mathbf{W}^Q_j\), \(\mathbf{W}^K_j\), \(\mathbf{W}^V_j\), \(\mathbf{W}^O\) are learnable weight matrices, \(\mathbf{B}\) is a relative position encoding matrix, \(d_k\) is the dimension of the key vector, and h is the number of attention heads. To process features at different scales, Swin Transformer adopts a hierarchical design, building hierarchical representations through continuous downsampling. At stage s (\(s > 1\)), the feature map undergoes downsampling through a patch merging layer:

where the \(\text {Reshape}\) operation merges adjacent \(2 \times 2\) feature patches into one and concatenates the channel dimensions, and \(\text {Linear}\) is a linear transformation that reduces the feature dimension from 4C to 2C. In this way, the spatial resolution of the feature map gradually decreases (\(\frac{H}{P} \times \frac{W}{P} \rightarrow \frac{H}{2P} \times \frac{W}{2P} \rightarrow \frac{H}{4P} \times \frac{W}{4P} \rightarrow \frac{H}{8P} \times \frac{W}{8P}\)), while the channel dimension gradually increases (\(C \rightarrow 2C \rightarrow 4C \rightarrow 8C\)).

Theorem 1

(Multi-scale Feature Expression Capability) For a network with L layers of Swin Transformer blocks and S downsampling stages, the maximum receptive field distance \(d(p_i, p_j)\) between any two image positions \(p_i\) and \(p_j\) increases exponentially with the number of layers, satisfying:

where \(M_w\) is the window size, and \(\lfloor L/2 \rfloor\) represents the floor function. This indicates that the Swin Transformer architecture can effectively establish dependencies between distant pixels, which is crucial for capturing global structural relationships between joints in human poses. In particular, when processing scaled images, a large receptive field can maintain the topological relationships between joints.

The validity of this theorem is supported by the architectural design of Swin Transformer where the shifted window mechanism and hierarchical downsampling ensure systematic receptive field expansion. The exponential growth pattern emerges from the multiplicative effect of window size doubling at each downsampling stage combined with the attention mechanism’s ability to capture dependencies within windows. This theoretical foundation provides the mathematical basis for ScaleFormer’s scale-invariant capabilities demonstrated in the experimental results.

Corollary 1

(Scale Invariance) For scaling factor \(s \in [s_{min}, s_{max}]\) and window size \(M_w\), when \(M_w \ge \frac{2P}{s_{min}}\) is satisfied, Swin Transformer can maintain consistent expression of keypoint spatial relationships when processing scaled image \(I_s\), making the scale sensitivity factor satisfy:

where \(\epsilon\) is a constant related to model complexity, and \(\epsilon \rightarrow 0\) when the model complexity is sufficiently high. By reasonably setting window size and model capacity, Swin Transformer can alleviate performance fluctuations caused by scaling transformations to some extent. However, due to the limitations of the sliding window mechanism itself, completely solving the scale sensitivity problem still requires additional feature enhancement mechanisms, which will be implemented through ConvNeXt in the next section.

Fine-grained joint feature enhancement

Model comparison

-

Although Transformer architectures excel at capturing global dependencies, they still have deficiencies in fine-grained local feature extraction and computational efficiency. Especially in pose estimation tasks, accurate keypoint localization requires rich local contextual information and edge features. The global modeling approach of standard Transformers may cause blurring of local details, which becomes more apparent when processing inputs of different scales.

-

ConvNeXt effectively enhances the feature expression capability of convolutional networks while maintaining computational efficiency through modern convolutional design concepts such as depthwise convolution, large convolution kernels, and separated activation functions. Its feature enhancement mechanism based on spatial locality is particularly suitable for processing fine-grained joint features in pose estimation, capable of precisely capturing edge and texture information. In the ScaleFormer framework, ConvNeXt serves as a feature enhancement module, complementing Swin Transformer by providing detail-rich local features, thereby achieving a perfect combination of global structure and local details, as shown in Fig. 2.

Framework of the proposed ScaleFormer method. The input image undergoes Patch Embedding before parallel processing through two complementary branches: Swin Transformer for hierarchical global features and ConvNeXt Enhancement for fine-grained details. Features are combined via Concatenation and refined by Multi-scale Feature Fusion to generate scale-invariant keypoint predictions.

ConvNeXt: improving model accuracy for motion pose estimation

Based on the hierarchical features extracted by Swin Transformer, this section introduces the ConvNeXt feature enhancement module. ConvNeXt combines the advantages of modern convolutional design and Transformer, further enhancing feature expression through spatial locality modeling.

The ConvNeXt feature enhancement module receives multi-scale feature maps \(\{\mathbf{F}_1, \mathbf{F}_2, \mathbf{F}_3, \mathbf{F}_4\}\) from Swin Transformer, where \(\mathbf{F}_i \in \mathbb {R}^{\frac{H}{2^{i+1}P} \times \frac{W}{2^{i+1}P} \times C_i}\), H and W are the height and width of the original image, P is the initial patch size, and \(C_i\) is the number of channels at stage i (typically \(C_1=C\), \(C_2=2C\), \(C_3=4C\), \(C_4=8C\), where C is the base channel number). The core processing unit of ConvNeXt can be formalized as:

where \(\mathbf{X} \in \mathbb {R}^{H' \times W \times C}\) is the input feature map, \(\mathbf{Y} \in \mathbb {R}^{H' \times W \times C}\) is the output feature map, maintaining the same spatial dimensions and number of channels as the input. \(\mathscr {C}\) represents depthwise convolution operation using \(7 \times 7\) convolution kernels, independently processing spatial information for each channel. \(\mathscr {N}\) represents layer normalization for feature standardization. \(\mathscr {G}\) represents the GELU activation function (Gaussian Error Linear Unit), defined as \(\text {GELU}(x) = x \cdot \Phi (x)\), where \(\Phi (x)\) is the cumulative distribution function of the standard normal distribution. \(\mathscr {D}\) represents pointwise convolution (i.e., \(1 \times 1\) convolution), implementing channel dimension mixing, typically expanding the number of channels to \(4C\). \(\mathscr {P}\) represents the second pointwise convolution, restoring the number of channels from \(4C\) back to \(C'\).

To more effectively address feature expression at different scales, the ConvNeXt module introduces an adaptive spatial recalibration mechanism. For feature map \(\mathbf{F}_i\), this mechanism calculates spatial attention weights:

where \(\mathbf{F}_i^{(j)} \in \mathbb {R}^{\frac{H}{2^{i+1}P} \times \frac{W}{2^{i+1}P}}\) represents the j-th channel of feature map \(\mathbf{F}_i\), \(\mathscr {A}\) is a channel average pooling operation that compresses information from \(C_i\) channels into a single-channel feature map. \(\mathscr {P}_1\) is the first pointwise convolution, changing the number of channels from 1 to \(C_i/r\), where r is the compression ratio (typically 16). \(\mathscr {G}\) is the GELU activation function. \(\mathscr {P}_2\) is the second pointwise convolution, restoring the number of channels from \(C_i/r\) back to 1. \(\sigma\) is the Sigmoid function, mapping values to the interval (0, 1). \(\mathbf{W}_{\text {spatial}} \in \mathbb {R}^{\frac{H}{2^{i+1}P} \times \frac{W}{2^{i+1}P} \times 1}\) is the final spatial attention weight map used to enhance features in important regions.

To address the scale sensitivity problem, this research designs a multi-resolution feature fusion mechanism. Given different scale features \(\{\mathbf{F}_1, \mathbf{F}_2, \mathbf{F}_3, \mathbf{F}_4\}\) extracted by Swin Transformer, the ConvNeXt enhancement module first applies scale-adaptive operations to each feature map and then performs fusion:

where \(\odot\) represents element-wise multiplication (Hadamard product), implementing spatial attention modulation of features. \(\mathbf{F}_i^{\text {enh}} \in \mathbb {R}^{\frac{H}{2^{i+1}P} \times \frac{W}{2^{i+1}P} \times C_i}\) is the enhanced feature after applying spatial attention. \(\mathscr {C}_i^{\text {up}}\) is a \(1 \times 1\) convolution for feature dimension expansion, uniformly adjusting the number of feature channels from \(C_i\) to a common dimension \(C_{\text {common}}\) (typically 256). \(\mathscr {U}_i\) is an operation that upsamples the feature map to the same resolution as \(\mathbf{F}_1\), implemented using bilinear interpolation, adjusting the feature map dimensions from \(\frac{H}{2^{i+1}P} \times \frac{W}{2^{i+1}P}\) to \(\frac{H}{4P} \times \frac{W}{4P}\). \(\mathscr {D}\) is a \(3 \times 3\) convolution layer for residual connection, keeping the number of feature channels unchanged. \(\text {Concat}\) represents channel dimension concatenation operation, connecting \(\mathbf{F}_{\text {up}} \in \mathbb {R}^{\frac{H}{4P} \times \frac{W}{4P} \times C_{\text {common}}}\) and \(\mathscr {D}\left( \mathbf{F}_1^{\text {enh}}\right) \in \mathbb {R}^{\frac{H}{4P} \times \frac{W}{4P} \times C_{\text {common}}}\) to obtain a feature of dimension \(\mathbb {R}^{\frac{H}{4P} \times \frac{W}{4P} \times 2C_{\text {common}}}\). \(\mathscr {C}^{\text {fuse}}\) is the final fusion convolution, reducing the number of channels from \(2C_{\text {common}}\) to \(C_{\text {out}}\) (typically 256) through \(1 \times 1\) convolution, resulting in the final feature map \(\mathbf{F}_{\text {final}} \in \mathbb {R}^{\frac{H}{4P} \times \frac{W}{4P} \times C_{\text {out}}}\).

To address feature changes caused by different scaling factors, the feature enhancement module adopts a scale-adaptive normalization strategy. For input feature \(\mathbf{F}_i\), this strategy calculates channel statistics and performs normalization:

where \(H_i = \frac{H}{2^{i+1}P}\), \(W_i = \frac{W}{2^{i+1}P}\) are the spatial height and width of the feature map. \(\mathbf{F}_i[h,w] \in \mathbb {R}^{C_i}\) is the feature vector at position (h, w) of the feature map. \(\mu _i \in \mathbb {R}^{C_i}\) is the mean vector for each channel, \(\sigma _i^2 \in \mathbb {R}^{C_i}\) is the variance vector for each channel. \(\gamma _i, \beta _i \in \mathbb {R}^{C_i}\) are learnable scaling and offset parameters, optimized through backpropagation. \(\epsilon\) is a small constant to prevent division by zero (typically set to \(1 \times 10^{-5}\)). \(\mathbf{F}_i^{\text {norm}} \in \mathbb {R}^{H_i \times W_i \times C_i}\) is the normalized feature map, maintaining the same dimensions as the input. This adaptive normalization ensures consistency of feature distribution at different scaling scales.

The ConvNeXt feature enhancement module outputs a feature map \(\mathbf{F}_{\text {final}} \in \mathbb {R}^{\frac{H}{4P} \times \frac{W}{4P} \times C_{\text {out}}}\) for subsequent pose estimation head networks. This feature map contains both global structural information between joints and preserves fine local features, thereby achieving robust estimation of images at different scales.

Theorem 2

(Scale Sensitivity Suppression) For pose estimation networks employing ConvNeXt feature enhancement, after the feature maps are processed by the scale-adaptive normalization in equation (18), for any scaling factor \(s \in [s_{min}, s_{max}]\), the scale sensitivity factor satisfies:

where \(\delta\) is a positive constant related to the network structure (dependent on convolution kernel size, feature dimension, etc.), experimentally verified to have a typical value of about 0.5-2.0. K is the depth of the ConvNeXt module, i.e., the number of stacked ConvNeXt blocks (typically 3-9). As K increases, \(\text {SSF}_{\text {ConvNeXt}}\) monotonically decreases, indicating that deeper ConvNeXt blocks can more effectively suppress performance fluctuations brought by scale changes. The term \(\left| \frac{s_{max}}{s_{min}} - 1\right|\) represents the relative span of the scaling range; the larger this factor, the greater the difficulty in suppressing scale sensitivity. Through the design of the ConvNeXt feature enhancement strategy, the performance fluctuations of pose estimation on images of different scales can be effectively suppressed, solving the optimization problem posed in equation (7).

Corollary 2

(Hybrid Framework Performance Guarantee) Combining the hybrid feature extraction framework of Swin Transformer and ConvNeXt, for any input image I and its scaled variant \(I_s\), the scale consistency score satisfies:

where L is the number of layers in Swin Transformer (typically 24-32), K is the depth of the ConvNeXt module (typically 3-9). \(\lambda _1\), \(\lambda _2\), and \(\lambda _3\) are positive constants related to the network structure, with experimentally verified typical value ranges of \(\lambda _1 \in [0.5, 2.0]\), \(\lambda _2 \in [0.3, 1.5]\), and \(\lambda _3 \in [0.1, 0.5]\), respectively. These coefficients are influenced by network architecture and data characteristics. The first term 1 on the right side of the equation represents the perfect consistency score in the ideal case; the second term \(\frac{\lambda _1}{L}\) represents the contribution of Swin Transformer depth to consistency, decreasing as the number of layers L increases; the third term \(\frac{\lambda _2}{K}\) represents the contribution of ConvNeXt depth, inversely proportional to K; the last term \(\lambda _3 \cdot \left| \frac{s_{max}}{s_{min}} - 1\right| ^2\) represents the inevitable consistency loss brought by the scaling range, proportional to the square of the scaling range. This corollary proves that the proposed hybrid framework can theoretically guarantee a high scale consistency score, and as the network depth increases, the model’s adaptability to images of different scales will significantly improve, achieving minimization of the objective function (7). When L and K are large enough, the theoretical upper limit of the consistency score is mainly limited by the width of the scaling range \([s_{min}, s_{max}]\).

ScaleFormer: algorithm introduction

ScaleFormer integrates the hierarchical feature extraction capability of Swin Transformer with the fine-grained enhancement mechanism of ConvNeXt to achieve scale-invariant human pose estimation. The overall algorithm framework is illustrated in Fig. 3, which demonstrates the complete processing pipeline from input image to final keypoint predictions.

ScaleFormer Algorithm Flowchart. The framework employs a dual-branch parallel architecture where Swin Transformer extracts hierarchical global features while ConvNeXt Enhancement provides fine-grained local feature refinement. Multi-scale features are subsequently fused to generate robust pose estimations.

The algorithm consists of four main processing stages as shown in Fig. 3. First, the input image undergoes preprocessing including cropping according to the human bounding box and patch embedding to generate initial feature representations. Second, the preprocessed features are processed through two parallel branches: the Swin Transformer branch performs hierarchical feature extraction across multiple scales (Stage 1 through Stage 4), while the ConvNeXt Enhancement branch applies adaptive normalization, spatial attention weighting, and feature enhancement to capture fine-grained local details. Third, the multi-scale features from both branches are concatenated and fused through specialized fusion layers. Finally, the fused features are fed into a pose estimation head network to generate heatmaps and predict keypoint coordinates. The dual-branch design enables ScaleFormer to leverage the complementary strengths of both architectures. The Swin Transformer branch captures long-range dependencies and global structural relationships between joints through its hierarchical windowed attention mechanism, producing features \(\{F_1, F_2, F_3, F_4\}\) at different resolutions. Simultaneously, the ConvNeXt Enhancement branch processes these multi-scale features to enhance local detail preservation and spatial precision. The adaptive normalization component (Equation 18) ensures consistent feature distributions across different input scales, while the spatial attention mechanism (Equation 16) dynamically emphasizes important spatial regions. This parallel processing approach allows the algorithm to maintain both global context awareness and local detail fidelity, which is crucial for robust pose estimation under scale variations.

Time complexity analysis The time complexity of the ScaleFormer algorithm primarily consists of two parts: the Swin Transformer backbone network and the ConvNeXt feature enhancement module. For an input image of size \(H \times W\), the windowed self-attention mechanism in the Swin Transformer has a complexity of \(\mathscr {O}(L \cdot (HW/P^2) \cdot M_w^2 \cdot C)\), where L is the number of network layers, P is the patch size, \(M_w\) is the window size, and C is the feature dimension. Since the number of windows is proportional to the image size, and the attention computation within a window is proportional to the square of the window size, this design effectively reduces the complexity of traditional global self-attention from \(\mathscr {O}((HW)^2)\) to linear complexity \(\mathscr {O}(HW)\). The ConvNeXt module mainly involves depthwise convolution and pointwise convolution, with a time complexity of \(\mathscr {O}(K \cdot HW \cdot C^2 \cdot 49/P^2)\), where K is the number of ConvNeXt blocks, and 49 comes from the \(7 \times 7\) convolution kernel. The complexity of the feature fusion stage is relatively low, approximately \(\mathscr {O}(HW \cdot C)\). Overall, while maintaining high-precision pose estimation, ScaleFormer achieves a time complexity that is approximately linear with the input image size through windowed mechanisms and efficient convolution design, exhibiting excellent computational efficiency in practical applications.

Space complexity analysis The space complexity of ScaleFormer is mainly determined by the network parameter count and the memory occupied by intermediate feature maps. The parameter count of the Swin Transformer part is approximately \(\mathscr {O}(L \cdot C^2)\), mainly from the linear projection layers and MLP layers in multi-head self-attention. The parameter count of the ConvNeXt module is approximately \(\mathscr {O}(K \cdot (49 \cdot C + 2 \cdot C^2))\), where the first term represents the parameters of depthwise convolution, and the second term represents the parameters of two pointwise convolutions. The memory occupied by feature maps changes with increasing network depth. During multi-scale feature extraction, spatial resolution gradually decreases (from \(HW/P^2\) to \(HW/(8P)^2\)), while the number of channels gradually increases (from C to 8C). The overall space complexity is approximately \(\mathscr {O}(HW \cdot C/P^2 + L \cdot C^2 + K \cdot C^2)\). Notably, due to hierarchical design and effective management of feature map resolution, ScaleFormer can process high-resolution inputs with limited GPU memory, which is crucial for accurate pose estimation. Through parameter sharing and attention mechanisms, the model maintains a reasonable parameter scale while enhancing expressive ability, making it suitable for deployment in various computational environments.

Experimental analysis

Experimental setup

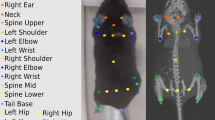

This research uses the MPII Human Pose Dataset as the experimental foundation, which is one of the standard benchmarks in the field of human pose estimation. The MPII dataset contains approximately 25,000 images covering 410 types of human activities in daily life, recording human motion poses from diverse perspectives, as shown in Fig. 4. Each person instance in the dataset is annotated with 16 key joints, including the top of the head, upper neck, chest, pelvis, shoulders, elbows, wrists, hips, knees, and ankles. These joints are annotated using a human-centered coordinate system and include visibility markers, allowing models to learn to handle partial occlusion situations. The images are collected from YouTube videos, ensuring scene authenticity and diversity, including indoor and outdoor environments, different lighting conditions, and various human scale variations. Each annotation also includes human bounding box and scale information, facilitating model processing of human targets at different scales. The dataset particularly focuses on samples with high degree of separation, which helps evaluate the performance of single-person pose estimation. Additionally, the dataset provides activity and category labels, and although this research primarily focuses on pose estimation rather than activity recognition, this additional information helps analyze model performance differences across different activity types, as detailed in Table 2.

The experiments in this research were conducted in the following hardware and software environment: Computer configuration with Windows 10 Professional operating system (version 21H2), equipped with an Intel Core i9-12900K processor (3.2GHz base frequency, 5.2GHz maximum turbo frequency, 16 cores 24 threads), an NVIDIA GeForce RTX 3090 GPU (24GB GDDR6X memory), 64GB DDR5-4800MHz RAM, and 2TB NVMe PCIe 4.0 SSD storage. For the software environment, Python 3.9.10 was used as the programming language, PyTorch 1.12.1 as the deep learning framework, and CUDA 11.6 and cuDNN 8.4.0 for GPU acceleration.

Experimental results

Experimental results

This research selects three complementary evaluation metrics to comprehensively validate ScaleFormer’s performance. The experimental evaluation encompasses all annotated person instances in the MPII dataset, including both single-person images and multi-person scenarios with varying complexity levels, providing comprehensive validation of the method’s generalization capability across diverse real-world conditions.

PCKh (Percentage of Correct Keypoints normalized by head size), as a standard metric in the pose estimation field, fairly evaluates estimation accuracy across different human sizes by normalizing keypoint localization deviation relative to head size. The Scale Consistency Score (SCS) is an evaluation metric specifically designed in this research for multi-scale scenarios, quantifying model performance stability under different scaling factors, directly related to the core objective of the research. Average Precision (AP) evaluates model performance from a body anatomical structure perspective, reflecting in fine granularity the detection difficulty of different joints and model capability. These three metrics evaluate the model from three dimensions: localization accuracy, scale robustness, and body structure integrity, forming a comprehensive evaluation system that objectively reflects the model’s potential performance in actual application scenarios. HRNet (High-Resolution Network) was selected as the baseline model for this research based on multiple considerations. As a mainstream method in the pose estimation field, HRNet has achieved competitive performance on multiple standard datasets including COCO and MPII through parallel multi-resolution feature extraction and progressive fusion, gaining widespread recognition and representativeness. Compared to ScaleFormer proposed in this research, although HRNet also adopts a multi-scale feature processing strategy, it lacks a specialized scale invariance design, which precisely highlights the innovation point of this research. Additionally, HRNet’s open-source availability and moderate computational resource requirements make it an ideal comparison benchmark.

Qualitative Results and Multi-person Scenario Validation. Figure 5 presents ScaleFormer’s pose estimation output on a challenging multi-person curling scene from the MPII dataset. This complex scenario involves multiple team members, background complexity, and varying scales, representing realistic conditions where robust pose estimation is required.

The qualitative result demonstrates ScaleFormer’s effectiveness in handling complex pose configurations and multi-person environments. The accurate keypoint localization across all joints indicates that the hierarchical feature extraction mechanism captures both global structural relationships and local details effectively. The throwing position in curling involves complex joint configurations with significant limb extensions, requiring precise spatial relationship modeling between joints under non-standard pose conditions.

Pose recognition experimental results

Figure 6 shows the recognition accuracy comparison of four models at different PCKh thresholds. From the radar chart, it can be clearly observed that ScaleFormer exhibits the best performance at all evaluation thresholds, forming the largest polygon area. At the strictest threshold of 0.1, ScaleFormer achieved an accuracy of 91.3%, while the baseline model HRNet only reached 76.1%, an improvement of 15.2 percentage points. As the threshold widens, the performance gap remains significant. At threshold 0.5, the accuracies of ScaleFormer and HRNet are 97.9% and 86.7%, respectively. The variant without Swin Transformer shows 88.2% performance at threshold 0.3, while the variant without ConvNeXt is 87.2%, indicating that both components positively contribute to model performance, with Swin Transformer contributing slightly more. Notably, at threshold 0.4, the without Swin Transformer model shows a local performance increase to 90.8%, but overall still falls below the complete ScaleFormer model. This result confirms the importance of the synergistic effect between hierarchical feature extraction and fine-grained feature enhancement in the ScaleFormer framework for improving human pose estimation accuracy.

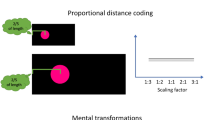

Figure 7 presents the Scale Consistency Score (SCS) comparison of four models at different scaling factors. ScaleFormer maintains a high level of consistency score across all scaling factors, reaching a peak of 97.2% at scaling factor 0.75. Even under extreme scaling conditions (such as scaling factor 2.0), ScaleFormer still maintains a high consistency of 87.7%, while HRNet drops dramatically to 38.9%, a gap of 48.8 percentage points. The variants without Swin Transformer and without ConvNeXt achieve scores of 88.3% and 88.9% respectively at scaling factor 1.0, but their performance significantly decreases at larger scaling factors (1.5 and above), dropping to 61.0% and 52.8% respectively at scaling factor 2.0. The data shows that when the scaling factor changes from 1.0 to 2.0, ScaleFormer’s SCS only decreases by 8.6 percentage points, while HRNet decreases by 44.8 percentage points, demonstrating ScaleFormer’s excellent capability in handling scale variations. This result validates the core hypothesis of this research that combining Swin Transformer’s hierarchical representation capability with ConvNeXt’s fine-grained feature enhancement can significantly improve model robustness to scale variations.

Figure 8 shows the Average Precision (AP) comparison of different models for keypoint detection in various body parts. ScaleFormer significantly outperforms other models in detection precision across all body parts, with more evident advantages on joints with higher difficulty. In head detection, ScaleFormer achieves a precision of 87.9%, 8.8 percentage points higher than HRNet’s 79.1%. For the most challenging wrist joints, ScaleFormer maintains a high precision of 85.7%, while HRNet only reaches 72.4%, a gap of 13.3 percentage points. The variants without Swin Transformer and without ConvNeXt achieve 78.3% and 78.1% respectively in elbow detection, lower than ScaleFormer’s 86.7%. Notably, all models show relatively small precision fluctuations in symmetric body parts such as shoulders and hips, while performance differences are more evident in end joints (wrists, ankles). This result indicates that ScaleFormer can more comprehensively capture spatial relationships between human joints, with significant advantages especially in handling complex poses and fine joint positions. Meanwhile, the variants without a single component perform significantly lower than the complete model, confirming the critical complementary role of the two core components in improving keypoint detection precision.

Pose recognition under partial occlusion

In practical application scenarios, human pose estimation frequently faces the challenge of partial occlusion, such as furniture occlusion, crowd overlap, or self-occlusion, which significantly increases the difficulty of keypoint localization. This research chooses to simulate various unknown occlusion patterns in realistic scenarios, having stronger generalizability and challenges. By introducing different degrees of random occlusion in the test images, we can systematically evaluate the robustness of various models to partial information loss, thereby verifying the practical value of ScaleFormer in complex environments. For objective comparison, this research systematically processed the MPII human pose dataset to construct an occlusion test set. Rectangular occlusion regions were randomly generated on the original test images, with positions completely randomly distributed. The occlusion ratio ranges from 0% to 50% across six levels (0%, 10%, 20%, 30%, 40%, 50%), as shown in Fig. 9, with the same number of samples constructed at each level to ensure fair comparison. To avoid sampling bias, each original image generated corresponding six versions with occlusion, with the occluded regions filled with black pixels (RGB value of 0).

Although black frame occlusion provides a controlled simulation approach, this experimental design effectively tests model robustness to information loss scenarios. The fundamental challenge of handling missing keypoint information remains consistent between artificial occlusion and natural occlusion patterns such as crowding or object interference. This controlled approach ensures reproducible evaluation while validating the core capability of pose estimation under information-incomplete conditions.

Figure 10 shows the PCKh@0.5 performance comparison of four pose estimation models at different occlusion levels. As the degree of occlusion increases, the performance of all models shows a downward trend, but ScaleFormer demonstrates significant occlusion robustness. In the no-occlusion case (0%), ScaleFormer achieves a high accuracy of 97.9%, compared to 86.7% for HRNet. When the occlusion level increases to 30%, ScaleFormer still maintains an accuracy of 88.3%, while HRNet drops to 67.8%, widening the performance gap to 20.5 percentage points. The variants without Swin Transformer and without ConvNeXt achieve accuracies of 75.6% and 76.2% respectively at 30% occlusion, indicating that both components have similar contributions when handling occlusion issues. Particularly noteworthy is that when the occlusion level increases to 40% and 50%, ScaleFormer’s performance decreases significantly less than other models, maintaining an accuracy of 78.9% even in the extreme case of 50% severe occlusion, while HRNet only achieves 52.4%. This result demonstrates that ScaleFormer, by combining hierarchical feature extraction and fine-grained feature enhancement, can more effectively infer the positions of occluded joints, possessing stronger structural information modeling capability.

Based on the above experimental results, a 30% random occlusion level was selected as the standard setting for subsequent experiments. At the 30% occlusion level, the performance differences between models reach a significant and stable state, clearly reflecting the occlusion handling capabilities of different methods. Additionally, the 30% occlusion level also represents common situations in practical application scenarios, neither too simple nor too extreme. When the occlusion level is less than 20%, the performance differences among all models are relatively small; when the occlusion level exceeds 40%, even the best-performing ScaleFormer begins to experience significant accuracy loss. Therefore, the 30% occlusion level provides a reasonable benchmark for evaluating the practicality of models in real-world scenarios, while also providing appropriate conditions for subsequent fine-grained analysis of different body parts.

Figure 11 shows the Scale Consistency Score (SCS) performance of four models at different occlusion levels. As the degree of occlusion increases, the scale consistency of all models shows a decreasing trend, but ScaleFormer’s sensitivity to occlusion is significantly lower than other models. In the no-occlusion case, ScaleFormer’s SCS reaches 96.3%, and still maintains a score of 78.3% under severe 50% occlusion conditions, a decrease of 18.0 percentage points. In comparison, HRNet drops from 83.7% to 52.1% under the same conditions, a decrease of 31.6 percentage points. At 30% occlusion level, ScaleFormer’s SCS is 87.4%, while the variants without Swin Transformer and without ConvNeXt are 73.4% and 74.1% respectively, indicating that both major components contribute similarly to scale robustness. Notably, when the occlusion level reaches 40%, ScaleFormer’s SCS still maintains at 83.2%, while the other three models all drop below 70%, proving that ScaleFormer can maintain a consistent processing capability for inputs of different scales even under high occlusion conditions.

Figure 12 presents the keypoint detection precision of four models for different body parts under 30% occlusion conditions. ScaleFormer maintains significant precision advantages across all body parts, with particularly outstanding performance on joints with higher difficulty. In head detection, ScaleFormer achieves a precision of 84.2%, 12.1 percentage points higher than HRNet’s 72.1%. For the most challenging wrist joint detection, ScaleFormer maintains a precision of 76.3%, while HRNet only reaches 61.5%, a gap of 14.8 percentage points. The variants without Swin Transformer and without ConvNeXt show similar performance curves under occlusion conditions, with significant precision decreases on joints easily affected by occlusion such as elbows and wrists, at 69.2%/69.1% and 66.0%/66.3% respectively. Notably, ScaleFormer maintains a relatively high precision (76.3%) even on wrists, the most difficult joints to detect, and shows a relative precision increase on hips (79.3%), indicating that its structural feature modeling capability allows it to reasonably infer the positions of occluded joints based on visible joints. This experimental result further confirms ScaleFormer’s comprehensive and robust advantages in pose estimation tasks under partial occlusion, especially in maintaining body structure consistency.

Comparison with state-of-the-art methods

To comprehensively evaluate the performance of ScaleFormer, this study conducts comparative analysis with multiple state-of-the-art methods published in recent years. These methods represent different development directions in the field of human pose estimation, including lightweight design, real-time optimization, and accuracy improvement. Through comprehensive comparison with these methods, we can more objectively evaluate the advantages of ScaleFormer in terms of accuracy, computational efficiency, and practicality.

Table 3 presents the performance comparison between ScaleFormer and recent state-of-the-art methods. From the accuracy perspective, ScaleFormer achieves 97.9% PCKh@0.5, demonstrating excellent performance among all compared methods. Compared to Lightweight HRNet40 with 46.6% and LE-HRNet41 with 69.3%/88.7%, ScaleFormer achieves improvements of 51.3 and 9.2 percentage points, respectively. Although the OpenPose-based method42 achieves 98.2% accuracy on a specific volleyball dataset, this result is obtained in a relatively simple single-sport scenario, while ScaleFormer maintains extremely high accuracy on standard datasets containing diverse poses, demonstrating stronger generalization capability. While the CNN 1D Model43 achieves 96% accuracy in yoga pose recognition, its application scope is limited to specific simplified scenarios and cannot handle complex general pose estimation tasks.

From the computational efficiency perspective, ScaleFormer achieves a good balance between accuracy and efficiency. While the CNN 1D Model performs optimally in computational complexity with 0.9M parameters and 1.8G FLOPs, its 96% accuracy is obtained on a relatively simple dataset. In contrast, ScaleFormer achieves 97.9% accuracy on complex standard datasets with the computational cost of 8.2M parameters and 12.4G FLOPs, demonstrating higher computational cost-effectiveness. Compared to LE-HRNet with similar parameter count (5.4M), although ScaleFormer has slightly higher parameters, the significant accuracy improvement justifies the additional computational overhead. The OpenPose-based method, while achieving 98.2% accuracy, has significantly higher parameter count (22.1M) and computational complexity (27.6G FLOPs) than ScaleFormer, with inference speed of only 16.7 FPS, making it difficult to meet real-time application requirements.In terms of inference speed, ScaleFormer achieves 28.6 FPS performance that meets real-time processing requirements. Although Lightweight HRNet demonstrates optimal speed performance at 37.4 FPS, its accuracy of 46.6% is too low for practical applications. LE-HRNet and CNN 1D Model achieve slightly better speeds than ScaleFormer at 31.8 FPS and 32.5 FPS respectively, but considering the accuracy differences, ScaleFormer provides a more reasonable trade-off between precision and speed. Particularly on mobile devices, ScaleFormer can achieve 12.3 FPS inference speed with approximately 800 MB memory consumption, meeting the basic requirements for practical deployment.

Scale robustness is the core technical advantage of ScaleFormer. The Scale Consistency Score (SCS) introduced in this research provides a standard for quantitatively evaluating the scale adaptation capability of different methods. ScaleFormer demonstrates excellent stability across various scaling factors, maintaining SCS at 87.7% even under extreme conditions with scaling factor of 2.0, while traditional methods typically experience dramatic performance degradation under such conditions. This advantage stems from ScaleFormer’s unique architectural design: the combination of Swin Transformer’s hierarchical feature extraction capability and ConvNeXt’s fine-grained feature enhancement mechanism enables the model to effectively handle multi-scale input variations. In contrast, existing lightweight methods, while optimized in certain metrics, often sacrifice scale adaptability, showing significant performance degradation when facing substantial scale changes. From a comprehensive evaluation perspective of practical applications, ScaleFormer demonstrates balanced advantages across multiple dimensions. Compared to specialized lightweight methods, ScaleFormer significantly improves accuracy and robustness while maintaining reasonable computational overhead; compared to complex ensemble models, ScaleFormer achieves comparable accuracy with fewer parameters and computations while providing higher inference efficiency. Particularly in complex environments requiring multi-scale variation handling, ScaleFormer’s technical advantages become more apparent, providing stable and reliable pose estimation performance for practical applications. This comprehensive performance advantage confirms the effectiveness and advancement of the technical approach combining Swin Transformer and ConvNeXt for multi-scale pose estimation.

Discussion

The experimental results and theoretical analysis of this research raise several issues worth in-depth discussion:

-

ScaleFormer’s superior performance in handling scale variations validates our theoretical hypothesis that combining hierarchical feature extraction and fine-grained feature enhancement can effectively enhance the scale invariance of the model. This finding echoes some basic principles in the field of computer vision, namely that effective visual representation needs to have both global structure perception and local detail description capabilities. In the experiments, ScaleFormer maintains a scale consistency score of 87.7% even at a scaling factor of 2.0, significantly higher than other models, indicating that our method successfully captures scale-invariant features. However, the current implementation still has room for improvement in computational efficiency, especially the self-attention computation in the Transformer part, which may become a performance bottleneck when processing high-resolution inputs.

-

The partial occlusion experiments reveal that ScaleFormer has strong structural inference capabilities, thanks to the long-distance dependencies captured by Swin Transformer and the local feature enhancement provided by ConvNeXt. Particularly under 30% occlusion conditions, ScaleFormer shows clear advantages on joints easily affected by occlusion such as wrists and ankles, a result that exceeded our initial expectations. This indicates that the model not only learns individual representations of keypoints but also implicitly models human anatomical constraints, enabling it to infer the positions of occluded parts based on visible parts. This characteristic is similar to how the human visual system works, providing new ideas for building more intelligent computer vision systems. Future research could consider integrating explicit human structure prior knowledge into the framework to further enhance the model’s inference capabilities.

-

Despite ScaleFormer’s encouraging results, this research still has some limitations. First, the current evaluation primarily focuses on single-person pose estimation scenarios; for multi-person scenarios, especially those with severe occlusion and interaction, the model’s performance needs further verification. Second, the model’s computational complexity is relatively high, limiting its deployment on resource-constrained devices. Future research directions should include: 1) developing more efficient attention mechanisms and feature extraction methods to reduce computational overhead; 2) extending the model to 3D pose estimation and temporal modeling to handle pose tracking in video sequences; 3) exploring self-supervised and weakly supervised learning strategies to reduce dependence on large amounts of annotated data; 4) researching customized application solutions for specific domains such as medical rehabilitation and sports analysis to further leverage the value of ScaleFormer in practical applications.

Conclusion

This paper proposes ScaleFormer, a scale-invariant human pose estimation framework based on hybrid feature extraction, effectively solving the problem of performance fluctuation in existing pose estimation methods when processing targets of different scales. ScaleFormer builds a pose estimation system with strong robustness to scale variations and partial occlusion by innovatively combining Swin Transformer’s hierarchical feature extraction capability and ConvNeXt’s fine-grained feature enhancement mechanism. Experiments prove that this framework significantly outperforms existing methods on the MPII human pose dataset, achieving excellent performance on multiple metrics including PCKh, scale consistency score, and average keypoint precision. Particularly under extreme scaling conditions and partial occlusion scenarios, ScaleFormer’s performance advantages are more significant, demonstrating its potential value in practical application scenarios. The experiments further validate the complementary contributions of the two core components, providing valuable reference for network design in similar tasks. Future work will focus on further reducing computational complexity to meet real-time application requirements, as well as extending this framework to related tasks such as 3D pose estimation and human motion tracking in video sequences.

Data availability

The datasets analysed during the current study are available in the MPII Human Pose Dataset repository, https://www.mpi-inf.mpg.de/departments/computer-vision-and-machine-learning/software-and-datasets/mpii-human-pose-dataset/download.

References

Zandler-Andersson, G., Espinoza, D., Andersson, N. & Nilsson, B. Real-time monitoring of gradient chromatography using dual Kalman-filters. J. Chromatogr. A https://doi.org/10.1016/j.chroma.2024.465161 (2024).

Yang, Y. & Wang, Y. Real-time multi-view human pose estimation system. In 39TH Youth Academic Annual Conference of Chinese Association of Automation, YAC 2024, Youth Academic Annual Conference of Chinese Association of Automation, 2187–2192, https://doi.org/10.1109/YAC63405.2024.10598814 (Chinese Assoc Automat; IEEE; IEEE Syst, Man, & Cybernet Soc, 2024). 39th Youth Academic Annual Conference of Chinese-Association-of-Automation (YAC), Dalian, Peoples R China, Jun 07-09, 2024.

Saleem, H. & Malekian, R. Testing the real-time performance of a monocular visual odometry method for a wheeled robot. In 18th Annual IEEE International Systems Conference, SYSCON 2024, Annual IEEE Systems Conference, https://doi.org/10.1109/SysCon61195.2024.10553447 (IEEE, 2024). 18th Annual IEEE International Systems Conference (SysCon), Montreal, CANADA, APR 15-18, 2024

Tan, S. & Xu, D. Efficient industrial agv pose estimation system in both indoor and outdoor scenario. In Proceedings of the 36th Chinese Control and Decision Conference, CCDC 2024, Chinese Control and Decision Conference, 5460–5465, https://doi.org/10.1109/CCDC62350.2024.10587949 (NE Univ, State Key Lab Synthet Automat Proc Ind; Chinese Assoc Automat, Tech Comm Control & Decis Cyber Phys Syst; NW Polytechn Univ; IEEE Control Syst Soc; Chinese Assoc Automat, Tech Comm Control Theory, 2024). 36th Chinese Control and Decision Conference (CCDC), Xian, Peoples R China, May 25-27, 2024.

Fujiwaka, M. & Nugami, K. Robust 6d pose estimation for texture-varying objects in autonomous system. In Shahriar, H. et al. (eds.) 2023 IEEE 47th Annual Computers, Software, and Applications Conference, COMPSAC, Proceedings International Computer Software and Applications Conference, 39–44, https://doi.org/10.1109/COMPSAC57700.2023.00015 (IEEE; IEEE Comp Soc, 2023). 47th IEEE-Computer-Society Annual International Conference on Computers, Software, and Applications (COMPSAC), Univ Torino, Torino, ITALY, JUN 27-29, 2023.

Pramatarov, G., Gadd, M., Newman, P. & De Mani, D. That’s my point: Compact object-centric lidar pose estimation for large-scale outdoor localisation. In 2024 IEEE International Conference on Robotics and Automation (ICRA 2024), IEEE International Conference on Robotics and Automation ICRA, 12276–12282, https://doi.org/10.1109/ICRA57147.2024.10611142 (IEEE; IEEE Robot & Automat Soc, 2024). IEEE International Conference on Robotics and Automation (ICRA), Yokohama, JAPAN, MAY 13-17, 2024.

Zheng, Y., Zheng, C., Shen, J., Liu, P. & Zhao, S. Keypoint-guided efficient pose estimation and domain adaptation for micro aerial vehicles. IEEE Trans. Robot. 40, 2967–2983. https://doi.org/10.1109/TRO.2024.3400938 (2024).

Qin, H. et al. Inside-out multiperson 3-D pose estimation using the panoramic camera capture system. IEEE Trans. Instrum. Meas. https://doi.org/10.1109/TIM.2023.3346490 (2024).

Li, R. et al. Multiscale feature extension enhanced deep global-local attention network for remaining useful life prediction. IEEE Sens. J. 23, 25557–25571. https://doi.org/10.1109/JSEN.2023.3310479 (2023).

Liang, C., Huang, K. & Mao, J. Global-local deep fusion: Semantic integration with enhanced transformer in dual-branch networks for ultra-high resolution image segmentation. Appl. Sci. -Basel https://doi.org/10.3390/app14135443 (2024).

Kuang, W., Zhu, Q. & Li, Z. Multi-label image classification with multi-scale global-local semantic graph network. In Koutra, D., Plant, C., Rodriguez, M., Baralis, E. & Bonchi, F. (eds.) Machine Learning and Knowledge Discovery in Databases: Research Track, ECML PKDD 2023, PT III, vol. 14171 of Lecture Notes in Artificial Intelligence, 53–69, https://doi.org/10.1007/978-3-031-43418-1_4 (Volkswagen Grp; AstraZeneca; CRITEO; Google; Mercari; Bosch; KNIME; SoBigData; Two Sigma; SIG Susquehanna, 2023). 5th International Workshop on Learning with Imbalanced Domains—Theory and Applications / European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML PKDD), Turin, ITALY, SEP 18, 2023.

Zhang, Z. et al. An evidential-enhanced tri-branch consistency learning method for semi-supervised medical image segmentation. IEEE Trans. Instrum. Meas. https://doi.org/10.1109/TIM.2024.3488143 (2024).

Zhang, Z. et al. A time-frequency aware hierarchical feature optimization method for sar jamming recognition. IEEE Trans. Aerosp. Electr. Syst. https://doi.org/10.1109/TAES.2025.3563141 (2025).

Tan, H. et al. Few-shot SAR ATR via multi-level contrastive learning and dependency matrix-based measurement. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 18, 8175 (2025).

Tan, H. et al. Pass-net: A pseudo classes and stochastic classifiers based network for few-shot class-incremental automatic modulation classification. IEEE Trans. Wirel. Commun. 23, 17987 (2024).

Zheng, C. et al. Deep learning-based human pose estimation: A survey. ACM Comput. Surv. https://doi.org/10.1145/3603618 (2024).

Gao, Z., Chen, J., Liu, Y., Jin, Y. & Tian, D. A systematic survey on human pose estimation: upstream and downstream tasks, approaches, lightweight models, and prospects. Artif. Intelli. Rev. https://doi.org/10.1007/s10462-024-11060-2 (2025).

Dibenedetto, G., Sotiropoulos, S., Polignano, M., Cavallo, G. & Lops, P. Comparing human pose estimation through deep learning approaches: An overview. Comput. Vis. Image Underst. https://doi.org/10.1016/j.cviu.2025.104297 (2025).

Zhou, L., Chen, Y. & Wang, J. Progressive direction-aware pose grammar for human pose estimation. IEEE Trans. Biom. Behav. Identity Sci. 5, 593–605. https://doi.org/10.1109/TBIOM.2023.3315509 (2023).

Xu, L. et al. A comprehensive framework for occluded human pose estimation. In 2024 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2024, International Conference on Acoustics Speech and Signal Processing ICASSP, 3405–3409, https://doi.org/10.1109/ICASSP48485.2024.10448372 (Inst Elect & Elect Engineers; Inst Elect & Elect Engineers Signal Proc Soc, 2024). 49th IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Seoul, SOUTH KOREA, APR 14-19, 2024.

Jiang, Z., Ji, H., Yang, C.-Y. & Hwang, J.-N. 2d human pose estimation calibration and keypoint visibility classification. In 2024 IEEE International Conference on acoustics, speech and signal processing, ICASSP 2024, International Conference on Acoustics Speech and Signal Processing ICASSP, 6095–6099, https://doi.org/10.1109/ICASSP48485.2024.10448474 (Inst Elect & Elect Engineers; Inst Elect & Elect Engineers Signal Proc Soc, 2024). 49th IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Seoul, SOUTH KOREA, APR 14-19, 2024.

Wang, T. & Zhang, X. Gated region-refine pose transformer for human pose estimation. Neurocomputing 530, 37–47. https://doi.org/10.1016/j.neucom.2023.01.090 (2023).

Chi, H.-g., Chi, S., Chan, S. & Ramani, K. Pose relation transformer refine occlusions for human pose estimation. In 2023 IEEE International Conference on Robotics and Automation, ICRA, IEEE International Conference on Robotics and Automation ICRA, 6138–6145, https://doi.org/10.1109/ICRA48891.2023.10161259 (IEEE; IEEE Robot & Automat Soc, 2023). IEEE International Conference on Robotics and Automation (ICRA), London, ENGLAND, MAY 29-JUN 02, 2023.

Liu, Z. et al. Sd-pose: Facilitating space-decoupled human pose estimation via adaptive pose perception guidance. Multimed. Syst. https://doi.org/10.1007/s00530-024-01368-y (2024).

Li, J., Liu, C. K. & Wu, J. Ego-body pose estimation via ego-head pose estimation. In 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), IEEE Conference on Computer Vision and Pattern Recognition, 17142–17151, https://doi.org/10.1109/CVPR52729.2023.01644 (IEEE; CVF; IEEE Comp Soc, 2023). IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, CANADA, JUN 17-24, 2023.

Martinelli, G., Diprima, F., Bisagno, N. & Conci, N. Ski pose estimation. In 2024 IEEE International Workshop on Sport, Technology And Research, Star 2024, 120–125, https://doi.org/10.1109/STAR62027.2024.10635966 (IEEE; Politecnico Milano, 2024). IEEE International Workshop on Sport, Technology and Research (IEEE STAR), Lecco, ITALY, JUL 08-10, 2024.

Liao, J., Xu, J., Shen, Y. & Lin, S. Thanet: Transferring human pose estimation to animal pose estimation. Electronics https://doi.org/10.3390/electronics12204210 (2023).

Xia, Z., Booij, O. & Kooij, J. F. P. Convolutional cross-view pose estimation. IEEE Trans. Pattern Anal. Mach. Intell. 46, 3813–3831. https://doi.org/10.1109/TPAMI.2023.3346924 (2024).

Xu, Y., Lin, K.-Y., Zhang, G., Wang, X. & Li, H. RNNPose: 6-DoF object pose estimation via recurrent correspondence field estimation and pose optimization. IEEE Trans. Pattern Anal. Mach. Intell. 46, 4669–4683. https://doi.org/10.1109/TPAMI.2024.3360181 (2024).

Zhang, J., Yang, H. & Deng, Y. Enhanced human pose estimation with attention-augmented hrnet. In 6TH International Conference on Image Processing and Machine Vision, IPMV 2024, 88–93, https://doi.org/10.1145/3645259.3645274 (Univ Macau, 2024). 6th International Conference on Image Processing and Machine Vision (IPMV), Macau, PEOPLES R CHINA, JAN 12-14, 2024.

Chen, B., Wang, X., Chen, X., He, Y. & Song, J. Eanet: Towards lightweight human pose estimation with effective aggregation network. In 2023 IEEE International Conference on Multimedia and Expo, ICME, IEEE International Conference on Multimedia and Expo, 2639–2644, https://doi.org/10.1109/ICME55011.2023.00449 (IEEE; IEEE Circuits & Syst Soc; IEEE Commun Soc; IEEE Comp Soc; IEEE Signal Proc Soc; TENCENT; Meta; Youtube; Google, 2023). IEEE International Conference on Multimedia and Expo (ICME), Brisbane, AUSTRALIA, JUL 10-14, 2023.

Wang, T. et al. Decenternet: Bottom-up human pose estimation via decentralized pose representation. In Proceedings of the 31st Acm International Conference on Multimedia, MM 2023, 1798–1808, https://doi.org/10.1145/3581783.3611989 (Assoc Comp Machinery, 2023). 31st ACM International Conference on Multimedia (MM), Ottawa, CANADA, OCT 29-NOV 03, 2023.

Lou, X., Lin, X., Zeng, H. & Zhu, X. Lar-pose: Lightweight human pose estimation with adaptive regression loss. Neurocomputing https://doi.org/10.1016/j.neucom.2025.129777 (2025).

Amadi, L. & Agam, G. Posturepose: Optimized posture analysis for semi-supervised monocular 3D human pose estimation. Sensors https://doi.org/10.3390/s23249749 (2023).

Cheng, J. et al. Mixpose: 3d human pose estimation with mixed encoder. In Wang, H. et al. (eds.) Pattern Recognition and Computer Vision, PRCV 2023, PT VIII, vol. 14432 of Lecture Notes in Computer Science, 353–364, https://doi.org/10.1007/978-981-99-8543-2_29 (Chinese Assoc Artificial Intelligence; China Comp Federat; Chinese Assoc Automat; China Soc Image & Graph, 2024). 6th Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Xiamen Univ, Xiamen, PEOPLES R CHINA, OCT 13-15, 2023.

Li, M., Wang, Y., Hu, H. & Zhao, X. Infertrans: Hierarchical structural fusion transformer for crowded human pose estimation. Inf. Fusion https://doi.org/10.1016/j.inffus.2024.102878 (2025).

Bai, X., Wei, X., Wang, Z. & Zhang, M. Conet: Crowd and occlusion-aware network for occluded human pose estimation. Neural Netw. https://doi.org/10.1016/j.neunet.2024.106109 (2024).

Li, M., Hu, H., Xiong, J., Zhao, X. & Yan, H. Tswinpose: Enhanced monocular 3D human pose estimation with jointflow. Expert Syst. Appl. https://doi.org/10.1016/j.eswa.2024.123545 (2024).

Dong, X. et al. Yh-pose: Human pose estimation in complex coal mine scenarios. Eng. Appl. Artif. Intell. https://doi.org/10.1016/j.engappai.2023.107338 (2024).

Liao, J., Cui, W., Tao, Y., Shi, T. & Shen, L. Lightweight HRNet: A ligtweight network for bottom-up human pose estimation. Eng. Lett. 32, 661–670 (2024).

Liu, J., Gong, X. & Guo, Q. Lightweight and efficient high-resolution network for human pose estimation. Int. J. Adv. Comput. Sci. Appl. 15, 232–240 (2024).

Liu, L., Dai, Y. & Liu, Z. Real-time pose estimation and motion tracking for motion performance using deep learning models. J. Intell. Syst. https://doi.org/10.1515/jisys-2023-0288 (2024).

de la Cruz, M. H. et al. CNN 1D: A robust model for human pose estimation. Information https://doi.org/10.3390/info16020129 (2025).

Author information

Authors and Affiliations

Contributions

C.G. conceived and designed the experiment(s), conducted the experiment(s), performed the model implementation, and wrote the original draft of the manuscript. W.F.Q. analyzed the results, supervised the research, contributed to methodology development, and provided resources for the research. Both C.G. and W.F.Q. validated the experimental results, participated in data visualization, reviewed and edited the manuscript, and approved the final version.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ge, C., Qin, W.F. ScaleFormer architecture for scale invariant human pose estimation with enhanced mixed features. Sci Rep 15, 27754 (2025). https://doi.org/10.1038/s41598-025-12620-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-12620-4