Abstract

This study intends to effectively forecast solubility parameter of diverse polymers by creating machine learning models that can grasp the complex relationships between essential input factors like molecular weight, melting point, boiling point, liquid molar volume, radius of gyration, dielectric constant, dipole moment, refractive index, van der Waals area and reduced volume, and parachor, alongside the target variable, which is solubility coefficient of polymers. The goal is to create strong models that accurately capture these intricate relationships to facilitate accurate forecasts of the solubility parameter for polymers. Multiple machine learning algorithms, ranging from basic methods like Linear Regression to advanced techniques such as Artificial Neural Networks (ANNs), Ridge Regression, Lasso Regression, Support Vector Machines (SVMs), Linear Regression, Random Forests (RFs), Gradient Boosting Machines (GBM), K-Nearest Neighbors (KNN), Elastic Net, Decision Trees, Light Gradient Boosting Machine (LightGBM), Categorical Boosting (CatBoost), Convolutional Neural Networks (CNNs), and Extreme Gradient Boosting (XGBoost) were utilized. These methods were utilized to create data-driven models that adeptly seize the intricate connections between input characteristics and output variable, facilitating precise predictions of the solubility parameter for polymers. The efficacy of the developed models was rigorously evaluated using statistical metrics such as R², RMSE, and MRD%, along with visual tools including cross-plots, deviation plots, and SHAP analysis to enhance interpretability and predictive reliability. To guarantee the dataset’s reliability, consisting of 1,799 datapoints on the solubility parameter of polymers, the Monte Carlo outlier detection algorithm was utilized. This stage verified the dataset’s accuracy and appropriateness for model training and evaluation. Results indicated that the models CatBoost, ANN, and CNN surpassed other techniques, attaining superior accuracy shown by the highest R-squared values and the lowest error rates. Sensitivity analysis showed that every input feature impacted the target variable, while SHAP analysis determined that dielectric constant was the most significant factor influencing the solubility parameter of polymers. These results highlight the efficiency of the utilized machine learning methods and emphasize the vital importance of these input parameters in establishing the solubility parameter of polymers. This method not only verifies that the models can make accurate predictions but also provides valuable insights into the impact of input features on solubility parameters of polymers, enhancing algorithm interpretability and scientific understanding.

Similar content being viewed by others

Introduction

In the fields of pharmaceutical, petroleum, chemical and polymer engineering, scientists and engineers prioritize identifying the most suitable solvents per explicit industrial applications. Solubility parameter provides a critical measure of a solvent tendency to solve a given solute. The idea of the solubility factor was formalized in the 1930s by Hildebrand, building on earlier work by Scatchard on cohesive energy density. This theory has since been extensively studied and applied across various chemical processes1. Subsequent contributions by Hildebrand2,3 and Hansen4 further refined the notion by introducing additional parameters and expanding its applicability. The solubility parameter has proven instrumental in polymer processing, surface treatments and coating technologies5.

The theoretical foundation of the solubility parameter lies in molecular interaction and chemical structure energies5. In the case of similarity of solubility factors for two molecules, the miscibility between one molecule (solution or liquid) and another (solid) tends to improve6. This principle, known as “like dissolves like” underpins many solvent selection strategies6. In condensed phases, intermolecular forces, primarily cohesive forces and van der Waals attractions, govern molecular behavior6. Hildebrand5 expanded on earlier cohesive energy theories by introducing the concept of the solubility factor, which relates to the energy density of cohesion of materials. Generally, the solubility parameter can be determined as the square root of the cohesive pressure and is often called the Hildebrand solubility parameter5. However, the Hildebrand parameter’s inability to account for hydrogen bonding limits its application to slightly polar or nonpolar materials deprived of hydrogen bonding5. To overcome this limitation, Hansen introduced solubility parameter of three-component type, which takes the account of dipolar, nonpolar and hydrogen-bonding interactions7. Hansen solubility parameters (HSP) have found widespread use in some industries such as coatings and paints, where solvent-polymer interactions should be essentially taken into account4. Additionally, HSP has been extended to represent materials like nanoparticles, pigments DNA, and others, enabling the analysis of interactions with plasticizers, solvents, foodstuffs, fragrance chemicals and more8,9.

Recognizing the importance of solubility parameter, numerous approaches have been developed to calculate or estimate it. Equation-of-state (EOS)-based methods have been extensively considered for this purpose over the past years10,11,12,13. Group contribution (GC) methods, pioneered by van Krevelen14, enable the assessment of partial solubility parameters for pure organic and polymer compounds.

Quantitative structure-property/activity relationship (QSPR/QSAR) method, leveraging classification/regression techniques, is broadly employed in engineering and chemical science to forecast physical characteristics15,16. Although QSPR/QSAR models deliver accurate results, determining molecular descriptors involves time-intensive processes such as optimization, structure drawing and calculation, which may pose challenges for researchers lacking advanced computational chemistry expertise.

This study employs machine learning (ML), known as a multidisciplinary arena combining ideas from probability theory, statistics, approximation theory, and other domains17, to develop predictive models for the solubility parameter of diverse polymer solutions using a QSPR strategy. Advanced ML algorithms, including linear regression, convolutional and artificial neural networks, lasso and ridge regression, support vector machines, elastic net, random forests, gradient boosting, k-nearest neighbors, decision trees, extreme gradient boosting, categorical boosting, light gradient boosting, and Gaussian processes, are used. The Monte Carlo outlier detection algorithm ensures dataset suitability for model training. Model performance is assessed using multiple metrics and visualization tools, while SHAP values provide insights into the impact of essential characteristics on solubility estimations. A detailed description of the methodology is provided in Fig. 1.

Machine learning backgrounds

Convolutional neural network

A Convolutional Neural Network (CNN) is a type of deep learning algorithm tailored to handle structured data, including images and time-series data. These networks excel in visual computing applications, like object detection, image classification, and intricate pattern recognition18. The structure of a CNN is composed of several layers, with pooling layers, convolutional layers, and completely linked layers playing crucial roles18,19. Convolutional layers employ filters or kernels to detect fundamental characteristics such as lines and patterns, which are subsequently processed by deeper layers to identify more complex patterns, such as shapes or objects. Pooling layers help reduce data dimensionality, allowing the network to focus on the primary significant features19. CNNs are usually trained using the backpropagation algorithm, which enables the network to fine-tune its weights by comparing predictions with actual outcomes20. These networks are highly effective in various fields, including facial recognition, medical imaging, and general image analysis, and can autonomously learn hierarchical features with little human input. A key advantage of CNNs is their ability to generalize well to new, previously unseen data, making them particularly efficient for tasks involving large datasets21,22. Recent improvements in hardware, especially the adoption of Graphics Processing Units (GPUs), have greatly enhanced the training speed of these networks, further boosting their widespread use21,22.

K-nearest neighbors

The K-Nearest Neighbors (KNN) method is among the most commonly used supervised learning methods in machine learning. It operates by evaluating distances between data points to make predictions or classifications23,24. When estimating the value or class of a new data point, KNN identifies the K closest points from training dataset and determines prediction based on majority label among these K neighbors23. This process usually involves distance metrics such as Euclidean distance, meaning that closer data points have a stronger influence on the outcome. A key advantage of KNN is its non-parametric nature, which means it does not need a clear training or learning stage24. Rather than constructing a complex predictive model, KNN directly uses the training data to make predictions at runtime. However, this also leads to one of its main drawbacks: the algorithm can be computationally expensive during the prediction phase, especially with large datasets, since it must determine distances for every data point in the training set25.

Additionally, execution of KNN is highly responsive to the choice of the K factor and method used to measure distances. Selecting an inappropriate K value or distance metric can greatly influence results accuracy26. Despite these limitations, KNN is extensively applied in different fields, like text classification, and junk email filtering, due to its simplicity and effectiveness in many scenarios26.

Lasso regression

Lasso Regression is a linear regression algorithm designed for feature selection and mitigating overfitting in regression models. The name “Lasso” stands for " Selection Operator and Least Absolute Shrinkage”27. This technique incorporates an L1 regularization to reduce features coefficients, bringing certain coefficients to zero and effectively removing the corresponding features from the model27. This process allows for automatic feature selection, as less important or irrelevant features are discarded, leading to a model that is easier to understand and interpret.

A major benefit of Lasso Regression is its capability to automatically identify relevant features and optimize the model, particularly when dealing with datasets that include numerous irrelevant or highly correlated features28,29. It is especially effective in high-dimensional scenarios in situations with a substantial amount of characteristics, and some may be redundant or non-informative. By simplifying the model, Lasso Regression improves the model’s capability to apply to novel, unencountered data30.

Despite its advantages, Lasso Regression has some drawbacks. It may struggle when features are highly correlated, as it tends to randomly select one feature from a group of correlated predictors and disregard the others29. In such situations, alternative approaches like Ridge Regression might be more suitable. Nevertheless, Lasso Regression continues to be an influential instrument in machine learning and data assessment, providing a reliable method for constructing streamlined models in cases with intricate feature relationships31.

Decision tree

The Decision Tree algorithm is a frequently used technique in machine learning for solving regression and classification problems. It constructs a model as a hierarchical tree structure, with each node symbolizing a decision or condition that partitions the data into subsets32. The tree is built using the input features, with the process continuing until it reaches leaf nodes, which provide the final predictions or outcomes33. While building the tree, metrics such as Gini Index or Entropy are employed as splitting criteria to minimize impurity and enhance the accuracy of classifications at each step34.

One of the primary strengths of decision trees is their interpretability. The logic behind the decisions made by the model can be easily traced and understood, rendering them appropriate for uses that require transparency is important35. Moreover, decision trees are capable of managing both numerical and categorical data without the need for feature scaling, adding to their versatility29,36.

However, decision trees have some limitations. They are susceptible to overfitting, particularly when handling noisy data, since the model might grasp irrelevant patterns or anomalies found in the training dataset36,37. To mitigate this issue, methods such as ensemble methods or pruning are often utilized. These approaches help simplify the tree and improve its capacity to apply to unknown data, thereby enhancing the general durability and efficiency of the model38.

Linear regression

Linear Regression is among the most frequently utilized machine learning methods for representing the relationship between a dependent parameter and a single or several independent variables. This method operates under the assumption that there is a straight relationship between the input characteristics and the output labels39. The primary objective of linear regression is to identify a line or hyperplane that most suitable for the data. Mathematically, this is represented by a linear equation, where the coefficients are optimized using techniques such like the least squares approach40.

Linear regression finds applications in various domains, including predicting real estate prices, forecasting product sales, and analyzing economic trends. One of its key advantages is its simplicity and interpretability, as the results are straightforward to understand and explain39,40. Additionally, when the connection between parameters is genuinely linear, linear regression can generate highly accurate predictions41.

However, linear regression may struggle with datasets that exhibit complex or nonlinear relationships, leading to issues such as underfitting or overfitting. In such cases, more advanced models, such as polynomial regression or nonlinear methods, might be more appropriate42. In spite of these constraints, linear regression remains a powerful instrument for comprehension and forecasting outcomes in scenarios where the relationships between variables are approximately linear43.

Gradient boosting machine

The Gradient Boosting Machine (GBM) is a potent machine learning algorithm designed to tackle regression and classification jobs. It leverages boosting technique, which enhances predictive performance by combining several fragile models into a more robust one ensemble44. In GBM, models are constructed sequentially, with every following model aimed at correcting the errors of those before it. This repetitive method makes use of the Gradient Descent improvement method to minimize prediction errors by targeting the residuals (differences between actual and predicted values) at each step45.

At its core, during each training phase, GBM ensures that the new model specifically addresses the weaknesses of the previous one, progressively improving overall accuracy. A primary benefit of GBM is its capacity to manage intricate, nonlinear datasets effectively, resulting in high predictive precision46. By relying on weak models and gradually refining them, GBM achieves strong generalization capabilities, rendering it appropriate for various uses, such as fraud detection, sales forecasting, and disease diagnosis47.

Despite its strengths, GBM does have some drawbacks. It can be sensitive to noisy data, which may lead to overfitting if not properly managed. Additionally, the algorithm typically requires more training time compared to other methods due to its sequential nature. Another challenge lies in parameter tuning, as selecting optimal hyperparameters for GBM can be complex and computationally demanding47,48. Nevertheless, when properly configured, GBM remains a highly effective tool to address different real-life issues48.

Support vector machine

Support Vector Machine (SVM) is a robust machine learning method primarily utilized for classification jobs, although it can likewise be modified for regression jobs. The core objective of SVM is to create a perfect decision boundary, referred to as a hyperplane, that efficiently divides data points that pertain to various categories49. In classification, this hyperplane is designed to maximize margin between classes, ensuring the most effective separation possible. For datasets that are not linearly separable, SVM utilizes kernel functions to transform the data into a higher-dimensional space, enabling linear separation in this transformed space50.

A major advantage of SVM is its ability to manage intricate, nonlinear relationships within data by leveraging various kernel functions, like radial basis function (RBF) kernels or polynomial. This makes SVM particularly effective in high-dimensional spaces, where it has demonstrated strong predictive accuracy51. As a result, SVM has been effectively utilized in various fields, such as facial recognition, illness diagnosis, and text categorization51.

However, SVM does have notable limitations. It is highly sensitive to parameter settings, especially the choice of kernel function and the tuning of regularization parameter C, which manages the balance between obtaining a low error on the training dataset and maintaining a smooth decision boundary52. Additionally, training an SVM model may require significant computational resources, especially for extensive datasets, as it entails addressing a quadratic optimization challenge. This requirement for significant computational resources and time can make SVM less practical for very large-scale applications. In spite of these difficulties, SVM continues to be an effective instrument for numerous machine learning applications, especially when dealing with complex, high-dimensional data53.

Categorical boosting

Categorical Boosting, commonly known as CatBoost, is an innovative machine learning method aimed at enhancing the efficacy of classification models, particularly when working with datasets that include categorical attributes54. In contrast to conventional boosting algorithms like XGBoost and LightGBM, which mainly target numerical data and necessitate preprocessing steps such as One-Hot Encoding for categorical variables, Categorical Boosting directly manages categorical features without extensive preprocessing requirements. This is accomplished via cutting-edge encoding techniques that are incorporated into the model’s structure55.

CatBoost integrates Gradient Boosting concepts with unique feature engineering methods specifically designed for categorical data. This approach removes the necessity for manual encoding or changing of categorical variables, thereby decreasing preprocessing time and complexity56. The algorithm utilizes advanced techniques like target-oriented encoding and mixtures of categorical attributes to efficiently grasp the connections between categories and the target variable57.

A major benefit of Categorical Boosting is its capacity to improve model precision while streamlining the process. By directly training on categorical data, the model can utilize information from various classes at once, resulting in enhanced classification effectiveness58. This is especially beneficial in situations where data shows batch traits or when the dataset includes many categorical variables. Moreover, CatBoost’s method of managing categorical features guarantees that the model stays strong and efficient, even when dealing with high-cardinality data56.

In conclusion, Categorical Boosting provides an effective approach for classification tasks by overcoming the challenges of conventional boosting algorithms when working with categorical data. Its capacity to enhance preprocessing efficiency, along with its robust predictive capabilities, renders it a useful resource in multiple areas, such as customer segmentation, fraud identification, and natural language processing. Nonetheless, similar to other intricate models, it might need precise adjustment of hyperparameters for optimal performance59.

Artificial neural network

An Artificial Neural Network (ANN) is a computing framework intended to mimic information functioning abilities of the human mind. It is made up of linked processing units known as neurons, which function similarly to biological neurons. Each neuron receives inputs from other neurons, processes them using a mathematical operation such as an activation function and then sends an output to subsequent neurons60. ANNs are especially proficient at addressing intricate jobs like pattern identification and forecasting, and data classification, as they have the ability to learn from datasets and progressively enhance their results gradually61.

Typically, ANNs are structured in multiple layers, forming what is commonly referred to as a Multilayer Perceptron (MLP). These layers include a layer for input, several hidden layers, and a layer for output62. The input layer takes in data, while the hidden layers handle processing it through various transformations, and output layer provides the final result. In the training period, algorithms like backpropagation are employed to adjust the weights between neurons. This adjustment minimizes prediction errors and gradually enhances the network’s accuracy by fine-tuning its internal parameters63.

ANNs are especially well-suited for handling intricate, nonlinear problems that demand substantial computational power. As a result, they are widely used in various areas like processing of language, recognition of image and speech, and many other areas within data science63,64. Their capacity to represent intricate connections and adjust to new data makes them an effective instrument for resolving real-world problems across a variety of industries64.

10. Extreme gradient boosting

Extreme Gradient Boosting (XGBoost) is an exceptionally powerful and robust machine learning method celebrated for its skill in addressing intricate regression and classification challenges, particularly in extensive datasets. XGBoost, as an enhanced version of the gradient boosting framework, builds upon conventional boosting methods by merging various weak models usually decision trees for building a robust forecasting model65. In this algorithm, every a new tree is formed to address the errors of the earlier one, gradually enhancing the predictions and moving closer to the best solution.

A key attribute of XGBoost is its incorporation of regularization methods, which aid in avoiding overfitting and improving the model’s generalization abilities. This renders it especially efficient in managing noisy or high-dimensional data. XGBoost is renowned for its speed and efficiency, capable of providing very accurate predictions even when managing large data sets65. Utilizing parallel processing, the algorithm greatly shortens computation duration, making it highly appropriate for practical applications that deal with extensive datasets66.

XGBoost is highly effective in handling complex and unstructured data, providing strong solutions for different activities, such as recognition of image and processing of natural language. Its extensive customizability enables users to adjust multiple parameters, including learning rate, tree depth, and regularization strength, to enhance performance for particular applications. These features have resulted in its extensive use in both scholarly research and commercial applications, including data mining contests such as those on Kaggle, where it has repeatedly shown exceptional performance67.

To summarize, XGBoost is acknowledged as one of the greatest preferred algorithms in machine learning because of its effectiveness, precision, and versatility. Its capacity to manage intricate datasets while ensuring high performance has established its status as a preferred approach for addressing prediction and classification problems across various fields68.

11. Light gradient boosting machine

The LightGBM is a cutting-edge machine learning algorithm specifically designed to handle large and complex datasets efficiently. It represents an optimized variant of the gradient boosting method, offering significant benefits like quicker training speed, reduced memory usage, and improved accuracy compared to similar algorithms like XGBoost45. One of the key innovations of LightGBM is its unique approach to building decision trees using “leaf-wise” growth rather than the conventional “level-wise” method. This strategy allows the algorithm to create deeper trees more effectively, focusing on splits that contribute the most to performance of model, thereby enhancing accuracy69.

A major strength of LightGBM excels due to its capability to train models rapidly, even on massive datasets. By leveraging techniques such as parallel processing and batch sampling, LightGBM achieves superior performance while minimizing computational resources. Additionally, it is highly efficient in managing both discrete and continuous data, all while consuming significantly less memory than other gradient boosting frameworks. These features make LightGBM particularly well-suited for applications involving large-scale data modeling, such as fraud detection, sales forecasting, and improving search engine rankings70.

Due to its efficiency, scalability, and high performance, LightGBM has gained widespread adoption across various industries and research domains. Its ability to deliver accurate predictions with reduced resource requirements makes it serves as a perfect option for addressing practical issues where data size and complexity are significant challenges. Overall, LightGBM stands out as a powerful tool for building robust predictive models in large-scale applications71.

1 elastic net

Elastic Net is a regression approach that merges the benefits of two well-known regularization techniques: Lasso regression and Ridge regression. This combined strategy is especially useful for datasets that exhibit high dimensionality or significant correlations between features. Lasso regression prioritizes feature selection by forcing the coefficients of less significant features to zero, whereas Ridge regression combats overfitting by imposing penalties on large coefficients and lowering model variance30. Elastic Net combines these two approaches to find a balance between selecting features and applying regularization, which makes it particularly advantageous in situations where the feature count is greater than the observation count72.

A significant benefit of Elastic Net is its capacity to manage highly correlated features more efficiently than using Lasso or Ridge separately. In cases where several features are highly correlated, Lasso typically chooses one feature at random and disregards the rest, while Elastic Net can incorporate clusters of correlated features into the model. This renders Elastic Net ideal for datasets that exhibit intricate relationships among variables, as seen in fields like genomics, finance, or image processing73.

The algorithm attains this equilibrium through a weighted mix of the L1 and L2 penalties. By adjusting the mixing parameter, which governs the balance of each regularization technique, Elastic Net can enhance the compromise between selecting features and minimizing variance. This adaptability enables Elastic Net to enhance the precision of forecasts and generalization of models, particularly when handling numerous features or multicollinearity74.

In conclusion, Elastic Net is an effective method for regression issues that involve high-dimensional data or related attributes. Its capacity to integrate the advantages of Lasso and Ridge regression renders it a perfect option for improving model performance in intricate datasets, guaranteeing strength and dependability in numerous practical applications75.

13. Ridge regression

Ridge Regression is a regularization method employed in machine learning to avoid overfitting in regression models, especially when working with high-dimensional datasets. It is particularly useful in circumstances where the quantity of features surpasses the amount of observations or when there are significant correlations between the features75. In Ridge Regression, a penalty component is included in loss function to prevent the model from giving overly large weights to any individual feature. This penalty is determined by summing the squares of the weight values, promoting the model to allocate importance more uniformly among all features by diminishing the size of the coefficients76.

The hyperparameter, λ (lambda), referred to as the regularization coefficient, regulates the regularization strength in Ridge Regression. An increased value of λ enforces a more significant penalty on the model, resulting in reduced weights and a more straightforward model that performs better on new data. On the other hand, a smaller λ enables the model to align more precisely with the training data, potentially leading to overfitting if not adequately adjusted. By modifying λ, a balance between bias and variance can be achieved, allowing the model to stay both adaptable and strong77.

Ridge Regression is highly valued due to its user-friendliness and efficiency, rendering it a favored option in data analysis endeavors that include numerous features and intricate connections among them. It is especially beneficial when dealing with noisy data since it minimizes the influence of superfluous or redundant features by driving their coefficients closer to zero. This aids in enhancing the model’s forecasting accuracy and consistency, particularly in cases where more intricate models could overfit the data. In general, Ridge Regression offers an effective method for enhancement the generalization abilities of regression models when dealing with difficult datasets78.

14. Random forest

Random Forest is a robust machine learning algorithm that leverages an ensemble of decision trees to predict and analyze data. As a kind of ensemble learning technique, it integrates the forecasts from various weak models (individual decision trees) to create a greater precision and stable overall model. In Random Forest, instead of constructing a single decision tree, numerous trees are built, each using a random subset of data79. The ultimate prediction is decided via a voting process for classification tasks (majority vote) or averaging for regression tasks, based on the outputs of all the individual trees80,81.

One of the main benefits of Random Forest is its capability to significantly reduce overfitting, a common issue with individual decision trees. By introducing randomness in both the data samples and the characteristics employed to construct each tree, the algorithm ensures diversity among the trees, which enhances the model’s generalization capability. This randomness prevents any single tree from becoming too complex or overly tailored to training data, thereby enhancing the model’s effectiveness on unfamiliar data82.

Another notable feature of Random Forest is its capacity to manage high-dimensional datasets with noisy and correlated features. The algorithm’s inherent ability to work with subsets of features during the tree-building process ensures that it can effectively manage intricate relationships within the data. This makes Random Forest particularly well-suited for a broad variety of applications, comprising acknowledgment of pattern, processing of image, and forecasting of financial83.

In conclusion, Random Forest is an adaptable and robust tool for tasks involving both classification and regression. Its ability to handle complex, noisy datasets while reducing overfitting and improving accuracy has made it a popular choice across various domains, from data science to real-world problem-solving scenarios84.

Data description and analysis

The dataset utilized in this study originates from the DIPPR 801 database, maintained by the Design Institute for Physical Properties (DIPPR) of the American Institute of Chemical Engineers (AIChE)85. Constructed by Design Institute for Physical Properties (DIPPR) to address industry demands for reliable real estate information, DIPPR 801 database is renowned for its rigorous curation and validation processes. These processes ensure the consistency and precision of the information85. For this study, the independent parameters were extracted from the physical constants of the aforementioned database, which encompasses solubility factors of 1,889 polymeric compounds. Concerning this, the input variables include molecular weight, melting point, boiling point, liquid molar volume, dielectric constant, radius of gyration, refractive index, van der Waals area, reduced volume, and parachor, while the output factor refers to the solubility parameter of polymers, which is predicted using the QSPR approach developed through machine learning models. This comprehensive dataset enables the creation of sophisticated models that can accurately predict solubility parameters, thereby facilitating advancements in polymer science and engineering.

It is crucial to note that the solubility parameters of polymers were experimentally determined, as reported in the literature, based on a range of physicochemical properties and structural descriptors. The resulting experimental dataset consists of 1,799 records, which have been systematically divided into three subsets to facilitate model development and evaluation. Specifically, approximately 70% of the data (1,259 data points) is designated for training the predictive models, while 15% (270 data points) is reserved for testing and another 15% (270 data points) for validation. This strategic partitioning of the dataset ensures a rigorous and reliable framework for developing and assessing models aimed at accurately predicting the solubility parameters of polymers. Such an approach improves generalizability and robustness of the models, rendering them more relevant to real-world polymer processing scenarios.

The input parameters for the predictive model were carefully selected by identifying the key factors that significantly influence the solubility parameter of polymers. These parameters include a comprehensive set of physicochemical properties, such as molecular weight, melting point, boiling point, liquid molar volume, dipole moment, radius of gyration, dielectric constant, van der Waals area, refractive index, and reduced volume, and parachor. Each of these properties holds a crucial position in deciding behavior and interactions of polymers, thereby directly or indirectly affecting their solubility characteristics. For example, molecular weight has been shown to influence polymer solubility by affecting chain entanglement and free volume, with higher molecular weights typically reducing solubility due to increased chain stiffness and lower segmental mobility14. Similarly, dielectric constant reflects the polarity of the polymer, which directly affects interactions with polar or nonpolar solvents86. Boiling and melting points are indicators of intermolecular forces, such as van der Waals or hydrogen bonding, which also influence solubility behavior6.

In this study, the solubility parameter of polymers serves as the primary output variable, which the model aims to predict accurately based on aforementioned input parameters. To provide insights into the range, distribution, and relationships between the input parameters and the solubility parameter, scatter matrix plots have been generated and are presented in Fig. 2. These visualizations offer a valuable overview of the data, highlighting patterns, correlations, and potential outliers, which are crucial for grasping the foundational framework of the dataset and guiding the development of an effective predictive model.

Figure 3 presents the Pearson correlation coefficients for all parameter pairs analyzed in this study. The Pearson correlation coefficient r is calculated using the following formula:

\(\:{r}_{j}=\frac{\sum\:_{i=1}^{n}({I}_{i.j}-\stackrel{-}{{I}_{j}})({Z}_{i}-\stackrel{-}{Z})}{\sqrt{\sum\:_{i=1}^{n}{({I}_{i.j}-\stackrel{-}{{I}_{j}})}^{2}\sum\:_{i=1}^{n}{({Z}_{i}-\stackrel{-}{Z})}^{2}}}\)2

In the context of correlation analysis, Zˉ represents the mean of the second variable, while Z denotes the second variable itself.

According to the information shown in Fig. 3, it is clear that every input variable shows a connection with polymers solubility parameter. Significantly, boiling point, melting point, dipole moment, dielectric constant and refractive index, show a beneficial effect, while molecular weight, liquid molar volume, van der Waals area, radius of gyration and reduced volume, and parachor present an adverse correlation with the solubility parameter.

Prior to developing machine learning models to predict the solubility parameter of polymers, it is essential to ensure data reliability through the management of outliers. This research uses the Monte Carlo Outlier Detection (MCOD) algorithm, which effectively identifies outliers in extensive datasets by integrating random selection using density-driven techniques. To improve data quality, we applied a Monte Carlo Outlier Detection (MCOD) algorithm, which combines random sampling with local density estimation to identify and exclude outliers. This technique helps reduce computational complexity while retaining the representativeness of the dataset for model training. A boxplot summarizing the data distribution after applying MCOD is shown in Fig. 4.

Figure 4 presents a boxplot representation of databank employed in this research, showcasing the data’s distribution and defining the range considered appropriate for model creation. As illustrated, most data points reside within the allowable range, indicating excellent data quality. In this study, each data point from the collected dataset was used to train the machine learning methods. This method ensures that the models are built on a thorough databank, thus improving their ability to efficiently generalize to new, unknown databank. By including full spectrum of data, the algorithms are more adept at recognizing the fundamental patterns and discrepancies, which leads to more dependable and precise forecasts for determining the solubility parameter of polymers in different solvents.

Results and discussions

In this part, we perform a thorough evaluation of the dependability and efficacy of created data-driven models for forecasting the solubility parameter of various polymers. In order to achieve this, we utilize different machine learning methods, like ridge regression, Convolutional Neural Networks (CNN), linear regression, Artificial Neural Networks (ANN), elastic net, Support Vector Regression (SVR), lasso regression, Random Forest (RF), Gradient Boosting Machines (GBM), K-Nearest Neighbors (KNN), Decision Trees (DT), LightGBM, XGBoost, and CatBoost.

In order to evaluate the effectiveness of these models, a range of metrics are employed, like R-squared (R²), average squared error (MSE), mean relative deviation percentage (MRD%), and standard deviation of residuals (σ). The meanings of these metrics are outlined below:

\(\:R-squared\left({R}^{2}\right)=1-\frac{{\sum\:}_{i=1}^{N}{({\text{y}}_{\text{i}}^{\text{r}\text{e}\text{a}\text{l}}-{\text{y}}_{\text{i}}^{\text{p}\text{r}\text{e}\text{d}\text{i}\text{c}\text{t}\text{e}\text{d}})}^{2}}{{\sum\:}_{i=1}^{N}{({\text{y}}_{\text{i}}^{\text{r}\text{e}\text{a}\text{l}}-\overline{{\text{y}}^{\text{r}\text{e}\text{a}\text{l}}})}^{2}}\) | 2 |

|---|---|

\(\:Mean\:squared\:error\:\left(MSE\right)\:=\:\frac{1}{N}{\sum\:}_{i=1}^{N}{({y}_{i}^{real}-{y}_{i}^{predicted})}^{2}\) | 3 |

\(\:Mean\:relative\:deviation\:\left(MRD\right)=\frac{100}{N}{\sum\:}_{i=1}^{N}\left(\frac{{y}_{i}^{real}-{y}_{i}^{predicted}}{{y}_{i}^{real}}\right)\) | 4 |

\(\:Residuals\:Standard\:Deviation\:\left(\sigma\:\right)=\sqrt{\frac{1}{N}{\sum\:}_{i=1}^{N}{({\text{y}}_{\text{i}}^{\text{r}\text{e}\text{a}\text{l}}-{\text{y}}_{\text{i}}^{predicted})}^{2}}\) | 5 |

Here, \(\:{\varvec{y}}_{\varvec{i}}^{\varvec{p}\varvec{r}\varvec{e}\varvec{d}\varvec{i}\varvec{c}\varvec{t}\varvec{e}\varvec{d}}\) and \(\:{\varvec{y}}_{\varvec{i}}^{\varvec{r}\varvec{e}\varvec{a}\varvec{l}}\) represent predicted and actual target values, while N denotes the total number of data points in dataset.

As per Table 1, the highest-performing models recognized are ANN, CNN, and Catboost. Table 1 validates their exceptional performance, as CNN records the highest R² values (training: 0.941, validation: 0.838, and testing: 0.914), the lowest RMSE (training: 1079, validation 1415, and testing: 1329), and the lowest MRD% (training: 3.8%, validation:4.3%, and testing: 4.6%). Likewise, ANN and Catboost show outstanding performance, exhibiting high R² metrics, minimal MSE, and relatively low MRD%, which affirms their reliability in predictive tasks.

In contrast, simpler models like Linear Regression, Decision Tree, and XGBoost demonstrate significantly lower performance metrics when compared to more advanced models. These models achieve R² values ranging from 0.70 to 0.83, which are notably lower than those of top-performing models. Additionally, they exhibit substantially higher Root Mean Squared Error (RMSE) values, varying between 1910 and 2540 indicating larger deviations from actual values.

The Decision Tree and XGBoost models, in particular, display perfect performance on the training data (R² = 1.0000, MSE = 0), but this is indicative of severe overfitting. As a result, its performance deteriorates markedly on validation and test sets, revealing poor generalization capabilities. Similarly, the KNN model demonstrates relatively acceptable R² values (0.88 for training, 0.80 for validation, and 0.79 for testing). However, its higher MRD% values in both validation (5.6%) and test sets (4%) suggest that it incurs greater relative errors compared to the leading models. These results highlight the constraints of these models in effectively capturing complex patterns within the data and emphasize the significance of selecting more sophisticated algorithms for improved predictive performance.

In summary, CNN, ANN, and Catboost emerge as the most precise and reliable frameworks for predicting the solubility parameter of diverse polymers, as evidenced by their robust performance across all evaluation metrics. Their superior performance is consistently demonstrated across all evaluation metrics, showcasing their ability to effectively manage the intricacies and fluctuations present in polymer datasets. These gradient boosting-based algorithms not only achieve high predictive accuracy but also exhibit strong generalization abilities, creating them well-suited for this challenging job. The robustness of these models regarding metrics like R², Root Mean Squared Error (RMSE), and Mean Relative Deviation (MRD%) further solidifies their position as leading choices for solubility parameter prediction in polymer science applications.

This study utilizes graphical techniques, including relative deviation values and crossplots, to evaluate the efficiency of machine learning models designed for forecasting the solubility parameter of various polymers. These visualization methods play an essential role in enhancing evaluation process by providing clear and intuitive comparisons of model performance. By plotting predicted solubility parameter values against actual values, these graphical tools facilitate a deeper understanding of the models’ accuracy and reliability. They also help identify any discrepancies or error patterns that may not be immediately apparent from numerical metrics alone.

Relative deviation values offer a quantitative measure of the disparities between forecasted and real outcomes, enabling a more nuanced evaluation of model precision. Crossplots, on the other hand, visually represent these deviations, allowing for easy identification of trends, outliers, or systematic biases in the predictions. Together, these techniques offer valuable perspectives on the strength and consistency of models, reinforcing overall assessment of their ability to accurately predict the solubility parameter of polymers across diverse datasets. This graphical approach ensures a comprehensive and reliable evaluation framework, supporting the development of effective predictive models in polymer science.

Figure 5 provides a comparative visualization of actual data points versus modeled data points based on the data point index for all developed models, covering all phases for the solubility parameters of various polymers. As depicted, the actual and predicted values for CatBoost, ANN, and CNN closely overlap, highlighting that these machine learning algorithms outperform all other methods evaluated in this study. This near-perfect alignment underscores their superior ability to generalize across different datasets.

Furthermore, Fig. 6 presents cross-plots comparing actual versus estimated values for all machine learning algorithms. A dense clustering of datapoints around the line y = x is clearly visible for CatBoost, ANN, and CNN, reinforcing their exceptional foretelling accuracy. The fitted lines derived from these cross-plot points for the three algorithms closely adhere to line y = x, indicating a strong linear correlation between real solubility values and estimations generated by these models.

These visual analyses collectively demonstrate the robustness and reliability of CatBoost, ANN, and CNN in predicting the solubility factors of diverse polymers. The high degree of correspondence between actual and predicted values not only validates exceptional performance of these algorithms but also confirms their suitability for real-world applications in polymer science and engineering.

Figure 7 presents scatter plots that show relative errors of developed algorithms in predicting the solubility parameters of various polymers. The distribution of these error values around the x-axis is particularly evident for CatBoost, ANN, and CNN models. This illustration emphasizes the slight difference between their predictions and the real solubility values, reinforcing strong alignment between the predicted and true values. Such a tight clustering of errors around zero indicates that these models exhibit high precision and accuracy in their predictions.

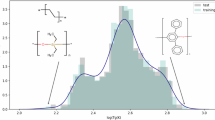

Figure 8 provides an additional layer of insight by showcasing the prediction distribution capabilities of all developed machine learning models across all phases. Notably, estimation profiles for CatBoost, ANN, and CNN show greater consistency across these phases compared to other methods. This consistency suggests that these Models excel not only on the training dataset but also generalize efficiently to unfamiliar testing and validation datasets.

The ability of CatBoost, ANN, and CNN to maintain consistent performance across different phases further validates their robustness and reliability. These findings collectively confirm that these three models are the most effective and dependable choices for estimating the solubility parameters of diverse polymers within the context of this study. Their superior performance makes them highly suitable for practical applications in polymer science and related fields.

Evaluating the importance of input features is a critical step in comprehending how different factors affect the prediction of solubility parameters for diverse polymers using machine learning models. In this research, the SHAP (Shapley Additive Explanations) method is employed to assess feature importance, offering a robust framework for interpreting the complexities of high-performance models. Rooted in game theory, the SHAP method assigns contribution values to every datapoint based on its input features, thereby enhancing algorithm interpretability at aggregate and individual levels. This approach offers important perspectives on the role of each input variable in shaping target output in this case, the solubility parameters of polymers.

Figure 9 presents the SHAP values for the input parameters and their corresponding importance, as established by the CNN model. The features are ranked from highest to lowest according to their mean SHAP values, with higher ranks indicating a greater influence on the solubility parameter estimations. The SHAP analysis identifies the dielectric constant as the most influential feature in determining the solubility parameter of polymers. This observation is consistent with the physicochemical interpretation that the dielectric constant reflects the polarity of a material. Polymers with higher dielectric constants tend to have stronger dipole–dipole interactions and enhanced compatibility with polar solvents, leading to higher solubility parameters. This relationship has been previously reported in polymer-solvent compatibility studies86,87, where higher polarity generally correlates with greater cohesive energy density. Conversely, materials with low dielectric constants exhibit weaker intermolecular interactions and thus lower solubility parameters, which the model accurately captures.

In addition to dielectric constant, other top-ranking features such as molecular weight, liquid molar volume, and melting point also contribute significantly. Molecular weight typically exhibits a negative correlation with solubility due to the increased rigidity and reduced free volume in higher molecular weight polymers, which hinders solvation14. Similarly, higher liquid molar volumes often indicate bulkier molecules, resulting in lower cohesive energy density and reduced solubility. Melting point, as an indicator of crystalline strength and intermolecular forces, tends to have a positive correlation, suggesting that polymers with higher melting points often possess stronger intermolecular cohesion, which influences their solubility characteristics12. These insights reinforce the interpretability of the model and its alignment with established physicochemical principles.

By providing a detailed breakdown of feature contributions, the SHAP method not only enhances the transparency of the Random Forest model but also offers actionable insights for improving predictive accuracy and understanding the underlying mechanisms governing polymer solubility. This analysis serves as a foundation for optimizing polymer design and processing strategies, making it an invaluable tool in the field of polymer science and engineering.

While several recent studies have employed machine learning to predict solubility parameters of polymers88,89, many of these efforts suffer from limitations such as the use of relatively small or domain-specific datasets, limited model diversity (e.g., reliance on linear models or single-layer ANNs), and a lack of interpretability analysis regarding feature importance. In contrast, the present study utilizes a significantly larger and more diverse dataset (1,799 polymers) extracted from the DIPPR 801 database, covering a broad range of physicochemical descriptors. Moreover, we implement and compare a wide variety of both classical and advanced machine learning models including CNN, CatBoost, and ANN and evaluate them comprehensively using multiple performance metrics and visualization techniques. Importantly, the incorporation of SHAP analysis allows us to go beyond predictive accuracy and offer insights into the relative importance and physical relevance of each input feature, thus improving model transparency and scientific interpretability. These advancements differentiate this work from prior studies and address common weaknesses in earlier approaches.

Conclusion

This paper is centered on development of data-driven models leveraging a wide array of machine learning algorithms to estimate solubility parameters of diverse polymers. The algorithms employed include Random Forests (RFs), Linear Regression, K-Nearest Neighbors (KNN), Convolutional Neural Networks (CNNs), Artificial Neural Networks (ANNs), Ridge Regression, Lasso Regression, Elastic Net, Support Vector Machines (SVMs), Extreme Gradient Boosting (XGBoost), Decision Trees (DTs), Light Gradient Boosting Machines (LightGBM), Gradient Boosting Machines (GBMs), and Categorical Boosting (CatBoost). These models were trained using key physicochemical descriptors such as molecular weight, melting point, liquid molar volume, radius of gyration, boiling point, dielectric constant, dipole moment, van der Waals area, refractive index and reduced volume, and parachor. To assess the effectiveness of the developed models, a combination of quantitative metrics and visualization tools was utilized. The Monte Carlo outlier detection method was utilized to guarantee the databank’s reliability, confirming that most of the information points were suitable for validation and training purposes. The superior performance of CatBoost, ANN, and CNN can be attributed to their inherent ability to capture complex, non-linear relationships and intricate feature interactions within the dataset. CatBoost, as a gradient boosting method tailored for handling categorical and numerical features, efficiently models non-linearities while mitigating overfitting through ordered boosting and advanced regularization. ANN and CNN, on the other hand, are deep learning architectures capable of learning hierarchical patterns and internal representations without explicit feature engineering. In particular, CNNs leverage local connectivity and weight sharing mechanisms that help identify spatial correlations and subtle dependencies among features. These capabilities allow the models to generalize better across the diverse physicochemical properties present in the polymer dataset, resulting in more accurate and robust predictions. Further analysis revealed that specific physicochemical properties, including boiling point, melting point, dipole moment, dielectric constant, and refractive index, significantly influence the solubility favtors of polymers. Some of these factors exhibited positive relationships with solubility, while others showed negative correlations. This finding underscores the models’ capability to successfully capture intricate and nonlinear connections present in the dataset, reinforcing their suitability for predictive applications in this domain. To enhance interpretability, SHAP (Shapley Additive Explanations) values were employed to clarify contributions of individual features to the predictions. The analysis identified the dielectric constant as the most influential factor affecting the solubility parameters of diverse polymers. This insight not only validates the robustness of the selected models but also provides deeper understanding of the critical elements influencing polymer solubility. In conclusion, this comprehensive approach not only demonstrates the effectiveness of CatBoost, ANN, and CNN in accurately predicting polymer solubility parameters but also offers valuable understanding of the fundamental processes motivating these predictions. Through the integration of sophisticated machine learning methods with interpretable feature importance analysis, this research opens the door for enhanced precision and transparency in future research, enabling more informed decisions in polymer science and engineering applications.

Data availability

The data that support the findings of this study are available from the corresponding author, Mohammad Reza Kezemi, upon reasonable request.

References

Scatchard, G. Equilibria in Non-electrolyte solutions in relation to the vapor pressures and densities of the components. Chem. Rev. 8 (2), 321–333 (1931).

Hildebrand, J. H. & Scott, R. L. The Solubility of Nonelectrolytes, Reinhold Pub3 (Co., 1950).

Hildebrand, J. H. A critique of the theory of solubility of non-electrolytes. Chem. Rev. 44 (1), 37–45 (1949).

Hansen, C. M. 50 years with solubility parameters—past and future. Prog. Org. Coat. 51 (1), 77–84 (2004).

Sun, R. & Elabd, Y. A. Synthesis and high alkaline chemical stability of polyionic liquids with methylpyrrolidinium, methylpiperidinium, methylazepanium, methylazocanium, and Methylazonanium cations. ACS Macro Lett. 8 (5), 540–545 (2019).

Barton, A. F. M. CRC Handbook of Solubility Parameters and Other Cohesion Parameters (Routledge, 2017).

Hansen, C. M. The Three Dimensional Solubility Parameter14 (Copenhagen, 1967).

Schwaighofer, A. et al. Accurate solubility prediction with error bars for electrolytes: A machine learning approach. J. Chem. Inf. Model. 47 (2), 407–424 (2007).

Cheng, T. et al. Binary classification of aqueous solubility using support vector machines with reduction and recombination feature selection. J. Chem. Inf. Model. 51 (2), 229–236 (2011).

Lozada, M., Monfort, J. P. & del Río, F. Calculation of solubility parameters from an equation of state. Chem. Phys. Lett. 45 (1), 130–133 (1977).

Panayiotou, C. Solubility parameter revisited: an equation-of-state approach for its Estimation. Fluid. Phase. Equilibria. 131 (1–2), 21–35 (1997).

Goharshadi, E. K. & Hesabi, M. Estimation of solubility parameter using equations of state. J. Mol. Liq. 113 (1–3), 125–132 (2004).

Stefanis, E., Tsivintzelis, I. & Panayiotou, C. The partial solubility parameters: an equation-of-state approach. Fluid. Phase. Equilibria. 240 (2), 144–154 (2006).

Van Krevelen, D. W., Te, K. & Nijenhuis Properties of Polymers: their Correlation with Chemical Structure; their Numerical Estimation and Prediction from Additive Group Contributions (Elsevier, 2009).

Wang, B. et al. Prediction of minimum ignition energy from molecular structure using quantitative structure–property relationship (QSPR) models. Ind. Eng. Chem. Res. 56 (1), 47–51 (2017).

Jiao, Z. et al. Review of recent developments of quantitative structure-property relationship models on fire and explosion-related properties. Process Saf. Environ. Prot. 129, 280–290 (2019).

Jiao, Z. et al. Machine Learning and Deep Learning in Chemical Health and Safety: a Systematic Review of Techniques and Applications27p. 316–334 (ACS Chemical Health & Safety, 2020). 6.

Morimoto, M. et al. Convolutional neural networks for fluid flow analysis: toward effective metamodeling and low dimensionalization. Theoret. Comput. Fluid Dyn. 35 (5), 633–658 (2021).

Yu, Y. et al. Torsional capacity evaluation of RC beams using an improved bird swarm algorithm optimised 2D convolutional neural network. Eng. Struct. 273, 115066 (2022).

Wei, S. et al. A quantum convolutional neural network on NISQ devices. AAPPS Bull. 32, 1–11 (2022).

Li, J. & Yang, H. The underwater acoustic target timbre perception and recognition based on the auditory inspired deep convolutional neural network. Appl. Acoust. 182, 108210 (2021).

Khandokar, I. et al. Handwritten Character Recognition Using Convolutional Neural Network. IOP Publishing. 1918 (4), 042152 (2021).

Lu, J. et al. Enhanced K-nearest neighbor for intelligent fault diagnosis of rotating machinery. Appl. Sci. 11 (3), 919 (2021).

Kang, S. K-nearest neighbor learning with graph neural networks. Mathematics 9 (8), 830 (2021).

Gallego, A. J., Rico-Juan, J. R. & Valero-Mas, J. J. Efficient k-nearest neighbor search based on clustering and adaptive k values. Pattern Recogn. 122, 108356 (2022).

Assegie, T. A. An optimized K-Nearest neighbor based breast cancer detection. J. Rob. Control (JRC). 2 (3), 115–118 (2021).

Lee, J. H., Shi, Z. & Gao, Z. On LASSO for predictive regression. J. Econ. 229 (2), 322–349 (2022).

Tibshirani, R. Regression shrinkage and selection via the Lasso. J. Royal Stat. Soc. Ser. B: Stat. Methodol. 58 (1), 267–288 (1996).

Hastie, T. The Elements of Statistical Learning: Data Mining, Inference, and Prediction (Springer, 2009).

Altelbany, S. Evaluation of ridge, elastic net and Lasso regression methods in precedence of multicollinearity problem: a simulation study. J. Appl. Econ. Bus. Stud. 5 (1), 131–142 (2021).

Gholami, H. et al. Modeling land susceptibility to wind erosion hazards using LASSO regression and graph convolutional networks. Front. Environ. Sci. 11, 1187658 (2023).

Ghiasi, M. M. & Zendehboudi, S. Application of decision tree-based ensemble learning in the classification of breast cancer. Comput. Biol. Med. 128, 104089 (2021).

Schidler, A. & Szeider, S. SAT-based decision tree learning for large data sets. J. Artif. Intell. Res. 80, 875–918 (2024).

Ren, Q. et al. Lithology identification using principal component analysis and particle swarm optimization fuzzy decision tree. J. Petrol. Sci. Eng. 220, 111233 (2023).

Qin, Z. et al. Are neural rankers still outperformed by gradient boosted decision trees?.

Breiman, L. Classification and Regression Trees (Wadsworth International Group, 1984).

Rokach, L. & Maimon, O. Top-down induction of decision trees classifiers-a survey. IEEE Trans. Syst. Man. Cybernetics Part. C (Applications Reviews). 35 (4), 476–487 (2005).

Patel, R. K. et al. A Logistic Regression and Decision Tree Based Hybrid Approach to Predict Alzheimer’s Disease. 2023 International Conference on Computational Intelligence and Sustainable Engineering Solutions (CISES). IEEE, 2023.

Alita, D., Putra, A. D. & Darwis, D. Analysis of classic assumption test and multiple linear regression coefficient test for employee structural office recommendation. IJCCS (Indonesian J. Comput. Cybernetics Systems). 15 (3), 295–306 (2021).

Goldsmith-Pinkham, P., Hull, P. & Kolesár, M. Contamination bias in linear regressions. Am. Econ. Rev. 114 (12), 4015–4051 (2024).

Rahimov, N. & Dilmurod, K. The application of multiple linear regression algorithm and python for crop yield prediction in agriculture. Harv. Educational Sci. Rev., 2(1). (2022).

Guo, L. et al. Data-driven power flow calculation method: A lifting dimension linear regression approach. IEEE Trans. Power Syst. 37 (3), 1798–1808 (2021).

Cheshmehzangi, A. et al. A hierarchical study for urban statistical indicators on the prevalence of COVID-19 in Chinese City clusters based on multiple linear regression (MLR) and polynomial best subset regression (PBSR) analysis. Sci. Rep. 12 (1), p1964 (2022).

Zhou, J. et al. Developing a hybrid model of Jaya algorithm-based extreme gradient boosting machine to estimate blast-induced ground vibrations. Int. J. Rock Mech. Min. Sci. 145, 104856 (2021).

Rawat, R. et al. Analysis of Darknet Traffic for Criminal Activities Detection Using TF-IDF and Light Gradient Boosted Machine Learning Algorithm. Innovations in electrical and electronic engineering: Proceedings of ICEEE 2021, Springer Singapore. 671-681 (2021).

Osman, M. et al. Ml-lgbm: A machine learning model based on light gradient boosting machine for the detection of version number attacks in rpl-based networks. IEEE Access. 9, 83654–83665 (2021).

Bibault, J. E., Chang, D. T. & Xing, L. Development and validation of a model to predict survival in colorectal cancer using a gradient-boosted machine. Gut 70 (5), 884–889 (2021).

Perez, B. C. et al. Prediction performance of linear models and gradient boosting machine on complex phenotypes in outbred mice. G3 12 (4), jkac039 (2022).

Gaye, B., Zhang, D. & Wulamu, A. Improvement of support vector machine algorithm in big data background. Math. Probl. Eng. 2021 (1), 5594899 (2021).

Zhang, H. et al. Distance-based support vector machine to predict DNA N6-methyladenine modification. Curr. Bioinform. 17 (5), 473–482 (2022).

Rodríguez-Pérez, R. & Bajorath, J. Evolution of support vector machine and regression modeling in chemoinformatics and drug discovery. J. Comput. Aided Mol. Des. 36 (5), 355–362 (2022).

Li, C. et al. Stability prediction of hard rock pillar using support vector machine optimized by three metaheuristic algorithms. Int. J. Min. Sci. Technol. 33 (8), 1019–1036 (2023).

Jun, Z. The Development and Application of Support Vector Machine. Journal of Physics: Conference Series, IOP Publishing. 1748 (5), 052006 (2021).

Dasi, H., Ying, Z. & Yang, B. Predicting the consumed heating energy at residential buildings using a combination of categorical boosting (CatBoost) and Meta heuristics algorithms. J. Building Eng. 71, 106584 (2023).

Rondinella, F. et al. Improved predictions of asphalt concretes’ dynamic modulus and phase angle using decision-tree based categorical boosting model. Constr. Build. Mater. 400, 132709 (2023).

Hussain, S. et al. A novel feature engineered-CatBoost-based supervised machine learning framework for electricity theft detection. Energy Rep. 7, 4425–4436 (2021).

Wang, Y. Personality Type Prediction Using Decision Tree, Gbdt, and Cat Boost. 2022 International conference on big data, information and computer network (BDICN), IEEE. 552-558 (2022).

Kim, J. S. Covid-19 prediction and detection using machine learning algorithms: catboost and linear regression. Am. J. Theoretical Appl. Stat. 10 (5), 208 (2021).

Lalwani, P. & Ramasamy, G. Hybrid Principal Component Analysis Using Boosting Classification Techniques: Categorical Boosting. International Conference on Data Science and Applications, Springer Nature Singapore. 229-242 (2023).

Linka, K. & Kuhl, E. A new family of constitutive artificial neural networks towards automated model discovery. Comput. Methods Appl. Mech. Eng. 403, 115731 (2023).

Indira, D. et al. [Retracted] improved artificial neural network with state order dataset Estimation for brain Cancer cell diagnosis. Biomed. Res. Int. 2022 (1), 7799812 (2022).

Kurani, A. et al. A comprehensive comparative study of artificial neural network (ANN) and support vector machines (SVM) on stock forecasting. Annals Data Sci. 10 (1), 183–208 (2023).

Liu, Q. et al. Prediction of chloride diffusivity in concrete using artificial neural network: modelling and performance evaluation. Constr. Build. Mater. 268, 121082 (2021).

Jawad, J., Hawari, A. H. & Zaidi, S. J. Artificial neural network modeling of wastewater treatment and desalination using membrane processes: A review. Chem. Eng. J. 419, 129540 (2021).

Ali, Z. A. et al. eXtreme gradient boosting algorithm with machine learning: A review. Acad. J. Nawroz Univ. 12 (2), 320–334 (2023).

Montomoli, J. et al. Machine learning using the extreme gradient boosting (XGBoost) algorithm predicts 5-day delta of SOFA score at ICU admission in COVID-19 patients. J. Intensive Med. 1 (02), 110–116 (2021).

Liu, Y. et al. Measuring distance using ultra-wideband radio technology enhanced by extreme gradient boosting decision tree (XGBoost). Autom. Constr. 126, 103678 (2021).

Haumahu, J. P., Permana, S. D. H. & Yaddarabullah, Y. Fake News Classification for Indonesian News Using Extreme Gradient Boosting (XGBoost). IOP Conference Series: Materials Science and Engineering, IOP Publishing. 1098 (5), 052081 (2021).

Saldanha, M. et al. Configurable fast block partitioning for VVC intra coding using light gradient boosting machine. IEEE Trans. Circuits Syst. Video Technol. 32 (6), 3947–3960 (2021).

Rufo, D. D. et al. Diagnosis of diabetes mellitus using gradient boosting machine (LightGBM). Diagnostics 11 (9), 1714 (2021).

Alghushairy, O. et al. Machine learning-based model for accurate identification of druggable proteins using light extreme gradient boosting. J. Biomol. Struct. Dynamics. 42 (22), 12330–12341 (2024).

Sanders, W. et al. Data-driven flood alert system (FAS) using extreme gradient boosting (XGBoost) to forecast flood stages. Water 14 (5), 747 (2022).

Ahrens, A., Hansen, C. & Schaffer, M. LASSOPACK: Stata module for lasso, square-root lasso, elastic net, ridge, adaptive lasso estimation and cross-validation. (2024).

Ayllón, S., Brugarolas, P. & Lado, S. New estimates for inequality of opportunity in Europe using elastic net algorithms. Appl. Econ. Lett., : pp. 1–5. (2024).

Alharthi, A. M. EMBEDDED FEATURE SELECTION METHODS WITH HIGH DIMENSIONALITY FOR ELASTIC NET AND LOGISTIC REGRESSION MODELS. (2022).

La Tour, T. D. et al. Feature-space selection with banded ridge regression. NeuroImage 264, 119728 (2022).

Abdulhafedh, A. Comparison between common statistical modeling techniques used in research, including: discriminant analysis vs logistic regression, ridge regression vs LASSO, and decision tree vs random forest. Open. Access. Libr. J. 9 (2), 1–19 (2022).

Patil, P. et al. Uniform Consistency of cross-validation Estimators for high-dimensional Ridge Regression. International conference on artificial intelligence and statistics, PMLR. 3178-3186 (2021).

Bai, J. et al. Multinomial random forest. Pattern Recogn. 122, 108331 (2022).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Hastie, T., Tibshirani, R. & Friedman, J. The elements of statistical learning. Citeseer. (2009).

Wu, Y. & Chang, Y. Ransomware Detection on Linux Using Machine Learning with Random Forest Algorithm (Authorea Preprints, 2024).

He, S. et al. Predictive modeling of groundwater nitrate pollution and evaluating its main impact factors using random forest. Chemosphere 290, 133388 (2022).

Zhou, J., Huang, S. & Qiu, Y. Optimization of random forest through the use of MVO, GWO and MFO in evaluating the stability of underground entry-type excavations. Tunn. Undergr. Space Technol. 124, 104494 (2022).

Bloxham, J. C. et al. Proper use of the DIPPR 801 database for creation of models, methods, and processes. J. Chem. Eng. Data. 66 (1), 3–10 (2020).

Hansen, C. M. Hansen solubility parameters: a user’s handbook (CRC, 2007).

Barton, A. F. CRC Handbook of Solubility Parameters and Other Cohesion Parameters (Routledge, 2017).

Chi, M. et al. Atomistic descriptors for machine learning models of solubility parameters for small molecules and polymers. Polymers 14 (1), 26 (2021).

Ethier, J. G. et al. Predicting phase behavior of linear polymers in solution using machine learning. Macromolecules 55 (7), 2691–2702 (2022).

Acknowledgements

The authors express their gratitude to the Deanship of Scientific Research at Imam Mohammad Ibn Saud Islamic University (IMSIU) for supporting this research project (grant number IMSIU-DDRSP2502).

Funding

This research received financial support from the Deanship of Scientific Research at Imam Mohammad Ibn Saud Islamic University (IMSIU) (grant number IMSIU-DDRSP2502).

Author information

Authors and Affiliations

Contributions

Raouf Hassan: Data curation management, Modeling, Data curation, Drafted the initial manuscript, Reviewed and edited the manuscript.Mohammad Reza Kazemi: Conceptualization, Analyses, Reviewed and edited the manuscript, Supervision.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Authors contribution statement

Raouf Hassan: Data management curation, Modeling, Curation of data, Created the first draft of the manuscript, Evaluated and revised the manuscript.

Mohammad Reza Kazemi: Conceptualization, Analysis, Manuscript review and editing, Oversight.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Hassan, R., Kazemi, M.R. Data-driven frameworks to robustly predict solubility parameter of diverse polymers. Sci Rep 15, 31157 (2025). https://doi.org/10.1038/s41598-025-12758-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-12758-1