Abstract

Minimally invasive adenocarcinoma (MIA) and invasive adenocarcinoma (IAC) require distinct treatment strategies and carry different prognoses, so accurate preoperative differentiation is important. This study aimed to develop a predictive model that combines radiomics and deep learning to distinguish MIA from IAC. In this retrospective study, 252 pathologically confirmed cases of ground-glass nodules (GGNs) were included, with 177 allocated to the training set and 75 to the testing set. Radiomics, 2D deep learning, and 3D deep learning models were constructed from CT images. In addition, two fusion strategies were used to integrate these modalities: early fusion, which concatenates features from all modalities before classification, and late fusion, which ensembles the output probabilities of the individual models. The predictive performance of all five models was evaluated using the area under the receiver operating characteristic curve (AUC), and DeLong's test was used to compare AUCs between models. In the testing set, the radiomics model achieved an AUC of 0.794 (95% CI: 0.684–0.898), while the 2D and 3D deep learning models achieved AUCs of 0.754 (95% CI: 0.594–0.882) and 0.847 (95% CI: 0.724–0.945), respectively. Among the fusion models, the late fusion model achieved the highest AUC, 0.898 (95% CI: 0.784–0.962), compared with 0.857 (95% CI: 0.731–0.936) for the early fusion model. Although the differences were not statistically significant, the late fusion model yielded the highest numerical values for diagnostic accuracy, sensitivity, and specificity across all models. Fusing radiomics and deep learning features shows potential for improving the differentiation of MIA and IAC in GGNs, and the late fusion strategy warrants further validation in larger, multicenter studies.


Introduction

The increasing use of chest CT in clinical practice has led to a growing number of detected ground-glass nodules (GGNs)1,2. Persistent GGNs, defined as those persisting for more than three months, frequently correspond to pre-invasive lesions, ranging from atypical adenomatous hyperplasia (AAH) to adenocarcinoma in situ (AIS), or to early-stage lung adenocarcinoma, including minimally invasive adenocarcinoma (MIA) and invasive adenocarcinoma (IAC)3. Surgical intervention is typically unnecessary for AAH, whereas complete resection of AIS and MIA is associated with a 100% ten-year disease-free survival rate4. In contrast, IAC often requires more extensive resection because of its invasive nature and has a less favorable prognosis than AIS and MIA5,6. Accurate preoperative prediction of GGN pathology is therefore essential for optimizing treatment strategies in patients with pulmonary nodules.

For GGNs associated with lung adenocarcinoma, direct surgical intervention remains the primary treatment approach. Traditionally, clinicians have relied on imaging features such as pleural indentation, lobulation, and tumor density to assess invasiveness, a process that depends heavily on clinical expertise and diagnostic experience7,8. However, because these lesions combine high malignant potential with indolent growth, such conventional assessments may lead to overdiagnosis or overtreatment. In recent years, with the growing application of artificial intelligence in medical research, radiomics (Rad) and deep learning (DL) have shown great potential in disease diagnosis, molecular profiling, and treatment response prediction9,10,11. Machine learning (ML) and deep learning algorithms are also increasingly applied to microscopic images of cells and tissues, enabling accurate cell detection, segmentation, and phenotype classification across a range of diseases12, which illustrates the expanding role of deep learning in biomedical image analysis beyond conventional radiology. Radiomics quantifies high-dimensional handcrafted features beyond human visual perception, whereas deep learning algorithms automatically extract hierarchical representations from imaging data; both offer enhanced diagnostic and prognostic capabilities13,14. Previous studies have demonstrated that radiomics and deep learning can effectively predict the invasiveness of early lung cancer15,16. However, most existing research relies on a single approach, using radiomics, 2D deep learning, or 3D deep learning alone. Recent findings indicate that integrating radiomics and deep learning features, leveraging their complementary strengths, enhances predictive accuracy17,18. We therefore hypothesized that multi-model fusion would outperform single-model approaches in predicting early invasive lung adenocarcinoma.

Accordingly, this study aims to compare the predictive performance of radiomics, 2D and 3D deep learning models, and fusion strategies in identifying early invasive lung adenocarcinoma, with the goal of providing clinicians with a valuable reference for accurate differentiation.

Methods

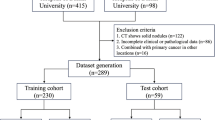

CT images from 252 patients who underwent surgery for suspected invasive lung adenocarcinoma at the First People's Hospital of Yancheng between January and December 2021 were reviewed in this retrospective study. All scans were acquired using a Siemens Somatom Definition AS + Biograph 64 scanner, with a tube voltage of 120 kVp and a tube current ranging from 150 to 250 mA. Imaging data were retrieved from the hospital's Picture Archiving and Communication System (PACS). Patients were included if they: (1) had a preoperative thin-slice chest CT (≤ 1 mm) within two weeks before surgery; (2) presented with a lung nodule with a maximum diameter of ≤ 3 cm, as in previous studies19,20; (3) had pure or mixed ground-glass nodules on CT, with a solid component ratio between 0% and 50%; (4) were staged as clinical N0 (cN0), with no evidence of distant metastasis or multiple cancers on clinical examination, ultrasonography, and CT imaging; (5) underwent surgery as their initial intervention without prior neoadjuvant therapy; and (6) had a pathological diagnosis of MIA or IAC. Patients were excluded if they: (1) had pathological findings of precursor lesions or histology other than lung adenocarcinoma; (2) had poor-quality CT images; or (3) had received prior treatment or undergone needle biopsy.

Stratified random sampling was used to allocate the 252 patients to training and testing sets at a 7:3 ratio, with each set containing approximately 60% IAC cases. Figure 1 shows the study workflow. This study was conducted in accordance with the Declaration of Helsinki and was approved by the Ethics Committee of the First People's Hospital of Yancheng (Approval No. 2024-K-101). Because of the retrospective nature of the study, the requirement for informed consent was waived by the same committee.
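A 7:3 stratified split of this kind can be sketched with scikit-learn's `train_test_split`. The example is illustrative only: the label vector is built from the class counts reported in the Results section (187 MIA, 65 IAC), the patient identifiers are placeholders, and the random seed is arbitrary, so it will not reproduce the study's actual allocation.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Labels built from the class counts in the Results section (0 = MIA, 1 = IAC).
y = np.array([0] * 187 + [1] * 65)
patient_ids = np.arange(len(y))  # placeholder identifiers, not real patient IDs

# An integer test_size gives exactly 75 test patients (177 remain for training);
# stratify=y keeps the IAC proportion approximately equal in both sets.
train_ids, test_ids, y_train, y_test = train_test_split(
    patient_ids, y, test_size=75, stratify=y, random_state=42  # arbitrary seed
)
print(len(train_ids), len(test_ids), round(y_train.mean(), 3), round(y_test.mean(), 3))
```

Passing the exact test-set size as an integer, rather than `test_size=0.3`, avoids rounding 0.3 × 252 = 75.6 up to 76 test patients.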

Fig. 1. Flowchart of the predictive models. Rad model: Radiomics model, 2D_DL model: 2D deep learning model, 3D_DL model: 3D deep learning model, AUC: area under the receiver operating characteristic curve, LR: Logistic Regression, SVM: Support Vector Machine, KNN: k-Nearest Neighbors, ID3: Iterative Dichotomiser 3, RF: Random Forest, XGBoost: eXtreme Gradient Boosting, LightGBM: Light Gradient Boosting Machine.

All patients underwent multi-slice spiral CT in the supine position, with the scan covering the entire lung field. All CT images were standardized to a lung window (window width 1500 HU, window level −600 HU) and then resampled to an isotropic voxel size of 1 mm × 1 mm × 1 mm using bicubic spline interpolation. Two radiologists (with 12 and 25 years of experience in chest CT, respectively) independently interpreted the scans while blinded to patients' clinical information, including pathological findings. For uncertain or suspicious nodules, the radiologists discussed the case and reached a consensus on its classification as a GGN. Regions of interest (ROIs) were manually segmented using ITK-SNAP software (version 4.2.0, http://www.itksnap.org). To assess the reliability and consistency of ROI delineation, both radiologists independently delineated each lesion twice (at the initial assessment and two months later), and agreement was evaluated with the intraclass correlation coefficient (ICC).
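The intensity standardization and resampling steps above can be sketched with NumPy and SciPy. This is a minimal illustration, not the study's pipeline. The window bounds follow directly from the stated level and width (−600 ± 750, that is −1350 to 150 HU). Rescaling to [0, 1] and the use of `scipy.ndimage.zoom` with `order=3` (cubic spline) are our assumptions, and the voxel spacing in the demo is hypothetical.

```python
import numpy as np
from scipy import ndimage

def window_ct(hu, level=-600.0, width=1500.0):
    """Clip Hounsfield units to the lung window and rescale to [0, 1]."""
    lower, upper = level - width / 2, level + width / 2  # -1350 HU to 150 HU
    return (np.clip(hu, lower, upper) - lower) / (upper - lower)

def resample_isotropic(volume, spacing_zyx, new_spacing=1.0, order=3):
    """Resample a (z, y, x) volume to isotropic voxels; order=3 is cubic spline.

    Cubic splines can overshoot slightly outside [0, 1]; clip again if needed.
    """
    zoom = np.asarray(spacing_zyx, dtype=float) / new_spacing
    return ndimage.zoom(volume, zoom, order=order)

# Synthetic demo: 40 slices of 0.8 mm with 0.7 mm in-plane pixels (hypothetical spacing).
rng = np.random.default_rng(0)
hu = rng.uniform(-1000, 400, size=(40, 64, 64))
img = resample_isotropic(window_ct(hu), spacing_zyx=(0.8, 0.7, 0.7))
print(img.shape)  # (32, 45, 45)
```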

Radiomics model

Pyradiomics is an open-source Python package for extracting radiomic features from medical images21. These features include first-order statistics, shape descriptors, and texture features derived from matrices such as the Gray Level Co-occurrence Matrix (GLCM) and the Gray Level Run Length Matrix (GLRLM). In this study, Pyradiomics was used to extract a total of 1,834 features, which were standardized with Z-score normalization. To reduce redundancy and ensure feature stability, features that were highly correlated (Pearson correlation coefficient > 0.9) or poorly reproducible (ICC < 0.75) were excluded. The remaining features were further refined with Least Absolute Shrinkage and Selection Operator (LASSO) regression using 10-fold cross-validation, and 17 features were ultimately retained to construct the predictive model.
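The feature-reduction sequence described above (Z-score normalization, ICC and correlation filtering, then 10-fold cross-validated LASSO) can be sketched with scikit-learn. The sketch assumes the ICC values have already been computed from the repeated segmentations and are passed in as an array. The greedy rule that keeps the first feature of each highly correlated pair is also an assumption, because the exact tie-breaking rule is not reported. Feature extraction itself would use Pyradiomics (`radiomics.featureextractor.RadiomicsFeatureExtractor`) and is omitted; synthetic data stand in for the extracted features.

```python
import numpy as np
from sklearn.linear_model import LassoCV
from sklearn.preprocessing import StandardScaler

def drop_correlated(X, threshold=0.9):
    """Greedy filter: keep a column only if |r| <= threshold against every column kept so far."""
    corr = np.abs(np.corrcoef(X, rowvar=False))
    keep = []
    for j in range(X.shape[1]):
        if all(corr[j, k] <= threshold for k in keep):
            keep.append(j)
    return np.array(keep, dtype=int)

def select_features(X, y, icc, icc_min=0.75, corr_max=0.9):
    """Z-score -> ICC filter -> correlation filter -> 10-fold LassoCV; returns column indices."""
    Xz = StandardScaler().fit_transform(X)        # fit on the training set only
    stable = np.flatnonzero(icc >= icc_min)       # reproducible features
    candidates = stable[drop_correlated(Xz[:, stable], corr_max)]
    lasso = LassoCV(cv=10).fit(Xz[:, candidates], y)
    return candidates[lasso.coef_ != 0]           # features with non-zero LASSO weight

# Synthetic demo: 177 training patients, 40 features (placeholders for the 1,834).
rng = np.random.default_rng(0)
n, p = 177, 40
X = rng.normal(size=(n, p))
X[:, 1] = X[:, 0] + 0.01 * rng.normal(size=n)    # near-duplicate of column 0
y = (X[:, 0] + X[:, 2] + 0.5 * rng.normal(size=n) > 0).astype(float)
icc = rng.uniform(0.5, 1.0, size=p)               # placeholder ICC values
icc[[0, 2]] = 0.95
selected = select_features(X, y, icc)
print(selected)
```

The fitted scaler, filters, and LASSO coefficients would then be applied unchanged to the testing set, so that no testing-set information influences feature selection.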

Deep learning model

In this study, we developed a 2D diagnostic model based on the ResNet50 architecture to extract features from patient images. For each patient, the axial slice with the maximum lesion cross-section was selected together with the two slices above and the two slices below it, forming a 5-channel input. All images were resampled to 256 × 256 pixels using linear interpolation, augmented, and then randomly cropped to a final input size of 224 × 224 pixels. Consistent with previous studies, we used a ResNet50 model pre-trained on the ImageNet dataset and fine-tuned it on our training set17,22,23. Training used the cross-entropy loss and the Adam optimizer with a learning rate of 0.001 and a batch size of 32, and L2 regularization and early stopping were applied to mitigate overfitting. After training, a 2048-dimensional feature vector was extracted for each patient from the penultimate average pooling layer (avgpool) and reduced to 128 principal components using principal component analysis (PCA). Following the same selection procedure as for the Pyradiomics features, 8 features were ultimately selected for constructing the predictive model.
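Construction of the 5-channel 2D input can be sketched as follows. The sketch covers only slice selection, linear resizing, and random cropping; ResNet50 fine-tuning is omitted. Two details are assumptions not stated in the text: slice indices are clipped at the volume boundary when the largest cross-section lies within two slices of the edge, and the crop position is drawn uniformly at random. An ImageNet-pretrained ResNet50 expects 3-channel input, so a 5-channel input also requires adapting the first convolutional layer; how this was done is not reported.

```python
import numpy as np
from scipy import ndimage

def five_slice_input(volume, mask, out_size=256, crop=224, rng=None):
    """Build a 5-channel 2D input centered on the axial slice with the largest lesion area.

    volume, mask: arrays shaped (slices, height, width); mask is the binary lesion ROI.
    """
    areas = mask.reshape(mask.shape[0], -1).sum(axis=1)
    center = int(np.argmax(areas))
    # Two slices above and two below; indices are clipped at the volume edge (an assumption).
    idx = np.clip(np.arange(center - 2, center + 3), 0, volume.shape[0] - 1)
    stack = volume[idx].astype(float)  # shape (5, H, W)
    h, w = stack.shape[1:]
    # Linear interpolation (order=1) to out_size x out_size; the channel axis is not zoomed.
    resized = ndimage.zoom(stack, (1, out_size / h, out_size / w), order=1)
    if rng is None:
        rng = np.random.default_rng()
    # Random crop, standing in for training-time augmentation.
    top, left = rng.integers(0, out_size - crop + 1, size=2)
    return resized[:, top:top + crop, left:left + crop]

# Synthetic demo: 30 slices of 80 x 80 pixels with a lesion that is largest on slice 12.
rng = np.random.default_rng(0)
volume = rng.normal(size=(30, 80, 80))
mask = np.zeros(volume.shape, dtype=np.uint8)
mask[10:15, 30:40, 30:40] = 1
mask[12, 25:45, 25:45] = 1
x = five_slice_input(volume, mask, rng=rng)
print(x.shape)  # (5, 224, 224)
```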

Compared with 2D approaches, 3D models capture the full spatial depth of anatomical structures, providing a more comprehensive representation of spatial information. Given the limited availability of pre-trained 3D models in the medical domain, we adopted a ResNet50 model pre-trained with Med3D as the backbone of our 3D model. Med3D provides a variety of 3D deep learning models pre-trained on a large-scale aggregated medical imaging dataset for common tasks such as classification, segmentation, and detection, and is designed to improve the efficiency and accuracy of 3D medical image analysis through transfer learning24. Data augmentation was applied to each patient's 3D ROI, defined as a manually delineated bounding cube enclosing the tumor. The ROI was then resampled with linear interpolation to a standardized input size of 64 × 64 × 64 voxels. To adapt the pre-trained 3D ResNet50 to our classification task, its decoder layers were replaced with fully connected layers. The model was trained for 100 epochs using the cross-entropy loss and the Adam optimizer with a learning rate of 0.001 and a batch size of 16. After training, 2048-dimensional features were extracted from the penultimate average pooling layer (avgpool) and reduced to 128 principal components using PCA. Following the same selection process as for the radiomics features, 7 key features were ultimately retained to construct the predictive model. To improve the interpretability of the deep learning models, Gradient-weighted Class Activation Mapping (Grad-CAM) was used to visualize the regions contributing to classification decisions.
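The 3D input preparation and the PCA step shared by both deep learning branches can be sketched as below. In the study, the bounding cube was drawn manually. Here, as a hypothetical stand-in, the cube is derived from the bounding box of a binary lesion mask with a two-voxel margin, and edge padding handles lesions near the volume border. The 2048-dimensional deep features are replaced by random placeholders, since the Med3D ResNet50 itself is not reproduced.

```python
import numpy as np
from scipy import ndimage
from sklearn.decomposition import PCA

def cube_roi(volume, mask, size=64, margin=2):
    """Crop a cube around the lesion bounding box and linearly resample it to size^3 voxels."""
    zz, yy, xx = np.nonzero(mask)
    lo = np.array([zz.min(), yy.min(), xx.min()])
    hi = np.array([zz.max(), yy.max(), xx.max()])
    center = (lo + hi) // 2
    # Half-side covers the largest lesion extent plus a margin (hypothetical choice).
    half = int((hi - lo).max()) // 2 + margin
    padded = np.pad(volume, half, mode="edge")  # avoids running off the volume border
    c = center + half                           # center in padded coordinates
    cube = padded[c[0] - half:c[0] + half + 1,
                  c[1] - half:c[1] + half + 1,
                  c[2] - half:c[2] + half + 1]
    return ndimage.zoom(cube.astype(float), size / cube.shape[0], order=1)  # linear

def reduce_deep_features(train_feats, test_feats, n_components=128):
    """Fit PCA on training-set deep features only, then project both sets."""
    pca = PCA(n_components=n_components).fit(train_feats)
    return pca.transform(train_feats), pca.transform(test_feats)

# Synthetic demo with a placeholder volume and lesion mask.
rng = np.random.default_rng(0)
volume = rng.normal(size=(40, 100, 100))
mask = np.zeros(volume.shape, dtype=bool)
mask[18:24, 40:55, 45:52] = True
cube = cube_roi(volume, mask)
train_z, test_z = reduce_deep_features(rng.normal(size=(177, 2048)), rng.normal(size=(75, 2048)))
print(cube.shape, train_z.shape, test_z.shape)  # (64, 64, 64) (177, 128) (75, 128)
```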

Fusion model and statistical analysis

To assess the predictive effectiveness of features extracted from radiomics and deep learning for invasive lung adenocarcinoma, we trained and evaluated seven classifiers: Logistic Regression (LR), Support Vector Machine (SVM), k-Nearest Neighbors (KNN), Iterative Dichotomiser 3 (ID3), Random Forest (RF), eXtreme Gradient Boosting (XGBoost), and Light Gradient Boosting Machine (LightGBM). Each classifier was evaluated using ten-fold cross-validation on the training set. Based on these cross-validation results, SVM demonstrated the best overall performance and was selected as the final classifier.
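The ten-fold cross-validated comparison of classifiers can be sketched with scikit-learn. This illustrative sketch uses synthetic data and default hyperparameters. XGBoost and LightGBM are left out to keep it dependency-free; their scikit-learn wrappers (`xgboost.XGBClassifier`, `lightgbm.LGBMClassifier`) could be added to the dictionary. scikit-learn implements CART, so the entropy-criterion decision tree only approximates ID3.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

CANDIDATES = {
    "LR": LogisticRegression(max_iter=1000),
    "SVM": SVC(),  # roc_auc scoring can use decision_function, so probability=True is not needed
    "KNN": KNeighborsClassifier(),
    # Entropy (information-gain) splits on a CART tree: an approximation of ID3.
    "ID3": DecisionTreeClassifier(criterion="entropy", random_state=0),
    "RF": RandomForestClassifier(random_state=0),
}

def compare_classifiers(X, y, seed=0):
    """Mean ten-fold cross-validated AUC for each candidate classifier."""
    cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=seed)
    return {
        name: cross_val_score(make_pipeline(StandardScaler(), clf), X, y,
                              cv=cv, scoring="roc_auc").mean()
        for name, clf in CANDIDATES.items()
    }

# Synthetic demo: 177 training patients with 17 placeholder features.
rng = np.random.default_rng(0)
X = rng.normal(size=(177, 17))
y = (X[:, 0] - X[:, 1] + 0.5 * rng.normal(size=177) > 0).astype(int)
aucs = compare_classifiers(X, y)
best = max(aucs, key=aucs.get)
print({k: round(v, 3) for k, v in aucs.items()}, best)
```

Selecting the classifier from training-set cross-validation alone, as the text describes, keeps the testing set untouched until the final evaluation.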

Additionally, we developed two fusion strategies: feature-level fusion (early fusion) and decision-level fusion (late fusion). In the early fusion approach, features extracted from the radiomics, 2D deep learning, and 3D deep learning models were concatenated into a unified feature set, followed by the construction of an SVM-based classifier. In the late fusion strategy, SVM-based stacking ensemble learning was used to integrate the output probabilities of the three individual models.
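The two fusion strategies can be sketched as follows, assuming each modality's selected features are available as separate arrays (17 radiomic, 8 2D, and 7 3D features in this study; random placeholders here). Early fusion concatenates the arrays and fits a single SVM. For late fusion, this sketch trains the SVM meta-learner on out-of-fold probabilities from five-fold cross-validation, a common way to build a stacking ensemble without label leakage. The study does not report how its stacking was configured, so treat the fold count and SVM settings as assumptions.

```python
import numpy as np
from sklearn.model_selection import cross_val_predict
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

def make_svm():
    """Scaled SVM with Platt-scaled probabilities (settings are assumptions)."""
    return make_pipeline(StandardScaler(), SVC(probability=True, random_state=0))

def early_fusion(train_blocks, y_train, test_blocks):
    """Feature-level fusion: concatenate all modality features, then fit one SVM."""
    clf = make_svm().fit(np.hstack(train_blocks), y_train)
    return clf.predict_proba(np.hstack(test_blocks))[:, 1]

def late_fusion(train_blocks, y_train, test_blocks, cv=5):
    """Decision-level fusion: stack per-modality SVM probabilities into an SVM meta-learner."""
    meta_train, meta_test = [], []
    for X_tr, X_te in zip(train_blocks, test_blocks):
        base = make_svm()
        # Out-of-fold probabilities keep the meta-learner from seeing over-fitted training outputs.
        meta_train.append(cross_val_predict(base, X_tr, y_train, cv=cv, method="predict_proba")[:, 1])
        meta_test.append(base.fit(X_tr, y_train).predict_proba(X_te)[:, 1])
    meta = make_svm().fit(np.column_stack(meta_train), y_train)
    return meta.predict_proba(np.column_stack(meta_test))[:, 1]

# Synthetic demo: three feature blocks sharing a latent signal.
rng = np.random.default_rng(1)
n_tr, n_te = 177, 75
latent = rng.normal(size=n_tr + n_te)
y = (latent + 0.8 * rng.normal(size=n_tr + n_te) > 0).astype(int)
blocks = [latent[:, None] + rng.normal(size=(n_tr + n_te, d)) for d in (17, 8, 7)]
train, test = [b[:n_tr] for b in blocks], [b[n_tr:] for b in blocks]
p_early = early_fusion(train, y[:n_tr], test)
p_late = late_fusion(train, y[:n_tr], test)
```

The meta-learner in late fusion sees only three columns (one probability per modality), which illustrates the lower dimensionality of decision-level fusion compared with the 32 concatenated features used by early fusion.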

The performance of all models was evaluated using receiver operating characteristic (ROC) curves, confusion matrices, and diagnostic metrics, such as the area under the ROC curve (AUC), accuracy, and specificity. Comparisons of AUCs between models were conducted using the DeLong test. Confidence intervals (95%) and standard errors for the diagnostic metrics were calculated through 1,000 bootstrap resampling iterations. A two-sided P-value < 0.05 was considered statistically significant. All statistical analyses were performed using R (version 4.4.1) and Python (version 3.12.5).
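The DeLong comparison and the percentile bootstrap can also be sketched with NumPy and SciPy. This compact re-implementation of the DeLong variance estimate for two AUCs computed on the same patients is not the R code used in the study. The bootstrap skips resamples that contain only one class, which is one of several reasonable conventions.

```python
import numpy as np
from scipy.stats import norm

def _placements(pos, neg):
    """DeLong structural components: mean Mann-Whitney kernel per positive and per negative."""
    diff = pos[:, None] - neg[None, :]
    psi = (diff > 0) + 0.5 * (diff == 0)
    return psi.mean(axis=1), psi.mean(axis=0)

def auc(y, p):
    """Rank-based AUC (equivalent to the Mann-Whitney statistic)."""
    y, p = np.asarray(y).astype(bool), np.asarray(p)
    return _placements(p[y], p[~y])[0].mean()

def delong_test(y, p1, p2):
    """Two-sided DeLong test for two correlated AUCs; returns (auc1, auc2, z, p-value)."""
    y = np.asarray(y).astype(bool)
    v10, v01 = zip(*(_placements(np.asarray(p)[y], np.asarray(p)[~y]) for p in (p1, p2)))
    aucs = np.array([v.mean() for v in v10])
    m, n = y.sum(), (~y).sum()
    s = np.cov(np.vstack(v10)) / m + np.cov(np.vstack(v01)) / n  # 2 x 2 covariance of the AUCs
    z = (aucs[0] - aucs[1]) / np.sqrt(s[0, 0] + s[1, 1] - 2 * s[0, 1])
    return aucs[0], aucs[1], z, 2 * norm.sf(abs(z))

def bootstrap_auc_ci(y, p, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the AUC with n_boot patient-level resamples."""
    y, p = np.asarray(y), np.asarray(p)
    rng = np.random.default_rng(seed)
    stats = []
    for _ in range(n_boot):
        idx = rng.integers(0, len(y), len(y))
        if y[idx].min() == y[idx].max():  # resample lacks one class: AUC undefined
            continue
        stats.append(auc(y[idx], p[idx]))
    return tuple(np.quantile(stats, [alpha / 2, 1 - alpha / 2]))

# Toy DeLong example with four patients.
a1, a2, z, pval = delong_test([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8], [0.2, 0.1, 0.9, 0.7])
print(a1, a2, round(z, 4), round(pval, 4))

# Bootstrap CI on a placeholder 75-patient testing set.
rng = np.random.default_rng(0)
y_test = rng.integers(0, 2, size=75)
scores = y_test + rng.normal(scale=1.0, size=75)  # placeholder model scores
lo, hi = bootstrap_auc_ci(y_test, scores)
print(round(auc(y_test, scores), 3), round(lo, 3), round(hi, 3))
```

In the toy example the two AUCs are 0.75 and 1.0, and the difference is far from significant (p ≈ 0.48), as expected with only four patients.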

Results

This study included 252 patients, comprising 187 cases of MIA and 65 cases of IAC. Using stratified random sampling, the cohort was divided at a 7:3 ratio into a training set (n = 177) and a testing set (n = 75) for model development and validation. No statistically significant differences were found in any clinical variables between the two sets (Table 1). The mean age was 61.1 ± 10.9 years in the training set and 63.2 ± 10.4 years in the testing set, and the proportion of male patients was 39.0% and 45.3%, respectively.

A total of 17 radiomic features, eight 2D deep learning features, and seven 3D deep learning features were selected using LASSO regression (Figures S1, S3, and S5). As shown in Figures S2, S4, and S6, the most important features within each model were logarithm_glcm_Correlation and original_firstorder_90Percentile for the radiomic features, 2D_DL_13 and 2D_DL_16 for the 2D deep learning features, and 3D_DL_1 and 3D_DL_6 for the 3D deep learning features. Pearson correlation analysis was performed to explore the relationships among different feature types (Fig. 2). Radiomic features exhibited predominantly moderate correlations, indicating potential redundancy among some features. In contrast, correlations among 2D and 3D deep learning features were generally low, and the 3D features showed minimal significant intercorrelation. To improve model interpretability, Grad-CAM heatmaps were generated for both the 2D and 3D ResNet50 models. As shown in Supplementary Figures S10–S13, these visualizations highlight the tumor regions that contributed to classification decisions in representative cases of MIA and IAC.

Fig. 2. Correlation heatmap of all key features. Radiomic features Rad_1–Rad_17 correspond to their original names listed in Supplementary Table S1.

We used a variety of classifiers, including LR, SVM, KNN, ID3, RF, XGBoost, and LightGBM, to construct binary classification models from the radiomics and deep learning features. Based on ten-fold cross-validation in the training set, SVM achieved the highest average AUC for both the 2D and 3D models and ranked second for the radiomics model, supporting its selection as the final classifier (Figures S7–S9). Figure 3 illustrates the comparative performance of all classifiers within each model type. In the training set, the radiomics, 2D, and 3D models based on the SVM classifier achieved AUCs of 0.776 (95% CI: 0.685–0.852), 0.808 (95% CI: 0.721–0.885), and 0.843 (95% CI: 0.763–0.909), respectively. In the testing set, the corresponding AUCs were 0.794 (95% CI: 0.684–0.898), 0.754 (95% CI: 0.594–0.882), and 0.847 (95% CI: 0.724–0.945).

Fig. 3. AUC comparison of machine learning classifiers for the radiomics, 2D deep learning, and 3D deep learning models in the testing set, and ROC analysis of the SVM models. LR: Logistic Regression, SVM: Support Vector Machine, KNN: k-Nearest Neighbors, ID3: Iterative Dichotomiser 3, RF: Random Forest, XGBoost: eXtreme Gradient Boosting, LightGBM: Light Gradient Boosting Machine.

The ROC-AUC values for each model are summarized in Table 2. In the training set, the early fusion model achieved the highest AUC of 0.925, slightly exceeding the late fusion model (0.901), and both outperformed the individual models. In the testing set, the late fusion model achieved the highest AUC numerically (0.898), followed by the early fusion model (0.857) and the individual models. However, the differences among models were not statistically significant according to DeLong’s test.

Radar charts (Fig. 4) were used to evaluate the diagnostic performance of each model in the testing set across five metrics (F1 score, accuracy, precision, sensitivity, and specificity), providing a comprehensive assessment of performance balance. The radiomics and 2D models showed relatively modest performance. The 3D model performed well on several metrics but was slightly outperformed by the late fusion model. The late fusion model achieved the highest values for F1 score, accuracy, precision, and specificity, and showed the most balanced diagnostic capability among all models. To further compare the fusion strategies, Fig. 5 presents the ROC curves and confusion matrices of the early and late fusion models; the late fusion model achieved a higher AUC and better classification accuracy than the early fusion model.
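The five radar-chart metrics all derive from the confusion matrix. The minimal sketch below assumes IAC is the positive class and applies a 0.5 probability threshold; the threshold used in the study is not reported. The counts in the demo are hypothetical and are not the study's results. Denominators are not guarded against zero.

```python
def confusion_counts(y_true, prob, threshold=0.5):
    """Count TP, FP, TN, FN after thresholding predicted probabilities (threshold is an assumption)."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, prob):
        pred = int(p >= threshold)
        if pred == 1 and t == 1:
            tp += 1
        elif pred == 1:
            fp += 1
        elif t == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn

def diagnostic_metrics(tp, fp, tn, fn):
    """Radar-chart metrics with IAC as the positive class."""
    sensitivity = tp / (tp + fn)  # recall for IAC
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)  # equals 2TP / (2TP + FP + FN)
    return {"F1": f1, "accuracy": accuracy, "precision": precision,
            "sensitivity": sensitivity, "specificity": specificity}

# Hypothetical counts for a 75-patient testing set (not the study's results).
m = diagnostic_metrics(tp=16, fp=10, tn=45, fn=4)
print({k: round(v, 3) for k, v in m.items()})
```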

Discussion

Currently, the clinical management of GGNs presents significant challenges, particularly because of overdiagnosis and excessive follow-up. These issues often lead to unnecessary interventions and impose a considerable psychological and emotional burden on patients. This study compared five models for predicting invasive adenocarcinoma (IAC): radiomics, 2D deep learning, 3D deep learning, and two fusion strategies. Among these, the late fusion model achieved the highest performance in the testing set, with an AUC of 0.898. By integrating radiomics and deep learning features through ensemble learning, this model may offer a valuable approach for identifying IAC in GGNs with a solid component of less than 50%.

Most GGNs remain stable for long periods. However, some IACs may exhibit vascular or stromal invasion and even lymph node metastasis25,26. Management strategies and prognoses of GGNs differ substantially according to their degree of invasiveness, underscoring the importance of early and accurate identification of IAC to optimize treatment decisions and improve patient outcomes. At present, clinical prediction of GGN invasiveness depends primarily on conventional CT features, such as nodule size, density, morphology, and vascularity, which are highly reliant on radiologist expertise and subject to interobserver variability. Moreover, previous studies have shown that such conventional assessments generally achieve an AUC of only about 0.7, which is insufficient for clinical application27,28,29. In contrast, radiomics and deep learning approaches have demonstrated markedly improved performance in recent studies by extracting high-dimensional features beyond human perception9,10,11. Our study further supports the potential of CT-based artificial intelligence models to improve the prediction of GGN invasiveness. Nonetheless, the routine clinical application of radiomics and deep learning remains limited by challenges such as limited interpretability, a lack of standardized validation, and insufficient integration into clinical workflows.

In this study, we developed and validated radiomics, 2D deep learning, and 3D deep learning models, and the 3D deep learning-based SVM model performed best among the single-modality models. Consistent with our findings, radiomics-based models developed by Li et al. and Song et al. have shown good performance in differentiating invasive from pre-invasive lesions across various imaging datasets30,31. A 3D deep transfer learning model trained on 999 lung GGN images showed high diagnostic performance in distinguishing IAC from MIA, achieving AUCs of 0.95 in the training set and 0.89 and 0.82 in two validation cohorts32. Similarly, another study reported that a 3D deep learning approach outperformed other modeling techniques for GGN classification33. Huang et al. compared radiomics and deep learning methods using the DeLong test and found that deep learning performed better in predicting GGN malignancy34. In our study, the 3D deep learning model likewise achieved a higher AUC than both the 2D and radiomics models. This may be attributable to its ability to capture volumetric spatial context, better characterize tumor heterogeneity, and autonomously extract high-level features. Compared with 2D models that rely on individual slices, 3D approaches provide a more comprehensive representation of lesion morphology. Furthermore, unlike radiomics, which depends on predefined handcrafted features, deep learning enables data-driven feature learning, potentially improving performance in complex classification tasks.

In addition, our results suggest that the late fusion approach may offer advantages over early fusion. Consistent with this observation, Wang et al. reported that a late fusion strategy achieved better performance than early fusion in predicting occult lymph node metastasis in laryngeal squamous cell carcinoma using CT-based models17. Similarly, a study combining CT and electronic health records for pulmonary embolism prediction identified the late fusion model as the most effective among the fusion strategies evaluated35. A recent meta-analysis comparing radiomics, deep learning, and multimodal models also found that most (63%) fusion approaches performed favorably compared to single-modality models36. Compared with early fusion, late fusion presents several potential advantages. When input modalities are heterogeneous or weakly correlated, late fusion can help prevent one modality from dominating the predictive output by processing each source independently. This strategy may also reduce model complexity by avoiding high-dimensional concatenated feature vectors. Moreover, late fusion can be more adaptable in cases with incomplete data, as it allows for independent model training per modality and supports flexible integration mechanisms to account for missing inputs.

With the advent of the big data era, radiomics and deep learning have attracted increasing interest not only in thoracic oncology but also in a variety of other malignancies, including breast cancer, prostate cancer, brain tumors, and gastrointestinal cancers19,37,38. These techniques are increasingly being explored as tools for characterizing tumor heterogeneity, facilitating risk stratification, and supporting prognostic assessment. For instance, a radiomics model based on preoperative CT images, which combines handcrafted feature extraction with discriminant analysis, has been applied to classify low- and high-risk rectal cancer patients39. Similarly, a 3D ResNet-based convolutional neural network was developed to extract deep imaging features from CT scans across multiple solid tumors and, when combined with conventional classifiers, showed utility in identifying tumor subtypes in cancers such as lung cancer, melanoma, and prostate cancer40. In addition, recent studies have investigated a 3D DenseNet-based model for predicting pathological grade in prostate cancer41 and a modified ResNet-18-based AsymMirai model for assessing molecular subtypes in brain tumors42. These advances provide a foundation for developing predictive models tailored to specific cancer types, including the present study of ground-glass lung adenocarcinoma.

This study has several limitations. First, it was a single-center retrospective study with a relatively small sample size and lacked external validation, which may limit the generalizability of the findings across institutions and imaging protocols. Second, only surgically resected cases were included to ensure definitive pathological confirmation, potentially introducing selection bias and limiting the applicability of the results to patients managed conservatively through surveillance or non-surgical approaches. Third, the exclusion of non-adenocarcinoma diagnoses and early preinvasive lesions (e.g., AAH, AIS) was intended to ensure histological consistency and focus on the clinically challenging differentiation between MIA and IAC; however, this may reduce the model’s applicability to the broader histological spectrum of GGNs. Fourth, although hyperparameter tuning was performed within the training set, final model selection was partially influenced by test set performance, potentially introducing overfitting. Finally, manual segmentation of regions of interest may lead to subjectivity and inter-operator variability, which could affect model robustness.

In conclusion, we developed a fusion model integrating radiomics, 2D and 3D deep learning features, which demonstrates the potential to enhance the accuracy of chest CT in classifying MIA and IAC. Although further validation is needed, this non-invasive and reproducible approach may assist in preoperative risk stratification and support individualized treatment strategies for patients with GGNs.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

de Koning, H. J. et al. Reduced Lung-Cancer mortality with volume CT screening in a randomized trial. N Engl. J. Med. 382, 503–513. https://doi.org/10.1056/NEJMoa1911793 (2020).

Zhong, Y. et al. Deep learning for prediction of N2 metastasis and survival for clinical stage I Non-Small cell lung cancer. Radiology 302, 200–211. https://doi.org/10.1148/radiol.2021210902 (2022).

Nicholson, A. G. et al. The 2021 WHO classification of lung tumors: impact of advances since 2015. J. Thorac. Oncol. 17, 362–387. https://doi.org/10.1016/j.jtho.2021.11.003 (2022).

Yotsukura, M. et al. Long-Term prognosis of patients with resected adenocarcinoma in situ and minimally invasive adenocarcinoma of the lung. J. Thorac. Oncol. 16, 1312–1320. https://doi.org/10.1016/j.jtho.2021.04.007 (2021).

Ma, Z., Wang, Z., Li, Y., Zhang, Y. & Chen, H. Detection and treatment of lung adenocarcinoma at pre-/minimally invasive stage: is it lead-time bias? J. Cancer Res. Clin. Oncol. 148, 2717–2722. https://doi.org/10.1007/s00432-022-04031-z (2022).

Watanabe, Y. et al. Clinical impact of a small component of ground-glass opacity in solid-dominant clinical stage IA non-small cell lung cancer. J. Thorac. Cardiovasc. Surg. 163, 791–801 e794. https://doi.org/10.1016/j.jtcvs.2020.12.089 (2022).

Jiang, Y. et al. Radiomic signature based on CT imaging to distinguish invasive adenocarcinoma from minimally invasive adenocarcinoma in pure ground-glass nodules with pleural contact. Cancer Imaging. 21, 1. https://doi.org/10.1186/s40644-020-00376-1 (2021).

Volmonen, K. et al. Association of CT findings with invasive subtypes and the new grading system of lung adenocarcinoma. Clin. Radiol. 78, e251–e259. https://doi.org/10.1016/j.crad.2022.11.011 (2023).

Tran, K. A. et al. Deep learning in cancer diagnosis, prognosis and treatment selection. Genome Med. 13, 152. https://doi.org/10.1186/s13073-021-00968-x (2021).

Huang, S., Yang, J., Shen, N., Xu, Q. & Zhao, Q. Artificial intelligence in lung cancer diagnosis and prognosis: current application and future perspective. Semin Cancer Biol. 89, 30–37. https://doi.org/10.1016/j.semcancer.2023.01.006 (2023).

Zhang, C. et al. Novel research and future prospects of artificial intelligence in cancer diagnosis and treatment. J. Hematol. Oncol. 16, 114. https://doi.org/10.1186/s13045-023-01514-5 (2023).

Ali, M. et al. Applications of artificial intelligence, deep learning, and machine learning to support the analysis of microscopic images of cells and tissues. J. Imaging 11. https://doi.org/10.3390/jimaging11020059 (2025).

Lee, G., Park, H., Bak, S. H. & Lee, H. Y. Radiomics in lung cancer from basic to advanced: current status and future directions. Korean J. Radiol. 21, 159–171. https://doi.org/10.3348/kjr.2019.0630 (2020).

Zhou, S. K. et al. A review of deep learning in medical imaging: Imaging traits, technology trends, case studies with progress highlights, and future promises. Proc. IEEE Inst. Electr. Electron Eng. 109, 820–838. https://doi.org/10.1109/JPROC.2021.3054390 (2021).

Sun, H. et al. Multi-classification model incorporating radiomics and clinic-radiological features for predicting invasiveness and differentiation of pulmonary adenocarcinoma nodules. Biomed. Eng. Online. 22, 112. https://doi.org/10.1186/s12938-023-01180-1 (2023).

Zhou, J. et al. An ensemble deep learning model for risk stratification of invasive lung adenocarcinoma using thin-slice CT. NPJ Digit. Med. 6, 119. https://doi.org/10.1038/s41746-023-00866-z (2023).

Wang, W. et al. Comparing three-dimensional and two-dimensional deep-learning, radiomics, and fusion models for predicting occult lymph node metastasis in laryngeal squamous cell carcinoma based on CT imaging: a multicentre, retrospective, diagnostic study. EClinicalMedicine 67, 102385. https://doi.org/10.1016/j.eclinm.2023.102385 (2024).

Pease, M. et al. Outcome prediction in patients with severe traumatic brain injury using deep learning from head CT scans. Radiology 304, 385–394. https://doi.org/10.1148/radiol.212181 (2022).

Xia, X. et al. Comparison and fusion of deep learning and radiomics features of Ground-Glass nodules to predict the invasiveness risk of Stage-I lung adenocarcinomas in CT scan. Front. Oncol. 10, 418. https://doi.org/10.3389/fonc.2020.00418 (2020).

AlShammari, A. et al. Prevalence of invasive lung cancer in pure ground glass nodules less than 30 mm: A systematic review. Eur. J. Cancer. 213, 115116. https://doi.org/10.1016/j.ejca.2024.115116 (2024).

van Griethuysen, J. J. M. et al. Computational radiomics system to Decode the radiographic phenotype. Cancer Res. 77, e104–e107. https://doi.org/10.1158/0008-5472.CAN-17-0339 (2017).

Lang, D. M., Peeken, J. C., Combs, S. E., Wilkens, J. J. & Bartzsch, S. Deep learning based HPV status prediction for oropharyngeal cancer patients. Cancers (Basel). 13. https://doi.org/10.3390/cancers13040786 (2021).

Tian, W. et al. Predicting occult lymph node metastasis in solid-predominantly invasive lung adenocarcinoma across multiple centers using radiomics-deep learning fusion model. Cancer Imaging. 24, 8. https://doi.org/10.1186/s40644-024-00654-2 (2024).

Chen, S., Ma, K. & Zheng, Y. Med3D: transfer learning for 3D medical image analysis. https://doi.org/10.48550/arXiv.1904.00625 (2019).

Lu, C. H. et al. Percutaneous computed tomography-guided coaxial core biopsy for small pulmonary lesions with ground-glass attenuation. J. Thorac. Oncol. 7, 143–150. https://doi.org/10.1097/JTO.0b013e318233d7dd (2012).

Chen, K. N. The diagnosis and treatment of lung cancer presented as ground-glass nodule. Gen. Thorac. Cardiovasc. Surg. 68, 697–702. https://doi.org/10.1007/s11748-019-01267-4 (2020).

Zheng, H., Zhang, H., Wang, S., Xiao, F. & Liao, M. Invasive prediction of ground glass nodule based on clinical characteristics and radiomics feature. Front. Genet. 12, 783391. https://doi.org/10.3389/fgene.2021.783391 (2021).

Zhao, Q., Wang, J. W., Yang, L., Xue, L. Y. & Lu, W. W. CT diagnosis of pleural and stromal invasion in malignant subpleural pure ground-glass nodules: an exploratory study. Eur. Radiol. 29, 279–286. https://doi.org/10.1007/s00330-018-5558-0 (2019).

He, W., Guo, G., Du, X., Guo, S. & Zhuang, X. CT imaging indications correlate with the degree of lung adenocarcinoma infiltration. Front. Oncol. 13, 1108758. https://doi.org/10.3389/fonc.2023.1108758 (2023).

Song, F. et al. A multi-classification model for predicting the invasiveness of lung adenocarcinoma presenting as pure ground-glass nodules. Front. Oncol. 12, 800811. https://doi.org/10.3389/fonc.2022.800811 (2022).

Fan, L. et al. Radiomics signature: a biomarker for the preoperative discrimination of lung invasive adenocarcinoma manifesting as a ground-glass nodule. Eur. Radiol. 29, 889–897. https://doi.org/10.1007/s00330-018-5530-z (2019).

Fu, C. L. et al. Discrimination of ground-glass nodular lung adenocarcinoma pathological subtypes via transfer learning: A multicenter study. Cancer Med. 12, 18460–18469. https://doi.org/10.1002/cam4.6402 (2023).

Wang, D., Zhang, T., Li, M., Bueno, R. & Jayender, J. 3D deep learning based classification of pulmonary ground glass opacity nodules with automatic segmentation. Comput. Med. Imaging Graph. 88, 101814. https://doi.org/10.1016/j.compmedimag.2020.101814 (2021).

Huang, W. et al. Baseline whole-lung CT features deriving from deep learning and radiomics: prediction of benign and malignant pulmonary ground-glass nodules. Front. Oncol. 13, 1255007. https://doi.org/10.3389/fonc.2023.1255007 (2023).

Huang, S. C., Pareek, A., Zamanian, R., Banerjee, I. & Lungren, M. P. Multimodal fusion with deep neural networks for leveraging CT imaging and electronic health record: a case-study in pulmonary embolism detection. Sci. Rep. 10, 22147. https://doi.org/10.1038/s41598-020-78888-w (2020).

Demircioglu, A. Are deep models in radiomics performing better than generic models? A systematic review. Eur. Radiol. Exp. 7, 11. https://doi.org/10.1186/s41747-023-00325-0 (2023).

Ardila, D. et al. Author correction: End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat. Med. 25, 1319. https://doi.org/10.1038/s41591-019-0536-x (2019).

Ardila, D. et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat. Med. 25, 954–961. https://doi.org/10.1038/s41591-019-0447-x (2019).

Canfora, I. et al. A predictive system to classify preoperative grading of rectal cancer using radiomics features. 431–440 (Springer).

Leung, K. H. et al. Deep semisupervised transfer learning for fully automated whole-body tumor quantification and prognosis of cancer on PET/CT. J. Nucl. Med. 65, 643–650. https://doi.org/10.2967/jnumed.123.267048 (2024).

Zhao, L. et al. Predicting clinically significant prostate cancer with a deep learning approach: a multicentre retrospective study. Eur. J. Nucl. Med. Mol. Imaging 50, 727–741. https://doi.org/10.1007/s00259-022-06036-9 (2023).

Zhu, Y. et al. Deep learning and habitat radiomics for the prediction of glioma pathology using multiparametric MRI: A multicenter study. Acad. Radiol. 32, 963–975. https://doi.org/10.1016/j.acra.2024.09.021 (2025).

Funding

The study was supported by the Medical Empowerment Public Welfare Special Fund of the Chinese Red Cross Foundation (2022 Leading Elite Clinical Research Project, XM_LHJY2022_05_33) and by the 2024 Medical Research Program of the Yancheng Municipal Health Commission (YK2024015 and YK2024092).

Author information

Contributions

Guarantors of integrity of the entire study, QS, LY, JX, ShH; study concepts/study design or data acquisition or data analysis/interpretation, all authors; manuscript drafting or manuscript revision for important intellectual content, all authors; approval of the final version of the submitted manuscript, all authors; agreement to ensure that any questions related to the work are appropriately resolved, all authors; literature research, QS, LY, CW, ZqS, WL; clinical studies, QS, CW, ZqS, JX, ShH; experimental studies, QS, LY, CW, ZqS, JX, ShH; statistical analysis, QS, LY, WC, WL, ShH; and manuscript editing, QS, LY, JX, ShH. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval and consent to participate

This study was conducted in accordance with the Declaration of Helsinki and was approved by the Ethics Committee of the First People’s Hospital of Yancheng (Approval No. 2024-K-101). Due to the retrospective nature of the study, informed consent was waived by the Ethics Committee of the First People’s Hospital of Yancheng. Prior to analysis, all patient information was anonymized.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sun, Q., Yu, L., Song, Z. et al. Deep learning and radiomics fusion for predicting the invasiveness of lung adenocarcinoma within ground glass nodules. Sci Rep 15, 29285 (2025). https://doi.org/10.1038/s41598-025-13447-9

DOI: https://doi.org/10.1038/s41598-025-13447-9