Abstract

AI-based methods have been widely adopted in tourism demand forecasting. However, current AI-based methods are weak in capturing long-term dependency, and most of them lack interpretability. This study proposes a time series Transformer (Tsformer) with Encoder-Decoder architecture for tourism demand forecasting. The Tsformer encodes long-term dependencies with the encoder, merges the calendar of data points in the forecast horizon, and captures short-term dependencies with the decoder. Experiments on two datasets demonstrate that Tsformer outperforms nine baseline methods in short-term and long-term forecasting before and after the COVID-19 outbreak. Further ablation studies confirm that the adoption of the calendar of data points in the forecast horizon benefits the forecasting performance. Our study provides an alternative method for more accurate and interpretable tourism demand forecasting.

Introduction

Despite the slowdown of the global economy, tourism spending continues to grow. From 2009 to 2019, the actual growth of international tourism income (54%) exceeded global GDP growth (44%)1. According to a report by Calderwood & Soshkin, tourism plays a vital role in the global economy and community. In 2018, the tourism industry contributed 10.4% of the global GDP, as did related jobs. Tourism is fast growing, and tourism demand continues to grow2. Accurate tourism demand forecasting helps managers in enterprises and governments formulate more efficient public policies and commercial decisions. Enterprises and governments have therefore started to invest more in improving forecasting accuracy.

However, hotel closures, flight suspensions, and other problems caused by the COVID-19 pandemic have severely impacted the tourism industry. At present, the number of global tourists is significantly smaller than that before the COVID-19 pandemic and is slowly increasing3. Compared with that in 2019, the number of global tourists in 2020 and 2021 was 73% and 72% smaller, respectively4. The income of the tourism industry is an important part of the global GDP, and the impact of COVID-19 on the tourism industry is also reflected in the global GDP. The 2020 global GDP loss from the COVID-19 pandemic is estimated to be approximately $2 trillion, or 2.2% of the total5. Forecasting the recovery of tourism demand is now a major focus of enterprises and governments.

Currently, methods based on time series models are widely adopted6. With the development of deep learning technology, several AI-based methods have been adopted in studies7, e.g., Artificial Neural Network (ANN)8, Long Short-Term Memory (LSTM)9, Support Vector Regression (SVR)10, and Convolutional Neural Network (CNN)11. Generally, AI-based methods achieve better forecasting performance than time series models but are considered to lack interpretability. In terms of the data source, various variables, e.g., weather, holiday, and search engine indices, are adopted as features or exogenous variables by current studies to enhance forecasting performance12,13.

Among recent AI-based time series forecasting methods, the ANN does not possess a structure designed to process time series14. The connections of the LSTM form a cycle, which allows the signal to flow in different directions and enables the processing of time series15. Nevertheless, LSTM still suffers from nonparallel training, gradient vanishing when the LSTM network becomes deeper16, and memory capacity limitations. In forward propagation, LSTM computes time step by time step. Owing to the insufficient memory capacity of the cell state, long-term information can vanish gradually as the computation proceeds17. The CNN focuses on local features and has an excellent ability for local feature extraction. However, for global features, multiple CNN layers are required to obtain a larger receptive field18. In the study by Yan, et al.19, a combination of CNN and LSTM was adopted for air quality forecasting in Beijing. Lu, et al.20 proposed GA-CNN-LSTM for forecasting the daily tourist flow of scenic spots. CNN-LSTM improves only the capture of short-term patterns; the issue of long-term information loss remains. Another considerable bottleneck of AI-based methods, including ANN and LSTM, is the lack of interpretability; i.e., researchers cannot reliably recognize which part of the input sequence contributes to the output of the models21.

We propose a time series Transformer (Tsformer) based on the Transformer for tourism demand forecasting. The advantages of the proposed Tsformer are parallel computation and long-term dependency processing, and the residual connection allows the Tsformer to be stacked deeper. The Tsformer thereby overcomes the nonparallel training, gradient vanishing, and memory capacity limitation of LSTM, as well as the need for a deeper CNN to obtain a larger receptive field. Currently, the Transformer is widely used in natural language processing (NLP) and is being adopted in the computer vision field; however, few studies have introduced the Transformer into time series forecasting. Thus far, studies have not used the Transformer for tourism demand forecasting. To exploit the excellent long-term dependency capturing ability of the Transformer for tourism demand forecasting, we modify the architecture and the Attention masking mechanism of the Transformer and propose the Tsformer. Moreover, the calendar of data points in the forecast horizon is adopted to enhance the forecasting performance. To compare the proposed Tsformer with commonly used methods, experiments were conducted with the Tsformer and nine baseline methods on the Jiuzhaigou Valley and Siguniang Mountain tourism demand datasets. The results indicate that the Tsformer outperforms all the baseline methods in short-term and long-term tourism demand forecasting tasks before and after the COVID-19 outbreak. On the basis of these experiments, ablation studies were conducted on the two datasets and demonstrated that adopting the calendar of data points in the forecast horizon benefits the performance of the Tsformer. Finally, the Attention weight matrix is visualized to reveal the critical information that the Tsformer attends to on the Jiuzhaigou Valley dataset. The visualization indicates that the proposed Tsformer can extract dependencies of the tourism demand sequence efficiently, and it enhances the interpretability of the proposed Tsformer.

In summary, the main contributions of this study are as follows:

(1) We propose Tsformer for tourism demand forecasting, making this work one of the very few that introduce a Transformer-based method to tourism demand forecasting. With an optimized structure for tourism demand forecasting, the proposed Tsformer performs well in short-term and long-term forecasting.

(2) We utilize multiple features for tourism demand forecasting, including the search engine index, daily weather, highest temperature, wind, air quality, and calendar. Moreover, a calendar of data points in the forecast horizon is introduced to enhance the performance of the proposed Tsformer. An ablation study is designed to demonstrate that the use of the calendar of data points in the forecast horizon benefits tourism demand forecasting.

(3) We conduct comparison experiments with several baseline methods on the Jiuzhaigou Valley and Siguniang Mountain datasets to demonstrate the effectiveness of the proposed Tsformer. The proposed Tsformer outperforms all the other baselines.

(4) We examine the performance of the proposed Tsformer in forecasting tourism demand recovery by running experiments on the Jiuzhaigou Valley and Siguniang Mountain datasets after the COVID-19 outbreak, and the proposed Tsformer achieves competitive performance.

(5) We interpret the results of the proposed Tsformer through visualization of the Attention weight matrix, whereas most of the AI-based tourism demand forecasting methods lack interpretability.

The remainder of this paper is arranged as follows. Section “Literature review” introduces the related work of this study. Section “Methodology” describes the vanilla Transformer and the proposed Tsformer. In Sect. “Experiments”, we present experiments comparing the proposed Tsformer with nine baseline models on the Jiuzhaigou Valley and Siguniang Mountain datasets, as well as the corresponding ablation studies of the Tsformer on the two datasets. Section “Interpretability” analyses the interpretability of the Tsformer. Section “Discussion and conclusion” discusses the conclusions of this study and future work.

Literature review

Tourism demand forecasting models

Over 600 studies on tourism demand forecasting have been published in recent decades; some of these studies have been adopted in industry, which benefits governments and enterprises6. The current forecasting methods can be divided into four classes: subjective approaches, time series models, econometric models, and AI-based models. Some studies have used multiple methods or a combination of them to enhance forecasting performance.

Time series models are basic or advanced22. Basic time series models include Naive, Auto Regression (AR), Moving Average (MA), Exponential Smoothing (ES), Historical Average (HA), etc. Generally, the Naive method achieves better performance in datasets that do not change drastically in short-term patterns23. However, in drastically changing datasets or long-term forecasts, the performance of the Naive method declines dramatically24. The Naive method is widely adopted by studies as a primary benchmark for evaluating proposed methods. Basic time series models cannot harness seasonal features and require the input sequence to be stationary. Nevertheless, tourism activities have strong seasonal features, and seasonal features are considered essential features of tourism demand forecasting25. Compared with basic time series models, advanced time series models harness trend and seasonal features. The advanced time series models include seasonal Naive, ARIMA, ARIMAX26, SARIMA, SARIMAX27, etc. Seasonal Naive uses the historical ground truth as a prediction28, SARIMA is a variant of ARIMA with seasonality, and ARIMAX is an ARIMA with exogenous variables. On the basis of ARIMAX and SARIMA, SARIMAX harnesses seasonal features and exogenous variables simultaneously27 and has become one of the most widely used models in tourism demand forecasting.

Econometric models are static or dynamic22. Static econometric models include linear regression and the gravity model. Vector autoregressive (VAR), error correction models (ECM), and time-varying parameter (TVP) are three representative dynamic econometric models29. Compared with static econometric models, dynamic econometric models can capture the time variation of consumer preferences and enhance the forecasting performance of econometric models.

When processing a large amount of data, AI-based methods generally outperform traditional methods. The excellent performance of AI-based methods could rely on their internal feature engineering ability30. However, AI-based methods are considered black boxes, i.e., AI-based methods are weak in interpretability. Recently, with the development of AI, more AI-based methods, e.g., SVR, k-Nearest Neighbor (k-NN)31, ANN, and Recurrent Neural Network (RNN), have been adopted. Compared with linear regression, the hidden layers and activation functions of ANNs result in excellent nonlinear function fitting ability15,32. Nevertheless, the shortcoming of ANNs for time series forecasting is that ANNs do not have a good architecture for processing time series15. Therefore, RNNs have been adopted by some studies. LSTM is an improved variant of RNN. Compared with RNN, LSTM adds a cell state to memorize long-term dependencies and uses an input gate, an output gate, and a forget gate to handle the cell state and mitigate gradient vanishing33. LSTM is currently adopted in some tourism demand forecasting studies and has achieved high forecasting performance. Lv, et al.34 used LSTM to forecast the tourism demand of America, Hainan, Beijing, and Jiuzhaigou Valley. In the study by Zhang, et al.35, LSTM was adopted in hotel accommodation forecasting. In addition to LSTM, several variants of LSTM have been used in related studies. Bidirectional LSTM (Bi-LSTM) is considered to perform better in processing long-term dependency. In a recent study by Kulshrestha, et al.36, Bi-LSTM was used to forecast tourism demand in Singapore.

In 2017, Vaswani, et al.37 proposed the Transformer to perform machine translation; the Transformer was then used to construct large-scale language models such as BERT, which achieves state-of-the-art performance in multiple tasks38. Owing to its excellent performance on long sequences, the Transformer was introduced into computer vision. Related studies have been conducted on image classification39, object detection40, object tracking41, and semantic segmentation42. Recently, a few studies have attempted to exploit the potential of the Transformer for time series forecasting. Fan, et al.43 enhanced the ability to capture the subtle features in a data stream and reduced the memory usage of the Transformer in time series forecasting. Lim, et al.44 combined LSTM and Multi-Head Attention for time series forecasting. Although the Transformer outperforms LSTM in multiple fields, no study has introduced the Transformer into tourism demand forecasting.

Table 1 compares the above methods.

Search engine index

In 2012, hotel room demand forecasting45 and travel destination planning forecasting46 adopted Google Trends and showed that the adoption of the search engine index enhances forecasting performance. Subsequent studies adopted Google Trends and the Baidu index. The Baidu index is used more in tourism activities related to China, whereas the Google Trends index is used more in the tourism activities of Europe and America7. According to the study by Yang, et al.47, the Baidu index outperforms Google Trends in China-related studies. In recent studies, the number of keywords on the search engine index has increased. Li, et al.13 use 45 keywords to forecast tourism demand in Beijing; the Pearson correlation coefficients between these indices and tourism demand are calculated, which indicates that tourism demand does not strongly correlate with all indices. To avoid loss of features, all search engine indices are preserved, and principal component analysis (PCA) is adopted to perform dimensionality reduction. In the study by Law, et al.30, up to 256 keywords were used for Macau tourist arrival volume forecasting. Researchers hope to represent every aspect of tourists’ interests in tourism destinations by covering multiple keywords related to tourism destinations.

Methodology

Transformer

Figure 1 presents the basic architecture of the Transformer. The Transformer is an Encoder–Decoder model based on Multi-Head Attention. The encoder generates the corresponding syntax vector of the input sequence, whereas the decoder generates the target sequence according to the input sequence of the decoder and the output syntax vector of the encoder. In recent studies, LSTM or the gated recurrent unit (GRU) has generally been adopted for sequence modelling. Both are essentially RNNs, whereas the Transformer is not: its core is the Multi-Head Attention mechanism, which allows the Attention of multiple time steps to be computed simultaneously and does not require states to be accumulated in order.

Multi-Head Attention

The generalized Attention mechanism can be expressed as follows:

where Q, K, and V represent the query, key, and value in the Attention mechanism, respectively, and the weight of the value is calculated through the query and key. Then, the calculated weight is used to perform a weighted sum of the values. The Attention calculation in the Transformer is implemented by Scaled Dot-Product Attention, which is shown in Fig. 2(a). The Scaled Dot-Product Attention can be denoted as follows:

\[\mathrm{Attention}(Q,K,V)=\mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V\]

where \({d_k}\) represents the dimension of the key.
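As a concrete illustration of Scaled Dot-Product Attention, the following minimal sketch computes the Attention weights and the weighted sum of values in plain Python. The function names and the toy inputs are assumptions made for illustration and are not taken from the authors' implementation.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Q, K, V are lists of row vectors (one row per time step).

    Returns (output, weights), where weights[i][j] is the Attention
    weight that query step i assigns to key step j.
    """
    d_k = len(K[0])  # dimension of the key
    output, weights = [], []
    for q in Q:
        # Dot product of the query with every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of the value vectors.
        output.append([sum(wj * v[c] for wj, v in zip(w, V))
                       for c in range(len(V[0]))])
    return output, weights


# Toy example: one query step attending to two key/value steps.
out, attn = scaled_dot_product_attention(
    Q=[[1.0, 0.0]],
    K=[[1.0, 0.0], [0.0, 1.0]],
    V=[[1.0, 2.0], [3.0, 4.0]],
)
```

In this toy case the query is aligned with the first key, so the first value receives the larger weight.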

Figure 2(b) presents the calculation of Multi-Head Attention; in the Multi-Head Attention mechanism, the calculation of the i-th Attention head can be represented as follows:

\[hea{d_i}=\mathrm{Attention}\left(QW_{i}^{Q},KW_{i}^{K},VW_{i}^{V}\right)\]

where \(W_{i}^{Q}\), \(W_{i}^{K}\) and \(W_{i}^{V}\) denote the linear transformations of Q, K, and V of the i-th Attention head, respectively.

Multi-Head Attention is the concatenation of each Attention head. Let n denote the number of Attention heads; Multi-Head Attention can be denoted as follows:

\[\mathrm{MultiHead}(Q,K,V)=\mathrm{concat}\left(hea{d_1}, \ldots ,hea{d_n}\right){W^O}\]

where concat represents the concatenate operation. \({W^O}\) represents the linear transformation of the concatenated output.

The internal Multi-Head Attention helps the Transformer overcome the shortcomings of nonparallel training and the bottleneck of the memory capacity in processing the long-term dependency of the LSTM or GRU.

Feed-forward network

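The feed-forward network (FFN) in Fig. 1 comprises two linear transformations and a Rectified Linear Unit (ReLU) activation function. The computation of the feed-forward network is positionwise, i.e., the weight of the linear transformation of each time step is identical. The formula of the feed-forward network can be given as follows:

\[\mathrm{FFN}(x)=\max \left(0,x{W_1}+{b_1}\right){W_2}+{b_2}\]

where \({W_1}\), \({b_1}\), \({W_2}\), and \({b_2}\) denote the weights and biases of the two linear transformations.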

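The ReLU activation function is used to mitigate gradient vanishing and gradient explosion and accelerate convergence. However, all the negative values of the output will be restricted to zero, which may cause the problem of neuron inactivation, and no valid gradient will be obtained in backward propagation to train the network. Consequently, we replace the ReLU activation function with the Exponential Linear Unit (ELU) activation function48. Unlike the ReLU, the ELU has a negative output, which makes the ELU more robust to noise. The ELU and the feed-forward network with the ELU can be denoted as follows:

\[\mathrm{ELU}(x)=\begin{cases} x, & x>0 \\ \alpha \left(e^{x}-1\right), & x \le 0 \end{cases}\]

\[\mathrm{FFN}(x)=\mathrm{ELU}\left(x{W_1}+{b_1}\right){W_2}+{b_2}\]

where \(\alpha\) is the scale of the negative part of the ELU, and \({W_1}\), \({b_1}\), \({W_2}\), and \({b_2}\) denote the weights and biases of the two linear transformations.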
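The difference between the two activations can be seen in a small sketch. The helper names, the default `alpha=1.0`, and the toy weights below are assumptions for illustration; the feed-forward function applies the same weights to one time step at a time, which is what positionwise means here.

```python
import math


def relu(x):
    """ReLU clamps every negative input to zero."""
    return max(0.0, x)


def elu(x, alpha=1.0):
    """ELU keeps a smooth, bounded negative output (alpha is assumed to be 1)."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def linear(x, W, b):
    """x: length-n vector, W: n x m matrix as a list of rows, b: length-m bias."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j]
            for j in range(len(b))]


def ffn_elu(x, W1, b1, W2, b2):
    """Feed-forward network for a single time step: linear -> ELU -> linear."""
    hidden = [elu(h) for h in linear(x, W1, b1)]
    return linear(hidden, W2, b2)


# Toy weights (identity matrices, zero biases) chosen only for readability.
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [0.0, 0.0]
y = ffn_elu([1.0, -1.0], identity, zeros, identity, zeros)
```

With ReLU the second component would be exactly zero, whereas the ELU passes a small negative value through, so a gradient is still available for that unit.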

Masked Multi-Head Attention

Masked Multi-Head Attention includes an optional masking mechanism, which determines how Attention is calculated, i.e., it prevents Attention from attending to time steps in specific locations. In general, the mask in masked Multi-Head Attention is an \(m \times n\) matrix. In Self-Attention, m equals n, and the mask is a square matrix, where m or n denotes the length of the input sequence. In encoder–decoder Attention, m denotes the length of the decoder Self-Attention results, and n denotes the length of the encoder output. The formula of Scaled Dot-Product Attention in masked Multi-Head Attention can be given as follows:

\[\mathrm{Attention}(Q,K,V)=\mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}+M\right)V\]

where M denotes the mask matrix, 0 elements in the mask represent the corresponding time steps that will be attended to, and negative infinity elements represent the corresponding time steps that will be ignored in the Attention calculation. After Softmax in Scaled Dot-Product Attention, the corresponding Attention weight of each negative infinity element will be infinitely close to 0, so any information from the masked time steps will not flow into the current time step.
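The effect of the additive mask can be checked numerically. The sketch below is an illustration under assumed toy inputs, not the authors' code: it adds a mask of 0 and negative-infinity entries to the scaled scores before Softmax and shows that masked time steps receive a weight of exactly 0 in floating-point arithmetic.

```python
import math

NEG_INF = float("-inf")


def masked_attention_weights(Q, K, M):
    """Attention weights softmax(QK^T / sqrt(d_k) + M), computed row by row.

    M[i][j] is 0.0 where query step i may attend to key step j and
    NEG_INF where it may not. Every row must keep at least one 0.0 entry.
    """
    d_k = len(K[0])
    weights = []
    for i, q in enumerate(Q):
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) + M[i][j]
            for j, k in enumerate(K)
        ]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]  # exp(-inf) evaluates to 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Three time steps; each step may attend only to itself and earlier steps.
steps = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mask = [
    [0.0, NEG_INF, NEG_INF],
    [0.0, 0.0, NEG_INF],
    [0.0, 0.0, 0.0],
]
w = masked_attention_weights(steps, steps, mask)
```

The first row of `w` places all of its weight on the first time step, because the other two positions are masked out.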

Tsformer

Input and output of Tsformer

For model input, a rolling window strategy is adopted, that is, the data are taken for a period of time iteratively with a step size of 1 according to a specific window size. Three parameters are used to control the rolling window size and the number of steps to be forecasted: the encoder input length, the decoder input length, and the forecast horizon. When 1-day-ahead forecasting is performed, the three parameters are set to 7, 5, and 1. Figure 3 shows the input and output of the Tsformer; \({T_i}\) denotes the i-th day; and \({P_i}\) denotes the forecasting of the i-th day. The encoder and decoder inputs of the Tsformer in Fig. 3 contain overlap, aiming to reuse the potential raw features of short-term dependency to prevent the degradation of Attention to short-term dependency.

For 1-day-ahead forecasting, the proposed method utilizes features from the past 7 days. The inputs of the encoder are the features in \({T_1}\) to \({T_7}\); the inputs of the decoder are the features in \({T_4}\) to \({T_7}\) and \(Toke{n_8}\), which is a dummy time step containing the calendar of the forecast horizon. The output of the model is \({P_4}\) to \({P_8}\), and \({P_8}\) denotes the forecast.

Unlike other models, models with Encoder-Decoder architecture are used for the sequence-to-sequence task; the output of the proposed Tsformer is a sequence whose length is determined by the decoder input length.

In the prediction stage, the decoder obtains information from the previous time steps to generate the next time step. To generate the decoder output \({P_8}\), a placeholder \(Toke{n_8}\) is required to represent the unknown \({T_8}\). The number of tokens is determined by the forecast horizon, and the tokens are filled with the scalar 0.
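The rolling window described in this section can be sketched as follows. This is a minimal illustration for a univariate series, assuming (as the 1-day-ahead example of 7, 5, and 1 suggests) that the decoder input length counts the zero-filled tokens; the function name `rolling_windows` is hypothetical.

```python
def rolling_windows(series, enc_len=7, dec_len=5, horizon=1, token=0.0):
    """Slide a window over `series` with a step size of 1.

    Each sample is (encoder_input, decoder_input, target):
      - encoder_input: enc_len consecutive days
      - decoder_input: the last (dec_len - horizon) encoder days,
        followed by `horizon` zero-filled placeholder tokens
      - target: the true values aligned with the decoder input
    """
    overlap = dec_len - horizon  # days shared by encoder and decoder inputs
    samples = []
    last_start = len(series) - enc_len - horizon
    for start in range(last_start + 1):
        enc_end = start + enc_len
        encoder_input = series[start:enc_end]
        decoder_input = series[enc_end - overlap:enc_end] + [token] * horizon
        target = series[enc_end - overlap:enc_end + horizon]
        samples.append((encoder_input, decoder_input, target))
    return samples


# Days are numbered 1..10 so that the values can be read as T_1..T_10.
days = list(range(1, 11))
samples = rolling_windows(days)
first_encoder, first_decoder, first_target = samples[0]
```

For the first window, the encoder sees days 1 to 7, the decoder sees days 4 to 7 plus one token, and the target covers days 4 to 8, which matches the layout of \({T_1}\) to \({T_7}\), \(Toke{n_8}\), and \({P_4}\) to \({P_8}\) described above.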

Input layer

The input layers of the encoder and decoder in the vanilla Transformer are implemented by an embedding layer, which transforms the discrete variables into dense vectors. As training progresses, the corresponding dense vectors of discrete variables present a particular distribution in the vector space; their distance in the vector space can be used to measure the difference between them.

The vanilla Transformer has excellent performance in machine translation; it accepts the vocabulary ID as input. As the frequency of each ID in the vocabulary is high in the corpus, the vanilla Transformer has a promising ability to distinguish dense vectors after embedding mapping. However, in time series forecasting, most of the variables in the input sequence are continuous and a regression problem must be solved, whereas the embedding layer can transform only discrete variables into dense vectors. Therefore, in the proposed Tsformer, the embedding layer in the vanilla Transformer is replaced by a fully connected layer.

Positional encoding

The hidden states of the LSTM or GRU are calculated through the accumulation of states; the LSTM or GRU can naturally represent the location information of time steps. In the Attention mechanism of the Transformer, the Attention of all the time steps is calculated simultaneously. If the time steps in the Transformer are not distinguished, the Attention calculation at all time steps will yield the same result. In general, additional position information is required by the Transformer. The additional position information is introduced by positional encoding after the input layer.

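Two positional encodings are commonly used: positional embedding in BERT and sinusoid positional encoding in the vanilla Transformer; the formula of sinusoid positional encoding can be denoted as follows:

\[PE_{(pos,2i)}=\sin \left(\frac{pos}{10000^{2i/{d_{model}}}}\right),\qquad PE_{(pos,2i+1)}=\cos \left(\frac{pos}{10000^{2i/{d_{model}}}}\right)\]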

where \({d_{model}}\) denotes the dense vector dimension of each time step mapped by the input layer; this dimension is consistent with the dimension of the input and output of the encoder and decoder. \(2i\) and \(2i+1\) represent the even and odd dimensions in \({d_{model}}\), respectively. \(pos\) represents the position index of the input time step. Let the length of the input sequence be N; then, the range of pos is \(\left[ {0,N - 1} \right]\).
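A minimal sketch of sinusoid positional encoding follows, using the base of 10000 from the vanilla Transformer. The function name and the small sizes in the example are assumptions chosen for readability.

```python
import math


def sinusoid_positional_encoding(n_positions, d_model):
    """Return an n_positions x d_model table of fixed positional encodings.

    Even dimensions (2i) use sine and odd dimensions (2i + 1) use cosine,
    both with the angle pos / 10000 ** (2i / d_model).
    """
    table = [[0.0] * d_model for _ in range(n_positions)]
    for pos in range(n_positions):  # pos ranges over [0, N - 1]
        for dim in range(d_model):
            pair = dim // 2  # the index i shared by dimensions 2i and 2i + 1
            angle = pos / (10000 ** (2 * pair / d_model))
            table[pos][dim] = math.sin(angle) if dim % 2 == 0 else math.cos(angle)
    return table


# Encoding for an encoder input of 7 time steps with a toy d_model of 4.
pe = sinusoid_positional_encoding(n_positions=7, d_model=4)
```

Because the table depends only on the position and the dimension, it can be computed once and requires no trainable weights.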

The purpose of positional embedding is to implicitly learn a richer position relationship. However, the datasets used in tourism demand forecasting are generally small, making it more difficult for the learned positional embedding to learn the relative relationships of time steps. Compared with positional embedding, sinusoid positional encoding is advantageous because the encoding of the position is fixed for each time step, and training of additional weights is not needed. Therefore, sinusoid positional encoding is adopted in the proposed Tsformer to encode the positions of time steps explicitly. After the positional encoding is generated, it is added positionwise to the output of the input layer, as shown in Fig. 4.

Attention masking mechanism

Context matters in the text sequence; in the vanilla Transformer, the Attention of each time step is calculated with other time steps to capture context information, which leads to complex Attention interactions. However, the time steps in the time series are related only to the previous time steps. Global Attention to every time step may result in the introduction of redundant information and prevent the discovery of the dominant Attention in Attention visualization. Therefore, encoder source masking, decoder target masking, and decoder memory masking are adopted to simplify Attention interactions in time series processing to avoid redundant information and highlight the dominant Attention.

Encoder source masking

To calculate Self-Attention in the encoding stage, encoder source masking is adopted to ensure that every time step only includes previous information to simplify the Attention interactions. Unlike in LSTM, the hidden state of each time step does not rely on state accumulation and is calculated in parallel. If long-term dependencies exist, since the hidden states of earlier time steps are restricted to contain less information, the Attention to earlier time steps in the encoder output will be more explicit in the encoder–decoder Attention. The encoder source mask in 1-day-ahead forecasting can be given elementwise as follows:

\[{M_{ij}}=\begin{cases} 0, & j \le i \\ - inf, & j>i \end{cases}\qquad i,j=1, \ldots ,7\]

where \(- inf\) represents negative infinity.

Figure 5 takes the calculation of the fifth time step of encoder Self-Attention in 1-day-ahead forecasting as an example: the green arrows point to the time steps that participate in the Attention calculation, whereas the red arrows point to the time steps that are not attended. Let \(En{c_{Ii}}\) denote the i-th time step of the encoder input. Figure 5 shows that to calculate \(En{c_{I5}}\), the time steps from \(En{c_{I1}}\) to \(En{c_{I5}}\) are attended.
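The encoder source mask for this example can be generated programmatically. The sketch below is an illustration with hypothetical helper names; it builds a square mask in which each time step may attend only to itself and earlier time steps, and then lists the attended steps for the fifth row.

```python
NEG_INF = float("-inf")


def causal_mask(n):
    """n x n mask: 0.0 where column j <= row i (attended), -inf otherwise."""
    return [[0.0 if j <= i else NEG_INF for j in range(n)] for i in range(n)]


def attended_steps(mask, step):
    """1-based indices of the time steps that `step` (1-based) may attend to."""
    return [j + 1 for j, value in enumerate(mask[step - 1]) if value == 0.0]


# Encoder input length of 7, as in the 1-day-ahead setting.
source_mask = causal_mask(7)
fifth_step = attended_steps(source_mask, 5)
```

Here `fifth_step` lists time steps 1 to 5, in line with the description of Fig. 5.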

Decoder target masking

To calculate Self-Attention in the decoder, Attention must be prevented from attending the follow-up time steps of the current time step. In multistep ahead forecasting, multiple tokens are included in the decoder input. When the current token is decoded, the follow-up tokens of the current token are equivalent to padding in the NLP models, which do not contribute to the forecasting of the current token and introduce redundant information. Therefore, decoder target masking is adopted to exclude the follow-up tokens of the current token properly. The decoder target mask in 1-day-ahead forecasting can be represented as follows:

Let \(De{c_{Ii}}\) denote the i-th time step of the decoder input. Figure 6 shows that the actually attended time steps in decoder Self-Attention in the calculation of \(De{c_{I5}}\) in 1-day-ahead forecasting are \(De{c_{I4}}\) and \(De{c_{I5}}\).

Decoder memory masking

Since the encoder input and decoder input overlap, when the encoder–decoder Attention between the calculation results of the decoder Self-Attention and encoder output is calculated, the unattended time steps in the encoder must be prevented from being attended to in the decoder through encoder–decoder Attention. Therefore, decoder memory masking is adopted to keep the current time step in the calculation results of the decoder from attending the follow-up time steps of the corresponding time step in the encoder output. The decoder memory mask in 1-day-ahead forecasting can be represented as follows:

Let \(En{c_{Oi}}\) denote the i-th time step in the encoder output and \(De{c_{Si}}\) denote the i-th time step in the calculation results of the decoder Self-Attention. Figure 7 shows that when the encoder–decoder Attention between \(De{c_{S5}}\) and the encoder output in 1-day-ahead forecasting is calculated, the actually attended time steps in the encoder output range from \(En{c_{O1}}\) to \(En{c_{O4}}\).

The calendar of the data points in the forecast horizon

In Sect. “Input and output of Tsformer”, zero-filled vectors are used for tokens to represent the time steps to be forecasted. However, in tourism demand forecasting, the information of data points in the forecast horizon is not completely unknown; the calendar of data points in the forecast horizon can be obtained before forecasting. Weekday and month are adopted to represent the calendar for better learning of seasonality. Therefore, the calendar of data points in the forecast horizon is added to tokens, leaving the unknown tourism demand and search engine index filled by zero to enhance the forecasting performance of the proposed Tsformer model. Figure 8 presents the adoption of the calendar of \(Toke{n_8}\) in 1-day-ahead forecasting.
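A token that carries the calendar of the day to be forecast can be sketched as follows. The feature layout (two unknown features followed by month and weekday) is a hypothetical simplification for illustration; the real feature vector in this study contains more variables.

```python
from datetime import date


def make_token(day, n_unknown=2):
    """Build a placeholder time step for a day in the forecast horizon.

    Hypothetical layout: [tourism demand, search engine index, month, weekday].
    The unknown features are filled with zero; the calendar is known in
    advance, so month (0 to 11) and weekday (Monday = 0) are filled in.
    """
    unknown = [0.0] * n_unknown
    calendar = [float(day.month - 1), float(day.weekday())]
    return unknown + calendar


# 30 June 2017 is a Friday, so month is 5 and weekday is 4.
token = make_token(date(2017, 6, 30))
```

The model therefore receives the known calendar of the target day while the quantities to be predicted remain masked by zeros.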

Output layer

The output of the vanilla Transformer is activated by Softmax and converted to the confidence of the IDs in the vocabulary. In this case, the vanilla Transformer solves a classification problem. Tourism demand forecasting, by contrast, is a regression problem, so Softmax is removed from the last layer.

The architecture of the proposed Tsformer is shown in Fig. 9:

Experiments

Datasets

Tourism demand before the COVID-19 outbreak

As shown in Fig. 10, tourism demand data from 1 January 2013 to 30 June 2017 in Jiuzhaigou Valley49 and 25 September 2015 to 31 December 2018 at Siguniang Mountain50 were collected.

The two datasets were divided into a training set, a validation set, and a test set. For the Jiuzhaigou Valley dataset, data from 1 January 2013 to 30 June 2015 were used as the training set; 1 July 2015 to 30 June 2016 were in the validation set; and 1 July 2016 to 30 June 2017 were in the test set. For the Siguniang Mountain dataset, 25 September 2015 to 31 December 2017 were included in the training set; 1 January 2018 to 30 June 2018 were included in the validation set; and 1 July 2018 to 31 December 2018 were included in the test set. Table 2 presents the number of samples in the two datasets.

Tourism demand after the COVID-19 outbreak

To evaluate the performance of the proposed method in terms of forecasting the recovery of tourism demand after the COVID-19 outbreak, we collected tourism demand data from 31 March 2020 to 30 September 2021 in Jiuzhaigou Valley and from 31 March 2020 to 3 June 2021 at Siguniang Mountain, as shown in Fig. 11. For Jiuzhaigou Valley, data from 31 March 2020 to 31 March 2021 were used as the training set; 1 April 2021 to 31 May 2021 were in the validation set; and 1 June 2021 to 30 September 2021 were in the test set. For the Siguniang Mountain dataset, 31 March 2020 to 14 January 2021 were in the training set; 10 February 2021 to 9 April 2021 were in the validation set; and 10 April 2021 to 3 June 2021 were in the test set. Table 3 presents the number of samples in the two datasets.

We have published the datasets used in this study, which can be accessed online51.

Statistical analysis

As shown in Figs. 12 and 13, we plotted the ACF and PACF of the two datasets to analyse their characteristics and judge their stationarity. The ACF of both datasets decreases with increasing lag and eventually approaches 0, i.e., it has a long tail, whereas the PACF of both datasets is significant at the first order and quickly decreases to close to 0, i.e., it has a truncated tail. The long tail of the ACF also indicates that the data exhibit long-term dependency. Furthermore, we performed ADF analysis on the two datasets, obtaining p values of approximately 0.00081 and approximately 0.00065 for Jiuzhaigou Valley and Siguniang Mountain, respectively. Both p values are much lower than 0.05; thus, the null hypothesis is rejected, and both series are judged to be stationary. Therefore, the tourism demand of Jiuzhaigou Valley and Siguniang Mountain can be forecast, but legacy time series methods may not perform well because of potential long-term dependency.

Features for tourism demand forecasting

Because the search engine index is considered a main feature for tourism demand forecasting, we used Jiuzhaigou Valley and Siguniang Mountain as keywords and collected the corresponding Baidu indices, together with those of 11 other related keywords.

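The Pearson correlation coefficient measures the linear correlation between two variables. Its value lies between −1 and 1, and a larger absolute value represents a stronger linear correlation. In its standard form, the Pearson correlation coefficient can be given as follows:

\(r=\frac{\sum_{i=1}^{N}\left( {x_i}-\bar{x} \right)\left( {z_i}-\bar{z} \right)}{\sqrt{\sum_{i=1}^{N}{\left( {x_i}-\bar{x} \right)}^{2}}\sqrt{\sum_{i=1}^{N}{\left( {z_i}-\bar{z} \right)}^{2}}}\)

where \({x_i}\) and \({z_i}\) denote paired observations of the two variables, \(\bar{x}\) and \(\bar{z}\) denote their means, and \(N\) denotes the number of pairs.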
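As a minimal sketch, the coefficient can be computed as the covariance of the two variables divided by the product of their standard deviations. The function name `pearson_r` and the sample values below are hypothetical and serve only as an illustration.

```python
import math


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences (standard definition)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical values: a search index and daily arrivals (illustration only).
search_index = [120, 150, 170, 160, 210]
arrivals = [1000, 1300, 1500, 1400, 1900]
r = pearson_r(search_index, arrivals)
```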

Table 4 shows all keywords in the two datasets and the Pearson correlation coefficients between their corresponding Baidu indices and tourism demand. The tourism demand strongly correlates with the search indices corresponding to keywords other than Jiuzhaigou ticket.

For richer features in tourism demand forecasting, daily weather, highest temperature, wind, and air quality data were collected. Since the annual air quality and wind in Jiuzhaigou Valley and Siguniang Mountain are stable, these data are excluded. Date type, month, and weekday were included in the two datasets, considering the influences of holidays, seasonality, and the low season and high season for tourism.

Dummy variable encoding

The tourism demand datasets of Jiuzhaigou Valley and Siguniang Mountain contain categorical variables such as weather and date type, which cannot be used directly by the model. To handle these variables properly, they should be encoded into dummy variables. The date types can be divided into working days, weekends, and holidays, as shown in Table 5.

Weather has many categories, and more categories lead to the risk of overfitting. Similar weather events were merged into five categories, as shown in Table 6.

Month and weekday data were used to represent the calendar: January to December were encoded as 0 to 11, and Monday to Sunday were encoded as 0 to 6.
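The encoding described above can be sketched as follows. This is an illustration under stated assumptions: the order of the date-type categories and the helper names `one_hot` and `calendar_features` are hypothetical, and the weather categories of Table 6 are not reproduced.

```python
from datetime import date

# Category names follow the text; their order here is an assumption.
DATE_TYPES = ["working day", "weekend", "holiday"]


def one_hot(value, categories):
    """Dummy-variable (one-hot) encoding of a single categorical value."""
    return [1 if value == c else 0 for c in categories]


def calendar_features(day):
    """Month encoded as 0..11 and weekday as 0..6 (Monday = 0)."""
    return {"month": day.month - 1, "weekday": day.weekday()}


encoded_type = one_hot("holiday", DATE_TYPES)
features = calendar_features(date(2016, 10, 1))  # a Saturday in October
```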

Preprocessing

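The variables in the datasets have inconsistent scales, which is problematic for models trained by gradient descent algorithms. To prevent variables with large values from dominating the output of the models and to accelerate convergence, the data must be normalized to make the variables comparable. Min–max normalization was adopted, which in its standard form can be denoted as follows:

\(x^{\prime}=\frac{x-{x_{\min }}}{{x_{\max }}-{x_{\min }}}\)

where \(x\) denotes the original value, \({x_{\min }}\) and \({x_{\max }}\) denote the minimum and maximum of the variable, and \(x^{\prime}\) denotes the normalized value.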
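A minimal sketch of min–max normalization follows. It assumes that the minimum and maximum are estimated on the training set and then reused for other splits, which is a common convention but is not stated in the text; all function names are hypothetical.

```python
def min_max_fit(values):
    """Return the (min, max) pair used for scaling, e.g. from the training set."""
    return min(values), max(values)


def min_max_transform(values, lo, hi):
    """Scale values to [0, 1] with a previously fitted (lo, hi) pair."""
    span = hi - lo
    if span == 0:
        return [0.0 for _ in values]  # constant variable: map everything to 0
    return [(v - lo) / span for v in values]


def min_max_inverse(scaled, lo, hi):
    """Map scaled values back to the original units."""
    return [s * (hi - lo) + lo for s in scaled]


train = [10.0, 20.0, 40.0]  # hypothetical training values
lo, hi = min_max_fit(train)
scaled = min_max_transform(train, lo, hi)
restored = min_max_inverse(scaled, lo, hi)
```

Keeping the inverse transform available lets forecasts be reported in visitor counts rather than in scaled units.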

Metrics

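To compare the performance of the models, the Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), and Mean Absolute Percentage Error (MAPE) were used as metrics, which can be expressed as follows:

\(\text{MAE}=\frac{1}{N}\sum_{i=1}^{N}\left| {\hat {y}_i}-{y_i} \right|\)

\(\text{RMSE}=\sqrt{\frac{1}{N}\sum_{i=1}^{N}{\left( {\hat {y}_i}-{y_i} \right)}^{2}}\)

\(\text{MAPE}=\frac{100\%}{N}\sum_{i=1}^{N}\left| \frac{{\hat {y}_i}-{y_i}}{{y_i}} \right|\)

where \({\hat {y}_i}\) denotes the predicted value, \({y_i}\) denotes the ground truth, and \(N\) denotes the number of samples.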
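The three metrics can be sketched in a few lines, assuming their standard definitions and a MAPE expressed in percent. The function names and the sample values are illustrative only.

```python
import math


def mae(y_true, y_pred):
    """Mean Absolute Error."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root Mean Squared Error."""
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true, y_pred):
    """Mean Absolute Percentage Error in percent; ground truth must be nonzero."""
    return 100.0 * sum(abs((p - t) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [100.0, 200.0, 400.0]  # hypothetical ground truth
y_pred = [110.0, 190.0, 400.0]  # hypothetical forecasts
scores = (mae(y_true, y_pred), rmse(y_true, y_pred), mape(y_true, y_pred))
```

Because the RMSE squares the errors, it penalizes large misses more heavily than the MAE, which is why the two metrics can rank models differently.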

Setup

We utilized the implementations of SVR and k-NN in the Python package scikit-learn and those of the ARIMA series in the Python package statsmodels. For the ANN, the LSTM, and the proposed Tsformer, we used the basic layers in PyTorch.

In the experiments, the forecast horizons are set to 1, 7, 15, and 30, and most of the hyperparameters are obtained through a grid search. The hyperparameters of the proposed Tsformer and other baseline models in 1-day-ahead forecasting on the Jiuzhaigou Valley and Siguniang Mountain datasets are given in Tables 7 and 8; the hyperparameters at other forecast horizons are similar. To improve the performance of the baseline models, some adjustments were made.

(seasonal) Naive

In 1-day-ahead forecasting, the Naive method uses the ground truth at time \(t - 1\) as the forecast at time t. However, the standard Naive method is not suitable for multiday-ahead forecasting. Therefore, in multiday-ahead forecasting, the standard Naive method is replaced by the seasonal Naive method, which uses the ground truth at time \(t - s\) as the forecast at time t, where s is the seasonal period. If time \(t - s\) still lies within the forecast horizon, then the forecast is taken from the valid ground truth of an earlier period.
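The fallback rule can be sketched as follows. This is an illustration, not the study's code: the function name is hypothetical, and the seasonal period of 7 days in the example is an assumption, since the value of s is not given in this section.

```python
def seasonal_naive(history, horizon, season):
    """Forecast `horizon` steps by copying the value one or more seasons back."""
    if season < 1 or len(history) < season:
        raise ValueError("history must cover at least one full season")
    n = len(history)
    forecasts = []
    for h in range(1, horizon + 1):
        # The target sits at index n - 1 + h; look one season back.
        idx = n - 1 + h - season
        # If that index is still in the forecast horizon, step back further.
        while idx >= n:
            idx -= season
        forecasts.append(history[idx])
    return forecasts


history = list(range(1, 15))  # 14 hypothetical daily observations
weekly = seasonal_naive(history, horizon=10, season=7)
naive = seasonal_naive(history, horizon=3, season=1)
```

With `season=1`, the function reduces to the standard Naive method and repeats the last observed value.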

ARIMA series models

In accordance with the Bayesian Information Criterion (BIC), the hyperparameters of the ARIMA series models were selected on the training set. ACF and PACF were used to determine the approximate range of the hyperparameters, and a grid search was performed to select the model with the lowest BIC. To handle multiday-ahead forecasting, out-of-sample forecasts were used in place of lagged dependent variables. For relative fairness in comparison with other models, rolling-origin evaluations52 were also applied to multiday-ahead forecasting of ARIMA series models.
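Rolling-origin evaluation can be sketched as a generator of forecast origins. The expanding training window, the step size, and the function name below are assumptions made for illustration; the text does not specify these details.

```python
def rolling_origin_splits(n_samples, initial_train, horizon, step=1):
    """Yield (train_end, test_indices) pairs with a forward-moving origin.

    Observations with index < train_end are available for fitting; the next
    `horizon` indices are the out-of-sample targets for that origin.
    """
    origin = initial_train
    while origin + horizon <= n_samples:
        yield origin, list(range(origin, origin + horizon))
        origin += step


splits = list(rolling_origin_splits(n_samples=10, initial_train=6, horizon=2))
```

At each origin, the model forecasts the whole horizon from data up to the origin only, and the errors are then pooled across origins.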

SVR

SVR only supports single-target regression, and it cannot handle multiday-ahead forecasting natively. To solve this problem, we utilize the MultiOutputRegressor in scikit-learn to perform multiday-ahead forecasting with SVR. The MultiOutputRegressor builds multiple SVR models to forecast each day in the forecast horizon.
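The multi-output setup needs one target column per day in the forecast horizon. A minimal sketch of building such input and target windows is shown below; the function name and window sizes are hypothetical, and the feature layout used in the study may differ.

```python
def make_windows(series, input_len, horizon):
    """Build (X, Y) pairs: X holds `input_len` past values, Y the next `horizon`."""
    X, Y = [], []
    for start in range(len(series) - input_len - horizon + 1):
        X.append(series[start:start + input_len])
        Y.append(series[start + input_len:start + input_len + horizon])
    return X, Y


series = list(range(10))  # hypothetical univariate demand series
X, Y = make_windows(series, input_len=3, horizon=2)
```

With scikit-learn installed, `MultiOutputRegressor(SVR()).fit(X, Y)` would then fit one SVR per column of `Y`, that is, one model per day in the forecast horizon.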

Results

Table 9 and Fig. 14 present the results of the Tsformer and the nine baseline models on the Jiuzhaigou Valley dataset. The proposed Tsformer outperforms the other methods in terms of the MAE and MAPE for the forecast horizon \(\in \left\{ {1,7} \right\}\). For the forecast horizon \(\in \left\{ {15,30} \right\}\), the Tsformer outperforms all the baseline methods under all the metrics. A further comparison on the Siguniang Mountain dataset is shown in Table 9 and Fig. 15, indicating that the Tsformer yields a greater RMSE than the LSTM does but a lower MAE and MAPE when the forecast horizon is set to 7. For the forecast horizon \(\in \left\{ {1,15,30} \right\}\), the Tsformer outperforms all the baseline methods under all the metrics.

With increasing forecast horizons, the MAE, RMSE, and MAPE of the (seasonal) Naive, ARIMA series, SVR, k-NN, and ANN increase faster than those of the LSTM and Tsformer do, and the former methods fail to perform well in long-term forecasting. Compared with Tsformer, the performance of the LSTM is more sensitive to the forecast horizon, which can be interpreted as follows: although the LSTM has a large receptive field, information from earlier time steps will vanish in the cell state of the LSTM because of its limited memory capacity. For the Tsformer, the receptive field is infinite, benefiting from Multi-Head Attention. Tsformer does not require the stepwise accumulation of calculations in time series processing but calculates the Attention of each time step simultaneously. Increasing the forecast horizon and input sequence length makes the performance advantage of the Tsformer in capturing long-term dependency more considerable. In summary, the proposed Tsformer performs competitively in short-term and long-term tourism demand forecasting, and it can be an alternative method for long-term tourism demand forecasting.

Efficiency evaluation

We further compared the efficiency of the proposed method and the baseline models on a PC with an NVIDIA Titan X (Pascal) GPU, an Intel Core i5-8400 CPU, and 16 GB of RAM. In this experiment, we forecast the test set of the Jiuzhaigou Valley dataset described in Sect. “Datasets” and recorded the average time over 5 executions of the proposed method and of each baseline method. Table 10 presents the results.

The results demonstrate that although deep learning models are more complicated in architecture and require more computations, optimizations in the implementation can significantly increase the efficiency. The time complexity of Multi-Head Attention is \(O\left({n}^{2}d\right)\), where \(n\) denotes the sequence length and \(d\) denotes the dense vector dimension of each time step mapped by the input layer. We focus on the comparison between the LSTM and the Tsformer. The time complexity of the LSTM is \(O\left({d}^{2}n\right)\). Without considering computational optimization, when the sequence length is less than the dimension (\(n<d\)), Multi-Head Self-Attention in the Tsformer requires fewer operations than the LSTM does. Considering computational optimization, each time step and each attention head of Multi-Head Attention can be computed in parallel, whereas the LSTM must process the time steps sequentially. Therefore, for a comparable number of layers, the computational efficiency of the Tsformer is greater than that of the LSTM. Nevertheless, the time consumption of the Tsformer in this experiment is several times greater than that of the LSTM because the Tsformer uses multiple layers to achieve better performance, whereas the LSTM does not converge with the same number of layers.
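The comparison of the two complexities can be made concrete with a small worked example. The counts below are leading-order terms that ignore constant factors, and the sizes (a 7-step window and a 64-dimensional embedding) are hypothetical values chosen for illustration.

```python
def attention_ops(n, d):
    """Leading-order cost of self-attention over n steps of dimension d: n^2 * d."""
    return n * n * d


def lstm_ops(n, d):
    """Leading-order cost of one LSTM layer over n steps of dimension d: n * d^2."""
    return n * d * d


# Hypothetical sizes, not taken from Tables 7 and 8.
n, d = 7, 64
ratio = attention_ops(n, d) / lstm_ops(n, d)  # simplifies to n / d
```

The ratio simplifies to n/d, so self-attention is the cheaper operation per layer whenever the sequence is shorter than the embedding dimension.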

Ablation study

The Tsformer in Table 9 uses the calendar of data points in the forecast horizon. To evaluate the impact of this calendar on forecasting performance, ablation studies on the Jiuzhaigou Valley and Siguniang Mountain datasets are conducted to compare the Tsformer with and without the calendar of data points in the forecast horizon. Table 11 presents the results of the ablation studies. In 1-day-ahead forecasting on the Jiuzhaigou Valley dataset, the Tsformer with the calendar outperforms the Tsformer without the calendar under all three metrics (MAE, RMSE, and MAPE). In 7-day- to 30-day-ahead forecasting, the Tsformer with the calendar outperforms that without the calendar under at least two metrics. For the Siguniang Mountain dataset, the MAE of the Tsformer with the calendar is slightly greater than that of the Tsformer without the calendar, but the Tsformer with the calendar performs better at other forecast horizons. The ablation studies on the two datasets demonstrate that the performance degrades when the calendar of data points in the forecast horizon is not used; in other words, using the calendar benefits the forecasting performance of the proposed Tsformer. Moreover, the ablation studies indicate that even without the calendar, the Tsformer still outperforms the baseline models in Table 9 in most cases.

Tourism recovery forecasting after the COVID-19 outbreak

Experiments were conducted in Sect. “Results” to compare the proposed Tsformer with baseline methods. However, the data points used in the experiments have similar distributions. Tourism demand around the world has been greatly affected by COVID-19 and has started to recover gradually. Therefore, further experiments are conducted to evaluate the robustness of the proposed Tsformer under the recovery of tourism demand. Note that since the data points in the Siguniang Mountain dataset are insufficient for 30-day-ahead forecasting, 30-day-ahead forecasting on the Siguniang Mountain dataset is not considered.

Table 12 presents the performance of the proposed Tsformer and baseline methods on the two datasets after the COVID-19 outbreak. For the forecast horizon \(\in \left\{ {1,7} \right\}\) on the Jiuzhaigou Valley dataset and the forecast horizon \(\in \left\{ {1,7,15} \right\}\) on the Siguniang Mountain dataset, the forecasting performance of the proposed Tsformer is acceptable. For the forecast horizon \(\in \left\{ {15,30} \right\}\) on the Jiuzhaigou Valley dataset, all the methods in the experiments fail to provide reliable forecasts, which can be attributed to data bottlenecks. First, tourism demand after the COVID-19 outbreak changed dramatically, and the seasonality of tourism demand decreased. Second, the limited number of data points for training leads to limited generalization on the test set. Although the proposed Tsformer is capable of nonlinear modelling and long-term dependency capture, it performs well only within a limited forecast horizon under these conditions. The results reported in Table 12 show that the proposed Tsformer outperforms the baseline methods under at least two metrics despite the data bottleneck, demonstrating that the proposed Tsformer is more robust and provides more accurate forecasts of tourism demand recovery after the COVID-19 outbreak than the baseline methods do.

Interpretability

To identify the main focus of the Tsformer, a study on its interpretability is conducted. For 1-day-ahead forecasting on the Jiuzhaigou Valley dataset, in which Day 1 to Day 7 are used to forecast Day 8, the Self-Attention and encoder–decoder Attention weight matrices in each decoder layer are visualized. All the samples from the training set are input into the Tsformer. After forward propagation, the Attention weight matrix of each sample is obtained. To obtain a more general picture, the Attention weights of all the samples are averaged. As shown in Fig. 16, the Attention weights assigned to other time steps differ for each time step. The decoder target masking mechanism sets the Attention weights of all follow-up time steps of each time step to 0. It thus prevents the Tsformer from attending to information after the current time step, which avoids redundant information.
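The effect of target masking on the Attention weights can be illustrated with a small sketch. It assumes a lower-triangular mask in which time step i may attend only to steps j ≤ i; the function names and the score values are hypothetical, and the actual Tsformer implementation relies on PyTorch layers rather than this code.

```python
import math


def masked_softmax_row(scores, allowed):
    """Softmax over one row of scores; disallowed positions get weight 0."""
    kept = [s for s, ok in zip(scores, allowed) if ok]
    peak = max(kept)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention_weights(score_matrix):
    """Apply a lower-triangular (causal) mask row by row, then normalize."""
    n = len(score_matrix)
    return [
        masked_softmax_row(row, [j <= i for j in range(n)])
        for i, row in enumerate(score_matrix)
    ]


# Hypothetical raw attention scores for three time steps.
scores = [[0.2, 1.0, -0.5], [0.1, 0.3, 2.0], [0.0, 0.5, 1.0]]
weights = causal_attention_weights(scores)
```

Every entry above the diagonal of `weights` is exactly 0, which matches the zero weights on follow-up time steps described in the text.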

Figure 16 presents the Self-Attention weight matrix of each decoder layer in the Tsformer. In the process of generating Day 8, layer 1 pays more Attention to Day 7 and Day 8 itself, and layer 2 mainly attends to Days 5, 6, and 7. Layer 3 focuses mainly on Days 6, 7, and 8, and the closer the day is to Day 8, the larger the Attention weight is. Layer 4 also follows this rule, focusing mainly on Days 4 to 8. The weight of Day 8 is close to that of Day 7, which indicates that the Tsformer uses the information from recent days and the calendar introduced by Day 8.

Figure 17 presents the encoder–decoder Attention weight matrix of each decoder layer in the Tsformer. When encoder–decoder Attention calculations are performed, each time step in the decoder can attend only to the previous time steps in the encoder. The Attention weights of the other time steps are 0, which indicates that the decoder memory masking mechanism works properly. In layer 1, Days 3 to 7 receive larger Attention weights. In layer 2, Days 1 to 7 have similar Attention weights, but Day 1 is mainly attended to, which demonstrates that the Tsformer uses weekly seasonal features when forecasting tourism demand on the 8th day. Days 1 to 7 are attended to in layer 3, and days closer to Day 8 receive larger Attention weights. The visualization of the encoder–decoder Attention reveals that the Tsformer tends to use information from the previous five days for 1-day-ahead forecasting and can capture seasonal features of tourism demand.

Discussion and conclusion

Current AI-based methods for tourism demand forecasting are weak in capturing long-term dependency, and most of them lack interpretability. This study aims to improve the Transformer used for machine translation and proposes the Tsformer for short-term and long-term tourism demand forecasting. In the proposed Tsformer, the encoder and decoder are used to capture long-term and short-term dependency, respectively. Encoder source masking, decoder target masking, and decoder memory masking are used to simplify the interactions of Attention, highlight the dominant Attention, and implement time series processing in Multi-Head Attention. To further improve performance, the calendar of data points in the forecast horizon is used.

In this study, the Jiuzhaigou Valley and Siguniang Mountain tourism demand datasets are used to evaluate the performance of the proposed Tsformer and nine baseline models. The experimental results indicate that in short-term and long-term tourism demand forecasting before and after the COVID-19 pandemic, the proposed Tsformer outperforms the baseline models in terms of the three metrics of the MAE, RMSE, and MAPE in most cases and achieves competitive performance. To understand the impact of using the calendar of data points in the forecast horizon, ablation studies are conducted to evaluate the performance of the Tsformer with or without the calendar. The results of ablation studies demonstrate that using the calendar of data points in the forecast horizon benefits the performance of the proposed Tsformer. To improve the interpretability of the Tsformer as a deep learning model, we visualize Self-Attention and encoder–decoder Attention in each decoder layer of the Tsformer to recognize the features that the Tsformer pays Attention to. The visualization indicates that the Tsformer mainly focuses on recent days and earlier days to capture seasonal features. Tokens that include the calendar of data points in the forecast horizon also obtain large Attention weights, demonstrating that the proposed Tsformer can extract rich features of tourism demand data.

The efficiency analysis and evaluation of the proposed Tsformer and other baseline methods demonstrate that although the proposed Tsformer is a deep learning method, it has similar efficiency to SVR and runs much faster than the ARIMA series under GPU acceleration. Although the proposed Tsformer with more layers runs 5–7 times slower than other machine learning baseline methods, such as ANN and LSTM, the trade-off between efficiency and performance is acceptable since daily tourism demand forecasting does not require high timeliness but rather high accuracy. In practical deployment, the proposed Tsformer can satisfy tourism demand forecasting under other scales in terms of efficiency, and it can be deployed on a normal PC.

Nevertheless, this study also has several limitations, and some directions for future work should be discussed.

First, data availability is a considerable challenge. Many scenic spots have only recently begun publishing statistical data, resulting in tourism demand datasets that are relatively small, typically comprising only a few thousand samples. Furthermore, the number of available samples after the COVID-19 outbreak was substantially smaller than that before. Consequently, this study is based on restricted datasets, which inherently limit the generalizability of AI-based forecasting methods. While the proposed method achieves competitive performance compared with baseline models, training a model with limited datasets may still limit its ability to produce precise tourism demand forecasts. Future studies should explore techniques to improve the generalization performance of forecasting methods with limited data, such as the use of transfer learning, data augmentation, or semisupervised learning approaches.

Additionally, this study adopted the proposed Tsformer on two datasets for daily tourism demand forecasting, but the proposed Tsformer is a general method in theory and can be used on more datasets with diverse frequencies and features, such as hotel accommodations and inbound tourists, in the future.

Second, while the proposed method demonstrates high performance in forecasting tourism demand both before the COVID-19 outbreak and during the recovery phase, it remains less sensitive to demand changes caused by public emergencies. The existing features used for tourism demand forecasting do not sufficiently account for sudden disruptions, such as pandemics, natural disasters, or geopolitical events. Future studies should focus on improving the robustness of forecasting models in response to such crises. Potential solutions include integrating real-time event indicators (e.g., government policies, news sentiment, or mobility restrictions).

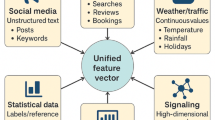

Moreover, the current study relies primarily on structured data, whereas unstructured data such as social media posts and online reviews could provide valuable supplementary insights. Given that the Transformer architecture was originally designed for natural language processing, future research could explore multimodal models that simultaneously process structured (e.g., historical visitor numbers) and unstructured (e.g., tourist sentiment from text) data to increase forecasting accuracy. Combining these data sources could enable more comprehensive and timely forecasting, particularly in volatile environments.

In summary, while this study contributes to advancing tourism demand forecasting, further investigations are needed to address data limitations, improve emergency responsiveness, and utilize multimodal data integration for more robust and generalizable forecasting.

Data availability

The data that support the findings of this study are openly available at [https://figshare.com/articles/dataset/Datasets_for_tourism_demand_forecasting_of_Jiuzhaigou_Valley_and_Siguniang_Mountain/29095091].

References

World Tourism Organization. International Tourism Highlights, 2020 Edition (UNWTO, 2021). https://doi.org/10.18111/9789284422456

Calderwood, L. U. & Soshkin, M. The Travel and Tourism Competitiveness Report 2019 (World Economic Forum, 2019).

Gu, Y., Onggo, B. S., Kunc, M. H. & Bayer, S. Small Island developing States (SIDS) COVID-19 post-pandemic tourism recovery: A system dynamics approach. Current Issues Tourism 25 (9), 1481–1508. https://doi.org/10.1080/13683500.2021.1924636 (2022).

UNWTO. UNWTO World Tourism Barometer and Statistical Annex, January 2022. UNWTO World Tourism Barometer (English version) 20 (1), 1–40. https://doi.org/10.18111/wtobarometereng.2022.20.1.1 (2022).

UNWTO. The Economic Contribution of Tourism and the Impact of COVID-19, p. 31 (UNWTO, 2021).

Song, H., Qiu, R. T. R. & Park, J. A review of research on tourism demand forecasting: launching the annals of tourism research curated collection on tourism demand forecasting. Annals Tourism Research 75, 338–362. https://doi.org/10.1016/j.annals.2018.12.001 (2019).

Li, X., Law, R., Xie, G. & Wang, S. Review of tourism forecasting research with internet data. Tourism Management 83, 104245. https://doi.org/10.1016/j.tourman.2020.104245 (2021).

Höpken, W., Eberle, T., Fuchs, M. & Lexhagen, M. Improving tourist arrival prediction: a big data and artificial neural network approach. J. Travel Res. 60 (5), 998–1017 (2021).

Yao, Y. & Cao, Y. A neural network enhanced hidden Markov model for tourism demand forecasting. Applied Soft Computing 94, 106465. https://doi.org/10.1016/j.asoc.2020.106465 (2020).

Lijuan, W. & Guohua, C. Seasonal SVR with FOA algorithm for single-step and multi-step ahead forecasting in monthly inbound tourist flow. Knowledge-Based Systems 110, 157–166. https://doi.org/10.1016/j.knosys.2016.07.023 (2016).

Bi, J. W., Li, H. & Fan, Z. P. Tourism demand forecasting with time series imaging: A deep learning model. Annals Tourism Research 90, 103255. https://doi.org/10.1016/j.annals.2021.103255 (2021).

Liu, Y. Y., Tseng, F. M. & Tseng, Y. H. Big data analytics for forecasting tourism destination arrivals with the applied vector autoregression model. Technological Forecast. Social Change 130, 123–134. https://doi.org/10.1016/j.techfore.2018.01.018 (2018).

Li, X., Pan, B., Law, R. & Huang, X. Forecasting tourism demand with composite search index. Tourism Management 59, 57–66. https://doi.org/10.1016/j.tourman.2016.07.005 (2017).

Abbasimehr, H., Shabani, M. & Yousefi, M. An optimized model using LSTM network for demand forecasting. Computers & Industrial Engineering 143, 106435. https://doi.org/10.1016/j.cie.2020.106435 (2020).

Parmezan, A. R. S., Souza, V. M. A. & Batista, G. E. A. P. A. Evaluation of statistical and machine learning models for time series prediction: identifying the state-of-the-art and the best conditions for the use of each model. Inf. Sci. 484, 302–337 (2019).

Wang, J., Peng, B. & Zhang, X. Using a stacked residual LSTM model for sentiment intensity prediction. Neurocomputing 322, 93–101. https://doi.org/10.1016/j.neucom.2018.09.049 (2018).

Wang, Y., Zhang, X., Lu, M., Wang, H. & Choe, Y. Attention augmentation with multi-residual in bidirectional LSTM. Neurocomputing 385, 340–347. https://doi.org/10.1016/j.neucom.2019.10.068 (2020).

Xu, D., Guo, L., Zhang, R., Qian, J. & Gao, S. Can relearning local representation help small networks for human pose estimation? Neurocomputing 518, 418–430. https://doi.org/10.1016/j.neucom.2022.11.025 (2023).

Yan, R. et al. Multi-hour and multi-site air quality index forecasting in Beijing using CNN, LSTM, CNN-LSTM, and Spatiotemporal clustering. Expert Syst. Applications 169, 114513. https://doi.org/10.1016/j.eswa.2020.114513 (2021).

Lu, W. et al. A method based on GA-CNN-LSTM for daily tourist flow prediction at scenic spots. Entropy 22 (3). https://doi.org/10.3390/e22030261 (2020).

Xie, G., Li, Q. & Jiang, Y. Self-attentive deep learning method for online traffic classification and its interpretability. Computer Networks 196, 108267. https://doi.org/10.1016/j.comnet.2021.108267 (2021).

Peng, B., Song, H. & Crouch, G. I. A meta-analysis of international tourism demand forecasting and implications for practice. Tourism Management 45, 181–193. https://doi.org/10.1016/j.tourman.2014.04.005 (2014).

Wu, D. C., Bahrami Asl, B., Razban, A. & Chen, J. Air compressor load forecasting using artificial neural network. Expert Syst. Applications 168, 114209. https://doi.org/10.1016/j.eswa.2020.114209 (2021).

Verma, D. & Rana, C. Comparative analysis of time series forecasting algorithms. In Proceedings of Third International Conference on Communication, Computing and Electronics Systems (eds Bindhu, V., Tavares, J. M. R. S. & Du, K.-L.) 239–255 (Springer Singapore, 2022).

Song, H., Qiu, R. T. R. & Park, J. Progress in tourism demand research: theory and empirics. Tourism Management 94, 104655. https://doi.org/10.1016/j.tourman.2022.104655 (2023).

Li, H., Hu, M. & Li, G. Forecasting tourism demand with multisource big data. Annals Tourism Research 83, 102912. https://doi.org/10.1016/j.annals.2020.102912 (2020).

Khatibi, A., Belém, F., Couto da Silva, A. P., Almeida, J. M. & Gonçalves, M. A. Fine-grained tourism prediction: Impact of social and environmental features. Information Processing & Management 57 (2), 102057. https://doi.org/10.1016/j.ipm.2019.102057 (2020).

Alexandrov, A. et al. GluonTS: probabilistic and neural time series modeling in python. J. Mach. Learn. Res. 21 (116), 1–6 (2020).

Jiang, P., Yang, H., Li, R. & Li, C. Inbound tourism demand forecasting framework based on fuzzy time series and advanced optimization algorithm. Applied Soft Computing 92, 106320. https://doi.org/10.1016/j.asoc.2020.106320 (2020).

Law, R., Li, G., Fong, D. K. C. & Han, X. Tourism demand forecasting: A deep learning approach. Annals Tourism Research 75, 410–423. https://doi.org/10.1016/j.annals.2019.01.014 (2019).

Martínez, F., Frías, M. P., Pérez, M. D. & Rivera, A. J. A methodology for applying k-nearest neighbor to time series forecasting. Artificial Intell. Review 52 (3), 2019–2037. https://doi.org/10.1007/s10462-017-9593-z (2019).

Xu, W. et al. A hybrid modelling method for time series forecasting based on a linear regression model and deep learning. Applied Intelligence 49 (8), 3002–3015. https://doi.org/10.1007/s10489-019-01426-3 (2019).

Landi, F., Baraldi, L., Cornia, M. & Cucchiara, R. Working memory connections for LSTM. Neural Netw. 144, 334–341. https://doi.org/10.1016/j.neunet.2021.08.030 (2021).

Lv, S. X., Peng, L. & Wang, L. Stacked autoencoder with echo-state regression for tourism demand forecasting using search query data. Applied Soft Computing 73, 119–133. https://doi.org/10.1016/j.asoc.2018.08.024 (2018).

Zhang, B., Pu, Y., Wang, Y. & Li, J. Forecasting hotel accommodation demand based on LSTM model incorporating internet search index. Sustainability 11 (17). https://doi.org/10.3390/su11174708 (2019).

Kulshrestha, A., Krishnaswamy, V. & Sharma, M. Bayesian BILSTM approach for tourism demand forecasting. Annals Tourism Research 83, 102925. https://doi.org/10.1016/j.annals.2020.102925 (2020).

Vaswani, A. et al. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems (Long Beach, California, USA, 2017).

Rogers, A., Kovaleva, O. & Rumshisky, A. A primer in bertology: what we know about how BERT works. Trans. Association Comput. Linguistics. 8, 842–866. https://doi.org/10.1162/tacl_a_00349 (2021).

Astolfi, G. et al. An approach for applying natural language processing to image classification problems. Neurocomputing 513, 372–382. https://doi.org/10.1016/j.neucom.2022.09.131 (2022).

Xia, R., Li, G., Huang, Z., Pang, Y. & Qi, M. Transformers only look once with nonlinear combination for real-time object detection. Neural Comput. Applications 34 (15), 12571–12585. https://doi.org/10.1007/s00521-022-07333-y (2022).

Yang, J., Ge, H., Su, S. & Liu, G. Transformer-based two-source motion model for multi-object tracking. Applied Intelligence 52 (9), 9967–9979. https://doi.org/10.1007/s10489-021-03012-y (2022).

Wang, W., Duan, L., En, Q., Zhang, B. & Liang, F. TPSN: Transformer-based multi-Prototype search network for few-shot semantic segmentation. Computers Electr. Engineering 103, 108326. https://doi.org/10.1016/j.compeleceng.2022.108326 (2022).

Fan, J., Wang, Z., Sun, D. & Wu, H. Sepformer-based models: more efficient models for long sequence Time-Series forecasting. IEEE Trans. Emerg. Top. Comput. 1–12. https://doi.org/10.1109/TETC.2022.3230920 (2022).

Lim, B., Arık, S. Ö., Loeff, N. & Pfister, T. Temporal fusion transformers for interpretable multi-horizon time series forecasting. International J. Forecasting 37 (4), 1748–1764 (2021).

Pan, B., Wu, D. C. & Song, H. Forecasting hotel room demand using search engine data. J. Hospitality Tourism Technol. 3 (3), 196–210. https://doi.org/10.1108/17579881211264486 (2012).

Choi, H. & Varian, H. Predicting the present with Google Trends. Economic Record 88 (s1), 2–9. https://doi.org/10.1111/j.1475-4932.2012.00809.x (2012).

Yang, X., Pan, B., Evans, J. A. & Lv, B. Forecasting Chinese tourist volume with search engine data. Tourism Management 46, 386–397. https://doi.org/10.1016/j.tourman.2014.07.019 (2015).

Clevert, D. A., Unterthiner, T. & Hochreiter, S. Fast and accurate deep network learning by exponential linear units (ELUs). In Proceedings of the International Conference on Learning Representations (ICLR) (2016). http://arxiv.org/abs/1511.07289

Daily tourist arrival of Jiuzhaigou Valley. https://www.jiuzhai.com/news/number-of-tourists/ (accessed 22 July 2020).

Daily tourist arrival of Siguniang Mountain. https://www.sgns.cn/news/number (accessed 22 July 2020).

Yi, S., Chen, X. & Tang, C. Datasets for Tourism Demand Forecasting of Jiuzhaigou Valley and Siguniang Mountain (figshare, 2025).

Tashman, L. J. Out-of-sample tests of forecasting accuracy: an analysis and review. Int. J. Forecast. 16 (4), 437–450. https://doi.org/10.1016/S0169-2070(00)00065-0 (2000).

Funding

This research was financed by the Philosophy and Social Science Research Fund of Chengdu University of Technology, grant number YJ2022-ZD014.

Author information

Contributions

Conceptualization, Y.; methodology, Y.; software, Y.; validation, Y.; investigation, C. and T.; resources, C.; data curation, Y.; writing - original draft preparation, Y.; writing - review and editing, C. and T.; visualization, Y.; supervision, C.; project administration, C.; funding acquisition, C.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yi, S., Chen, X. & Tang, C. Time series transformer for tourism demand forecasting. Sci Rep 15, 29565 (2025). https://doi.org/10.1038/s41598-025-15286-0

DOI: https://doi.org/10.1038/s41598-025-15286-0