Abstract

This global survey investigated the use of virtual reality simulation (VRS) in ophthalmological surgery education. Questionnaires were distributed to authors of publications and directors of centers using VRS for surgical education in ophthalmology, then forwarded to residents and fellows of their team for completion. Out of 1845 questionnaires sent across 36 countries, 170 responses from 26 countries were analyzed, primarily from residents and fellows (75%). Mean access duration to VRS was 3.6 years, often at University Hospitals (43.5%). Notably, 12% of respondents traveled an average of 550 km to access VRS. The EyeSi VR Magic was the most frequently reported simulator (80%, mainly in Europe/North America), followed by HelpMeSee (48%, primarily in Europe/India/Madagascar). In 25 training centers across 12 countries, VRS was a mandatory prerequisite for patient access, functioning as a “surgical license”. A larger number of training centers (49 from 19 countries) favored such mandatory training. Junior surgeons perceived a greater impact of VRS on their surgical practice compared to senior surgeons (p = 0.032). The study concludes that while VRS holds a significant role in postgraduate ophthalmic surgical training, its access is unequal worldwide. Broader implementation and standardized practices could improve and maintain high educational standards in this field.

Introduction

Effective surgical education during ophthalmology residency is crucial to maintaining high standards in surgical patient care. The traditional “practice on patients” Halstedian apprenticeship model, which is commonly applied globally1, should be augmented by “pre-patient” simulation models. Such augmentation has been shown to improve surgical performance and reduce peri- and post-operative complication rates2,3,4,5,6,7. Many “pre-patient” simulation training models exist today. These include wet-lab training (i.e., using animal tissues, such as porcine eyes) and dry-lab training (i.e., using synthetic materials), which have shown encouraging results on surgical performance8,9. While providing a fairly realistic experience, these models can be costly and intrinsically lack both variation in scenarios and objective metrics for performance evaluation. Over the past decades, the introduction of virtual reality simulation (VRS) has led to a revolutionary change in surgical training. Indeed, it already plays a core role in the post-graduate surgical curriculum in many surgical specialties. This technology offers a greater number of surgical scenarios and reproducible assessment metrics10,11. In the field of ophthalmology, validity evidence for the metrics used in VRS, as well as the efficacy of this training, has been demonstrated for cataract and vitreoretinal surgery4,7,12,13,14. Efficacy results of VRS training tend to be higher than those of older simulation training methods4,15,16,17, though the VRS training programs reported in the literature differ greatly from each other3.

While several virtual reality simulators are currently in use, they differ in training design (including hardware and software), purpose and accessibility. The EyeSi VR Magic (Haag-Streit, Heidelberg, Germany) has been commercially available since 2004, making it the first device on the market. It is by far the most widely represented virtual reality simulator worldwide, with over one thousand simulators sold18. The system provides both cataract and vitreoretinal self-guided training modules and a slit lamp simulator19,20,21. In contrast, the HelpMeSee simulator (HMS, [HelpMeSee, Jersey City, New Jersey, United States]) is not yet commercially available. Its core function is to train manual small incision cataract surgery (MSICS), though phacoemulsification and vitrectomy modules are available. The focus on MSICS exists because HMS specifically targets resource-limited areas for ophthalmic surgical training22. While other virtual reality simulator devices have been reported (e.g., MicroVisTouch, PhacoVision and in-house virtual reality simulators), they require more evidence to establish their efficacy13,23,24.

The benefits of VRS in many forms of surgical training are not limited to ophthalmology and are supported by evidence25,26. Based on these successes, the Simulation Subcommittee of the Ophthalmology Foundation (San Francisco, California, United States of America) has emphasised the need to incorporate simulation-based education in ophthalmology training programs27. Given the performance of VRS to date, several training centers, notably in Belgium, France and the UK, have made it mandatory for ophthalmology residents before they are allowed to perform cataract surgery on actual patients28,29,30,31. Despite national recommendations within countries, the profession still lacks internationally harmonized best practices for the use of simulation training or VRS during surgical residency training. The heterogeneous approach between centers leads to a lack of information regarding the use of VRS and to difficulty in assessing its impact on surgical learning.

Given the practical limitations on training resources, program directors need to make evidence-based and cost-effective decisions on their educational modalities. Gathering data on current VRS use in ophthalmic surgery education around the world is the first step in evaluating the practices that could inform training centers and resident curriculum developers. The aim of this study was to address the knowledge gap in practice patterns and report on the implementation of VRS training for ophthalmology surgical education worldwide.

Results

One hundred and twenty participants from 36 countries were invited to complete the survey. After snowball sampling, 1725 additional questionnaires were sent. Of the 1845 surveys distributed, 199 answers were received from 32 countries (response rate: 11%). Twenty-nine questionnaires had to be removed from the study because of addressing errors (i.e., survey sent to non-VRS users) or the absence of consent from a participant for the use of the survey responses for publication. Finally, 170 questionnaires (9.2%) from participants in 26 countries were included in the study (see Fig. 1).
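
The counts and rates reported above can be re-derived with a few lines of arithmetic. The following is a minimal sketch, not part of the study's analysis; the variable names and the `pct` helper are illustrative assumptions, and only the four input counts come from the text.

```python
# Counts taken from the paragraph above; names are illustrative only.
invited = 120        # initial invitations
snowballed = 1725    # questionnaires forwarded through snowball sampling
received = 199       # answers received
excluded = 29        # removed (addressing error or no consent)

distributed = invited + snowballed
included = received - excluded


def pct(part, whole, digits=1):
    """Return part/whole as a percentage rounded to `digits` decimals."""
    return round(100 * part / whole, digits)


response_rate = pct(received, distributed)    # reported as 11% in the text
inclusion_rate = pct(included, distributed)   # reported as 9.2% in the text

print(distributed, included, response_rate, inclusion_rate)
```

With these inputs, the response rate comes to 10.8%, which the text rounds to 11%.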

Respondents’ characteristics and access to virtual reality simulation devices

Mean age of the respondents was 34.1 +/- 8.8 years. Seventy-five respondents (44%) were under 30 years, 62 (36%) were between 31 and 40 years, 19 (11%) were between 41 and 50 years, 8 (5%) were between 51 and 60 years and 4 (2%) were between 61 and 70 years. Two respondents did not indicate their age. The majority were residents (n = 104 [61%]; mean age = 30 +/- 4.7 years). Fellows, or those in equivalent positions of post-residency training, represented 14% (n = 24; mean age = 33 +/- 3.3 years) and senior surgeons 24% (n = 41; mean age = 44 +/- 9.9 years). One respondent did not indicate their position. General characteristics of the respondents are shown in Table 1. The average time that simulator facilities had been available for training was 3.6 +/- 3.8 years, with the largest proportion being located in University Hospitals (n = 47; 43.5%). The EyeSi VR Magic (Haag-Streit, Heidelberg, Germany) was the most frequently used simulator (n = 136, 80%, defined as group 1), followed by the HMS simulator (HelpMeSee, Jersey City, New Jersey, United States) (n = 82, 48%, group 2). Other simulators were also reported (n = 17; 10%, group 3), mostly the Alcon Fidelis system (Alcon Inc., Geneva, Switzerland) (n = 11; 6.4%). Many respondents had access to more than one simulation system (n = 61; 36%, group 4), mostly EyeSi and HMS (n = 55; 32%). Virtual reality simulator access data are shown in Table 1. The majority of EyeSi users were in Europe (n = 87; 64%), followed by North America (n = 24; 18%), Madagascar (n = 17, 13%), and India (n = 8; 6%). HMS users were in Europe (n = 19 in France [23%] and n = 3 in Denmark [4%]), Mexico (n = 11; 13%), India (n = 22; 27%), Madagascar (n = 23; 28%) and Trinidad and Tobago (n = 1; 1%). Other eye surgery simulators, chiefly the Alcon Fidelis system, were mainly used in Europe (n = 9 for the Alcon Fidelis system). One surgeon, in France, had access to Meta fundamental VR (Fundamental VR Surgery, London, UK).
The distribution of virtual reality simulators in ophthalmology, as used by the respondents around the world, is represented in Fig. 2.

Forty-three respondents (25%) originating from 12 different countries and 25 different training centers (see Fig. 3) declared that VRS training was a mandatory step prior to patient access, as a form of “surgical license”, though this is typically a requirement of the training body rather than a legal or governmental approval. Respondents from 19 countries and 49 training centers (n = 92; 54%) were in favor of requiring a form of “surgical license” prior to starting cataract surgery. These respondents came from 20 training centers (from 9 countries) where a form of “surgical license” was already required and 29 training centers (from 19 countries) without any mandatory training prior to patient access.

Histogram presenting countries using a virtual reality simulation (VRS) surgical license for surgery learning prior to patient access. The number of training centers using a surgical license, according to respondents’ declarations, is represented in light grey for each country, with percentages of the total number of training centers in the corresponding country (represented in dark grey). *Training centers represented in light grey (i.e., applying mandatory VRS) are: Minsk (Belarus), Brussels (Belgium), Copenhagen and Glostrup (Denmark), Grenoble, Paris and Strasbourg (France), Munich (Germany), Athens (Greece), Mumbai and Orissa (India), Riga (Latvia), Antananarivo, Fianarantsoa, Morondava, Sambava, Toamasina and Tsiroanomandidy (Madagascar), Mexico City, Queretaro and Puebla (Mexico), The Hague and Utrecht (The Netherlands) and Los Angeles and Portland (United States).

Comparison of virtual reality simulator use between senior and junior surgeons

Senior surgeons [group A] and junior surgeons [group B] were compared in their use of VRS. Senior surgeons had spent significantly more time on virtual reality simulators than junior surgeons (151 vs. 28 h; p = 0.04). They were also more likely to have completed the most challenging modules offered on the EyeSi simulator (48% of the senior surgeons vs. 37% of the junior surgeons reached the CAT-D module, p = 0.05; 18% vs. 3.9% reached the VRT-C module). Junior surgeons rated the impact of VRS training on their surgical practice higher than senior surgeons did (5.8 vs. 4.5 on a scale of 0 [no impact] to 10 [major impact]; p = 0.032). No difference was found between the two groups in the type of simulator used or in simulator preference. There was also no difference in opinion regarding the need for a “surgical license” to commence surgical training. Characteristics and comparisons between these two subgroups are detailed in Table 2.

Comparison of responses between the different types of virtual reality simulators used

Respondents from the four groups of virtual reality simulator use (i.e., EyeSi [group 1, n = 75], HMS [group 2, n = 27], other simulators [group 3, n = 7] and multiple simulators [group 4, n = 61]) did not differ in the number of cataract surgeries they had previously performed in the OR. Residents, fellows and senior surgeons were equally represented in the four groups.

Total simulation training time and single-session training time differed significantly between simulators. HMS users reported significantly more total training time than all other simulator users (228.5 vs. 32.8 h for EyeSi, 34 h for multiple-simulator users and 7.3 h for other-simulator users; p = 0.04). HMS and multiple-simulator users reported longer time spent on the simulator in a single training session than other users (35.3 and 11.7 h for HMS and multiple-simulator users, respectively, vs. 2.1 h for EyeSi and 1.3 h for other-simulator users; p < 0.001). Access to the simulator differed significantly between groups. Permanent and free-of-charge access were significantly more frequent in the EyeSi user group (permanent access: 72% vs. 18.5% for HMS, 60.7% for multiple-simulator users and 28.5% for other-simulator users, p < 0.001; free-of-charge access: 89% vs. 51.9% for HMS, 24.6% for multiple-simulator users and 42.9% for other-simulator users, p < 0.001). As-needed or permanent supervision was more frequently reported in the HMS user group (available supervision: 88.9% vs. 63% for EyeSi and 42.9% for other-simulator users, p < 0.01; permanent supervision: 81.5% vs. 12% for EyeSi and 14.3% for other-simulator users, p < 0.001). A higher proportion of HMS users than of EyeSi-only users favored a “surgical license” (79.2% for HMS and 48.6% for EyeSi; p = 0.009).

HMS users rated the impact of the simulation training on their performance in the OR significantly higher than the other groups did (p = 0.042).

Detailed comparisons of practice patterns between the different virtual reality simulators used by the respondents are presented in Table 3. The main preferred features and features to improve for each simulator, according to the respondents, are also reported in Table 3.

Males performed significantly more cataract surgeries in the OR than females (1809 +/- 4228 vs. 672 +/- 2063; p = 0.03). Females, on the other hand, reported longer mean training time, even after adjusting for simulator type (77.6 +/- 450.9 h for females vs. 36.5 +/- 43.3 h for males; p = 0.004). Both genders rated the impact of VRS training on their OR practice similarly (5.4 +/- 3.5 for females vs. 5.5 +/- 3.5 for males; p = 0.9). The proportion of respondents favorable to the implementation of a surgical license was similar between females and males (54% vs. 60%; p = 0.4). Access to VRS training differed, as females were significantly more represented in the HMS and other simulators groups (p = 0.007) (Table 3). Type of respondents, simulators accessed and opinions of the users (impact of the VRS training on OR surgery, need for a surgical license) are presented for each of the studied countries in Supplementary Material 2.

Discussion

This international survey aimed to explore global trends in VRS training through the distribution of an online questionnaire to active participants of ophthalmology surgery training centers worldwide. The EyeSi simulator was the most frequently used by respondents (80%), followed by the HMS simulator (48%) and other simulator options (11%). This likely reflects the first-mover advantage, with more than a thousand EyeSi units around the world, mainly in the US32,33,34,35,36, Europe12,37,38 and China18,39. While the EyeSi has been available for over 15 years40, the longest access reported in this survey was 6 years. Delayed acquisition may be related to the particularly high cost of the technology and the initial lack of efficacy data41. Indeed, the acquisition price of the EyeSi simulator is estimated at over $200,000, and in 2013 the time to recoup this price in a US residency program was an estimated 30 years42,43. However, recent reports estimated VRS systems to be more cost-effective than wet-laboratory simulation, even when considering recurrent expenditures such as hardware maintenance and software upgrades44. Their efficacy in reducing complication rates further improves cost-effectiveness4,12, particularly when considering surgeon retraining (e.g., after accidents or maternity leave). It is noteworthy that residents, who made up the majority of the respondents, may not have provided precise information regarding the simulator’s acquisition date, given that its purchase may have occurred well before the beginning of their residency.

The uneven geographical and economic distribution of VRS technology is well known, with most devices being located in high-income countries3,26,45,46, while low-income countries face great access difficulties. To address this problem, the ESCRS has purchased an EyeSi simulator to rotate among lower-income European countries47. In addition, HMS training centers (located in the US, India, Mexico, Madagascar and France) offer access to their VRS training to surgeons from regions with a high demand for cataract surgery and a shortage of trained surgeons22,48. MSICS, a low-cost cataract surgery procedure, has been specifically developed on the HMS simulator, although many other procedures have been developed, including phacoemulsification and suture training. The HMS simulator has been available on the market since 2022, as mentioned by our respondents, which coincides with its first efficacy assessment7. Other VRS systems were reported, mainly in Europe (France, Germany and Portugal) and Madagascar, such as the Alcon Fidelis system (9/17 respondents), though efficacy data are not yet available in the literature.

Twenty-one respondents (12%) had to travel to another city or country to access a virtual reality simulator (mean distance to travel was 550 [36-4000] kilometers). Twelve were from European countries (3 Belgium, 2 France, 1 Denmark, Serbia, Bulgaria, Germany, Hungary, Ukraine, UK), the others from India, Madagascar, and Trinidad and Tobago. They accessed an EyeSi simulator in 76% of cases and HMS in 52% of cases. Thus, disparities in access to VRS training are not restricted to low-income countries. A recent European survey revealed that only 17.7% of European ophthalmology residents performed at least 10 VRS training sessions during their residency49. Other training methods (wet- and dry-laboratory) were also barely used49. In these countries, limited use of simulation facilities or the lack of a culture of simulation could explain these results.

In this study, 33% of the residents reported that they had never operated on real patients (mainly from Germany, Denmark, Belgium, Canada and Mexico), although they had used a virtual reality simulator. These results are in line with the recent European survey49 and illustrate the two different models of surgery learning. While some countries integrate surgery into residency training50,51, others reserve surgery for post-residency fellowships52,53. These national variations and opinions on when to train cataract surgery have also made harmonization more difficult.

Ninety-three respondents (55%) were in favor of requiring a form of “surgical license” as a requirement of the training body (see Fig. 3B). Indeed, its use during residency has not reached a consensus54,55,56, even among residents, who do not really feel that it would increase their confidence when operating on real patients, though the benefit of such a license may lie more in reassuring the trainer than the student3,36,41,54. These diverging opinions could be related to the absence of standardized VRS training programs, as has been suggested by other surgical specialties3,25,57,58,59. Harmonization of VRS practices and assessment methods is a key element for the improvement of such training programs27. For now, there is a consensus that the use of a logbook is an essential element of a post-graduate surgical curriculum60,61,62,63, which is consistent with the growing interest in competency-based medical education (CBME) and entrustable professional activities (EPAs)26,56.

VRS training requires considerable investment from both users and instructors45, which may vary depending on the type of simulator used. In our study, longer and more frequently supervised training sessions were reported by HMS users. This corresponds to the proposed HMS training programs64. In this setting, users were more favorable to a “surgical license” requirement, which might be explained by higher self-confidence in the presence of a supervisor65. However, finding a dedicated instructor may be challenging, as surgery teaching is time-consuming for experts. In contrast, EyeSi users more frequently reported having permanent access to the simulator, which may be because a supervisor is not required. In this situation, it is up to trainees to find dedicated time to train on the simulator, though they also would have preferred a dedicated supervisor54,55. Additional and optimized on-machine instructions and learning support, like the VRM net (i.e., online simulation platform associated with the EyeSi simulator)66, remain valuable alternatives. Remote mentoring should also be considered, as it might help reduce costs and overcome geographic limitations while optimizing experts’ supervision time46,58. Of note, 80% of the respondents in the present study were under 40 years old, and thus fall within the trainee category for simulation programs. Therefore, results might be skewed toward trainees’ opinions compared to those of supervisors.

VRS training is frequently combined with dry- or wet-laboratory simulation training and theoretical learning courses32,35,36 to help maximize training efficacy58. These training methods are complementary. For example, haptic feedback and tissue realism differ between dry-lab simulation and virtual reality, while steps like cortex removal are easier to simulate on virtual reality simulators67. Interestingly, a considerable number of respondents (n = 61; 36%) reported having access to multiple simulators, a situation that remains poorly reported elsewhere. A positive correlation between EyeSi and HMS simulator scores has been reported, suggesting equivalent validity68.

Finally, VRS needs to continually adapt to new technologies, such as MetaVR, which combines the metaverse (individual immersion within the internet) and virtual reality69. For example, construct validity was demonstrated for RetinaVR, an affordable, portable and fully immersive virtual reality simulator for vitreoretinal surgery training70. In the present study, 24% of the respondents reported that they had heard about MetaVR but only 5% had seen it used, which illustrates its limited penetration into the world of simulation training.

This study has some limitations that bear consideration. Although the response rate was relatively high for a worldwide distributed survey (11%), it remains lower than the average response rate for surveys in organizational research71. Indeed, the low response rate from countries with known established simulator courses means that we miss some key data. This was the case for the U.K., where there was only one respondent, and for Ireland, where there were none, although virtual reality simulators have been available there for over a decade. In addition, we have little information from Turkey, Morocco, Egypt, Argentina, Brazil, Hong Kong and China, where the EyeSi simulator is in frequent use18. This could be related to the difficulty of reaching the desired contact person in training centers, or to poor use of available simulators in these centers. To address this issue, additional methods of contact could be employed. For instance, non-responding centers could be contacted by phone in addition to email reminders. Other missing countries, notably LMICs in Africa or South America, could be reached through alternative professional networks, such as in-person invitations during national or international meetings, invitations to webinars, or country-specific survey promotions. A longer inclusion period with more intensive communication would likely increase the response rate and the scope of the final results. Conversely, countries particularly enthusiastic about the use of VRS were highly represented, which accounts for the data from Madagascar. This might explain the high prevalence of HMS simulator users, although this simulator is not commercially available. It is likely that individuals who applied for the HMS training program were easier to reach by email and more interested in participating in the survey, given their proactivity in seeking out the training. Response bias might have affected several survey answers, particularly the assessment of simulator time.
Multimodal training methods for the preparation to the OR should be further studied, including non-technical skills evaluation, though they are not often reported despite being of great importance in surgical learning1.

Conclusion

The present survey supports the growing recognition of VRS-based training as a vital component of ophthalmology surgical education, with a significant number of training centers now considering it a prerequisite for operating room access. However, access to VRS is still unequal worldwide and efforts are needed towards its widespread implementation and the harmonization of training practices. Such initiatives are crucial for elevating the standards in the education of future ophthalmic surgeons. Furthermore, the increasing interest in logbooks, surgical license implementation, CBME and EPAs clearly signals an ongoing paradigm shift towards more structured and accountable surgical education.

Materials and methods

Questionnaire creation, dissemination, and data collection

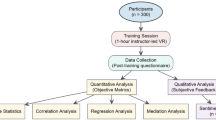

An anonymous 39-question survey entitled “Global Practice Patterns of Virtual Reality Simulation Training” was created and reviewed for relevance by ophthalmologists with specific expertise in VRS (A.S.S.; T.B.; V.C.L.; BA.H.; C.A.; A.B.; D.B.; JL.B.; E.F.; H.P.F.; A.R.; B.S.) (Supplementary material 1). The final version of the questionnaire was prepared on an electronic platform (SurveyMonkey Europe UC; Dublin, Ireland). Email invitations to complete the survey were sent on November 20th, 2023, to active participants (defined as the first or last authors of ophthalmology VRS publications on PubMed) and directors of centers that use VRS for surgical education in ophthalmology. Two follow-up reminders were sent via email. Participants were offered no compensation. The survey was closed on March 31st, 2024. Participants were asked to complete the questionnaire themselves and forward the link to residents and fellows of their team, asking them to complete the survey as well. The participants were asked about their gender, age and position (resident, fellow or senior surgeon). Given this design, two categories of respondents were recorded: senior surgeons, or those who had finished their fellowship, were classified into group A, and residents and fellows were classified into group B. Respondents were asked to report the number of recipients to whom they distributed the questionnaire. No personal identifying data apart from the city and country of the respondents were collected. Four different groups of virtual reality simulator use were anticipated: EyeSi [group 1], HMS [group 2], other simulators [group 3], and multiple simulators [group 4]. Consent for the use of each participant’s answers was obtained via the last question of the survey: “I agree that the information I completed in this survey can be used for academic purpose in an aggregated and anonymous manner” with possible answers “Yes” or “No”.
This study adhered to the tenets of the Declaration of Helsinki and was approved by the Ethics Committee of the French Society of Ophthalmology (IRB 00008855 Société Française d’Ophtalmologie IRB#1).
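
The grouping rules described above (groups A and B by position, groups 1 to 4 by simulator use) can be sketched as two small functions. This is an illustrative sketch only: the function names and the exact answer labels (`"resident"`, `"eyesi"`, `"hms"`, and so on) are assumptions, since the questionnaire's answer coding is not given in the text.

```python
def seniority_group(position):
    """Group A: senior surgeons; group B: residents and fellows."""
    label = position.strip().lower()      # assumed free-text labels
    if label == "senior surgeon":
        return "A"
    if label in {"resident", "fellow"}:
        return "B"
    return None                           # position not reported


def simulator_group(simulators):
    """Map the set of simulators a respondent used to groups 1-4."""
    used = {s.strip().lower() for s in simulators}
    if len(used) > 1:
        return 4                          # multiple simulators
    if used == {"eyesi"}:
        return 1                          # EyeSi only
    if used == {"hms"}:
        return 2                          # HMS only
    if used:
        return 3                          # a single other simulator
    return None                           # no simulator reported


# Group sizes as reported in the Results section.
reported_sizes = {1: 75, 2: 27, 3: 7, 4: 61}
print(simulator_group(["EyeSi", "HMS"]), sum(reported_sizes.values()))
```

Because the four simulator groups are mutually exclusive under this reading, their reported sizes sum to the 170 included questionnaires.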

Statistical analysis

Quantitative variables for general characteristics of the participants were expressed as means with standard deviations. Qualitative variables were expressed as numbers and proportions. Inferential analysis of qualitative variables was done with either a chi-squared test or a Fisher exact test, depending on the theoretical frequencies in the contingency tables. Post-hoc tests were performed with Benjamini and Hochberg’s alpha risk correction (false discovery rate).
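
As an illustration of the two steps named above, the sketch below computes the theoretical (expected) frequencies of a contingency table, picks a test from them, and applies the Benjamini-Hochberg adjustment to a list of p-values. It is not the JASP procedure used in the study. The threshold of 5 for the smallest expected count is a common rule of thumb assumed here, as the text does not state the cut-off, and the tables and p-values in the usage lines are hypothetical.

```python
def expected_counts(table):
    """Theoretical frequencies under independence for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    return [[r * c / total for c in col_totals] for r in row_totals]


def choose_test(table, min_expected=5.0):
    """Assumed rule: Fisher exact if any expected count is below 5."""
    smallest = min(min(row) for row in expected_counts(table))
    return "fisher" if smallest < min_expected else "chi2"


def benjamini_hochberg(p_values):
    """Return BH-adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical inputs, for illustration only.
large_table = [[30, 20], [25, 25]]
small_table = [[3, 1], [1, 3]]
raw_p = [0.01, 0.04, 0.03, 0.20]

print(choose_test(large_table), choose_test(small_table))
print(benjamini_hochberg(raw_p))
```

An adjusted p-value below 0.05 would then be read as significant at a 5% false discovery rate.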

Comparisons of quantitative variables between groups were carried out with a Student’s t-test (when the principal variable was normally distributed), with a possible correction for heterogeneity of variances (Welch test), or with a non-parametric test (Mann-Whitney U test or Kruskal-Wallis test) for non-normally distributed variables. Post-hoc tests were performed with the Bonferroni-Holm alpha risk correction (family-wise error rate). A p-value < 0.05 denoted statistical significance. Analyses were carried out with JASP (version 0.16.4; JASP Team, 2022).
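
For readers unfamiliar with the Welch correction and the Bonferroni-Holm adjustment, the following standard-library sketch shows how the Welch statistic, its approximate degrees of freedom, and Holm-adjusted p-values are obtained. It is an illustration under stated assumptions, not the JASP implementation: the function names are hypothetical, the sample values are made up, and converting the Welch statistic to a p-value (which requires the t distribution) is left out.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch t statistic and Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Per-group squared standard errors (sample variance / n).
    se2_a = variance(sample_a) / n_a
    se2_b = variance(sample_b) / n_b
    t = (mean(sample_a) - mean(sample_b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1)
    )
    return t, df


def holm(p_values):
    """Return Holm step-down adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, ...
        candidate = min(1.0, (m - rank) * p_values[i])
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted


# Hypothetical data, for illustration only.
group_a = [1, 2, 3, 4, 5]
group_b = [2, 4, 6, 8, 10, 12]
t_stat, dof = welch_t(group_a, group_b)
holm_p = holm([0.01, 0.04, 0.03, 0.20])

print(round(t_stat, 3), round(dof, 2), holm_p)
```

Unlike Student's t-test, the Welch version does not pool the two variances, which is why its degrees of freedom are generally not an integer.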

Data availability

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

Wood, T. C., Maqsood, S., Nanavaty, M. A. & Rajak, S. Validity of scoring systems for the assessment of technical and non-technical skills in ophthalmic surgery-a systematic review. Eye Lond. Engl. 35, 1833–1849 (2021).

Carricondo, P. C., Fortes, A. C. F. M., Mourão, P. & de Hajnal, C. Jose, N. K. Senior resident phacoemulsification learning curve (corrected from cure). Arq. Bras. Oftalmol. 73, 66–69 (2010).

Dormegny, L. et al. Virtual reality simulation and real-life training programs for cataract surgery: a scoping review of the literature. BMC Med. Educ. 24, 1245 (2024).

Lin, J. C., Yu, Z., Scott, I. U. & Greenberg, P. B. Virtual reality training for cataract surgery operating performance in ophthalmology trainees. Cochrane Database Syst. Rev. 12, CD014953 (2021).

Lucas, L., Schellini, S. A. & Lottelli, A. C. Complications in the first 10 phacoemulsification cataract surgeries with and without prior simulator training. Arq. Bras. Oftalmol. 82, 289–294 (2019).

Adnane, I., Chahbi, M. & Elbelhadji, M. Simulation Virtuelle pour l’apprentissage de La chirurgie de cataracte. J. Fr. Ophtalmol. 43, 334–340 (2020).

Nair, A. G. et al. Effectiveness of simulation-based training for manual small incision cataract surgery among novice surgeons: a randomized controlled trial. Sci. Rep. 11, 10945 (2021).

Geary, A. et al. The impact of distance cataract surgical wet laboratory training on cataract surgical competency of ophthalmology residents. BMC Med. Educ. 21, 219 (2021).

Jeang, L. J. et al. Rate of posterior capsule rupture in phacoemulsification cataract surgery by residents with institution of a wet laboratory course. J. Acad. Ophthalmol. 2017 14, e70–e73 (2022).

Lansingh, V. C. et al. Embracing technology in cataract surgical training - The way forward. Indian J. Ophthalmol. 70, 4079–4081 (2022).

Khan, A., Rangu, N., Thanitcul, C., Riaz, K. M. & Woreta, F. A. Ophthalmic education: the top 100 cited articles in ophthalmology journals. J. Acad. Ophthalmol. 2017. 15, e132–e143 (2023).

Thomsen, A. S. S. et al. Operating room performance improves after Proficiency-Based virtual reality cataract surgery training. Ophthalmology 124, 524–531 (2017).

Nayer, Z. H., Murdock, B., Dharia, I. P. & Belyea, D. A. Predictive and construct validity of virtual reality cataract surgery simulators. J. Cataract Refract. Surg. 46, 907–912 (2020).

Deuchler, S. et al. Clinical efficacy of simulated vitreoretinal surgery to prepare surgeons for the upcoming intervention in the operating room. PloS One. 11, e0150690 (2016).

Lee, R. et al. A systematic review of simulation-based training tools for technical and non-technical skills in ophthalmology. Eye Lond. Engl. 34, 1737–1759 (2020).

Carr, L., McKechnie, T., Hatamnejad, A., Chan, J. & Beattie, A. Effectiveness of the Eyesi surgical simulator for ophthalmology trainees: systematic review and meta-analysis. Can. J. Ophthalmol. 59, 172–180 (2024).

Deuchler, S. et al. Simulator-Based versus traditional training of fundus biomicroscopy for medical students: A prospective randomized trial. Ophthalmol. Ther. 13, 1601–1617 (2024).

Setting standards in medical training, Training simulators, Haag-Streit Group. https://haag-streit.com/en/Products/Categories/Simulators_training/Training_simulators# (2023).

Oseni, J. et al. National access to EyeSi simulation: A comparative study among U.S. Ophthalmology residency programs. J. Acad. Ophthalmol. 15, e112–e118 (2023).

Deuchler, S. et al. Efficacy of Simulator-Based Slit lamp training for medical students: A prospective, randomized trial. Ophthalmol. Ther. 12, 2171–2186 (2023).

Deuchler, S., Dail, Y. A., Koch, F., Flockerzi, E. & Seitz, B. Introduction of the Eyesi slit-lamp simulator as a diagnostic training system. Indian J. Ophthalmol. 72, 1526–1527 (2024).

Broyles, J. R., Glick, P., Hu, J. & Lim, Y. W. Cataract blindness and simulation-based training for cataract surgeons: an assessment of the HelpMeSee approach. Rand Health Q. 3, 7 (2013).

Lam, C. K., Sundaraj, K., Sulaiman, M. N. & Qamarruddin, F. A. Virtual phacoemulsification surgical simulation using visual guidance and performance parameters as a feasible proficiency assessment tool. BMC Ophthalmol. 16, 88 (2016).

Lam, C. K., Sundaraj, K. & Sulaiman, M. N. A systematic review of phacoemulsification cataract surgery in virtual reality simulators. Med. Kaunas Lith. 49, 1–8 (2013).

Haskins, I. N. et al. Current status of resident simulation training curricula: pearls and pitfalls. Surg. Endosc. 38, 4788–4797 (2024).

Lu, J., Cuff, R. F. & Mansour, M. A. Simulation in surgical education. Am. J. Surg. 221, 509–514 (2021).

Filipe, H. et al. Good practices in simulation-based education in ophthalmology – A thematic series. An initiative of the simulation subcommittee of the ophthalmology foundation part IV: recommendations for incorporating simulation-based education in ophthalmology training programs. Pan-Am J. Ophthalmol. 5, 38 (2023).

Martin, G., Chapron, T., Bremond-Gignac, D., Caputo, G. & Cochereau, I. [Assessment of ophthalmological surgical training in Île-de-France: results of a survey on 89 residents]. J. Fr. Ophtalmol. 45, 883–893 (2022).

Mathis, T. et al. Evaluation of the time required to complete a cataract training program on EyeSi surgical simulator during the first-year residency. Eur. J. Ophthalmol. https://doi.org/10.1177/11206721221136322 (2022).

Cataract Surgery Training - CST. Ophthalmologica The Belgian Ophthalmoc Associations https://www.ophthalmologia.be/page.php?edi_id=1883 (2025).

Introduction to Ophthalmic Surgery course. The Royal College of Ophthalmologists https://ihub.rcophth.ac.uk/RCO/Events/Event_Display.aspx?EventKey=IOS100425 (2025).

Folgar, F. A., Wong, J., Helveston, E. M. & Park, L. Surgical outcomes in cataract surgeries performed by residents training with EYESI simulator system. Invest. Ophthalmol. Vis. Sci. (2007).

Daly, M. K., Gonzalez, E., Siracuse-Lee, D. & Legutko, P. A. Efficacy of surgical simulator training versus traditional wet-lab training on operating room performance of ophthalmology residents during the capsulorhexis in cataract surgery. J. Cataract Refract. Surg. 39, 1734–1741 (2013).

McCannel, C. A. Continuous curvilinear capsulorhexis training and non-rhexis related vitreous loss: The specificity of virtual reality simulator surgical training (An American Ophthalmological Society Thesis). Trans. Am. Ophthalmol. Soc. 115, T2 (2017).

Pokroy, R. et al. Impact of simulator training on resident cataract surgery. Graefes Arch. Clin. Exp. Ophthalmol. 251, 777–781 (2013).

Staropoli, P. C. et al. Surgical simulation training reduces intraoperative cataract surgery complications among residents. Simul. Healthc. 13, 11–15 (2018).

Jacobsen, M. F. et al. Correlation of virtual reality performance with real-life cataract surgery performance. J. Cataract Refract. Surg. 45, 1246–1251 (2019).

Ferris, J. D. et al. Royal College of Ophthalmologists’ National Ophthalmology Database study of cataract surgery: report 6. The impact of EyeSi virtual reality training on complications rates of cataract surgery performed by first and second year trainees. Br. J. Ophthalmol. 104, 324–329 (2020).

Ng, D. S. C. et al. Impact of virtual reality simulation on learning barriers of phacoemulsification perceived by residents. Clin. Ophthalmol. Auckl. NZ. 12, 885–893 (2018).

Mahr, M. A. & Hodge, D. O. Construct validity of anterior segment anti-tremor and forceps surgical simulator training modules: attending versus resident surgeon performance. J. Cataract Refract. Surg. 34, 980–985 (2008).

Ahmed, Y., Scott, I. U. & Greenberg, P. B. A survey of the role of virtual surgery simulators in ophthalmic graduate medical education. Graefes Arch. Clin. Exp. Ophthalmol. 249, 1263–1265 (2011).

Young, B. K. & Greenberg, P. B. Is virtual reality training for resident cataract surgeons cost effective? Graefes Arch. Clin. Exp. Ophthalmol. 251, 2295–2296 (2013).

Lowry, E. A., Porco, T. C. & Naseri, A. Cost analysis of virtual-reality phacoemulsification simulation in ophthalmology training programs. J. Cataract Refract. Surg. 39, 1616–1617 (2013).

Ng, D. S. et al. Cost-effectiveness of virtual reality and wet laboratory cataract surgery simulation. Med. (Baltim). 102, e35067 (2023).

Qi, Z., Corr, F., Grimm, D., Nimsky, C. & Bopp, M. H. A. Extended Reality-Based Head-Mounted displays for surgical education: A Ten-Year systematic review. Bioeng. Basel Switz. 11, 741 (2024).

Kosieradzki, M., Lisik, W., Gierwiało, R. & Sitnik, R. Applicability of augmented reality in an organ transplantation. Ann. Transpl. 25, e923597 (2020).

ESCRS Moving Simulator. ESCRS https://www.escrs.org/education/escrs-moving-simulator/ (2022).

Nair, A. G., Mishra, D. & Prabu, A. Cataract surgical training among residents in India: results from a survey. Indian J. Ophthalmol. 71, 743–749 (2023).

Ní Dhubhghaill, S. et al. Cataract surgical training in Europe: European Board of Ophthalmology survey. J. Cataract Refract. Surg. 49, 1120–1127 (2023).

Yaïci, R. et al. [Cataract surgery training in France: analysis of the results of the European Board of Ophthalmology survey in the French cohort]. J. Fr. Ophtalmol. 48, 104383 (2025).

Yaïci, R. et al. Training in cataract surgery in Spain: analysis of the results of a survey of the European Board of Ophthalmology in a Spanish cohort. Arch. Soc. Esp. Oftalmol. 99, 373–382 (2024).

Yaïci, R. et al. Cataract surgery training in Germany: A survey by the European Board of Ophthalmology. Klin. Monatsbl Augenheilkd. https://doi.org/10.1055/a-2462-8222 (2025).

Yaïci, R. et al. Cataract surgical training: analysis of the results of the European Board of Ophthalmology survey in the Swiss cohort. Eur. J. Ophthalmol. https://doi.org/10.1177/11206721241304052 (2024).

Mondal, S. et al. What do retina fellows-in-training think about the vitreoretinal surgical simulator: A multicenter survey. Indian J. Ophthalmol. 71, 3064–3068 (2023).

Cheema, M., Anderson, S., Hanson, C. & Solarte, C. Surgical simulation in Canadian ophthalmology programs: a nationwide questionnaire. Can. J. Ophthalmol. 58, e11–e13 (2023).

Dormegny, L. et al. Is it the right time to promote competency-based European training requirements in ophthalmology? A European Board of Ophthalmology survey. Acta Ophthalmol. (Copenh). https://doi.org/10.1111/aos.17433 (2024).

Mao, R. Q. et al. Immersive virtual reality for surgical training: A systematic review. J. Surg. Res. 268, 40–58 (2021).

Ticonosco, M. et al. From simulation to surgery, advancements and challenges in robotic training for radical prostatectomy: a narrative review. Chin. Clin. Oncol. 13, 55 (2024).

Sinha, A. et al. Current practises and the future of robotic surgical training. Surgeon 21, 314–322 (2023).

Wasson, E., Thandi, C. & Bray, A. The use of a surgical logbook to improve training and patient safety: A retrospective analysis of 6 years’ experience in Bristol, UK. Skin. Health Dis. 4, e386 (2024).

Delhorme, J. B. et al. Why and how to implement an electronic resident’s surgical logbook to improve operating-room training? First 5-year feedback from a French center. J. Visc. Surg. 159, 450–457 (2022).

Ripa, M. & Sherif, A. Cataract surgery training: report of a trainee’s experience. Oman J. Ophthalmol. 16, 59–63 (2023).

Kaur, H. et al. Operative caseload of general surgeons working in a rural hospital in central Australia. ANZ J. Surg. https://doi.org/10.1111/ans.19323 (2024).

Lansingh, V. C. & Nair, A. G. More than simulation: the HelpMeSee approach to cataract surgical training. Commun. Eye Health J. 36, (2023).

Lynds, R., Hansen, B., Blomquist, P. H. & Mootha, V. V. Supervised resident manual small-incision cataract surgery outcomes at large urban United States residency training program. J. Cataract Refract. Surg. 44, 34–38 (2018).

VRmNet Eyesi Surgical Brochure. https://fr.slideshare.net/slideshow/vrmnet-eyesi-surgical-brochure/245054308 (2021).

Raval, N., Hawn, V., Kim, M., Xie, X. & Shrivastava, A. Evaluation of ophthalmic surgical simulators for continuous curvilinear capsulorhexis training. J. Cataract Refract. Surg. 48, 611–615 (2022).

Yaïci, R. et al. Validity evidence of a new virtual reality simulator for phacoemulsification training in cataract surgery. Sci. Rep. 14, 25524 (2024).

Matwala, K., Shakir, T., Bhan, C. & Chand, M. The surgical metaverse. Cir. Esp. Engl. Ed. 102, S61–S65 (2024).

Antaki, F. et al. Democratizing vitreoretinal surgery training with a portable and affordable virtual reality simulator in the metaverse. Transl Vis. Sci. Technol. 13, 5 (2024).

Baruch, Y. & Holtom, B. C. Survey response rate levels and trends in organizational research. Hum. Relat. 61, 1139–1160 (2008).

Acknowledgements

The authors thank all participants who took the time to answer the questionnaire and thereby contributed to this study.

Author information

Contributions

L.D., R.Y. and E.K. contributed to the acquisition, analysis and interpretation of data and drafted the work. S.D., C.A. and A.B. contributed to the analysis of data and designed the software used for this purpose. J-L.B., G.D., B.D. and H.F. contributed to the design of the work and revised it. E.F., D.G., P.G. and B.H. participated in the acquisition and interpretation of data and revised the work. S.K., V.L., R.R. and J-M.A. participated in the interpretation of data and revised the work. G.R. and A.R. participated in the conception of the work and revised it. A.S., M.S., B.S. and L.S. designed the work, participated in the creation of the software to analyze data, and revised the work. A.S.T., N.C. and A.L. designed the work, contributed to the acquisition of data and revised the work. T.B. designed the work, participated in the acquisition and interpretation of data and revised the work.

Ethics declarations

Competing interests

Five of the authors of the manuscript (C. Ahiwalay, A. Bacchav, V.C. Lansingh, R. Rafanomezantsoa and J-M Andre) are currently employed by HelpMeSee, Inc. (Jersey City, New York). All the other authors have no financial aid to disclose.

Ethics approval and consent to participate

Ethics approval was obtained from the Ethics Committee of Strasbourg University Hospital. Respondents were enrolled after providing written informed consent.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Dormegny, L., Yaïci, R., Koestel, E. et al. Global trends and practice patterns in virtual reality simulation training for ophthalmic surgery: an international survey use of virtual reality simulation training around the world. Sci Rep 15, 30886 (2025). https://doi.org/10.1038/s41598-025-16227-7

DOI: https://doi.org/10.1038/s41598-025-16227-7