Abstract

Digital e-governance has grown tremendously with the information technology revolution. Banking, healthcare, and insurance are among the sectors that rely on ownership identification at various stages of service provision, and watermarking has been employed as a primary means of authenticating stakeholders in such circumstances. This work proposes a three-layer, feature-dependent image watermarking approach in the transform domain. In this Discrete Wavelet Transform (DWT) based approach, the first decomposition level holds the singular values of an encrypted logo. In the second decomposition level, the textual authentication signature is embedded as an arithmetic coding tag. The third decomposition level holds the identity of the owner in compressed form using run-length coding. The novelty of the scheme lies in embedding a heavy-payload watermark in the chosen grayscale cover image by combining feature extraction and data compression, with a focus on preserving perceptual transparency and robustness. Geometric and noise attacks, including Gaussian noise, salt and pepper noise, rotation, and cropping, were applied to the watermarked image to assess its resistance to attacks. After the watermarks are extracted one by one, the cover image is recovered through a Convolutional Neural Network (CNN) with a very low Mean Square Error (MSE). The proposed DWT-based scheme achieves high perceptual transparency, as demonstrated by Structural Similarity Index Measure (SSIM) and Normalised Correlation (NC) values that approach unity. Integrating the CNN enhances robustness by recovering images after various attacks, as evidenced by a PSNR of about 44 dB. Additionally, high SSIM (> 0.99) and NC (~ 1.0) values indicate enhanced perceptual transparency and robustness. The bit error rate remained minimal post-attack, confirming reliable watermark recovery with CNN-aided restoration.

Introduction

With the progression in information sharing, multimedia content has gained significant interest among researchers. The rapid growth of multimedia transmission, both online and offline, creates a unique demand for security and privacy. Digital Rights Management (DRM) is a crucial issue that must be addressed in such a scenario. Digital watermarking is a fundamental technique providing a promising solution to DRM issues. It is a method of authentication for multimedia content to secure integrity. Watermarking is used in authentication for communication and transmissions, copyright protection for intellectual properties, copyright control, broadcast monitoring, forensic applications, and tamper detection. It encompasses e-health, fingerprinting, forensic, social, digital content protection, e-voting, driver licenses, military, remote education, media file archiving, broadcast monitoring, and digital cinema1,2,3.

The image watermarking framework involves the host image and the watermark. In the embedding process, the watermark is inserted into the host image to assert ownership. The resultant watermarked image is transmitted over the communication channel and should be resilient against intentional and random noise during transmission. Imperceptibility, robustness, security, and capacity are the prime design parameters. However, because these objectives conflict, they cannot all be achieved simultaneously, so a trade-off is maintained between them depending on the application. Existing watermarking algorithms are implemented in the spatial or transform domain to achieve authentication or data integrity, and they are expected to handle large payloads without compromising visual quality. Watermarking in the spatial domain is simple and achieves a high payload without compromising the visual quality of the host object. However, spatial embedding does not withstand image processing operations. Transform-domain approaches offer higher robustness.

Additionally, complicated and reversible embedding schemes are compatible only with a smaller payload. Transform-domain procedures provide better restoration, whereas simple spatial-domain approaches are vulnerable to image processing attacks4,5. Many spatial-domain and transform-domain watermarking schemes have been proposed6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35. N. Mohammad et al. proposed a lossless visible watermarking scheme that addresses the issue in Block Truncation Coding (BTC). In this method, Absolute Moment BTC (AMBTC) is utilised to preserve the full visual perception of the host image and to reconstruct the original image without any distortion6. Wong and Memon proposed a scheme for ownership verification that can detect any modification to the host image7.

Hierarchical watermarking is presented by Liu et al., where watermark extraction can be performed from either the encrypted or decrypted watermarked image, demonstrating the superiority of robustness and hierarchical security. The host image is initially divided into blocks and transformed into the wavelet domain. Then, the Arnold map is used to scramble the host image, where encryption is performed through Compressive Sensing. Finally, the watermark is embedded into the encrypted image through the Scalar Costa Scheme8. Liu et al. proposed a watermarking scheme against regional attacks on digital images. In this work, the variance and mean of the host image are calculated and used as side information, and scrambled watermarks are embedded into the divided parts of the host images. Watermark embedding steps are implemented by HVS and Particle Swarm Optimisation (PSO) methods9. Lang and Zhang recommend blind digital watermarking using the Fractional Fourier Transform (FRFT). The cover image is divided into non-overlapping blocks; each block is transformed using FRFT, and then each pixel value of the binary watermark is embedded into it. This watermarking solution resists JPEG compression noise attacks, image manipulation operations, and compound attacks10.

Yen and Huang et al. suggested a frequency-domain watermarking approach. DCT and back-propagation neural networks were used as significant operations to suppress the interferences that affect the watermark. A 32 × 32-pixel watermark has been embedded into a 256 × 256-pixel host image in the frequency domain, which is susceptible to common channel interferences. The DCT-BPNN method effectively enhanced the watermarking scheme’s resistance to attacks11. Roy and Pal presented a fusion image watermarking technique where RDWT-DCT plays a vital role. Embedding the watermark is performed after encrypting the watermark through the Arnold chaotic map to ensure confidentiality. Several attacks have been applied to this proposed technique to verify its robustness12. Nguyen et al. explained a DWT-based authentication scheme, a reversible watermarking technique with high tamper detection accuracy13.

SVD extracts the significant features which need to be embedded. The Genetic algorithm has been used to carry out host image analysis, identifying insensitive areas of the human eye where watermark embedding takes place14,15. Gonge and Ghatol have proposed the composition of the DCT and an SVD-based digital watermarking scheme. This scheme is implemented in three phases: embedding, attack, and extraction. Additionally, AES with a 256-bit key has been chosen to encrypt the watermarked image, providing an extra layer of security in terms of confidentiality16,17. Finally, an image watermarking scheme is proposed using the Arnold transform and the Back-propagation neural network (BPN)18.

Watermarking methods have been proposed for copyright protection using Singular Value Decomposition (SVD) and multi-objective ant colony optimisation. This transform-domain approach addresses a worst-case scenario of SVD, the False Positive Problem (FPP), and provides a solution to overcome it. The FPP occurs when singular values are used as secret keys during the embedding process. The Integer Wavelet Transform (IWT) has been used with the S and V matrices serving as dual keys19,20.

Li et al. present a robust watermarking approach based on the wavelet quantisation method. Significant Amplitude Difference (SAD) is adapted to localise the wavelet components in this proposed method. These significant wavelet coefficients have been considered for embedding the watermark, which increases robustness and fidelity21. Rastegar et al. also presented a hybrid watermarking algorithm that utilises the SVD and Radon transform. This method employs a simple watermark embedding procedure in which the original image is transformed using a 2D-DWT transform followed by a Radon transform. Finally, singular values of the watermark are embedded into the SVD-converted host image22.

Zear et al. have proposed a multiple watermarking approach in the transform domain for healthcare applications. This method used DWT, DCT, and SVD to embed the watermarks. BPNN is used to reduce the noise effects on the watermarked image. The security of the image watermark is achieved by performing encryption using the Arnold transform23. Fazli and Moeini have proposed an alternative transform domain approach to robust image watermarking using DWT, DCT, and SVD. In this work, geometrical attacks have been considered a significant threat, and the solution to this problem is given by dividing the host image into four non-overlapping rectangular regions. The watermark is embedded independently into each of them24. A.K. Singh et al. recommend using an SVD-based digital watermarking technique to achieve domain conversion. BPNN is applied in the extracted watermark to reduce noise effects and interferences25. S. Singh et al. proposed a hybrid semi-blind watermarking approach that focuses on utilising redundant wavelets. This work presents image and text watermarking by adapting the Non-Subsampled Contourlet Transform (NSCT) and the Redundant Discrete Wavelet Transform (RDWT) to address shift-invariance and directional information26.

Ernawan and Ariatmanto have analysed the effectiveness of scaling factor techniques for copyright protection, considering various factors such as the strength of the watermark, the robustness of the scaling factor generation algorithm, and the resilience of the watermark against attacks, including image manipulations or geometric transformations27. Awasthi and Srivastava have proposed the SURF algorithm, which incorporates several techniques to enhance its robustness and efficiency. It utilises an integral image representation to compute box filters for efficient, fast convolution operations. It also employs a fast approximation method based on Haar wavelets to accelerate descriptor calculations28. Sivaraman et al. have proposed that puli kolam-assisted medical image watermarking on reconfigurable hardware depends on the chosen watermarking algorithm, puli kolam pattern design, and the targeted reconfigurable hardware platform. These components require expertise in image processing, cryptography, and FPGA programming to develop a complete system29. Ravichandran, D., et al. have proposed that ROI-based medical image watermarking is a research area, and the specific techniques and algorithms used can vary based on the application’s requirements and constraints30. Authors have proposed the Pigeon algorithm, which guides the embedding process by efficiently exploring the DCT coefficient space to find optimal locations and strengths for embedding the watermark31. Kumar et al. have enhanced the utilisation of the PSO algorithm, which optimises the embedding parameters to strike a balance between visibility and its ability to withstand attacks. The LWT provides a frequency-based representation that allows for efficient embedding and extraction of watermarks32.

Watermarking techniques in deep-learning environments aim to protect intellectual property rights, ensure authenticity, and trace the origin of deep-learning models and their associated data. Various watermarking techniques have been designed specifically for deep learning applications, and integrating deep learning algorithms into watermarking methods shows promise in improving robustness, security, and attack resistance33,34,35,36,37,38,39,40,41,42.

The significant observations on the existing schemes are as follows.

Watermarking in the wavelet sub-bands offers good spatial and frequency locality, as well as flexibility, and supports biometric signal analysis and real-time image compression techniques8,9,10,11,12,13. However, DWT suffers from a lack of directional and phase information and from shift sensitivity. On the other hand, many researchers have reported that SVD extracts only singular features, converts a 2D signal of varying size into a square size, is invariant to scaling attacks, and is highly robust against various attacks14,15,16,17,18,19,20,21,22. Still, restoration is hampered by the false-positive problem. Therefore, the proposed scheme combines SVD and DWT to enhance robustness by addressing these shortcomings.

Many research works consider a single watermark as the payload6,7,8,9,10,11,12,13,14,15,16,17. The proposed method introduces a multi-watermarking scheme that incorporates QR codes, text, and biometric images, such as fingerprints, as watermarks. This scheme increases the payload without compromising imperceptibility and robustness, which is achieved through the appropriate selection of the embedding area, an independent embedding rule, and a suitable lossless compression and encoding scheme. Although the proposed scheme handles the embedding process effectively, open-channel transmission sometimes yields unusable output. To address this, denoising schemes can be integrated. Existing image denoising schemes provide satisfactory output, but they require more time to process the data and fail at real-time learning, which is essential for handling unpredictable channel noise4,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38.

It is essential to note that the successful application of deep learning in watermarking relies on the availability of large and diverse training datasets, the careful selection of network architectures, and the use of appropriate loss functions for training the models. Deep learning in watermarking is an active area of research, and ongoing advancements continuously improve the performance, security, and efficiency of watermarking techniques32,33,34,35. A summary of related work is presented in Table 1.

Highlights of the proposed scheme are as follows.

1. Multiple watermarks: confidentiality, integrity, and authentication are achieved through a single proposed scheme.
2. Enhanced confidentiality: an encryption scheme and SVD compression are employed for Watermark 1.
3. Enriched imperceptibility: employing RLC and arithmetic lossless compression schemes on Watermark 2 removes redundancy, provides bit compactness, and protects the significant features.
4. RLC is chosen for Watermark 3 because it is lossless: the bifurcation and minutiae details are the binary features of Watermark 3, so after encoding only the significant details are embedded. Fuzzy hashing is developed for Watermark 3 for tamper verification and localisation.
5. The cover and watermarks can be recovered after an intentional or unintentional noise attack, with the support of an adaptive deep-learning denoising model.
6. Additionally, all the packed forms of confidential data are embedded independently in the wavelet coefficients.

The proposed algorithm can guarantee that the transmitted multimedia data is protected and authenticated using multilevel authentication verification. Thus, it can provide a complete, intelligent system-assisted security scheme that offers Confidentiality, Integrity, and Authentication (CIA). The paper is organised as follows: Sect. 2 presents the preliminaries, and Sect. 3 explains the proposed multilevel watermarking scheme. Simulation outcomes are given in Sect. 4. Finally, Sect. 5 concludes the overall findings of this scheme.

Preliminaries

Convolutional neural network (CNN)

A convolutional neural network is a deep-learning algorithm that accepts raw data, so explicit preprocessing need not be employed as a separate module. Noise models, the creation of a noisy image database, and feature extraction are key components of CNN processing. Convolutional neural networks have been used to enhance the adaptability of conventional schemes. The name originates from the mathematical operation of convolution. In a CNN, activation functions are chosen based on the application, and those functions are considered a second input for the convolution operation. Another speciality of the CNN is its architecture, which features flexible nodes and layers4,5.

Quantitative analysis

The proposed watermarking scheme should be analysed in terms of imperceptibility, robustness, and capacity. The following metrics are used to ensure the same.

MSE

Measures the average squared difference between pixel values of the original and test image.

PSNR

Indicates the peak error between two images; higher PSNR implies better quality.

NC

Measures similarity; values close to 1 mean high similarity.

\(I(i,j)\): original image pixel; \(K(i,j)\): test image pixel.

SSIM

Evaluates structural similarity; ranges from − 1 to 1 (1 = identical).

\(\sigma_{x}^{2},\sigma_{y}^{2}\): variances of images x and y.
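The four metrics above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the NC variant (energy-normalised inner product), the single-window (global) form of SSIM, the stabilising constants 0.01 and 0.03, and the 8-bit peak value of 255 are all assumptions, since the exact definitions used in the paper are not reproduced in this text.

```python
import numpy as np

def mse(I, K):
    """Mean squared difference between original I and test image K."""
    I = np.asarray(I, dtype=np.float64)
    K = np.asarray(K, dtype=np.float64)
    return float(np.mean((I - K) ** 2))

def psnr(I, K, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    err = mse(I, K)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / err))

def nc(I, K):
    """Normalised correlation (assumed variant: inner product over energy)."""
    I = np.asarray(I, dtype=np.float64)
    K = np.asarray(K, dtype=np.float64)
    denom = np.sqrt(np.sum(I ** 2) * np.sum(K ** 2))
    return float(np.sum(I * K) / denom) if denom > 0 else 0.0

def ssim_global(x, y, peak=255.0):
    """SSIM computed over the whole image as one window (a simplification)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2  # assumed constants
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()                        # sigma_x^2, sigma_y^2
    cov = np.mean((x - mx) * (y - my))
    return float(((2 * mx * my + c1) * (2 * cov + c2))
                 / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))
```

Practical SSIM implementations usually average the index over local sliding windows, so values from this global form may differ slightly from those reported in the tables.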

Proposed work

This watermarking method incorporates compression and encryption, thereby enhancing payload capacity and confidentiality. Three different watermarks (a company logo, biometric information, and text) are embedded into different levels of wavelet coefficients through sub-band coding. Arithmetic coding and run-length coding are used to compress the watermarks.
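As an illustration of the lossless compression step, the following is a minimal run-length coding sketch for a binary sequence, such as a flattened binary biometric watermark. The (value, run length) pair representation and the function names are assumptions made for this example; the paper does not specify the exact RLC format.

```python
def rle_encode(bits):
    """Collapse a sequence into (value, run_length) pairs."""
    runs = []
    for b in bits:
        if runs and runs[-1][0] == b:
            runs[-1][1] += 1          # extend the current run
        else:
            runs.append([b, 1])       # start a new run
    return [(value, length) for value, length in runs]

def rle_decode(runs):
    """Expand (value, run_length) pairs back into the original sequence."""
    out = []
    for value, length in runs:
        out.extend([value] * length)
    return out
```

Because decoding reproduces the input exactly, the scheme is lossless; it is most effective on binary images with long uniform runs.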

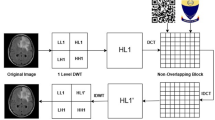

The singular features are extracted from the company logo watermark and encrypted using the chaotic logistic map. Figure 1 illustrates the fragile multiple watermarking scheme, and Table 2 describes the significance of each stage of the multiple watermarking process. In particular, SVD-based watermarking can suffer from false positive errors due to similarity in singular value patterns. Our approach mitigates this by using multiple watermarks in distinct sub-bands and applying selective encryption and encoding, which reduces the collision probability.
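A minimal sketch of this step is given below. It extracts the singular values of a logo with NumPy and scrambles their order using a permutation derived from a logistic-map sequence. The permutation-based cipher, the key values `x0` and `r`, and the burn-in length are assumptions chosen for illustration; the text does not state how the logistic map is applied to the singular values.

```python
import numpy as np

def logistic_sequence(x0, r, n, burn_in=100):
    """Iterate x <- r*x*(1-x) and return n values after a burn-in period."""
    x = x0
    for _ in range(burn_in):
        x = r * x * (1.0 - x)
    seq = np.empty(n)
    for i in range(n):
        x = r * x * (1.0 - x)
        seq[i] = x
    return seq

def encrypt_singular_values(logo, x0=0.3141, r=3.99):
    """Return scrambled singular values plus U and Vt (hypothetical key: x0, r)."""
    U, s, Vt = np.linalg.svd(np.asarray(logo, dtype=np.float64),
                             full_matrices=False)
    perm = np.argsort(logistic_sequence(x0, r, s.size))  # key-dependent order
    return s[perm], U, Vt

def decrypt_singular_values(s_enc, x0=0.3141, r=3.99):
    """Invert the key-dependent permutation."""
    perm = np.argsort(logistic_sequence(x0, r, s_enc.size))
    s = np.empty_like(s_enc)
    s[perm] = s_enc
    return s
```

With the correct key, `U @ np.diag(decrypt_singular_values(s_enc)) @ Vt` reconstructs the logo up to floating-point error.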

Tables 2 and 3 highlight the importance of fusion, specifically in terms of the method, selection, and allocation of the sub-band in the proposed scheme.

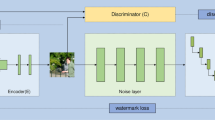

DCNN aided watermark restoration

Figure 2 illustrates the proposed adaptive denoising CNN model, an effective method for restoring the watermark from a corrupted version. Prediction against unknown noise is difficult; the adaptive CNN can learn from exposure and adapt to meet future demands. The watermarked image may often degrade during transmission due to nonlinear noise, cropping, and rotation attacks. As a result, the extracted watermarks may not contain meaningful information, or the ROI may be corrupted. However, the extracted watermarks and cover image must be fully restored. This demand is met by the proposed DCNN, which recovers images through denoising. A key feature of this algorithm is its anytime learning capability: the proposed algorithm utilises a CNN model pre-trained on selected image noise types, and the DCNN leverages self-learning from runtime input to improve prediction accuracy on subsequent inputs. For security applications, the set of images cannot be limited, and a training image dataset is not easily constructed, so a pre-trained model is considered and the CNN is customised as required. Our proposed architecture in Fig. 2 is designed for image denoising under variable noise conditions. The model is trained from scratch using the Pristine dataset for analysis purposes; however, it can be extended to other datasets based on the target application. This enables the model to learn noise patterns relevant to real-world scenarios in critical applications.

The DCNN model operates as follows.

1. Create a dataset that stores perfect watermark images and cover images.
2. Develop the noise models for Gaussian, speckle, salt and pepper, and rotation to create a denoising image database from immaculate watermark3 images.
3. Choose the number of denoising layers and nodes in each layer.
4. Train the network with the prepared dataset, specifying the denoising image data store as input and the respective noiseless image as the target for each training iteration. After the training phase, adaptive CNN layers are fixed.
5. The pooling layer decides the filter size based on the dimension reduction.
6. The convolution layer performs a convolution function for feature map extraction.
7. Feature vectors are stored in the resultant database.
8. After training the network, allow the network to process a noisy image.
9. DCNN calculates feature vectors for the runtime input and then finds the distance vector between the features of the runtime input and the stored features.
10. After the minimum-distance feature vector is calculated, the corresponding immaculate watermark image is declared the winner.
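Steps 9 and 10 amount to a nearest-neighbour search in feature space. The sketch below assumes that feature vectors have already been computed by the network and uses the Euclidean distance; the distance measure and the function name are assumptions, since the text does not name them.

```python
import numpy as np

def nearest_reference(query_feat, ref_feats):
    """Return (index, distance) of the stored feature vector closest to the query.

    query_feat: 1-D feature vector of the runtime (noisy) input.
    ref_feats:  2-D array, one stored feature vector per clean watermark image.
    """
    q = np.asarray(query_feat, dtype=np.float64)
    refs = np.asarray(ref_feats, dtype=np.float64)
    dists = np.linalg.norm(refs - q, axis=1)   # Euclidean distance (assumed)
    idx = int(np.argmin(dists))
    return idx, float(dists[idx])
```

The returned index identifies which clean watermark image in the database is declared the winner for the noisy input.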

Results and discussion

A varied set of images was used to evaluate the proposed scheme. Watermark 1 (company ID) and watermark 3 (biometric ID), of sizes 64 × 64 and 32 × 32, were embedded in host images of size 256 × 256. Instead of actual company ID and biometric ID images, gray images with a random distribution of gray levels are considered as watermark 1 and watermark 3. Figure 3a–j shows the cover test images: Male, Cameraman, Chemical plant, Girl, and medical images44. Figure 4a–d shows the sample gray images of watermark 1 (authentication check), and Fig. 5a–d shows the sample gray images of watermark 3 (integrity check). This section presents various investigations to evaluate the performance of the proposed scheme.

Table 4 reports the imperceptibility of the watermarked image for varying gain factors; the PSNR never fell below 30 dB, even at higher gain factors, and the SSIM and NC values are close to 1. The values in Table 4 are the outcome of an analysis conducted to determine the gain factor. The proposed scheme applies DWT sub-band coding up to three levels, and one watermark is embedded at each level. Therefore, a gain factor must be chosen at each level to achieve good perceptual transparency and robustness, maintaining the trade-off between the two. Based on the experimental PSNR and SSIM outcomes (Table 4), a gain factor of 0.05 was selected to optimise robustness without compromising visual fidelity; it offers a PSNR above 43 dB. This implies that the proposed watermarking methodology preserves the quality of the image (Figs. 6 and 7).

Table 5 presents the selection of the compression algorithm for confidential data, which offers better imperceptibility than the existing methods. The schemes are compared through two metrics, PSNR and NC, which together reflect the trade-off between perceptual transparency and robustness. When the PSNR exceeds 40 dB and the NC approaches unity, the scheme achieves excellent image quality during both embedding and recovery. DWT-based embedding enables the scheme to embed in any sub-band. In the proposed scheme, three different watermarks are embedded into LH1, HL2, and LL3, which is possible due to sub-band coding. An equal payload is inserted at every sub-band level and at every pixel, yet the PSNR and NC values exceed the required level. These results are compared with recent schemes in Table 5.
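The allocation of the three payloads to LH1, HL2, and LL3 can be sketched with a three-level Haar decomposition written directly in NumPy. The Haar filter, the additive rule S' = S + αW with α = 0.05, the placement of each payload in the top-left corner of its sub-band, and the non-blind extraction are assumptions made for illustration; the paper's exact embedding rule is not given in this text. Sub-band naming conventions (LH versus HL) also vary between libraries.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar_dwt2(x):
    """One level of the orthonormal 2-D Haar DWT (even-sized input assumed)."""
    x = np.asarray(x, dtype=np.float64)
    lo = (x[:, 0::2] + x[:, 1::2]) / SQRT2    # low-pass along each row
    hi = (x[:, 0::2] - x[:, 1::2]) / SQRT2    # high-pass along each row
    LL = (lo[0::2] + lo[1::2]) / SQRT2
    LH = (lo[0::2] - lo[1::2]) / SQRT2        # naming is a convention choice
    HL = (hi[0::2] + hi[1::2]) / SQRT2
    HH = (hi[0::2] - hi[1::2]) / SQRT2
    return LL, LH, HL, HH

def haar_idwt2(LL, LH, HL, HH):
    """Inverse of haar_dwt2 (perfect reconstruction)."""
    rows, cols = LL.shape
    lo = np.empty((2 * rows, cols))
    hi = np.empty((2 * rows, cols))
    lo[0::2], lo[1::2] = (LL + LH) / SQRT2, (LL - LH) / SQRT2
    hi[0::2], hi[1::2] = (HL + HH) / SQRT2, (HL - HH) / SQRT2
    x = np.empty((2 * rows, 2 * cols))
    x[:, 0::2], x[:, 1::2] = (lo + hi) / SQRT2, (lo - hi) / SQRT2
    return x

def _add(band, payload, alpha):
    """Additively embed payload into the top-left corner of a sub-band."""
    out = band.copy()
    p = np.asarray(payload, dtype=np.float64)
    out[:p.shape[0], :p.shape[1]] += alpha * p
    return out

def embed_three_levels(cover, w1, w2, w3, alpha=0.05):
    """Embed w1 in LH1, w2 in HL2 and w3 in LL3, then reconstruct the image."""
    LL1, LH1, HL1, HH1 = haar_dwt2(cover)
    LL2, LH2, HL2, HH2 = haar_dwt2(LL1)
    LL3, LH3, HL3, HH3 = haar_dwt2(LL2)
    LH1, HL2, LL3 = _add(LH1, w1, alpha), _add(HL2, w2, alpha), _add(LL3, w3, alpha)
    LL2 = haar_idwt2(LL3, LH3, HL3, HH3)
    LL1 = haar_idwt2(LL2, LH2, HL2, HH2)
    return haar_idwt2(LL1, LH1, HL1, HH1)

def extract(marked_band, cover_band, shape, alpha=0.05):
    """Non-blind extraction: difference of sub-bands divided by the gain."""
    h, w = shape
    return (marked_band - cover_band)[:h, :w] / alpha
```

For a 256 × 256 cover, LH1 is 128 × 128, HL2 is 64 × 64, and LL3 is 32 × 32, which bounds the size of each payload in this sketch. In practice the watermarked image is rounded to 8-bit values, which introduces a small extraction error that this floating-point illustration ignores.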

The analysis was conducted using the selected cover image with different watermarks 1, 2, and 3. Various watermarks (images 1 and 3) and different sizes of text (watermark 2) are selected for analysis. The values confirm that perceptual transparency is maintained in the watermarked image, even when different distributions of grey levels in images and different lengths of text are chosen.

In Fig. 8, the MSE distribution, being clustered towards lower values, indicates better image imperceptibility. The PSNR ranges from 41 dB to 44 dB, indicating high image quality with the watermark embedded. The watermarked image is structurally similar to the original image, with a median SSIM of 0.994 and a median normalised correlation of more than 0.9994.

Figure 9 shows that the watermarked image quality is preserved when the signature and the watermark image are embedded: the parametric values listed in Fig. 9 remain almost constant. Thus, it can be inferred that the quality of the base image is maintained even when it is embedded with varied combinations of images, and the performance remains consistent across nearly all combinations of the watermarks. In Table 6, the image performs well even as more textual data is integrated into it. In the proposed approach, the metric values remain nearly constant despite a steep increase in the number of characters embedded into the image, which indicates the robustness of the method.

The values in Table 7 indicate that arithmetic coding helps achieve better imperceptibility. Also, the PSNR of the proposed work is comparable to that of earlier work. The test images in Figs. 3a–j and 4a–d are used to analyse the imperceptibility of watermarked images while varying the size of the watermark images. From Fig. 6, it is inferred that the size of the biometric ID image has a lesser influence on the imperceptibility of the watermarked image, whereas the size of the company ID image has a significant impact. The biometric ID is embedded in the LL sub-band of DWT level 3, and the company ID is embedded in the LH sub-band of DWT level 1.

Figure 7 demonstrates that the histograms of the cover and watermarked objects are similar, confirming imperceptibility and implying that the histogram, which represents the count of pixels at every intensity level, remains equal before and after embedding due to the sub-band coding of DWT. The Wavelet sub-band scheme offers a high level of robustness and imperceptibility; additionally, selecting a suitable sub-band for embedding the payload is crucial. DWT sub-band coding is feasible for any image size, not limited to block size, and can be customised to any frequency level. DWT has a multiresolution ability, which transforms images into any range of frequencies. This is confirmed with tabulated metrics against different payloads. RLC and SVD achieve a higher payload, which includes the images and alphanumeric characters. The analysis is conducted using various images and a maximum text payload. The tabulated metrics confirm the potential of the proposed scheme.

Table 8 shows that the mean square error values are ideally equal to zero, so the PSNR values are infinite. The recovered images correlate perfectly with the watermark1 embedded in the base image.

Table 9 shows the quality measures of the watermark images recovered from the watermarked image at the receiver end, provided that no noise is introduced in transit. When noise is added in transit, however, the recovered image data are highly corrupted. The average execution time for watermarking depends on the sub-band coding, the number of watermarks, the size of the watermarks, and the size of the cover object. For the samples considered (watermark 1 and watermark 3 images, of sizes 64 × 64 and 32 × 32, embedded in host images of size 256 × 256), the watermarking process takes 2 s and extraction takes 1.6 s. Watermarked images undergo various image processing attack analyses. Still, the data can be recovered to obtain the image by including the CNN.

Geometrical and signal processing attack

This section presents the IQM analysis to evaluate the efficiency of the proposed scheme after the channel attack, as shown in Fig. 10.

Figures 10a–d and 11a,b show the original watermarked image and its attacked versions: salt and pepper noise of strength 0.1, Gaussian noise of strength 0.01, speckle noise of strength 0.04, rotation of \(\pm 10^{\circ}\), and cropping of approximately 15% of the original images, respectively.
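The three noise attacks can be simulated as in the sketch below. It assumes that the stated strengths denote the noise density for salt and pepper noise (0.1) and the variance on a [0, 1] intensity scale for Gaussian (0.01) and speckle (0.04) noise, and that speckle noise is multiplicative zero-mean Gaussian noise; the paper does not define "strength", so these interpretations and the function names are assumptions.

```python
import numpy as np

def _to_uint8(x):
    """Map a [0, 1] float image back to 8-bit with clipping."""
    return np.clip(np.rint(x * 255.0), 0, 255).astype(np.uint8)

def add_gaussian(img, var=0.01, rng=None):
    """Additive zero-mean Gaussian noise with the given variance."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(img, dtype=np.float64) / 255.0
    return _to_uint8(x + rng.normal(0.0, np.sqrt(var), x.shape))

def add_speckle(img, var=0.04, rng=None):
    """Multiplicative noise: x + x * n, with n zero-mean Gaussian (assumed)."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(img, dtype=np.float64) / 255.0
    return _to_uint8(x + x * rng.normal(0.0, np.sqrt(var), x.shape))

def add_salt_pepper(img, density=0.1, rng=None):
    """Set roughly `density` of the pixels to 0 or 255 with equal probability."""
    rng = np.random.default_rng() if rng is None else rng
    out = np.array(img, dtype=np.uint8, copy=True)
    u = rng.random(out.shape)
    out[u < density / 2] = 0                          # pepper
    out[(u >= density / 2) & (u < density)] = 255     # salt
    return out
```

Passing a seeded `np.random.default_rng(seed)` makes the attacks reproducible across experiments.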

The model achieved 100% training accuracy very early and maintained it, with the loss dropping quickly to near zero (Fig. 12), indicating an excellent fit to the training data. The error metric values of the recovered images are unacceptable, because image content is discernible only when the PSNR is above 30 dB. As inferred from Table 10, the recovered watermark images are not always clear due to noise in the watermarked images and therefore cannot be utilised for authentication purposes. The cover image is also essential in some cases. Instead, these recovered watermarks are given to the trained convolutional neural network (CNN), and the output converges to the original watermark images.

Watermark restoration using CNN

In the proposed watermarking scheme, a convolutional neural network (CNN) is incorporated to enhance robustness. The CNN is trained to resist various attacks, such as cropping, scaling, shifting, compression, Gaussian noise, and salt and pepper noise. A CNN can denoise a degraded image and recover the original. Training exposes the CNN to the possible noise types over different ranges. A CNN does not require an explicit mathematical noise model to be customised; it can learn from features such as contours, repeated patterns, small changes, and the relationship between background and foreground. CNNs are therefore well suited to denoising-based image recovery. The denoising performance depends on the training, architecture, and learning parameters.

Images from the SIPI database and medical images are used for data augmentation. The image dataset comprises over 5,000 images, with 80% reserved for the training set. Specifically, we used a total of 5000 images, of which 2500 were used as cover images and 2500 as watermark images. These images were sourced from the SIPI database to ensure variability and robustness. We utilised a pre-trained DnCNN model to leverage transfer learning and improve convergence, and fine-tuned it on the aforementioned dataset to adapt the model to our specific watermarking task. The DnCNN model has 17 layers, 64 filters in each layer, residual learning, batch normalisation, and ReLU.

The number of convolutional layers is well balanced: shallow networks and very deep networks (20+ layers) are prone to overfitting or vanishing gradients. With residual learning (where the network learns the noise, not the clean image), DnCNN is simpler to train, even with deeper models. This residual strategy also enhances denoising performance by reducing learning complexity and makes training more stable with deeper layers. These design decisions gave the best PSNR, SSIM, and generalisation performance for Gaussian denoising while keeping the model compact, efficient, and trainable. Each layer employs 64 filters (nodes) of size 3 × 3; 64 is a typical choice in most CNNs as a trade-off between feature richness and computation time, since more filters increase memory consumption and training time with minimal performance gain. Batch normalisation is employed in the majority of layers to stabilise training and facilitate deeper networks. ReLU activation introduces non-linearity to learn intricate mappings. Same padding preserves spatial dimensions, which is critical for pixel-wise prediction.
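The consequences of these design choices can be checked with simple arithmetic. The sketch below computes the receptive field of a stack of stride-1 3 × 3 convolutions and a rough count of learnable parameters. It assumes a single-channel (grayscale) input and output, bias terms only in the first and last convolutions, and batch normalisation (one scale and one shift per filter) in each of the intermediate layers; these layout details are assumptions and may differ from the model actually used.

```python
def receptive_field(depth=17, kernel=3):
    """Receptive field (in pixels per side) of `depth` stacked stride-1 convolutions."""
    return depth * (kernel - 1) + 1

def dncnn_param_count(depth=17, filters=64, kernel=3, channels=1):
    """Approximate learnable parameters under the assumed layer layout."""
    k2 = kernel * kernel
    first = channels * filters * k2 + filters          # conv + bias, then ReLU
    middle_layer = filters * filters * k2 + 2 * filters  # conv (no bias) + BN scale/shift
    last = filters * channels * k2 + channels          # conv + bias, outputs the residual
    return first + (depth - 2) * middle_layer + last

if __name__ == "__main__":
    print("receptive field:", receptive_field())
    print("parameters:", dncnn_param_count())
```

Under these assumptions, each output pixel depends on a 35 × 35 neighbourhood of the input, and the model has on the order of half a million parameters, which is consistent with the description of it as compact.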

Fig. 10 shows the loss and the training and validation accuracy of the retrained watermark restoration and denoising model on the custom dataset over 120 epochs. The curves illustrate a steep decline in the error of the recovered image caused by noise introduced during transmission. This drop is achieved by training the network over multiple epochs on the given training inputs. As a result, the embedded data can be retrieved accurately even after noise, rotation, or cropping attacks mounted by intruders in transit. In the training curve, accuracy approaches 100% and the loss falls almost to zero after 1200 iterations. This indicates that the retrained DnCNN can denoise images affected by both known and unknown noise. After retraining on the custom dataset, the model is employed for watermark restoration, where it removes the residual noise left by the various attacks.
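The training objective is not stated explicitly in this section. As a hedged reference, the residual formulation usually associated with DnCNN can be written as follows, where y (attacked image), x (clean image), v (distortion), R (network), Θ (weights), and M (number of training pairs) are illustrative notation rather than the authors' own:

$$y = x + v, \qquad \hat{x} = y - R(y;\Theta)$$

$$\ell(\Theta) = \frac{1}{2M}\sum_{i=1}^{M}\left\lVert R(y_i;\Theta) - (y_i - x_i)\right\rVert_F^2$$

Minimising this loss drives the predicted residual towards the actual distortion, so a loss close to zero corresponds to a restored image close to the clean one.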

A training dataset comprising many noisy versions of the images is created using augmentation techniques and random parameter changes. Given sufficient training time and a suitable architecture, the CNN learns to denoise watermarked images subjected to geometric and image-filtering attacks, including cropping, rotation, compression, and filtering. In comparison with recent works [36,37,38] and [43], the proposed work utilises a CNN to denoise the degraded images. Those works report NC values close to unity, and the proposed work is likewise evaluated using SSIM and NC. Both metrics are practically equal to one; the results are tabulated in Table 10 and are comparable to those of the recent works.

The image should be recoverable not only from the watermarked image but also from its noisy version. Because a filtering operation can introduce unwanted components into the image, the scheme was analysed against averaging, Gaussian, and median filtering with kernel sizes of 3 × 3, 5 × 5, and 9 × 9. The ability of the image to withstand compression with different compression coefficients Q was also verified. Further intentional attacks were considered: rotation (30°, 60°, and 90°), contrast adjustment, histogram equalisation, cropping by different percentages, scaling (shrinking and zooming), Gaussian noise with different mean (m) and variance (v), multiplicative speckle noise with different variance v, and impulse (salt-and-pepper) noise with different noise density d.
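The noise attacks listed above can be simulated in a few lines. The sketch below is illustrative only and is not the authors' implementation: it uses a synthetic 64 × 64 image, treats mean and variance as values on a [0, 1] intensity scale, models speckle with Gaussian-distributed multiplicative noise, and picks arbitrary parameter values. All function names are hypothetical.

```python
import math
import random

Image = list[list[int]]  # 8-bit grayscale image stored as rows of pixel values


def _clip(value: float) -> int:
    """Round and clamp a value to the 8-bit range [0, 255]."""
    return max(0, min(255, int(round(value))))


def gaussian_noise(img: Image, mean: float, var: float, rng: random.Random) -> Image:
    """Additive Gaussian noise; mean and variance are on a [0, 1] scale."""
    sigma = math.sqrt(var)
    return [[_clip(p + 255.0 * rng.gauss(mean, sigma)) for p in row] for row in img]


def salt_and_pepper(img: Image, density: float, rng: random.Random) -> Image:
    """Set roughly a fraction `density` of the pixels to 0 or 255."""
    out = []
    for row in img:
        new_row = []
        for p in row:
            if rng.random() < density:
                new_row.append(255 if rng.random() < 0.5 else 0)
            else:
                new_row.append(p)
        out.append(new_row)
    return out


def speckle_noise(img: Image, var: float, rng: random.Random) -> Image:
    """Multiplicative noise p + p * n with n drawn from N(0, var)."""
    sigma = math.sqrt(var)
    return [[_clip(p * (1.0 + rng.gauss(0.0, sigma))) for p in row] for row in img]


def psnr(ref: Image, test: Image) -> float:
    """Peak signal-to-noise ratio in dB between two 8-bit images."""
    n = len(ref) * len(ref[0])
    mse = sum((a - b) ** 2 for ra, rb in zip(ref, test) for a, b in zip(ra, rb)) / n
    return math.inf if mse == 0 else 10.0 * math.log10(255.0 ** 2 / mse)


rng = random.Random(0)  # fixed seed so the run is repeatable
cover = [[(x + y) % 256 for x in range(64)] for y in range(64)]  # synthetic stand-in

attacks = {
    "gaussian (m=0, v=0.01)": gaussian_noise(cover, 0.0, 0.01, rng),
    "salt and pepper (d=0.05)": salt_and_pepper(cover, 0.05, rng),
    "speckle (v=0.04)": speckle_noise(cover, 0.04, rng),
}
for name, attacked in attacks.items():
    print(f"{name}: PSNR = {psnr(cover, attacked):.2f} dB")
```

Sweeping m, v, and d over a range of values in this way yields the kind of noisy training and test variants described above; the PSNR figure gives a quick measure of how severe each setting is.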

Time complexity analysis

The proposed watermarking framework involves multiple stages: multilevel DWT decomposition, SVD-based embedding, watermark compression, chaotic encryption, and CNN-based restoration. For a cover image of size N × N, the combined DWT decomposition and reconstruction has a time complexity of O(N²). The singular value decomposition (SVD) is applied to sub-band matrices of size n × n and therefore incurs a per-block cost of O(n³), leading to a total of O(W⋅n³) across W watermark objects. The compression (via run-length and arithmetic encoding), encryption (using logistic maps), and embedding operations are linear in the watermark size m, contributing O(W⋅m) overall. In the inference phase, the CNN-based restoration operates with constant complexity O(C) for a fixed CNN architecture. Hence, the cumulative time complexity per watermarking operation, excluding offline CNN training, can be expressed as:

$$O\left(N^2 + W \cdot n^3 + W \cdot m + C\right)$$

This formulation shows that the cost grows only polynomially with the image and sub-band sizes and linearly with the watermark size, which highlights the efficiency of the proposed method.
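The linear cost attributed to the compression stage can be illustrated with run-length coding, which visits each symbol exactly once. The following is a minimal sketch under stated assumptions: the input is a bit string, runs are stored as (symbol, count) pairs, and the function names are hypothetical. The source does not specify the run-length format actually used.

```python
def rle_encode(bits: str) -> list[tuple[str, int]]:
    """Run-length encode a string in one left-to-right pass, O(m) for m symbols."""
    runs: list[tuple[str, int]] = []
    for symbol in bits:
        if runs and runs[-1][0] == symbol:
            # Same symbol as the current run: extend it by one.
            runs[-1] = (symbol, runs[-1][1] + 1)
        else:
            # Different symbol: start a new run.
            runs.append((symbol, 1))
    return runs


def rle_decode(runs: list[tuple[str, int]]) -> str:
    """Invert rle_encode by expanding every (symbol, count) pair."""
    return "".join(symbol * count for symbol, count in runs)


# Illustrative identity bit string with long runs (made up for this example).
identity = "0" * 6 + "1" * 5 + "0" * 9 + "1" * 2
encoded = rle_encode(identity)
assert rle_decode(encoded) == identity  # the coding is lossless
print(encoded)  # [('0', 6), ('1', 5), ('0', 9), ('1', 2)]
```

Here 22 input symbols collapse into four runs; the saving depends entirely on how long the runs in the actual identity data are.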

Conclusion

The high-payload compressed image watermarking scheme proposed in this work embeds several significant biometric identifiers of an entity. This transform-domain ownership identification approach utilises the DWT with three decomposition levels to conceal parts of the identifying coefficients. The three-layer scheme was applied to four grayscale test images of size 256 × 256 to analyse its performance under geometric and noise attacks. The PSNR between the test images and their three-level watermarked versions averaged 44 dB over the four images.

Furthermore, the integration of a denoising CNN enables effective recovery of watermarks even under severe distortions. The approach combines essential identity parameters, serving as the watermark, with any image data suitable for e-verification. Compared to recent state-of-the-art methods, the proposed approach achieves superior performance in terms of imperceptibility, robustness, and computational efficiency. Future work will focus on integrating intelligent decision-making modules to further enhance resilience against advanced intrusion and distortion scenarios.

Data availability

All data generated or analysed during this study are included in this published article. All images are taken from the following sources: https://sipi.usc.edu/database/ and https://in.mathworks.com/matlabcentral/answers/54439-list-of-builtin-demo-images.

References

Ray, A. & Roy, S. Recent trends in image watermarking techniques for copyright protection: a survey. Int. J. Multimedia Inform. Retr. 9 (4), 249–270 (2020).

Sharma, S., Zou, J. J., Fang, G., Shukla, P. & Cai, W. A review of image watermarking for identity protection and verification. Multimedia Tools Appl. 83 (11), 31829–31891 (2024).

Melman, A. & Evsutin, O. Methods for countering attacks on image watermarking schemes: overview. J. Vis. Commun. Image Represent. 2, 104073 (2024).

Aberna, P. & Agilandeeswari, L. Digital image and video watermarking: methodologies, attacks, applications, and future directions. Multimedia Tools Appl. 83 (2), 5531–5591 (2024).

Hu, K. et al. Learning-based image steganography and watermarking: A survey. Expert Syst. Appl. 20, 123715 (2024).

Mohammad, N. et al. Lossless visible watermarking based on adaptive circular shift operation for BTC-compressed images. Multimed Tools Appl. 76 (11), 13301–13313 (2017).

Wong, P. W. & Memon, N. Secret and public key image watermarking schemes for image authentication and ownership verification. IEEE Trans. Image Process. 10 (10), 1593–1601 (2001).

Liu, H., Xiao, D., Zhang, R., Zhang, Y. & Bai, S. Robust and hierarchical watermarking of encrypted images based on compressive sensing. Signal Process. Image Commun. 45, 41–51 (2016).

Liu, Y., Wang, Y. & Zhu, X. Novel robust multiple watermarking against regional attacks of digital images. Multimed Tools Appl. 74 (13), 4765–4787 (2015).

Lang, J. & Zhang, Z. G. Blind digital watermarking method in the fractional Fourier transform domain. Opt. Lasers Eng. 53, 112–121 (2014).

Yen, C. T. & Huang, Y. J. Frequency domain digital watermark recognition using image code sequences with a back-propagation neural network. Multimed Tools Appl. 75 (16), 9745–9755 (2016).

Roy, S. & Pal, A. K. A robust blind hybrid image watermarking scheme in RDWT-DCT domain using Arnold scrambling. Multimed Tools Appl. 76 (3), 3577–3616 (2017).

Nguyen, T. S., Chang, C. C. & Yang, X. Q. A reversible image authentication scheme based on fragile watermarking in discrete wavelet transform domain. AEU Int. J. Electron. Commun. 70 (8), 1055–1061 (2016).

Wang, C., Zhang, Y. & Zhou, X. Review on digital image watermarking based on singular value decomposition. J. Inform. Process. Syst. 13 (6), 1585–1601 (2017).

Han, J., Zhao, X. & Qiu, C. A digital image watermarking method based on host image analysis and genetic algorithm. J. Ambient Intell. Humaniz. Comput. 7 (1), 37–45 (2016).

Gonge, S. S. & Ghatol, A. Composition of DCT-SVD image watermarking and advanced encryption standard technique for still image. Intell. Syst. Technol. Appl. 530, 85–97 (2016).

Cheddad, A., Condell, J., Curran, K. & Mc Kevitt, P. Digital image steganography: survey and analysis of current methods. Sig. Process. 90 (3), 727–752 (2010).

Sun, L. et al. A robust image watermarking scheme using Arnold transform and BP neural network. Neural Comput. Appl. 1–16 (2017).

Bhatnagar, G. & Jonathan Wu, Q. M. Biometrics inspired watermarking based on a fractional dual tree complex wavelet transform. Futur. Gener. Comput. Syst. 29 (1), 182–195 (2013).

Makbol, N., Khoo, B. E., Rassem, T. & Loukhaoukha, K. A new reliable optimised image watermarking scheme based on the integer wavelet transform and singular value decomposition for copyright protection. Inf. Sci. (Ny). 417, 381–400 (2017).

Li, C., Zhang, Z., Wang, Y., Ma, B. & Huang, D. Dither modulation of significant amplitude difference for wavelet based robust watermarking. Neurocomputing 166, 404–415 (2015).

Rastegar, S., Namazi, F., Yaghmaie, K. & Aliabadian, A. Hybrid watermarking algorithm based on singular value decomposition and Radon transform. AEU Int. J. Electron. Commun. 65 (7), 658–663 (2011).

Zear, A., Singh, A. & Kumar, P. A proposed secure multiple watermarking technique based on DWT, DCT and SVD for application in medicine. Multimed Tools Appl. 77, 4863–4882 (2016).

Fazli, S. & Moeini, M. A robust image watermarking method based on DWT, DCT, and SVD using a new technique for correction of main geometric attacks. Optik (Stuttg). 127 (2), 964–972 (2016).

Singh, A. K., Kumar, B., Singh, S. K., Ghrera, S. P. & Mohan, A. Multiple watermarking technique for securing online social network contents using back propagation neural network. Futur. Gener. Comput. Syst. 86, 926–939. https://doi.org/10.1016/j.future.2016.11.023 (2018).

Singh, S., Rathore, V. S., Singh, R. & Singh, M. K. Hybrid semi-blind image watermarking in redundant wavelet domain. Multimed Tools Appl. 76 (18), 19113–19137 (2017).

Ernawan, F. & Ariatmanto, D. A recent survey on image watermarking using scaling factor techniques for copyright protection. Multimedia Tools Appl. 1–41 (2023).

Awasthi, D. & Srivastava, V. K. Robust, imperceptible and optimised watermarking of DICOM image using Schur decomposition, LWT-DCT-SVD and its authentication using SURF. Multimedia Tools Appl. 82 (11), 16555–16589 (2023).

Sivaraman, R. et al. Pullikolam assisted medical image watermarking on reconfigurable hardware. Multimed Tools Appl. 82, 21193–21203. https://doi.org/10.1007/s11042-023-14725-2 (2023).

Ravichandran, D., Praveenkumar, P., Rajagopalan, S., Rayappan, J. B. B. & Amirtharajan, R. ROI-based medical image watermarking for accurate tamper detection, localisation and recovery. Med. Biol. Eng. Comput. 59, 1355–1372 (2021).

AlShaikh, M., Alzaqebah, M. & Jawarneh, S. Robust watermarking based on modified pigeon algorithm in DCT domain. Multimed Tools Appl. 82, 3033–3053. https://doi.org/10.1007/s11042-022-13233-z (2023).

Kumar, L., Singh, K. U., Kumar, I., Kumar, A. & Singh, T. Robust medical image watermarking scheme using PSO, LWT, and Hessenberg decomposition. Appl. Sci. 13 (13), 7673. https://doi.org/10.3390/app13137673 (2023).

Aberna, P. & Agilandeeswari, L. Digital image and video watermarking: methodologies, attacks, applications, and future directions. Multimedia Tools Appl. 1–61 (2023).

Gull, S. & Parah, S. A. Advances in medical image watermarking: a state of the art review. Multimed Tools Appl. https://doi.org/10.1007/s11042-023-15396-9 (2023).

Lakshmi, H. R. & Borra, S. Improved adaptive reversible watermarking in integer wavelet transform using moth-flame optimisation. Multimed Tools Appl. https://doi.org/10.1007/s11042-023-16203-1 (2023).

Alomoush, W. et al. Improved security of medical images using DWT–SVD watermarking mechanisms based on firefly Photinus search algorithm. Discov Appl. Sci. 6, 366. https://doi.org/10.1007/s42452-024-06066-y (2024).

Begum, M. et al. Image watermarking using discrete wavelet transform and singular value decomposition for enhanced imperceptibility and robustness. Algorithms 17 (1), 32. https://doi.org/10.3390/a17010032 (2024).

Tang, M. & Zhou, F. A robust and secure watermarking algorithm based on DWT and SVD in the fractional order fourier transform domain. Array 15, 100230. https://doi.org/10.1016/j.array.2022.100230 (2022).

Sharma, S., Sharma, H. & Sharma, J. B. A new optimisation based color image watermarking using non-negative matrix factorisation in discrete cosine transform domain. J. Ambient Intell. Hum. Comput. 13, 4297–4319. https://doi.org/10.1007/s12652-021-03408-1 (2022).

Sharma, S. et al. A secure and robust color image watermarking using nature-inspired intelligence. Neural Comput. Applic. 35, 4919–4937. https://doi.org/10.1007/s00521-020-05634-8 (2023).

Amsaveni, A., Palanisamy, S., Guizani, S. & Hamam, H. Next-generation secure and reversible watermarking for medical images using hybrid Radon-Slantlet transform. Results Eng. 24, 103008. https://doi.org/10.1016/j.rineng.2024.103008 (2024).

Adi, P. W., Sugiharto, A., Hakim, M. M., Winarno, E. & Setiadi, D. R. I. M. Efficient fragile watermarking for image tampering detection using adaptive matrix on chaotic sequencing. Intell. Syst. Appl. 26, 200530. https://doi.org/10.1016/j.iswa.2025.200530 (2025).

Mali, S. D. & Agilandeeswari, L. Non-redundant shift-invariant complex wavelet transform and fractional Gorilla troops optimisation-based deep convolutional neural network for video watermarking. J. King Saud Univ. - Comput. Inform. Sci. 35 (8), 101688. https://doi.org/10.1016/j.jksuci.2023.101688 (2023).

Jagadeesh, S., Parida, A. K. & Meenakshi, K. Tamper detection, localisation and self-recovery using Slant transform with DNA encoding for medical images. Results Eng. 105907 (2025).

Acknowledgements

The authors thank the Department of Science & Technology, New Delhi, for the FIST funding (SR/FST/ET-I/2018/221(C)). The authors would also like to thank the Intrusion Detection Lab at the School of Electrical and Electronics Engineering, SASTRA Deemed University, for providing infrastructural support to carry out this research work.

Funding

DST FIST Fund SR/FST/ET-I/2018/221(C).

Author information

Contributions

C. Lakshmi, Sivaraman R, and Sridevi A designed the study, conducted the experiments, and analysed the data. C Nithya, Santhiyadevi R, Dhivya Ravichandran, Aashiq Banu S, Hemalatha Mahalingam, Padmapriya Velupillai Meikandan and Rengarajan Amirtharajan provided critical feedback, helped write and edit the manuscript, and guided the project. All authors reviewed and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval

All authors have read, understood, and have complied as applicable with the statement on “Ethical responsibilities of Authors” as found in the Instructions for Authors.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lakshmi, C., Nithya, C., Sivaraman, R. et al. Convolutional neural network and wavelet composite against geometric attacks a watermarking approach. Sci Rep 15, 31460 (2025). https://doi.org/10.1038/s41598-025-17059-1

DOI: https://doi.org/10.1038/s41598-025-17059-1