Abstract

Drug-induced liver injury (DILI) frequently complicates anti-tuberculosis (TB) treatment, particularly in regions with a high TB burden. Early pre-treatment identification of patients at elevated risk is essential for timely intervention and safer treatment outcomes. In this retrospective two-center cohort study, we collected baseline data from 2022 to 2024 on 2724 patients admitted to two tertiary hospitals before starting standard drug-susceptible anti-TB therapy (isoniazid, rifampicin, pyrazinamide, ethambutol). Patients from the first center were randomly divided into training (n = 1512) and internal validation (n = 648) cohorts, and patients from the second center formed the external validation cohort (n = 564). Multivariable logistic regression identified DILI predictors, and a pre-treatment risk-forecasting nomogram was built. Model performance was assessed by AUC, calibration plots, and decision curve analysis (DCA). Six baseline predictors emerged: age ≥ 60 years, BMI < 18.5 kg/m², alcohol use, extrapulmonary TB, albumin < 35 g/L, and hemoglobin < 110 g/L. The nomogram demonstrated robust discrimination (AUCs: 0.80 training, 0.75 internal validation, 0.77 external validation) and favorable calibration and net clinical benefit on DCA. We developed and externally validated a pre-treatment nomogram for DILI risk in TB patients. By enabling risk stratification before therapy begins, this tool supports personalized monitoring and may enhance treatment safety.

Introduction

For 2020, the World Health Organization (WHO) reported 10 million incident tuberculosis (TB) cases and 1.5 million deaths globally1. Anti-TB first-line therapy (isoniazid, rifampicin, pyrazinamide, ethambutol) is effective, yet drug-induced liver injury (DILI) occurs in roughly 2–15% of treated patients, and more often in certain settings depending on definitions and populations2. DILI not only interrupts treatment but is associated with treatment failure, drug resistance, prolonged hospitalization, and mortality3.

The hepatotoxic potential of first-line agents—particularly isoniazid, rifampicin, and pyrazinamide—is well established4. However, predicting which patients are at greatest risk for developing DILI remains challenging. Current clinical practice relies on monitoring liver enzymes after the onset of symptoms, often delaying diagnosis and intervention. Moreover, previous studies on risk prediction have been limited by small sample sizes, heterogeneity of inclusion criteria, lack of external validation, and inconsistent definitions of DILI5. Therefore, there is an urgent need to develop a simple, reliable, and generalizable risk prediction tool to facilitate early identification of high-risk patients prior to or early during treatment.

Several demographic, clinical, and biochemical factors have been implicated in increasing the risk of DILI, including older age, low body mass index (BMI), alcohol consumption, pre-existing liver disease, malnutrition, and baseline laboratory abnormalities such as hypoalbuminemia and anemia6,7. Recent studies show that low baseline serum albumin (e.g., < 35 g/L) and anemia (e.g., hemoglobin < 120 g/L) are associated with increased risk of anti-TB drug–induced hepatotoxicity, consistent with reduced hepatic reserve and altered drug handling8,9. Current AT-DILI models are largely single-center with only split-sample internal validation, and rigorous multi-center external validation is scarce5,10. Moreover, although extrapulmonary TB and low hemoglobin have been linked to higher hepatotoxicity risk, their inclusion in previous models has been inconsistent11,12.

In this study, we present a nomogram constructed exclusively from pre-treatment demographic, clinical, and laboratory parameters. Using data from a large, two-center retrospective cohort, we identified independent predictors via multivariable logistic regression and assessed our model’s discrimination, calibration, and clinical utility in both internal and external validation cohorts. Our aim is to furnish clinicians with a practical, baseline risk-stratification tool that guides personalized monitoring before anti-TB therapy begins.

Methods

Study design and population

From January 2022 to October 2024, we conducted a retrospective cohort study at two specialized centers. Baseline demographic, clinical, and laboratory data were collected within 7 days before treatment initiation. The training and internal validation cohorts were derived from the Fifth People’s Hospital of Ganzhou, and the external validation cohort was from the First People’s Hospital of Fuzhou City. Eligible participants were adults (≥ 18 years) with microbiologically or clinically confirmed active TB who started standard first-line therapy (isoniazid, rifampicin, pyrazinamide, and ethambutol). Exclusion criteria were: (1) pre-existing liver disease (e.g., chronic hepatitis B/C, fatty liver by imaging, alcoholic liver disease, cirrhosis, or other hepatic disorders); (2) use of hepatoprotective agents for treatment or prophylaxis at baseline or concomitant use of other non–anti-TB hepatotoxic drugs at baseline (per medication records); (3) HIV coinfection or malignancy (e.g., primary liver cancer); (4) incomplete or missing key baseline data; and (5) irregular adherence to anti-TB therapy or loss to follow-up. These eligibility criteria were pre-specified to align with contemporary hepatotoxicity guidance and causality assessment frameworks (ATS statement; EASL DILI guideline) and to minimize misattribution of liver injury in a retrospective design; we acknowledge that excluding baseline hepatoprotective/other hepatotoxic drugs and imaging-diagnosed fatty liver is slightly stricter than in some prior cohorts4,6.

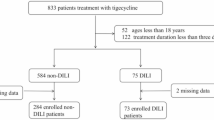

Participants from Ganzhou were randomly split 7:3, stratified by DILI status, into training and internal validation sets; all participants from Fuzhou comprised the external validation set. No cross-centre mixing occurred. A flow diagram summarises screening, exclusions, and allocation (Fig. 1). The training set was used to fit the multivariable logistic model and construct the nomogram; the internal validation set (a 30% hold-out from the same centre) evaluated in-centre performance without refitting. The external validation set (the independent second centre) assessed transportability/generalizability, and no data were shared across sets.

Participant flow and dataset allocation by centre. Patients screened (n = 4586); excluded (n = 1862); included (n = 2724). Ganzhou (n = 2160) → training (n = 1512; DILI events = 76) and internal validation (n = 648; events = 32); Fuzhou (n = 564) → external validation (n = 564; events = 29). No cross-centre mixing; Ganzhou split 7:3 stratified by DILI status. TB Tuberculosis.
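The outcome-stratified 7:3 split can be illustrated with a short sketch. The authors performed the split in R; the Python below is only an illustration, the seed value and the function name `stratified_split` are hypothetical, and the labels are synthetic placeholders that reproduce the reported Ganzhou totals (108 DILI events among 2160 patients).

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.7, seed=2024):
    """Split record indices into train/validation sets, stratified by a binary label."""
    rng = random.Random(seed)  # fixed (hypothetical) seed makes the split reproducible
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, validation = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = round(len(indices) * train_fraction)  # 70% of each outcome class
        train.extend(indices[:cut])
        validation.extend(indices[cut:])
    return sorted(train), sorted(validation)


# Synthetic labels only: 108 events and 2052 non-events, as reported for Ganzhou.
labels = [1] * 108 + [0] * 2052
train_idx, valid_idx = stratified_split(labels)
train_events = sum(labels[i] for i in train_idx)
valid_events = sum(labels[i] for i in valid_idx)
print(len(train_idx), train_events, len(valid_idx), valid_events)
# prints: 1512 76 648 32
```

Splitting each outcome class separately is what keeps the event proportion nearly identical in the two sets, which a simple random split does not guarantee at roughly 5% prevalence.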

Definition of DILI

DILI was defined according to the ATS statement on hepatotoxicity of antituberculosis therapy and international DILI guidelines: (1) alanine aminotransferase (ALT) ≥ 3 × ULN with compatible symptoms; or (2) ALT ≥ 5 × ULN without symptoms; or (3) alkaline phosphatase (ALP) ≥ 2 × ULN in the absence of bone pathology4,6. Patients who met any of these diagnostic criteria during anti-TB treatment were classified into the DILI group.
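The three criteria above can be written as a single rule. This is a minimal sketch rather than the authors' implementation: the function name, argument names, and the upper limits of normal used in the usage lines (40 U/L for ALT, 120 U/L for ALP) are illustrative assumptions, since the laboratories' reference ranges are not reported.

```python
def meets_dili_criteria(alt, alt_uln, alp, alp_uln, symptomatic, bone_pathology=False):
    """Return True if any of the three DILI criteria stated in the text is met.

    alt, alp: measured values; alt_uln, alp_uln: upper limits of normal (same units).
    """
    alt_ratio = alt / alt_uln
    alp_ratio = alp / alp_uln
    if alt_ratio >= 3 and symptomatic:  # criterion 1: ALT >= 3 x ULN with symptoms
        return True
    if alt_ratio >= 5:  # criterion 2: ALT >= 5 x ULN, symptoms not required
        return True
    if alp_ratio >= 2 and not bone_pathology:  # criterion 3: ALP >= 2 x ULN, no bone disease
        return True
    return False


# Hypothetical reference limits, for illustration only.
ALT_ULN, ALP_ULN = 40.0, 120.0
print(meets_dili_criteria(130, ALT_ULN, 90, ALP_ULN, symptomatic=True))   # ALT 3.25 x ULN with symptoms
print(meets_dili_criteria(130, ALT_ULN, 90, ALP_ULN, symptomatic=False))  # same ALT, no symptoms
```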

Data collection and variables

Baseline demographic and clinical variables were extracted from the hospital electronic medical record system, including age, gender, BMI, smoking and drinking status, history of diabetes and hypertension, extrapulmonary TB involvement, and laboratory parameters such as albumin, hemoglobin (HB), neutrophil count (NEU), white blood cell count (WBC), red blood cell count (RBC), and platelet count (PLT). Laboratory results were collected within 7 days prior to initiating anti-TB therapy. Alcohol use was extracted from baseline records and coded as current drinking (yes/no). Individuals documented as abstinent at baseline were coded as no. Quantitative intake (units/day or AUDIT-C) was not uniformly available across centres. Extrapulmonary TB was coded as any documented TB involvement outside the lung parenchyma, including pleural and peripheral lymph-node disease, as well as CNS/meningeal, miliary/disseminated, abdominal (peritoneal/hepato-splenic), osteo-articular, genitourinary and cutaneous sites.

Model development and validation

Candidate predictors with univariable P < 0.10 entered a multivariable logistic regression (R stats::glm, family = binomial, logit link). Variable selection used backward AIC (MASS::stepAIC, k = 2) to derive a parsimonious final model. The final specification was summarized as adjusted odds ratios with 95% CIs. A nomogram was then generated with the rms package (lrm/nomogram), assigning points proportional to each regression coefficient (β) and mapping total points to predicted DILI probability via the inverse-logit function. No additional hyperparameter tuning was performed and no single classification threshold was selected, consistent with the tool’s risk-stratification intent.
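In symbols, the relationship between the logistic model, the nomogram points, and the predicted probability can be sketched as follows. The notation is introduced here for illustration: the x_i are binary indicators for the k retained predictors, and the points scaling shown is the usual convention for binary predictors (the largest coefficient is assigned 100 points), which is assumed rather than taken from the authors' output.

```latex
\begin{aligned}
\operatorname{logit}(p) &= \ln\frac{p}{1-p} = \beta_0 + \sum_{i=1}^{k} \beta_i x_i, \qquad x_i \in \{0, 1\},\\
\text{points}_i &= 100 \cdot \frac{|\beta_i|}{\max_j |\beta_j|},\\
\hat{p} &= \frac{1}{1 + \exp\!\left(-\left(\beta_0 + \sum_{i=1}^{k} \beta_i x_i\right)\right)}.
\end{aligned}
```

Because total points are a linear rescaling of the linear predictor, reading a probability from the nomogram's total-points axis is equivalent to applying the inverse-logit function to the fitted model.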

Performance evaluation

The nomogram’s predictive performance was evaluated by area under the receiver operating characteristic curve (AUC), calibration plots, and decision curve analysis (DCA). Model development and coefficient estimation were performed in the training set (n = 1512; DILI events = 76). Discrimination and calibration were assessed in the internal validation set (n = 648; events = 32) and in the external validation set from the independent centre (n = 564; events = 29). AUCs with 95% confidence intervals were obtained via bootstrap resampling (1000 iterations). Calibration was examined with calibration curves and the Hosmer–Lemeshow (HL) goodness-of-fit test; optimism-corrected performance in the training set was estimated by bootstrap. DCA was reported separately for each dataset.
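A percentile bootstrap for the AUC can be sketched with the standard library alone. This is not the authors' R code: the function names are hypothetical, the scores and labels are synthetic, and the percentile method is one common choice among several bootstrap interval types.

```python
import random


def auc(scores, labels):
    """AUC as the probability that a random event outranks a random non-event (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_auc_ci(scores, labels, n_boot=1000, alpha=0.05, seed=1):
    """Percentile bootstrap confidence interval for the AUC."""
    rng = random.Random(seed)
    n = len(scores)
    estimates = []
    while len(estimates) < n_boot:
        sample = [rng.randrange(n) for _ in range(n)]  # resample patients with replacement
        ys = [labels[i] for i in sample]
        if 0 < sum(ys) < n:  # skip resamples that lack one of the two classes
            estimates.append(auc([scores[i] for i in sample], ys))
    estimates.sort()
    lower = estimates[int(n_boot * alpha / 2)]
    upper = estimates[min(n_boot - 1, int(n_boot * (1 - alpha / 2)))]
    return lower, upper


# Synthetic data only: 20 events and 180 non-events with partly separated scores.
data_rng = random.Random(0)
labels = [1] * 20 + [0] * 180
scores = [data_rng.gauss(1.0 if y == 1 else 0.0, 1.0) for y in labels]
point = auc(scores, labels)
low, high = bootstrap_auc_ci(scores, labels)
print(f"AUC {point:.2f} (95% CI {low:.2f} to {high:.2f})")
```

With only a few dozen events per validation set, the resulting intervals are typically wide, which is why the interval is worth reporting alongside the point estimate.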

Statistical analysis

Continuous variables are summarized as mean ± SD when approximately normal and as median (interquartile range, IQR) when skewed. Normality was assessed by histograms and Q–Q plots, with confirmation by the Shapiro–Wilk test. Homogeneity of variances was evaluated using Levene’s test. Between-group comparisons used Student’s t-test when assumptions were met; if variances were unequal, we used Welch’s t-test; and if normality was implausible, we used the Wilcoxon rank-sum test. Categorical variables are presented as n (%) and were compared using the chi-square test (or Fisher’s exact test when expected counts were < 5). Logistic-regression results are reported as odds ratios (ORs) with 95% confidence intervals (CIs). We screened for collinearity using pairwise correlations and variance inflation factors (VIF < 5). Model fitting used multivariable logistic regression with backward AIC (k = 2) selection; model performance was summarized by AUC with 95% CIs, bootstrap-corrected calibration (1000 resamples), HL tests, and DCA. The 7:3 outcome-stratified split of the Ganzhou cohort was performed with a fixed random seed to preserve DILI prevalence between training and internal validation sets; the Fuzhou cohort was used exclusively as the external validation set. All analyses were conducted in R (version 4.2.2); two-sided P < 0.05 was considered statistically significant.

Ethical approval

This study was approved by the Ethics Committees of Fifth People’s Hospital of Ganzhou and The First People’s Hospital of Fuzhou City. The requirement for written informed consent was waived by the Medical Ethics Committees of the Fifth People’s Hospital of Ganzhou and the First People’s Hospital of Fuzhou City due to the retrospective nature of the study and the use of anonymized data. All methods were carried out in accordance with relevant guidelines and regulations, including the ethical principles of the Declaration of Helsinki.

Results

Baseline characteristics

A total of 4586 patients with newly diagnosed TB who received standard first-line anti-TB therapy between January 2022 and October 2024 were initially screened from two dedicated TB centers in China. Of the 2724 patients remaining after exclusions, 2160 patients were from the Fifth People’s Hospital of Ganzhou and were randomly divided in a 7:3 ratio into a training cohort (n = 1512) and an internal validation cohort (n = 648); another 564 patients from the First People’s Hospital of Fuzhou City formed the external validation cohort. By design, the prevalence of DILI was preserved across datasets (training 76/1512 [5.0%]; internal validation 32/648 [4.9%]; external validation 29/564 [5.1%]) owing to the stratified split within the Ganzhou centre; no cross-centre mixing occurred.

Baseline characteristics were otherwise highly similar across cohorts (all P ≥ 0.43; Table 1), including sex distribution (males 65–66%), age (≈57% ≥ 60 years), nutritional status (≈29% BMI < 18.5 kg/m²), comorbidities (diabetes ≈10–11%; hypertension ≈27%), extrapulmonary TB (≈31%), hypoalbuminemia (≈22–23%), and anemia (≈21–22%). Hematologic indices were likewise comparable (mean WBC ≈6.3 × 10⁹/L, RBC ≈4.3 × 10¹²/L, NEU ≈4.8 × 10⁹/L, PLT ≈227 × 10⁹/L).

In the combined training + internal validation cohort (n = 2160), patients who developed DILI (n = 108) differed markedly from those who did not. The DILI group was older (≥ 60 years: 74.1% vs. 56.1%, P < 0.001), more likely underweight (BMI < 18.5: 39.8% vs. 28.5%, P = 0.011), and had higher rates of alcohol use (44.4% vs. 27.0%, P < 0.001) and extrapulmonary TB (46.3% vs. 30.6%, P = 0.001). They also exhibited more hypoalbuminemia (< 35 g/L: 41.7% vs. 21.9%, P < 0.001) and anemia (HB < 110 g/L: 33.3% vs. 21.1%, P = 0.003). Other factors—including sex, smoking status, diabetes, hypertension, and mean WBC, RBC, NEU, and PLT counts—showed no significant differences between groups (all P > 0.10) (Table 2).

Univariable and multivariable logistic regression analyses

On univariable analysis, several baseline characteristics were significantly associated with DILI. Older age (≥ 60 vs. < 60 years), alcohol consumption, and extrapulmonary TB each more than doubled the odds of DILI, whereas a BMI ≥ 18.5 kg/m², serum albumin ≥ 35 g/L, and hemoglobin ≥ 110 g/L were protective (Table 3).
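As an illustration of how a crude (unadjusted) odds ratio and its Wald 95% CI are obtained from a 2 × 2 table, the sketch below uses counts back-calculated from the percentages reported for age ≥ 60 years in the combined Ganzhou cohort (74.1% of 108 DILI patients ≈ 80; 56.1% of 2052 non-DILI patients ≈ 1151). These counts are approximations derived for illustration, not values taken from Table 3, and the function name is hypothetical.

```python
import math


def odds_ratio_ci(exposed_events, unexposed_events, exposed_nonevents, unexposed_nonevents, z=1.96):
    """Crude odds ratio with a Wald (Woolf) confidence interval from a 2 x 2 table."""
    a, b, c, d = exposed_events, unexposed_events, exposed_nonevents, unexposed_nonevents
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of ln(OR)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Approximate counts back-calculated from reported percentages (age >= 60 vs. < 60 years).
or_age, lower_age, upper_age = odds_ratio_ci(80, 28, 1151, 901)
print(f"crude OR {or_age:.2f} (95% CI {lower_age:.2f} to {upper_age:.2f})")
```

A crude estimate of this kind ignores the other predictors, so it will generally differ from the adjusted odds ratio produced by the multivariable model.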

When these six factors were entered into multivariable logistic regression, age (≥ 60 years; adjusted OR 2.75, 95% CI 1.67–4.18), alcohol use (adjusted OR 2.22, 95% CI 1.35–3.66), and extrapulmonary TB (adjusted OR 2.53, 95% CI 1.81–4.11) remained independent risk factors. Conversely, BMI ≥ 18.5 kg/m² (adjusted OR 0.46, 95% CI 0.27–0.76), albumin ≥ 35 g/L (adjusted OR 0.46, 95% CI 0.28–0.76), and hemoglobin ≥ 110 g/L (adjusted OR 0.56, 95% CI 0.33–0.95) continued to confer a protective effect.

Nomogram construction

Using the final multivariable model, we built a nomogram to forecast each patient’s pre-treatment probability of developing DILI once anti-TB drugs are initiated (Fig. 2). Each predictor was assigned a weighted score, and the total score corresponded to a predicted probability of DILI (Table 4). For example, a 65-year-old male with a BMI of 17.8 kg/m², alcohol use, extrapulmonary TB, albumin of 33 g/L, and hemoglobin of 106 g/L would receive: 100 points for age ≥ 60, 73 for low BMI, 76 for drinking, 82.5 for extrapulmonary TB, 80 for hypoalbuminemia, and 57 for anemia, for a total of 468.5 points. According to the nomogram, this score corresponds to an estimated DILI risk of approximately 70–75%. This enables clinicians to stratify risk before treatment begins, guiding the intensity of monitoring and preventive strategies for those at highest risk.
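The point tally in the worked example can be reproduced with a few lines of code. The point values are those quoted in the text; the dictionary keys and the function name `total_points` are hypothetical, and the conversion from total points to probability is deliberately left out because it depends on the nomogram's probability axis (Table 4), which is not reproduced here.

```python
# Points per risk factor as quoted in the worked example.
NOMOGRAM_POINTS = {
    "age_ge_60": 100.0,
    "bmi_lt_18_5": 73.0,
    "alcohol_use": 76.0,
    "extrapulmonary_tb": 82.5,
    "albumin_lt_35": 80.0,
    "hemoglobin_lt_110": 57.0,
}


def total_points(age_years, bmi, alcohol_use, extrapulmonary_tb, albumin_g_l, hemoglobin_g_l):
    """Sum nomogram points for the risk factors present at baseline."""
    present = {
        "age_ge_60": age_years >= 60,
        "bmi_lt_18_5": bmi < 18.5,
        "alcohol_use": alcohol_use,
        "extrapulmonary_tb": extrapulmonary_tb,
        "albumin_lt_35": albumin_g_l < 35,
        "hemoglobin_lt_110": hemoglobin_g_l < 110,
    }
    return sum(NOMOGRAM_POINTS[name] for name, flag in present.items() if flag)


# The 65-year-old patient from the worked example.
example_total = total_points(65, 17.8, True, True, 33, 106)
print(example_total)  # prints: 468.5
```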

Model performance and validation

The nomogram retained solid discriminatory ability in every cohort, yielding an AUC of 0.80 in the training set, 0.75 in the internal validation set, and 0.77 in the external validation set (Fig. 3A–C). Calibration was equally satisfactory. In each cohort the calibration curve tracked closely with the 45-degree reference line, and the HL goodness-of-fit test revealed no significant lack of fit (training set P = 0.213, internal validation set P = 0.186, external validation set P = 0.237), indicating that predicted and observed DILI risks were in close agreement (Fig. 3D–F). In addition to good discrimination and calibration, DCA further supported the clinical value of the predictive nomogram. Specifically, DCA revealed that the model yielded a positive net benefit over a wide range of threshold probabilities: 2% to 76% in the training set, 2% to 68% in the internal validation set, and 2% to 62% in the external validation set (Fig. 3G–I). These findings indicate that the nomogram can be applied effectively across various clinical risk thresholds, allowing for flexible, individualized planning of liver function monitoring and prophylactic measures before and throughout anti-TB therapy.

Predictive performance of the nomogram across three cohorts: ROC curves: A (training), B (internal validation), C (external validation); Calibration plots: D (training), E (internal validation), F (external validation); DCA curves: G (training), H (internal validation), I (external validation). Given the cohort’s ~ 5% DILI prevalence, net-benefit curves chiefly reflect low threshold probabilities.
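The net benefit plotted in a decision curve follows the standard definition: true positives per patient minus false positives per patient weighted by the odds of the threshold probability. The sketch below is a minimal illustration with hypothetical function names and synthetic predictions at 5% prevalence; it is not the authors' R analysis.

```python
def net_benefit(probabilities, labels, threshold):
    """Net benefit of acting on patients whose predicted risk is at or above the threshold."""
    n = len(labels)
    true_pos = sum(1 for p, y in zip(probabilities, labels) if p >= threshold and y == 1)
    false_pos = sum(1 for p, y in zip(probabilities, labels) if p >= threshold and y == 0)
    return true_pos / n - false_pos / n * threshold / (1 - threshold)


def net_benefit_treat_all(labels, threshold):
    """Net benefit of the reference strategy that treats every patient as high risk."""
    prevalence = sum(labels) / len(labels)
    return prevalence - (1 - prevalence) * threshold / (1 - threshold)


# Synthetic example: 5 events among 100 patients, with made-up predicted risks.
labels = [1] * 5 + [0] * 95
probabilities = [0.30, 0.20, 0.12, 0.08, 0.04] + [0.15] * 5 + [0.03] * 90
for threshold in (0.02, 0.05, 0.10):
    model_nb = net_benefit(probabilities, labels, threshold)
    all_nb = net_benefit_treat_all(labels, threshold)
    print(f"threshold {threshold:.2f}: model {model_nb:.4f}, treat-all {all_nb:.4f}")
```

The treat-all strategy has zero net benefit when the threshold equals the prevalence and negative net benefit above it, which is why, at about 5% prevalence, most of the informative comparison lies at low thresholds.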

Discussion

In this large, two-center cohort study, we developed and externally validated a clinically practical nomogram to forecast pre-treatment risk of DILI before anti-TB therapy begins. The model incorporates six routinely accessible baseline variables—age, BMI, alcohol consumption, extrapulmonary TB, serum albumin, and hemoglobin—and demonstrated favorable discrimination and calibration across the training, internal validation, and external validation cohorts.

Although isoniazid and rifampicin contribute to drug-induced liver injury—particularly in combination—pyrazinamide is consistently identified as the first-line drug most strongly associated with hepatotoxicity, often within the first 2–8 weeks of therapy6. This is concordant with guideline statements and contemporary reviews4. Our cohort received an initial first-line regimen of isoniazid, rifampicin, pyrazinamide, and ethambutol; thus, the nomogram estimates baseline vulnerability rather than implicating a specific agent. High-risk patients may warrant closer liver function test monitoring and early reassessment of pyrazinamide exposure in line with guideline cautions.

Among the identified predictors, advanced age (≥ 60 years) emerged as a robust independent risk factor for DILI. This is consistent with prior studies showing that hepatic metabolic capacity declines with age, and that mitochondrial dysfunction, reduced hepatic blood flow, and impaired detoxification pathways collectively increase susceptibility to hepatotoxic agents13,14. Clinical guidelines have acknowledged the need for more vigilant monitoring in older adults receiving hepatotoxic drugs, including first-line anti-TB agents such as isoniazid and rifampicin6.

Alcohol consumption emerged as a significant risk factor for DILI, likely due to its established impact on cytochrome P450 enzymes and consequent oxidative damage. Chronic alcohol intake induces CYP2E1 as well as other CYP450 isoforms, increasing production of reactive oxygen species and enhancing hepatotoxicity, particularly in the context of anti-TB drugs such as isoniazid and rifampicin15. Alcohol-related oxidative stress also impairs mitochondrial function and weakens cellular antioxidant defenses, further exacerbating drug-induced liver injury during therapy16. Our findings emphasize the importance of thorough alcohol use history and, when feasible, alcohol cessation counseling before initiating TB therapy.

Extrapulmonary TB was also associated with higher DILI risk. This may be attributed to longer or more intensive treatment regimens, greater systemic inflammation, and the use of second-line or adjunctive hepatotoxic agents in disseminated disease12. Similar associations have been reported in previous clinical studies that identified extrapulmonary involvement as a risk enhancer for TB treatment-related complications17.

Nutritional status, as reflected by BMI, serum albumin, and hemoglobin levels, played a critical role in predicting hepatotoxicity. Hypoalbuminemia and anemia, in particular, emerged as strong risk factors. These biomarkers are objective and widely used surrogates for malnutrition and systemic inflammation. They also reflect diminished hepatic synthetic capacity and reduced antioxidant defenses, which can compromise the liver’s ability to withstand toxic insults8,18. Recent studies suggest that hypoalbuminemia is associated with poor liver outcomes not only in chronic liver disease but also in acute drug-induced liver injury scenarios19. Similarly, anemia has been linked to increased oxidative stress and tissue hypoxia, further predisposing the liver to injury20.

Given these associations, nutritional assessment and support should be considered integral to routine care in patients undergoing anti-TB treatment, particularly those presenting with low BMI, hypoalbuminemia, or anemia. While advanced age is a non-modifiable risk factor, and alcohol consumption requires targeted counseling and behavioral interventions, nutritional status is a modifiable domain with actionable potential. Nutritional interventions—including protein-rich diets, iron and micronutrient repletion, and management of underlying malnutrition—may not only improve general physiological resilience but also enhance hepatic regenerative capacity and antioxidant defenses. Recent clinical evidence supports that tailored nutritional support in TB patients improves treatment tolerance, reduces the risk of hepatotoxicity, and contributes to better overall outcomes21,22. Therefore, proactive identification and correction of nutritional deficits should be prioritized in TB management protocols to reduce preventable DILI-related morbidity.

Importantly, our model differs from many earlier predictive tools by excluding hepatic enzyme levels (e.g., ALT, AST), which are markers of established liver injury rather than pre-treatment risk. Instead, we focused on predictive indicators available prior to DILI onset, enhancing the model’s practical utility. Previous models were often limited by small sample sizes, lack of external validation, or inclusion of diagnostic rather than predictive variables5,23,24. In contrast, our study utilized a large development cohort (n = 2160; training n = 1512, internal validation n = 648) and an independent external validation set (n = 564), supporting the model’s stability and generalizability.

An additional noteworthy observation is that several conventionally cited risk factors (sex, smoking status, diabetes, hypertension, and baseline leukocyte or platelet counts) were not retained in our final multivariable model. In our cohort these factors did not differ significantly between patients with and without DILI (all P > 0.10; Table 2) and therefore did not meet the univariable screening threshold, suggesting that associations reported elsewhere may be confounded or mediated by other clinical variables. This interpretation is supported by previous evidence indicating that risk estimates can shift substantially after accounting for collinearity25. Furthermore, the absence of an association for diabetes or hypertension contrasts with smaller-scale studies26, and may reflect better glycemic and blood pressure control in our cohort. It also underscores the advantage of a large sample and multivariable approach in deriving more reliable risk estimators. Future research incorporating dynamic metrics (such as glycemic variability, time-in-range blood pressure, or inflammatory biomarkers) may clarify the residual prognostic value of these variables.

This study has several notable strengths. First, the model was developed from a large, two-center cohort and validated both internally and externally, enhancing its robustness and generalizability. Such dual validation is uncommon in previous DILI prediction studies and supports the model’s applicability across diverse clinical settings. Second, the six predictors—age, BMI, alcohol use, extrapulmonary TB, serum albumin, and hemoglobin—are routinely available, objective, and low-cost, making the nomogram readily implementable, even in resource-limited environments. Third, by excluding diagnostic markers like ALT and AST, the model focuses on pre-treatment risk, offering true predictive value rather than reactive assessment. Lastly, DCA showed clear net clinical benefit across a wide range of thresholds, underscoring the model’s potential to guide personalized pre-treatment monitoring intensity and preventive measures throughout anti-TB therapy.

For bedside use, nomogram total points map to an estimated risk of DILI (e.g., ~ 210 points ≈ 10%, ~ 265 points ≈ 20%). Based on DCA and the ~ 5% cohort prevalence, programs can use risk bands: low (< 3%), intermediate (3–10%), and high (≥ 10%). A practical monitoring scheme is: low, baseline liver function tests (LFTs) only; intermediate, baseline LFTs with repeat tests at approximately weeks 2 and 8; high, baseline LFTs plus testing every 2 weeks for 8 weeks and monthly thereafter, with alcohol abstinence, hepatotoxin review, and early pyrazinamide reassessment. These thresholds need local calibration and prospective evaluation.
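The risk bands and the monitoring scheme described above translate directly into a lookup. This is a sketch only: the function and dictionary names are hypothetical, the cut-offs are the ones proposed in the text (with exactly 10% falling in the high band), and, as stated, they would need local calibration before use.

```python
def risk_band(predicted_risk):
    """Assign a predicted DILI probability (0 to 1) to the proposed risk band."""
    if not 0.0 <= predicted_risk <= 1.0:
        raise ValueError("predicted_risk must be a probability between 0 and 1")
    if predicted_risk < 0.03:
        return "low"
    if predicted_risk < 0.10:
        return "intermediate"
    return "high"


# Monitoring suggestions paraphrased from the text for each band.
MONITORING_PLAN = {
    "low": "baseline LFTs only",
    "intermediate": "baseline LFTs, repeat at about weeks 2 and 8",
    "high": ("baseline LFTs, then every 2 weeks for 8 weeks and monthly thereafter; "
             "alcohol abstinence, hepatotoxin review, early pyrazinamide reassessment"),
}

for risk in (0.02, 0.05, 0.20):
    band = risk_band(risk)
    print(f"{risk:.0%}: {band} -> {MONITORING_PLAN[band]}")
```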

Several limitations merit comment. First, the retrospective design may introduce residual confounding and selection bias despite standardized eligibility. Second, we relied on routinely collected clinical/laboratory data; pharmacogenomic variables (e.g., NAT2) were unavailable, and time-varying exposures or adherence were not captured. Third, the number of DILI events was modest (137/2,724; 5.0%), so we favored a parsimonious model and categorized some continuous predictors (e.g., albumin < 35 g/L; hemoglobin < 110 g/L), which may entail information loss. Alcohol exposure and EPTB were coded as binary variables (current use; any EPTB), precluding dose–response or site-specific analyses; future work will incorporate standardized alcohol measures (e.g., AUDIT-C) and EPTB subtyping. We did not evaluate penalized regression, interaction/non-linear terms, or tree-based/machine-learning approaches, which might further optimize performance. Given the cohort’s low DILI prevalence, DCA primarily informs low-risk thresholds and net benefit at higher thresholds remains uncertain. Generalizability is also limited by the single-country setting and comparatively strict eligibility. We excluded individuals with HIV coinfection to reduce etiologic heterogeneity (different TB regimens, concomitant antiretroviral therapy, and opportunistic infections with distinct hepatotoxic profiles). This limits generalizability to people living with HIV (PLHIV); validation and, if needed, recalibration in PLHIV and other high-risk subgroups are warranted.

Conclusion

In summary, we developed and externally validated a straightforward nomogram—built entirely on six baseline clinical variables—to forecast DILI risk before anti-TB therapy begins. The model showed robust discrimination and calibration across training, internal, and external cohorts, offering a practical means for pre-treatment risk stratification. By identifying high-risk patients prior to drug exposure, clinicians can tailor monitoring intensity, deploy preventive measures, and ultimately enhance treatment safety. Future prospective, multi-center, and multi-ethnic studies, as well as digital implementation in clinical workflows, are needed to confirm and extend its utility.

Data availability

Due to institutional and ethical restrictions, patient-level data are not publicly available. De-identified data and the nomogram specification (predictors, intercept, β-coefficients) with brief risk-calculation instructions are available from the corresponding author upon reasonable request for academic use.

References

World Health Organization. WHO Consolidated Guidelines on Tuberculosis: Module 4: Treatment: Drug-Susceptible Tuberculosis Treatment (World Health Organization, 2022).

Rana, H. K., Singh, A. K., Kumar, R. & Pandey, A. K. Antitubercular drugs: Possible role of natural products acting as antituberculosis medication in overcoming drug resistance and drug-induced hepatotoxicity. Naunyn Schmiedebergs Arch. Pharmacol. 397, 1251–1273. https://doi.org/10.1007/s00210-023-02679-z (2024).

Lewis, J. H., Korkmaz, S. Y., Rizk, C. A. & Copeland, M. J. Diagnosis, prevention and risk-management of drug-induced liver injury due to medications used to treat mycobacterium tuberculosis. Expert Opin Drug Saf. 23, 1093–1107. https://doi.org/10.1080/14740338.2024.2399074 (2024).

EASL Clinical Practice Guidelines. Drug-induced liver injury. J. Hepatol. 70, 1222–1261. https://doi.org/10.1016/j.jhep.2019.02.014 (2019).

Ji, S., Lu, B. & Pan, X. A nomogram model to predict the risk of drug-induced liver injury in patients receiving anti-tuberculosis treatment. Front. Pharmacol. 14, 1153815. https://doi.org/10.3389/fphar.2023.1153815 (2023).

Saukkonen, J. J. et al. An official ATS statement: Hepatotoxicity of antituberculosis therapy. Am. J. Respir. Crit Care Med. 174, 935–952. https://doi.org/10.1164/rccm.200510-1666ST (2006).

Yimer, G. et al. Pharmacogenetic & pharmacokinetic biomarker for efavirenz based ARV and rifampicin based anti-TB drug induced liver injury in TB-HIV infected patients. PLoS ONE 6, e27810. https://doi.org/10.1371/journal.pone.0027810 (2011).

Liu, Q. et al. Clinical risk factors for moderate and severe antituberculosis drug-induced liver injury. Front. Pharmacol. 15, 1406454. https://doi.org/10.3389/fphar.2024.1406454 (2024).

Gezahegn, L. K., Argaw, E., Assefa, B., Geberesilassie, A. & Hagazi, M. Magnitude, outcome, and associated factors of anti-tuberculosis drug-induced hepatitis among tuberculosis patients in a tertiary hospital in North Ethiopia: A cross-sectional study. PLoS ONE 15, e0241346. https://doi.org/10.1371/journal.pone.0241346 (2020).

Zhong, T. et al. Predicting antituberculosis drug-induced liver injury using an interpretable machine learning method: Model development and validation study. JMIR Med. Inform. 9, e29226. https://doi.org/10.2196/29226 (2021).

Molla, Y., Wubetu, M. & Dessie, B. Anti-tuberculosis drug induced hepatotoxicity and associated factors among tuberculosis patients at selected hospitals, Ethiopia. Hepat Med. 13, 1–8. https://doi.org/10.2147/hmer.S290542 (2021).

Jiang, F. et al. Incidence and risk factors of anti-tuberculosis drug induced liver injury (DILI): Large cohort study involving 4652 Chinese adult tuberculosis patients. Liver Int. 41, 1565–1575. https://doi.org/10.1111/liv.14896 (2021).

Mangoni, A. A., Woodman, R. J. & Jarmuzewska, E. A. Pharmacokinetic and pharmacodynamic alterations in older people: What we know so far. Expert Opin Drug Metab. Toxicol. https://doi.org/10.1080/17425255.2025.2503848 (2025).

Cohen, E. B. et al. Drug-induced liver injury in the elderly: Consensus statements and recommendations from the IQ-DILI initiative. Drug Saf. 47, 301–319. https://doi.org/10.1007/s40264-023-01390-5 (2024).

Zhu, Q., Xie, X., Fang, L., Huang, C. & Li, J. Chronic alcohol intake disrupts cytochrome P450 enzyme activity in alcoholic fatty liver disease: Insights into metabolic alterations and therapeutic targets. Front. Chem. 13, 1509785. https://doi.org/10.3389/fchem.2025.1509785 (2025).

Yew, W. W., Chang, K. C. & Chan, D. P. Oxidative stress and first-line antituberculosis drug-induced hepatotoxicity. Antimicrob. Agents Chemother. https://doi.org/10.1128/aac.02637-17 (2018).

Celik, M. et al. Demographic, clinical and laboratory characteristics of extrapulmonary tuberculosis: Eight-year results of a multicenter retrospective study in Turkey. J. Investig. Med. 73, 206–217. https://doi.org/10.1177/10815589241299367 (2025).

Meadows, I. et al. N-acetylcysteine to reduce kidney and liver injury associated with drug-resistant tuberculosis treatment. Pharmaceutics https://doi.org/10.3390/pharmaceutics17040516 (2025).

Villanueva-Paz, M. et al. Oxidative stress in drug-induced liver injury (DILI): From mechanisms to biomarkers for use in clinical practice. Antioxidants (Basel) https://doi.org/10.3390/antiox10030390 (2021).

Galaris, D., Barbouti, A. & Pantopoulos, K. Iron homeostasis and oxidative stress: An intimate relationship. Biochim. Biophys. Acta Mol. Cell Res. 1866, 118535. https://doi.org/10.1016/j.bbamcr.2019.118535 (2019).

Mahapatra, A. et al. Effectiveness of food supplement on treatment outcomes and quality of life in pulmonary tuberculosis: Phased implementation approach. PLoS ONE 19, e0305855. https://doi.org/10.1371/journal.pone.0305855 (2024).

Mandal, S., Bhatia, V., Bhargava, A., Rijal, S. & Arinaminpathy, N. The potential impact on tuberculosis of interventions to reduce undernutrition in the WHO South-East Asian Region: A modelling analysis. Lancet Reg. Health Southeast Asia 31, 100423. https://doi.org/10.1016/j.lansea.2024.100423 (2024).

Makhlouf, H. A., Helmy, A., Fawzy, E., El-Attar, M. & Rashed, H. A. A prospective study of antituberculous drug-induced hepatotoxicity in an area endemic for liver diseases. Hepatol. Int. 2, 353–360. https://doi.org/10.1007/s12072-008-9085-y (2008).

Huang, D. et al. Time of liver function abnormal identification on prediction of the risk of anti-tuberculosis-induced liver injury. J. Clin. Transl. Hepatol. 11, 425–432. https://doi.org/10.14218/jcth.2022.00077 (2023).

Leeuwenberg, A. M. et al. Performance of binary prediction models in high-correlation low-dimensional settings: A comparison of methods. Diagn. Progn. Res. 6, 1. https://doi.org/10.1186/s41512-021-00115-5 (2022).

[Guidelines for diagnosis and management of drug-induced liver injury caused by anti-tuberculosis drugs (2024 version)]. Zhonghua Jie He He Hu Xi Za Zhi 47, 1069–1090. https://doi.org/10.3760/cma.j.cn112147-20240614-00338 (2024).

Funding

None.

Author information

Contributions

G.F.Z. and C.Q. conceived the study, designed the analyses, and drafted the manuscript. D.Q.Z. and C.Q. collected and curated the clinical data. G.F.Z. performed the statistical analyses and prepared the figures. J.A. supervised the project, interpreted the data, and critically revised the manuscript for important intellectual content. All authors reviewed and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare that there are no conflicts of interest relevant to the content of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhou, GF., Qiu, C., Zhu, DQ. et al. Development and external validation of a pre-treatment nomogram for predicting drug-induced liver injury risk in tuberculosis patients. Sci Rep 15, 40631 (2025). https://doi.org/10.1038/s41598-025-24282-3

DOI: https://doi.org/10.1038/s41598-025-24282-3