Abstract

The natural habitats of wild horseshoe crabs (such as beaches, shallow water areas, and intertidal sediments) are complex, posing challenges for image capture, which is often affected by real noise factors. Deep learning models are widely used in image denoising techniques, including methods based on CNNs and Vision Transformers (ViT), each with its own advantages and disadvantages. In this paper, we construct a dataset of wild horseshoe crab images and propose a CNN-Transformer hybrid model that combines multi-head transposed attention mechanisms, gating mechanisms, and depth-wise separable convolution to reconstruct key features of wild horseshoe crab images. The model uses a linear complexity multi-head transposed attention mechanism applied to the channel dimension and combines it with gating mechanisms and depth-wise convolutions to reconstruct contextual features, fully leveraging global contextual relationships across feature dimensions to optimize denoising quality. Extensive experimental results show that the model can accurately restore key features of wild horseshoe crab images, which is of great significance for their tracking and localization.

Similar content being viewed by others

Introduction

The natural habitat of wild limulus is relatively complex, mainly consisting of beaches, shallow waters, and sediments near the intertidal zone. Capturing images in these environments is quite challenging, as the images are often affected by various natural noise factors. Image denoising1,2, being an ill-posed problem3, typically requires strong image priors to effectively reduce the noise. Furthermore, due to variations in noise type, intensity, computational resources, and desired image quality4,5, selecting an appropriate image denoising method remains a significant challenge.

In recent years, researchers have extensively explored and experimented with image denoising techniques. Currently, the primary methods are grounded in deep learning models, mainly including CNN-based approaches 6,7,8, ViT-based techniques9,10,11, and hybrid architectures12,13,14.

CNN-based methods offer significant advantages in image denoising tasks, such as automatic feature learning and hierarchical feature representation. These attributes allow CNNs to adapt to various types of noise and deliver precise denoising outcomes. Additionally, CNNs offer benefits like adaptability, parameter sharing, translation invariance, local perception, and end-to-end learning, making them highly efficient in handling diverse image denoising challenges while achieving good denoising performance. For instance, Zhang et al.6 proposed a robust deformable CNN for image denoising, which comprises three key blocks: a deformable block (DB) for extracting representative noise features based on neighboring pixel relationships, an enhanced block (EB) to boost the learning ability of the network, and a residual block (RB) to improve memory retention from shallow to deep layers, ultimately reconstructing clean images. Similarly, Zheng et al.15 introduced a hybrid denoising CNN that includes a dilated block (DB), a RepVGG block, a feature refinement block (FB), and single convolution. Despite their successes, CNN-based methods face challenges in practical applications, including the quality of training data, computational resource demands, network structure design, and limitations in feature extraction.

ViT-based methods have made significant strides in recent years. With their powerful feature extraction and representation capabilities, ViTs can capture high-level semantic information in images, leading to effective noise reduction. By leveraging matrix operations to process pixel features, transformers excel in extracting long-range dependencies within images, providing advantages in terms of parallelism, global context modeling, and flexibility in the denoising process. For example, Fan et al.9 proposed a restoration model called SUNet, which utilizes the Swin Transformer layer as a fundamental block and applies UNet for image denoising. Li et al.16 introduced a spectral-enhanced rectangle Transformer to explore the non-local spatial similarity and global spectral low-rank properties of hyperspectral images (HSIs). However, ViT-based methods face limitations, such as higher computational costs and increased data dependency. To address these challenges, researchers are exploring ways to optimize ViT structures to improve their performance in image denoising tasks. Moreover, integrating ViT with other deep learning techniques could yield better denoising results and broaden its application scope.

Currently, hybrid CNN-Transformer models primarily focus on general image datasets. However, real-world datasets, such as those containing limulus images, exhibit unique characteristics, including diversity, dynamic behavior, and amphibious environments, where general models may perform inadequately. To address these challenges, we propose a CNN-Transformer hybrid model that incorporates a multi-head transposed attention mechanism with linear complexity, applied between channels rather than in the spatial dimension. We also explore the use of a gating mechanism combined with depth-wise convolution to reconstruct contextual features in limulus images. Achieving high-precision restoration of critical features in wild limulus images, while fully utilizing global contextual relationships across feature dimensions, presents a key scientific challenge tackled in this paper. The main contributions of this study:

(1) This paper constructs the Amphibious Wild Limulus Image Dataset (AWLD). In addition to the images collected by our team, we also received support from the Beibu Gulf Marine Biodiversity Database. Based on the different living environments of wild horseshoe crabs, the AWLD dataset is divided into three categories: underwater images (UI), underwater and terrestrial images (UTI), and terrestrial images (TI).

(2) A hybrid CNN-Transformer-based model is proposed, which combines a multi-head transposed attention mechanism with linear complexity applied to channels rather than spatial dimensions. Additionally, we explore the use of a gated mechanism combined with depthwise separable convolutions to reconstruct the contextual features of horseshoe crab images. Achieving high-precision recovery of key features in wild horseshoe crab images while fully leveraging global contextual relationships across feature dimensions represents the critical scientific challenge addressed in this study.

The structure of the remainder of this paper is as follows: Section "Related work" reviews the related work, Section "Methods" provides a detailed explanation of the proposed wild horseshoe crab images denoising model based on CNN-Transformer architecture, Section "Numerical experiments" presents the experimental results, analysis, and discussion, and finally, Section "Conclusion" concludes the paper.

Related work

The methods for image denoising have evolved from utilizing CNNs to incorporating ViT models, with recent developments focusing on hybrid CNN-Transformer models that combine the strengths of both architectures. The CNN-Transformer approach leverages the complementary benefits of convolutional neural networks (CNNs) and Transformer models, making it highly effective for image denoising tasks. By integrating both local and global information, these models can adapt to varying image scales and resolutions, handling complex noise scenarios more efficiently.

Hybrid CNN-Transformer methods Yi et al.17 introduced a deblur model, an end-to-end network designed for single infrared image blind deblurring. This model incorporates multiple hybrid CNN-Transformer encoders for feature extraction, effectively capturing intrinsic features of infrared images. A fully connected bidirectional feature pyramid decoder further enhances multi-level feature reuse. However, the algorithm requires substantial computational resources and exhibits high complexity. Chen et al.18 proposed Dual-former, which uses convolutions for local feature extraction in both the encoder and decoder, while hybrid Transformer modules in the latent layer model long-range spatial dependencies and address uneven channel distribution. Despite these improvements, the model still faces challenges with computational complexity and limited denoising results. Zhao et al.19 introduced the model, which features a Transformer encoder based on Radial Basis Function (RBF) attention to enhance the model’s overall expressive capabilities. A residual CNN is employed for decoding. However, this algorithm suffers from low denoising efficiency, with performance falling short of expectations.

Building on these approaches, we aim to explore the performance of CNN-Transformer models on the small-sample wild limulus dataset, with the goal of developing a more lightweight, multi-level resolution image denoising model.

Methods

Due to the ill-posed nature of image denoising, powerful image priors are often required for effective restoration. CNNs excel at learning generalizable priors, however, CNNs also present two main challenges: first, convolutional operators have limited receptive fields, making it difficult to capture long-range or global pixel dependencies; second, the static weights of convolutional filters cannot adapt dynamically to input variations. To address these limitations, the model addresses the denoising problem of wild horseshoe crab images by combining the strengths of Convolutional Neural Networks (CNNs) and the Transformer architecture. First, the model utilizes CNNs to extract low-level features from the images. These features are then fed into an encoder-decoder structure, which transforms the low-level features into higher-level feature representations. This design allows the model to capture and reconstruct image features at different levels, effectively handling noise at various scales. The model incorporates a multi-head transposed attention mechanism, which has linear complexity and is used to capture global contextual relationships across channels. Additionally, the model employs a Feedforward Gated Depthwise Convolution approach (FGDA), which combines gating mechanisms with depthwise separable convolution to encode spatially adjacent pixel positions, effectively capturing the local structure of the image. To enhance feature preservation, the model adopts skip connections that directly link the encoder’s features to the decoder’s features. This aids in retaining more detailed information during the reconstruction process. Finally, the model combines the residual image with the degraded image to produce the denoised output. This residual learning approach helps the model recover image details and texture information more accurately. The overall pipeline of the proposed architecture is illustrated in Fig. 1.

Given a noisy wild limulus image \(I_{l} \in R^{H \times W \times 3}\), where H represents the matrix height, and W stands for matrix width, the process begins by using a CNN to extract low-level features \(F_{l} \in {\mathbb{R}}^{H \times W \times C}\), where C represents the number of channels. Next, a 4-level symmetric encoder-decoder structure transforms the low-level information into high-level features \(F_{h} \in {\mathbb{R}}^{H \times W \times 2C}\). Transformer blocks are incorporated at each level of the encoder-decoder, with the number of blocks increasing from high-level to low-level to maintain computational efficiency. For high-resolution images, the encoder progressively increases the number of channels while reducing the image size at each layer. The decoder then retrieves low-level features \(F_{s} \in {\mathbb{R}}^{{\frac{H}{8} \times \frac{W}{8} \times 8C}}\) and gradually reconstructs the high-level attributes. During feature extraction, pixel demixing and mixing operations are applied. Skip connections link the encoder features to the decoder features, facilitating the recovery process. After concatenation, a 1 × 1 convolution is applied to reduce the number of channels. Transformer blocks are employed to fuse low-level encoder features with high-level decoder features, which helps preserve the texture details and key information of wild limulus images. Finally, the residual image \(I_{R} \in {\mathbb{R}}^{H \times W \times 3}\) and the degraded image are summed to produce the restored image \(\hat{I} = I + R\). This architecture comprises two main modules: Linear multi-head Transposed Attention across Channels (LTAC) and the Feed-forward Gated-Dconv Approach (FGDA).

Linear multi-head transposed attention across channels

The primary computational overhead in Transformer architecture stems from the multi-head self-attention mechanism. This mechanism incurs substantial costs due to the need to compute attention weights to capture diverse relationships within the input sequence. Each attention head involves similarity calculations and weighted combinations for every position in the sequence, resulting in a large volume of matrix operations, especially when processing long sequences with multiple heads. To address this issue, the MDTA module with linear complexity is employed. Instead of applying self-attention in the spatial dimension, it computes cross-channel inter-covariance to generate an attention map that encodes implicit global context. Additionally, depth-wise convolution is used to emphasize local context and create a global attention map before calculating feature covariance.

The projections of the query (Q), key (K), and value (V) are produced by the module through layer normalization and are further enriched with local context integration. This is achieved by aggregating pixel-wise cross-channel context using 1 × 1 convolutions, followed by encoding channel-wise spatial context through 3 × 3 depth-wise convolutions, where \(Q = W_{d}^{Q} W_{p}^{Q} Y,\;K = W_{d}^{K} W_{p}^{K} Y,V = W_{d}^{V} W_{p}^{V} Y.\). In this process, the 1 × 1 point-wise convolution \(W_{p}^{( \cdot )}\) captures cross-channel interactions, while the 3 × 3 depth-wise convolution \(W_{d}^{( \cdot )}\)encodes spatial relationships. Our network employs unbiased convolutional layers to maintain the integrity of these operations. The query and key projections are then reshaped to generate a transposed attention map of size \(R^{{\hat{C} \times \hat{C}}}\)through dot-product interactions, rather than a large regular attention map of size \(R^{{\hat{H}\hat{W} \times \hat{H}\hat{W}}}\). In summary, the MDTA process can be described as follows:

Here, \(I_{i}\) represents the input feature maps, and \(I_{0}\) corresponds to the output feature maps. The \(\hat{Q},\hat{K}\) and \(\hat{V}\) matrices are derived by reshaping the tensor back to its original size \(R^{{\hat{H} \times \hat{W} \times \hat{C}}}\). Additionally, \(\alpha\) is a learnable scaling factor that regulates the magnitude of the dot product between \(\hat{K}\) and \(\widehat{Q}\) before applying the softmax function. Similar to traditional multi-head self-attention, we divide the channels into multiple heads and train them in parallel, with each head generating its own independent attention map.

Feed-forward Gated-Dconv approach

The Feedforward Network (FN) operates on each pixel position. It utilizes two 1 × 1 convolutions: the first expands the feature channels, typically by a factor of \(\gamma = 4\), while the second reduces them back to the original input dimension. This approach enhances the standard FN by incorporating gating mechanisms and depth-wise convolutions. The gating mechanism is defined as the element-wise product of two parallel paths within a linear transformation layer, where one path is activated by the GELU non-linear activation function. In the Gated Depthwise Feedforward Network (GDFN), depth-wise convolutions are applied to encode spatially adjacent pixel locations, proving highly effective in capturing local image structures. The GDFN formulation is as follows:

where G refers to the gating mechanism, \(\odot\) represents the element-wise product, \({\mathcal{G}}\) denotes the GELU activation function, and LN stands for layer normalization. The GDFN reduces the expansion ratio \(\gamma\) to lower computational complexity while maintaining performance.

Stage-wise learning

Patch-based CNNs used in image denoising often struggle to handle global image features, limiting the overall denoising performance. To address this, the model employs a progressive learning approach. In the early stages, smaller image patches are used for training during simpler tasks, while in the later stages, larger patches are introduced for more complex tasks. The batch size is reduced accordingly to maintain time efficiency. This staged learning process enhances the model’s ability to preserve the structural and textural features in complex wild limulus images.

Numerical experiments

Datasets

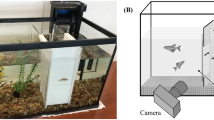

To study the living habits of wild limulus, we conducted collaborative research as the foundation of our work. We collected and curated a small sample dataset called the Amphibious Wild Limulus Image Dataset (AWLD). In addition to the images gathered by our team, we received support from the Beibu Gulf Marine Biodiversity Database. The AWLD dataset contains 1372 images and video data and is characterized by environmental diversity. It includes images of wild horseshoe crabs in various living environments, such as beaches, shallow waters, and intertidal sediments under complex natural conditions. The images are often affected by various natural noise factors, posing challenges to image quality. Based on the different living environments of wild horseshoe crabs, the AWLD dataset is divided into three categories: underwater images (UI), underwater and terrestrial images (UTI), and terrestrial images (TI). Figure 2 showcases examples from the wild limulus image dataset.

Objective results

Table 1 compares the F(G), P(M), and PSNR/SSIM values of current mainstream image denoising models under real noise conditions. These data indicate that, although our model is not optimal in terms of runtime and memory usage, its moderate performance on these metrics does not hinder its outstanding performance in image denoising. This suggests that our model may provide the best balance for practical applications. Our model achieves the highest average PSNR and SSIM. On the UTI and TI datasets, our model achieves the best results. On the UI dataset, our PSNR and SSIM are slightly lower than those of DDT, ranking second. Our performance on UI is very close to the best.

Table 2 compares the PSNR/SSIM results of state-of-the-art image denoising models under different noise levels (\(\sigma\)). Our model demonstrates the best overall performance on the UI, UTI, and TI image datasets, especially maintaining good performance at high noise levels, with the highest average PSNR and SSIM results, followed by DDT. The DDT model performs best on UTI at \(\sigma = 15\). MPRNet performs best on UI at \(\sigma = 25\) and 50. Restormer performs best on UTI at \(\sigma = 25\). SwinIR has the lowest average denoising performance, resulting in the poorest image denoising quality.

Through the quantitative evaluation of image denoising algorithms, we can clearly see that our method significantly enhances image denoising performance. A large number of experimental results demonstrate the effectiveness of our method in addressing the image denoising problem of wild horseshoe crabs, ensuring the accuracy of subsequent tracking and conservation efforts for wild horseshoe crabs.

Subjective results

Figure 3 demonstrates that on the UI dataset, our method achieves the best performance, with PSNR/SSIM values of 39.21/0.9788, followed by MPRNet, which achieves PSNR/SSIM values of 38.46/0.9623. The denoising performance of AP-BSN, MM-BSN, and MIRNetv2 is relatively worse, with PSNR/SSIM values of 35.70/0.9443, 35.16/0.9432, and 34.58/0.9257, respectively. DDPG exhibits the poorest denoising performance, with PSNR/SSIM values of 29.72/0.8713. On the UTI dataset, MPRNet performs the best with a PSNR value of 32.38 dB, followed closely by our method with a PSNR value of 32.36 dB. However, our method achieves the best SSIM result of 0.9012, while DDPG performs the worst. On the TI dataset, our method outperforms others, achieving PSNR/SSIM values of 33.23/0.9124. The closest contender is SwinIR, with a PSNR value of 32.72 and an SSIM value of 0.9021. MM-BSN and AP-BSN, with PSNR/SSIM values of 29.18/0.8706 and 28.17/0.8627, respectively, perform relatively poorly, while DDPG exhibits the worst performance, with PSNR/SSIM values of 26.34/0.8538.

Figure 4 shows that on the UI dataset, our method achieves the best image denoising performance, with PSNR/SSIM values of 36.74/0.9513. The PSNR/SSIM values of MM-BSN, AP-BSN, MPRNet, MIRNetv2, and DDT are 36.64/0.9443, 36.59/0.9453, 36.57/0.9441, 36.56/0.9439, and 36.25/0.9434, respectively, while SwinIR performs the worst. On the UTI dataset, our method achieves the best performance, with PSNR/SSIM values of 36.79/0.9516, followed by DDT, while SwinIR performs the worst. On the TI dataset, our method stands out, achieving PSNR/SSIM values of 38.23/0.9617, the highest among all methods. DDT follows as the second-best, while SwinIR performs the worst.

Extensive experimental results demonstrate that our method significantly improves image quality. Compared to other SOTA models, our method better restores image sharpness, details, and naturalness. Subjective evaluation results further validate the practical effectiveness of our approach.

Ablation studies

In this section, we conduct ablation studies to evaluate the performance of LTAC and FGDA on the AWLD dataset.

LTAC: LTAC enhances the ability to model global context and capture complex long-range dependencies. To evaluate the effectiveness of LTAC, we constructed a model using a standard attention mechanism to assess its impact on capturing global contextual relationships. The results are shown in Table 3. We observed that using the multi-head transposed attention mechanism resulted in PSNR and SSIM improvements of 0.05 dB and 0.0017 compared to the standard attention mechanism.

FGDA: FGDA improves the selectivity of local features and enables fine-grained control over noise interference. We replaced FGDA with simple convolutional layers to evaluate its contribution to feature reconstruction. The results are shown in Table 4. We observed that using FGDA, compared to standard CNN, improved the PSNR and SSIM by 0.02 dB and 0.0009, respectively.

Conclusion

This paper proposes a denoising method for wild horseshoe crab images based on a CNN-Transformer hybrid architecture. The method effectively restores clear images from noise while preserving key structural information and texture details. By constructing a small sample dataset containing wild horseshoe crabs and incorporating multi-head self-attention mechanisms as well as multi-scale and multi-layer modules, the method enhances the robustness of multi-resolution image denoising tasks while reducing computational complexity. Employing a progressive training strategy allows the model to better retain structural and texture features in complex wild horseshoe crab images. Through the use of Linear Transposed Attention across Channels (LTAC) and Feedforward Gated Depth Convolution (FGDA), the model effectively captures both global and local contextual information, which is crucial for image denoising tasks. Experimental results demonstrate that the model performs exceptionally well in denoising wild horseshoe crab images under various conditions. Compared to existing denoising methods, the model performs best on the AWLD image dataset with real noise. It achieves top performance on the UTI and TI datasets and ranks second on the UI dataset with scores of 38.12/0.9604, only slightly below DDT’s 38.16/0.9606, but very close to optimal. On the AWLD dataset with Gaussian noise, the model achieves optimal performance across multiple datasets, including UI and TI with Sigma = 15, TI with Sigma = 25, UTI and TI with Sigma = 50, and overall AVG. This study provides an effective solution for denoising wild horseshoe crab images, which is significant for advancing intelligent marine research as well as the tracking and localization of wild horseshoe crabs. Looking ahead, we plan to further optimize the model architecture to improve denoising performance and computational efficiency, while exploring the model’s potential for widespread applications in various image denoising tasks.

Data availability

The images, figures and other data supporting the findings of this study were collected by our team and obtained from the Beibu Gulf Marine Biodiversity Database, which were used under permission. The data can be obtained from the corresponding authors upon reasonable request and with permission from the Beibu Gulf Marine Biodiversity Database.

References

Kim, C., Kim, T.H., & Baik, S. LAN: Learning to adapt noise for image denoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 25193–25202 (2024).

Zhang, X. Image denoising and segmentation model construction based on IWOA-PCNN. Sci. Rep. 13(1), 19848 (2023).

Rajan, M. P. & Jose, J. An efficient discretization scheme for solving nonlinear ill-posed problems. Comput. Methods Appl. Math. 24(1), 173–184 (2024).

Min, X. et al. Perceptual video quality assessment: A survey. Sci. China Inf. Sci. 67(11), 211301 (2024).

Min, X., Gu, K. & Zhai, G. assessment: Overview, benchmark, and beyond. ACM Comput. Surv. (CSUR) 54(9), 1–36 (2021).

Zhang, Q. et al. A robust deformed convolutional neural network (CNN) for image denoising. CAAI Trans. Intell. Technol. 8(2), 331–342 (2023).

Han, L. et al. Non-local self-similarity recurrent neural network: Dataset and study. Appl. Intell. 53(4), 3963–3973 (2023).

Agarkar, A., Shrivastava, S., Bangde, D., et al. CNN based image-denoising techniques—A review. In 2024 3rd International Conference on Sentiment Analysis and Deep Learning (ICSADL). IEEE, 146–152 (2024).

Fan, C.M., Liu, T. J., & Liu, K. H. SUNet: Swin transformer UNet for image denoising. In 2022 IEEE International Symposium on Circuits and Systems (ISCAS). IEEE, 2333–2337 (2022).

Yin, H. & Ma, S. CSformer: Cross-scale features fusion based transformer for image denoising. IEEE Signal Process. Lett. 29, 1809–1813 (2022).

Gan, Y., Zhou, S., Chen, H., et al. Image denoising based on Swin transformer residual conv U-Net. In 2024 IEEE/ACIS 27th International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD). IEEE, 166–170 (2024).

Tian, C. et al. Multi-stage image denoising with the wavelet transform. Pattern Recogn. 134, 109050 (2023).

Deng, K. et al. Hybrid model of tensor sparse representation and total variation regularization for image denoising. Signal Process. 217, 109352 (2024).

Liang, Y., & Liang, W. ResWCAE: Biometric pattern image denoising using residual wavelet-conditioned autoencoder. http://arxiv.org/abs/2307.12255 (2023).

Zheng, M. et al. A hybrid CNN for image denoising. J. Artif. Intell. Technol. 2(3), 93–99 (2022).

Li, M., Liu, J., Fu, Y., et al. Spectral enhanced rectangle transformer for hyperspectral image denoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 5805–5814 (2023).

Yi, S., Li, L., Liu, X., Li, J. & Chen, L. HCTIRdeblur: A hybrid convolution-transformer network for single infrared image deblurring. Infrared Phys. Technol. 131, 104640 (2023).

Chen, S., Ye, T., Liu, Y. & Chen, E. Dual-former: Hybrid Self-attention Transformer for Efficient Image Restoration (2022).

Zhao, M., Cao, G., Huang, X. & Yang, L. Hybrid transformer-CNN for real image denoising. IEEE Signal Process. Lett. 29, 1252–1256 (2022).

Lee, W., Son, S., Lee, K. M.. Ap-bsn: Self-supervised denoising for real-world images via asymmetric pd and blind-spot network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 17725–17734 (2022).

Liu, K., Du, X., Liu, S., et al. DDT: Dual-branch deformable transformer for image denoising. In 2023 IEEE International Conference on Multimedia and Expo (ICME). IEEE, 2765–2770 (2023).

Zamir, S. W. et al. Learning enriched features for fast image restoration and enhancement. IEEE Trans. Pattern Anal. Mach. Intell. 45(2), 1934–1948 (2022).

Zhang, D., Zhou, F., Jiang, Y., et al. Mm-bsn: Self-supervised image denoising for real-world with multi-mask based on blind-spot network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 4189–4198 (2023).

Mehri, A., Ardakani, P. B., & Sappa, A. D. MPRNet: Multi-path residual network for lightweight image super resolution. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision 2704–2713 (2021).

Liang, J., Cao, J., Sun, G., et al. Swinir: Image restoration using swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision 1833–1844 (2021).

Garber, T., & Tirer, T. Image restoration by denoising diffusion models with iteratively preconditioned guidance. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 25245–25254 (2024).

Zamir, S.W., Arora, A., Khan, S., et al. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 5728–5739 (2022).

Acknowledgements

The authors would like to thank the anonymous reviewers for their invaluable comments. Any opinions, findings and conclusions expressed in this paper are those of the authors and do not necessarily reflect the views of the sponsors. This work was partially funded by the Natural Science Foundation of Guangxi Zhuang Autonomous Region under Grant No. 2025GXNSFHA069149.

Author information

Authors and Affiliations

Contributions

Lili Han contributed to the conception of the study. Xiuping Liu performed the experiment. Qingqing Wang performed the data analyses. Tao Xu helped perform the analysis with constructive discussions.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

The Authors confirm that the work described has not been published before, and it is not under consideration for publication elsewhere.

Consent to participate

The Authors agree to participate.

Consent for publication

The Authors agree to publication in the Scientific Reports.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Han, L., Liu, X., Wang, Q. et al. Wild horseshoe crab image denoising based on CNN-transformer architecture. Sci Rep 15, 11622 (2025). https://doi.org/10.1038/s41598-025-96218-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-96218-w