Abstract

Accurate assessment of body composition is essential for evaluating the risk of chronic disease. 3D body shape, obtainable using smartphones, correlates strongly with body composition. We present a novel method that fits a 3D body mesh to a dual-energy X-ray absorptiometry (DXA) silhouette (emulating a single photograph) paired with anthropometric traits, and apply it to the multi-phase Fenland study comprising 12,435 adults. Using baseline data, we derive models predicting total and regional body composition metrics from these meshes. In Fenland follow-up data, all metrics were predicted with high correlations (r > 0.86). We also evaluate a smartphone app which reconstructs a 3D mesh from phone images to predict body composition metrics; this analysis also showed strong correlations (r > 0.84) for all metrics. The 3D body shape approach is a valid alternative to medical imaging that could offer accessible health parameters for monitoring the efficacy of lifestyle intervention programmes.


Introduction

Body composition is strongly related to the risk of chronic disease morbidity and mortality1, and can be assessed accurately using medical imaging methods such as dual-energy X-ray absorptiometry (DXA), magnetic resonance imaging (MRI) and computed tomography (CT)2,3,4. However, practical and ethical constraints prevent these methods from being used routinely in clinical practice and epidemiological studies, and they are not easily accessible to the general public5,6. In these settings, conventional anthropometric measures such as body mass index (BMI), waist and hip circumferences and waist-hip ratio are typically used to infer body composition. Parente et al.7 identify waist-height ratio and waist circumference as the best estimators of visceral fat in type 1 diabetes. Heymsfield et al.8 analyse simple skeletal muscle mass prediction formulas across different ethnic groups. However, these indirect methods of assessing body composition are not sufficiently accurate or convenient for longitudinal use, as they often require face-to-face clinical visits and trained staff. Furthermore, these surrogate measures do not differentiate between fat and lean mass or their distribution9,10.

There is a need to develop simple, accessible and relatively inexpensive tools to improve the accuracy of body composition assessment. Such tools would improve the prediction of metabolic health and identify people at high long-term risk of disease so that remedial action can be taken. Considerable work in recent years has focused on the development of 3D optical (3DO) scanning11 to estimate body composition12,13,14,15. 3DO scanners project infrared patterns onto the subject and use depth sensors and multiview stereo to rapidly construct a 3D point cloud that captures the body's surface shape. Rather than relying on anthropometric measurements alone, the 3D body shape as a whole provides richer visual and implicit cues for predicting body composition more accurately. These additional shape cues can be extra landmark diameters, circumferences, surface areas and volumes derived from 3DO scans16, or the parameters of a PCA shape space13,14,15. More recently, Leong et al.17 use a variational autoencoder (VAE)18 to learn a latent DXA encoding and map 3DO scans to pseudo-DXA images. These works have shown that 3D shape information can augment conventional anthropometry-based prediction models, or outperform them as a standalone predictor, for a variety of body composition metrics. However, although 3DO scanners cost less than DXA, MRI or CT, obtaining 3DO scans still requires dedicated apparatus, which makes them less accessible to the general public.

To derive shape information without relying entirely on 3DO scanners to reconstruct 3D body meshes, recent works have taken advantage of advances in computer vision and machine learning that enable accurate segmentation19 and pose estimation20 of objects, including the human body, from RGB images, which are easily obtained using a smartphone camera. Majmudar et al.21 train a convolutional neural network (CNN) to predict percentage body fat directly from front and back images. Alves et al.22 locate key points from multiple views, derive circumferences and predict percentage body fat. Xie et al.23 construct a PCA shape space from 2D DXA silhouettes and predict body composition. Sullivan et al.24 derive body volume from a single image by measuring horizontal landmark diameters, and use the volume in a 3-compartment model (body mass, body volume and body water) to calculate percentage body fat. Smith et al.25 compare circumference estimation accuracy between a smartphone app and 3DO scanners, and report that circumferences estimated from images can be relatively accurate. McCarthy et al.16 derive lengths, circumferences and volumes from body shape images, and predict skeletal muscle mass from these measurements alongside demographic variables. Graybeal et al.26 evaluate two smartphone apps and compare the accuracy of their circumference and circumference-ratio predictions. Remote data capture and modelling of 3D shape have numerous applications, including helping patients track individual changes over time in commonly assessed anthropometric measurements. Furthermore, patients do not need to attend clinics in person to have these measurements taken, which lowers the burden on health services and provides a more cost-effective way to monitor aspects of patient health.

Unfortunately, there are few large-scale datasets containing 3D body meshes with paired anthropometric and metabolic traits. Bennett et al.12 worked with a cohort of 501 participants, McCarthy et al.16 with 322 and Ng et al.14 with 407. Cohorts of this size prevent larger deep-learning models from being leveraged for body composition prediction. Klarqvist et al.27 used a large-scale MRI database from the UK Biobank28 to predict body composition from coronal and sagittal silhouettes. To the best of our knowledge, this is the only study with MRI data at this scale, as the use of MRI in population studies is limited by cost and accessibility. 3D body shape datasets are therefore scarce, whereas datasets containing 2D DXA images with paired anthropometric and metabolic traits (including body composition) are abundant.

Therefore, in this work, we present a novel method that first fits 3D body meshes to DXA silhouettes and paired anthropometric measurements consisting of height and waist and hip circumferences. Similar to our approach, Keller et al.29 register a 3D mesh to a DXA silhouette to infer skeletal structure, and Tian et al.30 fit a 3D mesh to a pose-constrained frontal image. However, these methods are limited to the coronal silhouette, whereas our method also injects sagittal information in the form of waist and hip circumferences. Using our method, we generate a large 3D body shape database of 17,461 meshes. We then show that total and regional body composition metrics can be predicted accurately from the fitted meshes.

We also test and evaluate the performance of a smartphone app (3D Body Shape App) that uses phone images alone to make it easier for individuals to visualise and track changes in their body shape31. The app captures four photographs (front, back, left-side and right-side profiles of the participant) and reconstructs a 3D body mesh from these images. McCarthy et al.16 and Smith et al.25 also generate 3D body meshes from RGB images using an app, which is the approach most similar to ours. However, their app requires a constrained A-pose for the photographs and, in our testing, could fail with noisy backgrounds. In contrast, our method is robust to background, participant pose and camera orientation, and could be extended to accept an arbitrary number of input images. We show preliminary body composition prediction results using the app, and aim to improve performance in future by increasing reconstruction accuracy.

In summary, we make the following contributions in this study:

- Construct a large 3D body shape database derived from 2D DXA silhouettes and paired anthropometric measurements (height, waist and hip circumferences);
- Predict, from 3D body shape, total and regional body composition metrics including:
  - Total fat mass;
  - Percentage body fat (PBF);
  - Android fat mass;
  - Gynoid fat mass;
  - Peripheral fat mass;
  - Visceral adipose tissue (VAT) mass;
  - Abdominal subcutaneous adipose tissue (SCAT) mass;
  - Total lean mass;
  - Appendicular lean mass;
  - Appendicular lean mass index (ALMI);
- Evaluate and show preliminary results of a smartphone app that predicts body composition by reconstructing 3D body meshes from images only.

To the best of our knowledge, our method is the first to fit 3D body meshes to DXA images and predict downstream body composition metrics. In doing so, we show that accurate 3D meshes can be derived from a single 2D silhouette plus simple anthropometry (height, waist and hip circumferences), and that body composition metrics can be predicted from these meshes.

Results

The demographic and anthropometric characteristics of the Fenland study samples and the smartphone validation study are summarised in Table 1. Participants in the smartphone validation study were younger, lighter and leaner than participants in the Fenland study. Mean (SD) body volume in the smartphone validation study, the only dataset with air plethysmography measures, was 64.4 (13.2) litres.

3D body mesh fitting

Figure 1 shows samples of our fitted 3D body meshes. We show individuals from different weight groups to demonstrate qualitatively that our fitting pipeline works for different body shapes. Row 1 shows the raw DXA scans. Row 2 shows the initial pose and shape estimates obtained using HKPD20. These roughly capture the pose and shape of the body, but often fit the coronal silhouette poorly. Row 3 of Fig. 1 shows samples of optimised fits, which agree much better with the DXA silhouettes than the initial fits. Furthermore, Supplementary Fig. 1 shows samples of meshes before and after optimisation in sagittal view. Comparing initial and optimised meshes, the optimisation produced substantial changes to body shape in the depth dimension. This further shows that the initial fits do not represent the actual body shape, and that waist and hip circumferences were needed to generate meshes that better represent participants' true body shape. In summary, our method generates body meshes that incorporate 3D body shape information from paired anthropometry while fitting the DXA silhouettes better. The SMPL shape parameters of these optimised meshes are then used as inputs to the body composition regressor.
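The comparison above is qualitative. One standard way to put a number on silhouette agreement is the intersection-over-union (IoU) of two binary masks. The sketch below is illustrative only: it assumes both the rendered mesh silhouette and the DXA silhouette have already been rasterised to equally sized 0/1 masks, the function name `silhouette_iou` and the toy masks are hypothetical, and this section does not state which fit metric, if any, the authors used.

```python
from typing import List

Mask = List[List[int]]  # binary mask: 1 = body, 0 = background


def silhouette_iou(pred: Mask, target: Mask) -> float:
    """Intersection-over-union between two equally sized binary masks."""
    if len(pred) != len(target) or any(len(a) != len(b) for a, b in zip(pred, target)):
        raise ValueError("masks must have the same shape")
    inter = union = 0
    for row_p, row_t in zip(pred, target):
        for p, t in zip(row_p, row_t):
            if p and t:
                inter += 1
            if p or t:
                union += 1
    # Two empty masks are treated as perfect agreement.
    return inter / union if union else 1.0


# Toy 2 x 4 example: a shifted initial fit and a closer optimised fit.
target = [[0, 1, 1, 0],
          [0, 1, 1, 0]]
initial = [[1, 1, 0, 0],
           [1, 1, 0, 0]]
optimised = [[0, 1, 1, 0],
             [0, 1, 1, 1]]
print(silhouette_iou(initial, target), silhouette_iou(optimised, target))
```

IoU is insensitive to image size and penalises both missing and extra body pixels, which is why it is a common choice for silhouette fitting; a differentiable optimiser would typically use a soft version of the same quantity.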

Body composition prediction

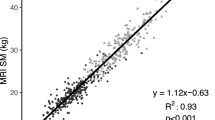

Table 2 shows the model performance on Fenland and smartphone datasets in the form of mean bias (95% limits of agreement), root-mean-square error (RMSE), and Pearson correlation for the agreement between ground truth body composition values from DXA and model predictions using the 3D meshes. The last column in this table is the model performance using the smartphone method. Supplementary Fig. 2 shows selected scatter plots of model predictions against target values on Fenland phase 2 data. Figure 2 shows scatter plots and Bland–Altman plots for percentage body fat for Fenland phase 1 (validation), phase 2 (validation), smartphone study using DXA silhouette optimisation, and smartphone study using RGB images.

Scatter plots (Row 1) and Bland–Altman plots (Row 2) for agreement between predicted and measured percentage body fat in the Fenland samples and the external validation set. All predictions are based on 3D meshes derived from DXA silhouettes, except the last panel, which is based on 3D meshes derived from four smartphone RGB photographs.

In the Fenland phase 1 validation sample, correlation coefficients between predicted and measured DXA parameters were strong (r > 0.89) for all fat mass and lean mass variables. Bland–Altman analyses revealed no significant mean bias for total fat mass, percentage body fat, android fat, appendicular lean mass and appendicular lean mass index (all P > 0.05). However, significant (P < 0.05) mean bias was observed for gynoid fat, visceral fat, abdominal SCAT mass, peripheral fat and total lean mass. In the Fenland phase 2 (validation) sample, which comprised the same population at an older age, correlation coefficients between predicted and measured DXA parameters were also strong (r > 0.86) for all body composition variables. Agreement analyses revealed significant (P < 0.05) mean bias for all predicted DXA parameters. Mean bias (95% LoA) was −0.24 (−6.7; 6.2)% for percentage body fat, and for the other body composition metrics the mean bias ranged from −0.04 to 0.41 kg. Similar results for the DXA silhouette method were observed in the external validation (Column 3 of Table 2), which included younger individuals: correlation coefficients between predicted and measured DXA parameters were r > 0.89, mean bias was 1.13 (−7.2; 9.5)% for percentage body fat, and for the other body composition parameters the mean bias ranged from −1.21 to 1.13 kg.

Results using SMPL shapes generated directly from the four smartphone images in this external validation are shown in Column 4 of Table 2. Correlation coefficients between DXA metrics and all predicted body composition values were r > 0.84. Mean bias (95% LoA) was 1.62 (−9.2; 12.5)% for percentage body fat, and for the other body composition parameters the mean bias ranged from −1.51 to 0.93 kg. Body volume derived from the smartphone avatars had an RMSE of 5.21 L relative to BODPOD volume, with a mean bias (95% LoA) of 5.36 (−4.9; 15.6) L. We also assessed the accuracy of waist, hip, calf and arm circumferences measured on the smartphone avatars, which had RMSEs of 5.04 cm, 4.38 cm, 2.76 cm and 2.42 cm, respectively.
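The agreement statistics used throughout Table 2 (mean bias with 95% limits of agreement, RMSE and Pearson r) can all be computed from paired predictions and reference values. The following is a standard-library-only sketch, not the authors' analysis code: the function name `agreement` and the five example values are hypothetical, and the P value for mean bias uses a normal approximation to the one-sample t test, which is reasonable only for large samples such as the Fenland test sets.

```python
import math
from statistics import NormalDist, fmean, stdev


def agreement(pred, ref):
    """Summarise agreement between predicted and reference (e.g. DXA) values.

    Returns mean bias (pred - ref), Bland-Altman 95% limits of agreement,
    RMSE, Pearson r and an approximate two-sided P value for zero bias.
    """
    if len(pred) != len(ref) or len(pred) < 3:
        raise ValueError("need at least three paired values")
    diffs = [p - y for p, y in zip(pred, ref)]
    n = len(diffs)
    bias = fmean(diffs)
    sd = stdev(diffs)  # sample SD of the differences
    loa = (bias - 1.96 * sd, bias + 1.96 * sd)
    rmse = math.sqrt(fmean([d * d for d in diffs]))

    # Pearson correlation between predicted and reference values.
    mp, my = fmean(pred), fmean(ref)
    sxy = sum((p - mp) * (y - my) for p, y in zip(pred, ref))
    sxx = sum((p - mp) ** 2 for p in pred)
    syy = sum((y - my) ** 2 for y in ref)
    r = sxy / math.sqrt(sxx * syy)

    # One-sample test of mean difference = 0, using a normal approximation
    # to the t distribution (only appropriate for large n).
    if sd == 0:
        p_bias = 1.0 if bias == 0 else 0.0
    else:
        z = bias / (sd / math.sqrt(n))
        p_bias = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return {"bias": bias, "loa": loa, "rmse": rmse, "r": r, "p_bias": p_bias}


# Hypothetical percentage-body-fat values for five participants.
pred = [20.0, 25.0, 31.0, 28.0, 35.0]
ref = [21.0, 24.0, 30.0, 29.0, 33.0]
summary = agreement(pred, ref)
print(summary["bias"], summary["loa"], summary["rmse"], summary["r"])
```

Because the mean squared difference equals the squared mean bias plus the population variance of the differences, RMSE² = bias² + ((n − 1)/n)·SD², so an RMSE can never be smaller than the absolute mean bias computed on the same pairs.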

Comparison between prediction models

We conducted a comparison study of different regressor inputs to verify that 3D body meshes provide crucial information for the downstream body composition regressor. Results of the models investigated are shown in Table 3. Model A (weight and height only) already had some predictive ability. Adding waist and hip circumferences (Models B and C) improved performance, as waist and hip are strong indicators of composition metrics such as android and gynoid fat mass. In the final model (Model E), we quantified the contribution of the SMPL shape parameters in addition to height and weight. This model substantially improved the estimation of body composition metrics compared with anthropometry alone. The explained variance (R2) increased from 73% to 82% for percentage body fat, from 88% to 92% for total fat mass, from 91% to 93% for total lean mass, from 81% to 89% for gynoid fat, from 81% to 89% for android fat, from 80% to 87% for peripheral fat mass, from 90% to 93% for appendicular lean mass, from 74% to 86% for appendicular lean mass index, from 70% to 80% for visceral fat and from 70% to 72% for abdominal SCAT. We also attempted to predict body composition using a simple linear regressor (Model D), but the neural network approach (Model E) clearly outperformed it.
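The model comparison is expressed as explained variance, and some of the studies discussed later (e.g. Xie et al.23) report adjusted R2 instead. The sketch below shows both quantities; the function names and toy data are hypothetical, this section does not say exactly how R2 in Table 3 was computed, and treating Model E as having p = 12 inputs (height, weight and the 10 SMPL shape parameters) is an assumption based on its description.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: R^2 = 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or len(y_true) < 2:
        raise ValueError("need at least two paired values")
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((y - f) ** 2 for y, f in zip(y_true, y_pred))
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    return 1.0 - ss_res / ss_tot


def adjusted_r_squared(r2, n, p):
    """Adjusted R^2 for n observations and p predictors."""
    if n - p - 1 <= 0:
        raise ValueError("need n > p + 1")
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


# Toy data (hypothetical).
y = [1.0, 2.0, 3.0, 4.0, 5.0]
f = [1.1, 1.9, 3.2, 3.8, 5.0]
r2_toy = r_squared(y, f)
adj_toy = adjusted_r_squared(r2_toy, n=5, p=2)

# With n = 6102 and an assumed p = 12, the penalty on R^2 = 0.823 is tiny.
adj_model_e = adjusted_r_squared(0.823, n=6102, p=12)
print(round(r2_toy, 4), round(adj_toy, 4), round(adj_model_e, 4))
```

At the Fenland sample sizes the adjustment is negligible, so differences between plain and adjusted R2 matter mainly when comparing against small cohorts with many predictors.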

Predictions of body composition change

A total of 5733 individuals participated in both Fenland Phase 1 and Phase 2, which enabled us to examine the model’s ability to detect within-individual body composition changes over a mean (SD) of 6.7 (2.0) years.

Table 4 shows model predictions of changes in body composition metrics for individuals present in both Fenland phases. Our model detected change in numerous fat mass metrics. Correlations (r) between predicted and DXA-measured changes in percentage body fat, total fat mass, gynoid fat mass, android fat mass, peripheral fat mass, visceral fat mass and abdominal SCAT mass were 0.92, 0.76, 0.87, 0.83, 0.74, 0.76 and 0.82, respectively, with RMSEs of 2.3%, 1.75 kg, 0.39 kg, 0.29 kg, 1.00 kg, 0.26 kg and 0.21 kg, respectively. Changes in lean mass were captured less well, mainly because lean mass remains largely unchanged for most individuals over this period. Correlations for change in total lean mass, appendicular lean mass and ALMI were 0.60, 0.64 and 0.63, respectively, with RMSEs of 1.82 kg, 1.06 kg and 0.36 kg/m2, respectively. Figure 3 shows selected scatter plots of predicted against DXA-measured changes in percentage body fat, lean mass, and android and gynoid fat mass, together with the corresponding Bland–Altman plots.
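The change analysis pairs each participant's phase 1 and phase 2 values, computes within-person differences for both predictions and DXA, and then compares the two sets of differences. A minimal sketch, assuming values are held in dictionaries keyed by a participant ID (the function names, IDs and values are hypothetical):

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def change_agreement(pred1, pred2, dxa1, dxa2):
    """Compare predicted vs DXA-measured change (phase 2 minus phase 1).

    Each argument maps participant ID -> value. Only IDs present in all four
    mappings (participants with valid data in both phases) are used.
    Returns (number of participants, Pearson r of changes, RMSE of changes).
    """
    ids = sorted(pred1.keys() & pred2.keys() & dxa1.keys() & dxa2.keys())
    d_pred = [pred2[i] - pred1[i] for i in ids]
    d_dxa = [dxa2[i] - dxa1[i] for i in ids]
    rmse = math.sqrt(sum((p - d) ** 2 for p, d in zip(d_pred, d_dxa)) / len(ids))
    return len(ids), pearson(d_pred, d_dxa), rmse


# Hypothetical percentage body fat; participant "d" did not attend phase 2.
pred1 = {"a": 30.0, "b": 25.0, "c": 40.0, "d": 22.0}
pred2 = {"a": 32.0, "b": 24.0, "c": 41.0}
dxa1 = {"a": 31.0, "b": 26.0, "c": 39.0, "d": 21.0}
dxa2 = {"a": 33.5, "b": 24.5, "c": 40.5}
n, r, rmse = change_agreement(pred1, pred2, dxa1, dxa2)
print(n, r, rmse)
```

Correlating differences rather than raw values removes stable between-person variation, which is why the change correlations are lower than the cross-sectional ones, especially for metrics such as lean mass that change little.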

Discussion

In this paper, we developed a novel computer vision-based method that fits a 3D body mesh to a single DXA silhouette with paired anthropometry data (height, waist and hip circumferences). Using our method, we generated a large database of 3D body meshes (n = 17,461) with paired anthropometric and metabolic traits. We then showed that total and regional body composition metrics could be predicted accurately from these meshes. In the comparison study, by comparing the performance of models with different inputs, we showed that shape parameters provide additional cues for predicting body composition. We demonstrated the model's ability to detect longitudinal change in these characteristics by making predictions for individuals present in both phases of the Fenland study. In our smartphone validation study, we generated avatars directly from four smartphone photographs, without optimisation. Finally, we showed preliminary body composition prediction results using these avatars.

The model using optimised 3D meshes predicted body composition metrics accurately enough to assess relative differences between individuals, and to predict absolute values of total and regional body composition, including visceral fat and abdominal SCAT mass, as well as lean mass, appendicular lean mass and ALMI. All body composition metrics predicted from the optimised 3D meshes had small but significant mean bias and showed Pearson correlation coefficients of r > 0.86. These mean biases were likely caused by the phase 2 cohort being older than the phase 1 cohort used for training. Nevertheless, the mean biases were small (less than 2% for all metrics except visceral fat and abdominal SCAT mass, at 4% and 7%, respectively), and the corresponding scatter plots showed strong prediction results (Fig. 2, Supplementary Fig. 2). In addition, our prediction model achieved similar performance across body groups stratified by BMI and sex (Supplementary Table 1). As expected, our model outperformed the traditional anthropometry-based approaches7,8 discussed previously. In a similar study, Xie et al.23, using a 2D PCA shape space constructed from key points selected on a silhouette, achieved the following results in a cohort of 1554: percentage body fat adjusted R2 (R2 adj.) = 0.728, RMSE = 3.12% for boys, and R2 adj. = 0.691, RMSE = 3.39% for girls. However, this comparison is limited because that study was conducted in children, who have a proportionally larger body surface area to volume ratio than adults and show sex differences in body shape dimensions such as the central:peripheral ratio32.
In a more relevant study, Ng et al.14, who predicted body composition from PCA parameters of 3DO scans in a cohort of 407, observed results similar to ours: total fat mass R2 = 0.91, RMSE = 3.07 kg for males and R2 = 0.95, RMSE = 2.63 kg for females; percentage body fat R2 = 0.70, RMSE = 3.55% for males and R2 = 0.72, RMSE = 3.88% for females. Similarly, Tian et al.30 fitted a 3D shape to a single coronal silhouette in a cohort of 416 and predicted body composition with the following results: total fat mass R2 = 0.90, RMSE = 3.63 kg for males and R2 = 0.94, RMSE = 2.29 kg for females; percentage body fat R2 = 0.725, RMSE = 3.90% for males and R2 = 0.74, RMSE = 3.29% for females. In comparison, we achieved similar performance on a much larger dataset (n = 6102): total fat mass R2 = 0.922, RMSE = 2.5 kg; percentage body fat R2 = 0.823, RMSE = 3.28% overall. In addition, our approach injects information in the depth dimension through waist and hip circumferences, whereas the fitting by Tian et al.30 is limited to the coronal silhouette. Klarqvist et al.27 observed stronger correlations for visceral fat, abdominal SCAT mass and gynoid fat using coronal and sagittal silhouettes derived from MRI, the gold standard for these measures of adiposity. Our estimates of these metrics were based on an in-built algorithm from the DXA manufacturer, which is not a criterion method. However, compared with Klarqvist et al.27, our analysis included more body composition metrics, such as appendicular lean mass and its index, which are used as proxies for the assessment of sarcopenia33,34. Although comparison with these studies is limited because they were conducted in cohorts of different ages, using different body composition instruments and computer vision approaches, we have shown that our method produces meshes that are comparably accurate to 3DO scans14, in that they predict body composition with similar accuracy on a large test dataset.
This allows large 3D shape databases to be constructed using our method, which in turn enables larger deep-learning models to be used to analyse 3D body shapes. Our results for predicting change in body composition were similar to those of Wong et al.35, who predicted DXA metrics from 3DO scans of 133 participants and found that change in fat mass was slightly underestimated and change in lean mass overestimated.

The strengths of our study include the large sample size of the Fenland study, the use of the same DXA instruments and analytical software across samples, and robust validation in two separate samples (Fenland phase 2, representing an older group drawn from the derivation sample, and the external validation study, which consisted of younger individuals). Furthermore, our method assesses changes in body composition over time. Another benefit of the 3D body mesh approach is that it preserves the anonymity of user data. Our method does not require raw images of participants to be retained; only the generated body meshes are stored, and these can be stored efficiently as SMPL shape parameters alone. This gives participants in scientific and clinical studies an additional incentive to take part in data collection, since concern over sharing sensitive information is largely eliminated. Other smartphone apps that estimate body composition from photographs either do not reconstruct 3D meshes21,24 or require strict pose constraints16,24. Our app works robustly with noisy backgrounds, can be extended to incorporate an arbitrary number of images, and in practice works for arbitrary body and camera poses.
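To make the storage point concrete: an SMPL mesh has 6890 vertices with three coordinates each, whereas the shape is described by 10 parameters. The sketch below compares the two as float32 arrays and round-trips the shape vector through a compact byte string. It is a hypothetical illustration: the helper names and example values are invented, and regenerating a mesh from the stored parameters assumes the same SMPL model files (and the same body-model variant) used during fitting are available, in a fixed reference pose.

```python
import struct

N_VERTICES = 6890  # SMPL template vertex count
N_SHAPE = 10       # SMPL shape parameters (beta)
FLOAT32_BYTES = 4


def pack_shape(beta):
    """Serialise the SMPL shape vector as little-endian float32 values."""
    if len(beta) != N_SHAPE:
        raise ValueError(f"expected {N_SHAPE} shape parameters")
    return struct.pack(f"<{N_SHAPE}f", *beta)


def unpack_shape(blob):
    """Inverse of pack_shape (float32 precision, about 7 significant digits)."""
    return list(struct.unpack(f"<{N_SHAPE}f", blob))


mesh_bytes = N_VERTICES * 3 * FLOAT32_BYTES  # full vertex array as float32
beta = [0.5, -1.2, 0.3, 0.0, 0.8, -0.1, 0.25, 0.0, -0.4, 0.1]  # hypothetical
blob = pack_shape(beta)
print(len(blob), mesh_bytes)  # 40 82680
```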

This work is not without limitations. Our optimised body meshes did not achieve a perfect fit to the DXA silhouettes. Soft tissue might be deformed because DXA scans are acquired with participants lying flat on the scanning bed, and this was not modelled by our method. We acknowledge that our study samples were predominantly adults of white European origin. Future analyses should assess the validity of these models in other ethnic groups and in younger populations, since there are significant racial and age differences in body composition32,36,37,38,39. Future work should also focus on improving the accuracy of avatars generated by the smartphone app. While our findings support the validity of our method in Fenland phase 2 data and in the smartphone validation study, we expected, and found, lower performance using avatars derived from RGB images, as avatars obtained using the phone app can be inaccurate. We did not optimise the smartphone-generated avatars, although this would improve prediction accuracy, because the photographs are not retained owing to ethical constraints and data security. Alternatively, domain-agnostic representations such as waist-hip ratio and waist-height ratio might produce stronger results, as they are dimensionless ratios that are unaffected by errors in the overall scale of the avatar. Choudhary et al.40 showed that accurate waist-hip ratio could be derived directly from images using attention-based networks, which could prove useful in this regard. With improved avatar accuracy, predictions from app-generated avatars should approach the performance achieved on Fenland.
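The argument for ratio-based inputs can be checked with a small example: if an avatar is reconstructed with a uniform global scale error, absolute circumferences are biased but waist-hip and waist-height ratios are unchanged. This sketch assumes a purely uniform scale error on hypothetical measurements; non-uniform shape errors (for example, a waist that is too narrow relative to the hips) would not cancel in the ratios.

```python
def body_ratios(waist_cm: float, hip_cm: float, height_cm: float) -> dict:
    """Dimensionless waist-hip and waist-height ratios."""
    return {"waist_hip": waist_cm / hip_cm, "waist_height": waist_cm / height_cm}


# Hypothetical participant, and an avatar reconstructed 5% too large overall.
waist, hip, height = 90.0, 100.0, 170.0
scale = 1.05
truth = body_ratios(waist, hip, height)
avatar = body_ratios(waist * scale, hip * scale, height * scale)

waist_error_cm = waist * scale - waist  # absolute error, about 4.5 cm
ratio_error = abs(avatar["waist_hip"] - truth["waist_hip"])  # zero up to rounding
print(waist_error_cm, ratio_error)
```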

Through the app, users will be able to visualise their body shapes and track potential changes using a portable, relatively inexpensive and accurate device. Using our method in clinical research studies, we could potentially identify individuals at the highest risk of preventable complications (e.g. a significant increase in body fat since their first assessment). For instance, Fig. 4 shows the modelled body shapes of three participants who maintained, gained or lost fat mass, at phase 1 and 6 years later at phase 2. The first and last three columns show the two participants who lost and gained a substantial amount of fat mass, respectively, between the two phases; marked changes are visible when comparing the two sets of meshes. The end goal is to encourage users to adopt a healthier lifestyle by visualising changes in their body shape over time, rather than focusing only on numerical values (e.g. an increase in BMI).

In conclusion, capturing 3D body shape from two-dimensional (2D) images, coupled with appropriate inference techniques to reconstruct a 3D model of the body, may be a viable alternative to clinical medical imaging, and could offer a readily accessible health metric for monitoring the efficacy of lifestyle interventions.

Methods

The Fenland study

DXA scans, paired anthropometry data and metabolic health variables used for our method come from the Fenland study. Details of the study have been described elsewhere41. Briefly, the Fenland study is a population-based cohort study established in 2005. It comprises mainly participants of white European descent, born between 1950 and 1975, and recruited from general practice lists in Cambridgeshire (Cambridge, Ely, and Wisbech) in the United Kingdom. A total of 12,435 people took part in Phase 1 of the study (2005–2015) and 7795 in Phase 2 of the study (2014–2020). Exclusion criteria for the Fenland study were pregnancy, diagnosed diabetes, inability to walk unaided, or psychosis. The study was approved by the Cambridge Local Research Ethics Committee and performed in accordance with the Declaration of Helsinki. All participants provided written informed consent to participate in the study.

In the current analyses, we excluded participants whose DXA scans had technical irregularities such as missing tissue or other scan artefacts. The analyses included 11,359 individuals from Phase 1 (5333 men and 6026 women) and 6102 individuals from Phase 2 (2979 men and 3123 women), of whom 5733 had valid data from both phases. Of the Fenland Phase 1 sample, 80% was used for the derivation and training of the 3D body shape composition models, and the remaining 20% was used to test the validity of those models. The Phase 2 sample was used as a test dataset to assess validity in the same population at an older age and to assess the sensitivity of the prediction models to within-individual changes over time.
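The 80/20 derivation/test split should be made at the participant level so that no individual contributes to both sets. The text does not specify how the split was drawn (for example, whether it was stratified by sex or BMI); the sketch below assumes a simple seeded random split, and the function name and seed are hypothetical.

```python
import random


def split_participants(ids, train_frac=0.8, seed=0):
    """Randomly split unique participant IDs into derivation and test sets."""
    unique = sorted(set(ids))      # de-duplicate and fix order before shuffling
    rng = random.Random(seed)      # seeded generator for a reproducible split
    rng.shuffle(unique)
    n_train = round(train_frac * len(unique))
    return unique[:n_train], unique[n_train:]


# 11,359 Phase 1 participants, represented here by integer IDs.
train_ids, test_ids = split_participants(range(11359))
print(len(train_ids), len(test_ids))
```

Fixing the seed makes the split reproducible, which matters when the same held-out 20% is reused to compare several regressors, as in the model comparison.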

Smartphone validation study

We also conducted a separate study that, in addition to DXA scans, included air plethysmography (BODPOD) and a smartphone app capturing front, back and two side-profile images. It was carried out between July and November 2023 in a sample of 119 healthy adults (39 men and 80 women) aged 18 to 65 years who were free from disease and not taking medication. Participants were recruited locally through advertisements, and approval was granted by the Cambridge Central Ethics Committee (REC 06.Q0108.84). Written informed consent was obtained before the participants' visit. This independent sample was used to test the validity of the models derived from the Fenland study and to evaluate the accuracy of the 3D shape obtained from smartphone images alone.

Anthropometry and body composition

In the Fenland study (both Phase 1 and Phase 2), weight was measured to the nearest 0.2 kg with a calibrated electronic scale (TANITA model BC-418 MA; Tanita, Tokyo, Japan). Height was measured to the nearest 0.1 cm with a wall-mounted stadiometer (SECA 240; Seca, Birmingham, United Kingdom). Body mass index (BMI; in kg/m2) was calculated as weight divided by height squared. Waist and hip circumferences were measured to the nearest 0.1 cm with a non-stretchable fibre-glass insertion tape (D loop tape; Chasmors Ltd, London, United Kingdom). Waist circumference was measured at the midpoint between the lowest rib margin and the iliac crest, and hip circumference at the widest level over the trochanters. All measurements were taken by trained field workers. Body composition was assessed by DXA, a whole-body, low-intensity X-ray scan that precisely quantifies fat mass in different body regions (models used: Lunar Prodigy Advanced fan beam DXA scanner, or an iDXA system; GE Healthcare, Hatfield, UK). Participants were scanned supine by trained operators using standard imaging and positioning protocols. All images were manually processed by one trained researcher, who corrected DXA demarcations according to a standardised procedure. In brief, the arm region included the arm and shoulder area (from the crease of the axilla and through the glenohumeral joint). The trunk region included the neck, chest, and abdominal and pelvic areas. The abdominal (android) region was defined as the area between the ribs and the pelvis and was enclosed by the trunk region. The leg region included the whole area below the lines forming the lower borders of the trunk. The gluteofemoral (gynoid) region included the hips and upper thighs and overlapped both the leg and trunk regions. Its upper demarcation was below the top of the iliac crest, at a distance of 1.5 times the abdominal height.
DXA CoreScan software (GE Healthcare, Hatfield, UK) was used to determine visceral abdominal fat mass within the abdominal (android) region. This software uses a proprietary in-built algorithm42, validated by the manufacturer against computed tomography and magnetic resonance imaging, to derive visceral abdominal fat mass within the android region. The algorithm first estimates the subcutaneous fat width and the anteroposterior thickness of the abdominal wall. These parameters, together with derived geometric constants, are used to extrapolate the amount of subcutaneous fat mass in the android region. Visceral abdominal fat mass is then calculated by subtracting the estimated subcutaneous abdominal fat mass from the total android fat mass. Abdominal subcutaneous fat (SCAT) mass is therefore android fat mass minus visceral fat mass. The other body composition variables used in these analyses are derived as follows. Appendicular lean mass (ALM) is the sum of the lean tissue mass in the arms and legs. ALM is scaled to height to derive the appendicular lean mass index (ALMI = ALM/height2)34. Peripheral fat mass is the sum of the fat tissue mass in the arms and legs.
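The derived variables defined above are simple arithmetic on DXA regional outputs. The sketch below collects them in one place; the class and field names are hypothetical (real DXA exports use vendor-specific field names), left and right limbs are assumed to be already summed, and height is taken in metres so that BMI and ALMI come out in kg/m2.

```python
from dataclasses import dataclass


@dataclass
class DxaRegions:
    """Hypothetical container for regional DXA outputs (limbs summed L + R)."""
    height_m: float
    weight_kg: float
    arm_lean_kg: float
    leg_lean_kg: float
    arm_fat_kg: float
    leg_fat_kg: float
    android_fat_kg: float
    vat_kg: float  # visceral fat mass estimated by CoreScan


def derived_metrics(d: DxaRegions) -> dict:
    alm = d.arm_lean_kg + d.leg_lean_kg            # appendicular lean mass
    return {
        "bmi": d.weight_kg / d.height_m ** 2,      # kg/m2
        "alm_kg": alm,
        "almi_kg_m2": alm / d.height_m ** 2,       # ALMI = ALM / height^2
        "peripheral_fat_kg": d.arm_fat_kg + d.leg_fat_kg,
        "scat_kg": d.android_fat_kg - d.vat_kg,    # SCAT = android fat - VAT
    }


# Hypothetical participant.
m = derived_metrics(DxaRegions(height_m=1.70, weight_kg=72.25,
                               arm_lean_kg=5.0, leg_lean_kg=16.0,
                               arm_fat_kg=2.5, leg_fat_kg=7.5,
                               android_fat_kg=2.0, vat_kg=0.8))
print(m)
```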

In the smartphone validation study, age, sex and ethnicity were self-reported. Trained staff acquired all clinical measures. Height and weight were measured using a column scale (Seca GmbH & Co. KG, Hamburg). Waist and hip circumferences were measured using a tape measure (CEFES-FIBRE by Hoechstmass, Germany). Body volume was assessed using air plethysmography (BODPOD ADP system, Cosmed Srl, Rome, Italy), for which participants wore fitted clothing without shoes and a swim cap. Total and regional body composition was measured using an iDXA scanner (GE Healthcare, Hatfield, UK). Four 2D photographs were captured with a smartphone camera (iPhone X, Apple Inc., iOS v15.6.1) using our purpose-built 3D Body Shape app, which constructs a 3D body mesh from phone images only. We adopted a standardised image capture procedure: participants wore form-fitting clothing without shoes and were asked to stand in an ‘A’ pose 2.5 m from the camera; the smartphone was held upright at chest level; and four photographs of the participant's front, back, left-side and right-side profiles were taken.

Model derivation

Firstly, our method fits a 3D body mesh to a DXA silhouette with paired anthropometric measurements (participant height, waist and hip circumferences). Then, the fitted mesh shape parameters (SMPL shape \({\boldsymbol{\beta }}\,\in \,{{\mathbb{R}}}^{10}\)) are used to predict body composition metrics. Our smartphone validation study generates 3D body meshes from RGB images by averaging avatars across multiple views according to the uncertainty of shape parameters in each view31.

The following describes our fitting pipeline, the body composition regressor, and smartphone avatar generation.

DXA images are single-view and orthographic43,44 and hence lack depth information. To fit a 3D mesh, we therefore augment DXA silhouettes with paired anthropometry measurements, namely height, waist and hip circumferences. Directly fitting a high-dimensional point cloud to a single silhouette is challenging, so we use the Skinned Multi-Person Linear model (SMPL)45, whose low-rank PCA shape representation provides a strong prior on human body shape. Predicting SMPL pose and shape accurately in a single step is also challenging; we therefore use a two-stage approach in which an optimiser refines an initial guess. SMPL45 is widely used for Human Pose and Shape (HPS) regression tasks20,31,46. Given pose and shape parameters \(\theta \in {{\mathbb{R}}}^{24\times 3},\beta \in {{\mathbb{R}}}^{10}\), SMPL returns a 3D mesh \(M\in {{\mathbb{R}}}^{6890\times 3}\) in a fully differentiable manner. Figure 5 shows the structure of the SMPL model.
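To make the low-rank PCA shape representation concrete, the sketch below applies only SMPL's linear shape blend shapes, \(T(\beta) = \bar{T} + \sum_k \beta_k S_k\), to a template. It is a simplified illustration rather than the full SMPL forward pass: pose blend shapes and linear blend skinning are omitted, and the template and shape directions are random placeholders standing in for the values shipped in the licensed SMPL model files.

```python
import numpy as np

N_VERTICES = 6890  # SMPL mesh vertices
N_BETAS = 10       # shape coefficients used in this work


def shaped_template(template: np.ndarray, shapedirs: np.ndarray, betas: np.ndarray) -> np.ndarray:
    """Linear shape blend shapes: T(beta) = T_bar + sum_k beta_k * S_k.

    template:  (6890, 3) mean template mesh T_bar
    shapedirs: (6890, 3, 10) principal shape directions S_k
    betas:     (10,) shape coefficients
    Pose blend shapes and linear blend skinning are deliberately omitted.
    """
    return template + shapedirs @ betas  # (6890, 3, 10) @ (10,) -> (6890, 3)


# Placeholder arrays; a real SMPL model file supplies template and shapedirs.
rng = np.random.default_rng(0)
template = rng.normal(size=(N_VERTICES, 3))
shapedirs = rng.normal(scale=0.01, size=(N_VERTICES, 3, N_BETAS))

mean_shape = shaped_template(template, shapedirs, np.zeros(N_BETAS))
varied_shape = shaped_template(template, shapedirs, np.eye(N_BETAS)[0] * 2.0)  # +2 along first PC
```

Because the shape term is linear in \(\beta\), gradients with respect to the shape coefficients are cheap, which is one reason this representation suits optimisation-based fitting.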

The SMPL model, which uses a low-rank PCA representation of the body shape space. The model takes 24 joint rotation parameters and 10 shape parameters as input and returns an expressive body mesh in a differentiable manner. The example avatar is taken from https://meshcapade.wiki/SMPL.

To reconstruct a 3D mesh from a DXA image, we first make an initial guess of pose and shape using an off-the-shelf method. Most existing HPS networks take RGB images as input and do not readily apply to DXA images47,48. Instead, we use proxy representations of DXA images, consisting of edge images and joint heatmaps46, to help bridge the domain gap between DXA and RGB images. Our initial pose and shape guess uses Hierarchical Kinematic Probability Distributions (HKPD) by Sengupta et al.20, which adopts this proxy representation and regresses probability distributions over SMPL pose and shape parameters. Regressing distributions also enables us to aggregate information across different views in the smartphone validation study.
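A joint heatmap proxy typically encodes each 2D joint as a Gaussian blob in its own channel. The sketch below shows one common way to build such heatmaps, assuming joint locations come from a separate 2D keypoint detector; the function name and the blob width `sigma` are illustrative choices rather than the settings used by HKPD, and the edge-image channel is not shown.

```python
import numpy as np


def joint_heatmaps(joints_2d: np.ndarray, height: int, width: int, sigma: float = 4.0) -> np.ndarray:
    """Render one Gaussian heatmap per 2D joint.

    joints_2d: (J, 2) array of (x, y) pixel coordinates
    returns:   (J, height, width) array with values in (0, 1], peaking at each joint
    """
    ys, xs = np.mgrid[0:height, 0:width]  # row (y) and column (x) index grids
    dx = xs[None, :, :] - joints_2d[:, 0, None, None]
    dy = ys[None, :, :] - joints_2d[:, 1, None, None]
    return np.exp(-(dx ** 2 + dy ** 2) / (2.0 * sigma ** 2))


heatmaps = joint_heatmaps(np.array([[10.0, 20.0], [5.0, 5.0]]), height=32, width=32)
```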

While the initial HKPD predictions provide an estimate of body pose and shape from a single coronal view of the DXA participant, they are not sufficiently accurate for downstream metric regression. There are two reasons. First, the coronal DXA silhouette provides little information about body shape in the depth dimension, which matters for body composition metrics such as visceral fat, which is correlated with the sagittal abdominal diameter (SAD)49. Second, HKPD is trained using synthetic body shapes sampled from Gaussian distributions and tends to predict body shapes biased towards the SMPL mean. We therefore construct an optimisation method to refine the initial guess, taking advantage of paired anthropometry measurements, DXA silhouettes and further losses derived from special properties of DXA scanning (e.g. participants lying flat on the scanbed). We detail the optimisation losses below.

Anthropometry loss. We ensure that the mesh agrees with the anthropometry measurements (waist circumference, hip circumference and height) by minimising the following loss:

where \(\hat{\cdot }\) denotes measurements from the optimised mesh and \([C_W, C_H, H]\) are the participant's waist circumference, hip circumference and height, respectively. Mesh height is measured as the distance between the extreme vertices of the T-pose mesh along the vertical axis.
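As a hedged illustration, assuming a squared-error form for the anthropometry loss, the sketch below compares \([\hat{C}_W, \hat{C}_H, \hat{H}]\) with the measured values and computes mesh height as the vertical extent of a T-pose mesh in SMPL's y-up convention. Both function names and the choice of metres as the common unit are hypothetical.

```python
import numpy as np


def mesh_height(vertices: np.ndarray, up_axis: int = 1) -> float:
    """Height of a T-pose mesh: distance between its extreme vertices along the vertical axis.

    SMPL uses a y-up convention, hence up_axis=1 by default.
    """
    return float(vertices[:, up_axis].max() - vertices[:, up_axis].min())


def anthropometry_loss(mesh_measures, measured) -> float:
    """Squared-error penalty between [C_W, C_H, H] from the mesh and from the participant.

    The squared L2 form (all values in metres) is an illustrative assumption.
    """
    diff = np.asarray(mesh_measures, dtype=float) - np.asarray(measured, dtype=float)
    return float(np.sum(diff ** 2))


verts = np.array([[0.0, -0.85, 0.0], [0.1, 0.90, 0.05], [-0.1, 0.2, 0.0]])
h = mesh_height(verts)  # approximately 1.75
loss = anthropometry_loss([0.90, 1.00, h], [0.85, 1.02, 1.75])
```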

To inject waist and hip information into the mesh whilst maintaining differentiability, we estimate circumferences with a local ellipse approximation: we select a ring of key points around the waist (or hip) and fit an ellipse to them using least squares. The selected key points are first projected onto the horizontal plane, because SMPL vertices in such a ring usually do not share the same height and their relative heights vary across body shapes.
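The ellipse-based circumference can be sketched as follows. This version makes two simplifying assumptions that the text does not specify: the ellipse is axis-aligned in the horizontal plane (fitted as a linear least-squares conic), and its perimeter is computed with Ramanujan's approximation. In a differentiable pipeline the same operations could be written with `torch.linalg.lstsq`; NumPy is used here to keep the sketch self-contained.

```python
import numpy as np


def ring_circumference(ring_points: np.ndarray, up_axis: int = 1) -> float:
    """Estimate a circumference from a ring of 3D key points via a least-squares ellipse fit.

    ring_points: (N, 3) vertices selected around the waist or hip (N >= 4).
    """
    horizontal = [a for a in range(3) if a != up_axis]
    pts = ring_points[:, horizontal]  # project onto the horizontal plane
    pts = pts - pts.mean(axis=0)      # centre so the origin lies inside the ring
    x, z = pts[:, 0], pts[:, 1]
    # Axis-aligned conic A x^2 + C z^2 + D x + E z = 1, solved by linear least squares.
    design = np.stack([x ** 2, z ** 2, x, z], axis=1)
    (A, C, D, E), *_ = np.linalg.lstsq(design, np.ones_like(x), rcond=None)
    cx, cz = -D / (2 * A), -E / (2 * C)  # ellipse centre
    g = 1 + A * cx ** 2 + C * cz ** 2    # A (x-cx)^2 + C (z-cz)^2 = g
    a, b = np.sqrt(g / A), np.sqrt(g / C)  # semi-axes
    # Ramanujan's approximation to the ellipse perimeter.
    return float(np.pi * (3 * (a + b) - np.sqrt((3 * a + b) * (a + 3 * b))))


theta = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
ring = np.stack([0.5 + 0.2 * np.cos(theta),           # x
                 1.0 + 0.01 * np.sin(3 * theta),      # y (vertical); heights vary slightly
                 -0.3 + 0.1 * np.sin(theta)], axis=1)  # z
waist = ring_circumference(ring)
```

Dropping the vertical coordinate before fitting is what makes the small height differences within the ring irrelevant, mirroring the projection step described in the text.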

DXA silhouette loss. Our optimiser also fits to the silhouette of the scan by differentiably rendering the silhouette of the optimised mesh onto the image. We also impose a joint regulariser to prevent the optimised mesh from straying too far from the initial guess. This forms the second part of the loss function,

where \({\mathcal{R}}(\cdot )\) is a PyTorch3D50 differentiable silhouette renderer, \(c = [s, t_x, t_y]\) are weak-perspective camera parameters consisting of scale and translation, and \(S_{\mathrm{gt}}\) is the ground-truth silhouette obtained by thresholding the DXA image. \(J_{2D}\) are 2D joint locations obtained by,

where Π(⋅) is the orthographic projection and \({\mathcal{J}}\) is a linear vertex-to-joint regressor.
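The projection and the two terms described above can be sketched in NumPy. The weak-perspective form \(J_{2D} = s\,\Pi(\mathcal{J}M) + [t_x, t_y]\), the mean-squared silhouette penalty and the squared-distance joint regulariser are assumptions for illustration. In practice the rendered silhouette would come from a differentiable renderer such as PyTorch3D, and the threshold level used for \(S_{\mathrm{gt}}\) here is hypothetical.

```python
import numpy as np


def project_joints(vertices, joint_regressor, cam):
    """Weak-perspective 2D joints: J_2D = s * Pi(J M) + [tx, ty].

    vertices:        (V, 3) mesh vertices M
    joint_regressor: (K, V) linear vertex-to-joint regressor
    cam:             (s, tx, ty) scale and translation
    """
    s, tx, ty = cam
    joints_3d = joint_regressor @ vertices            # (K, 3)
    return s * joints_3d[:, :2] + np.array([tx, ty])  # orthographic: drop depth (z)


def threshold_silhouette(dxa_image, level):
    """Binary ground-truth silhouette from a greyscale DXA image (threshold is hypothetical)."""
    return (dxa_image > level).astype(float)


def silhouette_loss(rendered, target):
    """Mean squared difference between a soft rendered silhouette and the binary target."""
    return float(np.mean((rendered - target) ** 2))


def joint_regulariser(joints_2d, joints_2d_init):
    """Mean squared distance between current 2D joints and those of the initial guess."""
    return float(np.mean(np.sum((joints_2d - joints_2d_init) ** 2, axis=1)))


verts = np.array([[0.0, 0.0, 0.0], [1.0, 2.0, 3.0]])
regressor = np.array([[0.5, 0.5]])  # one joint at the midpoint of the two vertices
j2d = project_joints(verts, regressor, cam=(2.0, 0.1, -0.1))  # [[1.1, 1.9]]
```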

Scanbed alignment loss. Because DXA images are orthographic, the pose of the DXA participant can be ambiguous from a single silhouette. As a result, initial guesses from HKPD often produce meshes with forward-leaning torsos or legs that are not fully extended. Since DXA participants lie flat on the scanbed, we impose an additional ‘scanbed alignment constraint’. In our implementation, we also impose a pose regulariser on the arms and a deviation-from-z loss to regularise arm poses.

where \(\theta^{(1)}\) is the subset of SMPL pose parameters containing spine and leg joints and \(\theta^{(2)}\) is the subset containing arm and wrist joints.
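The constraint is described only qualitatively, so the following is a hypothetical interpretation rather than the authors' formulation: spine and leg rotations \(\theta^{(1)}\) are pulled towards zero (a straight torso with extended legs in SMPL's rest pose), arm rotations \(\theta^{(2)}\) receive a small prior, and the deviation-from-z term penalises the spread of joint depths about a common plane. The weights `w_arm` and `w_z` are arbitrary.

```python
import numpy as np


def scanbed_alignment_loss(theta_spine_legs, theta_arms, joints_3d, w_arm=0.1, w_z=1.0):
    """Hypothetical scanbed alignment regulariser for a participant lying flat.

    theta_spine_legs: (K1, 3) axis-angle rotations of spine and leg joints, theta^(1)
    theta_arms:       (K2, 3) axis-angle rotations of arm and wrist joints, theta^(2)
    joints_3d:        (K, 3) 3D joints; column 2 is depth (z) perpendicular to the scanbed
    """
    # Zero relative rotations keep the torso straight and the legs fully extended.
    straight = np.sum(theta_spine_legs ** 2)
    # Small-rotation prior on the arms.
    arm_prior = w_arm * np.sum(theta_arms ** 2)
    # Deviation from z: spread of joint depths around their common plane.
    depth = joints_3d[:, 2]
    flat = w_z * np.sum((depth - depth.mean()) ** 2)
    return float(straight + arm_prior + flat)


loss = scanbed_alignment_loss(np.ones((2, 3)), np.ones((1, 3)),
                              np.array([[0.0, 0.0, 0.0], [0.0, 1.0, 2.0]]))  # 6 + 0.3 + 2 = 8.3
```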

We optimise body pose, shape and camera parameters using the following total loss function,

where the weights \(\lambda_i\) are tuned manually to produce the best fits. Figure 6 shows our full mesh fitting pipeline. The optimised SMPL shape parameters \(\hat{{\boldsymbol{\beta }}}\in {{\mathbb{R}}}^{10}\) are used in the next phase, which regresses body composition metrics.
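The two-stage idea, a weighted sum of losses minimised starting from an initial guess, can be illustrated with a toy linear problem. Here a hypothetical matrix `J` maps shape coefficients to measurements, the data term stands in for the fitting losses, and a regulariser keeps the solution near the initial guess. The \(\lambda\) values and step size are arbitrary, and the real pipeline would instead back-propagate through SMPL and the renderer.

```python
import numpy as np


def refine(beta_init, J, m0, target, lam_data=1.0, lam_reg=0.1, lr=0.05, steps=500):
    """Gradient descent on lam_data*||m0 + J beta - target||^2 + lam_reg*||beta - beta_init||^2.

    A linear stand-in for the differentiable SMPL pipeline: J maps shape coefficients
    to measurements. Both J and the lam_* weights are hypothetical.
    """
    beta = beta_init.astype(float).copy()
    for _ in range(steps):
        residual = m0 + J @ beta - target
        grad = 2.0 * lam_data * (J.T @ residual) + 2.0 * lam_reg * (beta - beta_init)
        beta -= lr * grad
    return beta


J = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
m0 = np.zeros(3)
target = np.array([1.0, 2.0, 2.5])
beta_init = np.zeros(2)
beta_hat = refine(beta_init, J, m0, target)
```

For this quadratic toy problem the minimiser also has a closed form, \((J^\top J + \tfrac{\lambda_{reg}}{\lambda_{data}} I)^{-1} J^\top (m - m_0)\) when \(\beta_{init} = 0\), which is a convenient check on the iterative result.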

We construct a simple feed-forward neural network to regress body composition metrics from the 3D meshes. The inputs to the network are the 10 SMPL shape parameters obtained from DXA optimisation and the participant's height, weight, BMI and gender. The outputs are the estimated body composition metrics (e.g. total fat mass and total lean mass). We use residual connections in the first two layers, as they slightly improve lean mass predictions. The structure of our network is shown in Fig. 7.
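A minimal NumPy forward pass illustrating the input layout and the residual connections is sketched below. The layer width, number of outputs, ReLU activations, He initialisation and binary gender encoding are all assumptions; the sketch is not tuned to the roughly 16,000 parameters reported in the text, and training and dropout are omitted.

```python
import numpy as np


def make_inputs(betas, height_m, weight_kg, is_male):
    """14 input features: 10 SMPL shape parameters, height, weight, BMI and a binary gender flag."""
    bmi = weight_kg / height_m ** 2
    return np.concatenate([np.asarray(betas, dtype=float), [height_m, weight_kg, bmi, float(is_male)]])


def relu(v):
    return np.maximum(v, 0.0)


def he_init(rng, fan_in, fan_out):
    return rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))


class ResidualMLP:
    """Hypothetical forward pass: input projection, two residual layers, linear output head."""

    def __init__(self, n_in=14, width=64, n_out=8, seed=0):
        rng = np.random.default_rng(seed)
        self.W0, self.b0 = he_init(rng, n_in, width), np.zeros(width)
        self.W1, self.b1 = he_init(rng, width, width), np.zeros(width)
        self.W2, self.b2 = he_init(rng, width, width), np.zeros(width)
        self.Wo, self.bo = he_init(rng, width, n_out), np.zeros(n_out)

    def __call__(self, x):
        h = relu(x @ self.W0 + self.b0)
        h = h + relu(h @ self.W1 + self.b1)  # residual connection 1
        h = h + relu(h @ self.W2 + self.b2)  # residual connection 2
        return h @ self.Wo + self.bo         # one output per body composition metric


x = make_inputs(np.zeros(10), height_m=1.75, weight_kg=70.0, is_male=True)
model = ResidualMLP()
y = model(x)
```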

The network is trained using a mean squared error (MSE) loss between target and predicted mass values. We weight the per-metric loss terms using homoscedastic uncertainty51 and learn these metric-wise uncertainties during training,

where \(\hat{\cdot }\) denotes model predictions and \(\sigma_i\) are the metric-wise uncertainties.
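A common form of the homoscedastic-uncertainty weighting of Kendall et al.51 for regression is \(\sum_i \frac{1}{2\sigma_i^2}\mathrm{MSE}_i + \log\sigma_i\). The sketch below implements that form with one learnable log-variance \(s_i = \log\sigma_i^2\) per metric, a parameterisation often used for numerical stability; whether the authors used exactly this form is an assumption, and the function name is hypothetical.

```python
import numpy as np


def uncertainty_weighted_mse(pred, target, log_var):
    """Multi-task loss with one learned log-variance per metric (Kendall et al.-style).

    pred, target: (batch, M) predicted and reference masses
    log_var:      (M,) learnable s_i = log(sigma_i^2)
    Returns sum_i [ MSE_i / (2 sigma_i^2) + log sigma_i ].
    """
    mse = np.mean((pred - target) ** 2, axis=0)  # per-metric MSE
    return float(np.sum(0.5 * np.exp(-log_var) * mse + 0.5 * log_var))


pred = np.zeros((2, 2))
target = np.ones((2, 2))
loss_equal = uncertainty_weighted_mse(pred, target, np.zeros(2))  # 1.0
```

A large \(\sigma_i\) down-weights a noisy metric, while the \(\log\sigma_i\) term stops the network from inflating every uncertainty to zero out the loss.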

The network is deliberately small, with around 16,000 parameters, to limit overfitting. We train it using the Adam52 optimiser for 100 epochs with a learning rate of 0.01, and use dropout53 for regularisation. We train our model on Fenland phase 1 data using an 80/20 train–validation split, and test it on Fenland phase 2 and the smartphone validation study.

The smartphone validation study uses the HKPD20 method to generate SMPL avatars using multiview information from RGB images. Given a group of images \([I_1, I_2, \ldots]\) of the same participant, each photo is first processed using HKPD to generate a Gaussian distribution over SMPL shape parameters, \(p(\boldsymbol{\beta} \mid I_n)\). A final body shape is derived by combining shape information across multiple views according to,

where we have assumed conditional independence across views20,31.
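Under the conditional independence assumption, and further assuming that each view yields a diagonal Gaussian over \(\boldsymbol{\beta}\) and that the prior is flat, the fused distribution is the normalised product of the per-view Gaussians: precisions add, and the mean is the precision-weighted average of the per-view means. A minimal sketch of that product, with a hypothetical function name:

```python
import numpy as np


def fuse_gaussian_views(means, variances):
    """Combine per-view diagonal Gaussians p(beta | I_n) by multiplying them.

    means, variances: (N_views, 10) per-view means and variances of the SMPL shape parameters
    Returns the mean and variance of the renormalised product Gaussian.
    """
    precisions = 1.0 / variances
    fused_var = 1.0 / precisions.sum(axis=0)
    fused_mean = fused_var * (precisions * means).sum(axis=0)
    return fused_mean, fused_var


means = np.array([[0.0, 0.0], [2.0, 4.0]])
variances = np.array([[1.0, 1.0], [1.0, 3.0]])
fused_mean, fused_var = fuse_gaussian_views(means, variances)
```

Views with tighter (more certain) shape estimates dominate the result, which is the sense in which avatars are averaged according to per-view uncertainty.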

Statistical analysis

Statistical analyses were performed using STATA version 17 (StataCorp, College Station, Texas, USA) and Python. A P value less than 0.05 was considered statistically significant. Descriptive data were reported as mean ± standard deviation (SD) or n (%). Using the methods described above, we constructed models to predict total and regional body composition metrics. Model performance was evaluated using the Pearson correlation coefficient r and the root-mean-square error (RMSE) for each outcome. Pearson correlation coefficients were used to investigate associations between the predicted values of total and regional body composition and the corresponding DXA measurements, and scatter plots were used to visualise these associations. Bland–Altman analysis was used to assess agreement between the body composition predicted by our approach and the DXA reference measures. In the Bland–Altman plots, the y-axis shows the difference (bias) between predicted and measured values (e.g. from DXA), with limits of agreement (LoA) defined as the 95% range, mean bias ± 1.96 SD of the differences; the x-axis shows the value from the reference method (e.g. DXA) rather than the mean of the two methods. Mean differences (biases) between the two methods were calculated and tested against zero using paired t-tests. For all variables, change in body composition was defined as the difference between predictions from Fenland Phase 2 (follow-up assessment) and Fenland Phase 1 (baseline assessment). Scatter plots were used to compare changes in body composition from our predictions with DXA body composition changes, and Bland–Altman plots were used to assess the agreement between changes predicted by our method and those measured by DXA. RMSE was used to assess the accuracy of these comparisons.
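The agreement statistics described above reduce to a few array operations. The sketch below computes Pearson r, RMSE, the Bland–Altman bias and 95% limits of agreement, and the paired t statistic, using the reference values as the Bland–Altman x-axis as stated in the text. The function name is hypothetical, and a P value would in practice come from a library routine such as `scipy.stats.ttest_rel`. The same function can be applied to change scores (Phase 2 minus Phase 1).

```python
import numpy as np


def agreement_stats(pred, ref):
    """Pearson r, RMSE, Bland-Altman bias and 95% LoA, and a paired t statistic.

    Differences are pred - ref; the Bland-Altman x-axis is the reference value itself.
    """
    pred, ref = np.asarray(pred, dtype=float), np.asarray(ref, dtype=float)
    diff = pred - ref
    n = diff.size
    bias = diff.mean()
    sd = diff.std(ddof=1)  # sample SD of the differences
    return {
        "r": float(np.corrcoef(pred, ref)[0, 1]),
        "rmse": float(np.sqrt(np.mean(diff ** 2))),
        "bias": float(bias),
        "loa": (float(bias - 1.96 * sd), float(bias + 1.96 * sd)),
        "t": float(bias / (sd / np.sqrt(n))),  # paired t statistic, df = n - 1
        "x_axis": ref,
    }


stats = agreement_stats(pred=[11, 21, 29, 41], ref=[10, 20, 30, 40])
```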

Data availability

The datasets generated and analysed during the current study are available on request via the MRC Epidemiology website (http://www.mrc-epid.cam.ac.uk/research/data-sharing/).

References

Baumgartner, R. N., Heymsfield, S. B. & Roche, A. F. Human body composition and the epidemiology of chronic disease. Obes. Res. 3, 73–95 (1995).

Haarbo, J., Gotfredsen, A., Hassager, C. & Christiansen, C. Validation of body composition by dual energy X-ray absorptiometry (DEXA). Clin. Physiol. 11, 331–341 (1991).

Borga, M. et al. Advanced body composition assessment: from body mass index to body composition profiling. J. Investig. Med. 66, 1–9 (2018).

Tolonen, A. et al. Methodology, clinical applications, and future directions of body composition analysis using computed tomography (CT) images: a review. Eur. J. Radiol. 145, 109943 (2021).

Illes, J., Desmond, J. E., Huang, L. F., Raffin, T. A. & Atlas, S. W. Ethical and practical considerations in managing incidental findings in functional magnetic resonance imaging. Brain Cognit. 50, 358–365 (2002).

Fenton, J. J. & Deyo, R. A. Patient self-referral for radiologic screening tests: clinical and ethical concerns. J. Am. Board Fam. Pract. 16, 494–501 (2003).

Parente, E. B. et al. Waist-height ratio and waist are the best estimators of visceral fat in type 1 diabetes. Sci. Rep. 10, 18575 (2020).

Heymsfield, S. B., Stanley, A., Pietrobelli, A. & Heo, M. Simple skeletal muscle mass estimation formulas: what we can learn from them. Front. Endocrinol. 11, 31 (2020).

Prentice, A. M. & Jebb, S. A. Beyond body mass index. Obes. Rev. 2, 141–147 (2001).

Weber, D. R., Moore, R. H., Leonard, M. B. & Zemel, B. S. Fat and lean BMI reference curves in children and adolescents and their utility in identifying excess adiposity compared with BMI and percentage body fat. Am. J. Clin. Nutr. 98, 49–56 (2013).

Tinsley, G. M., Moore, M. L., Benavides, M. L., Dellinger, J. R. & Adamson, B. T. 3-dimensional optical scanning for body composition assessment: A 4-component model comparison of four commercially available scanners. Clin. Nutr. 39, 3160–3167 (2020).

Bennett, J. P. et al. Assessment of clinical measures of total and regional body composition from a commercial 3-dimensional optical body scanner. Clin. Nutr. 41, 211–218 (2022).

Bennett, J. P. et al. Three-dimensional optical body shape and features improve prediction of metabolic disease risk in a diverse sample of adults. Obesity 30, 1589–1598 (2022).

Ng, B. K. et al. Detailed 3-dimensional body shape features predict body composition, blood metabolites, and functional strength: the Shape Up! studies. Am. J. Clin. Nutr. 110, 1316–1326 (2019).

Tian, I. Y. et al. A device-agnostic shape model for automated body composition estimates from 3D optical scans. Med. Phys. 49, 6395–6409 (2022).

McCarthy, C. et al. Smartphone prediction of skeletal muscle mass: model development and validation in adults. Am. J. Clin. Nutr. 117, 794–801 (2023).

Leong, L. T. et al. Generative deep learning furthers the understanding of local distributions of fat and muscle on body shape and health using 3D surface scans. Commun. Med. 4, 13 (2024).

Kingma, D. P. & Welling, M. Auto-encoding variational Bayes. arXiv:1312.6114 (2022).

Kirillov, A. et al. Segment anything. In Proc. of the IEEE/CVF International Conference on Computer Vision, 4015–4026 (2023).

Sengupta, A., Budvytis, I. & Cipolla, R. Hierarchical kinematic probability distributions for 3D human shape and pose estimation from images in the wild. In Proc. of the IEEE/CVF International Conference on Computer Vision, 11219–11229 (2021).

Majmudar, M. D. et al. Smartphone camera based assessment of adiposity: a validation study. NPJ Digit. Med. 5, 79 (2022).

Alves, S. S. et al. Sex-based approach to estimate human body fat percentage from 2D camera images with deep learning and machine learning. Measurement 219, 113213 (2023).

Xie, B. et al. Accurate body composition measures from whole-body silhouettes. Med. Phys. 42, 4668–4677 (2015).

Sullivan, K. et al. Agreement between a 2-dimensional digital image-based 3-compartment body composition model and dual energy x-ray absorptiometry for the estimation of relative adiposity. J. Clin. Densitom. 25, 244–251 (2022).

Smith, B. et al. Anthropometric evaluation of a 3D scanning mobile application. Obesity 30, 1181–1188 (2022).

Graybeal, A. J., Brandner, C. F. & Tinsley, G. M. Evaluation of automated anthropometrics produced by smartphone-based machine learning: a comparison with traditional anthropometric assessments. Br. J. Nutr. 130, 1077–1087 (2023).

Klarqvist, M. D. et al. Silhouette images enable estimation of body fat distribution and associated cardiometabolic risk. NPJ Digit. Med. 5, 105 (2022).

Bycroft, C. et al. The UK Biobank resource with deep phenotyping and genomic data. Nature 562, 203–209 (2018).

Keller, M., Zuffi, S., Black, M. J. & Pujades, S. OSSO: Obtaining skeletal shape from outside. In Proc. of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 20492–20501 (2022).

Tian, I. Y. et al. Predicting 3D body shape and body composition from conventional 2D photography. Med. Phys. 47, 6232–6245 (2020).

Sengupta, A., Budvytis, I. & Cipolla, R. Probabilistic 3D human shape and pose estimation from multiple unconstrained images in the wild. In Proc. of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 16094–16104 (2021).

Santos, L. P. et al. Body shape and size in 6-year old children: assessment by three-dimensional photonic scanning. Int. J. Obes. 40, 1012–1017 (2016).

Wen, Z. et al. Handgrip strength and muscle quality: results from the National Health and Nutrition Examination Survey database. J. Clin. Med. 12, 3184 (2023).

Kitamura, A. et al. Sarcopenia: prevalence, associated factors, and the risk of mortality and disability in Japanese older adults. J. Cachexia Sarcopenia Muscle 12, 30–38 (2021).

Wong, M. C. et al. Monitoring body composition change for intervention studies with advancing 3D optical imaging technology in comparison to dual-energy X-ray absorptiometry. Am. J. Clin. Nutr. 117, 802–813 (2023).

Hill, J. O. et al. Racial differences in amounts of visceral adipose tissue in young adults: the CARDIA (Coronary Artery Risk Development in Young Adults) study. Am. J. Clin. Nutr. 69, 381–387 (1999).

Perry, A. C. et al. Racial differences in visceral adipose tissue but not anthropometric markers of health-related variables. J. Appl. Physiol. 89, 636–643 (2000).

Kanaley, J., Giannopoulou, I., Tillapaugh-Fay, G., Nappi, J. & Ploutz-Snyder, L. Racial differences in subcutaneous and visceral fat distribution in postmenopausal black and white women. Metabolism 52, 186–191 (2003).

Conway, J. M., Yanovski, S. Z., Avila, N. A. & Hubbard, V. S. Visceral adipose tissue differences in black and white women. Am. J. Clin. Nutr. 61, 765–771 (1995).

Choudhary, S. et al. Development and validation of an accurate smartphone application for measuring waist-to-hip circumference ratio. NPJ Digit. Med. 6, 168 (2023).

Lindsay, T. et al. Descriptive epidemiology of physical activity energy expenditure in UK adults (the Fenland study). medRxiv 19003442 (2019).

Diet, anthropometry and physical activity (DAPA) measurement toolkit. www.measurement-toolkit.org (2024).

Reyneke, C. J. F. et al. Review of 2-D/3-D reconstruction using statistical shape and intensity models and X-ray image synthesis: toward a unified framework. IEEE Rev. Biomed. Eng. 12, 269–286 (2018).

Yu, W., Tannast, M. & Zheng, G. Non-rigid free-form 2D–3D registration using a B-spline-based statistical deformation model. Pattern Recognit. 63, 689–699 (2017).

Loper, M., Mahmood, N., Romero, J., Pons-Moll, G. & Black, M. J. SMPL: A skinned multi-person linear model. In Proc. Seminal Graphics Papers: Pushing the Boundaries, vol. 2, 851–866 (2023).

Sengupta, A., Budvytis, I. & Cipolla, R. Synthetic training for accurate 3D human pose and shape estimation in the wild. In Proc. British Machine Vision Conference (BMVC) (2020).

Kanazawa, A., Black, M. J., Jacobs, D. W. & Malik, J. End-to-end recovery of human shape and pose. In Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, 7122–7131 (2018).

Bogo, F. et al. Keep it SMPL: automatic estimation of 3d human pose and shape from a single image. In Proc. Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part V 14, 561–578 (Springer, 2016).

Kahn, H. S. et al. Population distribution of the sagittal abdominal diameter (SAD) from a representative sample of US adults: comparison of SAD, waist circumference and body mass index for identifying dysglycemia. PLoS ONE 9, e108707 (2014).

Ravi, N. et al. Accelerating 3D deep learning with PyTorch3D. arXiv:2007.08501 (2020).

Kendall, A., Gal, Y. & Cipolla, R. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, 7482–7491 (2018).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv:1412.6980 (2017).

Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I. & Salakhutdinov, R. Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 15, 1929–1958 (2014).

Acknowledgements

We thank all study volunteers who participated in the Fenland and validation studies as well as the research teams at MRC Epidemiology Unit and NIHR Cambridge Clinical Research Facility responsible for data collection and management. We also acknowledge support from the Medical Research Council (Unit programmes MC_UU_00006/1, MC_UU_00006/3, MC_UU_00006/4, MC_UU_00006/6). The Fenland Study was funded by the Medical Research Council and the Wellcome Trust. E.D.L.R., R.P., N.W. and S.B. are supported by the NIHR Biomedical Research Centre Cambridge [IS-BRC-1215-20014]. The NIHR Cambridge Biomedical Research Centre (BRC) is a partnership between Cambridge University Hospitals NHS Foundation Trust and the University of Cambridge, funded by the National Institute for Health Research (NIHR). The views expressed are those of the authors and not necessarily those of the NHS, the NIHR or the Department of Health and Social Care. L.P.E.W. is supported by the NIHR Cambridge Clinical Research Facility.

Author information

Contributions

C.Q. had full access to all of the data used in this analysis and takes responsibility for the integrity of the data and the accuracy of the data analysis. All authors have read and approved the manuscript. Concept and design: C.Q., E.D.L.R., E.M., S.B., R.C.; Acquisition, analysis, or interpretation of data: C.Q., E.D.L.R., E.M., A.S., R.P., L.P.E.W., S.B.H., J.A.S., N.W., S.B., R.C.; Drafting of the manuscript: C.Q., E.D.L.R., S.B., R.C.; Critical revision of the manuscript for important intellectual content: C.Q., E.D.L.R., E.M., A.S., R.P., L.P.E.W., S.B.H., J.A.S., N.W., S.B., R.C.; Statistical analysis: C.Q., E.D.L.R., R.P.; Obtained funding: S.B., N.W., L.P.E.W.; Administrative, technical, or material support: C.Q., E.D.L.R., R.P., L.P.E.W.; Supervision: S.B., R.C.

Ethics declarations

Competing interests

S.B.H. declares the following competing interests: Medifast Corporation, Novo Nordisk, Amgen, Abbott, Versanis, Novartis. The remaining authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Qiao, C., Rolfe, E.D.L., Mak, E. et al. Prediction of total and regional body composition from 3D body shape. npj Digit. Med. 7, 298 (2024). https://doi.org/10.1038/s41746-024-01289-0
