Abstract

Mental health apps that adopt a transdiagnostic approach to addressing depression and anxiety are emerging, yet a synthesis of their evidence base is missing. This meta-analysis evaluated the efficacy of transdiagnostic-focused apps for depression and anxiety and examined how they compare with disorder-specific apps. Nineteen randomized controlled trials (N = 5165) were included. Transdiagnostic-focused apps produced small post-intervention effects relative to controls on pooled outcomes related to depression, anxiety and distress (N = 23 comparisons; g = 0.29; 95% CI = 0.17, 0.40). Effects remained significant across various sensitivity analyses. CBT apps and apps that were compared with a waitlist produced larger effects. Significant effects were found at follow-up (g = 0.25; 95% CI = 0.10, 0.41). Effects were comparable to disorder-specific app estimates. Findings highlight the potential of transdiagnostic apps to provide accessible support for managing depression and anxiety. Their broad applicability underscores their public health relevance, especially when combined with in-person transdiagnostic therapies to create new hybrid care models.

Introduction

Depression and anxiety disorders are leading causes of disability worldwide, resulting in substantial economic, societal, and psychological burdens1,2. Despite the availability of evidence-based treatments3, rates of help-seeking remain low due to factors such as a shortage of trained mental health professionals, lengthy waitlists, low mental health literacy, perceived stigma, and high cost of treatment4,5.

Treatment programs delivered through technological means offer a promising solution to increase the availability and scalability of evidence-based psychological interventions. Technology-enabled interventions can overcome many barriers to traditional face-to-face care by offering greater accessibility, flexibility, cost-efficiency, and user autonomy6. These formats may be well suited to reaching populations that are less receptive to face-to-face help options, reducing wait times, and supporting early intervention efforts7. Previous meta-analyses show that various modes of digital interventions, including web-based programs8,9, smartphone apps10,11, virtual reality12,13, and chatbots14, delivered either as stand-alone options or as an adjunct to traditional care, outperform control conditions in reducing symptoms of depression or anxiety.

While much of the existing research on digital mental health interventions has focused on disorder-specific protocols, there is growing interest in transdiagnostic approaches as a means of further enhancing the reach and efficiency of digital care. These approaches integrate core components of established therapies into a unified protocol targeting the shared cognitive, behavioral, and emotional features that underlie multiple disorders15. This is particularly relevant for depression and anxiety, which often co-occur and exhibit overlapping symptoms, maintaining factors, and temperamental antecedents16,17. Unlike disorder-specific protocols, which focus on symptoms unique to a single disorder, transdiagnostic treatments target the common mechanisms underlying both depression and anxiety, offering increased efficiency, ease of implementation (reduced complexity), and the ability for patients to address multiple comorbid symptoms simultaneously18. Core components often include psychoeducation, cognitive restructuring, behavioral activation, emotion regulation, and mindfulness strategies, each of which is known to be effective across diagnostic categories19,20. This unified approach can accommodate individuals presenting with depression, anxiety, or both, and has been suggested to offer greater efficiency and scalability21.

A growing body of evidence supports the efficacy of transdiagnostic treatments, demonstrating their ability to reduce symptoms of depression and anxiety across diverse populations and protocols22,23,24. For example, a recent meta-analysis of 45 clinical trials of therapist-led transdiagnostic treatments delivered to participants with mixed depression and/or anxiety reported a pooled effect size of g = 0.54, which remained stable at 6-month follow-up25. An earlier meta-analysis of four randomized trials comparing transdiagnostic and disorder-specific protocols found preliminary evidence supporting the superiority of transdiagnostic approaches for reducing depressive symptoms (g = 0.58), but not anxiety symptoms (g = 0.15)18.

Given the promise of the transdiagnostic approach to address comorbid depression and anxiety, these interventions are increasingly being delivered through digital platforms to enhance scalability, accessibility, and user engagement26. Research integrating transdiagnostic protocols via digital means has predominantly focused on developing and testing web-based interventions, with a considerable number of randomized trials documenting their benefits27,28. An earlier meta-analysis by Newby et al.18 synthesized 17 randomized trials of web-based transdiagnostic interventions, reporting large pooled effect sizes (g = 0.84 for depression and g = 0.78 for anxiety) relative to controls. They also found preliminary evidence that transdiagnostic web programs may be superior to disorder-specific web programs in reducing symptoms of depression (g = 0.21).

Despite the encouraging evidence for web-based transdiagnostic interventions, there is a pressing need to examine the efficacy of smartphone app-based transdiagnostic programs as a distinct class of digital treatment. Unlike web-based programs, which are typically accessed through a desktop computer in longer, session-based formats29, apps are designed for brief, frequent, and context-sensitive interactions30. They allow users to engage with therapeutic content on the go and in real time, potentially enhancing ecological validity and providing momentary support during episodes of distress. Apps also leverage innovative capabilities of smartphones, including passive data collection, mood tracking, and tailored push notifications, to support personalization and timely feedback, which may influence user engagement and therapeutic outcomes differently than more static web-based tools7,31,32,33. These features represent a significant technological evolution since the last major review by Newby et al., which focused exclusively on web-based programs and did not quantify attrition rates from transdiagnostic programs. Moreover, the Newby et al. review is now nearly a decade old, and the digital mental health landscape has since shifted dramatically, with the proliferation of mental health apps in public app stores and increasing emphasis on scalability and low-intensity interventions34,35. Given that engagement with apps tends to be shorter, more fragmented, and more influenced by design features36, effect sizes from computer-based programs cannot be assumed to generalize to apps. A dedicated synthesis of transdiagnostic app trials is therefore needed to assess not only their clinical effectiveness but also their feasibility, adherence, and potential public health impact.
This review fills that gap by aggregating the growing number of randomized trials testing stand-alone transdiagnostic apps and examining their unique contributions and limitations compared to prior digital delivery formats.

Accordingly, the current meta-analysis aimed to examine the efficacy of transdiagnostic-focused apps for depression and anxiety. A secondary aim was to compare these effects with those recently reported for disorder-specific apps (g = 0.38 for depression and g = 0.20 for anxiety)10. Finally, we sought to explore whether key study features moderate intervention effects and to examine patterns of engagement and attrition in this delivery format.

Results

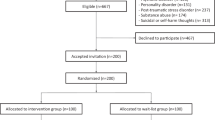

We identified 16 trials from Linardon et al.37 that met full inclusion criteria and located three additional trials in the updated search, bringing the total to 19 trials with 3033 participants randomized to the intervention groups and 2132 to the control groups. The flowchart describing the selection process is presented in Fig. 1.

Study characteristics

Table 1 presents the characteristics of the 19 included trials. All 19 trials used validated self-report scales to assess participant eligibility: 11 trials used cut-off scores on the Generalized Anxiety Disorder 7-item scale (GAD-7) and/or the Patient Health Questionnaire (PHQ); two trials used a cut-off on the Hospital Anxiety and Depression Scale (HADS) total score or its subscales; and one trial each used a cut-off score on the Beck Depression Inventory-II (BDI-II) and/or the State-Trait Anxiety Inventory (STAI); the General Health Questionnaire (GHQ); the Kessler Psychological Distress Scale (K-10), alone or in combination with the PHQ-9; and the Edinburgh Postnatal Depression Scale (EPDS) and/or the GAD-7.

There were 22 app conditions; 12 were coded as CBT, five offered human guidance, 12 offered in-app feedback, and 13 contained mood monitoring features. In terms of therapeutic components, five app conditions included behavioral activation, eight included goal setting, 11 included cognitive restructuring, 16 included mindfulness meditation, two included cognitive defusion, seven included coping skills, two included exposure tasks, one included valued activity checks, six included social connection/peer support, four included problem solving, two included behavior modification, three included emotion regulation skills, five included relaxation training, one included motivation enhancement, and one included acceptance-based exercises. There were 20 control conditions: waitlists in 11, placebo apps in seven, and usual care in two. The length of the intervention period ranged from three to 12 weeks. Ten trials reported outcomes at follow-up, ranging from six to 24 weeks. For depression outcomes, 12 trials used some version of the PHQ, two used the HADS, two used the DASS, one used the BDI-II, and one used the EPDS. For anxiety, 12 used the GAD-7, two used the HADS, two used the DASS, and one used the STAI. Two trials met four of the risk of bias criteria, six met three, seven met two, three met one, and one trial did not meet any of the criteria (see Supplementary Table 2 for risk of bias ratings for each study).

Main analyses

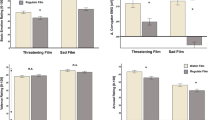

Table 2 presents the results of the meta-analyses comparing transdiagnostic-focused apps to control groups at post-test. When pooling all effect sizes indicating depression, anxiety and distress in one analysis, the overall effect size for the 23 comparisons was a statistically significant g = 0.29 (95% CI = 0.17, 0.40), corresponding to an NNT of 11.4, with high heterogeneity (I² = 60%) and a broad prediction interval (−0.14, 0.72). See Fig. 2 for a forest plot of the main effect (see also Supplementary Fig. 1). This effect remained robust and similar in magnitude across all sensitivity analyses, including when limiting the analyses to one effect (both smallest and largest) per study, to lower risk of bias trials, and to studies that were not considered outliers, and when applying the trim-and-fill procedure.
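As a rough illustration of how statistics of this kind (g, 95% CI, I², prediction interval) can be obtained, the following Python sketch computes Hedges' g from post-test means and SDs and pools effects with a DerSimonian-Laird random-effects model. The estimator, the function names, and the trial data are assumptions for illustration only; this section does not state which estimator or software the review used. The prediction interval here uses a normal quantile rather than the t quantile with k − 2 degrees of freedom proposed by Higgins et al., so it is somewhat narrower when k is small.

```python
import math
from statistics import NormalDist


def hedges_g(m_app, sd_app, n_app, m_ctl, sd_ctl, n_ctl):
    """Hedges' g for a post-test symptom score where higher = worse.

    Positive g means the app group ended with lower (better) scores than controls.
    Returns (g, sampling variance of g).
    """
    df = n_app + n_ctl - 2
    sd_pooled = math.sqrt(((n_app - 1) * sd_app**2 + (n_ctl - 1) * sd_ctl**2) / df)
    d = (m_ctl - m_app) / sd_pooled
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor
    var_d = (n_app + n_ctl) / (n_app * n_ctl) + d**2 / (2 * (n_app + n_ctl))
    return j * d, j**2 * var_d


def random_effects(effects, variances, level=0.95):
    """DerSimonian-Laird random-effects pooling with I^2 and an approximate PI."""
    k = len(effects)
    w = [1 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0  # between-study variance
    w_re = [1 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    i2 = 100 * max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0
    # Normal-quantile approximation of the prediction interval (see lead-in).
    pi_half = z * math.sqrt(tau2 + se**2)
    return {
        "g": pooled,
        "ci": (pooled - z * se, pooled + z * se),
        "tau2": tau2,
        "i2": i2,
        "pi": (pooled - pi_half, pooled + pi_half),
    }


# Hypothetical post-test data: (mean, SD, n) for app then control; not from the review.
trials = [
    (8.1, 4.9, 120, 9.6, 5.2, 118),
    (10.4, 5.5, 60, 11.2, 5.1, 62),
    (12.0, 6.0, 45, 14.5, 6.3, 44),
]
gs, vs = zip(*(hedges_g(*t) for t in trials))
result = random_effects(list(gs), list(vs))
```

DerSimonian-Laird is used here because it is simple to write out; restricted maximum likelihood or other tau² estimators can give slightly different intervals.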

Table 2 also presents the results of the meta-analysis for the three specific outcomes. The pooled effect size for depression at post-test across 21 comparisons was a statistically significant g = 0.27 (95% CI = 0.14, 0.40), corresponding to an NNT of 11.9, with high heterogeneity (I² = 68%) and a PI of −0.23 to 0.78. For anxiety, the pooled effect size across 20 comparisons was a statistically significant g = 0.30 (95% CI = 0.17, 0.42), corresponding to an NNT of 10.6, with high heterogeneity (I² = 65%) and a PI of −0.16 to 0.76. For distress, only six comparisons were available, but a significant effect size of g = 0.29 (95% CI = 0.09, 0.50) was observed (NNT = 11.4), with no heterogeneity and a PI of 0.08 to 0.49. Effects for these outcomes remained significant and highly similar in magnitude across all sensitivity analyses.
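The NNTs above are derived from g. One widely used conversion (Furukawa and Leucht) needs an assumed control event rate (CER), the proportion of control participants who respond. The sketch below implements that conversion under a hypothetical CER of 20%; this section does not state which method or CER produced the reported NNTs, and NNTs are sensitive to the CER, so the output is illustrative rather than a reproduction of Table 2.

```python
from statistics import NormalDist


def nnt_from_g(g, cer):
    """Convert a standardized mean difference to an NNT (Furukawa & Leucht method).

    cer is the assumed control event rate; NNT = 1 / (Phi(g + Phi^-1(cer)) - cer).
    """
    if not 0 < cer < 1:
        raise ValueError("cer must lie strictly between 0 and 1")
    if g <= 0:
        raise ValueError("g must be positive for a benefit NNT")
    nd = NormalDist()
    eer = nd.cdf(g + nd.inv_cdf(cer))  # implied response rate in the app group
    return 1 / (eer - cer)


ASSUMED_CER = 0.20  # hypothetical value; not stated in this section
for g in (0.27, 0.29, 0.30):
    print(f"g = {g:.2f} -> NNT = {nnt_from_g(g, ASSUMED_CER):.1f}")
```

NNTs are conventionally rounded up to the next whole number when interpreted, since a fractional person cannot be treated.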

Subgroup analyses were performed for the pooled outcomes and are reported in Table 3. Waitlist controls produced significantly larger effect sizes than other control groups (placebo apps or usual care). CBT-based apps produced significantly larger effect sizes than non-CBT apps. Age group, human guidance, in-app feedback, and mood monitoring features were not significantly associated with effect sizes.
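Subgroup contrasts such as waitlist versus other controls compare pooled estimates across two groups. A minimal sketch, assuming a mixed-effects approach in which each subgroup is pooled separately (for example with a random-effects model) and the two estimates are compared with a Q-between test on 1 degree of freedom; the function name and inputs are hypothetical, and the review's exact procedure is not described in this section.

```python
import math


def subgroup_difference(est_a, se_a, est_b, se_b):
    """Q-between test comparing two subgroup estimates (1 degree of freedom).

    est_* are subgroup pooled effects and se_* their standard errors.
    Returns (Q_between, p-value).
    """
    w_a, w_b = 1 / se_a**2, 1 / se_b**2
    overall = (w_a * est_a + w_b * est_b) / (w_a + w_b)
    q_between = w_a * (est_a - overall) ** 2 + w_b * (est_b - overall) ** 2
    # Chi-square(1) survival function: P(X > q) = erfc(sqrt(q / 2)).
    p_value = math.erfc(math.sqrt(q_between / 2))
    return q_between, p_value


# Hypothetical subgroup estimates (not Table 3 values): waitlist vs. other controls.
q, p = subgroup_difference(0.40, 0.07, 0.18, 0.06)
```

With two subgroups, Q_between reduces to (est_a − est_b)² / (se_a² + se_b²), so the test is equivalent to a z-test on the difference.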

Ten trials reported outcomes at follow-up. The results for these analyses are presented in Table 4. The overall effect sizes were statistically significant for the combined outcomes (Ncomparisons = 11; g = 0.25, 95% CI = 0.10, 0.41; NNT = 12.9; I² = 44%; PI = −0.14, 0.65; see Supplementary Fig. 2), and for depression (Ncomparisons = 10; g = 0.21, 95% CI = 0.04, 0.37; NNT = 15.6; I² = 51%; PI = −0.22, 0.64) and anxiety (Ncomparisons = 10; g = 0.25, 95% CI = 0.10, 0.40; NNT = 12.9; I² = 40%; PI = −0.11, 0.61) specifically. Supplementary Fig. 3 presents a funnel plot. There were not enough trials available to calculate effects at follow-up for distress. All effects remained significant in sensitivity analyses (one effect per study, lower risk of bias trials, outliers removed, and trim-and-fill procedure), except when the trim-and-fill procedure was applied to anxiety at follow-up (g = 0.08, 95% CI = −0.07, 0.24).

Attrition rates

The mean post-test attrition rate for 22 app conditions was 29.2% (95% CI = 21.8, 37.9), with high heterogeneity (I2 = 94%). The mean attrition rate at follow-up periods of ≤4 weeks from 11 conditions was 34.1% (95% CI = 24.6, 45.1), and for follow-up periods >4 weeks in the 11 other conditions was 26.2% (95% CI = 15.2, 41.4). The mean attrition rate for CBT apps was 39.2% (95% CI = 28.9, 50.4), and for apps with mood monitoring and feedback features it was 36.2% (95% CI = 24.7, 49.6) and 36.6% (95% CI = 28.3, 45.9), respectively. For guided apps the attrition rate was 14.9% (95% CI = 1.0, 75.0) and for unguided apps it was 34.0% (95% CI = 25.5, 43.7).
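Attrition rates are typically pooled as proportions. One common approach, assumed here because this section does not describe the method, is to logit-transform each condition's dropout rate, pool with a DerSimonian-Laird random-effects model, and back-transform; this produces asymmetric confidence intervals of the kind reported above. The 0.5 continuity correction for 0% or 100% cells and all example data are also assumptions.

```python
import math
from statistics import NormalDist


def _logit(p):
    return math.log(p / (1 - p))


def _expit(x):
    return 1 / (1 + math.exp(-x))


def pooled_proportion(events, totals, level=0.95):
    """Random-effects (DerSimonian-Laird) pooled proportion on the logit scale.

    Returns (pooled proportion, CI lower, CI upper, I^2 in percent).
    """
    ys, vs = [], []
    for e, n in zip(events, totals):
        if e == 0 or e == n:  # add 0.5 to events and non-events for 0% / 100% cells
            e, n = e + 0.5, n + 1
        ys.append(_logit(e / n))
        vs.append(1 / e + 1 / (n - e))  # approximate variance of a logit proportion
    k = len(ys)
    w = [1 / v for v in vs]
    fixed = sum(wi * yi for wi, yi in zip(w, ys)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, ys))
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    w_re = [1 / (v + tau2) for v in vs]
    mu = sum(wi * yi for wi, yi in zip(w_re, ys)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    i2 = 100 * max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0
    return _expit(mu), _expit(mu - z * se), _expit(mu + z * se), i2


# Hypothetical dropouts / randomized n for four app conditions (not the review's data).
rate, lower, upper, i2 = pooled_proportion([12, 40, 5, 30], [60, 100, 50, 75])
```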

Engagement

Findings regarding app engagement were synthesized qualitatively due to variability in reporting. All but two trials reported patterns of user engagement with the app. Most trials (k = 11) reported the percentage of participants who did not access, download, or use their prescribed app, with non-initiation rates ranging from 1% to 79%. Seven studies reported metrics related to login frequency or days of use, six described rates of activity completion (e.g., percentage completing core features or average number of activities per week), and three provided data on time spent using the app (e.g., average minutes per week or session duration). While these findings offer valuable insight into user behavior, a synthesis is limited by substantial heterogeneity in reporting formats, assessment timeframes, and varied operationalizations of engagement across trials (see Table 1 for further details).

Discussion

We conducted a meta-analysis to examine the efficacy of transdiagnostic-focused apps for depression and anxiety. We located 19 eligible trials with a total of 5165 participants. Transdiagnostic-focused apps had small effects on symptoms of depression, anxiety and general distress at post-test (gs ranged from 0.27 to 0.30), with effect sizes remaining stable across sensitivity analyses that adjusted for outliers, small-study bias, and trial quality. Effect sizes were also significant at the last reported follow-up (gs ranged from 0.21 to 0.25), though the effect on anxiety became non-significant when the trim-and-fill procedure was applied. The weighted mean attrition rate was 29.2%, and engagement varied considerably, both in user patterns and in how engagement was defined. Overall, the current findings suggest that stand-alone apps that adopt a transdiagnostic focus may lead to small improvements in symptoms of depression and anxiety, although attrition and engagement remain concerns.

While the effect sizes observed in this meta-analysis are small, they may still be of clinical relevance. Recent work by Cuijpers et al.38 has proposed a tentative cut-off of g = 0.24 as a threshold for the minimally important difference in psychological treatment trials. The post-test effects reported here (g = 0.27–0.30) exceed this threshold, and the follow-up effects (g = 0.21–0.25) lie close to it, suggesting that they may reflect meaningful change. Moreover, the estimated number needed to treat (NNT ≈ 12) indicates that for every 12 individuals who use a transdiagnostic app, one is expected to achieve symptomatic improvement attributable to the intervention who would not have improved in a control condition. This is a promising result when considering the potential scalability, accessibility, and low cost of app-based delivery. Although these effects are smaller than those typically observed in therapist-led, face-to-face transdiagnostic interventions25, they may still translate into important public health gains given the widespread barriers to accessing traditional care.

The current findings are consistent with the broader literature highlighting the benefits of digital health tools for these symptoms. For example, a recent meta-analysis found that disorder-specific, self-guided mental health apps produce small-to-moderate effects on depression (g = 0.38; 95% CI = 0.28, 0.48) and anxiety (g = 0.20; 95% CI = 0.13, 0.28)10, suggesting that transdiagnostic tools may offer comparable benefits with broader applicability. However, effects of transdiagnostic treatments on these symptoms appear to be larger when delivered via guided computerized web programs, according to the estimates reported in a recent meta-analysis of these interventions (g = 0.52 and 0.45 for depression and anxiety, respectively)26. These differences underscore a key finding from our review: effect sizes appear smaller for unguided, app-based interventions, which is consistent with evidence suggesting that therapist support nearly doubles the efficacy of digital interventions39. This highlights the importance of distinguishing between delivery formats (app vs. web-based) and guidance levels (guided vs. unguided) when evaluating intervention outcomes. Our review builds upon prior syntheses by focusing specifically on stand-alone transdiagnostic apps, a rapidly growing but previously unaggregated area of research, thereby offering new insights into their effectiveness and feasibility relative to other digital approaches.

We also identified some factors that moderate efficacy estimates. Effects of transdiagnostic apps were larger in trials that used a waitlist than in trials using other control conditions. This pattern has been repeatedly observed in meta-analyses of digital40 and in-person41 psychological treatments. It is also consistent with the idea that a portion of the benefits of mental health apps may stem from a digital placebo effect42, whereby participants’ expectations about the app partially drive improvements. Alternatively, the stronger effects observed in trials using waitlist controls likely reflect, at least in part, methodological artifacts rather than true differences in intervention efficacy. Waitlist conditions offer no therapeutic content, minimal participant engagement, and often fail to control for expectancy or nonspecific effects. Thus, comparisons to waitlists may artificially inflate effect sizes43. The frequent use of waitlist controls in digital health trials, often chosen for feasibility or convenience, may therefore overestimate app efficacy. Future trials should prioritize active control groups that better match intervention conditions in terms of attention, engagement, and user expectations to yield more accurate estimates of treatment effects.

We also showed that effects are larger when transdiagnostic apps are based on CBT compared to other approaches. Apart from mindfulness meditation (16 app conditions), the most frequently included components were cognitive restructuring (11) and goal setting (8), which, along with behavioral activation (5), are foundational in CBT programs20. Given that apps grounded in CBT showed stronger effects, it is plausible that these cognitive and behavioral components are key therapeutic drivers. They are generally well suited to digital delivery, offering users structured tools to modify thought patterns and engage in mood-enhancing activities. However, this apparent advantage may, in part, reflect the historical dominance of CBT in transdiagnostic intervention development15 rather than the inherent superiority of CBT itself. Other structured and theory-driven modalities, such as schema therapy, may also lend themselves well to transdiagnostic applications but have received comparatively less attention. It is also possible that the effectiveness of CBT-based transdiagnostic apps partly reflects a better alignment with users who resonate with CBT principles, rather than universally superior mechanisms of change.

Interestingly, we found no evidence that therapist guidance moderates efficacy estimates. This finding, however, likely reflects the fact that only four relatively small trials offered therapist guidance, limiting statistical power. Nonetheless, the role of therapist support in transdiagnostic app delivery warrants careful consideration. Given that transdiagnostic interventions are designed to be broadly applicable, scalable, and flexible across a range of symptoms25, reliance on therapist input may seem counterintuitive. Yet some transdiagnostic components, such as exposure exercises, may require a higher level of guidance to be implemented effectively. Future app designs could seek to bridge this gap through automated coaching, interactive modules, and tailored AI-powered feedback systems that simulate some aspects of human support44. Exploring these alternatives could help optimize outcomes while preserving the scalability and low-intensity advantages of app-based transdiagnostic care.

These efficacy results have implications for mental health app development and research. Given the high and ongoing costs of supporting apps45, transdiagnostic apps offer a clear advantage: by reducing the need for multiple disorder-specific apps, they can lower maintenance costs. With thousands of mental health apps already available46, prioritizing the development and testing of scalable transdiagnostic tools could improve clinical translation for broader, comorbid populations. Importantly, these apps also have potential value as adjuncts to clinician-led telehealth therapies, particularly those with transdiagnostic frameworks such as the Unified Protocol, Acceptance and Commitment Therapy, or Mindfulness-Based CBT. In hybrid or blended models, transdiagnostic apps can extend therapeutic content beyond sessions, support home practice and self-monitoring, deliver just-in-time interventions, and provide personalized, low-intensity support, all of which may help reinforce treatment gains while reducing the need for frequent therapist input31. These features could improve treatment efficiency, expand access to care, and enhance scalability by enabling clinicians to support more patients with fewer resources.

Real-world implementations provide further support for this vision. One such example is the Tripartite Hybrid Digital Clinic Model, which integrates the mindLAMP app with weekly clinician-led transdiagnostic therapy and Digital Navigator support47. The model blends active and passive sensing, personalized data visualizations, and adaptive content to enhance engagement and guide treatment delivery, with recent data supporting the efficacy of this approach48. Transdiagnostic apps that combine active intervention content with passive sensing capabilities may be particularly well suited to realizing this vision of scalable and effective implementation, as they can dynamically adapt to users’ evolving needs. Beyond mindLAMP, the transdiagnostic-focused Feel DTx app49 leverages this dual functionality and may be another option for patients and clinicians seeking personalized, technology-enabled mental health support. Rather than allocating resources toward developing new apps, investing in the evaluation, refinement, and broader implementation of existing transdiagnostic platforms may be a more efficient and impactful strategy to meet the needs of diverse and comorbid populations.

This meta-analysis has important limitations to consider. First, we could not provide estimates of other clinically relevant outcomes such as recovery, remission or deterioration because very few trials reported these outcomes. These absolute outcomes are easier to interpret from a clinician and patient perspective and are important for clinical decision making50. Future trials of mental health apps should report these outcomes so that subsequent meta-analyses can pool them to estimate absolute efficacy. Second, none of the trials reported outcomes for those with specific symptom profiles (i.e., depression only, anxiety only, and depression and anxiety concurrently) at baseline. Therefore, it remains unclear whether transdiagnostic apps are more effective for specific clinical presentations. Third, results from the subgroup analyses must be interpreted with caution given the limited number of trials in many subgroups and the potential for unmeasured variables to influence outcomes. Fourth, there was considerable risk of bias across the eligible trials, which must be considered when interpreting the current findings, though effects remained robust when excluding high-risk-of-bias trials.

In conclusion, the present meta-analysis of 19 RCTs found that transdiagnostic-focused apps lead to small improvements in symptoms of depression and anxiety. Effects appear to be larger when compared with waitlists, highlighting the need for future research to employ placebo controls to rule out competing explanations. Such tools may serve as a valuable low-intensity option for managing comorbid symptoms of depression and anxiety, complementing traditional care and expanding access to evidence-based treatments. To advance this field, future trials should prioritize the use of rigorous control groups, assess clinical significance outcomes such as remission and meaningful symptom improvement, and better report metrics of engagement to evaluate real-world feasibility. Moreover, detailed reporting and examination of intervention components, personalization features, and user engagement strategies will be critical for identifying which aspects underpin an app’s effectiveness. Large-scale, well-controlled trials that address these priorities are essential to realize the full potential of transdiagnostic apps in transforming global mental health care.

Methods

Identification of studies

This review was preregistered in PROSPERO (ID 640669) and conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines51. See Supplementary Table 1 for a PRISMA checklist. We began by identifying potentially eligible studies from a comprehensive 2024 review37 which examined adverse event reporting in all clinical trials of apps delivered to samples with pre-existing mental health problems (k = 171). This review located all available trials up to 2024 that would meet the full eligibility criteria for this research. However, to ensure that we included the most recent evidence, we updated the search in May 2025 using Medline, PsycINFO, Web of Science, and ProQuest Dissertation databases. The updated search employed the following key terms:

- “smartphone*” OR “mobile phone” OR “cell phone” OR “mobile app*” OR “iPhone” OR “android” OR “mhealth” OR “m-health” OR “cellular phone” OR “mobile device*” OR “mobile-based” OR “mobile health”
- “anxiety” OR “agoraphobia” OR “phobia*” OR “panic” OR “post-traumatic stress” OR “mental health” OR “mental illness*” OR “depress*” OR “affective disorder*” OR “bipolar” OR “mood disorder*” OR “self-harm” OR “selfinjury” OR “severe mental” OR “serious mental” OR distress OR “psychiatric symptoms”
- random* OR “clinical trial”
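The three term blocks listed can be assembled into a single Boolean query. The sketch below assumes terms are ORed within each block and the blocks are ANDed together, which the section implies but does not state. It uses straight quotes because most database interfaces expect them; field tags and truncation syntax differ across Medline, PsycINFO, Web of Science, and ProQuest, so the string would need adapting per database.

```python
# Term blocks transcribed from the search strategy; ASSUMPTION: OR within, AND across.
BLOCKS = {
    "device": [
        '"smartphone*"', '"mobile phone"', '"cell phone"', '"mobile app*"',
        '"iPhone"', '"android"', '"mhealth"', '"m-health"', '"cellular phone"',
        '"mobile device*"', '"mobile-based"', '"mobile health"',
    ],
    "condition": [
        '"anxiety"', '"agoraphobia"', '"phobia*"', '"panic"',
        '"post-traumatic stress"', '"mental health"', '"mental illness*"',
        '"depress*"', '"affective disorder*"', '"bipolar"', '"mood disorder*"',
        '"self-harm"', '"selfinjury"', '"severe mental"', '"serious mental"',
        "distress", '"psychiatric symptoms"',
    ],
    "design": ["random*", '"clinical trial"'],
}


def build_query(blocks):
    """OR the terms inside each block and AND the parenthesized blocks together."""
    return " AND ".join("(" + " OR ".join(terms) + ")" for terms in blocks.values())


query = build_query(BLOCKS)
print(query)
```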

We included RCTs that tested the effects of a transdiagnostic-focused app against a control group in participants with elevated depression, anxiety and/or distress, and provided sufficient data to calculate effect sizes for these outcomes. In this review, transdiagnostic apps were defined as those that deliver psychological content designed to target both depression and anxiety symptoms within a unified treatment protocol25. These interventions focus on shared etiological and maintaining factors, such as maladaptive cognitive, emotional, and behavioral processes, rather than treating each disorder separately24. Core components typically include (but are not limited to) psychoeducation, cognitive restructuring, behavioral activation, emotion regulation, mindfulness, and exposure, used flexibly to address either or both disorders15,25. To be classified as transdiagnostic, apps had to meet one or more of the following criteria: (a) explicitly described by study authors as transdiagnostic or designed for both depression and anxiety; (b) included in trials where eligibility required elevated symptoms of either or both depression and anxiety; or (c) delivered therapeutic content aimed at addressing symptoms across both conditions, rather than a single diagnostic focus.

In contrast, disorder-specific apps are those designed exclusively to treat symptoms of a single disorder (e.g., depression only or anxiety only), typically by adhering to established treatment protocols that map closely onto diagnostic criteria. These apps usually focus on techniques targeting disorder-specific processes. The therapeutic content and in-app language are tailored to the symptom profile of the targeted disorder, often using disorder-specific terminology, psychoeducation, and examples relevant only to that condition. Disorder-specific app trials were excluded from the present review, as they have been addressed in separate meta-analyses10.

The sample must have included a mixture of individuals with elevated depression, anxiety or both, even if these conditions did not co-occur within the same individual (i.e., trials that screened participants solely for depression or for anxiety were excluded, as they were analyzed in prior reviews of disorder-specific apps). Depression and/or anxiety could have been established through a diagnostic interview or by scoring above a cut-off on a validated self-report scale, such as scores of 5 or more on the PHQ and/or the GAD-7 or a score of 16 or more on the BDI-II. We also included samples screened for elevated distress through validated scales like the K-1052 or the GHQ53, as these instruments assess a combination of affective symptoms and are reliable screening tools for both depressive and generalized anxiety disorders54,55. We included guided and unguided self-help apps, but excluded blended treatments that involved delivery of an app alongside a web program or face-to-face treatment. Control groups could include waitlists, placebo devices, care as usual, or information resources56. Trials that did not report symptoms of depression, anxiety or distress as outcomes were excluded. Published and unpublished data were eligible for inclusion.
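The inclusion rules can be expressed as a screening predicate. This is a minimal sketch with a hypothetical `Trial` record and string labels: it encodes criteria (a) to (c) for a transdiagnostic app, the mixed-sample requirement, the exclusion of blended delivery, the permitted control types, and the outcome requirement. It illustrates the logic only and is not a tool described by the review.

```python
from dataclasses import dataclass

# Hypothetical labels for the permitted control conditions.
ALLOWED_CONTROLS = {"waitlist", "placebo app", "usual care", "information resources"}


@dataclass
class Trial:
    """Hypothetical record of the features checked for each candidate trial."""
    randomized: bool
    screened_for: set  # e.g. {"depression", "anxiety"} or {"distress"}
    described_as_transdiagnostic: bool  # criterion (a)
    content_targets_both: bool  # criterion (c)
    blended: bool  # app delivered alongside a web program or face-to-face care
    control: str
    reports_dep_anx_or_distress: bool


def sample_ok(t):
    """Mixed samples only: screening on both conditions or on a distress scale."""
    return "distress" in t.screened_for or {"depression", "anxiety"} <= t.screened_for


def is_transdiagnostic(t):
    """Any one of criteria (a)-(c) is sufficient."""
    mixed_eligibility = {"depression", "anxiety"} <= t.screened_for  # criterion (b)
    return t.described_as_transdiagnostic or mixed_eligibility or t.content_targets_both


def is_eligible(t):
    return (
        t.randomized
        and is_transdiagnostic(t)
        and sample_ok(t)
        and not t.blended
        and t.control in ALLOWED_CONTROLS
        and t.reports_dep_anx_or_distress
    )
```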

Risk of bias and data extraction

We used the criteria from the Cochrane Collaboration Risk of Bias tool57 to assess risk of bias. These criteria include random sequence generation, allocation concealment, blinding of participants and personnel, and completeness of outcome data. Each domain was rated as high risk, low risk, or unclear. We did not assess the blinding of outcome assessment domain because all included trials measured participant outcomes with self-report scales, which do not require any contact with the researcher. Random sequence generation was rated as low risk if there was a random component in the sequence generation process. Allocation concealment was rated as low risk when the trial explicitly stated a clear method that prevented group allocation from being foreseen before or during enrollment. Blinding of participants was rated as low risk when the trial used a comparison condition that prevented participants from knowing whether they had been assigned to the experimental or control condition (e.g., a placebo app). Completeness of outcome data was rated as low risk if intention-to-treat analyses including all randomized participants were conducted.

We also extracted various other features from trials: sample selection criteria; age group (coded as younger if the mean age was ≤25 years and older if the mean age was >25 years); app name; n randomized; CBT-based app (coded as yes if cognitive restructuring was a key app component); in-app components; guided support offered (coded as yes if a human offered therapeutic guidance or personalized feedback, and coded as no if professional support was not offered or if only technical support was provided); in-app feedback offered (coded as yes if there were app features that updated users on their progress or provided skill recommendations based on user progress); mood monitoring features (coded as yes if the app contained functionality that allowed users to rate and monitor their mood); type of control group; follow-up length; outcomes reported; attrition and engagement rates; and data required to calculate effect sizes. Two researchers performed data extraction, with minor discrepancies resolved through consensus.

Meta-analysis

For each comparison between app and control conditions, the effect size was computed by dividing the difference between the two group means at post-test by the pooled standard deviation. When trials reported standard errors or 95% confidence intervals rather than standard deviations, these were converted to standard deviations. The standardized mean difference was then converted to Hedges’ g to correct for small-sample bias58. To calculate a pooled effect size, each study’s effect size was weighted by its inverse variance. Effect sizes of 0.8 were interpreted as large, 0.5 as moderate, and 0.2 as small59. For each trial, we calculated effect sizes for the app’s effects on depression, anxiety, and general distress outcomes (where available). In the primary analysis, we examined the effect on these outcomes pooled together. We then calculated and pooled effects separately for depression, anxiety, and distress. Analyses were conducted at post-test and at the last reported follow-up. Comprehensive Meta-Analysis software was used to conduct the analyses60.
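The effect-size steps above can be sketched in Python as a minimal illustration; this is not the Comprehensive Meta-Analysis implementation. The function names are hypothetical, the CI-to-SD conversion assumes a normal-approximation 95% interval (z = 1.96), and the sign convention assumes that lower symptom scores are better, so a positive g favours the app. The pooling function shows plain inverse-variance weighting only; the random-effects weights used in the analyses add a between-study variance term to each study's variance.

```python
import math


def sd_from_se(se: float, n: int) -> float:
    """Recover a group's standard deviation from the standard error of its mean."""
    return se * math.sqrt(n)


def sd_from_ci(lower: float, upper: float, n: int, z: float = 1.96) -> float:
    """Recover a group's SD from a 95% CI around its mean (normal approximation)."""
    se = (upper - lower) / (2 * z)
    return sd_from_se(se, n)


def hedges_g(m_app: float, sd_app: float, n_app: int,
             m_ctl: float, sd_ctl: float, n_ctl: int) -> tuple[float, float]:
    """Hedges' g and its variance for a post-test symptom comparison.

    Assumes lower scores mean fewer symptoms, so a positive g favours the app.
    """
    df = n_app + n_ctl - 2
    sd_pooled = math.sqrt(((n_app - 1) * sd_app**2 + (n_ctl - 1) * sd_ctl**2) / df)
    d = (m_ctl - m_app) / sd_pooled
    var_d = (n_app + n_ctl) / (n_app * n_ctl) + d**2 / (2 * (n_app + n_ctl))
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor
    return j * d, j**2 * var_d


def inverse_variance_pool(effects: list[float], variances: list[float]) -> tuple[float, float]:
    """Weight each effect by 1/variance; return the pooled estimate and its SE."""
    weights = [1 / v for v in variances]
    total = sum(weights)
    estimate = sum(w * g for w, g in zip(weights, effects)) / total
    return estimate, math.sqrt(1 / total)
```

For example, `hedges_g(10, 5, 50, 12, 5, 50)` gives d = 0.4, which the correction factor shrinks to g ≈ 0.397.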

Sensitivity analyses assessed the robustness of the main findings. We re-calculated the pooled effect after excluding trials at high risk of bias (those meeting 0 or 1 of the criteria) and, for studies with multiple intervention or control arms, after retaining only the smallest or only the largest effect from each study to maintain statistical independence. We also pooled effects after excluding outliers using the non-overlapping confidence interval (CI) approach, in which a study is defined as an outlier when the 95% CI of its effect size does not overlap with the 95% CI of the pooled effect size61. The trim-and-fill procedure was also applied to assess small-study bias62.
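The non-overlapping CI outlier rule described above can be sketched as follows. This is a minimal illustration under stated assumptions: pooling uses the DerSimonian-Laird random-effects estimator (the section does not name the τ² estimator), intervals use z = 1.96, and all function names are hypothetical.

```python
import math


def dl_random_effects(effects, variances):
    """DerSimonian-Laird random-effects estimate and its standard error."""
    w = [1 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sw
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(effects) - 1)) / c) if c > 0 else 0.0
    w_re = [1 / (v + tau2) for v in variances]
    mu = sum(wi * y for wi, y in zip(w_re, effects)) / sum(w_re)
    return mu, math.sqrt(1 / sum(w_re))


def find_outliers(effects, variances, z=1.96):
    """Indices of studies whose 95% CI does not overlap the pooled 95% CI."""
    mu, se = dl_random_effects(effects, variances)
    pooled_lo, pooled_hi = mu - z * se, mu + z * se
    outliers = []
    for i, (g, v) in enumerate(zip(effects, variances)):
        lo, hi = g - z * math.sqrt(v), g + z * math.sqrt(v)
        if hi < pooled_lo or lo > pooled_hi:
            outliers.append(i)
    return outliers


def pool_without_outliers(effects, variances):
    """Sensitivity estimate after dropping non-overlapping-CI outliers."""
    drop = set(find_outliers(effects, variances))
    kept = [(g, v) for i, (g, v) in enumerate(zip(effects, variances)) if i not in drop]
    return dl_random_effects([g for g, _ in kept], [v for _, v in kept])
```

With the hypothetical effects `[0.2, 0.25, 0.3, 0.28, 1.5]` and equal variances of 0.01, only the last study (index 4) is flagged, and the sensitivity estimate falls to about 0.26.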

Because we expected considerable heterogeneity among the studies, random effects models were used for all analyses. Heterogeneity was examined with the I² statistic, which quantifies the heterogeneity revealed by the Q statistic as the percentage of total variance (0–100%) attributable to between-study variance63. We also presented the prediction interval (PI), which indicates the range within which the true effect sizes of 95% of comparable populations are expected to fall60.
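A standard-library sketch of these heterogeneity quantities follows. Assumptions: τ² is estimated with the DerSimonian-Laird method-of-moments estimator (the section does not name the estimator), the 95% CI uses z = 1.96, and the PI follows the formula given in the Borenstein et al. textbook (ref. 60), μ ± t(0.975, k − 2) × √(τ² + SE²). The t quantile is computed numerically to avoid a SciPy dependency, and all function names are hypothetical.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def t_cdf(x: float, df: int, steps: int = 2000) -> float:
    """CDF via Simpson's rule on [0, |x|], using symmetry about zero."""
    h = abs(x) / steps
    s = t_pdf(0.0, df) + t_pdf(abs(x), df)
    for i in range(1, steps):
        s += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = s * h / 3
    return 0.5 + area if x >= 0 else 0.5 - area


def t_quantile(p: float, df: int) -> float:
    """Upper quantile (p > 0.5) found by bisection on the CDF."""
    lo, hi = 0.0, 100.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def heterogeneity_summary(effects, variances):
    """Random-effects summary with Q, I², tau² (DerSimonian-Laird) and a 95% PI."""
    k = len(effects)
    if k < 3:
        raise ValueError("a prediction interval needs at least 3 studies")
    w = [1 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sw
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))
    df = k - 1
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)
    i2 = 100 * max(0.0, (q - df) / q) if q > 0 else 0.0
    w_re = [1 / (v + tau2) for v in variances]
    mu = sum(wi * y for wi, y in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    half_pi = t_quantile(0.975, k - 2) * math.sqrt(tau2 + se**2)
    return {
        "g": mu,
        "ci": (mu - 1.96 * se, mu + 1.96 * se),
        "Q": q,
        "I2": i2,
        "tau2": tau2,
        "pi": (mu - half_pi, mu + half_pi),
    }
```

Because the PI uses k − 2 degrees of freedom, the sketch rejects fewer than three studies. When τ² is large, the PI is much wider than the CI, which is why it is informative alongside I².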

We also reported the number-needed-to-treat (NNT), which indicates how many participants would need to receive the intervention for one additional participant to show positive symptom change relative to the control group64.
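The section cites Cook and Sackett for the NNT but does not say how Hedges' g was converted to an NNT. The sketch below shows two commonly used conversions, both as assumptions rather than the authors' method: the Kraemer and Kupfer area-under-the-curve formula, which needs no event rate, and the Furukawa formula, which needs an assumed control event rate. Function names are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def nnt_kraemer_kupfer(g: float) -> float:
    """NNT from an SMD via the area-under-the-curve conversion (no event rate needed)."""
    auc = STD_NORMAL.cdf(g / math.sqrt(2))
    return 1 / (2 * auc - 1)


def nnt_furukawa(g: float, control_event_rate: float) -> float:
    """NNT from an SMD given an assumed proportion of controls showing improvement."""
    eer = STD_NORMAL.cdf(g + STD_NORMAL.inv_cdf(control_event_rate))
    return 1 / (eer - control_event_rate)
```

As a purely illustrative input, g = 0.29 gives an NNT of roughly 6 with the first conversion and roughly 11 with the second at an assumed 20% control event rate. This illustrates how much the choice of conversion matters.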

Pre-planned subgroup analyses were also performed to examine the effects of the intervention according to major characteristics of the app (i.e., type, guidance, in-app feedback, mood monitoring) and trial (i.e., control group, age group). These analyses were conducted under a mixed effects model60.
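The mixed-effects logic can be sketched as follows. Effects are pooled with random effects within each subgroup, and the subgroup estimates are then compared with a fixed-effect Q-between test (chi-square with number of subgroups minus 1 degrees of freedom). This is an illustration under assumptions: τ² is estimated separately in each subgroup with DerSimonian-Laird, whereas other software settings may pool τ² across subgroups. The chi-square tail probability uses a series for the regularized incomplete gamma function to stay within the standard library. All function names are hypothetical.

```python
import math


def dl_pool(effects, variances):
    """DerSimonian-Laird random-effects estimate and its variance for one subgroup."""
    w = [1 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sw
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(effects) - 1)) / c) if c > 0 else 0.0
    w_re = [1 / (v + tau2) for v in variances]
    mu = sum(wi * y for wi, y in zip(w_re, effects)) / sum(w_re)
    return mu, 1 / sum(w_re)


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail chi-square probability via the regularized lower incomplete gamma series."""
    if x <= 0:
        return 1.0
    a, z = df / 2, x / 2
    term = total = 1 / a
    n = 1
    while term > 1e-17 * total:
        term *= z / (a + n)
        total += term
        n += 1
    lower = total * math.exp(a * math.log(z) - z - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def subgroup_test(groups):
    """Mixed-effects subgroup comparison.

    groups maps a label (e.g. "waitlist") to (effects, variances). Effects are pooled
    with random effects within each subgroup; subgroup estimates are then compared
    with a fixed-effect Q-between test.
    """
    pooled = {label: dl_pool(e, v) for label, (e, v) in groups.items()}
    w = {label: 1 / var for label, (_, var) in pooled.items()}
    grand = sum(w[k] * pooled[k][0] for k in pooled) / sum(w.values())
    q_between = sum(w[k] * (pooled[k][0] - grand) ** 2 for k in pooled)
    df = len(pooled) - 1
    return pooled, q_between, df, chi2_sf(q_between, df)
```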

We also calculated weighted average attrition rates for the app condition. Event rates, defined as the proportion of participants randomized to the app condition who failed to complete the post-test assessment for any reason, were pooled using random effects models. Event rates were converted to percentages and presented overall and by study and app features. In one instance, zero dropouts were recorded; this was handled by applying a continuity correction of 0.5, per recommendations65.
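A sketch of the attrition pooling follows, assuming event rates are pooled on the logit scale with DerSimonian-Laird random effects. Logit pooling is common for proportions, but the section does not state which scale was used. The continuity correction adds 0.5 to both dropouts and completers in an arm with zero dropouts, which is one common reading of "a continuity correction of 0.5". Function names are hypothetical.

```python
import math


def logit_with_variance(events: float, n: float, cc: float = 0.5):
    """Logit of a proportion and its variance, adding cc to events and non-events
    when a study has zero events (or all events)."""
    if events == 0 or events == n:
        events, n = events + cc, n + 2 * cc
    p = events / n
    return math.log(p / (1 - p)), 1 / events + 1 / (n - events)


def pooled_attrition(studies):
    """Random-effects (DerSimonian-Laird) pooled dropout rate, in percent, with 95% CI.

    studies: list of (dropouts, randomized) for the app arm of each trial.
    """
    ys, vs = zip(*(logit_with_variance(d, n) for d, n in studies))
    w = [1 / v for v in vs]
    sw = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, ys)) / sw
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, ys))
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(ys) - 1)) / c) if c > 0 else 0.0
    w_re = [1 / (v + tau2) for v in vs]
    mu = sum(wi * y for wi, y in zip(w_re, ys)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))

    def to_percent(x):
        return 100 / (1 + math.exp(-x))  # inverse logit, expressed as a percentage

    return to_percent(mu), (to_percent(mu - 1.96 * se), to_percent(mu + 1.96 * se))
```

Back-transforming from the logit scale keeps the pooled rate and its CI within 0–100%, which a pooled raw proportion would not guarantee.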

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on request.

Code availability

Not applicable.

References

McGorry, P. D. et al. The Lancet Psychiatry Commission on youth mental health. Lancet Psychiatry 11, 731–774 (2024).

World Health Organization. World mental health report: transforming mental health for all (WHO, 2022).

Cuijpers, P., Cristea, I. A., Karyotaki, E., Reijnders, M. & Huibers, M. J. How effective are cognitive behavior therapies for major depression and anxiety disorders? A meta-analytic update of the evidence. World Psychiatry 15, 245–258 (2016).

Kazdin, A. E. Addressing the treatment gap: a key challenge for extending evidence-based psychosocial interventions. Behav. Res. Ther. 88, 7–18 (2017).

Alonso, J. et al. Treatment gap for anxiety disorders is global: results of the World Mental Health Surveys in 21 countries. Depression Anxiety 35, 195–208 (2018).

Torous, J. et al. The growing field of digital psychiatry: current evidence and the future of apps, social media, chatbots, and virtual reality. World Psychiatry 20, 318–335 (2021).

Torous, J. et al. The evolving field of digital mental health: current evidence and implementation issues for smartphone apps, generative artificial intelligence, and virtual reality. World Psychiatry 24, 1–19 (2025).

Edge, D., Watkins, E. R., Limond, J. & Mugadza, J. The efficacy of self-guided internet and mobile-based interventions for preventing anxiety and depression: a systematic review and meta-analysis. Behav. Res. Ther. 164, 104292 (2023).

Karyotaki, E. et al. Do guided internet-based interventions result in clinically relevant changes for patients with depression? An individual participant data meta-analysis. Clin. Psychol. Rev. 63, 80–92 (2018).

Linardon, J. et al. Current evidence on the efficacy of mental health smartphone apps for symptoms of depression and anxiety. A meta-analysis of 176 randomized controlled trials. World Psychiatry 23, 139–149 (2024).

Fuhrmann, L. M. et al. Additive effects of adjunctive app-based interventions for mental disorders: a systematic review and meta-analysis of randomised controlled trials. Internet Interv. 35, 100703 (2023).

Fodor, L. A. et al. The effectiveness of virtual reality based interventions for symptoms of anxiety and depression: a meta-analysis. Sci. Rep. 8, 10323 (2018).

Opriş, D. et al. Virtual reality exposure therapy in anxiety disorders: a quantitative meta-analysis. Depression Anxiety 29, 85–93 (2012).

Li, H., Zhang, R., Lee, Y.-C., Kraut, R. E. & Mohr, D. C. Systematic review and meta-analysis of AI-based conversational agents for promoting mental health and well-being. npj Digit. Med. 6, 236 (2023).

Barlow, D. H., Harris, B. A., Eustis, E. H. & Farchione, T. J. The unified protocol for transdiagnostic treatment of emotional disorders. World Psychiatry 19, 245 (2020).

Kessler, R. C. et al. Anxious and non-anxious major depressive disorder in the World Health Organization World Mental Health Surveys. Epidemiol. Psychiatr. Sci. 24, 210–226 (2015).

Goldberg, D., Krueger, R., Andrews, G. & Hobbs, M. Emotional disorders: Cluster 4 of the proposed meta-structure for DSM-V and ICD-11: Paper 5 of 7 of the thematic section: ‘A proposal for a meta-structure for DSM-V and ICD-11’. Psychological Med. 39, 2043–2059 (2009).

Newby, J. M., Twomey, C., Li, S. S. Y. & Andrews, G. Transdiagnostic computerised cognitive behavioural therapy for depression and anxiety: a systematic review and meta-analysis. J. Affect. Disord. 199, 30–41 (2016).

Cuijpers, P., Harrer, M., Miguel, C., Ciharova, M. & Karyotaki, E. Five decades of research on psychological treatments of depression: a historical and meta-analytic overview. Am. Psychol. 80, 297–310 (2023).

Cuijpers, P. et al. Cognitive behavior therapy for mental disorders in adults: a unified series of meta-analyses. JAMA Psychiatry 82, 563–571 (2025).

Dear, B. F. et al. Transdiagnostic versus disorder-specific and clinician-guided versus self-guided internet-delivered treatment for generalized anxiety disorder and comorbid disorders: a randomized controlled trial. J. Anxiety Disord. 36, 63–77 (2015).

Carlucci, L., Saggino, A. & Balsamo, M. On the efficacy of the unified protocol for transdiagnostic treatment of emotional disorders: a systematic review and meta-analysis. Clin. Psychol. Rev. 87, 101999 (2021).

Reinholt, N. & Krogh, J. Efficacy of transdiagnostic cognitive behaviour therapy for anxiety disorders: a systematic review and meta-analysis of published outcome studies. Cogn. Behav. Ther. 43, 171–184 (2014).

Andersen, P., Toner, P., Bland, M. & McMillan, D. Effectiveness of transdiagnostic cognitive behaviour therapy for anxiety and depression in adults: a systematic review and meta-analysis. Behavioural Cogn. Psychother. 44, 673–690 (2016).

Cuijpers, P. et al. Transdiagnostic treatment of depression and anxiety: a meta-analysis. Psychological Med. 53, 6535–6546 (2023).

Kolaas, K. et al. Internet-delivered transdiagnostic psychological treatments for individuals with depression, anxiety or both: a systematic review with meta-analysis of randomised controlled trials. BMJ Open 14, e075796 (2024).

Fogliati, V. et al. Disorder-specific versus transdiagnostic and clinician-guided versus self-guided internet-delivered treatment for panic disorder and comorbid disorders: a randomized controlled trial. J. Anxiety Disord. 39, 88–102 (2016).

Titov, N. et al. Transdiagnostic internet treatment for anxiety and depression: a randomised controlled trial. Behav. Res. Ther. 49, 441–452 (2011).

Andersson, G. Internet-delivered CBT: distinctive features (Taylor & Francis, 2024).

Mohr, D. C. et al. Comparison of the effects of coaching and receipt of app recommendations on depression, anxiety, and engagement in the IntelliCare platform: factorial, randomized controlled trial. J. Med. Internet Res. 21, e13609 (2019).

Macrynikola, N. et al. Testing the feasibility, acceptability, and potential efficacy of an innovative digital mental health care delivery model designed to increase access to care: open trial of the Digital Clinic. JMIR Ment. Health 12, e65222 (2025).

Stolz, T. et al. A mobile app for social anxiety disorder: a three-arm randomized controlled trial comparing mobile and PC-based guided self-help interventions. J. Consulting Clin. Psychol. 86, 493–504 (2018).

Currey, D. & Torous, J. Digital phenotyping data to predict symptom improvement and mental health app personalization in college students: prospective validation of a predictive model. J. Med. Internet Res. 25, e39258 (2023).

Camacho, E., Cohen, A. & Torous, J. Assessment of mental health services available through smartphone apps. JAMA Netw. Open 5, e2248784 (2022).

Linardon, J. Navigating the future of psychiatry: a review of research on opportunities, applications, and challenges of artificial intelligence. Curr. Treat. Options Psychiatry 12, 8 (2025).

Torous, J., Nicholas, J., Larsen, M. E., Firth, J. & Christensen, H. Clinical review of user engagement with mental health smartphone apps: evidence, theory and improvements. Evid. Based Ment. Health 21, 116–119 (2018).

Linardon, J. et al. Systematic review and meta-analysis of adverse events in clinical trials of mental health apps. npj Digit. Med. 7, 363 (2024).

Cuijpers, P., Turner, E. H., Koole, S. L., Van Dijke, A. & Smit, F. What is the threshold for a clinically relevant effect? The case of major depressive disorders. Depression Anxiety 31, 374–378 (2014).

Baumeister, H., Reichler, L., Munzinger, M. & Lin, J. The impact of guidance on Internet-based mental health interventions: a systematic review. Internet Interv. 1, 205–215 (2014).

Plessen, C. Y., Panagiotopoulou, O. M., Tong, L., Cuijpers, P. & Karyotaki, E. Digital mental health interventions for the treatment of depression: a multiverse meta-analysis. J. Affect. Disord. 369, 1031–1044 (2025).

Cuijpers, P., Miguel, C., Harrer, M., Ciharova, M. & Karyotaki, E. The overestimation of the effect sizes of psychotherapies for depression in waitlist controlled trials: a meta-analytic comparison with usual care controlled trials. Epidemiol. Psychiatr. Sci. 33, e56 (2024).

Torous, J. & Firth, J. The digital placebo effect: mobile mental health meets clinical psychiatry. Lancet Psychiatry 3, 100–102 (2016).

Michopoulos, I. et al. Different control conditions can produce different effect estimates in psychotherapy trials for depression. J. Clin. Epidemiol. 132, 59–70 (2021).

Linardon, J. & Torous, J. Integrating artificial intelligence and smartphone technology to enhance personalized assessment and treatment for eating disorders. Int. J. Eat. Disord. (2025).

Owen, J. E. et al. The NCPTSD model for digital mental health: a public sector approach to development, evaluation, implementation, and optimization of resources for helping trauma survivors. Psychological Trauma 17, S176–S185 (2025).

Torous, J. & Roberts, L. W. Needed innovation in digital health and smartphone applications for mental health: transparency and trust. JAMA Psychiatry 74, 437–438 (2017).

Rodriguez-Villa, E. et al. The digital clinic: implementing technology and augmenting care for mental health. Gen. Hosp. Psychiatry 66, 59–66 (2020).

Calvert, E. et al. Evaluating clinical outcomes for anxiety and depression: A real-world comparison of the digital clinic and primary care. J. Affect. Disord. 377, 275–283 (2025).

Fatouros, P. et al. Randomized controlled study of a digital data driven intervention for depressive and generalized anxiety symptoms. npj Digit. Med. 8, 113 (2025).

Cuijpers, P. et al. Absolute and relative outcomes of cognitive behavior therapy for eating disorders in adults: a meta-analysis. Eat. Disord. 8, 1–22 (2024).

Page, M. J. et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 372, n71 (2021).

Kessler, R. C. et al. Short screening scales to monitor population prevalences and trends in non-specific psychological distress. Psychological Med. 32, 959–976 (2002).

Goldberg, D. P. & Hillier, V. F. A scaled version of the General Health Questionnaire. Psychological Med. 9, 139–145 (1979).

Donker, T. et al. The validity of the Dutch K10 and extended K10 screening scales for depressive and anxiety disorders. Psychiatry Res. 176, 45–50 (2010).

Baksheev, G. N., Robinson, J., Cosgrave, E. M., Baker, K. & Yung, A. R. Validity of the 12-item General Health Questionnaire (GHQ-12) in detecting depressive and anxiety disorders among high school students. Psychiatry Res. 187, 291–296 (2011).

Goldberg, S. B., Sun, S., Carlbring, P. & Torous, J. Selecting and describing control conditions in mobile health randomized controlled trials: a proposed typology. npj Digit. Med. 6, 181 (2023).

Higgins, J. & Green, S. Cochrane handbook for systematic reviews of interventions (John Wiley & Sons, 2011).

Hedges, L. V. & Olkin, I. Statistical methods for meta-analysis (Academic Press, 1985).

Cohen, J. A power primer. Psychological Bull. 112, 155–159 (1992).

Borenstein, M., Hedges, L. V., Higgins, J. P. & Rothstein, H. R. Introduction to meta-analysis (John Wiley & Sons, 2009).

Harrer, M., Cuijpers, P., Furukawa, T. A. & Ebert, D. D. Doing meta-analysis with R: a hands-on guide (CRC Press, 2021).

Duval, S. & Tweedie, R. Trim and fill: a simple funnel-plot–based method of testing and adjusting for publication bias in meta-analysis. Biometrics 56, 455–463 (2000).

Higgins, J. & Thompson, S. G. Quantifying heterogeneity in a meta-analysis. Stat. Med. 21, 1539–1558 (2002).

Cook, R. J. & Sackett, D. L. The number needed to treat: a clinically useful measure of treatment effect. BMJ 310, 452–454 (1995).

Sweeting, M., Sutton, A. & Lambert, P. What to add to nothing? Use and avoidance of continuity corrections in meta-analysis of sparse data. Stat. Med. 23, 1351–1375 (2004).

Bantjes, J. et al. Comparative effectiveness of remote digital gamified and group CBT skills training interventions for anxiety and depression among college students: results of a three-arm randomised controlled trial. Behav. Res. Ther. 178, 104554 (2024).

Bell, I. et al. A personalized, transdiagnostic smartphone intervention (Mello) targeting repetitive negative thinking in young people with depression and anxiety: pilot randomized controlled trial. J. Med. Internet Res. 25, e47860 (2023).

Comtois, K. A. et al. Effectiveness of mental health apps for distress during COVID-19 in US unemployed and essential workers: remote pragmatic randomized clinical trial. JMIR mHealth uHealth 10, e41689 (2022).

Cox, C. E. et al. Feasibility of mobile app-based coping skills training for cardiorespiratory failure survivors: the blueprint pilot randomized controlled trial. Ann. Am. Thorac. Soc. 20, 861–871 (2023).

Graham, A. K. et al. Coached mobile app platform for the treatment of depression and anxiety among primary care patients: a randomized clinical trial. JAMA Psychiatry 77, 906–914 (2020).

Ham, K. et al. Preliminary results from a randomized controlled study for an app-based cognitive behavioral therapy program for depression and anxiety in cancer patients. Front. Psychol. 10, 1592 (2019).

Kerber, A., Beintner, I., Burchert, S. & Knaevelsrud, C. Effects of a self-guided transdiagnostic smartphone app on patient empowerment and mental health: randomized controlled trial. JMIR Ment. Health 10, e45068 (2023).

McCloud, T., Jones, R., Lewis, G., Bell, V. & Tsakanikos, E. Effectiveness of a mobile app intervention for anxiety and depression symptoms in university students: randomized controlled trial. JMIR mHealth uHealth 8, e15418 (2020).

Moberg, C., Niles, A. & Beermann, D. Guided self-help works: randomized waitlist controlled trial of Pacifica, a mobile app integrating cognitive behavioral therapy and mindfulness for stress, anxiety, and depression. J. Med. Internet Res. 21, e12556 (2019).

Parkes, S. et al. Evaluating a smartphone app (MeT4VeT) to support the mental health of UK armed forces veterans: feasibility randomized controlled trial. JMIR Ment. Health 10, e46508 (2023).

Reid, S. C. et al. A mobile phone application for the assessment and management of youth mental health problems in primary care: a randomised controlled trial. BMC Fam. Pract. 12, 131 (2011).

Renn, B. N., Walker, T. J., Edds, B., Roots, M. & Raue, P. J. Naturalistic use of a digital mental health intervention for depression and anxiety: a randomized clinical trial. J. Affect. Disord. 368, 429–438 (2025).

Stallman, H. M. Efficacy of the My Coping Plan mobile application in reducing distress: a randomised controlled trial. Clin. Psychologist 23, 206–212 (2019).

Stiles-Shields, C. et al. A personal sensing technology enabled service versus a digital psychoeducation control for primary care patients with depression and anxiety: a pilot randomized controlled trial. BMC Psychiatry 24, 1–15 (2024).

Sun, S. et al. A mindfulness-based mobile health (mHealth) intervention among psychologically distressed university students in quarantine during the COVID-19 pandemic: a randomized controlled trial. J. Counseling Psychol. 69, 157–171 (2022).

Tan, S., Ismail, M. A. B., Daud, T. I. M., Hod, R. & Ahmad, N. A randomized controlled trial on the effect of smartphone-based mental health application among outpatients with depressive and anxiety symptoms: a pilot study in Malaysia. Indian J. Psychiatry 65, 934–940 (2023).

Tighe, J. et al. iBobbly mobile health intervention for suicide prevention in Australian Indigenous youth: a pilot randomised controlled trial. BMJ Open 7, e013518 (2017).

Zhang, X. et al. Effectiveness of digital guided self-help mindfulness training during pregnancy on maternal psychological distress and infant neuropsychological development: randomized controlled trial. J. Med. Internet Res. 25, e41298 (2023).

Acknowledgements

J.L. holds a National Health and Medical Research Council Grant (APP1196948); J.T. is supported by the Argosy Foundation.

Author information

Contributions

J.L. conceived the idea, performed the data analysis, and wrote the paper; J.T. wrote aspects of the paper, provided supervision, and edited the paper; C.A. performed data extraction and edited the paper; M.M. & C.L. edited the paper and conceived aspects of the paper.

Ethics declarations

Competing interests

J.T. is an editorial board member for NPJ Digital Medicine. There are no other financial or non-financial competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Linardon, J., Anderson, C., Messer, M. et al. Transdiagnostic-focused apps for depression and anxiety: a meta-analysis. npj Digit. Med. 8, 443 (2025). https://doi.org/10.1038/s41746-025-01860-3

DOI: https://doi.org/10.1038/s41746-025-01860-3