Abstract

Background

Wearables with integrated electrocardiogram (ECG) acquisition have made single-lead ECGs widely accessible to patients and consumers. However, the 12-lead ECG remains the gold standard for most clinical cardiac assessments. In this study, we developed a neural network to reconstruct 12-lead ECGs from single-lead and dual-lead ECGs, and evaluated the mathematical accuracy.

Methods

We used lead I or leads I and II from 9514 individuals from the Physikalisch-Technische Bundesanstalt (PTB-XL) cohort and a generative adversarial network, with the aim of recreating the missing leads from the 12-lead ECG. ECGs were divided into training (80%), validation (10%), and testing (10%) sets. Original and recreated leads were measured with a commercially available algorithm. Differences in means and variances were assessed with Student’s t-tests and F-tests, respectively. Calibration and bias were assessed with Bland-Altman plots. Inter-lead correlations were compared in original and recreated ECGs.

Results

The variability of precordial ECG amplitudes is significantly reduced in recreated ECGs compared to real ECGs (all p < 0.05), indicating regression-to-the-mean. Amplitude averages are recreated with bias (p < 0.05 for most leads). Reconstruction errors depend on the real amplitudes, suggesting regression-to-the-mean (R2 between target and error in R-peak amplitude in lead V3: 0.92). The relations between lead markers have a similar slope but are much stronger due to reduced variance (R-peak amplitude R2 between leads I and V3, real ECGs: 0.04, recreated ECGs: 0.49). Using two leads does not significantly improve 12-lead recreation.

Conclusions

AI-based 12-lead ECG reconstruction results in a regression-to-the-mean effect rather than personalized output, rendering it unsuitable for clinical use.

Plain language summary

Electrocardiogram (ECG) testing measures electrical signals from the heart and can be used to diagnose and monitor people with heart problems. Wearable devices have made ECG testing widely available for patients. However, ECG tests that use only a single lead to measure electrical signals from the heart lack the clinical detail provided by a 12-lead ECG test. In our study, we explored the use of artificial intelligence (AI) to convert single- or dual-lead ECGs into 12-lead ECGs and assessed the accuracy of the reconstructed ECGs using mathematical metrics and an AI algorithm. While the reconstructed ECGs appeared visually normal, we found that the AI algorithm generated the missing leads based on population averages rather than individual patient characteristics. These results reveal that lead conversion using AI is not reliable and should not be used clinically.

Introduction

Electrocardiography is a simple, low-cost, and quick method for assessing the electrical activity of the heart in order to obtain clinical information about heart diseases. Over a span of ten seconds, this method captures the heart’s voltage using ten electrodes. Given the inherently three-dimensional (3D) composition of the human body, multiple electrodes are required to record the 3D electrical activity of the heart. From a mathematical perspective, only three orthogonal leads should be required to characterize the electrical dipolar vector in space; a concept well-known from the 3-lead Frank vectorcardiogram1.

The Frank vectorcardiogram simplifies the complexity of the heart’s electrical activity by treating it as an infinitesimally small stationary dipole in a large, uniform conducting medium. While this is a simplification, it allows for the creation of a model to understand the heart’s electrical activity. The standard clinical electrocardiogram (ECG) consists of 12 leads, with six leads originating from the extremities (four of these being mathematically redundant) and six leads from electrodes placed on the chest (precordial electrodes).

Principal Component Analysis (PCA), a statistical method that simplifies data by identifying key variables (principal components), demonstrates that 98% of the 12-lead ECG information in healthy subjects is captured by the first three principal components2. In patients with acute or chronic cardiac disease, the share of information captured by the first three principal components decreases. As the heart is not an ideal dipole, because of its size and its movement during the heart cycle, the precordial leads not only have information about the Z-axis of the 3D electrical vector but also contain local electrical non-dipolar information from cardiac tissue just below the electrode. An example of important non-dipolar information is fractionated QRS complexes, which may demand local information from specific single leads and cannot necessarily be constructed solely from other leads3.

Whereas 12-lead ECGs are the most common type of ECGs, in the acute context and for long-term recordings, a reduced set of electrodes, typically consisting of one or two leads, is utilized. The reduced leads contain nearly the same information in the time dimension, making them well-suited for arrhythmia analysis. However, the lack of dimensions may result in substantial loss of spatial information, which is essential for diagnosing myocardial infarction, hypertrophy, repolarization changes, and other localized cardiac disorders.

With the advent of one-lead portable ECG devices, there has been a renewed interest in the possibility of obtaining a full 12-lead ECG from single-lead devices. Because the spatial differences of these intervals are minimal, temporal information such as heart rate and intervals may be reliably measured from a single lead. This is not the case with amplitudes, which vary considerably depending on the angle between the lead vector’s direction and the 3D cardiac voltage vector. As a result, interval measurements from one-lead wearable devices are reliable, but one-lead devices cannot currently detect amplitude changes, for example, during cardiac ischemia and myocardial infarction. That raises the question of whether it is possible to use deep learning networks4 to create a reliable 12-lead ECG from a single lead, as previously suggested5,6, to be implemented in one-lead wearables to detect cardiac ischemia. Advances in deep learning and neural networks have particularly spurred efforts in this area, and the concept of reconstruction has spawned a number of publications in the field5,6,7,8. Sohn et al.9 proposed a three-lead chest device with four electrodes and a recurrent neural network employing long short-term memory (LSTM) to generate the missing leads. Both Grande-Fidalgo et al.10 and Atoui et al.11 suggested using an artificial neural network (ANN) to generate the remaining leads from the initial three leads.

From a linear mathematical perspective, it is impossible to reconstruct a full 12-lead ECG from a single lead, since a lead contains no information on the two orthogonal directions required to reconstruct the 3D vector. Nevertheless, it has been claimed that 3D reconstruction from a single input using deep learning can be reliably performed. The purpose of this investigation is to evaluate the ability of neural networks to reconstruct a full 12-lead ECG from data obtained from single-lead or two-lead ECGs. Due to the advent of two-lead portable ECG devices in the consumer market, we investigated whether the reconstruction of 12-lead ECG from two-lead ECGs is better than with a single-lead.
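
The linear argument can be illustrated with a small sketch. Under the simplified dipole model, each lead voltage is the dot product of the heart vector with the direction vector of that lead. The axis convention, the idealized unit lead vectors, the example amplitudes, and the function name `lead_voltage` are assumptions made for illustration and are not taken from the study.

```python
def lead_voltage(heart_vector, lead_vector):
    """Project a 3D heart vector onto a lead direction (dipole model)."""
    return sum(h * l for h, l in zip(heart_vector, lead_vector))

# Idealized, orthogonal lead directions (assumption for illustration only).
LEAD_X = (1.0, 0.0, 0.0)   # roughly lead I (left-right axis)
LEAD_Z = (0.0, 0.0, 1.0)   # roughly a mid-precordial lead (front-back axis)

# Two different heart vectors (microvolts) that share the same X component.
heart_a = (500.0, 300.0, 200.0)
heart_b = (500.0, -100.0, 900.0)

same_input = lead_voltage(heart_a, LEAD_X) == lead_voltage(heart_b, LEAD_X)
z_a = lead_voltage(heart_a, LEAD_Z)
z_b = lead_voltage(heart_b, LEAD_Z)
print(same_input, z_a, z_b)  # True 200.0 900.0
```

Both hypothetical hearts produce an identical lead I sample, yet their voltages along the orthogonal axis differ by a factor of 4.5, so no function of lead I alone can tell them apart.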

The study’s main contributions include the implementation of generative adversarial network (GAN) models to reconstruct missing ECG leads using two input configurations: Lead I alone and a combination of Leads I and II. Performance is assessed using the Physikalisch-Technische Bundesanstalt (PTB-XL) dataset, revealing that while GAN-generated ECGs appear visually normal, they exhibit significant deficiencies compared to real ECGs. Specifically, the reconstructed precordial ECGs demonstrate reduced variability, reflecting a tendency to regress toward the population mean (all p < 0.05). Amplitude averages are recreated with bias (p < 0.05 for most leads), and reconstruction errors depend on the real amplitudes, further indicating regression-to-the-mean (R2 = 0.92 for R-peak amplitude errors in Lead V3). The relationships between lead markers maintain a similar slope but appear significantly stronger in the recreated ECGs due to reduced variance (R2 for R-peak amplitude between Leads I and V3: 0.04 in real ECGs vs. 0.49 in recreated ECGs). These findings highlight the limitations of deep learning-based methods in capturing individual variations and clinically significant features in ECG lead reconstruction.

Methods

Data

The models were trained and tested using PTB-XL v1.0.3 (ref. 12). This publicly available dataset consists of anonymized 12-lead ECGs obtained from 18,869 people. The dataset was approved for open-access publication by the Institutional Ethics Committee of the Physikalisch-Technische Bundesanstalt (PTB). Furthermore, the use of anonymized data from PTB-XL was approved by the Institutional Ethics Committee, and the need for informed consent from people whose data were included was waived. We obtained no further approval because we worked with retrospective, de-identified data with no possibility to impact patient care. The dataset includes ECGs from both healthy individuals and patients with various pathologies. Expert cardiologists have labeled each record, assigning different conditions to them. For our study, we specifically focused on utilizing the ECGs categorized as ‘normal’, which amounted to a total of 9514 patients. Their ages range from 2 to 95 years, with a mean and standard deviation of 52.86 ± 22.25 years. Among them, 46% are male and 54% are female.

Each ECG record in the dataset has a duration of 10 seconds and a sampling frequency of 500 Hz. The dataset was divided into three subsets: 80% for training (7611 samples), 10% for validation (951 samples), and 10% (952 samples) for testing purposes. The performance evaluation of the models was conducted on the PTB-XL test dataset12. The results were assessed using the MUSE 12SL13, which provides ECG measurements and arrhythmia diagnosis. The PTB-XL dataset contains sample values expressed in millivolts (mV). However, before passing the samples to the MUSE system, we rescaled them to microvolts (μV) since the system only accepts input in this unit. Hence, all results are expressed in microvolts (μV).
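
The split sizes and the unit conversion described above can be reproduced with a short sketch. How the records were actually assigned to the subsets is not stated, so the random shuffle, the seed, and the function names `split_indices` and `millivolts_to_microvolts` are hypothetical.

```python
import random

def split_indices(n_records, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle record indices and cut them into train/validation/test lists."""
    indices = list(range(n_records))
    random.Random(seed).shuffle(indices)      # assumed: a plain random split
    n_train = int(n_records * train_frac)     # 7611 for 9514 records
    n_val = int(n_records * val_frac)         # 951 for 9514 records
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]          # the remaining 952 records
    return train, val, test

def millivolts_to_microvolts(samples_mv):
    """Rescale a sequence of samples from millivolts to microvolts."""
    return [1000.0 * s for s in samples_mv]

train, val, test = split_indices(9514)
print(len(train), len(val), len(test))  # 7611 951 952
```

Flooring the 80% and 10% fractions and assigning the remainder to the test set yields exactly the subset sizes reported in the text.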

Initially, only Lead I was used as the input, and predictions were made for the remaining seven independent leads (Lead II, V1, V2, V3, V4, V5, V6). Subsequently, we aimed to assess whether employing both Lead I and Lead II as input and reconstructing only V1–V6 leads would result in better performance. Therefore, we trained additional models for this purpose.
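
Only these leads need to be predicted because the remaining four limb leads follow from leads I and II through the standard Einthoven and Goldberger relations. The sketch below applies those relations sample by sample; the function name `derive_limb_leads` and the example values are illustrative.

```python
def derive_limb_leads(lead_i, lead_ii):
    """Derive III, aVR, aVL and aVF sample by sample from leads I and II."""
    pairs = list(zip(lead_i, lead_ii))
    return {
        "III": [b - a for a, b in pairs],            # III = II - I
        "aVR": [-(a + b) / 2.0 for a, b in pairs],   # aVR = -(I + II) / 2
        "aVL": [a - b / 2.0 for a, b in pairs],      # aVL = I - II / 2
        "aVF": [b - a / 2.0 for a, b in pairs],      # aVF = II - I / 2
    }

# Two samples of leads I and II in microvolts (made-up values).
derived = derive_limb_leads([100.0, 200.0], [300.0, 100.0])
print(derived["III"], derived["aVR"], derived["aVL"], derived["aVF"])
```

With lead II either measured or reconstructed, the full 12-lead set is therefore obtained from the eight independent leads without further modeling.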

Models

Two UNet14 architecture models were utilized: one employed a single lead (Lead I) as input to generate the seven missing leads (Lead II, V1–V6), while the second model utilized two leads (Leads I and II) to reconstruct the precordial leads (V1–V6). During their training, two distinct loss functions were used: an adversarial loss derived from a discriminator model combined with an L1 loss, and a mean-squared error (MSE) for the pure U-Net model. To maintain clear differentiation between these techniques, we designate the model utilizing the adversarial loss as the GAN, and the model with MSE loss as the U-Net. Both loss techniques yielded comparable performance, with the GAN slightly outperforming the U-Net. To simplify the presentation, we only include the GAN values in the Results section. The results for the U-Net are provided in Table S1 of the Supplementary material.

GAN, initially introduced in ref. 15, consists of two neural networks—a generator and a discriminator—that are trained together in a competitive manner, where they continuously learn from each other’s outputs and improve over time. The proposed generator takes as input either Lead I or Leads I and II of an ECG and produces the remaining leads. The discriminator’s role is to differentiate between real and generated signals. Through training, the generator learns to produce realistic ECGs that the discriminator cannot differentiate from genuine ECGs.

Architectures

The models’ architectures are inspired by the Pulse2Pulse model16 that was used to generate 8 × 5000 ECGs from an 8 × 5000 random noise vector. However, we have adapted our generator (and the basic network for the UNet) to accept either a single ECG lead (1 × 5000) or two ECG leads (2 × 5000) signals as input and produce the corresponding missing leads. The architecture comprises a down-sampling component and an up-sampling component. The down-sampling part reduces the input signal’s dimensionality while capturing the most important features, whereas the up-sampling component reconstructs the missing leads using the learned features. The former includes six 1D-convolution layers, each followed by a Leaky ReLU activation function17, as illustrated in Fig. 1a. The latter consists of an additional six convolution layers and a ReLU activation. The arrows depicted in Fig. 1a represent the skip connections of the UNet that connect the blocks of the two components, which led to improved outcomes.

Fig. 1. a The generator takes Lead I or Leads I and II as input and reconstructs the additional electrocardiogram (ECG) leads V1, V2, V3, V4, V5, and V6 as output. b The discriminator receives real and synthesized (fake) ECGs as input and classifies them as real or fake. Each block represents a layer type, with colors distinguishing them. The generator follows an encoder-decoder structure, using one-dimensional convolutional layers (Conv1d()), Leaky Rectified Linear Unit activation (LeakyReLU()) and Rectified Linear Unit activation (ReLU()), reflection padding (ReflectPadding), and up-sampling layers (Upsample()), ending with a hyperbolic tangent activation (Tanh()). The discriminator consists of stacked one-dimensional convolutional layers (Conv1d()) with Leaky Rectified Linear Unit activation (LeakyReLU()), followed by a linear layer and sigmoid activation (Sigmoid()).

The discriminator’s role is to classify the ECGs as either real or fake. To train it efficiently, we implemented a patch discriminator approach, as proposed in ref. 18, where the discriminator evaluates patches instead of the entire image. While the Pix2Pix model applies the patch convolutionally across the entire image, our customized patch discriminator randomly selects a patch of a predetermined size (e.g., 8 channels × 800 samples) for each training batch. This strategy reduces training time and moderates the discriminator’s learning rate, ensuring a balanced training process with the generator, as the discriminator initially learns faster than the generator. The discriminator architecture depicted in Fig. 1b comprises seven convolutional layers, each followed by a Leaky ReLU activation function.
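
The random patch selection can be sketched as follows. This is a minimal illustration that assumes one shared time window is cut from all channels of a record; the function name `random_patch`, the list-of-lists data layout, and the zero-filled placeholder record are hypothetical and do not reproduce the published implementation.

```python
import random

def random_patch(ecg, patch_len=800, rng=random):
    """Cut the same random time window out of every channel of one ECG.

    `ecg` is a list of channels, each a list of samples (e.g. 8 x 5000).
    """
    n_samples = len(ecg[0])
    start = rng.randrange(0, n_samples - patch_len + 1)
    return [channel[start:start + patch_len] for channel in ecg]

# Placeholder 8-channel, 5000-sample record filled with zeros.
ecg = [[0.0] * 5000 for _ in range(8)]
patch = random_patch(ecg, rng=random.Random(0))
print(len(patch), len(patch[0]))  # 8 800
```

Because the discriminator only sees 800 of the 5000 samples per pass, each update is cheaper and carries less information, which is consistent with the stated goal of slowing the discriminator relative to the generator.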

Training

The networks were trained on an Ubuntu workstation with two Xeon processors and an NVIDIA GeForce RTX 2080 Ti GPU. The PyTorch library19 was employed for implementation. The GAN was trained with the Adam optimizer20, with a learning rate of 0.0001, a β1 value of 0.5, and a β2 value of 0.9. Due to training instability, a smaller learning rate of 0.00005 was used for the U-Net. A batch size of 32 was used for both models. The U-Net employs the mean squared error (MSE) loss function. For the GAN, we utilized the WGAN-GP technique with gradient penalty21, combined with the objective proposed in ref. 18. This training objective combines the discriminator loss with the L1 loss, which measures the error between the real and generated signals. Therefore, in addition to fooling the discriminator, the generator is also required to minimize the L1 loss. The discriminator’s parameters are updated after every batch, while the generator’s are updated after every other batch. To prevent overfitting, dropout was applied to three of the layers during training22. The networks were trained for a total of 1000 epochs. The final models were chosen based on the validation error.
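
For orientation, a combined objective of this kind is commonly written as below. This follows the general form of the WGAN-GP formulation (ref. 21) with an added L1 term in the manner of ref. 18; the symbols and the weights \lambda_{\mathrm{GP}} and \lambda_{L1} are assumptions, since the text does not report their values or the exact formulation used. Here x denotes the input lead(s), y the real ECG, G the generator, D the discriminator, and \hat{y} a random interpolation between real and generated ECGs.

```latex
\begin{aligned}
\hat{y} &= \epsilon\, y + (1 - \epsilon)\, G(x), \qquad \epsilon \sim \mathcal{U}[0, 1], \\
\mathcal{L}_D &= \mathbb{E}_{x}\big[D(G(x))\big] - \mathbb{E}_{y}\big[D(y)\big]
  + \lambda_{\mathrm{GP}}\, \mathbb{E}_{\hat{y}}\Big[\big(\lVert \nabla_{\hat{y}} D(\hat{y}) \rVert_2 - 1\big)^2\Big], \\
\mathcal{L}_G &= -\,\mathbb{E}_{x}\big[D(G(x))\big]
  + \lambda_{L1}\, \mathbb{E}_{x, y}\big[\lVert y - G(x) \rVert_1\big].
\end{aligned}
```

The L1 term rewards outputs that are close to the target on average, which is one plausible route by which a generator drifts toward population-typical amplitudes when the input carries little information about the target lead.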

Statistics and reproducibility

This study used statistical analyses to evaluate the accuracy of ECG signal reconstruction, with all analyses performed on the test dataset comprising 952 normal ECG samples from the PTB-XL dataset. All tests are two-sided. Reproducibility was ensured through standardized data pre-processing and model training protocols, treating each ECG sample as an independent replicate (n = 952).

We assessed the signal average reconstruction error on a lead-by-lead basis. This was done by calculating the square root of the mean squared error (RMSE) between the real and reconstructed ECG. Based on the 12SL measurements, we analyzed the mean error and standard deviation of errors for the R peak, S peak, center ST segment (STM), and T peak amplitudes lead-by-lead. We used the subset of leads V1, V2, V3, and V6.
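
A minimal sketch of the lead-by-lead RMSE is given below. The dictionary layout (lead name mapped to a list of samples) and the function names `rmse` and `rmse_per_lead` are illustrative assumptions.

```python
import math

def rmse(real, reconstructed):
    """Root mean squared error between two equally long sample sequences."""
    if len(real) != len(reconstructed):
        raise ValueError("signals must have the same length")
    squared = [(r - g) ** 2 for r, g in zip(real, reconstructed)]
    return math.sqrt(sum(squared) / len(squared))

def rmse_per_lead(real_ecg, reconstructed_ecg):
    """Map each lead name to its RMSE; both inputs map lead -> samples."""
    return {lead: rmse(real_ecg[lead], reconstructed_ecg[lead])
            for lead in real_ecg}

# Made-up two-sample signals in microvolts.
real_ecg = {"V1": [0.0, 0.0], "V2": [10.0, 20.0]}
recon_ecg = {"V1": [3.0, 4.0], "V2": [10.0, 20.0]}
print(rmse_per_lead(real_ecg, recon_ecg))
```

RMSE summarizes the average waveform error only; it does not show whether the error depends on the amplitude being reconstructed, which is why the amplitude-based analyses are needed in addition.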

We utilized Student’s t-test and the F-test to assess differences in means and variances, respectively. The Student’s t-test was chosen as it is a robust statistical tool used to determine whether the means of two groups of data are significantly different from each other. This test is particularly suitable when dealing with normally distributed data, which is an assumption we are working with for our ECG data. The F-test was used to evaluate the equality of variances. Variance is a measure of dispersion in a dataset and can be particularly important when comparing the performance of different models, as done here. A significant result from an F-test suggests that the variances are different, which implies that one model is more consistent than the other in its predictions. This is an important consideration in our evaluation of the accuracy and reliability of ECG reconstructions. A p-value < 0.05 was considered statistically significant.
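
The two test statistics can be computed as in the sketch below. It assumes an unpaired, pooled-variance t-test, which the text does not specify, and it stops at the statistics: the p-values would be obtained from the t and F distributions with a statistics package. The function names and example amplitudes are hypothetical.

```python
import math
import statistics

def student_t_statistic(a, b):
    """Two-sample Student's t statistic with pooled variance."""
    n_a, n_b = len(a), len(b)
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    pooled = ((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)
    standard_error = math.sqrt(pooled * (1.0 / n_a + 1.0 / n_b))
    return (statistics.mean(a) - statistics.mean(b)) / standard_error

def f_statistic(a, b):
    """Ratio of sample variances, var(a) / var(b)."""
    return statistics.variance(a) / statistics.variance(b)

# Made-up amplitudes: the "real" values vary more than the "generated" ones.
real = [2.0, 4.0, 6.0, 8.0, 10.0]
generated = [1.0, 2.0, 3.0, 4.0, 5.0]
print(round(student_t_statistic(real, generated), 3))  # 1.897
print(f_statistic(real, generated))                    # 4.0
```

An F statistic well above 1 for real versus generated amplitudes corresponds to the reduced variability that the study interprets as regression to the mean.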

Reconstruction errors should be independent of the amplitude that is being reconstructed. Any evidence to the contrary could suggest a bias or regression towards the population mean, rather than accurately reflecting individual variations. Therefore, we plotted the difference in amplitude (reconstructed vs. real for R, S, STM, T) as a function of the real amplitude in a Bland-Altman-like plot. As the objective measurement of independence, we calculated Pearson’s correlation coefficient between the difference and real amplitudes.
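
The reasoning can be made concrete with an extreme case: a model that ignores the individual and always outputs the population mean. The sketch below uses simulated amplitudes, not study data; the distribution parameters and function names are assumptions.

```python
import math
import random

def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def limits_of_agreement(errors):
    """Mean error and the mean +/- 1.96 SD limits of a Bland-Altman plot."""
    n = len(errors)
    mean = sum(errors) / n
    sd = math.sqrt(sum((e - mean) ** 2 for e in errors) / (n - 1))
    return mean, mean - 1.96 * sd, mean + 1.96 * sd

rng = random.Random(0)
# Simulated real R-peak amplitudes in microvolts (not study data).
real = [rng.gauss(1000.0, 400.0) for _ in range(952)]
population_mean = sum(real) / len(real)

# A model that always predicts the population mean.
generated = [population_mean] * len(real)
errors = [g - r for g, r in zip(generated, real)]

r = pearson_r(real, errors)
print(round(r, 3), round(r * r, 3))  # -1.0 1.0
```

For such a predictor the mean error is zero, yet the error is a perfect linear function of the real amplitude. An R2 close to 1 between target and error therefore indicates output that is largely driven by the population rather than by the individual.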

The reconstruction should not materially change the correlation between variables. If interlead correlations change materially, it is a sign that the model reconstructs leads based on one population mean and does not give personalized reconstructions based on the specific ECG. Therefore we calculated Pearson’s correlation coefficient between markers (e.g., R peak) from the precordial leads (V1, V2, V3, V6) and lead I in the real ECGs and in the reconstructed ECGs, respectively. We also plotted the markers against one another to visually display any changes.
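
The expected signature of this effect can be simulated: when the individual variation in a reconstructed lead is lost, the regression slope against lead I stays the same while the correlation rises. All numbers below are invented for illustration and the function name `linear_fit_r2` is hypothetical.

```python
import random

def linear_fit_r2(x, y):
    """Least-squares slope of y on x and the coefficient of determination."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / sxx, (sxy * sxy) / (sxx * syy)

rng = random.Random(1)
n = 5000
lead_i = [rng.gauss(600.0, 100.0) for _ in range(n)]
# "Real" V3: weak dependence on lead I plus large individual variation.
real_v3 = [0.5 * a + rng.gauss(0.0, 250.0) for a in lead_i]
# "Reconstructed" V3: same dependence, most individual variation removed.
recon_v3 = [0.5 * a + rng.gauss(0.0, 50.0) for a in lead_i]

slope_real, r2_real = linear_fit_r2(lead_i, real_v3)
slope_recon, r2_recon = linear_fit_r2(lead_i, recon_v3)
print(round(slope_real, 2), round(r2_real, 2))
print(round(slope_recon, 2), round(r2_recon, 2))
```

With these simulation settings the theoretical R2 is about 0.04 for the "real" pair and 0.5 for the "reconstructed" pair, even though both share a slope of 0.5.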

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Results

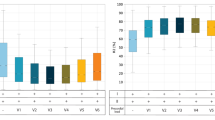

The GAN generated 12-lead ECGs with a natural appearance from both one- and two-lead ECGs. Figure 2 displays correlation plots depicting the relationship between amplitudes in lead I (real input lead) and lead V3 (reconstructed lead), which is almost orthogonal to lead I. Similar figures for lead II instead of lead I are presented in Fig. S1 of the supplementary material. The figures show that real ECGs exhibit a poor correlation between R and T amplitudes in lead I and Vn, whereas the generated ECGs have a much higher correlation. This suggests that the GAN overuses input information in its reconstructions. Additionally, according to the Bland-Altman plots in Figs. 3 and 4, the GAN utilizing either lead I alone or both lead I and II to predict lead V3 (one of the orthogonal precordial leads) systematically overestimated low R- and T-wave amplitudes and underestimated large amplitudes not only in lead V3 but also in lead V6. Figure 5 shows an example of generated ECGs compared to the real ECG for both one and two lead reconstructions (more samples can be found in the Supplementary Figs. S2, S3, S4, S5, and S6).

Fig. 2. Relationship between amplitudes in lead I and lead V3 for R-waves (a, b) and T-waves (c, d). Panels (a) and (c) show results from the 1-lead reconstruction, while panels (b) and (d) present results from the 2-lead reconstruction (n = 952 independent samples). Each point represents an individual sample, with real values shown in blue and generated values in red. The lines indicate linear regression fits for the real (blue) and generated (red) data. The coefficient of determination (R2) is reported for both cases. All amplitudes are measured in microvolts (μV).

Fig. 3. a, b R peak amplitude in Lead V3, c, d T peak amplitude in Lead V3. Panels (a) and (c) show results from the 1-lead reconstruction, panels (b) and (d) present results from the 2-lead reconstruction (n = 952 independent samples). The plots display the reconstruction error (generated - real) against the amplitude of the real ECGs in lead V3. Each point represents a sample. The dashed lines denote the mean error and the limits of agreement at ±1.96 standard deviations. The coefficient of determination (R2) quantifies the correlation between real and generated data. Amplitudes are measured in microvolts (μV).

Fig. 4. a, b R peak amplitude in Lead V6, c, d T peak amplitude in Lead V6. Panels (a) and (c) show results from the 1-lead reconstruction, panels (b) and (d) show results from the 2-lead reconstruction (n = 952 independent samples). The plots display the reconstruction error (generated - real) against the amplitude of the real electrocardiograms in lead V6. Each point represents a sample. The dashed lines denote the mean error and the limits of agreement at ±1.96 standard deviations. The coefficient of determination (R2) quantifies the correlation between real and generated data. Amplitudes are measured in microvolts (μV).

Table 1 shows the 5, 10, 90, and 95% reconstruction error percentiles for all precordial leads. The results indicate that the reconstruction may erroneously contain clinically significant alterations that are not present in the real ECG and which may change the interpretation of the ECG. Table 2 displays correlation coefficients between the real and the reconstructed values, with the 2-lead reconstruction exhibiting higher values. The average root mean squared error (RMSE) for each lead is presented in Table 3 for both 1- and 2-lead reconstructions using the GAN, while the corresponding results for the UNet are presented in Table S1 in the supplementary. Table 4 exhibits the standard deviation and mean values for R, T, and S peaks and STM of real and generated data in leads V1, V2, V3, and V6.

Discussion

Our study demonstrated that deep learning reconstruction from single- or dual-lead ECGs had substantial limitations, and was not clinically useful. The generated ECGs in the study had a normal appearance and by themselves, could not be distinguished from real ECGs. However, a careful analysis of the mean amplitudes of different waves in the generated and real ECGs showed that they were significantly different. Importantly, nearly all leads and parameters exhibited less variation in the generated ECGs compared to the real ECGs, suggesting a regression towards the mean; and this phenomenon is clearly demonstrated in the Bland-Altman plots. Low amplitudes in the real ECG were increased in the synthetic ECGs and vice versa with high amplitudes in the real ECG, indicating that the network attempted to fit the mean of the population and not the individual ECG. The consequence would be that a patient with an acute myocardial infarction located orthogonal to the input lead would present with normal ST-amplitudes in the orthogonal leads generated from the population. We speculate that were the network instead trained on myocardial infarction patients, normal healthy subjects would exhibit signs of myocardial infarction in their orthogonal leads.

Several authors attempted to predict all 12 ECG leads from one. Lee et al. were the first to use GAN techniques to investigate 12-lead reconstruction from a single lead, a modified Lead II5. To generate the synthetic ECG, their proposed GAN used R-peak-aligned single heartbeats and data augmentation. They failed to present clinically relevant measures such as raw amplitudes in mV and instead used advanced mathematical algorithms, making it impossible to determine whether a clinically relevant difference existed. They also ignored the fact that the GAN reproduces the input population of ECGs nicely, but randomly and with no obvious relationship to the individual ECG. Guessing on the mean results in low amplitude mean absolute errors, but very skewed distributions. Only using Bland-Altman plots and outlier distributions can it be determined whether a low mean absolute error simply is due to guessing on the mean of the population in every case, as our study showed.

Seo et al. used a patchGAN6 to create 12-lead ECGs from 2.5 s ECG patches. They evaluated their network with non-clinical methods such as the Fréchet distance, and also with mean squared error, interval, and amplitude measurements. According to their Bland-Altman plots, the 95 percent confidence interval for the difference between the real and synthetic GAN-generated ECG was approximately ±120 ms for RR intervals, ±130 ms for QT intervals, and ±30 ms for QRS intervals. These errors are far from clinically acceptable. The US Food and Drug Administration considers a 10 ms prolongation of the QT interval to be clinically significant, a 30 ms QRS prolongation can cause an incorrect diagnosis of a bundle branch block, and a 120 ms change in the RR interval can shift a heart rate of 100 beats per minute to anything between 83 and 125.
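
The heart-rate range quoted above follows directly from the relation between RR interval and heart rate (60,000 ms per minute divided by the RR interval in ms). A small worked example, with illustrative function names:

```python
def heart_rate_bpm(rr_ms):
    """Convert an RR interval in milliseconds to beats per minute."""
    return 60000.0 / rr_ms

def heart_rate_range(true_hr_bpm, rr_error_ms):
    """Heart-rate range implied by an RR error of +/- rr_error_ms."""
    rr_ms = 60000.0 / true_hr_bpm
    slowest = heart_rate_bpm(rr_ms + rr_error_ms)
    fastest = heart_rate_bpm(rr_ms - rr_error_ms)
    return slowest, fastest

low, high = heart_rate_range(100.0, 120.0)
print(round(low), round(high))  # 83 125
```

A heart rate of 100 beats per minute corresponds to an RR interval of 600 ms, so an error of ±120 ms spans RR intervals from 480 ms to 720 ms.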

A recent study23 introduced a modified version of GAN, which incorporates two generators: one for expanding Lead I to generate the corresponding 12 leads and a second one that encodes and decodes Lead I, acting like an autoencoder. The objective of the second generator is to approximate the latent vector of the main generator, ensuring that the 12-lead ECG retains essential characteristics of Lead I. The authors assessed their findings using a prediction model designed to detect left and right bundle branch blocks and atrial fibrillation, achieving favorable results. These features are not confined to a single lead but are expressed in many leads, so the good performance is not surprising. The dataset used for training the models is not publicly available.

Because we do not understand how advanced mathematical measures vary between healthy subjects and patients with heart disease, it becomes important to use relevant clinical outcomes as effect parameters.

We used one or two frontal limb leads (I or I+II) to train our GAN, as other authors7 have done. In theory, these leads provide information from one or two orthogonal directions: either the X-axis alone or the frontal XY-plane. We expected the dual-lead input to produce better results than the single-lead input; surprisingly, however, the network did not benefit when two limb leads were used as input instead of one. This is most likely because the X-axis and the XY-plane hold similarly little information on the Z-dimension, which dominates some of the precordial V leads. Thus, information from all three dimensions is needed in order to reconstruct an accurate, personalized 12-lead ECG.

Why, then, does the GAN reconstruction clearly outperform linear regression (Table S2)? This is most likely due to the GAN’s ability to extract vital biological information from the ECG in addition to the purely mathematical information, which the linear regression can also extract. It is well-known that sex, age, and body composition have large effects on the ECG, and neural networks may be able to extract these features from the ECG24,25. Patient characteristics, such as being young or old, female or male, and slim or overweight, are associated with amplitude differences in the ECG in all dimensions and not just lead I. This biological correlation may explain why the neural networks are able to predict amplitudes in orthogonal leads, despite the fact that it should be mathematically impossible.

A limitation of this study is that our network is not optimal for the task of lead reconstruction. The accuracy of our model’s predictions is inherently limited by the data on which it was trained, and training on a larger dataset might improve performance. Future research could concentrate on improving these models to better capture this complexity, possibly by incorporating more patient-specific data into the model’s design. Furthermore, experimenting with different machine learning techniques or lead configurations may yield more effective ECG reconstruction strategies.

In conclusion, the current study used deep learning techniques to investigate the reconstruction of 12-lead ECGs from single and dual leads and demonstrated that the reconstructions are not reliable for use in clinical practice. It seems more clinically useful to improve methods to gather more information from single or reduced leads using AI instead of solving a mathematically impossible problem. However, we can investigate different hyper-parameters, such as learning rates, activation functions, and different numbers of layers, to get improved results in future studies, as hyper-parameter tuning was identified as one of the limitations of this study.

Data availability

The ECG data supporting the findings of this study are publicly available at Physionet.org with the identifier: https://doi.org/10.13026/kfzx-aw45 (ref. 12). The data used to generate the plots in Figs. 2, 3, and 4 are available in our GitHub repository26 as a CSV file (12SL-ecg.csv), along with the corresponding code for plot generation.

Code availability

The code repository26 contains the source code for the showcased networks, including links to checkpoints of pre-trained models for reconstruction purposes, along with a collection of generated samples.

Change history

12 May 2025

In the original version of this article, an editorial summary was omitted. The original article has been corrected.

References

Frank, E. An accurate, clinically practical system for spatial vectorcardiography. Circulation 13, 737–749 (1956).

Francisco, C., Pablo, L., Leif, S., Andreas, B. & José Millet, R. Principal component analysis in ECG signal processing. EURASIP J. Adv. Signal Process. 13, 074580 (2007).

Hnatkova, K. et al. QRS micro-fragmentation as a mortality predictor. Eur. Heart J. 43, 4177–4191 (2022).

Hicks, S. A. et al. Explaining deep neural networks for knowledge discovery in electrocardiogram analysis. Sci. Rep. 11, 10949 (2021).

Lee, J., Oh, K., Kim, B. & Yoo, S. K. Synthesis of electrocardiogram V-lead signals from limb-lead measurement using R-peak aligned generative adversarial network. IEEE J. Biomed. Health Inform. 24, 1265–1275 (2020).

Seo, H.-C., Yoon, G.-W., Joo, S. & Nam, G.-B. Multiple electrocardiogram generator with single-lead electrocardiogram. Comput. Methods Prog. Biomed. 221, 106858 (2022).

Jo, Y.-Y., Choi, Y. S., Jang, J.-H. & Kwon, J.-M. ECGT2T: Towards Synthesizing Twelve-Lead Electrocardiograms from Two Asynchronous Leads. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 1–5 (2023).

Beco, S. C., Pinto, J. R. & Cardoso, J. S. Electrocardiogram lead conversion from single-lead blindly-segmented signals. BMC Med. Inform. Decis. Mak. 22, 314 (2022).

Sohn, J., Yang, S., Lee, J., Ku, Y. & Kim, H. C. Reconstruction of 12-lead electrocardiogram from a three-lead patch-type device using a LSTM network. Sensors 20, 3278 (2020).

Grande-Fidalgo, A., Calpe, J., Redón, M., Millán-Navarro, C. & Soria-Olivas, E. Lead reconstruction using artificial neural networks for ambulatory ECG acquisition. Sensors 21, 5542 (2021).

Atoui, H., Fayn, J. & Rubel, P. A novel neural-network model for deriving standard 12-lead ECGs from serial three-lead ECGs: application to self-care. IEEE Trans. Inf. Technol. Biomed. 14, 883–890 (2010).

Wagner, P. et al. PTB-XL a large publicly available electrocardiography dataset. Sci. Data 7, 154 (2020).

GE Healthcare. Marquette™ 12SL™ ECG Analysis Program Physician’s Guide (2015).

Ronneberger, O., Fischer, P. & Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation, Vol. 9351, 234–241 (Springer, 2015).

Goodfellow, I. et al. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 27 (2014).

Thambawita, V. et al. DeepFake electrocardiograms using generative adversarial networks are the beginning of the end for privacy issues in medicine. Sci. Rep. 11, 21896 (2021).

Xu, B., Wang, N., Chen, T. & Li, M. Empirical evaluation of rectified activations in convolutional network. Preprint at https://arxiv.org/abs/1505.00853 (2015).

Isola, P., Zhu, J.-Y., Zhou, T. & Efros, A. A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, 1125–1134 (2017).

Paszke, A. et al. PyTorch: an imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 32 (2019).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. In International Conference on Learning Representations (ICLR) (2015).

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V. & Courville, A. Improved training of Wasserstein GANs. Adv. Neural Inf. Process. Syst. 30, 5767–5777 (2017).

Hinton, G. E., Srivastava, N., Krizhevsky, A., Sutskever, I. & Salakhutdinov, R. R. Improving neural networks by preventing co-adaptation of feature detectors. Preprint at https://arxiv.org/abs/1207.0580 (2012).

Joo, J. et al. Twelve-lead ECG reconstruction from single-lead signals using generative adversarial networks. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2023, 184–194 (Springer Nature Switzerland, 2023).

Attia, Z. I. et al. Age and sex estimation using artificial intelligence from standard 12-lead ECGs. Circulation: Arrhythmia Electrophysiol. 12, e007284 (2019).

Iconaru, E. I. & Ciucurel, C. The relationship between body composition and ECG ventricular activity in young adults. Int. J. Environ. Res. Public Health 19, 11105 (2022).

Dorobanţiu, A. & Thambawita, V. Simulamet-Host/ECG_1_to_8: v1.0.2. https://zenodo.org/records/14986849 (2025).

Acknowledgements

The research presented in this paper has benefited from the Experimental Infrastructure for Exploration of Exascale Computing (eX3), which is financially supported by the Research Council of Norway under contract 270053.

Funding

Open access funding provided by OsloMet - Oslo Metropolitan University.

Author information

Contributions

O.P., A.D., A.R.S., J.K.K., and V.T. conceived the experiments. O.P., A.D., and T.W. conducted the experiments. O.P., A.D., J.L.I., C.G., M.A.R., A.R.S., J.K.K., and V.T. analyzed the results. All authors reviewed and revised the manuscript.

Ethics declarations

Competing interests

Arun Sridhar was previously an Editorial Board Member for Communications Medicine, and is currently a Guest Editor, but was not involved in the editorial review or peer review, nor the decision to publish this article. All other authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Presacan, O., Dorobanţiu, A., Isaksen, J.L. et al. Evaluating the feasibility of 12-lead electrocardiogram reconstruction from limited leads using deep learning. Commun Med 5, 139 (2025). https://doi.org/10.1038/s43856-025-00814-w

DOI: https://doi.org/10.1038/s43856-025-00814-w
