Abstract

Diagnostic assessments of mild cognitive impairment (MCI) are lengthy and burdensome, highlighting the need for new tools to detect MCI. Time-domain functional near-infrared spectroscopy (TD-fNIRS) can measure brain function in clinical settings and may address this need. In this study (NCT05996575), MCI patients (n = 50) and age-matched healthy controls (HC; n = 51) underwent TD-fNIRS recordings during cognitive tasks (Verbal Fluency, N-Back). Machine learning models were trained to distinguish MCI from HC using neural activity, cognitive task behavior, and self-reported impairment as input features. Significant group-level differences (MCI vs HC) were demonstrated in self-report, N-Back and Verbal Fluency behavior, and task-related brain activation. Classifier performance was similar when using self-report (AUC = 0.76) and self-report plus behavior (AUC = 0.79) as input features, but was strongest when neural metrics were included (AUC = 0.92). This study demonstrates the potential of TD-fNIRS to assess MCI with short brain scans in clinical settings. Clinical trial registration: NCT05996575.

Introduction

Mild Cognitive Impairment (MCI) is often regarded as the intermediary stage between normal aging and dementia1. In fact, 12–18% of older adults (age >60 years) live with MCI, and of those, 10–15% progress to dementia each year2. The emotional and socioeconomic burden of cognitive impairment3 has been driving development of dementia biomarkers capable of early detection and objective diagnosis with varying degrees of accuracy1,4,5. Standard assessments are commonly used to screen for potential cognitive decline and refer patients for further testing6,7,8. Diagnostic tools, however, span a range of modalities, including those readily available, such as neuropsychological assessments9 and digital biomarkers10, as well as those that are still being explored for accessibility and clinical potential, such as neural measurements11,12,13.

Many commonly used screening tests, such as the Mini-Mental State Examination (MMSE) and the Montreal Cognitive Assessment (MoCA), both fail to identify some patients, including those with high cognitive reserve (false negatives), and incorrectly flag patients with low education and/or high anxiety (false positives)14,15. Flagged patients then undergo comprehensive neuropsychological assessments, which may take up to 3 h and are cumbersome, stressful, and time-consuming for the patient and clinician alike9. Further complicating MCI diagnosis, even healthy older adults have a high likelihood of failing at least one memory test, necessitating more than one assessment in that domain9.

One explanation for these challenges is that neuropsychological assessments only measure symptoms or expressions of the disease (e.g., cognitive deficits across multiple domains of functioning). Measures of the etiology underlying MCI—specifically, functional brain activity—may provide complementary diagnostic information, namely neural correlates of the disease. Dozens of studies over the past 20 years have confirmed that patients diagnosed with MCI have changes in brain activity detectable by functional near-infrared spectroscopy (fNIRS) measurements (for reviews see16,17). As such, multiple studies have explored the potential of fNIRS measurements in MCI diagnosis18,19,20,21. Time-domain fNIRS (TD-fNIRS) is a more advanced form of fNIRS which has better sensitivity to brain activations22,23. Despite these advantages, this technology has not been widely used in clinical trials or clinical care due to the high cost, operational complexity, and limited head coverage of the currently available systems22.

Here we employ a validated, state-of-the-art TD-fNIRS system with miniaturized components, dense whole head coverage, and the form factor of a helmet24,25,26. The technological advancements of this device greatly improve the portability and ease-of-use, making in-clinic brain imaging accessible for clinical MCI care. In light of this, we assessed the potential of diagnostic brain imaging as a replacement for standard neuropsychological evaluations in MCI. Specifically, MCI patients and age-matched healthy controls (HCs) completed common clinical surveys and short cognitive tasks (<10 min each) administered on a computer while their brain data was recorded with the aforementioned TD-fNIRS headset. We selected tasks that target language production and working memory, two largely non-overlapping domains frequently affected in MCI; such tasks have been among the most commonly and successfully employed in fNIRS research on biomarkers of cognitive impairment16,27. Indeed, behavior on the Verbal Fluency task has been shown to be useful in detecting MCI28,29,30,31 and, more importantly, some version of this task is administered in diagnostic and screening cognitive tests utilized in clinics (e.g., for both MMSE and MoCA6,7,32). Survey scores, task performance, and measures of brain activity were extracted, a subset of which were identified as statistically differentiating between the MCI and HC cohorts. These metrics were then used as inputs to a series of classification models that quantified the ability of different combinations of each class (e.g., survey, behavioral, and neural metrics) to identify MCI. We show that neural and behavioral metrics offer diagnostic capabilities beyond the current standard of care. Moreover, we present a brain-based biomarker of MCI that is objective, scalable, and fast to administer, highlighting the potential of TD-fNIRS to alleviate the diagnostic and care management burden of this disease on clinicians, patients, and their families.

Results

General description

The two participant groups, MCI and age-matched HC (Table 1), wore the Kernel Flow2 TD-fNIRS headset, and their neurophysiological data was measured while they performed cognitive tasks: Verbal Fluency and N-Back working memory (Methods). Note that we also collected resting state data that was not used for analyses in this manuscript, as here we focused on cognitive tasks that probe domains tested in clinical assessments. We verified that the two populations were age-matched by performing an independent t-test (p > 0.1). The groups were also similar in terms of the number of participants with higher education (Table 1).

Our analyses, discussed below, focused first on characterizing the populations in terms of their cognitive assessment and survey scores. Next, we sought to find cognitive task-based features, both in terms of behavioral and neural measures that significantly differentiated between the two study cohorts. Finally, we aimed at building a Machine Learning (ML) model capable of classifying MCI with high performance.

Participant cognitive assessment and survey results

Cognitive assessments were performed in both the HC and MCI groups (Methods). In the HC population, this included the Mini-Cog, for which the majority of the participants had a score of 5 (Fig. 1a). In the MCI population, we examined the MMSE scores. Although many of the participants had a score above 25 (Fig. 1b), a cutoff often considered to indicate normal cognitive function, the true clinical diagnosis was determined by expert clinicians (using a variety of tools). The only cognitive assessment done with both populations was the ADCS-ADL-MCI survey (Methods). As expected, survey scores were significantly different between MCI and HC (t-test: p = 5.43 × 10⁻⁶; Cohen’s d = 0.9496), with the MCI group reporting more functional impairment (lower scores; Fig. 1c).

a The distribution of the Mini-Cog test scores; the test was administered only to the HC population. The range of the scores is 0–5, with 5 indicating no cognitive decline. HC inclusion required a score ≥3. b The distribution of the MMSE scores in the MCI population. The range of the scores is 0–30, with scores ≤25 suggesting cognitive impairment (dashed line). There was no threshold for inclusion. c The ADCS-ADL-MCI survey scores were significantly different between the HC (blue) and MCI (red) populations, with higher scores indicating better daily functioning. d, e Mean ± 1 SEM (standard error) for MCI and HC across behavioral measures of task performance within each condition of N-Back (d) and Verbal Fluency (e). Independent t-tests were conducted. Uncorrected significant differences are indicated by symbols above the bars: †p ≤ 0.1, *p ≤ 0.05, **p ≤ 0.01, ***p ≤ 0.001, ****p ≤ 0.0001.

We also examined the two non-cognitive surveys, i.e., GAD-7 and GDS (Methods). GAD-7 showed a trend towards higher anxiety scores in the MCI population (t-test: T = −1.88, p = 0.06; Cohen’s d = −0.3745). Depression scores, as measured by GDS, were significantly different between the groups (t-test: T = −2.71, p = 7.95 × 10⁻³; Cohen’s d = −0.5392). Here, MCI participants reported higher depression severity compared to HC. It is worth noting that despite the statistical trend and significant differences in GAD-7 and GDS, respectively, neither cohort would pass the thresholds for anxiety or depression on average. We acknowledge, however, that depression and anxiety are common comorbidities of MCI, as demonstrated in the prior literature33,34.
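The group comparisons in this section report an independent t-test alongside Cohen’s d. The sketch below shows one common way to compute both, assuming the pooled-variance (Student) form of the test and a pooled-SD Cohen’s d; the source does not state which variant was used, and the toy scores are invented for illustration. Under this assumption the two statistics are linked by t = d / sqrt(1/n1 + 1/n2), and the reported GAD-7 and GDS values are numerically consistent with that relation for group sizes of 51 and 50.

```python
import math
import statistics


def pooled_sd(a, b):
    """Pooled standard deviation of two independent samples."""
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)  # sample variances (n - 1)
    return math.sqrt(((na - 1) * va + (nb - 1) * vb) / (na + nb - 2))


def cohens_d(a, b):
    """Standardized mean difference (a minus b) using the pooled SD."""
    return (statistics.mean(a) - statistics.mean(b)) / pooled_sd(a, b)


def student_t(a, b):
    """Equal-variance independent-samples t statistic and its degrees of freedom."""
    na, nb = len(a), len(b)
    se = pooled_sd(a, b) * math.sqrt(1 / na + 1 / nb)
    return (statistics.mean(a) - statistics.mean(b)) / se, na + nb - 2


def t_from_d(d, na, nb):
    """t statistic implied by a pooled-SD Cohen's d and the two group sizes."""
    return d / math.sqrt(1 / na + 1 / nb)


# Invented toy scores, not study data.
toy_hc = [50, 52, 49, 51, 53]
toy_mci = [45, 47, 44, 48, 46]
toy_d = cohens_d(toy_hc, toy_mci)
toy_t, toy_df = student_t(toy_hc, toy_mci)

# Consistency check against the reported effect sizes (group sizes 51 and 50).
gad7_t = t_from_d(-0.3745, 51, 50)  # close to the reported T = -1.88
gds_t = t_from_d(-0.5392, 51, 50)   # close to the reported T = -2.71
```

A p-value would additionally require the t distribution (for example via a statistics library); it is omitted here to keep the sketch dependency-free.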

Task-related neural and behavioral features differentiating between MCI and HC

The cognitive tasks in this study were selected for their ability to probe cognitive dysfunction, and targeted working memory load (N-Back)35 and language (Verbal Fluency)36. Therefore, we extracted behavioral measures of task performance and explored whether they too were able to differentiate between the MCI and HC cohorts.

For N-Back, we evaluated both the accuracy (percent correct) and average reaction time (mean time from trial onset to response) for each of the three task conditions, yielding six total behavioral measures. Of these, we found that 1-back accuracy (independent t-test: p = 1.70 × 10⁻⁴, uncorrected; Cohen’s d = 0.7788) and 2-back accuracy (independent t-test: p = 6.71 × 10⁻³, uncorrected; Cohen’s d = 0.5511) significantly differentiated between the MCI and HC populations (Fig. 1d).

The performance metrics considered for Verbal Fluency were the word rate (rate of word production over the entire block), the average inter-word interval (mean time between words, from first word to last word), and the inter-word variability (standard deviation of time between words) for each of the three task conditions, yielding nine total behavioral measures. Each of these metrics significantly differentiated between the MCI and HC populations, but to varying degrees (Fig. 1e). The semantic condition of the task exhibited the strongest differentiability in word rate (independent t-test: p = 1.00 × 10⁻⁵, uncorrected; Cohen’s d = 0.9193), inter-word interval (independent t-test: p = 7.00 × 10⁻⁵, uncorrected; Cohen’s d = −0.828), and inter-word variability (independent t-test: p = 3.10 × 10⁻⁴, uncorrected; Cohen’s d = −0.7440), followed by the phonological condition (independent t-tests: word rate, p = 7.06 × 10⁻³, Cohen’s d = 0.5475; inter-word interval, p = 2.89 × 10⁻³, Cohen’s d = −0.6081; inter-word variability, p = 1.91 × 10⁻³, Cohen’s d = −0.6347; all uncorrected), and finally the control condition (independent t-tests: word rate, p = 0.03, Cohen’s d = 0.4348; inter-word interval, p = 0.048, Cohen’s d = −0.3972; inter-word variability, p = 2.11 × 10⁻³, Cohen’s d = −0.6285; all uncorrected). Taken together, these differences in task performance reaffirm our task selection and the role of these cognitive domains, working memory and language, in the manifestation of MCI-related impairments.
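The three Verbal Fluency measures defined above can be sketched from the timestamps of the spacebar presses that mark each produced word. This is a minimal illustration, not the study’s pipeline: the function name, the units (words per second, seconds), the use of the sample standard deviation, and the example timestamps are all assumptions, and the source does not say whether metrics were computed per 20-s prompt or per 60-s block.

```python
import statistics


def verbal_fluency_metrics(press_times_s, block_duration_s):
    """Word rate, mean inter-word interval, and inter-word variability.

    press_times_s: times (in seconds) at which a word was marked.
    block_duration_s: length of the block over which the rate is computed.
    """
    times = sorted(press_times_s)
    # Intervals run from the first word to the last word only.
    intervals = [later - earlier for earlier, later in zip(times, times[1:])]
    return {
        "word_rate": len(times) / block_duration_s,
        "mean_iwi": statistics.mean(intervals) if intervals else None,
        # Sample SD (n - 1); whether the study used this form is not stated.
        "iwi_sd": statistics.stdev(intervals) if len(intervals) >= 2 else None,
    }


# Hypothetical presses at 1, 3, 4, and 8 s within a 20-s window.
example = verbal_fluency_metrics([1.0, 3.0, 4.0, 8.0], 20.0)
```

Returning `None` when too few words were produced keeps undefined intervals from being silently reported as zero.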

In order to capture corresponding differences in brain activity explicitly linked to different cognitive demands (of the tasks), hemodynamic response to task conditions was assessed using General Linear Models (GLMs; Methods). These models were applied separately for the Verbal Fluency and N-Back tasks. The resulting GLM test statistics quantified brain activation and deactivation patterns coupled to task conditions over the head. Group GLMs revealed activation and deactivation patterns for the MCI and HC cohorts (Supplement S1, N-Back; Supplement S2, Verbal Fluency). These activations/deactivations were averaged within 35 local regions (corresponding to module locations; Supplement S3). We then compared these values between the MCI and HC populations at each brain region, for both chromophores (HbO/HbR), and for each task condition using independent t-tests.

N-Back showed heightened differentiation in areas linked in the literature to task conditions37,38,39 such as regions within the left fronto-temporal cortex (Fig. 2a). Notably, this area exhibited reduced HbO during the 0-back condition (lowest working memory load) in HCs compared to MCI participants, possibly reflecting the lower effort required by HCs to complete the task (Fig. 2b, top). However, this pattern reversed in the more difficult task conditions. HCs displayed greater neural activity (HbO) in the left fronto-temporal areas, with the strongest response during the highest working memory load (2-back) (Fig. 2b, middle, bottom). This increased activity in task-related brain regions may be linked to the significantly better performance of HCs on the 1-back and 2-back tasks. Importantly, neural activity in other brain areas exhibited differences across the two cohorts, indicating that disease-linked differentiability spans the whole head, in line with prior research38,40.

a Shown are t-statistics from independent t-tests between HC and MCI applied to each local area of the GLM-based activation maps. Each row corresponds to a different task condition (top: 0-back, middle: 1-back, bottom: 2-back) and the two columns represent HbO/HbR respectively. Warmer colors represent more activation (or less deactivation) in the HC cohort, and cooler colors represent more activation (or less deactivation) in the MCI cohort. b Distribution of GLM statistics for representative modules shown for the HC (blue) and MCI (red) cohorts for each condition (corresponding row). Solid colored lines indicate the mean for HC (blue) and MCI (red). T-statistics and uncorrected p-value for the independent t-test (HC vs MCI), as well as Cohen’s d, are displayed above each plot. For the location of each module number, see Supplement S3, or match symbols displayed next to the x-label to the topo plot from (a) in the same row.

Patterns of brain activity linked to conditions of the Verbal Fluency task not only exhibited group differences in HbO of left fronto-temporal and anterior temporal cortices (Fig. 3a, left), areas related to fluency tasks, but also exhibited strong group differences in HbR prominently in occipital regions (Fig. 3a, right). While the whole occipital region showed more deactivation in the HC group (compared to the MCI group), the right medial occipital area demonstrated the strongest and most significant difference between the two cohorts during both the control (Fig. 3b, top) and semantic conditions of the task. Similarly, the left temporal cortex demonstrated an overall increase in HbO that was strongest and most significant in the inferior medial temporal area (a region subserving categorical word retrieval41) during the control and semantic (Fig. 3b, middle) conditions of the task. Finally, during the phonological condition, the area that most strongly differentiated task-linked brain activity between the HC and MCI cohorts was the left fronto-temporal and prefrontal cortex (Fig. 3b, bottom). As with N-Back, HCs exhibited stronger activation in task-linked brain areas, while MCI participants showed increased activation (in the case of N-Back) or decreased deactivation (in the case of Verbal Fluency) during the easy conditions of the task. These findings of differential hemodynamic activity to cognitive tasks for MCI vs. HC agree with robust literature findings of similar differences38,42,43.

a Shown are t-statistics from independent t-tests between HC and MCI applied to each region of the GLM-based activation maps. Each row corresponds to a different task condition (top: control, middle: semantic, bottom: phonological) and the two columns represent HbO/HbR respectively. Warmer colors are indicative of more activation (or less deactivation) in the HC cohort while cooler colors are indicative of more activation (or less deactivation) in the MCI cohort. b Distributions of GLM test statistics for representative modules shown for the HC (blue) and MCI (red) cohorts for each condition (corresponding row). Solid colored lines indicate the mean for HC (blue) and MCI (red). T-statistics and uncorrected p-value for the independent t-test (HC vs MCI), as well as Cohen’s d, are displayed above each plot. For the location of each module number, see Supplement S3, or match symbols displayed next to the x-label to the topo plot from (a) in the same row.

In summary, we have shown that not only does task performance discriminate between MCI and HC during specific conditions of the administered cognitive tasks, but so too does the underlying neural activity.

Machine learning models to detect MCI

While the group-level differences demonstrate the potential of the metrics presented here to capture changes in MCI, clinical utility necessitates individual-level diagnostic accuracy. Currently, clinical assessments of MCI consist of subjective questionnaires and cognitive tests. For example, MMSE is often used by clinicians as a screening tool despite its poor sensitivity6,15. Indeed, in our MCI population, a standard cutoff score of ≤25 captures only 34% of participants (Fig. 1b). In light of this, we asked whether the rich data set at hand, in conjunction with ML (Supplement S4), would allow us to detect MCI, and which combination of features would afford the highest classification performance. To address this in a systematic way, we built successive machine learning models with different input data and evaluated their performance using standard metrics (Methods).

In the survey-only model, scores from ADCS-ADL-MCI (collected from HC and MCI cohorts) were considered as the sole input, representative of the type of self-report available to clinicians for diagnostic purposes. Similar to previous studies8, we observed that it is possible to detect MCI using this survey, although the performance of the model is not sufficient to satisfy clinical criteria (Fig. 4a, Table 2)44. It is worth noting that the performance of this model is driven by a high specificity (0.84) or proper labeling of HC individuals, while sensitivity or proper labeling of MCI individuals was rather low (0.62).
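The sensitivity (0.62) and specificity (0.84) quoted above, and the AUC values reported for each model, are standard classification metrics. A dependency-free sketch of how such quantities can be computed is given below; the labels and scores are invented toy values, the 0.5 binarization threshold is an assumption, and the AUC uses the rank-based definition (the probability that a randomly chosen MCI participant scores higher than a randomly chosen HC, with ties counted as one half).

```python
def sensitivity_specificity(y_true, y_pred):
    """Sensitivity (true-positive rate) and specificity (true-negative rate).

    Labels are 1 for MCI and 0 for HC.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(y_true, scores):
    """Area under the ROC curve via pairwise comparison of scores."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Invented toy example, not study data.
toy_labels = [1, 1, 1, 0, 0, 0]
toy_scores = [0.9, 0.6, 0.4, 0.5, 0.2, 0.1]
toy_pred = [int(s >= 0.5) for s in toy_scores]  # assumed 0.5 threshold

toy_sens, toy_spec = sensitivity_specificity(toy_labels, toy_pred)
toy_auc = roc_auc(toy_labels, toy_scores)
```

Because the AUC depends only on how scores are ranked, it does not change with the binarization threshold, whereas sensitivity and specificity do.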

a Model performance when using only the ADCS-ADL-MCI survey score. Note that the model, while good at detecting healthy controls, does not have good sensitivity to MCI. b Combining behavioral metrics from tasks with survey responses did not improve the model performance. c When using survey data as well as both neural and behavioral features from tasks, the model performance was starkly improved. In a–c: Left, model prediction raw scores for each group (x-axis); shaded gray areas demonstrate the utility of adapting thresholds to capture an “Inconclusive” population. Middle, ROC curve with AUC shown; the diagonal indicates chance level. Right, the confusion matrix when model outputs are binarized.

We also performed an additional analysis, beyond the traditional ML metrics, to further quantify the model performance in terms of its predictions, and highlight ways in which clinicians could use adaptive thresholds to meet screening needs (Methods). This method introduced a post-hoc relabeling of participants with inconclusive predictions. Notice the large percentage of participants that would be deemed “Inconclusive” when using only the ADCS-ADL-MCI score (50.50%).
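The idea of relabeling uncertain predictions as “Inconclusive” can be illustrated with a simple two-threshold rule on the model’s output probability. The exact procedure is described in the Methods and is not reproduced here; the function names and the 0.3/0.7 cut points below are purely hypothetical.

```python
def triage(probabilities, lower=0.3, upper=0.7):
    """Map each predicted MCI probability to HC, MCI, or Inconclusive.

    lower and upper are illustrative thresholds, not values from the study.
    """
    labels = []
    for p in probabilities:
        if p <= lower:
            labels.append("HC")
        elif p >= upper:
            labels.append("MCI")
        else:
            labels.append("Inconclusive")
    return labels


def inconclusive_rate(labels):
    """Fraction of participants that fall in the inconclusive band."""
    return labels.count("Inconclusive") / len(labels)


# Invented probabilities for four hypothetical participants.
toy_labels = triage([0.05, 0.35, 0.50, 0.95])
toy_rate = inconclusive_rate(toy_labels)
```

Widening the band trades coverage for confidence: fewer participants receive a definite label, but those who do are further from the decision boundary.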

In addition to survey-based self-reported deficits, we included cognitive performance as input to the model by adding the behavioral metrics from both the Verbal Fluency and N-Back tasks (10 total input features: 9 behavior; 1 survey). Similar task-based measures of cognition may also be available to clinicians. This model, with combined behavioral and survey data, performed very similarly to the model with only survey data as inputs (Fig. 4b). Here, too, performance within the HC cohort was much better than performance within the MCI cohort (i.e., high specificity and low sensitivity, respectively). This further suggested that these features by themselves were not sufficient to provide comprehensive diagnostic power.

Beyond measures currently available to clinicians, we added brain metrics as input to the model. These include GLM-derived measures of task-coupled activity for both chromophores, all brain areas, and all conditions from the Verbal Fluency and N-Back tasks (430 total input features: 420 GLM features; 9 behavior; 1 survey). The performance of this model not only surpassed those of previous models (Fig. 4c, Table 2), but also resulted in a sensitivity and specificity that were more closely matched. Notice that the raw scores (Fig. 4c, left) have shifted toward more decisive probabilities (e.g., 0 for HC and 1 for MCI). As a result, there are significantly fewer points that fall into the shaded region, representing “Inconclusive” participants (5.95%) in the adaptive decision-making threshold analysis.
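The count of 420 GLM features is stated without a breakdown. One factorization that reproduces it, inferred from the 35 module-level regions, two chromophores, and three conditions per task described earlier, is sketched below; the feature-naming scheme is hypothetical.

```python
from itertools import product

N_MODULES = 35                    # local regions (module locations)
CHROMOPHORES = ("HbO", "HbR")
CONDITIONS = {
    "N-Back": ("0-back", "1-back", "2-back"),
    "Verbal Fluency": ("control", "semantic", "phonological"),
}

# One GLM-derived value per task condition, chromophore, and module.
glm_features = [
    f"{task}|{condition}|{chromophore}|module_{module}"
    for task, conditions in CONDITIONS.items()
    for condition, chromophore, module in product(
        conditions, CHROMOPHORES, range(1, N_MODULES + 1)
    )
]

N_BEHAVIOR = 9   # behavioral features, as stated in the text
N_SURVEY = 1     # ADCS-ADL-MCI score

n_glm = len(glm_features)
n_total = n_glm + N_BEHAVIOR + N_SURVEY
```

With 101 participants, this leaves roughly four times as many candidate features as observations, which is why feature selection and regularization matter in the modeling step.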

The feature selection procedures performed within the nested CV resulted in approximately 100-200 features being passed to the logistic regression model on each outer fold (Supplement S5a). Visualization of the top features in the model (Supplement S5b) revealed that: (1) indeed regularization forced many of the weights towards zero such that the average weight of the top features falls off quickly (exponential decay); (2) many of the most important features corresponded to the task-related brain areas and behavioral measures that exhibited significant differences between cohorts in our preliminary analyses (e.g., Verbal Fluency Control HbR Occipital Module 30; 2-Back HbO Left Temporal Module 7; 1-Back accuracy); and (3) neural measures from both tasks, behavioral measures from both tasks, and the ADCS-ADL-MCI survey score all appear within the top 10 features.
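A nested cross-validation of this kind can be sketched with scikit-learn as follows. This is an illustrative setup under stated assumptions rather than the authors’ pipeline: the univariate F-test selector, the candidate values of `k` and `C`, the fold counts, and the default L2 penalty are all choices made here for the example (the source does not specify them), and the data are synthetic. The key property is that scaling and feature selection sit inside the pipeline, so they are refit on the training portion of every fold and never see held-out participants.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import GridSearchCV, StratifiedKFold, cross_val_predict
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler


def build_search(inner_splits=3, seed=0):
    """Inner loop: tune the number of kept features and the regularization strength."""
    pipeline = Pipeline([
        ("scale", StandardScaler()),
        ("select", SelectKBest(score_func=f_classif)),
        ("clf", LogisticRegression(max_iter=2000)),  # L2-regularized by default
    ])
    grid = {"select__k": [100, 200], "clf__C": [0.01, 0.1, 1.0]}  # assumed grid
    inner = StratifiedKFold(n_splits=inner_splits, shuffle=True, random_state=seed)
    return GridSearchCV(pipeline, grid, scoring="roc_auc", cv=inner)


def nested_cv_probabilities(X, y, outer_splits=5, seed=0):
    """Outer loop: out-of-fold probability of the positive class for every sample."""
    outer = StratifiedKFold(n_splits=outer_splits, shuffle=True, random_state=seed)
    proba = cross_val_predict(
        build_search(seed=seed), X, y, cv=outer, method="predict_proba"
    )
    return proba[:, 1]


# Synthetic stand-in with the same shape as the full feature set (101 x 430).
X_demo, y_demo = make_classification(
    n_samples=101, n_features=430, n_informative=20, random_state=0
)
demo_scores = nested_cv_probabilities(X_demo, y_demo)
demo_auc = roc_auc_score(y_demo, demo_scores)
```

Inspecting the fitted coefficients of the selected model on each outer fold would give a feature-importance view analogous to the one summarized in Supplement S5.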

Though ADCS-ADL-MCI ranked high in terms of feature importance, we tested whether a model with only task-based features (i.e., neural and behavioral data) could yield satisfactory results without the need to include survey data (429 total input features: 420 GLM; 9 behavior). This is of particular interest given that administering surveys is a time consuming task and self-report questionnaires are vulnerable to misrepresentation of symptomatology, deceit, and interindividual differences in subjective scales. The performance of this model was on par with the model that included ADCS-ADL-MCI survey (Table 2). This suggests that the addition of survey data may not lead to a significant information gain beyond that already available in the task-based features, or at least that the model is able to compensate by increasing the weights of task-based features that manifest some representation of the ADCS-ADL-MCI score.

Taken together, these results suggest that brain-based measures of cognitive function in domains affected by early cognitive impairment could serve as valuable tools for clinicians and primary care doctors. They may also offer a more accurate assessment of cognitive deficits compared to self-reported measures.

Discussion

In the current study, we examined whether MCI can be detected from data recorded with Kernel Flow2 while participants (MCI patients and age-matched HCs) performed short, commonly used cognitive tasks. We found that our ML model not only captured expert clinician-based diagnosis of MCI, but also exhibited much higher performance than screening tools such as the MMSE and the self-report ADCS-ADL-MCI survey. Moreover, the model shed light on the features necessary for classifying MCI and underscored the importance of both the brain and behavior data from the cognitive tasks.

To classify MCI, we used logistic regression with feature selection and regularization, which not only handled a relatively large number of input features, but also provided a clear view of feature importance by examining the model’s weights. The advantage of this approach was the offered transparency and interpretability that can be lacking in more complex models. Consistent with its role as a screening tool, ADCS-ADL-MCI ranked in the top five features, though it was prone to false negatives when used in isolation. Furthermore, reflecting the involvement of memory and language dysfunctions in MCI28,45,46,47, behavioral measures from both tasks were also top features. Highlighting the advantage of using finer-grained quantifications of task performance, it was not word rate, as typically assessed in clinical cognitive tests, but inter-word interval—an indirect measure of word clustering with demonstrated importance in the literature29,30,31—that had a more significant role in the model. Finally, we found that neural metrics across both chromophores, all task conditions (including control conditions), and over many regions of the head, especially temporal regions, appeared among the top features. This highlights the necessity of including neural activity recorded over tasks that cover different domains of cognitive impairment, and provides evidence that a similar protocol may be used in the future in lieu of or in addition to standard cognitive assessments.

Another strength of the current approach is that our model labels are based on clinician diagnoses. Our patient cohort was enrolled across several clinical sites with different doctors and clinical protocols. We view this “real world” labeling of our data as a strength, as it allows us to capture something broad and common across diverse protocols for diagnosing patients with MCI. These cognitive assessments usually involve a battery of tests and can take up to 3 h; introducing more efficient and streamlined procedures, such as short neural recordings, could therefore be beneficial for diagnostic purposes. A critical next step in the development of this biomarker is validation of the generalizability of the classification model to an out-of-sample cohort. To further enhance its clinical utility, future work should also investigate distinctions between amnestic and non-amnestic subtypes of MCI.

Historically, neural recordings were cumbersome and/or restricted to hospital settings. Here, we leverage the ease-of-use and portability of the Kernel Flow2 system, which allowed us to carry out the current study at multiple sites. It is possible, nonetheless, that data quality metrics may vary depending on the clinic in which data was collected. While we did not include “data collection site” as a factor in our model and analyses, we acknowledge that this may require additional controls. To ensure homogeneous data quality across different locations, we not only monitored incoming data post-hoc but also conducted post-study interviews with individual clinics to receive feedback for further streamlining our protocols, which we plan to integrate into future data collection.

One limitation of our current data collection is potential differences in headset placement on different participants. Although operators are instructed to follow best practices48 to place the headset according to spatial landmarks on the head, we anticipate variability from participant to participant as well as between placements on a given individual. We have previously shown that within-individual placement variability is minimal24, and to further alleviate potential differences among participants, we averaged neural activity over larger areas (module-level analyses). Real-time placement aids, such as automated computer-vision feedback during setup, could further reduce this variability.

In the current manuscript we focused on cognitive tasks with behavioral components that are often incorporated into MCI clinical assessments; in future analyses, however, we plan to assess both the extent to which brain metrics extracted from resting state recordings (i.e., measures not linked to cognitive demands) can supplement the diagnostic power of our ML models and how well such metrics classify MCI on their own. A vast literature on MCI detection using resting state fMRI11,49 and fNIRS50,51 data provides evidence that features such as functional connectivity within and across different networks and fALFF may be clinically useful. Another feature that can be extracted from our TD-fNIRS data, absolute oxygenation during the resting state session16, may offer additional insights into the gradient of cognitive function in healthy controls, MCI, and Alzheimer’s disease.

Taken together, we showcase the ability of Kernel Flow2 to detect MCI, as defined by clinician diagnosis, with high classification performance. Although a time-consuming battery of cognitive tests is the current gold standard for MCI diagnosis, we anticipate that neurobehavioral measurements can offer valuable insights into the trajectory of cognitive decline. With adequate testing and widespread adoption, these measurements may provide a shorter, more reliable, and more objective standardized replacement for these cognitive tests in the future.

Methods

Participants and screening procedures

Clinically diagnosed MCI patients (n = 50) and age-matched healthy controls (n = 51) completed this study (NCT05996575). All patients were diagnosed by an expert clinician (neurologist or psychiatrist). Participants gave written informed consent before beginning the study in accordance with the ethical review of the Advarra IRB (#Pro00071712), which approved this study, and the Declaration of Helsinki. Patient data were collected at two clinical locations, and healthy control data was collected at both clinical locations and Kernel.

All participants were required to meet the following inclusion criteria: (1) 55–85 years of age, inclusive, at time of enrollment, (2) the ability to provide informed consent on their own, and (3) fluency in English (speaking and reading); and none of the following exclusion criteria: (1) current substance or alcohol dependence, and/or alcohol or substance abuse as determined by CAGE-AID assessment for drug and alcohol abuse, with a score of 2 or higher resulting in exclusion, (2) uncorrected major visual or auditory deficits that would prevent them from completing a study task, (3) current or recent (in the past 6 months) chemotherapy and/or radiation for any cancer, and (4) major medical illnesses and psychiatric conditions (other than MCI). Additionally, MCI patients were eligible to participate in the study if they had a diagnosis of MCI (amnestic or non-amnestic), as determined by a clinician, that had not progressed to an Alzheimer’s or dementia diagnosis. Whether or not they were suspected of having underlying AD pathology was not part of our inclusion or exclusion criteria. Moreover, healthy controls were eligible to participate in the study if they fulfilled none of the following additional exclusion criteria: (1) prior MCI or memory impairment diagnosis, (2) first-degree relative with dementia or clinically relevant memory problems, and (3) Alzheimer’s or dementia diagnosis.

Data was initially collected from 130 participants, 65 HCs and 65 MCI participants. We excluded 2 participants (1 HC and 1 MCI) whose age fell outside the range specified in the inclusion criteria. Additionally, we excluded the 8 youngest HC participants (those under age 59) to age-match the MCI and HC cohorts. Of the remaining participants (56 HC and 62 MCI), only those with complete datasets, i.e., participants who finished the entire protocol, were considered for further analysis. There were 5 HCs with incomplete visits (4 encountered technical issues and 1 stopped early due to headset discomfort). In the MCI cohort, 12 participants had incomplete visits (1 fell asleep, 1 stopped early due to headset discomfort, 3 had missing data, and 7 encountered technical issues, many of which were related to clinic Wi-Fi connections). This left 51 HC and 50 MCI participants with complete visits, who were included in analyses.

Task design

Tasks were designed and presented using the Unity game engine while participants’ neurophysiological data was recorded with the Kernel Flow2 system (Fig. 5a, b). In the Verbal Fluency task (Fig. 5c), participants orally produced words in response to three types of prompts: phonological (words beginning with a specified letter), semantic (words from a given category), and control (reciting the days of the week repeatedly). Prompts appeared in white text on a black screen. Control instructions read, “At a slow and steady pace, repeat the days of the week.” Phonological prompts read, “Say words starting with {insert letter},” and semantic prompts read, “Say {insert category}.” Participants were instructed to generate as many relevant words as possible for as long as each prompt was on the screen. They were instructed to avoid repeat words and proper nouns. They were also required to press the spacebar each time they produced a word. They were told that they could whisper or speak the words aloud.

Each participant completed one full run of the task at their study visit. A run began with a 10-s rest period (reminding participants to press the spacebar) followed by a 20-s control block (“precontrol”; not utilized in behavioral or neural analysis) to get the participant into the rhythm of the task. It then progressed through a 60-s phonological block, in which three different letters were presented in succession for 20 s each. A 60-s control block followed. After a 10-s rest, the semantic block began, with three categories presented for 20 s each. The final block was a 60-s control block, and the task concluded with a 10-s rest period. All participants received identical prompts in the same order. The phonological prompts were F/A/S, and the semantic prompts were animals/fruits/sports. Before data collection, participants performed a practice session in which a semantic prompt of “colors” and a phonological prompt of the letter “V” were used.
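The run structure described above can be written out as a simple block schedule. The following is a minimal sketch that only restates the timings given in the text; the names `SCHEDULE` and `block_onsets` are hypothetical and are not taken from the study's task code.

```python
# Block schedule for one Verbal Fluency run, restating the timings in the text.
# Each entry: (block label, prompt shown, duration in seconds).
SCHEDULE = [
    ("rest", None, 10),
    ("precontrol", "days of the week", 20),  # not used in the analyses
    ("phonological", "F", 20),
    ("phonological", "A", 20),
    ("phonological", "S", 20),
    ("control", "days of the week", 60),
    ("rest", None, 10),
    ("semantic", "animals", 20),
    ("semantic", "fruits", 20),
    ("semantic", "sports", 20),
    ("control", "days of the week", 60),
    ("rest", None, 10),
]


def block_onsets(schedule):
    """Return (onset_s, duration_s, label, prompt) tuples and the run length."""
    events, t = [], 0
    for label, prompt, duration in schedule:
        events.append((t, duration, label, prompt))
        t += duration
    return events, t


events, total_s = block_onsets(SCHEDULE)
for onset, duration, label, prompt in events:
    print(f"{onset:>4d} s  {duration:>3d} s  {label:<13s} {prompt or ''}")
print("total run length:", total_s, "s")
```

Under these timings, one run lasts 290 s, with the phonological block starting at 30 s and the semantic block at 160 s.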

The N-Back task is a working memory task where participants were asked to respond when the presented stimulus matched one presented N steps earlier (Fig. 5d). The difficulty was adjusted by modifying the load factor “N”. In this implementation, the task was gamified to resemble a card game for increased engagement. It included three block types: 0-back, 1-back, and 2-back, using numbers displayed on virtual playing cards.

All blocks of the task began with a stack of face-down cards in the upper right corner of the screen. In 0-back blocks, one card at a time slid down from the stack to the center of the screen, flipped to reveal its number, remained face-up for 0.75-s, and then flipped face-down as it slid left and exited the screen. Participants were asked to press the spacebar as quickly as possible when a card with the number “0” appeared face-up. This repeated for 28 trials. In 1-back blocks, participants were asked to press the spacebar if the number on the current card matched the one flipped immediately before it. The card movement was similar to a 0-back block, but as each card flipped face-down it slid to the left and remained face-down on the screen as the next card appeared and flipped face-up. If the numbers on two consecutive cards (the face-up and face-down cards) matched, the participant was instructed to press the spacebar. This process also repeated for 28 trials. Finally, in 2-back blocks, participants were asked to press the spacebar if the number on the current card matched the number presented two cards prior. The motion was similar to a 1-back block, but three consecutive cards remained on the screen at once—two face-down on the left and the current card face-up on the right. If the leftmost card matched the face-up card, the participant was to press the spacebar. The leftmost card slid off the screen when a new card appeared. This repeated for 28 trials. Each task run generated unique stimuli with a 25% match rate. The block order was always 0-back, 2-back, 1-back, 0-back, 1-back, 2-back. A 25-s rest period was included between blocks and at the beginning and end of the task. Prior to data collection, each participant completed a guided tutorial combining instructions and practice.
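To illustrate how a block of stimuli with a 25% match rate could be produced, the sketch below generates one 28-trial sequence per block type. It rests on assumptions that the text does not state: cards are taken to show single digits 0–9, the match rate is enforced exactly (7 targets per block) rather than on average, and the function name `make_nback_block` is hypothetical.

```python
import random


def make_nback_block(n, n_trials=28, match_rate=0.25, rng=None):
    """Return (digits, is_target) for one block.

    n = 0: a trial is a target when the card shows 0.
    n >= 1: a trial is a target when it equals the card n positions back.
    """
    rng = rng or random.Random()
    n_targets = round(match_rate * n_trials)  # 7 targets for 28 trials
    # A match is only possible once n earlier cards exist.
    target_idx = set(rng.sample(range(n, n_trials), n_targets))
    digits = []
    for i in range(n_trials):
        if n == 0:
            d = 0 if i in target_idx else rng.randint(1, 9)
        elif i < n:
            d = rng.randint(0, 9)
        elif i in target_idx:
            d = digits[i - n]  # force a match
        else:
            # Force a non-match by excluding the digit n positions back.
            d = rng.choice([x for x in range(10) if x != digits[i - n]])
        digits.append(d)
    is_target = [i in target_idx for i in range(n_trials)]
    return digits, is_target


BLOCK_ORDER = [0, 2, 1, 0, 1, 2]  # fixed block order reported in the text
rng = random.Random(0)
run = [make_nback_block(n, rng=rng) for n in BLOCK_ORDER]
```

Drawing non-target digits from the set that excludes the digit N positions back keeps accidental matches out of the sequence, so the number of targets per block is known in advance.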

Cognitive screening and survey data collection

Multiple surveys were administered to the participants during the study. For the HC population, the Mini-Cog52 was employed as a quick dementia screening tool. Scores range from 0 to 5, and the customary cutoff of ≥3 was used to verify healthy functioning. For the MCI population, MMSE7,32 scores were collected from the participating clinics. These scores range from 0 to 30, with lower scores indicating lower cognitive functioning. The following additional surveys were administered to both the HC and MCI cohorts:

1. Self-reported Alzheimer’s Disease Cooperative Study Activities of Daily Living (ADL) for Mild Cognitive Impairment (ADCS-ADL-MCI) survey53: This survey assesses the degree of functional impairment resulting from cognitive impairment. It consists of 20 questions and yields a score between 0 and 49, with lower scores suggesting more functional/cognitive impairment.

2. Generalized Anxiety Disorder-7 (GAD-7)54: Commonly used as an initial screening tool for anxiety disorders, this questionnaire consists of 7 questions and yields a score between 0 and 21, with higher scores suggesting more severe symptoms.

3. Geriatric Depression Scale (GDS)55: This 15-item self-report questionnaire is an initial screening tool for depression in older adults. It yields a score between 0 and 15, with higher scores indicating higher symptom severity.

fNIRS data collection and feature extraction

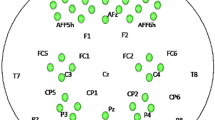

Neurophysiological data were collected using the Kernel Flow2 TD-fNIRS system25,26 as participants wore the headset during cognitive tasks. System specifications have been described in detail previously24. Briefly, distributions of the times of flight of photons (DTOFs) were recorded from over 3000 source–detector pairs (channels) across 40 modules arranged over the prefrontal, parietal, temporal, and occipital areas (effective sampling rate 3.76 Hz) (Fig. 5a). Of the original configuration of modules, 35 were deemed most relevant to our tasks (the most posterior modules over the inferior occipital regions were removed) (Supplement S3). Note that the L/R parietal regions span mid to fronto-parietal cortex, while the occipital region spans occipital to posterior parietal cortex.

Data preprocessing and relative hemoglobin concentrations

Data preprocessing followed the procedures used in prior publications24,56,57. A channel selection procedure (using histogram shape)25 was followed by computation of the DTOF moments (i.e., sum, mean, and variance)58,59. We then used a sensitivity method23, deriving sensitivities from a finite element forward model in NIRFAST60,61, to obtain the relative changes in absorption coefficients for each wavelength. These were subsequently converted to changes in oxyhemoglobin and deoxyhemoglobin concentrations (HbO and HbR, respectively) using the modified Beer–Lambert law (mBLL)62. The resulting signals were further processed using motion correction (Temporal Derivative Distribution Repair63), cubic spline interpolation64, detrending using a moving average algorithm (100-s kernel), and finally short channel regression65 (SDS = 8 mm).
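Two of the steps above lend themselves to a short illustration: computing the first three moments of a DTOF, and converting absorption changes at two wavelengths into hemoglobin changes by solving a small linear system. The sketch below is not the study's pipeline. The function names are hypothetical, the extinction matrix in the example is a placeholder rather than tabulated coefficients, and any scaling constants are assumed to be folded into that matrix.

```python
import numpy as np


def dtof_moments(counts, t):
    """Sum, mean time of flight, and variance of one photon-count histogram."""
    counts = np.asarray(counts, dtype=float)
    t = np.asarray(t, dtype=float)
    total = counts.sum()
    mean = (counts * t).sum() / total
    var = (counts * (t - mean) ** 2).sum() / total
    return total, mean, var


def absorption_to_hemoglobin(delta_mua, extinction):
    """Solve delta_mua = extinction @ [dHbO, dHbR] at every time point.

    delta_mua:  (n_wavelengths, n_times) absorption changes.
    extinction: (n_wavelengths, 2) coefficients, columns ordered [HbO, HbR].
    Returns an array of shape (2, n_times): rows are dHbO and dHbR.
    """
    solution, *_ = np.linalg.lstsq(extinction, delta_mua, rcond=None)
    return solution


# Toy histogram: three time bins with counts 1, 2, 1.
total, mean, var = dtof_moments([1, 2, 1], [0.0, 1.0, 2.0])

# Round trip with a PLACEHOLDER extinction matrix (not real coefficients).
E = np.array([[1.0, 3.0], [2.5, 1.8]])
true_conc = np.array([[0.0, 0.5, 1.0], [0.0, -0.2, -0.4]])
recovered = absorption_to_hemoglobin(E @ true_conc, E)
```

With two wavelengths the system is square and has a unique solution whenever the two rows of the extinction matrix are not proportional; the least-squares call also covers the case of more than two wavelengths.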

General linear model (GLM) approach

As previously described in detail56, a design matrix (X: a set of task-specific regressors) and residuals (ε) were used to model the time course of each channel (y: relative HbO and, separately, relative HbR) using the following equation: y = Xβ + ε. Here, the β coefficients represent the contribution of each regressor. A least-squares solution combined with prewhitening of the time courses (using an autoregressive model of order 15, i.e., 4 × the sampling rate of our system) was used to solve this multiple linear regression problem. To construct the design matrix, X, we used the following regressors: (1) the time course of the task blocks as square waves that were then convolved with a canonical hemodynamic response function (one regressor for each block type); (2) drift regressors; and (3) a standard set of Discrete Cosine Transform regressors to remove trends in the data (with a period of 100 s or more), as implemented in the nilearn Python package66. Test statistics, commonly referred to as GLM contrasts, and their p-values were computed between conditions of interest (using t-tests) on the β coefficients. This approach was used for both the Verbal Fluency and N-Back tasks.

1. Verbal Fluency task: The three block types in the experimental design, i.e., control, semantic, and phonological, were used as GLM contrasts.

2. N-Back task: Similar to the Verbal Fluency task, the three block types in the experimental design, i.e., 0-back, 1-back, and 2-back, were used as GLM contrasts.
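The GLM described above can be sketched on a synthetic channel as follows. This is a simplified illustration, not the authors' implementation: it uses plain ordinary least squares and omits the AR(15) prewhitening, the double-gamma response uses assumed shape parameters (6 and 16), the cosine drift basis is written directly in NumPy rather than taken from nilearn, and all function names are hypothetical.

```python
import math
import numpy as np

FS = 3.76  # effective sampling rate (Hz) reported in the text


def canonical_hrf(fs, duration=32.0):
    """Double-gamma response (assumed shape parameters 6 and 16)."""
    t = np.arange(0.0, duration, 1.0 / fs)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    h = peak - undershoot / 6.0
    return h / h.sum()


def block_regressor(n, fs, onsets, duration, hrf):
    """Square wave for the given blocks, convolved with the HRF."""
    box = np.zeros(n)
    for onset in onsets:
        box[int(round(onset * fs)):int(round((onset + duration) * fs))] = 1.0
    return np.convolve(box, hrf)[:n]


def dct_drift(n, fs, min_period=100.0):
    """Cosine regressors whose period is min_period seconds or longer."""
    n_basis = int(2 * n / (fs * min_period))
    k = np.arange(1, n_basis + 1)
    t = np.arange(n)
    return np.cos(np.pi * (t[:, None] + 0.5) * k[None, :] / n)


def fit_ols(y, X):
    """Ordinary least squares: beta, residual variance, and inv(X'X)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = len(y) - np.linalg.matrix_rank(X)
    return beta, resid @ resid / dof, np.linalg.pinv(X.T @ X)


def contrast_t(beta, sigma2, xtx_inv, c):
    """t-statistic for the linear contrast c'beta."""
    c = np.asarray(c, dtype=float)
    return float(c @ beta / np.sqrt(sigma2 * (c @ xtx_inv @ c)))


# Synthetic channel following the Verbal Fluency timing (290-s run).
n = int(290 * FS)
hrf = canonical_hrf(FS)
phon = block_regressor(n, FS, [30], 60, hrf)
sem = block_regressor(n, FS, [160], 60, hrf)
ctrl = block_regressor(n, FS, [90, 220], 60, hrf)
X = np.column_stack([phon, sem, ctrl, dct_drift(n, FS), np.ones(n)])
rng = np.random.default_rng(0)
y = 1.0 * phon + 0.2 * ctrl + 0.05 * rng.standard_normal(n)

beta, sigma2, xtx_inv = fit_ols(y, X)
c = np.zeros(X.shape[1])
c[0], c[2] = 1.0, -1.0  # phonological minus control
t_stat = contrast_t(beta, sigma2, xtx_inv, c)
print("beta (phon, sem, ctrl):", np.round(beta[:3], 2), " t =", round(t_stat, 1))
```

A contrast vector with +1 on one block type and −1 on another tests whether the fitted response differs between the two conditions; with autocorrelated real data, prewhitening would be needed for the t-statistic to be well calibrated.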

Machine learning (ML) for classification

We used a logistic regression model to predict whether a participant belonged to the HC or MCI group (Supplement S4). Expert clinician diagnosis was used to label MCI rather than the score on any particular survey, cognitive test, or questionnaire. We trained and tested different models depending on the inputs (described below). Stratified nested K-fold cross validation was used (K_inner = 10, K_outer = 10), where the inner loop (N_train ≈ 81, N_test ≈ 9) was used for regressing out confounds, feature selection, and hyperparameter optimization, while the outer loop (N_train ≈ 90, N_test ≈ 10) was used to obtain the model performance on the independent test sets. An elastic net regularization with L1-ratio = 0.5 was used to push feature weights towards zero and avoid overfitting with a large number of input features.
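The approximate fold sizes quoted above follow from splitting 101 participants (51 HC, 50 MCI) into 10 stratified outer folds and then splitting each outer training set into 10 inner folds. The sketch below reproduces that arithmetic with a simple round-robin assignment; the function `stratified_folds` is hypothetical and stands in for whatever splitting routine the study used.

```python
import random
from collections import Counter


def stratified_folds(labels, k, seed=0):
    """Assign each sample to one of k folds, balancing classes across folds."""
    rng = random.Random(seed)
    fold_of = [None] * len(labels)
    offset = 0
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            fold_of[i] = (j + offset) % k
        offset += len(idx)  # stagger classes so fold sizes stay even
    return fold_of


labels = ["HC"] * 51 + ["MCI"] * 50
outer = stratified_folds(labels, k=10, seed=0)

sizes = []  # (outer train, outer test, smallest inner test, largest inner test)
for f in range(10):
    train = [i for i in range(len(labels)) if outer[i] != f]
    test = [i for i in range(len(labels)) if outer[i] == f]
    inner = stratified_folds([labels[i] for i in train], k=10, seed=f)
    inner_sizes = Counter(inner).values()
    sizes.append((len(train), len(test), min(inner_sizes), max(inner_sizes)))

for row in sizes:
    print("outer train/test = %d/%d, inner test = %d to %d" % row)
```

Every participant appears in exactly one outer test fold, which is what allows predictions to be concatenated into one out-of-sample prediction per participant.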

In order to prevent data leakage, the following steps were fit on the training set of the inner cross-validation loop and applied to the test set:

1. Imputation of input data: Missing input data, which could result from poor headset coupling in a region, were filled in with the mean value from the training set population.

2. Standardization of the input data: Each input feature was z-scored with respect to the training set population.

3. Residualization of confound variables: Age and gender both exhibited relationships with input features and thus were regressed out from the input features. Specifically, a linear model was fit between the confound variables and each input feature. The residuals were then computed and used in place of the original input features, as they represent the variability in each metric that could not be explained by the confound variables.

4. Feature selection: Feature selection was implemented using statistical tests, namely by applying an independent t-test to each feature between the HC and MCI cohorts. The p-value from this test was later used in step (5).

5. Hyperparameter tuning: The only hyperparameter that was tuned for this model was the alpha threshold applied to the p-values from step (4), which was allowed to vary between 0.05 and 0.30 in steps of 0.05.
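The first three steps above can be sketched as a small fit/transform object whose parameters are estimated from training data only. This is an illustration under assumptions: the steps are applied in the listed order, confounds are passed as a numeric array (age in years, gender coded 0/1), and the class name `LeakageSafePreprocessor` is hypothetical. Feature selection and alpha tuning are not shown.

```python
import numpy as np


class LeakageSafePreprocessor:
    """Mean imputation, z-scoring, and confound residualization.

    All parameters are estimated in fit() from training data only.
    """

    def fit(self, X, confounds):
        X = np.asarray(X, dtype=float)
        self.mean_ = np.nanmean(X, axis=0)          # imputation value
        Xi = np.where(np.isnan(X), self.mean_, X)
        self.std_ = Xi.std(axis=0)
        self.std_[self.std_ == 0] = 1.0             # guard constant features
        Z = (Xi - self.mean_) / self.std_
        # One linear model per feature: Z ~ intercept + confounds.
        self.coef_, *_ = np.linalg.lstsq(self._design(confounds), Z, rcond=None)
        return self

    def transform(self, X, confounds):
        X = np.asarray(X, dtype=float)
        Xi = np.where(np.isnan(X), self.mean_, X)
        Z = (Xi - self.mean_) / self.std_
        return Z - self._design(confounds) @ self.coef_  # residuals

    @staticmethod
    def _design(confounds):
        C = np.asarray(confounds, dtype=float)
        return np.column_stack([np.ones(len(C)), C])


# Synthetic example: 90 training and 10 test participants, 4 features.
rng = np.random.default_rng(0)
conf_train = np.column_stack([rng.uniform(59, 85, 90), rng.integers(0, 2, 90)])
conf_test = np.column_stack([rng.uniform(59, 85, 10), rng.integers(0, 2, 10)])
X_train = rng.standard_normal((90, 4)) + 0.05 * conf_train[:, [0]]
X_test = rng.standard_normal((10, 4)) + 0.05 * conf_test[:, [0]]
X_train[3, 1] = np.nan  # simulate a region with poor headset coupling
X_test[0, 2] = np.nan

prep = LeakageSafePreprocessor().fit(X_train, conf_train)
Z_train = prep.transform(X_train, conf_train)
Z_test = prep.transform(X_test, conf_test)
```

Because the test participants never enter `fit`, their imputed values, scaling, and confound adjustment depend only on statistics of the training set.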

On each outer fold, the best hyperparameter from the inner loop cross validation was used. The model yielded both a predicted label (binary output) and a raw score (probability between 0 and 1). Predictions and scores were concatenated across all outer loops, resulting in one prediction for each participant.

To benchmark the performance of different types of input features we fit three different models: one using only survey response (score from ADCS-ADL-MCI) as input, one using survey responses and behavioral metrics from the two cognitive tasks as input, and one adding neural data thus yielding survey, behavioral, and brain metrics as input. Importantly, the folds were fixed such that they were the same when training all three models, so results could be directly compared. We also trained a model limiting the inputs to those available during the cognitive tasks, i.e., brain and behavioral metrics to investigate the necessity of the survey data to model performance.

To determine which features were contributing the most to the model, we ranked features by the absolute value of the average feature weights over the outer folds of the model and visualized the top 50 input features.
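As a minimal sketch of this ranking, the function below averages each feature's weight across outer folds and sorts by the absolute value of that average. It assumes that a feature not selected in a given fold contributes a weight of zero for that fold, which the text does not specify; the name `rank_features` is hypothetical.

```python
def rank_features(weights_per_fold, names, top_k=50):
    """Rank features by |mean weight across outer folds|, largest first."""
    n_folds = len(weights_per_fold)
    mean_w = [
        sum(fold[j] for fold in weights_per_fold) / n_folds
        for j in range(len(names))
    ]
    order = sorted(range(len(names)), key=lambda j: abs(mean_w[j]), reverse=True)
    return [(names[j], mean_w[j]) for j in order[:top_k]]


# Two folds, three features (toy numbers for illustration only).
weights = [[0.5, -1.0, 0.0], [0.3, -0.8, 0.1]]
ranked = rank_features(weights, ["a", "b", "c"])
print(ranked)
```

Taking the absolute value after averaging means a feature whose sign flips between folds is ranked low, which favors features with a consistent direction of effect.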

We computed the following metrics to capture the performance of our models in detecting MCI:

1. Confusion matrix: The number of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN).

2. Accuracy: (TN + TP)/N_total

3. Recall (Sensitivity): TP/(TP + FN)

4. Precision: TP/(TP + FP)

5. Specificity: TN/(TN + FP)

6. Negative Predictive Value (NPV): TN/(TN + FN)

7. AUC: Area under the receiver operating characteristic (ROC) curve.

8. Adaptive thresholding of the model: Here we followed techniques demonstrated in the literature67 to split the model’s decision threshold into two, creating a new label where scores between these thresholds are deemed “Inconclusive” or “Flagged for Follow-up”. Specifically, the upper threshold is set to the lowest value satisfying a particular specificity (here 0.90), and the lower threshold is similarly set to the highest value satisfying a particular sensitivity (here 0.90).
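The metrics and the two-threshold scheme listed above can be sketched in plain Python as follows. Labels are assumed to be coded 1 for MCI and 0 for HC, a score at or above a threshold counts as a positive call, and the two thresholds are collapsed into one if they would otherwise cross; these conventions and all function names are assumptions rather than details from the study. The example scores are toy values.

```python
def summary_metrics(y_true, y_pred):
    """Confusion-matrix counts and the ratios defined in the list above."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    return {
        "TP": tp, "TN": tn, "FP": fp, "FN": fn,
        "accuracy": (tp + tn) / len(pairs),
        "recall": tp / (tp + fn),
        "precision": tp / (tp + fp),
        "specificity": tn / (tn + fp),
        "npv": tn / (tn + fn),
    }


def auc(y_true, scores):
    """P(random MCI score > random HC score); ties count one half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def dual_thresholds(y_true, scores, min_spec=0.90, min_sens=0.90):
    """Lowest cutoff meeting min_spec and highest cutoff meeting min_sens."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]

    def spec(th):
        return sum(s < th for s in neg) / len(neg)

    def sens(th):
        return sum(s >= th for s in pos) / len(pos)

    cands = sorted(set(scores))
    upper = min((th for th in cands if spec(th) >= min_spec), default=float("inf"))
    lower = max((th for th in cands if sens(th) >= min_sens), default=float("-inf"))
    return min(lower, upper), upper  # collapse to one cutoff if they cross


def three_way_label(score, lower, upper):
    if score >= upper:
        return "MCI"
    if score < lower:
        return "HC"
    return "Inconclusive"


y_true = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
scores = [0.1, 0.2, 0.3, 0.4, 0.7, 0.35, 0.6, 0.8, 0.9, 0.95]
y_pred = [int(s >= 0.5) for s in scores]

metrics = summary_metrics(y_true, y_pred)
area = auc(y_true, scores)
lower, upper = dual_thresholds(y_true, scores)
labels3 = [three_way_label(s, lower, upper) for s in scores]
```

In this toy example, scores between 0.35 and 0.8 fall into the "Inconclusive" band; widening the required sensitivity or specificity widens that band.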

Data availability

The data that support the findings of this study are not openly available for reasons of sensitivity, but are available from the corresponding author upon reasonable request and with a data sharing agreement.

Code availability

The underlying code for this study is not publicly available for proprietary reasons.

References

Petersen, R. C. et al. Mild cognitive impairment: a concept in evolution. J. Intern. Med. 275, 214–228 (2014).

More than normal aging: understanding mild cognitive impairment. Alzheimer’s Assoc. (2022).

Frech, F. H. et al. Economic impact of progression from mild cognitive impairment to Alzheimer disease in the United States. J. Prev. Alzheimer’s Dis. https://doi.org/10.14283/jpad.2024.68 (2024).

Hansson, O. Biomarkers for neurodegenerative diseases. Nat. Med. 27, 954–963 (2021).

Self, W. K. & Holtzman, D. M. Emerging diagnostics and therapeutics for Alzheimer disease. Nat. Med. 29, 2187–2199 (2023).

Nasreddine, Z. S. et al. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 53, 695–699 (2005).

Folstein, M. F., Folstein, S. E. & McHugh, P. R. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 12, 189–198 (1975).

Pedrosa, H. et al. Functional evaluation distinguishes MCI patients from healthy elderly people — The ADCS/MCI/ADL scale. J. Nutr. Health Aging 14, 703–709 (2010).

Anderson, N. D. State of the science on mild cognitive impairment (MCI). CNS Spectr. 24, 78–87 (2019).

Ding, Z., Lee, T. & Chan, A. S. Digital cognitive biomarker for mild cognitive impairments and dementia: a systematic review. JCM 11, 4191 (2022).

Ibrahim, B. et al. Diagnostic power of resting-state fMRI for detection of network connectivity in Alzheimer’s disease and mild cognitive impairment: a systematic review. Hum. Brain Mapp. 42, 2941–2968 (2021).

Lee, T., Guo, L. & Chan, A. S. fNIRS as a biomarker for individuals with subjective memory complaints and MCI. Alzheimer’s Dement. 20, 5170–5182 (2024).

Deng, J. et al. Novel methodology for detection and prediction of mild cognitive impairment using resting-state EEG. Alzheimer’s Dement. 20, 145–158 (2024).

Carson, N., Leach, L. & Murphy, K. J. A re-examination of Montreal Cognitive Assessment (MoCA) cutoff scores. Int. J. Geriat. Psychiatry 33, 379–388 (2018).

Gluhm, S. et al. Cognitive performance on the Mini-Mental State Examination and the Montreal Cognitive Assessment across the healthy adult lifespan. Cogn. Behav. Neurol. 26, 1–5 (2013).

Yeung, M. K. & Chan, A. S. Functional near-infrared spectroscopy reveals decreased resting oxygenation levels and task-related oxygenation changes in mild cognitive impairment and dementia: a systematic review. J. Psychiatr. Res. 124, 58–76 (2020).

Butters, E., Srinivasan, S., O’Brien, J. T., Su, L. & Bale, G. A promising tool to explore functional impairment in neurodegeneration: a systematic review of near-infrared spectroscopy in dementia. Ageing Res. Rev. 90, 101992 (2023).

Park, J.-H. Can the fNIRS-derived neural biomarker better discriminate mild cognitive impairment than a neuropsychological screening test?. Front. Aging Neurosci. 15, 1137283 (2023).

Yoo, S.-H. et al. Diagnosis of mild cognitive impairment using cognitive tasks: a functional near-infrared spectroscopy study. CAR 17, 1145–1160 (2021).

Yang, D. et al. Detection of mild cognitive impairment using convolutional neural network: temporal-feature maps of functional near-infrared spectroscopy. Front Aging Neurosci. 12, 141 (2020).

Li, R. et al. Early detection of Alzheimer’s disease using non-invasive near-infrared spectroscopy. Front. Aging Neurosci. 10, 366 (2018).

Lange, F. & Tachtsidis, I. Clinical brain monitoring with time domain NIRS: a review and future perspectives. Appl. Sci. 9, 1612 (2019).

Ortega-Martinez, A. et al. How much do time-domain functional near-infrared spectroscopy (fNIRS) moments improve estimation of brain activity over traditional fNIRS? Neurophotonics 10, https://doi.org/10.1117/1.NPh.10.1.013504 (2022).

Dubois, J. et al. Reliability of brain metrics derived from a time-domain functional near-infrared spectroscopy system. Sci. Rep. 14, 17500 (2024).

Ban, H. Y. et al. Kernel Flow: a high channel count scalable time-domain functional near-infrared spectroscopy system. J. Biomed. Opt. 27, https://doi.org/10.1117/1.JBO.27.7.074710 (2022).

Chekin, Y. et al. A compact time-domain diffuse optical tomography system for cortical neuroimaging. Imaging Neurosci. 3, imag_a_00475 (2025).

Yang, D., Hong, K.-S., Yoo, S.-H. & Kim, C.-S. Evaluation of neural degeneration biomarkers in the prefrontal cortex for early identification of patients with mild cognitive impairment: an fNIRS study. Front Hum. Neurosci. 13, 317 (2019).

Nutter-Upham, K. E. et al. Verbal fluency performance in amnestic MCI and older adults with cognitive complaints. Arch. Clin. Neuropsychol. 23, 229–241 (2008).

Zhao, Q., Guo, Q. & Hong, Z. Clustering and switching during a semantic verbal fluency test contribute to differential diagnosis of cognitive impairment. Neurosci. Bull. 29, 75–82 (2013).

Bertola, L. et al. Graph analysis of verbal fluency test discriminate between patients with Alzheimer’s disease, mild cognitive impairment and normal elderly controls. Front. Aging Neurosci. 6, https://doi.org/10.3389/fnagi.2014.00185 (2014).

Pakhomov, S. V. S. & Hemmy, L. S. A computational linguistic measure of clustering behavior on semantic verbal fluency task predicts risk of future dementia in the Nun Study. Cortex 55, 97–106 (2014).

Gallegos, M. et al. 45 Years of the Mini-Mental State Examination (MMSE): a perspective from ibero-america. Dement. Neuropsychol. 16, 384–387 (2022).

Ma, L. Depression, anxiety, and apathy in mild cognitive impairment: current perspectives. Front. Aging Neurosci. 12, 9 (2020).

Zacková, L., Jáni, M., Brázdil, M., Nikolova, Y. S. & Marečková, K. Cognitive impairment and depression: meta-analysis of structural magnetic resonance imaging studies. NeuroImage: Clin. 32, 102830 (2021).

López Zunini, R. A. et al. Event-related potentials elicited during working memory are altered in mild cognitive impairment. Int. J. Psychophysiol. 109, 1–8 (2016).

McDonnell, M. et al. Verbal fluency as a screening tool for mild cognitive impairment. Int. Psychogeriatr. 32, 1055–1062 (2020).

Lamichhane, B., Westbrook, A., Cole, M. W. & Braver, T. S. Exploring brain-behavior relationships in the N-back task. NeuroImage 212, 116683 (2020).

Papma, J. M. et al. The influence of cerebral small vessel disease on default mode network deactivation in mild cognitive impairment. NeuroImage Clin. 2, 33–42 (2013).

Yaple, Z. A., Stevens, W. D. & Arsalidou, M. Meta-analyses of the n-back working memory task: fMRI evidence of age-related changes in prefrontal cortex involvement across the adult lifespan. NeuroImage 196, 16–31 (2019).

Niu, H. et al. Reduced frontal activation during a working memory task in mild cognitive impairment: a non-invasive near-infrared spectroscopy study. CNS Neurosci. Ther. 19, 125–131 (2013).

Baldo, J. V., Schwartz, S., Wilkins, D. & Dronkers, N. F. Role of frontal versus temporal cortex in verbal fluency as revealed by voxel-based lesion symptom mapping. J. Int. Neuropsych. Soc. 12, https://doi.org/10.1017/S1355617706061078 (2006).

Kim, M., et al. Investigation of cerebral hemodynamic changes in mild cognitive impairment due to Alzheimer’s disease during a verbal fluency task. In: Van Toi V., Le, T. Q., Ngo, H. T., Nguyen, T.-H., editors. 7th International Conference on the Development of Biomedical Engineering in Vietnam (BME7), vol. 69, 379–382 (Springer, 2020).

Liampas, I. et al. The contribution of Functional Near-Infrared Spectroscopy (fNIRS) to the study of neurodegenerative disorders: a narrative review. Diagnostics 14, 663 (2024).

Weissberger, G. H. et al. Diagnostic accuracy of memory measures in Alzheimer’s dementia and mild cognitive impairment: a systematic review and meta-analysis. Neuropsychol. Rev. 27, 354–388 (2017).

Monsch, A. U. et al. Comparisons of verbal fluency tasks in the detection of dementia of the Alzheimer type. Arch. Neurol. 49, 1253–1258 (1992).

Fraga, F. J. et al. Early diagnosis of mild cognitive impairment and Alzheimer’s with event-related potentials and event-related desynchronization in N-back working memory tasks. Comput. Methods Prog. Biomed. 164, 1–13 (2018).

Borkowska, A., Drożdż, W., Jurkowski, P. & Rybakowski, J. K. The Wisconsin Card Sorting Test and the N-back test in mild cognitive impairment and elderly depression. World J. Biol. Psychiatry 10, 870–876 (2009).

Yücel, M. A. et al. Best practices for fNIRS publications. Neurophotonics 8, https://doi.org/10.1117/1.NPh.8.1.012101 (2021).

Lin, L., Xing, G. & Han, Y. Advances in resting state neuroimaging of mild cognitive impairment. Front Psychiatry 9, 671 (2018).

Yang, D. & Hong, K.-S. Quantitative assessment of resting-state for mild cognitive impairment detection: a functional near-infrared spectroscopy and deep learning approach. JAD 80, 647–663 (2021).

Zhang, S. et al. Early screening model for mild cognitive impairment based on resting-state functional connectivity: a functional near-infrared spectroscopy study. Neurophotonics 9, 045010 (2022).

Borson, S., Scanlan, J., Brush, M., Vitaliano, P. & Dokmak, A. The Mini-Cog: a cognitive vital signs measure for dementia screening in multi-lingual elderly. Int. J. Geriat Psychiatry 15, 1021–1027 (2000).

Potashman, M. et al. Psychometric properties of the Alzheimer’s Disease Cooperative Study – Activities of Daily Living for Mild Cognitive Impairment (ADCS-MCI-ADL) scale: a post hoc analysis of the ADCS ADC-008 trial. BMC Geriatr. 23, 124 (2023).

Spitzer, R. L., Kroenke, K., Williams, J. B. W. & Löwe, B. A brief measure for assessing generalized anxiety disorder: the GAD-7. Arch. Intern. Med. 166, 1092 (2006).

Yesavage, J. A. & Sheikh, J. I. Geriatric Depression Scale (GDS): recent evidence and development of a shorter version. Clin. Gerontol. 5, 165–173 (1986).

Dubois, J. et al. Change in brain asymmetry reflects level of acute alcohol intoxication and impacts on inhibitory control. Sci. Rep. 13, 10278 (2023).

Castillo, A. et al. Measuring acute effects of subanesthetic ketamine on cerebrovascular hemodynamics in humans using TD-fNIRS. Sci. Rep. 13, 11665 (2023).

Wabnitz, H., Contini, D., Spinelli, L., Torricelli, A. & Liebert, A. Depth-selective data analysis for time-domain fNIRS: moments vs time windows. Biomed. Opt. Express 11, 4224 (2020).

Liebert, A. et al. Evaluation of optical properties of highly scattering media by moments of distributions of times of flight of photons. Appl. Opt. 42, 5785 (2003).

Dehghani, H. et al. Near infrared optical tomography using NIRFAST: Algorithm for numerical model and image reconstruction. Commun. Numer. Methods Eng. 25, 711–732 (2009).

Doulgerakis, M., Eggebrecht, A., Wojtkiewicz, S., Culver, J. & Dehghani, H. Toward real-time diffuse optical tomography: accelerating light propagation modeling employing parallel computing on GPU and CPU. J. Biomed. Opt. 22, 1 (2017).

Huppert, T. J., Diamond, S. G., Franceschini, M. A. & Boas, D. A. HomER: a review of time-series analysis methods for near-infrared spectroscopy of the brain. Appl. Opt. 48, D280 (2009).

Fishburn, F. A., Ludlum, R. S., Vaidya, C. J. & Medvedev, A. V. Temporal Derivative Distribution Repair (TDDR): a motion correction method for fNIRS. NeuroImage 184, 171–179 (2019).

Scholkmann, F., Spichtig, S., Muehlemann, T. & Wolf, M. How to detect and reduce movement artifacts in near-infrared imaging using moving standard deviation and spline interpolation. Physiol. Meas. 31, 649–662 (2010).

Gagnon, L. et al. Improved recovery of the hemodynamic response in diffuse optical imaging using short optode separations and state-space modeling. NeuroImage 56, 1362–1371 (2011).

Abraham, A. et al. Machine learning for neuroimaging with scikit-learn. Front. Neuroinform. 8, https://doi.org/10.3389/fninf.2014.00014 (2014).

Arranz, J. et al. Diagnostic performance of plasma pTau217, pTau181, Aβ1-42 and Aβ1-40 in the LUMIPULSE automated platform for the detection of Alzheimer disease. Alzheimer's Res. Ther. 16, 139 (2024).

Acknowledgements

We gratefully acknowledge the data collection teams at Syrentis Clinical Research and Profound Research, and Dr. Lorrie Bisesi at Syrentis Clinical Research for the operational support. We also thank the participants for their time and contribution to our study. This study was funded by Kernel.

Author information

Contributions

Conceptualization: K.L.P., R.M.F. Software: J.D., Z.M.A., E.M.K. Methodology: J.D., E.M.K., Z.M.A., K.L.P. Formal analysis: J.D., E.M.K., Z.M.A., M.T., N.M. Investigation: M.T., N.M., E.M.K. Project administration: M.T., K.L.P. Data curation: J.D., E.M.K., Z.M.A., N.M. Medical oversight: J.G.D., G.S. Visualization: J.D., E.M.K., Z.M.A., N.M., M.T. Writing - Original Draft: E.M.K., Z.M.A., N.M., M.T. Writing - Review and Editing: all authors. Author order was determined alphabetically. Dr. Katherine Perdue agrees to be accountable for all aspects of the work, ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Ethics declarations

Competing interests

This study was funded by Kernel, and authors J.D., R.M.F., E.M.K., Z.M.A., N.M., K.L.P., and M.T. are Kernel employees. Authors J.G.D. and G.S. received funding from Kernel to run this study.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dubois, J., Duffy, J.G., Field, R.M. et al. A functional neuroimaging biomarker of mild cognitive impairment using TD-fNIRS. npj Dement. 1, 14 (2025). https://doi.org/10.1038/s44400-025-00018-y

DOI: https://doi.org/10.1038/s44400-025-00018-y