Abstract

Ancient scripts provide invaluable insights into ancient societies, and recognizing them effectively is crucial for cultural relic preservation, textual decipherment, and heritage research. Current research focuses primarily on recognizing a single modality of ancient text data, processing rubbings or handwritten scripts independently, yet ancient scripts appear in diverse forms across modalities. To address this, we propose a multi-modal recognition framework that processes hybrid inputs such as oracle bone inscription rubbings and handwritten scripts. The method adds two modules, a cross-modal data homogenization module that unifies heterogeneous data representations and a data augmentation module that improves model robustness, and then performs recognition with convolutional neural networks. Evaluated on oracle bone inscriptions and bronze inscriptions datasets, our approach outperforms baseline methods in recognition accuracy and in generalization across modalities.


Introduction

Character recognition is one of the foundational pillars of ancient scripts research and holds significant importance for exploring ancient civilizations. Ancient Greece, Rome, Egypt, and China each developed unique writing systems, many of which have faded or disappeared over centuries. Today, these texts are preserved in unearthed cultural relics, often fragmented and displaced—sometimes far from their original locations—due to natural decay or human activities such as trafficking.

Traditional methods of ancient script recognition rely heavily on access to extensive repositories of information and on the expertise of scholars, so the process depends primarily on a researcher’s accumulated experience and the corpus available to them. When specialists study these inscriptions, they must invest considerable effort and care in organizing relevant materials, often engaging in demanding tasks such as reconstructing missing texts and conducting comprehensive literature reviews. As a result, traditional methods are complex, time-consuming, and dependent on specialized workflows, and these limitations have become increasingly apparent in recent years.

To overcome this predicament, researchers have begun to explore computer-based methods. Early exploratory work used traditional machine learning: features of ancient script images were analyzed and transformed into structural encodings1,2, and classification algorithms such as support vector machines and K-nearest neighbors were then applied to perform recognition3,4. These traditional methods generally recognize inscriptions with simple line structures well. However, they struggle with structurally complex characters, particularly multi-component characters, for which recognition performance is considerably weaker.

The advent of artificial intelligence (AI) and deep learning has opened new avenues for researchers, enabling them to uncover and exploit intricate statistical patterns in large datasets. A notable example is Ithaca5, a groundbreaking tool that has reinforced confidence in this research direction. Using deep learning models such as YOLO (ref. 6), ResNet-50 (ref. 7) and Inception-v3 (ref. 8), research on ancient character recognition has produced many valuable results9,10,11. Recognition of scripts such as oracle bone inscriptions, bronze inscriptions and ancient Egyptian texts has benefited from these approaches12,13,14, and even niche scripts such as the Shui characters have gained new research opportunities15.

However, some practical problems faced by the research on ancient script recognition have constrained the development of related studies. Taking ancient Chinese scripts such as oracle bone inscriptions and bronze inscriptions as examples, existing research on character recognition faces the following challenges:

(1) Limited data scale and highly uneven distribution. Existing data on oracle bone script and bronze inscriptions consist primarily of rubbings and their replicas, and the overall volume is relatively limited. In oracle bone script, for instance, some characters appear thousands of times, whereas others occur only once or twice. In deep learning, particularly with large models, this limited data volume hampers the application of related techniques.

(2) Diverse forms of scripts. Besides rubbings, handwritten forms are another primary medium for oracle bone inscriptions and bronze inscriptions, and they are frequently encountered in academic papers and monographs. Information retrieved from diverse sources therefore includes both rubbings and handwritten forms, so a recognition system must accommodate hybrid inputs.

(3) High resource demand. Deep learning, particularly with large language models, requires substantial hardware resources. This significantly constrains related research, especially because the study of ancient Chinese scripts is a niche field. Achieving effective recognition with fewer resources is therefore of critical importance.

These special characteristics of ancient scripts have led existing research to focus mostly on recognizing a single type of character data. Moreover, the generally small scale of ancient text data restricts the application of newer methods such as large models to ancient text recognition. To better meet practical research needs in this niche field, we propose an ancient script recognition model and evaluate it separately on oracle bone inscriptions and bronze inscriptions. The model comprises two main components: (a) a data preprocessing unit, which includes a cross-modal data homogenization module and a data augmentation module; and (b) a recognition unit based on a CNN model.

Essentially, applying artificial intelligence to ancient script recognition aims to provide useful methods for heritage preservation work such as character decipherment and data organization. Our model addresses the problems of small data scale and hybrid inputs, and uses CNN models to improve the accuracy of ancient script recognition.

Methods

Datasets

The experimental data include two types of ancient scripts: oracle bone inscriptions and bronze inscriptions. Images of each type fall into two categories: rubbings and handwriting.

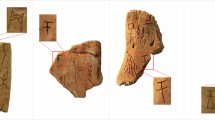

The oracle bone inscriptions dataset is collected from OBC306 and HWOBC on the “Yin Qiwen Yuan Oracle Big Data Platform” (https://jgw.aynu.edu.cn/home/down/index.html). The HWOBC dataset contains 83,245 images of 3881 handwritten oracle bone characters. The OBC306 dataset contains 306 oracle bone characters in both handwritten and rubbing forms, as shown in Fig. 1. Because the data are unevenly distributed, some characters have only one or two images, which is too few for experiments. We therefore filtered the original datasets and selected as experimental data 165 oracle bone characters that have sufficient samples and good image quality in both datasets.

The final dataset contains 12,000 images: 8474 training images and 3526 testing images, as shown in Table 1.
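The filtering and splitting step can be sketched as follows. This is a minimal sketch under stated assumptions: `filter_and_split` is a hypothetical helper, the per-modality threshold `min_per_modality` and the per-class shuffling are illustrative choices, and the default 70:30 ratio is only chosen because 8474/12000 ≈ 0.706; the authors' exact selection criteria (including image quality) are not reproduced here.

```python
import random
from collections import defaultdict


def filter_and_split(samples, min_per_modality, train_ratio=0.7, seed=0):
    """Keep classes with enough rubbing AND handwritten images, then split each class.

    samples: list of (image_id, label, modality), modality in {"rubbing", "handwriting"}.
    Returns (kept_labels, train, test). Threshold and ratio are hypothetical.
    """
    counts = defaultdict(lambda: defaultdict(int))
    for _, label, modality in samples:
        counts[label][modality] += 1
    kept = sorted(
        label for label, c in counts.items()
        if c["rubbing"] >= min_per_modality and c["handwriting"] >= min_per_modality
    )

    by_class = defaultdict(list)
    for sample in samples:
        if sample[1] in kept:
            by_class[sample[1]].append(sample)

    rng = random.Random(seed)
    train, test = [], []
    for label in kept:
        items = list(by_class[label])
        rng.shuffle(items)                      # random sampling within each class
        cut = round(len(items) * train_ratio)
        train.extend(items[:cut])
        test.extend(items[cut:])
    return kept, train, test


data = ([(i, "A", "rubbing") for i in range(4)]
        + [(i, "A", "handwriting") for i in range(4, 10)]
        + [(10, "B", "rubbing"), (11, "B", "handwriting")])
kept, train, test = filter_and_split(data, min_per_modality=3)
print(kept, len(train), len(test))  # ['A'] 7 3
```

Class "B" is dropped because it has only one image per modality, mirroring the removal of characters with one or two images.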

The bronze inscriptions dataset is collected from the “Jinwen scripts compilation”, as shown in Fig. 2. This dataset is still being processed and organized, and Tencent and the Key Laboratory of Oracle Bone Inscriptions Information Processing are currently responsible for organizing the relevant data. Nevertheless, we selected 60 characters from it as experimental data.

The final dataset contains 2551 images, including both rubbings and handwriting; details are given in Table 2.

Proposed model

We propose homogenizing the input data before applying multi-modal ancient script recognition. Preliminary research found that handwritten imitations are recognized with high accuracy because of their simple image structure16. Rubbings, by contrast, contain noise such as wear and shield patterns, so their recognition accuracy is relatively low. We therefore use a generative model that transfers rubbings into handwriting, bypassing the problem of rubbing recognition. Image generation tasks such as image-to-image translation, text-to-image generation and image inpainting have been popular research topics in many application areas in recent years17,18,19, and models such as U-net, diffusion models and VAEs have become central to image generation research20,21.

Rubbings of ancient scripts, however, are usually grayscale images with few features. Beyond the limited information contained in the rubbings themselves, the textual descriptions available for them are also sparse: some characters have only obscure classical Chinese explanations, and some have not been deciphered at all. In addition, little rubbing data is available for training, so the generation method must be chosen based on its actual performance.

Compared with diffusion and VAE models, a U-net-based module converts rubbing data into handwriting data more effectively, so we use it for data homogenization. In addition, to address the scarcity and uneven distribution of ancient text data and to improve the generalization ability of the recognition methods, we propose a data augmentation method. It specifically targets pictographic characters such as oracle bone inscriptions and bronze inscriptions and is more effective than generic random augmentation; its effect is examined in the ablation experiments. The architecture of our model is shown in Fig. 3.

Rubbings are impressions taken from ancient inscriptions, so in essence they still record engraved or written text. In terms of image features, rubbings resemble medical images. Therefore, the script symbols on rubbings can be semantically segmented through generative methods, converting ancient script rubbings into handwritten style.

The U-net model is well suited to small-sample and edge-sensitive scenarios, which makes it more appropriate than models such as diffusion models for generating handwritten forms from ancient script rubbings. In the data homogenization module, a U-net is therefore used as the generator and trained with the CycleGAN procedure to convert ancient text data from different modalities into the handwritten modality. The process is illustrated in Fig. 4.
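For readers unfamiliar with CycleGAN training, the generator objective can be sketched in NumPy. This is an illustrative sketch, not the authors' code: `G` (rubbing to handwriting) and `F` (handwriting to rubbing) stand in for the two U-net generators, and the least-squares adversarial term and cycle weight `lam = 10` follow the original CycleGAN formulation, which the paper may or may not use. All function names are hypothetical.

```python
import numpy as np


def lsgan_generator_loss(d_fake):
    """Least-squares adversarial term: the generator wants D(fake) close to 1."""
    return float(np.mean((d_fake - 1.0) ** 2))


def cycle_loss(real, reconstructed):
    """L1 distance between an image and its round-trip reconstruction."""
    return float(np.mean(np.abs(real - reconstructed)))


def generator_objective(rubbing, handwriting, G, F, D_hand, D_rub, lam=10.0):
    """CycleGAN generator loss for rubbing <-> handwriting translation.

    G: rubbing -> handwriting, F: handwriting -> rubbing;
    D_hand and D_rub score images as real handwriting / real rubbing.
    """
    fake_hand = G(rubbing)
    fake_rub = F(handwriting)
    adversarial = lsgan_generator_loss(D_hand(fake_hand)) + lsgan_generator_loss(D_rub(fake_rub))
    cycle = cycle_loss(rubbing, F(fake_hand)) + cycle_loss(handwriting, G(fake_rub))
    return adversarial + lam * cycle


def identity(img):
    return img


def always_real(img):
    return np.ones_like(img)


rub = np.zeros((4, 4))
hand = np.ones((4, 4))
print(generator_objective(rub, hand, identity, identity, always_real, always_real))  # 0.0
```

The cycle term is what lets unpaired rubbings and handwritten samples be used: a rubbing translated to handwriting and back should reproduce the original rubbing.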

The data homogenization module is thus built on the U-net, with spatial and channel attention mechanisms added to improve the results. The generator is shown in Fig. 5. For low-order features, a residual block with spatial attention is inserted before the third down-sampling step. For the high-order features after down-sampling, each channel of a feature map is treated as a feature detector, and channel attention is introduced into the residual blocks.
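The text does not give the exact attention formulation. A common choice is sketched below in NumPy, assuming a squeeze-and-excitation style channel gate and a simplified CBAM-like spatial gate; the weight shapes, the reduction ratio r and the scalar mixing weights `a` and `b` are hypothetical, as are all function names.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(x, w1, w2):
    """Squeeze-and-excitation style gate: one weight in (0, 1) per channel.

    x: feature map (C, H, W); w1: (C // r, C); w2: (C, C // r).
    """
    squeezed = x.mean(axis=(1, 2))             # global average pooling -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)    # bottleneck + ReLU
    gate = sigmoid(w2 @ hidden)                # (C,)
    return x * gate[:, None, None]


def spatial_attention(x, a=1.0, b=1.0):
    """Simplified spatial gate: one weight in (0, 1) per pixel.

    Mixes the channel-wise mean and max maps with scalars a and b, a stand-in
    for the 7x7 convolution that CBAM applies to the stacked [mean, max] maps.
    """
    gate = sigmoid(a * x.mean(axis=0) + b * x.max(axis=0))   # (H, W)
    return x * gate[None, :, :]


def attentive_residual(x, branch, attention):
    """Residual block whose branch output is re-weighted before the identity add."""
    return x + attention(branch(x))
```

Channel attention reweights whole feature detectors, which suits the high-order features; spatial attention reweights locations, which suits the low-order features where stroke edges matter.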

During the down-sampling process, the Resblock can be described as follows:

where \({W}_{1}\) and \({W}_{2}\) are the weights of the two convolutions and the stride S of \({W}_{1}\) is set to 2. The output size is given by the following equation:

The skip connection must be down-sampled in step with the main path, as follows:

where S = 2, and the convolutional kernel size is \(1\times 1\). The final output is:

Up-sampling uses transposed convolution, with the output size given in Eq. 5:

where F is the kernel size (\(2\times 2\)), S is the stride (2) and P is the padding (0).
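The residual down-sampling block and the size relations can be illustrated with a small NumPy sketch. Only the stride-2 first convolution, the \(1\times 1\) stride-2 skip connection and the \(2\times 2\), stride-2, zero-padding transposed convolution come from the text; the \(3\times 3\) kernels, padding 1, ReLU placement and the absence of normalization are assumptions, and the size functions use the standard convolution and transposed-convolution formulas rather than the paper's own equations. Function names are hypothetical.

```python
import numpy as np


def conv2d(x, w, stride=1, padding=0):
    """Naive multi-channel cross-correlation.

    x: (C_in, H, W); w: (C_out, C_in, k, k). Returns (C_out, H_out, W_out).
    """
    c_out, _, k, _ = w.shape
    x = np.pad(x, ((0, 0), (padding, padding), (padding, padding)))
    h_out = (x.shape[1] - k) // stride + 1
    w_out = (x.shape[2] - k) // stride + 1
    out = np.zeros((c_out, h_out, w_out))
    for i in range(h_out):
        for j in range(w_out):
            patch = x[:, i * stride:i * stride + k, j * stride:j * stride + k]
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out


def down_resblock(x, w1, w2, w_skip):
    """Residual block that halves the spatial resolution.

    Main path: conv W1 (3x3, stride 2) -> ReLU -> conv W2 (3x3, stride 1).
    Skip path: 1x1 conv with stride 2, so both paths have the same shape.
    """
    main = conv2d(np.maximum(conv2d(x, w1, stride=2, padding=1), 0.0), w2, stride=1, padding=1)
    skip = conv2d(x, w_skip, stride=2, padding=0)
    return np.maximum(main + skip, 0.0)


def conv_out(size, kernel, stride, padding):
    """Standard convolution output size: floor((I - F + 2P) / S) + 1."""
    return (size - kernel + 2 * padding) // stride + 1


def transposed_conv_out(size, kernel, stride, padding):
    """Standard transposed-convolution output size: (I - 1) S - 2P + F."""
    return (size - 1) * stride - 2 * padding + kernel


size = 256  # hypothetical input resolution
down = conv_out(size, kernel=3, stride=2, padding=1)     # main path after W1
skip = conv_out(size, kernel=1, stride=2, padding=0)     # 1x1 stride-2 shortcut
up = transposed_conv_out(down, kernel=2, stride=2, padding=0)
print(down, skip, up)  # 128 128 256
```

With F = 2, S = 2 and P = 0, the transposed-convolution size reduces to 2I, so for even input sizes each up-sampling step exactly restores the resolution halved by one stride-2 down-sampling step.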

Through this facsimile generation, all rubbings can be converted to the handwritten form, yielding a unified representation of both the oracle bone inscriptions data and the bronze inscriptions data.

Based on the characteristics of ancient Chinese scripts such as oracle bone inscriptions and bronze inscriptions, we propose the following targeted data augmentation scheme:

Horizontal Flipping:

Let \(\alpha \sim {\rm{Bernoulli}}(0.5)\) be a binary random variable. The flipped image \({I}_{{flip}}\) is given by:

where W is the image width.
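Assuming 0-based column indices, one concrete reading of the flip is \({I}_{flip}(x,y)=\alpha I(W-1-x,y)+(1-\alpha )I(x,y)\). A minimal NumPy sketch, with hypothetical function names:

```python
import numpy as np


def hflip(img, alpha):
    """I_flip(x, y) = I(W - 1 - x, y) when alpha is 1, else the image unchanged.

    img has shape (H, W); x indexes columns from 0 to W - 1.
    """
    return img[:, ::-1] if alpha else img


def random_hflip(img, rng):
    alpha = int(rng.random() < 0.5)    # alpha ~ Bernoulli(0.5)
    return hflip(img, alpha)
```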

Rotation:

A random rotation angle \(\theta \sim {\rm{U}}(-20^\circ ,20^\circ )\) is applied about the center \(({x}_{c},{y}_{c})\). The transformed coordinates are:

Affine Transformation:

Scaling factor s∼U(0.6,1.2), and shear factor β∼U(5,13)

Empty areas are filled with white: \({I}_{{affine}}\left({x}^{{\prime} },{y}^{{\prime} }\right)=255\).
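Rotation and the scale-and-shear affine transform can both be implemented by inverse-mapping each output pixel to a source pixel. This NumPy sketch rests on assumptions the text does not state: β is read as a shear angle in degrees, both transforms act about the image center, nearest-neighbour sampling is used, and the white fill value 255 is also used for rotation. Function names are hypothetical.

```python
import numpy as np


def warp_about_center(img, A, fill=255.0):
    """Apply the 2x2 linear map A about the image centre by inverse mapping.

    Each output pixel p' takes the source value at A^-1 (p' - c) + c
    (nearest neighbour); sources outside the image are filled with `fill`.
    """
    h, w = img.shape
    yc, xc = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    inv = np.linalg.inv(A)
    dx, dy = xs - xc, ys - yc
    sx = np.rint(inv[0, 0] * dx + inv[0, 1] * dy + xc).astype(int)
    sy = np.rint(inv[1, 0] * dx + inv[1, 1] * dy + yc).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.full(img.shape, fill, dtype=float)
    out[valid] = img[sy[valid], sx[valid]]
    return out


def rotation(theta_deg):
    t = np.deg2rad(theta_deg)
    return np.array([[np.cos(t), -np.sin(t)], [np.sin(t), np.cos(t)]])


def scale_shear(s, beta_deg):
    """Uniform scaling by s combined with a horizontal shear of beta degrees."""
    return np.array([[s, s * np.tan(np.deg2rad(beta_deg))], [0.0, s]])


def random_geometric(img, rng):
    theta = rng.uniform(-20.0, 20.0)   # theta ~ U(-20 deg, 20 deg)
    s = rng.uniform(0.6, 1.2)          # s ~ U(0.6, 1.2)
    beta = rng.uniform(5.0, 13.0)      # beta ~ U(5, 13), read as degrees
    img = warp_about_center(img, rotation(theta))
    return warp_about_center(img, scale_shear(s, beta))
```

Filling with 255 keeps the exposed corners the same white as the background of handwritten samples, so the transform does not introduce dark borders that a model could learn as a spurious cue.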

Salt-and-Pepper Noise:

Noise mask \(M\in {\{0,1\}}^{H\times W}\), noise density \(\rho =0.15\) (SNR = 0.85):

Application probability: P(apply)=0.3.
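A minimal NumPy sketch of the salt-and-pepper step, assuming 8-bit intensities in [0, 255] and an equal split between salt (255) and pepper (0) among the masked pixels; the equal split and the function name are assumptions.

```python
import numpy as np


def salt_and_pepper(img, rng, density=0.15, p_apply=0.3):
    """With probability p_apply, corrupt a fraction `density` of pixels.

    Pixels where the mask M is 1 become 0 or 255 with equal probability;
    the remaining 1 - density of pixels (the text's SNR = 0.85) are untouched.
    """
    if rng.random() >= p_apply:
        return img
    out = img.astype(float).copy()
    mask = rng.random(img.shape) < density    # M in {0, 1}^{H x W}
    salt = rng.random(img.shape) < 0.5
    out[mask & salt] = 255.0
    out[mask & ~salt] = 0.0
    return out
```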

Gaussian Noise:

Noise η∼N(0,0.05):

Application probability: P(apply)=0.3.
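The notation N(0, 0.05) does not say whether 0.05 is a standard deviation or a variance, nor on which intensity scale it applies. The sketch below assumes a standard deviation on a normalized [0, 1] scale for an image stored in [0, 255]; the function name is hypothetical.

```python
import numpy as np


def gaussian_noise(img, rng, sigma=0.05, p_apply=0.3):
    """With probability p_apply, add N(0, sigma) noise on a [0, 1] intensity scale."""
    if rng.random() >= p_apply:
        return img
    x = img.astype(float) / 255.0
    x = x + rng.normal(0.0, sigma, size=x.shape)
    return np.clip(x, 0.0, 1.0) * 255.0
```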

Brightness/Contrast Adjustment:

Random gain γ∼U(0.8,1.2):

Grayscale Conversion:

Luminance transformation:

Gaussian Blur:

Kernel K of size 7×7 with σ = 0.15:

Application probability: P(apply)=0.3
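A NumPy sketch of the blur step, assuming σ is measured in pixels and edge pixels are replicated at the border; function names are hypothetical.

```python
import numpy as np


def gaussian_kernel(size=7, sigma=0.15):
    """Normalised size x size Gaussian kernel (separable outer product)."""
    r = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(r ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()


def gaussian_blur(img, rng, size=7, sigma=0.15, p_apply=0.3):
    """With probability p_apply, convolve a 2-D image with the Gaussian kernel."""
    if rng.random() >= p_apply:
        return img
    k = gaussian_kernel(size, sigma)
    pad = size // 2
    padded = np.pad(img.astype(float), pad, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w))
    for dy in range(size):
        for dx in range(size):
            out += k[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out
```

Under this pixel-unit reading, σ = 0.15 makes every off-centre weight smaller than 1e-9, so the 7×7 kernel behaves almost like an identity; a visible blur would require σ on the order of one pixel or more.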

Applying the steps above yields an augmented experimental dataset. These randomly perturbed samples reduce the model’s dependence on specific attributes such as orientation, scale and noise level, thereby improving its generalization ability.
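The remaining two steps and a way of chaining the probabilistic transforms can be sketched as follows. Assumptions: the luminance weights are the ITU-R BT.601 coefficients (the text does not give them), the random gain γ is applied multiplicatively with clipping to [0, 255], grayscale conversion is performed first so that later steps operate on a single-channel image, and `augment` is a hypothetical helper taking (transform, probability) pairs.

```python
import numpy as np


def to_grayscale(rgb):
    """Luminance Y = 0.299 R + 0.587 G + 0.114 B (ITU-R BT.601 weights, assumed)."""
    return rgb[..., 0] * 0.299 + rgb[..., 1] * 0.587 + rgb[..., 2] * 0.114


def brightness_contrast(img, rng, low=0.8, high=1.2):
    """Scale intensities by a random gain gamma ~ U(low, high), clipped to [0, 255]."""
    gamma = rng.uniform(low, high)
    return np.clip(img.astype(float) * gamma, 0.0, 255.0)


def augment(rgb, rng, steps):
    """Convert to grayscale, then apply each (transform, probability) pair in order.

    Every transform has the signature transform(img, rng) -> img.
    """
    img = to_grayscale(rgb.astype(float))
    for transform, p in steps:
        if rng.random() < p:
            img = transform(img, rng)
    return img


rng = np.random.default_rng(0)
rgb = np.full((4, 4, 3), 200.0)
out = augment(rgb, rng, [(brightness_contrast, 1.0)])
```

Passing the probability alongside each transform keeps the per-step values from the text (for example P(apply) = 0.3 for noise and blur) in one place.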

Results

Baseline

Deep Convolutional Neural Networks (CNNs) have demonstrated exceptional performance in image recognition tasks, owing to their inherent advantages, including local receptive fields, parameter sharing, hierarchical feature learning, translation equivariance, and dimensionality reduction via pooling. Given these merits, we adopt eight representative deep learning architectures as baselines: AlexNet, VGG-19, ResNet-50, GoogLeNet, ShuffleNet, Vision Transformer (ViT), ConvNeXt and Swin Transformer. Extensive comparative experiments substantiate the superiority of our proposed approach.

Experiment setup

To ensure consistency and stability, all experiments were conducted on the same dedicated deep learning device with identical hardware and software environments, as shown in Table 3.

Because multiple models are compared, we used K-fold cross-validation to estimate hyperparameters such as learning rate, batch size and weight decay, so that each model operates near its optimal settings and generalizes well.

The experimental datasets of ancient Chinese characters, covering oracle bone inscriptions and bronze inscriptions, are relatively small. We therefore use K-fold cross-validation with K = 5 for hyperparameter tuning, with 100 training epochs in each fold. In addition, the training data are divided by random sampling into a training set and a validation set at a ratio of 7:3, and each model was trained for 100 epochs to ensure sufficient learning.
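A stdlib sketch of K-fold hyperparameter selection with K = 5. `evaluate` is a hypothetical callback standing in for training a model (for example for 100 epochs) on the training indices and returning a validation score; the shuffling seed and the selection rule (best mean fold score) are assumptions.

```python
import random


def k_fold_indices(n, k=5, seed=0):
    """Yield (train_idx, val_idx) for k folds over n shuffled sample indices."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, val
        start += size


def select_hyperparameters(candidates, n, evaluate, k=5):
    """Return the candidate with the best mean validation score across k folds."""
    best, best_score = None, float("-inf")
    for params in candidates:
        scores = [evaluate(params, tr, va) for tr, va in k_fold_indices(n, k)]
        mean = sum(scores) / len(scores)
        if mean > best_score:
            best, best_score = params, mean
    return best, best_score
```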

The hyperparameters of each model used in the experiments are listed in Table 4, taking the oracle bone inscriptions experiments as an example. The detailed experimental results are discussed in the next section.

Results

Data homogenization is one of the core ideas of the multi-modal ancient script recognition method proposed in this paper. It unifies ancient script data from different modalities through a U-net model with added attention mechanisms, providing a foundation for subsequent recognition. To illustrate the effectiveness of this module, we compared it with diffusion and VAE methods. Both are widely used in image generation, but for ancient script rubbings, a special type of text image, the proposed method is more targeted.

We applied the three image generation methods in the data homogenization process and performed ancient script recognition on the corresponding generated results. Table 5 presents the recognition results obtained when each generation algorithm is used in the data homogenization module. Data processed with U-net achieve the best recognition results on both the oracle bone inscriptions dataset and the bronze inscriptions dataset.

The recognition accuracy on the bronze inscriptions data is relatively lower. We attribute this to the small scale of the bronze inscriptions data used in this study, since some models generally perform poorly on small datasets.

To analyze the strengths and weaknesses of various data homogenization methods from a more quantitative perspective, we conducted a comparative analysis from the perspectives of overall improvement in final recognition results and improvement across different models.

Figure 6 illustrates the results on the oracle bone dataset. The left part of Fig. 6 displays the overall distribution of the accuracy rates of different recognition models based on the data obtained from three image generation algorithms. According to the boxplot, the overall recognition accuracy of the model using U-net generated data is between 0.85 and 0.90, which is generally higher compared to the recognition results based on data generated by the other two algorithms.

The right part of Fig. 6 shows the recognition comparison results of different models using data from three algorithms. The upper graph depicts the difference in recognition accuracy obtained based on the U-net method compared to the results obtained based on the other two methods, while the lower graph shows the corresponding improvement ratio. From the two comparison methods, it can be seen that using U-net for data homogenization is more effective when dealing with ancient text rubbings.
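Assuming the improvement ratio is the accuracy difference divided by the competing method's accuracy (the text does not define it), the two quantities plotted in the right part of Fig. 6 can be computed as below. The function name is hypothetical and the input values are illustrative placeholders, not results from Table 5.

```python
def homogenization_gain(acc_unet, acc_other):
    """Absolute accuracy gain of the U-net variant and the gain relative to the other method."""
    diff = acc_unet - acc_other
    return diff, diff / acc_other


# Illustrative placeholder accuracies only, not values from Table 5.
diff, ratio = homogenization_gain(0.88, 0.80)
print(round(diff, 4), round(ratio, 4))  # 0.08 0.1
```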

A similar pattern appears in the experiments on the bronze inscriptions dataset, as shown in Fig. 7: for data homogenization, our U-net-based method outperforms the methods based on diffusion or VAE.

Based on the aforementioned experimental results, we have decided to complete the task of data homogenization using the U-net model. This approach is more suitable for the processing of ancient script rubbings and can provide a better data foundation for subsequent work.

In this study, we systematically implemented both the data homogenization and augmentation modules across all evaluated models, followed by comprehensive performance analysis.

Table 6 presents the comparative results on the mixed dataset, including four key metrics: Top-1 accuracy, F1 score, AUC, and Top-5 accuracy. The table contrasts two configurations: “Base” representing the baseline approach where models are trained solely on the original heterogeneous dataset containing both rubbing and handwritten images without any preprocessing; and “CA” our proposed framework incorporating the synergistic combination of data homogenization and augmentation components.
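Two of the four metrics can be sketched in NumPy as follows. Assumptions: F1 is macro-averaged over classes (the averaging scheme is not stated), and function names are hypothetical. AUC for multi-class problems is commonly computed one-vs-rest, for example with scikit-learn's `roc_auc_score(..., multi_class="ovr")`, and is omitted here.

```python
import numpy as np


def top_k_accuracy(scores, labels, k=1):
    """Fraction of samples whose true label is among the k highest scores.

    scores: (N, C) class scores; labels: (N,) integer class ids.
    """
    top_k = np.argsort(scores, axis=1)[:, ::-1][:, :k]
    return float(np.mean([labels[i] in top_k[i] for i in range(len(labels))]))


def macro_f1(pred, labels, num_classes):
    """Unweighted mean of per-class F1 (a class with no TP, FP or FN counts as 0)."""
    f1s = []
    for c in range(num_classes):
        tp = np.sum((pred == c) & (labels == c))
        fp = np.sum((pred == c) & (labels != c))
        fn = np.sum((pred != c) & (labels == c))
        denom = 2 * tp + fp + fn
        f1s.append(2 * tp / denom if denom > 0 else 0.0)
    return float(np.mean(f1s))


scores = np.array([[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]])
labels = np.array([2, 0, 1])
pred = scores.argmax(axis=1)
```

Top-5 accuracy is more forgiving than Top-1, which matters for visually similar characters: a model can rank the correct character second and still be useful for shortlisting candidates.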

The experimental results in Table 6 show that on the hybrid dataset the baseline models initially achieved only modest performance, whereas with our data homogenization and augmentation modules the results improved substantially. This improvement held for every comparison metric. Fig. 8 gives more detail, taking Top-1 accuracy as an example.

The blue solid line represents the accuracy of the proposed method (CA Acc) and the red solid line the corresponding loss (CA Loss). The blue dashed line represents the accuracy of the base method (Base Acc) and the red dashed line the corresponding loss (Base Loss). The eight sub-figures correspond to the methods listed in Table 6. The proposed method not only achieves better recognition accuracy but also converges faster, with a smoother loss curve. In contrast, the base models have lower accuracy and larger loss fluctuations when processing multi-modal oracle bone inscriptions data. Models such as ViT perform especially poorly when processing oracle bone inscriptions data directly, owing partly to their model structure.

To further assess our model, we evaluated the proposed method on the bronze inscriptions data. Table 7 shows the performance of the baseline methods and the proposed method.

The results show that, with the proposed data homogenization and augmentation modules, Top-1 accuracy, F1 score, AUC and Top-5 accuracy all improved, even though the bronze inscriptions dataset is relatively small.

Taking Top-1 accuracy as an example, the bronze inscriptions experiments demonstrate the effectiveness of the proposed method even more clearly than the oracle bone inscriptions experiments, as shown in Fig. 9. The red curve of the Base method is more erratic, which we attribute to the small scale of the bronze inscriptions experimental data; some models fail to reach their expected performance when recognizing bronze inscriptions. The proposed method (blue curve) performs better in convergence speed, in the behavior of the loss, and in its final value.

To sum up, for the multi-modal ancient scripts, the proposed method performed notably better than the baseline methods.

Ablation study

To rigorously validate the efficacy of our proposed method, we conducted systematic ablation studies. The key contribution of this work lies in the integration of the data homogenization module and augmentation module with the recognition unit. Through controlled experiments, we quantitatively assessed the individual impact of each module on recognition performance.

In the first ablation experiment, we analyzed the impact of data homogenization. We isolated the effect of data homogenization by exclusively removing the data augmentation module while retaining other components. Subsequently, we evaluated the recognition performance using only the data homogenization module across both oracle bone inscriptions and bronze inscriptions datasets. For quantitative comparison, Top-1 and Top-5 accuracy were selected as evaluation metrics. The detailed results of this configuration are presented in Table 8.

The incorporation of the data homogenization module enhanced the recognition capability of baseline models, though the improvement margin remained limited. When evaluated against the complete model, architectures utilizing only the homogenization block exhibited inferior Top-1 and Top-5 accuracy across all benchmarks.

Among these methods, AlexNet, VGG19, ResNet50, ConvNext and EfficientNet all improved further on their already good results, while ShuffleNet, ViT and SwinTransformer, which perform poorly when processing raw data directly, also improved substantially through data homogenization. The complete model configuration consistently achieved larger accuracy gains, underscoring the synergy between the integrated modules.

The same situation also occurred on experimental results with the bronze inscriptions data. Table 9 shows the Top-1 accuracy and Top-5 accuracy for all the methods to be compared.

Overall, the results in Table 9 are similar to those in Table 8: the data homogenization module improves the performance of each baseline model, but the improvement is limited, and a gap in accuracy to the complete model remains. We attribute this to the small scale of the bronze inscriptions dataset used in the experiment.

In the second ablation experiment, we analyzed the isolated contribution of data augmentation. Table 10 presents the Top-1 and Top-5 accuracy of three configurations on the oracle bone inscriptions dataset: baseline models without data augmentation, models with only the data augmentation module, and the complete integrated model.

The results in Table 10 indicate that the baseline models exhibit the worst recognition performance. The recognition results improved after data processing through the data augmentation module compared to the baseline, while the method proposed in this paper achieved the best recognition results. The experimental results demonstrate that the data augmentation module has a positive impact on the recognition of ancient scripts. Similarly, related experiments were also conducted on the bronze inscription dataset to evaluate the effect of data augmentation.

Table 11 shows the results of the different models on the bronze inscriptions dataset. The data augmentation module also improves the performance of each baseline model, but models with data augmentation alone still fall short of the complete model.

In summary, the ablation experiments indicate that both modules added to the recognition process improve the accuracy of ancient character recognition. Each module is effective on its own, and the complete model improves the performance of the baseline models the most.

Discussion

This paper presents a multi-modal ancient script recognition method designed to recognize diverse script modalities, covering oracle bone inscriptions and bronze inscriptions in both rubbing and handwritten forms. The proposed framework comprises two core functional units. The first unit incorporates data homogenization and data augmentation modules to normalize and enrich the input data. The second unit performs the recognition task with a CNN-based deep learning architecture.

The experimental results show that, compared with the baseline models, the proposed method achieves better performance on both the oracle bone inscriptions and bronze inscriptions datasets, and it remains effective on the smaller bronze inscriptions dataset.

To further demonstrate the effectiveness of the model, we also conducted detailed ablation experiments. The results showed that the two core modules in this method, data homogenization and data augmentation, both contribute to the improvement of the overall recognition performance, while the complete method achieves the best recognition results.

This study’s primary contribution lies in establishing a standardized preprocessing pipeline for multimodal ancient scripts. The proposed two-module mechanism provides both theoretical foundations and practical methodologies for archaeological text analysis, with experimentally validated efficacy.

Data availability

The original data used in this paper, HWOBC and OBC306, can be obtained from the website: http://jgw.aynu.edu.cn/DownPage; the complete data can be obtained from the website: https://github.com/Augety88/oracle-jinwen-code-data.

Code availability

The underlying code for this study is available in https://github.com/Augety88/oracle-jinwen-code-data.

References

Gu, S. T. Identification of oracle-bone script fonts based on fractal geometry. J. Chin. Inf. Process. 32 (2018).

Qu, H. Y., Liu, J. Z. & Wu, J. Oracle-bone inscriptions recognition based on topological features. Comput. Sci. Appl. 9, 1111–1117 (2019).

Liu, Y. G. & Liu, G. Y. Oracle bone inscription recognition based on SVM. J. Anyang Norm. Univ. 2, 54–56 (2017).

Zhao, R. Q., Wang, H. Q., Wang, K., Wang, Z. & Liu, W. T. Recognition of bronze inscriptions image based on mixed features of histogram of oriented gradient and gray level co-occurrence matrix. Laser Optoelectron. Prog. 57, 90–96 (2020).

Assael, Y. et al. Restoring and attributing ancient texts using deep neural networks. Nature 603, 280–283 (2022).

Fujikawa, Y. et al. Recognition of oracle bone inscriptions by using two deep learning models. Int. J. Digit. Humanit. 5, 65–79 (2023).

Qiao, Y. G. & Xing, L. Z. Applying deep learning algorithms for automatic recognition and transcription of texts in oracle bones and golden texts. Appl. Math. Nonlinear Sci. 9, 1–16 (2023).

Guo, Z. Y., Zhou, Z. H., Liu, B. S., Li, L. Q. & Jiao, Q. J. An improved neural network model based on inception-v3 for oracle bone inscription character recognition. Sci. Programm. 7490363 (2022).

Liu, M. T., Liu, G. Y., Liu, Y. G. & Jiao, Q. J. Oracle bone inscriptions recognition based on deep convolutional neural network. J. Image Graph. 8, 114–119 (2020).

Meng, L., Kamitoku, N. & Yamazaki, K. Recognition of oracle bone inscriptions using deep learning based on data augmentation. 2018 Metrology for Archaeology and Cultural Heritage. 33–38 (IEEE, 2018).

Mai, C., Penava, P. & Buettner, R. Oracle bone inscription character recognition based on a novel convolutional neural network architecture. IEEE Access 12, 197021–197034 (2024).

Wu, X. Q., Wang, Z. Y. & Ren, P. CNN-based Bronze Inscriptions Character Recognition. 2022 5th International Conference on Advanced Electronic Materials, Computers and Software Engineering. 514–519 (IEEE, 2022).

Xu, Y., Zhang, X. Y., Zhang, Z. X. & Liu, C. L. Large-scale continual learning for ancient Chinese character recognition. Pattern Recognit. 150, 110283 (2024).

Barucci, A. et al. A deep learning approach to ancient Egyptian hieroglyphs classification. IEEE Access 9, 123438–123447 (2021).

Zhao, H. S., Chu, H. Z., Zhang, Y. Y. & Yu, J. Improvement of ancient Shui character recognition model based on convolutional neural network. IEEE Access 8, 33080–33087 (2020).

Wang, N., Wang, C. J. & Jiao, Q. J. Research on Handwritten Oracle Bone Inscriptions Recognition Based on EasyDL. Electron. Technol. Softw. Eng. 3, 184–187 (2023).

Parmar, G. et al. Zero-shot image-to-image translation. ACM SIGGRAPH 2023 conference proceedings. 1–11 (ACM, 2023).

Li, Y. H. et al. GLIGEN: Open-set grounded text-to-image generation. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 22511–22521 (IEEE, 2023).

Zhang, X. B., Zhai, D. H., Li, T. R., Zhou, Y. X. & Lin, Y. Image inpainting based on deep learning: a review. Inf. Fusion 90, 74–94 (2023).

Kaneko, H., Yoshizu, Y., Ishibashi, R. & Meng, L. An attempt at zero-shot ancient documents restoration based on diffusion models. 2023 International Conference on Advanced Mechatronic Systems (ICAMechS). 1–6 (IEEE, 2023).

Chen, B. Z., Liu, Y. S., Zhang, Z., Lu, G. M. & Kong, A. TransAttUnet: Multi-level attention-guided U-Net with transformer for medical image segmentation. IEEE Trans. Emerg. Top. Comput. Intell. 8, 55–68 (2023).

Acknowledgements

This study was funded by Natural Science Foundation of Henan Province, grant number 242300420680, Henan Province Science and Technology Research Project, grant number 222102320036.

Author information


Contributions

N.W.: Conceptualization. N.W. and B.L.: Methodology. W.W: Software. W.W. and N.W.: Validation. N.W.: Writing original draft preparation. H.Z.: Writing review and editing. Q.J. and C.L.: Data preparation. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, N., Wang, W., Li, B. et al. Multi-modal ancient scripts recognition via deep learning with data homogenization and augmentation. npj Herit. Sci. 13, 522 (2025). https://doi.org/10.1038/s40494-025-02095-x


DOI: https://doi.org/10.1038/s40494-025-02095-x