Abstract

Understanding and accurately predicting hydrogen diffusion in materials is challenging because of the complex interactions between hydrogen defects and the crystal lattice. These interactions span large length and time scales, making them difficult to address with standard ab-initio techniques. This work tackles this challenge using machine learning (ML)-accelerated molecular dynamics simulations combined with active learning. We conduct a comparative study of different ML-based interatomic potential schemes, including the VASP machine-learned force field, MACE, and CHGNet, using various training strategies such as on-the-fly learning, pre-trained universal models, and fine-tuning. Considering different temperatures and concentration regimes, we obtain hydrogen diffusion coefficients and activation energies that agree remarkably well with experimental results, underlining the efficacy and accuracy of ML-assisted methodologies for diffusive dynamics. In particular, our procedure significantly reduces the computational effort associated with traditional transition state calculations or ad-hoc designed interatomic potentials. The results highlight the limitations of pre-trained universal models for defective materials and show how they can be improved by fine-tuning. Specifically, fine-tuning the models on a database produced during on-the-fly training of the VASP ML force field recovers DFT-level accuracy at a fraction of the computational cost.


Introduction

The global imperative for sustainable and green energy solutions has intensified the search for efficient hydrogen storage materials. With the highest gravimetric energy density among fuels1, hydrogen is a promising alternative to fossil sources and can be produced with zero CO2 emissions using surplus renewable energy2, through methods such as electrolysis3,4,5. The main barriers to a future economy based on hydrogen are the cost of production and the absence of a green, safe, and efficient way to store and transport it. Solid-state hydrogen storage technologies are the most studied in this regard, as they are the safest and offer higher volumetric densities6 than cryogenic or high-pressure gaseous alternatives7,8,9. Despite these advantages, the technology is still in its early stages, and the search for materials suitable for large-scale applications, such as in the automotive industry10, remains open11,12.

Metal hydrides currently offer promising, efficient, and economical solutions13. In particular, magnesium stands out for its excellent hydrogen storage capacity14, environmental friendliness, and natural abundance, with theoretical storage capacities as high as 7.6 wt%15. However, the slow kinetics of hydrogen in magnesium-based compounds still limit possible applications. Understanding and optimizing hydrogen diffusion pathways through theoretical modeling16,17,18 and experimental studies19,20,21 is therefore crucial to improve the performance of future Mg-based hydrogen storage materials. Despite numerous efforts over the past decade, modeling hydrogen dynamics in solid-state compounds remains challenging22,23,24,25. Because hydrogen diffusivity in magnesium is low, accurate ab-initio molecular dynamics (MD) studies would require simulation times on the order of nanoseconds, which are beyond the practical reach of such methods. Consequently, ab-initio transition state calculations, such as the nudged elastic band (NEB) method, have emerged as the most effective approach to reproduce and interpret the experimental data reported to date17. However, this technique is cumbersome and often impracticable for systems with high defect concentrations or complex potential energy landscapes, where manually enumerating all the paths required by NEB becomes very challenging or virtually impossible17.

Recently, machine-learning-accelerated MD (MLMD) has transformed the field by making accurate simulations of large systems accessible over long time scales26. Applying this approach to hydrogen-defective systems is of high interest27, since predicting dynamical properties would greatly extend the limited scope of today’s transition state calculations. MLMD enables the study of multi-component systems28 and can efficiently account for interactions between defects29. However, developing accurate interatomic potentials, especially for hydrogen-defective materials, is notoriously challenging25,30. Nevertheless, the field is growing rapidly, and several new approaches have been proposed to explore new and complex phase spaces. On one side, various pre-trained universal models31,32 are becoming available, aiming to offer a convenient and versatile way of tackling the problem. However, as discussed in this work, while their training datasets are rich in chemical compositional space, the limited configurational sampling can significantly compromise their accuracy on previously unseen defective, metastable, and transition states, so that their generalization is not guaranteed. On the other side, active-learning approaches based on Bayesian force fields are proving highly versatile thanks to the construction of on-the-fly databases33,34. Error-oriented sampling of configurations allows these models to collect high-quality data that widely span the configurational space, making them highly accurate despite an architecture that is more constrained than that of neural networks. The present study illustrates a systematic procedure for applying ML potentials to diffusive dynamics, which can be used to study different embedded defects without departing from ab-initio accuracy.
Specifically, the procedure improves the performance of pre-trained ML models using actively learned configurations generated by on-the-fly training of the Bayesian machine-learned force field of the Vienna Ab initio Simulation Package (VASP-MLFF)33,34. We consider four concentrations (MgH0.03125, MgH0.046875, MgH0.0625, and MgH0.078125) and compute the hydrogen diffusion coefficient at three temperatures (300 K, 480 K, and 673 K), using a methodology designed to yield unbiased dynamical properties. Two universal interatomic potentials (UIPs), CHGNet31 and MACE32, were considered. Dynamical properties were computed with VASP-MLFF and with both the pre-trained and fine-tuned versions of the UIPs. Comparison with experimental data shows excellent agreement for VASP-MLFF and the fine-tuned potentials, whereas the pre-trained versions do not reach satisfactory accuracy. Interestingly, the fine-tuned potentials outperform VASP-MLFF in reproducing the temperature dependence of the diffusion coefficient.

Results

Validation of the ML-potentials

The results reported in Fig. 1a show that VASP-MLFF achieves root mean square errors (RMSE) below 0.3 meV/atom for energies and below 10 meV/Å for forces, in line with other studies involving MLFF-MD35,36,37,38. In contrast, the UIPs pre-trained on the Materials Project (MP) database, denoted CHGNet_MP and MACE_MP, miss this level of accuracy by more than one order of magnitude. The discrepancy is significantly reduced after fine-tuning both models on the VASP-generated database: CHGNet_FT reaches an accuracy comparable to VASP-MLFF, and MACE_FT even outperforms it. The difference between CHGNet_FT and MACE_FT may stem from the equivariant architecture of the latter: MACE proved highly data-efficient, yielding better fine-tuning results on small datasets than more data-hungry architectures such as CHGNet. MACE was also trained from scratch on the VASP-DFT configurations (MACE_TR), achieving slightly smaller force errors than MACE_FT. Note that the predicted energies at concentrations other than the training concentration exhibit a systematic shift that depends on the hydrogen content. This does not affect the hydrogen dynamics: the shift is constant throughout a simulation with a fixed number of particles and therefore does not enter the forces. Consequently, the models still describe the hydrogen dynamics accurately. For completeness, the validation on the pristine system and the corresponding R² values are shown in Supplementary Fig. SF2.
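The shift-removal step used for the validation errors can be sketched as follows. This is a minimal illustration, assuming per-configuration total energies (eV), atom counts, and Cartesian forces (eV/Å) are already available as plain Python lists; the function names are hypothetical and are not part of VASP, MACE, or CHGNet.

```python
import math
from collections import defaultdict


def centered_energy_rmse(e_ref, e_pred, n_atoms, concentration):
    """Per-atom energy RMSE (eV/atom) after removing a constant shift per concentration.

    Within each concentration group, reference and predicted per-atom energies
    are both shifted so that their group mean is zero, which cancels any
    constant, concentration-dependent offset between the two.
    """
    groups = defaultdict(list)
    for i, c in enumerate(concentration):
        groups[c].append(i)

    squared = []
    for members in groups.values():
        ref = [e_ref[i] / n_atoms[i] for i in members]
        pred = [e_pred[i] / n_atoms[i] for i in members]
        ref_mean = sum(ref) / len(ref)
        pred_mean = sum(pred) / len(pred)
        for r, p in zip(ref, pred):
            squared.append(((p - pred_mean) - (r - ref_mean)) ** 2)
    return math.sqrt(sum(squared) / len(squared))


def force_rmse(f_ref, f_pred):
    """RMSE (eV/Å) over every Cartesian force component of every atom and configuration."""
    squared = [
        (p - r) ** 2
        for conf_ref, conf_pred in zip(f_ref, f_pred)
        for atom_ref, atom_pred in zip(conf_ref, conf_pred)
        for r, p in zip(atom_ref, atom_pred)
    ]
    return math.sqrt(sum(squared) / len(squared))
```

Multiplying both results by 1000 gives the meV/atom and meV/Å units used in Fig. 1a. Centering both reference and prediction within each group means a model that is off only by a constant per concentration scores zero energy error, which mirrors the argument that such a shift leaves the forces unchanged.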

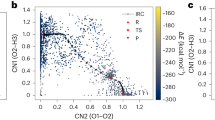

a RMSE values of the energy and force predictions on the validation set for every model considered in the study, at different hydrogen concentrations obtained with a supercell containing a variable number of H atoms. The constant, concentration-dependent energy shift was removed by setting the mean energy of all configurations at each concentration to zero. The subscript ‘MP’ denotes the model pre-trained on the Materials Project database, ‘TR’ the model trained from scratch, and ‘FT’ the fine-tuned version. b Energy barriers for hydrogen transitions between different octahedral (Oi) and tetrahedral (Ti) sites, obtained from ciNEB calculations with the different ML potentials and the DFT reference (in yellow).

Climbing-image nudged elastic band (ciNEB) calculations with the ML potentials were used to estimate the energy barriers Eb for hydrogen transitions between octahedral and tetrahedral interstitial sites, providing a further validation of the ML potentials against the VASP-DFT benchmark results. As shown in Fig. 1b, the pre-trained universal potentials predict these barriers considerably less accurately, whereas their trained and fine-tuned counterparts yield values much closer to the VASP-DFT reference. VASP-MLFF also agrees very well with the DFT benchmark. As expected, the models with lower validation errors, especially on the forces, give barriers closer to the DFT values.
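Extracting Eb from a converged ciNEB profile and comparing it with DFT can be sketched as below. This is a minimal illustration, assuming the total energies of all images (endpoints included, ordered from initial to final state) are available; with a climbing image, the highest image approximates the saddle point. The function names and the path labels in the usage example are hypothetical, and the numbers are illustrative rather than the values of Fig. 1b.

```python
def neb_barriers(image_energies):
    """Forward and reverse barriers (eV) from a converged (ci)NEB energy profile.

    image_energies: energies of all images, endpoints included, ordered from
    the initial to the final state. The maximum is taken as the saddle energy.
    """
    if len(image_energies) < 3:
        raise ValueError("need the two endpoints and at least one intermediate image")
    e_saddle = max(image_energies)
    return e_saddle - image_energies[0], e_saddle - image_energies[-1]


def mean_absolute_barrier_error(ml_barriers, dft_barriers):
    """Mean absolute deviation (eV) of ML barriers from DFT over the paths in dft_barriers."""
    errors = [abs(ml_barriers[path] - dft_barriers[path]) for path in dft_barriers]
    return sum(errors) / len(errors)


# Illustrative profile: forward barrier 0.4 eV, reverse barrier 0.45 eV.
forward, reverse = neb_barriers([0.0, 0.1, 0.4, 0.2, -0.05])
```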

Diffusion coefficient and dynamical analysis

The diffusion coefficient D predicted by every model, using the methodology described in the “Methods” section, is reported in Fig. 2a and compared with experimental19 and NEB17 results at each investigated temperature. The experimental data of Nishimura et al. were collected in the temperature range 474–493 K and extrapolated to higher and lower temperatures using an Arrhenius relation. Several of our models agree closely with experiment and outperform the NEB computations16 that have so far represented the standard for such applications. This holds in particular for the MACE_FT potential, which predicts the correct order of magnitude at all temperatures and agrees closely with experiment at 480 K. VASP-MLFF agrees well at 480 K and 673 K but misses the room-temperature value by one order of magnitude. CHGNet_FT agrees remarkably well with the experimental value at 480 K but underestimates it by one order of magnitude at the lowest temperature. As expected from the error analysis on energies and forces, both pre-trained UIPs agree much less well, deviating from the experimental values by at least one order of magnitude at most temperatures. The temperature dependence of the diffusion coefficients in Fig. 2b shows that CHGNet_FT reproduces the experimental Arrhenius curve better than VASP-MLFF, and MACE_FT better than both. This suggests that, once their errors are small enough, the deep networks capture the shape of the energy landscape better than the Bayesian alternative. A more quantitative comparison is given by the activation energy Ea obtained from linear fits to the Arrhenius plots.
We obtain Ea = 0.4 eV for VASP-MLFF, 0.19 eV for CHGNet_FT, and 0.28 eV for MACE_FT, compared with the experimental value of 0.25 eV19. MACE_FT therefore gives the closest estimate and the most faithful representation of the energy landscape of the system.
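The activation energy follows from the Arrhenius relation \(D = D_0 \exp[-E_a/(k_B T)]\), i.e. a straight line \(\ln D = \ln D_0 - (E_a/k_B)(1/T)\). A minimal, unweighted least-squares sketch is given below; the fitting details (no weighting by the SEM) are an assumption, and the usage example uses synthetic values generated from a known Ea, not the diffusion coefficients of Fig. 2a.

```python
import math

K_B_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_fit(temperatures_k, diffusivities):
    """Fit ln D = ln D0 - Ea / (k_B T) by ordinary least squares in (1/T, ln D).

    Returns (Ea in eV, D0 in the same units as the input diffusivities).
    """
    xs = [1.0 / t for t in temperatures_k]
    ys = [math.log(d) for d in diffusivities]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx  # equals -Ea / k_B
    intercept = y_mean - slope * x_mean  # equals ln D0
    return -slope * K_B_EV_PER_K, math.exp(intercept)


# Synthetic check: data generated with Ea = 0.25 eV at the three studied temperatures.
temps = [300.0, 480.0, 673.0]
synthetic_d = [1e-3 * math.exp(-0.25 / (K_B_EV_PER_K * t)) for t in temps]
ea, d0 = arrhenius_fit(temps, synthetic_d)
```

Because the fit is performed on ln D, an order-of-magnitude error at a single temperature directly tilts the slope, which is why a model can match D at 480 K and still give a poor Ea.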

a Diffusion coefficients of hydrogen in MgH0.0625 at constant average temperatures of 300 K, 480 K, and 673 K, and the corresponding activation energies, comparing the potentials investigated here with previous studies17,19. The number in parentheses is the standard error of the mean (SEM) in the last significant digit. The color bar shows, on a logarithmic scale, the relative deviation from the experimental values. b Temperature dependence of the diffusion coefficient for the ML models, compared with NEB17 and experimental19 results. The experimental curve is extrapolated from data obtained at 474–493 K. c Radial distribution functions of atom pairs at 673 K from 1 ns NVE simulations. VASP-MLFF and MACE agree remarkably well over the whole range for every pair, while CHGNet deviates at larger distances.

Furthermore, we computed D and the associated Ea at various hydrogen concentrations using MACE_FT, as shown in Fig. 3a. Hydrogen mobility, and hence the diffusion coefficient, decreases with increasing concentration, while the activation energy increases. Comparing D across concentrations with the experimental values shows that the lower concentrations, MgH0.03125 and MgH0.046875, agree best. Note that Nishimura et al.19 did not report the hydrogen concentration in their samples. These findings suggest that the hydrogen concentration in Nishimura’s experiments may be lower than the value of 0.0625 assumed in previous DFT studies17.

a Diffusion coefficients at different temperatures and H concentrations (top) and the resulting activation energies as a function of concentration (bottom). The experimental target value from ref.19 is shown as a dashed line in every plot. b Simulated hydrogen pair distributions (occurrences of np pairs, bars) compared with theoretical Poisson distributions (points and dashed lines) at three temperatures (300 K, 480 K, and 673 K) for varying hydrogen concentrations. The lower panel shows the λ parameter (average number of pairs) as a function of temperature and concentration.

We further analyzed the dynamics of the system by evaluating the radial distribution function (RDF) in all NVE runs. Figure 2c shows the RDFs predicted by the best-performing models at 673 K; the other temperatures are reported in Supplementary Fig. SF6. MACE_FT and VASP-MLFF agree very well, while the RDF of CHGNet_FT departs from the others at larger distances, where its peaks are smoothed out. These curves carry information about the behavior of hydrogen during the simulation. In order of increasing distance, the first peak appears just below 2 Å in the Mg–H curve, corresponding to the average distance between a magnesium atom and the center of the nearest octahedral site, where hydrogen tends to sit16. The second peak, in the H–H curve, is very pronounced at around 2.6 Å, reflecting a correlation between hydrogen atoms at this distance, consistent with molecular dynamics studies of magnesium hydride nanoclusters30. Indeed, 2.6 Å corresponds to the distance between two octahedral sites along the c direction16,30, indicating that hydrogen atoms tend to occupy neighboring sites. To illustrate this behavior, Fig. 4 shows the diffusion path of a representative hydrogen atom in the magnesium matrix during a 100 ps simulation at 673 K. For clarity, the trajectory is unwrapped across the periodic images. The color gradient marks time, from blue at the start of the simulation (t = 0 ps) to red at the end of the analyzed interval (t = 100 ps).
Intermediate colors (cyan, green, yellow, and orange) trace the temporal evolution of the diffusion path between these two extremes. The black circles highlight interstitial regions where the hydrogen atom oscillates near the magnesium sites, indicating temporary trapping in the lattice, before it continues diffusing. The third significant peak appears at around 3 Å in the Mg–Mg curve, consistent with the nearest-neighbor distance in hcp magnesium, and several smaller peaks correspond to more distant neighbor shells. Analogous results are found at 300 K and 480 K (Supplementary Fig. SF6), where the peaks are sharper because of reduced thermal motion. In particular, the more pronounced RDF peaks at lower temperature indicate that hydrogen spends more time in the vicinity of magnesium atoms and diffuses less through the crystal.
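A partial RDF such as the Mg–H or H–H curves can be sketched for a single frame as below and then averaged over the frames of a trajectory. This is a minimal illustration under a stated simplification: it assumes an orthorhombic periodic box, whereas the hcp Mg supercell used here is hexagonal, so a real analysis needs a triclinic minimum-image treatment (or an orthohexagonal supercell). The function name and arguments are hypothetical.

```python
import math


def partial_rdf(pos_a, pos_b, box, r_max, n_bins, same_species=False):
    """Partial radial distribution function g_ab(r) for a single frame.

    Assumes an orthorhombic periodic box with edge lengths box = (Lx, Ly, Lz)
    in Å and Cartesian positions in Å. For like pairs (e.g. H-H) pass the same
    list twice and set same_species=True so that each pair is counted once.
    """
    if r_max > min(box) / 2:
        raise ValueError("r_max must not exceed half the shortest box edge")
    dr = r_max / n_bins
    hist = [0] * n_bins
    for i, a in enumerate(pos_a):
        for j, b in enumerate(pos_b):
            if same_species and j <= i:
                continue  # skip self-pairs and double counting
            d2 = 0.0
            for k in range(3):
                delta = b[k] - a[k]
                delta -= box[k] * round(delta / box[k])  # minimum-image convention
                d2 += delta * delta
            r = math.sqrt(d2)
            if r < r_max:
                hist[min(int(r / dr), n_bins - 1)] += 1

    volume = box[0] * box[1] * box[2]
    n_a, n_b = len(pos_a), len(pos_b)
    n_pairs = n_a * (n_a - 1) / 2 if same_species else n_a * n_b
    centers, g = [], []
    for k, count in enumerate(hist):
        shell = 4.0 / 3.0 * math.pi * dr ** 3 * ((k + 1) ** 3 - k ** 3)
        expected = n_pairs * shell / volume  # pair count for an ideal gas at the same density
        centers.append((k + 0.5) * dr)
        g.append(count / expected)
    return centers, g
```

Normalizing by the ideal-gas pair count makes g(r) tend to 1 for uncorrelated positions, so peaks above 1 (such as the H–H peak near 2.6 Å) signal preferred separations.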

The hydrogen pair distributions in Fig. 3b show how pair formation depends on temperature and concentration. At elevated temperatures, such as 480 K and 673 K, the simulated distributions closely follow the theoretical Poisson distribution, calculated as:

\[ P(n_p) = \frac{\lambda^{n_p}\, e^{-\lambda}}{n_p!}, \]

where n_p is the number of hydrogen pairs and λ represents the average number of pairs. This agreement indicates that hydrogen pairing is random, that is, uncorrelated and independent of the initial atomic configuration. The convergence of the λ values for different concentrations as the temperature increases further supports this intrinsic randomness. At 300 K, by contrast, the distributions deviate from Poissonian behavior and λ depends more strongly on the hydrogen concentration. We attribute this to the reduced mobility of hydrogen at low temperature, where the thermal energy is insufficient to overcome the energy barriers for transitions between interstitial sites. The hydrogen atoms therefore remain close to their initial positions, and the pair distributions do not equilibrate within the simulation time. These results suggest that, while the system approaches a random distribution at higher temperatures, longer simulations would be required to reach equilibration and Poissonian behavior at room temperature in low-mobility regimes.
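The comparison with the Poisson law can be sketched as follows. The criterion that defines a hydrogen pair (for instance a distance cutoff) is not specified in this section, so this minimal illustration takes the per-frame pair counts n_p as given input; λ is estimated as the sample mean, which is the maximum-likelihood estimate for a Poisson distribution. The function names are hypothetical.

```python
import math
from collections import Counter


def poisson_pmf(n, lam):
    """Poisson probability P(n) = lam**n * exp(-lam) / n!."""
    return lam ** n * math.exp(-lam) / math.factorial(n)


def compare_with_poisson(pair_counts):
    """Compare per-frame hydrogen pair counts with a Poisson distribution.

    pair_counts: number of H-H pairs n_p found in each analysed frame.
    Returns (lambda, {n_p: (empirical probability, Poisson probability)}).
    """
    lam = sum(pair_counts) / len(pair_counts)  # sample mean = ML estimate of λ
    freq = Counter(pair_counts)
    total = len(pair_counts)
    return lam, {n: (freq[n] / total, poisson_pmf(n, lam)) for n in sorted(freq)}
```

Large, systematic differences between the two columns of the returned table (as at 300 K) point to correlated or non-equilibrated pairing rather than random placement.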

Discussion

In summary, we characterized the kinetic properties and mobility of hydrogen in a crystalline host, pure magnesium, across various temperatures using a rigorous and efficient methodology. We performed a systematic comparative study of ML-based interatomic potential MD schemes, focusing on a Bayesian force field versus equivariant and graph neural networks (MACE and CHGNet), under different training modes (universal and fine-tuned). Estimates of the diffusion coefficient and the activation energy showed excellent agreement with experimental data. We found that the VASP-DFT configurations collected during on-the-fly MLFF training form a sufficiently complete set for accurately modeling the interactions between hydrogen and magnesium atoms. This strategy could be applied consistently to generate comprehensive datasets for training existing or forthcoming potentials. We also identified the limitations of pre-trained UIPs in studying materials with diffusing defects, which stem from the absence of representative high-temperature, defective, and metastable states in their datasets. In particular, we demonstrated that state-of-the-art machine learning models achieve DFT-level accuracy after fine-tuning on actively learned DFT configurations. This highlights the importance of efficient dataset-building methods and of dataset quality, in particular the inclusion of defective and transition-state configurations, as well as the role of transfer learning in adapting pre-trained models to new systems. The simulations also offer new insights into the collective dynamical properties at varying hydrogen concentrations, predicting a decrease in hydrogen mobility with increasing concentration.
Additionally, the statistical analysis of hydrogen pair distributions indicates that the system approaches a random distribution at higher temperatures. However, longer simulations would be necessary to achieve equilibration and Poissonian behavior at room temperature in low-mobility regimes.

These advances not only improve our understanding of hydrogen interactions in magnesium, but also pave the way for future research on a broader range of multi-component systems and defective compositions. This is especially significant for systems with complex potential energy surfaces, where traditional ab-initio methods become impractical. Successfully modeling the hydrogen diffusion mechanism in magnesium with machine learning-accelerated molecular dynamics could facilitate the study and discovery of new, more efficient materials for hydrogen storage and beyond, contributing to the transition toward a greener and more sustainable energy future.

Methods

MLFF-MD

The density functional theory (DFT), MLFF-MD, and climbing-image NEB39 (ciNEB) calculations were performed with VASP33,34,40,41 (versions 6.4.2 and 6.4.3). We used a 4 × 4 × 4 supercell of hcp Mg (Fig. 5) comprising 128 Mg atoms and 8 randomly distributed H atoms (MgH0.0625). We performed non-spin-polarized calculations with the Perdew-Burke-Ernzerhof (PBE) functional42, an energy cutoff of 600 eV, a 4 × 4 × 4 k-point grid, an energy convergence threshold of 0.1 meV, and Gaussian smearing with a width of 0.05 eV. To ensure consistency between our database and the Materials Project database, we adopted the same convergence parameters as those used in the Materials Project. For VASP-MLFF we used the default parameters. During on-the-fly MLFF-MD, the temperature was raised gradually from 0 to 700 K over 0.2 ns. The resulting configurations, including total energies, atomic forces, atomic coordinates, stress tensors, and lattice parameters, were used to train the interatomic potential. Whenever the Bayesian error estimate exceeded a fixed threshold, VASP performed a DFT calculation and added the new configuration to the database. This on-the-fly procedure allows the model to use the accumulated ab-initio configurations to progressively improve its predictions. The threshold was set to 5 meV/Å so that the database collects a diverse, well-spread set of structures from the explored configuration space. During thermalization we used the NpT ensemble with a constrained cell shape and a Langevin thermostat with a friction coefficient of 10 ps−1 for both the lattice and atomic degrees of freedom, zero external pressure, and a time step of 1 fs.
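The control flow of the on-the-fly scheme described above can be illustrated with a toy, one-dimensional analogue. This is not VASP's implementation: the "reference" is sin(x) standing in for a DFT call, the surrogate simply returns the value of the nearest stored sample, the distance to that sample replaces the Bayesian error estimate, and the trajectory is prescribed rather than generated by the model's forces. All names are hypothetical.

```python
import math


def reference_energy(x):
    """Hypothetical stand-in for an expensive reference (DFT) calculation."""
    return math.sin(x)


class NearestNeighbourSurrogate:
    """Toy surrogate: returns the reference value of the closest stored sample
    and uses the distance to it as a crude uncertainty proxy."""

    def __init__(self):
        self.database = []  # stored (configuration, reference value) pairs

    def predict(self, x):
        if not self.database:
            return None, math.inf  # no data yet: maximal uncertainty
        x0, y0 = min(self.database, key=lambda sample: abs(sample[0] - x))
        return y0, abs(x0 - x)

    def add(self, x, y):
        self.database.append((x, y))


def on_the_fly(trajectory, threshold):
    """Walk along a trajectory, calling the reference only when the
    surrogate's uncertainty proxy exceeds the threshold."""
    model = NearestNeighbourSurrogate()
    predictions, n_reference_calls = [], 0
    for x in trajectory:
        y, uncertainty = model.predict(x)
        if uncertainty > threshold:
            y = reference_energy(x)  # fall back to the reference method
            model.add(x, y)  # and grow the training database
            n_reference_calls += 1
        predictions.append(y)
    return predictions, n_reference_calls, model.database
```

The point of the sketch is the error-triggered sampling: reference calls are spent only where the model is uncertain, so the database grows with the explored configuration space rather than with the number of MD steps.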
At the target temperatures of 300 K, 480 K, and 673 K, we ran additional 100 ps NpT simulations in training mode to accumulate further configurations. In total, more than 3700 ab-initio configurations were stored: fewer than 1000 at each constant temperature, with the remainder collected during the temperature ramp. We then switched the MLFF to run mode and determined the average lattice volume over 100 ps at fixed temperatures of 300 K, 480 K, and 673 K. Cells with this average volume were used for subsequent 100 ps NVT simulations. From each NVT run, we extracted three structures with energies close to the run average, separated from one another by at least 10 ps to avoid correlations. Starting from these structures, we performed three independent NVE simulations and computed the mean squared displacement (MSD) of the hydrogen atoms as the ensemble average of

\[ \mathrm{MSD}(\tau) = \left\langle \frac{1}{T-\tau} \int_{0}^{T-\tau} \left| \mathbf{r}(t+\tau) - \mathbf{r}(t) \right|^{2} \, dt \right\rangle, \]

where T is the total simulation time, τ is the lag time, r is the trajectory of the atoms under analysis, and the angle brackets denote the average over the hydrogen atoms. A reduced time step of 0.5 fs was used in the final NVE runs to limit energy fluctuations. All MSDs were computed over 1 ns NVE trajectories, and the diffusion coefficient D was extracted from the Einstein relation

\[ D = \lim_{\tau \to \infty} \frac{\mathrm{MSD}(\tau)}{6\tau}, \]

in practice as one sixth of the slope of a linear fit over the initial 0.2 ns. The value of D at each temperature is the average over the three NVE replicas, and its uncertainty is the standard error of the mean, \(\mathrm{SEM} = \mathrm{SD}/\sqrt{3}\), where SD is the standard deviation and 3 is the number of replicas. An example of the MSD fitting procedure is included in Supplementary Fig. SF1.
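The MSD, Einstein-fit, and SEM steps can be sketched as below. This is a minimal illustration, assuming unwrapped hydrogen coordinates in Å stored frame by frame with a constant interval dt_ps between frames; the discrete time-origin average approximates the integral above, the linear fit is an unweighted least-squares line with intercept, and the SEM uses the sample (n − 1) standard deviation, which is an assumption. The function names are hypothetical.

```python
import math
import statistics

ANGSTROM2_PER_PS_TO_CM2_PER_S = 1e-4  # 1 Å^2/ps = 1e-16 cm^2 / 1e-12 s


def mean_squared_displacement(positions, max_lag):
    """Time-origin-averaged MSD (Å^2) of unwrapped trajectories.

    positions[t][a] is the (x, y, z) position in Å of atom a at frame t.
    Returns MSD(lag) for lag = 0 .. max_lag frames.
    """
    n_frames, n_atoms = len(positions), len(positions[0])
    if max_lag >= n_frames:
        raise ValueError("max_lag must be smaller than the number of frames")
    msd = []
    for lag in range(max_lag + 1):
        total = 0.0
        for t0 in range(n_frames - lag):  # average over time origins
            for a in range(n_atoms):  # and over hydrogen atoms
                p0, p1 = positions[t0][a], positions[t0 + lag][a]
                total += sum((p1[k] - p0[k]) ** 2 for k in range(3))
        msd.append(total / ((n_frames - lag) * n_atoms))
    return msd


def einstein_diffusion(msd, dt_ps):
    """Fit MSD(tau) = 6 D tau + c over the supplied lags; returns D in Å^2/ps."""
    taus = [i * dt_ps for i in range(len(msd))]
    t_mean = sum(taus) / len(taus)
    m_mean = sum(msd) / len(msd)
    slope = sum((t - t_mean) * (m - m_mean) for t, m in zip(taus, msd)) / sum(
        (t - t_mean) ** 2 for t in taus
    )
    return slope / 6.0


def mean_and_sem(values):
    """Mean and standard error of the mean over independent replicas."""
    return statistics.mean(values), statistics.stdev(values) / math.sqrt(len(values))
```

In this sketch each NVE replica would be fitted only over the lags covering its first 0.2 ns (by passing that slice of the MSD), the three resulting D values passed to `mean_and_sem`, and the result multiplied by `ANGSTROM2_PER_PS_TO_CM2_PER_S` for cm²/s. The direct double loop is quadratic in trajectory length; FFT-based MSD algorithms are the usual choice for long runs.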

The ciNEB calculations were performed using a 2 × 2 × 2 supercell containing one hydrogen atom, placed along the main paths between interstitial sites identified in the literature17. These sites are visualized in Supplementary Fig. SF3. All computations used ten images and a force convergence criterion of 0.01 eV/Å.

Universal interatomic potentials (UIPs)

We consider two state-of-the-art, top-performing43 pre-trained UIPs: MACE44, based on an equivariant message passing neural network, and CHGNet31, a graph-based neural network. We use the versions pre-trained on the Materials Project (MP) relaxation trajectories database45, comprising 1.6 million crystal structures with the associated energies, forces, stresses, and magnetic moments. To evaluate the dynamical properties predicted by these UIPs, we followed the same NEB and MD procedure described in the previous section, excluding the on-the-fly training phase. Subsequently, CHGNet and MACE were fine-tuned on the VASP-DFT data generated during the on-the-fly MLFF-MD. MACE was also trained from scratch on the same dataset. Training and fine-tuning of all models were performed with the standard procedures and parameters suggested by the developers in the respective GitHub repositories. For CHGNet, a 0.5 fs time step was required also in the NpT and NVT runs to stabilize the dynamics and to avoid temperature drifts in the NVE runs. Examples of temperature oscillations during NVE simulations with 0.5 fs and 1.0 fs time steps are shown in Supplementary Figs. SF4 and SF5. The MD simulations were run with LAMMPS46 for MACE and with ASE47 for CHGNet. A schematic view of the workflow is shown in Fig. 6. Furthermore, using the best-performing model (MACE_FT), we followed the same protocol to estimate D at three additional hydrogen concentrations, with 10 H (MgH0.078125), 6 H (MgH0.046875), and 4 H (MgH0.03125) atoms. To assess the performance of every model, we evaluated the root mean square errors (RMSE) of the predicted energies and forces over a test dataset of 1200 configurations. These were randomly sampled, with equal spacing in time, from the NVE replicas of the MACE_FT model, with 400 samples for each temperature.
This validation was repeated independently for each hydrogen concentration to test the models’ accuracy beyond the training concentration. Finally, NEB calculations along the same paths studied with VASP were performed with every model using the ciNEB implementation of ASE, with the same number of images and convergence criteria.
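One possible reading of "randomly sampled, with equal spacing in time" is a fixed stride with a random starting offset, sketched below. This is an assumption, as are the frame counts in the usage example and the treatment of each temperature's NVE replicas as one concatenated frame sequence; the function names are hypothetical.

```python
import random


def equally_spaced_indices(n_frames, n_samples, rng):
    """n_samples frame indices with a fixed stride and a random starting offset."""
    stride = n_frames // n_samples
    if stride == 0:
        raise ValueError("trajectory has fewer frames than requested samples")
    offset = rng.randrange(stride)  # random start within the first stride
    return [offset + k * stride for k in range(n_samples)]


def build_test_set(frames_per_temperature, n_samples=400, seed=0):
    """Map each temperature (K) to the sampled frame indices of its trajectory."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    return {
        temperature: equally_spaced_indices(n_frames, n_samples, rng)
        for temperature, n_frames in frames_per_temperature.items()
    }


# Hypothetical frame counts; 3 temperatures x 400 samples = 1200 test configurations.
test_set = build_test_set({300: 2000, 480: 2000, 673: 2000})
```

Equal spacing keeps consecutive test configurations decorrelated, while the random offset avoids always starting from the first frame of a trajectory.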

The database of configurations is built via active learning of the VASP-MLFF, both during NpT-MD thermalization of the system from 0 K to 700 K and at the target temperatures (300 K, 480 K, and 673 K). The machine-learned potentials are then fine-tuned or trained and, after equilibration of the system, the MSD and the diffusion coefficient D are computed at fixed T.

Data availability

The training dataset can be openly accessed at https://figshare.com/articles/dataset/_b_ML_AB_b_/27922470?file=50852211.

References

Yang, J., Sudik, A., Wolverton, C. & Siegel, D. J. High capacity hydrogen storage materials: attributes for automotive applications and techniques for materials discovery. Chem. Soc. Rev. 39, 656–675 (2010).

Bhimineni, S. H. et al. Machine-learning-assisted investigation of the diffusion of hydrogen in brine by performing molecular dynamics simulation. Ind. Eng. Chem. Res. 62, 21385–21396 (2023).

Tarasov, B. P. et al. Metal hydride hydrogen storage and compression systems for energy storage technologies. Int. J. Hydrog. Energy 46, 13647–13657 (2021).

Turner, J. A. A realizable renewable energy future. Science 285, 687–689 (1999).

Gardner, D. Hydrogen production from renewables. Renewable Energy Focus 9, 34–37 (2009).

Aceves, S. M., Berry, G. D., Martinez-Frias, J. & Espinosa-Loza, F. Vehicular storage of hydrogen in insulated pressure vessels. Int. J. Hydrog. Energy 31, 2274–2283 (2006).

Sun, Y. et al. Tailoring magnesium based materials for hydrogen storage through synthesis: Current state of the art. Energy Storage Mater. 10, 168–198 (2018).

Felderhoff, M., Weidenthaler, C., von Helmolt, R. & Eberle, U. Hydrogen storage: the remaining scientific and technological challenges. Phys. Chem. Chem. Phys. 9, 2643–2653 (2007).

Hua, T. et al. Technical assessment of compressed hydrogen storage tank systems for automotive applications. Int. J. Hydrog. Energy 36, 3037–3049 (2011).

US Department of Energy. DOE technical targets for onboard hydrogen storage for light-duty vehicles. https://www.energy.gov/eere/fuelcells/doe-technical-targets-onboard-hydrogen-storage-light-duty-vehicles (2023).

Yartys, V. et al. Magnesium based materials for hydrogen based energy storage: past, present and future. Int. J. Hydrog. Energy 44, 7809–7859 (2019).

Hirscher, M. et al. Materials for hydrogen-based energy storage - past, recent progress and future outlook. J. Alloy. Compd. 827, 153548 (2020).

Klopčič, N., Grimmer, I., Winkler, F., Sartory, M. & Trattner, A. A review on metal hydride materials for hydrogen storage. J. Energy Storage 72, 108456 (2023).

Allendorf, M. D. et al. Challenges to developing materials for the transport and storage of hydrogen. Nat. Chem. 14, 1214–1223 (2022).

Gupta, A. & Faisal, M. Magnesium based multi-metallic hybrids with soot for hydrogen storage. Int. J. Hydrog. Energy 53, 93–104 (2024).

Schimmel, H., Kearley, G., Huot, J. & Mulder, F. Hydrogen diffusion in magnesium metal (α phase) studied by ab initio computer simulations. J. Alloy. Compd. 404–406, 235–237 (2005).

Klyukin, K., Shelyapina, M. G. & Fruchart, D. DFT calculations of hydrogen diffusion and phase transformations in magnesium. J. Alloy. Compd. 644, 371–377 (2015).

Shelyapina, M. G. Hydrogen diffusion on, into and in magnesium probed by DFT: a review. Hydrogen 3, 285–302 (2022).

Nishimura, C., Komaki, M. & Amano, M. Hydrogen permeation through magnesium. J. Alloy. Compd. 293–295, 329–333 (1999).

Uchida, H. et al. Absorption kinetics and hydride formation in magnesium films: effect of driving force revisited. Acta Mater. 85, 279–289 (2015).

Renner, J. & Grabke, H. J. Bestimmung von Diffusionskoeffizienten bei der Hydrierung von Legierungen [Determination of diffusion coefficients during the hydrogenation of alloys]. Int. J. Mater. Res. 69, 639–642 (1978).

Kwon, H., Shiga, M., Kimizuka, H. & Oda, T. Accurate description of hydrogen diffusivity in bcc metals using machine-learning moment tensor potentials and path-integral methods. Acta Mater. 247, 118739 (2023).

Tang, H. et al. Reinforcement learning-guided long-timescale simulation of hydrogen transport in metals. Adv. Sci. 11, 2304122 (2024).

Kimizuka, H., Thomsen, B. & Shiga, M. Artificial neural network-based path integral simulations of hydrogen isotope diffusion in palladium. J. Phys. Energy 4, 034004 (2022).

Lu, G. M., Witman, M., Agarwal, S., Stavila, V. & Trinkle, D. R. Explainable machine learning for hydrogen diffusion in metals and random binary alloys. Phys. Rev. Mater. 7, 105402 (2023).

Friederich, P., Häse, F., Proppe, J. & Aspuru-Guzik, A. Machine-learned potentials for next-generation matter simulations. Nat. Mater. 20, 750–761 (2021).

Yu, F., Xiang, X., Zu, X. & Hu, S. Hydrogen diffusion in zirconium hydrides from on-the-fly machine learning molecular dynamics. Int. J. Hydrog. Energy 56, 1057–1066 (2024).

Vandenhaute, S., Cools-Ceuppens, M., DeKeyser, S., Verstraelen, T. & Van Speybroeck, V. Machine learning potentials for metal-organic frameworks using an incremental learning approach. npj Comput. Mater. 9, 1–8 (2023).

Kývala, L., Angeletti, A., Franchini, C. & Dellago, C. Diffusion and coalescence of phosphorene monovacancies studied using high-dimensional neural network potentials. J. Phys. Chem. C 127, 23743–23751 (2023).

Wang, N. & Huang, S. Molecular dynamics study on magnesium hydride nanoclusters with machine-learning interatomic potential. Phys. Rev. B 102, 094111 (2020).

Deng, B. et al. CHGNet as a pretrained universal neural network potential for charge-informed atomistic modelling. Nat. Mach. Intell. 5, 1031–1041 (2023).

Batatia, I., Kovacs, D. P., Simm, G., Ortner, C. & Csanyi, G. MACE: higher order equivariant message passing neural networks for fast and accurate force fields. In Advances in Neural Information Processing Systems (eds Koyejo, S. et al.) Vol. 35, 11423–11436 (Curran Associates, Inc., 2022).

Jinnouchi, R., Karsai, F., Verdi, C., Asahi, R. & Kresse, G. Descriptors representing two- and three-body atomic distributions and their effects on the accuracy of machine-learned inter-atomic potentials. J. Chem. Phys. 152, 234102 (2020).

Jinnouchi, R., Karsai, F. & Kresse, G. On-the-fly machine learning force field generation: application to melting points. Phys. Rev. B 100, 014105 (2019).

Verdi, C., Ranalli, L., Franchini, C. & Kresse, G. Quantum paraelectricity and structural phase transitions in strontium titanate beyond density functional theory. Phys. Rev. Mater. 7, L030801 (2023).

Ranalli, L. et al. Temperature-dependent anharmonic phonons in quantum paraelectric KTaO3 by first principles and machine-learned force fields. Adv. Quantum Technol. 6, 2200131 (2023).

Verdi, C., Karsai, F., Liu, P., Jinnouchi, R. & Kresse, G. Thermal transport and phase transitions of zirconia by on-the-fly machine-learned interatomic potentials. npj Comput. Mater. 7, 156 (2021).

Liu, P., Verdi, C., Karsai, F. & Kresse, G. Phase transitions of zirconia: machine-learned force fields beyond density functional theory. Phys. Rev. B 105, L060102 (2022).

Henkelman, G., Uberuaga, B. P. & Jónsson, H. A climbing image nudged elastic band method for finding saddle points and minimum energy paths. J. Chem. Phys. 113, 9901–9904 (2000).

Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169–11186 (1996).

Kresse, G. & Joubert, D. From ultrasoft pseudopotentials to the projector augmented-wave method. Phys. Rev. B 59, 1758–1775 (1999).

Perdew, J. P., Burke, K. & Wang, Y. Generalized gradient approximation for the exchange-correlation hole of a many-electron system. Phys. Rev. B 54, 16533–16539 (1996).

Riebesell, J. et al. Matbench Discovery–an evaluation framework for machine learning crystal stability prediction. Preprint at https://arxiv.org/abs/2308.14920 (2023).

Batatia, I. et al. A foundation model for atomistic materials chemistry. Preprint at https://arxiv.org/abs/2401.00096 (2023).

Jain, A. et al. Commentary: The Materials Project: a materials genome approach to accelerating materials innovation. APL Mater. 1, 011002 (2013).

Thompson, A. P. et al. LAMMPS - a flexible simulation tool for particle-based materials modeling at the atomic, meso, and continuum scales. Comput. Phys. Commun. 271, 108171 (2022).

Larsen, A. H. et al. The atomic simulation environment–a Python library for working with atoms. J. Phys. Condens. Matter 29, 273002 (2017).

Acknowledgements

C.F. and A.A. acknowledge the “Doctoral College Advanced Functional Materials - Hierarchical Design of Hybrid Systems DOC 85 doc.funds” funded by the Austrian Science Fund (FWF) and by the Vienna Doctoral School in Physics (VDSP). For Open Access purposes, the author has applied a CC BY public copyright license to any author accepted manuscript version arising from this submission. D.M. and S.P. were supported by the European Union Horizon 2020 research and innovation program under Grant Agreement No. 857470, by the European Regional Development Fund under the program of the Foundation for Polish Science International Research Agenda PLUS, grant No. MAB PLUS/2018/8, and by the initiative of the Ministry of Science and Higher Education ‘Support for the activities of Centers of Excellence established in Poland under the Horizon 2020 program’ under agreement No. MEiN/2023/DIR/3795. L.P. and C.F. acknowledge the National Recovery and Resilience Plan (NRRP), Mission 4 Component 2 Investment 1.3 - Project NEST (Network 4 Energy Sustainable Transition) of Ministero dell’Università e della Ricerca (MUR), funded by the European Union - NextGenerationEU. L.L. and C.F. acknowledge the NRRP, CN-HPC grant no. (CUP) J33C22001170001, SPOKE 7, of MUR, funded by the European Union - NextGenerationEU. The computational results were obtained using the Vienna Scientific Cluster (VSC) and the LEONARDO cluster. We acknowledge access to LEONARDO at CINECA, Italy, via an AURELEO (Austrian Users at LEONARDO supercomputer) project.

Author information

Contributions

Conceptualization: C.F., A.A., D.M., and L.L. Methodology and calculations: A.A., L.L., and D.M. Supervision: C.F. Writing: A.A., D.M., L.L., and C.F. Discussion and reviewing: All authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Angeletti, A., Leoni, L., Massa, D. et al. Hydrogen diffusion in magnesium using machine learning potentials: a comparative study. npj Comput Mater 11, 85 (2025). https://doi.org/10.1038/s41524-025-01555-z

DOI: https://doi.org/10.1038/s41524-025-01555-z
