Abstract

Understanding quantum phase transitions in physical systems is fundamental to characterizing their behavior at low temperatures. Achieving this requires both accessing good approximations to the ground state and identifying order parameters to distinguish different phases. Addressing these challenges, our work introduces a hybrid algorithm that combines quantum optimization with classical machine learning. This approach leverages the capability of near-term quantum computers to prepare locally trapped states through finite optimization. Specifically, we apply LASSO for identifying conventional phase transitions and the Transformer model for topological transitions, utilizing these with a sliding window scan of Hamiltonian parameters to learn appropriate order parameters and locate critical points. We validated the method with numerical simulations and real-hardware experiments on Rigetti’s Ankaa 9Q-1 quantum computer. This protocol provides a framework for investigating quantum phase transitions with shallow circuits, offering enhanced efficiency and, in some settings, higher precision, thus contributing to the broader effort to integrate near-term quantum computing and machine learning.

Introduction

Quantum phase diagrams are a fundamental tool to characterize the behavior of quantum systems under varying external conditions, such as temperature and magnetic fields1,2,3,4,5,6. A better understanding of quantum phase transitions finds application in the field of materials science, where it may inform the development of novel materials with unique properties. For instance, understanding superconductor-insulator phase transitions is instrumental in the research and development of high-temperature superconductors7,8. Due to the intrinsic complexity of quantum systems, the accurate determination of the ground-state phase diagram is a formidable challenge9,10. Experimentally achieving and maintaining the ground state is also difficult, requiring precise control of conditions and often near-zero temperatures11,12,13. Furthermore, the characterization of quantum critical points is often hindered by unknown order parameters, particularly in systems undergoing multiple unconventional phase transitions1,3,14. Traditional approaches, such as Landau’s theory, which link order parameters with symmetry changes, often fail for non-trivial topological phase transitions or phases without conventional symmetry breaking15,16,17.

In general, approximating the ground state of a system is known to be hard, even for a quantum computer10. Nonetheless, variational quantum approaches offer a practical alternative for creating states that capture correctly the physics of the ground state in some cases18,19. These methods have become increasingly important in the study of quantum phase transitions20,21,22,23,24,25,26,27. Particularly relevant and noteworthy are the works of Dreyer et al.20 and Bosse et al.24. In ref. 20 the authors utilized variational quantum optimization on the transverse-field Ising chain, revealing an intriguing behavior of the order parameter, i.e. the transverse magnetization: it exhibits scaling collapse with respect to the circuit depth exclusively on one side of the phase transition, illustrating distinct behaviors across the critical point. Bosse et al.24 explored the utility of the variational quantum eigensolver for learning phase diagrams of quantum systems, focusing on the use of both the change of the energy of the output states during optimization and a variety of traditional order parameters. This study underscores the potential of (low-fidelity) variationally optimized quantum states to provide reliable indicators of phase transitions across a variety of quantum models, including both one-dimensional and two-dimensional spin and fermion systems. However, even with polynomially-sized quantum circuits, classical optimization strategies may be hindered by locally trapped states within the optimization landscape28,29. Although these states may effectively reflect the physical properties of various phases, accurately identifying the specific order parameter that defines the phase they represent remains a challenging task.

At the same time, advancements in machine learning (ML) have opened new ways for analysing quantum many-body problems30,31,32. Notably, machine learning techniques, especially unsupervised learning, have been extensively employed to detect quantum phase transitions33,34,35,36,37,38,39,40,41. Studies such as those by Huang et al.40, Lewis et al.42, and Onorati et al.43,44 have further theoretically highlighted the efficacy of these algorithms in learning unknown properties of quantum systems’ ground states, notably within the same phase, even in the absence of exponentially decaying correlations. This capability to distinguish ground-state data from different phases makes machine learning a potent tool for detecting phase transitions by incrementally adjusting the Hamiltonian parameter and modifying the dataset. This philosophy leverages machine learning’s differential performance across datasets to accurately identify critical phase changes. Despite these innovations, most studies (e.g., refs. 34,37,38,40,41) have focused on data from exact ground states calculated via methods like exact diagonalization or quantum Monte Carlo simulations, which may limit the analysis of larger or classically intractable systems. Moreover, the determination of the order parameter following training remains ambiguous.

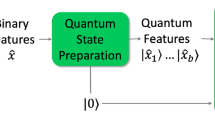

Inspired by these advancements, this work aims to study the use of locally trapped states to characterize phase transitions, without prior knowledge of the associated order parameters. We employ classical machine learning to distill meaningful patterns from these states, thereby learning effective order parameters. Local traps, including local minima, commonly found in the complex energy landscapes of quantum systems, often hinder the efficiency of optimization algorithms by preventing convergence to the global minimum28,45. Nevertheless, they can contain important information about the phase of a quantum system. Figure 1 provides a schematic overview of our approach. When the optimization deficit is relatively small—that is, the locally trapped states have a non-negligible overlap with the ground state—we show that classical machine learning can successfully detect ground-state phase transitions. Indeed, in certain cases, a ground-state phase transition affects the spectrum and properties of the low-lying excited states. Under these circumstances, we expect that the machine learning protocol will remain effective, even when the variationally optimized quantum state possesses minimal overlap with the ground state. As quantum phase transitions are well defined only in the thermodynamic limit, applying our approach to finite-size systems can only provide approximate estimates of the critical points. The finite-size-scaling technique is a well-established method to overcome this issue and to obtain accurate values of critical properties in the thermodynamic limit by extrapolating results obtained within finite-size systems46,47. In this work we show that, under certain assumptions, our algorithm can be employed to implement a variant of this technique in terms of quantum resources, quantified by the depth of the variational quantum circuits, that we term finite-depth extrapolation technique.

Schematic picture of (a) a finite-optimization phase diagram and (b) learning effective order parameters and phase transitions from local minima states. In (a), as the optimization deficit increases—attributable to either less expressive variational ansätze or inadequate optimization—the system becomes less capable of reaching the ground state, causing a slight shift of the system’s phase transition away from the critical point associated with the ground state. In (b), machine learning identifies potential phase transitions by constructing a classical loss landscape. Within this landscape, potential phase transitions are located by valleys.

In this study, we deploy a quantum optimization-machine learning hybrid algorithm to detect both traditional and topological phase transitions across various quantum systems, including the 1D and 2D transverse-field Ising models (TFIMs) and the extended Su-Schrieffer-Heeger (eSSH) model. Our methodology incorporates linear and deep learning machine learning techniques, namely LASSO48 and Transformer49. In quantum information, LASSO has been employed for tasks like predicting ground state properties42 and for learning unknown observables50. Here, we apply it to extract effective order parameters and accurately determine critical boundaries. Transformer models, while previously utilized, for example, to perform time-domain analysis from quantum Monte Carlo data51 or serving as ansätze within Monte Carlo frameworks52, are implemented in our work in a different way and with a distinct purpose: to capture non-trivial phase-transition-related non-local interdependencies within the data arising from locally trapped states from variational quantum circuits. We show that our protocol can identify critical points and discern candidate order parameters associated with the phase transitions. Notably, for the 1D TFIM, our approach outperforms traditional magnetization measurements and, through the application of the finite-depth extrapolation technique, our approach determines the critical point of the model’s quantum phase transition with high accuracy despite the maximum fidelity achieved by our shallow variational circuits near this point being only 0.75. In the 2D TFIM, we show that our algorithm accurately predicts phase transitions despite considerable gate noise, with validation provided through noisy experiments on Rigetti’s Ankaa 9Q-1 quantum device. 
Finally, for the eSSH model, we demonstrate that our method effectively learns an order parameter with minimal finite-size effects, yielding more precise critical-point estimates than those from the finite-size partial-reflection many-body topological invariant22.

While our demonstrations in some scenarios employ relatively shallow variational circuits and local observables—raising potential concerns about classical simulability in certain regimes53,54—our approach is not restricted to this setting. In practice, polynomial- or even logarithmic-depth circuits may remain classically intractable for large systems, particularly in the presence of long-range interactions or complex connectivity. Rather than making a formal claim of quantum advantage, our primary goal is to show that reliable phase transition detection remains possible even if the variational circuit does not closely approximate the ground state. We discuss the interplay between our method and potential classical simulability, along with other limitations, in the Discussion. Crucially, although many previous studies have focused on data derived from exact ground states for theoretical and numerical analyses, our methodology demonstrates that reliable phase transition detection can be achieved using variationally optimized local minima states. These states capture the essential physics even at shallow circuit depths, despite their deviation from the exact ground state.

Results

In this section, we apply our algorithm, described in Section METHODS, to study quantum phase transitions in various quantum models. We begin with the 1D transverse-field Ising model (TFIM) with periodic boundary conditions. This model, particularly well-suited for applying our finite-depth extrapolation method, allows us to verify the exponential scaling of Eq. (38) and to test the algorithm’s resilience to shot noise. Subsequently, our focus shifts to the 2D TFIM with open boundaries, explored through both numerical analysis and experiments on Rigetti’s Ankaa 9Q-1 quantum computer, where we assess the algorithm’s robustness to moderate levels of gate noise in our tested settings. Finally, we examine the non-integrable extended Su-Schrieffer-Heeger (eSSH) model55,56, characterized by its topological phase transitions. We apply the LASSO-based algorithm to the first two models, where phase transitions are identifiable through local order parameters. In contrast, the eSSH model, which lacks straightforward local order parameters, is analysed using a Set Transformer-based approach. This method is effective at identifying complex, non-local interdependencies and directly learning non-linear order parameters from the data. For each configuration considered, we initiate the optimization process with a single set of starting values for ζ and η, subsequently employing the Broyden-Fletcher-Goldfarb-Shanno algorithm57,58,59,60 to obtain local minima states. The quantum circuits are simulated using the PennyLane software framework61, with all loss functions being linearly normalized within the range [0, 1].

1D Transverse-field Ising Model

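The initial subject of our investigation is the 1D TFIM with periodic boundary conditions, described by the following Hamiltonian:

\({{\mathcal{H}}}_{{\rm{TI-1D}}}=-J\mathop{\sum }\nolimits_{j = 1}^{n}{\sigma }_{j}^{z}{\sigma }_{j+1}^{z}-g\mathop{\sum }\nolimits_{j = 1}^{n}{\sigma }_{j}^{x},\qquad {\sigma }_{n+1}^{z}\equiv {\sigma }_{1}^{z}.\)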

The first term describes the interaction between neighbouring spins along the chain and the coupling constant J quantifies the strength of this interaction. The second term represents the influence of a transverse magnetic field applied perpendicular to the direction of spin-spin interaction. The competition between these two terms leads to a quantum phase transition at the critical point g = J1,6. For g < J, the spin-spin interaction dominates, resulting in a phase where spins are ordered. For g > J, the transverse field dominates, leading to a disordered phase where spins align with the field. Usually, this transition is characterized by measuring the magnetization in the x-direction, \({m}_{x}=1/n\mathop{\sum }\nolimits_{j = 1}^{n}{\sigma }_{j}^{x}\), where n is the number of spins in the system. In what follows, the coupling strength J is set to be 1.
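As a small numerical illustration of the competition between the two terms, the sketch below builds the periodic chain as a dense matrix and evaluates the ground-state x-magnetization by exact diagonalization. It assumes the sign convention −J σzσz − g σx and n ≥ 3; the helper names (`op_on`, `tfim_1d`, `ground_state_mx`) are illustrative and not taken from the original work.

```python
import numpy as np

I2 = np.eye(2)
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])

def op_on(site_ops, n):
    """Tensor product with the given 2x2 matrices on chosen sites, identity elsewhere."""
    out = np.array([[1.0]])
    for j in range(n):
        out = np.kron(out, site_ops.get(j, I2))
    return out

def tfim_1d(n, g, J=1.0):
    """Dense periodic-chain Hamiltonian (assumed convention: -J ZZ - g X), n >= 3."""
    H = np.zeros((2**n, 2**n))
    for j in range(n):
        H -= J * op_on({j: Z, (j + 1) % n: Z}, n)  # nearest-neighbour coupling
        H -= g * op_on({j: X}, n)                  # transverse field
    return H

def ground_state_mx(n, g):
    """Ground-state expectation value of m_x = (1/n) sum_j sigma^x_j."""
    _, vecs = np.linalg.eigh(tfim_1d(n, g))
    psi = vecs[:, 0]  # eigenvector of the lowest eigenvalue
    Mx = sum(op_on({j: X}, n) for j in range(n)) / n
    return float(psi @ Mx @ psi)
```

For a short chain, `ground_state_mx(6, g)` grows from small values at g < J towards 1 at g ≫ J, but the crossover is smooth at such sizes, which is why a sharper indicator than m_x is useful.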

Our approach employs the Hamiltonian variational ansatz, as detailed in refs. 62,63, parameterized by β, γ (see Section Algorithm):

where \({{\mathcal{H}}}_{zz}\) and \({{\mathcal{H}}}_{x}\) correspond to the sum of \({\sigma }_{j}^{z}{\sigma }_{j+1}^{z}\) and \({\sigma }_{j}^{x}\), respectively. As described in section Algorithm, β, γ are represented by a Fourier series, with Fourier coefficients \(\tilde{{\boldsymbol{\beta }}}\) and \(\tilde{{\boldsymbol{\gamma }}}\) expressed as polynomials of g up to the fourth order,

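The vectors ζ and η are then optimized to minimize the sum, over the optimization grid, of the energy expectation values of the Hamiltonian \({\mathcal{H}}(g)\):

\(\mathop{\sum }\nolimits_{g\in {{\mathcal{G}}}_{{\rm{opt}}}}{\rm{Tr}}\left[{\mathcal{H}}(g)\,\rho ({\boldsymbol{\zeta }},{\boldsymbol{\eta }};g)\right],\)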

where ρ(ζ, η; g) are the optimized states and \({{\mathcal{G}}}_{{\rm{opt}}}=\{{g}_{\min },{g}_{\min }+\delta g,\ldots ,{g}_{\max }\}\), with δg the optimization sampling resolution, is the optimization grid. The global optimization approach we adopt here ensures better stability and allows us to generate optimized states for any value of g. This is particularly useful in training the machine learning algorithm to detect the system’s quantum phase transitions, where we prepare optimized states ρ(ζ, η; g) for all the values of g in the detection grid \({{\mathcal{G}}}_{\det }=\{{\hat{g}}_{\min },{\hat{g}}_{\min }+\delta \hat{g},\ldots ,{\hat{g}}_{\max }\}\), with \(\delta \hat{g}\) the detection sampling resolution. See section Algorithm for more details.

For the optimization process of the 1D TFIM, we choose \({g}_{\min }=0\) and \({g}_{\max }=2\), with a sampling resolution of δg = 0.1. The detection grid spans from \({\hat{g}}_{\min }=0.57\) to \({\hat{g}}_{\max }=1.13\), with a finer sampling resolution of \(\delta \hat{g}=0.001\). As long as n ≥ 2p + 2, the optimized local observable expectation values are ensured to remain consistent for infinitely large n. Beginning with p = 1 and n = 4, we sample initial values for ζ and η from a narrow range \((-{10}^{-3},{10}^{-3})\), then we iteratively adjust their values to minimize the energy sum over \({{\mathcal{G}}}_{{\rm{opt}}}\). We found that different samples of the initial values of ζ and η within this near-zero range do not lead to significant differences in the outcomes. Hence, we present results based on a single random sample in subsequent analyses.
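The energy sum over the optimization grid can be sketched with a plain NumPy statevector simulation. This is a minimal sketch under stated assumptions: the layer ordering (a σzσz layer followed by a σx layer, acting on the product state |+⟩ⁿ), the sign convention −J σzσz − g σx, and the callback `param_fn` that maps g to per-layer angles are all illustrative choices, not the parameterization used in the original work.

```python
import numpy as np

def zz_diagonal(n):
    """Diagonal of H_zz = sum_j Z_j Z_{j+1} (periodic, n >= 3) in the computational basis."""
    idx = np.arange(2**n)
    bits = (idx[:, None] >> np.arange(n)) & 1
    z = 1 - 2 * bits
    return (z * np.roll(z, -1, axis=1)).sum(axis=1).astype(float)

def apply_x_layer(psi, beta, n):
    """Apply exp(-i beta X) to every qubit, i.e. exp(-i beta H_x)."""
    t = psi.reshape([2] * n)
    for q in range(n):
        t = np.cos(beta) * t - 1j * np.sin(beta) * np.flip(t, axis=q)
    return t.reshape(-1)

def hva_state(betas, gammas, n):
    """Assumed ansatz: prod_l exp(-i beta_l H_x) exp(-i gamma_l H_zz) |+>^n."""
    zz = zz_diagonal(n)
    psi = np.full(2**n, 2 ** (-n / 2), dtype=complex)
    for beta, gamma in zip(betas, gammas):
        psi = np.exp(-1j * gamma * zz) * psi
        psi = apply_x_layer(psi, beta, n)
    return psi

def energy(psi, g, n, J=1.0):
    """<psi| (-J H_zz - g H_x) |psi> for the assumed sign convention."""
    t = psi.reshape([2] * n)
    x_total = sum(np.vdot(t, np.flip(t, axis=q)).real for q in range(n))
    return float(-J * np.sum(zz_diagonal(n) * np.abs(psi) ** 2) - g * x_total)

# Grids quoted in the text: 21 optimization points, 561 detection points.
G_opt = np.linspace(0.0, 2.0, 21)
G_det = np.linspace(0.57, 1.13, 561)

def energy_sum(param_fn, n, grid=G_opt):
    """Objective summed over the grid; param_fn(g) returns (betas, gammas)."""
    return sum(energy(hva_state(*param_fn(g), n), g, n) for g in grid)
```

With all angles set to zero the state stays at |+⟩ⁿ, so each grid point contributes −g·n; this gives a quick sanity check before handing `energy_sum` to an optimizer such as BFGS.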

Upon convergence, we prepare states for each g in \({{\mathcal{G}}}_{\det }\), measuring expectation values for all Pauli terms with weight smaller than 3:

Then we employ a sliding window with window size w = 30 and LASSO regression with an adaptive regularization parameter λ to locate the phase transition critical point. In practical scenarios, one might use the technique of classical shadows to obtain such local observable expectations simultaneously64.
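The sliding-window scan can be illustrated on synthetic data. The sketch below is one plausible reading and not the authors' exact procedure, which is specified in their Methods: inside each window of w consecutive points the left half is labelled −1 and the right half +1, an L1-regularized linear fit is run on the centred features, and the window with the lowest loss marks the candidate critical point. The coordinate-descent solver, the labelling scheme, the fixed λ, and the three synthetic features are all assumptions made for illustration.

```python
import numpy as np

def soft_threshold(a, t):
    return np.sign(a) * max(abs(a) - t, 0.0)

def lasso_cd(X, y, lam, sweeps=50):
    """Minimise (1/2n)||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent."""
    n, d = X.shape
    b = np.zeros(d)
    col_sq = (X ** 2).sum(axis=0) / n
    r = y.astype(float).copy()          # residual y - X b
    for _ in range(sweeps):
        for j in range(d):
            if col_sq[j] < 1e-12:       # feature is constant inside this window
                continue
            r += X[:, j] * b[j]
            rho = X[:, j] @ r / n
            b[j] = soft_threshold(rho, lam) / col_sq[j]
            r -= X[:, j] * b[j]
    return b

def sliding_window_loss(g, F, w=30, lam=0.1):
    """Scan windows of w points; return window centres, LASSO losses and coefficients."""
    y = np.concatenate([-np.ones(w // 2), np.ones(w - w // 2)])
    centres, losses, coefs = [], [], []
    for s in range(len(g) - w + 1):
        Xw = F[s:s + w] - F[s:s + w].mean(axis=0)
        b = lasso_cd(Xw, y, lam)
        resid = y - Xw @ b
        losses.append(0.5 * np.mean(resid ** 2) + lam * np.abs(b).sum())
        centres.append(g[s:s + w].mean())
        coefs.append(b)
    return np.array(centres), np.array(losses), np.array(coefs)

# Synthetic features (not data from the paper): column 0 changes sharply at g = 1,
# columns 1 and 2 vary smoothly and carry no transition signal.
g = np.linspace(0.57, 1.13, 561)
F = np.column_stack([np.tanh((g - 1.0) / 0.005), g, np.sin(5 * g)])
centres, losses, coefs = sliding_window_loss(g, F)
k = int(np.argmin(losses))
g_est = centres[k]                              # location of the loss valley
selected = int(np.argmax(np.abs(coefs[k])))     # feature picked at the valley
```

A relatively large λ zeroes out the smooth features, so the coefficient vector at the valley singles out one column, mirroring how a single observable emerges as the candidate order parameter.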

In the process of adapting and utilizing optimized parameters for further analysis, we simultaneously increase both p and n, specifically,

\(p\to p+1,\quad n\to n+2,\)

thereby extending \(\tilde{{\boldsymbol{\beta }}}\) and \(\tilde{{\boldsymbol{\gamma }}}\) with zero initial values to accommodate the expanded circuit and system size. This procedure is iteratively executed in numerical simulations, enabling the prediction of critical points \({\tilde{g}}_{c}\) for various values of p. The recursive optimization strategy is depicted in Fig. 2. Instead of setting n = 2p for producing the exact ground states63, our simulations focus on configurations where n ≥ 2p + 2 (in practice, n = 2p + 2), aiming to understand the finite-depth effects and explore the applications of finite-depth extrapolation discussed in Section Finite-Depth Extrapolation.
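The warm-start step of this recursion can be sketched as follows. The array layout is an assumption made for illustration: ζ and η are stored as arrays of shape (p, 5), with one row per Fourier coefficient holding its polynomial coefficients in g up to fourth order, and growing the circuit appends one zero row. The actual bookkeeping in the original implementation may differ.

```python
import numpy as np

POLY_ORDER = 4  # Fourier coefficients are polynomials in g up to fourth order

def fourier_coefficients(zeta, g):
    """Evaluate every Fourier coefficient at field g; zeta has shape (p, POLY_ORDER + 1)."""
    powers = g ** np.arange(POLY_ORDER + 1)
    return zeta @ powers

def grow(zeta, eta, n):
    """One recursion step (p -> p + 1, n -> n + 2): pad with a zero row, keep old values."""
    pad = np.zeros((1, POLY_ORDER + 1))
    return np.vstack([zeta, pad]), np.vstack([eta, pad]), n + 2

# Start at p = 1, n = 4 with near-zero initial values, as in the text.
rng = np.random.default_rng(0)
zeta = rng.uniform(-1e-3, 1e-3, size=(1, POLY_ORDER + 1))
eta = rng.uniform(-1e-3, 1e-3, size=(1, POLY_ORDER + 1))
n = 4
first_row = zeta[0].copy()
for _ in range(6):  # reach p = 7; re-optimization between steps is omitted here
    zeta, eta, n = grow(zeta, eta, n)
```

Because the new row starts at zero, the enlarged circuit initially reproduces the previous parameter values, which gives the optimizer a starting point no worse than the last converged one.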

Although setting p = n/2 allows for the exact preparation of ground states as explored in63, our study focuses on the dynamics of locally trapped states under conditions where n ≥ 2p + 2, thus examining the effects of finite circuit depth. Importantly, for any given p, systems with n ≥ 2p + 2 can share the same optimized parameters. In our simulations, we specifically adopt n = 2p + 2 for optimization, as highlighted by the dotted dark green arrow, to maintain a consistent approach across different system sizes.

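Numerical findings are illustrated in Fig. 3. Panel (a) shows the fidelity between optimized quantum states and true ground states, defined by

\({\mathcal{F}}(g)=\left\langle {\psi }_{{\rm{gs}}}(g)\right|\rho ({\boldsymbol{\zeta }},{\boldsymbol{\eta }};g)\left|{\psi }_{{\rm{gs}}}(g)\right\rangle ,\)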

for p = 2, 3, ⋯ , 7 and n = 18 across the detection grid \({{\mathcal{G}}}_{\det }\). Fidelities at the critical point gc = 1 are recorded as 0.58, 0.68, 0.75, 0.80, 0.84, and 0.87, respectively. Near g ≈ 0, fidelities fluctuate considerably due to the small spectral gap present in this region, where optimization struggles to consistently identify states close to the true ground state. Despite the optimized quantum states achieving relatively stable low energy, the fidelity to the ground state remains inconsistent. Nevertheless this region is distant from gc = 1 and minimally impacts our findings. For g ≫ 1, even at p = 2, the fidelities significantly increase, as the ground states are close to the initial product state. The inset of panel (a) displays the derivative of fidelity, \(\partial {\mathcal{F}}/\partial g\), as a function of g, revealing that the peak does not align with the thermodynamic critical point and shifts away from it as p increases. Panel (b) presents the LASSO regression’s loss landscape, highlighting the minimum point which correlates with the anticipated phase transition critical point \({\tilde{g}}_{c}\). Utilizing a relatively large regularization parameter λ sharpens the valleys within the loss landscape, leading to the selection of a singular observable as the order parameter at the valley’s lowest point. The LASSO selected order parameter here is

which captures the long-range correlations within the system, yielding a more precise prediction of the critical point than the traditional x-direction magnetization, mx.

a The fidelity between optimized states and the true ground states, evaluated across varying p and g values, with the system size fixed at n = 18. The inset shows the fidelity derivatives. b The normalized loss landscape generated by LASSO regression, with the minimum point indicating the predicted phase transition critical point \({\tilde{g}}_{c}(p)\). c The exponential decay trend of the difference between \({\tilde{g}}_{c}\) and the theoretical value gc = 1 as a function of p. Hollow triangles represent values derived from x-magnetization and solid triangles denote values from our loss landscape. d Finite-depth exponential extrapolation to estimate the critical point in the thermodynamic limit. The extrapolated values obtained for x-magnetization and our loss landscape are 0.921 ± 0.004 and 0.995 ± 0.002, respectively. e The average and standard deviation (represented by the shaded areas) of the predicted critical point \({\tilde{g}}_{c}\) as a function of the number of shots. In all panels, colours correspond to the different values of p defined in panels (a, b).

The exponential decay in the error between the estimated and the exact critical point as a function of the circuit depth p, as predicted by our algorithm and by mx, is shown in Fig. 3c, d. This suggests that, under certain assumptions (see Observation 1 in section Finite-Depth Extrapolation), the location of the critical point in the thermodynamic limit, gc, can be predicted by applying an exponential extrapolation approach to the results for finite depth p, \({\tilde{g}}_{c}(p)\), i.e., \({\tilde{g}}_{c}(p)={g}_{c}(p\to \infty )+c{e}^{-\nu p}\). By applying the equation above to the finite-p critical points obtained from mx, we obtain an extrapolated critical point \({\tilde{g}}_{c}(p\to \infty )=0.921\), with a mean square fitting error of 0.004. Moreover, using the finite-depth extrapolation technique in combination with our algorithm predicts \({\tilde{g}}_{c}(p\to \infty )=0.995\), yielding a mean square fitting error of 0.002, closely approximating the theoretical value of gc = 1. Compared with prior works20,24, our approach achieves stable and precise estimations of the critical point even with notably shallow circuits. For further evaluation, we applied power-law fitting using the equation \({\tilde{g}}_{c}(p)={g}_{c}(p\to \infty )+c{p}^{-\nu }\). The extrapolated gc(p → ∞) values for mx and our algorithm are \({\tilde{g}}_{c}(p\to \infty )=1.566\pm 0.003\) and \({\tilde{g}}_{c}(p\to \infty )=2.545\pm 0.004\), respectively, both significantly deviating from the theoretical value of gc = 1, further corroborating the exponential convergence of \({\tilde{g}}_{c}(p)\) to the thermodynamic limit value. Furthermore, we compute the Pearson correlation coefficient r between the estimation error \(| {\tilde{g}}_{c}-{g}_{c}|\) and the fidelity at the critical point \({\mathcal{F}}({g}_{c})\), defined for two data series \({x}_{p}\) and \({y}_{p}\) as65:

\(r=\frac{{\sum }_{p}({x}_{p}-\overline{x})({y}_{p}-\overline{y})}{\sqrt{{\sum }_{p}{({x}_{p}-\overline{x})}^{2}}\sqrt{{\sum }_{p}{({y}_{p}-\overline{y})}^{2}}}.\)

p indexes data from different circuit depths, and overlines denote mean values over different p values. Variables exhibit strong linear correlation for r ≈ 1, strong inverse correlation for r ≈ − 1, and no correlation for r ≈ 0. Our analysis reveals a Pearson correlation coefficient r = 0.999 between \(1-{\mathcal{F}}({g}_{c})\) and \(| {\tilde{g}}_{c}-{g}_{c}|\) with \({\tilde{g}}_{c}\) obtained from our loss landscape; and r = 0.998 between \(1-{\mathcal{F}}({g}_{c})\) and \(| {\tilde{g}}_{c}-{g}_{c}|\) with \({\tilde{g}}_{c}\) from mx. All these results support a robust, approximately linear relationship between the estimation error \(| {\tilde{g}}_{c}-{g}_{c}|\) and the infidelity \(1-{\mathcal{F}}({g}_{c})\).
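The finite-depth extrapolation and the correlation analysis can be sketched in a few lines of NumPy. The fit below scans the decay rate ν on a grid and solves a linear least-squares problem for the remaining two parameters; this is a simple stand-in chosen for illustration, not necessarily the fitting routine used in the original work. The critical-point values in the example are synthetic; only the fidelities 0.58 to 0.87 are taken from the text.

```python
import numpy as np

def fit_exponential(p, gc_p, nus=None):
    """Fit gc_p ~ g_inf + c * exp(-nu * p): scan nu, solve least squares for (g_inf, c)."""
    if nus is None:
        nus = np.linspace(0.05, 3.0, 591)
    best = None
    for nu in nus:
        A = np.column_stack([np.ones(len(p)), np.exp(-nu * p)])
        sol, *_ = np.linalg.lstsq(A, gc_p, rcond=None)
        sse = float(np.sum((A @ sol - gc_p) ** 2))
        if best is None or sse < best[0]:
            best = (sse, sol[0], sol[1], nu)
    _, g_inf, c, nu = best
    return float(g_inf), float(c), float(nu)

def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long data series."""
    dx, dy = x - x.mean(), y - y.mean()
    return float(np.sum(dx * dy) / np.sqrt(np.sum(dx ** 2) * np.sum(dy ** 2)))

# Synthetic finite-depth estimates (illustration only, not the paper's data).
p = np.arange(2, 8)
gc_synth = 1.0 - 0.5 * np.exp(-0.4 * p)
g_inf, c, nu = fit_exponential(p, gc_synth)

# Infidelities at g_c for p = 2..7, using the fidelities quoted in the text.
infidelity = 1.0 - np.array([0.58, 0.68, 0.75, 0.80, 0.84, 0.87])
r_demo = pearson_r(infidelity, np.abs(gc_synth - 1.0))
```

Replacing `np.exp(-nu * p)` with `p ** (-nu)` in the design matrix gives the power-law alternative, so the two models can be compared on the same data by their residuals.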

It is important to note that for panels (a) through (d) in Fig. 3, exact calculations of measurement expectations were employed, thereby excluding potential circuit or measurement errors. To simulate the constraints encountered in experimental setups due to finite measurements, we performed 100 numerical trials. In each trial, we used a finite number of measurement shots to estimate the expectation values of local observables within \({{\mathcal{P}}}_{f}\). The average and the standard deviation of the predicted critical point \({\tilde{g}}_{c}\) as a function of the number of shots are shown in Fig. 3e. Remarkably, even with a relatively modest number of shots, approximately 5000, the standard deviation remains minimal, as depicted by the shaded area, underscoring the protocol’s robustness to shot noise and feasibility for practical quantum experiments.
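The effect of a finite shot budget on a single Pauli expectation value can be sketched as below. This is a minimal model, assuming independent projective measurements with outcomes ±1; it does not reproduce the full pipeline (many observables, followed by the LASSO scan), and the example expectation value 0.3 is arbitrary.

```python
import numpy as np

def estimate_pauli(expval, shots, rng):
    """Simulate `shots` measurements of a +/-1-valued observable with mean `expval`."""
    p_plus = 0.5 * (1.0 + expval)
    n_plus = rng.binomial(shots, p_plus)
    return 2.0 * n_plus / shots - 1.0

def standard_error(expval, shots):
    """Analytic standard deviation of the shot-based estimator."""
    return np.sqrt((1.0 - expval ** 2) / shots)

rng = np.random.default_rng(0)
trials = np.array([estimate_pauli(0.3, 5000, rng) for _ in range(100)])
```

At 5000 shots the per-observable standard error is at most 1/√5000 ≈ 0.014, which gives a rough scale for the statistical noise entering the features.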

As we will discuss in Algorithm, our algorithm can identify the most dominant feature that serves as an order parameter to characterize the phase transition. As an interesting extension, we can iteratively refine this process by manually removing the currently identified feature, re-running the algorithm to find the next dominant feature, and repeating this process until no stable central-minima loss valley is observed. This approach allows us to identify a series of order parameters that provide estimates of the critical point. We implement this iterative approach for optimized states from quantum circuits with depths p = 4 and p = 7, identifying the following symmetric order parameters:

where d = 1, 2, …, p + 1, and

where d = 2, 3, …, p + 1. The estimated critical points obtained from these learned order parameters over all the possible values of d are shown in Fig. 4. Each order parameter provides a more precise estimate of the critical point compared to traditional x-magnetization. Notably, even for these states with fidelities of only 0.75 (p = 4) and 0.87 (p = 7) at the critical point, \({{\mathcal{O}}}_{{\rm{zz}}}\) and \({{\mathcal{O}}}_{{\rm{yz}}}\) provide accurate estimates of the critical point when d ≥ 4: \(0.98\,\lesssim\, {\tilde{g}}_{c}\,\lesssim\, 1.03\) without the need for extrapolation. Additionally, we notice that the difference between the estimated critical point \({\tilde{g}}_{c}\) and the theoretical value gc = 1 tends to diminish as d increases. Furthermore, for relatively small values of d, extrapolation in p can be employed to refine the critical point estimation.

a Results for optimized states at circuit depth p = 4, with the average of \({{\mathcal{O}}}_{{\rm{xx}}}(d)\) estimating the critical point as \({\tilde{g}}_{c}=0.83\pm 0.05\), the average of \({{\mathcal{O}}}_{{\rm{zz}}}(d)\) as \({\tilde{g}}_{c}=0.96\pm 0.03\), and the average of \({{\mathcal{O}}}_{{\rm{yz}}}(d)\) as \({\tilde{g}}_{c}=0.97\pm 0.06\). b Results for circuit depth p = 7. The average of \({{\mathcal{O}}}_{{\rm{xx}}}(d)\) estimates the critical point as \({\tilde{g}}_{c}=0.94\pm 0.03\), the average of \({{\mathcal{O}}}_{{\rm{zz}}}(d)\) as \({\tilde{g}}_{c}=1.00\pm 0.02\), and the average of \({{\mathcal{O}}}_{{\rm{yz}}}(d)\) as \({\tilde{g}}_{c}=0.99\pm 0.04\). In both panels, dashed lines indicate the average critical point estimates across different d values while the dotted lines display the critical point estimate obtained from the magnetization mx.

Additionally, we investigate the influence of noise on the optimization of the variational parameters. Our numerical simulations on the 1D TFIM (detailed in the Supplementary Material (SM)) reveal that even when the optimization is performed on noisy quantum circuits—where each 2-qubit gate is immediately followed by a local depolarizing noise channel with error rate ϵ(DP) = 0.01—the resulting optimized parameters are only marginally affected. Both the output fidelity as a function of g and the LASSO loss landscapes for circuit depths p = 2 and p = 3 show only slight degradations compared to the noiseless case. Notably, the learned order parameter remains \({{\mathcal{O}}}_{{\rm{ml}}}=(1/n){\sum }_{j}{\sigma }_{j}^{x}{\sigma }_{j+2}^{x}\), and the critical point estimates derived from the loss landscapes differ only minimally. These results indicate that our algorithm is robust to moderate hardware noise during the optimization process.

2D Transverse-field Ising Model

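We next analyse a 2D TFIM consisting of a 3 × 3 qubit grid with open boundary conditions, described by the Hamiltonian

\({{\mathcal{H}}}_{{\rm{TI-2D}}}=-\mathop{\sum }\limits_{\langle ij\rangle }{\sigma }_{i}^{z}{\sigma }_{j}^{z}-g\mathop{\sum }\limits_{j}{\sigma }_{j}^{x},\)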

where 〈ij〉 indicates nearest neighbour qubits. We aim to utilize the developed algorithm to accurately estimate the phase transition critical point of this system, both through numerical simulations and experimental implementation.

To address the challenges posed by the varying roles of qubits and edges in the 2D lattice, we adopt a modified Hamiltonian variational ansatz. This approach specifically accounts for the distinct interactions and qubit positions as follows:

Here, \({{\mathcal{H}}}_{zz}\) and \({{\mathcal{H}}}_{zz}^{{\prime} }\) correspond to the sum of \({\sigma }_{j}^{z}{\sigma }_{j+1}^{z}\) for edges not involving and involving the central qubit (Q4), respectively. \({{\mathcal{H}}}_{x}\), \({{\mathcal{H}}}_{x}^{{\prime} }\), \({{\mathcal{H}}}_{x}^{{\prime\prime} }\) denote the sum of \({\sigma }_{j}^{x}\) operators for distinct sets of qubits: {Q0, Q2, Q6, Q8}, {Q1, Q3, Q5, Q7}, and {Q4}, respectively. This ansatz, visually depicted in Fig. 5(a), is specifically designed to respect the inherent reflection and rotational symmetries of the lattice, ensuring that the variational parameters are symmetrically adapted to the physical layout and interaction dynamics of the system.

a Quantum circuit design utilizing a tailored Hamiltonian variational ansatz that accommodates the distinct interactions within the grid. b Details of the four measurement protocols employed to measure the expectation values of one-weight and two-weight Pauli terms, using specific single-qubit unitaries for basis rotation before projective measurements. c Derivatives of the relative spectral gap and ground state entanglement entropy, pointing to a probable phase transition with a critical point between 1.68 and 1.84, contrasted against the derivative peak of x-magnetization at 1.34. d Loss minimization results from both computational and experimental analyses, pinpointing an estimate of the phase transition critical point at 1.60, which is closer to the values given by the relative spectral gap and entanglement entropy. The inset highlights the derivative of the learned order parameter 〈Oml〉, demonstrating the algorithm’s capability to predict critical points amidst experimental data variability. e Loss minimization results under various simulated amplitude damping (AD) and depolarizing (DP) noise rates, revealing the algorithm’s robustness to noise. The inset illustrates the critical point’s sensitivity to increased noise rates, with a notable shift in \({\tilde{g}}_{c}\) values, which is also accompanied by the selection of another order parameter.

To facilitate the estimation of expectation values for most Pauli terms in \({{\mathcal{P}}}_{f}\), which consist of weight one and two operators up to rotation and reflection symmetries, we implement four distinct measurement protocols, detailed in Fig. 5b. For qubits labeled with X, Y, and Z, pre-measurement single-qubit unitaries are applied to rotate the measurement basis accordingly, followed by projective measurements. This methodology ensures comprehensive analysis and measurement across the qubit array.

Before implementing our algorithm across a range of g values, we examine two metrics which have been used to analyse quantum phase transitions in the literature66,67,68,69: the relative spectral gap, defined as ∣(E1 − Egs)/Egs∣ where Egs represents the ground state energy and E1 denotes the first excited state energy of \({{\mathcal{H}}}_{{\rm{TI-2D}}}\), and the ground state entanglement entropy, with a focus on the entanglement between the central qubit, Q4, and its complement, \({Q}_{4}^{c}\), within the system. This is expressed as

\(S({Q}_{4})=-{\rm{Tr}}\left({\rho }_{{Q}_{4}}\log {\rho }_{{Q}_{4}}\right),\)

where \({\rho }_{{Q}_{4}}\) is the reduced density matrix for Q4, defined as \({\rho }_{{Q}_{4}}={\text{Tr}}_{{Q}_{4}^{c}}(| {\psi }_{{\rm{gs}}}\left.\right\rangle \left\langle \right.{\psi }_{{\rm{gs}}}| )\). Here, \(| {\psi }_{{\rm{gs}}}\left.\right\rangle\) denotes the ground state of \({{\mathcal{H}}}_{{\rm{TI-2D}}}\).
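The single-qubit entanglement entropy can be computed directly from a statevector by tracing out the complement. The sketch below assumes a pure state stored as a length-2ⁿ vector, the natural logarithm, and a qubit-ordering convention in which index q labels the q-th tensor factor (most significant bit first); these conventions are illustrative choices.

```python
import numpy as np

def single_qubit_entropy(psi, n, q):
    """Von Neumann entropy between qubit q and the remaining n - 1 qubits."""
    # Bring qubit q to the front and flatten the rest: shape (2, 2^(n-1)).
    t = np.moveaxis(psi.reshape([2] * n), q, 0).reshape(2, -1)
    rho = t @ t.conj().T                 # reduced density matrix of qubit q
    evals = np.linalg.eigvalsh(rho)
    evals = evals[evals > 1e-12]         # drop zeros: 0 * log 0 -> 0
    return float(-np.sum(evals * np.log(evals)))

# Two reference states on a 3 x 3 grid (9 qubits), with Q4 the central qubit.
n = 9
product = np.zeros(2**n)
product[0] = 1.0
ghz = np.zeros(2**n)
ghz[0] = ghz[-1] = 1 / np.sqrt(2)
s_product = single_qubit_entropy(product, n, 4)
s_ghz = single_qubit_entropy(ghz, n, 4)
```

A product state gives zero entropy and a GHZ-type state gives ln 2, the maximum for a single qubit; evaluating the function on ground states over a grid of g yields the curve whose derivative is examined for peaks.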

In our exploration, the relative spectral gap and the entanglement entropy serve as indicators of shifts in the global properties of the ground state, as opposed to merely reflecting changes in local order parameters. Consequently, in comparison to a local order parameter such as the magnetization, these metrics are less affected by finite-size effects and emerge as more sensitive probes for detecting phase transitions within small systems. By computing the derivatives of these metrics, we identify peaks at 1.84 and 1.68, respectively, as illustrated in Fig. 5c. These peaks hint at a likely phase transition with critical point located near 1.68~1.84. In contrast, the derivative of the x-magnetization, \({m}_{x}=1/n\mathop{\sum }\nolimits_{j = 1}^{n}{\sigma }_{j}^{x}\), exhibits a sharp decline at 1.34, markedly deviating from the aforementioned range. Previous numerical studies have determined that the 2D TFIM undergoes a quantum phase transition at a critical point of approximately 3.04438 in the thermodynamic limit70,71. In the infinite-size limit, predictions of the critical point from relative energy gap, entanglement entropy, and x-magnetization are expected to converge to this value. However, owing to current hardware constraints, our analysis in this part is confined to a 3 × 3 qubit system. To further validate our protocol, we extend our numerical investigations to larger system sizes with periodic boundary conditions in the SM. For the 3 × 3 system considered here, estimates of the critical point from the peaks in the relative energy gap and entanglement entropy are also closer to the exact value and confirm the enhanced sensitivity of these probes with respect to a local order parameter. Since obtaining a precise estimate of the critical point would require a finite-size (or depth) extrapolation, which is beyond the scope of this work, in the following we will assume the range 1.68~1.84 as a benchmark for our protocol. 
If an order parameter yields an estimate that lies within this benchmark range, or beyond it towards the thermodynamic-limit value, it converges more rapidly and is less affected by finite-size effects, and we therefore consider that order parameter to be superior.
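The derivative-peak criterion used above can be illustrated with a short numerical sketch. The curve below is synthetic (a smooth step centred at a hypothetical g = 1.7) and is not the data of Fig. 5c; the use of `np.gradient` (central differences) is an assumption about the differentiation scheme, and `derivative_peak` is a hypothetical helper name.

```python
import numpy as np

def derivative_peak(g, y):
    """Return the grid point where |dy/dg| is largest, using central differences."""
    dy = np.gradient(y, g)              # central differences in the interior of the grid
    return g[np.argmax(np.abs(dy))]

# Synthetic, purely illustrative metric with a smooth step at g = 1.7
g = np.linspace(0.0, 3.0, 301)
metric = np.tanh(4.0 * (g - 1.7))
g_peak = derivative_peak(g, metric)
```

On a real data set the same function would be applied separately to the relative gap, the entanglement entropy, and the magnetization, so that the resulting peak positions can be compared.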

Proceeding to quantum optimization, we employ a shallow quantum circuit with p = 2. Implementing our algorithm, we carry out numerical simulations over the optimization grid points \({{\mathcal{G}}}_{{\rm{opt}}}=\{0,0.1,0.2,\ldots ,3\}\). Then, we train the LASSO regressor over the detection grid \({{\mathcal{G}}}_{\det }=\{0,0.01,0.02,\ldots ,3\}\) for numerical simulations and use \({{\mathcal{G}}}_{\det }={{\mathcal{G}}}_{{\rm{opt}}}\) for experiments conducted on the Rigetti Ankaa-9Q-1 machine72. For the former detection grid, the fidelity between the noiseless ideal output states and the true ground states varies from 0.200 to 0.999, with a mean fidelity of 0.987, indicating a minimal optimization deficit. The chosen window sizes are w = 40 for numerical data and w = 4 for experimental data.

The Ankaa-9Q-1 device’s performance metrics, documented around the time of the experiment, are detailed in Table 1. Our initial circuit configuration includes 24 Rzz rotation gates, 18 Rx rotation gates, and 9 additional single-qubit gates preceding measurements. However, the compiled circuits exhibit variability in the quantity and type of gates, contingent upon different parameters and measurements. On average, each compiled circuit incorporates 26 CZ gates, 26 iSWAP gates, 127 Rx gates, and 207 Rz gates. To compute expectation values we used 30,000 shots per circuit, divided across three rounds with 10,000 shots each. It is important to highlight that in this experiment, neither gate error nor readout error mitigation techniques were applied.

Both numerical and experimental investigations reveal a loss minimum at 1.6, as depicted in Fig. 5d, offering a refinement over estimates derived from mx. This also shows that our algorithm can accurately extract phase transition information without necessitating error mitigation. By integrating symmetry considerations into our assessment, we find the following learned order parameter

The inset of Fig. 5d showcases the derivative of \(\langle {{\mathcal{O}}}_{{\rm{ml}}}\rangle\) with respect to g, calculated using next-nearest values. Additionally, we assess the impact of hardware noise on \({{\mathcal{P}}}_{f}\), analysed under the quasi-GD noise approximation outlined in Theorem 1. For the purposes of our algorithm, the influence of hardware noise on \({{\mathcal{P}}}_{f}\) resembles a global depolarizing noise channel Λϵ( ⋅ ) with a noise rate of ϵ = 0.773. The average discrepancy between the ideal and the linearly rescaled noisy features is 0.047.
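To make the comparison between ideal and "linearly rescaled noisy" features concrete, the following minimal sketch assumes the convention Λϵ(ρ) = (1 − ϵ)ρ + ϵ I/2^n, under which every traceless Pauli expectation value is multiplied by (1 − ϵ). The least-squares fit, the helper name `fit_global_depolarizing`, and the synthetic data are illustrative assumptions; the actual procedure behind Theorem 1 is not reproduced here.

```python
import numpy as np

def fit_global_depolarizing(ideal, noisy):
    """Fit noisy ~ (1 - eps) * ideal and report the mean residual after rescaling."""
    ideal = np.asarray(ideal, dtype=float)
    noisy = np.asarray(noisy, dtype=float)
    scale = float(ideal @ noisy / (ideal @ ideal))   # least-squares slope through the origin
    eps = 1.0 - scale                                # effective global depolarizing rate
    rescaled = noisy / scale                         # undo the uniform shrinkage
    discrepancy = float(np.mean(np.abs(ideal - rescaled)))
    return eps, discrepancy

# Synthetic check: features shrunk by exactly (1 - 0.3)
rng = np.random.default_rng(0)
ideal = rng.uniform(-1.0, 1.0, size=50)
noisy = (1.0 - 0.3) * ideal
eps, disc = fit_global_depolarizing(ideal, noisy)
```

A nonzero discrepancy after rescaling measures how far the real noise deviates from a purely global depolarizing channel.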

To further study the resilience to noise of our algorithm, we consider a scenario where each Rzz rotation is affected by either an amplitude damping noise channel with a damping rate ϵ(AD) or a local depolarizing noise channel with a noise rate ϵ(DP). Figure 5e illustrates that the predicted phase transition point \({\tilde{g}}_{c}\) remains approximately at 1.6 under noise levels ϵ(AD) = 0.06 or ϵ(DP) = 0.02. Specifically, under amplitude damping with ϵ(AD) = 0.06, the accumulated noise on \({{\mathcal{P}}}_{f}\) resembles a global depolarizing channel with a noise rate of ϵ = 0.317, where the average discrepancy between the ideal and the linearly rescaled noisy features is 0.074. For local depolarizing noise with ϵ(DP) = 0.02, the accumulative noise on \({{\mathcal{P}}}_{f}\) resembles a global depolarizing channel with a noise rate of ϵ = 0.244, yielding a significantly lower average discrepancy of 0.011. However, as the noise rate increases, \({\tilde{g}}_{c}\) abruptly shifts to around 1.2, indicating that higher noise levels disrupt entanglement, leading the regression model to opt for a local order parameter acting on individual, localized qubits:

resulting in a significantly less accurate estimate. The inset in Fig. 5e shows the relationship between \({\tilde{g}}_{c}\) and the noise rate for both amplitude damping and depolarizing channels, with crossover points at ϵ(AD) = 0.064 and ϵ(DP) = 0.028, respectively. In summary, our results demonstrate that the algorithm exhibits robust performance for small to moderate noise levels—i.e., the predicted phase transition point remains nearly unchanged relative to the ideal case. The observed abrupt shift at higher noise rates is consistent with the expectation that substantial noise will fundamentally alter the quantum state, thereby affecting the performance of any algorithm.

As discussed in the previous section, the iterative removal of identified dominant order parameters from the feature vectors facilitates the discovery of multiple additional order parameters. Table 2 presents the most dominant order parameter and some additional order parameters alongside the corresponding simulation and experimental results, showing a generally consistent alignment between the two.

In the SM, we investigate the critical boundary of 2D TFIM and the performance of our protocol for larger qubit grids. Applying our algorithm to the 4 × 4 system yields an estimated critical point of approximately 2.45, which is significantly closer to the thermodynamic limit value.

Extended Su-Schrieffer-Heeger Model

Now we consider a model featuring topological phase transitions, the eSSH model55,56. This model represents an extension of the SSH model proposed by Su, Schrieffer, and Heeger, to study topological phases within one-dimensional systems73. The eSSH model incorporates additional interactions beyond the nearest-neighbour hopping and, under open boundary conditions, is characterized by the Hamiltonian

where g modulates the interaction strength, δ denotes the anisotropy in the z-direction, and n denotes the system size. For our studies, n is set such that n = 4q, where \(q\in {{\mathbb{N}}}^{+}\).

In scenarios where the anisotropy parameter δ is small, increasing the coupling strength g from 0 to 1 drives a phase transition from a trivial to a topological phase within the system56. Initially, the trivial phase displays a dimerized configuration, with spins forming singlet pairs predominantly with their nearest neighbours. As g approaches 1, the system evolves into a topological phase, marked by the emergence of edge states that represent localized excitations at the system’s boundaries. Conversely, when δ exceeds a critical threshold (approximately δ⋆ = 1.6), the system’s phase diagram becomes more complex, maintaining the trivial and topological phases while also exhibiting a symmetry-broken phase characterized by antiferromagnetic ordering. Notably, for values of δ ≠ 0, 1, the model is non-integrable and lacks an analytical solution; hence, numerical and heuristic methods become essential for exploring its phase behavior.

The transitions between these phases are usually analysable through the partial reflection many-body topological invariant74

where ρI represents the density matrix of a subsystem I = I1 ∪ I2, where I1 includes qubits Qn/4, Qn/4+1, … , Qn/2−1 and I2 includes qubits Qn/2, Qn/2+1, …, Q3n/4−1, and \({{\mathcal{R}}}_{I}\) is the reflection operation within I. Note that \({\widetilde{{\mathcal{Z}}}}_{{\mathcal{R}}}\) is highly non-local and non-linear. In the thermodynamic limit, \({\widetilde{{\mathcal{Z}}}}_{{\mathcal{R}}}\) is expected to be −1 in the topological phase, 0 in the symmetry-broken phase, and 1 in the trivial phase. Therefore, the value of \({\widetilde{{\mathcal{Z}}}}_{{\mathcal{R}}}\) allows one to identify each of the three phases.

In the framework of this model, we employ the Hamiltonian variational ansatz and execute numerical optimizations through two distinct initial setups:

- Trivial phase initialization: This method begins with the initial state

$$| {\psi }_{{\rm{in}}}\left.\right\rangle =\mathop{\bigotimes }\limits_{j=0}^{n/2-1}{| {\psi }^{-}\left.\right\rangle }_{2j,2j+1},$$(20)

where \(| {\psi }^{-}\left.\right\rangle =\frac{1}{\sqrt{2}}\left(| 01\left.\right\rangle -| 10\left.\right\rangle \right)\) and the subscripts denote qubits. This state represents the ground state of \({{\mathcal{H}}}_{{\rm{eSSH}}}\) at g = 0 for any δ ≥ −1. The variational quantum circuit is then applied as follows:

$$\begin{array}{l}{U}_{{\rm{eSSH}}}^{{\rm{triv}}}({\boldsymbol{\beta }},{\boldsymbol{\gamma }})=\mathop{\prod }\limits_{j=1}^{p}\exp \left(-i\frac{{\beta }_{j}}{2}{{\mathcal{H}}}_{xy}\right)\exp \left(-i\frac{{\gamma }_{j}}{2}{{\mathcal{H}}}_{zz}\right)\\ \qquad\qquad\qquad\exp \left(-i\frac{{\beta }_{j}^{{\prime} }}{2}{{\mathcal{H}}}_{xy}^{{\prime} }\right)\exp \left(-i\frac{{\gamma }_{j}^{{\prime} }}{2}{{\mathcal{H}}}_{zz}^{{\prime} }\right),\end{array}$$(21)

where \({{\mathcal{H}}}_{xy}\) and \({{\mathcal{H}}}_{zz}\) are the summations of \({\sigma }_{j}^{x}{\sigma }_{j+1}^{x}+{\sigma }_{j}^{y}{\sigma }_{j+1}^{y}\) and \({\sigma }_{j}^{z}{\sigma }_{j+1}^{z}\) on odd edges, respectively, and \({{\mathcal{H}}}_{xy}^{{\prime} }\) and \({{\mathcal{H}}}_{zz}^{{\prime} }\) are their counterparts on even edges.

- Topological phase initialization: This approach starts with

$$| {\psi }_{{\rm{in}}}\left.\right\rangle ={| {\psi }^{-}\left.\right\rangle }_{0,n-1}\mathop{\bigotimes }\limits_{j=0}^{n/2-2}{| {\psi }^{-}\left.\right\rangle }_{2j+1,2j+2},$$(22)

corresponding to one of the four ground states of \({{\mathcal{H}}}_{{\rm{eSSH}}}\) at g = 1 for any δ ≥ −1. The variational quantum circuit for this setup is applied as:

$$\begin{array}{r}{U}_{{\rm{eSSH}}}^{{\rm{topo}}}({\boldsymbol{\beta }},{\boldsymbol{\gamma }})=\mathop{\prod }\limits_{j=1}^{p}\exp \left(-i\frac{{\beta }_{j}^{{\prime} }}{2}{{\mathcal{H}}}_{xy}^{{\prime} }\right)\exp \left(-i\frac{{\gamma }_{j}^{{\prime} }}{2}{{\mathcal{H}}}_{zz}^{{\prime} }\right)\\ \quad \exp \left(-i\frac{{\beta }_{j}}{2}{{\mathcal{H}}}_{xy}\right)\exp \left(-i\frac{{\gamma }_{j}}{2}{{\mathcal{H}}}_{zz}\right),\end{array}$$(23)

where \({{\mathcal{H}}}_{xy}\), \({{\mathcal{H}}}_{zz}\), \({{\mathcal{H}}}_{xy}^{{\prime} }\), and \({{\mathcal{H}}}_{zz}^{{\prime} }\) are defined as above.
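The two singlet-product initial states of Eqs. (20) and (22) can be written down explicitly for small n. The sketch below is only an illustration: it assumes that qubit 0 is the most significant bit of the computational-basis index, and the function names are hypothetical.

```python
import numpy as np

# Two-qubit singlet (|01> - |10>)/sqrt(2) in the basis |00>, |01>, |10>, |11>
SINGLET = np.array([0.0, 1.0, -1.0, 0.0]) / np.sqrt(2.0)

def singlet_product_state(n, pairs):
    """State vector of n qubits with one singlet on each (a, b) pair of qubits."""
    order = [q for pair in pairs for q in pair]
    assert sorted(order) == list(range(n)), "pairs must cover every qubit exactly once"
    psi = np.array([1.0])
    for _ in pairs:
        psi = np.kron(psi, SINGLET)
    # Axis k of the reshaped tensor belongs to qubit order[k]; reorder so axis q is qubit q.
    psi = psi.reshape((2,) * n)
    psi = np.transpose(psi, axes=np.argsort(order).tolist())
    return psi.reshape(-1)

def trivial_state(n):
    """Eq. (20): singlets on pairs (2j, 2j+1)."""
    return singlet_product_state(n, [(2 * j, 2 * j + 1) for j in range(n // 2)])

def topological_state(n):
    """Eq. (22): a singlet on (0, n-1) and singlets on pairs (2j+1, 2j+2)."""
    pairs = [(0, n - 1)] + [(2 * j + 1, 2 * j + 2) for j in range(n // 2 - 1)]
    return singlet_product_state(n, pairs)

psi_triv = trivial_state(4)
psi_topo = topological_state(4)
overlap = float(psi_triv @ psi_topo)
```

Both states are normalized but not orthogonal to each other, which is one reason the two initializations can be optimized on overlapping ranges of g.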

The optimization grid for the trivial phase initialization spans from \({g}_{\min }=0\) to \({g}_{\max }=0.6\), with a sampling resolution of δg = 0.05. Conversely, the grid for the topological phase initialization ranges from \({g}_{\min }=0.4\) to \({g}_{\max }=1\), with the same sampling resolution of δg = 0.05. Given the emphasis on symmetry-oriented phase transitions within this investigation, we first consider a scenario where \({{\mathcal{P}}}_{f}\) includes all non-identity, reflection-symmetric Pauli operators on subsystem I, detailed as follows:

The simultaneous acquisition of their expectation values is facilitated through the execution of joint Bell measurements on pairs of qubits within I that are symmetrically positioned, such as (Qn/4, Q3n/4−1), (Qn/4+1, Q3n/4−2), and so forth, up to (Qn/2−1, Qn/2). Figure 6a displays these joint Bell measurements performed on the corresponding qubit pairs in subsystems I1 and I2, illustrating the method for deriving the feature vector for a 16-qubit quantum chain. Notably, in the absence of noise, classically computing expectation values of such large-weight Pauli operators in \({{\mathcal{P}}}_{f}\) becomes significantly more resource-intensive, indicating a possible avenue towards practical quantum advantage.
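As a rough illustration of the size of such a feature set, the sketch below enumerates Pauli strings on a subsystem of m qubits that are invariant under reflection. Reading "reflection-symmetric" as "the string equals its own mirror image" is an assumption made here for illustration. Under that reading, each mirrored qubit pair carries II, XX, YY or ZZ, which are all diagonal in the Bell basis, consistent with the joint Bell measurement described above.

```python
from itertools import product

def reflection_symmetric_paulis(m):
    """All non-identity Pauli strings of even length m with P[k] == P[m-1-k]."""
    assert m % 2 == 0, "the subsystem is assumed to split into two mirrored halves"
    strings = []
    for half in product("IXYZ", repeat=m // 2):
        if all(p == "I" for p in half):
            continue                                   # skip the identity string
        strings.append("".join(half) + "".join(reversed(half)))
    return strings

# Subsystem I of a 16-qubit chain contains 8 qubits
paulis = reflection_symmetric_paulis(8)
```

For m = 8 this gives 4^4 − 1 = 255 strings, with weights ranging from 2 to 8.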

a Illustration of the eSSH model’s interaction scheme and the procedure for conducting joint Bell measurements on symmetrically positioned qubit pairs across subsystems I1 and I2 to derive the feature vector. b Fidelity comparison between the optimized quantum states and the true ground states, evaluated across various g and δ values. c Plot of both the original and Gaussian-filter-smoothed loss landscapes for various δ values, underscoring the identification of critical points. Note that for δ = 2, 3, 4 two minima can be identified, signalling the presence of the two possible phase transitions between the trivial and topological phases, and between the trivial and symmetry-broken ones. d Comparison between the predicted phase boundaries, represented by green and blue hollow triangles (indicative of transitions computed from optimized and ground states using the partial-reflection many-body topological invariant \({\widetilde{{\mathcal{Z}}}}_{{\mathcal{R}}}\)), and solid black diamonds representing the phase boundaries predicted by the Transformer model. This comparison is set against the phase diagram from ref. 22, derived numerically using the infinite-size density matrix renormalization group technique. e Application of learned order parameters to the modified eSSH model with a transverse field (\({{\mathcal{H}}}_{{\rm{T-eSSH}}}\)), where δ = 4. This panel tracks the phase transitions as the field strength h varies, with phase transitions identified where the Transformer model predicts shifts in phase labels, showcasing the adaptability of the learned parameters across different physical scenarios.

To implement our algorithm, we employ a Set Transformer-based regressor, described in Algorithm. This model is configured with four attention heads and operates at a learning rate of 0.001. Initially, the model applies a self-attention mechanism to the input features to discern dependencies and relationships. Following this, the architecture incorporates a predictive layer that performs a linear transformation, mapping the input features to an intermediate vector of dimension 128. This transformation is augmented by the ReLU activation function, which introduces non-linearity, and is followed by another linear transformation that reduces the feature dimensionality to produce a single output label. Here, the detection grid extends from \({\hat{g}}_{\min }=0\) to \({\hat{g}}_{\max }=1\), with a sampling resolution of \(\delta \hat{g}=0.001\). The analysis employs a window size of w = 50. The overall loss function landscape is constructed by integrating the losses from the trivial phase initialization for g ≤ 0.5 with those from the topological phase initialization for g > 0.5, for different values of δ. Considering the notable volatility observed in the loss landscape, a Gaussian filter is applied to smooth the curve effectively. It is important to note that while the mathematical form of the order parameter learned here is not straightforwardly intuitive, it is encoded within the weights of the Transformer’s neural network layers. This order parameter can be readily calculated from measurement results using the recorded parameters of the Transformer, facilitating practical applications and analyses.
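The smoothing step mentioned above can be sketched as follows. The filter width, the synthetic loss curve, and the valley positions are hypothetical choices for illustration only; the split at g = 0.5 mirrors the way the two initializations are combined in the text.

```python
import numpy as np

def gaussian_smooth(y, sigma):
    """Smooth a 1D curve with a normalized Gaussian kernel (sigma in grid points)."""
    radius = int(np.ceil(4 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()
    padded = np.pad(np.asarray(y, dtype=float), radius, mode="edge")  # repeat end values
    return np.convolve(padded, kernel, mode="valid")                  # same length as y

# Synthetic noisy loss with two valleys at hypothetical positions g = 0.3 and g = 0.7
rng = np.random.default_rng(1)
g = np.linspace(0.0, 1.0, 1001)
loss = (1.0
        - 0.8 * np.exp(-((g - 0.3) / 0.05) ** 2)
        - 0.6 * np.exp(-((g - 0.7) / 0.05) ** 2)
        + 0.05 * rng.normal(size=g.size))
smooth = gaussian_smooth(loss, sigma=15)

# Locate the deepest point on each side of g = 0.5
left = g <= 0.5
g_min_left = g[left][np.argmin(smooth[left])]
g_min_right = g[~left][np.argmin(smooth[~left])]
```

A wider kernel suppresses more noise but also flattens narrow valleys, so the width has to be chosen with the expected valley width in mind.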

For the eSSH model, we sample a single set of initial parameters for optimization, fixing n = 16, and circuit depth p = 5. The measurement scheme and numerical results of our method are presented in Fig. 6. Specifically, panel (b) shows the fidelities between the optimized states and the true ground states across a range of g values for δ = 0, 1, 2, 3, 4. Here, solid circles represent states from the trivial phase initialization (with g ∈ [0, 0.6]), while hollow circles indicate states from the topological phase initialization (with g ∈ [0.4, 1]). Notably, for states from the trivial phase initialization, the fidelity achieves its maximum near g = 0, then decreases as g increases. Conversely, states from the topological phase initialization exhibit high fidelity at relatively large g values, although fidelity near g = 1 can still be small due to the diminishing spectral gap. Panel (c) displays the original and smoothed loss landscapes for δ = 0, 1, 2, 3, 4. Notably, for δ = 0, 1, there is a single trivial-topological phase transition, while for δ = 2, 3, 4, two phase transitions are observed, including a symmetry-broken phase, in accordance with the known phase diagram of the eSSH model56.

The shaded areas in panel (d) of Fig. 6 reflect the phase diagram as reported in ref. 22, which was calculated using the partial-reflection many-body topological invariant \({\widetilde{{\mathcal{Z}}}}_{{\mathcal{R}}}\) employing the infinite-size density matrix renormalization group technique75. We compute \({\widetilde{{\mathcal{Z}}}}_{{\mathcal{R}}}\) for both the optimized and true ground states of \({{\mathcal{H}}}_{{\rm{eSSH}}}\) at n = 16. For δ < 1.6, the transition between the trivial and topological phases is marked where \({\widetilde{{\mathcal{Z}}}}_{{\mathcal{R}}}\approx 0\); for δ > 1.6, the transition between the trivial and symmetry-broken phases is identified where \({\widetilde{{\mathcal{Z}}}}_{{\mathcal{R}}}\approx 0.5\), and between the symmetry-broken and topological phases where \({\widetilde{{\mathcal{Z}}}}_{{\mathcal{R}}}\approx -0.5\). Green and blue hollow triangles represent the predicted phase boundaries from optimized and ground states, respectively, showing significant variance from those calculated using the partial-reflection invariant due to finite-size effects. Grey solid diamonds indicate the phase boundaries predicted by the Set Transformer for δ = 0, 0.5, 1, 1.5, …, 4, demonstrating a good agreement with the calculated ones. The small discrepancy is mainly due to residual finite-size effects. It is important to note that our method does not presuppose the number or location of phase transitions. Instead, the presence (or absence) of valleys in the loss landscape—observed during the variational optimization—serves as an indicator of phase transitions. Therefore, the tailored ansätze are employed solely to facilitate efficient convergence in the respective regimes, without relying on any prior knowledge of the eSSH model’s correct phase structure.
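The threshold criteria quoted above (crossings of 0, 0.5 and −0.5) amount to locating where a sampled curve passes a given level. A minimal sketch using linear interpolation between neighbouring grid points is given below; the curve is synthetic and the helper name `crossings` is hypothetical.

```python
import numpy as np

def crossings(g, z, level):
    """g-values where z crosses `level`, by linear interpolation between grid points."""
    g = np.asarray(g, dtype=float)
    d = np.asarray(z, dtype=float) - level
    out = []
    for k in range(len(d) - 1):
        if d[k] == 0.0:
            out.append(g[k])                            # exact hit on a grid point
        elif d[k] * d[k + 1] < 0.0:
            t = d[k] / (d[k] - d[k + 1])                # fraction of the step to the crossing
            out.append(g[k] + t * (g[k + 1] - g[k]))
    if d[-1] == 0.0:
        out.append(g[-1])
    return out

# Synthetic invariant going from +1 (trivial) to -1 (topological) around g = 0.47
g = np.linspace(0.0, 1.0, 21)
z = -np.tanh(10.0 * (g - 0.47))
boundary = crossings(g, z, level=0.0)
```

Calling the same function with `level=0.5` and `level=-0.5` would give the two boundaries used when a symmetry-broken phase is present.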

These results suggest that our methodology effectively learns an order parameter with minimal finite-size effects. Once learned, these order parameters (encoded within the Transformer’s weights where the loss function is minimal) can be further applied to various other quantum systems or models, particularly those within the same family or those exhibiting similar phase transition characteristics. As an example, we now focus on the eSSH model with δ = 4 and in the presence of a transverse field with strength h,

We reuse the two order parameters learned in the previous case with h = 0 to locate transitions between the trivial and symmetry-broken phases, and between the symmetry-broken and topological phases within this extended model.

Using exact diagonalization, we generate the ground states and corresponding feature vectors of \({{\mathcal{H}}}_{{\rm{T-eSSH}}}\) for various h and g values, fixing δ = 4. We apply the recorded Transformer order parameters to learn the phase diagram, depicted in panel (e) of Fig. 6. Phase transitions are estimated at points where the Transformer model predicts a label of 0, indicative of shifts between phases. Notably, when h < 1.7, the phase boundaries vary smoothly as a function of h; then, a sudden change occurs at h = 1.7, and for 1.7 ≤ h < 3 the boundaries are relatively stable with respect to g. For h ≥ 3, no stable phase transitions can be detected, indicating that the phase diagram is dominated by a single phase with respect to our order parameter in this regime.

Next, we consider an alternative scenario in which no prior knowledge of the eSSH model is assumed. In this case, we construct \({{\mathcal{P}}}_{f}\) by including all local Pauli operators with weight two or less. Employing the same hyperparameters as described earlier, our methodology retains its ability to detect the critical phase boundaries and identify the emergence of the symmetry-broken phase. The Transformer loss landscapes are depicted in Fig. 7a. They reveal two distinct minima for δ = 2, 3, 4, corresponding to the transitions between the trivial and symmetry-broken phases, and between the symmetry-broken and topological phases. The predictions remain largely consistent with the known phase boundaries, although finite-size effects become more pronounced in this setup, and the overall accuracy experiences a slight reduction, as shown in Fig. 7b. These results suggest that the non-linear functions of 2-local Pauli terms learned by the Transformer model are sufficient to characterize the topological phase transitions in the eSSH model, even without explicit prior knowledge of the system’s properties.

a Plot of the original and Gaussian-filter-smoothed loss landscapes constructed by applying the Transformer model using expectation values of all local Pauli operators with weight below three for various δ values. For δ = 2, 3, 4, two minima are identified. b Comparison between the predicted phase boundaries, represented by solid black diamonds (Transformer model predictions from 2-local Pauli terms), green hollow triangles (optimized states), and blue hollow triangles (ground states), against the phase diagram from ref. 22, which was derived numerically using the infinite-size density matrix renormalization group technique.

Discussion

In this work, we have presented a hybrid quantum optimization-machine learning algorithm designed to identify phase transitions in quantum systems, leveraging locally trapped states produced by shallow variational circuits instead of exact ground-state data. This approach is particularly significant in the context of near-term quantum devices, where noise and circuit depth limitations preclude the accurate preparation of exact ground states. Through numerical simulations and real-hardware experiments of the 1D and 2D transverse-field Ising models (TFIMs) and the extended Su-Schrieffer-Heeger (eSSH) model, we have demonstrated the algorithm’s robustness and precision under the tested conditions.

Our real-hardware experiments validate the performance of our method under realistic noise conditions and represent, to our knowledge, one of the first experimental identifications of such order parameters directly from quantum data. Our findings illustrate that the algorithm not only detects the critical points of phase transitions but also unveils novel order parameters with faster convergence rates toward the thermodynamic limit and reduced sensitivity to finite-size effects in the systems investigated. Specifically, the LASSO algorithm identifies physically meaningful order parameters that enhance interpretability, while the Transformer model captures complex, non-intuitive many-body topological invariants that—although less directly interpretable—provide useful insights into phase transitions. Moreover, we have developed a new global optimization strategy that generates a continuous set of quantum states as a function of the Hamiltonian parameter g, thereby allowing precise tracking of phase evolution without requiring significantly deeper circuits.

We acknowledge that the system sizes investigated in this study are relatively modest, due to current hardware limitations and the need to measure numerous observables for training. While the results are encouraging, we are not claiming a formal quantum advantage here. Instead, the capacity of near-term devices to measure multiple observables in parallel suggests a potential practical speedup, especially if deeper or more adaptive measurements are employed. It is worth noting that while previous studies such as Herrmann et al.76 and Cho et al.77 have employed machine learning on experimental data, our approach addresses the more demanding task of identifying unknown quantum phase transitions using shallow-circuit variational optimization under realistic noise conditions.

An open question is the extent to which our approach, particularly in the shallow-circuit or local-observable regime, remains classically efficiently simulable54,78. While shallow circuits with limited entanglement can, in some cases, be simulated using classical methods such as tensor networks79,80,81, our algorithm is not inherently restricted to such settings. A central finding of our study is that precision improves exponentially with circuit depth, enabling accurate results with circuits of depth \(O(\log n)\); notably, such circuits are not necessarily classically simulable. Finally, because our protocol does not require the ansatz to reach the exact ground state at every value of the Hamiltonian parameter, we can use a curriculum approach: optimize the circuit in an easy, plateau-free region82, then transfer and fine-tune the parameters as we move into classically intractable regimes.

Furthermore, being ‘classically simulable in principle’ rarely translates into practical feasibility. In many scenarios, the required algorithms suffer from large polynomial overheads or complex data structures that become prohibitive as n grows54. Even if a shallow-circuit model is formally simulable, its resource demands may still outstrip available classical computing power for realistic system sizes. Mid-circuit measurements83,84 can further complicate classical strategies, and integrating our algorithm with established quantum-advantage protocols or fault-tolerant schemes85,86 may push shallow-depth training into regimes known to be classically intractable. While some configurations may appear classically tractable, the precise boundary between classical and quantum regimes remains unsettled, even in the shallow-depth, low-weight observable context explored here. Our results offer new insights into this ongoing debate.

Future improvements to our algorithm could explicitly focus on analysing differences between feature sets, rather than individual features alone. This strategy could enhance our understanding of transition dynamics, especially in systems where order parameters are not well-defined or universally applicable, such as in first-order phase transitions. Moreover, investigating our algorithm’s performance within the finite-temperature critical region surrounding quantum critical points represents a promising research direction. This exploration could provide deeper insights into how quantum fluctuations, thermal effects, and optimization deficits interact at criticality, thereby enriching our understanding of finite-temperature phase diagrams.

Our study leverages classical machine learning techniques to detect quantum phase transitions from quantum data. The efficiency of the quantum variational optimization subroutine, crucial for data acquisition, can be significantly enhanced by employing strategies such as parallelism and joint Bell measurements87,88. Given recent successes of quantum machine learning in phase recognition89,90, it is compelling to further explore quantum-enhanced methods specifically aimed at detecting quantum phases. Importantly, while we do not claim an unconditional quantum advantage for shallow-circuit regimes, the capacity to gather complex or global observables (e.g., multi-qubit correlators) may ultimately surpass classical capabilities in practice, particularly as hardware improves and deeper circuits become viable. Questions regarding the limitations of classical machine learning in this context, and whether quantum machine learning could offer an advantage, are ripe for investigation. Further exploration of these questions could open new applications of machine learning in quantum physics.

Methods

In this section, we outline the classical machine learning subroutines implemented in our study, describe the algorithm developed for detecting and locating phase transitions, and detail the extrapolation protocols designed to accurately determine the critical point of phase transition in the thermodynamic limit.

Machine Learning Preliminaries

LASSO for order parameter selection

The Least Absolute Shrinkage and Selection Operator (LASSO) algorithm is a regression technique in machine learning that incorporates both variable selection and regularization to enhance the prediction accuracy and interpretability of statistical models48. The target cost function for our LASSO application is formulated as:

where 2w is the number of feature vectors and ℓ represents the number of features within each vector. The label li (either − 1 or + 1) categorizes the phase associated with the i-th feature vector. Each component fij of the feature vector corresponds to the expectation value of the j-th Pauli operator (Oj) from a predefined Pauli set,

and calculated from the optimized quantum state ρi. The feature vector for each state is thus:

which captures essential characteristics critical to distinguishing phase properties. The coefficients κj are dynamically adjusted during the optimization, where λ is a non-negative regularization parameter that imposes a penalty on the magnitude of the coefficients. This penalty encourages sparsity in the model, enhancing interpretability by emphasizing only the most significant features, thus simplifying the model and aiding in the identification of key order parameters.

In our implementation, LASSO is employed to discover simpler and physically meaningful order parameters, enabling direct identification of critical physical quantities. By adaptively adjusting the λ parameter, our approach finely tunes the width of the valleys within the classical loss landscape—the larger the value of λ, the narrower the valleys—thereby avoiding issues of overly broad or narrow valleys, and ensuring the retention of essential features.
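The sliding-window use of LASSO can be sketched in a few lines. The code below is a minimal illustration, not the implementation used in this work: it assumes the common normalization (1/(2m))‖l − Fκ‖² + λ‖κ‖₁ with m = 2w and no intercept, solves it with a plain proximal-gradient (ISTA) loop, and runs on synthetic features with a hypothetical transition at g = 1.5. All function names are hypothetical.

```python
import numpy as np

def lasso_ista(F, labels, lam, n_iter=500):
    """Minimize (1/(2m))*||labels - F @ kappa||^2 + lam*||kappa||_1 by proximal gradient."""
    m, ell = F.shape
    kappa = np.zeros(ell)
    lipschitz = np.linalg.norm(F, 2) ** 2 / m          # Lipschitz constant of the smooth part
    step = 1.0 / lipschitz if lipschitz > 0 else 1.0
    for _ in range(n_iter):
        grad = F.T @ (F @ kappa - labels) / m
        z = kappa - step * grad
        kappa = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)  # soft threshold
    loss = np.sum((labels - F @ kappa) ** 2) / (2 * m) + lam * np.sum(np.abs(kappa))
    return kappa, loss

def sliding_window_scan(g, features, w, lam):
    """Label the w points left of each split -1 and the w points right of it +1, then fit."""
    labels = np.concatenate([-np.ones(w), np.ones(w)])
    midpoints, losses = [], []
    for k in range(w, len(g) - w + 1):
        _, loss = lasso_ista(features[k - w:k + w], labels, lam)
        midpoints.append(0.5 * (g[k - 1] + g[k]))      # candidate critical point
        losses.append(loss)
    return np.array(midpoints), np.array(losses)

# Synthetic features: one order-parameter-like column and one pure-noise column
rng = np.random.default_rng(0)
g = np.linspace(0.0, 3.0, 61)
features = np.column_stack([np.tanh(8.0 * (g - 1.5)), 0.1 * rng.normal(size=g.size)])
midpoints, losses = sliding_window_scan(g, features, w=5, lam=0.05)
g_est = midpoints[np.argmin(losses)]
```

Away from the transition the informative feature is nearly constant across the window and cannot separate the two labels, so the loss stays high; the valley appears only where the window straddles the change.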

Transformers for complex order parameter synthesis

The Transformer neural network, developed by Vaswani et al. in 2017, significantly advances the handling of sequential data with its principal component: the self-attention mechanism, also known as scaled dot-product attention49. This mechanism enables the Transformer to dynamically prioritize different segments of the input feature vector fi based on the relevance of different features, quantified as

where Qi, Ki, and Vi represent the queries, keys, and values respectively, each derived from the input feature vectors fi. Here, dk is the dimension of the keys. Queries highlight the current focus within the input, keys facilitate the alignment of these queries with relevant data points, and values convey the substantial data intended for output. The attention formula enables the Transformer to adjust attention across the features dynamically, which enhances its ability to discern complex dependencies. This capability may be particularly valuable in detecting quantum phase transitions, as it not only identifies critical observables but also elucidates the intricate dependencies among their expectation values, thereby enabling a comprehensive understanding and synthesis of observables related to the phase transitions.
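For reference, the standard scaled dot-product attention of ref. 49 is softmax(QKᵀ/√dk)V. A minimal NumPy sketch of this operation is given below; the random inputs and array shapes are purely illustrative.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Return softmax(Q K^T / sqrt(d_k)) V together with the attention weights."""
    d_k = K.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k), axis=-1)
    return weights @ V, weights

# Illustrative shapes: 5 queries and 5 keys of dimension 4, values of dimension 3
rng = np.random.default_rng(0)
Q = rng.normal(size=(5, 4))
K = rng.normal(size=(5, 4))
V = rng.normal(size=(5, 3))
out, weights = scaled_dot_product_attention(Q, K, V)
```

Each row of `weights` is a probability distribution over the keys, so every output row is a convex combination of the rows of V.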

The multi-head attention concept49 further extends this capability, enabling the model to dynamically assign different weights of significance to various segments of the input data. This is achieved through the multi-head attention,

where each head, headj, performs attention operations independently:

using distinct weight matrices \({W}_{j}^{Q},{W}_{j}^{K},{W}_{j}^{V}\), and WO. The WO matrix is a final linear transformation applied to the concatenated outputs of all heads before producing the final output. This design enables the Transformer to capture a richer representation by focusing on different aspects of the input feature vectors in parallel.

Given the non-sequential nature of the input features in our study—labeled either 1 or −1 without a dependence on sequence—the Set Transformer, adapted by Lee et al.91, offers an ideal framework for our application. The Set Transformer excels at capturing complex interrelations within data sets by employing a series of computational stages:

1. Normalization: The input feature vectors {fi} undergo layer normalization to reduce internal covariate shift, enhancing learning stability.

2. Multi-head attention: The normalized feature vectors \(\{{\tilde{{\boldsymbol{f}}}}_{i}\}\) are processed with multi-head attention, allowing the model to concurrently analyse multiple features of the input set. Output from this stage includes a dropout step to prevent overfitting.

3. Residual connection: Integrates the original feature vector with the attention output to preserve gradient flow, enhancing training effectiveness.

4. Prediction layer: Finally, the attention-enhanced data passes through a fully connected layer with ReLU activation, where the final phase labels are predicted.

We utilize the Adam optimizer92 with an appropriate learning rate to optimize parameters within this framework, aiming to minimize the mean-square loss between the predicted phase labels and the assigned ones. Through its comprehensive handling of feature interdependencies, the Set Transformer exhibits improved performance in our benchmarks with complex and non-local order parameters. The architectural details and operational functionalities of our Set Transformer-based regression model are schematically depicted in Fig. 8. An exemplary implementation of the Set Transformer architecture used in our experiments, along with its corresponding complexity analysis, is provided in the SM.
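To make the data flow through the four stages concrete, the following sketch wires them together for a single feature vector. It is illustrative only: the attention stage is passed in as a placeholder callable `attend`, dropout is omitted, and the final linear readout after the ReLU layer is our assumption so that negative labels can be produced. All function names and the toy weights are hypothetical.

```python
import math

def layer_norm(vec, eps=1e-5):
    """Stage 1: normalize a feature vector to zero mean and unit variance."""
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]

def residual(original, update):
    """Stage 3: add the attention output back onto the original features."""
    return [a + b for a, b in zip(original, update)]

def dense(vec, weights, bias):
    """Fully connected layer: weights is a list of rows, one per output unit."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]

def relu(vec):
    return [max(0.0, v) for v in vec]

def mse(predictions, targets):
    """Mean-square loss between predicted and assigned phase labels."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(predictions)

def forward(f, attend, w_hidden, b_hidden, w_out, b_out):
    normed = layer_norm(f)                            # stage 1
    attended = attend(normed)                         # stage 2 (placeholder, no dropout)
    mixed = residual(f, attended)                     # stage 3
    hidden = relu(dense(mixed, w_hidden, b_hidden))   # stage 4: ReLU layer
    return dense(hidden, w_out, b_out)[0]             # assumed linear readout to a scalar

# Toy usage with identity "attention" and hand-picked weights.
prediction = forward(
    [1.0, -1.0],
    attend=lambda v: v,
    w_hidden=[[1.0, 0.0], [0.0, 1.0]], b_hidden=[0.0, 0.0],
    w_out=[[0.5, 0.5]], b_out=[0.0],
)
loss = mse([prediction], [1.0])
```

In practice the weights would be trained (the text uses Adam) rather than fixed by hand as they are here.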

The diagram presents the model’s architecture, including input state measurement processing, feature normalization, the generation of Q, K, and V through linear weight matrices, scaled dot-product attention, concatenation of multiple heads, a residual connection, and a prediction layer for determining phase labels.

In this work, we employ a LASSO-based algorithm to detect phase transitions between the ferromagnetic and paramagnetic phases within 1D and 2D TFIMs in Sections 1D Transverse-field Ising Model and 2D Transverse-field Ising Model, respectively, and utilize a Transformer-based algorithm for identifying topological phase transitions among trivial, symmetry-broken, and topological phases in the eSSH model in Section Extended Su–Schrieffer–Heeger Model.

Algorithm

Quantum local traps

The first stage of our protocol is the acquisition of locally optimized quantum states through variational optimization18. We focus on a specific parameterized Hamiltonian \({\mathcal{H}}(g)\), where g is selected from the interval \([{\hat{g}}_{\min },{\hat{g}}_{\max }]\).

To systematically explore this range, we establish an optimization grid, \({{\mathcal{G}}}_{{\rm{opt}}}\), defined by points \(\{{g}_{\min },{g}_{\min }+\delta g,{g}_{\min }+2\delta g,\ldots ,{g}_{\max }\}\), where δg represents the optimization sampling resolution. Here, \({g}_{\min }\) is typically set to be smaller than \({\hat{g}}_{\min }\), although it may also be slightly greater, while \({g}_{\max }\) is generally larger than \({\hat{g}}_{\max }\), although it may also be marginally smaller. Introducing points outside the primary interval \([{\hat{g}}_{\min },{\hat{g}}_{\max }]\) in \({{\mathcal{G}}}_{{\rm{opt}}}\) helps to mitigate the risk of overfitting during the optimization process. When the ground state manifold exhibits relatively low complexity, it is feasible to employ a relatively large δg, that is, a coarser grid.
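As a small illustration of the grid construction, the sketch below builds an optimization grid that extends slightly beyond a detection interval. The interval endpoints, the margin, and the resolution are hypothetical values chosen for the example, and `make_grid` is a helper name introduced here.

```python
def make_grid(start, stop, step):
    """Return [start, start + step, ...] up to and including stop (within rounding)."""
    n = int(round((stop - start) / step))
    return [round(start + i * step, 12) for i in range(n + 1)]

g_hat_min, g_hat_max = 0.5, 1.5   # hypothetical interval of interest
margin, delta_g = 0.1, 0.1        # hypothetical margin and sampling resolution

# Optimization grid with points just outside [g_hat_min, g_hat_max].
opt_grid = make_grid(g_hat_min - margin, g_hat_max + margin, delta_g)
```

Indexing the grid by an integer and multiplying, rather than repeatedly adding `step`, avoids accumulating floating-point drift along the grid.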

Subsequently, we introduce a variational quantum circuit U(γ, β; g) and apply the Fourier series method for its optimization, a strategy described by Zhou et al.93. For a p-layer quantum circuit, we express certain rotation angles within the j-th layer as

$${\gamma }_{j}=\sum _{k=1}^{p}{\tilde{\gamma }}_{k}\sin \left[\left(k-\frac{1}{2}\right)\left(j-\frac{1}{2}\right)\frac{\pi }{p}\right],$$

with the sine function being used. Conversely, the remaining rotation angles are written as

$${\beta }_{j}=\sum _{k=1}^{p}{\tilde{\beta }}_{k}\cos \left[\left(k-\frac{1}{2}\right)\left(j-\frac{1}{2}\right)\frac{\pi }{p}\right],$$

with the cosine function being employed. Considering the Hamiltonian variational ansatz for the transverse-field Ising model, as explored in refs. 62,63, we designate the Rzz rotation angle in the j-th layer as γj, and the Rx rotation angle in the same layer as βj. This approach to angle assignment generalizes naturally to variational ansätze that incorporate more parameters within each layer. The variational Fourier coefficients, \({\tilde{\gamma }}_{k}\) and \({\tilde{\beta }}_{k}\), play a pivotal role in this framework. In the update of \({\tilde{\gamma }}_{k}\) and \({\tilde{\beta }}_{k}\), each coefficient is written as a polynomial of degree M in the Hamiltonian parameter g,

$${\tilde{\gamma }}_{k}(g)=\sum _{m=0}^{M}{\zeta }_{k,m}\,{g}^{m},\qquad {\tilde{\beta }}_{k}(g)=\sum _{m=0}^{M}{\eta }_{k,m}\,{g}^{m}.$$

Here, γ and β are indirectly optimized via the direct optimization of the vectors ζ and η. For the purposes of this study, we have selected M = 4 as the degree of these polynomials. Leveraging the Fourier strategy alongside the polynomial representation enables the simultaneous global optimization of γ and β across various values of g. This approach facilitates the minimization of the energy function sum

$${{\mathcal{E}}}_{{\rm{opt}}}({\boldsymbol{\zeta }},{\boldsymbol{\eta }})=\sum _{g\in {{\mathcal{G}}}_{{\rm{opt}}}}{\rm{Tr}}\left[{\mathcal{H}}(g)\,\rho ({\boldsymbol{\zeta }},{\boldsymbol{\eta }};g)\right],$$

where ρ(ζ, η; g) = U(ζ, η; g)ρinU†(ζ, η; g) are the optimized states. \({{\mathcal{E}}}_{{\rm{opt}}}\) is theoretically lower bounded by the sum of the ground state energies on \({{\mathcal{G}}}_{{\rm{opt}}}\), \({{\mathcal{E}}}_{{\rm{opt}}}\ge {\sum }_{g\in {{\mathcal{G}}}_{{\rm{opt}}}}{E}_{{\rm{gs}}}(g)\). Starting with a variational quantum circuit with a single layer (p = 1), we uniformly sample the initial values of ζ, η from a predefined range. The optimization of ζ, η is conducted through the Broyden–Fletcher–Goldfarb–Shanno algorithm57,58,59,60, and the outcomes are then used to guide the subsequent optimization of the circuit with depth p + 1. As the circuit depth p increases, the optimization deficit decreases, enhancing the precision of our estimates for the locations of critical points.
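The mapping from the optimized vectors ζ and η to per-layer rotation angles can be sketched as follows. This is an illustration under stated assumptions: it takes the sine/cosine series in the form given by Zhou et al., with arguments (k − 1/2)(j − 1/2)π/p and one Fourier coefficient per row of `zeta` and `eta`, and it stores each polynomial as a list of coefficients in increasing powers of g. The function names and numerical values are hypothetical.

```python
import math

def poly_eval(coeffs, g):
    """Evaluate sum_m coeffs[m] * g**m using Horner's rule."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * g + c
    return result

def layer_angles(zeta, eta, g, p):
    """Return (gammas, betas) for layers j = 1..p at Hamiltonian parameter g.

    zeta[k-1] and eta[k-1] hold the polynomial coefficients of the k-th
    Fourier coefficients gamma~_k(g) and beta~_k(g), respectively.
    """
    gamma_tilde = [poly_eval(row, g) for row in zeta]
    beta_tilde = [poly_eval(row, g) for row in eta]
    gammas, betas = [], []
    for j in range(1, p + 1):
        gammas.append(sum(a * math.sin((k - 0.5) * (j - 0.5) * math.pi / p)
                          for k, a in enumerate(gamma_tilde, start=1)))
        betas.append(sum(b * math.cos((k - 0.5) * (j - 0.5) * math.pi / p)
                         for k, b in enumerate(beta_tilde, start=1)))
    return gammas, betas

# Toy usage: p = 2 layers, degree M = 4 (five coefficients per polynomial).
p = 2
zeta = [[0.3, 0.1, 0.0, 0.0, 0.0], [0.0] * 5]
eta = [[0.5, -0.2, 0.0, 0.0, 0.0], [0.0] * 5]
gammas, betas = layer_angles(zeta, eta, g=0.4, p=p)
```

Because the angles for any g follow from one set of coefficients, nearby values such as g = 0.400 and g = 0.401 yield nearly identical circuits, which is the stability property the global optimization relies on.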

Adopting a global optimization approach, rather than optimizing separately for each g, confers significant benefits. Firstly, this strategy is more efficient: once the optimization is complete, the parameters corresponding to any given g can be generated directly, and the associated state can be prepared by executing the circuit. Furthermore, global optimization ensures stability across closely related g values, such as g = 0.400 and g = 0.401, allowing for the generation of states with consistent features. If the optimization were conducted separately for each g, even adjacent values of g could settle into different local traps, leading to distinct states. This divergence could result in highly unstable data unsuitable for machine learning analysis.

The quantum optimization landscape is notoriously swamped with local traps28, presenting significant challenges in finding the global minimum. In this work, the quantum states we obtain from the variational quantum optimization, denoted as ρ(ζ, η; g), are characterized as locally trapped states that have higher energy than the global minimum, for several reasons. Firstly, the Fourier strategy, as discussed in ref. 93, tends to be trapped in local minima when the parameters are extended by appending zero-vectors, without random perturbations, as p increases. Secondly, we sample only a single set of initial parameters for the optimization process, which limits the exploration of the available parameter space. Lastly, we model the variables \({\tilde{\gamma }}_{k}\) and \({\tilde{\beta }}_{k}\) as low-degree polynomial functions of the Hamiltonian parameter g. This choice, while computationally efficient, prevents full optimization for each individual g value, thereby reducing the chances of finding the global minimum.

Classical loss landscape

The second stage of our methodology constructs the landscape of a classical machine learning loss function across values of the parameter g by employing classical regressors. Our objective is to pinpoint critical points of phase transitions, which can be identified by analysing the valleys in this landscape. The number of these valleys is used as an estimate of the total number of phase transitions. To facilitate this exploration, we set up a detection grid, \({{\mathcal{G}}}_{\det }\), consisting of a sequence of points \(\{{\hat{g}}_{\min },{\hat{g}}_{\min }+\delta \hat{g},{\hat{g}}_{\min }+2\delta \hat{g},\ldots ,{\hat{g}}_{\max }\}\) with \(\delta \hat{g}\) being the detection sampling resolution. For each g in \({{\mathcal{G}}}_{\det }\), we prepare the trapped quantum state ρ(ζ, η; g), then measure and record the feature vector, f(g), which comprises the expectation values in this state of selected Pauli operators, represented by the set \({{\mathcal{P}}}_{f}\).

Our framework, termed presupposed phase label regression, aligns philosophically with the learning by confusion approach94,95 and effectively delineates phase boundaries using learnable order parameters. It identifies phase transition boundaries based on the performance fluctuations of the learning algorithm. The analysis employs a sliding window technique with a predetermined window size, \(w\in {{\mathbb{N}}}^{+}\), to systematically explore the parameter space. Initially, we create an empty set to record training losses, denoted as \({\mathcal{L}}=\varnothing\). Subsequently, for each value of g within the recalibrated range \([{\hat{g}}_{\min }+w\cdot \delta \hat{g},{\hat{g}}_{\max }-w\cdot \delta \hat{g}]\), the process is as follows: we start with an empty training set \({\mathcal{D}}=\varnothing\); for each \(\tilde{g}\) in the range \(\{g-w\cdot \delta \hat{g},\ldots ,g-\delta \hat{g}\}\), we assign a label of −1 and add the pair \(({\boldsymbol{f}}(\tilde{g}),-1)\) to \({\mathcal{D}}\); similarly, for each \(\tilde{g}\) in \(\{g+\delta \hat{g},\ldots ,g+w\cdot \delta \hat{g}\}\), we assign a label of 1 and incorporate the pair \(({\boldsymbol{f}}(\tilde{g}),1)\) into \({\mathcal{D}}\). Following this, we train a supervised learning regressor (such as LASSO or Transformer) using the dataset \({\mathcal{D}}\), record the training loss, and append this loss to \({\mathcal{L}}\). The presence of valleys within \({\mathcal{L}}\) indicates potential phase transitions, as they represent points where the supervised learning model discerns a significant distinction between pre-transition and post-transition states, effectively leveraging learnable order parameters. The selection of the window size w is critical: if too small, the detection may lack stability; conversely, if too large, the detection may lack sensitivity and precision. The selection of w is influenced by the complexity of our model, allowing for adaptive adjustments to secure an optimal window size. Notably, our methodology exhibits significant robustness to variations in hyperparameters, thereby demonstrating its stability across a broad range of computational settings. For more detailed analysis, refer to the SM.
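The sliding-window scan can be sketched as below. This is a toy illustration rather than the method used in the paper: a one-feature least-squares fit stands in for LASSO or the Transformer, and the features are synthetic, generated from a hypothetical order-parameter-like function with an assumed critical point at g = 1.0. The names `fit_loss` and `sliding_window_losses` are introduced here.

```python
import math

def fit_loss(samples):
    """Stand-in regressor: least-squares fit y ~ a*x + b on the first feature.

    Returns the training mean-square error. A real run would train LASSO or
    a Transformer here instead.
    """
    xs = [f[0] for f, _ in samples]
    ys = [y for _, y in samples]
    n = len(samples)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx if sxx > 1e-12 else 0.0   # guard against a constant feature
    b = my - a * mx
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / n

def sliding_window_losses(features, w, train=fit_loss):
    """features[i] is f(g_i) on the detection grid; returns {centre index: loss}."""
    losses = {}
    for c in range(w, len(features) - w):
        data = [(features[i], -1.0) for i in range(c - w, c)]           # left of g: label -1
        data += [(features[i], 1.0) for i in range(c + 1, c + w + 1)]   # right of g: label +1
        losses[c] = train(data)
    return losses

# Synthetic detection grid and features (assumed critical point g_c = 1.0).
grid = [i * 0.05 for i in range(41)]                       # g from 0 to 2
features = [[math.tanh(20.0 * (g - 1.0))] for g in grid]
losses = sliding_window_losses(features, w=5)
best = min(losses, key=losses.get)                         # index of the deepest valley
```

With these synthetic features the lowest training loss occurs for the window centred on the assumed critical point, while windows lying entirely within one phase cannot separate the presupposed labels and give a loss close to 1.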

The pseudocode of the algorithm is shown in Fig. 9.

Noise robustness

Quantum gate noise is an inherent challenge in current quantum devices96, usually impacting the accuracy and reliability of quantum computations. Nevertheless, our framework exhibits substantial robustness to such disturbances, especially when the circuit noise linearly rescales each feature vector. This is a practical assumption considering that local noise often approximates a global depolarizing noise channel as the circuit depth increases97,98. The following theorem illustrates the robustness of our algorithm against such quasi-global depolarizing (Quasi-GD) noise under specific conditions:

Theorem 1

(Robustness to Quasi-GD Noise) Let ζ and η be optimized parameters, with ρin representing the density matrix of the input state. For a Hamiltonian parameter g, consider ρ(ζ, η; g) as the corresponding ideal output state, and \({{\mathcal{N}}}_{{\boldsymbol{\zeta }},{\boldsymbol{\eta }};g}(\cdot )\) as the corresponding noisy quantum channel. If for any g in \({{\mathcal{G}}}_{\det }\) and any Pauli operator O in \({{\mathcal{P}}}_{f}\), the expectation values satisfy

$${\rm{Tr}}\left[O\,{{\mathcal{N}}}_{{\boldsymbol{\zeta }},{\boldsymbol{\eta }};g}({\rho }_{{\rm{in}}})\right]={\rm{Tr}}\left[O\,{\Lambda }_{\epsilon }\left(\rho ({\boldsymbol{\zeta }},{\boldsymbol{\eta }};g)\right)\right],$$

where Λϵ( ⋅ ) denotes a global depolarizing channel with a fixed noise rate ϵ, then the channel \({{\mathcal{N}}}_{{\boldsymbol{\zeta }},{\boldsymbol{\eta }};g}(\cdot )\) is defined as a quasi-global depolarizing channel with respect to {ρ(ζ, η; g)} and \({{\mathcal{P}}}_{f}\). Given these conditions, the machine learning algorithms (LASSO and Transformer) are capable of predicting critical points from noisy quantum data that are consistent with those predicted from ideal quantum data.

This theorem implies that for noise channels which act like global depolarizing channels with respect to the set of Pauli operators \({{\mathcal{P}}}_{f}\), the overall shape of the classical loss function remains invariant. In other words, the machine learning subroutine effectively performs automatic quantum error mitigation in detecting phase transitions. When local gate noise is modeled as depolarizing noise and the quantum circuit achieves sufficient depth, the local noise effectively transforms into global white noise98. In that case, the expectation values of the Pauli operators in \({{\mathcal{P}}}_{f}\) are uniformly attenuated, and the condition is satisfied. However, it is important to note that the set \({{\mathcal{P}}}_{f}\) of interest typically comprises only a finite number of special operators, such as low-weight local Pauli terms. Consequently, the aforementioned condition for noise robustness might be satisfied even with very shallow circuits.
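A toy numerical check of the uniform-attenuation argument is sketched below. It assumes the common parameterization Λϵ(ρ) = (1 − ϵ)ρ + ϵI/d, under which every traceless Pauli expectation value is multiplied by (1 − ϵ), and it uses an unregularized one-feature least-squares fit as a stand-in regressor. The feature values and noise rate are hypothetical, and the L1 penalty of LASSO is not covered by this simple check.

```python
def least_squares_mse(xs, ys):
    """Training MSE of the best fit y ~ a*x + b (unregularized, with intercept)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = my - a * mx
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / n

# Hypothetical ideal expectation values of one Pauli observable across a window.
ideal = [-0.9, -0.8, -0.5, 0.4, 0.7, 0.95]
labels = [-1.0, -1.0, -1.0, 1.0, 1.0, 1.0]

eps = 0.3                                    # assumed global depolarizing rate
noisy = [(1.0 - eps) * x for x in ideal]     # uniformly attenuated features

loss_ideal = least_squares_mse(ideal, labels)
loss_noisy = least_squares_mse(noisy, labels)
```

The two losses agree up to floating-point rounding because the fitted slope absorbs the factor 1/(1 − ϵ), so the positions of the valleys in the loss landscape are unchanged in this simplified setting.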