Abstract

Reward-predictive items capture attention even when task-irrelevant. While value-driven attention typically generalizes to stimuli sharing critical reward-associated features (e.g., red), recent evidence suggests an alternative generalization mechanism based on feature relationships (e.g., redder). Here, we investigated whether relational coding of reward-associated features operates across different learning contexts by manipulating search mode and target-distractor similarity. Results showed that singleton search training induced value-driven relational attention regardless of target-distractor similarity (Experiments 1a–1b). In contrast, feature search training produced value-driven relational attention only when targets and distractors were dissimilar, but not when they were similar (Experiments 2a–2c). These findings indicate that coarse selection training (singleton search or feature search among dissimilar items) promotes relational coding of reward-associated features, while fine selection (feature search among similar items) engages precise feature coding. The precision of target selection during reward learning thus critically determines value-driven attentional mechanisms.


Introduction

Associative learning theory posits that past experiences shape stimulus representations to guide value-based behavior1. Over recent decades, extensive research has shown that rewards can be associated with a wide range of stimuli, from basic features (e.g., colors, orientations, motion directions)2,3,4 to complex objects (e.g., shapes, natural scenes)5,6. Once learned, reward-associated stimuli gain enhanced attentional priority, even when they are task-irrelevant and no longer predictive of rewards7,8,9. However, in real-world scenarios, high-value stimuli rarely share the same physical properties or appear in the same environment. It is thus crucial to examine how learned reward contingencies generalize to different items.

Previous research suggests that learned reward contingencies can generalize to different items if they share the same or similar core features (e.g., color) that were previously associated with rewards10,11,12,13. Recent findings suggest an alternative mechanism that allows reward effects to generalize to different stimuli based on stable feature-context relationships14, emphasizing how an item differs from its surroundings rather than its exact feature value15,16. For example, if red is associated with rewards, an orange item among yellow items (redder) captures more attention than the same orange item among red items (yellower). Linking reward with relational information from the environment is thought to facilitate adaptive behavior17,18, as it allows more flexible generalization of learned value. However, this relational mechanism has so far been tested only in a specific learning context (singleton search training among target-similar distractors). Thus, it remains unclear whether the reward effect on feature relationships reflects a general mechanism or merely a strategic adaptation to specific learning scenarios. One candidate strategy concerns search mode: detecting a salient singleton (singleton search: the target differs from all distractors by a single, unique feature) based on relative features may be more efficient than searching for an exact feature value (feature search: the target is defined by specific feature values). Another candidate strategy concerns target-distractor similarity: when items are perceptually similar, the target representation may shift toward an exaggerated feature value to enhance distinctiveness, as predicted by the optimal tuning account19,20,21,22.

To examine whether relational coding of reward-associated features is implemented across different learning contexts, particularly those engaging different modes of attention or varying in stimulus similarity, participants learned feature-reward associations (high reward: red, low reward: yellow for half of the participants; reversed for the other half) using either a singleton search task (Experiments 1a and 1b) or a feature search task (Experiments 2a–2c), with low or high target-distractor similarity during training. Each participant was randomly assigned to one of the four training conditions. Next, we measured attentional capture by distractors that matched either previously rewarded feature values (red and yellow) or feature relationships (redder or yellower). Beyond robust effects of reward on trained feature values, the effects of reward on feature relationships varied across experiments. Specifically, singleton search training led to reward effects on feature relationships regardless of target-distractor similarity (Experiments 1a and 1b). In contrast, feature search training produced reward effects on feature relationships only when the target was dissimilar to the distractors (Experiment 2a), but not when they were similar (Experiments 2b and 2c). These findings suggest that the precision of target selection during reward learning plays a key role in shaping value-driven relational attention. When the learning task requires only coarse selection (e.g., singleton search or feature search among dissimilar items), a relational code for reward-associated features is formed; however, when fine selection is necessary (e.g., feature search among similar items), a more precise code is used.

Results

Experiment 1: Singleton search training leads to relational coding of reward-associated features regardless of target-distractor similarity

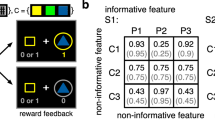

Participants completed a training session and a test session on consecutive days in Experiments 1a and 1b (N = 40 each). During training, they searched for a uniquely colored circle (red or yellow) among distractors and reported the orientation of the bar (horizontal or vertical) inside the target circle (Fig. 1a). The target color was dissimilar to the distractors in Experiment 1a (e.g., a red target among green distractors) and similar to the distractors in Experiment 1b (e.g., a red target among orange distractors). Correct responses were followed by monetary feedback. For instance, for participants assigned to the red-high mapping, red was associated with an 80% probability of a high reward (¥10) and a 20% probability of a low reward (¥1), whereas yellow was associated with an 80% probability of a low reward (¥1) and a 20% probability of a high reward (¥10). Participants were not informed about the color-reward association. Control Experiment 1 confirmed the validity of the target-distractor similarity manipulation: responses were faster in the low than in the high target-distractor similarity condition (see Methods and Supplementary Figure 1 for details). Training performance showed no significant differences in reaction time (RT; paired t-tests: ps > 0.106) or accuracy (paired t-tests: ps > 0.509) between high and low reward-associated colors in either experiment. This lack of reward effects may be attributed to near-ceiling accuracy in the singleton search tasks (>97%), which may have reduced sensitivity to the influence of reward, as also reported in previous studies4,23,24.
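The probabilistic schedule implies an expected payout of 0.8 × ¥10 + 0.2 × ¥1 = ¥8.2 per correct response for the high-reward color, versus 0.2 × ¥10 + 0.8 × ¥1 = ¥2.8 for the low-reward color. The Python sketch below encodes such a schedule under stated assumptions: the role labels and the parity-based counterbalancing rule are hypothetical, and this is not the authors' experiment code.

```python
import random

# Payout amounts (yuan) as described in the text.
HIGH_PAYOUT, LOW_PAYOUT = 10, 1

# Probability of the high payout after a correct response, by the color's role.
# The 80%/20% split follows the text; the role names are hypothetical labels.
P_HIGH_PAYOUT = {"high_reward_color": 0.8, "low_reward_color": 0.2}


def expected_payout(role: str) -> float:
    """Expected payout (yuan) per correct response for a target color of this role."""
    p = P_HIGH_PAYOUT[role]
    return p * HIGH_PAYOUT + (1 - p) * LOW_PAYOUT


def draw_payout(role: str, rng: random.Random) -> int:
    """Sample a single payout for a correct response."""
    return HIGH_PAYOUT if rng.random() < P_HIGH_PAYOUT[role] else LOW_PAYOUT


def color_roles(participant_index: int) -> dict:
    """Counterbalance the color-reward mapping across participants.

    Hypothetical rule (parity of the index); the study only states that the
    mapping was reversed for half of the participants.
    """
    if participant_index % 2 == 0:
        return {"red": "high_reward_color", "yellow": "low_reward_color"}
    return {"red": "low_reward_color", "yellow": "high_reward_color"}


if __name__ == "__main__":
    rng = random.Random(0)
    for color, role in color_roles(0).items():
        draws = [draw_payout(role, rng) for _ in range(10_000)]
        # Simulated mean payout should be close to the analytic expectation.
        print(color, expected_payout(role), sum(draws) / len(draws))
```

Because the mapping is probabilistic, both colors occasionally yield either payout; only the expected value differs, which is what the reward-history manipulation relies on.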

Fig. 1. a Trial sequence in the training session. The task was to report the orientation of the bar inside a red or yellow singleton. A correct response was followed by a high (+¥10) or low (+¥1) reward, depending on the pre-specified color-reward associations. The stimulus array comprised a color-singleton target presented among dissimilar (Experiment 1a) or similar (Experiment 1b) distractors. b Trial sequence in the test session. Participants were asked to find a uniquely oriented bar (horizontal or vertical) inside a colored circle. No reward feedback was provided. The color of the singleton distractor matched reward history in either feature value (feature-match condition) or feature relationship (relation-match condition). The dashed box indicates the target location (not shown in actual displays).

In the test sessions of Experiments 1 and 2, we used a visual search task to examine value-driven attention. The target in the test task was a uniquely oriented bar (horizontal or vertical) within a colored circle. We manipulated a critical distractor that matched reward history in either feature value or feature relationship. In the feature-match condition, a critical distractor matching a rewarded feature value (red or yellow) appeared on 50% of trials, while the other items were in different colors (Fig. 1b). Note that this critical distractor also matched reward history relationally, because it was the reddest or yellowest item in the display. This condition therefore served primarily to confirm the acquisition of color-reward contingencies rather than to disentangle feature-value from feature-relation effects. In the relation-match condition, a singleton distractor that matched reward history in feature relationship (redder: yellow-orange among yellows; yellower: red-orange among reds) appeared on 50% of trials. Crucially, to disentangle the influence of feature value in this condition, we used distractor colors that matched the previously high-rewarded feature relationship (e.g., yellow-orange is redder than the surrounding yellows) but more closely resembled the previously low-rewarded feature value (e.g., yellow-orange is more similar to yellow than to red). The two conditions were interleaved across trials.
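The logic of the relation-match condition can be illustrated with a toy one-dimensional hue axis. The sketch below assumes HSV-style hue angles (red = 0°, yellow = 60°) and arbitrary intermediate values for the orange distractors; these numbers are illustrative assumptions, not the stimulus colors used in the experiments. It contrasts a feature-value code (nearest trained color) with a relational code (direction relative to the surrounding items).

```python
# Illustrative hue values (degrees) on a red-to-yellow axis, using the HSV
# convention red = 0 and yellow = 60. These are assumptions for the sketch,
# not the colors used in the experiments.
RED_HUE, YELLOW_HUE = 0.0, 60.0


def feature_value_code(hue: float) -> str:
    """Label an item by the trained feature value it is closest to."""
    return "red" if abs(hue - RED_HUE) <= abs(hue - YELLOW_HUE) else "yellow"


def relational_code(hue: float, context_hues: list) -> str:
    """Label an item by its direction relative to the mean hue of its context."""
    context_mean = sum(context_hues) / len(context_hues)
    if hue < context_mean:
        return "redder"
    if hue > context_mean:
        return "yellower"
    return "same"


if __name__ == "__main__":
    # Relation-match distractor for "redder": yellow-orange among yellows.
    yellow_orange = 45.0
    print(feature_value_code(yellow_orange))                 # yellow
    print(relational_code(yellow_orange, [YELLOW_HUE] * 5))  # redder
    # Relation-match distractor for "yellower": red-orange among reds.
    red_orange = 15.0
    print(feature_value_code(red_orange))                    # red
    print(relational_code(red_orange, [RED_HUE] * 5))        # yellower
```

If red was the high-reward color, a feature-value code treats the yellow-orange distractor like the low-reward color, whereas a relational code treats it like the high-reward color; the relation-match condition pits these two predictions against each other.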

To examine the acquisition of color-reward contingencies, we analyzed data from the feature-match condition (Fig. 2a). One-way repeated-measures ANOVAs (reward history: high-, low- vs. no-reward distractor) on RTs revealed a significant main effect of reward (Experiment 1a: F(2, 78) = 10.54, p < 0.001, ηp2 = 0.213; Experiment 1b: F(2, 78) = 3.98, p = 0.023, ηp2 = 0.093). Pairwise comparisons showed significantly slower search RTs in the presence of a high-reward distractor than in the presence of either a low-reward distractor (Experiment 1a: t(39) = 2.18, p = 0.035, Cohen’s d = 0.345, 95% CI = [0.024, 0.662]; Experiment 1b: t(39) = 2.42, p = 0.020, Cohen’s d = 0.382, 95% CI = [0.059, 0.701]) or a no-reward distractor (Experiment 1a: t(39) = 4.45, p < 0.001, Cohen’s d = 0.703, 95% CI = [0.353, 1.046]; Experiment 1b: t(39) = 2.09, p = 0.044, Cohen’s d = 0.330, 95% CI = [0.009, 0.646]). RTs were also significantly slower in the presence of a low-reward distractor than a no-reward distractor in Experiment 1a (t(39) = 2.51, p = 0.016, Cohen’s d = 0.396, 95% CI = [0.072, 0.716]), but not in Experiment 1b (p = 0.520). This difference between the low-reward and no-reward conditions, present in only one experiment, may reflect the influence of selection history (the low-reward color had previously served as a target), as reported in some previous studies25. A mixed two-way ANOVA (reward history: high- vs. low-reward × experiment: 1a vs. 1b) revealed no significant interaction (p = 0.968), with moderate evidence for the null hypothesis (BF01 = 4.410). These results replicate previous findings on value-driven attention to trained feature values2,12,14,26. The same analyses applied to accuracy revealed no significant effects (ps > 0.180).
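The reported effect sizes are consistent, up to rounding of the test statistics, with two standard conversions: partial eta squared ηp2 = (F × df1) / (F × df1 + df2), and Cohen's d_z for a paired t-test, d_z = t / √N. The Results do not state how effect sizes were computed, so the Python sketch below is a consistency check under that assumption rather than the authors' procedure.

```python
import math


def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic: (F * df1) / (F * df1 + df2)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def cohens_dz(t_value: float, n: int) -> float:
    """Cohen's d_z for a paired (within-subject) t-test: t / sqrt(n)."""
    return t_value / math.sqrt(n)


if __name__ == "__main__":
    # Values reported above for Experiment 1a: F(2, 78) = 10.54; t(39) = 2.18 with N = 40.
    print(round(partial_eta_squared(10.54, 2, 78), 3))  # 0.213
    print(round(cohens_dz(2.18, 40), 3))                # 0.345
```

Small discrepancies in the third decimal (e.g., t(39) = 4.45 gives d_z ≈ 0.704 against the reported 0.703) are expected because the printed t values are themselves rounded.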

Importantly, we examined whether singleton search training with different levels of target-distractor similarity influenced the effect of reward on feature relationships (Fig. 2b). Paired t-tests on data from the relation-match condition showed significantly slower RTs when the distractor's feature relationship had previously been associated with high rather than low reward, in both experiments (Experiment 1a: t(39) = 2.53, p = 0.016, Cohen’s d = 0.400, 95% CI = [0.075, 0.720]; Experiment 1b: t(39) = 2.70, p = 0.010, Cohen’s d = 0.427, 95% CI = [0.100, 0.748]). A two-way mixed ANOVA (reward history: high- vs. low-reward × experiment: 1a vs. 1b) on RTs revealed no interaction (p = 0.865; BF01 = 4.538). These results support relational coding of reward-associated features regardless of target-distractor similarity during training. Because the relational effect also emerged when targets were dissimilar to the distractors (Experiment 1a), they also rule out a strategic shift of the reward-associated feature toward a more extreme feature value to distinguish it from similar distractors, an optimal tuning account that is distinct from the relational account27,28,29. An alternative explanation for the results in the relation-match condition is differential attention and/or arousal elicited by the non-singleton, previously rewarded colors (red or yellow). To address this possibility, we compared RTs between high- and low-reward-associated colors in the distractor-absent trials of the relation-match condition (Fig. 1b). Planned t-tests revealed no significant differences between these two conditions (Experiment 1a: t(39) = -0.66, p = 0.510, Cohen’s d = -0.105, 95% CI = [-0.415, 0.206]; Experiment 1b: t(39) = 0.16, p = 0.871, Cohen’s d = 0.026, 95% CI = [-0.284, 0.336]; Fig. 2b), and Bayesian analyses supported the null hypothesis (BF01 > 4.767). The same analyses applied to accuracy revealed no significant effects (ps > 0.065).

Experiment 2: Feature search training among dissimilar distractors leads to relational coding of reward-associated features

Although the results of Experiment 1 suggest that relational coding of reward-associated features is independent of target-distractor similarity, it remains unclear whether this coding scheme requires singleton search, which preferentially processes stimulus differences. To address this, Experiment 2 used a feature search task during training (i.e., report the bar orientation within a red or yellow circle), with distractors that were either dissimilar (Experiment 2a) or similar (Experiment 2b) to the target (N = 40 each; Fig. 3a). To validate our manipulation of search mode (singleton vs. feature search) between the training tasks of Experiments 1 and 2, we conducted Control Experiment 2, which compared search performance as a function of set size (4, 6 or 8) between the two search modes (see Methods and Supplementary Figure 2 for details). RT remained constant across set sizes in singleton search (the Experiment 1a training task) but increased linearly with set size in feature search (the Experiment 2a training task). These patterns align with theoretical predictions for distinct search modes30,31.
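The set-size signature of the two search modes is usually summarized as a search slope: the least-squares slope of mean RT on set size, in ms per item. A flat slope indicates efficient (singleton) search, and a positive slope indicates less efficient (feature) search. The Python sketch below computes this slope; the RTs are hypothetical numbers chosen for illustration, not data from Control Experiment 2.

```python
def search_slope(set_sizes: list, mean_rts: list) -> float:
    """Ordinary least-squares slope (ms per item) of mean RT on set size."""
    if len(set_sizes) != len(mean_rts) or len(set_sizes) < 2:
        raise ValueError("need at least two paired observations")
    n = len(set_sizes)
    mean_x = sum(set_sizes) / n
    mean_y = sum(mean_rts) / n
    # Covariance and variance terms (the 1/n factors cancel in the ratio).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(set_sizes, mean_rts))
    sxx = sum((x - mean_x) ** 2 for x in set_sizes)
    return sxy / sxx


if __name__ == "__main__":
    set_sizes = [4, 6, 8]
    # Hypothetical mean RTs (ms) for illustration only.
    print(search_slope(set_sizes, [600, 602, 601]))  # 0.25 (nearly flat)
    print(search_slope(set_sizes, [700, 760, 820]))  # 30.0 (ms per item)
```

In practice, slopes would be computed per participant and compared between groups; the sketch only shows the slope itself.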

Fig. 3. a Trial sequence in the training session of Experiment 2. The dashed box indicates the target location (not shown in actual displays). The stimulus array comprised a target color (red or yellow) presented among dissimilar (Experiment 2a) or similar (Experiments 2b and 2c) distractors. The only difference between Experiments 2b and 2c was the bar orientation inside the colored circles (2b: horizontal or vertical; 2c: leftward or rightward). The test task was identical to that in Experiment 1. b Search RTs in the feature-match condition. c Search RTs in the relation-match condition. Error bars reflect within-subject standard errors of the mean. ***p < 0.001, *p < 0.05.

The test task in Experiment 2b was identical to that in Experiment 1. However, a one-way repeated-measures ANOVA (reward history: high-, low- vs. no-reward distractor) on RTs from the feature-match condition (Fig. 3b, middle) revealed only a marginally significant effect of reward (F(2, 78) = 2.92, p = 0.060, ηp2 = 0.070). Paired t-tests on RTs from the relation-match condition (Fig. 3c, middle) revealed no significant differences between reward conditions in either distractor-present (p = 0.899; BF01 = 5.816) or distractor-absent trials (p = 0.175; BF01 = 2.436). None of these analyses revealed significant effects on accuracy (ps > 0.494). These results leave two possible interpretations: either participants did not encode reward-associated features via relational codes, or they learned the color-reward contingencies less effectively. The latter is plausible because participants may have relied, at least to some degree, on the uniquely oriented bar (a salient feature) rather than on the less discriminable color during training. This differs from Experiments 1 and 2a, in which target colors (presented as singletons or clearly distinct from distractor colors) were more likely to be prioritized over orientation during visual search32. To resolve this issue, we reduced the salience of bar orientation during training in Experiment 2c by using diagonal orientations. Here, participants located the target based solely on its color (red or yellow) and discriminated the orientation of its bar (leftward or rightward; Fig. 3a, right). As in Experiments 2a and 2b, they searched for color-defined targets (red or yellow) during training, with bar orientation serving only as a response-related feature. Experiment 2c thus preserved the key manipulation of Experiment 2b (feature search among similar items). To streamline data presentation, we focused subsequent analyses on Experiments 2a and 2c.

During the training task, RTs were faster for the high reward-associated color than for the low reward-associated color (Experiment 2a: t(39) = -3.60, p < 0.001, Cohen’s d = -0.570, 95% CI = [-0.901, -0.232]; Experiment 2c: t(39) = -2.61, p = 0.013, Cohen’s d = -0.413, 95% CI = [-0.733, -0.087]). Accuracy did not differ significantly between conditions (ps > 0.259) and remained high in both experiments (>91%).

Test performance in the feature-match condition was largely consistent with that in Experiment 1 (Fig. 1b). A one-way repeated-measures ANOVA (reward history: high-, low- vs. no-reward) revealed a significant main effect of reward on RTs (Experiment 2a: F(2, 78) = 16.20, p < 0.001, ηp2 = 0.293; Experiment 2c: F(2, 78) = 11.14, p < 0.001, ηp2 = 0.222). Pairwise comparisons showed that the high-reward distractor captured more attention than both the low-reward distractor (Experiment 2a: t(39) = 3.83, p < 0.001, Cohen’s d = 0.606, 95% CI = [0.265, 0.941]; Experiment 2c: t(39) = 2.45, p = 0.019, Cohen’s d = 0.387, 95% CI = [0.063, 0.706]) and the no-reward distractor (Experiment 2a: t(39) = 5.26, p < 0.001, Cohen’s d = 0.831, 95% CI = [0.467, 1.187]; Experiment 2c: t(39) = 4.19, p < 0.001, Cohen’s d = 0.663, 95% CI = [0.317, 1.003]). RTs were significantly slower in the low-reward than in the no-reward distractor condition in Experiment 2c (t(39) = 2.62, p = 0.012, Cohen’s d = 0.414, 95% CI = [0.089, 0.735]), but not in Experiment 2a (p = 0.121). A two-way mixed ANOVA (reward history: high- vs. low-reward × experiment: 2a vs. 2c) on RTs revealed a significant interaction (p = 0.035). The stronger effect of reward on feature value in Experiment 2a was likely due to the similarity between the search context used during training (Fig. 3a) and that of the feature-match condition in the test session (Fig. 1b). Similarly, the same analysis on accuracy revealed a significant effect of reward in Experiment 2a (F(2, 78) = 4.73, p = 0.012, ηp2 = 0.108), but not in Experiment 2c (F(2, 78) = 0.934, p = 0.397, ηp2 = 0.023), indicating stronger reward modulation in the feature-match condition of the former experiment.

Importantly, we examined whether feature search training with different levels of target-distractor similarity influenced the reward effect on feature relationships. When the target was dissimilar to the distractors during training (Experiment 2a), a distractor matching the high-reward feature relationship captured attention more strongly than one matching the low-reward feature relationship (paired t-test: t(39) = 2.45, p = 0.019, Cohen’s d = 0.387, 95% CI = [0.064, 0.707]). However, when the target was similar to the distractors during training (Experiment 2c), this attentional bias was absent at test (paired t-test: t(39) = -1.58, p = 0.122, Cohen’s d = -0.250, 95% CI = [-0.563, 0.066]). A two-way mixed ANOVA (reward history: high- vs. low-reward × experiment: 2a vs. 2c) on RTs revealed a significant interaction (F(1, 78) = 7.92, p = 0.006, ηp2 = 0.092). These results differ from those following singleton search training in Experiment 1, suggesting that neither search mode nor target-distractor similarity alone determines the impact of learned value on feature relationships.
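Because the within-subject factor (reward history) has only two levels, the reward × experiment interaction in a 2 × 2 mixed ANOVA is equivalent to an independent-samples (pooled-variance) t-test on per-participant difference scores (high-reward RT minus low-reward RT), with F(1, N − 2) = t². This is why the interaction above has df = (1, 78) for 40 + 40 participants. The Python sketch below illustrates that standard identity; the inputs are hypothetical, and it is not the authors' analysis code.

```python
import math
from statistics import mean, variance


def interaction_from_difference_scores(diffs_a: list, diffs_b: list):
    """Interaction test of a 2 (within) x 2 (between) mixed ANOVA.

    diffs_a and diffs_b hold one within-subject difference score per participant
    (e.g., high-reward RT minus low-reward RT) for each between-subjects group.
    Returns (t, F, df_error), where F(1, df_error) equals t squared.
    """
    n_a, n_b = len(diffs_a), len(diffs_b)
    df_error = n_a + n_b - 2
    # Pooled within-group variance of the difference scores.
    pooled = ((n_a - 1) * variance(diffs_a) + (n_b - 1) * variance(diffs_b)) / df_error
    t = (mean(diffs_a) - mean(diffs_b)) / math.sqrt(pooled * (1 / n_a + 1 / n_b))
    return t, t ** 2, df_error


if __name__ == "__main__":
    # Hypothetical difference scores (ms) for two small groups.
    t, f, df = interaction_from_difference_scores([1, 2, 3], [0, 0, 1])
    print(t, f, df)  # t = 2.5, F = 6.25, df = 4 (up to floating-point rounding)
```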

Before drawing conclusions from the lack of reward effects in the relation-match condition, we asked whether participants had learned to suppress target-similar colors (red-orange to yellow-orange) during training, which would reduce capture by reward-associated distractors sharing these colors at test. If so, learned suppression should manifest as faster RTs in distractor-present than in distractor-absent trials, as proposed by theories of singleton-distractor suppression33,34. However, RTs were numerically slower in distractor-present than in distractor-absent trials (613.35 ms vs. 609.17 ms; p = 0.097). This difference reached significance when speed and accuracy were combined via inverse efficiency scores (RT/accuracy: t(39) = 2.47, p = 0.018, Cohen’s d = 0.391, 95% CI = [0.067, 0.711]), indicating that reward-associated distractors primarily elicited attentional capture rather than suppression. Furthermore, the reward modulation observed in the low target-distractor similarity condition (Experiment 2a) argues against an optimal tuning account, which predicts representational shifts only when targets are highly similar to the distractors, not when they are dissimilar27,28,29. Additionally, no significant difference was observed between the two distractor-absent conditions (Experiment 2a: p = 0.983, BF01 = 5.860; Experiment 2c: p = 0.926, BF01 = 5.838), ruling out reward effects linked to non-singleton items. The same analyses applied to accuracy revealed no significant effects (Experiment 2a: ps > 0.656; Experiment 2c: ps > 0.405).
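An inverse efficiency score (IES) divides mean RT by the proportion of correct responses, so that slower or less accurate performance both raise the score. The Python sketch below computes IES per participant and compares two conditions with a paired t statistic (df = n − 1). It assumes mean correct RTs as input, which is the common convention; the Results only state "RT/accuracy". All numbers in the usage example are hypothetical.

```python
import math
from statistics import mean, stdev


def inverse_efficiency(mean_rt_ms: float, proportion_correct: float) -> float:
    """Inverse efficiency score (ms): mean (correct) RT divided by proportion correct."""
    if not 0 < proportion_correct <= 1:
        raise ValueError("proportion_correct must be in (0, 1]")
    return mean_rt_ms / proportion_correct


def paired_t(cond_a: list, cond_b: list) -> float:
    """Paired t statistic (df = n - 1) for per-participant scores in two conditions."""
    if len(cond_a) != len(cond_b) or len(cond_a) < 2:
        raise ValueError("need two equal-length lists with at least two participants")
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


if __name__ == "__main__":
    # Hypothetical (RT in ms, proportion correct) per participant.
    present = [(620, 0.95), (605, 0.97), (640, 0.93), (598, 0.96)]
    absent = [(612, 0.97), (603, 0.98), (630, 0.95), (597, 0.97)]
    ies_present = [inverse_efficiency(rt, acc) for rt, acc in present]
    ies_absent = [inverse_efficiency(rt, acc) for rt, acc in absent]
    print(paired_t(ies_present, ies_absent))
```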

Therefore, the most parsimonious explanation for the differences observed in the relation-match condition across experiments (1a, 1b and 2a vs. 2c) is that they reflect differences in the precision of target selection during training, a factor jointly determined by search mode and stimulus similarity35. Specifically, when the learning task required only coarse selection (e.g., singleton search or feature search among dissimilar items), a relational code for reward-associated features was formed. However, when fine selection was necessary (e.g., feature search among similar items), a more precise feature code was used.

Cross-experiment comparisons reveal variations in reward effects on feature relationships

To further compare the magnitude of reward effects across learning contexts, we calculated ΔRT (high-reward RT minus low-reward RT) for each experiment (1a, 1b, 2a and 2c), separately for the feature-match and relation-match conditions (Fig. 4). A two-way ANOVA on ΔRT in the feature-match condition, with search mode (singleton vs. feature search) and target-distractor similarity (low vs. high) as between-subject factors, revealed no significant main effects or interaction (ps > 0.146), suggesting that the reward effects on trained feature values were comparable across experiments. In contrast, the same two-way ANOVA on ΔRT in the relation-match condition showed a significant main effect of search mode (F(1, 156) = 5.40, p = 0.021, ηp2 = 0.033) and, more importantly, a significant interaction (F(1, 156) = 4.35, p = 0.039, ηp2 = 0.027). This interaction suggests that the influence of reward on feature relationships depends jointly on search mode and target-distractor similarity during training. The main effect of target-distractor similarity did not reach significance (F(1, 156) = 3.40, p = 0.067, ηp2 = 0.021). The same results were obtained using raw RTs. Furthermore, planned comparisons confirmed that the value-driven relational effect in Experiment 2c (feature search with target-similar distractors) differed significantly from those in the other experiments (two-sample t-tests: ps < 0.006), with no significant differences among the remaining experiments (two-sample t-tests: ps > 0.730). In addition, one-sample t-tests against zero confirmed significant reward effects in Experiments 1a, 1b and 2a (ps < 0.019), but not in Experiment 2c (p = 0.122).
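In this cross-experiment analysis each participant contributes one ΔRT, and the four experiments form a balanced 2 (search mode) × 2 (similarity) between-subjects design with 40 participants per cell, which is why the error df is 160 − 4 = 156. The Python sketch below implements a balanced two-way between-subjects ANOVA under that equal-cell-size assumption (true here); it is a textbook decomposition, not the authors' analysis code, and the usage data are hypothetical.

```python
from statistics import mean


def two_way_between_anova(cells: dict) -> dict:
    """Balanced two-way between-subjects ANOVA.

    cells[(a, b)] is a list of scores (e.g., per-participant ΔRT) for level a of
    factor A (e.g., search mode) and level b of factor B (e.g., similarity).
    Assumes every cell has the same number of observations.
    """
    levels_a = sorted({a for a, _ in cells})
    levels_b = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))
    if any(len(v) != n for v in cells.values()):
        raise ValueError("this sketch assumes equal cell sizes")
    grand = mean(x for v in cells.values() for x in v)
    cell_mean = {k: mean(v) for k, v in cells.items()}
    mean_a = {a: mean(cell_mean[(a, b)] for b in levels_b) for a in levels_a}
    mean_b = {b: mean(cell_mean[(a, b)] for a in levels_a) for b in levels_b}
    # Sums of squares for main effects, interaction and within-cell error.
    ss_a = n * len(levels_b) * sum((mean_a[a] - grand) ** 2 for a in levels_a)
    ss_b = n * len(levels_a) * sum((mean_b[b] - grand) ** 2 for b in levels_b)
    ss_ab = n * sum((cell_mean[(a, b)] - mean_a[a] - mean_b[b] + grand) ** 2
                    for a in levels_a for b in levels_b)
    ss_err = sum((x - cell_mean[k]) ** 2 for k, v in cells.items() for x in v)
    df_a, df_b = len(levels_a) - 1, len(levels_b) - 1
    df_err = sum(len(v) for v in cells.values()) - len(cells)
    ms_err = ss_err / df_err
    results = {}
    for name, ss, df in (("A", ss_a, df_a), ("B", ss_b, df_b), ("AxB", ss_ab, df_a * df_b)):
        results[name] = {"F": (ss / df) / ms_err, "df": (df, df_err),
                         "eta_p2": ss / (ss + ss_err)}
    return results


if __name__ == "__main__":
    # Hypothetical ΔRTs (ms): only feature search with high similarity differs.
    data = {("feature", "high"): [-5, -3], ("feature", "low"): [9, 11],
            ("singleton", "high"): [9, 11], ("singleton", "low"): [9, 11]}
    for effect, stats in two_way_between_anova(data).items():
        print(effect, stats)
```

With the hypothetical data above, all three effects come out at F(1, 4) = 49, because a single deviant cell in a 2 × 2 design loads equally on both main effects and the interaction.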

Fig. 4. The magnitude of reward effects (indexed by the RT difference between the high-reward and low-reward conditions) in the feature-match and relation-match conditions. Green and purple dots represent low and high target-distractor similarity during training, respectively. Error bars reflect standard errors of the mean. **p < 0.01.

Discussion

The human attentional system can flexibly adapt to prioritize the processing of previously rewarded information7,8,9. Recent findings have provided a relational account of value-driven attention, demonstrating a mechanism for generalizing learned value across different items and contexts that share common feature relationships14. Here, we tested whether this relational coding of reward-associated features can be learned from tasks that engage different search modes (singleton search vs. feature search) and vary in stimulus similarity (low vs. high target-distractor similarity). Beyond the effects of reward on trained feature values, we observed differential effects of value-driven relational attention across training tasks. Specifically, singleton search training resulted in value-driven relational attention independent of target-distractor similarity (Experiments 1a and 1b), whereas feature search training produced this attentional bias only when the target was dissimilar to the distractors (Experiment 2a), but not when they were similar (Experiments 2b and 2c). Our findings rule out explanations based solely on search mode or target-distractor similarity. Given that increased target-distractor similarity in feature search is expected to demand more precise target selection than singleton search or feature search among dissimilar items35, we suggest that relational coding of reward contingencies likely depends on the precision of attentional selection during learning: when the learning task requires only coarse selection (e.g., singleton search or feature search among dissimilar items), a relational code for reward-associated features is formed; however, when fine selection is necessary (e.g., feature search among similar items), a more precise code is used.

This study provides two important findings that elucidate the relational mechanisms underlying value-driven attention. First, we extend the relational account of value-driven attention to a broader range of scenarios than those tested in prior experiments14, encompassing most variants of the visual search training tasks reported in the literature2,11,12,26,36. Our results demonstrate that associations between reward and relative features can be acquired even when the target was non-salient (Experiment 2a) or dissimilar to the distractors (Experiment 1a), in line with relational attention theory27,37. Second, our findings highlight the critical role of selection precision (jointly determined by search mode and stimulus similarity) during reward learning in modulating value-driven relational attention. Recent visual search theories suggest that attention is often guided by non-veridical features of the target, with relational coding as a typical format; a more precise, veridical representation is encoded only when detailed target information is essential for achieving the task goal29,38. Our findings demonstrate that task demands influence the representation of reward-associated stimuli: relational coding of rewarded features was employed when training required only coarse target selection, whereas its role diminished when training required fine selection. It is worth noting that in Experiment 2c, rather than stronger attentional capture by distractors matching a high-reward feature relationship, we observed an opposite trend, although it was not statistically significant (Fig. 3c). This trend may be explained by a feature-specific account of learned value, as the distractor matching the high-reward feature relationship was perceptually closer to the low-reward feature value.

Generalization allows rapid assessment of novel situations based on prior experience, an adaptive mechanism essential for survival in complex and dynamic environments39. Our study demonstrates relation-based generalization of reward contingencies across multiple learning contexts, extending the applicability of this mechanism14,40. Previous studies have shown the transfer of reward effects to untrained stimuli sharing core reward-associated features10,11,12,13, or to category exemplars (e.g., cars, trees) paired with rewards, even when these exemplars share few low-level characteristics6,41. In contrast, we observed generalization based on relational information, which was acquired automatically during training with constant target features and distractor contexts (e.g., red among red-oranges). This relational learning parallels findings in animal foraging; for instance, birds prefer larger flowers over smaller ones42, and bees rapidly learn spatial relationships (e.g., left/right) during training43. Our findings thus suggest that humans, like other animals, leverage relational information to locate rewarded items during visual search.

Importantly, the learned effect persisted even when reward was no longer available, suggesting lasting changes in relational representations in the human brain due to reward learning. However, the specific neural mechanisms by which reward modulates relation-based attention remain unclear. Some neurophysiological studies suggest the possible existence of “relational neurons” tuned to relative features in visual cortex44,45, while other research points to the posterior parietal cortex as a potential convergence site for relational information18, value46,47, and attentional priority48. Future work using high-resolution neuroimaging techniques49,50,51 should explore whether reward modulates “relational neurons” and “feature-selective neurons” in parieto-occipital regions depending on the precision of attentional selection during reward learning.

Several limitations of our study should be considered. First, our findings were obtained using simplified visual search contexts, and their generalizability to naturalistic viewing conditions requires further investigation. Future studies should examine whether the precision-dependent modulations of value-driven relational attention represent a general mechanism in real-world scenes. Second, while our results reveal precision-related changes in attentional selection through manipulations of search modes and target-distractor similarity, future work should employ direct, continuous measures of attentional precision to characterize its interactions with value-driven mechanisms.

In conclusion, our study demonstrates that relational coding operates during reward-feature associative learning, with search mode and stimulus similarity jointly determining how reward-associated stimuli are encoded. These findings provide critical evidence bridging theories of experience-driven attention7,8,9,52 and visual search29,38, revealing that target selection precision modulates value-driven relational generalization. Considering that value-driven attention correlates with a variety of clinical syndromes53,54 and that many neuropsychiatric disorders (such as substance use disorders) involve aberrant generalization55, our findings may inform the diagnosis and treatment of these disorders.

Methods

Participants

Two hundred participants from Zhejiang University were recruited for the main experiments of the study. Each experiment included forty participants (Experiment 1a: mean age = 22.9; 26 females and 14 males; Experiment 1b: mean age = 21.5; 25 females and 15 males; Experiment 2a: mean age = 21; 22 females and 18 males; Experiment 2b: mean age = 22.7; 24 females and 16 males; Experiment 2c: mean age = 21.2; 24 females and 16 males). This sample size was based on our prior study, which used a very similar design to examine value-driven attention based on feature relationships14. Entering the reported effect size of reward history (ηp2 = 0.18) into an ANOVA power analysis in G*Power (Version 3.1)56 indicated that a sample size of 40 would provide power greater than 95% (α = 0.05) to detect an effect of reward.
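G*Power specifies effect size for F tests as Cohen's f, which relates to partial eta squared by f = √(ηp2 / (1 − ηp2)). For ηp2 = 0.18 this gives f ≈ 0.47, and for the ηp2 = 0.531 used for Control Experiment 2 it gives f ≈ 1.06. The Methods do not report which value or which G*Power effect-size option was entered, so the Python sketch below shows only this standard conversion, not the full power computation (which for repeated measures also depends on the assumed correlation among measures).

```python
import math


def cohens_f(partial_eta_squared: float) -> float:
    """Convert partial eta squared to Cohen's f: f = sqrt(eta_p2 / (1 - eta_p2))."""
    if not 0.0 <= partial_eta_squared < 1.0:
        raise ValueError("partial eta squared must lie in [0, 1)")
    return math.sqrt(partial_eta_squared / (1.0 - partial_eta_squared))


if __name__ == "__main__":
    print(round(cohens_f(0.18), 3))   # 0.469
    print(round(cohens_f(0.531), 3))  # 1.064
```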

Sixteen participants (mean age = 22.75; 12 females and 4 males) were recruited for Control Experiment 1. The sample size was comparable to those of prior studies using similar visual search designs57. We entered the estimated effect size of target-distractor similarity (d = 1.2) into a power analysis for a paired t-test in G*Power (Version 3.1)56, which indicated that a sample of 16 would provide greater than 95% power (α = 0.05) to detect the effect. This effect size was estimated from Experiment 1. Because target-distractor similarity was manipulated between subjects (Experiments 1a vs. 1b), we computed normalized RTs by dividing training-task RTs (a red or yellow target among homogeneous distractors) by the mean RTs from distractor-absent trials (all red or all yellow) in the relation-match condition of the test session. Normalized RTs were significantly faster in the low than in the high target-distractor similarity condition (two-sample t-test: t(78) = −5.37, p < 0.001, Cohen’s d = −1.200, 95% CI = [−1.673, −0.720]).

Forty-eight students (mean age = 22.94; 30 females and 18 males) participated in Control Experiment 2. Because the targets had identical colors in the two search modes, half of the participants performed singleton search and the other half performed feature search, so that no strategy could transfer between modes. The sample size was determined based on a similar study testing the search mode × set size interaction58. Using the effect size from that study (ηp² = 0.531), a power analysis in G*Power (Version 3.1)56 indicated that a sample of 48 (24 per group) would provide greater than 99% power (α = 0.05) to detect the interaction. The stimuli and apparatus were largely the same as those used in the training tasks of Experiments 1a and 2a.
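The quantities in the Control Experiment 1 power analysis can be cross-checked with a few lines of Python. The sketch recovers Cohen's d from the reported two-sample t statistic (d = t·√(1/n₁ + 1/n₂), with n₁ = n₂ = 40) and gives a normal approximation to the power of a two-sided paired t-test at dz = 1.2 and n = 16. The approximation ignores the heavier tails of the t distribution, so it slightly overstates the exact noncentral-t power that G*Power computes; the reported G*Power figure remains the authoritative one.

```python
import math
from statistics import NormalDist

def cohens_d_from_t(t: float, n1: int, n2: int) -> float:
    """Cohen's d (pooled SD) recovered from an independent-samples t statistic."""
    return t * math.sqrt(1.0 / n1 + 1.0 / n2)

def approx_paired_power(dz: float, n: int, alpha: float = 0.05) -> float:
    """Normal approximation to the power of a two-sided paired t-test with effect size dz."""
    z = NormalDist()
    z_crit = z.inv_cdf(1.0 - alpha / 2.0)
    shift = abs(dz) * math.sqrt(n)  # expected value of the standardized test statistic
    # Sum the probability mass falling in both rejection regions
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)

print(f"d from t(78) = -5.37: {cohens_d_from_t(-5.37, 40, 40):.3f}")
print(f"approximate power, dz = 1.2, n = 16: {approx_paired_power(1.2, 16):.3f}")
```

The recovered d matches the reported −1.200 up to the rounding of t.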

All participants provided written informed consent, and the study was approved by the Institutional Review Board at Zhejiang University (ref. 2023-007). All participants had normal or corrected-to-normal vision and were right-handed. They were paid ¥61 on average for their participation; a portion of this payment depended on their performance during reward-based training.

Stimuli and apparatus

The stimuli were filled circles (2.4° × 2.4°), each containing a black oriented bar. Circle colors for the training and test sessions were selected from the following set (CIE 1976 u′, v′ chromaticity coordinates; luminance): red (u′ = 0.463, v′ = 0.526; 14.5 cd/m²), green (u′ = 0.127, v′ = 0.565; 26.6 cd/m²), magenta (u′ = 0.304, v′ = 0.330; 12.9 cd/m²), yellow (u′ = 0.219, v′ = 0.555; 33.2 cd/m²), red-orange (u′ = 0.377, v′ = 0.537; 20.1 cd/m²), orange (u′ = 0.346, v′ = 0.540; 23.4 cd/m²), yellow-orange (u′ = 0.302, v′ = 0.546; 18.2 cd/m²), blue (u′ = 0.173, v′ = 0.310; 18.0 cd/m²), purple (u′ = 0.215, v′ = 0.321; 20.2 cd/m²), cyan (u′ = 0.140, v′ = 0.457; 19.0 cd/m²), and gray (u′ = 0.205, v′ = 0.470; 20.5 cd/m²).
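To make the similarity manipulation concrete, the hypothetical sketch below computes the Euclidean distance between target and distractor colors in the u′v′ chromaticity plane, using the coordinates listed above. This Δu′v′ measure ignores luminance (which differs notably, e.g., yellow vs. yellow-orange) and is not a perceptual color-difference metric such as CIEDE2000; the paper does not report these distances.

```python
import math

# CIE 1976 (u', v') chromaticities copied from the stimulus list
UV = {
    "red": (0.463, 0.526),
    "green": (0.127, 0.565),
    "magenta": (0.304, 0.330),
    "yellow": (0.219, 0.555),
    "red-orange": (0.377, 0.537),
    "yellow-orange": (0.302, 0.546),
}

def delta_uv(a: str, b: str) -> float:
    """Euclidean distance between two colors in the u'v' plane (luminance ignored)."""
    (u1, v1), (u2, v2) = UV[a], UV[b]
    return math.hypot(u1 - u2, v1 - v2)

PAIRS = {
    "Experiment 1a (low similarity)": [("red", "green"), ("yellow", "magenta")],
    "Experiment 1b (high similarity)": [("red", "red-orange"), ("yellow", "yellow-orange")],
}
for label, pairs in PAIRS.items():
    for target, distractor in pairs:
        print(f"{label}: {target} vs {distractor}: {delta_uv(target, distractor):.3f}")
```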

All stimuli were generated in MATLAB R2022b (The MathWorks, Natick, MA) using Psychtoolbox59. Stimuli were presented against a black background on a 21.06-inch LCD monitor (resolution: 1024 × 768; refresh rate: 100 Hz) at a viewing distance of 60 cm in a dimly lit room.
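Psychtoolbox draws in pixels, so stimulus sizes specified in degrees of visual angle must be converted using the viewing distance and the screen's pixel density. The Python sketch below illustrates that conversion for the 2.4° circles and the 5° eccentricity. It assumes square pixels and that the 1024 × 768 image fills the 21.06-inch panel (a 4:3 physical aspect ratio), which the text does not state; the authors' actual conversion is in their task code on OSF.

```python
import math

def pixels_per_cm(diag_inch: float, res_w: int, res_h: int) -> float:
    """Pixel density, assuming square pixels and an image that fills the whole panel."""
    return math.hypot(res_w, res_h) / (diag_inch * 2.54)

def extent_deg_to_px(size_deg: float, dist_cm: float, ppcm: float) -> float:
    """On-screen extent of a stimulus centred on the line of sight: 2 * d * tan(theta / 2)."""
    return 2.0 * dist_cm * math.tan(math.radians(size_deg) / 2.0) * ppcm

def eccentricity_deg_to_px(ecc_deg: float, dist_cm: float, ppcm: float) -> float:
    """Distance from fixation of a point at the given eccentricity: d * tan(theta)."""
    return dist_cm * math.tan(math.radians(ecc_deg)) * ppcm

ppcm = pixels_per_cm(21.06, 1024, 768)  # 4:3 panel is an assumption
print(f"{ppcm:.2f} px/cm")
print(f"circle diameter: {extent_deg_to_px(2.4, 60, ppcm):.1f} px")
print(f"item eccentricity: {eccentricity_deg_to_px(5.0, 60, ppcm):.1f} px")
```

Using the centred-stimulus formula for items at 5° eccentricity slightly underestimates their on-screen size, an error that is negligible at these small angles.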

Experimental procedure and tasks

Participants completed a training session and a test session on two consecutive days. Using visual search tasks, we first trained participants to establish the reward-color association and then tested the reward effect in a separate test session.

In the training session of the main experiments (Fig. 1a), each trial began with a central fixation for 0.5 s, followed by a search display for 0.5 s. The search display comprised eight oriented bars inside colored circles, presented at an eccentricity of 5°. In Experiment 1a, the search array contained either a red singleton among green distractors or a yellow singleton among magenta distractors (low target-distractor similarity); in Experiment 1b, it contained either a red singleton among red-orange non-singletons or a yellow singleton among yellow-orange non-singletons (high target-distractor similarity); in Experiment 2a, it contained either a red or a yellow target among target-dissimilar distractors (green, blue, purple, magenta, cyan, gray; low target-distractor similarity); and in Experiments 2b and 2c, it contained either a red or a yellow target among target-similar distractors (red-orange, orange, yellow-orange) and two filler distractors (green and blue). We used fixed filler distractors, rather than sampling them randomly from a dissimilar set, to keep them consistent with the fixed target-similar distractors. The task was to report, with a keypress, the orientation of the bar inside the target color (red or yellow). In Experiments 1a, 1b, 2a, and 2b, the target bar was horizontal or vertical, while the other bars were diagonal (45° or 135°), following previous studies2,14. In Experiment 2c, we changed the target bar to a diagonal orientation (45° or 135°), because searching for a specific feature (e.g., red) among similar features (e.g., red-orange, orange, yellow-orange) likely led participants to rely partly on the unique target orientation, thus weakening the formation of the color-reward contingency (Supplementary Materials).
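The composition of a singleton-search training display (Experiments 1a and 1b) can be sketched as follows. The function name, the data layout, and the evenly spaced placement of the eight items around fixation are illustrative assumptions; only the color pairings, the 5° eccentricity, and the target (horizontal or vertical) versus non-target (45° or 135°) bar orientations come from the text. Experiment 2c, which used diagonal target bars, is not covered.

```python
import math
import random

# Target color -> homogeneous non-target color, as described for Experiments 1a and 1b
PAIRS = {
    "1a": {"red": "green", "yellow": "magenta"},             # low target-distractor similarity
    "1b": {"red": "red-orange", "yellow": "yellow-orange"},  # high target-distractor similarity
}

def make_singleton_display(experiment, target_color, rng, n_items=8, ecc_deg=5.0):
    """Return items as dicts: color, bar orientation (deg), and x/y position (deg from fixation)."""
    target_index = rng.randrange(n_items)
    other_color = PAIRS[experiment][target_color]
    items = []
    for i in range(n_items):
        # Hypothetical layout: items evenly spaced on a circle around fixation
        angle = 2.0 * math.pi * i / n_items
        x, y = ecc_deg * math.cos(angle), ecc_deg * math.sin(angle)
        if i == target_index:
            color, orientation = target_color, rng.choice((0, 90))   # horizontal or vertical bar
        else:
            color, orientation = other_color, rng.choice((45, 135))  # diagonal bars
        items.append({"color": color, "orientation": orientation, "x": x, "y": y})
    return items

for item in make_singleton_display("1b", "red", random.Random(0)):
    print(item)
```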
Participants received on-screen monetary feedback after a correct response; an incorrect response was followed by a black screen and auditory feedback. For half of the participants, red was associated with a high probability (80%) of high reward (¥10) and a low probability (20%) of low reward (¥1), whereas yellow was associated with a high probability (80%) of low reward (¥1) and a low probability (20%) of high reward (¥10). For the other half, the color-reward association was reversed. Participants were not informed of the color-reward association; they were encouraged to perform as well as possible to maximize their total earnings and would receive a portion of their final accumulated reward (up to ¥42). Each participant completed eight training blocks (100 trials/block).
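The probabilistic reward schedule and its expected payoff per correct response (0.8 × ¥10 + 0.2 × ¥1 = ¥8.2 for the high-reward color; 0.2 × ¥10 + 0.8 × ¥1 = ¥2.8 for the low-reward color) can be sketched in Python as below. Whether the 80/20 split was implemented with independent draws on each trial or with a fixed proportion of trials per block is not stated; this sketch assumes independent draws, and the names are hypothetical.

```python
import random

HIGH, LOW = 10, 1  # reward magnitudes (yuan)
P_OWN = 0.8        # probability that a color delivers its predominant reward magnitude

def reward_for(target_color: str, high_color: str, rng: random.Random) -> int:
    """Feedback amount for a correct trial; high_color is red or yellow depending on counterbalancing."""
    if target_color == high_color:
        return HIGH if rng.random() < P_OWN else LOW
    return LOW if rng.random() < P_OWN else HIGH

def expected_reward(is_high_color: bool) -> float:
    """Expected feedback per correct trial for the high- or low-reward color."""
    p_high = P_OWN if is_high_color else 1.0 - P_OWN
    return p_high * HIGH + (1.0 - p_high) * LOW

rng = random.Random(1)
draws = [reward_for("red", "red", rng) for _ in range(10_000)]
print(expected_reward(True), expected_reward(False), sum(draws) / len(draws))
```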

In the test session of the main experiments (Fig. 1b), each trial consisted of a fixation (0.5 s), a search display (1.5 s or until response), and a blank intertrial interval (ITI). Participants were asked to find a horizontally or vertically oriented bar. The task comprised two conditions. In the feature-match condition, all items had different colors (blue, purple, cyan, green, magenta, gray), and a distractor in a previously rewarded color (red or yellow) was present on 50% of the trials and absent on the other 50%. In the relation-match condition, all items were either red or yellow, and a color singleton distractor was present on 50% of the trials and absent on the other 50%. The singleton distractor matched the reward history in its feature relationship to the surrounding items (redder: yellow-orange among yellows; yellower: red-orange among reds). The target bar never appeared inside the color distractor, whether that distractor matched the reward history in feature value or in feature relation. Each participant completed six blocks (160 trials/block) in the test session. The two conditions were equally probable and randomly interleaved across trials, so that the trial type was unpredictable.
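A balanced, randomly interleaved trial list for the test session could be generated as in the sketch below. The paper states only that the two conditions were equally probable, that the distractor was present on half of the trials, and that the target never appeared at the color distractor; balancing each condition × distractor cell exactly within every block, and the eight candidate locations, are assumptions of this illustration.

```python
import itertools
import random
from collections import Counter

CONDITIONS = ("feature-match", "relation-match")
DISTRACTOR = ("present", "absent")
N_POSITIONS = 8  # hypothetical: eight display locations, as in the training arrays

def make_block(n_trials: int, rng: random.Random) -> list:
    """One test block with every condition x distractor cell equally often, randomly interleaved."""
    cells = list(itertools.product(CONDITIONS, DISTRACTOR))
    if n_trials % len(cells):
        raise ValueError("n_trials must be a multiple of the number of design cells")
    trials = []
    for condition, distractor in cells:
        for _ in range(n_trials // len(cells)):
            target_pos = rng.randrange(N_POSITIONS)
            distractor_pos = None
            if distractor == "present":
                # The color distractor never occupies the target's location
                distractor_pos = rng.choice([p for p in range(N_POSITIONS) if p != target_pos])
            trials.append({"condition": condition, "distractor": distractor,
                           "target_pos": target_pos, "distractor_pos": distractor_pos})
    rng.shuffle(trials)  # random interleaving of trial types
    return trials

rng = random.Random(2023)
session = [make_block(160, rng) for _ in range(6)]  # six blocks of 160 trials
print(Counter((t["condition"], t["distractor"]) for t in session[0]))
```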

To validate our manipulation of target-distractor similarity during the training task in Experiment 1, we conducted Control Experiment 1, in which participants performed a singleton search task under low or high target-distractor similarity. The visual search task was the same as in the training sessions of Experiments 1a and 1b; each trial consisted of a central fixation (0.5 s), a search display (0.5 s), a response display (up to 1.5 s), and a feedback display (1.5 s). The search arrays varied in target-distractor similarity (Supplementary Fig. 1): (1) low similarity: a red singleton among green distractors or a yellow singleton among magenta distractors (replicating Experiment 1a); or (2) high similarity: a red singleton among red-orange non-singletons or a yellow singleton among yellow-orange non-singletons (replicating Experiment 1b). The two conditions were randomly interleaved across trials. No reward feedback was given; an incorrect response triggered an auditory tone (“beep”) during the feedback display. Each participant completed two blocks (100 trials/block).

To validate our manipulation of search mode during the training tasks in Experiments 1 and 2, we conducted Control Experiment 2, which compared search efficiency as a function of set size between search modes. Based on the literature29,30, we expected flat RT functions for singleton search (parallel processing) and linearly increasing RT functions for feature search (serial processing). We used a visual search task modeled after the training tasks in Experiments 1a and 2a (Supplementary Fig. 2). Each trial consisted of a central fixation (0.5 s), a search display (0.5 s), a response display (up to 1.5 s), and a feedback display (1.5 s). In singleton search, participants viewed either a red target among green distractors or a yellow target among magenta distractors (replicating Experiment 1a). In feature search, participants viewed a red or yellow target among heterogeneous distractors (replicating Experiment 2a) randomly selected from seven colors (green, blue, purple, magenta, cyan, brown, gray). In both tasks, set size varied randomly among 4, 6, and 8 items, and participants judged the orientation (horizontal or vertical) of the bar inside the target color. No reward feedback was given; an incorrect response triggered an auditory tone (“beep”). Each participant completed four blocks (90 trials/block).
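Search efficiency in Control Experiment 2 is typically summarized as the slope of RT against set size (ms per item): near zero for singleton search, clearly positive for feature search. The sketch below computes an ordinary least-squares slope for one participant's mean RTs; the RT values are made up for illustration and are not data from the study. The reported test of the search mode × set size interaction would be run on such RTs in an ANOVA, which this sketch does not attempt.

```python
def search_slope(set_sizes, mean_rts):
    """Ordinary least-squares slope (ms/item) and intercept (ms) of RT against set size."""
    n = len(set_sizes)
    if n != len(mean_rts) or n < 2:
        raise ValueError("need at least two matched (set size, RT) points")
    mx = sum(set_sizes) / n
    my = sum(mean_rts) / n
    sxx = sum((x - mx) ** 2 for x in set_sizes)
    if sxx == 0:
        raise ValueError("set sizes must vary")
    sxy = sum((x - mx) * (y - my) for x, y in zip(set_sizes, mean_rts))
    slope = sxy / sxx
    return slope, my - slope * mx

# Hypothetical mean RTs (ms) at set sizes 4, 6, and 8; illustrative only
set_sizes = [4, 6, 8]
print("singleton search:", search_slope(set_sizes, [612, 608, 615]))
print("feature search:  ", search_slope(set_sizes, [650, 700, 752]))
```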

Statistical analysis

Correct responses were defined as appropriate keypresses made within 0.2–1.5 s after the onset of the search display. Search RTs outside this window or more than three standard deviations above the mean were discarded. We compared search performance between high- and low-reward conditions during training using paired t-tests. We then examined the effect of reward history in the feature-match and relation-match conditions. To evaluate the strength of evidence for null effects, we conducted parallel Bayesian analyses60 using the default priors implemented in JASP (Version 0.17.2)61. We report Bayes factors (BF01) as evidence in favor of the null hypothesis when BF01 exceeded three. All statistical analyses were performed in MATLAB and JASP.
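The trimming rule and the paired comparisons described above can be sketched with the Python standard library, as below. Whether the 3-SD criterion was applied per participant, per condition, or to both tails is not stated, so the sketch assumes per-participant, upper-tail trimming after the response-window cut. It returns t, df, and Cohen's dz but not p values or Bayes factors, which require the t distribution and JASP's priors; the authors computed these in MATLAB and JASP.

```python
import math
from statistics import mean, stdev

def trim_rts(rts, lo=0.2, hi=1.5, n_sd=3.0):
    """Apply the response window (s), then drop RTs more than n_sd SDs above the remaining mean.

    Assumptions (not stated in the paper): trimming is done per participant,
    after the window cut, and only on the slow tail.
    """
    in_window = [rt for rt in rts if lo <= rt <= hi]
    if len(in_window) < 2:
        return in_window  # SD undefined; nothing further to trim
    cutoff = mean(in_window) + n_sd * stdev(in_window)
    return [rt for rt in in_window if rt <= cutoff]

def paired_t(x, y):
    """Return (t, df, Cohen's d_z) for matched samples, e.g. high- vs low-reward mean RTs."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long samples with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    md, sd = mean(diffs), stdev(diffs)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return md / (sd / math.sqrt(len(diffs))), len(diffs) - 1, md / sd

# Hypothetical per-participant mean RTs (s); illustrative only
high = [0.642, 0.655, 0.630, 0.671, 0.648]
low = [0.631, 0.640, 0.628, 0.652, 0.639]
print(paired_t(high, low))
```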

Data availability

All data and task code have been made publicly available via the Open Science Framework at https://osf.io/q7dsm/.

Code availability

The analyses were performed in MATLAB. The code for all analyses has been made publicly available via the Open Science Framework at https://osf.io/q7dsm/.

References

Rescorla, R. A. & Solomon, R. L. Two-process learning theory: Relationships between Pavlovian conditioning and instrumental learning. Psychol. Rev. 74, 151–182 (1967).

Anderson, B. A., Laurent, P. A. & Yantis, S. Value-driven attentional capture. PNAS 108, 10367–10371 (2011).

Gong, M. & Liu, T. Reward differentially interacts with physical salience in feature-based attention. J. Vis. 18, 12 (2018).

Gong, M., Jia, K. & Li, S. Perceptual competition promotes suppression of reward salience in behavioral selection and neural representation. J. Neurosci. 37, 6242–6252 (2017).

Wang, L., Yu, H. & Zhou, X. Interaction between value and perceptual salience in value-driven attentional capture. J. Vis. 13, 5 (2013).

Hickey, C. & Peelen, M. Neural mechanisms of incentive salience in naturalistic human vision. Neuron 85, 512–518 (2015).

Failing, M. & Theeuwes, J. Selection history: How reward modulates selectivity of visual attention. Psychon. Bull. Rev. 25, 514–538 (2018).

Anderson, B. A. et al. The past, present, and future of selection history. Neurosci. Biobehav. Rev. 130, 326–350 (2021).

Pearson, D. et al. Attentional economics links value-modulated attentional capture and decision-making. Nat. Rev. Psychol. 1, 320–333 (2022).

Anderson, B. A., Laurent, P. A. & Yantis, S. Generalization of value-based attentional priority. Vis. Cogn. 20, 647–658 (2012).

Lee, J. & Shomstein, S. Reward-based transfer from bottom-up to top-down search tasks. Psychol. Sci. 25, 466–475 (2014).

Gong, M. & Li, S. Learned reward association improves visual working memory. J. Exp. Psychol. Hum. Percept. Perform. 40, 841–856 (2014).

Anderson, B. A. On the feature specificity of value-driven attention. PLoS ONE 12, e0177491 (2017).

Chen, Y. et al. Reward history modulates attention based on feature relationship. J. Exp. Psychol. Gen. 152, 1937–1950 (2023).

Becker, S. I. The role of target-distractor relationships in guiding attention and the eyes in visual search. J. Exp. Psychol. Gen. 139, 247–265 (2010).

Becker, S. I. Why you cannot map attention: A relational theory of attention and eye movements. Aust. Psychol. 48, 389–398 (2013).

Hafri, A. & Firestone, C. The perception of relations. Trends Cogn. Sci. 25, 475–492 (2021).

Summerfield, C. et al. Structure learning and the posterior parietal cortex. Prog. Neurobiol. 184, 101717 (2020).

Navalpakkam, V. & Itti, L. Search goal tunes visual features optimally. Neuron 53, 605–617 (2007).

Scolari, M. & Serences, J. T. Adaptive allocation of attentional gain. J. Neurosci. 29, 11933–11942 (2009).

Geng, J. J. & Witkowski, P. Template-to-distractor distinctiveness regulates visual search efficiency. Curr. Opin. Psychol. 29, 119–125 (2019).

Yu, X., Rahim, R. A. & Geng, J. J. Task-adaptive changes to the target template in response to distractor context: Separability versus similarity. J. Exp. Psychol. Gen. 153, 564–572 (2024).

Anderson, B. A. & Halpern, M. On the value-dependence of value-driven attentional capture. Atten. Percept. Psychophys. 79, 1001–1011 (2017).

Gong, M., Yang, F. & Li, S. Reward association facilitates distractor suppression in human visual search. Eur. J. Neurosci. 43, 942–953 (2016).

Sha, L. Z. & Jiang, Y. V. Components of reward-driven attentional capture. Atten. Percept. Psychophys. 78, 403–414 (2016).

Hickey, C., Chelazzi, L. & Theeuwes, J. Reward modulates salience processing in human vision through the anterior cingulate. J. Neurosci. 30, 11096–11103 (2010).

Hamblin-Frohman, Z. & Becker, S. I. The attentional template in high and low similarity search: Optimal tuning or tuning to relations? Cognition 212, 104732 (2021).

Yu, X., Hanks, T. D. & Geng, J. J. Attentional guidance and match decisions rely on different template information during visual search. Psychol. Sci. 33, 105–120 (2022).

Yu, X. et al. Good-enough attentional guidance. Trends Cogn. Sci. 27, 391–403 (2023).

Wolfe, J. M. Guided Search 2.0: A revised model of visual search. Psychon. Bull. Rev. 1, 202–238 (1994).

Liesefeld, H. R. et al. Search efficiency as a function of target saliency: The transition from inefficient to efficient search and beyond. J. Exp. Psychol. Hum. Percept. Perform. 42, 821–836 (2016).

Huang, L. Color is processed less efficiently than orientation in change detection but more efficiently in visual search. Psychol. Sci. 26, 646–652 (2015).

Gaspelin, N. & Luck, S. J. The role of inhibition in avoiding distraction by salient stimuli. Trends Cogn. Sci. 22, 79–92 (2018).

Wöstmann, M. et al. Ten simple rules to study distractor suppression. Prog. Neurobiol. 213, 102269 (2022).

Kerzel, D. The precision of attentional selection is far worse than the precision of the underlying memory representation. Cognition 186, 20–31 (2019).

Le Pelley, M. E. et al. When goals conflict with values: Counterproductive attentional and oculomotor capture by reward-related stimuli. J. Exp. Psychol. Gen. 144, 158–171 (2015).

York, A. & Becker, S. I. Top-down modulation of gaze capture: Feature similarity, optimal tuning, or tuning to relative features? J. Vis. 20, 6 (2020).

Wolfe, J. M. Guided Search 6.0: An updated model of visual search. Psychon. Bull. Rev. 28, 1060–1092 (2021).

Poggio, T. et al. General conditions for predictivity in learning theory. Nature 428, 419–422 (2004).

Tan, Q. et al. Reward history alters priority map based on spatial relationship, but not absolute location. Psychon. Bull. Rev. (2025).

Failing, M. F. & Theeuwes, J. Nonspatial attentional capture by previously rewarded scene semantics. Vis. Cogn. 23, 82–104 (2015).

Brown, T. et al. Size is relative: Use of relational concepts by wild hummingbirds. Proc. R. Soc. B 289, 20212508 (2022).

Avarguès-Weber, A. & Giurfa, M. Conceptual learning by miniature brains. Proc. R. Soc. B 280, 20131907 (2013).

De Valois, R. L. et al. Some transformations of color information from lateral geniculate nucleus to striate cortex. PNAS 97, 4997–5002 (2000).

Conway, B. R. Spatial structure of cone inputs to color cells in alert macaque primary visual cortex (V1). J. Neurosci. 21, 2768–2783 (2001).

Peck, C. J. et al. Reward modulates attention independently of action value in posterior parietal cortex. J. Neurosci. 29, 11182–11191 (2009).

Anderson, B. A. Neurobiology of value-driven attention. Curr. Opin. Psychol. 29, 27–33 (2019).

Bisley, J. W. & Goldberg, M. E. Attention, intention, and priority in the parietal lobe. Annu. Rev. Neurosci. 33, 1–21 (2010).

Jia, K. et al. A protocol for ultra-high field laminar fMRI in the human brain. STAR Protoc. 2, 100415 (2021).

Jia, K., Goebel, R. & Kourtzi, Z. Ultra-high field imaging of human visual cognition. Annu. Rev. Vis. Sci. 9, 479–500 (2023).

Jia, K. et al. Recurrent inhibition refines mental templates to optimize perceptual decisions. Sci. Adv. 10, eado7378 (2024).

Awh, E., Belopolsky, A. V. & Theeuwes, J. Top-down versus bottom-up attentional control: A failed theoretical dichotomy. Trends Cogn. Sci. 16, 437–443 (2012).

Anderson, B. A. Relating value-driven attention to psychopathology. Curr. Opin. Psychol. 39, 48–54 (2021).

Li, T. et al. Reward learning modulates the attentional processing of faces in children with and without autism spectrum disorder. Autism Res. 10, 1797–1807 (2017).

Lucantonio, F. et al. Effects of prior cocaine versus morphine or heroin self-administration on extinction learning driven by overexpectation versus omission of reward. Biol. Psychiatry 77, 912–920 (2015).

Faul, F. et al. G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191 (2007).

Duncan, J. & Humphreys, G. W. Visual search and stimulus similarity. Psychol. Rev. 96, 433–458 (1989).

Kerzel, D. & Huynh Cong, S. Search mode, not the attentional window, determines the magnitude of attentional capture. Atten. Percept. Psychophys. 86, 457–470 (2024).

Brainard, D. H. The psychophysics toolbox. Spat. Vis. 10, 433–436 (1997).

Wagenmakers, E.-J. A practical solution to the pervasive problems of p values. Psychon. Bull. Rev. 14, 779–804 (2007).

JASP Team. JASP (Version 0.17.2) (2023).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (32371087, 32300855), the Fundamental Research Funds for the Central Universities (226-2024-00118), the National Science and Technology Innovation 2030 Major Project (2021ZD0200409), a grant from the MOE Frontiers Science Center for Brain Science & Brain-Machine Integration at Zhejiang University, and the Non-profit Central Research Institute Fund of Chinese Academy of Medical Sciences (2023-PT310-01).

Author information

Contributions

Original idea by O.J. and M.G. Experiment programmed by O.J. and Q.T. Data collected by O.J. and S.Z. Analysis programmed by O.J. and Q.T. Manuscript drafted by O.J., Q.T., and M.G. Manuscript edited and revised by O.J., Q.T., S.Z., K.J., and M.G.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Jia, O., Tan, Q., Zhang, S. et al. The precision of attention selection during reward learning influences the mechanisms of value-driven attention. npj Sci. Learn. 10, 49 (2025). https://doi.org/10.1038/s41539-025-00342-1

DOI: https://doi.org/10.1038/s41539-025-00342-1