Abstract

Protecting private data is an active research topic in the era of big data. Differential privacy provides a strong privacy guarantee for data analysis. In this paper, we propose DP-MSNM, a differentially private parametric density estimation algorithm based on the multivariate skew-normal mixtures (MSNM) model. MSNM can handle asymmetry in data sets and, fitted with the expectation–maximization (EM) algorithm, can approximate any distribution. In this algorithm, we add two extra steps to the parameter estimates in the M-step of each iteration. The first step adds calibrated noise to the estimated parameters using the Laplace mechanism. The second step post-processes the noisy parameters to restore their intrinsic properties, using vector normalization and the theory of positive semi-definite matrices. Extensive experiments on two real data sets evaluate the performance of DP-MSNM and demonstrate that the proposed method outperforms DPGMM.

Introduction

With the rapid development of big data technology, data privacy protection has become an important issue. Differential privacy is an attack-resistant model with rigorous theoretical guarantees and a clear quantitative formulation. Differentially private density estimation has been studied for mixture models, mostly for the Gaussian mixtures model (GMM)1,2, but real data can present skewness or heavy-tailed behavior. Recently, it has been observed that the vulnerability of pre-trained models to attacks is not uniform when the training data itself is skewed: Truex et al.3 show that the risk from some attacks is routinely increased when models use skewed training data. This risk may be caused by the reduced accuracy of density estimation when data normality is assumed.

To the best of our knowledge, no existing work has addressed the skew-normal mixtures model under differential privacy. In the skew-normal mixtures model, all data are assumed to be drawn by random sampling from one of the components of the mixture, and the learning task is to estimate the proportion, location, scale and skewness parameters of each component distribution. In particular, we need to consider the following features of the problem: (1) the mixture is composed of skew-normal distributions; (2) it is not known which component each sample belongs to; (3) only perturbed data are available; and (4) we want estimates of all parameters.

To address these problems, we propose DP-MSNM, which appends two extra steps (a noise adding step and a post-processing step) to each iteration of the general method of density estimation with MSNM. The noise adding step adds classical Laplace noise to the original estimated parameters in each iteration to achieve differential privacy. The post-processing step uses eigendecomposition of the scale parameter to keep it positive semi-definite, and normalization to ensure that the weight parameters sum to 1.

The remainder of the paper is organized as follows. Section “Preliminaries” introduces preliminaries. Section “Differentially private MSNM” gives the details of DP-MSNM. Section “Experimental evaluation” evaluates the experimental performance. Finally, Section “Conclusions” concludes the work and discusses future work.

Preliminaries

Let D be a data set that contains n records \(x_{1},\ldots ,x_{n}\) with p attributes, where \(x_{i}=(x_{i1},\ldots ,x_{ip})\). Here \(\Vert x\Vert _{p}\) and \(\Vert A\Vert _{p}\) denote the \(L_{p}\) norms of a vector x and a matrix A.

Differential privacy

The privacy definition is due to Dwork et al.4. The formal definition is given as follows.

Definition 1

(Differential privacy) A randomized mechanism \({\mathscr {M}}\) provides \(\varepsilon\)-differential privacy if, for every output O of \({\mathscr {M}}\) and for any two neighboring data sets D and \(D'\), we have

$$\begin{aligned} \Pr [{\mathscr {M}}(D)=O]\le e^{\varepsilon }\cdot \Pr [{\mathscr {M}}(D')=O]. \end{aligned}$$

We call \(\varepsilon\) the privacy budget; a smaller \(\varepsilon\) means a higher level of privacy. Privacy is achieved by adding calibrated random noise to the output. We use the Laplace mechanism with \(L_{1}\)-sensitivity for output perturbation.

Definition 2

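(\(L_{1}\)-sensitivity) The \(L_{1}\)-sensitivity of a query function \({\mathscr {M}}\) is the maximum change in the \(L_{1}\)-norm of the query output over neighboring data sets D and \(D'\). That is,

$$\begin{aligned} S({\mathscr {M}})=\max _{d(D,D')=1}\Vert {\mathscr {M}}(D)-{\mathscr {M}}(D')\Vert _{1}, \end{aligned}$$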

where we use the Hamming distance to measure the distance between two data sets D and \(D'\), expressed as \(d(D,D')=|\{i:x_{i} \ne y_{i},x_{i}\in D , y_{i}\in D'\}|\). The noise vector or matrix follows the Laplace distribution \(Lap(0,\frac{S({\mathscr {M}})}{\varepsilon })\), where \(\frac{S({\mathscr {M}})}{\varepsilon }\) is the scale parameter. Given the \(L_{1}\)-sensitivity \(S({\mathscr {M}})\) and the privacy budget \(\varepsilon\), this mechanism ensures \(\varepsilon\)-differential privacy.
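The Laplace mechanism described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `laplace_noise` and `laplace_mechanism`, the example query output and the numeric values are hypothetical, and the paper's own experiments use R.

```python
import math
import random

def laplace_noise(scale, rng):
    """Draw one sample from Lap(0, scale) by inverse-CDF sampling."""
    u = rng.random() - 0.5                      # uniform on [-0.5, 0.5)
    # Guard against log(0) in the boundary case u == -0.5.
    tail = max(1.0 - 2.0 * abs(u), 1e-300)
    return -scale * math.copysign(1.0, u) * math.log(tail)

def laplace_mechanism(values, sensitivity, epsilon, rng):
    """Add independent Lap(0, sensitivity / epsilon) noise to each entry."""
    if sensitivity < 0 or epsilon <= 0:
        raise ValueError("need sensitivity >= 0 and epsilon > 0")
    scale = sensitivity / epsilon
    return [v + laplace_noise(scale, rng) for v in values]

rng = random.Random(0)                          # fixed seed for repeatability
query_output = [0.2, 0.3, 0.5]                  # hypothetical query answer
noisy_output = laplace_mechanism(query_output, sensitivity=0.01,
                                 epsilon=1.0, rng=rng)
```

A smaller `epsilon` gives a larger noise scale, which matches the statement that a smaller privacy budget means stronger privacy.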

Density estimation with MSNM

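In the multivariate skew-normal mixture model (MSNM), we assume that the samples \(x_{i}\) are i.i.d. with a density that can be written as a linear combination of skew-normal densities5 in the form

$$\begin{aligned} f_{MSNM}(x_{i};\pi ,\Theta )=\sum _{k=1}^G\pi _{k}f_{MSN}(x_{i};\xi _{k},\Sigma _{k},\alpha _{k}), \end{aligned}$$(1)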

where \(x=(x_{1},\ldots ,x_{n})\), each \(x_{i}\), \(i=1,\ldots ,n\), is a p-dimensional vector, and \(\Theta =(\theta _{1},\ldots ,\theta _{G})\) with \(\theta _{k}=(\xi _{k},\Sigma _{k}, \alpha _{k})\), \(k=1,\ldots ,G\). Here \(\pi _{k} \ge 0\) is the mixture proportion, satisfying \(\sum _{k=1}^G\pi _{k} =1\), and \(\xi _{k},\Sigma _{k},\alpha _{k}\) are the parameters of the k-th component of the mixture: \(\xi _{k}\) is the p-dimensional location parameter, \(\Sigma _{k}\) is a \(p \times p\) positive semi-definite (PSD) scale matrix, and \(\alpha _{k}\) is the p-dimensional skewness parameter. \(\phi _{d}(x;\xi , \Sigma )\) is the density of the normal distribution \(N(\xi , \Sigma )\), and \(\Phi (\cdot )\) is the cumulative distribution function of the standard normal distribution. The function \(f_{MSN}(x; \xi ,\Sigma ,\alpha )\) is given by

$$\begin{aligned} f_{MSN}(x;\xi ,\Sigma ,\alpha )=2\phi _{d}(x;\xi ,\Sigma )\Phi \big (\alpha ^{T}\sigma ^{-1}(x-\xi )\big ), \end{aligned}$$(2)

where \(\sigma =(\Sigma \odot I_{p})^{\frac{1}{2}}\) and \(\odot\) denotes the Hadamard product. If a random variable X has the density function in Eq. (2), we say that X follows a multivariate skew-normal distribution and write \(X \sim SN_{p}(\xi , \Sigma , \alpha )\), as in Contreras-Reyes and Cortés6.
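As an illustration of the skew-normal density and of a mixture built from it, the following Python sketch treats the univariate case \(p=1\), where \(\Sigma\) reduces to a scalar \(\omega ^{2}\) and \(\sigma =\omega\). The function names and the example parameters are hypothetical; the multivariate case would additionally need a matrix inverse and determinant.

```python
import math

def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def skew_normal_pdf(x, xi, omega2, alpha):
    """Univariate skew-normal density: 2 * N(x; xi, omega2) * Phi(alpha * z)."""
    omega = math.sqrt(omega2)
    z = (x - xi) / omega
    return 2.0 / omega * normal_pdf(z) * normal_cdf(alpha * z)

def mixture_pdf(x, weights, components):
    """Mixture density: sum over k of pi_k * f_SN(x; xi_k, omega2_k, alpha_k)."""
    return sum(w * skew_normal_pdf(x, *c) for w, c in zip(weights, components))

# Hypothetical two-component mixture: (xi, omega2, alpha) per component.
weights = [0.3, 0.7]
components = [(-1.0, 1.0, 2.0), (2.0, 0.25, -3.0)]
density_at_zero = mixture_pdf(0.0, weights, components)
```

With `alpha = 0` the skew-normal density reduces to the ordinary normal density, which is a quick sanity check on the formula.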

Recalling the variables \(X_{0} \sim SN_{p}(0,{\bar{\Sigma }},\alpha )\) and \(X\sim SN_{p}(\xi ,\Sigma ,\alpha )\), we can obtain an additional representation for multivariate skew-normal distribution from the results of Azzalini et al.5 as follows:

where \(\delta = (\delta _{1}, \delta _{2}, \ldots , \delta _{p})^{T}\) is a vector with the elements in \((-1, 1)\), and

\(U_{0}\) and \(U_{1}\) are the normal variables of dimension p and 1, the joint distribution is

\({\bar{\Psi }}\) is a full-rank correlation matrix.

By the transformation \(X = \xi + \sigma X_{0}\), we have

Let \(|Z_{1}|=\tau\). Given \(\tau\), we can obtain the conditional representation, that is,

For a p-dimensional SN distribution, the parameters have the following relationships by simple algebraic work (see5):

Furthermore, we have

Thus, let \(\eta =\sigma \delta\), by the relation between \(\Sigma\) and \({\bar{\Sigma }}\), we have

Therefore

Let \(Z_{ik}\) be the membership indicator variable that equals 1 when \(x_{i}\) comes from the k-th component of the MSNM model and 0 otherwise. Consider the complete data \((X,Z)=\{X_{i},Z_{i}\}_{i=1}^n\), where the latent component-indicator vector \(Z_{i}=(Z_{i1},\ldots ,Z_{iG})\) follows a multinomial distribution with one trial and cell probabilities \(\pi _{1},\ldots ,\pi _{G}\), written \(Z_{i}\sim M(1;\pi _{1},\ldots ,\pi _{G})\). Based on the component indicators, for each \(X_{i}\), \(i=1,\ldots ,n\), the hierarchical representation of the MSNM is given by Cabral et al.7

where \(TN_{[0,+\infty )}(0,1)\) denotes the half-normal distribution. According to the hierarchical representation (6), ignoring additive constants and denoting

the complete data log-likelihood function is

According to Bayes' theorem, we have

where

Thus, for the current parameters \(\Theta ^{(t)}\), let

The EM algorithm then proceeds as follows.

E-step: Compute the conditional expectations

where \(\Delta _{ik}^{(t)}=\phi \left( \dfrac{\mu _{\tau _{ik}}^{(t)}}{\sigma _{\tau _{ik}}^{(t)}}\right) \bigg / \Phi \left( \dfrac{\mu _{\tau _{ik}}^{(t)}}{\sigma _{\tau _{ik}}^{(t)}}\right)\). Thus, we get \(E(Z_{ik}\tau _{i}|X_{i}=x_{i},\Theta ^{(t)})=r_{0ik}^{(t)}r_{1ik}^{(t)},\) and \(E(Z_{ik}\tau _{i}^{2}|X_{i}=x_{i},\Theta ^{(t)})=r_{0ik}^{(t)}r_{2ik}^{(t)}\).
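The ratio \(\Delta =\phi (\mu /\sigma )/\Phi (\mu /\sigma )\) is the quantity that appears in the moments of a normal variable truncated to \([0,+\infty )\). As a hedged sketch, and assuming that \(r_{1ik}\) and \(r_{2ik}\) are the first and second moments of such a truncated normal with parameters \(\mu _{\tau _{ik}}\) and \(\sigma _{\tau _{ik}}\) (an assumption about the role of these symbols), they can be computed as below. The function name is hypothetical.

```python
import math

def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def truncated_normal_moments(mu, sigma):
    """First and second moments of N(mu, sigma^2) truncated to [0, +inf).

    Uses the standard identities
        E[tau]   = mu + sigma * Delta
        E[tau^2] = mu^2 + sigma^2 + mu * sigma * Delta
    with Delta = phi(mu / sigma) / Phi(mu / sigma).
    """
    ratio = mu / sigma
    delta = normal_pdf(ratio) / normal_cdf(ratio)
    first = mu + sigma * delta
    second = mu * mu + sigma * sigma + mu * sigma * delta
    return first, second

# For mu = 0, sigma = 1 this is the half-normal distribution.
half_normal_first, half_normal_second = truncated_normal_moments(0.0, 1.0)
```

For strongly negative `mu / sigma` the denominator `normal_cdf(ratio)` underflows, so a production implementation would need a numerically stable form of the ratio.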

Therefore, the Q function can be written as

M-step: Maximize \(Q(\Theta ,\Theta ^{(t)})\) with respect to \(\Theta\) under the restriction \(\sum _{k=1}^G\pi _{k}=1\).

1. Update \(\pi _{k}^{(t)}\) by \(\pi _{k}^{(t+1)}=n^{-1}\sum _{i=1}^nr_{0ik}^{(t)}\).

2. Update \(\xi _{k}^{(t)}\) by

$$\begin{aligned} \xi _{k}^{(t+1)}=\frac{\sum _{i=1}^nr_{0ik}^{(t)}x_{i}-\eta _{k}^{(t)}\sum _{i=1}^nr_{0ik}^{(t)}r_{1ik}^{(t)}}{\sum _{i=1}^nr_{0ik}^{(t)}}. \end{aligned}$$(8)

3. Update \(\eta _{k}^{(t)}\) by

$$\begin{aligned} \eta _{k}^{(t+1)}=\frac{\sum _{i=1}^nr_{0ik}^{(t)}\big (x_{i}-\xi _{k}^{(t+1)}\big )r_{1ik}^{(t)}}{\sum _{i=1}^nr_{0ik}^{(t)}}. \end{aligned}$$(9)

4. Update \(\Lambda _{k}^{(t)}\) by

$$\begin{aligned} \begin{aligned} \Lambda _{k}^{(t+1)}&=\frac{1}{\sum _{i=1}^nr_{0ik}^{(t)}}\left\{ \sum _{i=1}^nr_{0ik}^{(t)}\big (x_{i}-\xi _{k}^{(t+1)}\big )\big (x_{i}-\xi _{k}^{(t+1)}\big )^{T} \right. \\&\quad \left. -2 \eta _{k}^{(t+1)}\sum _{i=1}^nr_{0ik}^{(t)}r_{1ik}^{(t)}\big (x_{i}-\xi _{k}^{(t+1)}\big ) + \eta _{k}^{(t+1)}\sum _{i=1}^nr_{0ik}^{(t)}r_{2ik}^{(t)}\big (\eta _{k}^{(t+1)}\big )^{T}\right\} . \end{aligned} \end{aligned}$$(10)
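The four M-step updates can be transcribed directly for a single component in the univariate case \(p=1\), where vectors and matrices become scalars. This is a minimal sketch of Eqs. (8)–(10) as printed, not the mixsmsn implementation; the function name and argument layout are hypothetical.

```python
def m_step_component(x, r0, r1, r2, eta_old):
    """One M-step for a single component k in the univariate (p = 1) case.

    x        : list of observations x_i
    r0,r1,r2 : E-step quantities r_0ik, r_1ik, r_2ik for this component
    eta_old  : eta_k from the previous iteration
    """
    n = len(x)
    n_k = sum(r0)
    # Update 1: mixture proportion.
    pi_new = n_k / n
    # Eq. (8): the location update uses the previous eta.
    xi_new = (sum(a * xv for a, xv in zip(r0, x))
              - eta_old * sum(a * b for a, b in zip(r0, r1))) / n_k
    # Eq. (9): eta update with the new location.
    eta_new = sum(a * (xv - xi_new) * b for a, xv, b in zip(r0, x, r1)) / n_k
    # Eq. (10): scalar form of the Lambda update.
    lam_new = (sum(a * (xv - xi_new) ** 2 for a, xv in zip(r0, x))
               - 2.0 * eta_new * sum(a * b * (xv - xi_new)
                                     for a, b, xv in zip(r0, r1, x))
               + eta_new ** 2 * sum(a * c for a, c in zip(r0, r2))) / n_k
    return pi_new, xi_new, eta_new, lam_new

# Small hand-checkable example.
example = m_step_component(x=[0.0, 2.0], r0=[1.0, 1.0], r1=[1.0, 1.0],
                           r2=[2.0, 2.0], eta_old=0.5)
```

When all `r1` and `r2` values are zero the updates reduce to the weighted mean and weighted variance, which is the Gaussian-mixture special case.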

Differentially private MSNM

In this section, we present DP-MSNM, a differentially private density estimation algorithm based on MSNM.

Main idea

Let G denote the number of components of the MSNM. The main idea of DP-MSNM is to add two extra steps after obtaining the original estimated parameters in the M-step of each iteration. The original estimated parameters include the mixture proportions (\(\pi\)), and the location parameter vector (\(\xi _{k}\)), scale parameter matrix (\(\Sigma _{k}\)) and skewness parameter vector (\(\alpha _{k}\)) of each skew-normal component, where \(\pi =(\pi _{1},\ldots ,\pi _{G})\) and \(k\in \{1,\ldots ,G\}\). First, we compute the noise for each parameter from its \(L_{1}\)-sensitivity and allocated privacy budget, and add this noise to the original estimated parameters, which gives the noisy parameters \({\bar{\pi }}\) and \({\bar{\xi }}_{k},{\bar{\Lambda }}_{k},{\bar{\eta }}_{k}\). Second, we post-process \({\bar{\pi }}\) and \({\bar{\xi }}_{k},{\bar{\Lambda }}_{k},{\bar{\eta }}_{k}\), since the added noise breaks some intrinsic properties of the weight and component parameters; this step outputs \({\hat{\pi }}\) and \({\hat{\xi }}_{k},{\hat{\Lambda }}_{k},{\hat{\eta }}_{k}\).

Noise adding step

In this part, we analyze the \(L_{1}\)-sensitivities of \(\pi _{k},\xi _{k},\Lambda _{k},\eta _{k}\) and add calibrated noise to the original estimated parameters. We suppose that \(D=\{x_{1},\ldots ,x_{n}\}\) and \(D'=\{x_{1},\ldots ,x_{n-1}\}\) are two neighboring data sets, where \(D'\) has the same first \(n-1\) records as D but lacks the n-th record. Let \(N_{k}=\sum _{i=1}^n r_{0ik}\). We also assume that the data set is well-separated8 and that \(N_{k}\ge \frac{n}{2G},k=1,\ldots ,G\). We use a differentially private k-means algorithm9 to get the cluster centers, and obtain \(\eta _{k}\) from the skewness parameter \(\alpha _{k}\) through Mardia's measure10. We denote by \(S(\pi ),S(\xi ),S(\Lambda ),S(\eta )\) the \(L_{1}\)-sensitivities of \(\pi ,\xi _{k},\Lambda _{k},\eta _{k}\) in each iteration. Based on Eqs. (8)–(10), we give \(S(\pi ),S(\xi ),S(\Lambda ),S(\eta )\) in Lemmas 1, 2, 3 and 4.

Lemma 1

In each iteration, the \(L_{1}\)-sensitivity of \(\pi\) is \(S(\pi )=\frac{G}{n}\).

The proof of Lemma 1 is identical to that of Wu et al.1. To make the proofs of the subsequent lemmas clear, we reproduce it here.

Proof

Since \(\pi =(\pi _{1},\ldots ,\pi _{G})\), let \(\pi (D)\) and \(\pi (D')\) denote the weights computed on data sets D and \(D'\). Then the k-th element of \(\pi (D)-\pi (D')\) is \(\frac{\sum _{i=1}^nr_{0ik}}{n} - \frac{\sum _{i=1}^{n-1}r_{0ik}}{n-1} =\frac{\sum _{i=1}^{n-1}(r_{0nk}-r_{0ik})}{n(n-1)}\). Since \(|r_{0nk}-r_{0ik}|<1\), we have \(\Vert \pi (D) - \pi (D')\Vert _{1} = \sum _{k=1}^G |\frac{\sum _{i=1}^{n-1}(r_{0nk}-r_{0ik})}{n(n-1)}| \le \sum _{k=1}^G \frac{\sum _{i=1}^{n-1}|r_{0nk}-r_{0ik}|}{n(n-1)}\le \frac{G}{n}\). \(\square\)
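The bound of Lemma 1 can be checked numerically. The sketch below draws random responsibility tables, drops the last record, and measures the \(L_{1}\) change of the weight vector. As in the proof, it assumes that the responsibilities of the remaining records are unchanged when the n-th record is removed; the function names, table sizes and seed are hypothetical.

```python
import random

def weight_vector(resp):
    """pi_k = (1/n) * sum_i r_0ik for an n x G table of responsibilities."""
    n, G = len(resp), len(resp[0])
    return [sum(row[k] for row in resp) / n for k in range(G)]

def l1_change_dropping_last(resp):
    """||pi(D) - pi(D')||_1 where D' omits the last record of D."""
    full = weight_vector(resp)
    reduced = weight_vector(resp[:-1])
    return sum(abs(a - b) for a, b in zip(full, reduced))

def random_responsibilities(n, G, rng):
    """n rows of positive numbers, each row normalized to sum to 1."""
    rows = []
    for _ in range(n):
        raw = [rng.random() + 1e-12 for _ in range(G)]
        total = sum(raw)
        rows.append([v / total for v in raw])
    return rows

rng = random.Random(42)
n, G = 50, 3
worst = max(l1_change_dropping_last(random_responsibilities(n, G, rng))
            for _ in range(200))
bound = G / n   # Lemma 1
```

In this experiment `worst` stays below `bound`, as the lemma predicts; the bound is attained only in extreme cases such as hard assignments where the removed record belongs to a different component from all others.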

Lemma 2

In each iteration, if \(\Vert x_{i}\Vert _{1}\le R,N_{k}\ge \frac{n}{2G}\) and \(\Vert \eta _{k}\Vert _{1}\le R_{3}\), the \(L_{1}\)-sensitivity of \(\xi _{k}\) is \(S(\xi )=\frac{4G(R-R_{3})}{n}\).

Proof

Let \(\xi (D)\) and \(\xi (D')\) denote the location parameter computed on data sets D and \(D'\). The k-th element of \(\xi (D)-\xi (D')\) is

Because \(\Vert x_{i}\Vert _{1}\le R,N_{k}\ge \frac{n}{2G},\Vert \eta _{k}\Vert _{1}\le R_{3}\), we have

Lemma 3

In each iteration, if \(\Vert x_{i}\Vert _{1}\le R,N_{k}\ge \frac{n}{2G}\) and \(\Vert r_{1ik}\Vert _{1}\le R_{1},\Vert r_{2ik}\Vert _{1}\le R_{2}\), the \(L_{1}\)-sensitivity of \(\eta _{k}\) is \(S(\eta )=\frac{2GRR_{1}}{nR_{2}}\).

Proof

Let \(\eta (D)\) and \(\eta (D')\) denote the parameter in Eq. (9) computed on D and \(D'\). The k-th element of \(\eta (D)-\eta (D')\) is

Then

\(\square\)

Lemma 4

In each iteration, if \(\Vert x_{i}\Vert _{1}\le R,N_{k}\ge \frac{n}{2G},\Vert r_{1ik}\Vert _{1}\le R_{1},\Vert r_{2ik}\Vert _{1}\le R_{2},\Vert \eta _{k}\Vert _{1}\le R_{3}\), the \(L_{1}\)-sensitivity of \(\Lambda _{k}\) is \(S(\Lambda )=\frac{2G(R^{2}+4RR_{1}R_{3}+4R_{2}R_{3}^{2})}{n}\).

Proof

Let \(\Lambda (D)\) and \(\Lambda (D')\) denote the parameter in Eq. (10) computed on D and \(D'\). The k-th element of \(\Lambda (D)-\Lambda (D')\) is

Then

In each iteration, let \(\varepsilon _{\pi },\varepsilon _{\xi },\varepsilon _{\Lambda },\varepsilon _{\eta }\) denote the privacy budgets allocated to \(\pi ,\xi _{k},\Lambda _{k},\eta _{k}\) respectively, where \(k=1,\ldots ,G\). By the sequential composition theorem, the privacy budget used in each iteration is \(\varepsilon _{\pi } + G(\varepsilon _{\xi }+\varepsilon _{\Lambda }+\varepsilon _{\eta })\). Next, we obtain the noisy parameters \({\bar{\pi }},{\bar{\xi }}_{k},{\bar{\Lambda }}_{k},{\bar{\eta }}_{k}\) from Lemmas 1, 2, 3 and 4 and the Laplace mechanism as follows:

$$\begin{aligned} {\bar{\pi }}_{k}=\pi _{k}+e_{\pi _{k}},\quad {\bar{\xi }}_{k}=\xi _{k}+e_{\xi _{k}},\quad {\bar{\Lambda }}_{k}=\Lambda _{k}+e_{\Lambda _{k}},\quad {\bar{\eta }}_{k}=\eta _{k}+e_{\eta _{k}}, \end{aligned}$$

where \(e_{\pi _{k}}\sim Lap(0,\frac{S(\pi )}{\varepsilon _{\pi }}),e_{\xi _{k}}\sim Lap(0,\frac{S(\xi )}{\varepsilon _{\xi }}),e_{\Lambda _{k}}\sim Lap(0,\frac{S(\Lambda )}{\varepsilon _{\Lambda }}),e_{\eta _{k}}\sim Lap(0,\frac{S(\eta )}{\varepsilon _{\eta }})\) denote the noise added to \(\pi _{k},\xi _{k},\Lambda _{k},\eta _{k}\), respectively.
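To make the calibration concrete, the sketch below evaluates the sensitivities of Lemmas 1 to 4 exactly as stated and divides each by its privacy budget to obtain the Laplace scale. The function names, the dictionary keys and the numeric bounds in the example are hypothetical.

```python
def sensitivities(n, G, R, R1, R2, R3):
    """L1-sensitivities of pi, xi, eta and Lambda as stated in Lemmas 1-4."""
    return {
        "pi": G / n,
        "xi": 4.0 * G * (R - R3) / n,
        "eta": 2.0 * G * R * R1 / (n * R2),
        "Lambda": 2.0 * G * (R ** 2 + 4.0 * R * R1 * R3
                             + 4.0 * R2 * R3 ** 2) / n,
    }

def laplace_scales(sens, budgets):
    """Scale S / epsilon of the Laplace noise for each parameter."""
    return {name: sens[name] / budgets[name] for name in sens}

# Hypothetical bounds and per-parameter budgets for one iteration.
sens = sensitivities(n=1000, G=2, R=1.0, R1=1.0, R2=1.0, R3=0.5)
budgets = {"pi": 0.1, "xi": 0.1, "eta": 0.1, "Lambda": 0.1}
scales = laplace_scales(sens, budgets)
```

All four sensitivities shrink like \(1/n\), so for a fixed budget the noise scale decreases as the data set grows.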

Post-processing step

The noisy parameters \({\bar{\pi }},{\bar{\xi }}_{k},{\bar{\Lambda }}_{k},{\bar{\eta }}_{k}\) obtained in the noise adding step may not satisfy some basic properties of the original parameters. First, \({\bar{\pi }}\) generally does not satisfy \({\bar{\pi }}_{k} \ge 0\) and \(\sum _{k=1}^G{\bar{\pi }}_{k} =1\). Second, \({\bar{\Lambda }}_{k}\) may no longer be a positive semi-definite matrix after noise is added. If the noisy matrix \({\bar{\Lambda }}_{k}\) is not PSD, the other parameters, for instance \({\bar{\xi }}_{k}\) and \({\bar{\alpha }}_{k}\), cannot be computed properly. We solve these problems as follows.

First, we normalize \({\bar{\pi }}\). We replace each \({\bar{\pi }}_{k}\) by its min-max rescaled value, \({\bar{\pi }}_{k} \leftarrow \frac{{\bar{\pi }}_{k} -\min \{{\bar{\pi }}\}}{\max \{{\bar{\pi }}\}-\min \{{\bar{\pi }}\}}\), and then make the weights sum to one by \({\hat{\pi }}_{k} =\frac{{\bar{\pi }}_{k} +\zeta }{\sum _{k=1}^G{\bar{\pi }}_{k}+ G\zeta }\). Here \(\zeta\) is a small positive number that smooths \({\bar{\pi }}\).
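The weight post-processing can be sketched as follows. The function name, the default value of `zeta` and the fallback for the degenerate case where all noisy weights are equal are assumptions added for illustration; the text does not specify them.

```python
def postprocess_weights(noisy, zeta=1e-3):
    """Min-max rescale the noisy weights, then smooth and normalize to sum to 1."""
    lo, hi = min(noisy), max(noisy)
    if hi == lo:
        # Assumption: if all noisy weights coincide, fall back to uniform weights
        # to avoid dividing by zero in the min-max step.
        return [1.0 / len(noisy)] * len(noisy)
    scaled = [(w - lo) / (hi - lo) for w in noisy]
    total = sum(scaled) + len(noisy) * zeta
    return [(s + zeta) / total for s in scaled]

# A noisy weight vector with a negative entry, as can happen after adding noise.
noisy_pi = [-0.1, 0.5, 0.7]
pi_hat = postprocess_weights(noisy_pi, zeta=1e-3)
```

The smoothing term `zeta` keeps the smallest weight strictly positive, since the min-max step maps it to exactly zero.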

Second, we use the theory of Higham11 to ensure that the covariance matrix \({\bar{\Sigma }}_{k}\) is PSD by post-processing \({\bar{\Lambda }}_{k}\). Following Wu et al.1, we use the following equation

where \(\varrho ({\bar{\Lambda }}_{k}) = \min \{r\ge 0:{\bar{\Lambda }}_{k} +rI \succeq 0\}\), \(\succeq 0\) means that all eigenvalues are non-negative, and I is the identity matrix. We use \({\hat{\Lambda }}_{k}\) to obtain \({\hat{\xi }}_{k}\), and then obtain \({\hat{\eta }}_{k},{\hat{\Sigma }}_{k}\) and \({\hat{\alpha }}_{k}\) from Eqs. (5) and (7). The parameters \({\hat{\xi }}_{k},{\hat{\Sigma }}_{k},{\hat{\alpha }}_{k}\) are carried into the next iteration.
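For a symmetric matrix, \(\varrho ({\bar{\Lambda }}_{k})\) equals \(\max \{0,-\lambda _{\min }\}\), where \(\lambda _{\min }\) is the smallest eigenvalue. The sketch below illustrates one reading of the PSD correction, assuming it is the diagonal shift \({\hat{\Lambda }}_{k}={\bar{\Lambda }}_{k}+\varrho ({\bar{\Lambda }}_{k})I\) and that the noisy matrix is symmetric; both are assumptions. It is restricted to \(2\times 2\) matrices so that the eigenvalue has a closed form, and the function names are hypothetical.

```python
import math

def min_eigenvalue_sym2(m):
    """Smallest eigenvalue of a symmetric 2 x 2 matrix [[a, b], [b, c]]."""
    a, b, c = m[0][0], m[0][1], m[1][1]
    return 0.5 * (a + c) - math.hypot(0.5 * (a - c), b)

def shift_to_psd(m):
    """Return (m + rho * I, rho) with rho = min{r >= 0 : m + r * I is PSD}."""
    rho = max(0.0, -min_eigenvalue_sym2(m))
    shifted = [[m[0][0] + rho, m[0][1]],
               [m[1][0], m[1][1] + rho]]
    return shifted, rho

# A symmetric matrix with eigenvalues 3 and -1, so it is not PSD.
noisy_lambda = [[1.0, 2.0], [2.0, 1.0]]
lambda_hat, rho = shift_to_psd(noisy_lambda)
```

A matrix that is already PSD is returned unchanged with `rho = 0`. For general \(p\) the same shift needs a numerical eigenvalue routine.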

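Although two extra steps are applied to the original parameters, the parameters \({\hat{\pi }},{\hat{\xi }},{\hat{\alpha }},{\hat{\Sigma }}\) still satisfy \(\varepsilon\)-differential privacy, because post-processing the output of a differentially private mechanism does not weaken its privacy guarantee12.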

DP-MSNM

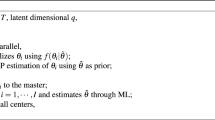

Based on the discussion above, we give the DP-MSNM algorithm in Algorithm 2, where lines 1–2 allocate the privacy budget and initialize the parameters, line 3 calculates the \(L_{1}\)-sensitivities of all parameters, lines 5–6 execute the normal E-step and M-step, lines 7–12 execute the noise adding step, and lines 13–20 execute the post-processing step. Given the total privacy budget \(\varepsilon\), Wu et al.1 show that, with a maximum of T iterations, Algorithm 2 satisfies \(\varepsilon\)-differential privacy. For \(k=1,\ldots ,G\), we allocate the privacy budget \(\varepsilon _{\Lambda } = \frac{0.6}{T*G}\varepsilon\) to \(\Lambda _{k}\), and \(\varepsilon _{\xi } = \frac{0.13}{T*G}\varepsilon\) and \(\varepsilon _{\eta } = \frac{0.13}{T*G}\varepsilon\) to \(\xi _{k}\) and \(\eta _{k}\) respectively, because a higher \(\varepsilon _{\Lambda }\) reduces the amount of noise added to \(\Lambda _{k}\).
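The budget split can be written out as a small helper. The shares for \(\Lambda _{k}\), \(\xi _{k}\) and \(\eta _{k}\) are the ones stated above. The share for \(\pi\) is not stated, so the sketch assumes it receives the remainder of the per-iteration budget \(\varepsilon /T\), which works out to \(0.14\,\varepsilon /T\); this is an assumption, as are the function name and the example values.

```python
def allocate_budget(epsilon, T, G):
    """Split a total budget epsilon over T iterations and G components."""
    eps_lambda = 0.6 * epsilon / (T * G)
    eps_xi = 0.13 * epsilon / (T * G)
    eps_eta = 0.13 * epsilon / (T * G)
    # Assumption: the weight vector pi gets what is left of epsilon / T after
    # the G components have received their shares.
    eps_pi = epsilon / T - G * (eps_lambda + eps_xi + eps_eta)
    return {"pi": eps_pi, "xi": eps_xi, "eta": eps_eta, "Lambda": eps_lambda}

def per_iteration_budget(alloc, G):
    """eps_pi + G * (eps_xi + eps_Lambda + eps_eta), by sequential composition."""
    return alloc["pi"] + G * (alloc["xi"] + alloc["Lambda"] + alloc["eta"])

# Hypothetical setting: total budget 1.0, 10 iterations, 3 components.
alloc = allocate_budget(epsilon=1.0, T=10, G=3)
total_spent = 10 * per_iteration_budget(alloc, G=3)
```

Under this assumption the per-iteration budgets add up to the total budget over the T iterations.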

Experimental evaluation

Our experiments are implemented in R (version 3.6.3)13, using the mixsmsn package7 to obtain the DP-MSNM results, and all experiments are performed on a laptop with an Intel Core i7-9750H 2.60 GHz CPU and 6.00 GB of RAM.

Experimental setup

We evaluate DP-MSNM on two real data sets, the Australian Institute of Sport (AIS) data set and the 3DRoad Network data set, which come from the UCI Machine Learning Repository. We choose columns 2–3 of the AIS data set and columns 2–4 of the 3DRoad data set. For convenience, we assume the same default values for both data sets as in Wu et al.1. In this article, we use the complete data sets, and the default values are recorded in Table 1.

We use the log-likelihood mean \(\frac{1}{n} \log \{ f_{MSNM}(x;\pi ,\xi ,\Sigma ,\alpha )\}\), the average log-likelihood over the whole data set, to evaluate the performance of DP-MSNM. A larger log-likelihood mean indicates that the estimated parameters fit the data better. We compare the density estimation performance of SAGMM, DPGMM and DP-MSNM in terms of the log-likelihood mean on the two data sets (see Figs. 1 and 2). In addition, we use the AIC and BIC criteria6 to compare the fit of the DPGMM and DP-MSNM algorithms on the two data sets.
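The evaluation quantities can be sketched as follows, using the standard definitions \(AIC=2m-2\log L\) and \(BIC=m\log n-2\log L\), where m is the number of free parameters. The parameter count for an MSNM with unrestricted symmetric scale matrices is an assumption about the model parameterization, and all function names and numbers are hypothetical.

```python
import math

def mean_log_likelihood(densities):
    """Average of log f(x_i) over the data set, given the values f(x_i)."""
    return sum(math.log(d) for d in densities) / len(densities)

def n_free_parameters(G, p):
    """Assumed count: (G - 1) weights, plus per component p location,
    p * (p + 1) / 2 scale and p skewness parameters."""
    return (G - 1) + G * (p + p * (p + 1) // 2 + p)

def aic(log_lik, m):
    """Akaike information criterion (smaller is better)."""
    return 2.0 * m - 2.0 * log_lik

def bic(log_lik, m, n):
    """Bayesian information criterion (smaller is better)."""
    return m * math.log(n) - 2.0 * log_lik

# Hypothetical fit: total log-likelihood -10 on n = 100 points, G = 2, p = 2.
m = n_free_parameters(G=2, p=2)
example_aic = aic(-10.0, m)
example_bic = bic(-10.0, m, 100)
```

A larger mean log-likelihood is better, while for AIC and BIC the preferred model is the one with the smallest value, which is how the tables are read.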

Results

Comparison with DPGMM and SAGMM

We implement SAGMM using the privacy-preserving platform GUPT. The default values are the same as those of DP-MSNM. SAGMM assumes that the number of partitions and the spherical covariance are known before the experiment. We set the number of partitions to 5. Different variances are tried and the best spherical covariance is chosen for each experiment. From Figs. 1 and 2 we observe that the performance of the three methods improves as B increases, and that DP-MSNM outperforms SAGMM and DPGMM. The shape of the curve in Fig. 1 differs from that of Wu et al.1, mainly because we used a larger amount of data from the 3DRoad data set and, in order not to lose information, did not normalize each attribute of the data set.

We implement DPGMM in R in the same environment as DP-MSNM. From Figs. 1 and 2 we find that DP-MSNM outperforms DPGMM.

Effect of number of skew-normal distribution (G)

For the AIS data set, as can be seen from Table 2, the AIC and BIC values of the DPGMM algorithm are smallest when k = 3, while those of the DP-MSNM algorithm are smallest when k = 2. For the 3DRoad data set, as can be seen from Table 3, the AIC and BIC values of the DPGMM algorithm are smallest when k = 8, while those of the DP-MSNM algorithm are smallest when k = 7.

These results are consistent with Figs. 3 and 4: for the AIS data, the log-likelihood mean of the DPGMM algorithm at k = 3 is close to that of the DP-MSNM algorithm (Fig. 3). A similar pattern appears for the 3DRoad data (Fig. 4).

We can therefore conclude that the number of components has a large effect on the results.

Conclusions

In this paper, we proposed DP-MSNM, an effective and accurate privacy-preserving mechanism for density estimation. We add noise to the original parameters and use a post-processing step to ensure that the weight and covariance parameters retain their intrinsic properties. In the future, we will extend the DP-MSNM model to high-dimensional data. We also plan to bring other skew-family distributions under differential privacy.

References

1. Wu, Y., Wu, Y. & Peng, H. Differentially private density estimation via Gaussian mixtures model. In IEEE/ACM 24th International Symposium 1–6 (2016).

2. Kamath, G., Sheffet, O. & Singhal, V. Differentially private algorithms for learning mixtures of separated Gaussians. In NeurIPS 1–62 (2019).

3. Truex, S., Liu, L. & Gursoy, M. Effects of differential privacy and data skewness on membership inference vulnerability. arXiv.org 1–16 (2019).

4. Dwork, C. & Roth, A. The algorithmic foundations of differential privacy. Found. Trends Theor. Comput. Sci. 9, 211–407 (2014).

5. Azzalini, A. & Dalla Valle, A. The multivariate skew-normal distribution. Biometrika 83, 715–726 (1996).

6. Contreras-Reyes, J. E. & Cortés, D. D. Bounds on Rényi and Shannon entropies for finite mixtures of multivariate skew-normal distributions: application to swordfish (Xiphias gladius Linnaeus). Entropy 18, 382 (2016).

7. Cabral, C., Lachos, V. & Prates, M. Multivariate mixture modeling using skew-normal independent distributions. Comput. Stat. Data Anal. 56, 126–142 (2012).

8. Nissim, K., Raskhodnikova, S. & Smith, A. Smooth sensitivity and sampling in private data analysis. In STOC 75–84 (2007).

9. Zhang, J., Xiao, X. & Yang, Y. PrivGene: Differentially private model fitting using genetic algorithms. In SIGMOD 665–676 (2013).

10. Mardia, K. V. Measures of multivariate skewness and kurtosis with applications. Biometrika 57, 519–530 (1970).

11. Higham, N. Computing a nearest symmetric positive semidefinite matrix. Linear Algebra Appl. 103, 103–118 (1988).

12. Hay, M., Rastogi, V. & Miklau, G. Boosting the accuracy of differentially private histograms through consistency. PVLDB 3, 1021–1032 (2010).

13. R Core Team. R: A Language and Environment for Statistical Computing. (R Foundation for Statistical Computing, Vienna, 2020).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 11701071).

Author information

Contributions

W.W. is the only author and wrote the manuscript text.

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, W. Differentially private density estimation with skew-normal mixtures model. Sci Rep 11, 11009 (2021). https://doi.org/10.1038/s41598-021-90276-6

DOI: https://doi.org/10.1038/s41598-021-90276-6
